DOI:10.32604/cmc.2022.028190

Computers, Materials & Continua

Article

Robust and High Accuracy Algorithm for Detection of Pupil Images

1Electronics and Communications Engineering Department, Faculty of Engineering, MSA University, CO, 12585, Egypt

2Computer Engineering Department, College of Computer and Information Technology, Taif University, Taif, 21944, Saudi Arabia

3Faculty of Health and Rehabilitation Sciences, Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia

4Department of Electrical Engineering, College of Engineering, Taif University, Taif, 21944, Saudi Arabia

5Electronics and Communications Engineering Department, Faculty of Engineering, Sinai University, Arish, CO, 45511, Egypt

*Corresponding Author: Sherif S. M. Ghoneim. Email: s.ghoneim@tu.edu.sa

Received: 04 February 2022; Accepted: 21 March 2022

Abstract: Recently, many researchers have tried to develop a robust, fast, and accurate algorithm for eye tracking and pupil position detection in applications such as head-mounted eye tracking, gaze-based human-computer interaction, medical applications (e.g., for deaf and diabetic patients), and attention analysis. Many real-world conditions challenge the eye appearance, such as illumination, reflections, and occlusions. In addition, there are individual differences in eye physiology and other sources of noise, such as contact lenses or make-up. The present work introduces a robust pupil detection algorithm with higher accuracy than previous attempts for real-time analytics applications. The proposed circular Hough transform with morphing Canny edge detection for pupillometry (CHMCEP) algorithm can detect the pupil even in blurred or noisy images: it applies different filtering methods in the pre-processing stage to remove blur and noise, and a second filtering process before the circular Hough transform used for center fitting to improve accuracy. The performance of the proposed CHMCEP algorithm was tested against recent pupil detection methods. Simulations and results show that the proposed CHMCEP algorithm achieved detection rates of 87.11%, 78.54%, 58%, and 78% on the Świrski, ExCuSe, ElSe, and labeled pupils in the wild (LPW) data sets, respectively. These results show that the proposed approach outperforms the other pupil detection methods by a large margin, providing exact and robust pupil positions on challenging real-world eye images.

Keywords: Pupil detection; eye tracking; pupil edge; morphing techniques; eye images dataset

1 Introduction

Pupil detection has recently become one of the most important research topics, with many indoor and outdoor real-life applications [1]. Pupil detection algorithms can be classified into (1) indoor algorithms, which use eye images captured under laboratory conditions with infrared illumination [2], and (2) outdoor algorithms, which use eye images captured in natural, real-world environments; much work has been devoted to outdoor eye-tracking applications such as driving [3] and shopping [4]. A pupil detection algorithm can also be divided into two main parts: (1) pupil detection or segmentation, which uses operations such as down-sampling, bright/dark pupil difference, image thresholding/binarization, morphological operations, edge detection, and blob detection; and (2) calculation of the pupil center and radius, using methods such as circle/ellipse fitting or a center-of-mass algorithm [5]. Previous work on detecting the pupil and calculating its center and radius can be summarized as follows. Kumar et al. [6] performed pupil segmentation using morphological operations, edge detection, and blob detection. Lin et al. [7] (1) segmented the pupil using down-sampling, image thresholding/binarization, morphological operations, and edge detection, and (2) calculated the pupil center and radius with a proposed parallelogram center-of-mass algorithm. Agustin et al. [8] (1) segmented the pupil by image thresholding/binarization and (2) calculated the pupil center and radius by ellipse fitting with the random sample consensus (RANSAC) algorithm. Keil et al. [9] (1) segmented the pupil using image thresholding/binarization and glint edge detection and (2) calculated the pupil center and radius with a proposed center-of-mass algorithm [1,5]. Sari et al. [10] presented a study of pupil diameter measurement algorithms in 2016, in which (1) the pupil was segmented by image thresholding/binarization and (2) the pupil center and radius were calculated by least-squares fitting of the circle equation, by a Hough transform that constructs the pupil circle, and by a proposed center-of-mass algorithm. Saif et al. [11] presented a study of pupil orientation and detection in 2017, in which (1) the pupil was segmented and (2) the pupil center and contour were found with a circle equation algorithm. From these previous attempts, we conclude that there is a trade-off between accuracy, which increases with the complexity of the code, and processing time: calculating the pupil center and radius with methods such as circle fitting, ellipse fitting, RANSAC, or a center-of-mass algorithm, possibly combined with an edge detection method, consumes more processing time to obtain accurate results. The rest of the paper is organized as follows: Section 2 reviews recent pupil detection methods, Section 3 presents the proposed algorithm, Section 4 presents the data sets, Section 5 presents and discusses the results, and Section 6 draws the main conclusions.
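Several of the methods above (e.g., Sari et al. [10]) fit the circle equation to pupil edge points by least squares. As an illustration only, the sketch below uses the common algebraic (Kåsa) formulation, which makes the circle equation linear in its unknowns; it is an assumption that this matches the variant used in [10], and the function name `fit_circle_least_squares` is hypothetical.

```python
import numpy as np


def fit_circle_least_squares(xs, ys):
    """Algebraic (Kasa) least-squares circle fit.

    Solves x^2 + y^2 + D*x + E*y + F = 0 for D, E, F in the least-squares
    sense and converts the result to a centre (a, b) and radius r, using
    D = -2a, E = -2b, F = a^2 + b^2 - r^2.
    """
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    A = np.column_stack([xs, ys, np.ones_like(xs)])
    rhs = -(xs ** 2 + ys ** 2)
    (D, E, F), *_ = np.linalg.lstsq(A, rhs, rcond=None)
    a, b = -D / 2.0, -E / 2.0
    r = np.sqrt(a ** 2 + b ** 2 - F)
    return a, b, r
```

Because the problem is linear in D, E, and F, a single `numpy.linalg.lstsq` call suffices. The algebraic fit is fast, but it can be biased when only a short arc of the pupil edge is visible, for example under eyelid occlusion.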

2 Recent Pupil Detection Methods

In this section, we discuss six recent pupil detection methods: ElSe [12], ExCuSe [13], Pupil Labs [14], SET [15], Starburst [16], and Świrski et al. [17].

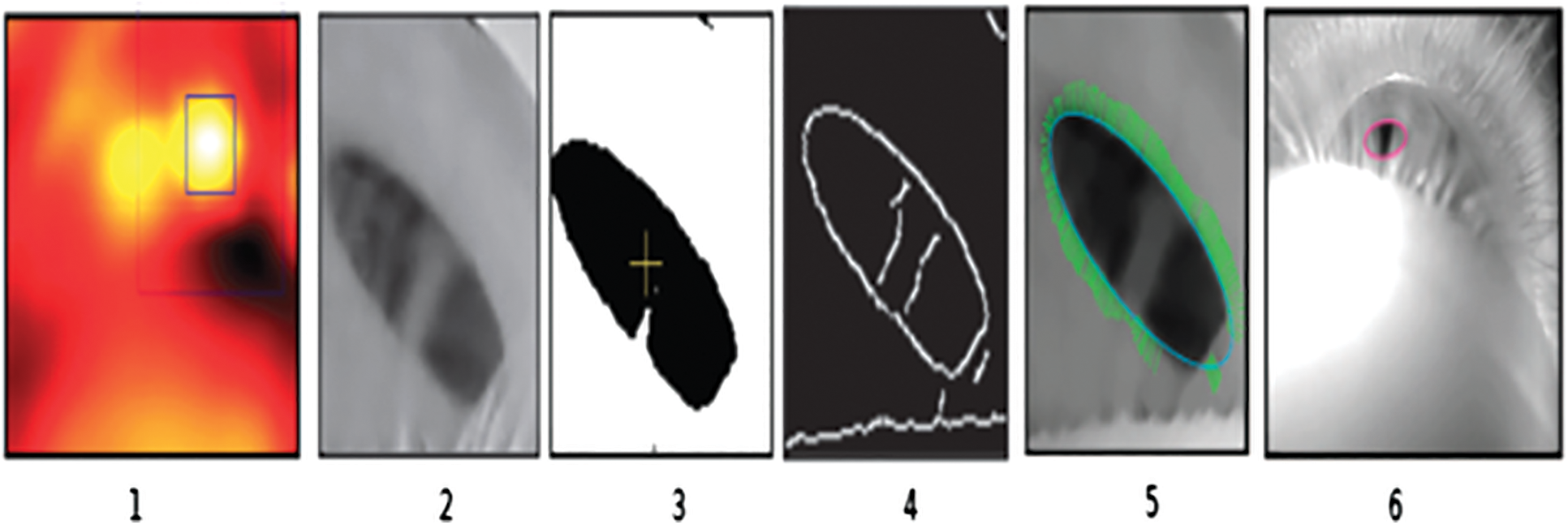

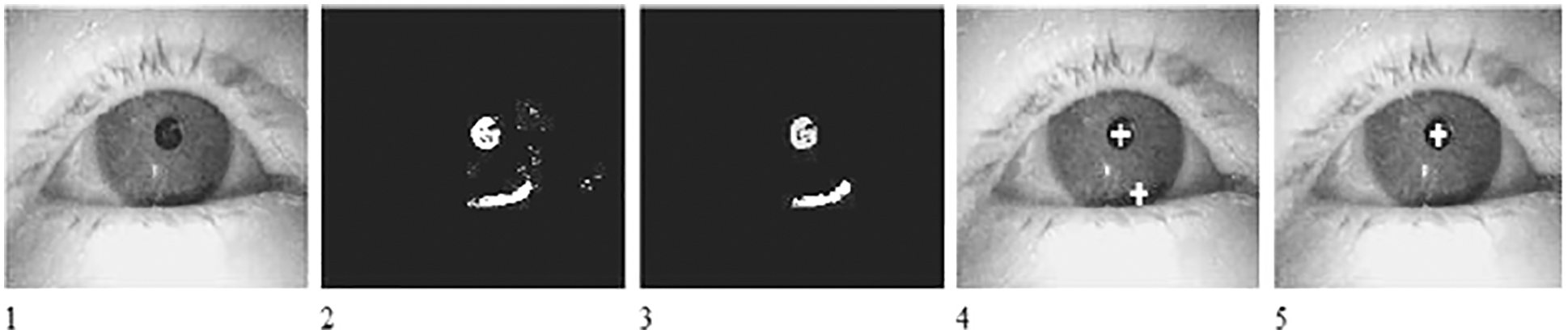

Świrski et al. [17] proposed an algorithm with the following steps, shown in Fig. 1: (1) the eye image is convolved with a Haar-like center-surround feature; (2) the region of maximum response identifies the pupil region; (3) the pupil region is segmented by k-means clustering of its histogram; (4) the segmented pupil region is passed through the Canny edge detector; (5) random sample consensus (RANSAC) ellipse fitting is used to fit the pupil ellipse; and (6) the pupil is detected.
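Steps (1) and (2), a center-surround response followed by taking its maximum, can be sketched with an integral image so that every box sum costs four lookups. This is a minimal sketch, not Świrski et al.'s code: the square center box of half-width `r`, the surround of half-width `3 * r`, and the function names are assumptions made for illustration.

```python
import numpy as np


def integral_image(img):
    """Summed-area table with a leading row and column of zeros."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1))
    ii[1:, 1:] = img.astype(float).cumsum(axis=0).cumsum(axis=1)
    return ii


def box_sum(ii, y0, x0, y1, x1):
    """Sum of img[y0:y1, x0:x1]; works element-wise on index arrays."""
    return ii[y1, x1] - ii[y0, x1] - ii[y1, x0] + ii[y0, x0]


def center_surround_peak(gray, r):
    """Return (x, y) where mean(surround ring) - mean(centre box) is largest.

    Centre: the 2r x 2r box around (x, y). Surround: the 6r x 6r box minus
    the centre (the factor 3 is an assumption). A dark pupil on a brighter
    iris gives a large response. Only positions where the whole 6r x 6r box
    fits inside the image are evaluated.
    """
    h, w = gray.shape
    ii = integral_image(gray)
    ys, xs = np.mgrid[3 * r : h - 3 * r + 1, 3 * r : w - 3 * r + 1]
    inner = box_sum(ii, ys - r, xs - r, ys + r, xs + r)
    outer = box_sum(ii, ys - 3 * r, xs - 3 * r, ys + 3 * r, xs + 3 * r)
    inner_mean = inner / (2 * r) ** 2
    ring_mean = (outer - inner) / ((6 * r) ** 2 - (2 * r) ** 2)
    response = ring_mean - inner_mean
    k = np.argmax(response)
    return int(xs.ravel()[k]), int(ys.ravel()[k])
```

In practice such a response would be evaluated over several radii `r` and the strongest one kept; the peak only marks a region of interest for the later segmentation, edge, and ellipse-fitting steps.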

Figure 1: Świrski et al. approach [17]

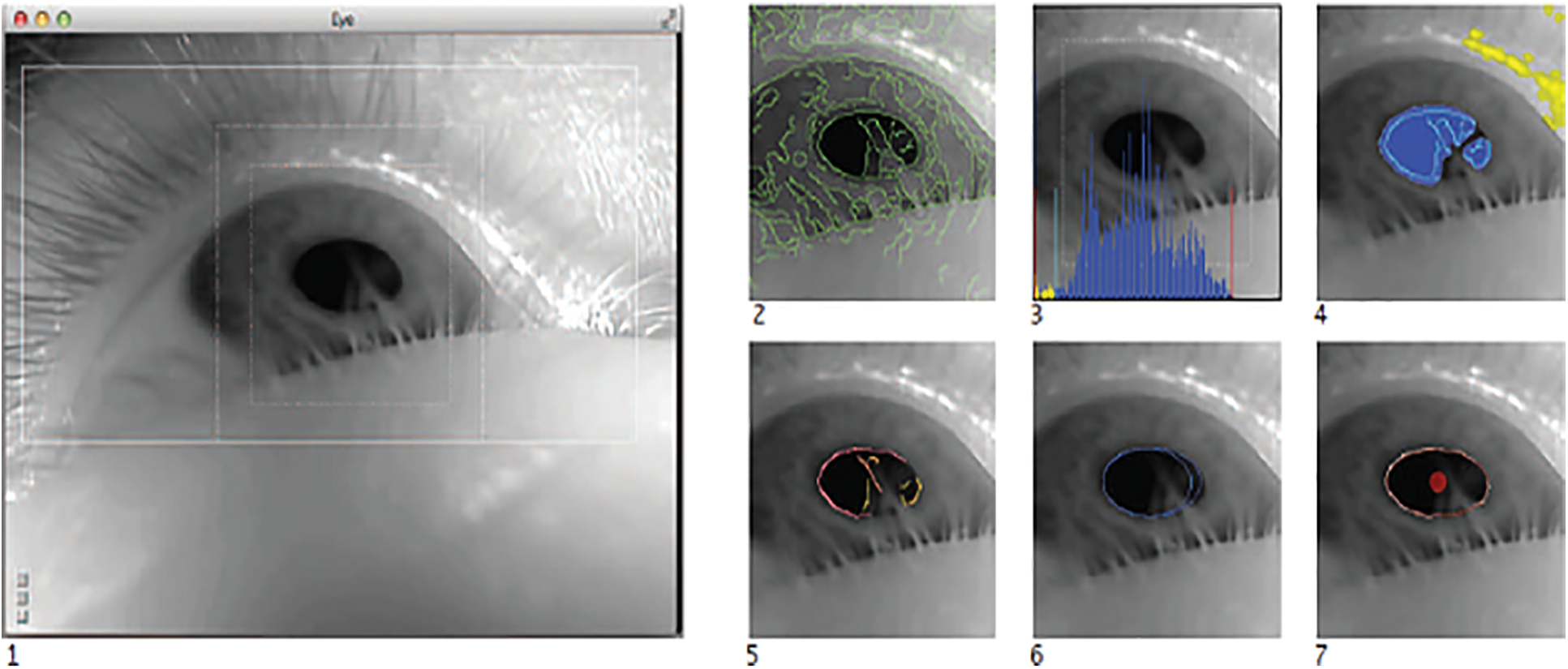

Pupil Labs [14] introduced the Pupil Labs detector, used in the head-mounted eye-tracking platform Pupil, as shown in Fig. 2. (1) The detector converts the eye image to grayscale; within the user-defined region of interest (white stroke rectangle), the initial estimate of the pupil region (white square and dashed-line square) is found via the strongest response to a center-surround feature, as proposed by Świrski et al. [17]. (2) Canny edge detection (green lines) is used to detect the edges in the eye image, and these edges are filtered according to neighboring pixel intensity. (3) The algorithm looks for darker areas using a dark value, defined as the lowest-intensity peak in the histogram of pixel intensities in the eye image, plus a user-set offset. (4) The edges are filtered to keep those in "dark" areas (blue) and to exclude specular reflections (yellow). (5) The remaining edges are split into sub-contours (multi-colored lines) based on curvature continuity criteria [18]. (6) Ellipses are fitted to candidate pupil contours (blue). (7) Finally, the pupil ellipse and its center are shown in red.
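Step (3) can be sketched as follows: find the darkest peak of the intensity histogram and keep the pixels no brighter than that value plus a user offset. This is a simplified sketch under stated assumptions; the 5-bin smoothing, the `min_fraction` peak guard, the default `offset=20`, and the function names are hypothetical choices, not parameters documented for the Pupil Labs detector.

```python
import numpy as np


def dark_value(gray, min_fraction=0.005):
    """Intensity of the darkest peak in the grey-level histogram.

    A bin counts as a peak if it is a local maximum of the 5-bin smoothed
    histogram and holds at least `min_fraction` of all pixels (an assumed
    guard against isolated noisy bins). `gray` must be a uint8 image.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    smooth = np.convolve(hist, np.ones(5) / 5.0, mode="same")
    min_count = min_fraction * gray.size
    for v in range(1, 255):
        if smooth[v] >= min_count and smooth[v] > smooth[v - 1] and smooth[v] >= smooth[v + 1]:
            return v
    return int(np.argmax(smooth))  # fallback: global histogram peak


def dark_mask(gray, offset=20):
    """Pixels no brighter than the dark value plus a user-chosen offset."""
    return gray.astype(int) <= dark_value(gray) + offset
```

Restricting the later edge filtering to this mask is what lets step (4) discard edges that belong to bright structures such as reflections.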

Starburst [16] introduced an algorithm with the following steps, shown in Fig. 3: (1) the input image is smoothed with a Gaussian filter to reduce noise; (2) an adaptive threshold is used to locate the corneal reflection, and candidate pupil edge points are found by casting rays from a starting position and detecting where the intensity change exceeds an edge threshold; (3) each detected edge point is then used as a new starting point from which rays are cast back in the opposite direction, toward the starting position, producing further candidate points on the pupil contour; (4) this search is repeated iteratively until convergence; and (5) finally, RANSAC ellipse fitting estimates the pupil center.
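The ray-casting idea of steps (2) to (4) can be sketched as below. It is a simplified, hypothetical version: it casts rays outward only, omits the backward rays of step (3) and the RANSAC fit of step (5), and moves the starting point to the mean of the detected edge points until it stops moving. The ray count, the intensity-step threshold, and the function names are assumptions.

```python
import numpy as np


def cast_rays(gray, start, n_rays=18, threshold=20.0, max_len=200):
    """Edge points found along rays cast outward from `start` = (x, y).

    Each ray advances one pixel at a time and stops at the first sample
    whose intensity exceeds the previous sample by more than `threshold`
    (the dark-pupil to brighter-iris transition).
    """
    h, w = gray.shape
    img = gray.astype(float)
    x0, y0 = float(start[0]), float(start[1])
    points = []
    for theta in np.linspace(0.0, 2 * np.pi, n_rays, endpoint=False):
        dx, dy = np.cos(theta), np.sin(theta)
        prev = img[int(round(y0)), int(round(x0))]
        for step in range(1, max_len):
            x, y = int(round(x0 + step * dx)), int(round(y0 + step * dy))
            if not (0 <= x < w and 0 <= y < h):
                break
            if img[y, x] - prev > threshold:
                points.append((x, y))
                break
            prev = img[y, x]
    return np.array(points, dtype=float).reshape(-1, 2)


def starburst_center(gray, start, iters=10, tol=1.0):
    """Re-centre the start point on the mean edge point until it moves < tol."""
    c = np.asarray(start, dtype=float)
    for _ in range(iters):
        pts = cast_rays(gray, c)
        if len(pts) == 0:
            break
        new_c = pts.mean(axis=0)
        moved = np.linalg.norm(new_c - c)
        c = new_c
        if moved < tol:
            break
    return c
```

When the starting point lies inside a roughly circular dark pupil, the mean of the edge points falls between the starting point and the true center, so repeating the step pulls the estimate toward the center; in the full method, the converged edge points are what RANSAC fits an ellipse to.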

The Exclusive Curve Selector approach (ExCuSe) [13], proposed by Fuhl et al. in 2015, is a recent method in which pupil detection is based on edge detection [19] and morphological operations, and the pupil center and radius are calculated with a RANSAC ellipse fitting algorithm, as shown in Fig. 4. First, the input image is normalized and its histogram is calculated; whether a peak is found in the bright area of the histogram (indicating reflections) determines which of the two processing paths in Fig. 4 is followed. Fig. 4 shows the following: (1) input image with many reflections; (2) the image filtered by Canny edge detection; (3) morphological operators thin the edges; (4) all remaining edges are smoothed and analyzed with respect to their curvature, and orthogonal edges are removed using morphological operators; (5) for each remaining edge curve, the mean intensity of the enclosed region is calculated, and the curve with the lowest value (best edge) is chosen as the pupil curve, an ellipse is fitted to it, and its center is taken as the pupil center; (6) input image without reflections; (7) the coarse pupil position is estimated with the angular integral projection function (AIPF) [19]; (8) Canny edge detection is used to refine the pupil position; (9) the pupil edge (white line) is estimated from the optimized edges, shown as white dots (ray hits); and (10) the pupil center is estimated.

The Sinusoidal Eye Tracker (SET) approach [15], proposed by Javadi et al., is a recent method that combines manual and automatic estimation of the pupil center. Before pupil detection, SET requires two parameters to be set manually: (a) the threshold used to convert the input image to a binary image (Fig. 5(2)) and (b) the segment size used to detect the pupil (Fig. 5(3)). The image is thresholded and then segmented into groups of pixels. The convex hull method presented in [20] is used to compute the borders of the segments larger than the manually predefined size. An ellipse is then fitted to each extracted segment (Fig. 5(4)), and the pupil edge is taken as the ellipse closest to a circle (Fig. 5(5)).
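The SET selection rule (keep sufficiently large dark segments and choose the one closest to a circle) can be sketched as follows. This sketch substitutes a moment-based ellipse, computed from the covariance of each segment's pixel coordinates, for SET's convex-hull and ellipse-fitting steps, and assumes a dark pupil (pixels below `threshold`); `threshold` and `min_size` stand in for the two manually set parameters, and the function names are hypothetical.

```python
from collections import deque

import numpy as np


def label_segments(mask):
    """4-connected component labelling of a boolean mask by breadth-first search."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=int)
    count = 0
    for sy, sx in zip(*np.nonzero(mask)):
        if labels[sy, sx]:
            continue
        count += 1
        labels[sy, sx] = count
        queue = deque([(sy, sx)])
        while queue:
            y, x = queue.popleft()
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = count
                    queue.append((ny, nx))
    return labels, count


def axis_ratio(xs, ys):
    """Minor/major axis ratio of the moment-equivalent ellipse (1.0 = circle)."""
    evals = np.linalg.eigvalsh(np.cov(np.vstack([xs, ys]).astype(float)))
    return float(np.sqrt(max(evals[0], 0.0) / evals[1])) if evals[1] > 0 else 0.0


def most_circular_segment(gray, threshold, min_size):
    """Centroid and axis ratio of the roundest dark segment with >= min_size pixels."""
    labels, count = label_segments(gray < threshold)
    best, best_ratio = None, -1.0
    for k in range(1, count + 1):
        ys, xs = np.nonzero(labels == k)
        if ys.size < min_size:
            continue
        ratio = axis_ratio(xs, ys)
        if ratio > best_ratio:
            best, best_ratio = (float(xs.mean()), float(ys.mean())), ratio
    return best, best_ratio
```

The size filter discards small dark specks such as eyelash fragments, while the axis-ratio test rejects elongated dark regions such as eyelid shadows, which mirrors the role of SET's two manual parameters.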

Figure 2: The Pupil Labs approach [14]

Figure 3: The Starburst approach [15,16]

Figure 4: Exclusive Curve Selector (ExCuSe) approach [13]

Figure 5: SET approach [15]

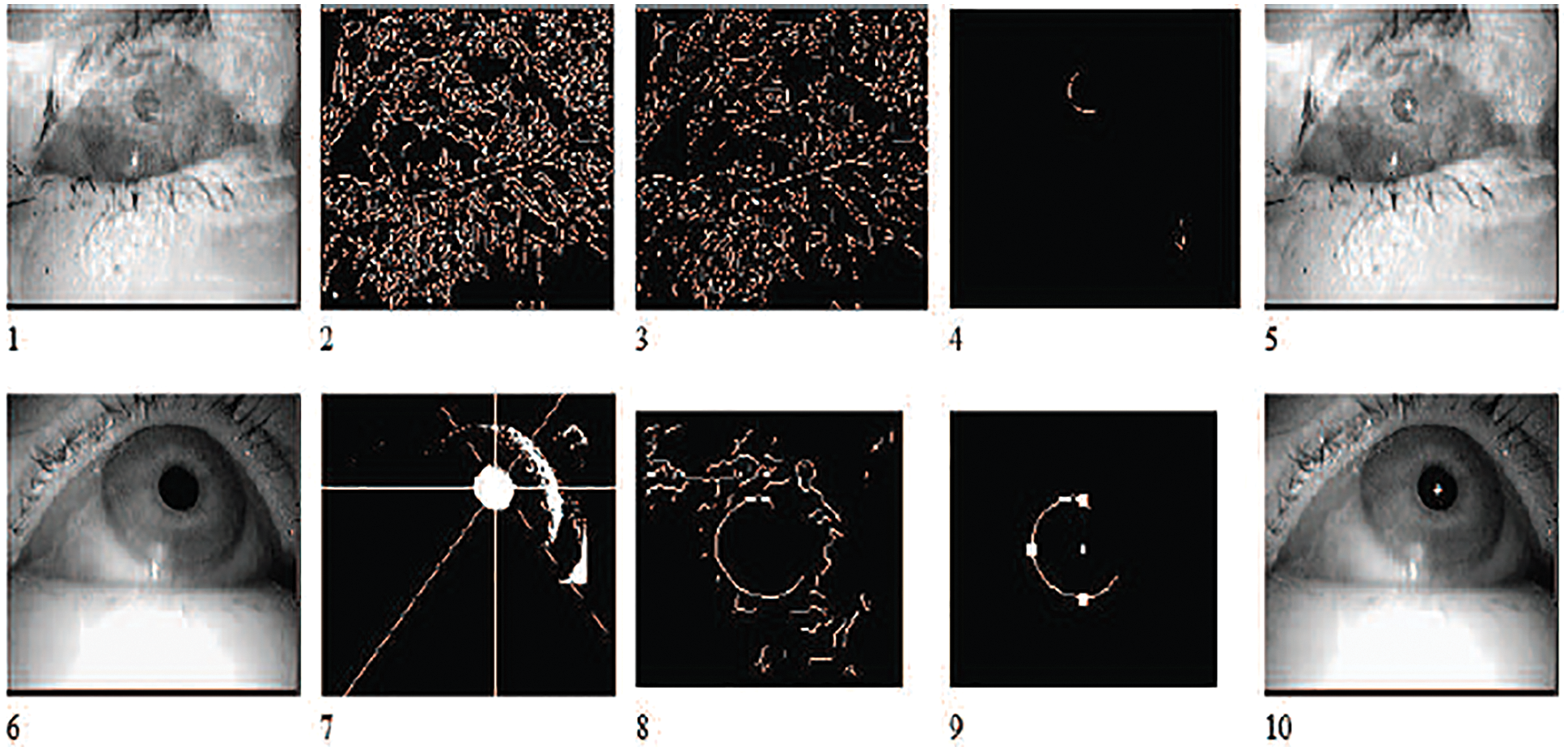

ElSe [12], proposed by Fuhl et al. in 2016, is a recent method in which pupil detection is based on edge detection (the Canny edge detector) and morphological operators, and the pupil center and radius are calculated with an ellipse fitting algorithm. As shown in Fig. 6: (1) input image; (2) a Canny edge filter is applied to the input eye image, and morphological operators remove the edges that cannot satisfy the curvature property of the pupil edge; (3) the remaining edges that satisfy the intensity and curvature (elliptic) properties are collected, and an ellipse is fitted to each; (4) the edges with lower enclosed intensity values and more circular ellipses are chosen as the pupil boundary; (5) if ElSe [12] fails to find a valid ellipse describing the pupil edge, a second analysis is performed in which the input image (6) is downscaled (7) to keep the dark regions and to reduce the blur and noise caused by eyelashes in the eye image; (8) the downscaled image is convolved with two cascaded filters, (a) a surface filter that calculates the area difference between an inner circle and a surrounding box and (b) a mean filter, and the ElSe [12] algorithm takes the position of the maximum response as the starting point of the refinement process for the pupil area estimate; (9) this starting point is optimized on the full-scale image to avoid a distance error in the pupil center, since it was selected in the downscaled image; and (10) the pupil center and radius are calculated by ellipse fitting according to a decision-based approach, as described in [4].
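The downscale-then-refine idea of steps (5) to (9) can be sketched as follows, mainly to show how a position chosen in the downscaled image maps back to full resolution and why it needs refinement there. It is a simplified, hypothetical sketch: block averaging stands in for ElSe's downscaling, a darkest-block search replaces its surface-difference and mean filters, the refinement takes the center of mass of the dark pixels in a window assumed large enough to contain the pupil, and the function names and defaults are assumptions.

```python
import numpy as np


def downscale(gray, f):
    """Block-average downscaling by an integer factor f (any remainder is cropped)."""
    h, w = (gray.shape[0] // f) * f, (gray.shape[1] // f) * f
    return gray[:h, :w].astype(float).reshape(h // f, f, w // f, f).mean(axis=(1, 3))


def refine_center(gray, x0, y0, half):
    """Centre of mass of the dark pixels in a full-resolution window around (x0, y0)."""
    y1, y2 = max(0, y0 - half), min(gray.shape[0], y0 + half + 1)
    x1, x2 = max(0, x0 - half), min(gray.shape[1], x0 + half + 1)
    patch = gray[y1:y2, x1:x2].astype(float)
    thr = (patch.min() + patch.max()) / 2.0  # assumed mid-range split
    ys, xs = np.nonzero(patch <= thr)
    return x1 + xs.mean(), y1 + ys.mean()


def coarse_to_fine_center(gray, f=4, half=40):
    """Pick the darkest block at 1/f scale, map it back, then refine at full scale."""
    small = downscale(gray, f)
    i, j = np.unravel_index(np.argmin(small), small.shape)
    # centre of block (i, j) expressed in full-resolution pixel coordinates
    x0, y0 = int(j * f + f // 2), int(i * f + f // 2)
    return (x0, y0), refine_center(gray, x0, y0, half)
```

For a uniformly dark pupil, many blocks tie for darkest and `argmin` returns the first one in row-major order, so the coarse point can lie well away from the true center; the full-resolution refinement removes that offset only if its window covers the whole pupil.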

Figure 6: Ellipse Selector approach (ElSe) [12]

3 The Proposed CHMCEP Algorithm

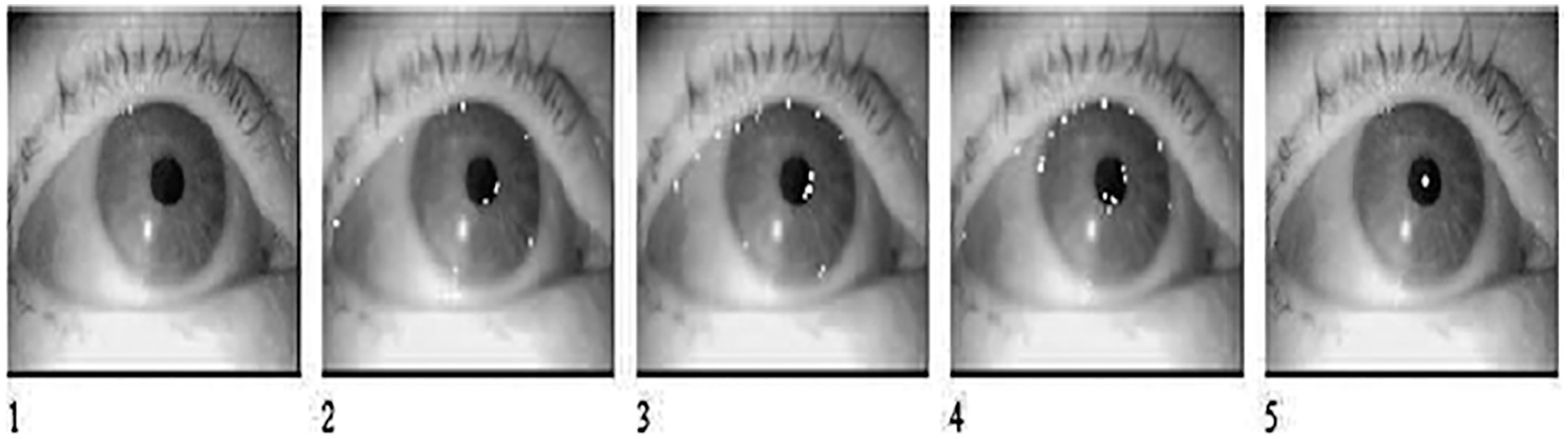

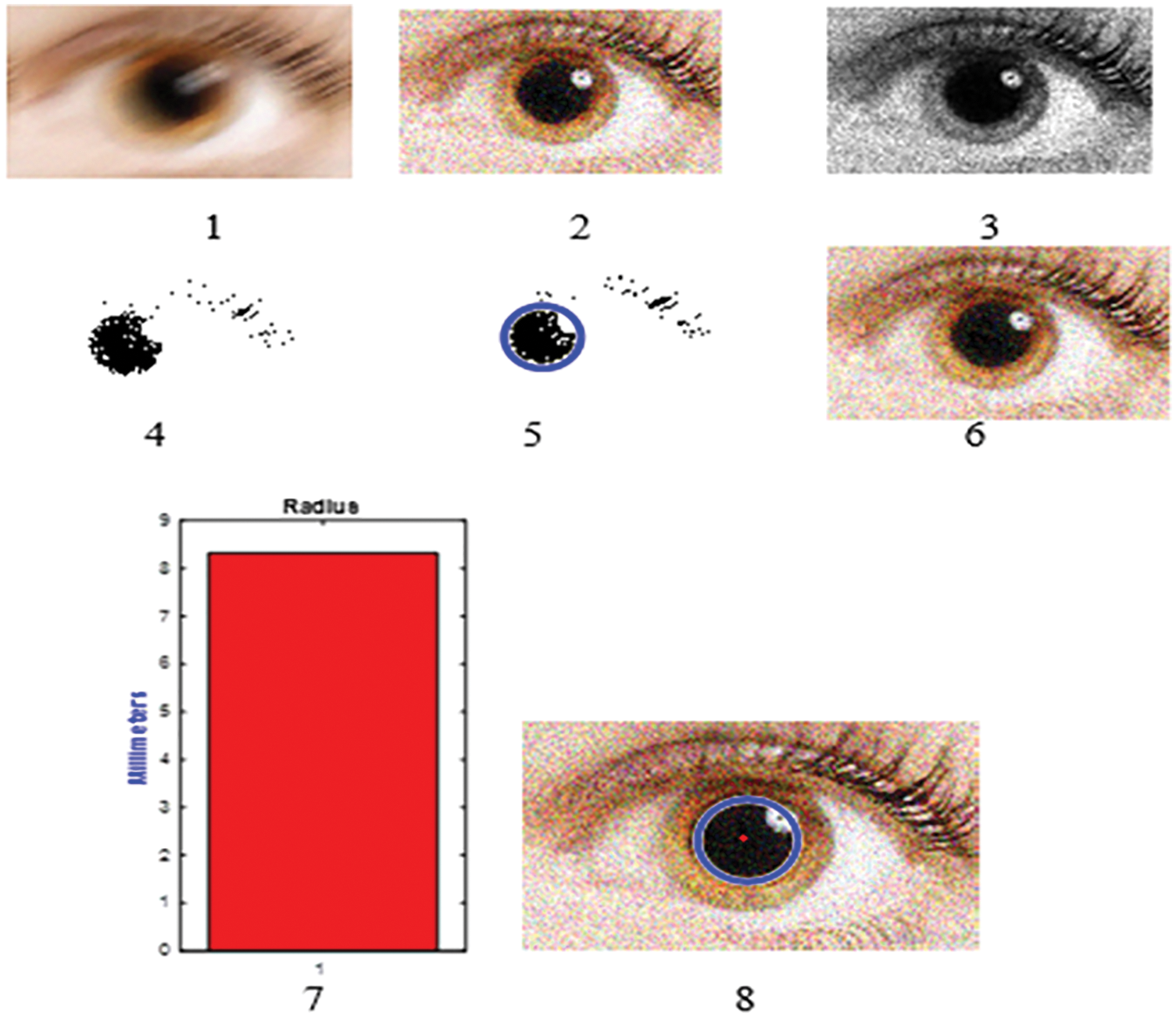

This paper introduces the CHMCEP algorithm to detect the pupil edge and calculate the pupil center and radius more accurately than previous attempts. The proposed CHMCEP algorithm can handle even blurred or noisy images by using different filtering methods in the first stage to remove blur and noise and, in the last stage, a second filtering process before the circular Hough transform used for center fitting, to improve accuracy. First, the input image is restored by removing the blur and the additive noise in the input image (Fig. 7(1)). This is done by convolving the image separately with three different filters: a motion filter to remove the blur, a mean filter to cancel image noise, and a Wiener filter for final smoothing, which together give a better restoration of the input image from blur and noise (Fig. 7(2)). The restored input image is then converted from RGB to a grayscale (intensity) image (Fig. 7(3)), which exposes the bright/dark pupil difference and makes the eye limbus visible in the image. Next, the intensity image is converted to a binary image using a gray-level threshold found by Otsu's method [21], which selects the threshold automatically from the image histogram (Fig. 7(4)). The proposed CHMCEP algorithm then uses the Canny edge detection algorithm [22] to refine the pupil position by detecting the edges in the eye image and filtering them according to neighboring pixel intensity. Morphological operators then remove the edges that do not satisfy the curvature or elliptic property of the pupil edge, and the remaining edges that satisfy the intensity and curvature (elliptic) properties are collected to fit an ellipse.

The algorithm selects the edges with lower intensity values and more circular or elliptic shapes as the pupil edge contour (Fig. 7(5)). The proposed CHMCEP algorithm uses four morphing operators, skeleton, clean, fill, and spur, to thin and smooth the edges (Fig. 7(5)). These operators improve pupil edge detection by removing edge connections between the pupil edge and its surroundings. Finally, the proposed CHMCEP algorithm convolves the image with two cascaded filters, a mean filter and a surface difference filter, as in the ElSe algorithm [4,6], and then applies a threshold to extract the pupil region and calculate its center of mass. The pupil center coordinates are then detected by the circular Hough transform for center fitting [23] (Fig. 7(6)). The calculation of the pupil radius is illustrated in Fig. 7(7), and the resulting pupil edge and center are shown in Fig. 7(8). Fig. 8 shows the proposed algorithm applied to a normal image and to a blurred, noisy image.
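Two of the steps above, Otsu thresholding [21] (Fig. 7(4)) and circular Hough voting for the pupil center and radius [23] (Fig. 7(6) and (7)), can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation: the restoration filters and morphological operators are omitted, a simple 4-neighbour boundary of the binary mask stands in for Canny edge detection, and the function names, the radius range, and the number of voting angles are assumptions.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu's method: the grey level t maximising the between-class variance.

    Pixels <= t form the dark class. `gray` must be a uint8 image.
    """
    p = np.bincount(gray.ravel(), minlength=256).astype(float)
    p /= p.sum()
    omega = np.cumsum(p)                    # probability of the dark class (levels 0..t)
    mu = np.cumsum(p * np.arange(256))      # cumulative first moment
    mu_t = mu[-1]                           # global mean intensity
    denom = omega * (1.0 - omega)
    sigma_b = np.zeros(256)
    valid = denom > 0
    sigma_b[valid] = (mu_t * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b))


def boundary(mask):
    """Mask pixels with at least one 4-neighbour outside the mask (stand-in for Canny)."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    interior = p[1:-1, 1:-1] & p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return mask & ~interior


def hough_circle(edges, radii, n_angles=90):
    """Circular Hough transform: return (x, y, r) of the most-voted circle."""
    h, w = edges.shape
    ys, xs = np.nonzero(edges)
    thetas = np.linspace(0.0, 2 * np.pi, n_angles, endpoint=False)
    best = (0, 0, 0, -1)
    for r in radii:
        acc = np.zeros((h, w), dtype=np.int32)
        # every edge pixel votes for all candidate centres at distance r from it
        cx = np.rint(xs[:, None] - r * np.cos(thetas)).astype(int).ravel()
        cy = np.rint(ys[:, None] - r * np.sin(thetas)).astype(int).ravel()
        ok = (cx >= 0) & (cx < w) & (cy >= 0) & (cy < h)
        np.add.at(acc, (cy[ok], cx[ok]), 1)
        y, x = np.unravel_index(np.argmax(acc), acc.shape)
        if acc[y, x] > best[3]:
            best = (int(x), int(y), int(r), int(acc[y, x]))
    return best[:3]
```

Usage follows the order of Fig. 7: threshold the intensity image with `otsu_threshold`, extract edges from the resulting mask, and pass them with a plausible pupil-radius range to `hough_circle`. Because every edge pixel votes independently, a partly occluded pupil boundary can still produce a clear accumulator peak, which is one reason the circular Hough transform is a common choice for center fitting.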

Figure 7: The proposed algorithm process for pupil edge detection and center calculation for a blurred image: (1) blurred image with noise, (2) image restoration, (3) intensity image, (4) binary image, (5) edge detection with morphing techniques applied, (6) pupil center calculation, (7) pupil radius calculation, (8) resulting image with pupil edge and center

Figure 8: The proposed algorithm applied to a normal image and to a blurred, noisy image: (1) blurred and noisy image, (2) resulting pupil edge and center, (3) input image, (4) resulting pupil edge and center

4 Data Sets

The Świrski et al. data set [17] (indoor images) contains 600 manually labeled, high-resolution (640 × 480 pixels) eye images of two subjects; its pupil detection challenges come from the highly off-axis camera position and occlusion of the pupil by the eyelid. The ExCuSe data set [2] (outdoor images recorded during on-road driving [23] and a supermarket search task [4]) contains 38,401 high-quality, manually labeled eye images (384 × 288 pixels) from 17 different subjects. It is more challenging for pupil detection because of varying illumination conditions and many reflections due to eyeglasses and contact lenses. The ElSe data set [4] contains 55,712 eye images (384 × 288 pixels) from 7 subjects wearing a Dikablis eye tracker during various tasks. Its challenges come from the pupil being occluded or shadowed by eyelids and eyelashes. In the ElSe data set [4], the eye images of data sets XVIII, XIX, XX, XXI, and XXII have low pupil contrast, motion blur, and reflections. The LPW data set [22] contains 66 high-quality eye videos recorded from 22 subjects using a head-mounted eye tracker [16], a total of 130,856 video frames; its challenge is that it covers a wide range of realistic indoor and outdoor illumination conditions, subjects wearing glasses or eye make-up, and variable skin tones, eye colors, and face shapes.

5 Results and Discussion

We compare the proposed CHMCEP algorithm with the previous state-of-the-art algorithms Starburst [16], SET [15], Świrski et al. [17], Pupil Labs [14], ExCuSe [2], and ElSe [4]. Performance is evaluated in terms of the detection rate at different pixel errors, as in [24]. The detection rate is the number of images in which the detected pupil center lies within the given pixel error, relative to the total number of images in each data set. The pixel error e is the Euclidean distance between the detected pupil-center point (PCP) $p_d(x_d, y_d)$ and the manually selected PCP $p_m(x_m, y_m)$, as shown in Eq. (1):

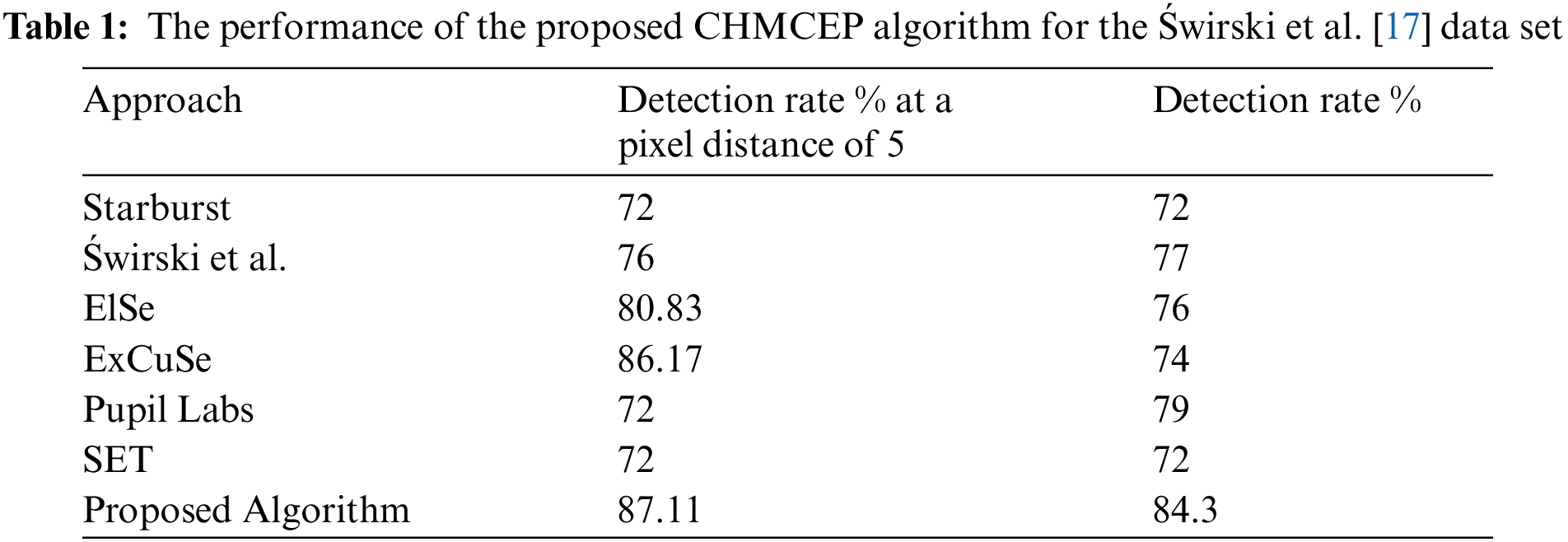
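Eq. (1) and the detection-rate definition translate directly into code. The sketch below assumes that detected and manually labeled centers are given as (x, y) pairs per image, with NaN marking images where no pupil was found (counted as misses); the function names are hypothetical.

```python
import numpy as np


def pixel_errors(detected, manual):
    """Eq. (1): Euclidean distance between detected and manual pupil centres."""
    d = np.asarray(detected, dtype=float)
    m = np.asarray(manual, dtype=float)
    return np.hypot(d[:, 0] - m[:, 0], d[:, 1] - m[:, 1])


def detection_rate(detected, manual, max_error):
    """Percentage of images whose pixel error e is at most max_error.

    Images without a detection are passed as (nan, nan); since any comparison
    with NaN is False, they always count as misses.
    """
    e = pixel_errors(detected, manual)
    return 100.0 * np.count_nonzero(e <= max_error) / e.size
```

Evaluating `detection_rate` over a range of maximum pixel errors yields a detection-rate curve of the kind plotted in Figs. 9 to 12.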

Fig. 9 illustrates the performance of the proposed CHMCEP algorithm on the Świrski et al. [17] data set, compared with the results reported in [24]. ExCuSe and ElSe achieve better detection rates than the other state-of-the-art methods, 86.17% and 80.83%, respectively, and the proposed CHMCEP algorithm achieves a better detection rate of 87.11%. At a pixel error of 5, it reaches a detection rate of approximately 84.3%, while the other state-of-the-art methods remain below 70%, as shown in Tab. 1.

Figure 9: The proposed CHMCEP algorithm performance for the Świrski et al. [17] data set

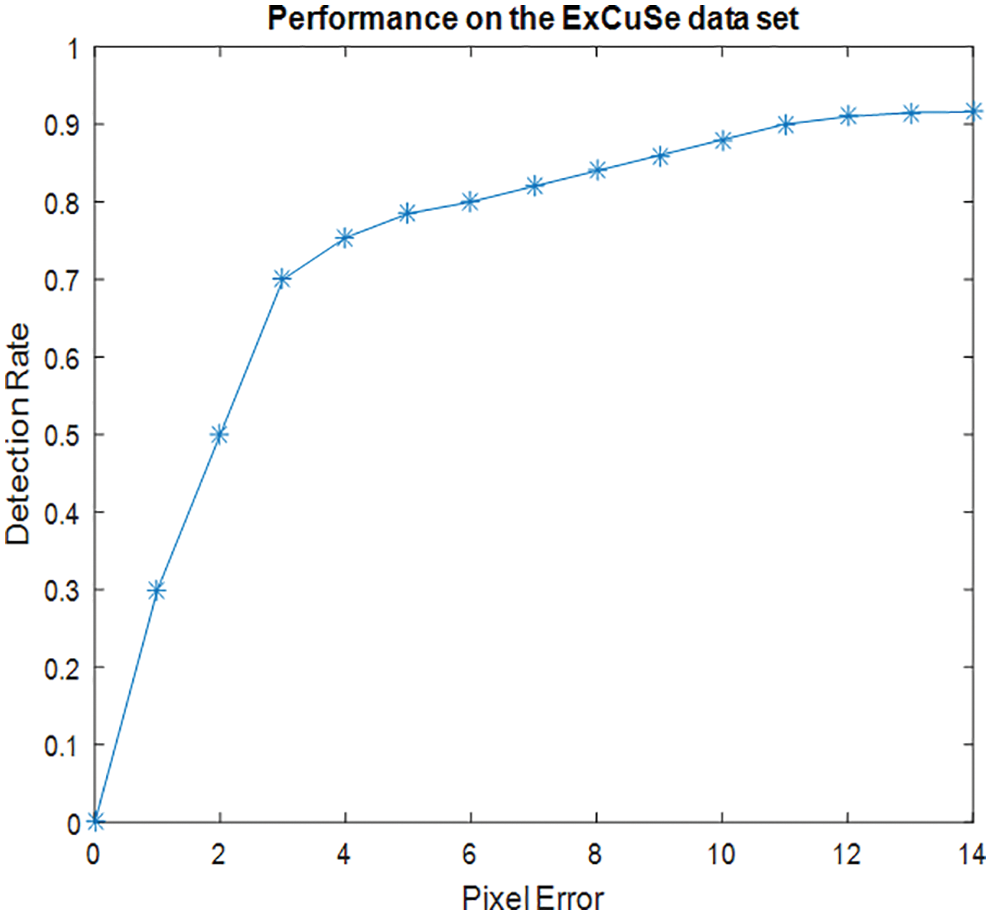

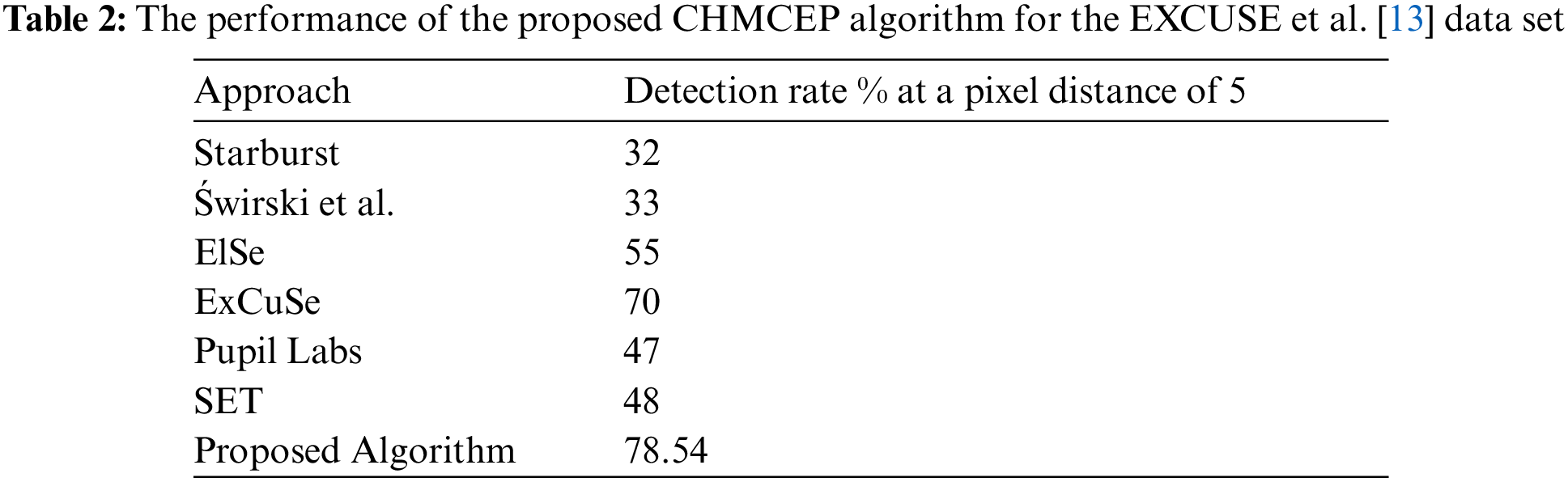

Fig. 10 illustrates the performance of the proposed CHMCEP algorithm on the ExCuSe [13] data set, compared with the results reported in [24]. ExCuSe and ElSe achieve better detection rates than the other state-of-the-art methods, 55% and 70%, respectively, whereas the remaining algorithms achieve detection rates below 30% at a pixel error of 5. The proposed CHMCEP algorithm achieves a better detection rate of 78.54% at a pixel error of 5, as shown in Tab. 2.

Figure 10: The proposed CHMCEP algorithm performance for ExCuSe [13] data set

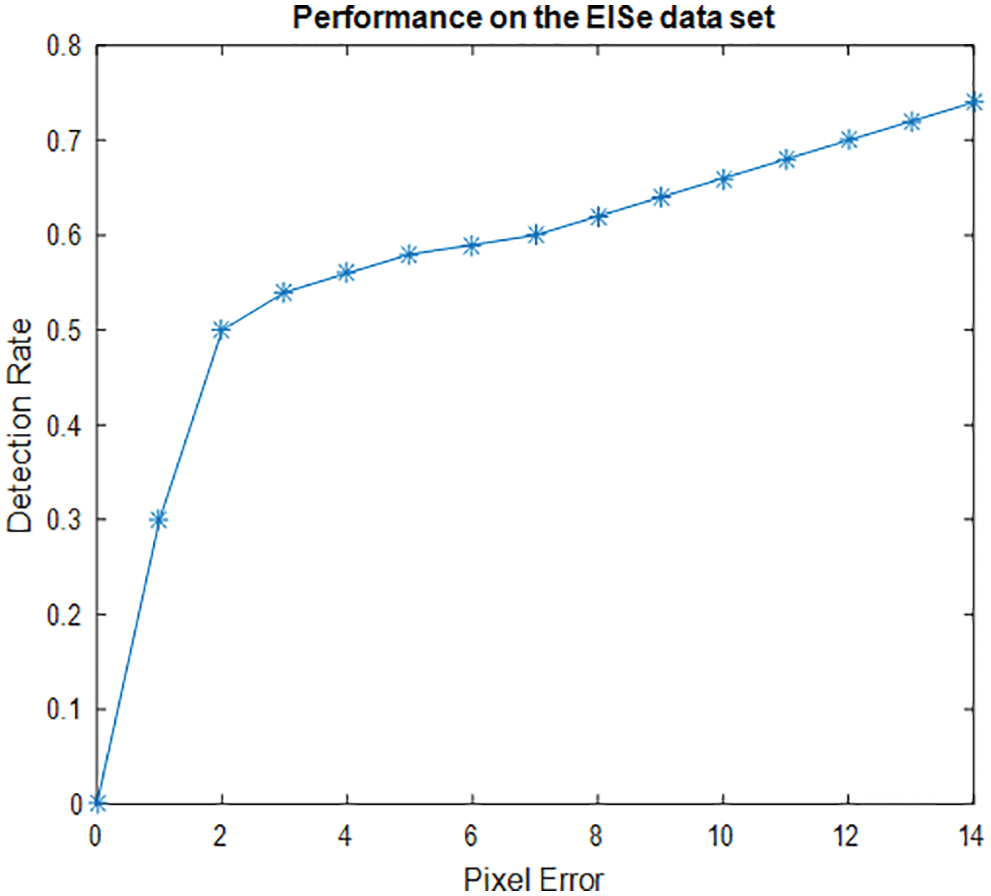

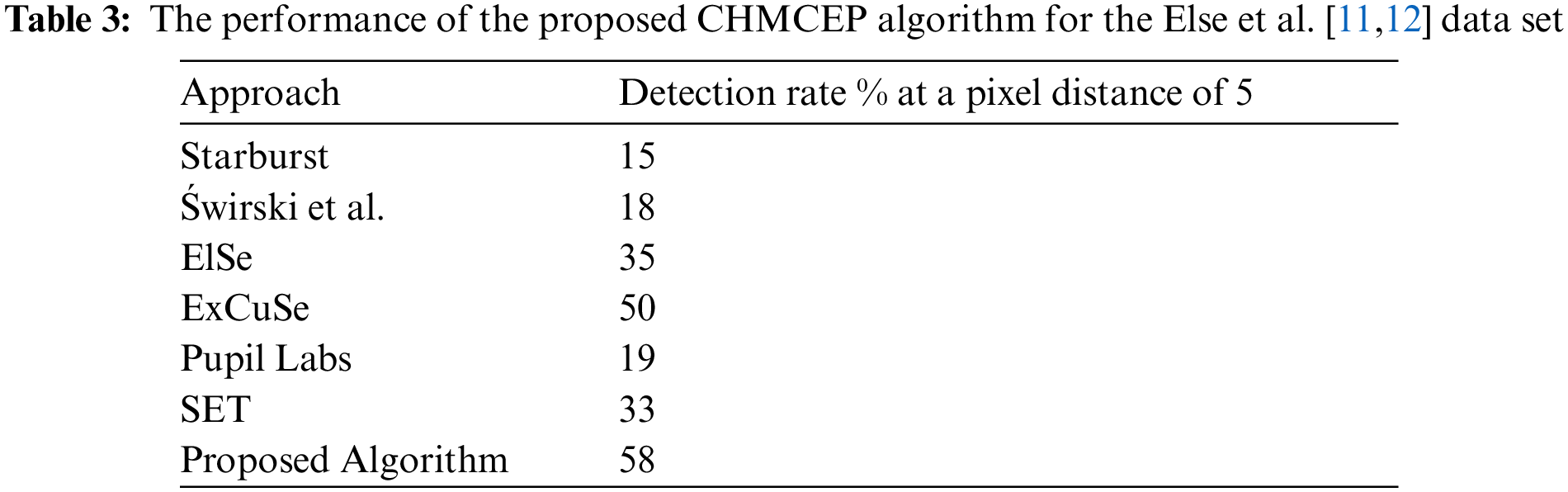

Fig. 11 shows the performance of the proposed CHMCEP algorithm on the ElSe [12] data set, compared with the results reported in [25]. ExCuSe and ElSe achieve better detection rates than the other state-of-the-art methods, 35% and 50%, respectively, whereas the remaining algorithms achieve detection rates of at most 10% at a pixel error of 5. The proposed CHMCEP algorithm achieves a better detection rate of 58% at a pixel error of 5, as shown in Tab. 3.

Figure 11: The proposed CHMCEP algorithm performance for the ElSe [12] data set

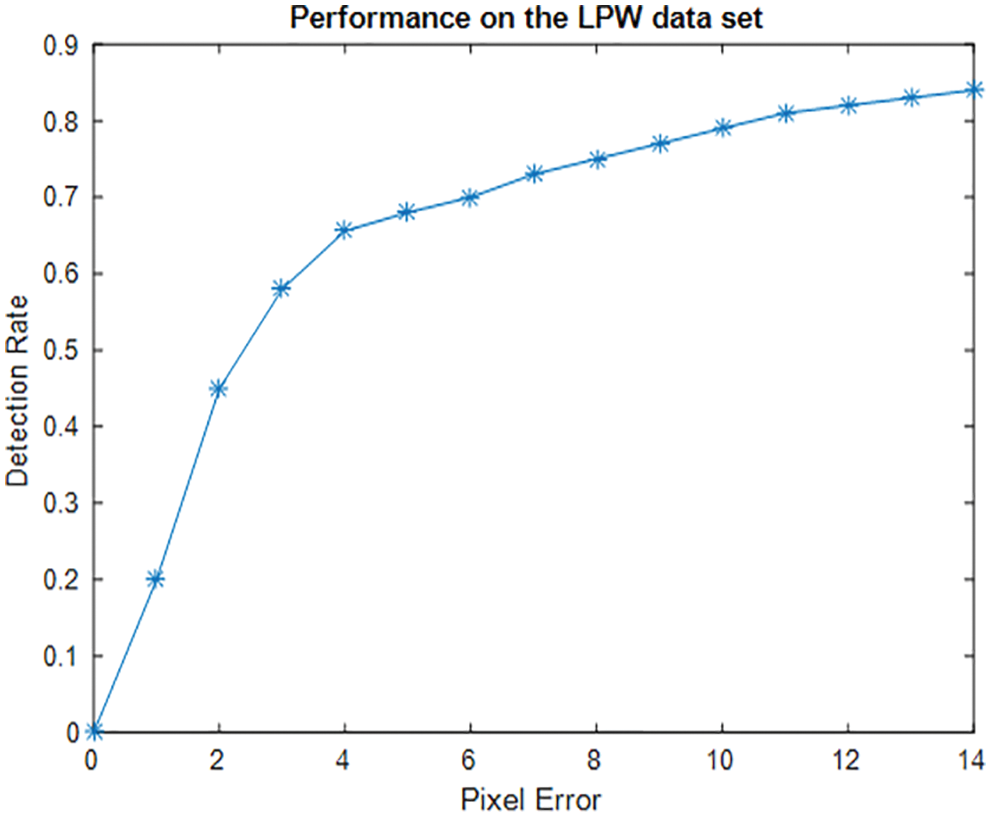

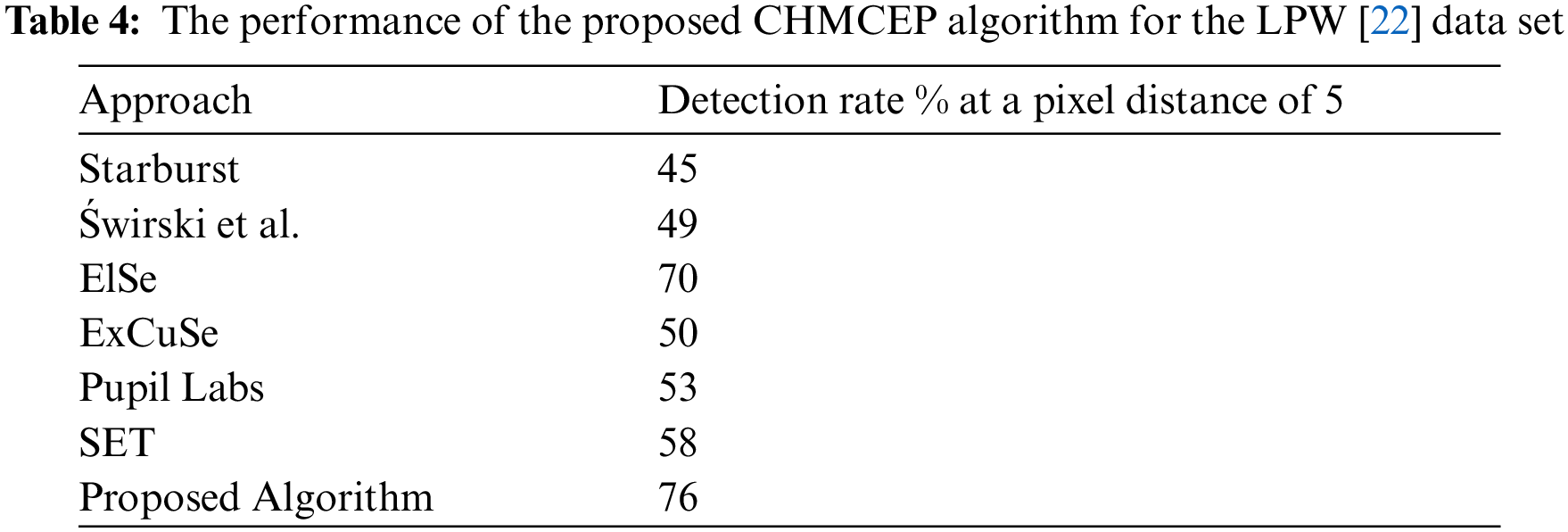

Fig. 12 illustrates the performance of the proposed CHMCEP algorithm on the LPW [22] data set, compared with the results reported in [24]. ElSe can be considered the most robust of the compared algorithms for outdoor challenges: its detection rate is 70%, and it is 50% for ExCuSe and Świrski et al., whereas the remaining algorithms achieve detection rates below 40% at a pixel error of 5. The proposed CHMCEP algorithm achieves a better detection rate of 76% at a pixel error of 5, as shown in Tab. 4.

In the following figures (Figs. 13 to 16), we compare the performance of the proposed CHMCEP algorithm with the results shown in Fig. 11 of [24], which illustrate the limitations of the six algorithms. Fig. 13 shows the results for data set XIX. Data set XIX (Fig. 11 in [25]) is characterized by reflections that cause edges on the pupil but not at its boundary. Since most of the six methods are based on edge filtering, they are very likely to fail to detect the pupil boundary. As a result, the detection rates achieved on this data set are very poor.

Figure 12: The proposed CHMCEP algorithm performance for LPW [22] data set

Figure 13: The proposed CHMCEP algorithm performance for XIX data set

Fig. 14 shows the results for data set XXI, which (Fig. 11 in [25]) poses challenges related to poor illumination conditions, leading to an iris with low intensity values. This makes it very difficult to separate the pupil from the iris (e.g., in Canny edge detection, the responses are discarded because they are too low). Moreover, this data set contains reflections, which have a negative effect on the edge filter response. Whereas ElSe and ExCuSe achieve detection rates of around 45%, the remaining approaches detect the pupil center in only 10% of the eye images. Fig. 15 shows the results for data set XXVIII, which (Fig. 11 in [25]) is recorded from a highly off-axis camera position.

Figure 14: The proposed CHMCEP algorithm performance for XXI data set

Figure 15: The proposed CHMCEP algorithm performance for XXVIII data set

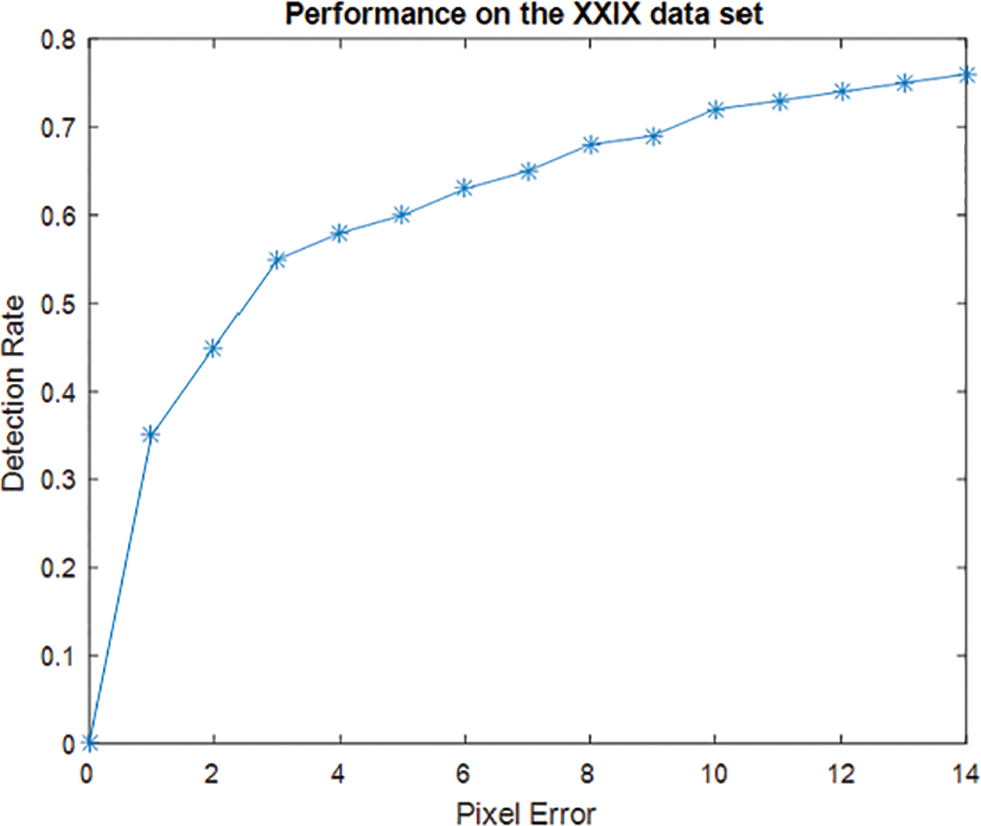

Fig. 16 shows the performance of the proposed CHMCEP algorithm on the last challenging data set, XXIX. In this data set, the frame of the subjects' glasses covers the pupil, and most of the images are heavily blurred. This leads to unsuitable responses from the Canny edge detector. As a result, the detection rates are very poor; e.g., ElSe (the best-performing of the six compared algorithms) detects the pupil in only 25% of the eye images at a pixel error of 5. The proposed CHMCEP algorithm achieves a better detection rate of 60% at a pixel error of 5.

Figure 16: The proposed CHMCEP algorithm performance for XXIX data set

The proposed CHMCEP algorithm makes a further contribution: Fig. 17 shows successful pupil detections by the proposed CHMCEP algorithm on eye images from data sets XXI and XXIX, in contrast to the other attempts. The proposed CHMCEP algorithm was also tested against the pupil detection algorithm (PDA) proposed in [26] using two databases of eye images. Database (A) contains 400 infrared (IR) eye images with a resolution of 640 × 480 pixels, captured with the head-mounted device developed in [26], and Database (B) contains 400 IR eye images with a resolution of 640 × 480 pixels from the publicly available CASIA-Iris-Lamp database [27]. The algorithm was implemented with the Python 3 bindings of OpenCV and run on the same machine as in [26].

Fig. 18 illustrates the performance of the proposed CHMCEP algorithm on databases A and B [26,27]. The proposed CHMCEP algorithm performs better than the PDA algorithm [1] and ExCuSe [2]: it reaches a detection rate of approximately 100% at a pixel error of 8, whereas the PDA algorithm [28–31] reaches approximately 100% only at a pixel error of 10 [32–37].

Figure 17: Success cases of the proposed CHMCEP algorithm for Data sets XXI and XXIX. The left column presents the input images and the right column presents the proposed CHMCEP algorithm

Figure 18: The proposed CHMCEP algorithm performance for databases A & B in [26,27]

6 Conclusion

The present work introduces a robust pupil detection algorithm that achieves higher accuracy than the previous attempts ElSe [12], ExCuSe [13], Pupil Labs [14], SET [15], Starburst [16], and Świrski et al. [17] and can be used in real-time analysis applications. The proposed CHMCEP algorithm successfully detects the pupil in blurred or noisy images by using different filtering methods in its first stage to remove blur and noise, followed by a second filtering process before the circular Hough transform used for center fitting to ensure better accuracy. From the simulations, we conclude that the proposed CHMCEP algorithm performs better than ElSe [12] and the other attempts. Because it uses two filtering stages with different filtering methods, the proposed CHMCEP algorithm detects the pupils in data sets XXI (bad illumination and reflections, ElSe [12]) and XXIX (glasses frame covering the pupil and blurred images, LPW [22]) more successfully than the other attempts, especially ElSe [12]. The weakness of ElSe may stem from choosing the pupil center position in the downscaled image, which causes a distance error in the pupil center position in the full-scale image, since the subsequent position optimization may itself introduce error. The proposed algorithm shows good performance on these challenging data sets, which are characterized by reflections, blur, or poor illumination conditions.

Acknowledgement: The authors appreciate “TAIF UNIVERSITY RESEARCHERS SUPPORTING PROJECT, grant number TURSP-2020/345”, Taif University, Taif, Saudi Arabia for supporting this work.

Funding Statement: This research was funded by “TAIF UNIVERSITY RESEARCHERS SUPPORTING PROJECT, grant number TURSP-2020/345”, Taif University, Taif, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. A. L. Bergera, G. Garde, S. Porta, R. Cabeza and A. Villanueva, “Accurate pupil center detection in off-the-shelf eye tracking systems using convolutional neural networks,” Sensors, vol. 21, no. 6847, pp. 1–14, 2021. [Google Scholar]

2. A. Pasarica, R. G. Bozomitu, V. Cehan, R. G. Lupu and C. Rotariu, “Pupil detection algorithms for eye tracking applications,” in Int. Symp. for Design and Technology in Electronic Packaging, 21st Int. Conf. on, Brasov, Romania, IEEE, 21, pp. 161–164, 2015. [Google Scholar]

3. C. Braunagel, W. Stolzmann, E. Kasneci and W. Rosenstiel, “Driver-activity recognition in the context of conditionally autonomous driving,” in IEEE 18th Int. Conf. on Intelligent Transportation Systems, Gran Canaria, Spain, vol. 18, pp. 1652–1657, 2015. [Google Scholar]

4. K. Sippel, E. Kasneci, K. Aehling, M. Heister, W. Rosenstiel et al., “Binocular glaucomatous visual field loss and its impact on visual exploration-a supermarket study,” PLOS, vol. 9, no. 8, pp. 1–7, 2014. [Google Scholar]

5. Q. Ali, I. Heldal, C. G. Helgesen, G. Krumina, C. Costescu et al., “Current challenges supporting school-aged children with vision problems: A rapid review,” Applied Sciences, vol. 11, no. 9673, pp. 1–23, 2021. [Google Scholar]

6. N. Kumar, S. Kohlbecher and E. Schneider, “A novel approach to video- based pupil tracking,” in Proc. of IEEE SMC, San Antonio, TX, USA, vol. 2, pp. 1–8, 2009. [Google Scholar]

7. L. Lin, L. Pan, L. F. Wei and L. Yu, “A robust and accurate detection of pupil images,” in Proc. of IEEE Biomedical Engineering and Informatics (BMEI), Yantai, China, vol. 3, pp. 70–74, 2010. [Google Scholar]

8. J. S. Agustin, E. Mollenbach and M. Barret, “Evaluation of a low-cost open-source gaze tracker,” in Proc. of ETRA, ACM, Austin, TX, USA, vol. 2010, pp. 77–80, 2010. [Google Scholar]

9. A. Keil, G. Albuquerque, K. Berger and M. Andreas, “Real-time gaze tracking with a consumer-grade video camera,” in Proc. of WSCG, Plzen, Czech Republic, vol. 2, pp. 1–6, 2010. [Google Scholar]

10. J. N. Sari, H. Adi, L. Edi, I. Santosa and R. Ferdiana, “A study of algorithms of pupil diameter measurement,” in 2nd Int. Conf. on Science and Technology-Computer (ICST), Yogyakarta. Indonesia, 2, pp. 1–6, 2016. [Google Scholar]

11. A. M. S. Saif and M. S. Hossain, “A study of pupil orientation and detection of pupil using circle algorithm: A review,” International Journal of Engineering Trends and Technology (IJETT), vol. 54, no. 1, pp. 12–16, 2017. [Google Scholar]

12. W. Fuhl, T. C. Santini, T. Kübler and E. Kasneci, “Else: Ellipse selection for robust pupil detection in real-world environments,” in Proc. of 9th Biennial ACM Symp. on Eye Tracking Research & Applications. ACM, New York. NY. USA, ETRA, 9, pp. 123–130, 2016. [Google Scholar]

13. W. Fuhl, T. K. ̈ubler, K. Sippel, W. Rosenstiel and E. Kasneci, “ExCuSe: Robust pupil detection in real-world scenarios,” in Int. Conf. on Computer Analysis of Images and Patterns, Valletta, Malta, Springer, 2015, pp. 39–51, 2015. [Google Scholar]

14. M. Kassner, W. Patera and A. Bulling, “An open-source platform for pervasive eye tracking and mobile gazebased interaction,” in Adjunct Proc. of the 2014 ACM Int. Joint Conf. on Pervasive and Ubiquitous Computing (UbiComp), Seattle Washington, USA, vol. 2014, pp. 1151–1160, 2014. [Google Scholar]

15. A. H. Javadi, Z. Hakimi, M. Barati, V. Walsh and L. Tcheang, “Set: A pupil detection method using sinusoidal approximation,” Frontiers in neuroengineering, vol. 8, no. 4, pp. 1–10, 2015. [Google Scholar]

16. D. Li, D. Winfield and D. J. Parkhurst, “Starburst: A hybrid algorithm for video-based eye tracking combining feature-based and model-based approaches,” in CVPR Workshops. IEEE Computer Society Conf. on Computer Vision and Pattern Recognition-Workshops, 2005, San Diego, CA, USA, IEEE, vol. 2005, pp. 79–86, 2005. [Google Scholar]

17. L. Świrski, A. Bulling and N. Dodgson, “Robust real-time pupil tracking in highly off-axis images,” in Proc. of the Symp. on Eye Tracking Research and Applications (ETRA), Santa Barbara California, USA, ACM, 2012, pp. 173–176, 2012. [Google Scholar]

18. S. Suzuki and K. Abe, “Topological structural analysis of digitized binary images by border following,” Computer Vision. Graphics, and Image Processing, vol. 1, no. 32, pp. 32–46, 1985. [Google Scholar]

19. G. J. Mohammed, B. R. Hong and A. A. Jarjes, “Accurate pupil features extraction based on new projection function,” Computing and Informatics, vol. 29, no. 4, pp. 663–680, 2012. [Google Scholar]

20. T. H. Cormen, C. E. Leiserson, R. L. Rivest and C. Stein, Introduction to algorithms, Third edition, Cambridge: The MIT press, pp. 1014–1047, 2009. [Google Scholar]

21. N. Ostu, “A threshold selection method from gray-level histograms,” IEEE Transactions on Systems, Man and Cybernetics, vol. 9, no. 1, pp. 62–66, 1979. [Google Scholar]

22. J. Canny, “A computational approach to edge detection,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. PAMI-8, no. 6, pp. 679–698, 1986.

23. N. Cherabit, F. Z. Chelali and A. Djeradi, “Circular Hough transform for iris localization,” Science and Technology, vol. 2, no. 5, pp. 114–121, 2012.

24. E. Kasneci, K. Sippel, K. Aehling, M. Heister, W. Rosenstiel et al., “Driving with binocular visual field loss? A study on a supervised on-road parcours with simultaneous eye and head tracking,” PLoS ONE, vol. 9, no. 2, pp. 122–136, 2014.

25. W. Fuhl, M. Tonsen, A. Bulling and E. Kasneci, “Pupil detection for head-mounted eye tracking in the wild: An evaluation of the state of the art,” Machine Vision and Applications, vol. 27, no. 8, pp. 1275–1288, 2016.

26. P. Bonteanu, A. Cracan, R. G. Bozomitu and G. Bonteanu, “A new robust pupil detection algorithm for eye tracking based human-computer interface,” in Int. Symp. on Signals, Circuits and Systems (ISSCS), Iasi, Romania, pp. 1–4, 2019.

27. J. Žunić, K. Hirota and P. L. Rosin, “A Hu moment invariant as a shape circularity measure,” Pattern Recognition, vol. 43, no. 1, pp. 47–57, 2010.

28. W. Sun, G. Z. Dai, X. R. Zhang, X. Z. He and X. Chen, “TBE-Net: A three-branch embedding network with part-aware ability and feature complementary learning for vehicle re-identification,” IEEE Transactions on Intelligent Transportation Systems, vol. 4, no. 9, pp. 1–13, 2021.

29. W. Sun, L. Dai, X. R. Zhang, P. S. Chang and X. Z. He, “RSOD: Real-time small object detection algorithm in UAV-based traffic monitoring,” Applied Intelligence, vol. 9, no. 10, pp. 1–16, 2021.

30. N. Zdarsky, S. Treue and M. Esghaei, “A deep learning-based approach to video-based eye tracking for human psychophysics,” Frontiers in Human Neuroscience, vol. 15, no. 10, pp. 1–18, 2021.

31. S. Kim, M. Jeong and B. C. Ko, “Energy efficient pupil tracking based on rule distillation of cascade regression forest,” Sensors, vol. 20, no. 12, pp. 1–18, 2020.

32. K. I. Lee, J. H. Jeon and B. C. Song, “Deep learning-based pupil center detection for fast and accurate eye tracking system,” in European Conf. on Computer Vision, Berlin/Heidelberg, Germany, Springer, 12, pp. 36–52, 2020.

33. A. F. Klaib, N. O. Alsrehin, W. Y. Melhem, H. O. Bashtawi and A. A. Magableh, “Eye tracking algorithms, techniques, tools, and applications with an emphasis on machine learning and Internet of Things technologies,” Expert Systems with Applications, vol. 166, no. 21, pp. 166–181, 2021.

34. R. G. Lupu, R. G. Bozomitu, A. Păsărică and C. Rotariu, “Eye tracking user interface for Internet access used in assistive technology,” in Proc. of the 2017 E-Health and Bioengineering Conf. (EHB), 22–24 June 2017, Piscataway, NJ, USA, IEEE, pp. 659–662, 2017.

35. S. Said, S. Kork, T. Beyrouthy, M. Hassan, O. Abdellatif et al., “Real time eye tracking and detection – A driving assistance system,” Advances in Science, Technology and Engineering Systems Journal, vol. 3, no. 6, pp. 446–454, 2018.

36. M. Wedel, R. Pieters and R. van der Lans, “Eye tracking methodology for research in consumer psychology,” in Handbook of Research Methods in Consumer Psychology, New York, NY, USA: Routledge, pp. 276–292, 2019.

37. M. Meißner, J. Pfeiffer, T. Pfeiffer and H. Oppewal, “Combining virtual reality and mobile eye tracking to provide a naturalistic experimental environment for shopper research,” Journal of Business Research, vol. 100, no. 4, pp. 445–458, 2019.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |