DOI:10.32604/cmc.2022.019706

Computers, Materials & Continua

Article

IoMT-Enabled Fusion-Based Model to Predict Posture for Smart Healthcare Systems

1Faculty of Information Science and Technology, Universiti Kebangsaan Malaysia, UKM, 43600, Selangor, Malaysia

2Skyline University College, Sharjah, United Arab Emirates

*Corresponding Author: Taher M. Ghazal. Email: ghazal1000@gmail.com

Received: 22 April 2021; Accepted: 26 August 2021

Abstract: Smart healthcare applications depend on data from wearable sensors (WSs) mounted on a patient’s body to provide frequent monitoring information. Healthcare systems rely on multi-level data to detect illnesses and, consequently, to deliver correct diagnostic measures. Collecting WS data and integrating it for diagnostic purposes is a difficult task. This paper proposes an Errorless Data Fusion (EDF) approach to increase posture recognition accuracy. The research is based on a case study in a health organization. With the rise of smart healthcare systems, WS data fusion requires careful attention so that the recognized illness can be analyzed sensitively. Fusion therefore depends on WS inputs and performs group analysis at a similar rate to improve diagnostic efficiency. Sensor breakdowns, the constant time factor, aggregation, and analysis results all introduce errors, which lead to rejected or incorrect suggestions. This paper addresses the problem with EDF, which relates to patient situational discovery through healthcare surveillance systems. Features of WS data are examined extensively using active and iterative learning to identify errors in specific postures. The technique improves posture detection accuracy and reduces analysis duration and error rate, regardless of user movements. Wearable devices play a critical role in the management and treatment of patients, and they can ensure that each patient receives treatment tailored to their medical needs. This paper discusses the EDF technique for optimizing posture identification accuracy through multi-feature analysis. First, the patients’ walking patterns are tracked at various time intervals. The characteristics are then evaluated against the stored data using a random forest classifier.

Keywords: Data fusion (DF); posture recognition; healthcare systems (HCS); wearable sensor (WS); medical data; errorless data fusion (EDF)

1 Introduction

A Wearable Sensor (WS) is used in healthcare systems to provide technical assistance and remote patient monitoring. The sensor is attached to the user’s body and detects their motions at various time intervals. The collected information is forwarded to a healthcare facility, which suggests treatments for the remote user [1]. On a heterogeneous platform, the data is shared and compared with a predefined dataset made up of medical data and patient information [2], including the patient’s history of communication with healthcare providers and of therapy. Medical data is therefore sensitive and private, and it must be protected against unauthorized access [3], which may pose a serious threat to the patient’s health. The WS monitors body-function-related concerns at predetermined intervals and stores the data [4]. Errors and latency may occur when the data is stored, and these problems must be addressed at the outset. In healthcare, WS data is an important element of safe data transfer [5].

Microelectromechanical System (MEMS) technology is used in data analysis to improve the quality of medical data. It helps caregivers acquire the relevant data needed to provide appropriate treatment to the patient [6]. Data prediction is used to assess serial and current medical data, and the predicted data is then compared with the current data [7]. Creating such a forecast improves accuracy and enhances the security of medical data. Data analysis (DA) is accomplished by developing sensor-improved health information systems for decision-making [8]. The data is evaluated in a timely manner to determine whether it is relevant. Data collection and comparison are performed within a set period, and a delay results in an error [5]. The data is therefore received from the WS so that the task is completed within the specified period. This provides safe DA and transmits the outcomes to the healthcare system (HCS) for evaluation [9].
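The timed predict-and-compare step described above can be sketched as follows. This is a minimal illustration, not the method of [5] or [7]. It assumes a fixed sampling period and uses a simple moving average as the predictor. The names `Reading` and `check_stream` and all thresholds are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    t: float      # arrival time in seconds (hypothetical field)
    value: float  # sensor value, e.g. a heart-rate sample (hypothetical)


def check_stream(readings, period=1.0, tolerance=0.2, window=3, max_dev=10.0):
    """Label each reading 'ok', 'late', or 'deviation'.

    A reading is 'late' if it arrives more than `tolerance` seconds after the
    expected time (previous arrival + `period`). Otherwise, once `window`
    past values exist, it is compared with a moving-average prediction and
    labelled 'deviation' if it differs by more than `max_dev`.
    """
    flags, history, prev_t = [], [], None
    for r in readings:
        if prev_t is not None and r.t - prev_t > period + tolerance:
            flags.append("late")
        elif len(history) >= window and abs(r.value - mean(history[-window:])) > max_dev:
            flags.append("deviation")
        else:
            flags.append("ok")
        history.append(r.value)  # every reading, even a late one, feeds the predictor
        prev_t = r.t
    return flags


stream = [Reading(0, 70), Reading(1, 71), Reading(2, 72),
          Reading(3, 71), Reading(4.5, 72), Reading(5.5, 95)]
print(check_stream(stream))  # ['ok', 'ok', 'ok', 'ok', 'late', 'deviation']
```

Separating the "late" check from the value check mirrors the text: a delayed reading is an error in itself, regardless of its value.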

Data fusion (DF) is performed on medical data using extraction and classification techniques. The retrieved data is categorized to reduce errors and improve the accuracy of the medical system [10]. WS fusion is achieved by combining data from numerous sensors, and sensor fusion merges these data so that the result is less ambiguous than any single source. Three forms of DF can be used: low level, feature level, and decision level [11]. Low-level DF merges the information from two sensors, while feature-level DF extracts and combines medical data features [12]. Decision-level DF uses current medical data to reach an appropriate judgment. Three fusion models are used for DA: reactive, proactive, and interactive [13].
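The three DF levels can be contrasted in a short sketch. The operators are illustrative assumptions, not the specific methods of [11] or [12]:

- averaging at the low level,
- concatenating summary statistics at the feature level,
- majority voting at the decision level.

All function names and sample values are hypothetical.

```python
from collections import Counter
from statistics import mean, pstdev


def low_level_fusion(signal_a, signal_b):
    """Low-level (data-level) fusion: merge two synchronized raw signals
    sample by sample, here by averaging."""
    return [(a + b) / 2 for a, b in zip(signal_a, signal_b)]


def feature_level_fusion(signal_a, signal_b):
    """Feature-level fusion: extract the same features from each sensor and
    concatenate them into one feature vector."""
    def features(s):
        return [mean(s), pstdev(s), max(s) - min(s)]
    return features(signal_a) + features(signal_b)


def decision_level_fusion(decisions):
    """Decision-level fusion: combine per-sensor class decisions by majority vote."""
    return Counter(decisions).most_common(1)[0][0]


left_leg = [0.9, 1.1, 1.4, 1.0]    # hypothetical readings from two sensors
right_leg = [1.1, 0.9, 1.2, 1.0]
print(low_level_fusion(left_leg, right_leg))
print(feature_level_fusion(left_leg, right_leg))
print(decision_level_fusion(["walking", "sitting", "walking"]))  # walking
```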

Section 2 discusses the relevant research conducted to date to provide an overview of the current state of the field. Section 3 explains how the Errorless Data Fusion (EDF) approach is achieved. The collected data is sent for feature extraction, and the features are then classified. Finally, the random forest algorithm is used to achieve optimum data fusion. Section 4 presents a comparative study of the performance of the proposed EDF approach in terms of identification accuracy, fusion error, and detection time. The objective of this work is to use the Random Forest (RF) Machine Learning (ML) algorithm to enhance the precision of medical data by 20%. In this work, DF is accomplished by categorizing features within an assigned time period, and forecasting is conducted using an ML method.

2 Related Work

Past research studies have provided insight into the application of technology in healthcare institutions. Their findings indicate that the IoT is effective for connecting various health devices and systems [14–19]. Wang et al. [20] proposed a hybrid sensory system that monitors patients’ routine activities and identifies walking patterns. Human activity recognition is performed by fusing the data collected from the WSs. The features are categorized using a data-based Feature Selection (FS) approach and a Support Vector Machine (SVM). Gao et al. [21] presented a Long Short-Term Memory and Convolutional Neural Network (LSTM-CNN) fusion system that identifies abnormal posture using a wearable Inertial Measurement Unit (IMU). The study uses leg Euler angle data to calculate classification precision.

Rapid technological development has led to significant changes in the healthcare system. Healthcare organizations now depend on advanced technology to deliver healthcare products to patients, and the IoT has played a critical role in this change. The emergence of wearable sensors has significantly improved patient care and treatment, because it enables medical practitioners to monitor patients in the hospital or remotely. Various wearable devices are designed to meet the specific needs of patients. The IoT connects these wearable devices and helps gather important data and information, which can be used to make proper healthcare decisions and to treat the patient efficiently.

The concept of smart healthcare was introduced to support the delivery of efficient healthcare. Smart healthcare is regarded as a smart infrastructure that typically uses WSs to detect and perceive information and data from the patient. The gathered information is then transmitted through the IoT and processed using cloud computing and supercomputers. Smart healthcare can also coordinate integrated social systems to support the dynamic management of human services. It normally uses technologies such as wearable devices, the IoT, and the mobile internet to obtain data and to connect the individuals, institutions, and materials associated with healthcare. The gathered information is actively managed and used to respond intelligently to the needs of the medical ecosystem.

Smart healthcare is made up of patients, physicians, research institutes, and hospitals. It is viewed as an organic whole that includes illness control and monitoring, diagnosis, treatment, health decision-making, hospital administration, and medical research. The IoT, cloud computing, the mobile internet, 5G, big data, Artificial Intelligence (AI), and contemporary biotechnology are all examples of current Information Technology (IT) and are key components of smart healthcare [14]. These technologies can be used to monitor a patient’s health via wearable devices. Patients can also use wearable devices to obtain medical assistance and services from their own homes. Clinicians can handle medical data and information through an integrated information infrastructure that consists of a Laboratory Information Management System (LIMS), Electronic Medical Records (EMRs), an image archiving system, and other technologies. The adoption of Surgical Robots (SRs) and mixed reality techniques allows for more precise surgery, and medical platforms can give patients a better experience. Big data can also be used to examine specific medical issues. Overall, the use of the IoT and related technologies may decrease the risks and costs of medical procedures and processes.

The application of technologies such as AI, SRs, and mixed reality has made the diagnosis and treatment of illnesses more efficient and intelligent. In many reported cases, the diagnostic accuracy of AI and ML-based systems surpasses that of expert medical practitioners. In recent years, wearable IoT devices, which are embedded in or worn on the human body, have been increasingly adopted in various application fields. The architecture of the wearable IoT network enables it to store important health information that facilitates the treatment and management of patients. Integrating several types of sensors into wearable IoT devices has significantly improved their functionality. Smartwatches, for example, may be used not only for localization, entertainment, social networking, and payment, but also for health and routine-task tracking.

The software and hardware used in healthcare systems to support the IoT face challenges that can compromise their effectiveness, and unauthorized access is one of the most significant. Like any other internet-connected devices, smart healthcare services are vulnerable to hackers [16], and many wearable devices in the healthcare industry are subject to security threats and vulnerabilities. Intruders may gain access to the IoT network that connects numerous medical devices. They can then read and alter the data and information recorded on those devices, which may prevent patients from receiving effective treatment. Furthermore, illegal access to the health organization’s computer network may compromise patient data and give an attacker access to the patient’s private and sensitive information. It is therefore critical to implement cybersecurity measures that protect wearable devices from third-party attacks.

Zahra et al. [22] proposed multimodal sensor fusion (SF) to recognize actions in assembly production. Data from wearable IMU and electromyography (EMG) sensors are used to train a CNN, and performance depends on how the SF data are combined and predicted. Al-Amin et al. [23] developed a fusion method based on foot-mounted inertial sensors for detecting the posture of older people. In this study, the raw data are processed using a hidden Markov model and a Neural Network (NN) that detect six categories of posture. The training labels are obtained by rule-based recognition from optical motion capture. Wang et al. [24] introduced DF-based deterministic learning to handle different walking view angles when detecting human posture. The spatio-temporal characteristics of posture motion are extracted using a Radial Basis Function (RBF) network, and a deep Convolutional and Recurrent Neural Network (CRNN) is built to identify human posture.

Posture analysis can provide insight into how to improve patient care and ensure higher-quality care. Fendri et al. [25] described the classification of posture characteristics associated with knee osteoarthritis (OA). Their method is based on posture analysis using deterministic learning and distinguishes patients with knee OA from asymptomatic (AS) subjects. An RBF neural network (RBFNN) analyzes the postural patterns and increases accuracy by separating them. Nweke et al. [26] used a two-branch CNN (TCNN) for posture feature extraction and classification. Multi-Frequency Posture Energy Images (MF-GEIs) are used as the training inputs, and posture energy images are used to identify posture.
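A posture (gait) energy image is commonly computed as the pixel-wise average of aligned binary silhouettes over a walking cycle. The sketch below assumes silhouettes that are already segmented, size-normalized, and aligned. It does not reproduce the multi-frequency variant (MF-GEI) cited above, and the function name is hypothetical.

```python
def posture_energy_image(silhouettes):
    """Pixel-wise average of aligned binary silhouettes (lists of 0/1 rows).

    The result has the same size as each frame, with values in [0, 1]:
    1 where the body is present in every frame, 0 where it never is.
    """
    if not silhouettes:
        raise ValueError("need at least one silhouette frame")
    rows, cols = len(silhouettes[0]), len(silhouettes[0][0])
    if any(len(f) != rows or any(len(r) != cols for r in f) for f in silhouettes):
        raise ValueError("all frames must have the same size")
    n = len(silhouettes)
    return [[sum(f[i][j] for f in silhouettes) / n for j in range(cols)]
            for i in range(rows)]


frames = [[[1, 0], [1, 1]],
          [[1, 1], [0, 1]]]
print(posture_energy_image(frames))  # [[1.0, 0.5], [0.5, 1.0]]
```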

Abbas et al. [27] offer a method for posture recognition that accounts for covariate factors affecting behavioral biometric characteristics. Semantic information is used to improve posture-based identification accuracy. In this procedure, body parts are dynamically selected for each person to obtain the semantic information.

To evaluate human behavior and decrease the misrecognition rate, Tran et al. [28] proposed human action recognition based on multi-sensor fusion. Their approach to multi-sensor DF introduces a Multi-View Ensemble Algorithm (MVEA). The feature vector is generated using Logistic Regression (LR) and the K-Nearest Neighbor (K-NN) technique, and class imbalance is reduced using the Synthetic Minority Oversampling Technique (SMOTE). Hanif et al. [29] presented a data augmentation method for posture identification that trains a deep neural network on inertial sensor data. Two augmentation methods are used for efficient training: Arbitrary Time Deformation (ATD) and Stochastic Magnitude Perturbation (SMP). General postures are then recognized by a CNN.
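A sketch of the two augmentations follows. It makes two assumptions:

- ATD can be approximated by a smooth, monotonic random warping of the time axis.
- SMP can be approximated by scaling the whole signal with one random factor drawn from N(1, σ).

These are generic time-warping and magnitude-scaling augmentations, not necessarily the exact procedures of [29]. The parameter values and names are hypothetical.

```python
import random


def magnitude_perturbation(signal, sigma=0.1, rng=random):
    """SMP-style augmentation (assumed form): scale the whole signal by one
    random factor drawn from a normal distribution N(1, sigma)."""
    factor = rng.gauss(1.0, sigma)
    return [x * factor for x in signal]


def _interp(signal, pos):
    """Linear interpolation of `signal` at fractional index `pos`."""
    i = int(pos)
    if i >= len(signal) - 1:
        return signal[-1]
    frac = pos - i
    return signal[i] * (1 - frac) + signal[i + 1] * frac


def time_deformation(signal, knots=4, sigma=0.2, rng=random):
    """ATD-style augmentation (assumed form): smooth random time warping.

    Random positive speeds for `knots` segments are accumulated into a
    monotonic, piecewise-linear map from output index to input index; the
    first and last samples stay fixed and the length is preserved.
    """
    n = len(signal)
    if n < 2:
        return list(signal)
    speeds = [max(0.1, rng.gauss(1.0, sigma)) for _ in range(knots)]
    cum = [0.0]
    for s in speeds:
        cum.append(cum[-1] + s)
    cum = [c * (n - 1) / cum[-1] for c in cum]  # rescale to span [0, n - 1]
    out = []
    for k in range(n):
        u = k * knots / (n - 1)  # position of output sample k on the knot grid
        seg = min(int(u), knots - 1)
        pos = cum[seg] + (cum[seg + 1] - cum[seg]) * (u - seg)
        out.append(_interp(signal, pos))
    return out


rng = random.Random(0)
ramp = [float(i) for i in range(50)]  # hypothetical ramp-shaped signal
print(time_deformation(ramp, rng=rng)[:5])
print(magnitude_perturbation(ramp, rng=rng)[:5])
```

Both functions accept an explicit `random.Random` instance, so an augmented training set can be regenerated reproducibly.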

Dawar et al. [30] use deep learning-based fusion to combine depth and inertial sensing for action identification. A depth camera captures images of the movement or gesture from various angles, and decision-level fusion is shown to have a favorable effect on posture identification. Zou et al. [31] describe a system that integrates inertial and RGBD sensors for robust posture identification. The posture data include eigen-posture color and depth features in eigenspace together with accelerometer data. The supervised classifier uses 3D dense trajectories to achieve higher identification accuracy.

Fan et al. [32] use the IoT to study attitude (posture) detection and data analysis. The goal of their project is to reduce the error by 10% through frequency-domain analysis based on the Fast Fourier Transform (FFT). Human motion is evaluated by identifying posture in order to improve physical activity.
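As an illustration of frequency-domain analysis, the dominant frequency of an accelerometer signal can be read from its magnitude spectrum. For walking, this frequency roughly corresponds to the step cadence. The sketch uses a direct DFT so that it stays self-contained; an FFT returns the same coefficients in O(n log n) time. The 50 Hz sampling rate and the synthetic 2 Hz signal are assumptions, not data from [32].

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitudes of DFT bins 0..n/2 by direct O(n^2) summation
    (an FFT computes the same values in O(n log n))."""
    n = len(samples)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(samples)))
            for k in range(n // 2 + 1)]


def dominant_frequency(samples, fs):
    """Frequency in Hz of the strongest non-DC component."""
    mags = dft_magnitudes(samples)
    k = max(range(1, len(mags)), key=lambda i: mags[i])
    return k * fs / len(samples)


fs = 50.0                              # assumed sampling rate (Hz)
times = [i / fs for i in range(200)]   # one 4 s window
acc = [9.81 + math.sin(2 * math.pi * 2.0 * x) + 0.5 * math.sin(2 * math.pi * 4.0 * x)
       for x in times]
print(dominant_frequency(acc, fs))     # 2.0
```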

Islam et al. [33] present data analytics for detecting bodily posture fatigue using a WS. Fatigue is recognized via ML, and the essential features are selected based on domain knowledge. Class dependencies are used to improve accuracy when detecting fatigue.

The technologies reviewed above can contribute substantially to the healthcare delivery system. They allow patients to be monitored remotely and facilitate treatment. Medical devices can also track patients and record their health status at all times during and after treatment. Healthcare professionals must therefore adopt appropriate intervention measures to keep pace with changes in technology. The proposed study also adds recent information and data on the errorless data fusion technique and thereby contributes new literature on the topic.

3 Proposed Errorless Data Fusion Technique

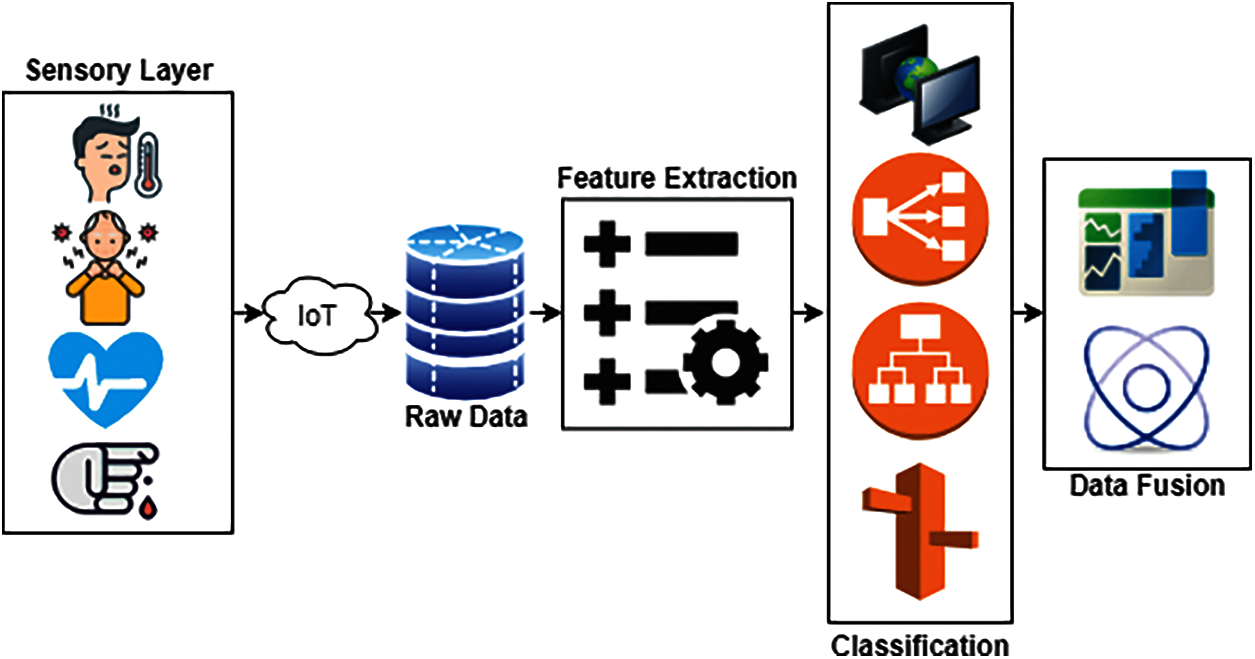

The patients’ bodies are fitted with a WS that detects their posture and transmits their walking pattern to the smart healthcare system. The goal of this project is to improve precision and eliminate errors in medical data by integrating Feature Extraction (FE) and Feature Classification (FC). For sequential analysis of walking patterns, DF is used to determine the least feature depending on the time interval. The suggested technique’s process flow is depicted in Fig. 1.

Figure 1: Flow diagram. Multiple sensors collect data from a patient at fixed intervals and forward data to perform feature extraction. Features are classified and passed on to apply data fusion algorithms
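The fixed-interval collection shown in Fig. 1 can be sketched as segmentation of the sensor stream into equal-length windows. The window length defaults to the 4 s observation interval used in the evaluation. The overlap option, the sampling rate, and the name `segment_windows` are assumptions. Each sample may be a tuple holding one value per sensor.

```python
def segment_windows(samples, fs, window_s=4.0, overlap=0.0):
    """Split samples recorded at `fs` Hz into fixed-length windows.

    window_s: window length in seconds (4 s by default)
    overlap:  fraction of each window shared with the next one, in [0, 1)
    A trailing partial window is dropped.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    size = int(round(window_s * fs))
    if size < 1:
        raise ValueError("window must contain at least one sample")
    step = max(1, int(round(size * (1.0 - overlap))))
    return [samples[start:start + size]
            for start in range(0, len(samples) - size + 1, step)]


# Hypothetical stream: 10 s at 10 Hz from two sensors (left and right leg)
stream = [(0.1 * i, -0.1 * i) for i in range(100)]
print(len(segment_windows(stream, fs=10)))               # 2
print(len(segment_windows(stream, fs=10, overlap=0.5)))  # 4
```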

Features are extracted from the sensor data using Eq. (1), and the extraction incorporates data integrity, chaining, and data patterns.

The FE is performed by collecting the sensor data. Using that data,

The walking patterns are denoted by

The integrity of the medical data,

These three features are extracted to improve data classification, and Eq. (3) is used to perform DA over a fixed time interval. The calculation of

Feature extraction is performed by detecting the patients’ WB, and the categorization is then obtained from Eq. (4).
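The following is a generic sketch of per-window walking features and does not implement Eqs. (1) to (9). It makes these assumptions:

- The input is tri-axial accelerometer samples.
- The acceleration magnitude is summarized by its mean, standard deviation, and range.
- Steps are counted as upward crossings of a threshold set half a standard deviation above the mean.

All names and the threshold rule are hypothetical.

```python
import math
from statistics import mean, pstdev


def count_steps(mag, threshold):
    """Count upward crossings of `threshold`; each crossing is taken as one step."""
    return sum(1 for prev, cur in zip(mag, mag[1:]) if prev < threshold <= cur)


def walking_features(window, fs):
    """Feature vector for one window of (ax, ay, az) accelerometer samples."""
    mag = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    mu, sd = mean(mag), pstdev(mag)
    steps = count_steps(mag, threshold=mu + 0.5 * sd)  # assumed threshold rule
    return {
        "mean": mu,
        "std": sd,
        "range": max(mag) - min(mag),
        "steps": steps,
        "cadence_hz": steps / (len(window) / fs),
    }


# Hypothetical 4 s window at 50 Hz: vertical acceleration oscillating at 2 Hz
fs = 50
window = [(0.0, 0.0, 9.81 + 2.0 * math.sin(2 * math.pi * 2.0 * i / fs)) for i in range(200)]
feats = walking_features(window, fs)
print(feats["steps"], feats["cadence_hz"])  # 8 2.0
```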

The LF error is identified by assessing Eq. (2), and in this

The classification

Calculating Eq. (7), where

The characteristic of WB is used to determine integrity, and in this way the steps computed from leg motions are tracked. The classification of WB is assessed using Eq. (8), and the sequence of incoming data is studied

The identification of DF is achieved by computing error-free data, and the LE is produced by using Eq. (9). The recognition is indicated as

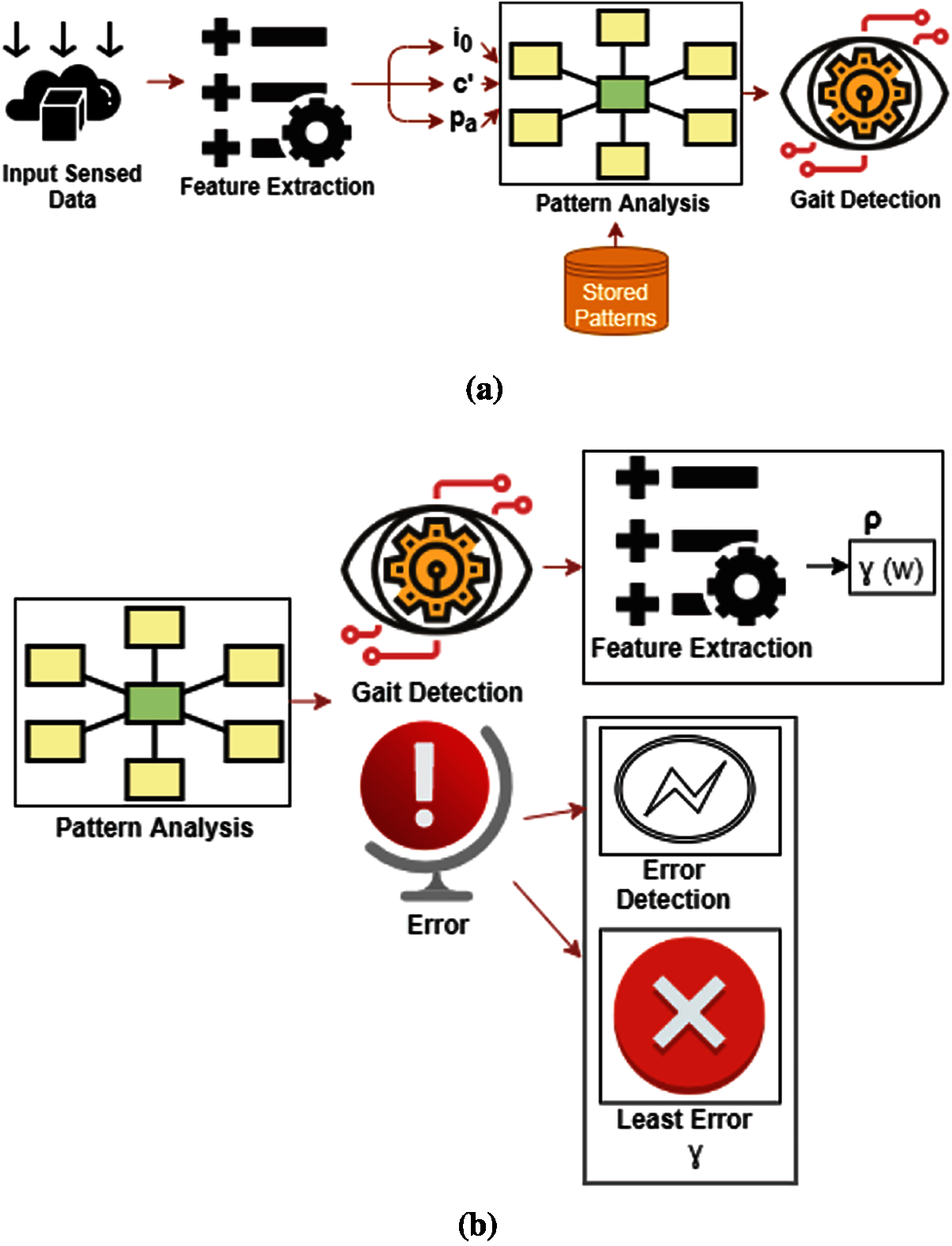

Figure 2: (a) The design classification procedure, with detection through pattern analysis and feature extraction. (b) Posture classification procedure, with detection through pattern analysis and feature extraction

Features are examined in

Walking is identified via DF and is utilized to perform the classification process. It is denoted by

3.1 Random Forest (RF) for Data Fusion

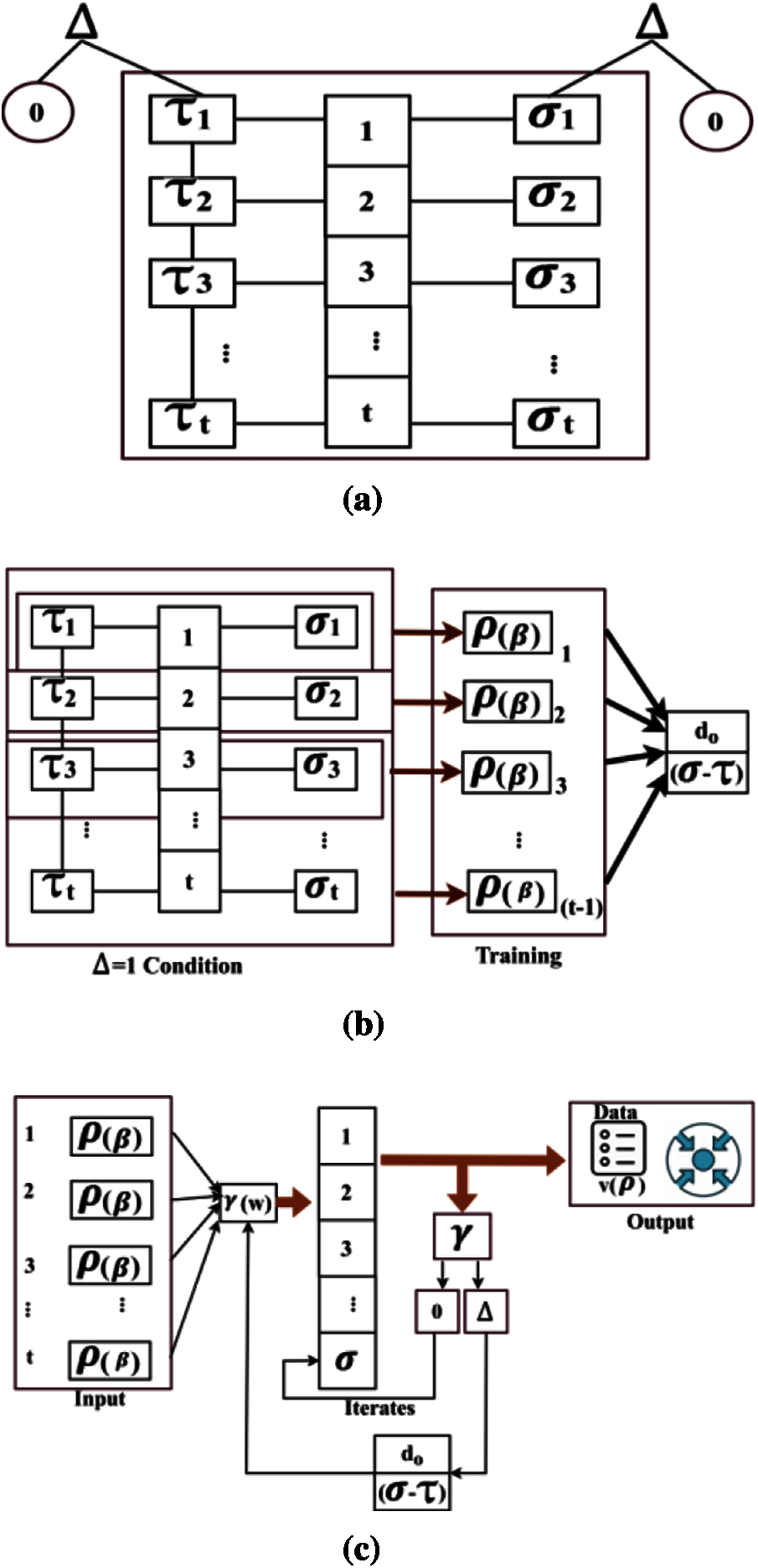

RF, an ensemble of Decision Trees (DTs), is used for DF as well as for classification, regression, and other tasks. Regression predicts continuous values, whereas classification assigns discrete classes. In this study, classification-based RF is used to forecast the repeated analysis. The root and leaf nodes of the RF are described by classification and DF. The root node data features are divided into

The computation of

Figure 3: (a) Prediction process used for data fusion, random forest tree Δ architecture. (b) Prediction process used for data fusion, training procedure. (c) Prediction process used for data fusion, split the root node data sorts into

DF shows

The prediction-based technique is used for the analysis, and it is indicated as

The detection for improved prediction is calculated by assessing Eq. (13), in which

In Eq. (14), when these two criteria are utilized, the RF is used to obtain higher precision, and the outcome is either larger than or less than 1. The computation

If there is a decrease in DF and prediction during classification,

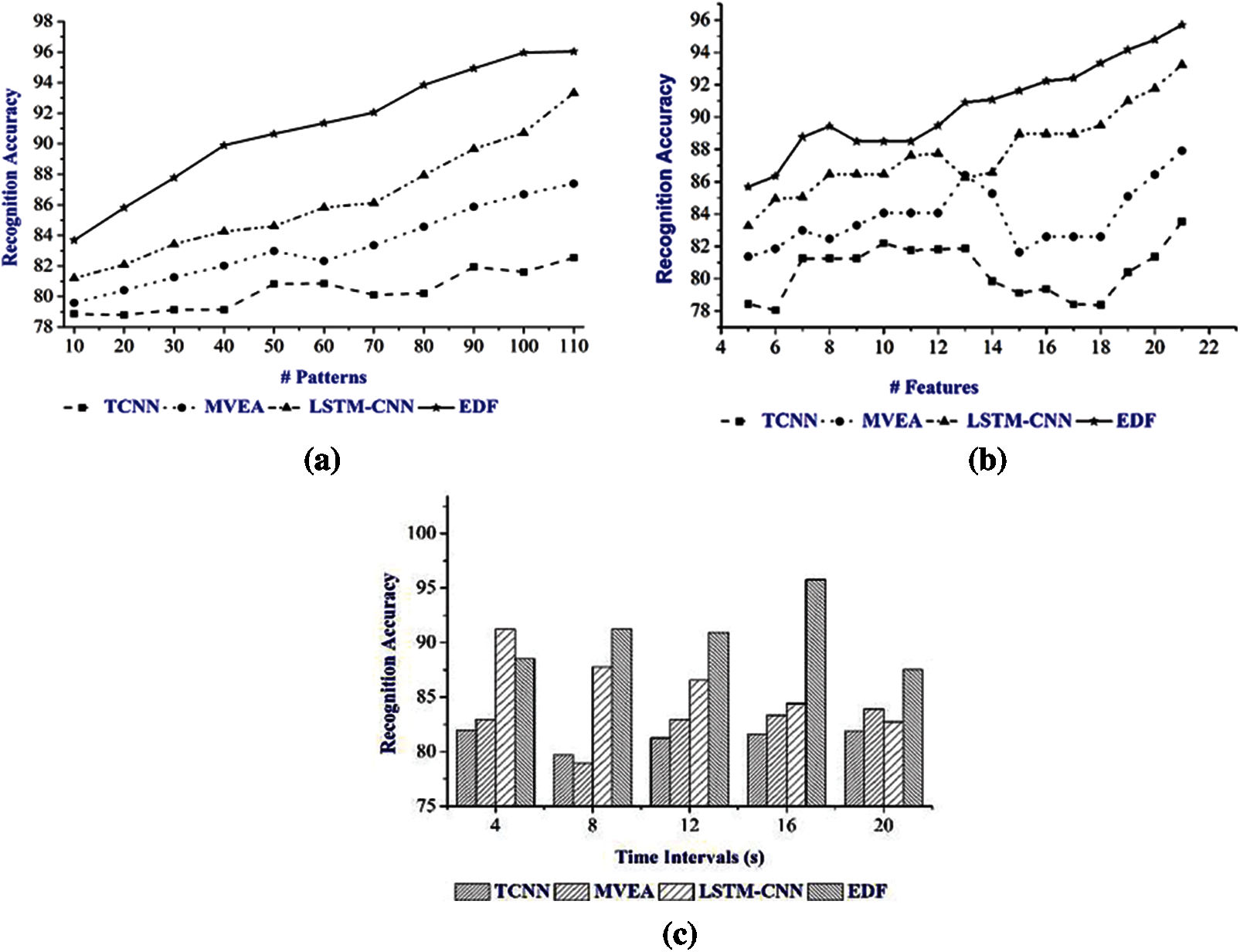

This section evaluates the performance of the proposed EDF approach through a comparative study using three metrics: identification accuracy, fusion error, and recognition time. The proposed EDF is compared with the existing techniques TCNN [24], MVEA [26], and LSTM-CNN [21]. The material in [34–36] is used to analyze the proposed approach. The metrics are estimated using Dataset A from the source, which is 16 MB in size and contains the posture patterns of 20 participants. In this study, five of the 20 participants were chosen, and 12 occurrences were examined for each. The subjects are denoted Sub1, Sub2, …, Sub5, of whom Sub1 and Sub4 are young. During training, 110 posture patterns were assessed from the front and back views relative to the observation point, with a maximum of 20 features extracted and a 4 s observation interval.
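The recognition-accuracy metric used in this comparison can be illustrated with a compact random forest written from scratch. The forest combines four standard ingredients:

- bootstrap sampling,
- a random feature subset at each split,
- Gini-based threshold splits,
- majority voting.

This is a sketch of a standard RF, not the EDF training procedure of Section 3.1, whose conditional training is not reproduced. The two synthetic features, the labels, the 40/20 train/test split, and the hyperparameters are hypothetical and are not drawn from Dataset A.

```python
import math
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y, features):
    """Best (feature, threshold) among `features` by weighted Gini impurity,
    or None if no split improves on the parent node."""
    best, best_score = None, gini(y)
    for f in features:
        values = sorted({row[f] for row in X})
        for a, b in zip(values, values[1:]):
            thr = (a + b) / 2  # midpoint between consecutive distinct values
            left = [lab for row, lab in zip(X, y) if row[f] <= thr]
            right = [lab for row, lab in zip(X, y) if row[f] > thr]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, thr), score
    return best


def build_tree(X, y, depth, max_depth, n_feats, rng):
    """Grow one tree: internal nodes are (feature, threshold, left, right)
    tuples; leaves are class labels (assumed not to be tuples)."""
    split = None
    if depth < max_depth and len(set(y)) > 1:
        feats = rng.sample(range(len(X[0])), n_feats)  # random feature subset
        split = best_split(X, y, feats)
    if split is None:
        return Counter(y).most_common(1)[0][0]  # leaf: majority label
    f, thr = split
    mask = [row[f] <= thr for row in X]
    Xl = [r for r, m in zip(X, mask) if m]
    yl = [lab for lab, m in zip(y, mask) if m]
    Xr = [r for r, m in zip(X, mask) if not m]
    yr = [lab for lab, m in zip(y, mask) if not m]
    return (f, thr,
            build_tree(Xl, yl, depth + 1, max_depth, n_feats, rng),
            build_tree(Xr, yr, depth + 1, max_depth, n_feats, rng))


def predict_tree(node, row):
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if row[f] <= thr else right
    return node


class RandomForest:
    """Bootstrap-aggregated trees with random feature subsets and majority voting."""

    def __init__(self, n_trees=25, max_depth=5, seed=0):
        self.n_trees, self.max_depth = n_trees, max_depth
        self.rng = random.Random(seed)
        self.trees = []

    def fit(self, X, y):
        n_feats = max(1, int(math.sqrt(len(X[0]))))
        self.trees = []
        for _ in range(self.n_trees):
            idx = [self.rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap
            self.trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                         0, self.max_depth, n_feats, self.rng))
        return self

    def predict(self, X):
        return [Counter(predict_tree(t, row) for t in self.trees).most_common(1)[0][0]
                for row in X]


def recognition_accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical, well-separated synthetic features: [magnitude std, cadence in Hz]
data_rng = random.Random(42)
data = ([([2.0 + data_rng.gauss(0, 0.2), 1.8 + data_rng.gauss(0, 0.2)], "walking") for _ in range(30)]
        + [([0.2 + data_rng.gauss(0, 0.2), 0.1 + data_rng.gauss(0, 0.2)], "standing") for _ in range(30)])
data_rng.shuffle(data)
X, y = [d[0] for d in data], [d[1] for d in data]
model = RandomForest().fit(X[:40], y[:40])
print(recognition_accuracy(y[40:], model.predict(X[40:])))
```

In practice, a tested library implementation such as scikit-learn's `RandomForestClassifier` would be preferable. The from-scratch version only makes each step of the forest visible.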

The recognition accuracy is examined in Fig. 4 by changing the patterns, features, and time intervals. While calculating

The above data was obtained by monitoring the patient’s movement at different time intervals. The patient was given a wearable device connected to the client’s computer system. Data collection was made possible through IoT technology, which connects patients and hospitals and ensures sufficient information flow between patients and doctors. The wearable device mounted on the patient recorded important information, such as posture, at various intervals. The results show the trend in the distribution of data, features, and time intervals, and the data proves more authentic than that of the benchmark paper. The resulting dataset contains components that healthcare organizations can use to make important decisions about the patient’s treatment. It also indicates how adopting new technology to support patients’ medication can reduce the fusion error.

By assessing

Figure 4: (a) Recognition accuracy for varying patterns. (b) Recognition accuracy for varying features. (c) Recognition accuracy for varying time intervals

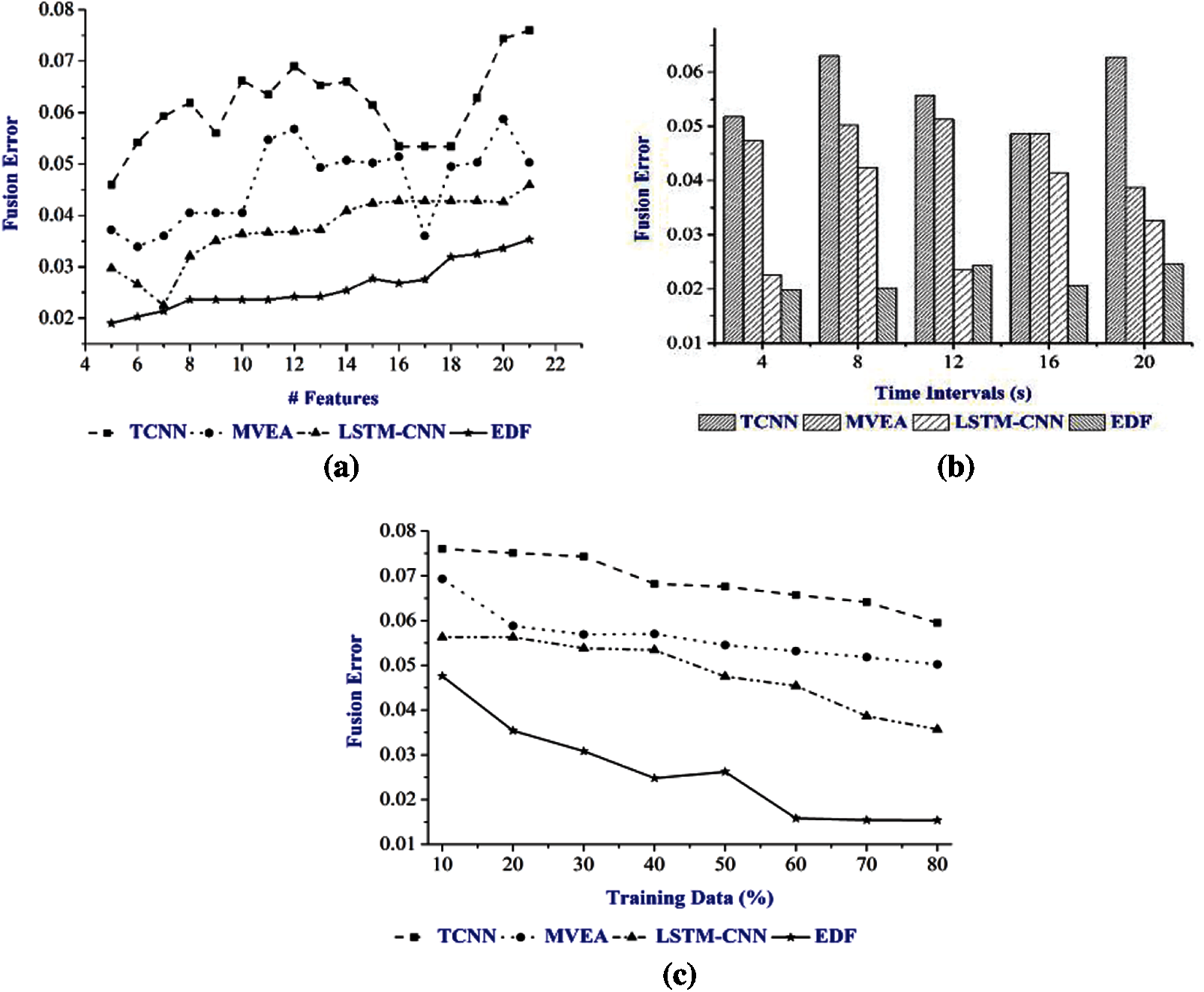

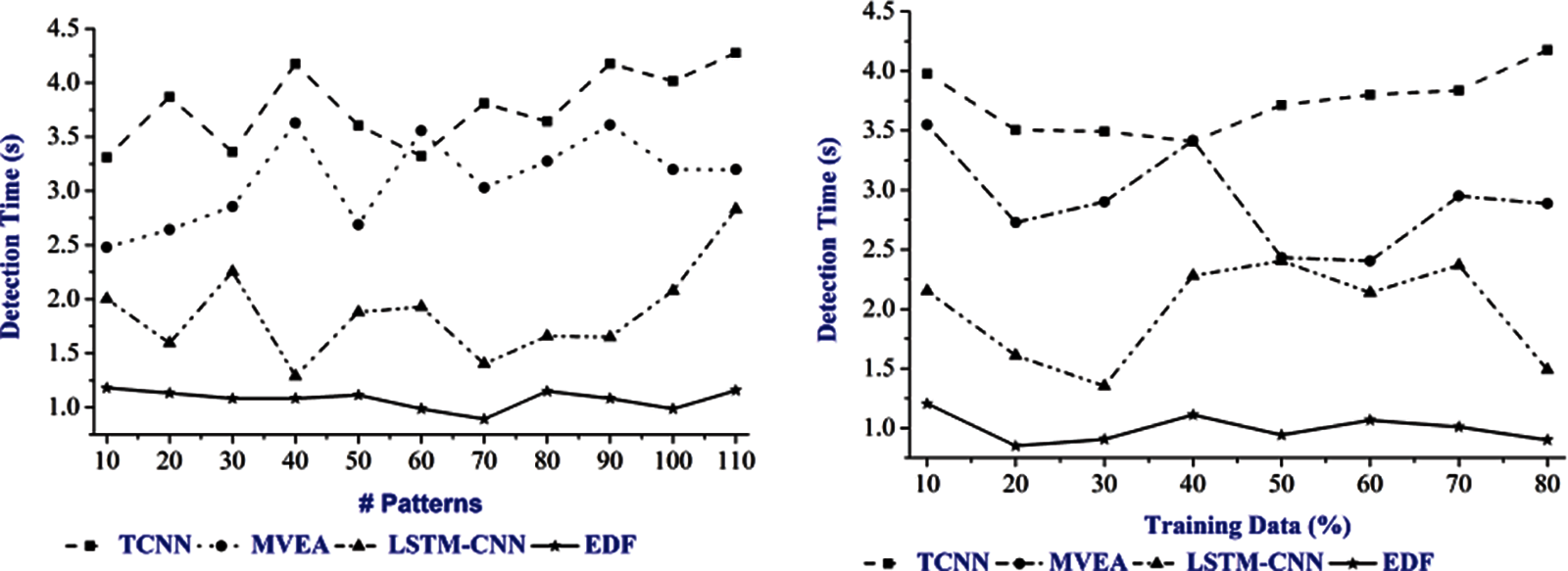

The proposed EDF demonstrates posture recognition in a short time span, with the LFE indicating DF from classification. It searches the walking patterns and training data at the particular time period,

Figure 5: (a) Fusion error for varying features. (b) Fusion error for varying time intervals. (c) Fusion error for varying training data

Figure 6: Detection time for inconsistent patterns and training data

The results show the relationship between the accuracy ratio and the error. Accuracy and error are inversely related, although the relationship varies with the pattern each subject displays in both directions. This estimation takes into account the subject’s height, speed, and pause time, as well as variations in orientation and motion angle, all of which affect precision and error. Tab. 2 shows the accuracy of the five subjects under various features.

Sub1 and Sub5 have highly precise movement patterns, and this precision holds across a variety of features. The reason is that these subjects show similar patterns and yield maximal fused sensor data. Furthermore, because Sub1 and Sub4 are young, they take less time to display various patterns, so the number of extracted features is high for these two subjects. The correlation between these patterns is typically strong, and the accuracy is therefore higher. The error and patterns for the subjects are shown in Tab. 3.

The results in Tab. 3 show an inverse relationship between feature extraction and error. The error decreases as the number of extracted features rises, because a large number of fused patterns is attained. Changes in the feature count improve the correlation between multiple stored inputs, resulting in high posture identification across scenarios. As a result, posture recognition accuracy improves and the error is reduced. The computation time is long for highly fused patterns, and for Sub2 it is particularly long because multiple iterations are required.

The results indicate that the errorless data fusion approach can help address the unique needs of patients, because it allows medical practitioners to monitor the patients’ posture and respond to their needs. The data collected from advanced medical devices is likely to improve patient care and enhance healthcare delivery.

5 Limitations of the Proposed EDF Method

The EDF method has certain limitations, notably its inability to handle uncertainty and inconsistency. A multisensor DF algorithm that combines data from many sources exploits data redundancy to help minimize uncertainty. However, these sources can also produce inconsistent data, which leads to poor fusion in which the performance of multisensor DF falls below that of the individual sensors.
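The redundancy argument, and the way an inconsistent source undermines it, can be shown with inverse-variance weighting. This standard technique is assumed here for illustration and is not part of EDF. For independent, unbiased readings, the fused variance 1/Σ(1/σᵢ²) is never larger than the smallest input variance. A pairwise agreement check, whose threshold of 3 combined standard deviations is an assumption, flags inconsistent inputs before they are fused. The function names are hypothetical.

```python
import math


def fuse_inverse_variance(readings):
    """Fuse redundant (value, variance) readings of the same quantity.

    Weights are 1/variance; the fused variance 1/sum(weights) is never larger
    than the smallest input variance, which is how redundancy reduces
    uncertainty when the readings are independent and unbiased.
    """
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total


def consistent(readings, z_max=3.0):
    """Return False if any two readings disagree by more than `z_max`
    combined standard deviations (an assumed consistency rule)."""
    for i, (vi, si) in enumerate(readings):
        for vj, sj in readings[i + 1:]:
            if abs(vi - vj) > z_max * math.sqrt(si + sj):
                return False
    return True


readings = [(30.0, 4.0), (32.0, 4.0)]        # hypothetical sensor estimates
print(fuse_inverse_variance(readings))        # (31.0, 2.0)
print(consistent(readings))                   # True
print(consistent(readings + [(60.0, 4.0)]))   # False
```

If the inconsistent third reading were fused anyway, equal weights would pull the estimate to (30 + 32 + 60)/3 ≈ 40.7. That result is worse than either consistent sensor, which is the failure mode described above.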

Another limitation is that EDF cannot address the diverse needs of all patients. The research focuses on a specific group of patients, so it cannot address the needs of every patient who requires specific treatment. It also focuses only on posture recognition accuracy and cannot be applied directly to other areas. Further work is therefore needed to extend EDF to other areas and patient concerns.

6 Conclusion

This paper discussed the EDF approach for increasing posture identification accuracy through multi-feature analysis. First, the patients’ walking patterns are observed at various time intervals. The characteristics are then evaluated against the stored data using an RF classifier. The procedure spans several time periods so that the iterations can efficiently detect classification errors. Finally, conditional training is used to fuse the disaggregated errorless data and find the posture pattern that matches the stored pattern. Patterns and features are evaluated repeatedly in this classification process, and conditional training is computed from the prior error to improve identification accuracy. The results show that the proposed approach improves accuracy while reducing fusion and detection errors as well as computation time.

Acknowledgement: Thanks to our families and colleagues who gave us moral support.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. H. Zhang and Y. Li, “A low-power dynamic-range relaxed analog front end for wearable heart rate and blood oximetry sensor,” IEEE Sensors Journal, vol. 19, no. 19, pp. 8387–8392, 2018. [Google Scholar]

2. F. Sun, C. Mao, X. Fan and Y. Li, “Accelerometer-based speed-adaptive gait authentication method for wearable IoT devices,” IEEE Internet of Things Journal, vol. 6, no. 1, pp. 820–830, 2018. [Google Scholar]

3. M. Jafarzadeh, S. Brooks, S. Yu, B. Prabhakaran and Y. Tadesse, “A wearable sensor vest for social humanoid robots with GPGPU, IoT, and modular software architecture,” Robotics and Autonomous Systems, vol. 139, pp. 103536, 2021. [Google Scholar]

4. C. P. Walmsley, S. A. Williams, T. Grisbrook, C. Elliott, C. Imms et al., “Measurement of upper limb range of motion using wearable sensors: A systematic review,” Sports Medicine Open, vol. 4, no. 1, pp. 1–22, 2018. [Google Scholar]

5. M. Guo and Z. Wang, “Segmentation and recognition of human motion sequences using wearable inertial sensors,” Multimedia Tools and Applications, vol. 77, no. 16, pp. 21201–21220, 2018. [Google Scholar]

6. C. Cui, G. B. Bian, Z. G. Hou, J. Zhao, G. Su et al., “Simultaneous recognition and assessment of post-stroke hemiparetic gait by fusing kinematic, kinetic, and electrophysiological data,” IEEE Transactions on Neural Systems & Rehabilitation Engineering, vol. 26, no. 4, pp. 856–864, 2018. [Google Scholar]

7. E. Dorschky, M. Nitschke, A. K. Seifer, A. J. V. D. Bogert and B. M. Eskofier, “Estimation of posture kinematics and kinetics from inertial sensor data using optimal control of musculoskeletal models,” Journal of Biomechanics, vol. 95, no. 8, pp. 109278–109288, 2019. [Google Scholar]

8. K. Hyodo, A. Kanamori, H. Kadone, T. Takahashi, M. Kajiwara et al., “Posture analysis comparing kinematic, kinetic, and muscle activation data of modern and conventional total knee arthroplasty,” Arthroplasty Today, vol. 6, no. 3, pp. 338–342, 2020. [Google Scholar]

9. E. Gianaria and M. Grangetto, “Robust posture identification using Kinect dynamic skeleton data,” Multimedia Tools and Applications, vol. 78, no. 10, pp. 13925–13948, 2018.

10. S. Qiu, L. Liu, Z. Wang, S. Li, H. Zhao et al., “Body sensor network-based posture quality assessment for clinical decision-support via multi-sensor fusion,” IEEE Access, vol. 7, pp. 59884–59894, 2019.

11. Y. Zhang, Y. Huang, X. Sun, Y. Zhao, X. Guo et al., “Static and dynamic human arm/hand gesture capturing and recognition via multi-information fusion of flexible strain sensors,” IEEE Sensors Journal, vol. 20, no. 12, pp. 6450–6459, 2020.

12. M. Muzammal, R. Talat, A. H. Sodhro and S. Pirbhulal, “A multi-sensor data fusion enabled ensemble approach for medical data from body sensor networks,” Information Fusion, vol. 53, pp. 155–164, 2020.

13. F. M. Castro, M. J. Marín-Jiménez, N. Guil and N. P. D. L. Blanca, “Multimodal feature fusion for CNN-based posture recognition: An empirical comparison,” Neural Computing and Applications, vol. 60, no. 2, pp. 1–9, 2020.

14. T. M. Ghazal, M. K. Hasan, R. Hassan, S. Islam, S. N. H. S. Abdullah et al., “Security vulnerabilities, attacks, threats and the proposed countermeasures for the internet of things applications,” Solid State Technology, vol. 63, no. 1, pp. 2513–2521, 2020.

15. A. Ghazvini, S. N. H. S. Abdullah, M. K. Hasan and D. Z. A. B. Kasim, “Crime spatiotemporal prediction with fused objective function in time delay neural network,” IEEE Access, vol. 8, pp. 115167–115183, 2020.

16. M. K. Hasan, M. M. Ahmed, S. S. Musa, S. Islam and S. N. Abdullah, “An improved dynamic thermal current rating model for PMU-based wide area measurement framework for reliability analysis utilizing sensor cloud system,” IEEE Access, vol. 18, no. 9, pp. 14446–14458, 2021.

17. A. H. M. Aman, E. Yadegaridehkordi, Z. S. Attarbashi, R. Hassan and Y. J. Park, “A survey on trend and classification of internet of things reviews,” IEEE Access, vol. 8, pp. 111763–111782, 2020.

18. R. Hassan, F. Qamar, M. K. Hasan, A. H. M. Aman and A. S. Ahmed, “Internet of things and its applications: A comprehensive survey,” Symmetry, vol. 12, no. 10, pp. 1674–1684, 2020.

19. A. Meri, M. K. Hasan and N. Safie, “Success factors affecting the healthcare professionals to utilize cloud computing services,” Asia-Pacific Journal of Information Technology and Multimedia, vol. 6, no. 2, pp. 31–42, 2017.

20. Y. Wang, S. Cang and H. Yu, “A data fusion-based hybrid sensory system for older people’s daily activity and daily routine recognition,” IEEE Sensors Journal, vol. 18, no. 16, pp. 6874–6888, 2018.

21. J. Gao, P. Gu, Q. Ren, J. Zhang and X. Song, “Abnormal posture recognition algorithm based on LSTM-CNN fusion network,” IEEE Access, vol. 7, pp. 163180–163190, 2019.

22. S. B. Zahra, M. A. Khan, S. Abbas, K. M. Khan, A. Ghamdi et al., “Marker-based and marker-less motion capturing video data: Person and activity identification comparison based on machine learning approaches,” Computers, Materials & Continua, vol. 66, no. 2, pp. 1269–1282, 2021.

23. M. Al-Amin, W. Tao, D. Doell, R. Lingard, Z. Yin et al., “Action recognition in manufacturing assembly using multimodal sensor fusion,” Procedia Manufacturing, vol. 39, no. 3, pp. 158–167, 2019.

24. X. Wang and J. Zhang, “Posture feature extraction and posture classification using two-branch CNN,” Multimedia Tools and Applications, vol. 79, no. 3–4, pp. 2917–2930, 2019.

25. E. Fendri, I. Chtourou and M. Hammami, “Posture-based person re-identification under covariate factors,” Pattern Analysis and Applications, vol. 22, no. 4, pp. 1629–1642, 2019.

26. H. F. Nweke, Y. W. Teh, G. Mujtaba, U. R. Alo and M. A. A. Garadi, “Multi-sensor fusion based on multiple classifier systems for human activity identification,” Human Centric Computing and Information Sciences, vol. 9, no. 1, pp. 1–14, 2019.

27. S. Abbas, M. A. Khan, A. Athar, S. A. Shan, A. Saeed et al., “Enabling smart city with intelligent congestion control using hops with a hybrid computational approach,” The Computer Journal, vol. 12, no. 8, pp. 286, 2020.

28. L. Tran and D. Choi, “Data augmentation for inertial sensor-based posture deep neural network,” IEEE Access, vol. 8, pp. 12364–12378, 2020.

29. M. Hanif, S. Abbas, M. A. Khan, N. Iqbal, Z. U. Rehman et al., “A novel and efficient multiple RGB images cipher based on chaotic system and circular shift operations,” IEEE Access, vol. 8, no. 8, pp. 146408–146427, 2020.

30. N. Dawar, S. Ostadabbas and N. Kehtarnavaz, “Data augmentation in deep learning-based fusion of depth and inertial sensing for action recognition,” IEEE Sensors Letters, vol. 3, no. 1, pp. 1–4, 2019.

31. Q. Zou, L. Ni, Q. Wang, Q. Li and S. Wang, “Robust posture recognition by integrating inertial and RGBD sensors,” IEEE Transactions on Cybernetics, vol. 48, no. 4, pp. 1136–1150, 2018.

32. Y. Fan, H. Jin, Y. Ge and N. Wang, “Wearable motion attitude detection and data analysis based on internet of things,” IEEE Access, vol. 8, pp. 1327–1338, 2020.

33. S. Islam, A. H. Abdalla and M. K. Hasan, “Novel multihoming-based flow mobility scheme for proxy NEMO environment: A numerical approach to analyse handoff performance,” Science Asia, vol. 1, no. 43, pp. 27–34, 2017.

34. S. Zheng, “Gait dataset,” 2009. Retrieved 31 December 2020. [Online]. Available: http://www.cbsr.ia.ac.cn/users/szheng/?page_id=71.

35. M. Akhtaruzzaman, M. K. Hasan, S. R. Kabir, S. N. Abdullah and M. J. Sadeq, “HSIC bottleneck based distributed deep learning model for load forecasting in smart grid with a comprehensive survey,” IEEE Access, vol. 7, no. 2, pp. 34567–34589, 2020.

36. M. K. Hasan, S. Islam, R. Sulaiman, S. Khan and A. H. Hashim, “Lightweight encryption technique to enhance medical image security on internet of medical things applications,” IEEE Access, vol. 24, no. 9, pp. 47731–47742, 2021.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.