DOI:10.32604/cmc.2022.022213

Computers, Materials & Continua

Article

Dynamic Automated Infrastructure for Efficient Cloud Data Centre

1Department of Computer Science, King Khalid University-Sarat Abidha Campus, Abha, Saudi Arabia

2Department of Computer Engineering, King Khalid University, Abha, Saudi Arabia

*Corresponding Author: R. Dhaya. Email: dhayavel2005@gmail.com

Received: 31 July 2021; Accepted: 01 September 2021

Abstract: We propose a dynamic automated infrastructure model for the cloud data centre, aimed at efficient service provisioning for a very large number of users. Data centre and cloud computing technologies currently attract major research and development efforts from companies, governments, and academic and other research institutions. The difficult task is to enable the infrastructure to make information available to application-driven services and to support smart business decisions. The remaining challenges are to provide dynamic infrastructure for applications and information anywhere, and to develop technologies that handle private cloud computing infrastructure and operations in a completely automated and secure way. The focus of this article is therefore on service and infrastructure life cycle management. We also show how cloud users interact with the cloud, how they request services from it, how they select cloud strategies to deliver the desired service, and how they analyze their cloud consumption.

Keywords: Dynamic; automated infrastructure model; cloud data centre; security; privacy; energy efficient

Dynamic infrastructure in a cloud data centre denotes a pool of combined resources that provide computation, networking, and storage. Such a facility can automatically provision and adapt itself according to the workload demand [1]. IT managers can also choose to supervise the resources manually from time to time. Because data centre automation takes care of scheduling, monitoring, maintenance, application delivery, and similar tasks, these are carried out without human supervision [2]. Cloud infrastructure covers the components required for cloud computing and offers the advantages of self-service access and automated, dynamic infrastructure scaling. In general, the complexity of sharing data from the data centre has been the key challenge. As business needs grow rapidly, an enormous and ever larger amount of data has to be processed, shared, and stored, and this constant rise has produced data centres that are not cost-effective and are difficult to manage [3]. The combination of virtualization and the cloud could help to minimize data centre inefficiencies. Data centres are a dynamic combination of cloud and non-cloud resources that reside in multiple physical locations and have a smart information system for processing applications effectively [4]. A smart, secure, and autonomous cloud data centre must have the following capabilities:

• Resources in a software-defined environment are dynamically and comprehensively controlled, allowing them to sense and adapt to design requirements in real time [5].

• Private and public clouds coexist in a distributed mode with conventional networks.

• A continuously available ecosystem can endure equipment failure while continuing to operate.

• Data technologies are used to understand and address business needs in cognitive cloud computing [6].

• A global, regulated platform unifies the control of IT and network infrastructure facilities.

Whatever form cloud-based data centres take, there are fundamental constraints on what they can achieve with a restricted range of capabilities. The old virtual server philosophy must adapt as the demands for higher flexibility, heterogeneity, and real-time responsiveness increase as a result of portability, social business, and data analytics [7]. The key issues in cloud data centres are: ineffective communication between data centres, which prevents them from efficiently negotiating and balancing resources; no provision of multi-tiered contractual requirements to consumers to assure the quality of products and services; a fragmented secured network that cannot deliver a powerful user experience; and a lack of automation and flexibility in transforming data centre infrastructure into pools of resources [8].

This section reviews related work on the challenges of, and solutions for, private multi-data-centre cloud management models.

Svorobej et al. (2015) offered an automated technique for cloud computing topology development, data collection, and model development, implemented as a range of services built and interconnected to support these operations. Current telecommunication and cloud service providers face a distinct set of issues when it comes to communications infrastructure. Their services require networks that can efficiently accommodate changes in load or congestion characteristics, as well as automated cloud installation and commissioning. This allows for more cost-effective service offerings as well as new revenue. DeCusatis et al. (2013) described the results of a software-defined network test bed that merges data centre orchestration, long-distance optical networking, and other functions under a single network controller, and demonstrated computing resource reuse and dynamic wavelength assignment to adapt to changing requirements, measured service, and other features of this technique. Awada et al. (2014) studied energy consumption patterns and found that appropriate optimizations guided by their energy consumption estimates save a quarter of the energy in cloud data centres. Their findings can also be used to evaluate energy consumption and to enable transient and steady-state system-level management in cloud applications.

For many businesses, data centre power usage is one of the largest expenses [9]. Virtual machine (VM) consolidation, which minimizes the cost of physical devices, can be used to decrease power consumption in data centres with heterogeneous servers, subject to Quality of Service (QoS) constraints. Smaeel et al. (2018) conducted a comprehensive review of the most recent methodologies and algorithms used in proactive dynamic VM consolidation, with an emphasis on energy usage. They proposed a general model that can be applied to the various stages of the consolidation process. Chao-Tung Yang et al. (2019) suggested a dynamic resource allocation approach and a power saving approach to address these challenges. To validate the solution with live migration of VMs, they first built an infrastructure platform based on OpenStack. They also developed an energy-saving method and a dynamic resource allocation method. They then monitored the power distribution unit to keep track of consumption and energy use. Finally, they found that the proposed methods not only make effective use of VM resources but also save resources on the physical machines in the test. V. Sarathy et al. (2010) proposed and described a reference architecture for a network-centric data centre infrastructure management system that borrows and extends key concepts from the telecom industry to the IT world, enabling flexibility, adaptability, dependability, and privacy. They also discussed a proof-of-concept system that was developed to show how dynamic resource management can be used to provide real-time service assurance for network-centric computing architectures. P. Abar et al. (2014) developed an open-source cloud computing architecture centered on OpenStack.
The resource allocation capability of the test cloud system is benchmarked using an intelligent assessment tool called “Cloud Rapid Experiments and Evaluation Tool.” The cloud benchmarking measurements are fed into a smart middleware layer of highly distributed multi-agents in real environments. They preset certain custom policies for VM provisioning and application scheduling within a comprehensive cloud simulation and modeling framework called “CloudSim” to handle the continuous input of the benchmarking data appropriately. Both tools are used to implement their ideas, and the setup was prepared to scale to thousands of virtual servers. Yun Zhang et al. (2018) suggested a fault detection model based on SVM-Grid, a thorough parameter-search approach built on a support vector machine; the model uses statistical correlation and component analysis. To refine the model and improve its performance, approaches for detecting cloud failures and maintaining fault sample libraries are provided. Compared with similar previous techniques, the suggested approach delivers more accurate and efficient detection for data centres. Using CloudSim, Singh et al. (2020) simulated the operation of a data centre with various numbers of hosts over various operating hours to evaluate the energy usage of non-power-aware hosts. The resulting scenarios were then analyzed to show the varying degrees of energy use. Beloglazov et al. (2020) provided an evaluation of heuristics for dynamic reallocation of virtual machines via VM migration according to current CPU performance requirements. The results show that the proposed methodology saves a significant amount of energy while maintaining a high level of QoS. This justified the further design and evaluation of the suggested resource management system.

Beloglazov et al. (2012) defined a set of architectural principles for energy-efficient cloud services. Open research problems, as well as resource provisioning and allocation methods for energy-efficient operation of cloud data centres, are based on this framework. The suggested energy-aware allocation algorithms allocate data centre capacity to client requests in a way that improves the data centre's energy efficiency while maintaining the agreed-upon Quality of Service (QoS). For energy efficiency, Deng et al. (2016) presented a new VM consolidation framework. The study offered an improved underload decision methodology for the underloaded host decision stage, which is based on the overload threshold of hosts and the average utilization of all active hosts. Its second main contribution is the Minimum Average Utilization Difference (MAUD) policy for the migration target host selection stage, which is based on the average utilization of the data centre. According to the test findings, the suggested methodology could decrease costs and SLA violations. Under the assumption that the input to the problem is not known exactly but varies within defined limits, Zola et al. (2015) formulated the problem as a robust mixed integer linear program. According to their evaluation, the model lets the network controller trade off power usage against protection from more severe and rare variations of the uncertain input. M. Dayarathna et al. (2016) reviewed the state-of-the-art methodologies for modeling and predicting energy usage in data centres and their components. They analyzed the existing literature on data centre power modeling in depth, spanning many concepts. This systematic approach allowed them to identify a variety of challenges that arise in power modeling at various levels of data centre systems.
They conclude the study by highlighting significant challenges for further research on developing fast and useful data centre power models. Khoshkholghi et al. (2017) suggested several algorithms for dynamically consolidating VMs in a cloud data centre. The goal is to maximize the use of computing resources while lowering energy usage and adhering to SLAs for CPU, RAM, and bandwidth. The efficacy of the presented methods is verified in a range of simulations. The evaluation clearly shows that the recommended algorithms reduce energy usage while maintaining a high level of SLA compliance. Compared with the benchmark techniques, the proposed methodology can cut energy usage by up to 28 percent and improve SLA compliance by up to 87 percent. Liu et al. (2018) presented the EQVC technique, which comprises four algorithms that correspond to distinct stages of VM consolidation; it selects redundant VMs from hosts before they become overloaded and migrates them to other hosts to save energy and assure QoS. They also proposed a server with adaptable resources to prevent hosts from becoming overloaded again. They experimented with different workload traces from a centralized database to demonstrate the efficacy of the proposed strategy and techniques. The literature review shows that existing papers address energy-efficient cloud services and infrastructure for a common platform but lack dynamism in dealing with dedicated or private cloud data centres. Hence, there is a need to develop an energy-efficient dynamic automated infrastructure model for the cloud data centre.

3 Proposed Dynamic Automated Infrastructure Model for Private Cloud Data Centre

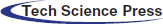

This section explains the novel energy-efficient dynamic automated infrastructure model for private cloud data centres, which addresses the major issue of promoting energy-efficient allocation of resources in cloud computing data centres. The energy-efficient dynamic automated infrastructure paradigm for private cloud data centres, designed to meet competing demands for server virtualization while saving energy, is shown in Fig. 1. The five key players considered for the infrastructure block in the cloud data centre are as follows:

Figure 1: Energy-efficient dynamic automated infrastructure paradigm for private cloud data centre

Physical machinery: The underlying physical computing platforms provide the hardware base for producing virtualized resources to cover customer needs.

Virtual cloud machinery: Numerous virtual machines can be dynamically started and paused on a given physical machine to match incoming demands, giving the most flexibility in configuring various resource partitions within the same physical server to suit varied service request requirements. On a single physical server, many virtual machines can run applications based on different operating systems at the same time [10]. By dynamically relocating VMs among physical servers, workloads can be consolidated, and underutilized components can be placed in a low-power state, switched off, or configured to operate at reduced performance levels to conserve energy.

Dynamic automated private cloud infrastructure: It is a computing model that provides dedicated information and services remotely for a single corporate unit. It also adjusts itself autonomously to consumer preferences or tasks. It comprises the following components:

• Energy Observer: It monitors and decides which physical machines should be turned on or off.

• Service Scheduler: It assigns applications to virtual machines and establishes resource allocations for those virtual machines. It also decides when to add or remove virtual machines to meet demand.

• VM Supervisor: It keeps track of deployed virtual machines and their resource allocations. It is also responsible for migrating virtual machines between physical machines.

• Account Manager: It keeps track of actual resource consumption to calculate usage costs. In addition, historical consumption data can be used to improve allocation decisions [11].

Customer Edge processing: This serves as a link between the private cloud and the end-users. To promote energy-efficient resource allocation, the following parts need to interact:

• Demand needed: Based on the customer's quality of service constraints and energy-saving methods, it negotiates with customers or dealers to conclude the Service Level Agreement (SLA), with stated costs and penalties, between the private cloud provider and the customers.

• Service Details: It interprets and evaluates the customer's needs before deciding whether to accept or reject a received request. To do so, it requires the most recent load and energy data from the VM Supervisor and the Energy Observer, respectively [12].

• Consumer Details: It collects specific customer attributes so that key customers can be given preferential treatment and prioritized over others.

• Pricing: It determines how service requests are priced to manage the supply and demand of computing resources and to prioritize service deployments.

Customers/Clients: Private cloud consumers or their intermediaries can send service requests to the private cloud from anywhere in the world. It is important to note that private cloud customers and the users of deployed services are not always the same. For example, a consumer could be an organization deploying a Web service whose workload varies with the number of “clients” using it [13]. Lack of compliance, mismanagement of multiple clouds, under-performance in data sharing, and lack of resources are the main issues of the existing system.
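The Energy Observer role described above (deciding which physical machines are switched on or off) can be illustrated with a minimal Python sketch. The rule used here is an assumption made only for illustration, not the authors' algorithm: idle hosts are switched off when no requests are waiting, and one sleeping host is woken per waiting request. The names `HostStatus` and `energy_observer_decisions` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class HostStatus:
    name: str
    powered_on: bool
    vm_count: int  # number of VMs currently placed on this host


def energy_observer_decisions(hosts: List[HostStatus],
                              pending_requests: int) -> Dict[str, str]:
    """Return a power action ("on", "off" or "keep") for every host.

    Assumed rule (not taken from the paper): wake one sleeping host per
    waiting request; switch off powered-on hosts that run no VMs, but only
    when no requests are waiting.
    """
    decisions: Dict[str, str] = {}
    to_wake = pending_requests
    for host in hosts:
        if not host.powered_on and to_wake > 0:
            decisions[host.name] = "on"
            to_wake -= 1
        elif host.powered_on and host.vm_count == 0 and pending_requests == 0:
            decisions[host.name] = "off"
        else:
            decisions[host.name] = "keep"
    return decisions
```

In a fuller design the Service Scheduler and VM Supervisor would supply `pending_requests` and `vm_count`; here they are plain inputs so that the decision rule stays visible.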

This work concerns the creation of a private cloud platform for private cloud data centres that provides energy-efficient monitoring and a dynamic automated infrastructure design. To lower the cost of private cloud data, VMware and related technologies, as well as third-party cloud platforms and solution providers such as Amazon Elastic Compute Cloud (EC2), Amazon Simple Storage Service (S3), and Microsoft Azure, will be substantially reused. We also make use of solutions such as the Oracle database for constructing private clouds for general client applications, together with software connectors to various cloud management tools such as Oracle Database and the Amazon EC2 engine [14]. For the private cloud data centre, we have also designed an energy-aware dynamic automated server maintenance scheme that reconfigures private cloud resources based on currently available resources and network status. A pool of data centre resources in the form of computation, communication, and memory provides the dynamic infrastructure. Further, dynamic automated infrastructure aligns IT with corporate policies to enhance quality, interoperability, information availability, and throughput. It also increases the computing resources and the capacity to carry out maintenance work on both physical and virtual systems, avoiding business interruption without increasing IT costs.

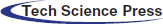

Figure 2: Resource management allocation for private cloud

4 Energy-Aware System Architecture for Private Cloud with Resource Allocation

The basic architecture comprises multiple physical servers in a large private cloud data centre. The communication system design consists of the global and local administrators and the dispatcher. Energy efficiency must be attained in such a way that improved data centre efficiency, while delivering cloud services, accounts for most of the gain. In addition, the cloud can handle multiple workloads at once, allowing it to divide resources more efficiently across a large number of consumers; the cloud data centre must therefore be designed to use minimum energy while achieving maximum efficiency. Local administrators provide global administrators with information on resource use and a list of VMs that have to be migrated. In this architecture, the physical nodes are single-core or multi-core, and their computational performance can be measured. The procedure is completed in several phases, as indicated in Fig. 2.

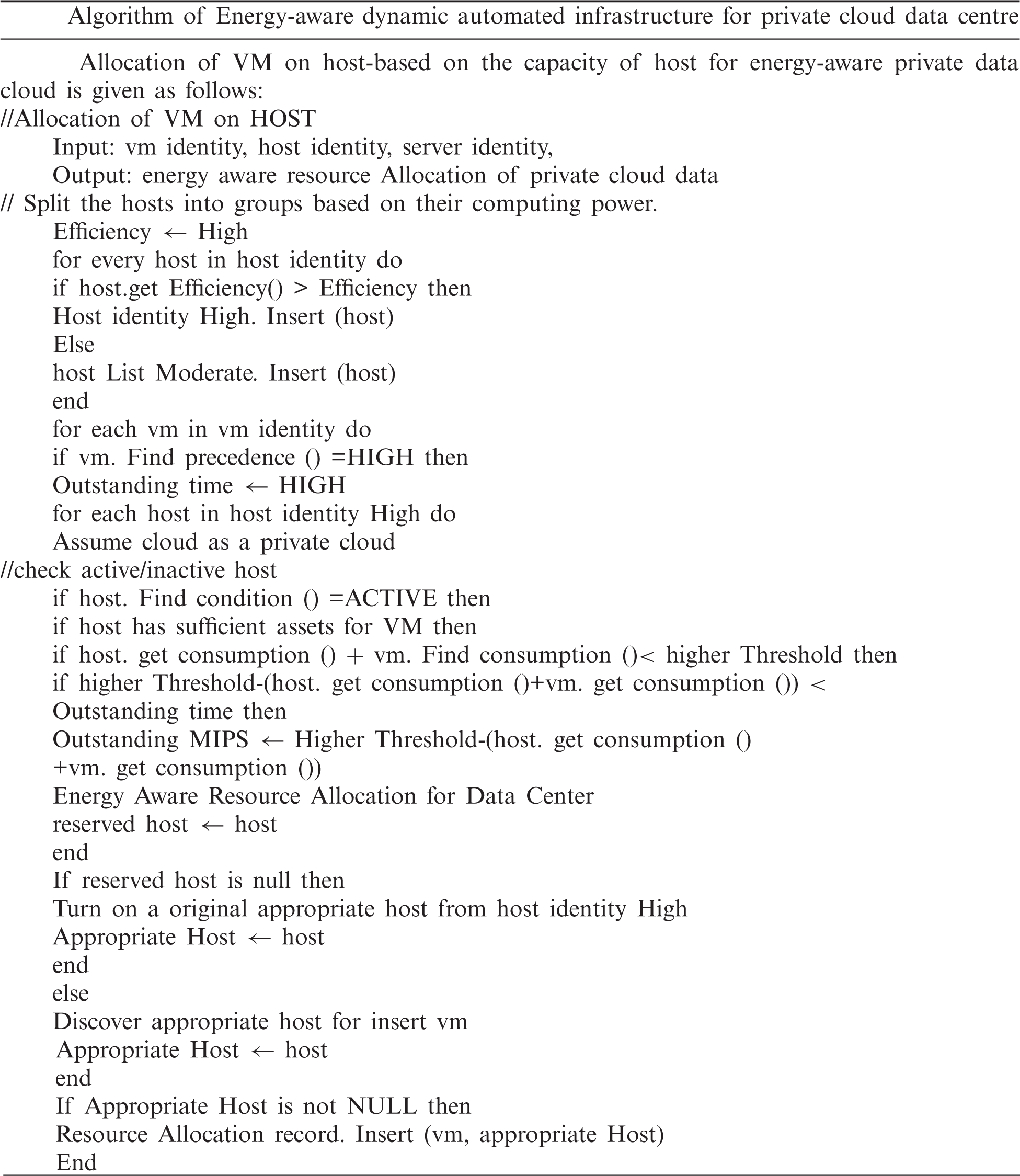

First, the available private cloud hosts are categorized, based on their processing power, into hosts with high processing capability and hosts with medium processing capability. For every VM, taken in priority order, the following conditions are evaluated against the list of private cloud servers with high processing power: (a) the private cloud server is fully operational rather than sleeping or idle; (b) the private cloud server has sufficient resources to service the VM; (c) the private cloud host does not become overloaded as a consequence of the VM's placement; and (d) the VM is placed on a host such that the resulting utilization approaches the upper threshold. If a private cloud host meets all of these criteria, the VM is deployed there. If no host qualifies, a sleeping/idle private cloud server with high processing capability is activated, and the VM is moved to this newly activated host.
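The placement conditions (a) to (d) above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: capacity is measured in MIPS only, the upper threshold value `UPPER_THRESHOLD = 0.9` is hypothetical, all hosts are kept in one list instead of separate high and medium capability lists, and the names `Host`, `VM`, and `place_vm` are invented for the example.

```python
from dataclasses import dataclass
from typing import List, Optional

UPPER_THRESHOLD = 0.9  # assumed upper utilization threshold (fraction of host MIPS)


@dataclass
class Host:
    name: str
    mips: float              # total processing capacity of the host
    used_mips: float = 0.0   # capacity already committed to placed VMs
    active: bool = True      # False means the host is sleeping/idle

    def utilization_after(self, demand_mips: float) -> float:
        """Utilization the host would have if a VM with this demand were added."""
        return (self.used_mips + demand_mips) / self.mips


@dataclass
class VM:
    name: str
    mips: float


def place_vm(vm: VM, hosts: List[Host]) -> Optional[Host]:
    """Place one VM; return the chosen host or None if nothing fits."""
    def fits(host: Host) -> bool:
        # (b) enough capacity and (c) no overload after placement:
        # staying at or below the threshold covers both conditions.
        return host.utilization_after(vm.mips) <= UPPER_THRESHOLD

    # (a) only hosts that are already active are considered first.
    candidates = [h for h in hosts if h.active and fits(h)]
    if candidates:
        # (d) prefer the host whose utilization ends up closest to the threshold.
        target = max(candidates, key=lambda h: h.utilization_after(vm.mips))
    else:
        # Fallback: wake the most capable sleeping host that can take the VM.
        sleeping = [h for h in hosts if not h.active and fits(h)]
        if not sleeping:
            return None
        target = max(sleeping, key=lambda h: h.mips)
        target.active = True
    target.used_mips += vm.mips
    return target
```

Packing each VM onto the active host that ends up closest to the threshold keeps the number of active hosts small, which is the point of condition (d); waking a sleeping host is the last resort.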

5 Energy Efficiency in Automated Infrastructure for Private Cloud Data

With Peer-to-Peer (P2P) technologies, greater private cloud data centre infrastructure is needed. The increasing use of smartphones as receivers of multimedia web traffic is a likely development that will make P2P more difficult to use. Many enterprises choose to outsource their data centres to specialist private cloud operators because monitoring host systems is time-consuming and costly. These operators enter into contracts with their clients that include Service Level Agreements (SLAs), which incentivize them to ensure that physical capacity is available at all times. As a result, storage devices and energy are used inefficiently. Moreover, when operating a purely physical dedicated server was the only real option, shutting off complete systems and employing dynamic voltage management were the available ways to save money [15]. With virtualization, the OS that interfaces directly with the physical server does not execute the actual applications but instead provides the ability to build a VM container that emulates hardware on which another OS and any application can run [16]. The ability to partition network resources into finer-grained virtualized environments that are logically fully independent from one another is a major benefit of virtualization.

QoE Measurement: QoE is determined by the overall acceptability of a service as perceived subjectively by the end-user. The principles above can be used to determine bounds for an acceptable QoE, which indicate a real deterioration in QoE when capacity is lowered [17]. The measure considered here for judging a change in QoE in the context of web browsing is the difference in page load time, in milliseconds.

The shape of the page load time curve $pl$ can be predicted by first noting that when the server capacity approaches 0, the expected page load time grows without bound [18]. So, if the page load time is a function of server capacity $x$, this can be expressed as $\lim_{x \to 0^{+}} pl(x) = \infty$.

The expected page load time must also approach a finite value $S$ asymptotically as capacity grows, i.e., $\lim_{x \to \infty} pl(x) = S$.

As a result, $pl(x)$, i.e., the average page load time, is likely to resemble a function that diverges as $x$ approaches 0 and flattens out at $S$ for large $x$.

If one capacity variable is configurable, the lower limit $S$ of the page load time for a given machine can be measured by raising that capacity until the load time stops improving.

This matters because the most important point is the one at which the improvement in QoE becomes insignificant even when capacity is greatly increased. After that point, it is probably best to find out where the application's bottleneck has moved and to start adding resources there [19].
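The description above constrains the page load time only qualitatively: it is unbounded as capacity approaches 0 and levels off at a lower limit S. As an illustration, the following Python sketch fits one possible curve with that behavior, pl(x) = S + a / x, to measured points by ordinary least squares. The functional form, the function name `fit_page_load_model`, and the sample values are assumptions made for this example and are not taken from the paper.

```python
from typing import List, Tuple


def fit_page_load_model(capacities: List[float],
                        load_times_ms: List[float]) -> Tuple[float, float]:
    """Least-squares fit of the assumed model pl(x) = S + a / x.

    The model is linear in z = 1 / x, so a simple linear regression of the
    load time on z yields the slope a and the intercept S.
    Returns (S, a).
    """
    if len(capacities) != len(load_times_ms) or len(capacities) < 2:
        raise ValueError("need at least two (capacity, load time) pairs")
    z = [1.0 / x for x in capacities]          # inverse capacity as regressor
    n = len(z)
    z_mean = sum(z) / n
    y_mean = sum(load_times_ms) / n
    sxx = sum((zi - z_mean) ** 2 for zi in z)
    if sxx == 0.0:
        raise ValueError("capacities must not all be equal")
    sxy = sum((zi - z_mean) * (yi - y_mean) for zi, yi in zip(z, load_times_ms))
    a = sxy / sxx
    s = y_mean - a * z_mean                    # estimated lower limit S
    return s, a


# Hypothetical measurements for 1, 2, 4 and 7 enabled servers.
capacities = [1.0, 2.0, 4.0, 7.0]
load_times = [200.0 + 600.0 / x for x in capacities]
S, a = fit_page_load_model(capacities, load_times)
```

Once S is estimated, the gap between a measured load time and S indicates how much QoE can still be gained by adding capacity.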

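Energy Efficiency Measures: The most widely used and accepted measure of private cloud data centre energy performance is power usage effectiveness (PUE), defined as the ratio of the total energy consumed by the data centre facility to the energy consumed by its IT equipment.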

For a virtualized environment, the following model of server power consumption has been proposed:

$$P_{server} = PC_{standBy} + PC_{hypervisorIdle} + K \cdot N_{VM} + q \cdot U_{active}$$

where $PC_{standBy} + PC_{hypervisorIdle}$ represents the private cloud server power usage with no virtual machines running, $K \cdot N_{VM}$ represents the power that $N_{VM}$ virtual machines consume in the idle state, and $q \cdot U_{active}$ represents the power consumed by running applications. The following is used here as a metric for the energy efficiency of the virtualized environment:

$N_{host}$ is the number of physical servers hosting the virtual machines, while $N_{VM}$ is the number of “standard” virtual machines with a fixed amount of computing and memory capacity.
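The two measures discussed in this section can be evaluated with a short Python sketch. It assumes that the three power contributions named in the text (standby plus idle hypervisor, idle VMs, and running applications) simply add up, and it uses the standard definition of PUE as total facility energy divided by IT equipment energy. The function names and all numeric values in the usage lines are hypothetical.

```python
def server_power_w(pc_standby: float, pc_hypervisor_idle: float,
                   k: float, n_vm: int, q: float, u_active: float) -> float:
    """Server power in watts, assuming the listed contributions are additive.

    pc_standby + pc_hypervisor_idle: power with no virtual machines running
    k * n_vm:                        power of n_vm idle virtual machines
    q * u_active:                    power of running applications, with
                                     u_active taken as a 0..1 activity level
                                     (an assumption of this sketch)
    """
    return pc_standby + pc_hypervisor_idle + k * n_vm + q * u_active


def pue(total_facility_energy: float, it_equipment_energy: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_energy <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_energy / it_equipment_energy


# Hypothetical example values, chosen only to exercise the functions.
example_power = server_power_w(100.0, 60.0, 5.0, 4, 115.0, 0.5)
example_pue = pue(150.0, 100.0)
```

A PUE close to 1 means that almost all energy reaches the IT equipment, so the two measures are complementary: the power model covers the servers themselves, and PUE covers the facility overhead around them.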

6 Experimental Results and Outcomes

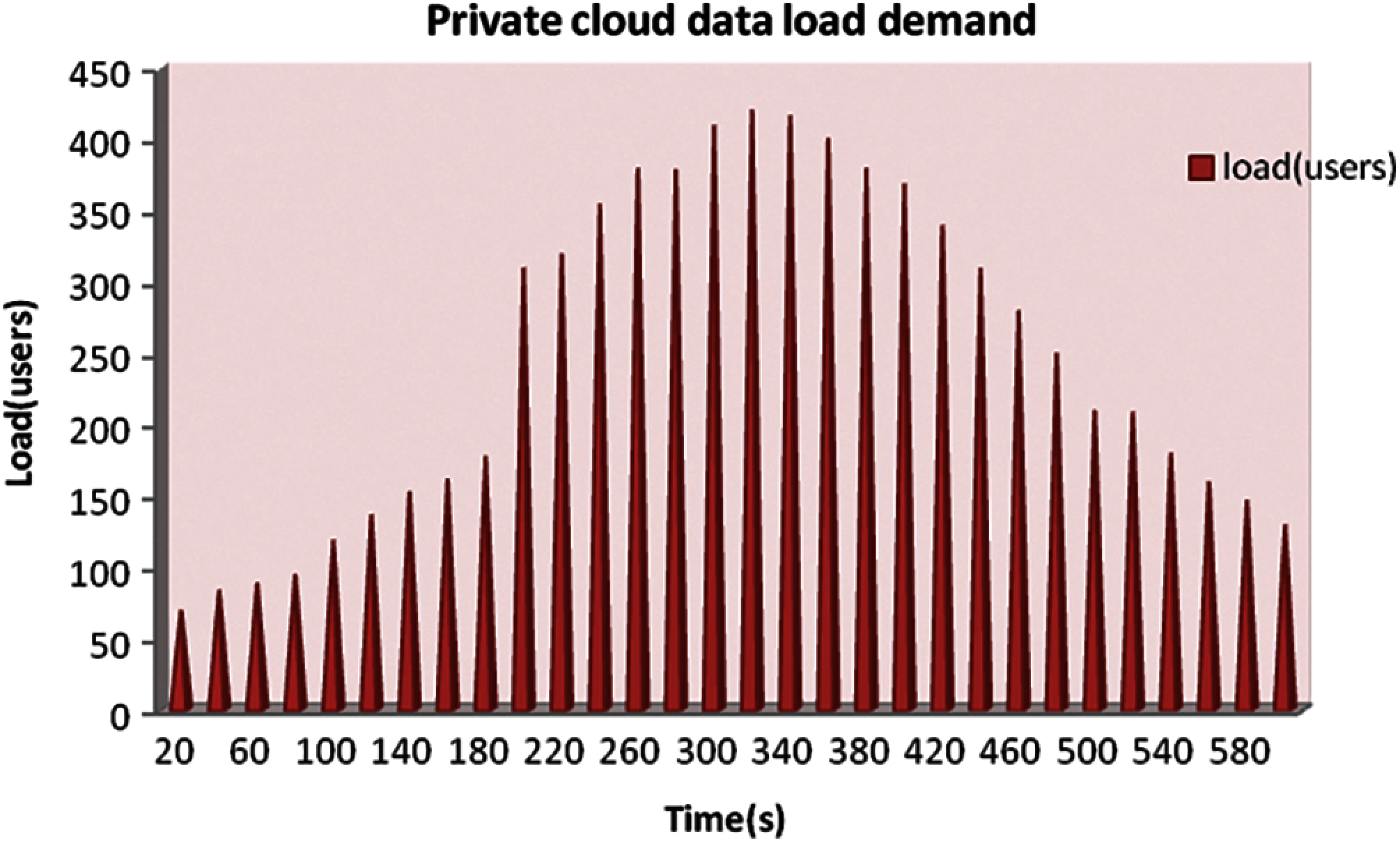

A private cloud data centre with 125 heterogeneous edge node devices is modeled for simulation. Each node is modeled with one CPU core with a performance of 1250, 2500, or 3750 MIPS, 8 GB of RAM, and 1 GB of internal storage. The hosts' energy efficiency is determined by the QoE and PUE models. According to this model, a host's power consumption ranges from 160 W at 0% CPU utilization to 275 W at 100% CPU utilization. Each VM requires one CPU core with 275, 550, 825, or 1100 MIPS (million instructions per second), 128 MB of RAM, and 1 GB of storage. Users submit requests for the deployment of 250 heterogeneous VMs, which fill the simulated private data centre to maximum capacity. Each VM runs a software platform or other application with a variable workload, which is modeled to generate CPU utilization according to a random variable. On a 275 MIPS CPU with full utilization, the application runs for 165,000 million instructions, which is equivalent to 12 min of processing. Initially, VMs are allocated according to the requested characteristics, assuming that they will be fully utilized. Each experiment was run 12 times, and the reported results are based on the mean values. A Dynamic Automated Infrastructure network testing device was used to generate load on the private cloud data centre and capture responses with timing. The setup allows the creation of a variable load in which the base load and its fluctuation can be precisely controlled on a “number of concurrent users” basis, which is ideal for simulating a typical private cloud load environment. Unfortunately, the equipment has certain limitations when it comes to evaluating private cloud server response times, as it only delivers median, maximum, and minimum values.
A load profile for a 12-minute testing period was created, with the base load of the private cloud data centre being 125 concurrent clients at first, then 375, and finally 250, with a fluctuation of 75 users on top. The number of concurrent clients as a function of time is depicted in Fig. 3 as the private cloud data load profile. In this load profile, each simulated client issues a stream of dynamic automated infrastructure requests to the host, simulating the behavior of a cloud-based client fetching information from a website. The experiment using the load demand shown in Fig. 3 was run 14 times on the experimental setup with two variables: the number of virtualized servers enabled, from 1 to 7, and whether or not SSL encryption was enabled. Fig. 3 represents the number of concurrent clients waiting for a complete response from the cloud server as a function of the time elapsed in the experiment. The main limitations in cloud data centres are poor communication between data centres, which prevents them from efficiently negotiating and managing resources, no availability of multi-tiered contractual obligations to clients, and no assurance of consumer satisfaction.
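The host power figures in the setup above (160 W at 0% and 275 W at 100% CPU utilization) can be turned into a simple utilization-based power model. The text gives only the two endpoints, so the linear interpolation between them is an assumption of this sketch, as are the function names `host_power_w` and `energy_wh`.

```python
from typing import Iterable

P_IDLE_W = 160.0  # host power at 0% CPU utilization (from the setup)
P_MAX_W = 275.0   # host power at 100% CPU utilization (from the setup)


def host_power_w(cpu_utilization: float) -> float:
    """Host power in watts for a utilization in [0, 1].

    Assumes power grows linearly between the idle and full-load values.
    """
    if not 0.0 <= cpu_utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return P_IDLE_W + (P_MAX_W - P_IDLE_W) * cpu_utilization


def energy_wh(utilizations: Iterable[float], step_seconds: float) -> float:
    """Energy in watt-hours for utilization samples taken every step_seconds."""
    return sum(host_power_w(u) for u in utilizations) * step_seconds / 3600.0
```

Under this model an idle host still draws about 58% of its peak power (160 W of 275 W), which is why switching idle hosts off saves more energy than merely lowering their load.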

Figure 3: Private cloud data load demand

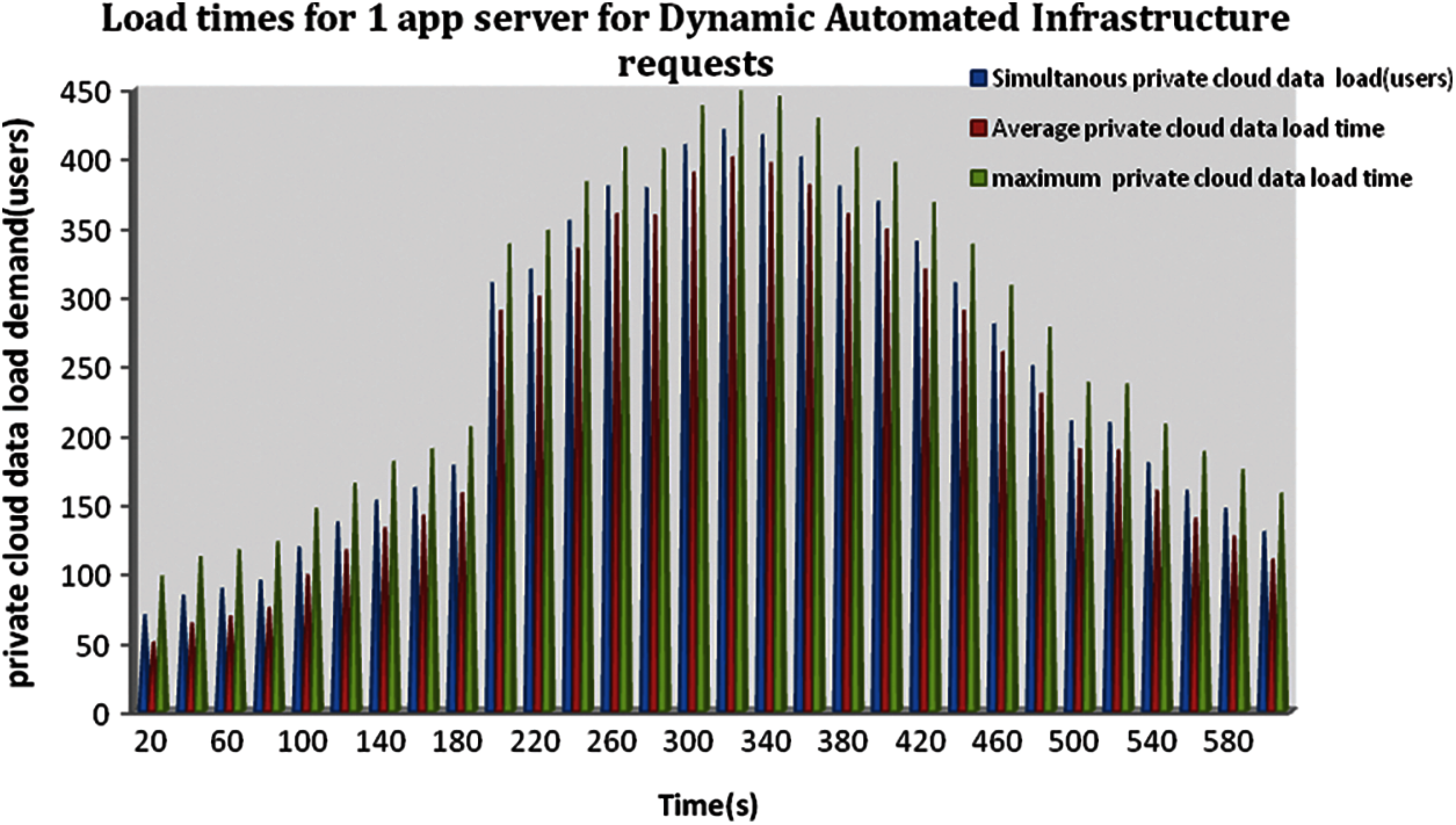

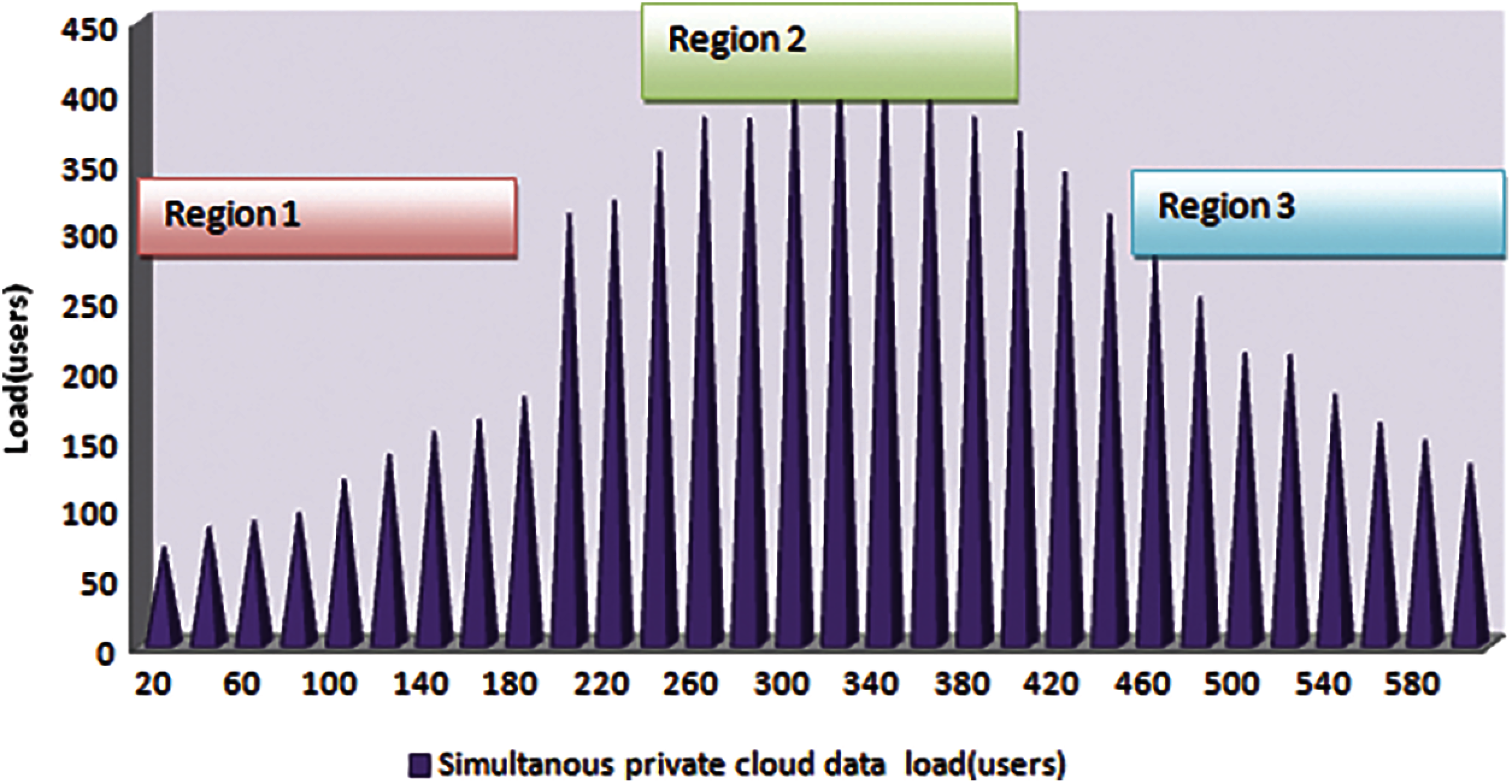

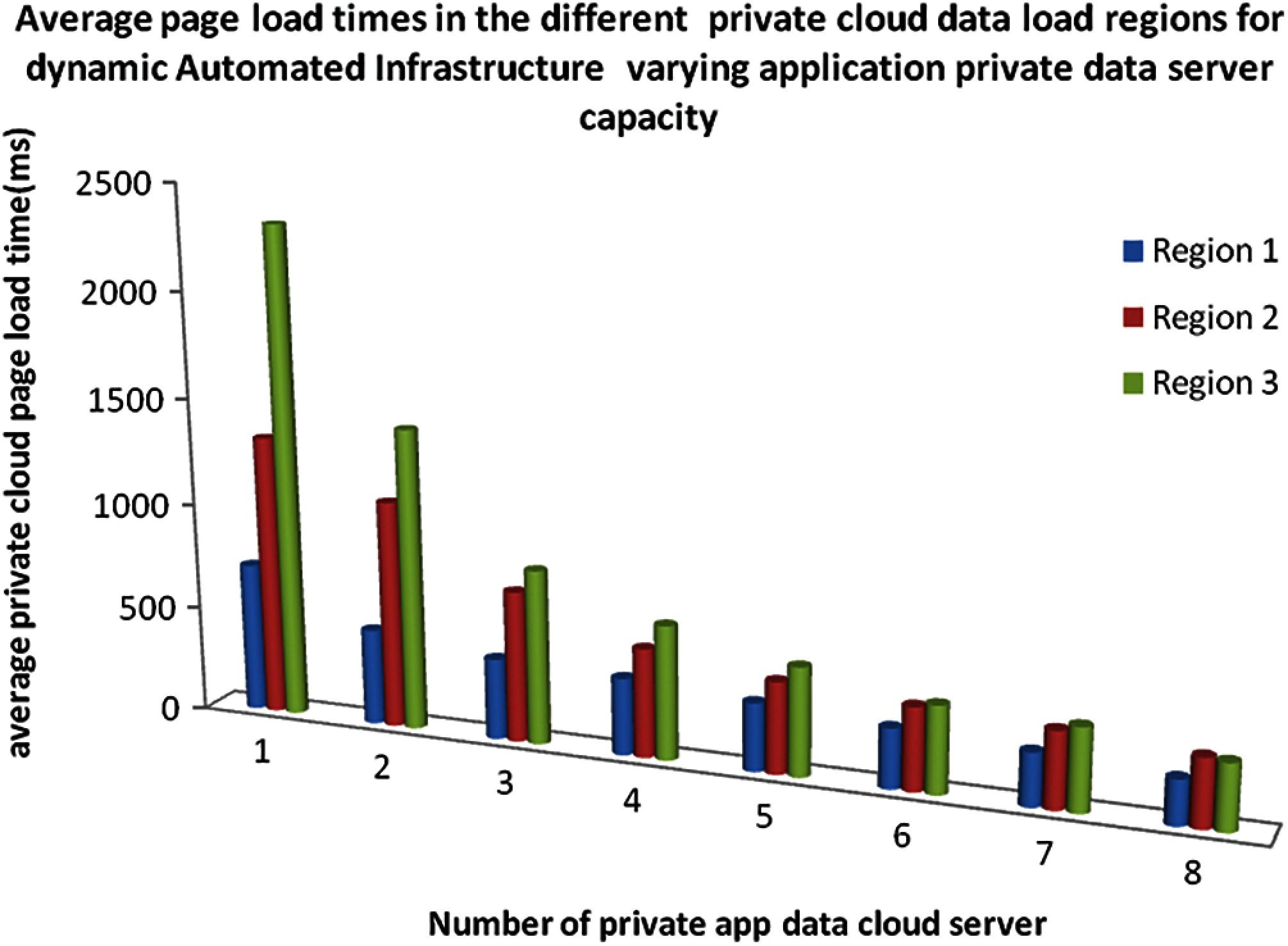

To best visualize the information gathered for this work, the mean private cloud load time is plotted as a function of time, with the number of concurrent clients shown for comparison, together with the maximum load time to indicate the outliers. Fig. 4 depicts the load times for a single application server for dynamic requests in the private cloud. Because a single figure contains several distinct phases of private cloud data load, it is difficult to see the development of the QoE properly; Fig. 5 therefore marks the time regions used for averaging. Three 100-second time periods of relatively stable load are picked from the load profile to summarize the results and assess the impact on QoE.

Figure 4: Load times for 1 app server for dynamic automated infrastructure requests

Figure 5: Visualization of the time regions from which private cloud data is averaged in the combined plot

Because a single graph contains many different phases of private cloud data load, it is difficult to see the evolution of the QoE correctly. Three 110-second time regions of approximately constant load were therefore identified from the load profile, according to Tab. 1 and Fig. 5, to summarize the results and assess the effects on QoE. The regions are chosen such that the system is most likely in steady state after the initial transients induced by raising or lowering the number of concurrent private cloud clients, as well as between median load zones. The results of averaging the load times in these regions are shown in Fig. 6 for dynamic automated infrastructure.
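The averaging step described above can be sketched as follows. The region boundaries are placeholders, since the actual values belong to Tab. 1; the function name `region_means` and the sample data are likewise hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def region_means(samples: List[Tuple[float, float]],
                 regions: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Average load time per steady-state region.

    samples: (time in seconds, load time in ms) pairs
    regions: name -> (start, end) in seconds; start inclusive, end exclusive
    Regions without any sample are left out of the result.
    """
    result: Dict[str, float] = {}
    for name, (start, end) in regions.items():
        values = [load for t, load in samples if start <= t < end]
        if values:
            result[name] = mean(values)
    return result


# Placeholder regions of 110 s each; the real boundaries come from Tab. 1.
regions = {"low": (100.0, 210.0), "high": (340.0, 450.0), "medium": (580.0, 690.0)}
samples = [(100.0, 10.0), (150.0, 20.0), (209.0, 30.0), (210.0, 999.0),
           (340.0, 40.0), (400.0, 60.0)]
averages = region_means(samples, regions)
```

Samples that fall in the transitions between regions, such as the one at 210 s here, are ignored, which keeps transient spikes out of the averages.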

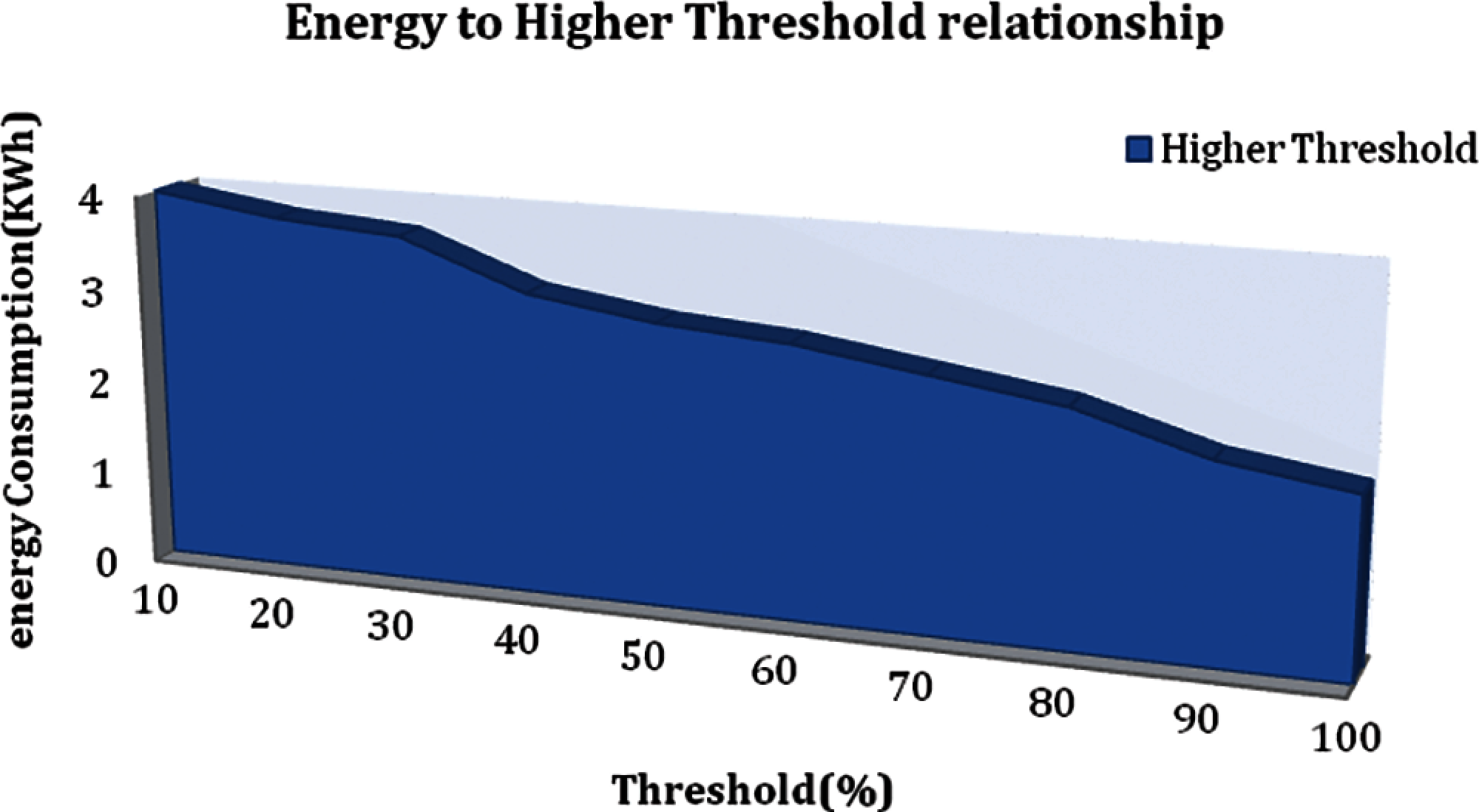

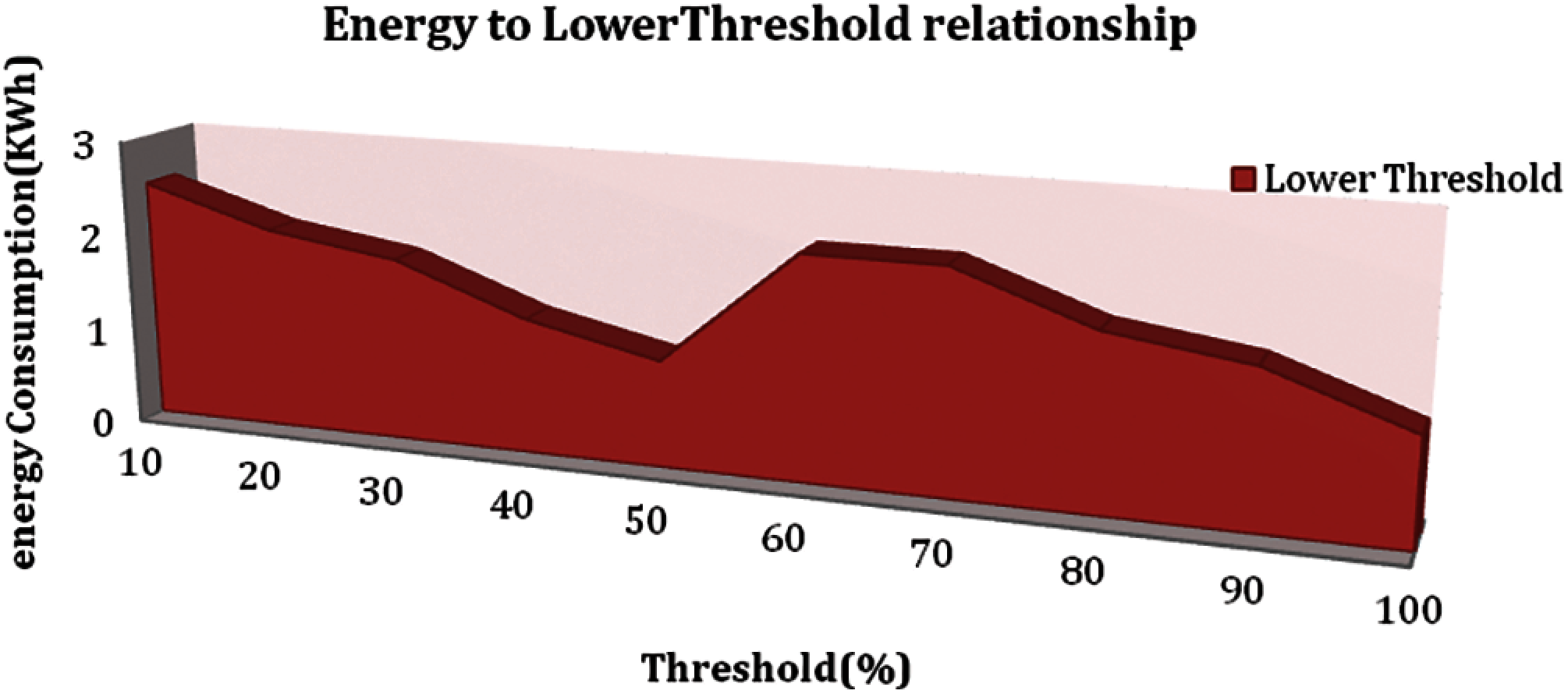

To evaluate the two-threshold policy, the optimal utilization thresholds in terms of energy efficiency and delivered QoS must be determined. We therefore simulated the energy-efficient policy with various thresholds, varying both the absolute values of the thresholds and the interval between the upper and lower thresholds. Figs. 7 and 8 report how energy use changes with the upper and lower threshold values, respectively. Varying the lower threshold from 10% to 80% and the upper threshold from 40% to 90% yields the lowest energy usage values. The range of threshold intervals obtained in this way, however, is wide. We therefore compared the thresholds by the percentage of SLA violations they cause to arrive at concrete values, since few SLA violations indicate high QoS. The experiments show that the minimal values of both characteristics are reached with an interval of 38% between the utilization thresholds.
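A two-threshold policy of the kind evaluated above can be illustrated with a small classifier. The concrete pair of thresholds (40% and 78%) is hypothetical; it is chosen only so that the interval equals the 38% reported in the text. The function name `classify_host` and the labels are also assumptions of this sketch.

```python
LOWER_THRESHOLD = 0.40  # hypothetical lower utilization threshold
UPPER_THRESHOLD = 0.78  # hypothetical upper threshold, 38 points above the lower


def classify_host(cpu_utilization: float,
                  lower: float = LOWER_THRESHOLD,
                  upper: float = UPPER_THRESHOLD) -> str:
    """Classify a host by CPU utilization (0..1) under a two-threshold policy.

    "underloaded": below the lower threshold (a candidate for emptying)
    "overloaded":  above the upper threshold (a risk of SLA violations)
    "normal":      between the thresholds, no action needed
    """
    if not 0.0 <= cpu_utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if lower >= upper:
        raise ValueError("lower threshold must be below upper threshold")
    if cpu_utilization < lower:
        return "underloaded"
    if cpu_utilization > upper:
        return "overloaded"
    return "normal"
```

A narrow interval makes hosts change class often and triggers more migrations, while a wide interval leaves hosts underused or overloaded for longer, so the interval width is the parameter that balances energy use against SLA violations.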

Figure 6: Average page load times in the different private cloud data load regions for dynamic automated infrastructure varying application private data server capacity

Figure 7: Energy to higher threshold relationship

Figure 8: Energy to lower threshold relationship

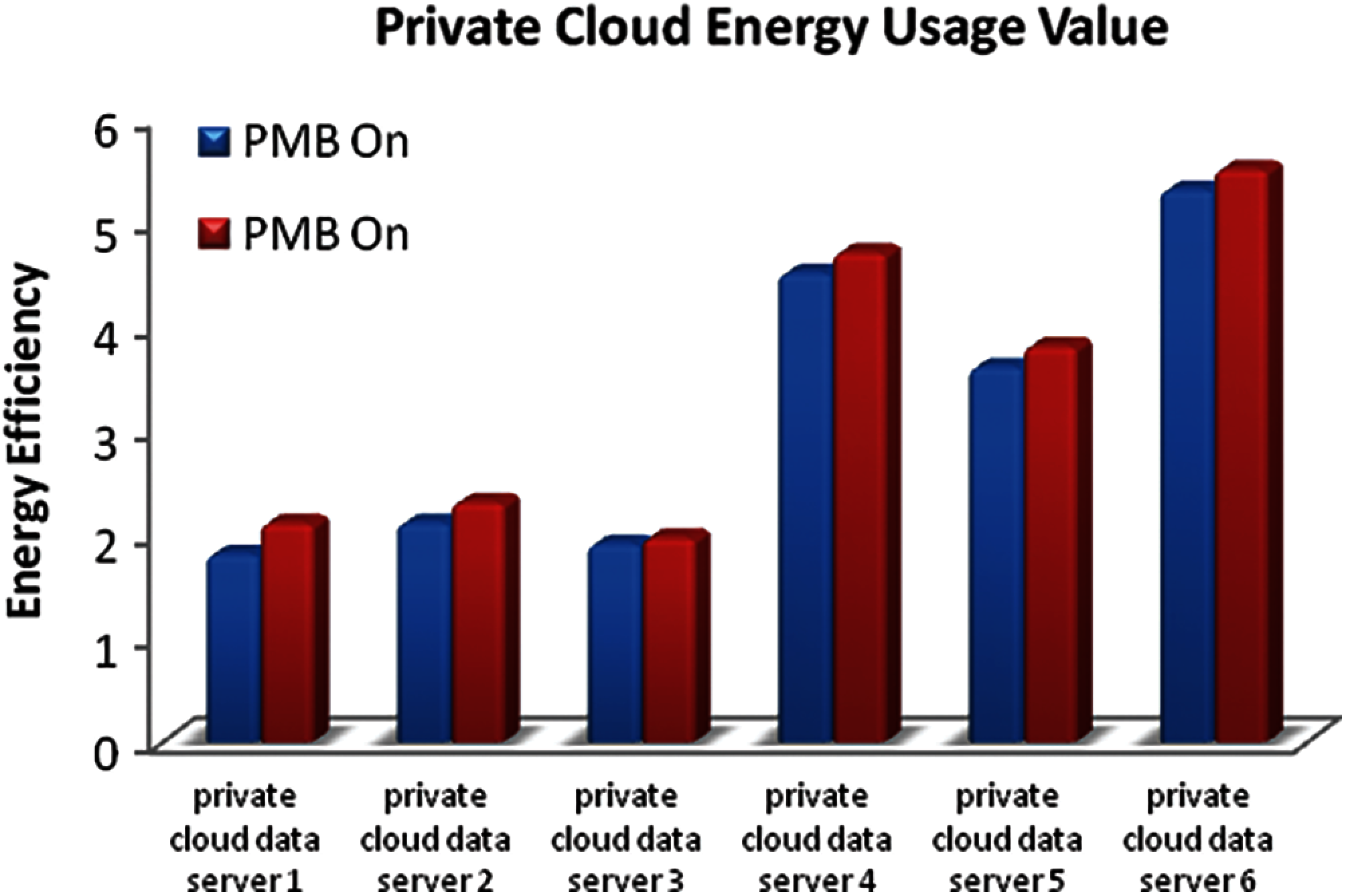

The network infrastructure environment is composed of private cloud computing systems that make up the physical data centre. We used the energy management capabilities of the private cloud Data Center Manager to monitor and analyze the servers' energy consumption. In idle mode, a private cloud data server draws roughly 370 W. This value is used as the baseline for the private data server's energy usage, and the collected measurements are used to produce the Energy Consumed value. Fig. 9 compares the sample Energy Consumed value over time with the Power Management Broker (PMB) components switched on and off. According to the findings, energy consumption managed using PMB, based on the Energy Consumed value definition and the private cloud energy usage value, can be reduced by 2% to 14.9%.

Figure 9: Private cloud energy usage value

The energy management strategies are applied here efficiently at the architectural level for energy-efficient private cloud computing. The limitations concern cloud computer integrations, which reduce the cost of physical devices and the energy consumption in data centres that use common-format servers while maintaining QoS.

The proposed energy-efficient cloud application management architecture can discover, identify, and manage data warehouse components. Dynamic infrastructure relies mostly on software to manage these components as groups, regardless of their physical position inside one or more data centers. By identifying data centre assets, private organizations can create and manage several service tiers, ensuring that more expensive applications get more computing resources. The presented architecture also pools resource categories, such as computation, communication, and storage, and can continuously provision and adjust them as workload needs vary. This is referred to as dynamic architecture. In addition, dynamic convergence of VMs allows up-to-date energy consumption and the thermal state of processing nodes to be taken into account, and energy efficiency has also been achieved through authentication and authorization between VMs or network virtualization configurations.

This paper presents the development of an energy-efficient dynamic automated infrastructure model for the cloud data centre. An energy-aware dynamic automated infrastructure algorithm for private cloud data centres has been proposed, and the energy efficiency measures have been evaluated by simulation. They include the private cloud energy usage value, the energy to lower threshold relationship, the energy to higher threshold relationship, the average page load times in the different private cloud data load regions for dynamic automated infrastructure with varying application private data server capacity, a visualization of the time regions from which private cloud data is averaged in the combined plot, and the load times for one app server for dynamic automated infrastructure requests and private cloud. The proposed energy-efficient resource management methods are shown to lower operating expenses without compromising the quality of service in a private cloud environment.

Funding Statement: This research work was fully supported by King Khalid University, Abha, Kingdom of Saudi Arabia, through a Large Research Project under grant number RGP/161/42.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

Informed Consent: This article does not contain any studies with human participants, hence no informed consent is declared.

1. S. Svorobej, J. Byrne, P. Liston, P. J. Byrne, C. Stier et al., “Towards automated data-driven model creation for cloud computing simulation,” in Proc. of the 8th Int. Conf. on Simulation Tools and Techniques, Athens, Greece, pp. 248–255, 2015.

2. C. DeCusatis, M. Haley, T. Bundy, R. Cannistra, R. Wallner et al., “Dynamic, software-defined service provider network infrastructure and cloud drivers for SDN adoption,” in 2013 IEEE Int. Conf. on Communications Workshops (ICC), Budapest, Hungary, pp. 235–239, 2013.

3. A. Uchechukwu, K. Li and S. Yanming, “Energy consumption in cloud computing data centers,” International Journal of Cloud Computing and Services Science (IJ-CLOSER), vol. 3, no. 3, pp. 31–48, 2014.

4. S. Ismaeel, R. Karim and A. Miri, “Proactive dynamic virtual-machine consolidation for energy conservation in cloud data centers,” Journal of Cloud Computing: Advances, Systems and Applications, vol. 7, no. 10, pp. 1–28, 2018.

5. C. T. Yang, S. T. Chen, J. C. Liu, Y. W. Chan, C. C. Chen et al., “An energy-efficient cloud system with novel dynamic resource allocation methods,” Journal of Supercomputing, vol. 75, pp. 4408–4429, 2019.

6. V. Sarathy, P. Narayan and R. Mikkilineni, “Next generation cloud computing architecture: enabling real-time dynamism for shared distributed physical infrastructure,” in 19th IEEE Int. Workshops on Enabling Technologies: Infrastructures for Collaborative Enterprises, Larissa, Greece, pp. 48–53, 2010.

7. S. Abar, P. Lemarinier, G. K. Theodoropoulos and G. M. P. O'Hare, “Automated dynamic resource provisioning and monitoring in virtualized large-scale datacenter,” in IEEE 28th Int. Conf. on Advanced Information Networking and Applications, Victoria, BC, Canada, pp. 961–970, 2014.

8. P. Zhang, S. Shu and M. Zhou, “An online fault detection model and strategies based on SVM-grid in clouds,” IEEE/CAA Journal of Automatica Sinica, vol. 5, no. 2, pp. 445–456, 2018.

9. B. P. Singh, S. A. Kumar, X. Z. Gao, M. Kohli and S. Katiyar, “A study on energy consumption of DVFS and simple VM consolidation policies in cloud computing data centers using CloudSim toolkit,” Wireless Personal Communications, vol. 112, pp. 729–741, 2020.

10. R. Buyya, A. Beloglazov and J. Abawajy, “Energy-efficient management of data center resources for cloud computing: vision, architectural elements, and open challenges,” Cloud Computing and Distributed Systems (CLOUDS) Laboratory, Department of Computer Science and Software Engineering, The University of Melbourne, Australia, pp. 1–12, 2020.

11. A. Beloglazov and R. Buyya, “Energy efficient resource management in virtualized cloud data centers,” in CCGrid 2010, 10th IEEE/ACM Int. Conf. on Cluster, Cloud and Grid Computing, Melbourne, VIC, Australia, pp. 826–831, 2010.

12. A. Beloglazov, J. Abawajy and R. Buyya, “Energy-aware resource allocation heuristics for efficient management of data centers for cloud computing,” Future Generation Computer Systems, vol. 28, no. 5, pp. 755–768, 2012.

13. D. Deng, K. He and Y. Chen, “Dynamic virtual machine consolidation for improving energy efficiency in cloud data centers,” in Proc. of the 2016 4th IEEE Int. Conf. on Computational Systems and Information Technology for Sustainable Solutions CCIS 2016, Beijing, China, pp. 366–370, 2016.

14. P. Malik, V. Yadav, A. Kumar, R. Kumar and G. Sahoo, “Method and framework for virtual machine consolidation without affecting QoS in cloud datacenters,” in 2016 IEEE Int. Conf. on Cloud Computing in Emerging Markets (CCEM), Bangalore, India, pp. 141–146, 2016.

15. E. Zola and A. J. Kassler, “Energy efficient virtual machine consolidation under uncertain input parameters for green data centers,” in IEEE 7th Int. Conf. on Cloud Computing Technology and Science, CloudCom 2015, Vancouver, BC, Canada, pp. 436–439, 2015.

16. M. Dayarathna, Y. Wen and R. Fan, “Data center energy consumption modeling: A survey,” IEEE Communications Surveys and Tutorials, vol. 18, no. 1, pp. 732–794, 2016.

17. Y. Liu, X. Sun, W. Wei and W. Jing, “Enhancing energy-efficient and QoS dynamic virtual machine consolidation method in cloud environment,” IEEE Access, vol. 6, pp. 31224–31235, 2018.

18. M. A. Khoshkholghi, M. N. Derahman, A. Abdullah, S. Subramaniam and M. Othman, “Energy-efficient algorithms for dynamic virtual machine consolidation in cloud data centers,” IEEE Access, vol. 5, pp. 10709–10722, 2017.

19. R. Dhaya, R. Kanthavel and M. Mahalakshmi, “Enriched recognition and monitoring algorithm for private cloud data centre,” Soft Computing, pp. 1–11, 2021.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |