DOI:10.32604/cmc.2022.020820

Computers, Materials & Continua

Article

Kernel Granulometric Texture Analysis and Light RES-ASPP-UNET Classification for Covid-19 Detection

1Department of Computer Science and Engineering, KPR Institute of Engineering and Technology, Coimbatore, 641407, India

2Department of Computer Science, CHRIST (Deemed to be University), Bangalore, 560029, India

3Department of Applied Cybernetics, Faculty of Science, University of Hradec Králové, 50003, Hradec Králové, Czech Republic

4Computer Sciences Department, College of Computer and Information Sciences, Princess Nourah Bint Abdulrahman University, Riyadh, KSA. P.O. Box: 84428, Postal Code: 11671

*Corresponding Author: Ahmed Zohair Ibrahim. Email: azibrahim@pnu.edu.sa

Received: 09 June 2021; Accepted: 23 August 2021

Abstract: This research article proposes an automatic framework for detecting COVID-19 at an early stage using chest X-ray images. Coronavirus disease is serious, but early detection of the virus in the human body can save lives. Many research solutions for early detection have been presented recently, yet a reliable and rich technology for early detection is still lacking. The proposed deep learning model analyses the pixels of every image and judges whether the virus is present. The classifier is designed to detect the virus in the lungs automatically from a chest image. The approach uses an image texture analysis technique called the granulometric mathematical model. The selected features are heuristically processed for optimization using a novel multi-scale deep learning model called the lightweight residual atrous spatial pyramid pooling Unet (LightRES-ASPP-Unet). The proposed deep LightRES-ASPP-Unet technique has a deeper contracting path that extracts high-level image features, and the coronavirus is detected from a high-resolution output. In the framework, atrous spatial pyramid pooling (ASPP) is employed at the bottom level to incorporate deep multi-scale features into the discriminative model. The architecture first selects features from the image using the granulometric mathematical model, and the selected features are then optimized using LightRES-ASPP-Unet. In the image analysis, ASPP performed better than the existing Unet model. The proposed algorithm achieved an accuracy of 99.6% in detecting the virus at an early stage.

Keywords: Deep residual learning; convolutional neural network; COVID-19; X-ray; principal component analysis; granulometric texture analysis

COVID-19 causes severe immune problems. The second wave of COVID-19 has caused more deaths because of reduced oxygen saturation, and society has been affected far more in the second wave than in the first. The virus rapidly attacks the alveolar cells, which lowers the oxygen saturation in the body. Most deaths occur when the involvement of the virus exceeds 70%. When the involvement is mild, i.e., below 15%, saving the patient's life is not difficult. Early detection of the coronavirus in the lungs is therefore one of the most important life-saving measures. It is very important to detect COVID-19 as early as possible to prevent pneumonia [1] among patients. Although vaccination has begun, vaccines are not yet readily available to all citizens. To keep the virus at bay, measures such as making the vaccine universally available, early prediction and prevention can be adopted [2]. At present, COVID-19 is detected primarily through the Polymerase Chain Reaction (PCR) test, which health care practitioners consider the more reliable option [3,4]. Despite these advantages, PCR is a very time-consuming process, and its kits are sometimes unavailable in some regions. X-ray and CT images are alternatives to PCR [5,6].

Currently, patients with respiratory symptoms such as fever, cough and dyspnea are diagnosed using nasopharyngeal swabs taken for RT-PCR tests [7,8]. Clinical tests on different specimens, such as sputum, feces, bronchoalveolar lavage (BAL) and blood (extrapulmonary transmission), reveal the infections caused by COVID-19 [9]. Based on the immune response, anti-COVID proteins (antibodies) can be detected early. Sometimes the diagnosis takes nearly three weeks from the onset of the disease [10]. Chest imaging offers a sensitivity for detecting COVID-19 comparable to that of the nasopharyngeal swab [11]. The radiology result helps doctors evaluate whether COVID-19 is at an emergency or a mild stage. The chest X-ray (CXR) is the first-line tool [12,13] for diagnosing COVID-19. However, a radiologist may sometimes misjudge whether a case is COVID-19 or pneumonia. This motivates the proposed work to use chest X-ray images for detecting COVID-19 in its early phase. The main contributions of the study are as follows.

(1) Kernel PCA combined with granulometric analysis performs texture analysis to improve the clarity and resolution of the image.

(2) A lightweight residual network is built on the ASPP-Unet architecture to obtain better classification accuracy.

(3) The CNN layers are trained and tested on pixel-level data to identify COVID-19 infection in the lungs.

This paper is arranged as follows: Section 2 presents the literature survey, and Section 3 describes the methods used. Section 4 describes the results obtained for COVID-19 detection. Section 5 concludes the work with some suggestions for future enhancement.

The approach called “neuro-evolution” [14,15] is of particular interest; it encodes various network structures using a heuristic genetic algorithm (GA) [16,17]. This optimization framework helps to improve the network during training [18,19]. Deep neural networks achieve high accuracy because their training is tailored to the classification pattern. There are many evolutionary classifiers for multi-objective problems [20,21], and nature-inspired techniques such as swarm intelligence [22] have recently been combined with convolutional networks for optimal results. An adaptive convolutional structure for unsupervised progressive learning [23,24] is designed using a pre-trained deep CNN, which performs the selection process automatically [25].

The automatic selection of a CNN framework for image classification [26,27] is also carried out using CNN parameters and a GA. However, the genetic algorithm has high complexity and requires a large search space. Most drawbacks of the GA are overcome by hyperparameter optimization [28,29], which has shown promising results with three convolutional layers. This approach still has limitations, and three-layer structures were found to be insufficient for all problems. In general, the number of layers that gives the optimum solution is not known in advance. Hence, during training and optimization [30], the layers must be increased dynamically with a slow evolutionary approach. The proposed method overcomes these drawbacks by optimizing the solution for early detection of COVID-19; it builds a fine-tuned DL model with texture analysis.

3 Methods Used for Texture Analysis and Classification

In this research, the LightRES-ASPP-Unet technique is proposed for classifying COVID-19 infection from X-ray images. As in other supervised classification techniques, the process comprises two stages: training and testing. Unlike conventional techniques, the training strategy uses pairs of image patches in which each pixel has a class label. The optimal parameters are learned iteratively through back propagation. In the classification stage, the trained ASPP model is applied to the input image to predict the class of each pixel.

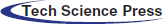

3.1 KPCA Feature (Attribute) Reduction

KPCA extends PCA to the nonlinear case by means of the kernel method. Using the kernel, KPCA captures higher-order information in the original data and generalises PCA to nonlinear structure, which gives a better generalization result. KPCA can also extract a greater number of principal components than PCA.
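The kernel step described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the RBF kernel, the `gamma` value, the function name `rbf_kernel_pca` and the random demo data are all assumptions chosen for illustration.

```python
import numpy as np

def rbf_kernel_pca(X, n_components=2, gamma=1.0):
    """Project the rows of X onto the leading kernel principal components."""
    # Pairwise squared Euclidean distances between samples.
    sq_norms = np.sum(X ** 2, axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * X @ X.T
    K = np.exp(-gamma * np.maximum(sq_dists, 0.0))  # RBF kernel (assumed choice)

    # Centre the kernel matrix, i.e. centre the data in feature space.
    n = K.shape[0]
    one_n = np.full((n, n), 1.0 / n)
    K_centered = K - one_n @ K - K @ one_n + one_n @ K @ one_n

    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(K_centered)
    order = np.argsort(eigvals)[::-1][:n_components]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    # The projection of training sample i on component k is sqrt(lambda_k) * v_k[i].
    return eigvecs * np.sqrt(np.maximum(eigvals, 0.0))

# Hypothetical demo data: 20 samples with 5 attributes each.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(20, 5))
Z_demo = rbf_kernel_pca(X_demo, n_components=3, gamma=0.1)
```

Because the kernel matrix is n × n for n samples, KPCA can return up to n components, which is more than the number of input attributes; this is one way to read the remark that it extracts more principal components than PCA.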

3.2 Granulometric (GLM) Texture Analysis

Although granulometry is not widely used for image feature selection, its advantages have been demonstrated experimentally [31]. GLM is based on a sequence of morphological opening and closing operations. The main operation measures the difference between successive images in the sequence, which makes it possible to quantify particles of various sizes. GLM was first implemented by Haas, Matheron and Serra for local analysis, and it was later improved by assigning a texture value to each individual pixel of the image. Its accuracy in classifying satellite images was shown in previous studies, where the analysis was performed using opening, closing and multiple structuring elements. GLM shows different properties depending on the image. The lesser-known advantages of GLM texture analysis are described below.

(1) Multi-scaling of images: The size of the structuring element can be increased successively across the morphological opening/closing operations. The output of the operations indicates the presence of textures of different sizes.

(2) Resistance to the edge effect: In many texture analysis methods, object edges bordering low-texture regions receive high texture values, which is termed the edge effect. Such methods treat the spatial frequency of the image as the texture scale, so edges with high spatial frequency are reported as high texture. The proposed GLM is not based on this principle. Texture is measured from the number of elements removed from the image, so edges that are not removed are not recognized as high texture.
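The idea of measuring the difference between successive openings can be sketched as follows. This is a simplified global granulometry with flat square structuring elements, written under assumptions: the helper names, the sizes `(3, 5, 7, 9)` and the synthetic test image are illustrative and are not taken from the paper, which works with per-pixel texture values.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _window_filter(img, size, reducer, pad_value):
    """Apply a min or max filter with a flat size x size structuring element."""
    pad = size // 2
    padded = np.pad(img, pad, mode="constant", constant_values=pad_value)
    windows = sliding_window_view(padded, (size, size))
    return reducer(windows, axis=(-2, -1))

def opening(img, size):
    """Morphological opening: erosion (min) followed by dilation (max)."""
    eroded = _window_filter(img, size, np.min, pad_value=img.max())
    return _window_filter(eroded, size, np.max, pad_value=img.min())

def granulometry(img, sizes=(3, 5, 7, 9)):
    """Volume removed between successive openings of increasing (odd) size."""
    img = img.astype(float)
    volumes = [img.sum()] + [opening(img, s).sum() for s in sizes]
    return -np.diff(volumes)

# Synthetic image: one 3x3 and one 7x7 bright square on a dark background.
img_demo = np.zeros((32, 32))
img_demo[4:7, 4:7] = 1.0
img_demo[15:22, 15:22] = 1.0
spectrum_demo = granulometry(img_demo)
```

In this toy image the 3 × 3 square disappears at the size-5 opening and the 7 × 7 square at the size-9 opening, so the removed volume peaks at the scales where particles of a given size vanish.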

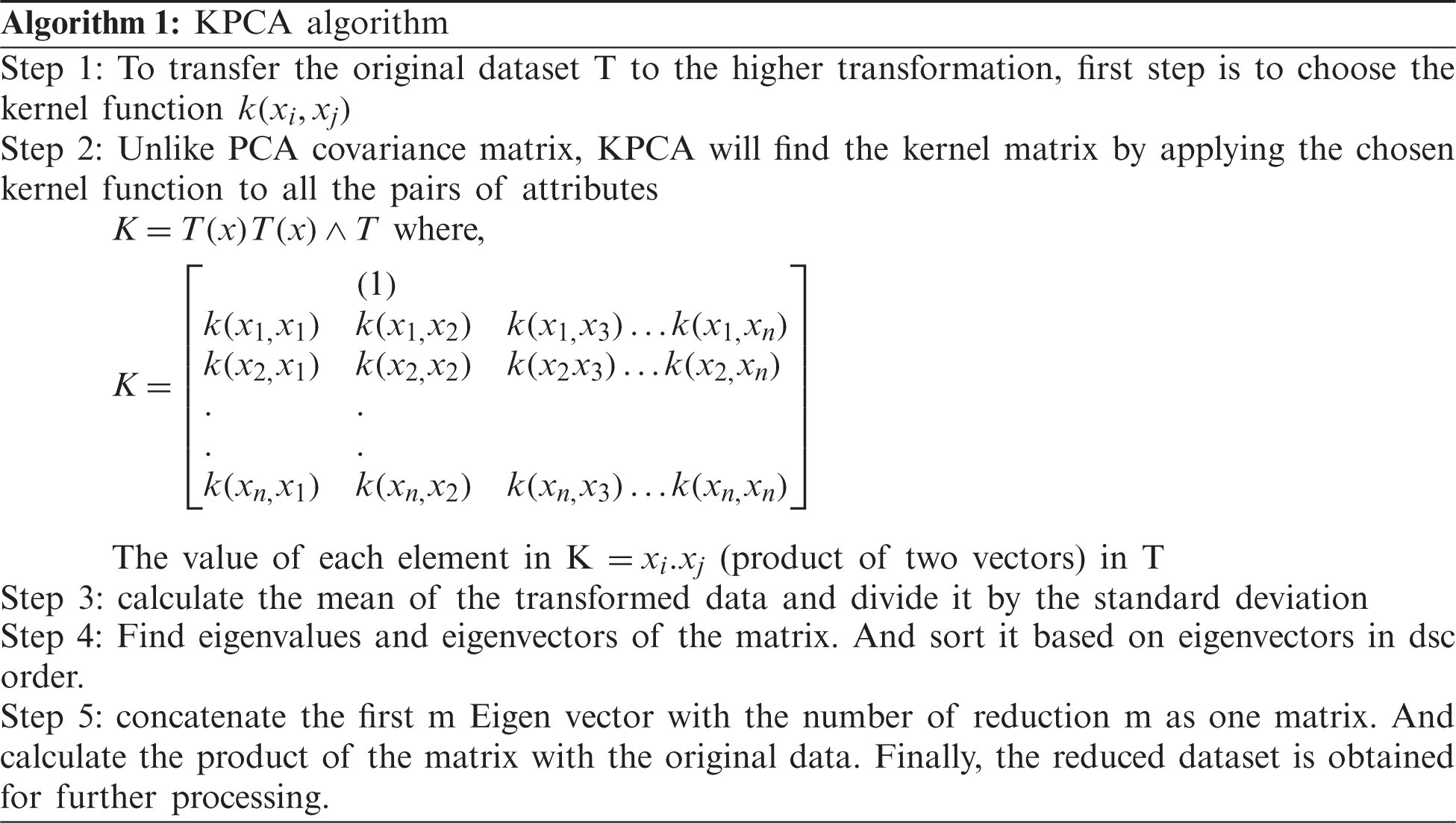

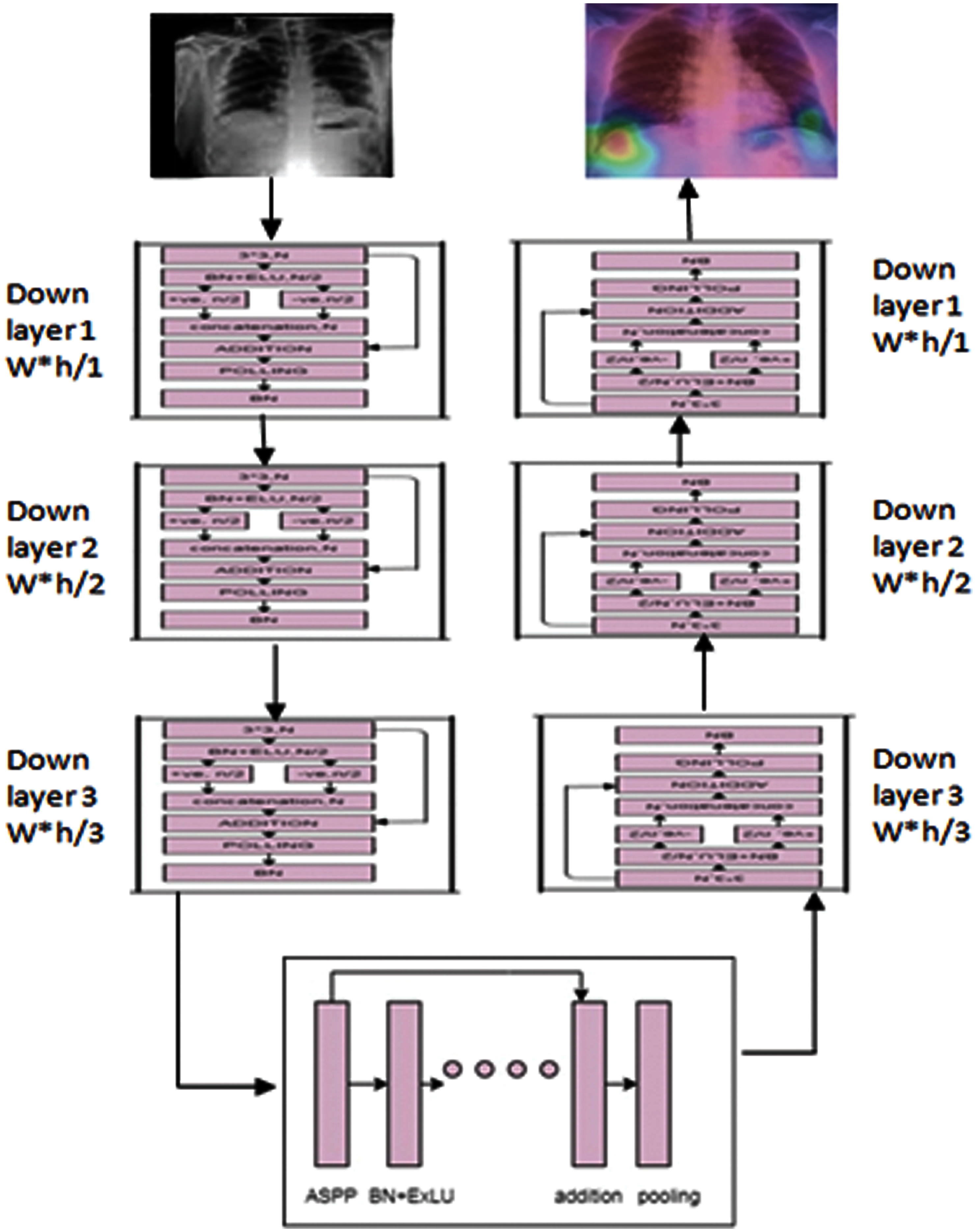

The working framework of the proposed system is given in Fig. 1. First, the input dataset is processed using granulometric texture analysis with kernel principal component analysis, which yields a high-resolution image with good clarity. Feeding this image to the neural network for training and testing makes a high-accuracy output more likely. The deep learning stage is based on lightweight residual processing in the ASPP-Unet model, where the dividing and concatenation process reduces system complexity by decreasing the number of parameters. Finally, the image is classified as COVID positive or negative. A region of interest (ROI) with ACM is computed for the images to verify the classification automatically. The method is therefore expected to support early detection so that patients can be treated as early as possible.

ASPP performs multi-scale feature extraction. Its higher level of extraction [32] motivated the design of an atrous convolutional neural network for dense feature extraction from X-ray images. COVID-19 spreads quickly, and in its second wave it heavily affects the lungs, often asymptomatically, and damages the respiratory epithelial cells. In this study, ASPP helped to extract the affected regions with high accuracy. The atrous convolution used in image processing is given in Eq. (1),

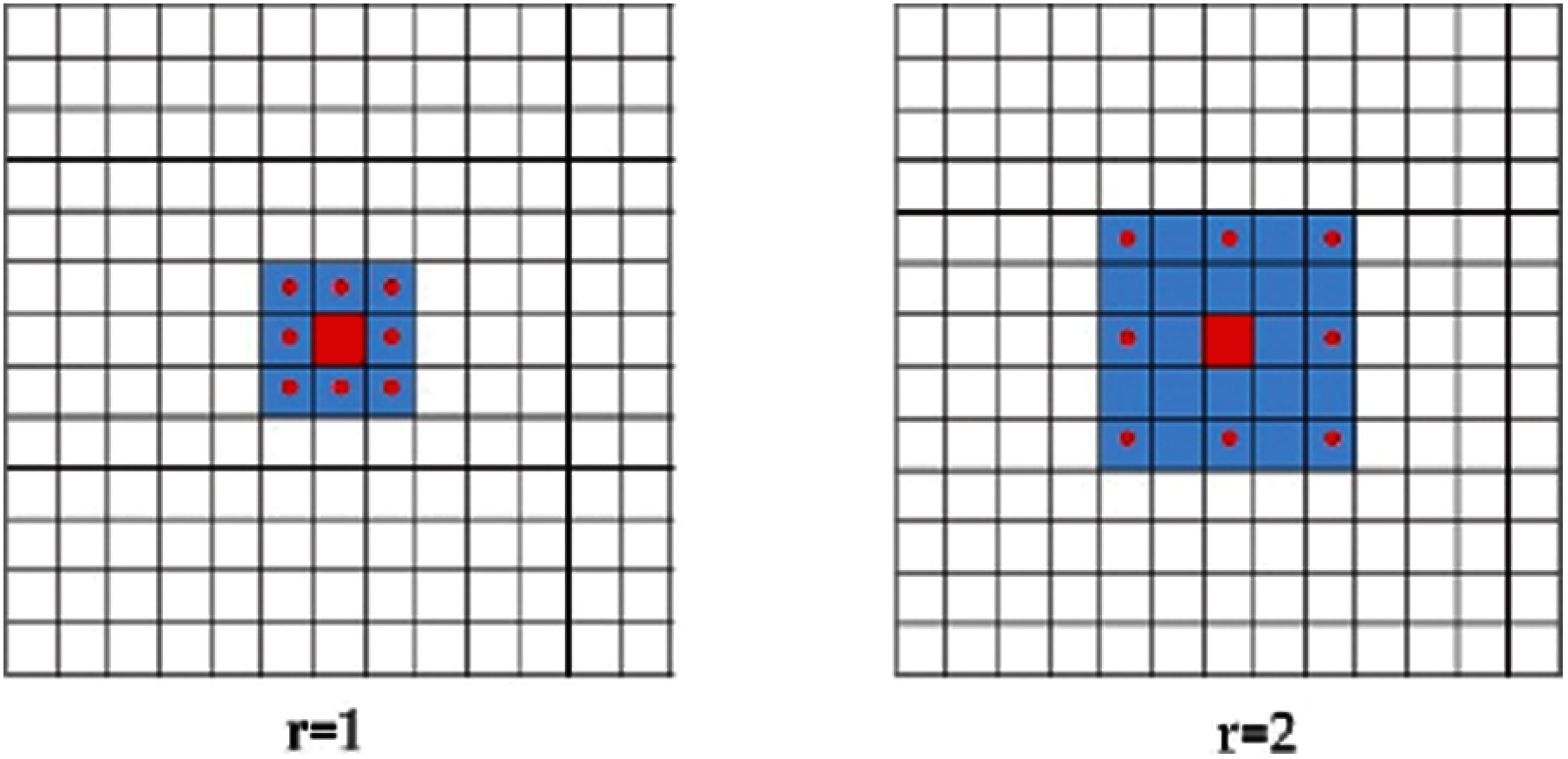

where b[i, j] is the output of the atrous convolution, a[i, j] is the input signal, x is the convolution kernel (filter) of length p, and r is the rate, which corresponds to the stride with which the input is sampled. If r = 1, the atrous convolution reduces to the standard convolution. Fig. 2 gives an example of a 3 × 3 atrous kernel with rates r = 1, 2 and 4 around the target pixel. If the viral attack is mild, a low sampling rate such as 1 is sufficient. If the lung alveoli are heavily affected, a higher sampling rate is needed to view a larger area.

Figure 1: Proposed texture analysis and ASPP framework

Figure 2: Atrous convolution over 2D imagery with rate = 1 and 2. The red pixel denotes the target pixel and the red-dotted pixels denote the pixels involved in convolution
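A small NumPy sketch can make the role of the rate r concrete. It assumes the commonly used form b[i, j] = Σ_m Σ_n a[i + r·m, j + r·n] · x[m, n] with a square p × p kernel and no padding; the function name `atrous_conv2d` and the demo arrays are illustrative and may differ in detail from the authors' Eq. (1).

```python
import numpy as np

def atrous_conv2d(a, x, r=1):
    """Unpadded 2D atrous convolution (correlation form, as used in CNNs)."""
    p = x.shape[0]                      # kernel is assumed square, p x p
    span = r * (p - 1) + 1              # extent of the dilated kernel
    out_h = a.shape[0] - span + 1
    out_w = a.shape[1] - span + 1
    b = np.zeros((out_h, out_w))
    for m in range(p):
        for n in range(p):
            # Each kernel tap reads the input shifted by r*m rows and r*n columns.
            b += x[m, n] * a[r * m : r * m + out_h, r * n : r * n + out_w]
    return b

a_demo = np.arange(49, dtype=float).reshape(7, 7)
x_demo = np.ones((3, 3))
b_rate1 = atrous_conv2d(a_demo, x_demo, r=1)   # standard convolution, 5 x 5 output
b_rate2 = atrous_conv2d(a_demo, x_demo, r=2)   # samples every second pixel, 3 x 3 output
```

With r = 1 the nine taps are adjacent pixels; with r = 2 the same nine weights are spread over a 5 × 5 neighbourhood.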

A higher sampling rate covers a larger area of the image. Compared with standard convolution, atrous convolution needs only a minimal number of parameters to view a larger area, so it saves computational resources. ASPP builds on the same idea and runs multiple atrous convolutional layers with different sampling rates in parallel. The final feature map is generated from these multi-scale features.

U-Net is a convolutional neural network that is widely used in biomedical image segmentation. It was introduced by Olaf Ronneberger et al. in 2015. The U-Net architecture consists of a contracting path and an expansive path. In the contracting path, high-level features are extracted in two steps, convolution and pooling, and the spatial resolution of the feature maps is reduced. The expansive path uses up-convolution to restore the resolution of the feature maps. At each level, the feature maps of the contracting path are transferred to the analogous level of the expansive path, which propagates contextual information through the network. By combining U-Net with ASPP, a novel ASPP-Unet architecture is proposed for detecting COVID-19 at an early stage with high accuracy.
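The parameter saving can be checked with simple arithmetic. The sketch below assumes a single-channel k × k kernel, for which the dilated kernel spans k + (k − 1)(r − 1) pixels per side; the helper name is illustrative.

```python
def effective_kernel_size(k, r):
    """Side length of the area covered by a k x k kernel dilated at rate r."""
    return k + (k - 1) * (r - 1)

for rate in (1, 2, 4):
    side = effective_kernel_size(3, rate)
    atrous_weights = 3 * 3          # the number of weights does not grow with the rate
    dense_weights = side * side     # a standard kernel covering the same area
    print(f"rate={rate}: covers {side}x{side}, "
          f"atrous weights={atrous_weights}, dense weights={dense_weights}")
```

A 3 × 3 kernel at rate 4 therefore covers a 9 × 9 area with 9 weights, where a standard kernel of the same extent would need 81.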

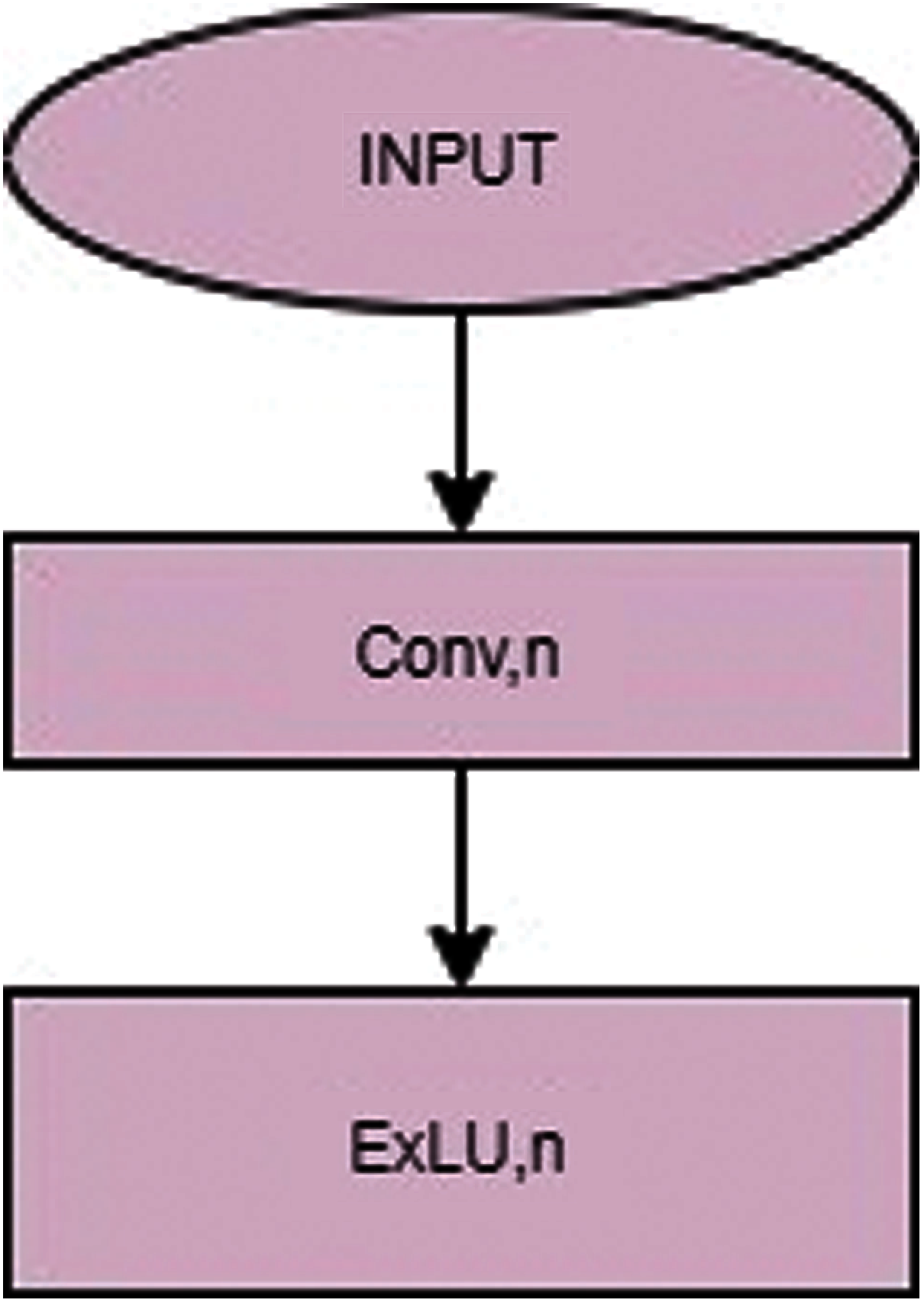

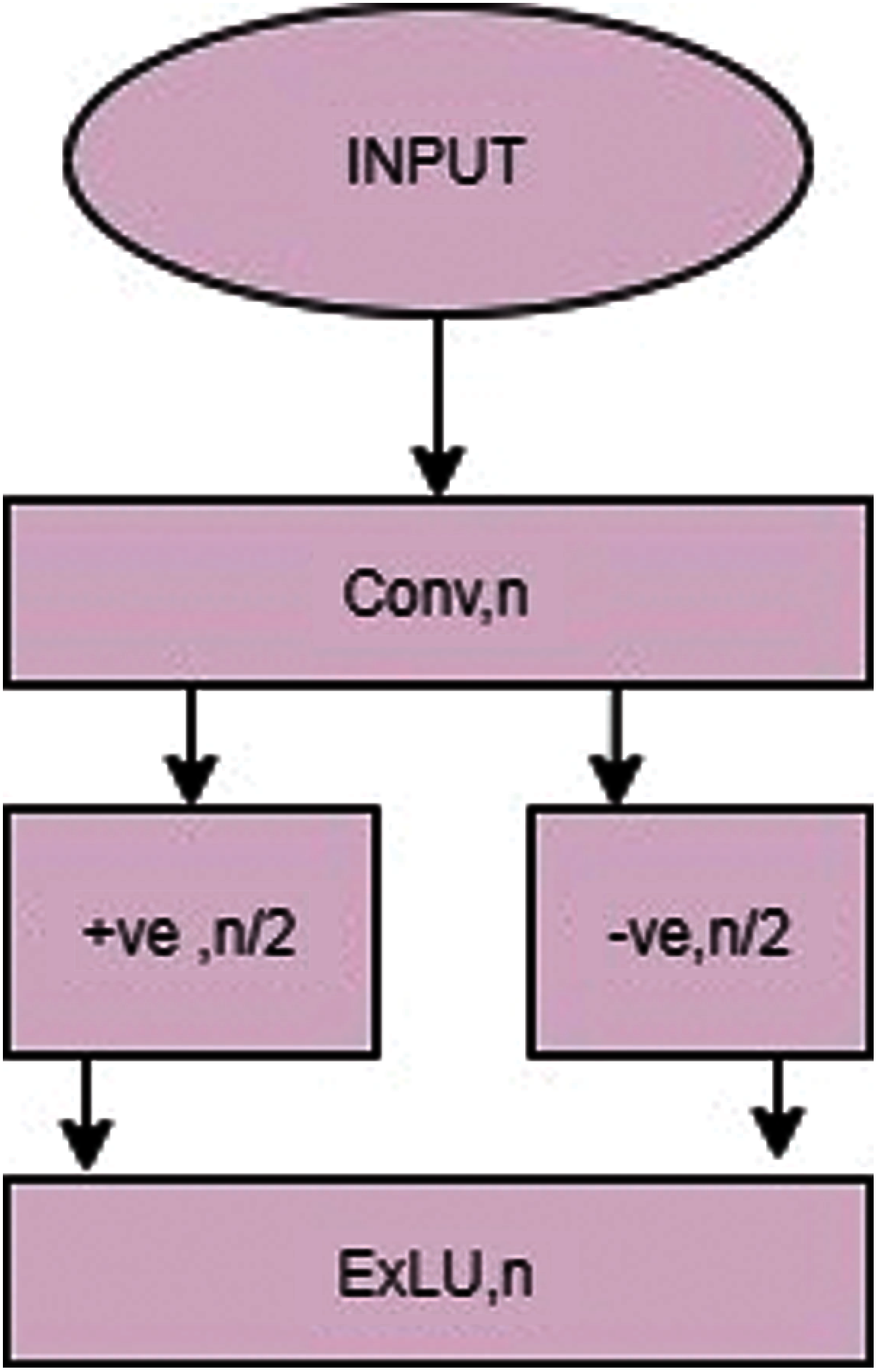

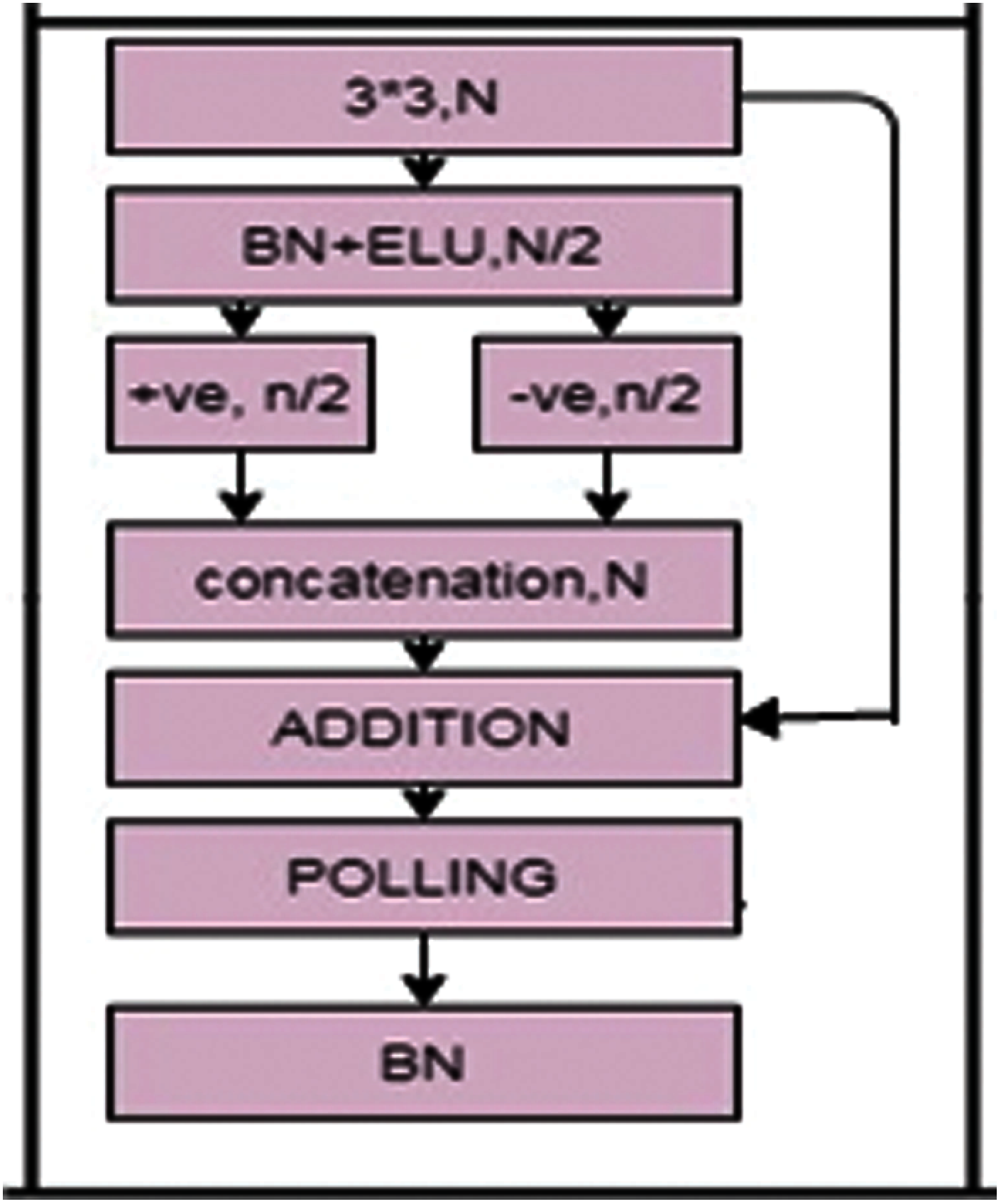

As shown in Fig. 3, a classical CNN model is used in the contracting path of U-Net. Each layer has sequential 3 × 3 unpadded convolutions, and each convolution is followed by an activation function. After one convolution operation, the number of output feature maps is doubled. Fig. 4 shows the use of the exponential linear unit (ExLU) as the primary activation function, as given in Eq. (2),

where

Figure 3: Classical convolution

A major advantage of the exponential linear unit (ExLU) is that it alleviates the vanishing-gradient problem by passing positive values through unchanged. It also reduces computational complexity and accelerates learning in deep neural networks. Before the exponential linear unit is applied, the input features of a layer must be normalized using batch normalization, which is based on the mean and variance of the inputs. Reducing the covariate shift in this way speeds up training. In the contracting path, a 2 × 2 max pooling operation is applied between the upper and lower layers for down-sampling, which reduces the resolution of the feature maps.
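The normalise, activate and pool sequence described above can be sketched in NumPy. The sketch assumes the standard exponential linear unit, f(z) = z for z > 0 and α(e^z − 1) otherwise, with α = 1, and a batch normalization without learnable scale and shift; the function names and tensor layout (batch, height, width, channels) are illustrative choices rather than the authors' code.

```python
import numpy as np

def elu(z, alpha=1.0):
    """Exponential linear unit: identity for z > 0, alpha * (exp(z) - 1) otherwise."""
    return np.where(z > 0, z, alpha * (np.exp(np.minimum(z, 0.0)) - 1.0))

def batch_norm(z, eps=1e-5):
    """Normalise each channel with the mean and variance over batch and space."""
    mean = z.mean(axis=(0, 1, 2), keepdims=True)
    var = z.var(axis=(0, 1, 2), keepdims=True)
    return (z - mean) / np.sqrt(var + eps)

def max_pool_2x2(z):
    """2 x 2 max pooling with stride 2 on a (N, H, W, C) tensor."""
    n, h, w, c = z.shape
    z = z[:, : h - h % 2, : w - w % 2, :]          # drop an odd trailing row/column
    return z.reshape(n, h // 2, 2, w // 2, 2, c).max(axis=(2, 4))

rng = np.random.default_rng(0)
features_demo = rng.normal(loc=3.0, scale=2.0, size=(4, 8, 8, 3))
normalised_demo = batch_norm(features_demo)
pooled_demo = max_pool_2x2(elu(normalised_demo))   # spatial size halves: 8x8 -> 4x4
```

The negative branch of the activation saturates at −α, so its outputs stay bounded below while positive values pass through unchanged.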

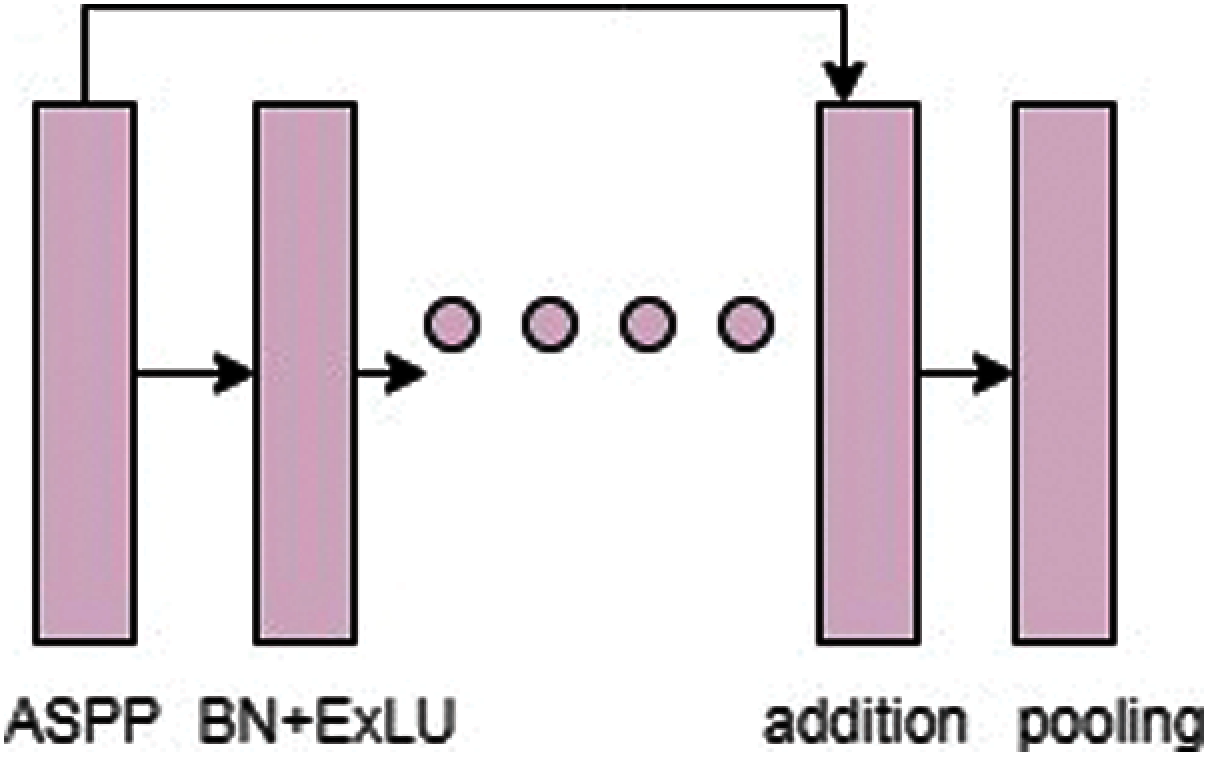

In the standard U-Net architecture, the bottom layer has the same structure as a down-convolution layer and contains three operations: two sequential convolutions, batch normalization and an exponential linear unit. In the proposed ASPP-Unet, the bottom layer is instead processed with ASPP. As given in Fig. 5, six 3 × 3 atrous convolutions with various rates, such as r = 1, 2, 4, 8 and 16, are executed in parallel at the bottom layer on all the input features. All the outputs are then combined by a sum (+) operation. After the features are summed, a standard convolution is performed, followed finally by the exponential linear unit.
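The parallel branches and the sum can be sketched for a single-channel feature map. The sketch assumes zero padding so that every branch keeps the input size, uses the five rates listed in the text, and stops before the final standard convolution and activation; the names `atrous_same` and `aspp_sum` are hypothetical.

```python
import numpy as np

def atrous_same(a, x, r):
    """3 x 3 atrous convolution at rate r with zero padding (output size = input size)."""
    p = x.shape[0]
    pad = r * (p - 1) // 2
    padded = np.pad(a, pad)
    h, w = a.shape
    out = np.zeros((h, w))
    for m in range(p):
        for n in range(p):
            out += x[m, n] * padded[r * m : r * m + h, r * n : r * n + w]
    return out

def aspp_sum(a, kernels, rates=(1, 2, 4, 8, 16)):
    """Run one atrous branch per rate on the same input and sum the outputs."""
    return sum(atrous_same(a, x, r) for x, r in zip(kernels, rates))

rng = np.random.default_rng(0)
feature_demo = rng.normal(size=(32, 32))

# Identity kernels make the result easy to verify: every branch returns its input.
identity = np.zeros((3, 3))
identity[1, 1] = 1.0
aspp_identity = aspp_sum(feature_demo, [identity] * 5)

# Random kernels give a genuinely multi-scale response of the same size.
random_kernels = [rng.normal(size=(3, 3)) for _ in range(5)]
aspp_random = aspp_sum(feature_demo, random_kernels)
```

Because every branch preserves the spatial size, the outputs can be summed element-wise regardless of the rate.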

Figure 4: ASPP-UNET

Figure 5: ASPP-Unet with deep residual learning

In this architecture, Fig. 6 shows the expansive part, which has the same number of up layers as down layers. First, a 2 × 2 up-convolution is applied to the output of the bottom layer of the contracting path. The up-convolution is implemented as a transpose convolution, the inverse of the convolution operation. It halves the number of feature channels and increases the resolution to match the corresponding features in the down layer. The feature map is then concatenated with the mirrored feature map from the contracting path through a skip connection. To support the concatenation, the contracting-path feature maps are cropped to match the size of the feature map at the up layer. The concatenation combines the fine contracting-path features with the coarse up-sampled features, which refines the features to a high resolution and provides appropriate information for propagation through the network. The concatenated output is processed with batch normalization and then with the exponential linear unit. As given in Fig. 2, the feature maps of the up and down layers at analogous stages have the same size. The output of the final up layer has the same spatial resolution as the input X-ray image. A 1 × 1 convolution is then performed to map the up-layer features to the number of classes. The next layer is a softmax, which transforms the features of the X-ray image into probabilities for the different classes, namely the areas of bone, empty space and affected space. The ASPP-Unet procedure helps to preserve the large features of the image in the contracting path and extends them through a symmetric U-shaped pattern. Another advantage of ASPP-Unet is that it can be scaled using parallel filters at various rates.
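The crop, concatenate, 1 × 1 convolution and softmax steps can be sketched with NumPy on random feature maps. Shapes, channel counts and the random weights are assumptions for illustration; the three classes follow the bone, empty and affected regions named in the text.

```python
import numpy as np

def center_crop(feat, target_h, target_w):
    """Crop a (H, W, C) feature map around its centre to target_h x target_w."""
    h, w = feat.shape[:2]
    top, left = (h - target_h) // 2, (w - target_w) // 2
    return feat[top : top + target_h, left : left + target_w]

def softmax(logits):
    """Softmax over the last axis, shifted for numerical stability."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
skip_demo = rng.normal(size=(12, 12, 4))   # contracting-path feature map (hypothetical size)
up_demo = rng.normal(size=(8, 8, 4))       # up-convolved feature map (hypothetical size)

# Skip connection: crop the contracting-path map, then stack along the channel axis.
merged_demo = np.concatenate([center_crop(skip_demo, 8, 8), up_demo], axis=-1)

# A 1 x 1 convolution is a per-pixel matrix product over the channel axis.
n_classes = 3
w_1x1 = rng.normal(size=(merged_demo.shape[-1], n_classes))
probs_demo = softmax(merged_demo @ w_1x1)
labels_demo = probs_demo.argmax(axis=-1)   # predicted class index per pixel
```

Each pixel ends up with a probability vector that sums to one, and the class with the highest probability gives the per-pixel label.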

Figure 6: ASPP-Unet with light weight residual

3.5 Light RES-ASPP-Unet Architecture

The above description shows that the ASPP-Unet architecture depends on a large number of convolutional stages. Recently, many researchers have reported that accuracy can decrease as the number of convolutional operations increases. When only a small training set is available, the additional convolutions take more time and reduce the accuracy further. The proposed method targets high accuracy for all data sizes, and these drawbacks can be minimised by the residual method.

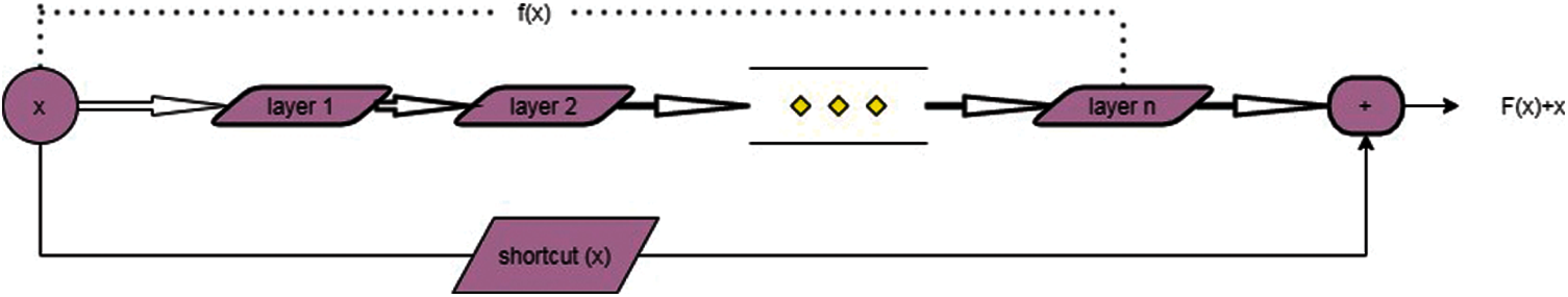

Deep residual learning: It was first introduced by He et al. in 2016; see also [33]. A larger number of convolutional operations in ASPP-Unet can reduce the accuracy of the classifier. This problem is solved by the residual method, which inserts shortcuts among the stacked layers to form residual blocks, as given in Fig. 7. The same technique is added to our ASPP-Unet model to form the ASPP-Unet-RES model. It inserts an identity mapping at every layer from the input to the output. A residual block at each layer is given as

where

Figure 7: Residual deep learning blocks
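A residual block can be sketched in its usual form, y = F(x) + x, where F stands for the stacked layers and the added x is the identity shortcut. The sketch below is an assumption-laden illustration: dense layers stand in for the convolutions, the activation is the standard exponential linear unit, and the names and shapes are hypothetical.

```python
import numpy as np

def elu(z, alpha=1.0):
    """Exponential linear unit used as the activation inside the block."""
    return np.where(z > 0, z, alpha * (np.exp(np.minimum(z, 0.0)) - 1.0))

def residual_block(x, w1, w2):
    """Return y = F(x) + x, with F made of two linear layers and an ELU between them."""
    residual = elu(x @ w1) @ w2    # F(x): the stacked layers
    return residual + x            # the shortcut adds the unchanged input

rng = np.random.default_rng(0)
x_demo = rng.normal(size=(4, 6))
w1_demo = rng.normal(size=(6, 6)) * 0.1
w2_demo = rng.normal(size=(6, 6)) * 0.1

y_demo = residual_block(x_demo, w1_demo, w2_demo)
# With zero weights in the last layer, the block reduces to the identity mapping.
y_identity = residual_block(x_demo, w1_demo, np.zeros((6, 6)))
```

The identity case shows why extra blocks need not hurt accuracy: a block can fall back to passing its input through when the stacked layers contribute nothing.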

Fig. 8 explains how the residual blocks are combined in the ASPP-Unet architecture. A shortcut connection provides an identity mapping between the shallow layers and the current layer. However, residual learning still carries computational complexity, and its real-time performance is low because of the high probability of disturbances in various environments. The proposed system aims to meet application demands such as portability, robustness and dynamic learning ability. The proposed lightweight residual learning based ASPP-Unet (LightRES-ASPP-Unet) is implemented for better classification of COVID-19 infection from X-ray images. LightRES-ASPP-Unet combines a frequency-domain transform based on the wavelet packet transform with a deep convolutional neural network. The ordinary deep residual neural network can extract multi-scale information from the X-ray image. The proposed LightRES-ASPP-Unet is expected to offer good classification performance, data-driven knowledge and strong transfer-learning ability.

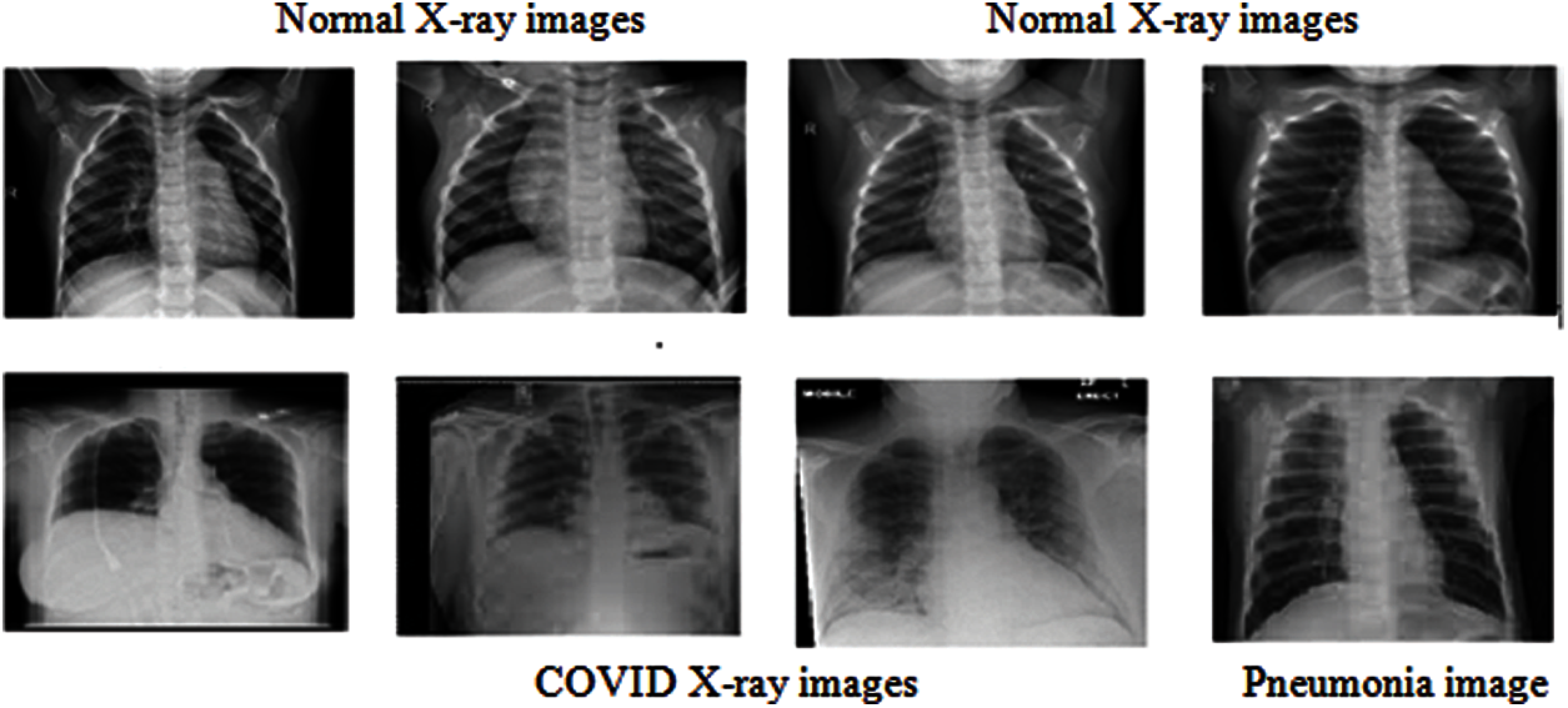

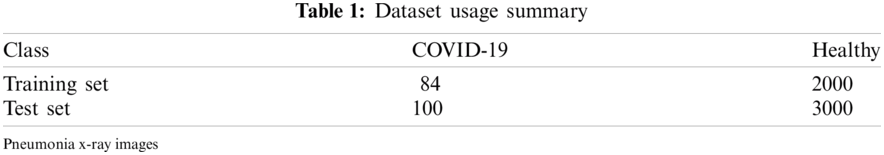

COVID-X-ray Dataset: The COVID X-ray 5K dataset was used. It consists of 2084 images in the training set and 3100 images in the test set, which include X-ray and CT images. As of the May 2020 release, the database consisted of 250 X-ray images of COVID-19 cases, of which 203 are anterior-posterior views. The images available after the database update were used for detecting COVID-19, and they were certified by radiologists. Experts judged that 184 of the 203 images showed clear signs of COVID-19. Of these, 100 images were randomly assigned to the test set and the remaining 84 to the training set. The remaining images were of different views and were not used in the present experiment. For the non-COVID images, the ChexPert dataset [34] was used. This second dataset contains 224,316 chest X-ray images from nearly 65,250 patients. It includes non-COVID entries, healthy entries and other diseases such as pneumonia, which helps the classifier to differentiate COVID-19 from other lung diseases. From this dataset (Fig. 9), 2000 non-COVID images were taken for the training set and 3000 non-COVID images for the test set. Tab. 1 lists the images used in the dataset for classification.

Figure 8: Generalized LightRES-ASPP-Unet

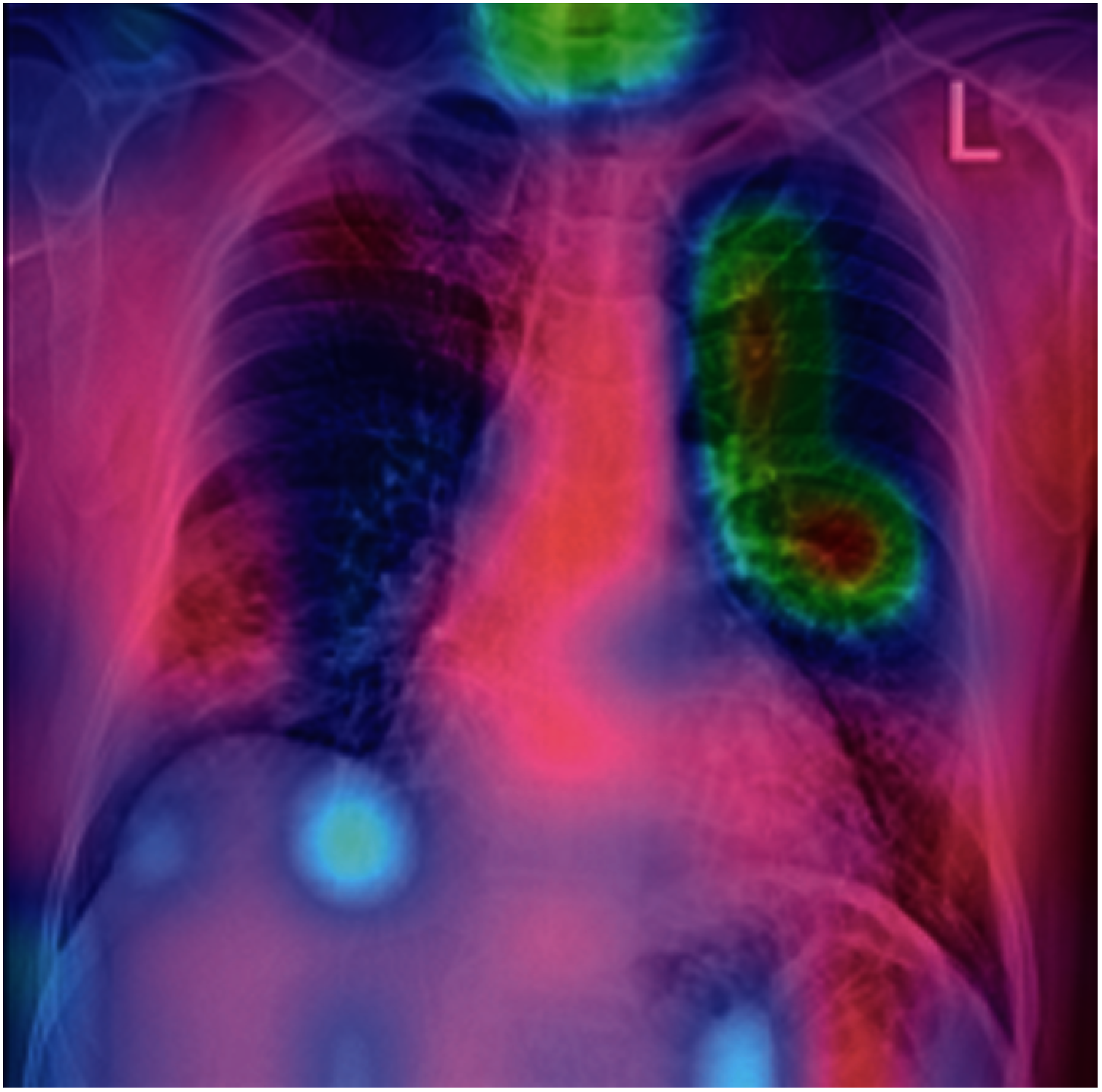

Granulometric texture analysis was carried out on the first 100 test images. Texture analysis greatly improved the accuracy by removing noise and other unwanted lighting effects from the images, and an accuracy of 99.81% was achieved in the image analysis. A sample of the output is given in Fig. 9. The COVID-19 cases detected by the proposed work are shown in Fig. 10.

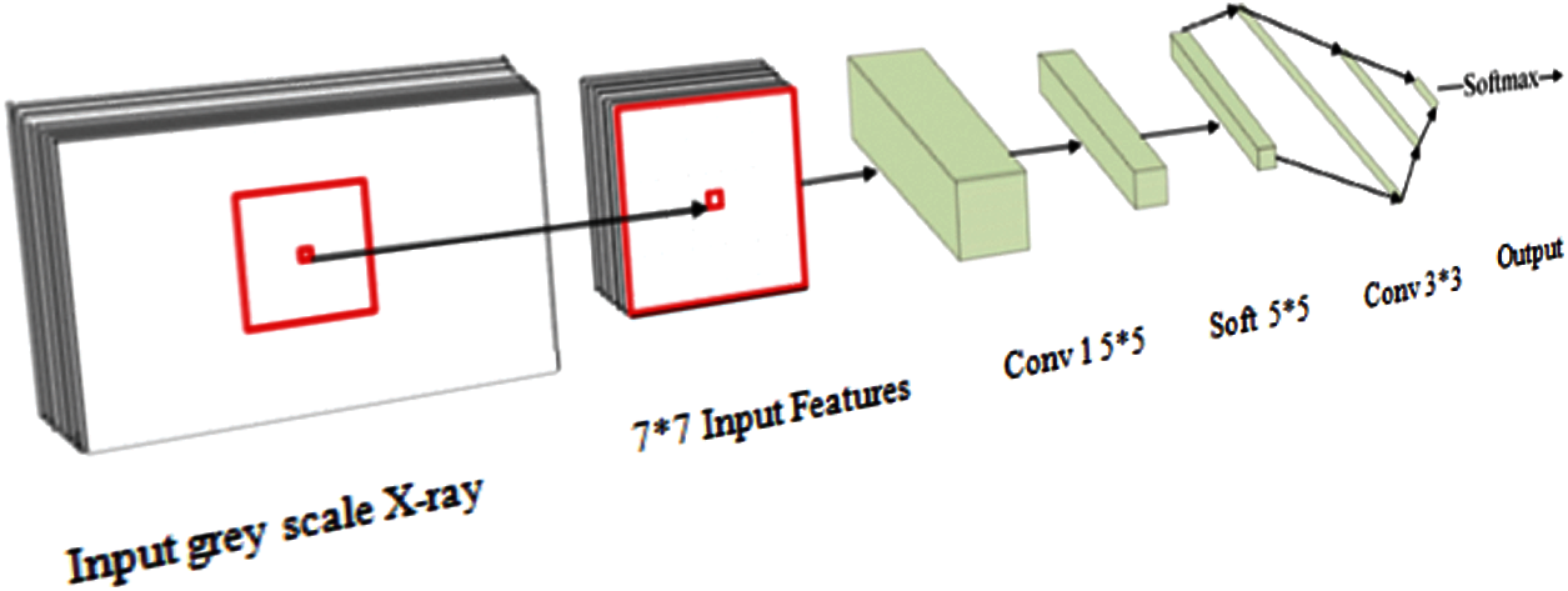

The network was trained with 2000 non-COVID images and 84 COVID images. 100 convolutions were carried out with 13 × 1 filters and a stride of 4 × 1. The 100 grey-scale X-ray images were processed with 208,838 pixels. An important parameter of the deep learning model is the depth of the layers (Fig. 11). Most researchers believe that the accuracy of deep learning comes mainly from the hidden layers, where feature extraction is done hierarchically. A model with too few layers can degrade performance, while in deeper models the backward gradient can vanish. The initial feature map was considered as another parameter for improving the capacity of the hidden layers. The initial features are represented by the feature map between the input image and the first hidden layer. In this study, depths of 5 to 11 layers were found to improve accuracy. In the proposed model, the highest accuracy was obtained at a depth of 7 layers because granulometric texture images were used as input to the CNN. All the experiments were implemented on the TensorFlow platform. A window size of 7 × 7 was used for extracting the input images.

Figure 9: Dataset images

Figure 10: a) Input Covid X-ray b) after KPCA+Granulometric analysis

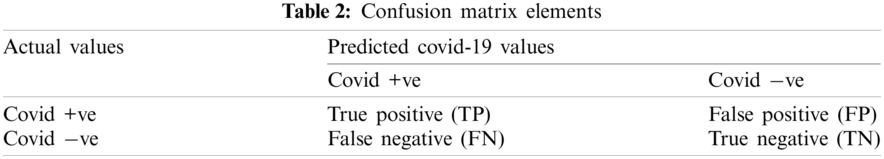

The performance of the proposed classification is compared with that of optiDCNN [34] and an ensemble classification model (Fig. 12). The efficiency and effectiveness of the proposed classification model are evaluated on the test dataset using accuracy, sensitivity and specificity, which are computed from the confusion matrix elements in Tab. 2.
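The metrics follow from the four confusion matrix counts using their standard definitions, sketched below. The counts in the demo are hypothetical and are not results from the paper; only the test set sizes (100 COVID and 3000 non-COVID images) are taken from the dataset description.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard metrics from true/false positive and negative counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn)          # recall on the positive (COVID) class
    specificity = tn / (tn + fp)          # recall on the negative (non-COVID) class
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }

# Hypothetical counts for a test set of 100 COVID and 3000 non-COVID images.
metrics_demo = classification_metrics(tp=90, tn=2950, fp=50, fn=10)
for name, value in metrics_demo.items():
    print(f"{name}: {value:.4f}")
```

With such an imbalanced test set, accuracy is dominated by the non-COVID class, which is why sensitivity and specificity are reported alongside it.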

Figure 11: Sample covid detected output

Figure 12: CNN structure for proposed model

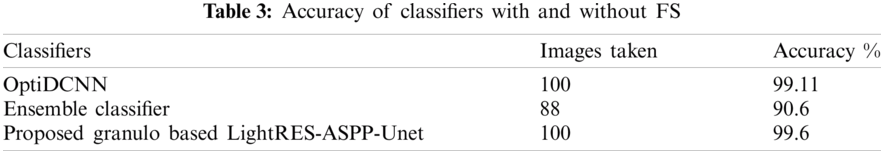

The experiments used 100 test set images selected through granulometric texture analysis, and the classification accuracy was compared with that of the two existing algorithms, which were implemented on the respective datasets. The performance of the proposed classification algorithm is given in Tab. 3.

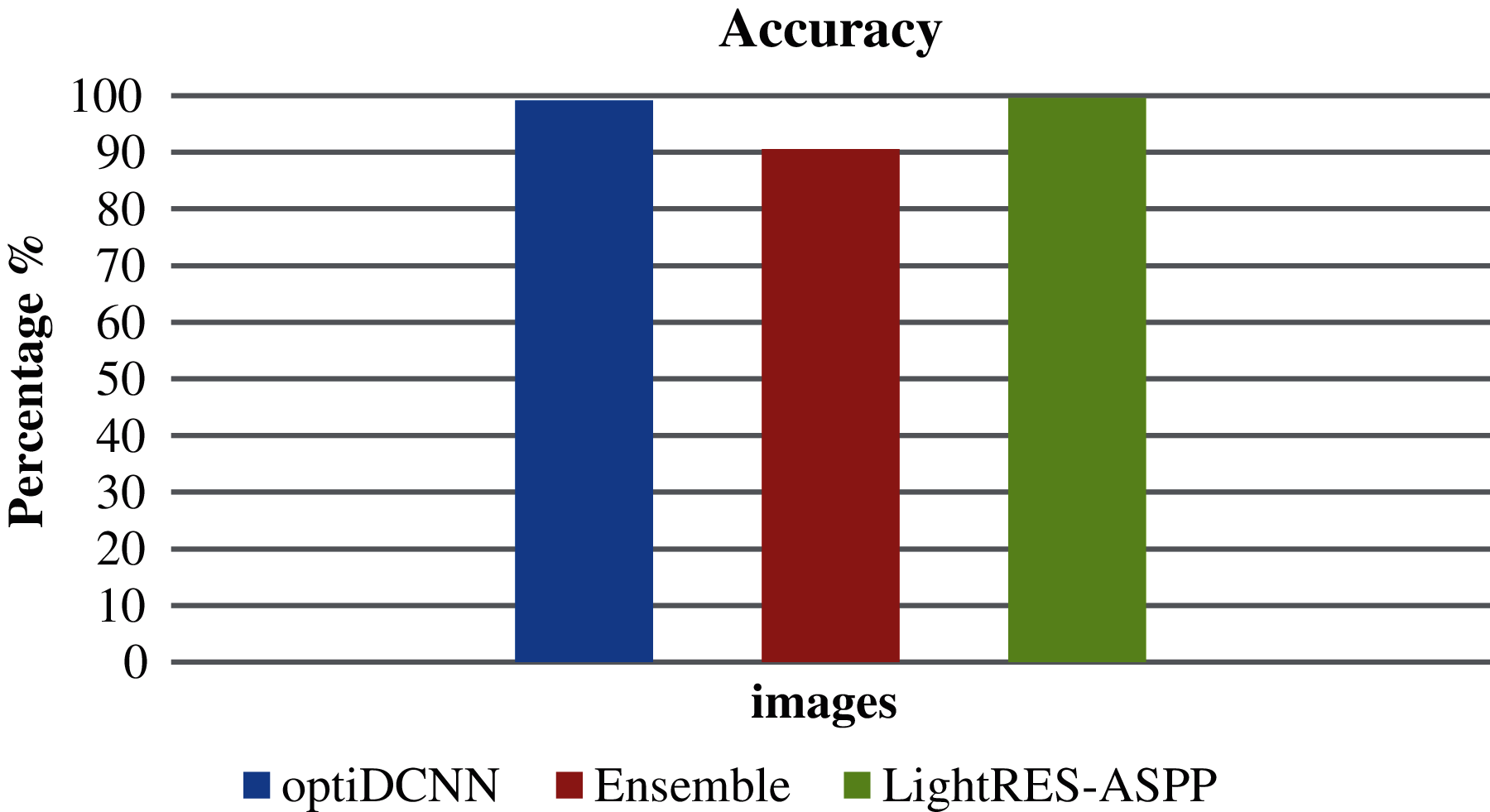

Tab. 3 compares the accuracy of the proposed model with that of the existing methods. Both existing methods, which do not use the granulometric process, give lower accuracy. The OptiDCNN classifier achieves a good accuracy of 99.11%, and it might achieve an even higher accuracy if combined with granulometric processing. The ensemble classifier achieves only 90.6% with three machine learning algorithms. The proposed model (Fig. 13), which applies granulometric texture analysis before the high-end classification model, achieves an accuracy of 99.6% with the final LightRES-ASPP-Unet classification.

Figure 13: Accuracy comparison

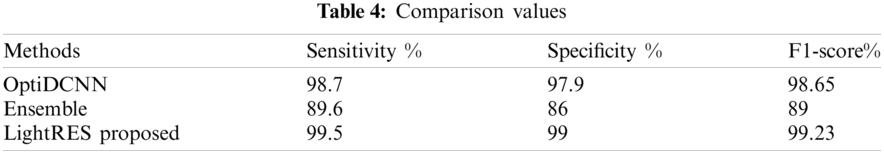

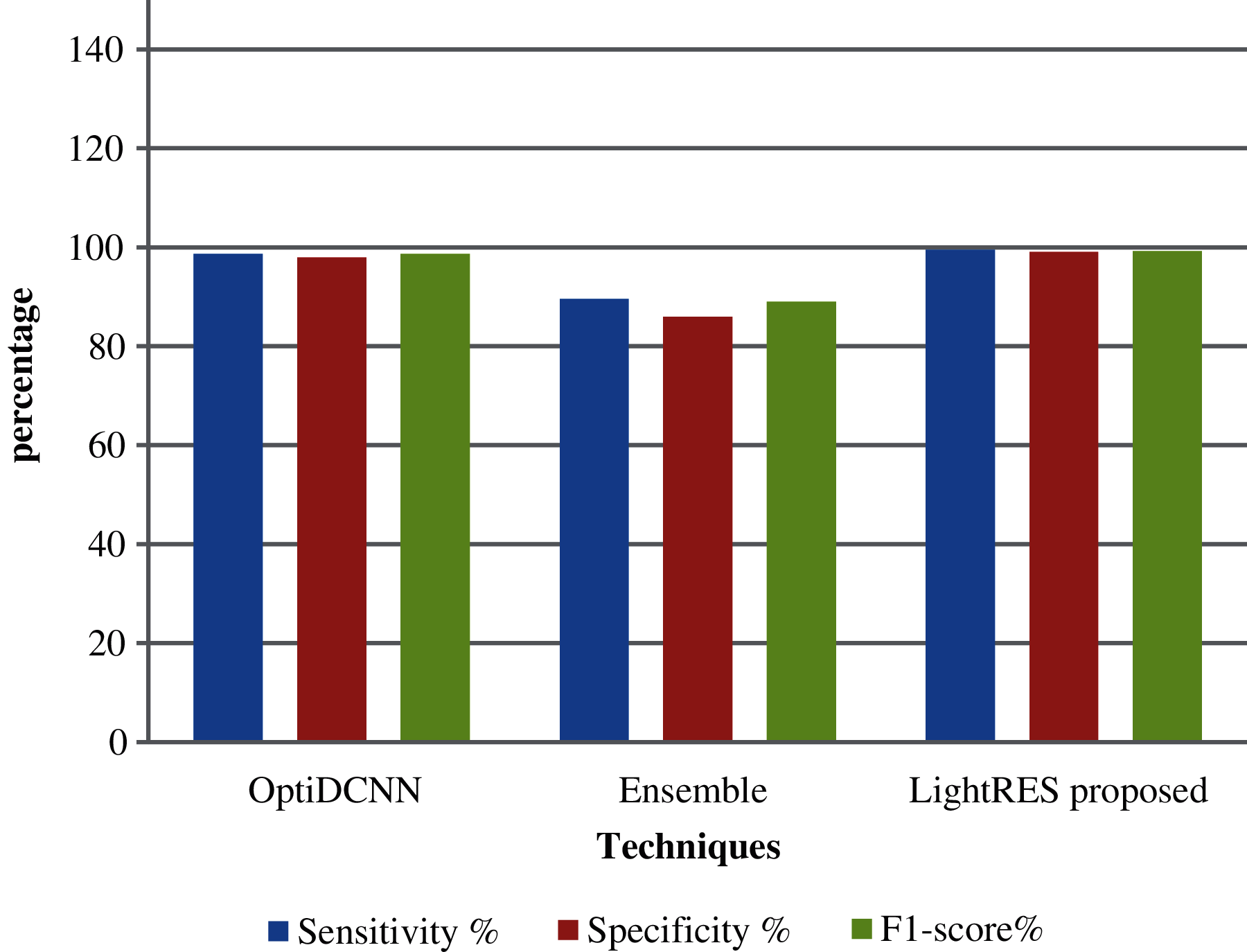

The sensitivity, specificity and F-score of the existing methods are compared with those of the proposed technique. Tab. 4 shows the comparison values.

Fig. 14 compares the sensitivity, specificity and F1 score of the existing and proposed techniques. The proposed system achieves better results than the existing techniques. The proposed LightRES-ASPP-Unet correctly indicates the affected area through the ROI process at the end. ROI with ACM gives a clear view of the output and confirms that the predicted results are accurate. Different regions are highlighted in COVID-19 and normal cases. This shows that the proposed network classification is useful for medical officers and can provide a sensitive second opinion to radiology teams. It also helps in understanding deep neural network models and their working principles.

Figure 14: Comparison of performance

In this research article, a granulometric-based lightweight RES-ASPP-Unet model was proposed to detect COVID-positive cases from chest X-ray images. The model was evaluated on the benchmark COVID 5K dataset, and the results were compared with existing techniques. The output of the system was very competitive with the benchmark models. Various difficulties at different levels were addressed in the lightweight residual ASPP-Unet. The granulometric texture image was much clearer than the input X-ray scan, so the texture images were given as input to the convolutional neural network architecture. The proposed neural architecture processed the X-rays with a depth of 7 hidden layers and 3 × 3 convolutional operations. The output was processed using ROI with ACM, and COVID-19 was clearly detected with a high accuracy of 99.6%. The PCR test for COVID-19 detection gave a high rate of false positive results. The computation time varies with the image resolution; this limitation can be considered as a problem for future research. Finally, it is concluded that the chest X-ray image is a reliable source for detecting true positive cases at an early stage and can save lives. In future, this work can be combined with algorithm optimization to target 100% accuracy.

Acknowledgement: This research was funded by the Deanship of Scientific Research at Princess Nourah bint Abdulrahman University through the Fast-track Research Funding Program.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. G. Gabutti, E. D. Anchera, F. Sandri, M. Savio and A. Stefanati, “Coronavirus: Update related to the current outbreak of COVID-19,” Infectious Diseases and Therapy, vol. 9, no. 2, pp. 241–253, 2020.

2. M. K. Ün, E. Avşar and İ. D. Akçalı, “An analytical method to create patient-specific deformed bone models using X-ray images and a healthy bone model,” Computers in Biology and Medicine, vol. 104, pp. 43–51, 2019.

3. M. Toğaçar, B. Ergen and Z. Cömert, “COVID-19 detection using deep learning models to exploit social mimic optimization and structured chest X-ray images using fuzzy color and stacking approaches,” Computers in Biology and Medicine, vol. 121, 2020.

4. L. García Ruesgas, R. Álvarez Cuervo, F. Valderrama Gual and J. I. Rojas Sola, “Projective geometric model for automatic determination of X-ray emitting source of a standard radiographic system,” Computers in Biology and Medicine, vol. 99, pp. 209–220, 2018.

5. S. M. Kengyelics, L. A. Treadgold and A. G. Davies, “X-ray system simulation software tools for radiology and radiography education,” Computers in Biology and Medicine, vol. 93, pp. 175–183, 2018.

6. D. Wang, B. Hu and M. D. Chang, “Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus–infected pneumonia in Wuhan, China,” JAMA, vol. 323, no. 11, pp. 1061–1069, 2020.

7. R. Wölfel, V. M. Corman, W. Guggemos, M. Seilmaier, S. Zange et al., “Virological assessment of hospitalized patients with COVID-2019,” Nature, vol. 581, no. 7809, pp. 465–469, 2020.

8. W. Wang, Y. Xu and R. Gao, “Detection of SARS-CoV-2 in different types of clinical specimens,” JAMA, vol. 323, no. 18, pp. 1843–1844, 2020.

9. N. Sethuraman, S. S. Jeremiah and A. Ryo, “Interpreting diagnostic tests for SARS-CoV-2,” JAMA, vol. 323, no. 22, pp. 2249–2251, 2020.

10. T. Ai, Z. Yang, H. Hou, C. Zhan, C. Chen et al., “Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases,” Radiology, vol. 296, no. 2, pp. E32–E40, 2020.

11. H. Y. Frank Wong, S. Lam, A. Fong, S. T. Leung, T. W. Chin et al., “Frequency and distribution of chest radiographic findings in patients positive for COVID-19,” Radiology, vol. 296, no. 2, pp. E72–E78, 2020.

12. M. Umer Nasir, J. Roberts, N. Muller, F. Macri and M. F. Mohammed, “The role of emergency radiology in COVID-19 from preparedness to diagnosis,” Canadian Association of Radiologists Journal, vol. 71, no. 3, pp. 293–300, 2020.

13. J. M. Yeoh, F. Caraffini, E. Homapour, V. Santucci and A. Milani, “A clustering system for dynamic data streams based on metaheuristic optimisation,” Mathematics, vol. 7, no. 12, p. 1229, 2019.

14. D. Demirel, B. Cetinsaya, T. Halic, S. Kockara and S. Ahmadi, “Partition-based optimization model for generative anatomy modeling language,” BMC Bioinformatics, vol. 20, no. 2, pp. 99–114, 2019.

15. M. Cho, C. S. Dhir and J. Lee, “Hessian free optimization for learning deep multidimensional recurrent neural networks,” Advances in Neural Information Processing Systems, vol. 1509, pp. 03475–03484, 2015.

16. J. Florez Lozano, F. Caraffini, C. Parra and M. Gongora, “Cooperative and distributed decision-making in a multi-agent perception system for improvised land mines detection,” Information Fusion, vol. 64, pp. 32–49, 2020.

17. M. Khishe and M. Mosavi, “Classification of underwater acoustical dataset using neural network trained by chimp optimization algorithm,” Applied Acoustics, vol. 157, 2020.

18. A. E. Eiben and J. E. Smith, Introduction to Evolutionary Computing (Natural Computing Series), Berlin, Heidelberg: Springer Berlin Heidelberg, 2003.

19. K. O. Stanley, J. Clune, J. Lehman and R. Miikkulainen, “Designing neural networks through neuroevolution,” Nature Machine Intelligence, vol. 1, no. 1, pp. 24–35, 2019.

20. W. Y. Lee, S. M. Park and K. B. Sim, “Optimal hyper parameter tuning of convolutional neural networks based on the parameter-setting-free harmony search algorithm,” Optik, vol. 172, pp. 359–367, 2018.

21. G. Rosa, J. Papa, A. Marana, W. Scheirer and D. Cox, “Fine-tuning convolutional neural networks using harmony search,” in Proc. CIARP, Montevideo, Uruguay, pp. 683–690, 2015.

22. S. Bak, P. Carr and J. F. Lalonde, “Domain adaptation through synthesis for unsupervised person re-identification,” in Proc. ECCV, Glasgow, United Kingdom, pp. 189–205, 2018.

23. H. Fan, L. Zheng, C. Yan and Y. Yang, “Unsupervised person re-identification: Clustering and fine-tuning,” ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), vol. 14, no. 4, pp. 1–18, 2018.

24. X. Qian, Y. Fu, T. Xiang, W. Wang, J. Qiu et al., “Pose normalized image generation for person re-identification,” in Proc. ECCV, Glasgow, United Kingdom, pp. 650–667, 2018.

25. Y. Sun, B. Xue, M. Zhang, G. G. Yen and J. Lv, “Automatically designing CNN architectures using the genetic algorithm for image classification,” IEEE Transactions on Cybernetics, vol. 50, no. 9, pp. 3840–3854, 2020.

26. S. Maheswaran, B. Vivek, P. Sivaranjani, S. Sathesh and K. Pon Vignesh, “Development of machine learning based grain classification and sorting with machine vision approach for eco-friendly environment,” Journal of Green Engineering, vol. 10, no. 3, pp. 526–543, 2020.

27. M. Shanmugam and R. Asokan, “A machine-vision-based real-time sensor system to control weeds in agricultural fields,” Sensor Letters, vol. 13, no. 6, pp. 489–495, 2015.

28. S. R. Young, D. C. Rose, T. P. Karnowski, S. H. Lim and R. M. Patton, “Optimizing deep learning hyper-parameters through an evolutionary algorithm,” in Proc. MLHPC ’15, ACM, Austin, Texas, pp. 1–5, 2015.

29. E. Real, S. Moore, A. Selle, S. Saxena, Y. Lenon Suematsu et al., “Large-scale evolution of image classifiers,” in Proc. ICML, Sydney, Australia, pp. 2902–2911, 2017.

30. P. Kupidura, “The comparison of different methods of texture analysis for their efficacy for land use classification in satellite imagery,” Remote Sensing, vol. 11, no. 10, pp. 1–20, 2019.

31. P. Zhang, Y. Ke, Z. Zhang, M. Wang, P. Li et al., “Urban land use and land cover classification using novel deep learning models based on high spatial resolution satellite imagery,” Sensors, vol. 18, no. 11, pp. 1–21, 2018.

32. S. Ma, W. Liu, W. Cai, Z. Shang and G. Liu, “Lightweight deep residual CNN for fault diagnosis of rotating machinery based on depthwise separable convolutions,” IEEE Access, vol. 7, pp. 57023–57036, 2019.

33. M. Khishe, F. Caraffini and S. Kuhn, “Evolving deep learning convolutional neural networks for early COVID-19 detection in chest X-ray images,” Mathematics, vol. 9, no. 9, pp. 1–18, 2021.

34. J. C. Gomes, V. A. Barbosa, V. A. Santana, J. Bandeira, M. Jorge Silva et al., “Texture analysis in the evaluation of covid-19 pneumonia in chest X-ray images: A proof of concept study,” Current Medical Imaging, vol. 12, pp. 1–21, 2020.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.