DOI:10.32604/cmc.2022.020866

Computers, Materials & Continua

Article

Alzheimer Disease Detection Empowered with Transfer Learning

1Center for Cyber Security, Faculty of Information Science and Technology, Universiti Kebangsaan Malaysia (UKM), 43600 Bangi, Selangor, Malaysia

2School of Information Technology, Skyline University College, University City Sharjah, 1797, Sharjah, UAE

3School of Computer Science, National College of Business Administration & Economics, Lahore, 54000, Pakistan

4Department of Computer Science, Lahore Garrison University, Lahore, 54000, Pakistan

5Riphah School of Computing & Innovation, Faculty of Computing, Riphah International University, Lahore Campus, Lahore, 54000, Pakistan

6Pattern Recognition and Machine Learning Lab, Department of Software, Gachon University, Seongnam, 13557, Korea

*Corresponding Author: Muhammad Adnan Khan. Email: adnan@gachon.ac.kr

Received: 10 June 2021; Accepted: 11 July 2021

Abstract: Alzheimer's disease is a severe neurodegenerative disease that damages brain cells, leading to permanent memory loss, also called dementia. Many people die of this disease every year because it is not curable, but early detection can help slow its progression. Alzheimer's is most common in elderly people aged 65 and above. An automated system is required that can detect the disease early and classify it into multiple Alzheimer's classes. Deep learning and machine learning techniques are used to solve many medical problems like this. The proposed Alzheimer's disease detection system uses transfer learning for multi-class classification of brain magnetic resonance imaging (MRI) scans into four stages: mild demented (MD), moderate demented (MOD), non-demented (ND), and very mild demented (VMD). Simulation results show that the proposed system model achieves 91.70% accuracy. It is also observed that the proposed system gives more accurate results than previous approaches.

Keywords: Convolutional neural network (CNN); Alzheimer's disease (AD); magnetic resonance imaging; mild demented

Alzheimer's disease (AD) is caused by permanent damage to brain cells involved in memory, and it is the most common cause of dementia. It causes the death of nerve cells and the loss of tissue throughout the brain, which results in memory loss. It impairs routine tasks such as reading, speaking, and writing. Patients in the last stages of AD face more severe effects, including respiratory dysfunction and heart failure leading to death [1]. Accurate early diagnosis of AD remains difficult, and no effective medication has been specified [2]. However, if AD is diagnosed at an early stage, treatment can improve the patient's life. Its symptoms develop slowly but worsen over time as brain function deteriorates [3]. A large number of people suffer from this disease; according to a recent estimate, one in 85 people will be living with AD by 2050 [4]. AD is ranked as the world's second most severe neurological disorder, and it accounts for an estimated 60%–80% of dementia cases. The global deterioration scale (GDS) is commonly used to stage dementia. The clinical dementia rating (CDR) scale is also used because its simplicity facilitates collaboration between medical professionals and families [5].

In AD patients, the ventricles of the brain enlarge, and the cerebral cortex and hippocampus shrink. As the hippocampus shrinks, episodic and spatial memory are damaged. Damage to the connections between neurons disrupts communication and leads to deficits in planning, judgment, and short-term memory [6]. This degeneration impairs synapses and neuron ends and causes further cell loss. Many studies have addressed the classification and detection of AD at early or later stages. The most common preliminary method for detecting Alzheimer's disease is to examine brain MRI scans, which medical consultants inspect to reveal the presence of disease. Several factors are considered in this examination, such as the presence of a tumor, changes in brain matter, or degeneration. Given the severity of this disease, thorough research in this area is needed.

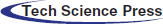

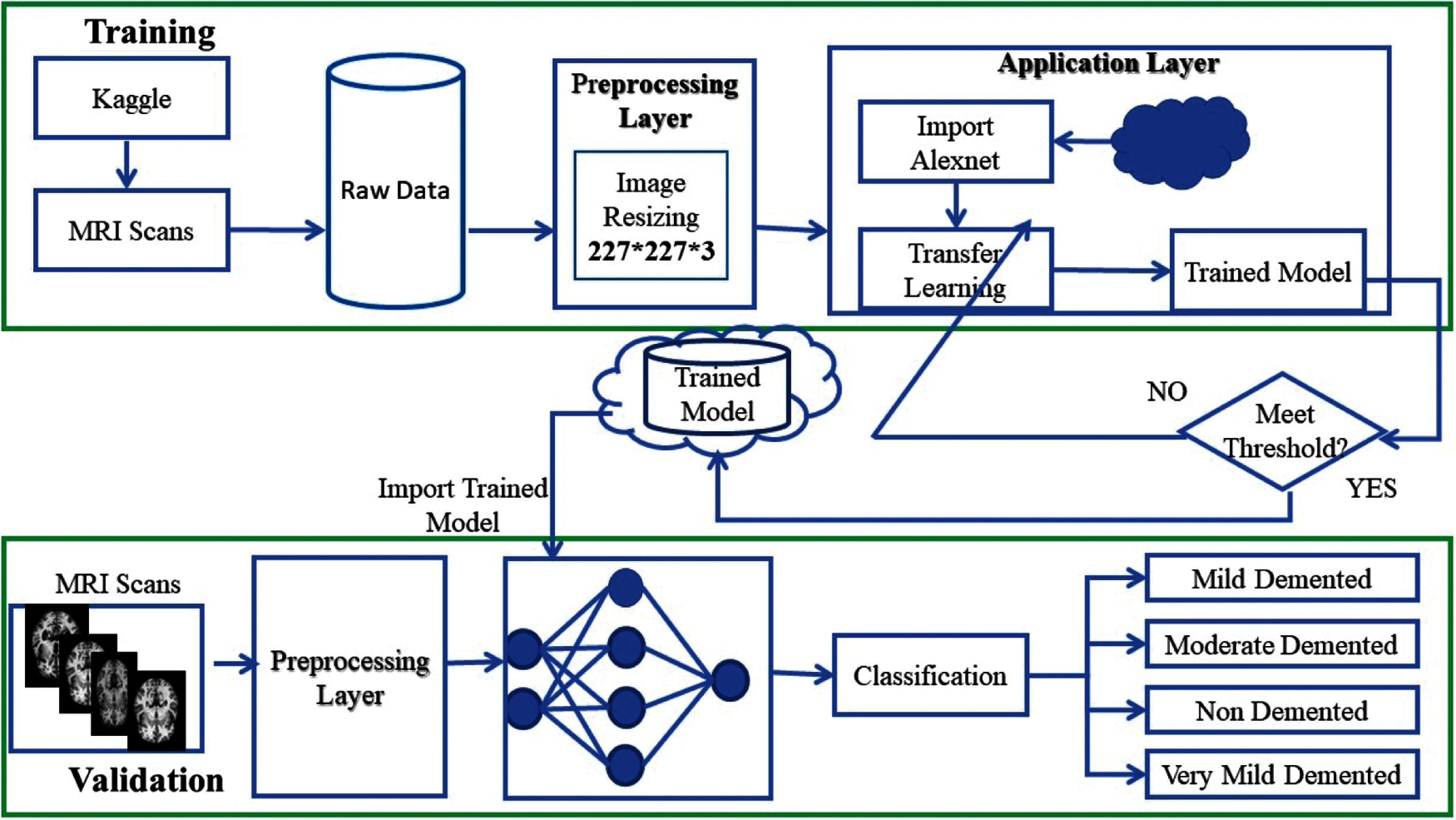

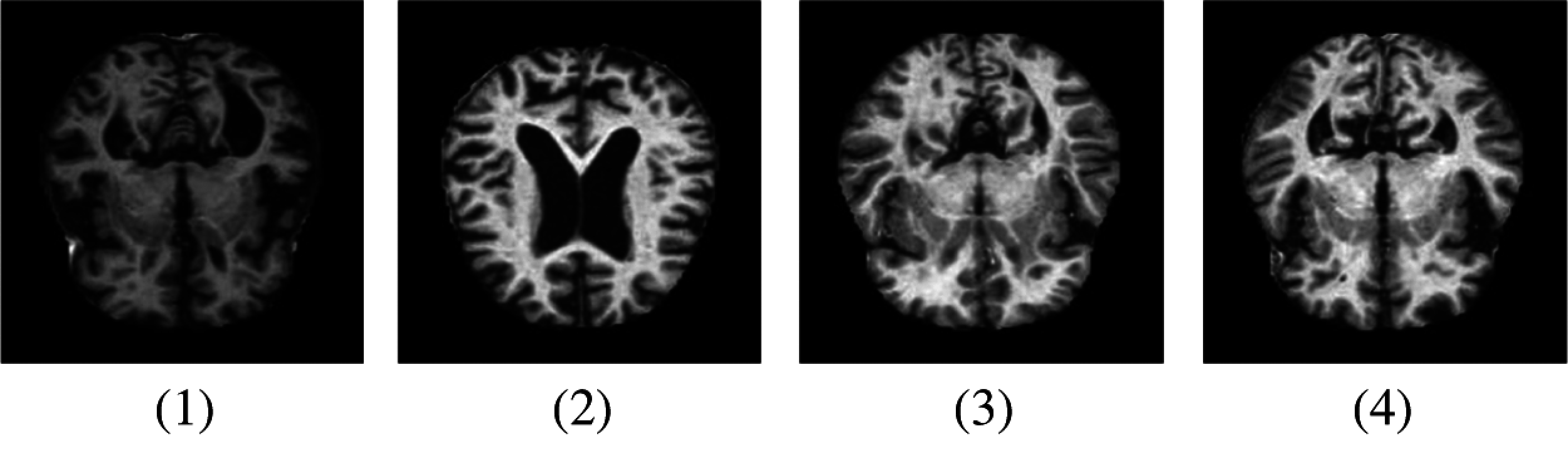

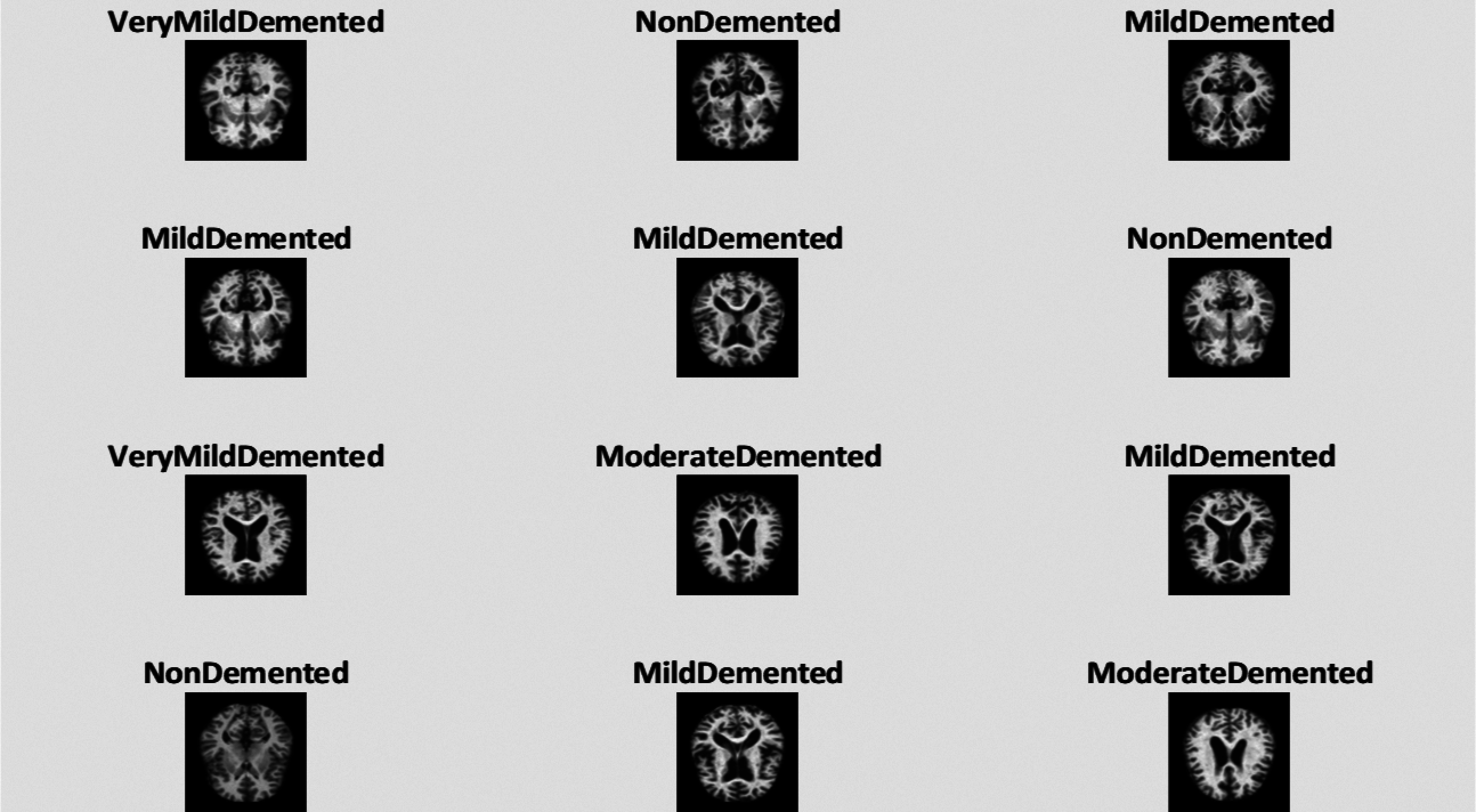

Deep learning and machine learning models have been applied effectively in various fields of medical image analysis, such as mammography, ultrasound, microscopy, and MRI [7]. Deep learning models have shown significant results in classifying and detecting diseases of the heart, lung, brain, retina, breast, bone, etc. Although these models have shown prominent results in various medical fields, little work has been done on AD detection. MRI data can be used for early detection of AD, which can help medical consultants slow the progression of the disease in the brain [8]. In our research work, we use an MRI dataset to classify and detect AD with a deep learning model. Sample images are shown in Fig. 1.

Figure 1: Samples of MRI images representing different AD stages. (1) MD; (2) MOD; (3) ND; (4) VMD

This paper is organized into four sections. Section 2 reviews previous studies on the detection of this disease. The proposed system model (ADDTLA) and its results are examined in Section 3, and Section 4 concludes the paper.

Diagnosing Alzheimer's disease at an early stage is a challenging task for researchers. For this purpose, various techniques, such as deep CNNs and other machine learning and deep learning-based algorithms, have been presented to solve different problems related to brain image data. Magnetic resonance imaging (MRI) is the main imaging modality used to diagnose Alzheimer's disease in clinical research, and MRI scans serve as the main input for operations such as feature extraction [9]. Before the advent of deep learning, machine learning-based techniques were used from around 2000 onward. These machine learning models performed well when combined with hand-crafted features, but such features require domain experts to achieve high accuracy and maximum performance. After 2013, novel deeper architectures gained popularity, especially in medical image processing [10]. Multiple machine learning algorithms have been used to detect AD, such as support vector machines on weighted MRI images and random forest classifiers for multimodal classification [11].

El-Dahshan et al. [12] used a hybrid technique for the detection and classification of disease, consisting of three stages: feature extraction, dimensionality reduction, and classification. In the first stage, they extracted features from the MRI images; in the second stage, the dimensionality of these features was reduced using principal component analysis (PCA); and the last stage was dedicated to developing two classifiers. The first classifier was based on a feed-forward neural network and the second on k-nearest neighbors. The advantages of this classification were that it was easy to operate, rapid, inexpensive, and non-invasive. Its limitation was that it required retraining every time the image dataset grew. Ahmed et al. [13] proposed a CNN-based model with a patch-based classifier for AD detection, which reduced the computational cost and substantially improved the diagnosis of the disease. Deep learning-based algorithms have been used to perform multiple operations on a dataset and extract features directly from the input. These models have a hierarchical, multi-layer structure that increases their ability to represent features of MRI images. Hong et al. [14] used long short-term memory (LSTM), a special kind of recurrent neural network (RNN), to predict the disease. This algorithm links patients' previous information with their current state, and this temporal data is meaningful for early prediction of disease development. The algorithm processes time-series data using three kinds of layers: pre-fully connected, cell, and post-fully connected layers. The authors addressed prediction of the disease rather than classification.
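The three-stage pipeline described for El-Dahshan et al. [12] (feature extraction, PCA-based dimensionality reduction, classification) can be illustrated with the plain-Python sketch below. It is not the authors' implementation: the three-dimensional feature vectors and the labels "normal" and "abnormal" are hypothetical, PCA keeps only the first principal component (found by power iteration), and only the k-nearest-neighbor classifier is shown.

```python
import math
from collections import Counter


def mean_center(rows):
    """Subtract the per-feature mean from every row; return (centered rows, mean)."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - mean[j] for j in range(d)] for r in rows], mean


def first_principal_component(centered, iters=200):
    """Dominant eigenvector of the scatter matrix X^T X, found by power iteration."""
    d = len(centered[0])
    scatter = [[sum(r[i] * r[j] for r in centered) for j in range(d)] for i in range(d)]
    v = [1.0] * d
    for _ in range(iters):
        w = [sum(scatter[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def project(row, mean, component):
    """Reduce one feature vector to its 1-D score along the principal component."""
    return sum((row[j] - mean[j]) * component[j] for j in range(len(row)))


def knn_predict(train_scores, train_labels, score, k=3):
    """Majority vote among the k training samples closest in the reduced space."""
    nearest = sorted(range(len(train_scores)), key=lambda i: abs(train_scores[i] - score))[:k]
    return Counter(train_labels[i] for i in nearest).most_common(1)[0][0]


# Hypothetical feature vectors (stage 1 output) for six MRI images.
features = [[1.0, 2.0, 0.9], [1.2, 2.1, 1.1], [0.9, 1.8, 1.0],
            [4.0, 5.1, 3.9], [4.2, 4.9, 4.1], [3.8, 5.0, 4.0]]
labels = ["normal"] * 3 + ["abnormal"] * 3

centered, mean = mean_center(features)            # stage 2: PCA
pc1 = first_principal_component(centered)
scores = [project(f, mean, pc1) for f in features]
prediction = knn_predict(scores, labels, project([4.1, 5.0, 4.0], mean, pc1))  # stage 3
print(prediction)  # abnormal
```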

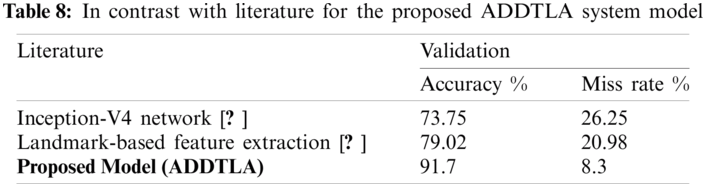

Islam et al. [15] proposed a deep convolutional network inspired by the Inception-V4 network for early detection of the disease. They used Inception-A, B, and C modules and Reduction-A and B modules, whose inputs and outputs pass through multiple filter concatenation operations. They used the Open Access Series of Imaging Studies (OASIS) dataset for training and testing and achieved an overall accuracy of 73.75%. Zhang et al. [16] developed a landmark-based feature extraction framework using longitudinal structural MRI images for the diagnosis of AD. Guerrero et al. [17] introduced a feature extraction framework based on significant inter-subject variability, in which sparse regression models were used to derive regions of interest (ROIs) for variable selection. Their model achieved an overall accuracy of 71%, and its limitation is that it addressed only binary classification. Ahmed et al. [18] proposed a model with a hybrid feature vector that combined the shape of the hippocampus and cortical thickness with texture features. The authors used a linear discriminant analysis (LDA) classifier to classify the feature vectors derived from MRI scans. The methodology was tested on data from the Alzheimer's Disease Neuroimaging Initiative (ADNI), and on this multi-class classification problem it achieved an overall accuracy of 62.7%.
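The classification step used by Ahmed et al. [18], linear discriminant analysis over hybrid feature vectors, can be sketched as below for two-dimensional features. This is a minimal illustration under our own assumptions: the two features (standing in for, e.g., a hippocampal shape score and cortical thickness) and the class labels "A", "B", "C" are hypothetical, and LDA is implemented in its textbook form with class means, a pooled covariance matrix, and class priors.

```python
import math


def fit_lda(X, y):
    """Fit 2-D LDA: per-class means, pooled within-class covariance, class priors."""
    classes = sorted(set(y))
    n = len(X)
    means, priors = {}, {}
    for c in classes:
        pts = [x for x, lab in zip(X, y) if lab == c]
        means[c] = [sum(p[0] for p in pts) / len(pts), sum(p[1] for p in pts) / len(pts)]
        priors[c] = len(pts) / n
    # Pooled within-class covariance (2 x 2) with n - K degrees of freedom.
    s = [[0.0, 0.0], [0.0, 0.0]]
    for x, lab in zip(X, y):
        d = [x[0] - means[lab][0], x[1] - means[lab][1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    dof = n - len(classes)
    s = [[v / dof for v in row] for row in s]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    return classes, means, priors, inv


def predict_lda(model, x):
    """Pick the class with the largest linear discriminant score."""
    classes, means, priors, inv = model

    def score(c):
        m = means[c]
        w = [inv[0][0] * m[0] + inv[0][1] * m[1], inv[1][0] * m[0] + inv[1][1] * m[1]]  # inverse covariance times mean
        return x[0] * w[0] + x[1] * w[1] - 0.5 * (m[0] * w[0] + m[1] * w[1]) + math.log(priors[c])

    return max(classes, key=score)


# Hypothetical 2-D hybrid feature vectors for three classes.
X = [[1.0, 1.0], [1.2, 0.8], [0.8, 1.1], [1.1, 1.2],
     [3.0, 3.1], [3.2, 2.9], [2.8, 3.0], [3.1, 3.2],
     [5.0, 1.0], [5.2, 1.2], [4.9, 0.8], [5.1, 1.1]]
y = ["A"] * 4 + ["B"] * 4 + ["C"] * 4
model = fit_lda(X, y)
print(predict_lda(model, [1.05, 1.0]), predict_lda(model, [3.0, 3.0]), predict_lda(model, [5.0, 1.0]))  # A B C
```

Sharing one covariance matrix across classes is what makes the decision boundaries linear; estimating a separate covariance per class would turn this into quadratic discriminant analysis.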

Transfer learning is used in various fields such as medical imaging, health care, and software defect prediction. As deep learning grows, transfer learning is becoming an integral part of many applications, especially in medical imaging, where it is used to deal with a lack of data. It allows knowledge acquired from other tasks to be used to handle new but similar problems effectively and quickly. Standard architectures designed for ImageNet are fine-tuned, starting from their pretrained weights, on medical tasks ranging from identifying eye diseases and interpreting chest X-rays to early detection of AD. Transfer learning has also been used for pneumonia detection; pneumonia is among the leading causes of death worldwide, and it is difficult to detect just by looking at X-rays. Transfer learning has also been applied to detect retinopathy in eye images. This disease affects many people who have had diabetes for a long period; it can cause blindness, but early detection and diagnosis can help control it.

The limitations of previous research can be summarized as follows: most of the work addressed binary classification, and little research has been done on multi-class classification. Transfer learning is a relatively new approach to disease classification that is not limited to Alzheimer's disease but has also been applied to mammography, ultrasound, X-ray, etc. Many studies are now being carried out to classify diseases using novel transfer learning methods. The main contributions of our proposed work, which automatically classifies Alzheimer's stages into four classes, are as follows:

For the classification of Alzheimer's disease, we propose and assess a novel methodology based on transfer learning. The pretrained AlexNet model is customized and used in our proposed model to identify AD stages. The model takes brain MRI images as input, and our transfer learning algorithm classifies them into multiple Alzheimer's stages.

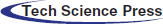

Artificial intelligence has brought a huge transformation to information technology, including medical image processing. Machine learning techniques were first used to analyze and identify objects in medical images, and in the last few years numerous deep learning techniques have been widely used for the same purpose. Detecting AD at early stages with deep learning techniques helps medical practitioners plan its treatment: the disease cannot be cured, but early detection can slow its progression. Multiple artificial intelligence techniques have been used for early diagnosis, and deep learning techniques are an effective way to help medical consultants detect the disease at an initial stage. The application-level representation of the proposed system model is shown in Fig. 2.

Figure 2: Proposed model application-level architecture

The proposed ADDTLA system model accepts MRI scans and uses deep learning techniques to detect the disease early and classify it into its different stages. It consists of two layers: the preprocessing layer and the application layer. The training data consist of raw MRI images collected from a Kaggle repository. The preprocessing layer converts each raw image to a 227 × 227 × 3 (RGB) input. In the application layer, the pretrained AlexNet model is imported and customized for transfer learning. If the learning criteria are not met, the model is retrained; otherwise, the trained model is stored in the cloud. A detailed description of the proposed system model is given in Fig. 3.

Figure 3: Detailed proposed ADDTLA system model
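The "retrain if the learning criteria are not met, otherwise store" step of the application layer can be sketched as a simple control loop. The function `train_until_threshold`, the stand-in `fake_train`, the 0.90 accuracy threshold, and the dictionary used in place of cloud storage are all hypothetical; the paper does not state its threshold value.

```python
def train_until_threshold(train_once, threshold, max_attempts=5):
    """Retrain until accuracy meets the threshold; report whether the criteria were met.

    train_once(attempt) is a hypothetical callable returning (model, accuracy).
    """
    best_model, best_acc = None, float("-inf")
    for attempt in range(1, max_attempts + 1):
        model, acc = train_once(attempt)
        if acc > best_acc:
            best_model, best_acc = model, acc
        if acc >= threshold:
            return model, acc, attempt, True           # criteria met: ready to store
    return best_model, best_acc, max_attempts, False   # criteria never met


fake_accuracies = [0.80, 0.88, 0.92]


def fake_train(attempt):
    """Hypothetical stand-in for one full training run."""
    return f"model-v{attempt}", fake_accuracies[attempt - 1]


storage = {}  # stands in for the cloud store
model, acc, attempts, met = train_until_threshold(fake_train, threshold=0.90, max_attempts=3)
if met:
    storage["addtla"] = model
print(model, acc, attempts, met)  # model-v3 0.92 3 True
```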

In the validation phase, MRI scans collected from various sources are passed to the preprocessing layer, which changes the dimensions of the images. After preprocessing, the proposed system model imports the trained model from the cloud for intelligent classification of Alzheimer's disease. The system detects and classifies AD into four classes: if symptoms of the disease are present, the system refers the patient to a doctor; otherwise, no visit to the doctor for AD treatment is needed.
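The validation-phase decision described above (classify a scan into one of four stages, then refer the patient to a doctor unless the scan is classified as non-demented) can be sketched as follows. The order of the four outputs in `CLASSES` and the example scores are assumptions for illustration; in the proposed model, the scores would come from the trained network's final fully connected layer.

```python
import math

# ASSUMPTION: order of the network's four outputs.
CLASSES = ["MD", "MOD", "ND", "VMD"]


def softmax(logits):
    """Convert raw scores into class probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def triage(logits):
    """Return (predicted stage, refer_to_doctor) for one scan."""
    probs = softmax(logits)
    stage = CLASSES[max(range(len(probs)), key=probs.__getitem__)]
    return stage, stage != "ND"  # any dementia stage triggers a referral


print(triage([0.1, -1.0, 2.5, 0.3]))  # ('ND', False)
print(triage([2.0, 0.1, 0.5, 1.0]))   # ('MD', True)
```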

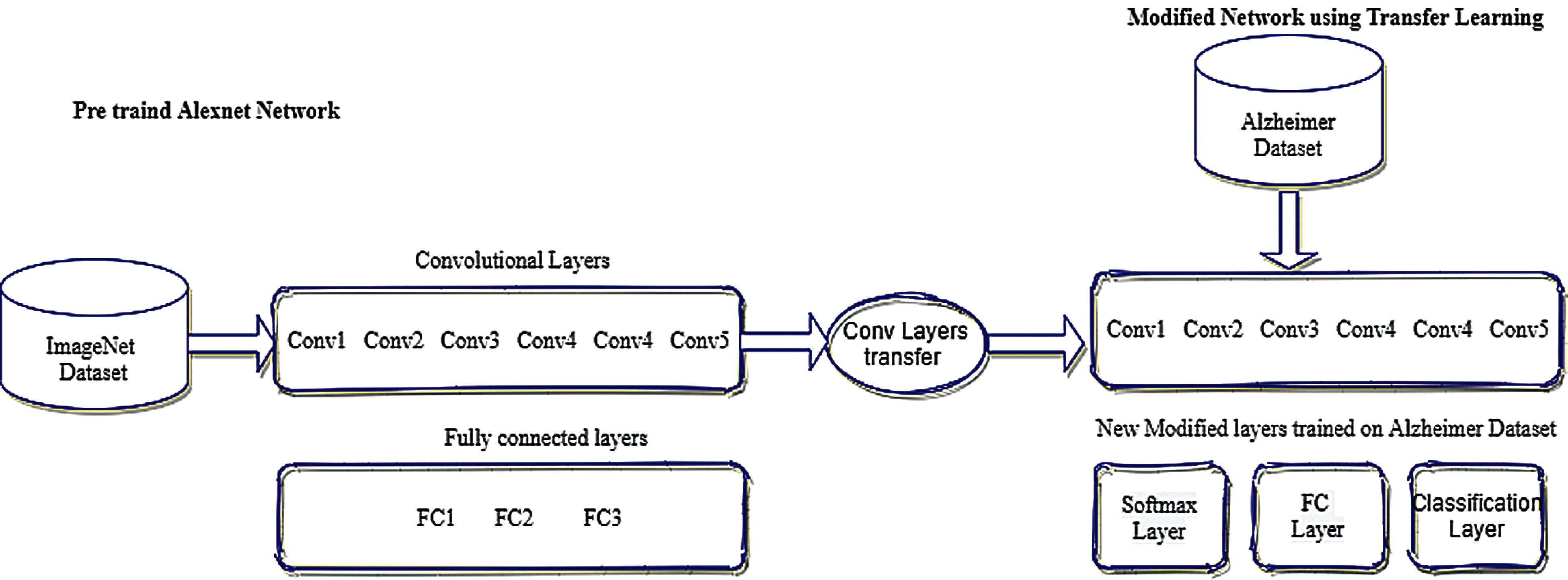

3.1 Transfer Learning (Modified AlexNet)

Deep learning is a very popular technique nowadays and is used in many fields of daily life, such as disease prediction, agriculture, aeronautics, and transportation. Different deep learning and pretrained models are used for various purposes. In this study, we use a pretrained AlexNet network for transfer learning to detect and classify Alzheimer's disease.

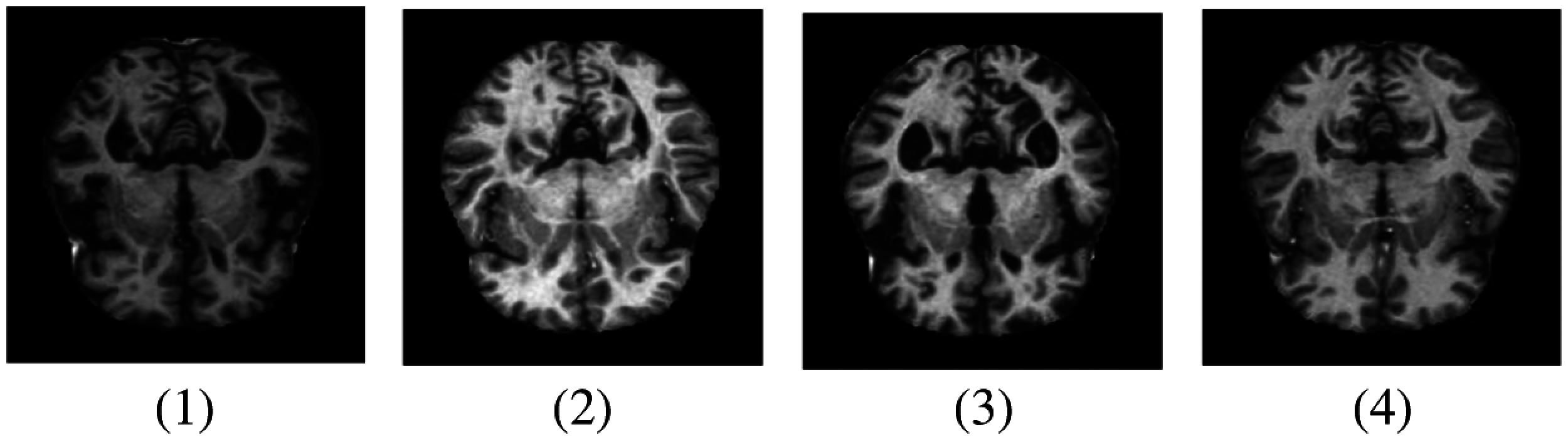

AlexNet is a convolutional neural network that is available as a pretrained model. Reusing a pretrained model for a new task is known as transfer learning, a notion frequently used in deep learning applications nowadays. AlexNet was trained on images from the ImageNet database, which contains more than 15 million labeled images [19]. We use a modified version of this AlexNet model in our proposed methodology. AlexNet has eight layers with learnable parameters: five convolutional layers, some of which are followed by max pooling, and three fully connected layers. Each of these layers except the last uses the nonlinear ReLU activation function. The input layer of the network reads images from the preprocessing layer. Preprocessing is a necessary step to obtain a proper dataset and can be done in many ways, e.g., by enhancing image features or by resizing images. Resizing is essential because the images have varying dimensions. Therefore, the images were resized to 227 × 227 × 3, where 227 × 227 is the height and width of the input images and 3 is the number of channels. MRI images representing different AD stages after preprocessing are shown in Fig. 4.

Figure 4: Samples of MRI images after pre-processing. (1) MD; (2) MOD; (3) ND; (4) VMD
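The resizing step can be illustrated with the dependency-free sketch below, which maps a single-channel image of arbitrary size to a 227 × 227 × 3 array. Two simplifications are assumptions rather than the paper's stated method: nearest-neighbor interpolation is used, and the RGB input is formed by copying the grayscale MRI intensity into all three channels. The 208 × 176 size of the example slice is also hypothetical.

```python
def resize_nearest(gray, out_h=227, out_w=227):
    """Nearest-neighbour resize of a 2-D grayscale image given as a list of rows."""
    in_h, in_w = len(gray), len(gray[0])
    return [[gray[(r * in_h) // out_h][(c * in_w) // out_w] for c in range(out_w)]
            for r in range(out_h)]


def to_three_channels(gray):
    """Copy the single intensity channel into three channels: H x W x 3."""
    return [[[v, v, v] for v in row] for row in gray]


def preprocess(gray):
    """Resize to 227 x 227, then expand to the 227 x 227 x 3 network input."""
    return to_three_channels(resize_nearest(gray))


# Hypothetical 208 x 176 MRI slice with synthetic 8-bit intensities.
mri_slice = [[(r + c) % 256 for c in range(176)] for r in range(208)]
net_input = preprocess(mri_slice)
print(len(net_input), len(net_input[0]), len(net_input[0][0]))  # 227 227 3
```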

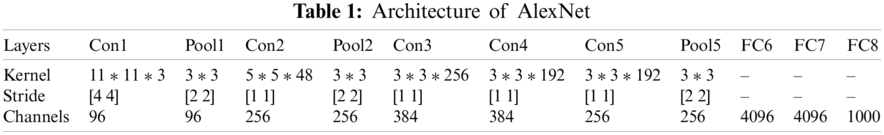

The early convolutional layers extract general image features by applying filters, e.g., for edge detection, and preserve the spatial relationships among pixels, while the fully connected layers at the end of the network learn disease-specific features to assign images to explicit classes. The architecture of the AlexNet network is given in Tab. 1.
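How a 227 × 227 × 3 input shrinks through the convolutional and pooling layers can be checked with the standard output-size formula floor((n + 2p − k) / s) + 1, for input size n, kernel size k, stride s, and padding p. The kernel sizes, strides, paddings, and filter counts below follow the widely used AlexNet configuration and are given as an illustration to compare against Tab. 1, not as a transcription of it.

```python
def out_size(n, kernel, stride=1, pad=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * pad - kernel) // stride + 1


# (name, kernel, stride, padding, output channels or None for pooling)
alexnet_features = [
    ("conv1", 11, 4, 0, 96), ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256), ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384), ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256), ("pool5", 3, 2, 0, None),
]

size, channels = 227, 3
for name, k, s, p, out_ch in alexnet_features:
    size = out_size(size, k, s, p)
    channels = out_ch if out_ch is not None else channels
    print(f"{name}: {size} x {size} x {channels}")
print("inputs to the first fully connected layer:", size * size * channels)  # 9216
```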

Tab. 1 shows the architecture of the pretrained AlexNet, which is composed of convolutional, pooling, and fully connected layers. AlexNet, a pretrained CNN that has had a major impact on modern deep learning applications, is imported into our model [20]. The network is customized to our requirements, and the preprocessed images are then passed to the proposed AlexNet transfer learning model. The last three layers of the architecture are replaced to fit our problem statement; the newly adapted layers are a fully connected layer, a softmax layer, and an output classification layer. The modified network used for transfer learning is shown in Fig. 5.

Figure 5: Modified network using transfer learning
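The replacement of the last three layers can be sketched as list manipulation over layer descriptions. The `Layer` class and the new layer names `fc_new`, `softmax`, and `classoutput` are hypothetical; the names of the pretrained tail layers are modeled on MATLAB's pretrained `alexnet`, with the earlier layers omitted for brevity. The sketch also counts the parameters of the new fully connected layer, assuming it receives the 4096 outputs of the preceding fully connected layer.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Layer:
    name: str
    kind: str
    units: Optional[int] = None  # output size, for fully connected layers


# Tail of a pretrained AlexNet layer list (earlier layers omitted).
pretrained = [
    Layer("fc6", "fully_connected", 4096), Layer("relu6", "relu"), Layer("drop6", "dropout"),
    Layer("fc7", "fully_connected", 4096), Layer("relu7", "relu"), Layer("drop7", "dropout"),
    Layer("fc8", "fully_connected", 1000), Layer("prob", "softmax"),
    Layer("output", "classification"),
]


def adapt_for_transfer(layers: List[Layer], num_classes: int) -> List[Layer]:
    """Drop the last three layers; append a new FC, softmax, and classification layer."""
    return layers[:-3] + [
        Layer("fc_new", "fully_connected", num_classes),
        Layer("softmax", "softmax"),
        Layer("classoutput", "classification"),
    ]


def fc_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


adapted = adapt_for_transfer(pretrained, num_classes=4)  # MD, MOD, ND, VMD
print([layer.name for layer in adapted[-3:]])  # ['fc_new', 'softmax', 'classoutput']
print(fc_params(4096, 4))                      # 16388 parameters in the new layer
print(fc_params(4096, 1000))                   # 4097000 parameters in the replaced layer
```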

The initial five layers of the network, already trained on ImageNet as part of AlexNet, remain fixed, while the remaining adaptation layers are trained on the Alzheimer's dataset [21]. The last three layers are configured according to the output class labels so that images are assigned to their particular classes. The main parameter of the new fully connected layer is its output size, which equals the total number of class labels. The softmax layer applies the softmax function to its input, converting the fully connected outputs into class probabilities. During training, it is observed that generic image features, such as edges, are represented by the convolutional layers, while the class-specific features that differentiate between classes are learned by the fully connected layers. Therefore, the fully connected layers are modified to learn our class-specific features. The proposed model is trained on the multi-class AD labels to classify images into the different classes.
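The idea of keeping the pretrained layers fixed while training only the new fully connected and softmax layers with a cross-entropy loss can be shown in miniature. In this sketch, a fixed linear map followed by ReLU (`W_frozen`, hypothetical values) stands in for the frozen AlexNet layers, and the two-dimensional inputs and four class labels are toy data; plain per-sample gradient descent is used for brevity instead of SGDM.

```python
import math


def frozen_features(x, W):
    """Stand-in for the frozen pretrained layers: fixed linear map + ReLU, never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def logits(f, Wh, b):
    """New fully connected layer: one output per class."""
    return [sum(w * fi for w, fi in zip(Wh[k], f)) + b[k] for k in range(len(b))]


def train_head(X, y, W_frozen, num_classes, lr=0.1, epochs=300):
    """Train only the new FC + softmax head with the cross-entropy loss."""
    feats = [frozen_features(x, W_frozen) for x in X]  # frozen layers: computed once
    d = len(feats[0])
    Wh = [[0.0] * d for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        for f, label in zip(feats, y):
            p = softmax(logits(f, Wh, b))
            for k in range(num_classes):
                g = p[k] - (1.0 if k == label else 0.0)  # d(cross-entropy)/d(logit_k)
                b[k] -= lr * g
                for j in range(d):
                    Wh[k][j] -= lr * g * f[j]
    return Wh, b


def predict(x, W_frozen, Wh, b):
    z = logits(frozen_features(x, W_frozen), Wh, b)
    return max(range(len(z)), key=z.__getitem__)


W_frozen = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]  # hypothetical "pretrained" weights
W_before = [row[:] for row in W_frozen]
X = [[2.0, 0.1], [1.8, -0.2], [0.1, 2.0], [-0.2, 1.7],
     [-2.0, 0.2], [-1.9, -0.1], [0.2, -2.0], [-0.1, -1.8]]
y = [0, 0, 1, 1, 2, 2, 3, 3]
Wh, b = train_head(X, y, W_frozen, num_classes=4)
print([predict(x, W_frozen, Wh, b) for x in X])  # [0, 0, 1, 1, 2, 2, 3, 3]
```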

Several parameters and training options can be used to train the network. To achieve transfer learning, all AlexNet layers except the last three (the fully connected, softmax, and output classification layers) are extracted. The training options include the learning rate, number of iterations, validation frequency, and number of epochs. The network is trained with the stochastic gradient descent with momentum (SGDM) optimization algorithm using a learning rate of 1e−4, and one epoch consists of 39 iterations. These training options are used by the newly added layers to learn the features of the AD dataset. Training was performed for 10, 20, 30, 35, and 40 epochs, and 40 epochs was found to be optimal. The learning rate was also varied from 1e−1 to 1e−5, and the optimal learning rate was 1e−4. To meet the accuracy threshold, the network was retrained several times to achieve maximum accuracy. The cross-entropy function was used to measure the loss, and the output size is determined by the number of classes.
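The SGDM update can be written in velocity form as v ← γv − α∇E(θ) followed by θ ← θ + v, with learning rate α and momentum γ; this is equivalent to θ ← θ − α∇E(θ) + γ(θ − θ_prev). The sketch below applies it to a toy quadratic loss. The momentum value 0.9 (MATLAB's default) is an assumption, since the paper does not report it, and the toy uses α = 0.1 rather than the paper's 1e−4 so that it converges within a few hundred steps; in real training, the gradient would be computed on a mini-batch.

```python
def sgdm(grad, theta0, lr=1e-4, momentum=0.9, steps=100):
    """Gradient descent with momentum in velocity form.

    v <- momentum * v - lr * grad(theta);  theta <- theta + v
    """
    theta = list(theta0)
    v = [0.0] * len(theta)
    for _ in range(steps):
        g = grad(theta)
        v = [momentum * vi - lr * gi for vi, gi in zip(v, g)]
        theta = [t + vi for t, vi in zip(theta, v)]
    return theta


def toy_grad(theta):
    """Gradient of E(theta) = 0.5*(theta0 - 3)^2 + 0.5*(theta1 + 1)^2, minimum at (3, -1)."""
    return [theta[0] - 3.0, theta[1] + 1.0]


theta_final = sgdm(toy_grad, [0.0, 0.0], lr=0.1, steps=300)
print([round(t, 4) for t in theta_final])  # [3.0, -1.0]
```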

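The domain $\mathcal{D}$ consists of two components: a feature space $\mathcal{Y}$ and a marginal probability distribution $P(Y)$, where $Y = \{y_1, y_2, \ldots, y_n\} \in \mathcal{Y}$:

$$\mathcal{D} = \{\mathcal{Y}, P(Y)\}$$

Two different domains generally have different feature spaces or different marginal probability distributions. A task $\mathcal{T}$ in a domain $\mathcal{D}$ is also represented by two components, a label space $Z$ and an objective predictive function $f(\cdot)$:

$$\mathcal{T} = \{Z, f(\cdot)\}$$

During training, the predictive function $f(\cdot)$ is learned from the features $y_i \in Y$ and their labels $z_i \in Z$; it is then used to predict the label $f(y)$ of a new instance $y$.

Transfer learning aims to improve the learning of the target predictive function $f_T(\cdot)$ in the target domain $\mathcal{D}_T$ using the knowledge in the source domain $\mathcal{D}_S$ and the source task $\mathcal{T}_S$.

In the proposed (ADDTLA) system model, the target and source domains are dissimilar, which implies that their components differ as well:

$$\mathcal{D}_S \neq \mathcal{D}_T \quad \Rightarrow \quad \mathcal{Y}_S \neq \mathcal{Y}_T \ \text{or} \ P(Y_S) \neq P(Y_T)$$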

The customized trained model is stored in the cloud and used for validation. During the validation stage, MRI scans are passed to the trained model, which applies the features it learned during training to assess the images and classify them into their respective classes: moderate demented (MOD), mild demented (MD), non-demented (ND), and very mild demented (VMD). The validation data consist of 20% of the original data samples on which simulations were performed. These results indicate that transferring knowledge learned from large datasets contributes to the efficient detection of AD stages.

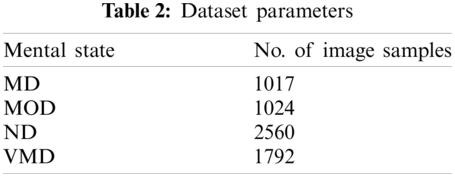

The target dataset was taken from a publicly available Kaggle repository [22]. It consists of brain MRI scans in four classes: mild demented, non-demented, very mild demented, and moderate demented. The proposed model was trained on this image dataset, which covers multiple stages of AD. The number of image samples per class after augmentation is given in Tab. 2.

The proposed system model was developed using a pretrained AlexNet for the detection of AD at early stages, and MATLAB R2020b was used for classification and for producing the results. The proposed (ADDTLA) model is divided into training and validation phases: 80% of the dataset is used for training and 20% for validation. The results are evaluated with several performance metrics: sensitivity, specificity, precision, accuracy, false negative rate (FNR), false positive rate (FPR), miss rate, F1 score, likelihood ratio positive (LRP), and likelihood ratio negative (LRN). These are standard metrics for quantifying the performance of classification and predictive models [21].
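All of the listed metrics can be computed from the one-vs-rest confusion-matrix counts of a class: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). The helper below is a sketch using the standard definitions, and the example counts are hypothetical. Following the values reported in this paper (e.g., 98% accuracy with a 2% miss rate), miss rate is computed as 1 − accuracy.

```python
def binary_metrics(tp, fp, tn, fn):
    """One-vs-rest metrics for a single class from its confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall / true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)
    fpr = fp / (fp + tn)           # 1 - specificity
    fnr = fn / (fn + tp)           # 1 - sensitivity
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    return {
        "accuracy": accuracy,
        "miss_rate": 1 - accuracy,  # as reported in this paper
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "fpr": fpr,
        "fnr": fnr,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "lrp": sensitivity / fpr,   # likelihood ratio positive
        "lrn": fnr / specificity,   # likelihood ratio negative
    }


m = binary_metrics(tp=90, fp=5, tn=95, fn=10)  # hypothetical counts for one class
print({name: round(value, 3) for name, value in m.items()})
```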

The proposed system model (ADDTLA) classifies Alzheimer's disease into four classes. MD represents the stage in which people may experience loss of memory of recent events; MOD signifies the stage in which people show poor judgment and need help with daily activities; ND represents the absence of disease; and VMD characterizes the stage in which a person may function independently but has memory lapses, such as forgetting the location of everyday objects.

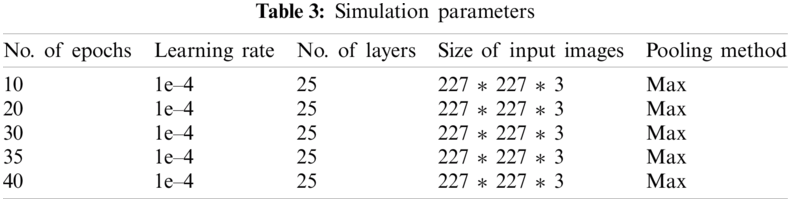

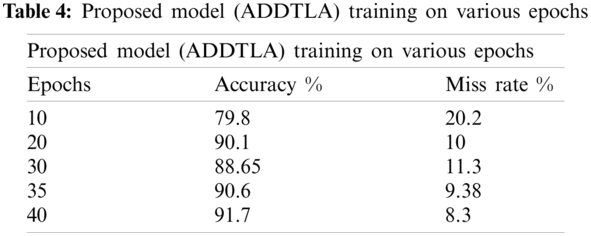

Tab. 3 lists the simulation parameter values used for the proposed system model. The model was trained for 10, 20, 30, 35, and 40 epochs, and the optimal accuracy of 91.7% was obtained at 40 epochs. The proposed model detects and classifies the disease with better accuracy than the previous approach [15], which reported 73.75% accuracy. In the proposed (ADDTLA) system model, CNN training parameters such as the number of epochs and the learning rate were varied, and the model was trained multiple times to find the optimal accuracy and loss.

Tab. 4 shows the accuracy and miss rate of the proposed (ADDTLA) model for 10, 20, 30, 35, and 40 epochs. The accuracy was 79.8% at 10 epochs, 90.1% at 20 epochs, 88.65% at 30 epochs, 90.6% at 35 epochs, and 91.7% at 40 epochs. Accuracy generally improved with more epochs, apart from a dip at 30 epochs, and the optimal accuracy of 91.7% was achieved at 40 epochs.
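Selecting the number of epochs from Tab. 4 amounts to taking the maximum validation accuracy. The accuracies below are the values reported above; the miss rates are derived under the assumption, consistent with the other results in this paper, that miss rate = 100% − accuracy.

```python
# Validation accuracy (%) by number of training epochs, as reported for Tab. 4.
accuracy_by_epochs = {10: 79.8, 20: 90.1, 30: 88.65, 35: 90.6, 40: 91.7}

# Assumption: miss rate = 100% - accuracy.
miss_rate = {e: round(100 - a, 2) for e, a in accuracy_by_epochs.items()}

best = max(accuracy_by_epochs, key=accuracy_by_epochs.get)
print(best, accuracy_by_epochs[best], miss_rate[best])  # 40 91.7 8.3
```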

Fig. 6 shows sample images labeled by the proposed system (ADDTLA): 2 images are labeled as VMD, 3 as ND, 5 as MD, and 2 as MOD.

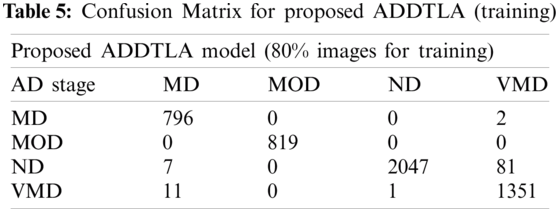

Tab. 5 shows the classification results of the proposed (ADDTLA) model in training for 40 epochs. Of the 6393 images in total, 5115 were used for training and 1278 for validation. Of the 798 MD training images, 796 were classified as MD and 2 as VMD. All 819 MOD images were classified as MOD. Of the 2135 ND images, 2047 were classified as ND, and 7 and 81 images were classified as MD and VMD, respectively. Of the 1363 VMD images, 1351 were classified as VMD, 11 as MD, and the remaining 1 image as another class.

Figure 6: Classification of images by proposed ADDTLA model
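The Tab. 5 counts given above can be checked with a few lines of arithmetic: the per-class totals sum to the 5115 training images, the 5115/1278 split is an 80/20 division of the 6393 images, and the correctly classified images give the 98% training accuracy reported later. Only numbers stated in the text are used.

```python
# Tab. 5 (training, 40 epochs): images per true class and correctly classified images.
totals = {"MD": 798, "MOD": 819, "ND": 2135, "VMD": 1363}
correct = {"MD": 796, "MOD": 819, "ND": 2047, "VMD": 1351}
n_val = 1278

n_train = sum(totals.values())
train_accuracy = sum(correct.values()) / n_train

print(n_train, n_train + n_val)               # 5115 6393
print(round(n_train / (n_train + n_val), 2))  # 0.8
print(round(train_accuracy, 4))               # 0.9801
```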

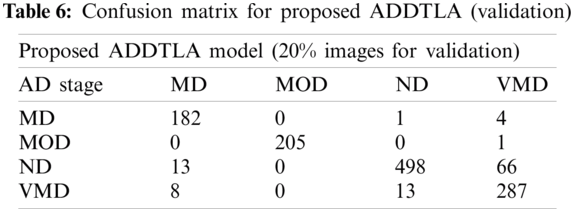

Tab. 6 shows the confusion matrix of the proposed (ADDTLA) model in validation after training for 40 epochs. A total of 1278 images were used to validate the model. For the MD class, 182 images are correctly classified as MD, and 5 images are misclassified as ND or VMD. For the MOD class, 205 images are correctly classified, and 1 image is misclassified as VMD. For the ND class, 498 images are correctly classified and 79 are misclassified: 13 as MD and 66 as VMD. Finally, for the VMD class, 287 images are correctly classified, and 21 are misclassified as MD or ND.
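The validation accuracy and per-class recall (sensitivity) follow directly from the Tab. 6 row counts stated above, where each row is a true class. The sketch uses only those counts; how the 5 MD and 21 VMD misclassifications split between the other classes is not needed for these quantities.

```python
# Tab. 6 (validation, 40 epochs): correct and misclassified images per true class.
correct = {"MD": 182, "MOD": 205, "ND": 498, "VMD": 287}
misclassified = {"MD": 5, "MOD": 1, "ND": 79, "VMD": 21}

row_totals = {c: correct[c] + misclassified[c] for c in correct}
total = sum(row_totals.values())
accuracy = sum(correct.values()) / total
recall = {c: correct[c] / row_totals[c] for c in correct}

print(total, round(accuracy, 4))                 # 1278 0.9171
print({c: round(r, 3) for c, r in recall.items()})
# {'MD': 0.973, 'MOD': 0.995, 'ND': 0.863, 'VMD': 0.932}
```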

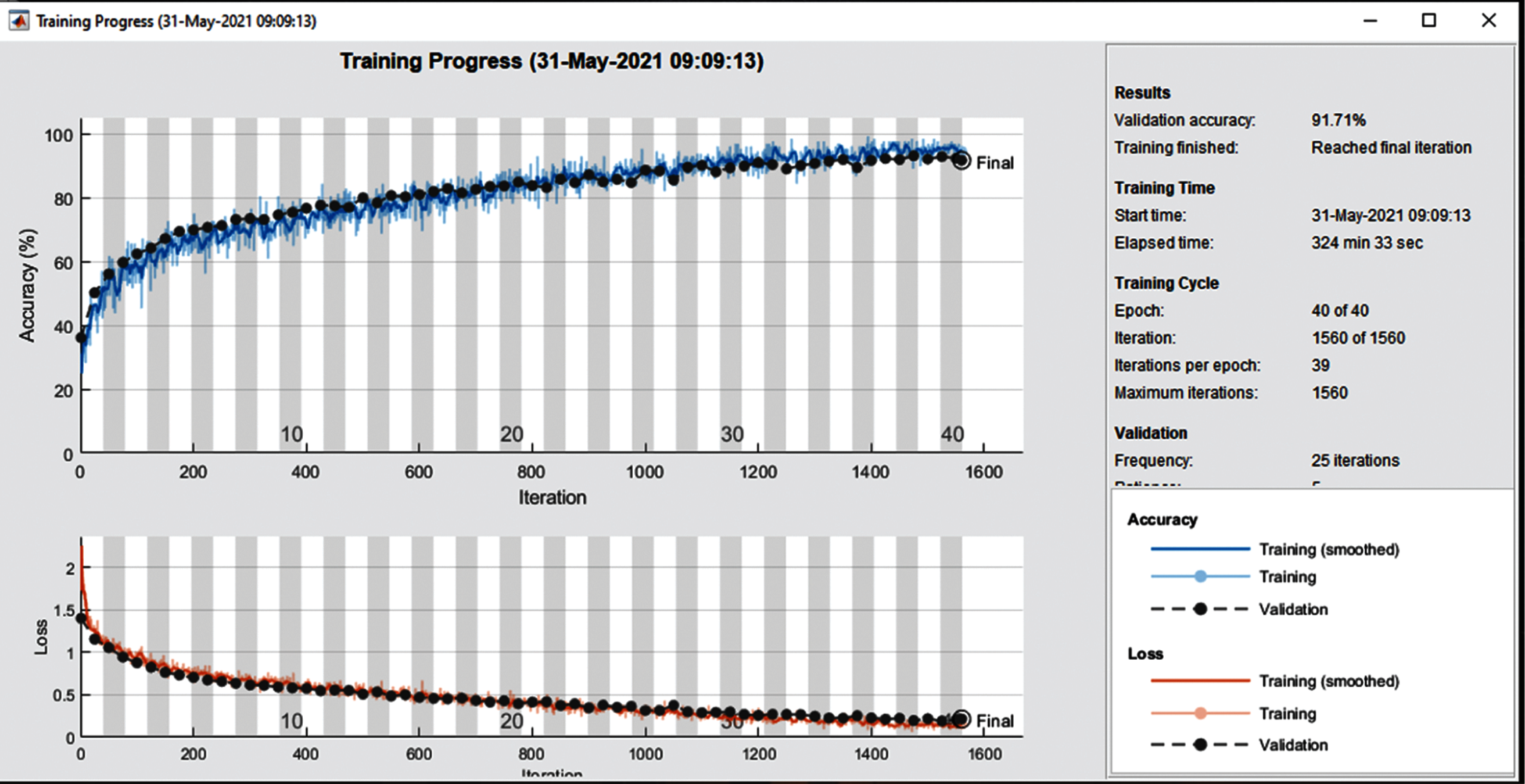

Fig. 7 shows the training accuracy plot over iterations and epochs for 40 epochs of training. Accuracy is low at the start and gradually increases as the number of epochs increases. The network was trained with a learning rate of 1e−4, and each epoch consists of 39 iterations.

Figure 7: Training accuracy and loss on 40 epochs
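The reported 39 iterations per epoch are consistent with the 5115 training images if one assumes a mini-batch size of 128 (MATLAB's default) and that the incomplete final mini-batch of each epoch is discarded. The paper does not state its mini-batch size, so this is an assumption.

```python
n_train = 5115    # training images (Tab. 5)
mini_batch = 128  # ASSUMPTION: MATLAB's default mini-batch size; not stated in the paper
epochs = 40

iters_per_epoch = n_train // mini_batch  # images that do not fill a final mini-batch are dropped
total_iterations = iters_per_epoch * epochs
print(iters_per_epoch, total_iterations)  # 39 1560
```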

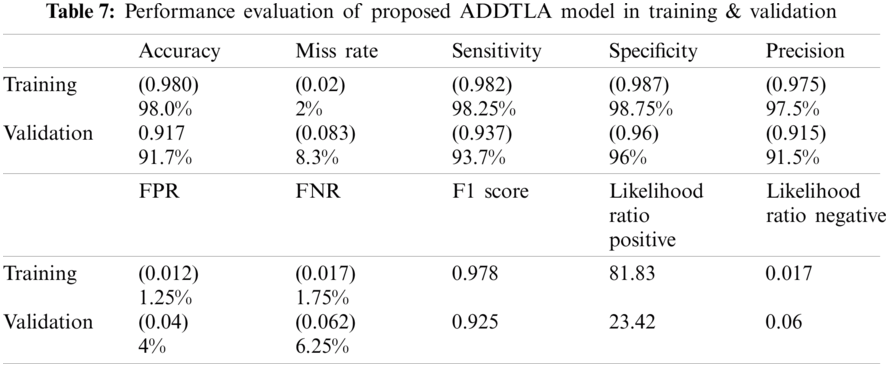

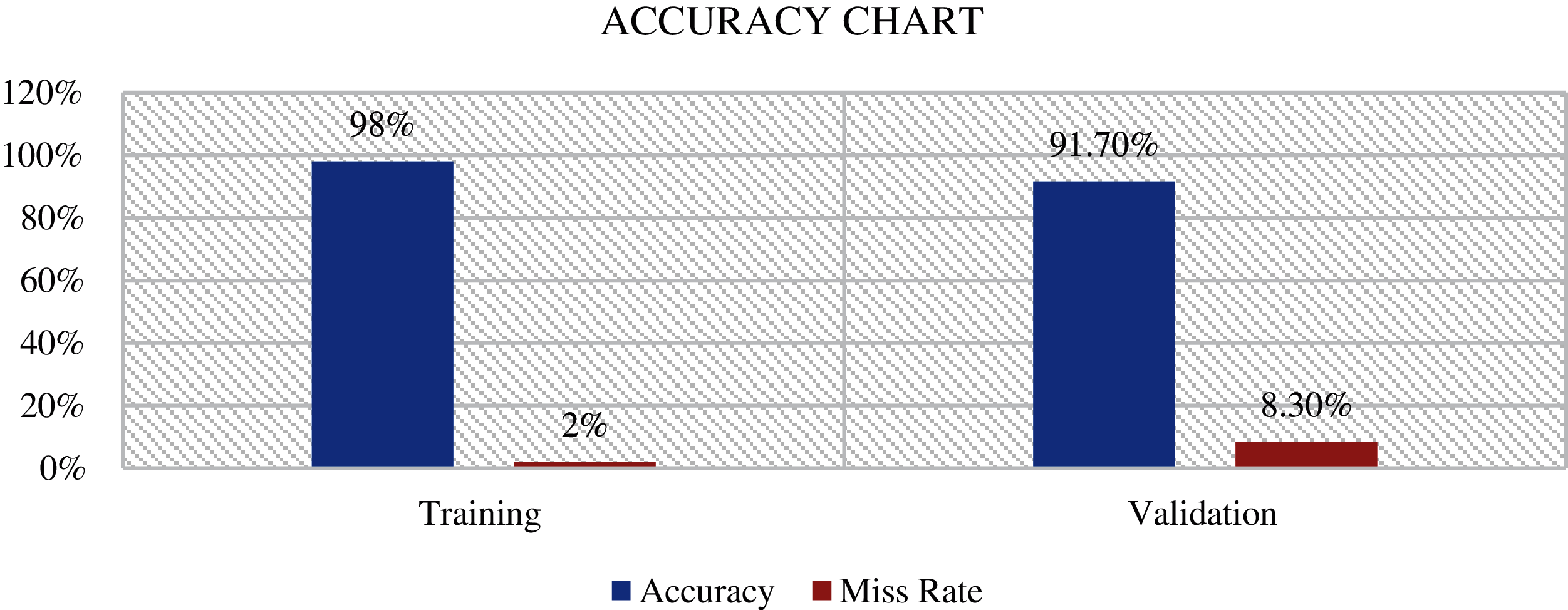

Tab. 7 reports the statistical measures (accuracy, miss rate, sensitivity, specificity, precision, FPR, FNR, F1 score, LRP, and LRN) for the proposed (ADDTLA) system model. The model achieves an accuracy of 98% in training and 91.7% in validation at 40 epochs.

The values for accuracy, miss rate, sensitivity, specificity, precision, FPR, FNR, F1 Score, LRP, LRN are 98.0%, 2%, 98.25%, 98.75%, 97.5%, 1.25%, 1.75%, 0.978, 81.83, 0.017 respectively on 40 epochs for training. For the validation phase the values for accuracy, miss rate, sensitivity, specificity, precision, FPR, FNR, F1 Score, LRP, LRN are 91.7%, 8.3%, 93.7%, 96%, 91.5%, 4%, 6.25%, 0.925, 23.42, 0.06 respectively for 40 epochs.

Multiple approaches have been used in the past to detect AD, and transfer learning is a relatively new approach for detecting the disease. Our proposed methodology achieved high accuracy in classifying the disease stages. Thus, early detection can help medical consultants provide treatment to slow the progression of the disease.

Tab. 8 compares the performance of the proposed system model with previously published approaches. The proposed model achieves 91.7% accuracy, which is higher than that of published approaches such as the Inception-V4-based network [15] and landmark-based feature extraction [16].

Fig. 8 shows the evaluation of the proposed model (ADDTLA) in terms of accuracy and miss rate at 40 epochs. It achieves 98% accuracy with a 2% miss rate in the training phase, and 91.70% accuracy with an 8.30% miss rate in the validation phase.

Figure 8: Proposed model ADDTLA accuracy chart

Early detection and multi-class classification of AD remain challenging. Classification frameworks and automated systems are needed for the appropriate treatment of the disease. In this work, we proposed a system based on a transfer learning classification model for multi-stage detection of Alzheimer's disease. Our algorithm uses the pretrained AlexNet, retrains the CNN, and, when validated on the validation dataset, achieves an accuracy of 91.7% on the multi-class problem at 40 epochs. The proposed system model (ADDTLA) does not require hand-crafted features, is fast, and can handle small image datasets. In future work, the accuracy obtained by fine-tuning all the convolutional layers can be analyzed, and other pretrained networks or CNN architectures can be explored. Other datasets, such as ADNI, can also be used to achieve comparable or better performance. Finally, other supervised and unsupervised deep learning approaches can be applied to multi-class Alzheimer's disease detection.

Acknowledgement: The authors thank their families and colleagues for their continued support.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. I. Beheshti and H. Demirel, “Feature-ranking-based Alzheimer's disease classification from structural MRI,” Magnetic Resonance Imaging, vol. 34, no. 3, pp. 252–263, 2016.

2. Alzheimer's Association, “Alzheimer's disease facts and figures include a special report on Alzheimer's detection in the primary care setting: Connecting patients and physicians,” Alzheimer's Dement, vol. 15, no. 3, pp. 321–387, 2019.

3. S. Afzal, “A data augmentation-based framework to handle class imbalance problem for Alzheimer's stage detection,” IEEE Access, vol. 7, pp. 115528–115539, 2019.

4. Y. Q. Wang, “Dementia in China (2015–2050) estimated using the 1% population sampling survey in 2015,” Geriatrics & Gerontology International, vol. 19, no. 11, pp. 1096–1100, 2019.

5. C. N. L. Schlaggar, O. L. D. Rosario, J. C. Morris, B. M. Ances, B. L. Schlaggar et al., “Adaptation of the clinical dementia rating scale for adults with Down syndrome,” Journal of Neurodevelopmental Disorders, vol. 11, no. 1, pp. 1–10, 2019.

6. G. Umbach, “Time cells in the human hippocampus and entorhinal cortex support episodic memory,” Proceedings of the National Academy of Sciences of the United States of America, vol. 117, no. 45, pp. 28463–28474, 2020.

7. E. Moradi, A. Pepe, C. Gaser, H. Huttunen and J. Tohka, “Machine learning framework for early MRI-based Alzheimer's conversion prediction in MCI subjects,” Neuroimage, vol. 104, pp. 398–412, 2015.

8. E. E. Bron, “Standardized evaluation of algorithms for computer-aided diagnosis of dementia based on structural MRI: The CADDementia challenge,” Neuroimage, vol. 111, pp. 562–579, 2015.

9. C. Plant, “Automated detection of brain atrophy patterns based on MRI for the prediction of Alzheimer's disease,” Neuroimage, vol. 50, no. 1, pp. 162–174, 2010.

10. G. Litjens, “A survey on deep learning in medical image analysis,” Medical Image Analysis, vol. 42, no. 12, pp. 60–88, 2017.

11. K. R. Gray, P. Aljabar, R. A. Heckemann, A. Hammers and D. Rueckert, “Random forest-based similarity measures for multi-modal classification of Alzheimer's disease,” Neuroimage, vol. 65, pp. 167–175, 2013.

12. E. S. A. E. Dahshan, T. Hosny and A. B. M. Salem, “Hybrid intelligent techniques for MRI brain images classification,” Digital Signal Processing: A Review Journal, vol. 20, no. 2, pp. 433–441, 2010.

13. S. Ahmed, “Ensembles of patch-based classifiers for diagnosis of Alzheimer diseases,” IEEE Access, vol. 7, pp. 73373–73383, 2019.

14. X. Hong, “Predicting Alzheimer's disease using LSTM,” IEEE Access, vol. 7, pp. 80893–80901, 2019.

15. J. Islam and Y. Zhang, “A novel deep learning based multi-class classification method for Alzheimer's disease detection using brain MRI data,” Lecture Notes in Computer Science, vol. 10654, LNAI, no. 09, pp. 213–222, 2017.

16. J. Zhang, M. Liu, L. An, Y. Gao and D. Shen, “Alzheimer's disease diagnosis using landmark-based features from longitudinal structural MR images,” IEEE Journal of Biomedical and Health Informatics, vol. 21, no. 6, pp. 1607–1616, 2017.

17. R. Guerrero, R. Wolz, A. W. Rao and D. Rueckert, “Manifold population modeling as a neuro-imaging biomarker: Application to ADNI and ADNI-GO,” Neuroimage, vol. 94, pp. 275–286, 2014.

18. O. B. Ahmed, M. Mizotin, J. B. Pineau, M. Allard, G. Catheline et al., “Alzheimer's disease diagnosis on structural MR images using circular harmonic functions descriptors on hippocampus and posterior cingulate cortex,” International Journal on Imaging and Image-Computing, vol. 44, pp. 13–25, 2015.

19. M. Z. Alom, “The history began from AlexNet: A comprehensive survey on deep learning approaches,” ArXiv, vol. 18, pp. 1–12, 2018.

20. J. Schmidhuber, “Deep learning in neural networks: An overview,” Neural Networks, vol. 61, pp. 85–117, 2015.

21. N. Seliya, T. M. Khoshgoftaar and J. V. Hulse, “A study on the relationships of classifier performance metrics,” in 21st IEEE Int. Conf. on Tools with Artificial Intelligence, Newark, New Jersey, USA, pp. 59–66, 2009.

22. S. Dubey, 2019. https://www.kaggle.com/tourist55/alzheimers-dataset-4-class-of-images.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.