Computers, Materials & Continua, DOI:10.32604/cmc.2022.020571

Article

Automatic Detection and Classification of Human Knee Osteoarthritis Using Convolutional Neural Networks

1Department of Medical Equipment Technology, College of Applied Medical Sciences, Majmaah University, Al Majmaah, 11952, Saudi Arabia

2Department of Chemical Engineering, Sethu Institute of Technology, Kariapatti, 626115, Tamilnadu, India

3Department of Physical Therapy, College of Applied Medical Sciences, Majmaah University, Al Majmaah, 11952, Saudi Arabia

4Department of Nursing, College of Applied Medical Sciences, Majmaah University, Al Majmaah, 11952, Saudi Arabia

*Corresponding Author: Mohamed Yacin Sikkandar. Email: m.sikkandar@mu.edu.sa

Received: 29 May 2021; Accepted: 15 July 2021

Abstract: Knee Osteoarthritis (KOA) is a degenerative knee joint disease caused by ‘wear and tear’ of ligaments between the femur and tibial bones. Clinically, KOA is classified into four grades ranging from 1 to 4 based on the degradation of the ligament between these two bones, and it impairs movement. Identifying this space between the bones in the anterior view of a knee X-ray image is subjective and challenging. Automating this classification helps in the selection of suitable treatment processes and customized knee implants. In this research, a new automatic classification of KOA images based on an unsupervised local center of mass (LCM) segmentation method and a deep Siamese Convolutional Neural Network (CNN) is presented. First-order statistics and the gray level co-occurrence matrix (GLCM) are used to extract KOA anatomical features from segmented images. The network is trained on our clinical data for 75 iterations with automatic weight updates to improve its validation accuracy. The assessment performed on the LCM-segmented KOA images shows that our network can efficiently detect knee osteoarthritis, achieving about 93.2% accuracy along with a multi-class classification accuracy of 72.01% and a quadratic weighted Kappa of 0.86.

Keywords: Osteoarthritis; segmentation; intensity value; unsupervised; neural network

The human knee is an important part of the body that enables mobility. It is also one of the most complex articulated joints of the body. Knee Osteoarthritis (KOA) is a common chronic condition, also known as degenerative knee joint disease, caused by ‘wear and tear’ of ligaments between the femur and tibial bones [1]. KOA is one of the common causes of disability in older adults [2]. The World Health Organization (WHO), in its 2016 report on osteoarthritis, states that 9.6% of men and 18.0% of women aged over 60 years have symptomatic osteoarthritis. Among those, 80% have problems with mobility, and 25% have difficulty carrying out their day-to-day activities [3]. According to a recent study, by 2032 the proportion of the population aged ≥45 with doctor-diagnosed OA is estimated to increase from 26.6% to 29.5% (any location), from 13.8% to 15.7% for the knee, and from 5.8% to 6.9% for the hip [4]. A 2003 study on KOA in the Al-Qaseem region of Saudi Arabia reported that 13% of the adult population was affected by this disease and that its prevalence increases steeply with age, reaching 30.8% in those aged 46–55 years and 60.6% in the age group 66–75 years [5]. KOA ranks among the top five causes of disability and represents an increasing economic burden to society, primarily through lost working hours and healthcare expenses [6]. Fig. 1 pictorially represents the normal knee joint and the osteoarthritic knee joint. It is clinically very important to diagnose this joint accurately and identify the affected regions. Modalities such as X-ray, CT, and MRI are used to scan these regions to measure the wear and tear and to plan further clinical procedures such as implantation and total knee replacement.

Figure 1: Pictorial representation of the X-ray image of human normal knee joint and osteoarthritis knee joint

The diagnosis of OA is usually based on a clinical (physical) examination, the patient's medical history, and changes seen on plain X-rays. The Kellgren-Lawrence (KL) grading is the main radiography-based grading criterion, used for over 50 years for assessing the severity and progression of KOA and making treatment decisions [7]. Radiography (X-ray) imaging is the most traditional tool for the assessment of OA due to its low cost, availability, high contrast, and spatial resolution for bone and tissue [8]. Automatic detection of radiographic osteoarthritis in knee X-ray images based on the KL classification grades was described by Shamir et al., who assigned simple weights to images in order to predict the four KL grades [9]. Many researchers have developed feature extraction algorithms and artificial neural networks for the automatic classification of KOA [10–12].

Machine-learning-based approaches for the detection of knee osteoarthritis from X-rays have been surveyed by Kokkotis et al. [13]. A computer-based system [14] was created that takes body kinetics as input and produces as output not only an assessment of knee osteoarthritis but also the most discriminating parameters, along with a set of rules describing how the decision was reached. The chosen input parameters for the system were the mean value, push-off time, and slope of the vertical, anterior-posterior, and medio-lateral ground reaction forces (GRFs). Random forest regressors were used to map those parameters, through rule induction, to the degree of knee osteoarthritis. In another study [15], a straightforward and transparent computer-aided diagnosis method based on a Deep Siamese Convolutional Neural Network was employed to automatically score KOA severity on the Kellgren-Lawrence scale. Exploiting the symmetry of knee X-ray images with a Deep Siamese Convolutional Neural Network reduces the number of learnable parameters, which made the model more reliable and less sensitive to noise. Another way to identify the degree of knee osteoarthritis from radiographs using deep convolutional neural networks (CNN) is proposed in [16]. The classification accuracy was significantly improved by taking convolutional neural network models pre-trained on ImageNet and re-training them on knee osteoarthritis X-ray images. A computer-vision-based framework was proposed by Saleem et al. [17] to help radiologists by studying the radiological manifestations of osteoarthritis in knee X-ray images. Various image processing tools were applied to knee radiographs to improve the quality of the X-ray images. The knee region, i.e., the tibio-femoral joint, is extracted with template matching. Then the knee joint space width is calculated, and the radiographs are classified using neural networks based on the normal knee joint space width.
An efficient computer-aided image analysis strategy [18] based on weighted neighbor distances using a compound hierarchy of algorithms representing morphology (WND-CHARM) was used to study sets of weight-bearing knee X-rays. That study reported that WND-CHARM, a computer-based analysis tool, can be used to predict the development of osteoarthritis from normal knee X-ray images. Another methodology to automatically evaluate the degree of knee osteoarthritis from X-ray images was proposed in [19]. It involves two stages: first, automatically localizing the knee joints using a fully convolutional neural network (FCN); next, classifying the localized knee joint images. A convolutional neural network (CNN) was trained to evaluate the degree of knee osteoarthritis by optimizing a weighted combination of two loss functions. This joint training of FCN and CNN further improves the overall evaluation of knee osteoarthritis. A multimodal machine-learning-based osteoarthritis progression prediction model is presented in [20] that uses radiographic information, clinical assessment results, the patient's clinical history, anthropometric information, and, optionally, a radiologist's statement (KL grade). The significant finding of that investigation is that it is possible to predict knee osteoarthritis progression from a knee radiograph along with clinical information in a fully automatic way. A deep neural network was used for distinguishing the different types of osteoarthritis [21] from patients' statistical information in clinical and health care data. Principal component analysis with quantile transformer scaling was employed to generate features from the patients’ background clinical records and classify the degree of osteoarthritis [21]. Despite these findings, the accuracy of automatic classification is still below what is clinically expected.
The aforementioned approaches have treated the grading of KOA as an image classification problem. Features of X-ray images can be found with the help of computer-aided analysis that quantifies KOA severity and also predicts the development of knee OA. Deep learning techniques, especially CNNs, have recently shown extraordinary results in a variety of image recognition and classification tasks. Instead of direct radiographic features, this work proposes adaptively learned feature representations using a CNN, which can be better utilized for assessing KOA images and finding the degree of KOA prognosis. In this work, a CNN is developed from scratch to automatically segment and classify KOA with X-ray images as input. This involves three main steps: (1) automatic detection, (2) localization of the ROI (tibio-femoral space), and (3) extraction of features from the ROI to classify KOA. Knee joint region width is a key feature in evaluating the degree and prognosis of KOA. In many KOA detection techniques, isolation of the ROI is performed manually. Hand-drawn contours and cropping of the joint area are typically used for ROI isolation, which is cumbersome, time consuming, and highly subjective, and therefore prone to human error. To overcome this, unsupervised methods can be used to segment the ROI without training information. The Local Center of Mass (LCM) methodology is an unsupervised image segmentation method that depends on the calculation of the local center of mass [22]. In this methodology, the pixels of a one-dimensional signal are grouped, and this grouping is used in an iterative algorithm for two- and three-dimensional image segmentation. The LCM-based strategy created fewer over-segmented regions than other segmentation algorithms such as the Gaussian-mixture-model-based hidden-Markov-random-field (GMM-HMRF) model, watershed segmentation, and the simple linear iterative clustering (SLIC) algorithm.

In this work, X-ray radiographic images of KOA are segmented with the LCM method to identify the ROI. Feature extraction algorithms, namely object statistics and texture features, are then applied. The CNN is trained using the features extracted from the segmented X-ray images. These features were chosen to classify the KOA type accurately and thereby add to the reliability of the results. After training, the network is tested for its accuracy. After the training and testing phases, the CNN was used to classify KOA into five classes, namely Grade 0 (normal), Grade 1, Grade 2, Grade 3, and Grade 4. The performance of the proposed method is evaluated and compared with existing techniques. The paper is organized into five sections: the first reviews existing methodologies for the detection of KOA, the second describes the database used, the third presents the methodology carried out in this research, and the fourth and fifth give the results and the conclusion.

The digital human KOA X-ray images of 350 subjects, covering all four grades, in the age group of 50 ± 30 years and including both male and female subjects, were collected from Durma and Tumair General Hospital, Riyadh, and used in this work. The X-ray images comprise both bilateral images (radiographs of both knees) and unilateral images (radiographs of only one knee). The bilateral images are partitioned into two individual images, each containing a single knee joint. All of the obtained radiographs are in antero-posterior view. These images were manually classified by a clinical expert.

The investigation begins with preprocessing of the knee X-ray image data for all four grades of osteoarthritis, followed by segmentation using the local center of mass algorithm, from which the knee joint is isolated as presented in Fig. 2. Feature extraction and analysis are then carried out for the knee joint. Features are extracted based on first-order statistics and the GLCM.

Figure 2: Flow chart of the proposed methodology

These features are used to train an artificial neural network classifier, namely a Convolutional Neural Network. Once training is completed, the CNN can be used to classify the degrees of knee osteoarthritis shown in Fig. 3. The proposed algorithm is implemented in the MATLAB environment.

Figure 3: Knee X-ray images—(A) Normal, (B) Grade 1, (C) Grade 2, (D) Grade 3, (E) Grade 4

The input knee X-ray image is first checked for adequate quality and then smoothed with an anisotropic filter. All input images are resized to 256 × 256 to standardize all four categories of KOA.
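The two preprocessing steps can be sketched as follows. This is a minimal illustration, not the authors' MATLAB implementation: it assumes the anisotropic filter is a Perona-Malik style diffusion, and the function names and the parameters `n_iter`, `kappa`, and `step` are hypothetical choices made only for this example.

```python
import numpy as np

def anisotropic_diffusion(img, n_iter=10, kappa=30.0, step=0.2):
    """Perona-Malik diffusion (assumed filter): smooths flat regions, keeps edges."""
    out = img.astype(np.float64).copy()
    for _ in range(n_iter):
        # Replicate the border so that no intensity flows across the image edge.
        p = np.pad(out, 1, mode="edge")
        # Differences towards the four nearest neighbours.
        d_n = p[:-2, 1:-1] - out
        d_s = p[2:, 1:-1] - out
        d_w = p[1:-1, :-2] - out
        d_e = p[1:-1, 2:] - out
        # Edge-stopping conductance: close to zero where the gradient is large.
        flux = sum(np.exp(-(d / kappa) ** 2) * d for d in (d_n, d_s, d_w, d_e))
        out += step * flux
    return out

def resize_nearest(img, size=(256, 256)):
    """Nearest-neighbour resize to a fixed grid, using NumPy only."""
    rows = np.arange(size[0]) * img.shape[0] // size[0]
    cols = np.arange(size[1]) * img.shape[1] // size[1]
    return img[np.ix_(rows, cols)]

def preprocess(img):
    """Smooth first, then bring every image to the common 256 x 256 size."""
    return resize_nearest(anisotropic_diffusion(img))
```

A step size of at most 0.25 keeps the four-neighbour update stable; an interpolating resize would normally be preferred over nearest-neighbour sampling in practice.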

3.2 Segmentation Using Local Center of Mass Algorithm and Extraction of Region of Interest

The local center of mass calculation is used as an iterative algorithm for unsupervised segmentation of the KOA X-ray images. Each pixel is assigned the label of the estimated local center of mass it points to, so that pixels in the same region eventually share the same label. The pixels corresponding to a common point form a group with a specific label. Here, the labeling of a pixel uses data from the entire image, as depicted in Fig. 4. The detailed methodology of the LCM segmentation technique has already been implemented and published [23,24]. An automatic cropping algorithm is then applied to isolate the knee joint space from the segmented image using a colour scale mapping technique that highlights the knee joint space (Fig. 5). Since the tibio-femoral joint space is narrow, all the small colour-contoured segmented regions are isolated using the related colour mapping and then extracted as one single region. This region is then used in the feature extraction.

Figure 4: Segmentation of knee X-ray image using LCM

Figure 5: Isolation and extraction of ROI

3.3 Feature Extraction from Segmented ROI

3.3.1 First Order Statistics Based Approach—Histogram Based Features

First-order statistical measures are calculated from the original image values and do not consider pixel neighborhood relationships.

The histogram-based method relies on the concentration of intensity values over all or part of an image, represented as a histogram. Common parameters include moments such as mean, variance, dispersion, mean square value or average energy, entropy, skewness, and kurtosis.
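As an illustration, several of these histogram parameters can be computed directly from the normalized gray-level histogram. The sketch below assumes an integer-valued ROI whose values are all smaller than `levels`; the function name and the returned keys are hypothetical, and only a subset of the listed parameters is shown.

```python
import numpy as np

def first_order_features(roi, levels=256):
    """Histogram-based features of an integer ROI with values in [0, levels)."""
    hist = np.bincount(roi.ravel(), minlength=levels).astype(np.float64)
    p = hist / hist.sum()                      # normalized histogram p(i)
    i = np.arange(levels, dtype=np.float64)    # gray levels
    mean = np.sum(i * p)
    var = np.sum((i - mean) ** 2 * p)
    std = np.sqrt(var)
    nz = p[p > 0]                              # skip empty bins to avoid log(0)
    return {
        "mean": mean,
        "variance": var,
        "mean_square": np.sum(i ** 2 * p),     # average energy
        "entropy": -np.sum(nz * np.log2(nz)),  # in bits
        "skewness": np.sum((i - mean) ** 3 * p) / std ** 3 if std > 0 else 0.0,
        "kurtosis": np.sum((i - mean) ** 4 * p) / var ** 2 if var > 0 else 0.0,
    }
```

Because only the histogram is used, shuffling the pixels of the ROI leaves every one of these values unchanged, which is exactly why texture needs the second-order statistics.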

3.3.2 Spatial Frequencies Based Texture Analysis—Co-occurrence Matrix Based Features

The major statistical method used in image analysis is based on the joint probability distributions of pairs of pixels.

The second-order histogram is defined as the co-occurrence matrix hdθ(i, j). When divided by the total number of neighboring pixels R(d, θ) in the image, this matrix becomes the estimate of the joint probability, pdθ(i, j), of two pixels, a distance d apart along a given direction θ having particular (co-occurring) values i and j. Two forms of co-occurrence matrix exist—one symmetric where pairs separated by d and −d for a given direction θ are counted, and the other not symmetric where only pairs separated by distance d are counted.

This yields a square matrix of dimension equal to the number of intensity levels in the image, for each distance d and orientation θ. Due to the intensive nature of computations involved, often only the distances d = 1 and 2 pixels with angles θ = 0°, 45°, 90°, and 135° are considered.
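The construction described above can be sketched in a few lines. This is an illustrative implementation under stated assumptions, not the authors' code: the image is assumed to hold integer gray levels smaller than `levels`, the angle convention (45° pointing up and to the right) is one common choice, and the names `glcm` and `OFFSETS` are hypothetical.

```python
import numpy as np

# Unit pixel offsets (row, col) for the four directions; rows grow downward,
# so 45 degrees points up and to the right.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def glcm(img, d=1, theta=0, levels=256, symmetric=True):
    """Normalized co-occurrence matrix p_{d,theta}(i, j) of an integer image."""
    dr, dc = (d * o for o in OFFSETS[theta])
    h, w = img.shape
    # Overlapping windows: `a` holds reference pixels, `b` their neighbours.
    r0, r1 = max(0, -dr), min(h, h - dr)
    c0, c1 = max(0, -dc), min(w, w - dc)
    a = img[r0:r1, c0:c1].ravel()
    b = img[r0 + dr:r1 + dr, c0 + dc:c1 + dc].ravel()
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (a, b), 1.0)             # h_{d,theta}(i, j)
    if symmetric:
        counts = counts + counts.T             # also count pairs separated by -d
    return counts / counts.sum()               # divide by R(d, theta)
```

For a 256-level image each matrix has 256 × 256 entries per (d, θ) pair, which is why the gray levels are often quantized to fewer levels and only a few distances and angles are evaluated.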

Rather than using a gray level co-occurrence matrix directly to gauge the texture of an image, the matrix can be converted into simpler scalar measures: inertia, correlation, entropy, and homogeneity, as given in Eqs. (1)–(4).
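Since Eqs. (1)–(4) are not reproduced in this text, the sketch below assumes the commonly used definitions of the four measures for a normalized co-occurrence matrix $p(i, j)$: inertia $\sum_{i,j}(i-j)^2 p(i,j)$, correlation $\sum_{i,j}(i-\mu_i)(j-\mu_j)p(i,j)/(\sigma_i\sigma_j)$, entropy $-\sum_{i,j}p(i,j)\log_2 p(i,j)$, and homogeneity $\sum_{i,j}p(i,j)/(1+(i-j)^2)$. The paper's own equations may differ in detail (for example, some toolboxes use $1+|i-j|$ in the homogeneity denominator), and the function name is hypothetical.

```python
import numpy as np

def glcm_measures(p):
    """Inertia, correlation, entropy and homogeneity of a normalized GLCM `p`."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)          # marginal means
    sd_i = np.sqrt(np.sum((i - mu_i) ** 2 * p))        # marginal std. deviations
    sd_j = np.sqrt(np.sum((j - mu_j) ** 2 * p))
    nz = p[p > 0]                                      # skip zeros to avoid log(0)
    if sd_i > 0 and sd_j > 0:
        correlation = np.sum((i - mu_i) * (j - mu_j) * p) / (sd_i * sd_j)
    else:
        correlation = 0.0                              # undefined for a constant image
    return {
        "inertia": np.sum((i - j) ** 2 * p),
        "correlation": correlation,
        "entropy": -np.sum(nz * np.log2(nz)),
        "homogeneity": np.sum(p / (1.0 + (i - j) ** 2)),
    }
```

Inertia grows when co-occurring gray levels differ strongly, while homogeneity grows when the mass of the matrix lies near its diagonal, so the two tend to move in opposite directions.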

3.4 Training the Neural Network with Obtained Features

In machine learning, a convolutional neural network (CNN, or ConvNet) is a class of deep, feed-forward artificial neural networks that has been applied effectively to image analysis. CNNs use a variation of multilayer perceptrons designed to minimize preprocessing.

Here, the structure of the CNN includes two types of layers. The first is the feature extraction layer, where the input of each neuron is connected to the local receptive fields of the previous layer and extracts the required parameters. Once the parameters are isolated, the positional relationship between them and other parameters is also resolved. The other is the feature map layer; each computing layer of the network is composed of multiple feature maps. The feature maps use the sigmoid function as the activation function. Finally, there is the output layer, whose size corresponds to the number of output classes. In this investigation, the network has one input layer, four hidden layers (feature map layers) composed of 8, 16, 32, and 64 neurons respectively, and an output layer with five neurons, corresponding to Grades 1 through 4 and the normal class (Grade 0). The CNN is trained using 280 X-ray images (80% of the X-ray dataset) from the database for 75 iterations with automatic weight updates to improve its validation accuracy. Fig. 6 shows the training and validation of the CNN on the features obtained from the 280 segmented X-ray images. The validation accuracy obtained is 93.2%, and the learning rate is 0.01.
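To make the stated layer sizes concrete, the sketch below runs a forward pass through a network with hidden widths 8, 16, 32, and 64, sigmoid activations, and five outputs. It is a fully connected stand-in acting on a feature vector, not the authors' CNN: the input length, the weight initialization, the softmax output, and all names are assumptions made for illustration, and the weights are untrained.

```python
import numpy as np

LAYER_WIDTHS = [8, 16, 32, 64]   # hidden layer sizes stated in the text
N_CLASSES = 5                    # Grade 0 (normal) through Grade 4

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(n_features, rng):
    """Small random weights and zero biases for every layer (untrained)."""
    sizes = [n_features] + LAYER_WIDTHS + [N_CLASSES]
    return [(rng.normal(0.0, 0.1, size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(x, params):
    """Sigmoid hidden layers followed by a softmax over the five grades."""
    for w, b in params[:-1]:
        x = sigmoid(x @ w + b)
    w, b = params[-1]
    z = x @ w + b
    e = np.exp(z - z.max())      # subtract the maximum for numerical stability
    return e / e.sum()
```

Calling `forward(features, init_params(len(features), np.random.default_rng(0)))` returns five class probabilities; training would then adjust the weights, for instance by gradient descent with the learning rate of 0.01 mentioned above.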

Figure 6: Training results of the CNN for KOA classification

Segmentation is followed by the isolation and extraction of the ROI, i.e., the tibio-femoral joint space, which is done automatically by the algorithm. Fig. 7 shows the ROI for the normal case and for KOA Grades 1 through 4.

Figure 7: Isolated knee joint space and extracted region of interests for all cases

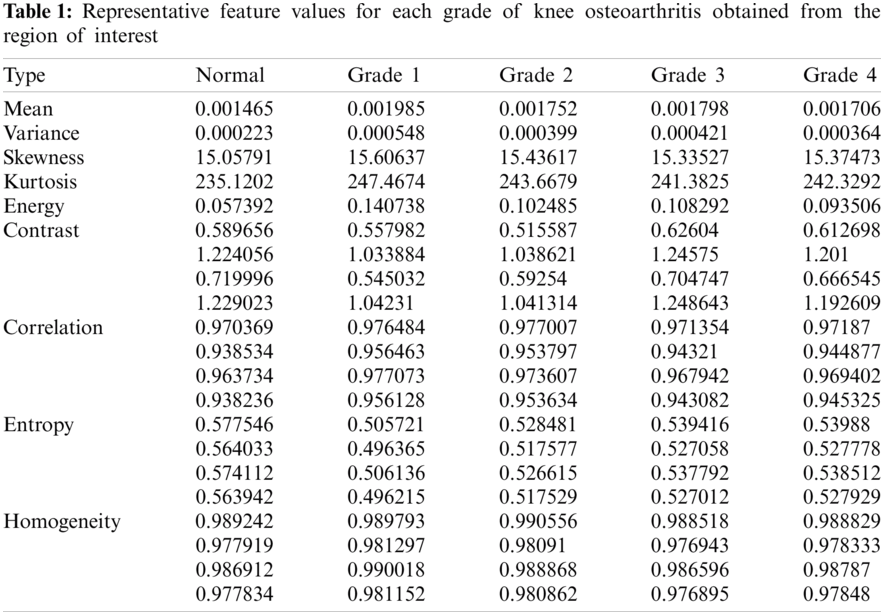

From the ROI, first-order statistical features and GLCM-based features were extracted. Tab. 1 lists the feature values obtained for the different grades of knee osteoarthritis, which the CNN uses to perform the classification.

4.1 Testing the CNN for Classification of Knee Osteoarthritis

Testing was done using 70 X-ray images (20% of the X-ray dataset) to estimate the final performance of the CNN. The trained and tested CNN was then used to identify the degree of knee osteoarthritis in test X-ray images.

The CNN classifier was evaluated on the knee radiograph database to study the performance of the proposed algorithm. The model was designed to automatically localize and isolate the knee joints from radiographs. The database was split into training (80%) and test (20%) sets. The CNN was trained for 75 iterations, as depicted in Fig. 6, with automatic weight updates and a learning rate of 0.01. Considering the morphological variations of the knee joints, the automatic detection with this CNN was found to be highly accurate, with a validation accuracy of 93.2%. Given that the proposed method is fully automated, the computation time of this model is acceptable. The trained and tested CNN was then able to classify a knee X-ray image and identify the grade of osteoarthritis with the help of the extracted features. Fig. 8 depicts the classification results and the feature values obtained for a test X-ray image.

Figure 8: Classification results of the CNN for KOA and its obtained features

To evaluate the performance of the network, the Kappa coefficient and the multi-class accuracy were also calculated. This methodology yielded a multi-class classification accuracy of 72.01% and a quadratic weighted Kappa of 0.86. These results highlight that this network is able to classify KOA accurately from X-ray images.
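The two metrics can be computed from the true and predicted grades as sketched below. This follows the standard definition of Cohen's kappa with quadratic disagreement weights; the function names are hypothetical, and grades are assumed to be encoded as integers 0 to 4.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes=5):
    """Counts of (true grade, predicted grade) pairs."""
    cm = np.zeros((n_classes, n_classes), dtype=np.float64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1.0)
    return cm

def multiclass_accuracy(y_true, y_pred):
    """Fraction of images whose predicted grade equals the true grade."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

def quadratic_weighted_kappa(y_true, y_pred, n_classes=5):
    """Cohen's kappa with weights (i - j)^2 / (n_classes - 1)^2."""
    observed = confusion_matrix(y_true, y_pred, n_classes)
    n = observed.sum()
    # Agreement expected by chance from the two marginal grade distributions.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / n
    i, j = np.indices((n_classes, n_classes))
    weights = (i - j) ** 2 / (n_classes - 1) ** 2
    return 1.0 - np.sum(weights * observed) / np.sum(weights * expected)
```

Because the quadratic weights penalize a confusion between adjacent grades far less than one between distant grades, a classifier whose errors are mostly off by one grade can have a high weighted Kappa even when its exact-match accuracy is moderate.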

The investigation shows that it is possible to identify the grade of KOA based on the features extracted from the segmented radiograph images. In comparison to other publications, this method uses specific features related to the ROI, which can be correlated with the degree of KOA. Typically, the isolation of the tibio-femoral joint (ROI) is a tedious task due to its irregular boundaries, and performing the segmentation manually is quite difficult. Using an unsupervised segmentation algorithm, namely the Local Center of Mass algorithm, to segment the ROI has therefore proven to be highly effective. This makes it easier to extract the structure metrics, i.e., the features from the knee joint space. Feature extraction from the region of interest has been incorporated to improve the predictive capabilities of the CNN and to increase its computational efficiency.

The assessment performed on the local center of mass-based segmented knee X-ray images shows that this neural network can efficiently detect knee osteoarthritis, achieving about 93.20% accuracy. Compared to previous research and methods, this algorithm has the highest multi-class classification accuracy, 72.01%, with a quadratic weighted Kappa of 0.86. Thus, the major finding of this investigation is that it is possible to predict KOA with high levels of accuracy. A limitation of this artificial neural network is that it requires large datasets for training, and its decision process is often considered a black box, meaning it is not easy to understand how a decision is made. The model was trained using X-ray images that were not categorized according to the age of the subjects. A future extension of this work could include the age of the subjects, which would also influence the occurrence of knee OA. Despite these limitations, the findings of this work imply that this feature-based analysis method can use X-ray images to detect features in the radiograph that are indicative of KOA or its progression and can thus be used for diagnostic purposes. This paper proposed an automated strategy to detect knee osteoarthritis from X-ray images. The proposed approach has several advantages. It can help subjects experiencing knee pain receive a quicker diagnosis. Medical services in general will also benefit from reduced costs of routine work. Knee radiographs can thus be analyzed much faster, and the algorithm decreases the risk of human error in diagnosis.

Funding Statement: The authors extend their appreciation to the Deputyship of Research and Innovation, Ministry of Education in Saudi Arabia for funding this research work through the Project Number IFP-2020-42.

Conflicts of Interest: The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

1. I. Haq, E. Murphy and J. Dacre, “Osteoarthritis,” Postgrad Medical Journal, vol. 79, pp. 377–383, 2003.

2. W. Laupattarakasem, M. Laopaiboon, P. Laupattarakasem and C. Sumananont, “Arthroscopic debridement for knee osteoarthritis,” Cochrane Database of System Review, vol. 1, no. CD005118, pp. 1–18, 2008.

3. J. C. Mora, R. Przkora and Y. C. Almeida, “Knee osteoarthritis: Pathophysiology and current treatment modalities,” Journal of Pain Research, vol. 11, pp. 2189–2196, 2018.

4. A. Turkiewicz, I. F. Petersson, J. Bjork, G. Hawker, L. E. Dahlberg et al., “Current and future impact of osteoarthritis on health care: A population-based study with projections to year 2032,” Osteoarthritis and Cartilage, vol. 22, no. 11, pp. 1826–1832, 2014.

5. A. S. Al-Arfaj, S. R. Alballa and S. A. Saleh, “Knee osteoarthritis in Al-Qaseem,” Saudi Medical Journal, vol. 24, no. 3, pp. 291–293, 2003, Saudi Arabia.

6. A. Maetzel, L. C. Li, J. Pencharz, G. Tomlinson and C. Bombardier, “The economic burden associated with osteoarthritis, rheumatoid arthritis, and hypertension: A comparative study,” Annals of the Rheumatic Diseases, vol. 63, no. 4, pp. 395–401, 2004.

7. J. H. Kellgren and J. S. Lawrence, “Radiological assessment of osteoarthrosis,” Annals of the Rheumatic Disease, vol. 16, no. 4, pp. 494–502, 1957.

8. H. Mingqian and E. Mark, “The role of radiology in the evolution of the understanding of articular disease,” Radiology, vol. 273, no. 2, pp. 1–22, 2014.

9. L. Shamir, S. M. Ling, W. W. Scott, A. Bos, N. Orlov et al., “Knee X-ray image analysis method for automated detection of osteoarthritis,” IEEE Transactions on Biomedical Engineering, vol. 56, no. 2, pp. 407–415, 2008.

10. D. Deokar, D. Dipali and G. Patil, “Effective feature extraction based automatic knee osteoarthritis detection and classification using neural network,” International Journal of Engineering and Techniques, vol. 1, no. 3, pp. 1–6, 2015.

11. J. Jayanthi, T. Jayasankar, N. Krishnaraj, N. B. Prakash, A. Sagai Francis Britto et al., “An intelligent particle swarm optimization with convolutional neural network for diabetic retinopathy classification model,” Journal of Medical Imaging and Health Informatics, vol. 11, no. 3, pp. 803–809, 2021.

12. D. Venugopal, T. Jayasankar, M. Y. Sikkandar, M. I. Waly, I. V. Pustokhina et al., “A novel deep neural network for intracranial haemorrhage detection and classification,” Computers Materials & Continua, vol. 68, no. 3, pp. 2877–2893, 2021.

13. C. Kokkotis, S. Moustakidis, E. Papageorgiou, G. Giakas and D. E. Tsaopoulos, “Machine learning in knee osteoarthritis: A review,” Osteoarthritis and Cartilage Open, vol. 2, no. 3, pp. 1–13, 2020.

14. M. Kotti, L. D. Duffell, A. A. Faisal and A. H. McGregor, “Detecting knee osteoarthritis and its discriminating parameters using random forests,” Medical Engineering & Physics, vol. 43, pp. 19–29, 2017.

15. A. Tiulpin, J. Thevenot, E. Rahtu, P. Lehenkari and S. Saarakkala, “Automatic knee osteoarthritis diagnosis from plain radiographs: A deep learning-based approach,” Scientific Reports, vol. 8, no. 1, pp. 1–10, 2018.

16. A. Joseph, K. McGuinness, E. Noel, O. Connor and K. Moran, “Quantifying radiographic knee osteoarthritis severity using deep convolutional neural networks,” in Proc. 23rd Int. Conf. on Pattern Recognition, Cancun, Mexico, pp. 1195–1200, 2016.

17. M. Saleem, M. S. Farid, S. Saleem and M. H. Khan, “X-ray image analysis for automated knee osteoarthritis detection,” Signal, Image and Video Processing, vol. 14, pp. 1079–1087, 2020.

18. M. Shari, M. Ling, W. Scott, M. Hochberg, L. Ferrucci et al., “Early detection of radiographic knee osteoarthritis using computer-aided analysis,” Osteoarthritis and Cartilage, vol. 17, no. 10, pp. 1307–1312, 2009.

19. J. Antony, K. McGuinness, K. Moran and N. E. O'Connor, “Automatic detection of knee joints and quantification of knee osteoarthritis severity using convolutional neural networks,” in Int. Conf. on Machine Learning and Data Mining in Pattern Recognition, New York, USA, pp. 376–390, 2017.

20. T. Aleksei, S. Klein, M. A. Bierma-Zeinstra, J. Thevenot, E. Rahtu et al., “Multimodal machine learning-based knee osteoarthritis progression prediction from plain radiographs and clinical data,” Scientific Reports, vol. 9, no. 1, pp. 1–11, 2019.

21. L. Jihye, J. Kim and S. Cheon, “A deep neural network-based method for early detection of osteoarthritis using statistical data,” International Journal of Environmental Research and Public Health, vol. 16, no. 7, pp. 1281, 2019.

22. A. Mukesh, G. Harisinghani, R. Weissleder and B. Fischl, “Unsupervised medical image segmentation based on the local center of mass,” Scientific Reports, vol. 8, no. 1, pp. 1–8, 2018.

23. Y. Li, X. Ning and Q. Lyu, “Construction of a knee osteoarthritis diagnostic system based on X-ray image processing,” Cluster Computing, vol. 22, no. 6, pp. 15533–15540, 2019.

24. I. Aganj, M. G. Harisinghani, R. Weissleder and B. Fischl, “Unsupervised medical image segmentation based on the local center of mass,” Scientific Reports, vol. 8, no. 1, pp. 1–8, 2018.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.