DOI:10.32604/cmc.2022.020265

Computers, Materials & Continua

Article

An Efficient CNN-Based Hybrid Classification and Segmentation Approach for COVID-19 Detection

1Department of Information Technology, College of Computer and Information Sciences, Princess Nourah Bint Abdulrahman University, Riyadh 84428, Saudi Arabia

2Department of Electronics and Electrical Communications, Faculty of Electronic Engineering, Menoufia University, Menouf 32952, Egypt

3Department of Industrial Electronics and Control Engineering, Faculty of Electronic Engineering, Menoufia University, Menouf 32952, Egypt

4Department of Electronics and Communications Engineering, Faculty of Engineering, Zagazig University, Zagazig, Sharqia 44519, Egypt

*Corresponding author: Abeer D. Algarni. Email: adalqarni@pnu.edu.sa

Received: 18 May 2021; Accepted: 29 June 2021

Abstract: COVID-19 continues to spread rapidly around the world. It has significantly affected public health, the world economy, and people's lives. Hence, there is a need to speed up diagnosis and precautionary measures for COVID-19 patients. With the explosion of this pandemic, automated diagnosis tools based on medical images are needed to assist specialists. This paper presents a hybrid Convolutional Neural Network (CNN)-based classification and segmentation approach for COVID-19 detection from Computed Tomography (CT) images. The proposed approach is employed to classify and segment COVID-19, pneumonia, and normal CT images. The classification stage is applied first to detect and classify the input medical CT images. Then, the segmentation stage is performed to distinguish between pneumonia and COVID-19 CT images. The classification stage is implemented with a simple and efficient CNN deep learning model. This model comprises four convolutional (Conv) layers, four batch normalization layers, and four Rectified Linear Units (ReLUs). The Conv layers use filters with sizes of 64, 32, 16, and 8. The four max-pooling layers employ a 2 × 2 window and a stride of 2. A Fully-Connected (FC) layer followed by a soft-max activation function performs the detection in the classification stage. For segmentation, the Simplified Pulse Coupled Neural Network (SPCNN) is utilized in the proposed hybrid approach. The proposed segmentation approach is based on salient object detection to accurately localize the COVID-19 or pneumonia region. In summary, a CNN-based classification process can serve as the first stage of a highly effective automated diagnosis system. Once the images are accepted by the system, they can be further processed through a segmentation stage that isolates the regions of interest. These regions can then be assessed both automatically and by experts. This strategy saves much of the time and effort of specialists during the COVID-19 pandemic. The proposed classification approach is evaluated in different scenarios, using 80%, 70%, or 60% of the data for training and 20%, 30%, or 40% of the data for testing, respectively. In these scenarios, the proposed approach achieves classification accuracies of 100%, 99.45%, and 98.55%, respectively. Thus, the obtained results demonstrate the efficacy of the proposed approach for assisting specialists in automated medical diagnosis services.

Keywords: COVID-19; segmentation; classification; CNN; SPCNN; CT images

COVID-19 emerged in China in late 2019, and it is still spreading very rapidly. Hence, researchers have been searching for effective treatments for this disease. COVID-19 has a particular mode of spreading: it is transmitted mainly through the small droplets released when infected persons sneeze. Another factor that contributes to its wide spread is simply touching other persons or surfaces contaminated with virus particles [1]. The virus can then be transferred from the hand to the nose or mouth and reach the lungs.

The explosion of infections all over the world has led to a very large number of infected persons with severe symptoms. This global emergency requires cooperation between the medical and engineering communities to develop new tools for the efficient diagnosis of COVID-19 cases. The medical community is interested in feasible diagnosis tools for COVID-19 cases, and the severity of the symptoms also needs to be determined. This cannot be achieved without medical imaging combined with well-designed engineering solutions that allow automated determination of the viral infection [2,3].

Several imaging techniques are used to acquire chest images of suspected COVID-19 cases. Chest X-ray and Computed Tomography (CT) scans are requested for all suspected COVID-19 patients. Generally, X-ray imaging depends on the interaction between photons and electrons. An X-ray beam is directed towards the lungs, and tissues of different densities absorb it to different degrees. X-ray imaging has some advantages, such as flexibility, low cost, and fast image acquisition. Unfortunately, X-ray images are not suitable for determining the severity of the viral infection, as they offer limited detail by nature. This is attributed to the working principle of the X-ray imaging system, which records overlapping projections [4,5].

Another imaging modality that can be used to make decisions about suspected COVID-19 cases is CT imaging. Generally, CT images are generated by collecting several X-ray projections at different angles, and different reconstruction algorithms are used to generate the CT images from these projections. Some artifacts, such as ringing, high attenuation around edges, ghosting, and stitching, may appear in the obtained CT images. These artifacts call for pre-processing prior to the automated diagnosis process. Classification into normal, pneumonia, and COVID-19 cases can be performed on CT images, and it is expected to yield better results than those obtained with X-ray images. The reason is that many CT images of COVID-19 cases contain ground-glass patterned areas, and determining these areas accurately helps the diagnosis process. In addition, CT scans can produce an integrated view of the lungs, which makes it possible to rate the degree of COVID-19 infection [6,7].
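As a worked illustration of the two preceding paragraphs, the standard textbook relations below (not formulas taken from this paper) link the measured X-ray intensity to the tissue attenuation coefficient μ(x, y) through the Beer–Lambert law, define a CT projection at angle θ as a line integral of μ, and give filtered back-projection as one classical reconstruction algorithm:

$$
\begin{aligned}
I &= I_0 \exp\!\Big(-\int_{L} \mu(s)\,ds\Big)
  \quad\Longrightarrow\quad
  p = -\ln\frac{I}{I_0} = \int_{L} \mu(s)\,ds, \\[4pt]
p_\theta(t) &= \iint \mu(x,y)\,\delta(x\cos\theta + y\sin\theta - t)\,dx\,dy, \\[4pt]
\mu(x,y) &= \int_{0}^{\pi} q_\theta(x\cos\theta + y\sin\theta)\,d\theta,
  \qquad
  q_\theta(t) = \int_{-\infty}^{\infty} |w|\,P_\theta(w)\,e^{\,j2\pi w t}\,dw,
\end{aligned}
$$

where P_θ(w) is the 1-D Fourier transform of p_θ(t) and |w| is the ramp filter. A single radiograph records only one set of such line integrals, so structures along each ray are superimposed; CT combines projections from many angles to recover μ(x, y) slice by slice.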

Correct and rapid diagnosis of COVID-19 is essential for effective patient care, successful treatment planning, and quarantine precautions. Deep Learning (DL) models are an excellent option for medical image classification. They extract the main features of medical images automatically and then perform the classification. The basic idea is to use Conv layers to create feature maps: filter masks with different orientations are convolved with the input to produce these maps. The maps are then processed with pooling operations for feature reduction. The objective is to use the most representative features for image classification and to eliminate manual feature extraction from the whole classification process.

As the depth of the CNN model increases, the merits of DL are better exploited, and more appropriate features are used in the classification process. DL models are well suited to medical images. Several DL models have been introduced, and others are under development, for classifying medical images of different natures acquired with different imaging modalities. For example, the classification of confocal microscopy retinal images with DL models has been considered in [8–10]. A summary of the existing directions for classifying different types of medical images with DL was presented in [11]. In addition, the authors of [12,13] investigated the classification of lung images with DL models.

The main contribution of this work is a hybrid segmentation and classification approach for COVID-19 CT images. It is employed to classify and segment COVID-19, pneumonia, and normal CT images. The suggested classification approach, based on a simple CNN DL model, differentiates between the CT image classes. The suggested SPCNN-based segmentation approach is then performed to distinguish between pneumonia and COVID-19 CT images, and it delineates the infected region precisely. The proposed hybrid approach performs well in both the segmentation and classification processes, achieving high classification accuracies for different training and testing ratios.

The remainder of this work is arranged as follows. The related studies are reviewed in Section 2. The proposed hybrid CNN-based segmentation and classification approach is introduced in Section 3. The utilized medical dataset is described in Section 4. Comprehensive simulation tests to validate the proposed hybrid approach are given in Section 5. Finally, the concluding remarks and recommendations for future research work in this area are summarized in Section 6.

Joshi et al. [14] presented a DL-based technique for detecting COVID-19 from chest X-ray images. The image samples are pre-processed, cropped to eliminate redundant regions, and resized to allow successful classification. In addition, augmentation is performed to expand the amount of training data; data augmentation is common in DL applications trained on limited-size data. The DarkNet-53 DL model was adopted in [14] to perform the classification.

Dastider et al. [15] designed a hybrid CNN-LSTM model for efficient COVID-19 detection from lung ultrasound images. A modified CNN model is implemented for feature extraction and classification through an auto-encoder strategy. In parallel, an LSTM model is used for the same purpose. The final classification result is obtained through a voting strategy applied to the feature vectors obtained from both classifiers.

Rohila et al. [16] presented a COVID-19 detection method based on DL and segmentation of CT images. This work presented ReCOV-101, a deep CNN model developed from ResNet-101, for classification. A classification accuracy of 94.9% was achieved without segmentation, and an accuracy of 90.1% was achieved with segmentation.

Pathak et al. [17] presented a DL model for COVID-19 classification from CT images based on transfer learning. Two deep transfer learning models were introduced and applied within the classification scenario. ResNet-50 was used as a feature extraction tool, while a model pre-trained on ImageNet was employed for training with COVID-19 images within the classification model. This work achieved training and testing accuracies of 96.2264% and 93.0189%, respectively.

Wang et al. [18] introduced an Artificial Intelligence (AI) system for CT image analysis to support decisions about infected cases. The workflow begins with data collection to obtain balanced samples for training. Data annotation is then performed to prepare suitable labeled data, and the model is trained on these data with high accuracy. Model assessment is performed to validate the model, which, once validated, can be transferred to the deployment stage.

Vidal et al. [19] designed a multi-stage model based on transfer learning for lung segmentation and localization in X-ray images. It consists of two stages. In the first stage, the convolution filters are adapted for operation on MR images. The second stage refines the weights for radiographs obtained from portable devices. Gao et al. [20] proposed a dual-branch network for segmentation and classification for COVID-19 detection from CT images. Firstly, a U-Net is implemented for lung segmentation to determine the lung contours. Then, classification is implemented in multi-level and multi-slice scenarios, and the final classification results are obtained through decision fusion.

He et al. [21] introduced a segmentation and classification framework for COVID-19 cases from 3D CT images. Several pre-processing steps are applied to the 3D CT images, multiple decisions are obtained with the M2UNet proposed by the authors, and decision fusion is implemented to obtain the final classification result. Sethy et al. [22] used the AlexNet, VGG16, and VGG19 pre-trained models for feature extraction from medical images and adopted a Support Vector Machine (SVM) for classification. This model succeeded in classifying pneumonia from chest X-ray images.

3 Proposed Hybrid Classification-Segmentation Approach for COVID-19 Detection

Deep learning is a relatively new AI strategy that can be used efficiently with medical images. CNN-based DL is widely used for the segmentation and classification of medical images. Generally, a CNN has several layers. Conv layers extract features, and activation functions are applied to obtain feature maps. Pooling layers are then used for feature reduction. The output features of the pooling layer are fed to the FC layer, which performs the classification and gives the final decision. The network is trained with the adaptive back-propagation algorithm. The proposed approach is employed to classify and segment CT images for the identification of COVID-19, pneumonia, and normal cases. The classification stage is performed first to classify all cases from the CT images. Then, the segmentation stage is performed to differentiate between COVID-19 and pneumonia CT images.
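The decision made by the FC layer with soft-max, and the back-propagated training signal, can be illustrated with a short NumPy sketch. It is an assumption-laden toy, not the authors' code: it trains only a linear FC layer on fixed, hypothetical feature vectors with plain gradient descent (the specific adaptive optimizer is not stated), and relies on the standard result that the gradient of the soft-max cross-entropy loss with respect to the logits is the predicted probabilities minus the one-hot labels. The function names are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Row-wise soft-max; subtracting the row maximum avoids overflow."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(probs, labels):
    """Mean cross-entropy loss for integer class labels."""
    n = labels.shape[0]
    return -np.mean(np.log(probs[np.arange(n), labels] + 1e-12))

def train_step(W, b, X, labels, lr=0.1):
    """One back-propagation step for an FC layer followed by soft-max.

    X: (n, d) feature vectors, W: (d, k) weights, b: (k,) biases.
    Returns the updated parameters and the loss before the update.
    """
    n, k = X.shape[0], W.shape[1]
    probs = softmax(X @ W + b)
    loss = cross_entropy(probs, labels)
    grad_logits = (probs - np.eye(k)[labels]) / n  # dL/dlogits for soft-max + CE
    W = W - lr * X.T @ grad_logits                 # chain rule: logits = X W + b
    b = b - lr * grad_logits.sum(axis=0)
    return W, b, loss

# Toy usage: hypothetical 4-D features and 3 classes (e.g. COVID-19/pneumonia/normal).
rng = np.random.default_rng(0)
X = rng.normal(size=(90, 4))
labels = np.argmax(X[:, :3], axis=1)
W, b = np.zeros((4, 3)), np.zeros(3)
losses = []
for _ in range(50):
    W, b, loss = train_step(W, b, X, labels)
    losses.append(loss)
```

With zero initial weights the first loss equals ln 3, and it decreases as the FC weights are updated.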

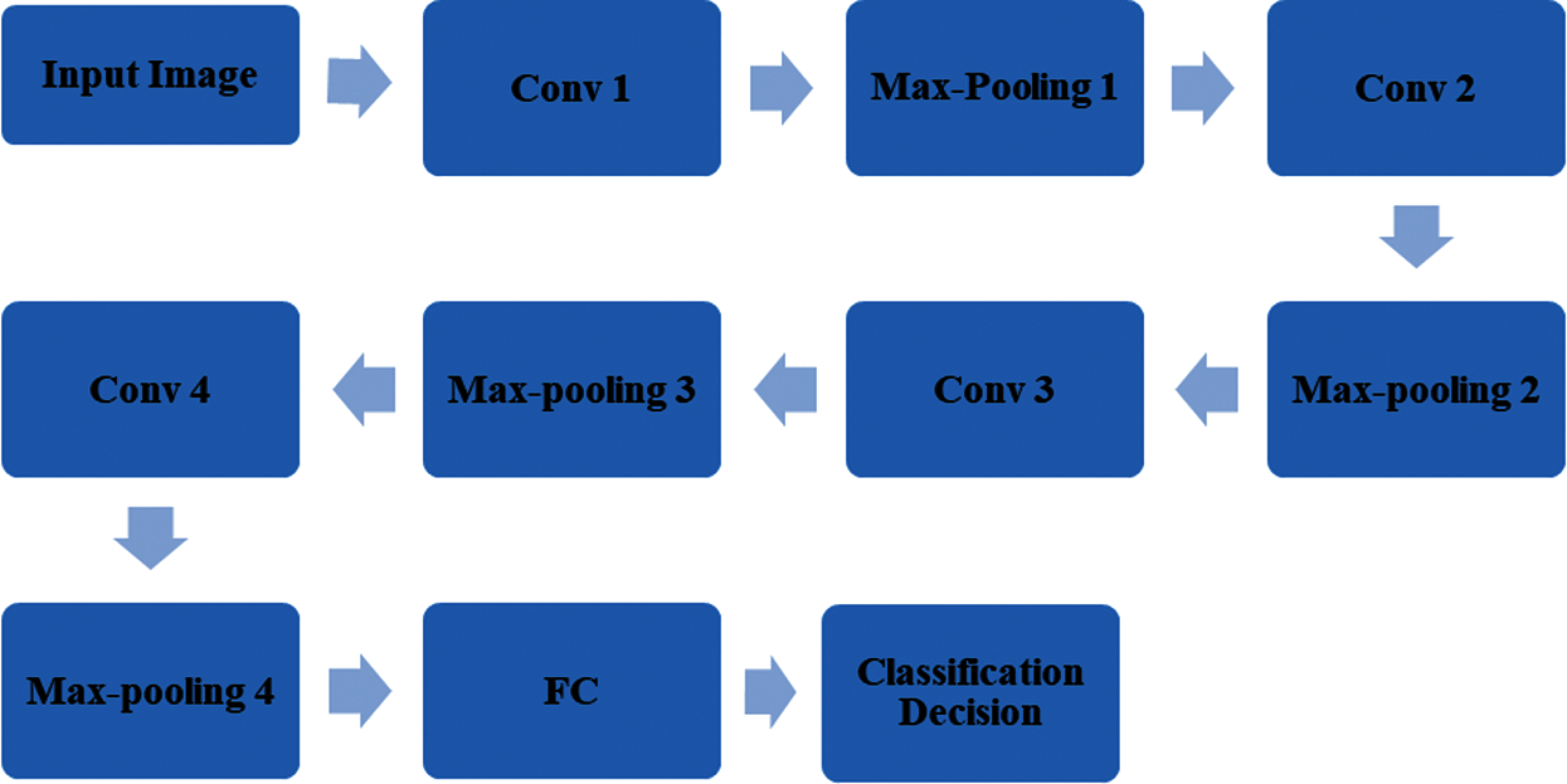

The classification stage is based on a simple and efficient CNN model developed from scratch. The proposed CNN model is composed of four Conv layers, four batch normalization layers, and four ReLU layers. The filter dimensions of the Conv layers are 8, 16, 32, and 64, in sequence. Four max-pooling layers are implemented with a stride of 2 and a window size of 2 × 2. The classification layer consists of an FC network and a soft-max activation function. The size of the input image is 512 × 512. The SPCNN is applied for the segmentation process. The proposed segmentation approach is based on salient object detection to accurately localize the COVID-19 or pneumonia regions. Fig. 1 illustrates the building blocks of the proposed hybrid approach.

Figure 1: Block diagram of the proposed hybrid approach
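The forward pass of the classification CNN described above can be sketched in NumPy. This is a hedged sketch under stated assumptions, not the authors' implementation: 8, 16, 32, and 64 are read as the numbers of filters, the kernels are assumed to be 3 × 3 with zero "same" padding, batch normalization uses the statistics of the single input image with unit scale and zero shift, and the weights are random (He-initialized) rather than trained. All names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

FILTERS = (8, 16, 32, 64)  # assumed: number of filters in Conv layers 1-4
KERNEL = 3                 # assumed: 3 x 3 kernels (kernel size not stated)
N_CLASSES = 3              # COVID-19, pneumonia, normal

def conv2d_same(x, w):
    """Zero-padded 'same' 2-D cross-correlation, as computed by CNN Conv layers.

    x: (H, W, C_in) feature map, w: (k, k, C_in, C_out) kernels -> (H, W, C_out).
    """
    pad = w.shape[0] // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    win = sliding_window_view(xp, w.shape[:2], axis=(0, 1))  # (H, W, C_in, k, k)
    return np.tensordot(win, w, axes=([2, 3, 4], [2, 0, 1]))

def batch_norm(x, eps=1e-5):
    """Per-channel normalization using this image's statistics (gamma=1, beta=0)."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def relu(x):
    return np.maximum(x, 0.0)

def max_pool_2x2(x):
    """2 x 2 window, stride 2: keep the largest activation in each block."""
    h, w, c = x.shape
    x = x[: h - h % 2, : w - w % 2]
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def init_params(rng, in_size=512, in_channels=1):
    """Random He-initialized Conv kernels and a small random FC weight matrix."""
    conv_weights, c = [], in_channels
    for f in FILTERS:
        std = np.sqrt(2.0 / (KERNEL * KERNEL * c))
        conv_weights.append(rng.normal(0.0, std, (KERNEL, KERNEL, c, f)))
        c = f
    side = in_size // 2 ** len(FILTERS)  # 512 -> 32 after four poolings
    fc_weights = rng.normal(0.0, 0.01, (side * side * c, N_CLASSES))
    return conv_weights, fc_weights

def forward(image, conv_weights, fc_weights):
    """Four Conv -> BN -> ReLU -> max-pool blocks, then FC + soft-max."""
    x = image[..., None] if image.ndim == 2 else image
    for w in conv_weights:
        x = max_pool_2x2(relu(batch_norm(conv2d_same(x, w))))
    return softmax(x.reshape(-1) @ fc_weights)

rng = np.random.default_rng(0)
conv_w, fc_w = init_params(rng)
probs = forward(rng.random((512, 512)), conv_w, fc_w)  # untrained: arbitrary scores
```

The output is a probability vector over the three classes; in the actual approach, the kernels and FC weights would be learned by back-propagation.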

The Conv layer is a significant part of the CNN that is used to extract features from the input images. It uses different convolution filter masks with different characteristics and orientations. These masks work based on the concepts of matched filter theory: each mask responds most strongly to the spatial patterns that match it. Non-linearity is essential for a classification model to succeed, which is why non-linear activation functions are required in CNNs. In addition, a pooling strategy is required to reduce the number of features while maintaining the effective features to be used subsequently [23,24]. The pooling layer output is fed into the FC layer, which is responsible for obtaining the final decision. As illustrated in Fig. 2, all these operations are included in the proposed approach. Maximum pooling is adopted. For a pooling size S, the output of the pooling operation at the jth local receptive field of the ith pooling layer is the maximum activation within that field:

$$p_j^{(i)} = \max_{r \in R_j} a_r^{(i)},$$

where R_j denotes the S positions of the jth local receptive field and a_r^{(i)} is the activation entering the ith pooling layer at position r.

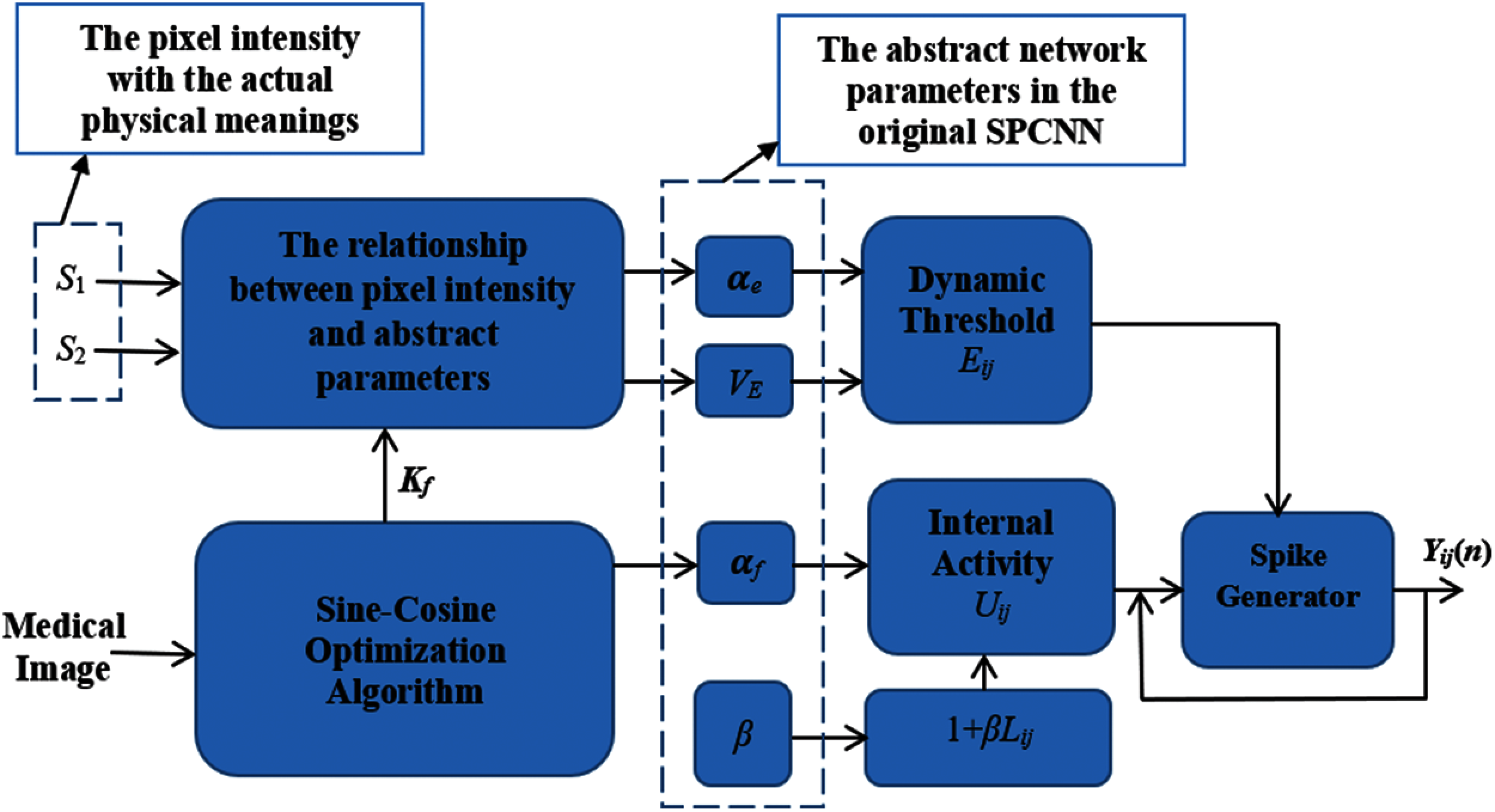
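The matched-filter view of a Conv mask can be checked numerically: cross-correlating an image with a mask gives the largest response where the image contains the mask's pattern. The following NumPy sketch uses a hypothetical cross-shaped 3 × 3 pattern and a synthetic image; it illustrates the principle only and is not one of the learned filters of the proposed model. Making the mask zero-mean is a design choice that prevents a flat bright blob from responding as strongly as the true pattern.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def correlate_valid(image, mask):
    """Cross-correlation without padding: out[i, j] = sum(window_ij * mask)."""
    windows = sliding_window_view(image, mask.shape)  # (H-k+1, W-k+1, k, k)
    return np.einsum("ijkl,kl->ij", windows, mask)

cross = np.array([[0, 1, 0],
                  [1, 1, 1],
                  [0, 1, 0]], dtype=float)
mask = cross - cross.mean()  # zero-mean matched filter for the cross pattern

image = np.zeros((20, 20))
image[4:7, 10:13] = cross    # the pattern itself, centred at (5, 11)
image[12:15, 3:6] = 1.0      # a filled square that is NOT the pattern

response = correlate_valid(image, mask)
peak = np.unravel_index(np.argmax(response), response.shape)
centre = (int(peak[0]) + 1, int(peak[1]) + 1)  # image coordinates: (5, 11)
```

The filled square yields a much weaker response than the cross, even though it contains every pixel of the cross, because the zero-mean mask penalizes energy outside the pattern.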

A Simplified Pulse Coupled Neural Network (SPCNN) is used to segment the COVID-19 and pneumonia CT images. It is based on salient object detection to determine the region of interest [25–27]. In the proposed model, the SPCNN settings are adapted to the pixel intensities. The segmentation process consists of two steps. Firstly, the image is traced pixel by pixel to generate adaptive parameters based on the local activity levels of the image. Then, these parameters are optimized by updating them with sine-cosine operators. Fig. 3 illustrates the proposed SPCNN-based segmentation approach.

Figure 2: Steps of the proposed CNN-based classification approach

Figure 3: Steps of the proposed SPCNN-based segmentation approach
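As a hedged illustration of how an SPCNN separates regions, the sketch below implements the commonly used SPCNN iteration, not the authors' adaptive variant. The decay factors alpha_f and alpha_e, the linking strength beta, the amplitudes V_L and V_E, the initial threshold E0, and the 3 × 3 linking weights are fixed, hand-picked assumptions; they are not the values produced by the paper's pixel-wise parameter generation and sine-cosine optimization. Brighter pixels cross the decaying dynamic threshold first, and each firing raises that pixel's threshold by V_E, so the iteration of first firing groups pixels by intensity and neighbourhood.

```python
import numpy as np

# 3 x 3 linking weights often used with the SPCNN (assumed here).
LINK_W = np.array([[0.5, 1.0, 0.5],
                   [1.0, 0.0, 1.0],
                   [0.5, 1.0, 0.5]])

def neighbour_sum(Y):
    """Weighted sum of the 8-neighbour firing outputs (zero padding)."""
    h, w = Y.shape
    P = np.pad(Y, 1)
    out = np.zeros((h, w))
    for di in range(3):
        for dj in range(3):
            out += LINK_W[di, dj] * P[di:di + h, dj:dj + w]
    return out

def spcnn_first_firing(S, n_iter=10, alpha_f=0.1, alpha_e=0.3,
                       beta=0.5, V_L=1.0, V_E=20.0, E0=1.0):
    """Return the iteration at which each pixel first fires (n_iter + 1 if never).

    S is the stimulus (image scaled to [0, 1]). Per iteration n:
      U[n] = exp(-alpha_f) U[n-1] + S (1 + beta * V_L * sum(W * Y[n-1]))
      Y[n] = 1 if U[n] > E[n-1] else 0
      E[n] = exp(-alpha_e) E[n-1] + V_E Y[n]
    """
    U = np.zeros(S.shape)
    Y = np.zeros(S.shape)
    E = np.full(S.shape, E0, dtype=float)
    first = np.full(S.shape, n_iter + 1)
    for n in range(1, n_iter + 1):
        L = V_L * neighbour_sum(Y)             # linking input from last firings
        U = np.exp(-alpha_f) * U + S * (1.0 + beta * L)
        Y = (U > E).astype(float)              # compare with previous threshold
        E = np.exp(-alpha_e) * E + V_E * Y     # decay, plus jump where fired
        first[(Y == 1) & (first > n_iter)] = n
    return first

S = np.full((24, 24), 0.1)
S[8:16, 8:16] = 0.9            # hypothetical bright region on a dark background
first = spcnn_first_firing(S)
mask = first == first.min()    # earliest-firing pixels taken as the segment
```

With these toy values, the bright block fires at the second iteration while the background fires later, so the earliest-firing mask recovers the block.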

For the general form of the SPCNN, the parameters including the adaptive threshold

The following condition is maintained.

The following condition at step l+1 is also maintained.

The dynamic threshold increases to the value given in Eq. (6) and then keeps decreasing.

Suppose that

Suppose that S1 and S2 are pixel values. Given the β and VE values, the challenge reduces to solving for kf and ke, so this problem is treated as an objective optimization problem.

Based on Eqs. (12) and (13), the optimization constraint is given as:

where lim

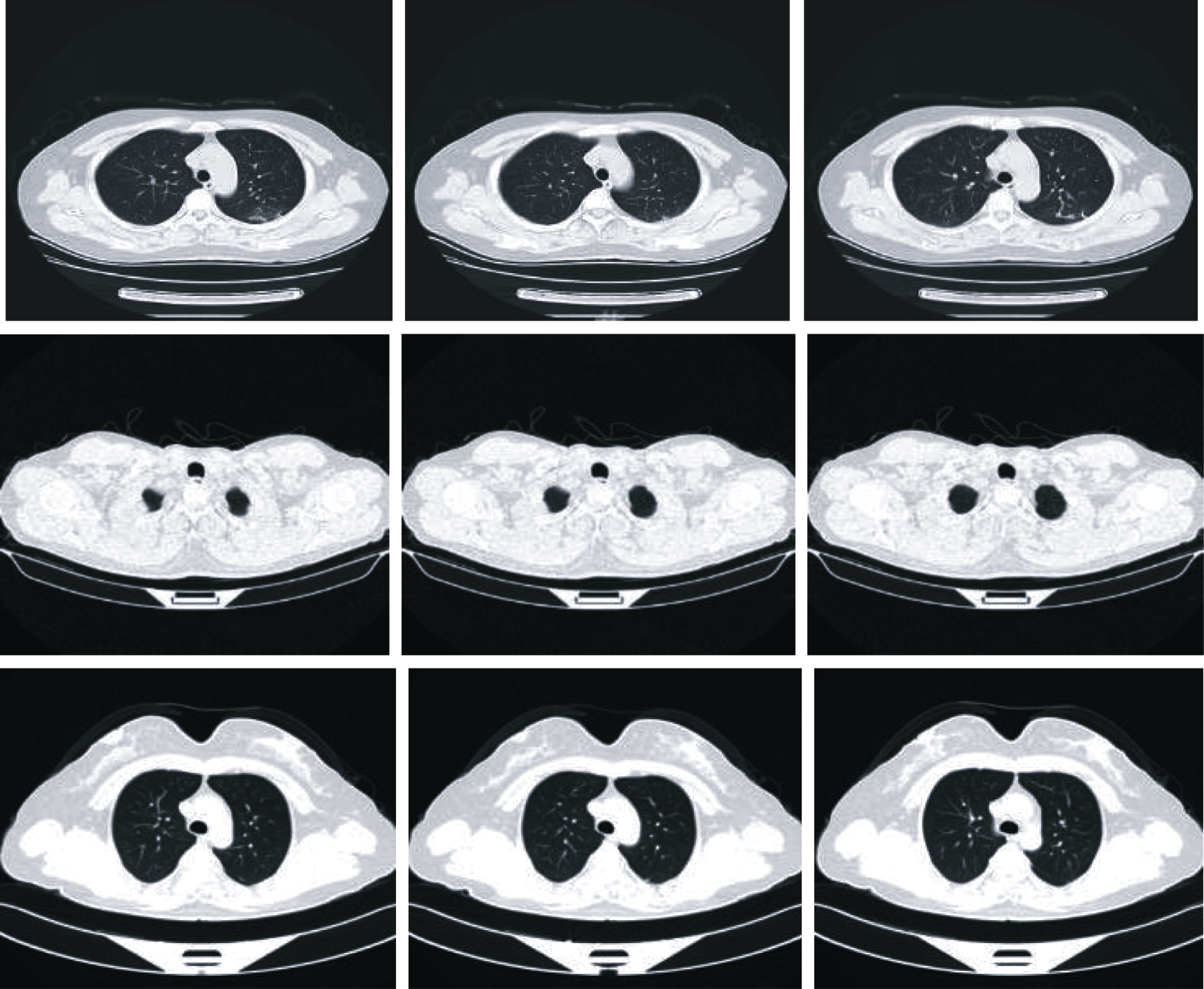

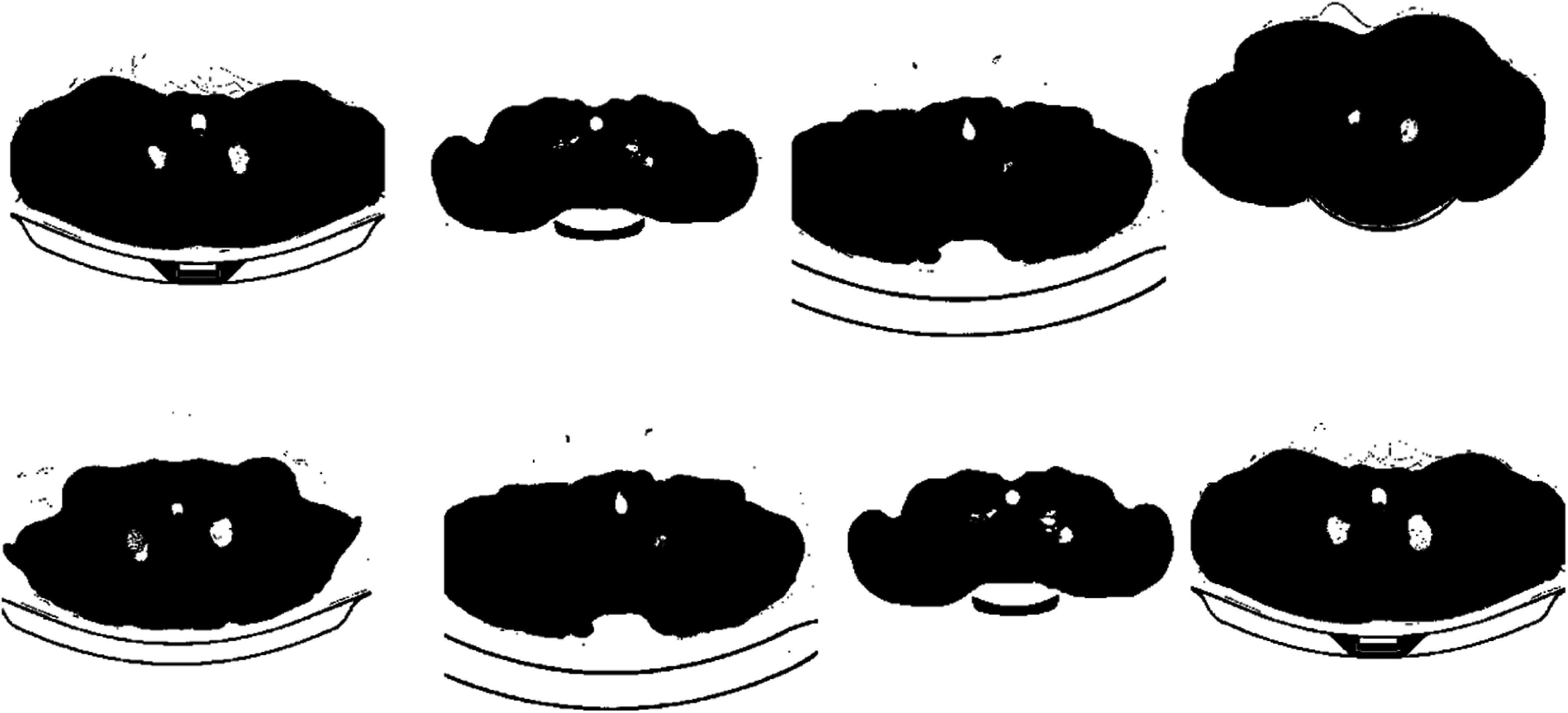
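The sine-cosine operators mentioned for updating the SPCNN parameters can be illustrated with a generic sine cosine algorithm (SCA) in NumPy. This is a sketch under loud assumptions: the objective below is a hypothetical sphere function standing in for the paper's kf/ke objective and constraint, which are not reproduced here, and the population size, iteration count, constant a, and search bounds are arbitrary choices. Each agent moves towards or around the best solution found so far, with a step amplitude r1 that shrinks linearly so that the search shifts from exploration to exploitation.

```python
import numpy as np

def sine_cosine_minimize(f, lower, upper, n_agents=30, n_iter=300, a=2.0, seed=0):
    """Minimize f over a box with the sine cosine algorithm (SCA).

    Update per agent and dimension, with P the best solution so far:
      X <- X + r1 * sin(r2) * |r3 * P - X|   if r4 < 0.5
      X <- X + r1 * cos(r2) * |r3 * P - X|   otherwise
    where r1 = a - t * a / n_iter, r2 ~ U(0, 2*pi), r3 ~ U(0, 2), r4 ~ U(0, 1).
    """
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    X = rng.uniform(lower, upper, size=(n_agents, dim))
    fitness = np.apply_along_axis(f, 1, X)
    i = np.argmin(fitness)
    P, best = X[i].copy(), fitness[i]
    for t in range(n_iter):
        r1 = a - t * a / n_iter
        r2 = rng.uniform(0.0, 2.0 * np.pi, size=(n_agents, dim))
        r3 = rng.uniform(0.0, 2.0, size=(n_agents, dim))
        r4 = rng.uniform(0.0, 1.0, size=(n_agents, dim))
        step = np.abs(r3 * P - X)
        X = np.where(r4 < 0.5, X + r1 * np.sin(r2) * step,
                     X + r1 * np.cos(r2) * step)
        X = np.clip(X, lower, upper)           # keep agents inside the bounds
        fitness = np.apply_along_axis(f, 1, X)
        i = np.argmin(fitness)
        if fitness[i] < best:                  # track the best solution so far
            P, best = X[i].copy(), fitness[i]
    return P, best

def sphere(x):
    """Hypothetical stand-in objective with its minimum at the origin."""
    return float(np.sum(x ** 2))

best_x, best_f = sine_cosine_minimize(sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
```

In the segmentation setting, the two decision variables would play the role of kf and ke, and f would encode the SPCNN objective and constraint.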

The proposed approach is evaluated on data collected from a Kaggle dataset [6]. The CT images are used to validate the proposed approach through multi-class classification of COVID-19, pneumonia, and normal cases. The approach is tested with several training/testing ratios (80%/20%, 70%/30%, and 60%/40%), and it achieves high accuracies for all of them. Fig. 4 gives examples of the considered cases.

Figure 4: Examples of the considered COVID-19, pneumonia, and normal cases
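The training/testing ratios above can be produced with a per-class (stratified) split. The paper does not state whether its split was stratified or purely random, so the following NumPy sketch is an assumption; the function name and the class counts in the usage lines are hypothetical. Splitting each class separately keeps the COVID-19, pneumonia, and normal proportions equal in the training and testing subsets.

```python
import numpy as np

def stratified_split(labels, train_fraction, seed=0):
    """Return (train_idx, test_idx), keeping each class's share in both subsets."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    train, test = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffle one class
        n_train = int(round(train_fraction * idx.size))
        train.extend(idx[:n_train])
        test.extend(idx[n_train:])
    return np.sort(np.array(train)), np.sort(np.array(test))

labels = np.repeat([0, 1, 2], 100)  # hypothetical: 100 images per class
splits = {frac: stratified_split(labels, frac) for frac in (0.8, 0.7, 0.6)}
```

Fixing the seed makes each split reproducible, which matters when accuracies for different ratios are compared.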

5 Simulation Results and Discussions

The proposed hybrid approach is applied for the segmentation and classification of chest CT images. The classification stage is applied first to discriminate between COVID-19, pneumonia, and normal CT images. Then, the segmentation stage is applied to distinguish COVID-19 and pneumonia CT images, and finally the target region is localized. The confusion matrix and the evaluation metrics derived from it are used to assess the performance of the proposed approach. The training and validation analysis shows that the proposed approach classifies with high accuracy for all training and testing ratios.

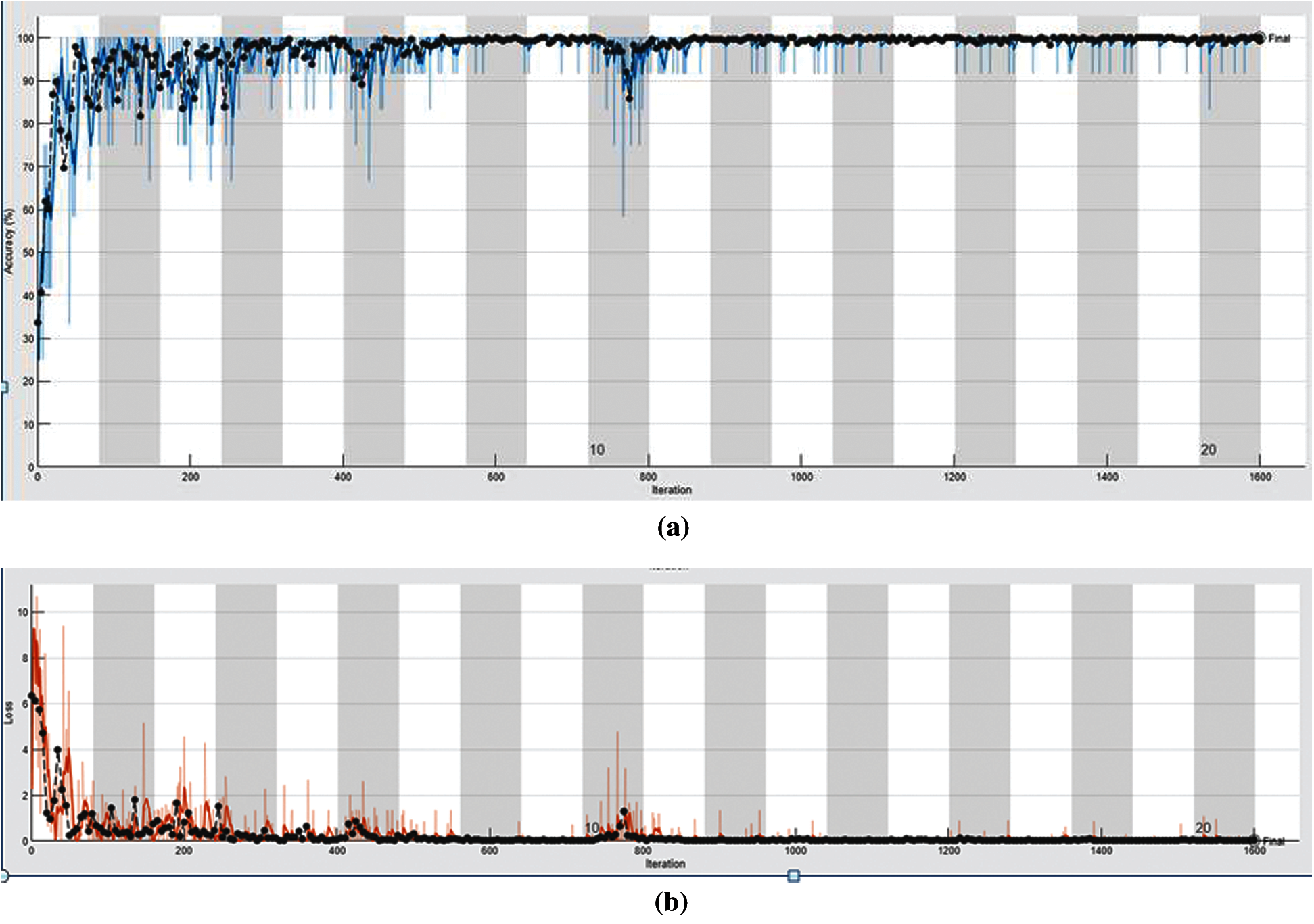

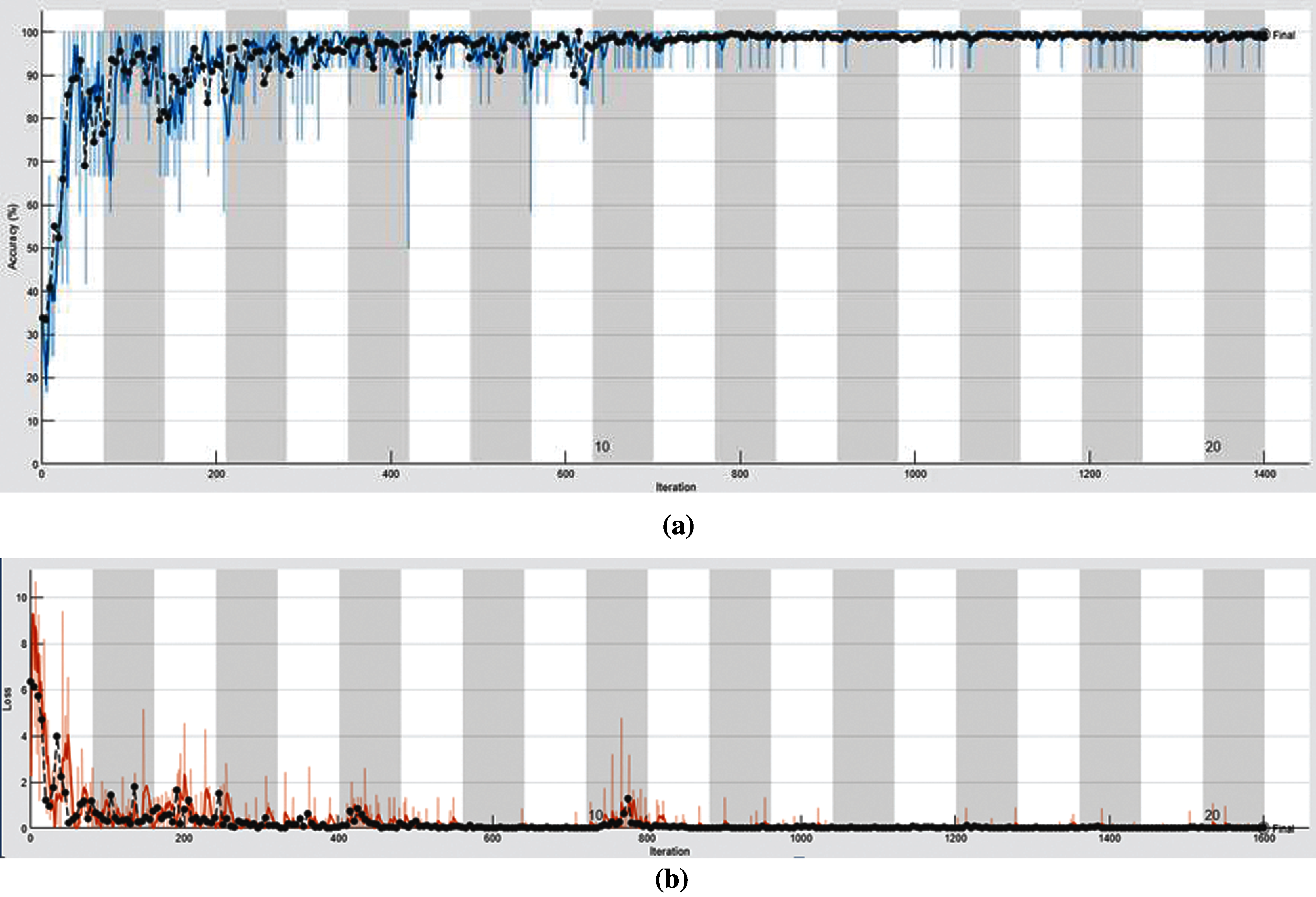

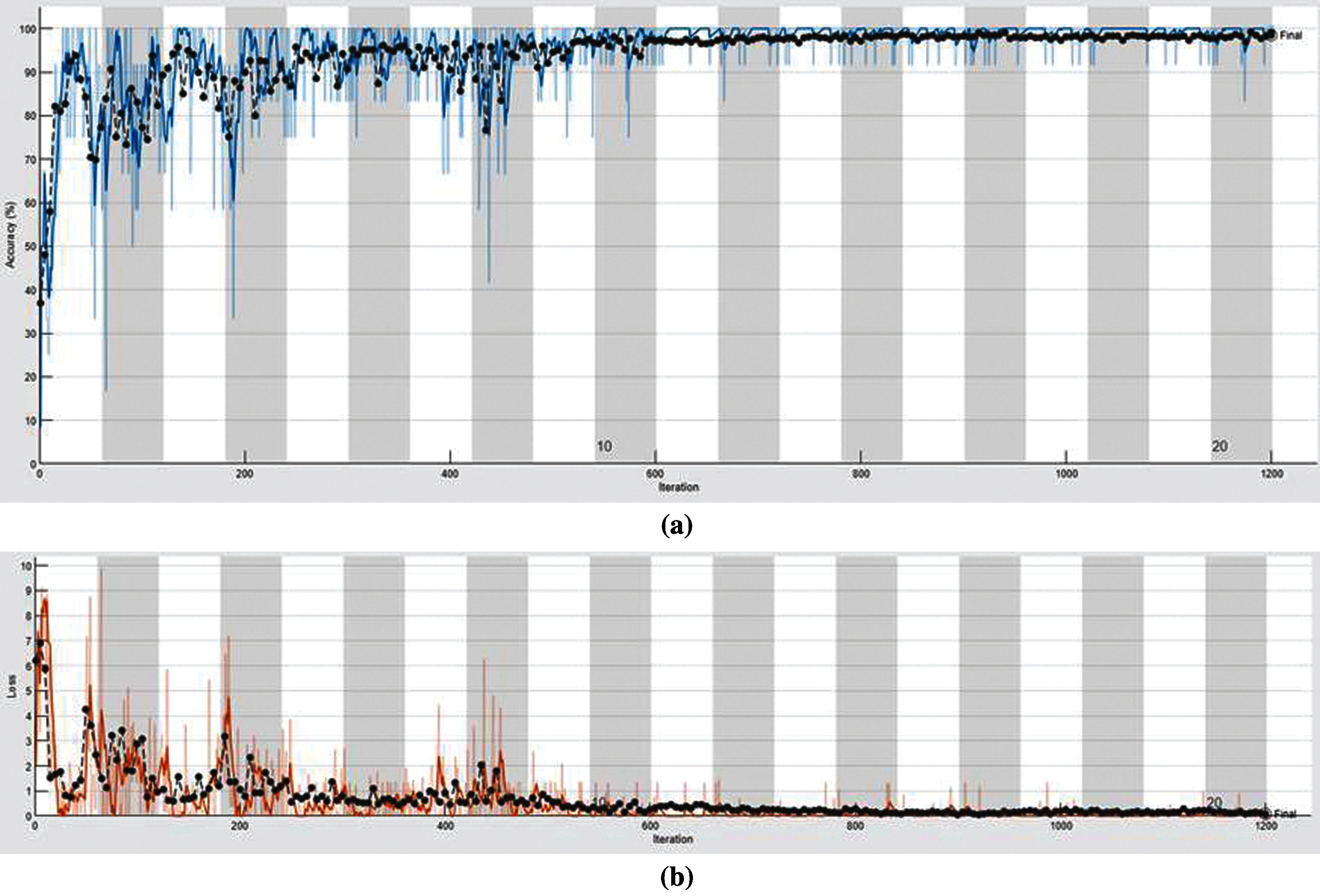

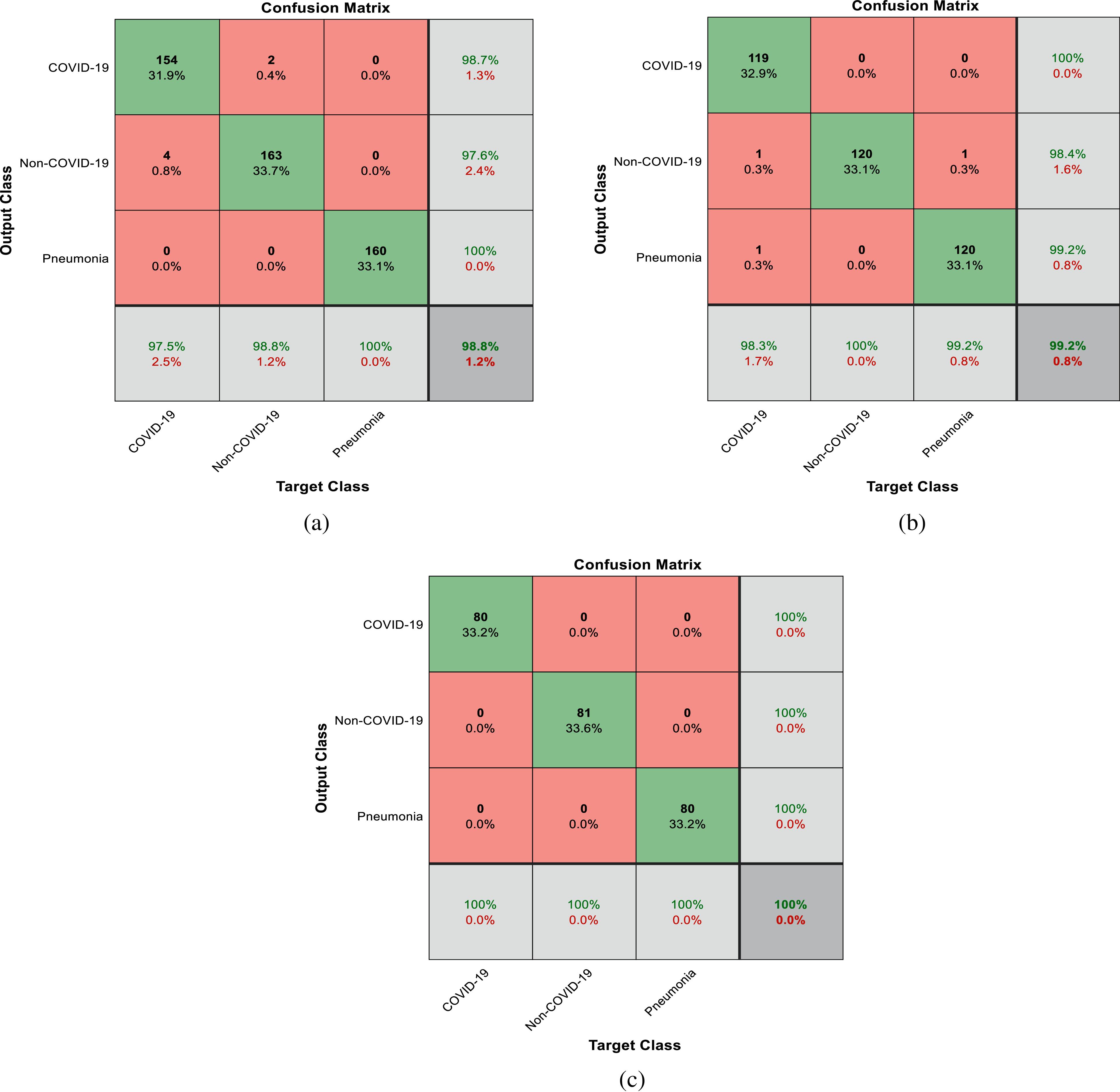

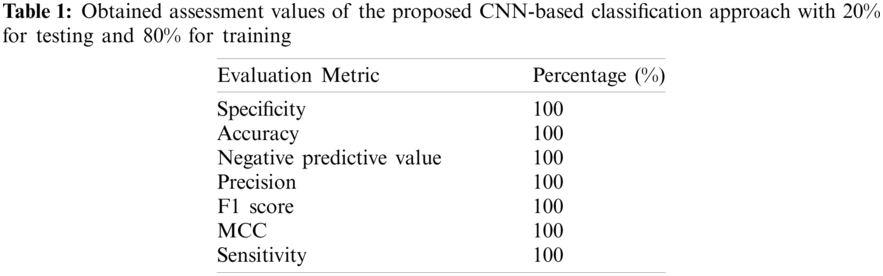

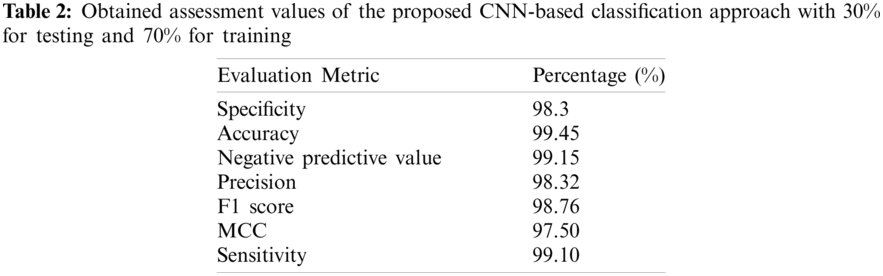

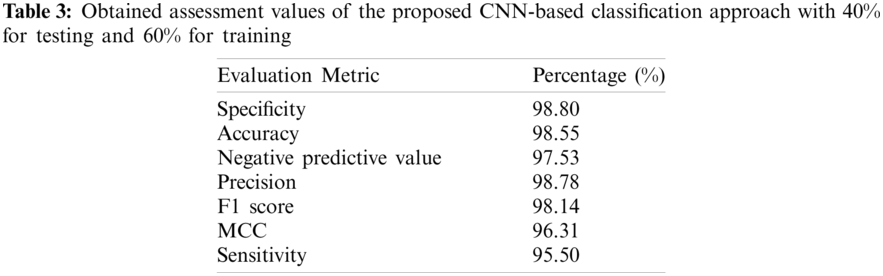

The assessment metrics for the proposed approach include accuracy, sensitivity, specificity, precision, Negative Predictive Value (NPV), F1 score, and Matthews Correlation Coefficient (MCC). Their expressions are given in Eqs. (15) to (21). True Negatives (TN), True Positives (TP), False Negatives (FN), and False Positives (FP) are used to compute these metrics [28,29]. The proposed approach achieves higher accuracy than other traditional approaches, and it also guarantees high reliability of the classification process. Fig. 5 illustrates the obtained accuracy and loss curves for the scenario with 80% of the data used for training and the other 20% used for testing. Fig. 6 gives similar results with 70% of the data used for training and 30% for testing, and Fig. 7 gives the results with 60% for training and the remaining 40% for testing. Fig. 8 shows the confusion matrices of the proposed classification approach for the different training and testing ratios. The proposed approach achieves classification accuracies of 100%, 99.45%, and 98.55% with 80%, 70%, and 60% of the data for training (20%, 30%, and 40% for testing), respectively.
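The listed metrics follow their standard confusion-matrix definitions, sketched below in Python. How the authors aggregated the three classes is not stated, so deriving TP, TN, FP, and FN per class in a one-vs-rest manner is an assumption; the function names and the example counts are hypothetical.

```python
import math
import numpy as np

def binary_metrics(tp, tn, fp, fn):
    """Standard confusion-matrix metrics (undefined ratios are returned as 0)."""
    def ratio(num, den):
        return num / den if den else 0.0
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": ratio(tp, tp + fn),   # recall, true positive rate
        "specificity": ratio(tn, tn + fp),   # true negative rate
        "precision": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        "mcc": ratio(tp * tn - fp * fn, mcc_den),
    }

def one_vs_rest(cm, k):
    """TP, TN, FP, FN for class k; cm[i, j] counts true class i predicted as j."""
    cm = np.asarray(cm)
    tp = cm[k, k]
    fn = cm[k].sum() - tp      # class-k samples predicted as another class
    fp = cm[:, k].sum() - tp   # other samples predicted as class k
    tn = cm.sum() - tp - fn - fp
    return int(tp), int(tn), int(fp), int(fn)

# Hypothetical 3-class confusion matrix (rows: true, columns: predicted).
cm = [[50, 0, 0],
      [1, 48, 1],
      [0, 2, 48]]
class_1 = binary_metrics(*one_vs_rest(cm, 1))
```

For a binary problem, the same function applies directly to the four counts.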

Figure 5: Accuracy and loss curves for the CNN classification model with 20% for testing and 80% for training, (a) accuracy curve, (b) loss curve

Figure 6: The accuracy and loss curves for the CNN classification model with 30% for testing and 70% for training, (a) accuracy curve, (b) loss curve

Tabs. 1 to 3 give the values of the evaluation metrics of the proposed CNN-based classification approach for the different training and testing ratios: Tab. 1 for 80% training and 20% testing, Tab. 2 for 70% training and 30% testing, and Tab. 3 for 60% training and 40% testing.

Figure 7: The accuracy and loss curves for the CNN classification model with 40% for testing and 60% for training, (a) accuracy curve, (b) loss curve

The obtained outcomes demonstrate that the proposed CNN-based classification approach achieves high detection accuracy and low cross-entropy loss for all examined training and testing sets. In addition, the values of all tested assessment metrics, presented in Tabs. 1 to 3, confirm this good performance of the proposed classification approach.

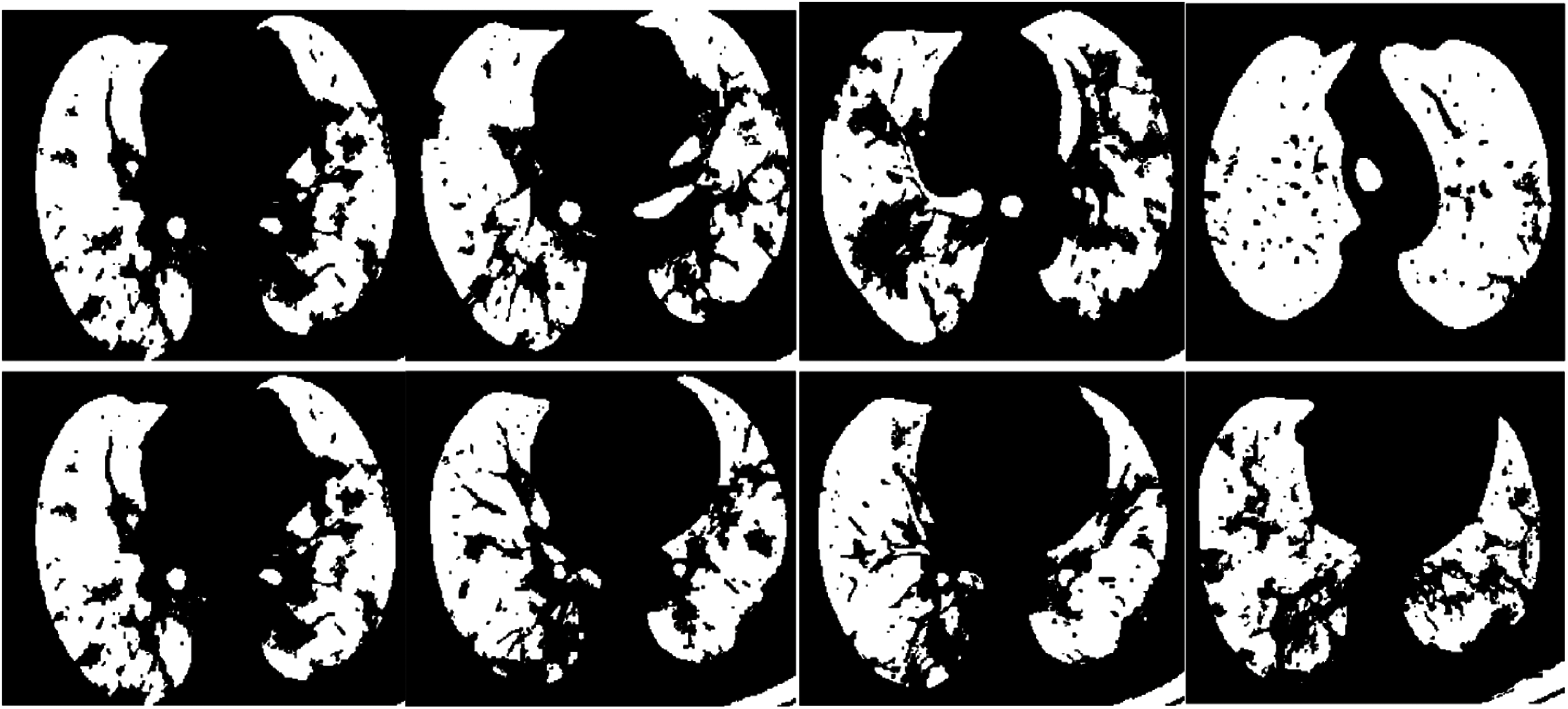

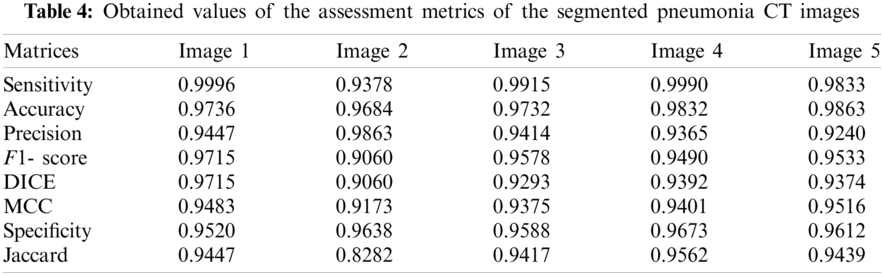

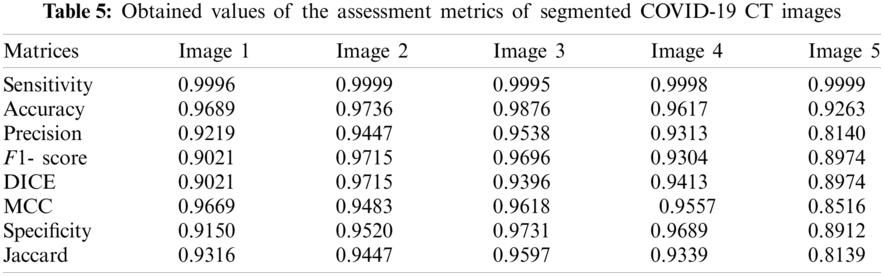

The proposed SPCNN-based segmentation approach is applied after the classification process. Pneumonia and COVID-19 images are segmented to precisely identify the area of interest. The main objective of this segmentation stage is to assist specialists in diagnosing the disease in an automated manner and in selecting the proper therapy for COVID-19 cases. Figs. 9 and 10 show samples of segmented pneumonia and COVID-19 CT images, respectively. Tabs. 4 and 5 give the accuracy, precision, sensitivity, F1 score, Dice, MCC, specificity, and Jaccard [28,29] metrics of the proposed SPCNN-based segmentation approach for pneumonia and COVID-19 CT images. The visual results reveal clear differences between pneumonia and COVID-19 cases in the segmented CT images. Additionally, the values of the assessment metrics in Tabs. 4 and 5 confirm the high efficiency and accuracy of the proposed SPCNN-based segmentation approach.
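The Dice and Jaccard scores used for the segmentation results compare a predicted mask A with a reference mask B: Dice = 2|A ∩ B| / (|A| + |B|) and Jaccard = |A ∩ B| / |A ∪ B|, which are related by J = D / (2 − D). The short Python sketch below computes both for binary masks; the function name and the toy masks are hypothetical, and the convention of returning 1.0 for two empty masks is an assumption. For binary masks, Dice equals the pixel-wise F1 score.

```python
import numpy as np

def dice_and_jaccard(pred, truth):
    """Overlap scores between two binary masks (1.0 when both are empty)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    inter = np.logical_and(pred, truth).sum()
    total = pred.sum() + truth.sum()
    union = np.logical_or(pred, truth).sum()
    dice = 2.0 * inter / total if total else 1.0
    jaccard = inter / union if union else 1.0
    return float(dice), float(jaccard)

# Hypothetical predicted and reference masks.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
dice, jaccard = dice_and_jaccard(pred, truth)  # 2 shared pixels out of 3 + 3
```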

Figure 8: The obtained confusion matrices, (a) 40% for testing and 60% for training, (b) 30% for testing and 70% for training, (c) 20% for testing and 80% for training

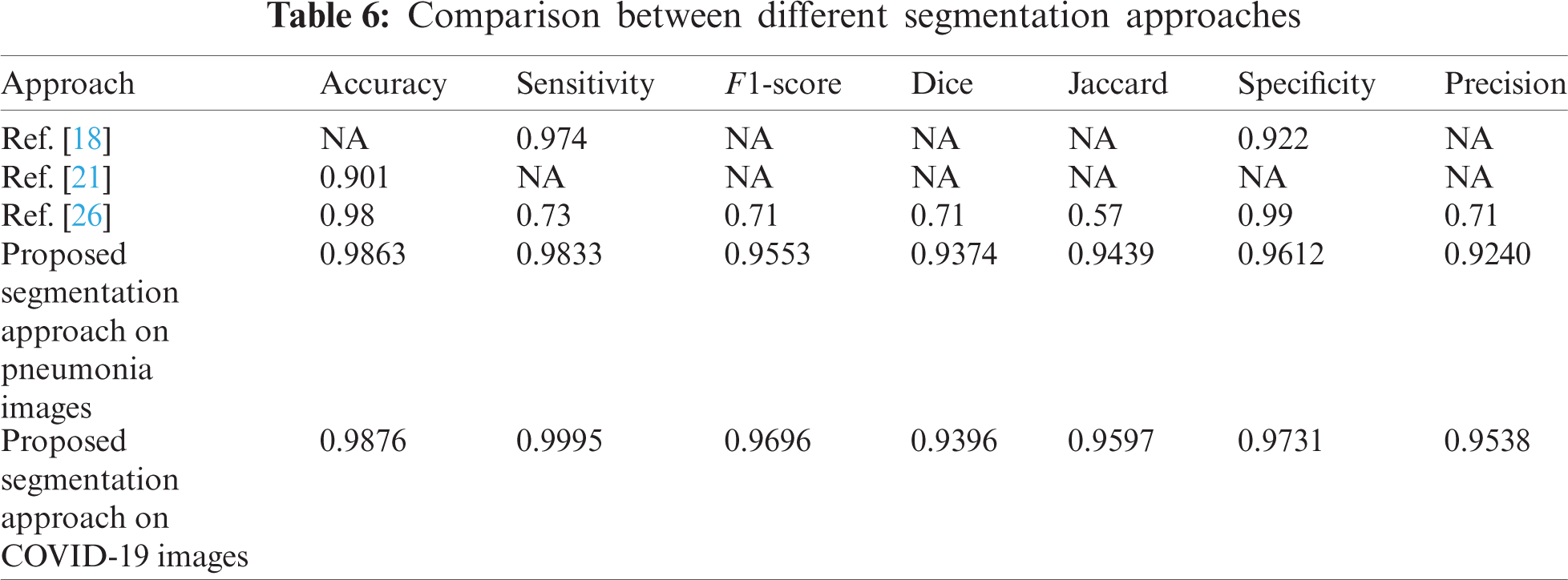

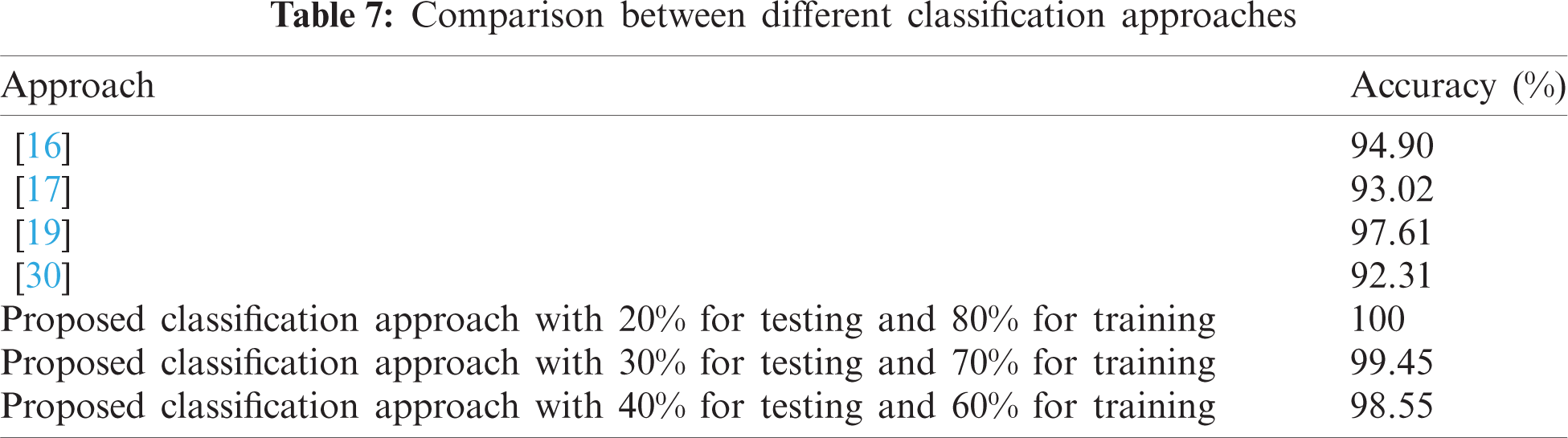

Tab. 6 compares different segmentation approaches, including the proposed one, and Tab. 7 compares different classification approaches, including the proposed one. These comparisons show that the proposed hybrid approach is more precise than the other segmentation and classification approaches.

Figure 9: Samples of the resulting segmented pneumonia images

Figure 10: Samples of the resulting segmented COVID-19 images

This paper introduced an efficient hybrid approach to classify and segment pneumonia, COVID-19, and normal CT images. Firstly, a simple CNN-based classification approach developed from scratch was introduced. This CNN-based model is composed of four Conv layers, four max-pooling layers, and an output classification layer. Then, an efficient SPCNN-based segmentation approach was employed to segment pneumonia and COVID-19 CT images. The obtained outcomes show that the proposed hybrid approach achieves high performance and accuracy in both the segmentation and classification processes, and that its results are superior to those of the conventional segmentation and classification approaches considered. In future work, DL-based transfer learning models can be incorporated for the segmentation and classification of X-ray and CT images to achieve more effective COVID-19 detection in automated diagnosis applications. In addition, multi-stage DL models for feature extraction will be investigated to improve classification performance on large medical datasets. Furthermore, a single-image super-resolution stage can be tested for improving classification performance.

Acknowledgement: The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia for funding this research work through the project number (PNU-DRI-Targeted-20-027).

Funding Statement: The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia for funding this research work through the project Number (PNU-DRI-Targeted-20-027).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. M. Chung, B. Adam, X. Mei, N. Zhang, M. Huang et al., “CT imaging features of 2019 novel coronavirus (2019-nCoV),” Radiology, vol. 295, no. 1, pp. 202–207, 2020.

2. X. Xie, Z. Zhong, W. Zhao, C. Zheng, F. Wang et al., “Chest CT for typical coronavirus disease 2019 (COVID-19) pneumonia: Relationship to negative RT-PCR testing,” Radiology, vol. 296, no. 2, pp. 41–45, 2020.

3. T. Ai, Z. Yang, H. Hou, C. Zhan, C. Chen et al., “Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases,” Radiology, vol. 296, no. 2, pp. 32–40, 2020.

4. S. Roy, W. Menapace, S. Oei, B. Luijten, E. Fini et al., “Deep learning for classification and localization of COVID-19 markers in point-of-care lung ultrasound,” IEEE Transactions on Medical Imaging, vol. 39, no. 8, pp. 2676–2687, 2020.

5. F. Shi, J. Wang, J. Shi, Z. Wu, Q. Wang et al., “Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for COVID-19,” IEEE Reviews in Biomedical Engineering, vol. 14, pp. 4–15, 2021.

6. D. Litmanovich, M. Chung, R. Kirkbride, G. Kicska and J. Kanne, “Review of chest radiograph findings of COVID-19 pneumonia and suggested reporting language,” Journal of Thoracic Imaging, vol. 35, no. 6, pp. 354–360, 2020.

7. M. Hammer, C. Raptis, T. Henry, A. Shah, S. Bhalla et al., “Challenges in the interpretation and application of typical imaging features of COVID-19,” The Lancet Respiratory Medicine, vol. 8, no. 6, pp. 534–536, 2020.

8. N. El-Hag, A. Sedik, W. El-Shafai, H. El-Hoseny, A. Khalaf et al., “Classification of retinal images based on convolutional neural network,” Microscopy Research and Technique, vol. 84, no. 3, pp. 394–414, 2021.

9. S. Dutta, B. Manideep, S. Basha, R. Caytiles and N. Iyengar, “Classification of diabetic retinopathy images by using deep learning models,” International Journal of Grid and Distributed Computing, vol. 11, no. 1, pp. 89–106, 2018.

10. T. Shanthi and R. Sabeenian, “Modified AlexNet architecture for classification of diabetic retinopathy images,” Computers & Electrical Engineering, vol. 76, pp. 56–64, 2019.

11. F. Altaf, S. Islam, N. Akhtar and N. Janjua, “Going deep in medical image analysis: Concepts, methods, challenges, and future directions,” IEEE Access, vol. 7, pp. 99540–99572, 2019.

12. T. Rahman, M. Chowdhury, A. Khandakar, K. Islam, K. Islam et al., “Transfer learning with deep convolutional neural network (CNN) for pneumonia detection using chest X-ray,” Applied Sciences, vol. 10, no. 9, pp. 1–19, 2020.

13. J. Ferreira, D. Cardenas, R. Moreno, M. Rebelo, J. Krieger et al., “Multi-view ensemble convolutional neural network to improve classification of pneumonia in low contrast chest X-ray images,” in Proc. 42nd Annual Int. Conf. of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, pp. 1238–1241, 2020.

14. R. Joshi, S. Yadav, V. Pathak, H. Malhotra, H. Khokhar et al., “A deep learning-based COVID-19 automatic diagnostic framework using chest X-ray images,” Biocybernetics and Biomedical Engineering, vol. 41, no. 1, pp. 239–254, 2021.

15. A. Dastider, F. Sadik and S. Fattah, “An integrated autoencoder-based hybrid CNN-LSTM model for COVID-19 severity prediction from lung ultrasound,” Computers in Biology and Medicine, vol. 132, no. 104296, pp. 1–19, 2021.

16. V. Rohila, N. Gupta, A. Kaul and D. Sharma, “Deep learning assisted COVID-19 detection using full CT-scans,” Internet of Things, vol. 14, no. 100377, pp. 1–18, 2021.

17. Y. Pathak, P. Shukla, A. Tiwari, S. Stalin and S. Singh, “Deep transfer learning based classification model for COVID-19 disease,” IRBM, vol. 5, pp. 1–19, 2020.

18. B. Wang, S. Jin, Q. Yan, H. Xu, C. Luo et al., “AI-Assisted CT imaging analysis for COVID-19 screening: Building and deploying a medical AI system,” Applied Soft Computing, vol. 98, no. 106897, pp. 1–14, 2021.

19. P. Vidal, J. Moura, J. Novo and M. Ortega, “Multi-stage transfer learning for lung segmentation using portable X-ray devices for patients with COVID-19,” Expert Systems with Applications, vol. 173, no. 114677, pp. 1–15, 2021.

20. K. Gao, J. Su, Z. Jiang, L. Zeng, Z. Feng et al., “Dual-branch combination network (DCN): Towards accurate diagnosis and lesion segmentation of COVID-19 using CT images,” Medical Image Analysis, vol. 67, no. 101836, pp. 1–13, 2021.

21. K. He, W. Zhao, X. Xie, W. Ji, M. Liu et al., “Synergistic learning of lung lobe segmentation and hierarchical multi-instance classification for automated severity assessment of COVID-19 in CT images,” Pattern Recognition, vol. 113, no. 107828, pp. 1–17, 2021.

22. P. Sethy, B. Negi and N. Bhoi, “Detection of coronavirus (COVID-19) based on deep features and support vector machine,” International Journal of Computer Applications, vol. 157, no. 1, pp. 24–27, 2020.

23. C. Huang, S. Ni and G. Chen, “A layer-based structured design of CNN on FPGA,” in Proc. of IEEE 12th Int. Conf. on ASIC (ASICON), Guiyang, China, pp. 1037–1040, 2017.

24. S. Albawi, T. Mohammed and S. Al-Zawi, “Understanding of a convolutional neural network,” in Proc. of IEEE Int. Conf. on Engineering and Technology (ICET), Antalya, Turkey, pp. 1–6, 2017.

25. J. Shi, Q. Yan, L. Xu and J. Jia, “Hierarchical image saliency detection on extended CSSD,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 4, pp. 717–729, 2015.

26. A. Oulefki, S. Agaian, T. Trongtirakul and A. Laouar, “Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images,” Pattern Recognition, vol. 114, no. 107747, pp. 1–19, 2021.

27. A. Joshi, M. Khan, S. Soomro, A. Niaz, B. Han et al., “SRIS: Saliency-based region detection and image segmentation of COVID-19 infected cases,” IEEE Access, vol. 8, pp. 190487–190503, 2020.

28. A. Taha and A. Hanbury, “Metrics for evaluating 3D medical image segmentation: Analysis, selection, and tool,” BMC Medical Imaging, vol. 15, no. 1, pp. 1–28, 2015.

29. M. Mielle, M. Magnusson and A. J. Lilienthal, “A method to segment maps from different modalities using free space layout MAORIS: MAp Of RIpples Segmentation,” in Proc. IEEE Int. Conf. on Robotics and Automation (ICRA), Brisbane, QLD, Australia, pp. 4993–4999, 2018.

30. R. Jain, P. Nagrath, G. Kataria, V. Kaushik and D. Hemanth, “Pneumonia detection in chest X-ray images using convolutional neural networks and transfer learning,” Measurement, vol. 165, no. 108046, pp. 202–222, 2020.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.