DOI:10.32604/cmc.2022.019760

Computers, Materials & Continua

Article

QoS Based Cloud Security Evaluation Using Neuro Fuzzy Model

1Department of Computer Science, Virtual University of Pakistan, Lahore, 54000, Pakistan

2Department of Computer Science, Lahore Garrison University, Lahore, 54000, Pakistan

3Department of Statistics and Computer Science, University of Veterinary and Animal Sciences, Lahore, 54000, Pakistan

4Department of Industrial Engineering, Faculty of Engineering, Rabigh, King Abdulaziz University, Jeddah, 21589, Saudi Arabia

5Department of Information Systems, Faculty of Computing and Information Technology-Rabigh, King Abdulaziz University, Jeddah, 21589, Saudi Arabia

*Corresponding Author: Muhammad Hamid, Email: muhammad.hamid@uvas.edu.pk

Received: 24 April 2021; Accepted: 31 May 2021

Abstract: Cloud systems are cloud computing tools and software deployed on the Internet or on a cloud computing network, and users can access them at any time. When assessing and choosing cloud providers, however, customers face a wide and hard-to-compare variety of quality of service (QoS) offerings. To increase customer retention and engagement, it is critical to research and develop an accurate and objective evaluation model. The cloud is an emerging environment for distributed services at various layers. Because of its benefits, the cloud is being adopted worldwide as a standard environment by individuals and by the corporate sector: it reduces capital expenditure and provides secure, accessible, and manageable services to all stakeholders. However, cloud computing faces security challenges, including client vulnerability and organizational acceptance, that slow the adoption of cloud computing models. Allocating resources in the cloud is difficult because resources offer numerous QoS measures. In this paper, the proposed resource allocation approach is based on attribute-based QoS scoring that takes into account resource reputation, task completion time, task completion ratio, and resource load. This article focuses on cloud service security, cloud reliability, and cloud performance. A neuro-fuzzy machine learning approach is used to address cloud security issues by measuring the security, privacy, and trust parameters. The findings reveal that the ANFIS-based parameters are primarily designed to detect anomalies in cloud security, that the model output generally yields better results, and that it ensures data consistency and computational power.

Keywords: Cloud computing; performance; quality of service; machine learning; neuro-fuzzy

Businesses and organizations increasingly rely on the cloud to deliver services. Critical factors to consider in the cloud include affordability, security, accessibility, and integrity. A cloud attack can cause downtime, resulting in financial and reputational damage. In 2002, Amazon introduced Amazon Web Services (AWS), a cloud service that meets storage and computation needs. Amazon then established the Elastic Compute Cloud (EC2) as a commercial web service, through which small companies can rent computers and run their own applications. Following these developments, Google, Microsoft, and other major companies also entered the market with their own IT services [1].

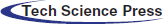

Cloud computing is a relatively new technology that offers different cloud services and deployment models, as shown in Fig. 1. It provides extensible services that are easily accessible from multiple devices, without users having to worry about how the services are installed, set up, or maintained. Owing to current economies of scale, cloud service providers can offer services that combine innovation and expertise in a secure, self-recovering, and robust form. Organizations therefore build trust and measure the advantage they gain from such arrangements. In this assessment, an organization needs to consider the effects on the security and protection of its data. Cloud computing is a storage medium in which data and files can be stored over the internet. This medium aims to keep data secure and free of threats, and data stored in a cloud system can be accessed from anywhere over the internet. Cloud computing also lets us process and organize data and information more quickly and reliably, so that anyone can access it from anywhere in the world [2].

Figure 1: Cloud computing deployment and services model

Cloud technology also serves as a backup mechanism, allowing us to store backup copies of all our essential files on the cloud network. Cloud computing is now used by many users, who benefit from its dynamic services and applications wherever they are. Cloud providers often back up server software, so users can rely on these backups if some software fails; being able to restore such a clone is a major benefit [3].

1.1 Cloud Computing Service Model

The most widely used service models through which a cloud system provides services to its consumers are as follows:

1.1.1 Infrastructure as a Service

Infrastructure as a Service (IaaS) provides the underlying support, or foundation, used to deliver services to customers and cloud users. Through this infrastructure, a provider can supply resources such as virtualization, storage, and networking for different workloads, and can offer these resources to customers and cloud users to enhance its services. Storage capacity and available network speed can also be increased as needed [4].

Platform as a Service (PaaS) is a paradigm for delivering operating systems and related facilities to cloud users over the internet. The difference from ordinary internet access is that these facilities are provided by cloud service providers, and users pay only for what they use in the cloud. PaaS provides a platform and tools to test, develop, and host applications in the same environment [5].

With Software as a Service (SaaS), users do not need to install applications on their own computers; they can access applications over the internet from any location. It is a model in which users gain access, over the internet, to applications hosted by a provider. It also reduces installation and provisioning costs.

There are four models of cloud deployment:

A private cloud is a specific model of cloud computing that provides a distinct and secure cloud-based environment in which only the specified client can operate. As with other cloud models, private clouds provide computing power as a service within a virtualized environment using an underlying pool of physical computing resources. However, under the private cloud model, the cloud (the pool of resources) is accessible only to a single organization, giving that organization greater control and security.

The public cloud is the best-known model, in which cloud services are provided in a virtualized environment built from pooled, shared physical resources accessible over a public network such as the internet. It can be contrasted with private clouds, which ring-fence the pool of underlying computing resources to create a distinct cloud platform to which only a single organization has access. Public clouds, by contrast, provide services to multiple clients using the same shared infrastructure [6].

A hybrid cloud is a cloud service that uses both private and public clouds to perform distinct functions within the same organization. All cloud computing services offer certain efficiencies to differing degrees, but public cloud services are likely to be more cost-efficient and scalable than private clouds. An organization can therefore maximize its efficiency by using public cloud services for all non-sensitive operations, relying on a private cloud only where it requires one, and ensuring that all of its platforms are seamlessly integrated.

A community cloud is a collaborative effort in which infrastructure is shared between several organizations from a specific community with common concerns for security and compliance; it may be managed internally or by a third party and hosted internally or externally. It is controlled and used by a group of organizations that share an interest. The costs are spread over fewer users than in a public cloud (but more than in a private cloud), so only some of the cost-saving potential of cloud computing is realized.

1.3 Essential Characteristics of Cloud Computing

According to the National Institute of Standards and Technology (NIST), cloud computing is defined by the following characteristics:

1.3.1 Resource Pooling in Cloud Computing

“Resource pooling” is an IT term describing a cloud computing environment that serves many clients, or tenants, from a shared pool of resources. Related terms include elasticity, “dynamic provisioning”, and “on-demand self-service”. Tenants can change their level of service without renegotiating a contract with the provider. Resource pooling is put in place to provide an adaptable system in cloud computing and in software as a service.

Broad network access refers to resources hosted within private cloud networks that are accessible through a wide variety of devices, such as tablets, PCs, Macs, and smartphones. As more employees use smartphones, tablets, and other network-connected devices, they need to access organizational resources and keep working from these devices. In a private cloud, secure data is provided only to the organization's employees.

Measured service refers to the provider measuring and monitoring the services it delivers for multiple purposes, such as billing, efficient use of resources, and overall predictive planning. NIST identifies measured service as one of the five essential characteristics in its definition of cloud computing [7].
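Measured service is what makes pay-as-you-go billing possible: usage is metered per tenant and priced per unit. The following minimal Python sketch illustrates the idea; the resource names and unit prices in `RATES` are hypothetical placeholders, not any provider's actual rate card.

```python
from dataclasses import dataclass

# Hypothetical unit prices (currency units per metered unit); real providers publish their own.
RATES = {"vcpu_hours": 0.05, "storage_gb_month": 0.02, "egress_gb": 0.09}


@dataclass
class UsageRecord:
    tenant: str      # customer whose usage was metered
    resource: str    # key into RATES
    quantity: float  # metered amount in that resource's unit


def bill(records: list[UsageRecord]) -> dict[str, float]:
    """Aggregate metered usage into a per-tenant pay-as-you-go charge."""
    totals: dict[str, float] = {}
    for rec in records:
        charge = rec.quantity * RATES[rec.resource]
        totals[rec.tenant] = totals.get(rec.tenant, 0.0) + charge
    # Round only at the end so per-record rounding errors do not accumulate.
    return {tenant: round(total, 2) for tenant, total in totals.items()}


usage = [
    UsageRecord("tenant-a", "vcpu_hours", 100),
    UsageRecord("tenant-a", "storage_gb_month", 50),
    UsageRecord("tenant-b", "egress_gb", 10),
]
print(bill(usage))
```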

Rapid elasticity is used in the cloud computing environment to provide scalable service as the consumer requires. Rapid elasticity, or the capacity to deliver flexible resources, is a cloud computing concept for scalable provisioning, and experts regard this scalable model as one of the five basic facets of cloud computing. Because of technological advances, cloud computing has emerged as a promising IT paradigm. It provides low-cost, on-demand applications with no customer involvement. More and more businesses are turning to the cloud as their primary computing medium instead of conventional storage mechanisms. Cloud computing platforms such as Amazon Web Services (AWS), Google, and others have become part of everyday life, and most people use them without ever realizing it. The cloud's appeal stems from its pay-as-you-go model, which offers low costs and guaranteed quality of service (QoS).
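Rapid elasticity is usually realized by an autoscaler that resizes a pool of instances from observed load. A minimal sketch, assuming a proportional target-tracking rule of the same form Kubernetes documents for its Horizontal Pod Autoscaler (desired = ceil(current × observed / target)); the target utilization and the instance bounds are illustrative assumptions.

```python
import math


def desired_instances(current: int, utilization: float, target: float = 0.6,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Proportional scaling: size the pool so average utilization returns to `target`.

    `utilization` is the observed average load per instance (e.g. 0.9 = 90% CPU).
    """
    if current < 1 or target <= 0:
        raise ValueError("need at least one instance and a positive target")
    desired = math.ceil(current * utilization / target)
    # Clamp to the allowed pool size so a load spike cannot scale without bound.
    return max(min_instances, min(max_instances, desired))


# Simulated load trace: each step resizes the pool from the previous decision.
pool = 4
for load in [0.75, 0.9, 0.3, 0.1]:
    pool = desired_instances(pool, load, target=0.5)
    print(f"utilization={load:.2f} -> {pool} instances")
```

Production autoscalers usually add a tolerance band or stabilization window so the pool does not oscillate on small load changes; that refinement is omitted here.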

The rapid emergence of cloud computing has increased demand for solutions that can exploit cloud attributes and properties without undermining the effectiveness of current systems and applications. Instead of replacing applications, the cloud supports application and system migration with multiple execution stages. With platform as a service, administrative controls can be applied at every layer, i.e., infrastructure, platform, software, and individual services. Cloud management therefore becomes a far more complex and fundamental task than application development, networking, or even application migration, because all the administrative units complement one another [8].

Another important aspect of cloud computing is the rise of unstructured or open-source activity from design to development, which helps in developing applications and services, or more specifically the program routines needed to exploit cloud benefits in terms of services, virtualization, and integration. This design and programming activity has also changed the concept of distributed computing, creating a far broader and more complex environment for both conventional and modern open-source code. As in the recent past, the future is shaping its key parameters around cloud computing and portable, remotely accessible, interoperable applications, together with IoT and other data units [9].

This review offers an alternative view of cloud applications from the perspective of individual learning management and its structure in the cloud computing environment. The considerable hype around cloud computing has produced a diverse array of definitions and criteria, which focus primarily on three distinct service layers: infrastructure, platform, and software [10].

Cloud computing tracks changes in workload and sustains resources accordingly at run time. Despite having far more resources than conventional environments, cloud service providers still find it difficult to manage resources across multiple layers. Therefore, the three service layers mentioned above become critical to ensuring the basic functioning of a cloud system. In this regard, Ghobadi and Ali proposed a resource provisioning methodology for cloud computing based on autonomic computing, which enables dynamic provisioning of resources in the cloud [11].

Wang described frameworks such as Apache Spark, which is becoming popular for processing large volumes of data in either a local or a cloud-deployed cluster, in contrast to existing frameworks that focus more on modeling a wide variety of VMs. Apache Spark has a well-defined layered architecture in which many components and layers are loosely coupled, and this architecture is supplemented by a number of extensions and libraries [12].

Kashif assessed the performance of HPC applications in current cloud frameworks and then discussed different techniques for mitigating interference, virtualization overhead, and problems caused by shared resources in the cloud. Cloud computing is a server-based model that provides a shared pool of resources that customers access remotely. However, because of limited interconnect bandwidth and the heterogeneity of cloud services, HPC applications perform poorly in the cloud [13].

Cloud computing offers clients internet services on a pay-as-you-go basis. Selvaraj explained that the rapid increase in demand for computing power from scientific, corporate, and web-based applications has led to the construction of massive data centers that consume large quantities of electricity, and suggested an energy-efficient resource management solution for virtualized cloud data centers that lowers operating costs while maintaining the required level of service. Cloud data centers handle incoming tasks by providing resources such as CPU, RAM, storage, and bandwidth. The research community therefore aims to provide energy-efficient solutions that reduce the impact of the problems mentioned above [14].

Zhang investigated predictive cloud resource management for enterprise workloads and proposed an innovative Predictive Resource Management Framework (PRMF) to overcome the drawbacks of the reactive cloud resource management approach. The performance of PRMF was compared with that of a reactive approach by deploying a timesheet application on the cloud. A best-fit model was used to predict the confidence level at which the predicted value breaches the actual metric. In the tests performed, the predictive approach outperformed the reactive approach in provisioning instances and in the real-time analyses [15].

A neuro-fuzzy system is a hybrid artificial intelligence system that combines artificial neural networks with fuzzy logic parameters. A fuzzy set Z of a set R can be defined as a set of ordered pairs, as in Eq. (1),

where R is the universe of discourse over which the fuzzy set Z is defined:

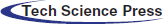

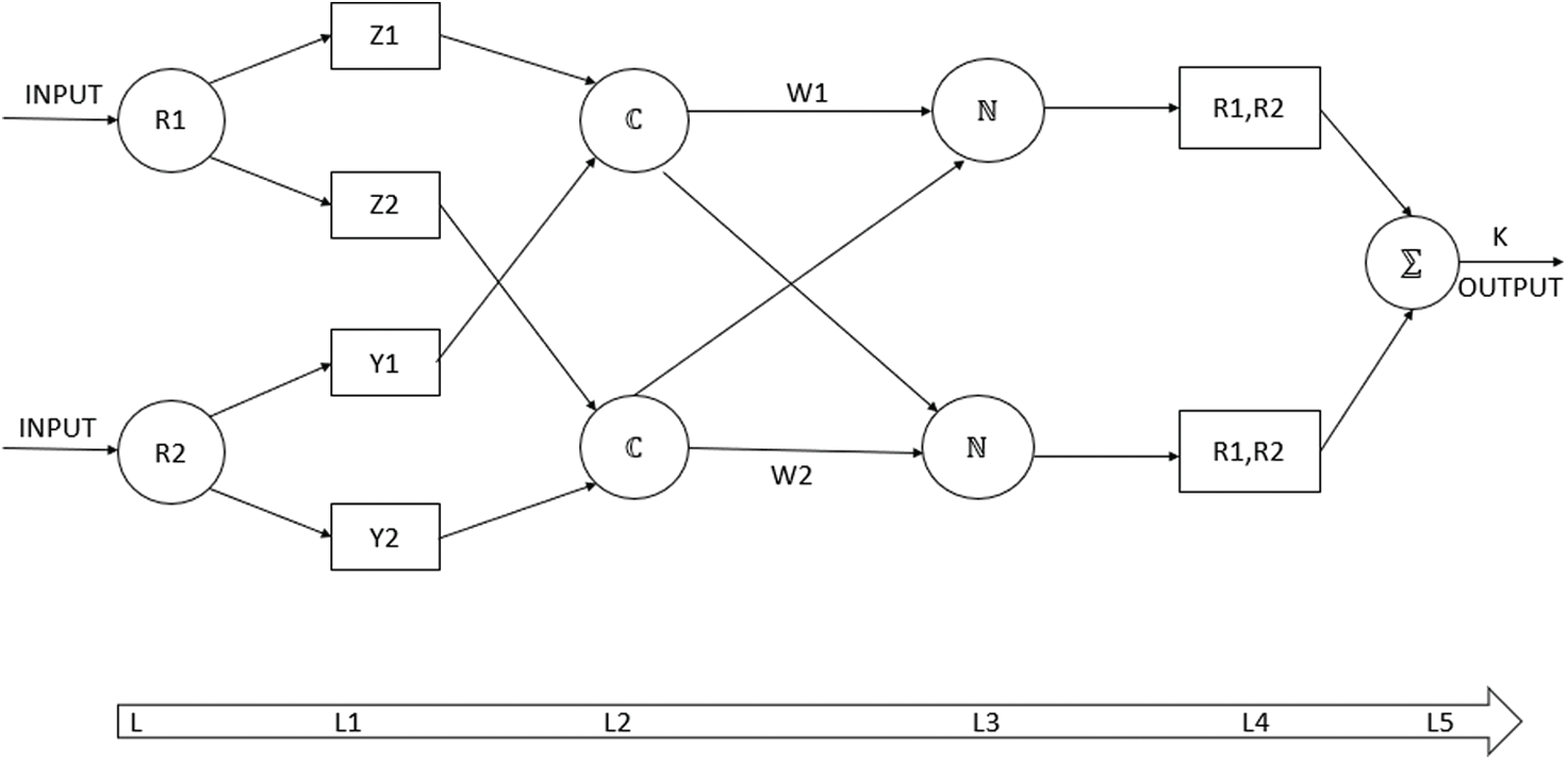

Fuzzy sets used in such models are typically convex and normal; a normal fuzzy set has at least one element whose membership degree is 1. Fuzzy neural network models are commonly used to automate the representation of input parameters, derive specific rules, and predict the corresponding outputs. The structure of the neuro-fuzzy system is shown in Fig. 2.

Figure 2: Neuro fuzzy model
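To make the ordered-pair definition and the normality and convexity conditions concrete, the sketch below stores a fuzzy set over a finite, ordered universe as a Python dict mapping each element r to its membership degree μ_Z(r). The discrete setting and the example sets are assumptions for illustration; on a finite ordered universe, convexity reduces to the membership sequence never dipping below the smaller of the highest points on either side.

```python
FuzzySet = dict[float, float]  # element r -> membership degree mu_Z(r) in [0, 1]


def is_normal(z: FuzzySet) -> bool:
    """A fuzzy set is normal if at least one element has membership degree 1."""
    return max(z.values()) == 1.0


def is_convex(z: FuzzySet) -> bool:
    """Convex: mu(r_j) >= min(mu(r_i), mu(r_k)) for every r_i < r_j < r_k."""
    mu = [z[r] for r in sorted(z)]
    for j in range(1, len(mu) - 1):
        # The strongest constraint on mu[j] comes from the highest point on each side.
        if mu[j] < min(max(mu[:j]), max(mu[j + 1:])):
            return False
    return True


medium_cost: FuzzySet = {0.0: 0.0, 0.25: 0.5, 0.5: 1.0, 0.75: 0.5, 1.0: 0.0}
two_peaks: FuzzySet = {0.0: 0.2, 0.25: 1.0, 0.5: 0.1, 0.75: 0.8}
print(is_normal(medium_cost), is_convex(medium_cost))  # True True
print(is_normal(two_peaks), is_convex(two_peaks))      # True False
```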

The Adaptive Neuro-Fuzzy Inference System (ANFIS) defines the detailed structure of the neuro-fuzzy inference system. ANFIS is based on fuzzy modeling combined with learning from a given dataset. Fuzzy systems are combined with neural networks in order to use the learning ability of neural networks to improve model performance, which gives better results. The parameters of the membership functions of the fuzzy inference system are computed from the input-output data, as shown in Fig. 3.

Figure 3: Adaptive neuro-fuzzy inference system
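The layer-by-layer computation of an ANFIS can be sketched as a zero-order Sugeno forward pass, consistent with the constant output membership functions reported for the model. This is a minimal illustration under stated assumptions: two normalized inputs (for example, security and availability) instead of the model's full input set, three hypothetical triangular membership functions per input, the product for rule firing strength, and hand-chosen rule constants. Training, which ANFIS performs with its hybrid least-squares and gradient-descent procedure, is omitted.

```python
import itertools
import math


def trimf(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership function with feet a, c and peak b (a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Hypothetical low / medium / high partition of an input normalized to [0, 1].
MFS = [(-0.5, 0.0, 0.5), (0.0, 0.5, 1.0), (0.5, 1.0, 1.5)]


def anfis_forward(inputs: list[float], consequents: list[float]) -> float:
    """Zero-order Sugeno ANFIS forward pass (grid partition, one constant per rule)."""
    if any(not 0.0 <= x <= 1.0 for x in inputs):
        raise ValueError("inputs must be normalized to [0, 1]")
    # Layer 1: degree of membership of each input in each of its fuzzy sets.
    degrees = [[trimf(x, *p) for p in MFS] for x in inputs]
    # Layer 2: one rule per combination of fuzzy sets; firing strength = product.
    strengths = [math.prod(combo) for combo in itertools.product(*degrees)]
    if len(strengths) != len(consequents):
        raise ValueError("need exactly one consequent per rule")
    # Layer 3: normalized firing strengths.
    total = sum(strengths)
    normalized = [w / total for w in strengths]
    # Layers 4-5: weight each rule's constant output and sum into one crisp value.
    return sum(w * f for w, f in zip(normalized, consequents))


# Rule (i, j) = (set of input 1, set of input 2); output rises with both inputs.
rule_outputs = [(i + j) / 4 for i in range(3) for j in range(3)]
print(anfis_forward([0.25, 0.0], rule_outputs))  # 0.125
```

Because the three triangles overlap so that their degrees sum to 1 everywhere on [0, 1], the total firing strength in layer 3 never becomes zero for valid inputs.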

The adaptive neuro-fuzzy inference system operates as follows. The nodes of the first layer represent the fuzzy sets: each takes an input variable, and its degree of membership is calculated as in Eq. (3).

where

MATLAB was used as the tool for conducting the experiments and deriving results from the data; the learning is performed internally by the tool, and preprocessing generates the results. In the methodology, the neuro-fuzzy approach is applied by selecting the membership functions in the inference system interface, which enables the system to produce results.

Learning and forecasting accessibility have a significant influence on choosing among different cloud services. This section describes the neuro-fuzzy inference-based prediction model used for accessibility learning and prediction. The model predicts the future load of cloud services and uses it as an indicator for choosing computing services for the remaining application load. This approach ensures better flexibility in the presence of time-varying cloud service loads.

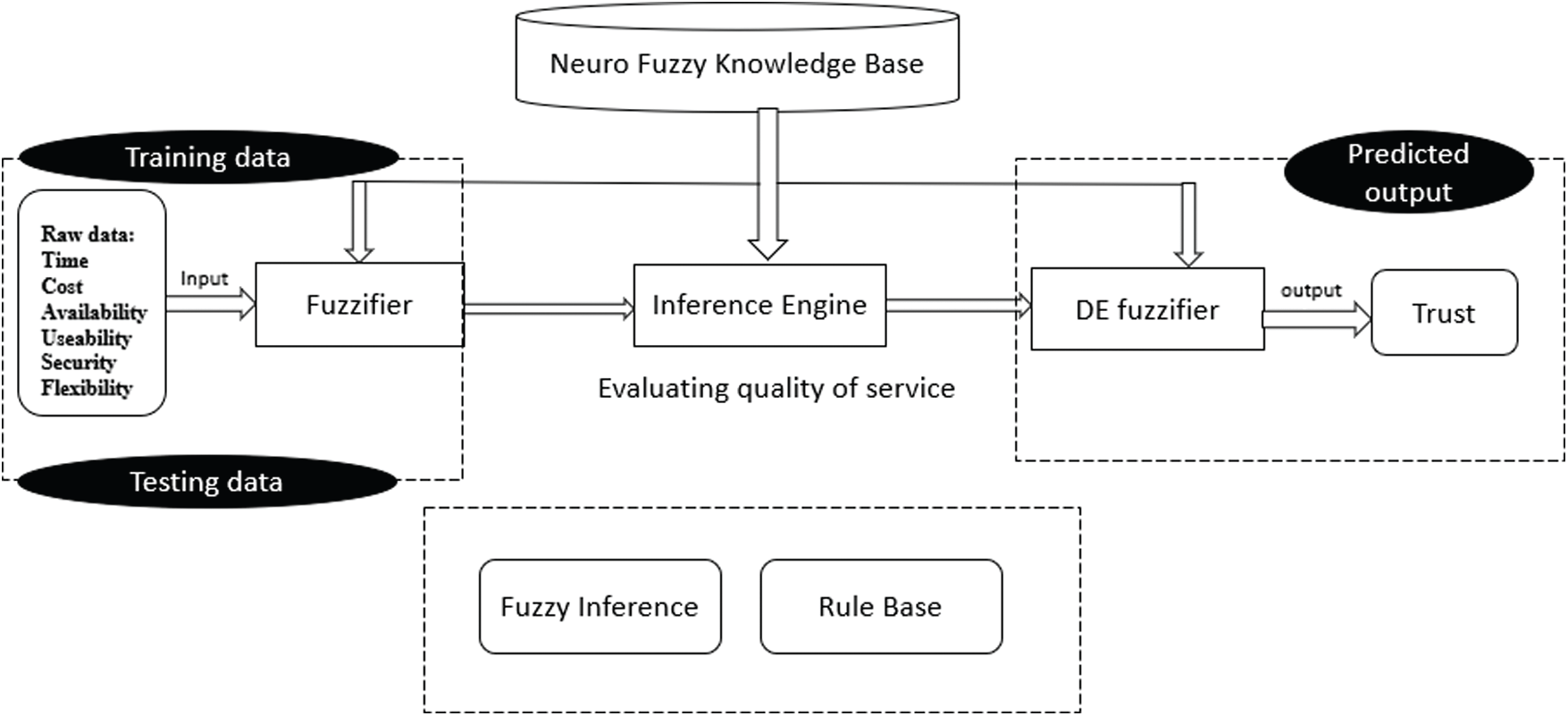

Fig. 4 illustrates the architectural model for evaluating cloud quality of service using neuro-fuzzy inference to predict trust. The model has three components: training data, testing data, and predicted output, each of which is further divided into subparts. In training and testing, the data category takes raw data as input with the attributes time, cost, availability, usability, security, and flexibility. These input parameters then pass through the fuzzifier to the Inference Engine (IE), which is divided into two parts: Fuzzy Inference (FI) and Rule Base (RB). The fuzzy inference engine is used to evaluate the quality of the cloud service. The final stage likewise consists of two subparts: the defuzzifier and the output, which is expressed as trust and referred to as the predicted output.

Figure 4: A proposed neuro-fuzzy inference-based prediction model

In the training stage, the input parameters are declared as variables containing the raw data elements of the dataset, which are imported into the Neuro-Fuzzy Designer window for training. All the data are plotted on the Neuro-Fuzzy Designer screen. After plotting, the input parameters need testing, which checks whether the data are accurate. If all the values the system returns in the designer fall within the plotted circles, the dataset is considered accurate and suitable for further use. The fuzzifier converts the required input parameters into fuzzy variables using the membership functions stored in the neuro-fuzzy knowledge base. The Inference Engine (IE) is further divided into two subparts: a) Fuzzy Inference (FI) and b) Rule Base (RB). The engine uses if-then rules to perform its functions and to convert inputs into meaningful outputs. The defuzzifier then converts the fuzzy output into a crisp value using the membership function. There are five defuzzification methods: centroid of area, bisector of area, mean of maximum, smallest of maximum, and largest of maximum.
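The five defuzzification methods listed above can be compared on a sampled output universe. The sketch below is an illustration under assumptions: the aggregated output membership is given as values `mu` at ascending, uniformly spaced points `xs` (uniform spacing lets sums stand in for areas), and the bisector is approximated by the first sample at which the cumulative area reaches half of the total. The method strings are shorthand chosen here to mirror the list.

```python
def defuzzify(xs: list[float], mu: list[float], method: str = "centroid") -> float:
    """Turn an aggregated fuzzy output into one crisp value."""
    if len(xs) != len(mu) or sum(mu) == 0:
        raise ValueError("need matching samples and a non-empty fuzzy output")
    if method == "centroid":  # centroid (center) of area
        return sum(x * m for x, m in zip(xs, mu)) / sum(mu)
    if method == "bisector":  # splits the area under mu into two equal halves
        half, area = sum(mu) / 2, 0.0
        for x, m in zip(xs, mu):
            area += m
            if area >= half:
                return x
    peak = max(mu)
    maxima = [x for x, m in zip(xs, mu) if m == peak]  # points where mu is maximal
    if method == "mom":  # mean of maximum
        return sum(maxima) / len(maxima)
    if method == "som":  # smallest of maximum
        return min(maxima)
    if method == "lom":  # largest of maximum
        return max(maxima)
    raise ValueError(f"unknown method: {method}")


# Hypothetical aggregated output with a flat top between 0.5 and 0.75.
xs = [0.0, 0.25, 0.5, 0.75, 1.0]
mu = [0.0, 0.5, 1.0, 1.0, 0.0]
for method in ["centroid", "bisector", "mom", "som", "lom"]:
    print(method, defuzzify(xs, mu, method))
```

The flat-topped output makes the differences visible: the three "maximum" methods disagree only because several samples share the peak degree.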

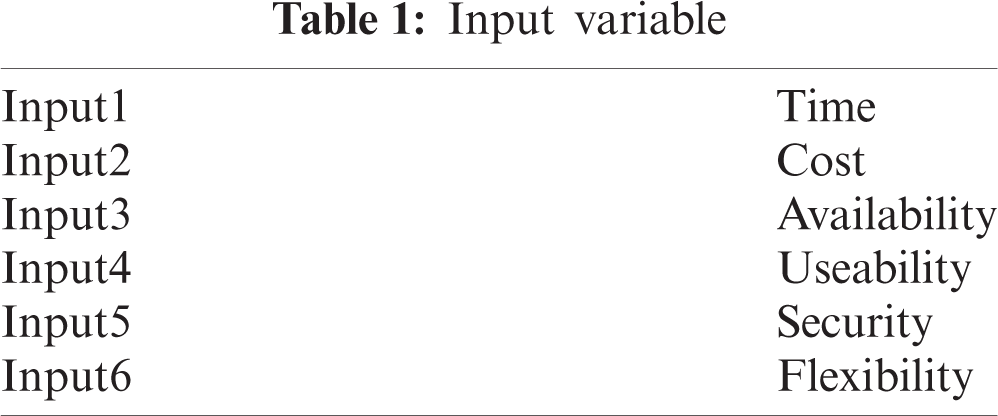

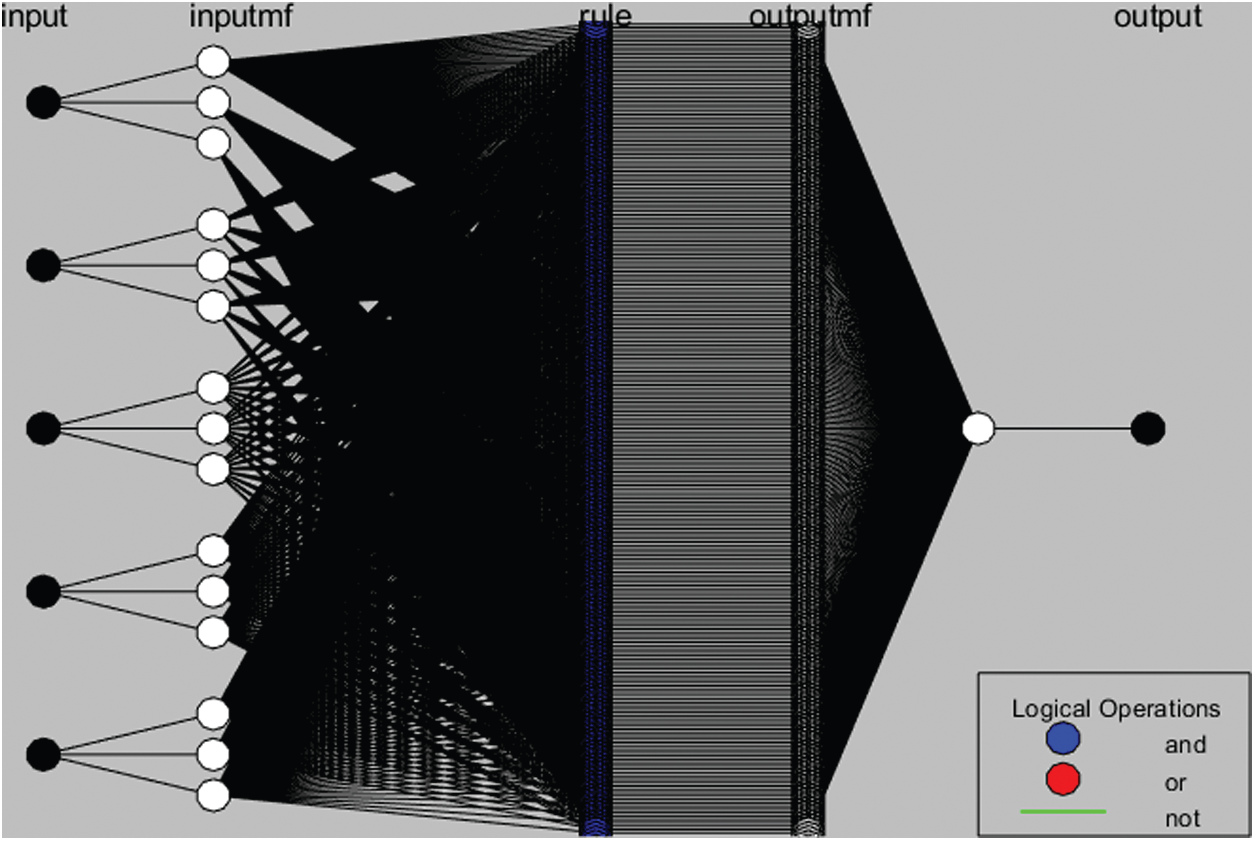

Parameters should be selected according to consumer preferences and should reflect the dynamic nature of the cloud. “Cloud services” is a broad term defined by the properties of cloud computing. The input parameters used are listed in Tab. 1.

Fuzzy inference is the process of mapping given inputs to an output using fuzzy logic. The process uses fuzzy operators, membership functions, and if-then rules. Fuzzy inference can be applied in many fields, such as automatic control, data classification, decision making, and expert systems. When the individual fuzzy models are mapped onto a neural network, the resulting structure is as shown in Fig. 5.

Figure 5: Neural network of proposed QoS parameter
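The fuzzifier, rule base, inference, and defuzzifier chain can be illustrated with a tiny Mamdani-style system. Everything specific here is a hypothetical choice for illustration: two normalized inputs (security and availability), a trust output on [0, 1], linear low/high membership functions, two if-then rules, min for AND and implication, max for OR and aggregation, and a centroid defuzzifier on 101 samples. It is not the rule base of the proposed model.

```python
def low(x: float) -> float:
    """Membership in 'low' on [0, 1]: 1 at 0, falling linearly to 0 at 1."""
    return max(0.0, min(1.0, 1.0 - x))


def high(x: float) -> float:
    """Membership in 'high' on [0, 1]: 0 at 0, rising linearly to 1 at 1."""
    return max(0.0, min(1.0, x))


def infer_trust(security: float, availability: float, samples: int = 101) -> float:
    # Fuzzification and rule evaluation.
    # R1: IF security is high AND availability is high THEN trust is high (AND = min).
    r1 = min(high(security), high(availability))
    # R2: IF security is low OR availability is low THEN trust is low (OR = max).
    r2 = max(low(security), low(availability))
    ys = [i / (samples - 1) for i in range(samples)]
    # Implication clips each consequent at its rule strength; aggregation takes the max.
    agg = [max(min(r1, high(y)), min(r2, low(y))) for y in ys]
    # Defuzzification: centroid of the aggregated output set.
    return sum(y * m for y, m in zip(ys, agg)) / sum(agg)


for s, a in [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]:
    print(f"security={s}, availability={a} -> trust={infer_trust(s, a):.3f}")
```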

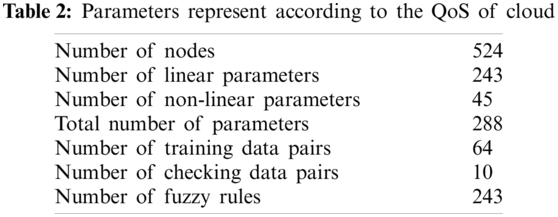

The network has 524 nodes and 243 linear parameters in total, as shown in Tab. 2. The input membership functions are of type trimf, the output membership functions are of constant type, and the system is trained with the hybrid method. In the testing phase, the neural network was evaluated on input-output data sets that were not used during training. Testing verifies the operation of the neuro-fuzzy model.
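The reported count of 243 linear parameters is consistent with a grid-partitioned, zero-order Sugeno ANFIS in which each of 5 inputs has 3 membership functions: the grid yields 3^5 = 243 rules, and each rule contributes one constant. The helper below reproduces that arithmetic under this assumption; it also counts premise (nonlinear) parameters, assuming 3 parameters per triangular membership function.

```python
def anfis_grid_counts(n_inputs: int, mfs_per_input: int, order: int = 0,
                      params_per_mf: int = 3) -> dict[str, int]:
    """Rule and parameter counts for a grid-partitioned Sugeno ANFIS."""
    if order not in (0, 1):
        raise ValueError("order must be 0 (constant) or 1 (linear) consequents")
    rules = mfs_per_input ** n_inputs  # one rule per combination of input fuzzy sets
    # Zero-order: one constant per rule; first-order: one coefficient per input plus a bias.
    per_rule = 1 if order == 0 else n_inputs + 1
    return {
        "rules": rules,
        "linear": rules * per_rule,                             # consequent parameters
        "nonlinear": n_inputs * mfs_per_input * params_per_mf,  # premise (MF) parameters
    }


print(anfis_grid_counts(5, 3))           # {'rules': 243, 'linear': 243, 'nonlinear': 45}
print(anfis_grid_counts(5, 3, order=1))  # {'rules': 243, 'linear': 1458, 'nonlinear': 45}
```

Because the rule count grows as m^n, adding a sixth input with three membership functions would triple it to 729 rules, which is why grid-partitioned ANFIS models are usually kept to a handful of inputs.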

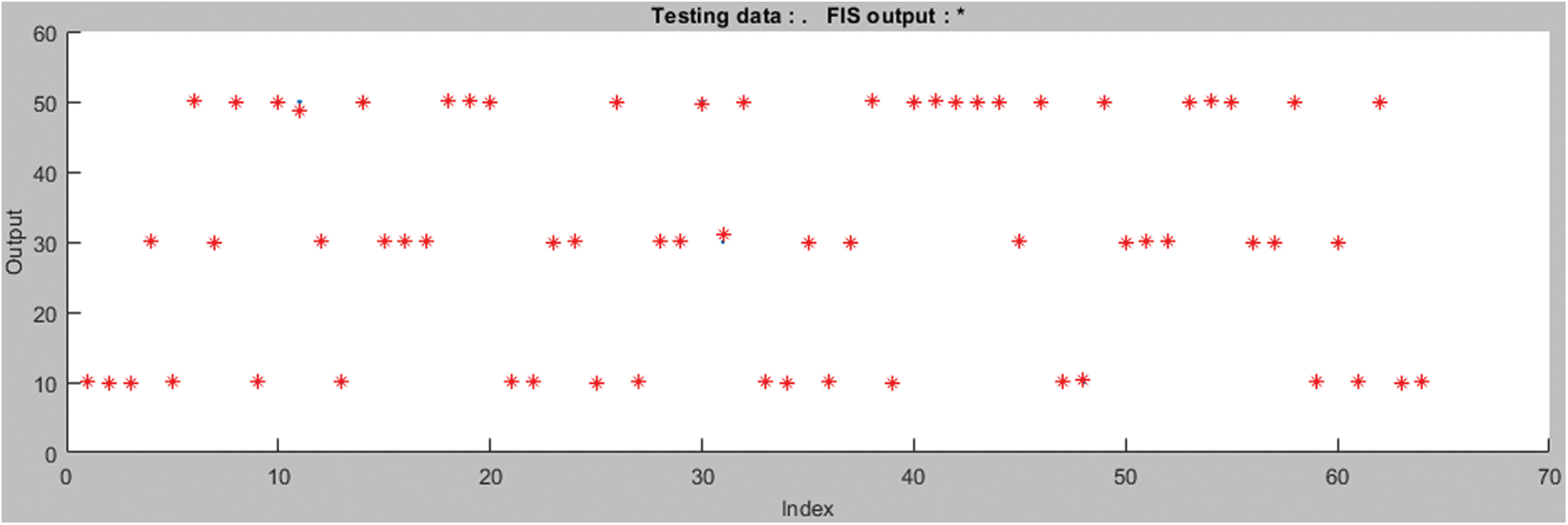

The outputs produced by the network were compared with the available data. In evaluating the quality of service of cloud computing, the average training error is 0.21926, as shown in Fig. 6. The data were collected from expert members, and the model reached its decision after only 3 epochs using 5 input variables. Testing with the 5 input variables showed that the deviations of the QoS model stay within the tolerance limits, so the proposed model produces a valid output. The average testing error is 0.0555, as shown in Fig. 7.

Figure 6: Training data of neural network

Figure 7: Testing data of fuzzy inference system

Fig. 7 shows the computed average testing error of 0.0555. All data are plotted on the x- and y-axes, and during training the scattered data points should be fitted accurately.
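The average errors quoted above summarize how far the model outputs deviate from the target values. A minimal sketch, assuming the reported figure is the root-mean-square error (RMSE), which is how MATLAB's `anfis` reports training error per epoch; the sample targets and predictions are made up for illustration.

```python
import math


def rmse(targets: list[float], outputs: list[float]) -> float:
    """Root-mean-square error between target values and model outputs."""
    if not targets or len(targets) != len(outputs):
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(t - o) ** 2 for t, o in zip(targets, outputs)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical trust targets vs. model predictions on a held-out test set.
targets = [0.20, 0.55, 0.80, 0.35]
predictions = [0.25, 0.50, 0.85, 0.40]
print(f"test RMSE = {rmse(targets, predictions):.4f}")
```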

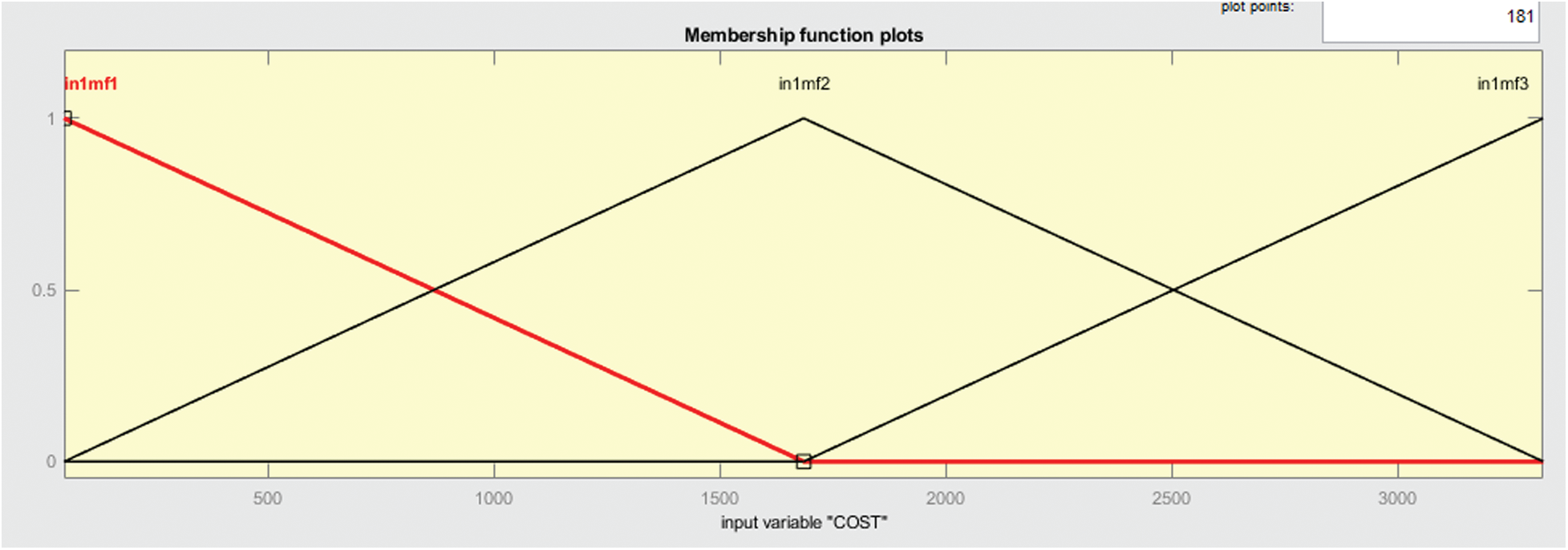

The first step in this research is to build the fuzzy logic system. Its basic elements (structure, input and output variables, and number of membership functions) are the same as those of the ANFIS. Once the fuzzy logic system is developed, values for the input parameters are generated randomly, the fuzzy system is used to compute the QoS value for those inputs, and the results are written to a training file. The neural network then uses this training file to train its neurons. On this basis, the neuro-fuzzy system was developed. The fuzzy membership functions of the fuzzy QoS cloud model are shown in Fig. 8.

Figure 8: Membership function of input variable cost
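The data-generation step described above (random inputs, fuzzy QoS evaluation, training file) can be sketched as follows. The two-input scorer `fuzzy_qos`, its two rules and output constants, and the CSV layout are hypothetical stand-ins for the paper's fuzzy system; the layout puts the inputs first and the target in the last column, which is the arrangement MATLAB's `anfis` expects for training data.

```python
import csv
import io
import random


def fuzzy_qos(security: float, availability: float) -> float:
    """Hypothetical two-rule zero-order Sugeno QoS scorer for inputs in [0, 1]."""
    # Linear memberships on the normalized universe: mu_high(x) = x, mu_low(x) = 1 - x.
    w_good = min(security, availability)          # R1: both high  -> QoS 0.9
    w_poor = max(1 - security, 1 - availability)  # R2: either low -> QoS 0.2
    return (0.9 * w_good + 0.2 * w_poor) / (w_good + w_poor)


def make_training_file(n_rows: int, seed: int = 0) -> str:
    """Sample random inputs, score them with the fuzzy system, and return CSV text."""
    rng = random.Random(seed)  # seeded so the generated file is reproducible
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["security", "availability", "qos"])  # target in the last column
    for _ in range(n_rows):
        s, a = rng.random(), rng.random()
        writer.writerow([f"{s:.4f}", f"{a:.4f}", f"{fuzzy_qos(s, a):.4f}"])
    return buffer.getvalue()


print(make_training_file(3))
```

In practice the returned text would be written to disk and loaded as the training matrix; keeping it in memory here makes the sketch self-contained.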

Fig. 8 shows the membership functions of the input variable cost. They are of type trimf (triangular), and the input range is divided into three membership functions.
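A triangular (trimf) membership function is fixed by three parameters: two feet a and c and a peak b. The sketch below fuzzifies a normalized cost value into three such sets; the breakpoints of the low/medium/high partition are hypothetical, and letting a equal b (or b equal c) produces the shoulder shapes often used at the ends of a range.

```python
def trimf(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with feet a, c and peak b, where a <= b <= c.

    a == b gives a left shoulder and b == c a right shoulder (degree 1 at the edge).
    """
    if x < a or x > c:
        return 0.0
    if x < b:
        return (x - a) / (b - a)  # rising edge (b > a is guaranteed here)
    if x > b:
        return (c - x) / (c - b)  # falling edge (c > b is guaranteed here)
    return 1.0  # x == b: the peak


# Hypothetical partition of cost normalized to [0, 1].
COST_MFS = {"low": (0.0, 0.0, 0.5), "medium": (0.0, 0.5, 1.0), "high": (0.5, 1.0, 1.0)}


def fuzzify(x: float, mfs: dict[str, tuple[float, float, float]]) -> dict[str, float]:
    """Degree of membership of x in each named fuzzy set."""
    return {name: trimf(x, *params) for name, params in mfs.items()}


print(fuzzify(0.25, COST_MFS))  # {'low': 0.5, 'medium': 0.5, 'high': 0.0}
```

The same function applies unchanged to the security and usability inputs; only the breakpoints in the partition dictionary would differ.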

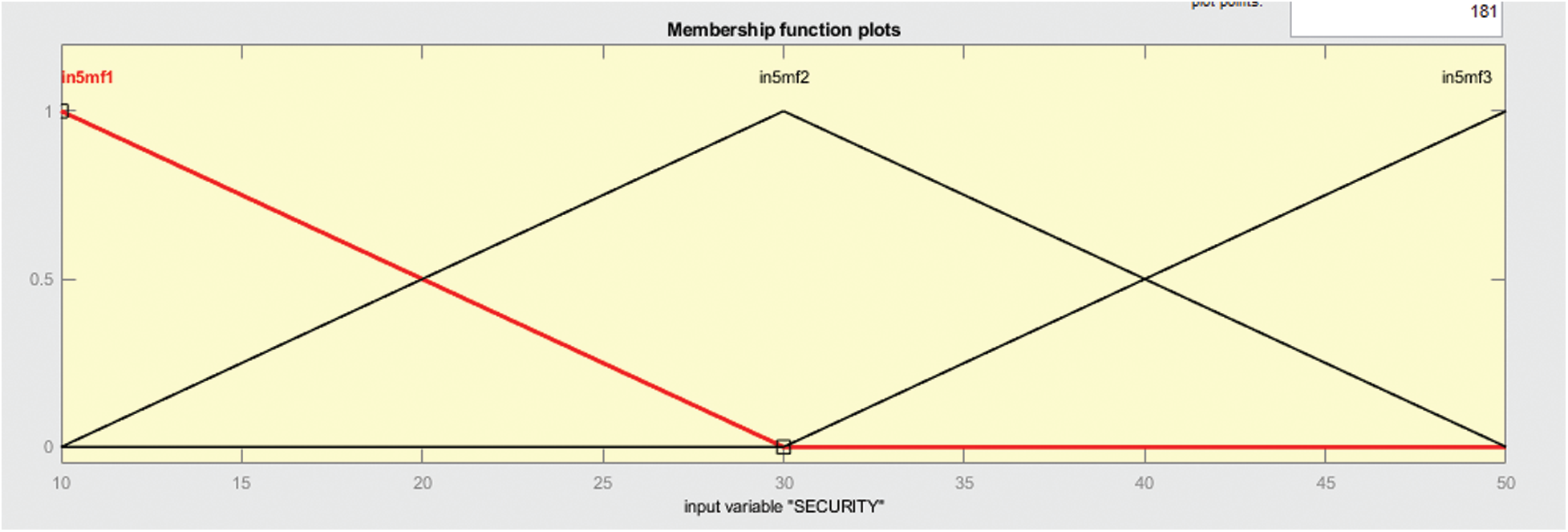

Fig. 9 shows the membership functions of the input variable security. They are of type trimf (triangular), and the input range is divided into three membership functions.

Figure 9: Membership function of input variable security

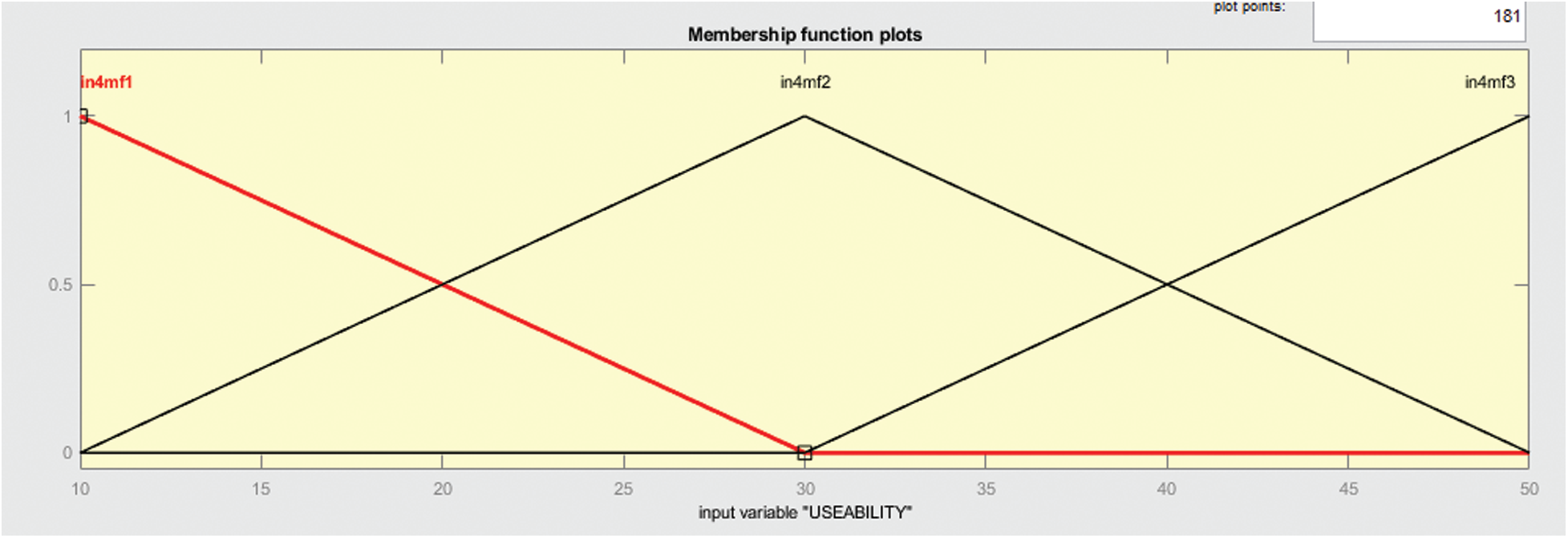

Fig. 10 shows the membership functions of the input variable usability. They are of type trimf (triangular), and the input range is divided into three membership functions. A graphical summary of the predicted output is shown in Fig. 11.

Figure 10: Membership function of input variable usability

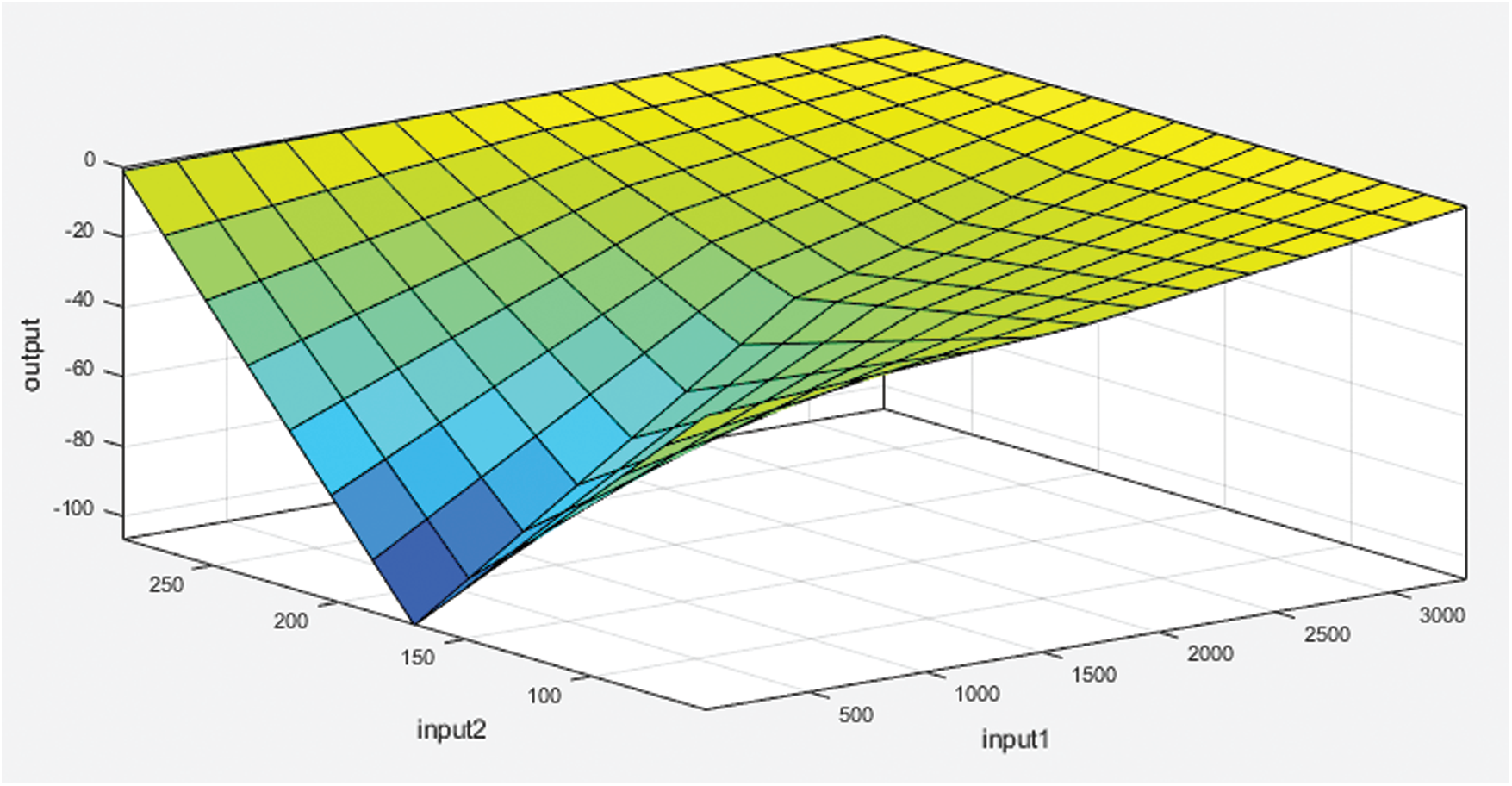

Fig. 11 shows how the QoS data determine the trust output. In this surface plot, the yellow region indicates partial trust in the system for the input values on the x- and y-axes.

Figure 11: Surface diagram of proposed trust model

This research identifies the core cloud quality-of-service parameters, such as usability and availability, while other parameters related to the required time, cost, and security are dynamic and can be learned by the model using artificial intelligence and machine learning, giving it autonomous behavior that supports greater flexibility. Data management solutions alone are becoming very expensive and cannot cope with ever-increasing data complexity. Taxonomies of data flexibility, for both non-cloud and cloud computing networks, are strongly shaped by quality-of-service considerations. Prior implementations allocated resources based on a single reputation value, ignoring resource heterogeneity. Hence, the proposed resource allocation approach measures a credibility score from several attributes, with a focus on QoS parameters. The approach also estimates the optimal training period for the feed-forward neural network so as to improve multiple QoS parameters in a cloud environment. Our experiment demonstrates that the modeling methodology of QoS-based cloud security evaluation, as well as the model itself, can be very successful in providing cloud application owners with the structured logic they need to configure their security policies, thereby helping them specify security policies that minimize QoS violations.
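The attribute-based QoS scoring for resource allocation (resource reputation, task completion time, task completion ratio, and resource load) can be illustrated with simple additive weighting: min-max normalize each attribute across the candidate resources, invert the attributes where lower is better, and rank by the weighted sum. This is a sketch of that generic technique, not the paper's exact scoring formula; the weights and the resource data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    reputation: float        # higher is better
    completion_time: float   # mean task completion time in seconds, lower is better
    completion_ratio: float  # fraction of tasks completed, higher is better
    load: float              # current utilization in [0, 1], lower is better


# Hypothetical weights (summing to 1) and direction of preference for each attribute.
WEIGHTS = {"reputation": 0.3, "completion_time": 0.3, "completion_ratio": 0.2, "load": 0.2}
HIGHER_IS_BETTER = {"reputation": True, "completion_time": False,
                    "completion_ratio": True, "load": False}


def qos_scores(resources: list[Resource]) -> dict[str, float]:
    """Simple additive weighting over min-max normalized attributes."""
    scores = {r.name: 0.0 for r in resources}
    for attr, weight in WEIGHTS.items():
        values = [getattr(r, attr) for r in resources]
        lo, hi = min(values), max(values)
        for r, v in zip(resources, values):
            if hi == lo:
                norm = 1.0  # attribute does not discriminate between candidates
            elif HIGHER_IS_BETTER[attr]:
                norm = (v - lo) / (hi - lo)
            else:
                norm = (hi - v) / (hi - lo)
            scores[r.name] += weight * norm
    return scores


def allocate(resources: list[Resource]) -> str:
    """Pick the resource with the highest QoS score."""
    scores = qos_scores(resources)
    return max(scores, key=scores.get)


pool = [
    Resource("vm-a", reputation=0.9, completion_time=12.0, completion_ratio=0.97, load=0.70),
    Resource("vm-b", reputation=0.7, completion_time=8.0, completion_ratio=0.92, load=0.30),
    Resource("vm-c", reputation=0.5, completion_time=20.0, completion_ratio=0.80, load=0.10),
]
print(qos_scores(pool), "->", allocate(pool))
```

Because min-max normalization is relative to the candidate set, a resource's score can change when other resources join or leave the pool, even if its own attributes stay the same.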

Acknowledgement: Thanks to our families and colleagues, who provided moral support.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding this study.

1. B. Shargabi, S. Jawarneh and S. Hayajneh, “A cloudlet based security and trust model for e-government web services,” Journal of Theoretical and Applied Information Technology, vol. 98, no. 1, pp. 27–37, 2020.

2. J. Sun, “Security and privacy protection in cloud computing: Discussions and challenges,” Journal of Theoretical and Applied Information Technology, vol. 160, no. 4, pp. 102642–1022650, 2020.

3. A. Awan, M. Shiraz, M. U. Hashmi, Q. Shaheen and A. Ditta, “Secure framework enhancing AES algorithm in cloud computing,” Security Communication Networks, vol. 20, no. 2, pp. 1–16, 2020.

4. L. Deyi, C. Guisheng and Z. Haisu, “Analysis of hot topics in cloud computing,” ZTE Communication, vol. 8, no. 4, pp. 1–5, 2020.

5. H. Ali, “Key factors increasing trust in cloud computing applications in the Kingdom of Bahrain,” International Journal of Computer Digital System, vol. 9, no. 2, pp. 309–317, 2020.

6. S. Mehraj and M. T. Banday, “Establishing a zero trust strategy in cloud computing environment,” in Proc. of 2020 Int. Conf. on Computer Communication Informatics, Coimbatore, India, pp. 20–25, 2020.

7. J. Pavlik, V. Sobeslav and J. Horalek, “Statistics and analysis of service availability in cloud computing,” in ACM’s Int. Conf. Proceedings Series, Ottawa, Canada, pp. 310–313, 2014.

8. J. Sun, “Research on the tradeoff between privacy and trust in cloud computing,” IEEE Access, vol. 7, pp. 10428–10441, 2019.

9. A. Li, “Privacy, security and trust issues in cloud computing,” International Journal of Computer Science Engineering, vol. 6, no. 10, pp. 29–32, 2019.

10. E. Sayyed, “Trust model for dependable file exchange in cloud computing,” International Journal of Computer Application, vol. 180, no. 49, pp. 22–27, 2018.

11. P. Kumar, S. Lokesh, G. Chandra and P. Parthasarathy, “Cloud and IoT based disease prediction and diagnosis system for healthcare using fuzzy neural classifier,” Future Generation Computer System, vol. 86, no. 3, pp. 527–534, 2018.

12. Y. Wang, J. Wen, W. Zhou and F. Luo, “A novel dynamic cloud service trust evaluation model in cloud computing,” in Proc. of 17th IEEE Int. Conf. Trust Security Privacy Computer Communication, New York, USA, pp. 10–15, 2018.

13. A. Kashif, A. Memon, S. Siddiqui, R. Balouch and R. Batra, “Architectural design of trusted platform for IaaS cloud computing,” International Journal of Cloud Application Computer, vol. 8, no. 2, pp. 47–65, 2018.

14. A. Selvaraj and S. Sundararajan, “Evidence-based trust evaluation system for cloud services using fuzzy logic,” International Journal of Fuzzy System, vol. 19, no. 2, pp. 329–337, 2017.

15. P. Zhang, M. Zhou and G. Fortino, “Security and trust issues in fog computing: A survey,” Future Generation Computer System, vol. 88, no. 1, pp. 16–27, 2018.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.