DOI:10.32604/cmc.2021.019059

Computers, Materials & Continua

Article

Intelligent IoT-Aided Early Sound Detection of Red Palm Weevils

1College of Computing and Information Technology, Shaqra University, Shaqra, 11961, Saudi Arabia

2Department of Industrial Electronics and Control Engineering, Faculty of Electronic Engineering, Menoufia University, Egypt

3Faculty of Science, Sohag University, Sohag, 82524, Egypt

4Department of Physics, College of Sciences, University of Bisha, Bisha, 61922, Saudi Arabia

5Department of Mathematics, College of Sciences, University of Bisha, Bisha, 61922, Saudi Arabia

6Department of Computer and Information Science, CeRDaS, Universiti Teknologi Petronas, Malaysia

*Corresponding Author: Omar Reyad. Email: oreyad@science.sohag.edu.eg

Received: 31 March 2021; Accepted: 09 May 2021

Abstract: Smart precision agriculture utilizes modern information and wireless communication technologies to carry out challenging agricultural processes. Accordingly, Internet of Things (IoT) technology can be applied to monitor and detect harmful insect pests such as red palm weevils (RPWs) in date palm farms. In this paper, we propose a new IoT-based framework for early sound detection of RPWs using a fine-tuned transfer learning classifier, namely InceptionResNet-V2. The sound sensors, namely TreeVibes devices, are carefully mounted on each palm trunk to set up a wireless sensor network in the farm. Palm trees are labeled based on the sensor node number to identify the infested cases. Then, the acquired audio signals are sent to a cloud server for further on-line analysis by our fine-tuned deep transfer learning model, i.e., InceptionResNet-V2. The proposed infestation classifier has been successfully validated on the public TreeVibes database. It includes a total of 2485 short recordings, of which 1754 are clean and 731 are infested signals. Compared to other deep learning models in the literature, our proposed InceptionResNet-V2 classifier achieved the best performance on the public database of TreeVibes audio recordings. The resulting classification accuracy score was 97.18%. Using 10-fold cross validation, the fine-tuned InceptionResNet-V2 achieved the best average accuracy score of 94.53% with a standard deviation of ±1.69. Applying the proposed intelligent IoT-aided detection system of RPWs in date palm farms is the main prospect of this research work.

Keywords: Red palm weevils; smart precision agriculture; internet of things; artificial intelligence

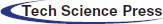

Date palm trees are not only a food source but also one of the main sources of income in Arab countries, especially in Saudi Arabia [1]. Although the date palm is the oldest known cultivated tree, it has recently been confronted with several contemporary issues. The red palm weevil (RPW), Rhynchophorus ferrugineus, which originated in Asia and was first discovered in the Gulf region in the 1980s, has caused enormous losses to date palm farmers [2]. Over the last four decades, the RPW has steadily extended its global range. This species quickly spread across the Middle East’s Gulf area, North Africa’s Maghreb countries, and Europe’s Mediterranean basin countries, as shown in Fig. 1. The removal of highly infested trees alone costs the Gulf countries and the Middle East around 8 million dollars per year, as reported by the Food and Agriculture Organization (FAO) [3].

Figure 1: The red palm weevil (RPW) global geographical distribution

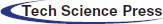

As a result, finding a cost-effective solution to the RPW problem would not only save the “blessed” palm tree but also help farmers and governments reverse the mounting financial losses. Decision-makers, academics, and farmers generally believe that early recognition could save thousands or even millions of healthy trees through simple steps to quarantine infested trees and protect non-infested trees and offshoots [4]. Accordingly, early discovery of infestation could in principle help defeat the RPW. The red palm weevil poses a significant threat to palm tree preservation because obvious symptoms of infestation appear only once it is too late to save the tree. The weevil’s cryptic behavior and inherent biological characteristics have made it very difficult to recognize and therefore to manage. Several characteristics of the RPW distinguish its growth stages, as depicted in Fig. 2 [5]. Current RPW management systems have many weaknesses and difficulties, including the difficulty of recognizing weevil infestation early, the limitations of biological control measures under field conditions, and a lack of farmer engagement in control operations [6]. Therefore, early detection of such pests provides the best chance of eradicating them and minimizing the likelihood of palm tree damage. Fortunately, with the advancement of artificial neural network (ANN) technology, detection of the RPW in its early stages could be achieved [7].

Figure 2: RPW life cycle

Visual examination of the tree for the appearance of symptoms, identification of the sound made by feeding larvae, and chemical analysis of volatile signatures generated by infested date palms are considered the best-known RPW early detection approaches [8]. Thermal imaging to monitor temperature changes in infested palms is another classical method to detect the RPW. The most expensive approach is sound detection, and the most reliable one is based on the observation of symptoms [9]. Rather than relying on conventional detection approaches, there is a need to develop computational solutions for finding and controlling the RPW that are both accurate and long-lasting. It is also important to determine which main characteristics to look for when identifying infested palm trees. Lately, a fusion of computer science, sensors, and advanced electronic technology has been used to construct practical and fast mechanisms for automatic recognition of the RPW. Acoustic devices, X-ray imaging, remote sensing tools, and radio telemetry are among the most significant and promising new trends for controlling the RPW [10].

Smart precision agriculture exploits modern information and wireless communication technologies to carry out challenging agricultural processes and/or regular tasks automatically [11,12]. For instance, the Internet of Things (IoT) has been applied in real-life agricultural applications, such as precision management of water irrigation, crop diseases and insect pests [13–15]. IoT mainly relies on wireless sensor networks (WSNs) to measure and collect environmental parameters such as soil moisture and humidity. These collected data can then be saved or analyzed to assist the decisions of specialists or farmers, and/or to operate water irrigation pumps [11]. Artificial intelligence (AI) techniques such as machine learning and deep learning models [16,17] have recently been employed to analyze acquired agricultural and environmental data. Crop health monitoring is a major aspect of smart precision agriculture [18], specifically identifying the infestation status of insect pests in the farm. Traditional techniques and manual detection of insect pests are insufficient, time-consuming and relatively expensive. Therefore, early detection of plant pests is a high priority for farmers so that they can use suitable pesticides and avoid the loss of crops [19]. Hence, the focus of this study is to propose a new solution for continuous health monitoring of date palm trees against RPWs by using IoT and deep learning models. The transfer learning approach overcomes the drawback of traditional deep learning methods when only a small dataset and limited resources are available for the training phase. The main idea of this approach is to transfer the knowledge from a similar task, reusing the pre-trained deep learning model to achieve another task with minimal computational power [20]. The advantages of transfer learning have been widely exploited in several applications, e.g., medical and healthcare systems [21,22], industrial manufacturing [23], and robotic systems [24].

Moreover, transfer learning models have shown significant results in achieving smart water irrigation [11] and in the classification of plant diseases and pests [25] in the field of agriculture. Convolutional neural networks (CNNs) are still the most popular deep learning method. Residual neural networks (ResNet) [26], MobileNet [27] and Xception [28] are three well-known pretrained models used in the transfer learning approach. This paper presents a new IoT-based sound detection system of RPWs using deep transfer models of residual inception networks. The main contributions of this study are presented as follows.

• Proposing a new intelligent IoT-based system for detecting RPW sounds at an early stage of infestation in date palm trees.

• Developing a transfer learning-based classifier to accomplish accurate sound identification of RPWs.

• Conducting extensive tests and a comparative study of our proposed method against methods from previous studies to validate the advanced capabilities of our early detection system of RPWs.

The remainder of this paper is organized as follows. Section 2 reviews previous studies, including different machine and deep learning models for identifying RPWs. The public sound dataset of RPWs, the architecture of transfer learning models and a description of our proposed RPW detection system are presented in Section 3. Evaluation results and discussions of deep learning classifiers to detect clean and infested palm trees are given in Sections 4 and 5, respectively. Section 6 presents the conclusions and outlook of this study.

Deep learning (DL) is a cutting-edge machine learning (ML) technology that performs well in various tasks such as image classification, scene analysis, fruit detection, yield estimation, and many others [29]. DL can create new features from a restricted range of features in the training dataset, which is considered one of its key advantages over other ML algorithms. Convolutional Neural Networks (CNN), Fully Convolutional Networks (FCN), Recursive Neural Networks (RNN), Deep Belief Networks (DBN) and Deep Neural Networks (DNN) are examples of DL architectures that have been widely deployed in a variety of research domains [30]. Recently, several DL techniques have been applied to different agricultural problems with increasing significance. Researchers in [31] surveyed several DL techniques applied to different agricultural issues. The authors examined the models employed, the data source, the hardware utilized, and the feasibility of real-time deployment to investigate future integration with autonomous robotic mechanisms.

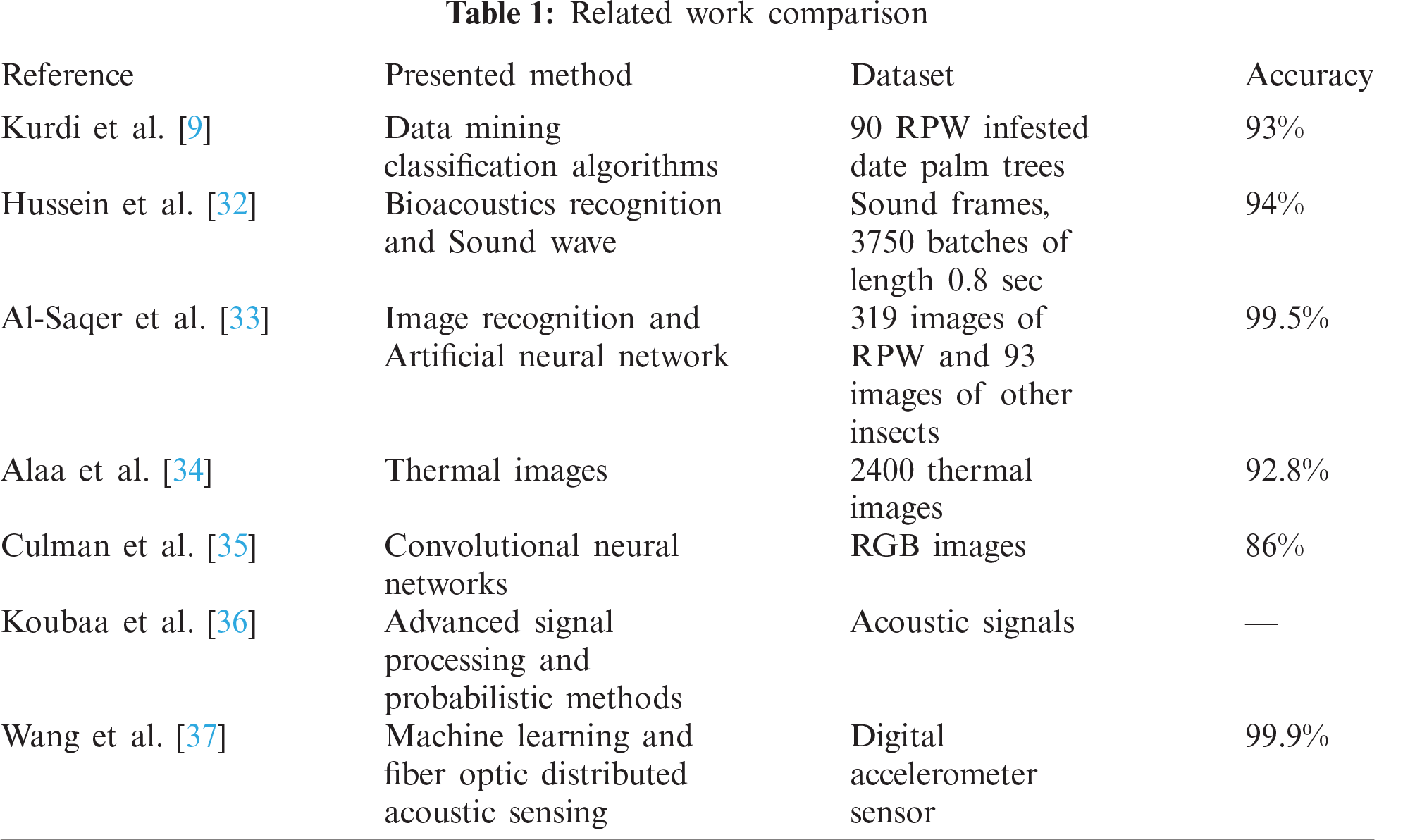

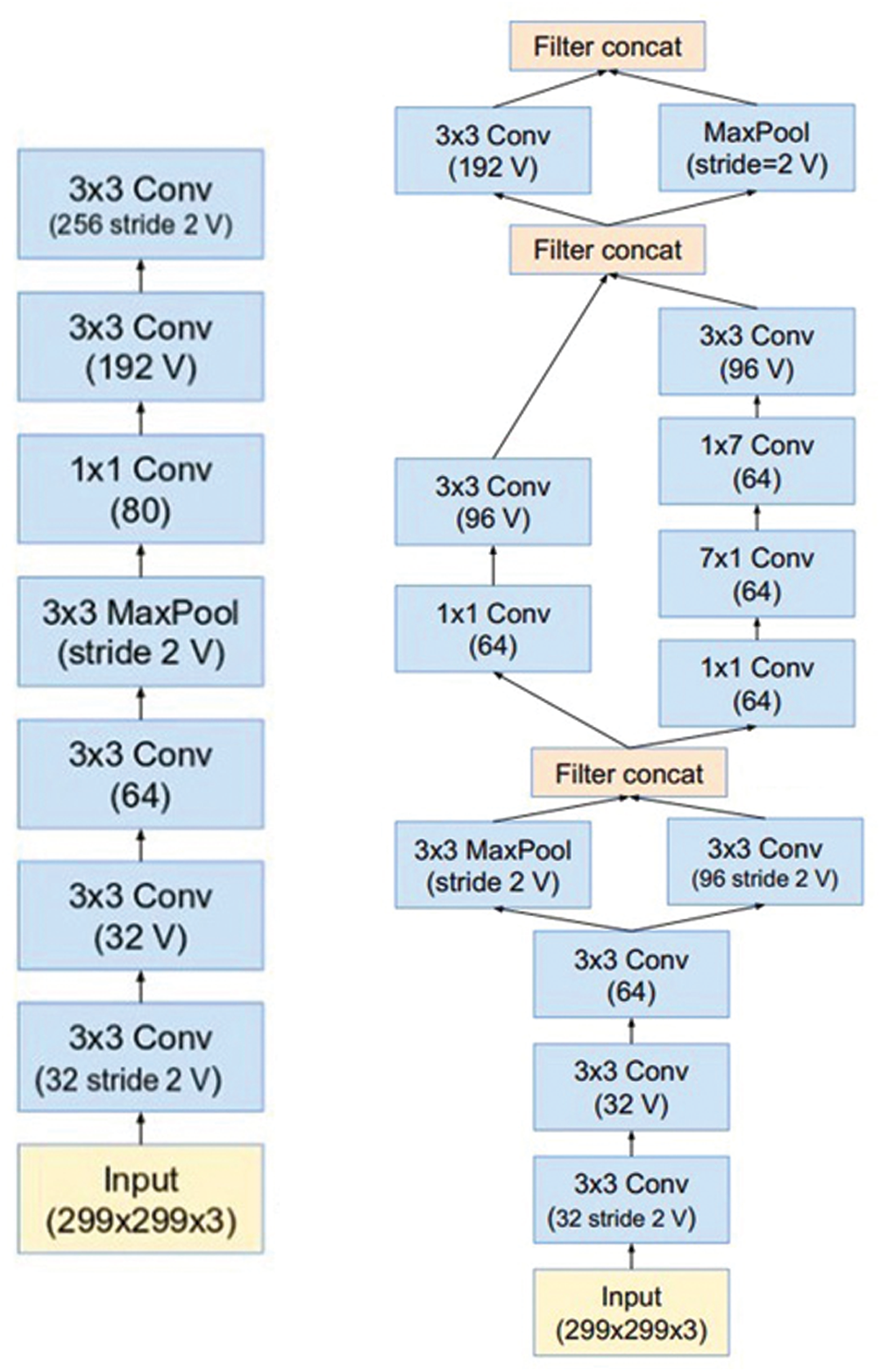

The authors in [9] tested the ability of ten state-of-the-art data mining classification models to predict RPW infestations in their early phases, before major tree damage occurs, using plant-size and temperature measurements obtained from individual trees. The experimental results demonstrated that, using data mining, RPW infestations could be predicted with an accuracy of up to 93%, a precision above 87%, a recall of 100%, and an F-measure greater than 93%. A new signal processing platform has been designed to identify the presence of the RPW using its own bioacoustic features [32]. An analysis of the parameters for selecting the best time frame length as well as window feature is given. The findings indicate that the established method with the selected representative characteristics is more effective. The authors in [33] proposed a study to create algorithms that can classify the RPW and differentiate it from other insects present in palm tree habitats using image recognition and artificial neural network (ANN) techniques. It was found that a three-layer ANN trained with the Conjugate Gradient with Powell/Beale Restarts method is the most effective for identifying the RPW. In [34], normal and thermal images of palm trees were used to detect RPW, blight spot and leaf spot diseases. A CNN was used to distinguish between blight spot and leaf spot infections, and a support vector machine (SVM) was used to distinguish between leaf spots and RPW pests. The accuracy rates of the CNN and SVM algorithms were 97.9% and 92.8%, respectively. Based on remote images from the Alicante area in Spain, researchers in [35] introduced the first region-wide geographical collection of Phoenix dactylifera and Phoenix canariensis palm trees. The presented detection model, which was created using RetinaNet, offers a quick and easy way to map isolated and densely distributed date and canary palms as well as other Phoenix palms.

To monitor palms remotely, an IoT-based smart prototype has also been suggested for the early detection of red palm weevil infestation [36]. The data are collected using accelerometer sensors; signal processing and statistical methods are then applied to analyze these data and define the attack fingerprint. In [37], a solution for early identification of the RPW in large farms is presented using a hybrid of ML and a fiber-optic distributed acoustic sensing (DAS) system. The obtained results showed that an ANN with 99.9% accuracy and a CNN with 99.7% accuracy can effectively distinguish between healthy and infested palm trees. Tab. 1 summarizes the reviewed related work and its main characteristics. It is evident from the literature that image-based methods had high accuracy values compared with sound-based ones. This motivates this work to consider the improvement of sound detection models.

3.1 TreeVibes Device and Dataset

Sounds of RPWs and other borers are collected using the TreeVibes recording device, as depicted in Fig. 3a [38]. The resulting dataset is public and freely available at http://www.kaggle.com/potamitis/treevibes (last accessed on 20 February 2021). A piezoelectric crystal acting as an embedded microphone is used for sensing the vibrations inside the trees, i.e., sounds of the borers including the RPWs. The acquired signal can be converted to short audio signals to be stored and transmitted through wireless IoT networks to a cloud server. An example of the mean spectral profile for three different sounds of an RPW is shown in Fig. 3b [39]. The TreeVibes device cannot count the number of borers or determine their location in the palm tree, but it is able to detect their feeding sounds within a spherical region of 1.5 to 2 m radius [38]. Therefore, the positioning of the TreeVibes sensing device on the trunk of the palm tree is crucial to achieve the expected early detection performance.

Figure 3: (a) TreeVibes device for recording palm tree sounds of pests [35], and (b) Graphical representation of three different impulses of detected RPW sounds

The TreeVibes database includes 35 folders with short, annotated audio recordings. The sampling frequency of these recordings is 8.0 kHz. All tree vibration sounds have been saved in WAV format. The proposed classifier was trained and tested on 26 and 9 folders, respectively. The infested data represent the potential sounds of feeding and/or moving wood-boring pests, such as Rhynchophorus ferrugineus (red palm weevil), Aromia bungii (red-necked longicorn) and Xylotrechus chinensis [35]. In this study, we assumed that the infested status of date palm trees is caused by RPWs only.
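To make the recording format concrete, the following minimal sketch writes and reads back a short WAV clip at the stated 8.0 kHz sampling frequency using only the Python standard library. The 16-bit mono encoding, the one-second duration and the synthetic decaying bursts are assumptions made for illustration; they are not taken from the TreeVibes dataset, and all function names are hypothetical.

```python
import io
import math
import struct
import wave

SAMPLE_RATE_HZ = 8000  # 8.0 kHz, as stated for the TreeVibes recordings


def synth_impulse_train(duration_s=1.0, pulse_every_s=0.25, pulse_len=40):
    """Build a toy signal: short decaying bursts separated by silence."""
    n = int(duration_s * SAMPLE_RATE_HZ)
    period = int(pulse_every_s * SAMPLE_RATE_HZ)
    samples = []
    for i in range(n):
        k = i % period
        if k < pulse_len:
            # 1 kHz burst with exponential decay (illustrative shape only)
            value = math.exp(-k / 10.0) * math.sin(
                2 * math.pi * 1000 * k / SAMPLE_RATE_HZ
            )
        else:
            value = 0.0
        samples.append(int(value * 32767))
    return samples


def write_wav(samples):
    """Encode samples as an in-memory WAV file and return its bytes."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wf:
        wf.setnchannels(1)   # mono (assumption)
        wf.setsampwidth(2)   # 16-bit PCM (assumption)
        wf.setframerate(SAMPLE_RATE_HZ)
        wf.writeframes(struct.pack("<%dh" % len(samples), *samples))
    return buf.getvalue()


def read_wav(data):
    """Decode 16-bit mono WAV bytes; return (sample_rate, list of samples)."""
    with wave.open(io.BytesIO(data), "rb") as wf:
        rate = wf.getframerate()
        frames = wf.readframes(wf.getnframes())
    count = len(frames) // 2
    return rate, list(struct.unpack("<%dh" % count, frames))


wav_bytes = write_wav(synth_impulse_train())
rate, signal = read_wav(wav_bytes)
duration = len(signal) / rate
print(rate, len(signal), duration)
```

At 8 kHz, a one-second clip holds 8000 samples, so short recordings of this kind remain small enough to transmit over a wireless sensor network.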

This section gives an overview of the proposed transfer learning models for identifying the health status of palm trees. First, the architectures of different versions of the Inception model are described, highlighting the main features of each transfer learning model. Second, the merging of the Inception and ResNet architectures in the Inception-ResNet model is presented, with a comparison between the two structures of Inception-ResNet-V1 and V2.

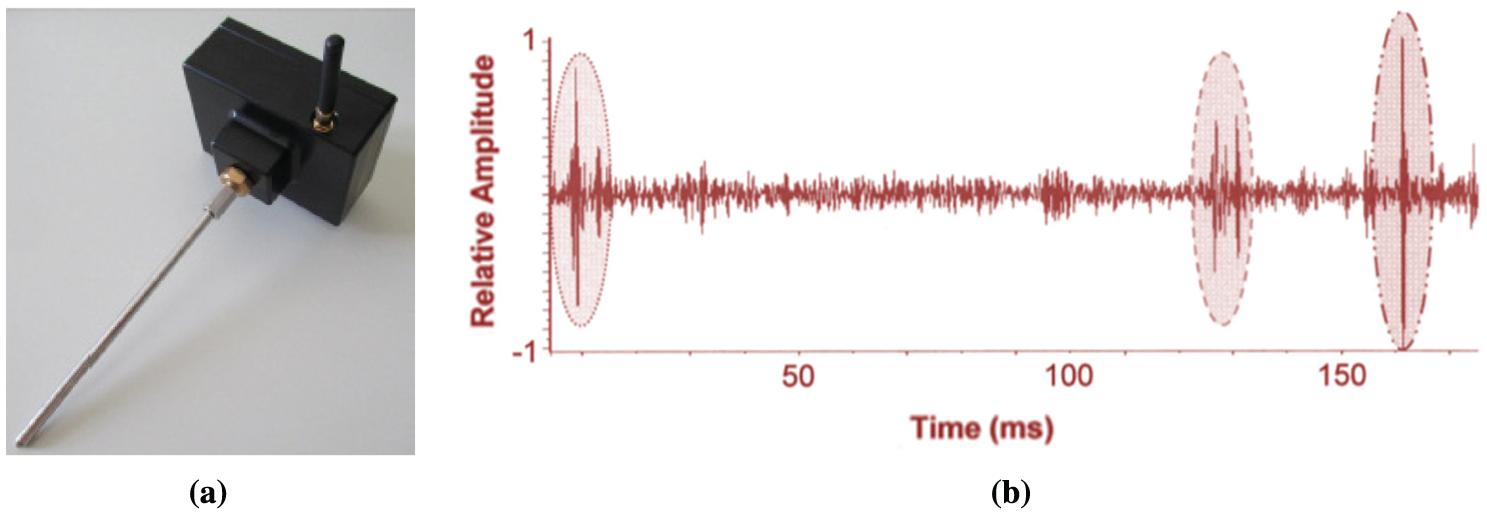

Fig. 4 depicts the main architectures of two versions of the Inception model. The first version of the Inception classifier was introduced by Szegedy et al. [40] to advance over the state-of-the-art classifiers in the ImageNet Large-Scale Visual Recognition Challenge 2014 (ILSVRC14). Inception-V1 improved detection and classification accuracy by increasing the depth and width of the CNN model at a constant computational cost. The optimized Inception-V1 architecture was based on the Hebbian principle and multi-scale processing. The 22-layer Inception-V1 CNN with dimension reduction is also called GoogLeNet [40], as shown in Fig. 4a.

Figure 4: (a) Inception-V1 with dimension reduction (GoogLeNet) [40], and (b) Inception-V2 [41]

Inception-V2 and V3 were introduced in 2016 by the same Google research group, as shown in Fig. 4b [41]. The 5

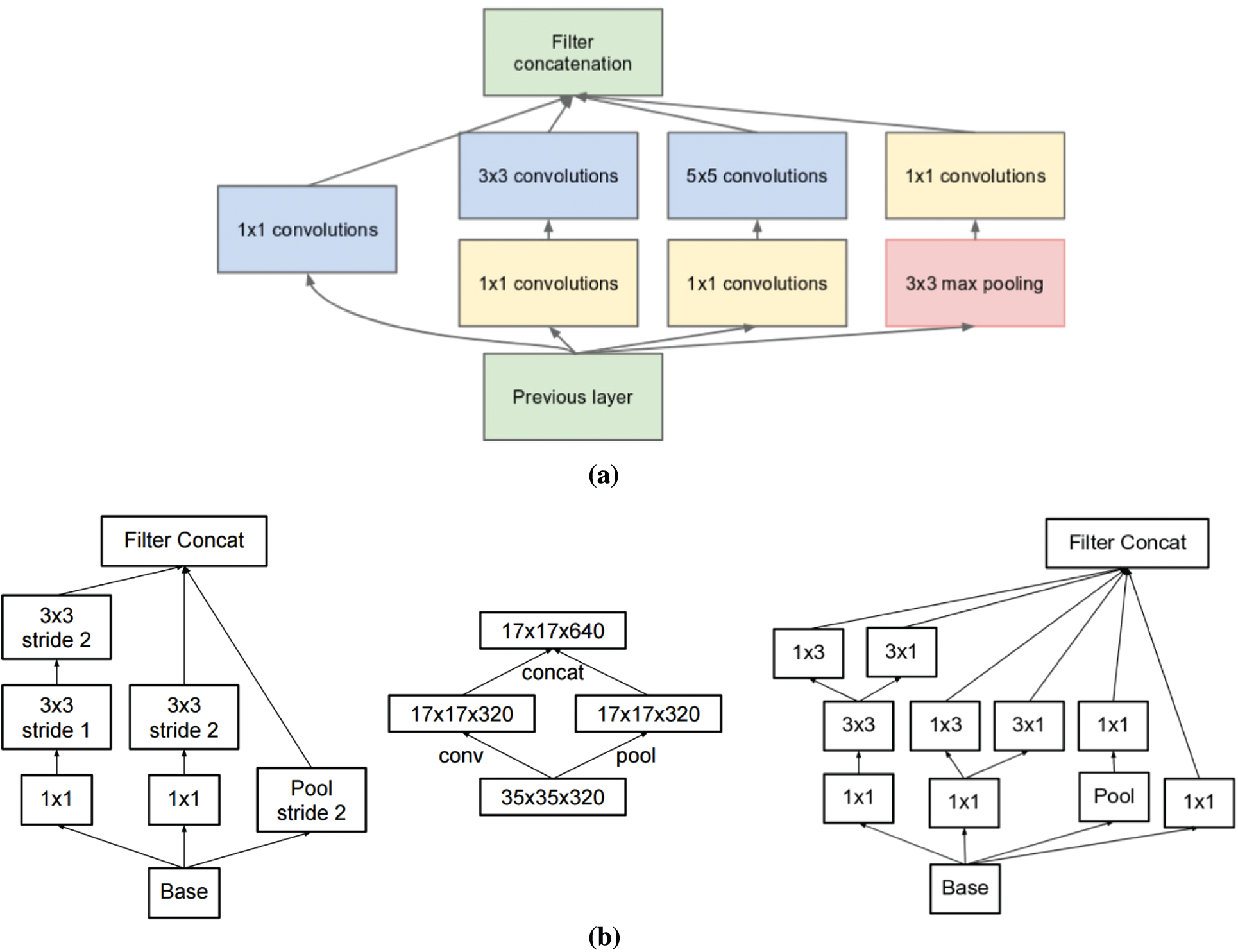

Inception-ResNet and Inception-V4 were presented to validate the positive influence of residual connections on deep learning-based classification [42]. Here, the Inception models were modified through “Reduction Blocks”, which change the width and the height of the grid in the network architecture. The functionality of reduction blocks was inspired by the outstanding performance of the residual neural network, namely ResNet [26]. The hybrid Inception and ResNet module resulted in two sub-versions, namely InceptionResNet-V1 and V2 [42], as depicted in Fig. 5.

Both InceptionResNet-V1 and V2 have the same structural modules and reduction blocks. However, the computational costs of Inception-ResNet-V1 and V2 are similar to those of Inception-V3 and Inception-V4, respectively. Hyper-parameter settings such as the optimizer and batch size present the only difference between these two Inception-ResNets.
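The residual connection that Inception-ResNet borrows from ResNet [26] can be written compactly. This is a generic sketch rather than a reproduction of the specific blocks in Fig. 5; the symbols x (block input), F (the stacked Inception-style layers inside the block) and y (block output) are notation introduced here.

```latex
\mathbf{y} = \mathbf{x} + \mathcal{F}(\mathbf{x})
```

Because the input is added back to the transformed signal, each block only needs to learn a correction to the identity mapping, which is the property that eases the training of very deep networks [26].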

Figure 5: Architectures of InceptionResNet-V1 (left) and InceptionResNet-V2 (right) [42]

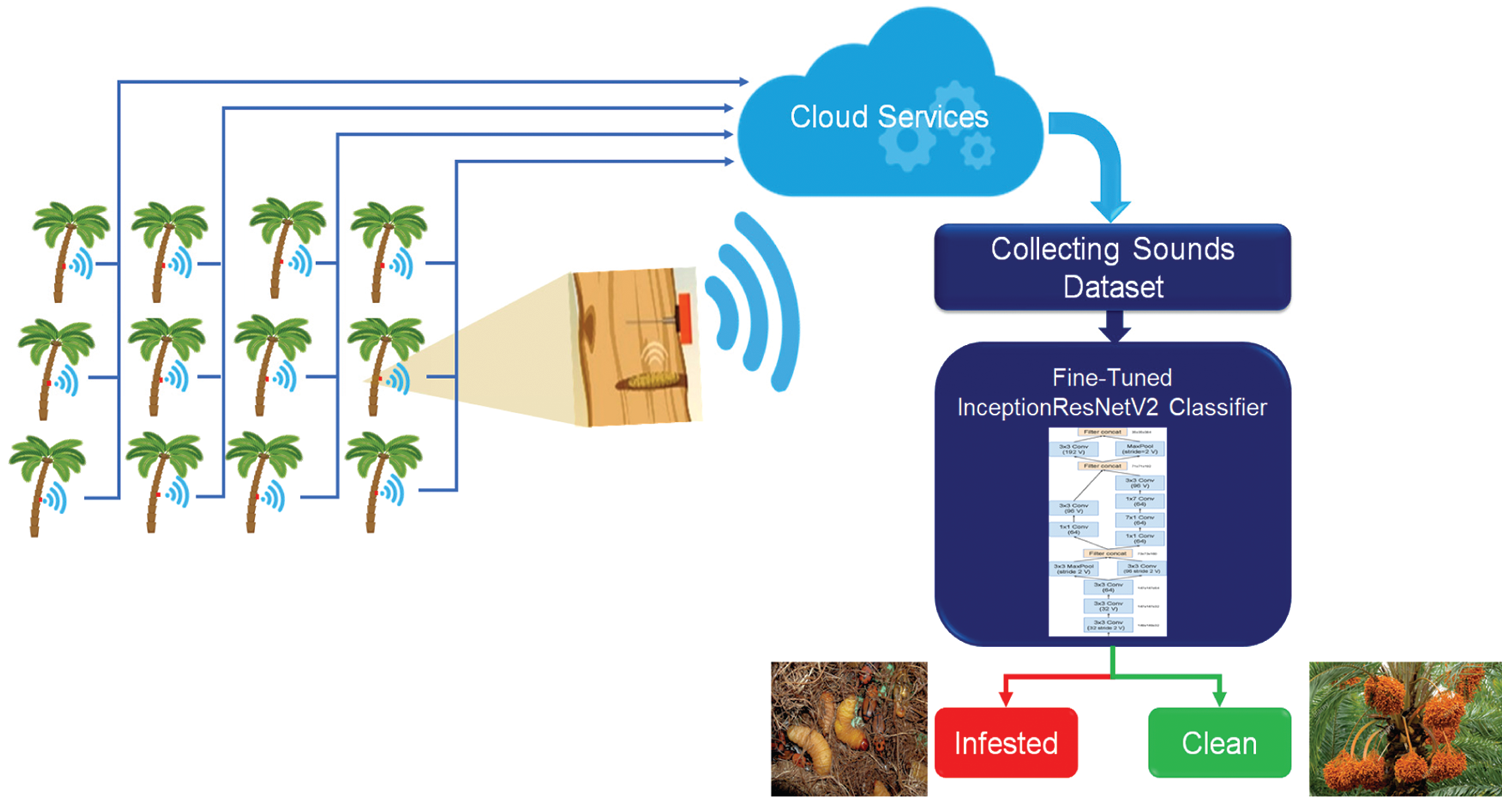

3.3 Proposed RPW Detection System

A schematic diagram of our proposed intelligent IoT-aided detection system of RPWs is shown in Fig. 6. The proposed RPW detection system includes three main modules. First, TreeVibes sensor devices are carefully mounted on each palm trunk to set up a wireless sensor network in the farm. Palm trees are labeled based on the sensor node number in the wireless network to identify the infested cases. Moreover, the location of an infested tree can be monitored on the farm map by using a global positioning system (GPS) associated with the TreeVibes device [38]. Second, a cloud server receives the wirelessly acquired sound signals of palm trees. These audio data can be stored on the cloud server for further on-line analysis by our fine-tuned deep transfer learning model, i.e., InceptionResNet-V2, as depicted in Fig. 6. Third, the binary classification of clean and infested trees can be done automatically either on the cloud server or on the user’s computer system. In the field, many different sounds can be recorded. For instance, the agricultural environment includes bird or animal vocalizations, rain and wind sounds, and the voices of farm workers. Therefore, it may be challenging to extract the sounds of RPWs inside trees from these external noisy signals in the field. However, the audio signals generated by RPW borers are characterized by distinctive impulsive trains (see Fig. 3b). This plays an important role in enhancing the accuracy of the proposed Inception-ResNet-V2 classifier, as presented in Section 4.
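The labeling of trees by sensor node number can be sketched as a simple lookup from node identifiers to tree locations. The sketch below is an assumption about how such a registry might be organized, not the system's actual data model: the `TreeNode` schema, the `infested_trees` helper and the coordinates are all hypothetical, and the `predictions` dictionary stands in for labels returned by the cloud-side classifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TreeNode:
    """One sensor node mounted on one palm trunk (hypothetical schema)."""
    node_id: int
    latitude: float
    longitude: float


def infested_trees(registry, predictions):
    """Return registered trees whose latest recording was labeled 'infested'.

    registry: dict mapping node_id -> TreeNode
    predictions: dict mapping node_id -> 'clean' or 'infested'
    """
    flagged = []
    for node_id, label in sorted(predictions.items()):
        if label == "infested" and node_id in registry:
            flagged.append(registry[node_id])
    return flagged


# Illustrative farm with three sensor nodes; coordinates are made up.
registry = {
    1: TreeNode(1, 20.0001, 42.6001),
    2: TreeNode(2, 20.0002, 42.6003),
    3: TreeNode(3, 20.0004, 42.6002),
}
# Stand-in for labels produced by the classifier on the cloud server.
predictions = {1: "clean", 2: "infested", 3: "clean"}

for tree in infested_trees(registry, predictions):
    print(f"Node {tree.node_id} infested at ({tree.latitude}, {tree.longitude})")
```

Keeping the node number as the key means a classifier output can be traced back to a physical tree without any additional metadata in the audio stream.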

Figure 6: Schematic diagram of proposed IoT-based detection system of RPW sounds using fine-tuned InceptionResNet-V2 Classifier

3.4 Performance Analysis Metrics

The performance of fine-tuned InceptionResNet-V2 classifier was analyzed for identifying the RPW infestation using the following evaluation metrics. The cross-validation estimation [43] is used to build a confusion matrix. A 2
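For reference, the four metrics reported in the results (accuracy, precision, recall and F1-score) are conventionally defined from the entries of a binary confusion matrix as follows. This is a sketch under the assumption that the study uses the standard definitions with infested as the positive class; TP, TN, FP and FN (true positives, true negatives, false positives and false negatives) are notation introduced here.

```latex
\begin{align}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{F1-score}  &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{align}
```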

All sounds of RPW and other borers collected from the public dataset [38] have been scaled to 224

Classification of infested and clean palm trees has been done automatically using the proposed InceptionResNet-V2 model, as shown above in Fig. 6. The sound recordings of the pests database have been randomly split 80%–20% for conducting the training, validation and test phases. The value of each hyperparameter was carefully tuned for the proposed InceptionResNet-V2 as follows. The number of epochs and the batch size are 40 and 60, respectively. The learning rate is 0.001. A variant of the stochastic Adam optimizer [45], namely Adamax, has been applied to achieve convergence during the training step. The softmax activation function of the output classifier layer was used to identify the infested and clean classes of palm tree sounds. Moreover, the same hyperparameter values have been used for the other transfer learning models to allow a fair comparison of the classification performance of our proposed InceptionResNet-V2 model with the other deep learning classifiers in this study.
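A random 80%–20% split of the recordings can be sketched with the standard library alone. The text does not say whether the split was stratified by class; the sketch below assumes that it was, and the `stratified_split` helper and the seed are hypothetical. The class counts (1754 clean, 731 infested) are those given for the dataset.

```python
import random


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices into train/test lists, keeping class proportions."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return train, test


# Class counts as stated for the TreeVibes recordings.
labels = ["clean"] * 1754 + ["infested"] * 731
train_idx, test_idx = stratified_split(labels)
test_counts = {
    c: sum(labels[i] == c for i in test_idx) for c in ("clean", "infested")
}
print(len(train_idx), len(test_idx), test_counts)
# prints: 1988 497 {'clean': 351, 'infested': 146}
```

Under this assumption, the held-out set contains 497 recordings (351 clean and 146 infested), which agrees with the test-set composition reported in the results.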

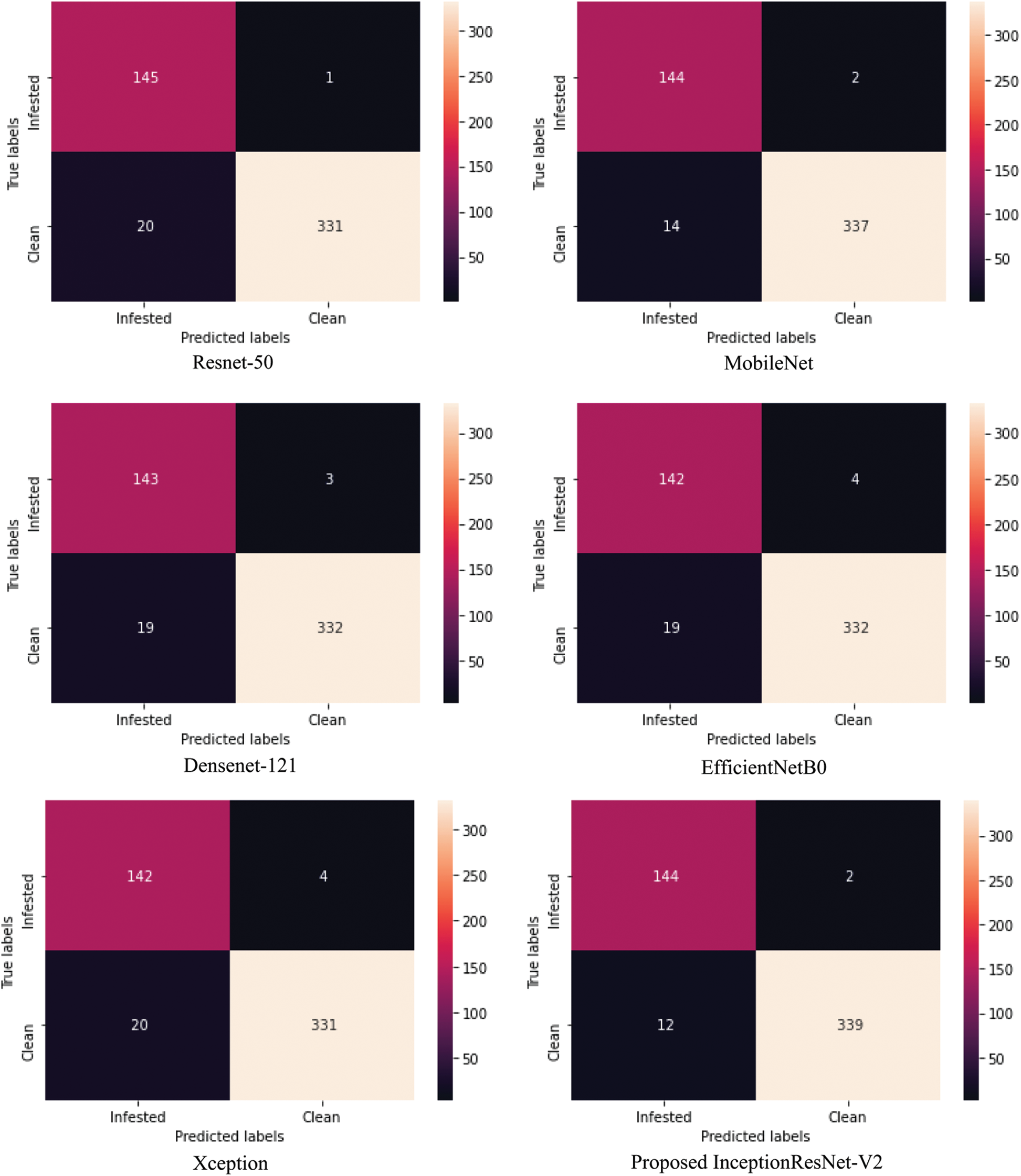

Fig. 7 shows the resulting confusion matrices of six transfer learning models, namely ResNet-50, MobileNet, DenseNet-121, EfficientNetB0, Xception and our proposed InceptionResNet-V2. The total number of tested sound recordings is 497, of which 146 are infested and 351 are clean samples. Our proposed InceptionResNet-V2 showed superior classification performance with the highest accuracy score of 97.18%; only 14 infested and clean samples are misclassified. ResNet-50 is the most accurate classifier of infested cases, but it fails to identify 20 clean samples correctly. The three models DenseNet-121, EfficientNetB0 and Xception showed approximately equal classification accuracy rates of 95.58%, 95.37% and 95.17%, respectively. MobileNet is the second-best classifier of RPW sounds with an accuracy score of 96.78%.
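The reported accuracy can be checked directly from the stated totals, and the remaining metrics follow from the four cells of a binary confusion matrix. In the sketch below, only the totals (497 test samples, 146 infested, 351 clean, 14 errors) come from the text. The way the 14 errors are divided between false positives and false negatives is a hypothetical split chosen for illustration, not a value read from Fig. 7, and `binary_metrics` is an illustrative helper that assumes the standard metric definitions with infested as the positive class.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
    }


# Totals stated in the text for InceptionResNet-V2.
n_test, n_errors = 497, 14
reported_accuracy = (n_test - n_errors) / n_test
print(f"{100 * reported_accuracy:.2f}%")  # prints 97.18%

# Hypothetical split of the 14 errors (6 missed infested, 8 false alarms).
example = binary_metrics(tp=140, fp=8, fn=6, tn=343)
print({name: round(value, 4) for name, value in example.items()})
```

Any split of the 14 errors yields the same accuracy, but precision and recall depend on how the errors fall between the two classes, which is why the confusion matrix is reported alongside the accuracy score.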

Figure 7: Confusion matrix results of all tested transfer learning classifiers for identifying RPW infestation and clean palm trees

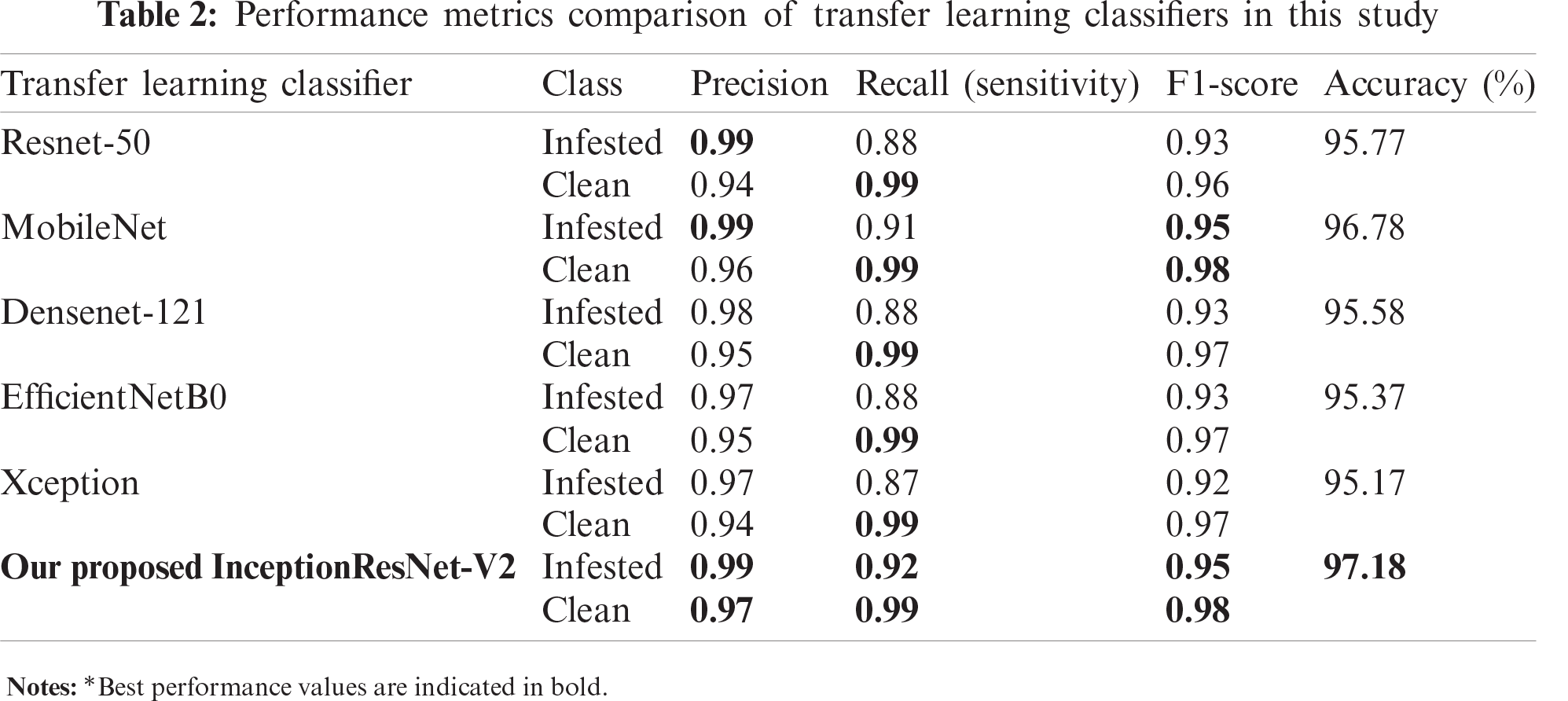

Results of four performance evaluation metrics, namely accuracy, precision, recall and F1-score in Eqs. (1)–(4), are reported for each transfer learning classifier in Tab. 2. Our proposed Inception-ResNet-V2 showed the best values for all tested infested and clean cases with an accuracy score of 97.18%. MobileNet is still the second-best classifier with an accuracy score of 96.78%, while the Xception model showed the lowest classification accuracy of 95.17%.

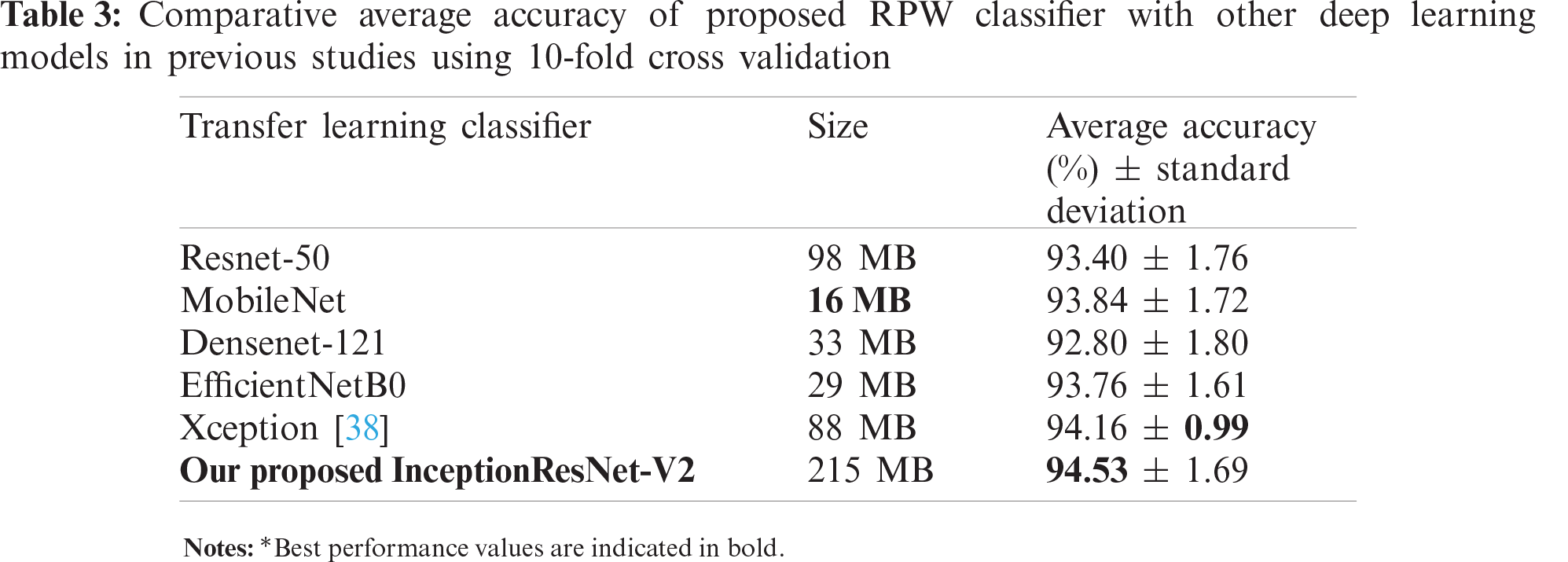

Furthermore, Tab. 3 presents a comparison between our proposed classifier and five deep learning models from previous studies for monitoring the health status of palm trees. InceptionResNet-V2 outperforms the other methods from previous studies, but its size is large (215 MB). The Xception model showed the minimum standard deviation (±0.99). Nevertheless, the MobileNet classifier has the advantage of the smallest size (16 MB) together with a good average accuracy of 93.84 ± 1.72.
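The "mean ± standard deviation" figures from 10-fold cross validation can be obtained with a procedure like the following sketch. The fold construction, the use of the sample standard deviation and the helper names are assumptions, since the text does not specify them, and the per-fold accuracies are placeholders rather than results from the paper.

```python
import statistics


def k_fold_indices(n_samples, k=10):
    """Yield (train_indices, test_indices) for k contiguous folds.

    Shuffle the samples beforehand if their stored order is not random.
    """
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def summarize(fold_accuracies):
    """Return (mean, sample standard deviation) of per-fold accuracies."""
    return statistics.mean(fold_accuracies), statistics.stdev(fold_accuracies)


n_samples = 1754 + 731  # clean + infested recordings, as stated in the text
fold_sizes = [len(test) for _, test in k_fold_indices(n_samples, k=10)]

# Placeholder per-fold accuracies in percent, NOT values from the paper.
placeholder = [93.0, 95.5, 94.0, 96.0, 92.5, 94.5, 95.0, 93.5, 96.5, 94.5]
mean_acc, std_acc = summarize(placeholder)
print(fold_sizes, f"{mean_acc:.2f} ± {std_acc:.2f}")
```

Each recording is used for testing exactly once, so the averaged score is less sensitive to one favorable split than a single hold-out accuracy.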

Advanced IoT-based health monitoring of date palm trees has become essential for preserving crop productivity and preventing high tree mortality caused by RPWs. Automatic early detection of RPW infestation can be achieved by acquiring the vibration sounds of feeding and/or moving RPWs via the TreeVibes sensing device [38], as shown in Fig. 6. In addition, deployed deep learning models such as the fine-tuned InceptionResNet-V2 classifier can achieve good classification performance to identify infested and clean tree status accurately, as illustrated in Tabs. 2 and 3.

In this study, our proposed InceptionResNet-V2 classifier was compared with five transfer learning models, namely ResNet-50, MobileNet, DenseNet-121, EfficientNetB0 and Xception. These five models were previously investigated by Rigakis et al. [38], who showed that the Xception model is the top-ranked classifier of pest sounds inside tree trunks. It achieved an average classification accuracy of 94.16% with a minimal standard deviation of ±0.99 based on 10-fold cross validation. In contrast, the fine-tuned InceptionResNet-V2 demonstrated a competitive classification accuracy of 94.53% with a higher standard deviation of ±1.69, as presented in Tab. 3. The only limitation of our proposed classification model is its large size of 215 MB, which requires additional resources to accomplish the early detection of RPWs in the proposed IoT network framework, as depicted in Fig. 6. However, using cloud computing services can address this problem of limited hardware resources and limited availability of GPUs at end users.

Although security and privacy issues of IoT-based smart farming have been discussed in recent studies [12–14], these issues are not considered in this study, because a single security protocol for IoT systems in agriculture is still not sufficient to prevent leakage of information [12]. However, basic requirements of secure IoT-based agricultural systems can be fulfilled, i.e., authentication, access control and confidentiality of the stakeholders. Other integrated sub-systems such as protection, fault-diagnosis and reaction systems against danger and cyberattacks should also be considered in the security model of smart precision agriculture systems. The above security requirements and sub-systems will be considered in a future version of our proposed intelligent IoT-based detection system of RPW sounds.

In addition, selecting the hyperparameter values of transfer learning models is a complicated iterative process. Therefore, recent studies have suggested using bio-inspired optimization techniques such as the whale optimization algorithm (WOA) [46] and adaptive particle swarm optimization (APSO) [47] to automate the design of deep neural networks, at the expense of increased training cost. Nevertheless, our proposed InceptionResNet-V2 still achieved the best classification performance for RPW sound detection, as illustrated in Tabs. 2 and 3.

In this study, a new IoT-based early detection system of RPWs has been developed based on acquired sounds of palm trees. The TreeVibes sensing device was used to acquire and record short vibration sounds of RPWs inside palm trees. Here, the role of cloud services is to save these recorded sounds and forward them to deep learning classifiers at the end user, as shown in Fig. 6. A deep transfer learning model, namely InceptionResNet-V2, was fine-tuned to distinguish between clean and infested trees, as depicted in Fig. 5. Using 10-fold cross validation, the developed classifier showed superior performance over other transfer learning models from previous studies, achieving an accuracy score of 94.53 ± 1.69, as given in Tab. 3.

In future work, we aim to minimize the required computing resources by exploiting cloud computing services [48] to accomplish the early detection of RPWs in a mobile application for guiding specialists and farmers. We are also working on enhancing the classification accuracy of our proposed system by using other advanced deep learning models, e.g., generative adversarial networks (GANs) [49], while considering the security and privacy aspects of open IoT network communications [50,51] for sending sound data of date palm trees safely.

Acknowledgement: The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia for funding this research work through the project number (UB-26-1442).

Funding Statement: This research received the support from the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia through the project number (UB-26-1442).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. M. Aleid, J. M. Al-Khayri and A. M. Al-Bahrany, “Date palm status and perspective in Saudi Arabia,” in Date Palm Genetic Resources and Utilization. vol. 2. Asia and Europe: Springer, pp. 49–95, 2015.

2. N. A. Alanazi, “Isolation and identification of bacteria associated with red palm weevil, Rhynchophorus ferrugineus from Hail region, northern Saudi Arabia,” Bioscience Biotechnology Research Communications, vol. 12, no. 2, pp. 266–274, 2019.

3. I. Ashry, Y. Mao, Y. Al-Fehaid, A. Al-Shawaf, M. Al-Bagshi et al., “Early detection of red palm weevil using distributed optical sensor,” Scientific Reports, vol. 10, no. 3155, pp. 1–8, 2020.

4. E. E. Omran, “Nano-technology for real-time control of the red palm weevil under climate change,” in Climate Change Impacts on Agriculture and Food Security in Egypt. Berlin, Germany: Springer Water, pp. 321–344, 2020.

5. W. A. Kubar, H. A. Sahito, T. Kousar, N. A. Mallah, F. A. Jatoi et al., “Biology of red palm weevil on different date palm varieties under laboratory conditions,” Innovative Techniques in Agriculture, vol. 1, no. 3, pp. 130–140, 2017.

6. H. A. F. El-Shafie and J. R. Faleiro, “Red palm weevil Rhynchophorus ferrugineus (Coleoptera: Curculionidae): Global invasion, current management options, challenges and future prospects,” in Invasive Species-Introduction Pathways, Economic Impact, and Possible Management Options. London, UK: IntechOpen, pp. 1–29, 2020.

7. H. A. Eldin, K. Waleed, M. Samir, M. Tarek, H. Sobeah et al., “A survey on detection of Red Palm Weevil inside palm trees: Challenges and applications,” in Software and Information Engineering. 9th Int. Conf. 2020, Cairo, Egypt, pp. 119–125, 2020.

8. N. M. Al-Dosary, S. Al-Dobai and J. R. Faleiro, “Review of the management of red palm weevil Rhynchophorus ferrugineus Olivier in date palm Phoenix dactylifera L,” Emirates Journal of Food and Agriculture, vol. 28, no. 1, pp. 34–44, 2016.

9. H. Kurdi, A. Al-Aldawsari, I. Al-Turaiki and A. S. Aldawood, “Early detection of red palm weevil, Rhynchophorus ferrugineus (Olivier), infestation using data mining,” Plants, vol. 10, no. 1, pp. 1–8, 2021.

10. M. E. A. Mohammed, H. A. F. El-Shafie and M. R. Alhajhoj, “Recent trends in the early detection of the invasive red palm weevil, Rhynchophorus ferrugineus (Olivier),” in Invasive Species-Introduction Pathways, Economic Impact, and Possible Management Options. London, UK: IntechOpen, pp. 1–16, 2020.

11. M. E. Karar, M. al-Rasheed, A. Al-Rasheed and O. Reyad, “IoT and neural network-based water pumping control system for smart irrigation,” Information Sciences Letters, vol. 9, no. 2, pp. 107–112, 2020.

12. I. A. Elgendy, W.-Z. Zhang, Y. Zeng, H. He, Y.-C. Tian et al., “Efficient and secure multi-user multi-task computation offloading for mobile-edge computing in mobile IoT networks,” IEEE Transactions on Network and Service Management, vol. 17, no. 4, pp. 2410–2422, 2020.

13. A. A. Abd EL-Latif, B. Abd-El-Atty, E. M. Abou-Nassar and S. E. Venegas-Andraca, “Controlled alternate quantum walks based privacy preserving healthcare images in Internet of Things,” Optics & Laser Technology, vol. 124, pp. 105942, 2020.

14. M. S. Farooq, S. Riaz, A. Abid, K. Abid and M. A. Naeem, “A survey on the role of IoT in agriculture for the implementation of smart farming,” IEEE Access, vol. 7, pp. 156237–156271, 2019.

15. R. Gad, M. Talha, A. A. Abd El-Latif, M. Zorkany, A. El-Sayed et al., “Iris recognition using multi-algorithmic approaches for cognitive internet of things (CIoT) framework,” Future Generation Computer Systems, vol. 89, pp. 178–191, 2018.

16. I. A. Elgendy, W. Z. Zhang, H. He, B. B. Gupta and A. A. Abd El-Latif, “Joint computation offloading and task caching for multi-user and multi-task MEC systems: Reinforcement learning-based algorithms,” Wireless Networks, vol. 27, pp. 2023–2038, 2021.

17. M. Hammad, A. M. Iliyasu, A. Subasi, E. S. L. Ho and A. A. Abd El-Latif, “A multitier deep learning model for Arrhythmia detection,” IEEE Transactions on Instrumentation and Measurement, vol. 70, pp. 1–9, 2021.

18. M. Ayaz, M. Ammad-Uddin, Z. Sharif, A. Mansour and E. M. Aggoune, “Internet-of-things (IoT)-based smart agriculture: Toward making the fields talk,” IEEE Access, vol. 7, pp. 129551–129583, 2019.

19. K. Thenmozhi and U. S. Reddy, “Crop pest classification based on deep convolutional neural network and transfer learning,” Computers and Electronics in Agriculture, vol. 164, no. 4, pp. 104906, 2019.

20. X. Li, Y. Grandvalet, F. Davoine, J. Cheng, Y. Cui et al., “Transfer learning in computer vision tasks: Remember where you come from,” Image and Vision Computing, vol. 93, no. 2, pp. 103853, 2020.

21. M. E. Karar, E. E.-D. Hemdan and M. A. Shouman, “Cascaded deep learning classifiers for computer-aided diagnosis of COVID-19 and pneumonia diseases in X-ray scans,” Complex & Intelligent Systems, vol. 7, no. 1, pp. 235–247, 2021.

22. R. A. Zeineldin, M. E. Karar, J. Coburger, C. R. Wirtz and O. Burgert, “DeepSeg: Deep neural network framework for automatic brain tumor segmentation using magnetic resonance FLAIR images,” International Journal of Computer Assisted Radiology and Surgery, vol. 15, no. 6, pp. 909–920, 2020.

23. B. Liu, Y. Zhang, J. Lv, A. Majeed, C.-H. Chen et al., “A cost-effective manufacturing process recognition approach based on deep transfer learning for CPS enabled shop-floor,” Robotics and Computer-Integrated Manufacturing, vol. 70, pp. 102128, 2021.

24. A. I. Károly, P. Galambos, J. Kuti and I. J. Rudas, “Deep learning in robotics: Survey on model structures and training strategies,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 51, no. 1, pp. 266–279, 2021.

25. S. H. Lee, H. Goëau, P. Bonnet and A. Joly, “New perspectives on plant disease characterization based on deep learning,” Computers and Electronics in Agriculture, vol. 170, pp. 105220, 2020.

26. K. He, X. Zhang, S. Ren and J. Sun, “Deep residual learning for image recognition,” in Proc. of the IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, Las Vegas, USA, vol. 2016, pp. 770–778, 2016.

27. C. Bi, J. Wang, Y. Duan, B. Fu, J.-R. Kang et al., “MobileNet based apple leaf diseases identification,” Mobile Networks and Applications, vol. 85, pp. 45, 2020. [Google Scholar]

28. F. Chollet, “Xception: Deep learning with depthwise separable convolutions,” in 2017 IEEE Conf. on Computer Vision and Pattern Recognition, Honolulu, USA, pp. 1800–1807, 2017. [Google Scholar]

29. A. Koirala, K. B. Walsh, Z. Wang and C. McCarthy, “Deep learning–method overview and review of use for fruit detection and yield estimation,” Computers and Electronics in Agriculture, vol. 162, pp. 219–234, 2019. [Google Scholar]

30. M. H. Saleem, S. Khanchi, J. Potgieter and K. M. Arif, “Image-based plant disease identification by deep learning meta-architectures,” Plants, vol. 9, no. 11, pp. 1–23, 2020. [Google Scholar]

31. L. Santos, F. N. Santos, P. M. Oliveira and P. Shinde, “Deep learning applications in agriculture: A short review,” in Fourth Iberian Robotics Conf., ROBOT 2019, Advances in Intelligent Systems and Computing, vol. 1092, pp. 139–151, 2020. [Google Scholar]

32. W. B. Hussein, M. A. Hussein and T. Becker, “Application of the signal processing technology in the detection of red palm weevil,” in 17th European Signal Processing Conf., Glasgow, UK, pp. 1597–1601, 2009. [Google Scholar]

33. S. M. Al-Saqer and G. M. Hassan, “Artificial neural networks based red palm weevil (rynchophorus ferrugineous, olivier) recognition system,” American Journal of Agricultural and Biological Sciences, vol. 6, no. 3, pp. 356–364, 2011. [Google Scholar]

34. H. Alaa, K. Waleed, M. Samir, M. Tarek, H. Sobeah et al., “An intelligent approach for detecting palm trees diseases using image processing and machine learning,” Int. J. of Advanced Computer Science and Applications, vol. 11, no. 7, pp. 434–441, 2020. [Google Scholar]

35. M. Culman, S. Delalieux and K. V. Tricht, “Individual palm tree detection using deep learning on RGB imagery to support tree inventory,” Remote Sensing, vol. 12, no. 21, pp. 1–30, 2020. [Google Scholar]

36. A. Koubaa, A. Aldawood, B. Saeed, A. Hadid, M. Ahmed et al., “Smart palm: An IoT framework for red palm weevil early detection,” Agronomy, vol. 10, no. 7, pp. 1–21, 2020. [Google Scholar]

37. B. Wang, Y. Mao, I. Ashry, Y. Al-Fehaid, A. Al-Shawaf et al., “Towards detecting red palm weevil using machine learning and fiber optic distributed acoustic sensing,” Sensors, vol. 21, no. 5, pp. 1–14, 2021. [Google Scholar]

38. I. Rigakis, I. Potamitis, N.-A. Tatlas, S. M. Potirakis and S. Ntalampiras, “TreeVibes: Modern tools for global monitoring of trees for borers,” Smart Cities, vol. 4, no. 1, pp. 271–285, 2021. [Google Scholar]

39. R. W. Mankin, “Recent developments in the use of acoustic sensors and signal processing tools to target early infestations of red palm weevil in agricultural environments,” Florida Entomologist, vol. 94, no. 4, pp. 761–765, 2011. [Google Scholar]

40. C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed et al., “Going deeper with convolutions,” in Proc. of the IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, vol. 2015, pp. 1–9, 2015. [Google Scholar]

41. C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens and Z. Wojna, “Rethinking the inception architecture for computer vision,” in 2016 IEEE Conf. on Computer Vision and Pattern Recognition, Las Vegas, USA, pp. 2818–2826, 2016. [Google Scholar]

42. C. Szegedy, S. Ioffe, V. Vanhoucke and A. A. Alemi, “Inception-v4, inception-ResNet and the impact of residual connections on learning,” in Proc. of the Thirty-First AAAI Conf. on Artificial Intelligence, San Francisco, California, USA, 2017. [Google Scholar]

43. M. Sokolova and G. Lapalme, “A systematic analysis of performance measures for classification tasks,” Information Processing & Management, vol. 45, no. 4, pp. 427–437, 2009. [Google Scholar]

44. A. Gulli, A. Kapoor and S. Pal, Deep Learning with TensorFlow 2 and Keras: Regression, ConvNets, GANs, RNNs, NLP, and more with TensorFlow 2 and the Keras API, 2nd ed., Birmingham, Mumbai: Packt Publishing, 2019. [Google Scholar]

45. D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv, vol. abs/1412.6980, pp. 1–15, 2015. [Google Scholar]

46. S. Mirjalili and A. Lewis, “The whale optimization algorithm,” Advances in Engineering Software, vol. 95, no. 12, pp. 51–67, 2016. [Google Scholar]

47. Z. Zhan, J. Zhang, Y. Li and H. S. Chung, “Adaptive particle swarm optimization,” IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), vol. 39, no. 6, pp. 1362–1381, 2009. [Google Scholar]

48. M. E. Karar, F. Alsunaydi, S. Albusaymi and S. Alotaibi, “A new mobile application of agricultural pests recognition using deep learning in cloud computing system,” Alexandria Engineering Journal, vol. 60, no. 5, pp. 4423–4432, 2021. [Google Scholar]

49. J. Li, Y. Shen and C. Yang, “An adversarial generative network for crop classification from remote sensing timeseries images,” Remote Sensing, vol. 13, no. 1, pp. 1–15, 2021. [Google Scholar]

50. F. Nife, Z. Kotulski and O. Reyad, “New SDN-oriented distributed network security system,” Appl. Math. Inf. Sci., vol. 12, no. 4, pp. 673–683, 2018. [Google Scholar]

51. O. Reyad and M. E. Karar, “Secure CT-image encryption for COVID-19 infections using HBBS-based multiple key-streams,” Arabian Journal for Science and Engineering, vol. 46, no. 4, pp. 3581–3593, 2021. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |