DOI:10.32604/cmc.2021.017337

Computers, Materials & Continua

Article

Screening of COVID-19 Patients Using Deep Learning and IoT Framework

1Manipal University Jaipur, India

2Bennett University, Greater Noida, India

3Thapar Institute of Engineering and Technology, Patiala, India

4Medical Convergence Research Center, Wonkwang University, Iksan, Korea

5Department of Computer Science and Engineering, Soonchunhyang University, Asan, Korea

6Department of Computer Science, HITEC University, Taxila, Pakistan

*Corresponding Author: Yunyoung Nam. Email: ynam@sch.ac.kr

Received: 27 January 2021; Accepted: 14 April 2021

Abstract: In March 2020, the World Health Organization declared the coronavirus disease (COVID-19) outbreak as a pandemic due to its uncontrolled global spread. Reverse transcription polymerase chain reaction is a laboratory test that is widely used for the diagnosis of this deadly disease. However, the limited availability of testing kits and qualified staff and the drastically increasing number of cases have hampered massive testing. To handle COVID-19 testing problems, we apply the Internet of Things and artificial intelligence to achieve self-adaptive, secure, and fast resource allocation, real-time tracking, remote screening, and patient monitoring. In addition, we implement a cloud platform for efficient spectrum utilization. Thus, we propose a cloud-based intelligent system for remote COVID-19 screening using cognitive-radio-based Internet of Things and deep learning. Specifically, a deep learning technique recognizes radiographic patterns in chest computed tomography (CT) scans. To this end, contrast-limited adaptive histogram equalization is applied to an input CT scan followed by bilateral filtering to enhance the spatial quality. The image quality assessment of the CT scan is performed using the blind/referenceless image spatial quality evaluator. Then, a deep transfer learning model, VGG-16, is trained to diagnose a suspected CT scan as either COVID-19 positive or negative. Experimental results demonstrate that the proposed VGG-16 model outperforms existing COVID-19 screening models regarding accuracy, sensitivity, and specificity. The results obtained from the proposed system can be verified by doctors and sent to remote places through the Internet.

Keywords: Medical image analysis; transfer learning; VGG-16; image processing system pipeline; quantitative evaluation; Internet of Things

In 2019, Wuhan city in China emerged as the epicenter of a deadly virus named severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), which causes the coronavirus disease (COVID-19) [1]. COVID-19 is a highly contagious disease that was declared a pandemic by the World Health Organization on March 11, 2020 [2,3]. According to the Centers for Disease Control and Prevention of the USA, COVID-19 is a respiratory illness whose common symptoms include dry cough, fever, muscle pain, and fatigue [4]. In the absence of an effective vaccine or therapeutic drug, COVID-19 can damage the lungs, the respiratory tract, and other organs and systems [5]. Therefore, advanced methods are being developed to handle this pandemic using recent technologies such as artificial intelligence and the Internet of Things (IoT) [6].

COVID-19 can be accurately diagnosed by reverse transcription polymerase chain reaction (RT-PCR), which requires nose and throat swab samples [7]. Because SARS-CoV-2 is an RNA (ribonucleic acid) virus, its genetic material must first be converted into DNA (deoxyribonucleic acid) for detection, a process known as reverse transcription. However, RT-PCR has a relatively low sensitivity, and its high number of false negatives can undermine efforts to mitigate the pandemic. In addition, RT-PCR is time-consuming [8]. Radiological imaging can be used to overcome these problems [9]. For instance, chest computed tomography (CT) has shown higher sensitivity, and thus fewer false negatives, than RT-PCR for COVID-19 detection [10,11].

Radiographic patterns in chest CT scans can be detected using image processing and artificial intelligence techniques [12,13]. Although CT scanners are available in most medical centers, radiologists face a heavy burden because they must identify the radiographic patterns of COVID-19 and then contact patients for treatment and further analysis. This process is time-consuming and error-prone. Hence, an automatic detection system for remote COVID-19 screening is needed. Such a system may incorporate cloud computing, IoT, and deep learning technologies to minimize human labor and reduce the screening time [14]. Deep learning has shown great success in medical imaging [15,16]. For remote screening, IoT networking can be used to acquire the samples and transmit the results to other locations for further analysis. Moreover, cognitive radio can be adopted to make optimal use of the communication spectrum according to the environment and the available frequency channels.

We combine cloud computing, cognitive-radio-based IoT, and deep learning for remote COVID-19 screening, aiming to improve detection and to provide wireless communication with a high quality of service by intelligently exploiting the available transmission bandwidth. Using the proposed system, samples can be obtained and analyzed remotely, and patients can be informed of their results through communication networks, which can also support follow-up and medical assistance. To the best of our knowledge, little research has combined deep learning and IoT for COVID-19 detection from chest CT scans [17], and the importance of efficient communication between devices for data collection and diagnosis has been neglected.

The proposed IoT-based deep learning model for COVID-19 detection has the following characteristics:

• Contrast limited adaptive histogram equalization (CLAHE) and bilateral filters are used for preprocessing to enhance the spatial and spectral information of CT scans.

• The blind/referenceless image spatial quality evaluator (BRISQUE) is used to determine the preprocessing performance.

• The VGG-16 deep transfer learning model is trained to classify suspected CT scans as either COVID-19 positive or negative.

• An IoT-enabled cloud platform transmits detection information remotely. Specifically, the platform uses deep learning to recognize radiographic patterns and transfers the results to remote locations through communication networks.

• For better resource allocation and efficient spectrum utilization between the IoT devices, cognitive radio is adopted for intelligent self-adapting operation through the transmission media.

The performance of the proposed COVID-19 detection model is compared with that of state-of-the-art deep transfer learning models, namely XceptionNet and DenseNet, and with a convolutional neural network (CNN).

The remainder of this paper is organized as follows. In Section 2, we analyze related work. Section 3 presents the formulation of the proposed model and implementation of the COVID-19 detection system. Experimental results and the corresponding discussion are presented in Section 4. Finally, we draw conclusions in Section 5.

Chest CT scans have been used for early detection of COVID-19 using various pattern recognition and deep learning techniques [18]. Ahmed et al. [19] proposed a fractional-order marine predators algorithm implemented in an artificial neural network for X-ray image classification to diagnose COVID-19. The algorithm achieved 98% accuracy using a hybrid classification model. Li et al. [12] analyzed COVID-19 manifestations in chest CT scans of approximately 100 patients, finding that multifocal peripheral ground glass and mixed consolidation are highly suspicious features of COVID-19. Rajinikanth et al. [20] developed an Otsu-based system to segment COVID-19 infection regions on CT scans and used the pixel ratio of infected regions to determine the disease severity.

Features related to COVID-19 on CT scans are difficult to extract manually. Singh et al. [8] studied CT scans and classified them using a differential-evolution-based CNN, which outperformed previous models by 2.09% in sensitivity. Li et al. [21] conducted a severity-based classification on CT scans of 78 patients and reported a value of 0.918 for the total severity score in diagnosing severe–critical COVID-19 cases. However, the study included no clinical information about the patients, and the dataset was imbalanced owing to the small number of severe COVID-19 cases.

Various IoT-enabled technologies related to COVID-19 have been proposed. Adly et al. [5] reviewed approaches for preventing the spread of COVID-19. They found that artificial intelligence has been used for COVID-19 modeling and simulation, resource allocation, and cloud-based applications supported by networking devices. Sun et al. [22] proposed a four-layer IoT and blockchain method to obtain remote information from patients, such as electrocardiography signals and motion signals. The signals were transmitted via a secure network to the application layer of a mobile device. They focused on securing the transmission with blockchain hash functions, thereby preventing threats and attacks during communication. Ahmed et al. [23] reviewed the cognitive Internet of Medical Things with a focus on the COVID-19 pandemic. Machine-to-machine communication was performed using a wireless network.

Overall, CT scans seem suitable for accurate COVID-19 diagnosis. This motivated us to develop a system based on deep learning and IoT techniques for COVID-19 detection from chest CT scans.

3 Proposed COVID-19 Detection System

Recently, various image processing, deep learning, and IoT-based techniques have been used to screen suspected patients with COVID-19 from their CT scans by identifying representative radiographic patterns. However, the available datasets are neither extensive nor diverse. Moreover, few studies have addressed remote COVID-19 diagnosis using machine learning and intelligent networking for optimal spectrum utilization during communication. Thus, we introduce a cognitive-radio-based deep learning technique leveraging IoT technologies for remote COVID-19 screening from CT scans. The machine-to-machine connection in the network is designed to enable contactless communication between institutions, doctors, and patients.

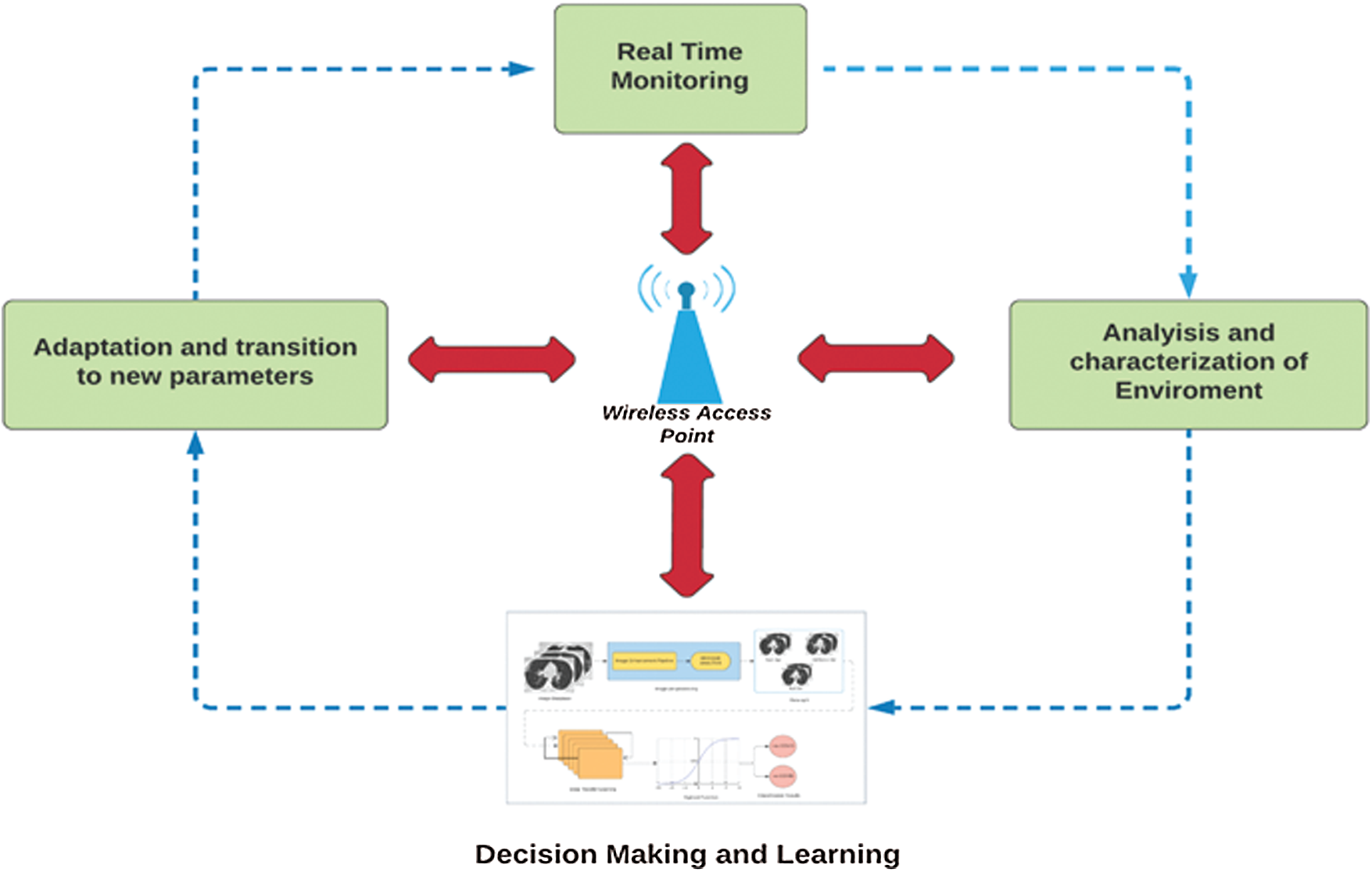

Fig. 1 shows the block diagram of the proposed intelligent cognitive radio network cycle for efficient resource management and communication across channels. Cognitive-radio-based models efficiently use the bandwidth to enable machine-to-machine communication. The cycle begins with the real-time monitoring of samples via different network devices and health applications. To ensure robust transmission, we analyze various environmental characteristics, such as throughput and bit rate. To achieve a high quality of service, we use different supervised and unsupervised methodologies during decision-making for the efficient selection of the available bandwidth during communication.

Figure 1: Proposed Internet of Things (IoT)-based cognitive cycle

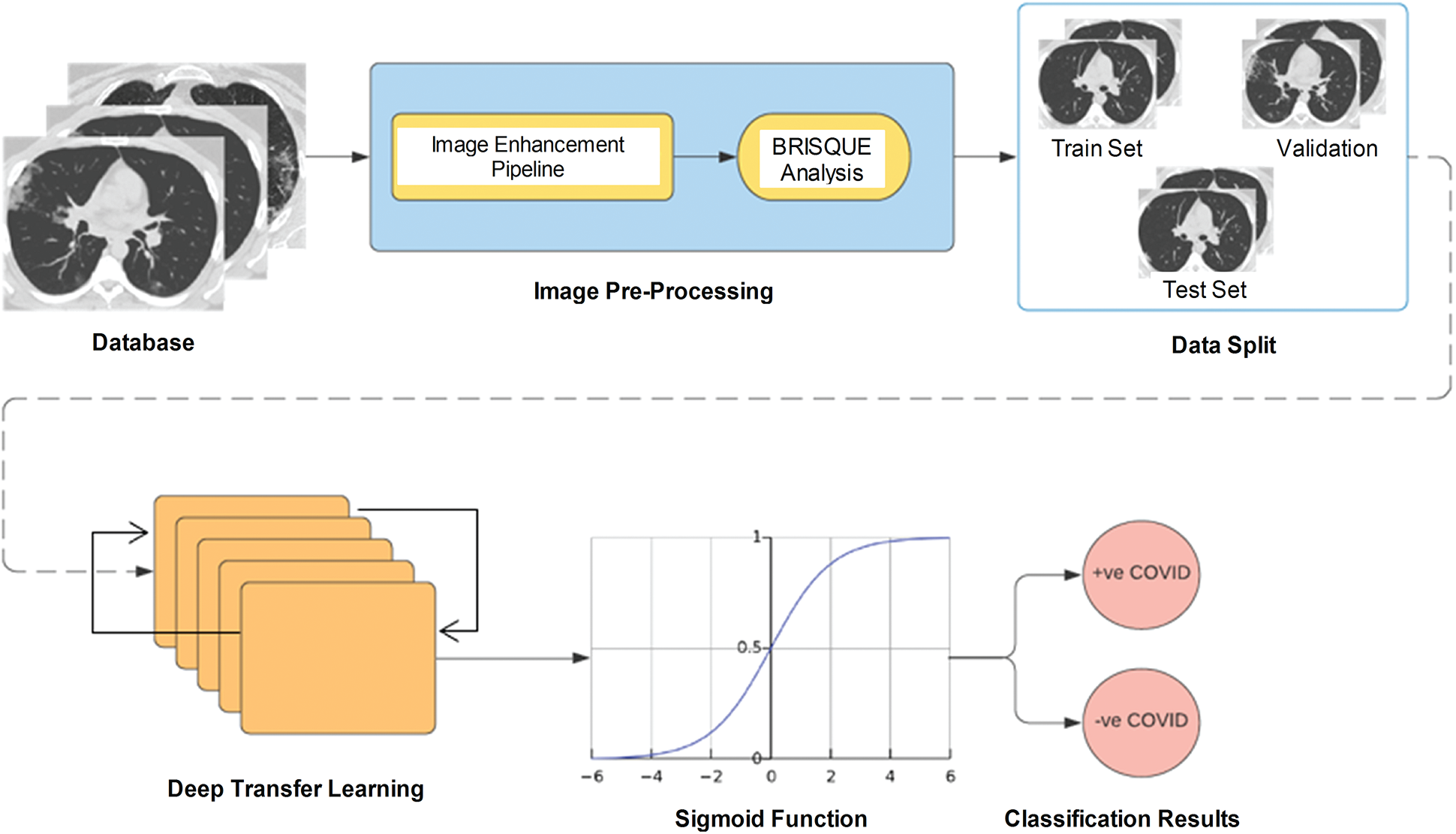

We adopt a deep transfer learning model for COVID-19 detection. The results are wirelessly transmitted to other systems after the transmission parameters are adapted to the available spectrum of the cognitive radio network, and the cycle continues. Fig. 2 shows the block diagram of the deep learning technique. First, a preprocessing pipeline is established in the diagnostic system by applying CLAHE, morphological operations, and a bilateral filter to each CT scan. To assess image quality, the BRISQUE is applied to the preprocessed images. The dataset is then split into training, validation, and test sets. Next, a modified VGG-16 model is used for feature extraction, and the CT scans are classified as either COVID-19 positive or negative. The results are transmitted to other devices via IoT networking for further analysis.

Figure 2: Proposed decision-making model for feature extraction

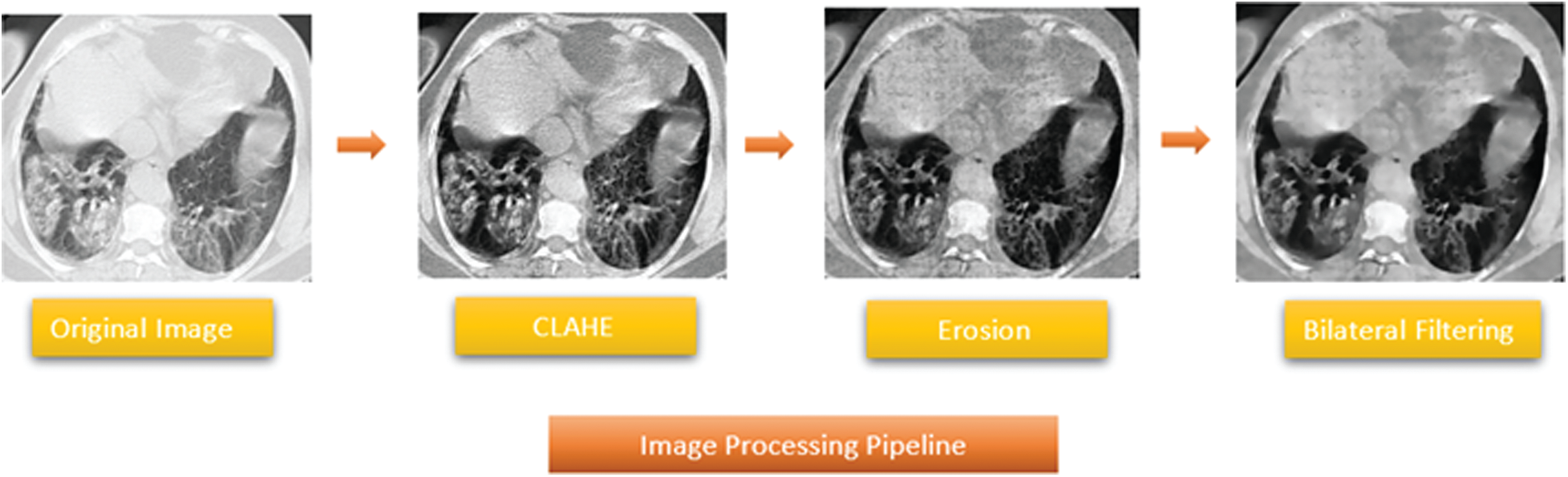

The proposed COVID-19 detection method consists of four main steps, namely, CLAHE, morphological operations, bilateral filtering, and deep transfer learning, as detailed below.

Step 1: Contrast limited adaptive histogram equalization:

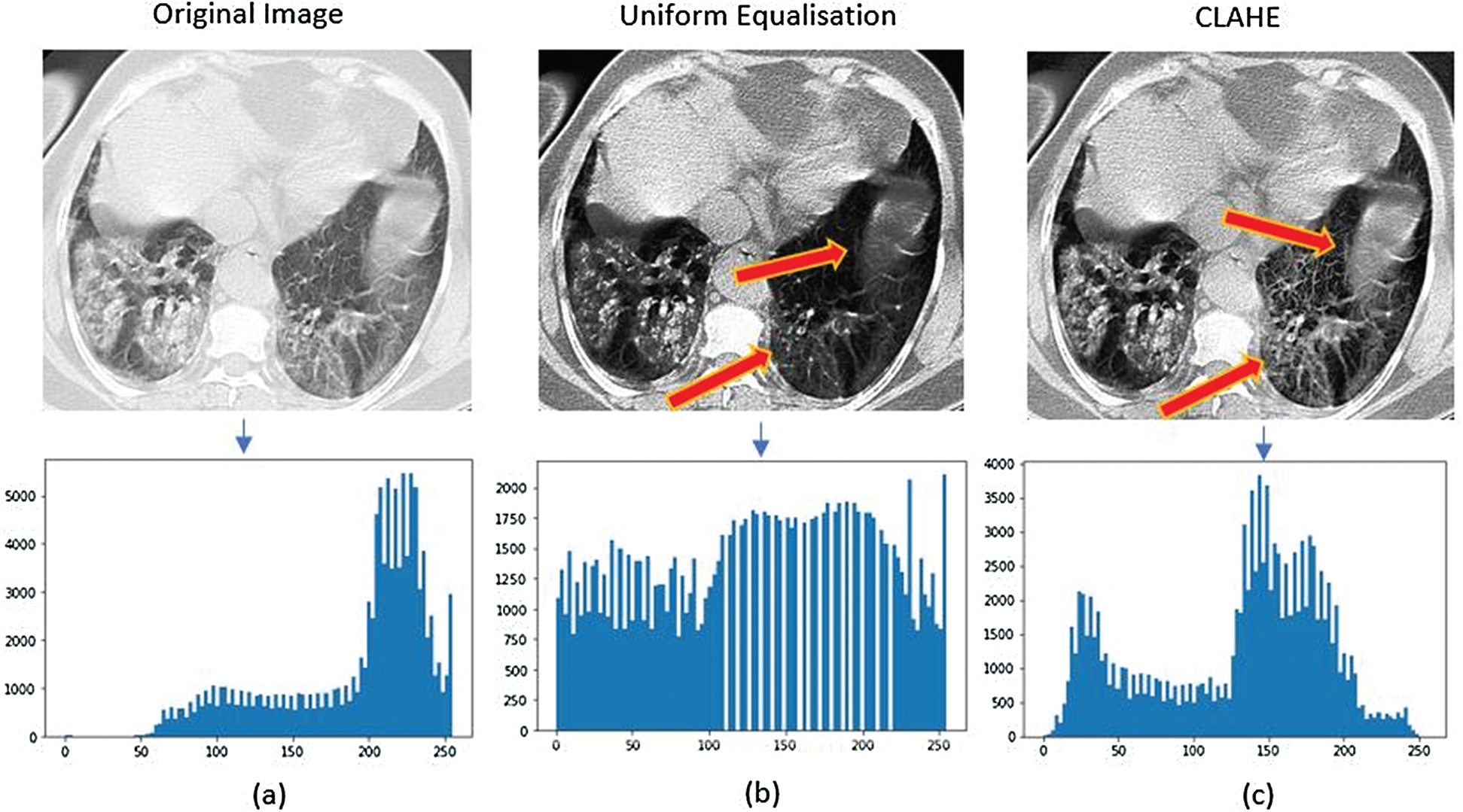

CLAHE is an adaptive image enhancement technique [24]. Each pixel is mapped according to the histogram of its neighborhood (contextual region), whose size must be set manually [25]. In homogeneous regions, plain adaptive histogram equalization tends to amplify noise because the local histogram has a high peak. CLAHE prevents this noise amplification by defining a clip limit, which bounds the slope of the intensity mapping function. In this study, we used a 4 × 4 tile grid with a clip limit of 2. Histogram bins above this limit were clipped, and the excess counts were redistributed uniformly among all bins. In addition, bilinear interpolation was applied to combine the mappings of neighboring tiles and avoid artificial tile boundaries [26]. Fig. 3 compares CLAHE, as used in this study, with uniform (global) histogram equalization. Tile-based equalization using CLAHE (Fig. 3c) preserves the regional integrity of the candidate features indicated by arrows, whereas in Fig. 3b these features are hardly visible because they were equalized according to the global contrast. Thus, CLAHE provides better local contrast enhancement than global histogram equalization on these CT scans.
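The clip-and-redistribute step can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: it handles the histogram of a single tile only, omits the tile grid and the bilinear interpolation between neighboring tiles, and assumes (following OpenCV's convention) that a clip limit of 2 is expressed relative to the mean bin height. The function names `clip_histogram` and `tile_lut` are hypothetical.

```python
def clip_histogram(hist, clip_limit):
    """Clip bins at clip_limit and redistribute the excess uniformly over all bins."""
    n_bins = len(hist)
    excess = sum(max(0, h - clip_limit) for h in hist)
    clipped = [min(h, clip_limit) for h in hist]
    share, remainder = divmod(excess, n_bins)
    # Each bin gets an equal share; leftover counts go to the first `remainder` bins.
    return [c + share + (1 if i < remainder else 0) for i, c in enumerate(clipped)]


def tile_lut(pixels, clip_factor=2.0, n_bins=256):
    """Equalization look-up table for one CLAHE tile (no inter-tile interpolation)."""
    hist = [0] * n_bins
    for p in pixels:
        hist[p] += 1
    n = len(pixels)
    # Assumption: the clip limit is relative to the mean bin height (OpenCV-style).
    clip_limit = max(1, int(clip_factor * n / n_bins))
    hist = clip_histogram(hist, clip_limit)
    lut, cdf = [], 0
    for h in hist:
        cdf += h
        # Map the cumulative count to the output intensity range [0, n_bins - 1].
        lut.append(round((n_bins - 1) * cdf / n))
    return lut
```

Because the clipped histogram is flatter, the cumulative mapping has a bounded slope, which is what limits noise amplification in homogeneous tiles. For full images, OpenCV offers a comparable configuration through `cv2.createCLAHE(clipLimit=2.0, tileGridSize=(4, 4))` followed by `.apply(gray_image)`; the paper does not state which library the authors used.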

Figure 3: Illustration of the applied Histogram Equalization technique on Chest CT-scan images. (a) The low contrast original image having the symptoms of COVID-19, (b) The image after applying the uniform Histogram Equalization, (c) The image after the application of CLAHE

Step 2: Morphological operations and bilateral filtering:

The next operation in the image processing pipeline shrinks regions using morphological filtering. Morphological operations use a structuring element, known as a kernel, to transform an image according to its texture, shape, and other geometric features [26]. The kernel is an N × N integer matrix that performs pixel erosion. Structuring element z is fitted over grayscale image i to produce output image g:

$$g(x, y) = (i \ominus z)(x, y) = \min_{(s, t) \in z} i(x + s,\ y + t)$$

In binary terms, when the kernel is superimposed over the pixels of image i, the center pixel keeps the value 1 only if all pixels under the kernel are 1; otherwise, it is set to 0. Erosion thus shrinks bright regions and removes small isolated pixels. The boundaries of the individual regions can be determined by subtracting the eroded image from the original image. The kernel size and maximum number of iterations are hyperparameters that can be tuned manually.
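A minimal pure-Python sketch of flat grayscale erosion (each output pixel is the minimum intensity under the kernel) and of boundary extraction by subtracting the eroded image from the original. The square all-ones kernel, the border handling (only in-bounds neighbors are considered), and the function names are illustrative assumptions; the paper does not report the kernel size or iteration count actually used.

```python
def erode(image, k=3, iterations=1):
    """Flat grayscale erosion with a k x k square kernel.

    Each output pixel is the minimum of the input pixels under the kernel,
    so in a binary image a pixel stays 1 only if its whole neighborhood is 1.
    Out-of-bounds neighbors are ignored (an assumption about border handling).
    """
    r = k // 2
    for _ in range(iterations):
        h, w = len(image), len(image[0])
        image = [
            [
                min(
                    image[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))
                )
                for x in range(w)
            ]
            for y in range(h)
        ]
    return image


def region_boundaries(image, k=3):
    """Inner region boundaries: original image minus its erosion."""
    eroded = erode(image, k)
    return [[a - b for a, b in zip(row, row_e)] for row, row_e in zip(image, eroded)]


# Example: a 3 x 3 bright square inside a 5 x 5 image.
square = [[1 if 1 <= y <= 3 and 1 <= x <= 3 else 0 for x in range(5)] for y in range(5)]
```

Eroding `square` once leaves only its center pixel, and the boundary image is the ring of eight pixels around it; a second iteration removes the square entirely, which is why the iteration count must be tuned with care.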

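Then, bilateral filtering is applied to the images for edge-preserving denoising. The bilateral filter replaces the intensity of each pixel with a weighted average of the intensities of its neighbors, where each weight is the product of a spatial term and an intensity term:

$$B_p = \frac{1}{W_p} \sum_{q \in S} G_{\sigma_s}\left(\lVert p - q \rVert\right) G_{\sigma_r}\left(\lvert I_p - I_q \rvert\right) I_q, \qquad W_p = \sum_{q \in S} G_{\sigma_s}\left(\lVert p - q \rVert\right) G_{\sigma_r}\left(\lvert I_p - I_q \rvert\right)$$

where $B$ denotes the resulting image, $W_p$ is the normalization term, $p$ denotes the coordinates of the pixel being filtered (i.e., the current pixel), $q$ ranges over the pixels in its neighborhood $S$, $I_p$ and $I_q$ are the intensities of pixels $p$ and $q$, respectively, and $G_{\sigma_s}$ and $G_{\sigma_r}$ are Gaussian kernels with standard deviations $\sigma_s$ (spatial term) and $\sigma_r$ (intensity term).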
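The bilateral filter can be sketched directly from its definition as a normalized weighted average whose weights multiply a spatial Gaussian and an intensity Gaussian. This is an illustrative pure-Python version for small grayscale arrays, not the authors' code; the window `radius` and the values of `sigma_s` and `sigma_r` are hypothetical because the paper does not report them.

```python
import math


def bilateral_filter(image, radius=1, sigma_s=1.0, sigma_r=10.0):
    """Edge-preserving smoothing of a 2-D list of intensities."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for py in range(h):
        for px in range(w):
            ip = image[py][px]
            weighted_sum = norm = 0.0
            # Neighborhood S: a (2*radius + 1)^2 window clipped to the image.
            for qy in range(max(0, py - radius), min(h, py + radius + 1)):
                for qx in range(max(0, px - radius), min(w, px + radius + 1)):
                    iq = image[qy][qx]
                    # Spatial term: closer pixels get larger weights.
                    g_s = math.exp(-((px - qx) ** 2 + (py - qy) ** 2) / (2 * sigma_s ** 2))
                    # Intensity term: pixels with similar intensity get larger weights.
                    g_r = math.exp(-((ip - iq) ** 2) / (2 * sigma_r ** 2))
                    weighted_sum += g_s * g_r * iq
                    norm += g_s * g_r
            out[py][px] = weighted_sum / norm
    return out
```

With a small `sigma_r`, pixels across a strong edge receive almost no weight, so the edge survives while small intensity fluctuations within a region are averaged out.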

Figure 4: Proposed image processing pipeline

Step 3: Feature extraction:

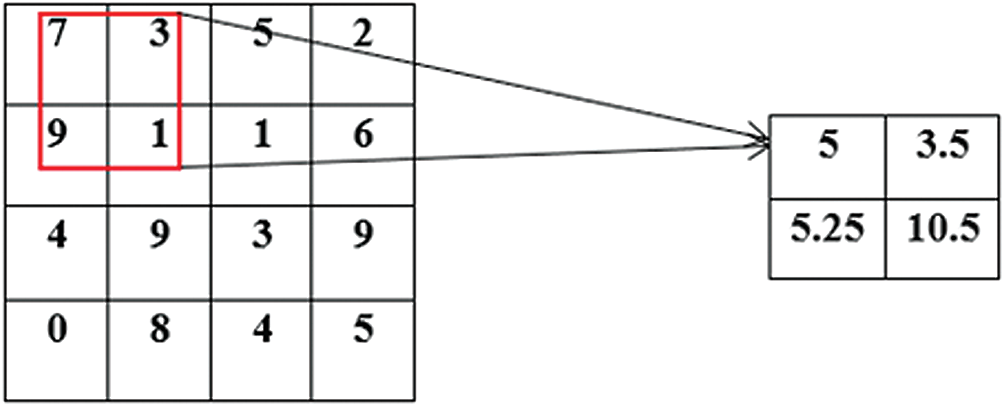

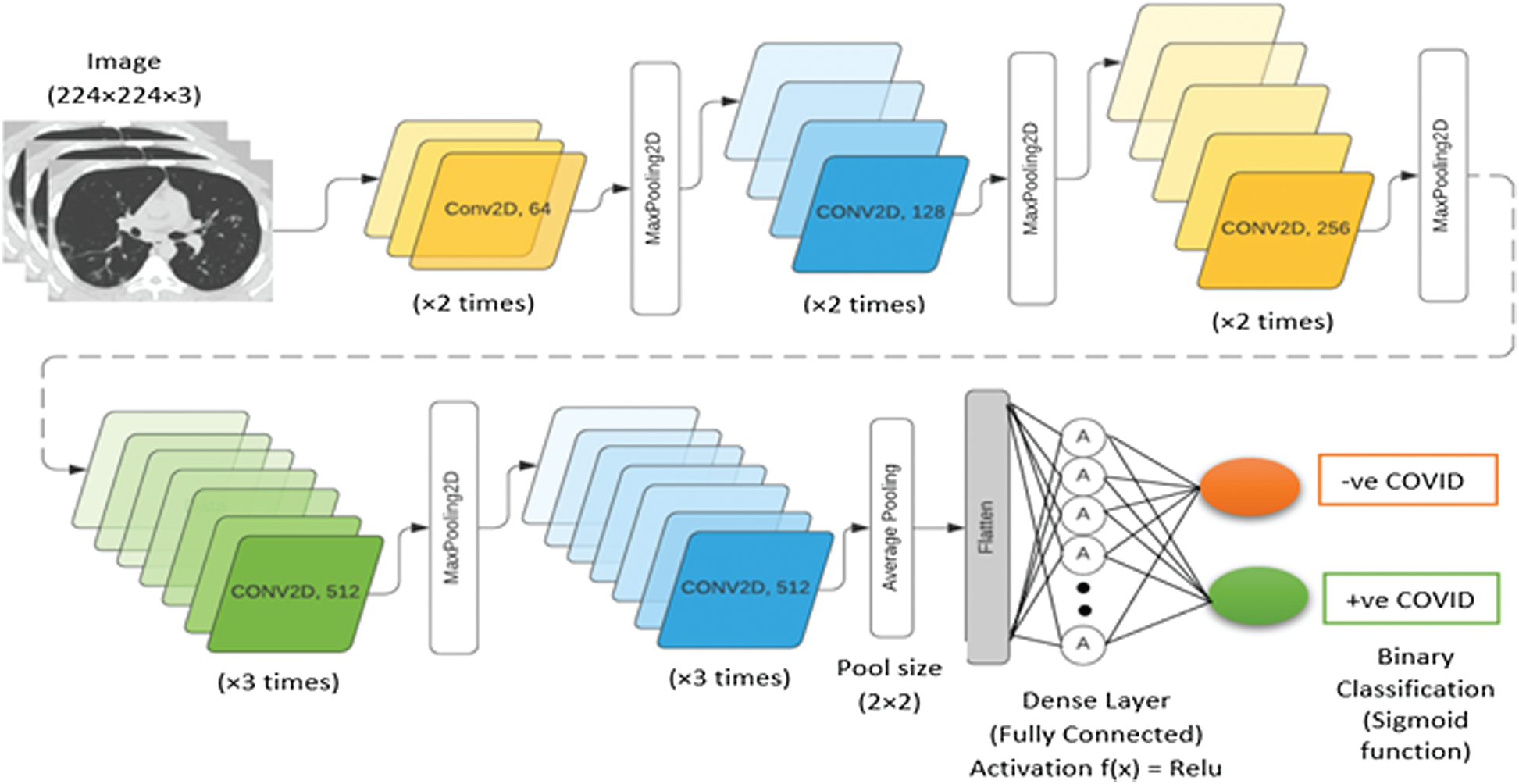

In this study, we modified a VGG-16 model for automatic feature extraction and COVID-19 detection from CT scans. The VGG-16 model allows pretrained weights to be transferred, which reduces the training time. The modified model accepts an input of dimension Ni × 224 × 224 × 3, where Ni is the number of three-channel RGB images (CT scans) of 224 × 224 pixels. The model consists of stacked convolutional layers whose neurons are only locally connected to their inputs. VGG-16 improves on AlexNet by using small 3 × 3 convolution kernels with a fixed stride of 1, which makes it well suited to transfer learning. In addition, 1 × 1 convolutions, used in one configuration of the VGG family, add nonlinearity without changing the receptive field. Maximum pooling of size 2 × 2 downsamples the feature maps, reducing the model complexity during both training and the updating of weights in subsequent layers. In the modified VGG-16 model, the last dense (fully connected) layers of the original model are removed and replaced by average pooling layers, as shown in Fig. 5. Fig. 6 shows the modified architecture of the VGG-16 model.
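To make the shape arithmetic concrete, the following pure-Python sketch traces a 224 × 224 × 3 input through the standard VGG-16 convolutional base (13 convolutional layers with 3 × 3 kernels, stride 1, and "same" padding, each of the five blocks ending in 2 × 2 max pooling with stride 2) and counts its parameters. The average-pooling head is hypothetical: the paper does not report its pool size, so a 7 × 7 pool is assumed purely for illustration.

```python
# Standard VGG-16 convolutional base: (number of 3x3 conv layers, output channels) per block.
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def trace_vgg16(height=224, width=224, channels=3, avg_pool=7):
    """Trace feature-map shapes through VGG-16 and count conv-base parameters.

    avg_pool is the size of a hypothetical non-overlapping average-pooling head.
    """
    shapes = []
    params = 0
    for n_convs, out_channels in VGG16_BLOCKS:
        for _ in range(n_convs):
            # 3x3 kernel, stride 1, 'same' padding: spatial size is unchanged.
            params += (3 * 3 * channels + 1) * out_channels  # weights + biases
            channels = out_channels
        # 2x2 max pooling with stride 2 halves each spatial dimension.
        height, width = height // 2, width // 2
        shapes.append((height, width, channels))
    # Average pooling with pool size equal to stride ('valid' padding).
    pooled = (height // avg_pool, width // avg_pool, channels)
    return shapes, params, pooled


shapes, params, pooled = trace_vgg16()
for block, shape in enumerate(shapes, start=1):
    print(f"block{block}_pool -> {shape}")
print(f"conv-base parameters: {params:,}")
print(f"after average pooling: {pooled}")
```

The trace ends in a 7 × 7 × 512 feature map with 14,714,688 trainable parameters in the convolutional base, the same count Keras reports for `tf.keras.applications.VGG16(include_top=False, weights="imagenet", input_shape=(224, 224, 3))`. An `AveragePooling2D` head would be stacked on that output.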

Figure 5: A demonstration of feature downsampling by an AveragePooling2D filter (red block)

Figure 6: Architecture of proposed modified VGG-16 model

To validate the performance of the proposed VGG-16 model, it was compared with existing transfer learning techniques (e.g., DenseNet-201, XceptionNet) on the SARS-CoV-2 CT-scan dataset [27].

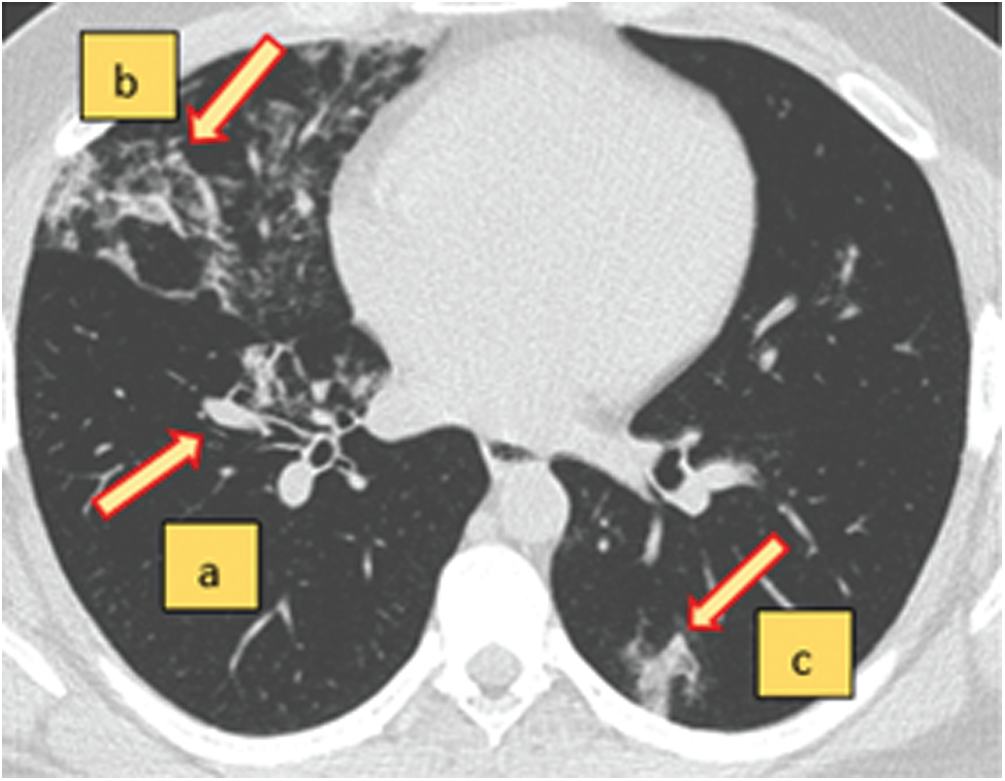

The SARS-CoV-2 CT-scan dataset [27] was used to evaluate the proposed COVID-19 detection system. Fig. 7 shows the interpretation of a sample chest CT scan with COVID-19 positive features indicated by arrows. A total of 1688 chest CT scans were used for evaluation, and Fig. 8 shows sample CT scans from the dataset. Because a model's performance should be measured on unseen data, the samples were split into a training set (65%), a validation set (20%), and a test set (15%). To further improve the diversity of the training CT scans, we applied data augmentation through rotation, shifting, and flipping (Fig. 9). The validation and test sets were not augmented, so that the detection error is measured on real data.
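A sketch of a reproducible 65/20/15 split of the 1688 scans in pure Python. The fixed seed, the integer truncation of the set sizes, and assigning the remainder to the test set are assumptions, since the paper does not describe how the split was drawn. Augmentation would then be applied to the training indices only.

```python
import random


def split_dataset(n_samples, train_frac=0.65, val_frac=0.20, seed=42):
    """Shuffle sample indices and split them into train/validation/test lists.

    Sizes are truncated to integers and the remainder goes to the test set;
    both choices and the seed are assumptions for illustration.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_train = int(train_frac * n_samples)
    n_val = int(val_frac * n_samples)
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


train_idx, val_idx, test_idx = split_dataset(1688)
print(len(train_idx), len(val_idx), len(test_idx))
```

Splitting indices rather than images keeps the three subsets disjoint before any augmentation, so augmented copies of a scan can never leak into the validation or test set.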

Figure 7: An axial view of a chest CT-scan image with characteristics of COVID-19 (+ve) disease (a–c). The red arrows indicate the regions of abnormal manifestations. (a) Onset of lung lesion formation near the centre. (b) Peripherally distributed consolidation with GGO. (c) Shadow of ground glass opacities (GGO) in the lower right region of the CT-scan

Figure 8: Sample images of COVID-19 chest CT-scans from the SARS-CoV-2 dataset

Figure 9: Sample images of data augmentation of the CT-scan images (Rotation angle = 74 Degrees)

The proposed COVID-19 detection method was implemented on a computer equipped with an Intel Core i7 processor, 8 GB of RAM, and an NVIDIA GeForce GTX 1060 graphics card. The model weights were updated using stochastic gradient descent with a learning rate of 9 × 10⁻² (0.09). The learning rate was reduced exponentially by a factor of one-hundredth after each epoch to help the optimizer converge during backpropagation, that is, to avoid overshooting the optimum or settling in a poor local minimum. The number of epochs was set to 20. The images enhanced by preprocessing were analyzed using the BRISQUE [28].
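The phrase "reduced exponentially by a factor of one-hundredth after each epoch" admits more than one reading. The sketch below assumes a 1% reduction per epoch, that is, lr_e = 0.09 × 0.99^e for epoch e; this interpretation is an assumption and is not confirmed by the paper.

```python
def learning_rate(epoch, initial_lr=9e-2, decay=0.01):
    """Per-epoch exponential decay: lr_e = initial_lr * (1 - decay) ** epoch.

    Reading "a factor of one-hundredth" as decay=0.01 (1% per epoch) is an assumption.
    """
    return initial_lr * (1.0 - decay) ** epoch


schedule = [learning_rate(epoch) for epoch in range(20)]  # 20 epochs, as in the paper
print(f"first: {schedule[0]:.4f}, last: {schedule[-1]:.4f}")
```

In TensorFlow, the same per-epoch decay could be expressed with `tf.keras.optimizers.schedules.ExponentialDecay(initial_learning_rate=0.09, decay_steps=steps_per_epoch, decay_rate=0.99, staircase=True)`, where `steps_per_epoch` is a placeholder for the number of batches per epoch.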

We analyzed the performance of the proposed system quantitatively using the BRISQUE, model accuracy, loss error, sensitivity, and specificity. The analysis was performed on the CT scans after image enhancement and deep transfer learning.

4.3.1 Analysis of Image Enhancement Phase

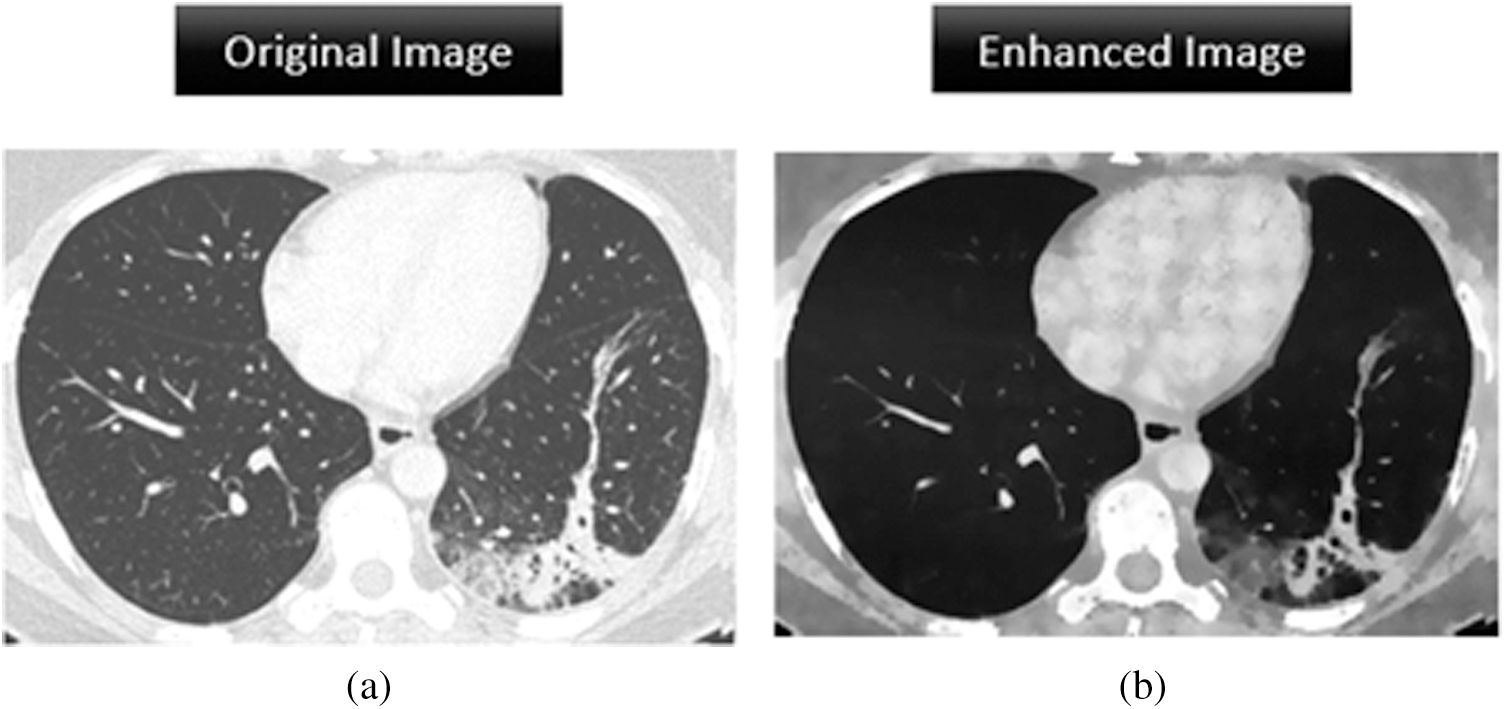

The BRISQUE is a no-reference metric that assesses image quality in the spatial domain; lower scores indicate better perceptual quality. We implemented the image quality assessment in MathWorks MATLAB 2019a. The BRISQUE has been applied to magnetic resonance images [29], demonstrating its reliability for evaluating medical images. Fig. 10 shows the image quality results of a chest CT scan before and after enhancement. The BRISQUE score falls from 135.25 to 46.25 after enhancement, a decrease of approximately 66%, which demonstrates the effectiveness of the proposed image enhancement pipeline in providing suitable images for COVID-19 detection.
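The spatial-domain analysis in BRISQUE starts from mean-subtracted contrast-normalized (MSCN) coefficients, computed as (I − μ)/(σ + C) with a Gaussian-weighted local mean μ and standard deviation σ [28]. The pure-Python sketch below computes only this first stage; the full metric additionally fits generalized Gaussian models to the MSCN coefficients and their neighbor products and maps the resulting features to a score with a trained support vector regressor, which MATLAB's `brisque` function provides. The 7 × 7 window with σ = 7/6, C = 1, and the replicated-border handling are assumptions here.

```python
import math


def gaussian_window(size=7, sigma=7 / 6):
    """Circularly symmetric Gaussian weights normalized to sum to 1."""
    r = size // 2
    weights = [[math.exp(-(x * x + y * y) / (2 * sigma * sigma)) for x in range(-r, r + 1)]
               for y in range(-r, r + 1)]
    total = sum(map(sum, weights))
    return [[v / total for v in row] for row in weights]


def mscn(image, c=1.0, size=7, sigma=7 / 6):
    """Mean-subtracted contrast-normalized coefficients (I - mu) / (sigma_local + c)."""
    h, w = len(image), len(image[0])
    win = gaussian_window(size, sigma)
    r = size // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            mu = second_moment = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    # Clamp coordinates so border pixels are replicated.
                    v = image[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                    weight = win[dy + r][dx + r]
                    mu += weight * v
                    second_moment += weight * v * v
            # Local standard deviation from E[I^2] - mu^2 (clamped against rounding).
            sigma_local = math.sqrt(max(second_moment - mu * mu, 0.0))
            out[y][x] = (image[y][x] - mu) / (sigma_local + c)
    return out
```

For natural, undistorted images the MSCN coefficients follow a characteristic near-Gaussian distribution; distortions change that distribution, which is what the later BRISQUE stages quantify.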

4.3.2 Analysis of Deep Transfer Learning Phase

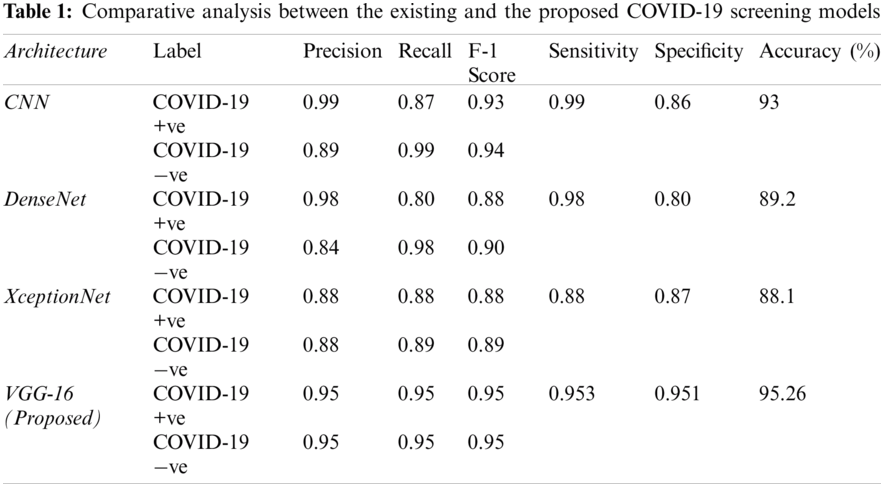

We then analyzed the modified VGG-16 model quantitatively. Deep transfer learning for COVID-19 detection has been applied in various studies [11–13]. We used the SARS-CoV-2 CT-scan dataset with 1688 samples for evaluation and obtained various performance measures, such as the F1 score, sensitivity (recall), specificity, precision, receiver operating characteristic (ROC) curve, and area under the curve (AUC). The proposed deep learning model yielded a training accuracy of 96%, validation accuracy of 95.8%, and test accuracy of 95.26%.

Figure 10: Image enhancement analysis of the original image and the processed image by assigning a BRISQUE score. (a) BRISQUE score = 135.25 (b) BRISQUE score = 46.25

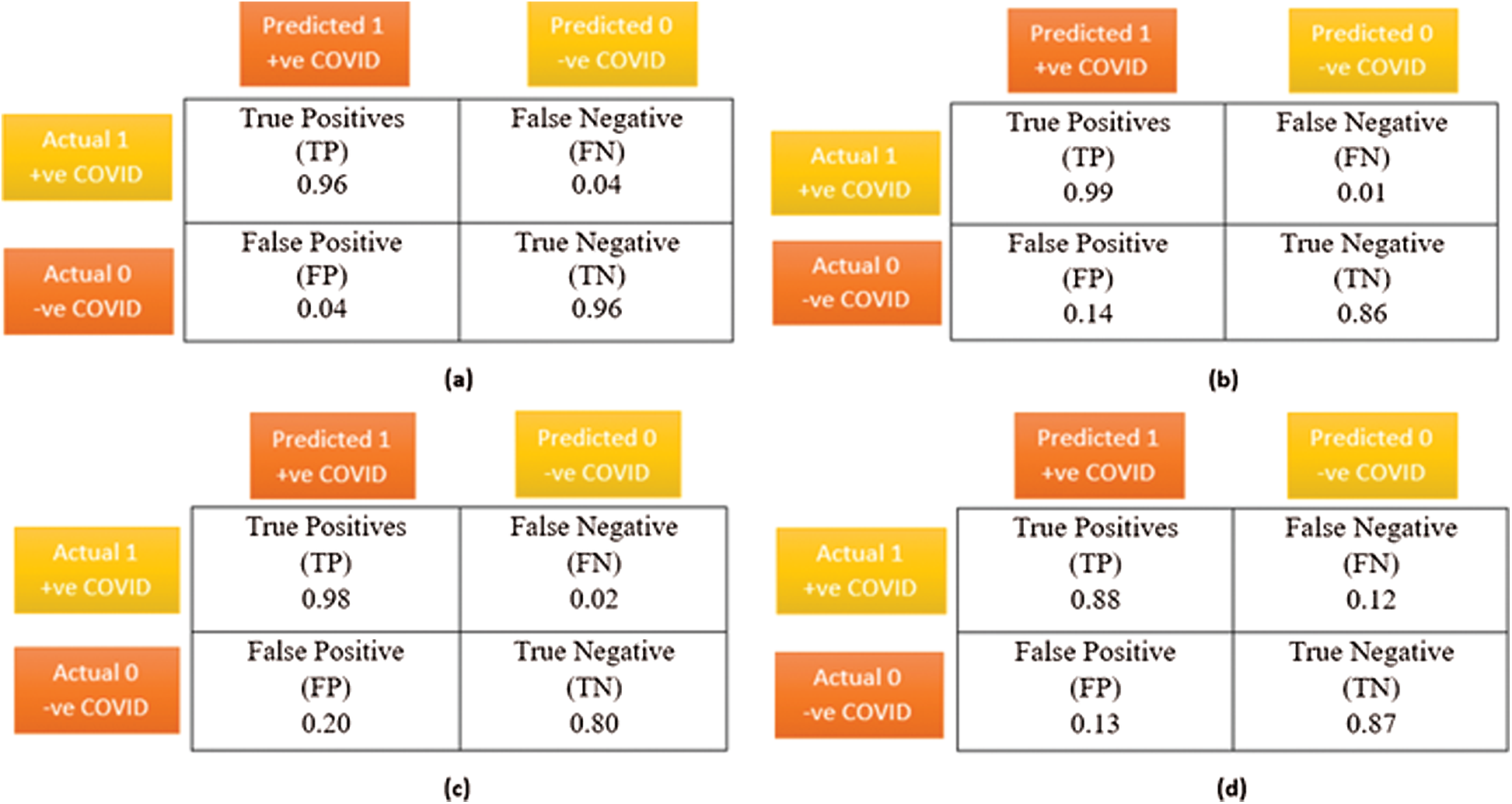

Fig. 11 shows the confusion matrices of the modified VGG-16 model and similar models, including DenseNet, XceptionNet, and a CNN. The proposed VGG-16 model yields more true positives and fewer false negatives than the DenseNet and XceptionNet models (the CNN attains a higher sensitivity, as discussed below). A false negative occurs when a patient with COVID-19 is incorrectly predicted as not having the disease. In a pandemic context, many false negatives could be dangerous because infected people who are unaware of their status may continue to spread the disease. The proposed model had a false negative rate of only about 0.05 (1 − Sn = 4.63%).

We also determined the sensitivity and specificity to test the reliability of the proposed model. Sensitivity (Sn), also known as the true positive rate or recall, measures the proportion of positive cases that are correctly predicted. It can be expressed as:

$$S_n = \frac{TP}{TP + FN}$$

where TP and FN denote the numbers of true positives and false negatives, respectively.

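Specificity (Sp), the true negative rate, is the proportion of people without COVID-19 who are correctly identified as healthy. It can be expressed as:

$$S_p = \frac{TN}{TN + FP}$$

where TN and FP denote the numbers of true negatives and false positives, respectively.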

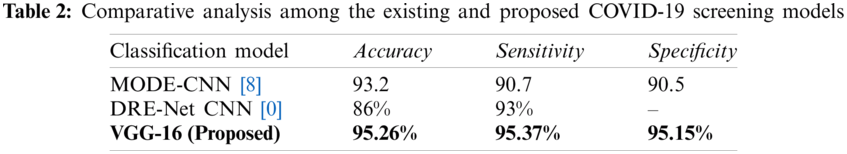

An accurate and dependable model should achieve both high sensitivity and high specificity. The proposed model attained Sn and Sp values of 95.37% and 95.15%, respectively, that is, high rates of both true positives and true negatives. In addition, precision relates the true positives to all positive predictions:

$$\text{Precision} = \frac{TP}{TP + FP}$$

The F1 score is another important measure for validating models on imbalanced classes. It is the harmonic mean of precision and recall and therefore balances these two measures:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$
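The sensitivity, specificity, precision, and F1 score discussed in this subsection can all be computed from confusion-matrix counts with a few lines of Python. The function name is hypothetical, and the counts used to exercise it are made up for illustration; they are not taken from Fig. 11. The false negative rate is included because it equals 1 − Sn.

```python
def classification_metrics(tp, fn, tn, fp):
    """Screening metrics from binary confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # true positive rate (recall)
    specificity = tn / (tn + fp)   # true negative rate
    precision = tp / (tp + fp)     # fraction of positive predictions that are correct
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "accuracy": accuracy,
        "false_negative_rate": 1 - sensitivity,
    }


# Hypothetical counts, for illustration only.
print(classification_metrics(tp=90, fn=10, tn=80, fp=20))
```

Because F1 is a harmonic mean, it is pulled toward the smaller of precision and recall, so a model cannot reach a high F1 by excelling at only one of them.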

Figure 11: A comparative visualization of the confusion matrix analysis, (a) Proposed VGG-16 DTL model, (b) CNN model, (c) DenseNet DTL model, (d) XceptionNet DTL model

Tab. 1 lists the results of the proposed and comparison COVID-19 detection models. The proposed VGG-16 model achieved higher F1 score and precision values than the comparison models, and higher recall than all of them except the CNN, with training, validation, and test accuracies of 96%, 95.8%, and 95.26%, respectively. The specificity values of the XceptionNet and DenseNet models were 87% and 80%, respectively, much lower than that of the proposed model, indicating that the proposed model produces fewer false positives. Although the CNN achieved the highest sensitivity (99%), its accuracy and specificity were relatively low, at 93% and 86%, respectively.

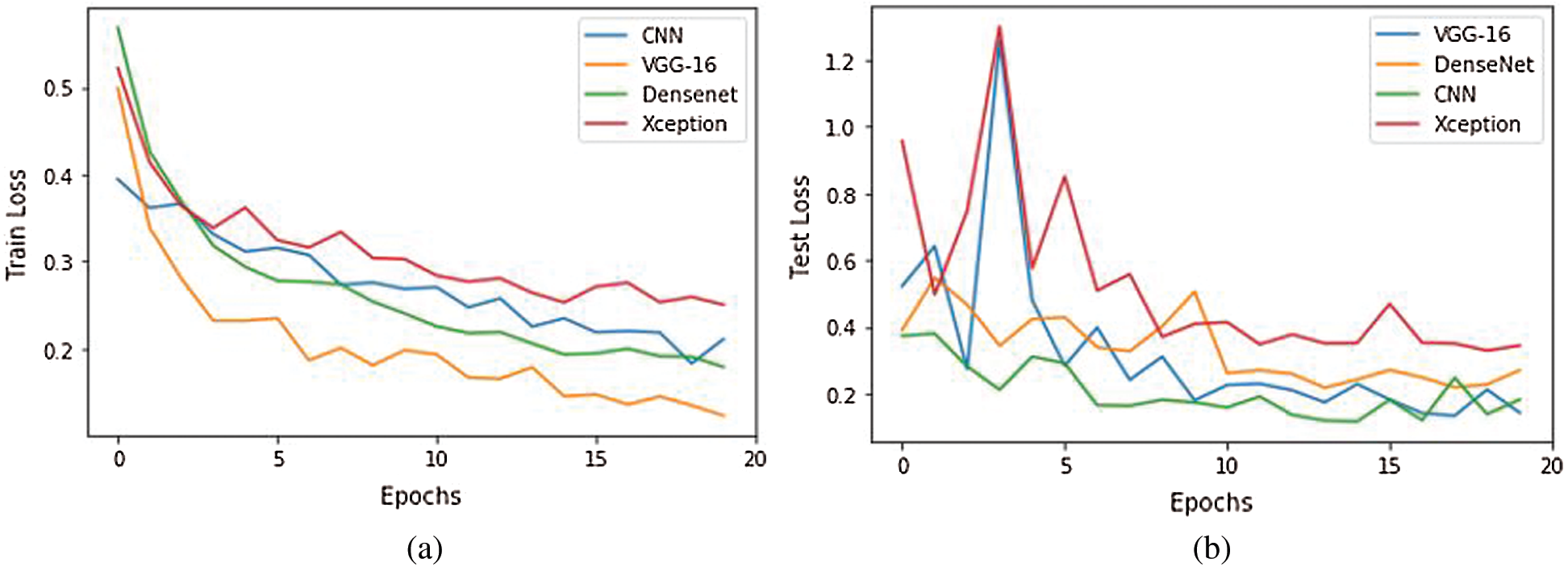

To further analyze the proposed model, we obtained learning curves to identify issues such as overfitting, underfitting, and bias. Fig. 12 shows the loss curves during training and validation. The proposed model converged within 20 epochs in both phases. In Fig. 12b, during epochs 2 and 3, the proposed VGG-16 and XceptionNet models showed uneven convergence of the loss, which may be due to random fluctuations or noise. In subsequent epochs, the validation error decreased steadily with negligible fluctuations. Notably, the proposed VGG-16 model achieved the lowest loss curve, with a training error of 0.16 and a validation error of 0.17.

Figure 12: Training and validation loss analysis of the compared models over 20 epochs. (a) Training loss, (b) Validation loss

The ROC curve of the proposed model shows the tradeoff between the true and false positive rates and indicates how well the binary classifier separates COVID-19 positive and negative cases [8]. Fig. 13 shows the ROC curve of the proposed model, which achieved an AUC of 0.99, higher than those of the other deep transfer learning models (i.e., XceptionNet and DenseNet).
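An ROC curve is obtained by sweeping the decision threshold over the classifier's output scores, and the AUC is the area under the resulting curve. The pure-Python sketch below shows this with trapezoidal integration; the function names are hypothetical, and the labels and scores in the example are made up rather than outputs of the proposed model.

```python
def roc_curve(labels, scores):
    """ROC points (FPR, TPR) obtained by sweeping the threshold from high to low."""
    pairs = sorted(zip(scores, labels), reverse=True)
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Samples tied at the same score cross the threshold together.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical labels (1 = COVID-19 positive) and classifier scores.
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
print(auc(roc_curve(labels, scores)))
```

An AUC of 1.0 means every positive case is scored above every negative case, while 0.5 corresponds to random ranking; an AUC of 0.99 therefore indicates near-perfect separation of the two classes.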

Figure 13: Receiver operating characteristic curve of the proposed VGG-16 model with AUC = 0.9952

4.4 Comparisons with Other Deep Transfer Learning Models

Tab. 2 lists the performance of the proposed model and the state-of-the-art MODE-CNN [8] and DRE-Net CNN [30] models. The proposed model outperforms these models in accuracy, sensitivity, and specificity. Thus, the proposed model can be used to accurately detect COVID-19 from CT scans.

We propose a system for remote COVID-19 screening using deep learning techniques and IoT-based cognitive radio networks. The cloud-based platform would enable contactless interaction with patients by sending samples and results to medical institutions and other locations via IoT networking devices for further analysis. Because bandwidth availability is a major issue, cognitive radio is well suited for transmission: it adapts to environmental conditions, enabling effective communication between connected IoT devices. Combined with artificial intelligence, cognitive-radio-based spectrum utilization may substantially reduce diagnostic and transmission latency. We recognize radiographic patterns using a modified VGG-16 model, and a separate image enhancement pipeline is implemented to provide high-quality input for feature extraction. The proposed model classifies CT scans as COVID-19 positive or negative with an accuracy of 95.26% and reduces the false negative rate to about 0.05, outperforming similar models. Its accuracy is higher than that of the transfer learning models DenseNet and XceptionNet by 15.26 and 8.26 percentage points, respectively.

Several limitations of our study remain to be addressed. When networking devices are combined with artificial intelligence, security breaches and malicious attacks become major concerns. In future work, the IoT-based system can be equipped with a secure data transmission mode using recent technologies such as blockchain for data encryption, thus preventing unauthorized data access during communication. In addition, deep learning models achieve high performance with large datasets, so a larger dataset for COVID-19 screening may further improve detection. Moreover, clinical information about the patients, such as age, medical history, and stage of disease, was not available, which prevented evaluating the severity of COVID-19 positive cases from the CT scans alone. In future work, we will extend the system to classify CT scans and assign severity scores, with the results transmitted through a cloud-based platform for contactless COVID-19 screening.

Funding Statement: This study was supported by the grant of the National Research Foundation of Korea (NRF 2016M3A9E9942010), the grants of the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare (HI18C1216), and the Soonchunhyang University Research Fund.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. M. A. Khan, S. Kadry, Y.-D. Zhang, T. Akram, M. Sharif et al., “Prediction of COVID-19-pneumonia based on selected deep features and one class kernel extreme learning machine,” Computers & Electrical Engineering, vol. 5, no. 2, pp. 106960, 2020.

2. P. Shi, Y. Gao, Y. Shen, E. Chen, H. Chen et al., “Characteristics and evaluation of the effectiveness of monitoring and control measures for the first 69 Patients with COVID-19 from 18 January 2020 to 2 March in Wuxi, China,” Sustainable Cities and Society, vol. 64, pp. 102559, 2020.

3. H. T. Rauf, M. I. U. Lali, M. A. Khan, S. Kadry, H. Alolaiyan et al., “Time series forecasting of COVID-19 transmission in Asia Pacific countries using deep neural networks,” Personal and Ubiquitous Computing, vol. 2, pp. 1–18, 2020.

4. T. Akram, M. Attique, S. Gul, A. Shahzad, M. Altaf et al., “A novel framework for rapid diagnosis of COVID-19 on computed tomography scans,” Pattern Analysis and Applications, vol. 11, pp. 1–14, 2021.

5. A. S. Adly, A. S. Adly and M. S. Adly, “Approaches based on artificial intelligence and the internet of intelligent things to prevent the spread of COVID-19: Scoping review,” Journal of Medical Internet Research, vol. 22, pp. e19104, 2020.

6. M. A. Khan, N. Hussain, A. Majid, M. Alhaisoni, S. A. C. Bukhari et al., “Classification of positive COVID-19 CT scans using deep learning,” Computers, Materials and Continua, vol. 66, pp. 1–15, 2021.

7. S. Sannigrahi, F. Pilla, B. Basu, A. S. Basu and A. Molter, “Examining the association between socio-demographic composition and COVID-19 fatalities in the European region using spatial regression approach,” Sustainable Cities and Society, vol. 62, pp. 102418, 2020.

8. D. Singh, V. Kumar and M. Kaur, “Classification of COVID-19 patients from chest CT images using multi-objective differential evolution–based convolutional neural networks,” European Journal of Clinical Microbiology & Infectious Diseases, vol. 39, pp. 1379–1389, 2020.

9. X. Xie, Z. Zhong, W. Zhao, C. Zheng, F. Wang et al., “Chest CT for typical coronavirus disease 2019 (COVID-19) pneumonia: Relationship to negative RT-PCR testing,” Radiology, vol. 296, pp. E41–E45, 2020.

10. A. Bernheim, X. Mei, M. Huang, Y. Yang, Z. A. Fayad et al., “Chest CT findings in coronavirus disease-19 (COVID-19): Relationship to duration of infection,” Radiology, vol. 6, pp. 200463, 2020.

11. X. Yu, S. Lu, L. Guo, S.-H. Wang and Y.-D. Zhang, “ResGNet-C: A graph convolutional neural network for detection of COVID-19,” Neurocomputing, vol. 7, pp. 1–26, 2020.

12. X. Li, W. Zeng, X. Li, H. Chen, L. Shi et al., “CT imaging changes of corona virus disease 2019 (COVID-19): A multi-center study in Southwest China,” Journal of Translational Medicine, vol. 18, pp. 1–8, 2020.

13. S.-H. Wang, D. R. Nayak, D. S. Guttery, X. Zhang and Y.-D. Zhang, “COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis,” Information Fusion, vol. 68, pp. 131–148, 2020.

14. X. Yu, S.-H. Wang and Y.-D. Zhang, “CGNet: A graph-knowledge embedded convolutional neural network for detection of pneumonia,” Information Processing & Management, vol. 58, pp. 102411, 2020.

15. M. A. Khan, Y.-D. Zhang, M. Sharif and T. Akram, “Pixels to classes: Intelligent learning framework for multiclass skin lesion localization and classification,” Computers & Electrical Engineering, vol. 90, pp. 106956, 2020.

16. M. A. Khan, T. Akram, Y.-D. Zhang and M. Sharif, “Attributes based skin lesion detection and recognition: A mask RCNN and transfer learning-based deep learning framework,” Pattern Recognition Letters, vol. 11, pp. 1–7, 2021.

17. S.-H. Wang, V. V. Govindaraj, J. M. Górriz, X. Zhang and Y.-D. Zhang, “Covid-19 classification by FGCNet with deep feature fusion from graph convolutional network and convolutional neural network,” Information Fusion, vol. 67, pp. 208–229, 2020.

18. Z. Wang, Q. Liu and Q. Dou, “Contrastive cross-site learning with redesigned net for COVID-19 CT classification,” IEEE Journal of Biomedical and Health Informatics, vol. 24, pp. 2806–2813, 2020.

19. T. S. Ahmed, D. Yousri, A. A. Ewees, M. A. A. Al-Qaness, R. Damasevicius et al., “COVID-19 image classification using deep features and fractional-order marine predators algorithm,” Scientific Reports, vol. 10, no. 1, pp. 1–15, 2020.

20. V. Rajinikanth, N. Dey, A. N. J. Raj, A. E. Hassanien, K. Santosh et al., “Harmony-search and otsu based system for coronavirus disease (COVID-19) detection using lung CT scan images,” arXiv preprint arXiv:2004.03431, 2020.

21. K. Li, Y. Fang, W. Li, C. Pan, P. Qin et al., “CT image visual quantitative evaluation and clinical classification of coronavirus disease (COVID-19),” European Radiology, vol. 30, pp. 4407–4416, 2020.

22. C. Sun and Z. Zhai, “The efficacy of social distance and ventilation effectiveness in preventing COVID-19 transmission,” Sustainable Cities and Society, vol. 62, pp. 102390, 2020.

23. I. Ahmed, M. Ahmad, J. J. Rodrigues, G. Jeon and S. Din, “A deep learning-based social distance monitoring framework for COVID-19,” Sustainable Cities and Society, vol. 65, pp. 102571, 2020.

24. T. Tan, K. Sim and C. P. Tso, “Image enhancement using background brightness preserving histogram equalisation,” Electronics Letters, vol. 48, pp. 155–157, 2012.

25. A. M. Reza, “Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement,” Journal of VLSI Signal Processing Systems for Signal, Image and Video Technology, vol. 38, pp. 35–44, 2004.

26. S. M. Pizer, E. P. Amburn, J. D. Austin, R. Cromartie, A. Geselowitz et al., “Adaptive histogram equalization and its variations,” Computer Vision, Graphics, and Image Processing, vol. 39, pp. 355–368, 1987.

27. P. Angelov and E. Almeida Soares, “SARS-CoV-2 CT-scan dataset: A large dataset of real patients CT scans for SARS-CoV-2 identification,” MedRxiv, 2020.

28. A. Mittal, A. K. Moorthy and A. C. Bovik, “No-reference image quality assessment in the spatial domain,” IEEE Transactions on Image Processing, vol. 21, pp. 4695–4708, 2012.

29. L. S. Chow and H. Rajagopal, “Modified-BRISQUE as no reference image quality assessment for structural MR images,” Magnetic Resonance Imaging, vol. 43, pp. 74–87, 2017.

30. Y. Song, S. Zheng, L. Li, X. Zhang, X. Zhang et al., “Deep learning enables accurate diagnosis of novel coronavirus (COVID-19) with CT images,” MedRxiv, 2020.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.