DOI:10.32604/cmc.2021.017454

Computers, Materials & Continua

Article

Visibility Enhancement of Scene Images Degraded by Foggy Weather Condition: An Application to Video Surveillance

1Department of Computer Science, Shaheed Zulfikar Ali Bhutto Institute of Science and Technology, Islamabad, 44000, Pakistan

2Department of Computer Science, College of Computers and Information Technology, Taif University, Taif, 21944, Saudi Arabia

3College of Applied Computer Science, King Saud University (Almuzahmiyah Campus), Riyadh, 11543, Saudi Arabia

4Department of Computer Science and Information Systems, College of Business Studies, PAAET, 12062, Kuwait

*Corresponding Author: Abdulrahman M. Qahtani. Email: amqahtani@tu.edu.sa

Received: 30 January 2021; Accepted: 08 March 2021

Abstract: In recent years, video surveillance applications have played a significant role in our daily lives. Images captured for video surveillance in foggy and hazy weather lose their original appearance, which reduces visibility. Enhancing the visibility of foggy and hazy images helps numerous computer and machine vision applications, such as satellite imagery, object detection, target killing, and surveillance. Many visibility enhancement algorithms and methods have been proposed in the past to remove fog. However, these techniques suffer from several limitations that place strong obstacles in the way of real-world outdoor computer vision applications: they do not perform well when images contain heavy fog, large white regions, or strong atmospheric light. This research proposes a new framework to defog and dehaze images in order to enhance the visibility of foggy and hazy images. The proposed framework is based on a conditional generative adversarial network (CGAN) with two networks, a generator and a discriminator, each having distinct properties. The generator network generates fog-free images from foggy images, and the discriminator network distinguishes between the restored image and the original fog-free image. Experiments are conducted on the FRIDA dataset and on haze images. To assess the performance of the proposed method, we use PSNR and SSIM on the fog dataset and, on the Haze dataset, e, $\bar{r}$, and

Keywords: Video surveillance; degraded images; image restoration; transmission map; visibility enhancement

Restoring foggy and hazy images is important for various computer vision applications in outdoor scenes. Fog drastically reduces visibility and is likely to cause computer vision systems to fail. Removing fog and haze to enhance image visibility is therefore very important, because such images are used for many purposes, including surveillance, highway driving, aircraft take-off and landing, object tracking, object identification, and various other fields. Image defogging and dehazing are important for outdoor computer vision systems, and there are many situations in which they are needed, such as traffic and tourism, especially in winter and in hilly areas where fog, rain, and haze are very common. Poor visibility not only degrades the perceptual quality of an image but also affects the performance of computer vision applications [1]. Images captured in foggy and hazy weather lose their true contrast and color details [2]. This natural phenomenon decreases the color contrast and surface color of objects as their distance from the observer increases. Because of this poor contrast and low visibility, degraded images create difficulties in various real-time applications.

In bad weather, images are not clearly visible because of airlight and attenuation in the atmosphere. In the presence of atmospheric particles, part of the light is absorbed and scattered in the atmosphere. Because less light arrives from scene objects, image contrast decreases and colors become faded, which leads to poor visual perception of the images [3]. Visibility enhancement of hazy and foggy images plays an effective role in various real-time outdoor computer vision applications, such as robot navigation, transportation, outdoor monitoring, object tracking, object identification, and remote sensing systems [4]. Many outdoor images taken in foggy weather have reduced visibility and are drastically degraded [5]. He et al. [6] proposed the dark channel prior (DCP) method, which is based on the observation that, in most local patches of haze-free outdoor images, at least one color channel contains some pixels of very low intensity; in foggy and hazy images these dark pixels are brightened by airlight. DCP uses this prior to estimate the transmission map. The technique generally gives good results, but it does not work well on grayscale images. Another drawback lies in its estimation of the atmospheric light of a hazy or foggy image: it selects the 0.1% of pixels with the largest dark channel values and takes the color of the brightest of these pixels as the atmospheric light. Goodfellow et al. [7] proposed the Generative Adversarial Network (GAN), which consists of two networks, a discriminator and a generator, each with distinct properties. Applied to defogging, the generator network takes a foggy or hazy image as input and generates a fog-free image, and the goal of the discriminator network is to differentiate between the original fog-free image and the generated image.
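To make the DCP ingredients concrete, here is a minimal pure-Python sketch of the dark channel, the 0.1% atmospheric light rule, and the transmission estimate t(x) = 1 − ω·dark(I/A) described by He et al. [6]. Images are assumed to be nested lists of (R, G, B) tuples in [0, 1]; the defaults (15×15 patch, ω = 0.95) follow [6], while the function names, the border handling, and the use of the channel sum as "intensity" are our own illustrative choices, not code from [6] or from this paper.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), each in [0, 1]
Image = List[List[Pixel]]


def dark_channel(img: Image, patch: int = 15) -> List[List[float]]:
    """Minimum over the three channels, then over a patch x patch window clipped at the border."""
    h, w, r = len(img), len(img[0]), patch // 2
    min_rgb = [[min(px) for px in row] for row in img]  # per-pixel channel minimum
    return [
        [
            min(
                min_rgb[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def estimate_atmospheric_light(img: Image, dark: List[List[float]], fraction: float = 0.001) -> Pixel:
    """Among the top `fraction` of dark-channel pixels, return the brightest input pixel."""
    coords = [(y, x) for y in range(len(img)) for x in range(len(img[0]))]
    coords.sort(key=lambda p: dark[p[0]][p[1]], reverse=True)  # haziest pixels first
    candidates = coords[: max(1, int(len(coords) * fraction))]
    # Intensity is approximated here by the sum of the three channels.
    y, x = max(candidates, key=lambda p: sum(img[p[0]][p[1]]))
    return img[y][x]


def estimate_transmission(img: Image, A: Pixel, omega: float = 0.95, patch: int = 15) -> List[List[float]]:
    """t(x) = 1 - omega * dark_channel(I / A); omega < 1 keeps a little haze for distant objects."""
    normalized = [[tuple(c / a for c, a in zip(px, A)) for px in row] for row in img]
    return [[1.0 - omega * v for v in row] for row in dark_channel(normalized, patch)]
```

Because the bright candidate set is so small, a single white object (for example a car or a sign) can be mistaken for the atmospheric light, which is one reason this estimate is fragile.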

The reason for enhancing the visibility of foggy and hazy images is to help numerous computer vision and machine vision applications, such as satellite imagery, object detection, target killing, and surveillance. Such systems, and several other real-time computer vision applications, need to be able to enhance the visibility of foggy and hazy images. Classifying the visibility of images is not sufficient on its own: properties of the dehazed image such as color, brightness, and texture must also be identified to adjust image contrast. This could provide a valuable input and solution for real-world computer vision applications. Images captured in inclement weather, such as fog and haze, always have poor visibility. This is because light reflected from scene objects is scattered in the atmosphere, so less light reaches the camera. Aerosols such as fog, dust, and water droplets mix with the ambient light and limit visibility. When fog, haze, mist, rain, or snow is present in the atmosphere, very fine suspended droplets block and scatter light as it passes through the medium. Worldwide, many trains and flights are affected by fog during winter, and bad weather and poor visibility also affect vehicle driving and road sign systems. There is therefore a dire need for an image defogging and dehazing framework that discriminates between foggy and fog-free images. We propose a conditional generative adversarial network (CGAN) that directly removes haze and fog from an image. The proposed CGAN consists of two networks, a generator and a discriminator. The generator network takes a foggy image as input and generates a fog-free image, and the goal of the discriminator network is to differentiate between the original fog-free image and the restored image. According to researchers, estimating the atmospheric light and the transmission map is an important step in image defogging/dehazing. In the proposed method, the generator network directly estimates the transmission map, the atmospheric light, and the scene radiance without producing any halo artifacts.

Figure 1: An illustrative example of image defogging: (a) the input foggy image; (b) the defogged image

The remainder of this paper is organized as follows. Section 2 reviews related work in the context of this research. Section 3 explains the proposed model with the help of a hypothetical example. Section 4 presents the experimental results of the proposed methodology and a comparison with state-of-the-art methods. Finally, Section 5 concludes the paper.

In this section, we briefly review the existing literature. Many researchers have proposed methods, algorithms, techniques, and frameworks to enhance the visibility of foggy and hazy images, and this study presents related work on both. The reviewed work is categorized in the following sections:

Images taken under different environmental conditions suffer from color shift and localized light problems. Huang et al. [8] introduced three modules: color analysis (CA), visibility restoration (VR), and hybrid dark channel prior (HDCP). The HDCP module was used to estimate the atmospheric light. The CA module was based on the gray world assumption and determined the intensity of the RGB color channels of captured images together with color shift information. The VR module restores a high-quality fog-free image. The aim of this study was to remove fog and haze for better visibility and safety. The FRIDA dataset was used for the experiments, and three performance metrics were used: e, $\bar{r}$, and

Images captured in foggy weather have low contrast. Negru et al. [9] proposed a contrast restoration approach based on Koschmieder's law. To estimate the atmospheric veil, the color image was converted into a grayscale image. The atmospheric veil V is a smooth function that gives the amount of white background to be subtracted from the color image. A median filter was used to remove noise from the image while preserving fine edge details. Koschmieder's law is used to compute the restored luminance of a background object. The proposed model works on daytime fog and enhances the contrast of foggy images. The FRIDA dataset was used for the experiments, and two performance metrics were used to measure image restoration quality: $\bar{r}$ and e. Removing fog from foggy images is a difficult task in computer vision. To address this difficulty, Guo et al. [10] proposed a Markov random field (MRF) framework. The graph based
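Koschmieder's law, on which [9] builds, relates the observed luminance L of an object at distance d to its intrinsic luminance L_0 and to the luminance L_f of the fog background through the extinction coefficient k. Written in this standard form (the symbols are our notation, not necessarily those of [9]), a short derivation shows why the apparent contrast C relative to the background decays exponentially with distance, which is the effect described in the introduction:

$$
\begin{aligned}
L &= L_0\,e^{-kd} + L_f\left(1 - e^{-kd}\right),\\
C &= \frac{L - L_f}{L_f}
   = \frac{L_0\,e^{-kd} + L_f - L_f\,e^{-kd} - L_f}{L_f}
   = \frac{L_0 - L_f}{L_f}\,e^{-kd}
   = C_0\,e^{-kd},
\end{aligned}
$$

where C_0 = (L_0 − L_f)/L_f is the intrinsic contrast. Restoration methods built on this law estimate the fog term L_f(1 − e^{−kd}) and invert the relation to recover L_0.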

Outdoor images often have degraded visibility and a gray or bluish hue caused by the weather. Nair et al. [12] proposed an algorithm that uses a center-surround Gaussian filter to dehaze images. The training images consist of images in three different color spaces. Tai et al. [13] proposed a method based on McCartney's atmospheric scattering model. The method consists of two phases: transmission assumption and a haze similarity block. A guided filter was used to estimate the transmission map and atmospheric light. The Fog Road Image Database (FRIDA) was used for the experiments. To measure the restoration rate of the dehazed images, three performance parameters were used: e, $\bar{r}$, and

Visibility enhancement methods usually cannot correct color cast or restore image contrast because of poor approximation of haze thickness. Chai et al. [15] proposed a visibility enhancement method based on a Laplacian strategy. The method consists of two modules: an image visibility restoration (IVR) module and a haze thickness estimation (HTE) module. The IVR module recovers brightness in the fog-free image. For testing, 1586 real-world images were used, and three well-known performance metrics were applied: e, $\bar{r}$, and

Traditional fog and haze removal methods fail to restore sky regions. Zhu et al. [16] introduced a fusion of luminance and dark channel prior (F-LDCP) method to recover the original image from a hazy one. The method comprises three steps: (i) transmission map correction, (ii) atmospheric light estimation, and (iii) soft segmentation. The aim of the study was to recover both long-range and close-range images containing sky. Sixty UAV images were used in the experiments, and two metrics, PSNR and SSIM, were used to assess performance. The method performed well and preserved the natural details of sky regions. Luan et al. [17] introduced a defogging method based on a learning framework. In the dehazing stage, a median filter was used to estimate the atmospheric light. Seven different quality-related fog features were extracted, including the MIC, MSE, HIS, WEB, MEA, and SAT features. The Michelson contrast feature (MIC) was used for periodic patterns and textures. The Weber contrast feature (WEB) is defined as the normalized difference between the color of an object and its background. The histogram feature (HIS) is frequently used as a parameter of image quality. The saturation feature (SAT) is based on the ratio of the minimum to the maximum pixel value. A total of 427 real-world outdoor foggy images were used in the experiments, and three metrics were used: e, $\bar{r}$, and
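The Michelson (MIC) and Weber (WEB) contrast features mentioned for [17] rest on two textbook formulas, sketched below for single-channel intensities in [0, 1]. How [17] pools these values over an image is not described here, so the per-patch usage is an illustrative assumption, as are the function names.

```python
from typing import Sequence


def michelson_contrast(intensities: Sequence[float]) -> float:
    """(I_max - I_min) / (I_max + I_min) over a patch; 0.0 for an all-black patch."""
    i_max, i_min = max(intensities), min(intensities)
    total = i_max + i_min
    return 0.0 if total == 0 else (i_max - i_min) / total


def weber_contrast(target: float, background: float) -> float:
    """(I - I_b) / I_b: object-background difference normalised by the background."""
    if background == 0:
        raise ValueError("background intensity must be non-zero")
    return (target - background) / background


# A hazy patch has a compressed intensity range, so its Michelson contrast is lower.
clear_patch = [0.1, 0.4, 0.9]
foggy_patch = [0.55, 0.6, 0.7]
print(michelson_contrast(clear_patch), michelson_contrast(foggy_patch))
```

Michelson contrast suits periodic patterns and textures because it uses only the extremes of a region, whereas Weber contrast compares a small object against a uniform background.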

Images taken in poor weather lose contrast. Li et al. [18] proposed a defogging method based on a conditional generative adversarial network (CGAN), in which the generator network produces dehazed images from the input hazy images. Li et al. [19] proposed a cascaded convolutional neural network (CNN) for image defogging. The medium transmission map was estimated by a densely connected CNN, and the global atmospheric light was estimated by a weight CNN.

In this section, we have reviewed different paradigms proposed by researchers for the visibility enhancement of foggy and hazy images [8–19]. However, these techniques do not perform well when images contain heavy fog, large white regions, or strong atmospheric light; as a result, the defogged and dehazed images have low contrast and low visibility. As a powerful class of deep learning models, a CGAN can directly remove fog and haze and enhance the visibility of weather-degraded foggy and hazy images. The proposed CGAN consists of two networks, a generator and a discriminator. The generator network takes a foggy image as input and generates a fog-free image, and the goal of the discriminator network is to differentiate between the original fog-free image and the restored image.

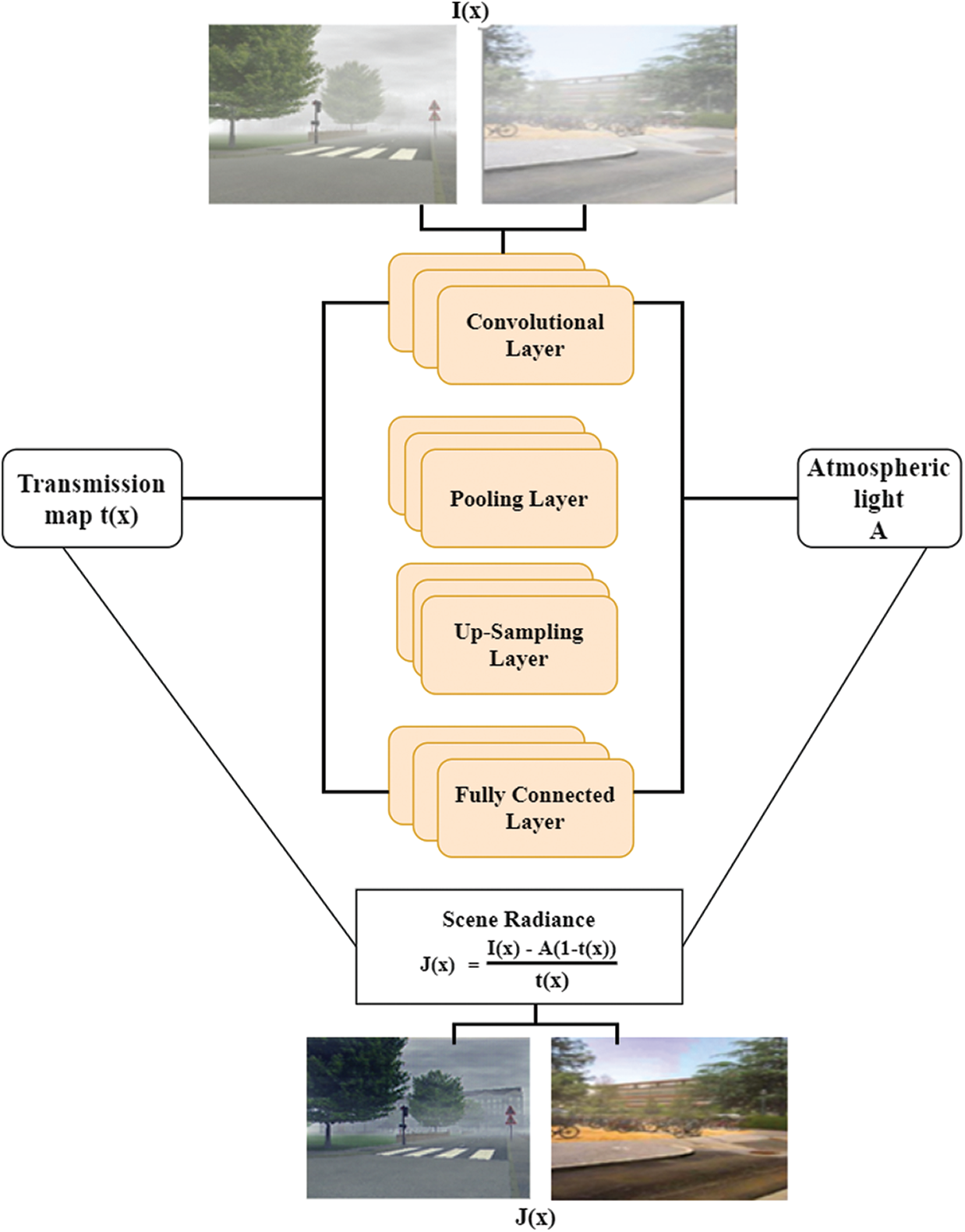

The weaknesses of earlier work were discussed in the previous section; to overcome them, a defogging and dehazing framework is proposed. The overall flowchart of the proposed methodology for the visibility enhancement of foggy and hazy images is shown in Fig. 2. The proposed framework is novel in terms of the issues it addresses collectively. The purpose of this research is to overcome the problem of low visibility, which is also very beneficial for the National Highway Traffic Safety Administration system (NHTSAS), remote sensing systems, traffic monitoring systems, and object recognition systems. To the best of our knowledge, this is the first time a CGAN has been used to remove fog from images. In the pre-processing phase, a median filter is used to remove noise from images to produce quality results. The median filter is a nonlinear filter that removes noise from weather-degraded foggy and hazy images. Among the many filters used in image processing, the median filter removes noise while preserving edges, smooths the image, and maintains its color details; it also takes little time to compute. After pre-processing, the CGAN is applied. The proposed CGAN consists of two networks, a generator network and a discriminator network. The generator network takes a hazy or foggy image as input and generates a haze- and fog-free image. In the proposed method, the generator network directly estimates the atmospheric light and the transmission. The discriminator network distinguishes between the generated image and the original fog- and haze-free image.

Figure 2: Proposed framework for image dehazing/defogging

In computer vision and image processing, the formation of a foggy image is generally described by the atmospheric scattering model [10]:

$$I(x) = J(x)\,t(x) + A\bigl(1 - t(x)\bigr) \tag{1}$$

In Eq. (1), I(x) represents the foggy image, J(x) is the scene radiance, i.e., the restored fog- and haze-free image, A denotes the airlight, and t(x) is the transmission map. The atmospheric scattering coefficient is
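As an illustration of Eq. (1), the sketch below synthesizes fog on a grayscale image from a depth map d(x); FRIDA, used in the experiments, provides such depth maps. Because the definition of the scattering coefficient β is cut off above, we assume the common homogeneous-fog form t(x) = e^{−βd(x)}; the scalar airlight A and the function names are likewise illustrative.

```python
import math
from typing import List

Grid = List[List[float]]


def transmission_from_depth(depth: Grid, beta: float) -> Grid:
    """t(x) = exp(-beta * d(x)) for homogeneous fog with scattering coefficient beta (assumed form)."""
    return [[math.exp(-beta * d) for d in row] for row in depth]


def add_fog(clear: Grid, t: Grid, A: float) -> Grid:
    """Eq. (1) per pixel: I(x) = J(x) * t(x) + A * (1 - t(x))."""
    return [
        [j * tx + A * (1.0 - tx) for j, tx in zip(j_row, t_row)]
        for j_row, t_row in zip(clear, t)
    ]


depth = [[0.0, math.log(2.0)], [5.0, 50.0]]  # distances in arbitrary units
clear = [[0.2, 0.2], [0.2, 0.2]]             # J(x), grayscale in [0, 1]
foggy = add_fog(clear, transmission_from_depth(depth, beta=1.0), A=1.0)
# Near pixels keep their radiance; distant ones converge to the airlight A.
print(foggy)
```

The example shows the two terms of Eq. (1) trading off: at d = 0 the direct attenuation term J(x)t(x) dominates, while far away the airlight term A(1 − t(x)) takes over, which is why distant objects fade into a uniform gray.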

Mist, fog, snow, haze, and rain reduce the visibility of outdoor images captured in foggy and hazy weather. To enhance visibility and obtain quality results, it is important to remove noise from foggy and hazy images: visibility cannot be enhanced effectively in the presence of noise, which also lowers the performance of computer vision applications. Pre-processing is therefore an essential part of the visibility enhancement of foggy and hazy images. In the pre-processing phase, a median filter is applied to the input foggy or hazy image. The median filter is a nonlinear filter that removes noise from weather-degraded foggy and hazy images while preserving edges, smoothing the image, and maintaining color details. Its main purpose is to improve the quality of images that have been corrupted by noise.
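The pre-processing step can be sketched as a plain median filter. This minimal version assumes a single-channel image stored as nested lists, a 3×3 window, and replicated borders; the paper does not state the window size or border handling, so these are assumptions, and for color images the filter would be applied to each channel. In practice, OpenCV's `cv2.medianBlur` offers an optimized implementation of the same operation.

```python
from statistics import median
from typing import List

Grid = List[List[float]]


def median_filter(img: Grid, size: int = 3) -> Grid:
    """Replace each pixel by the median of its size x size neighbourhood (border pixels replicated)."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    h, w, r = len(img), len(img[0]), size // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Clamp neighbour coordinates so the window always holds size * size values.
            window = [
                img[min(max(yy, 0), h - 1)][min(max(xx, 0), w - 1)]
                for yy in range(y - r, y + r + 1)
                for xx in range(x - r, x + r + 1)
            ]
            out[y][x] = median(window)
    return out


noisy = [[0.5] * 5 for _ in range(5)]
noisy[2][2] = 1.0                  # a single "salt" pixel
print(median_filter(noisy)[2][2])  # prints 0.5: the outlier is replaced
```

Unlike a mean filter, the median never averages across a step edge: a pixel next to a sharp 0-to-1 transition takes whichever value holds the majority in its window, so the edge stays sharp while isolated outliers disappear.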

3.2 Conditional Generative Adversarial Network

Once pre-processing is done, the CGAN is applied. The proposed conditional generative adversarial network combines two networks: a generator network and a discriminator network.
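The paper does not write out its training objective. For orientation, the standard conditional GAN objective is shown below in this paper's notation, with the foggy input I acting as the condition seen by both networks and J the fog-free ground truth. Image-to-image CGANs such as pix2pix commonly add an L1 reconstruction term weighted by λ. Whether the proposed framework uses exactly this loss is an assumption.

$$
\min_G \max_D \;
\mathbb{E}_{I,J}\bigl[\log D(I, J)\bigr]
+ \mathbb{E}_{I}\bigl[\log\bigl(1 - D(I, G(I))\bigr)\bigr]
+ \lambda\,\mathbb{E}_{I,J}\bigl[\lVert J - G(I)\rVert_1\bigr]
$$

The discriminator D pushes D(I, J) toward 1 and D(I, G(I)) toward 0, while the generator G tries to make its restored image G(I) indistinguishable from J; the L1 term, which does not depend on D, keeps G(I) close to the ground truth pixel by pixel.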

The generator network directly estimates the atmospheric light and the transmission map. It consists of three steps: atmospheric light, transmission map, and scene radiance.

Transmission Map: The generator network architecture is shown in Fig. 3. The generator network uses four types of layers to calculate the transmission map: convolutional, pooling, up-sampling, and fully connected layers. In the generator network, the first convolutional layer has 16 filters with kernel size

Atmospheric Light: The atmospheric light component estimates the atmospheric light A, as shown in Fig. 3. The generator network uses four types of layers to estimate A: convolutional, pooling, up-sampling, and dense layers. The up-sampling layer consists of a

Scene Radiance: After the atmospheric light and the transmission map have been estimated, the scene radiance is recovered. This step combines the atmospheric light A, the transmission map t(x), and the foggy image I(x) to generate the restored image J(x), as given in Eq. (3).
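Solving the scattering model I(x) = J(x)t(x) + A(1 − t(x)) of Eq. (1) for J(x) gives J(x) = (I(x) − A)/t(x) + A. The sketch below applies this per pixel to a grayscale image. The lower bound t0 = 0.1, borrowed from the dark channel prior method [6] to avoid amplifying noise where t(x) approaches 0, is our assumption, and the exact form of Eq. (3) in the proposed generator may differ.

```python
from typing import List

Grid = List[List[float]]


def recover_radiance(foggy: Grid, t: Grid, A: float, t0: float = 0.1) -> Grid:
    """Invert Eq. (1) per pixel: J(x) = (I(x) - A) / max(t(x), t0) + A."""
    return [
        [(i - A) / max(tx, t0) + A for i, tx in zip(i_row, t_row)]
        for i_row, t_row in zip(foggy, t)
    ]


# Round trip: fog a known J with Eq. (1), then recover it.
J = [[0.2, 0.4]]
t = [[0.5, 0.8]]
A = 0.9
I = [[j * tx + A * (1 - tx) for j, tx in zip(J[0], t[0])]]
print(recover_radiance(I, t, A))  # approximately [[0.2, 0.4]]
```

The division by t(x) is what makes accurate transmission estimates so important: an underestimated t(x) over-amplifies the difference I(x) − A and produces the dark, over-enhanced regions reported for several methods in Section 4.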

Figure 3: Generator network architecture

The discriminator network architecture is shown in Fig. 4. Its purpose is to differentiate between the original fog-free image and the generated image. The discriminator is built from convolution, batch normalization, and Leaky ReLU operations, with a sigmoid function as the final layer [18]. In this way, the discriminator distinguishes the original fog-free image from the restored fog-free image.
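To illustrate what the Leaky ReLU activations and the final sigmoid layer do, the scalar sketch below turns discriminator logits into probabilities and computes the usual binary cross-entropy discriminator loss. The slope 0.2 and the choice of binary cross-entropy are assumptions (common in CGAN discriminators), since the paper specifies neither; the convolution and batch normalization layers are omitted, and the function names are hypothetical.

```python
import math
from typing import Sequence


def leaky_relu(x: float, slope: float = 0.2) -> float:
    """Pass positives unchanged; scale negatives by a small slope instead of zeroing them."""
    return x if x > 0 else slope * x


def sigmoid(x: float) -> float:
    """Numerically stable logistic function mapping a logit to a probability of 'real'."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def discriminator_bce(real_logits: Sequence[float], fake_logits: Sequence[float]) -> float:
    """Mean binary cross-entropy: fog-free images labelled 1, restored images labelled 0."""
    eps = 1e-12  # guards against log(0)
    real = -sum(math.log(sigmoid(z) + eps) for z in real_logits) / len(real_logits)
    fake = -sum(math.log(1.0 - sigmoid(z) + eps) for z in fake_logits) / len(fake_logits)
    return real + fake


# An undecided discriminator (logit 0 everywhere) pays 2 ln 2; a confident, correct one pays less.
print(discriminator_bce([0.0], [0.0]), discriminator_bce([4.0], [-4.0]))
```

Leaky ReLU keeps a small gradient for negative activations, which helps the discriminator keep passing a useful training signal back to the generator.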

Figure 4: Discriminator network architecture

We implemented the proposed method on a PC with an Intel(R) Core(TM) CPU @ 3.20 GHz, 16.0 GB RAM, Windows 10 Pro, and OpenCV 2.2.8. We trained the proposed model on an NVIDIA TITAN X GPU; the model is coded in Python using the TensorFlow framework and was implemented in PyCharm. PyTorch was also used for the implementation of the CGAN, which removes fog and haze from images. Training took about 6 hours. We performed experiments on two commonly used datasets: FRIDA (Fog Road Image Database) and Haze images [20]. FRIDA comprises 18 urban road scenes and 90 synthetic images: each fog-free image is accompanied by its depth map and four foggy images, each with a different type of fog added, such as cloudy fog and heterogeneous fog. The Haze images set contains 35 hazy images and 35 haze-free images covering different kinds of scenes. All the training images are resized

I. FRIDA (Fog Road Image Database):

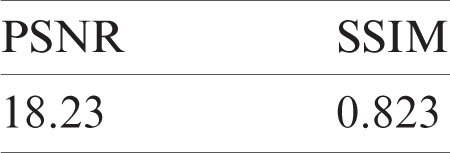

To assess the performance of the proposed method on the FRIDA [20] dataset, two performance metrics were used: PSNR and SSIM. The peak signal-to-noise ratio (PSNR) measures the ratio between the maximum signal power and the power of the error between the fog-free image and the restored image:

$$\mathrm{PSNR} = 10\log_{10}\left(\frac{\mathrm{MAX}_f^2}{\mathrm{MSE}}\right)$$

where MSE is the mean squared error and MAX_f is the maximum signal value that exists in the original image. The structural similarity index (SSIM) measures the similarity between the original fog-free image and the restored image:

$$\mathrm{SSIM}(x, y) = l(x, y)\,c(x, y)\,s(x, y) \tag{5}$$

In Eq. (5), l(x, y) is the luminance (brightness) comparison function, which measures the closeness of the two images' mean brightness, c(x, y) is the contrast comparison function between the two images, and s(x, y) is the structure comparison function, given by the correlation coefficient between the two images. Higher SSIM and PSNR values indicate better restoration quality and enhanced visibility of the restored image.
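A minimal sketch of both metrics for single-channel images in [0, 1] follows. Two simplifications are assumptions: MAX_f is taken as the maximum possible value (1.0), whereas the text above defines it as the maximum value in the original image (pass that as `max_val` instead), and SSIM is computed once over the whole image rather than over local windows and then averaged, as standard implementations such as `skimage.metrics.structural_similarity` do, so its values will not match published SSIM figures.

```python
import math
from typing import List

Grid = List[List[float]]


def _flatten(img: Grid) -> List[float]:
    return [v for row in img for v in row]


def psnr(ref: Grid, test: Grid, max_val: float = 1.0) -> float:
    """10 * log10(MAX^2 / MSE); infinite for identical images."""
    a, b = _flatten(ref), _flatten(test)
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)


def global_ssim(ref: Grid, test: Grid, max_val: float = 1.0) -> float:
    """SSIM over the whole image at once: l * c * s with the usual C3 = C2 / 2 simplification."""
    a, b = _flatten(ref), _flatten(test)
    n = len(a)
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2  # stabilising constants
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / (n - 1)
    var_b = sum((y - mu_b) ** 2 for y in b) / (n - 1)
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / (n - 1)
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    )


clean = [[0.5, 0.5], [0.5, 0.5]]
restored = [[0.6, 0.6], [0.6, 0.6]]
print(psnr(clean, restored))  # about 20 dB, since MSE = 0.01
```

With C3 = C2/2 the structure term cancels against part of the contrast term, which is why the product l·c·s of Eq. (5) collapses into the single fraction returned by `global_ssim`.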

II. Haze Dataset:

To validate the proposed method and measure its image restoration rate on the Haze images, three performance indicators were used: e, $\bar{r}$, and
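The three indicators are only partly legible above. Assuming they are the blind contrast-restoration indicators of Hautière et al. (the rate of new visible edges e, the mean gradient ratio $\bar{r}$ at visible edges, and the share of newly saturated pixels, usually written σ), their final formulas are simple. Detecting visible edges is the hard part and is left out of this sketch, so edge counts and per-edge gradient ratios are taken as inputs; all function names are hypothetical.

```python
import math
from typing import Sequence


def new_edge_rate(n_visible_before: int, n_visible_after: int) -> float:
    """e = (n_r - n_o) / n_o: relative gain in visible edges after restoration."""
    return (n_visible_after - n_visible_before) / n_visible_before


def mean_gradient_ratio(ratios: Sequence[float]) -> float:
    """r_bar = exp(mean(log r_i)): geometric mean of restored/original gradient ratios at visible edges."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


def saturated_share(n_newly_saturated: int, width: int, height: int) -> float:
    """sigma = n_s / (width * height): pixels turned pure black or white by the restoration."""
    return n_newly_saturated / (width * height)


print(new_edge_rate(120, 180), mean_gradient_ratio([2.0, 8.0]), saturated_share(10, 10, 100))
```

Under these definitions, higher e and $\bar{r}$ indicate that more edges became visible and contrast increased, while a lower σ indicates that the restoration did not clip pixels to pure black or white.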

4.2.1 Experimental Result on FRIDA Dataset

To assess the performance of the proposed method, experiments are performed on the FRIDA dataset, which consists of 18 urban road foggy scenes and 90 synthetic images. PSNR and SSIM are used as performance metrics. Tab. 1 shows the PSNR and SSIM values achieved by the proposed framework.

Table 1: Result of proposed method on FRIDA dataset

To validate the performance of the proposed method, a comparative analysis based on PSNR and SSIM is presented in Tab. 2, and the comparison is also shown graphically in Fig. 8. As Tab. 2 shows, the proposed method achieves higher PSNR and SSIM values than existing methods and enhances contrast and visual quality. We attribute this success to the correct calculation of the atmospheric light and the transmission map by the generator network, together with pre-processing, which yields good defogging results and visual color. Many methods, techniques, and algorithms have been used to remove fog from images, but we use the deep learning framework CGAN for the first time to remove fog and enhance the visibility of weather-degraded foggy images; to our knowledge, no existing literature has applied CGAN to foggy images. The defogging results of the proposed method and other methods on road-scene images are shown in Figs. 5–7. The images restored by the proposed method (Figs. 5d–7d) have increased contrast, true color details, and enhanced visibility, and they are closer to the original fog-free ground truth, whereas the defogging results of existing methods still contain slight fog and have low visibility.

Table 2: PSNR and SSIM comparative analysis of the proposed method and previous techniques on the FRIDA dataset

Figure 5: Defogging results of the proposed method in comparison with Huang et al. [8] on the Fog dataset. (a) Original fog-free image. (b) Foggy image. (c) HDCP [8]. (d) Proposed method

We compared the performance of the proposed method with three existing methods. In Figs. 5–7, (a) is the original fog-free image and (b) is the input foggy image. HDCP [8]: the result of the HDCP method is shown in Fig. 5c. Compared with it, our restored image is close to the ground-truth image, with vivid colors and true color details. HDCP does not completely remove fog, and a large amount of gray remains in the image. After defogging, it produces a dim, noisy sky and reduced contrast; the restored image looks too dark and has low visibility because of incorrect estimation of the transmission map. Vector quantization [13]: the results are presented in Fig. 6c. The visibility of the bottom part of the image, which mostly consists of road, is enhanced, but this part is too dark. Artifacts and scene problems remain in the restored image: in some regions a few colors vanish, fog is not effectively removed, and the generated image has low visibility and is over-enhanced. Our result, shown in Fig. 6d, effectively removes fog, is closer to the ground-truth fog-free image, and has visually pleasing quality. Reference retrieval [14]: the results are presented in Fig. 7c. The restored image is blurred, its sharp details are destroyed, and it has low contrast, although this method produces good defogging results for images containing both light and heavy fog regions. Our result, shown in Fig. 7d, retains slight fog but has high contrast and enhanced visibility. Accurate calculation of the atmospheric light and transmission map yields good defogging results, true color details, and context information in fog regions. As the SSIM and PSNR comparison in Tab. 2 shows, the proposed method produces higher SSIM and PSNR values than the existing methods. During the experiments, we observed that the proposed framework processes an image within seconds.

Figure 6: Defogging results of the proposed method in comparison with Tai et al. [13] on the Fog dataset. (a) Original fog-free image. (b) Foggy image. (c) Vector quantization [13]. (d) Proposed method

Figure 7: Defogging results of the proposed method in comparison with Yuan et al. [14] on the Fog dataset. (a) Original fog-free image. (b) Foggy image. (c) Reference retrieval [14]. (d) Proposed method

Figure 8: Comparison graph of the PSNR and SSIM metrics on the FRIDA dataset

4.2.2 Experimental Result on Haze images

Visual results of the CGAN were obtained from experiments on the Haze dataset, which consists of 35 hazy images and 35 haze-free images. The performance results in terms of e, $\bar{r}$

Table 3: Result of proposed method on Haze dataset

To compare the performance of the proposed method with existing dehazing methods, a comparative analysis is presented in Tab. 4. The comparison is based on e, $\bar{r}$, and

Five types of hazy images were chosen from the test set for the comparison. In Figs. 9–12, the first image is the ground-truth haze-free image and the second is the input hazy image. As shown in Fig. 9, the existing algorithms [17,21–23] perform reasonably and remove much of the fog. However, in the bottom part of the image, which is road, their restored images have low contrast and less visible edges, and almost the entire image is saturated. In contrast, the result produced by the proposed method in Fig. 9 has high contrast, more visible edges, and more texture information. Fig. 10 shows the results of the existing methods of Guo et al. [10], Zhao et al. [11], Fattal [24], and Kumari et al. [25]. These methods enhance visibility, but haze still remains in the result of [24], which also has low contrast, whereas the image restored by the proposed method in Fig. 10 has high contrast and brightness. As shown in Fig. 11, the state-of-the-art methods of He et al. [6], Nair et al. [12], Tan [26], and Liu et al. [27] do not completely remove haze, and their results contain many artifacts and overly dark regions because of incorrect calculation of the transmission map. These methods usually estimate the haze thickness insufficiently, so their results lack satisfactory restoration and visible edge information, as Fig. 11 shows. The image generated by the proposed method has no artifacts, increased contrast, and finer details. Looking at the individual methods in more detail, the result of Fattal [24] in Fig. 10 does not remove haze completely and has low visibility. The technique of Kumari et al. [25] (Fig. 10f) removes most of the haze, but the restored image has excessive contrast. The results of Guo et al. [10] and Zhao et al. [11] have better visibility, with almost all haze removed. In Fig. 11, Nair et al. [12] and Tan [26] do not remove the haze, which still exists in their restored images, and these images are too dark compared with the original haze-free image. He et al. [6] and Liu et al. [27] remove most of the haze, but artifacts and low contrast remain in their restored images. As shown in Fig. 12, our method and the methods of He et al. [6], Yuan et al. [14], and Liu et al. [27] remove haze almost completely, but the resulting images have low visibility and scene problems.

Table 4: Comparison results for the performance measures e, $\bar{r}$, and

Figure 9: Comparison results of various dehazing methods and the proposed method. (a) Original haze-free image. (b) Haze image. (c) The algorithm [17]. (d) The algorithm [21]. (e) The algorithm [22]. (f) The algorithm [23]. (g) Proposed algorithm

Figure 10: Dehazing results of the proposed method and state-of-the-art methods. (a) Original image. (b) Haze image. (c) Guo et al. [10]. (d) Zhao et al. [11]. (e) Fattal [24]. (f) Kumari et al. [25]. (g) Proposed

Figure 11: Dehazing results of the proposed method and state-of-the-art methods. (a) Original image. (b) Haze image. (c) He et al. [6]. (d) Nair et al. [12]. (e) Tan [26]. (f) Liu et al. [27]. (g) Proposed

Figure 12: Dehazing results of the proposed method in comparison with other state-of-the-art methods. (a) Original image. (b) Haze image. (c) He et al. [6]. (d) Fei et al. [14]. (e) Liu et al. [27]. (f) Proposed

In this paper, we have proposed an efficient framework for image dehazing and defogging using a CGAN. The proposed CGAN combines two networks: a generator and a discriminator. The generator network produces a clear image from an input foggy or hazy image while preserving the structure and detailed information of the input. It consists of three parts, atmospheric light, transmission map, and scene radiance, and estimates all three effectively. The discriminator network distinguishes the fog- and haze-free image from the restored image. The experimental results demonstrate that the proposed framework performs well and achieves a better image restoration rate than existing state-of-the-art techniques. In future work, the proposed framework can be extended to thick haze and to nighttime foggy and hazy images. Nowadays, fog is a major cause of road accidents: in winter, heavy and thick fog impairs drivers' sight, which causes accidents. An automated framework with increased efficiency can help drivers see clearly in foggy weather.

Funding Statement: We deeply acknowledge Taif University for supporting and funding this study through Taif University Researchers Supporting Project number (TURSP-2020/115), Taif University, Taif, Saudi Arabia.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. S. Goswami, J. Kumar and J. Goswami, “A hybrid approach for visibility enhancement in foggy image,” in 2nd Int. Conf. on Computing of Sustainable Global Development, New Delhi, India, pp. 175–180, 2015. [Google Scholar]

2. N. Nadare and S. Agrwal, “Contrast enhancement algorithm for foggy images,” International Journal of Electrical & Electronic Engineering, vol. 6, pp. 2250–2255, 2016. [Google Scholar]

3. M. Imtiyaz and A. Khosa, “Visibility enhancement with single image fog removal scheme suing a post-processing technique,” in 4th Int. Conf. on Signal Processing and Integrated Networks (SPINNoida, India, pp. 280–285, 2017. [Google Scholar]

4. B. Hai, S. Chai, C. Young and S. Yeh, “Haze removal using radial basis function networks for visibility restoration applications,” IEEE Transactions on Neural Networks and Learning Systems, vol. 29, pp. 3828–3838, 2017.

5. X. Liu, H. Zhang, Y. Tang and X. Du, “Scene-adaptive single image dehazing via open dark channel prior,” IET Image Processing, vol. 10, no. 11, pp. 877–884, 2016.

6. K. He, J. Sun and X. Tang, “Single image haze removal using dark channel prior,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 12, pp. 2341–2353, 2011.

7. I. J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley et al., “Generative adversarial nets,” in Advances in Neural Information Processing Systems (NIPS), Montreal, Canada, pp. 2672–2680, 2014.

8. S. C. Huang, B. B. Chern and Y. J. Cheng, “An efficient visibility enhancement algorithm for road scenes captured by intelligent transportation system,” IEEE Transactions on Intelligent Transportation Systems, vol. 15, no. 5, pp. 2321–2332, 2014.

9. M. Negru, S. Nedevschi and R. I. Peter, “Exponential contrast restoration in fog conditions for driving assistance,” IEEE Transactions on Intelligent Transportation Systems, vol. 16, no. 4, pp. 2257–2268, 2015.

10. F. Guo, J. Tang and H. Peng, “A Markov random field model for the restoration of foggy images,” International Journal of Advanced Robotic Systems, vol. 11, no. 6, p. 92, 2014.

11. H. Zhao, C. Xiao, J. Yu and X. Xu, “Single image fog removal based on local extrema,” IEEE/CAA Journal of Automatica Sinica, vol. 2, no. 2, pp. 158–165, 2015.

12. D. Nair and P. Sankara, “Color image dehazing using surrounded filter and dark channel priors,” Journal of Visual Communication & Image Representation, vol. 50, pp. 9–15, 2018.

13. S. C. Tai, T. C. T. Sai and J. C. Wen, “Single image dehazing based on vector quantization,” International Journal of Computers and Applications, vol. 37, no. 3–4, pp. 83–93, 2015.

14. F. Yuan and H. Huang, “Image haze removal via reference retrieval and scene prior,” IEEE Transactions on Image Processing, vol. 27, no. 9, pp. 4395–4409, 2018.

15. S. Chai, J. H. Ye and B. H. Chen, “An advanced single image visibility restoration algorithm for real-world hazy scenes,” IEEE Transactions on Industrial Electronics, vol. 62, no. 5, pp. 2962–2972, 2015.

16. Y. Zhu, G. Tang, X. Zhang, J. Jiang and Q. Tian, “Haze removal method for natural restoration of images with sky,” Neurocomputing, vol. 275, no. 12, pp. 499–510, 2018.

17. Z. Luan, Y. Shang, X. Zhou, Z. Shao, G. Guo et al., “Fast single image dehazing based on regression model,” Neurocomputing, vol. 245, no. 5, pp. 10–22, 2017.

18. R. Li, J. Pan and L. Tang, “Single image dehazing via conditional generative adversarial network,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, pp. 8202–8211, 2018.

19. C. Li, J. Guo, F. Porikli, H. Fu and Y. Pang, “A cascaded convolutional neural network for single image dehazing,” IEEE Access, vol. 6, pp. 24887, 2018.

20. Q. Zhu, J. Mai and L. Shao, “A fast single image haze removal using color attenuation prior,” IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3522–3533, 2015.

21. Q. Zhu, J. Mai and L. Shao, “A fast single image haze removal using color attenuation prior,” IEEE Transactions on Image Processing, vol. 24, no. 11, pp. 3522–3533, 2015.

22. W. Ren, S. Liu, H. Zhang, J. Pan, X. Cao et al., “Single image dehazing via multi-scale convolutional neural networks,” in European Conf. on Computer Vision, Amsterdam, The Netherlands, pp. 154–159, 2016.

23. D. Berman, T. Treibitz and S. Avidan, “Non-local image dehazing,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, pp. 1674–1682, 2016.

24. R. Fattal, “Single image dehazing,” ACM Transactions on Graphics, vol. 27, no. 3, pp. 72:1–72:9, 2008.

25. A. Kumari and S. K. Sahoo, “Real time visibility enhancement for single image haze removal,” Procedia Computer Science, vol. 54, no. 3, pp. 501–507, 2015.

26. R. T. Tan, “Visibility in bad weather from a single image,” in IEEE Conf. on Computer Vision and Pattern Recognition, Anchorage, AK, USA, pp. 1–8, 2008.

27. X. Liu, Y. Chen, X. You and Y. Tang, “Efficient single image dehazing and denoising: An efficient multi-scale correlated wavelet approach,” Computer Vision and Image Understanding, vol. 162, no. 8, pp. 23–33, 2017.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |