Computers, Materials & Continua, DOI:10.32604/cmc.2021.016362

Article

Phase Error Compensation of Three-Dimensional Reconstruction Combined with Hilbert Transform

1School of Mechanical Engineering, North China University of Water Conservancy and Hydroelectric Power, Zhengzhou, 450045, China

2Department of Computer Science, Ball State University, Muncie, 47306, IN, USA

*Corresponding Author: Tao Zhang. Email: ztncwu@126.com

Received: 31 December 2020; Accepted: 01 March 2021

Abstract: Nonlinear response is an important factor affecting the accuracy of three-dimensional image measurement based on the fringe structured light method. A phase compensation algorithm combined with a Hilbert transform is proposed to reduce the phase error caused by the nonlinear response of a digital projector in the three-dimensional measurement system of fringe structured light. According to the analysis of the influence of Gamma distortion on the phase calculation, the algorithm establishes the relationship model between phase error and harmonic coefficient, introduces phase shift to the signal, and keeps the signal amplitude constant while filtering out the DC component. The phase error is converted to the transform domain, and compared with the numeric value in the space domain. The algorithm is combined with a spiral phase function to optimize the Hilbert transform, so as to eliminate external noise, enhance the image quality, and get an accurate phase value. Experimental results show that the proposed method can effectively improve the accuracy and speed of phase measurement. By performing phase error compensation for free-form surface objects, the phase error is reduced by about 26%, and about 27% of the image reconstruction time is saved, which further demonstrates the feasibility and effectiveness of the method.

Keywords: Three-dimensional reconstruction; structured light; Hilbert transform; phase compensation

Structured light three-dimensional (3D) measurement technology, which offers non-contact, high-speed, and high-precision measurement, has become a commonly used tool [1–4] in areas such as machine vision, virtual reality, reverse engineering, and industrial measurement. The technique projects a sequence of fringe images onto the surface of the measured object, and the fringes are deformed by the contour of the object. Computing the phase [5–7] of the captured fringe images makes it possible to reconstruct the 3D contour of the object. However, because of instrument design, the gamma nonlinear distortion [8] between the projector and camera introduces phase measurement errors: the captured grating fringes are no longer an ideal cosine function but a distorted one. Improving the accuracy of the phase calculation therefore requires compensating for the phase error caused by gamma distortion. Much research has addressed this problem, mainly through curve calibration, defocused imaging, and phase error modeling.

Curve calibration corrects the projection pattern so that the captured fringe image has an approximately sinusoidal intensity distribution. The method first calibrates the brightness transfer function from projector to camera and then either applies an inverse gamma transformation when generating the pattern image, which pre-corrects the input values of the projected image, or applies gamma correction to the distorted fringe image. Huang et al. [9] established the grayscale mapping relationship between the input fringe image and the fringe image collected by a camera, and deduced the nonlinear phase error caused by the nonlinear system response. Guo et al. [10] proposed a gamma correction technique based on the statistical characteristics of fringe images, in which the gamma and phase values are estimated simultaneously from the normalized cumulative histogram of the fringe images. Liu et al. [11] modeled the phase error caused by gamma distortion, deduced the relationship between the high-order harmonic phase and the gamma value, obtained the high-order harmonic phase through multi-step phase shifting, and used it to calibrate the gamma coefficient and perform gamma correction. Li et al. [12] included the defocusing effect of the projector in the phase error model to obtain a more accurate gamma calibration.

Phase error compensation makes no changes to the projected fringe pattern but compensates for the phase error during the phase calculation. The phase error is calibrated in advance according to its inherent regularity, and the computed distorted phase is then compensated to obtain the correct phase. Zhang et al. [13] obtained a regular phase error distribution through statistical analysis of the experimental data of a three-step phase shift method, and established a lookup table to compensate for the phase error. Pan et al. [14] pointed out that harmonics above the fifth order rarely appear in digital fringe projection 3D measurement systems, and therefore used a response signal containing harmonics up to the fifth order to derive phase error models for the three-, four-, and five-step phase shift methods. These models were then used to design iterative error algorithms that compute the optimal phase.

The defocus imaging method exploits the fact that image defocus suppresses high frequencies: it reduces the high-order harmonic energy of the captured image and thereby reduces the phase error. Defocusing the projector acts as a low-pass filter that yields a fringe image largely free of gamma distortion, so the gamma effect is avoided. Zhang et al. [15] used projector defocusing to effectively reduce the non-sinusoidal error of fringe images. Zheng et al. [16] binarized grayscale fringes and defocused the projector to obtain high-quality phase information.

In summary, whether a method establishes a phase reference, calibrates the gamma value, or defocuses the projection, it needs auxiliary conditions to compensate for the phase error. Curve calibration and phase error compensation need to quantify the nonlinear response of the system, and the defocus imaging method needs to adjust the optical parameters of the system. Both requirements reduce a method’s flexibility and robustness.

Current nonlinear phase-error compensation methods all require auxiliary conditions, such as phase reference construction, gamma calibration, and response curve fitting, which affect the flexibility and robustness of the method. This paper presents an adaptive phase error compensation method based on a Hilbert transform. The method introduces a

2.1 Structured Light 3D Reconstruction Error Model

In the structured light 3D reconstruction system, the grating fringes, which are obtained with gamma distortion on the projection reference plane, can be expressed as

where

According to the principle of least squares, the wrapped phase can be calculated from the image data and is expressed as
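The least-squares phase calculation and the effect of gamma distortion on it can be illustrated with a short simulation. This is a minimal sketch, not the authors' implementation: it assumes a power-law projector response `(0.5 + 0.5 cos(phi + delta))**gamma`, equally spaced shifts `delta_n = 2*pi*n/N`, and the standard N-step estimator; the function names and the value `gamma = 2.2` are illustrative choices.

```python
import math


def gamma_fringe(phi, delta, gamma):
    """Normalized fringe intensity after an assumed power-law (gamma) response."""
    return (0.5 + 0.5 * math.cos(phi + delta)) ** gamma


def wrapped_phase(intensities):
    """Least-squares wrapped phase from N equally spaced phase-shifted samples."""
    n = len(intensities)
    num = -sum(v * math.sin(2 * math.pi * k / n) for k, v in enumerate(intensities))
    den = sum(v * math.cos(2 * math.pi * k / n) for k, v in enumerate(intensities))
    return math.atan2(num, den)


def wrap(angle):
    """Wrap an angle difference into (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def max_phase_error(n_steps, gamma, samples=360):
    """Largest absolute phase error over one period of the ideal phase."""
    worst = 0.0
    for i in range(samples):
        phi = -math.pi + 2 * math.pi * (i + 0.5) / samples
        shots = [gamma_fringe(phi, 2 * math.pi * k / n_steps, gamma)
                 for k in range(n_steps)]
        worst = max(worst, abs(wrap(wrapped_phase(shots) - phi)))
    return worst


if __name__ == "__main__":
    for n_steps in (3, 4, 5):
        print(n_steps, max_phase_error(n_steps, gamma=2.2))
```

With `gamma = 1` the estimator recovers the ideal phase to machine precision; with `gamma != 1` the error is largest for three steps and shrinks as the number of steps grows, which is the behaviour the error model in this section describes.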

Due to the nonlinear characteristics of the projection line, the fringe pixel intensity produces nonlinear error. The resulting phase difference is expressed as

Substituting Eq. (2) in Eq. (3), the phase difference can be obtained as

The relationship [16] between the phase error and $G_{N-1}$ is given by

where

The absolute value of $G_k$ decreases significantly as the harmonic order $k$ increases. Because $G_{N-1}$ decreases rapidly as the number of phase shift steps grows, and the effect of $G_{N+1}$ on the phase error is negligible, harmonics up to order $N$ already meet the accuracy requirement, and Eq. (5) is simplified as
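For orientation, one form this simplification can take is derived below. It is a sketch under assumptions that the text here does not confirm: the distorted fringes are written as $I_n = B_0 + \sum_k B_k \cos[k(\varphi + \delta_n)]$ with $\delta_n = 2\pi n/N$ and $N \ge 3$, the phase estimator is $\varphi' = \arctan\left(-\sum_n I_n \sin\delta_n / \sum_n I_n \cos\delta_n\right)$, only the harmonics $k = 1$ and $k = N-1$ are retained, and $G_{N-1}$ is taken to mean $B_{N-1}/B_1$.

```latex
\begin{aligned}
\varphi' &= \arctan\frac{\sin\varphi - G_{N-1}\,\sin[(N-1)\varphi]}
                        {\cos\varphi + G_{N-1}\,\cos[(N-1)\varphi]}, \\[4pt]
\Delta\varphi &= \varphi' - \varphi
  = \arctan\frac{-\,G_{N-1}\,\sin(N\varphi)}{1 + G_{N-1}\,\cos(N\varphi)} .
\end{aligned}
```

Under these assumptions the error has period $2\pi/N$ in the ideal phase and its peak magnitude is $\arcsin|G_{N-1}|$, so it depends only on the step count and, through $G_{N-1}$, on the gamma value.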

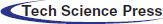

Eq. (7) shows that the phase error is a periodic function related to ideal phase

2.2 Hilbert Transform Compensates Phase Error

The Hilbert transform introduces a phase shift into the image signal without relying on external auxiliary conditions, which makes it suitable for phase compensation. The Hilbert transform of the phase-shifted fringe image is expressed as

where

Due to the nonlinear response, the transformed fringe image also contains high-order harmonics, so the actual transformed image is given by

According to Eqs. (2)–(7), the actual phase of the transform domain is

The phase error in the transform domain is the deviation between the actual phase and true phase. According to the phase derivation formula in the space domain, the phase error is given by
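A transform-domain counterpart of the space-domain error can be sketched in the same way. The assumptions are again not confirmed by the text: the Hilbert transform maps each harmonic $\cos[k(\varphi+\delta_n)]$ to $\sin[k(\varphi+\delta_n)]$ and removes the DC term, $\delta_n = 2\pi n/N$ with $N \ge 3$, only the harmonics $k = 1$ and $k = N-1$ are kept, and $G_{N-1} = B_{N-1}/B_1$.

```latex
\begin{aligned}
I_n^{H} &= B_1 \sin(\varphi + \delta_n) + B_{N-1}\sin[(N-1)(\varphi + \delta_n)], \\[4pt]
\varphi^{H} &= \arctan\frac{\sum_n I_n^{H}\cos\delta_n}{\sum_n I_n^{H}\sin\delta_n}
  = \arctan\frac{\sin\varphi + G_{N-1}\,\sin[(N-1)\varphi]}
               {\cos\varphi - G_{N-1}\,\cos[(N-1)\varphi]}, \\[4pt]
\Delta\varphi^{H} &= \varphi^{H} - \varphi
  = \arctan\frac{G_{N-1}\,\sin(N\varphi)}{1 - G_{N-1}\,\cos(N\varphi)} .
\end{aligned}
```

To first order in $G_{N-1}$ this is $+G_{N-1}\sin(N\varphi)$, whereas the same assumptions give $-G_{N-1}\sin(N\varphi)$ for the space-domain estimate, so the two errors have equal amplitude and opposite sign.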

Like the phase error in the space domain, the phase error in the transform domain is a periodic function related to the number of phase shift steps N and the gamma value. Comparing the phase error models in the space domain and the transform domain shows that their amplitudes are equal but that they differ by half a period, i.e., their signs are opposite. Therefore, the phase error can be compensated with the help of the Hilbert transform.
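The cancellation described above can be checked numerically. The following is a minimal sketch under stated assumptions rather than the authors' code: a one-dimensional fringe with a linear ideal phase, a power-law response with `gamma = 2.2`, a naive DFT-based discrete Hilbert transform (written without external libraries, so it is slow for large signals), and compensation by averaging the space-domain and transform-domain phases. All function names are illustrative.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform, kept dependency-free."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(v * cmath.exp(sign * 2j * math.pi * k * m / n)
                  for m, v in enumerate(values))
        out.append(acc / n if inverse else acc)
    return out


def hilbert(signal):
    """Discrete Hilbert transform: -i on positive frequencies, +i on negative, DC removed."""
    n = len(signal)
    spectrum = dft(signal)
    for k in range(n):
        if k == 0 or 2 * k == n:
            spectrum[k] = 0
        elif 2 * k < n:
            spectrum[k] *= -1j
        else:
            spectrum[k] *= 1j
    return [v.real for v in dft(spectrum, inverse=True)]


def wrap(angle):
    """Wrap an angle difference into (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def simulate(n_steps=3, gamma=2.2, pixels=120, periods=4):
    """Return per-pixel phase errors: space domain, transform domain, averaged."""
    deltas = [2 * math.pi * k / n_steps for k in range(n_steps)]
    true_phase = [2 * math.pi * periods * x / pixels for x in range(pixels)]
    fringes = [[(0.5 + 0.5 * math.cos(p + d)) ** gamma for p in true_phase]
               for d in deltas]
    shifted = [hilbert(row) for row in fringes]
    err_space, err_hilbert, err_mean = [], [], []
    for x, p in enumerate(true_phase):
        phi_s = math.atan2(-sum(f[x] * math.sin(d) for f, d in zip(fringes, deltas)),
                           sum(f[x] * math.cos(d) for f, d in zip(fringes, deltas)))
        phi_h = math.atan2(sum(h[x] * math.cos(d) for h, d in zip(shifted, deltas)),
                           sum(h[x] * math.sin(d) for h, d in zip(shifted, deltas)))
        phi_m = phi_s + 0.5 * wrap(phi_h - phi_s)  # average without wrap jumps
        err_space.append(wrap(phi_s - p))
        err_hilbert.append(wrap(phi_h - p))
        err_mean.append(wrap(phi_m - p))
    return err_space, err_hilbert, err_mean


if __name__ == "__main__":
    es, eh, em = simulate()
    print("max |error| space    :", max(abs(e) for e in es))
    print("max |error| transform:", max(abs(e) for e in eh))
    print("max |error| averaged :", max(abs(e) for e in em))
```

In this idealized, noise-free setting the two errors are anti-correlated and the averaged phase has a much smaller residual error. The improvement seen here should not be read as a prediction of the experimental figures reported in the paper, which also include sensor noise and other error sources.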

Let

It can be seen from Eq. (12) that the phase error of the average phase is still periodic, with twice the frequency of the phase error in the space domain. Differentiating the phase errors in Eqs. (7), (11), and (12) gives the corresponding maximum phase errors. Because

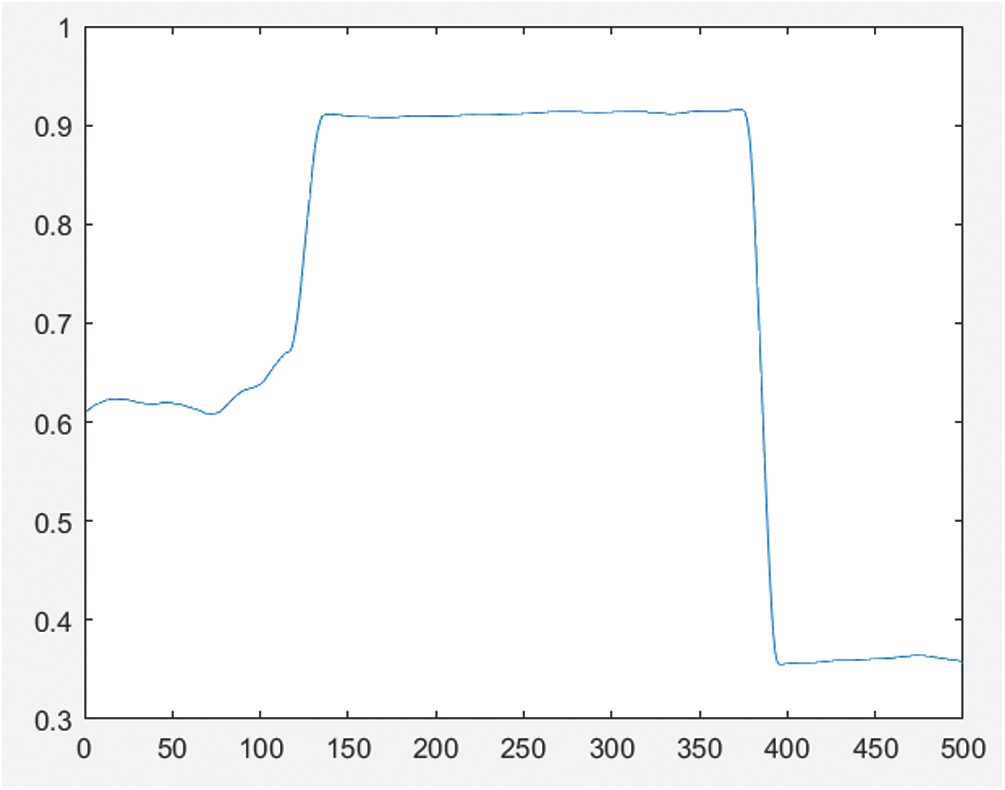

Figure 1: Phase error comparison

2.3 Image Denoising Based on Spiral Phase Function

In an ideal state, the Hilbert transform recovers the phase well without any auxiliary images, but the Hilbert-transformed fringe image still contains a small amount of noise mixed into the intrinsic mode function components. Simply applying global mean filtering to the fringes blurs the whole image, which lowers the resolution instead of improving the quality. Moreover, when the Hilbert transform is extended to two dimensions to handle images, its sign function causes strong anisotropy and cannot meet the requirement of scale invariance. Combining the spiral phase function

According to Eq. (13), the two-dimensional Hilbert transform operator can be derived as
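As a point of reference, the spiral phase function and the resulting isotropic two-dimensional operator are commonly written as below. This follows the widely used spiral phase (vortex) transform formulation and is an assumption here: the exact notation and normalization of the authors' Eqs. (13) and (14) cannot be recovered from the text. The symbols $(u, v)$ for the spatial frequencies, $\mathcal{F}$ for the two-dimensional Fourier transform, and $f(x, y)$ for the DC-free fringe image are illustrative.

```latex
\begin{aligned}
S(u, v) &= \frac{u + \mathrm{i}\,v}{\sqrt{u^{2} + v^{2}}}
         = \exp\!\left[\mathrm{i}\,\theta(u, v)\right],
         \qquad S(0, 0) = 0, \\[4pt]
H_{2\mathrm{D}}\{f\}(x, y) &= \mathcal{F}^{-1}\!\left\{\, S(u, v)\,
         \mathcal{F}\{f\}(u, v) \right\}(x, y) .
\end{aligned}
```

Because $S(u, v)$ has unit magnitude and depends only on the polar angle $\theta$ in the frequency plane, it replaces the one-dimensional sign function without favouring either image axis.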

The optimization of the Hilbert transform based on the spiral phase is as follows.

(1) Use the spiral-phase Hilbert transform to calculate the amplitude distribution of each selected bidimensional intrinsic mode function (BIMF) component, and smooth it.

(2) Set a threshold to identify the noise area: the part whose amplitude is lower than the threshold is treated as noise, and the part above the threshold is left unprocessed.

(3) Smooth the image locally. The identified noise part is subjected to local mean filtering, and the non-noise part is used directly for image reconstruction without processing.
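Steps (2) and (3) can be sketched as a threshold-gated local mean filter. This is an illustrative sketch only: it assumes the amplitude map from step (1) is already available as a nested list with the same shape as the image, and the names `selective_mean_filter`, `threshold`, and `radius` are hypothetical, not taken from the paper.

```python
def selective_mean_filter(image, amplitude, threshold, radius=1):
    """Mean-filter only pixels whose amplitude is below the threshold; copy the rest."""
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]
    for r in range(rows):
        for c in range(cols):
            if amplitude[r][c] >= threshold:
                continue  # treated as signal: passed through unchanged
            # Local window, clipped at the image border.
            window = [image[i][j]
                      for i in range(max(0, r - radius), min(rows, r + radius + 1))
                      for j in range(max(0, c - radius), min(cols, c + radius + 1))]
            out[r][c] = sum(window) / len(window)
    return out


if __name__ == "__main__":
    image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
    amplitude = [[1.0, 1.0, 1.0], [1.0, 0.1, 1.0], [1.0, 1.0, 1.0]]
    print(selective_mean_filter(image, amplitude, threshold=0.5))
```

Only the low-amplitude centre pixel is averaged with its neighbours; every other pixel is copied through, which is how the procedure avoids the global blurring that a plain mean filter would cause.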

3 Experimental Results and Analysis

To verify the performance of the algorithm, we constructed a structured light 3D measurement system composed of a BenQ es6299 DLP digital projector with

Figure 2: Experimental environment

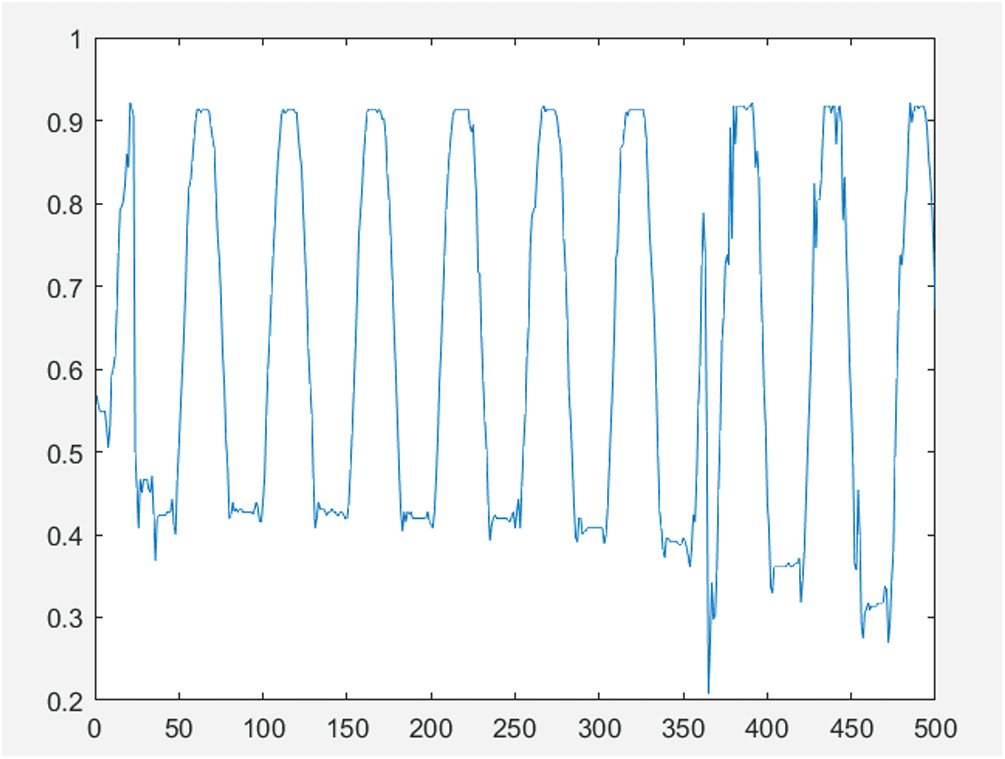

The system first generated projection grating fringes, where

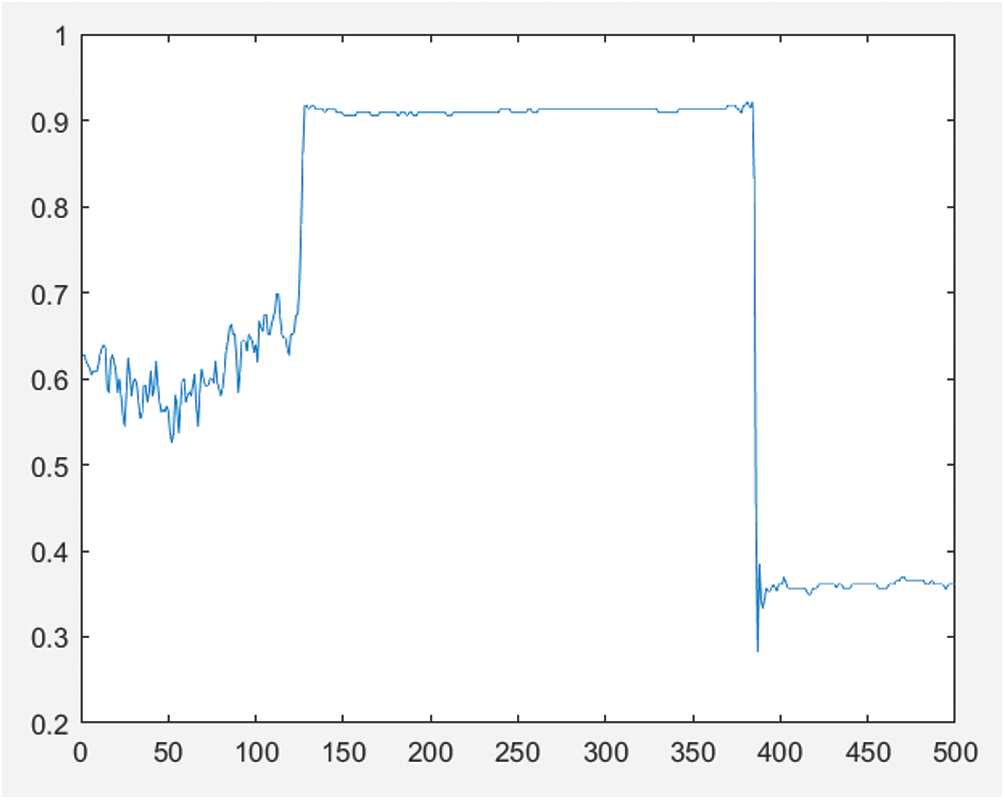

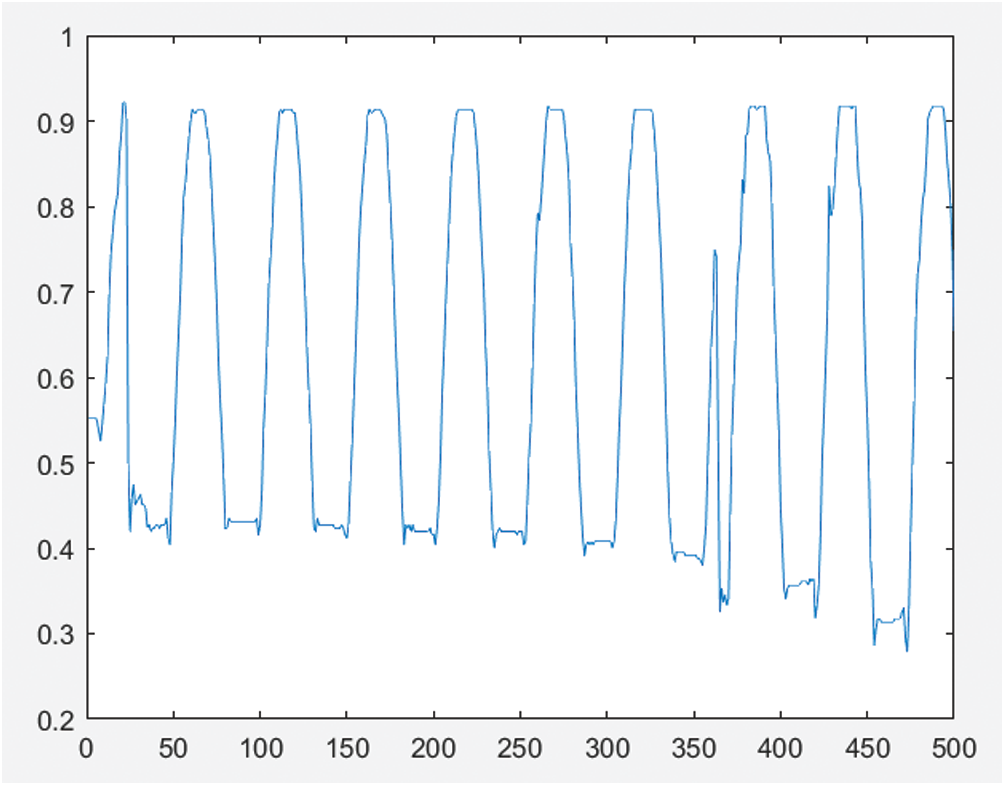

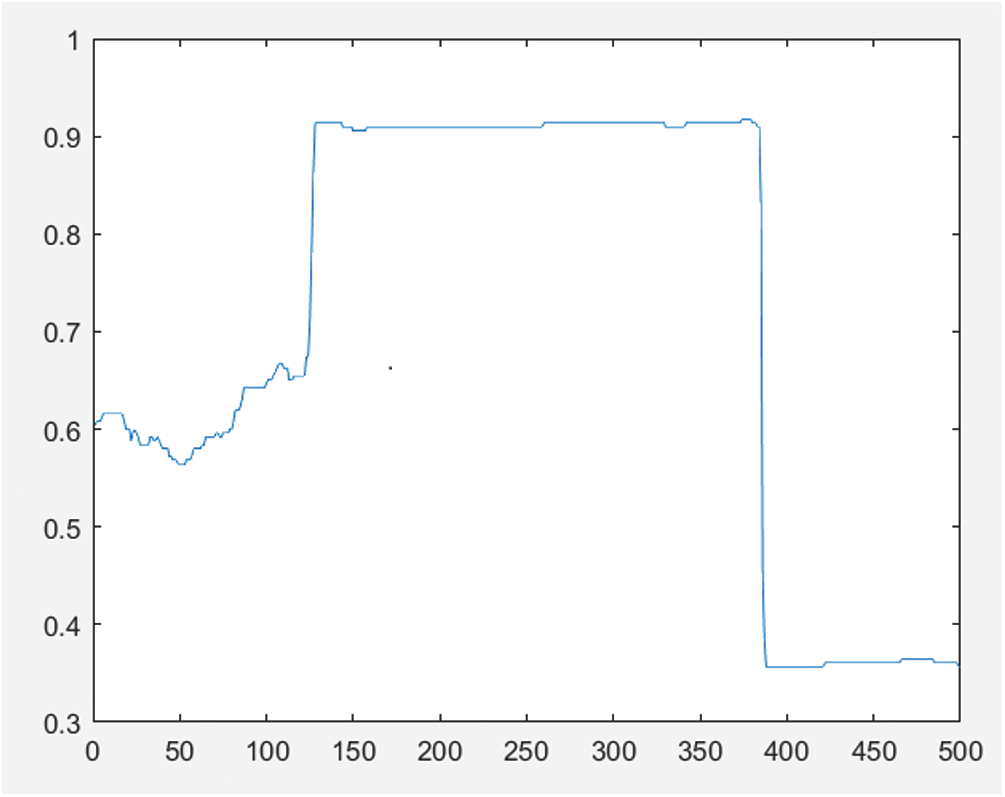

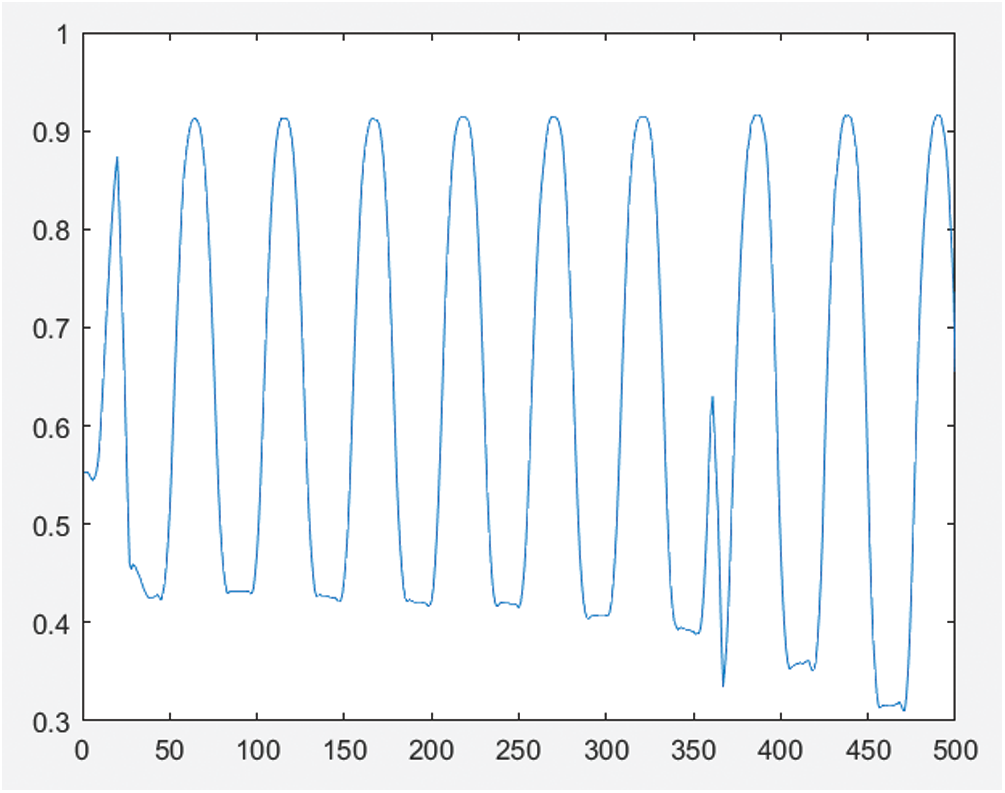

Figs. 4 and 5 show the sinusoidal fringe curve without phase compensation and the generated object profile, respectively. Figs. 6 and 7 show the sinusoidal fringe curve and the generated object contour after phase compensation using the Hilbert transform. After compensation, the phase error was reduced, but because of other error sources, such as sensor noise, quantization error, and ambient light interference, the extracted object contour still contained a small amount of error. Figs. 8 and 9 show the sinusoidal fringe curve and the generated object profile after phase compensation using the method in this paper. Because the image quality was improved before the Hilbert transform, the phase error of the sinusoidal fringe was greatly reduced, the object contour became smooth, and the noise was eliminated.

Figure 3: Image with stripe structured light

Figure 4: Sinusoidal fringe curve without phase compensation

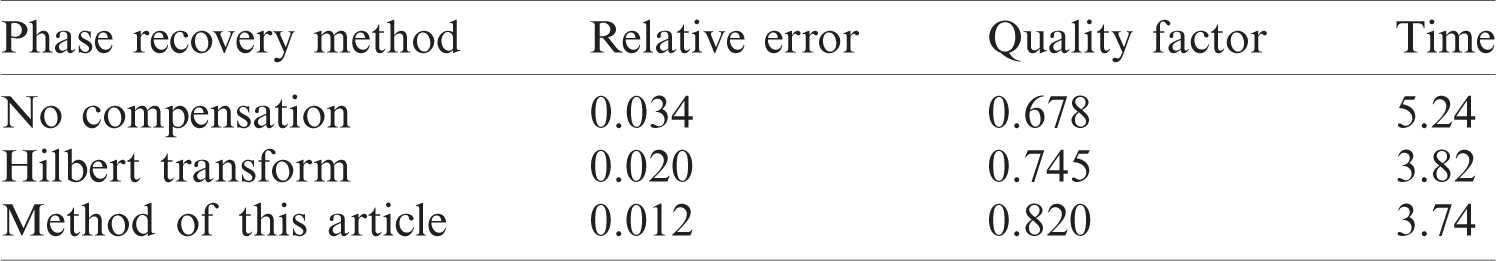

To reflect the effect of phase recovery more accurately, the root mean square error (RMSE) and the image quality factor Q are introduced as evaluation criteria. The RMSE is the square root of the expected value of the squared image error,

where x and y are the original signal and compensation signal, respectively, and
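The two evaluation criteria can be sketched as follows. The RMSE is the standard definition. For Q, the sketch assumes the universal image quality index of Wang and Bovik, because the exact definition used by the authors is not recoverable from the text; treat that choice, and the function names, as assumptions.

```python
import math


def rmse(x, y):
    """Root mean square error between two equally long signals."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


def quality_index(x, y):
    """Universal image quality index (assumed form of Q); equals 1 for identical signals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mean_y) ** 2 for b in y) / (n - 1)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / (n - 1)
    return 4 * cov * mean_x * mean_y / ((var_x + var_y) * (mean_x ** 2 + mean_y ** 2))


if __name__ == "__main__":
    original = [1.0, 2.0, 3.0, 4.0]
    compensated = [1.1, 1.9, 3.2, 3.8]
    print(rmse(original, compensated), quality_index(original, compensated))
```

A lower RMSE and a Q closer to 1 both indicate that the compensated signal is closer to the original. The index is undefined when both signals are constant or both have zero mean, so a practical implementation would need to guard against a zero denominator.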

Figure 5: Object profile in cross-sectional direction

Figure 6: Sinusoidal fringes using Hilbert transform

where

Figure 7: Object profile in cross-sectional direction

Figure 8: Sinusoidal fringe curve using this method

Figure 9: Object profile in cross-sectional direction

Table 1: Comparison of phase recovery of various methods

Gamma nonlinearity may result in phase error in a structured light 3D reconstruction system. The phase model and phase error model of gamma distortion are derived from the analysis of the relationship between the gamma distortion and phase error. A nonlinear phase error compensation method based on a Hilbert transform was proposed, making use of the property of the Hilbert transform that induces a phase shift of 2

Acknowledgement: The authors thank Dr. Jinxing Niu for his suggestions. The authors thank the anonymous reviewers and the editor for the instructive suggestions that significantly improved the quality of this paper. We thank LetPub (www.letpub.com) for its linguistic assistance during the preparation of this manuscript.

Funding Statement: This work is funded by the Scientific and Technological Projects of Henan Province under Grant 152102210115.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. Y. C. Wang, K. Liu and Q. Hao, “Robust active stereo vision using Kullback–Leibler divergence,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 34, no. 3, pp. 548–563, 2012.

2. Y. Chou, W. Liao, Y. Chen, M. Chang and P. T. Lin, “A distributed heterogeneous inspection system for high performance inline surface defect detection,” Intelligent Automation & Soft Computing, vol. 25, no. 1, pp. 79–90, 2019.

3. J. Niu, Y. Jiang, Y. Fu, T. Zhang and N. Masini, “Image deblurring of video surveillance system in rainy environment,” Computers, Materials & Continua, vol. 65, no. 1, pp. 807–816, 2020.

4. H. D. Bian and K. Liu, “Robustly decoding multiple-line-structured light in temporal Fourier domain for fast and accurate three-dimensional reconstruction,” Optical Engineering, vol. 55, no. 9, pp. 93110, 2016.

5. J. Wang, A. C. Sankaranarayanan and M. Gupta, “Dual structured light 3D using a 1D sensor,” Computer Vision, vol. 9, no. 17, pp. 383–398, 2016.

6. Q. Wang, C. Yang, S. Wu and Y. Wang, “Lever arm compensation of autonomous underwater vehicle for fast transfer alignment,” Computers, Materials & Continua, vol. 59, no. 1, pp. 105–118, 2019.

7. W. Sun, H. Du, S. Nie and X. He, “Traffic sign recognition method integrating multi-layer features and kernel extreme learning machine classifier,” Computers, Materials & Continua, vol. 60, no. 1, pp. 147–161, 2019.

8. X. Zhang, S. Zhou, J. Fang and Y. Ni, “Pattern recognition of construction bidding system based on image processing,” Computer Systems Science and Engineering, vol. 35, no. 4, pp. 247–256, 2020.

9. T. Huang, B. Pan and D. Nguyen, “Generic gamma correction for accuracy enhancement in fringe-projection profilometry,” Optics Letters, vol. 35, no. 12, pp. 1992–1994, 2012.

10. H. W. Guo, H. T. He and M. Y. Chen, “Gamma correction for digital fringe projection profilometry,” Applied Optics, vol. 43, no. 14, pp. 2906–2914, 2004.

11. K. Liu, Y. Wang and D. L. Lau, “Gamma model and its analysis for phase measuring profilometry,” Journal of Optical Society of America A-Optics Image Science and Vision, vol. 27, no. 3, pp. 553–562, 2010.

12. Z. Li and L. Li, “Gamma-distorted fringe image modeling and accurate gamma correction for fast phase measuring profilometry,” Optics Letters, vol. 36, no. 2, pp. 154–156, 2011.

13. W. Zhang, L. D. Yu, W. S. Li and H. J. Xia, “Black-box phase error compensation for digital phase-shifting profilometry,” IEEE Transactions on Instrumentation and Measurement, vol. 66, no. 10, pp. 2755–2761, 2017.

14. B. Pan, Q. Kemao, L. Huang and A. Asundi, “Phase error analysis and compensation for nonsinusoidal waveforms in phase-shifting digital fringe projection profilometry,” Optics Letters, vol. 34, no. 4, pp. 416–418, 2009.

15. J. R. Zhang, Y. J. Zhang, B. Chen and B. C. Dai, “Full-field phase error analysis and compensation for nonsinusoidal waveforms in phase shifting profilometry with projector defocusing,” Optics Communications, vol. 430, no. 1, pp. 467–478, 2019.

16. D. L. Zheng, F. P. Da, K. M. Qian and H. S. Seah, “Phase error analysis and compensation for phase shifting profilometry with projector defocusing,” Applied Optics, vol. 55, no. 21, pp. 5721–5728, 2016.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.