DOI:10.32604/cmc.2021.015417

Computers, Materials & Continua

Article

Digital Forensics for Skulls Classification in Physical Anthropology Collection Management

1Department of Information and Library Science, Airlangga University, Surabaya, 60286, Indonesia

2Department of Anthropology, Airlangga University, Surabaya, 60286, Indonesia

3Department of Anatomy and Histology, Airlangga University, Surabaya, 60286, Indonesia

4School of Computing and Digital Technology, Birmingham City University, Birmingham, B4 7XG, United Kingdom

*Corresponding Author: Imam Yuadi. Email: imam.yuadi@fisip.unair.ac.id

Received: 19 November 2020; Accepted: 11 March 2021

Abstract: The size, shape, and physical characteristics of the human skull are distinct when considering individual humans. In physical anthropology, the accurate management of skull collections is crucial for storing and maintaining collections in a cost-effective manner. For example, labeling skulls inaccurately or attaching printed labels to skulls can affect the authenticity of collections. Given the multiple issues associated with the manual identification of skulls, we propose an automatic human skull classification approach that uses a support vector machine and different feature extraction methods such as gray-level co-occurrence matrix features, Gabor features, fractal features, discrete wavelet transforms, and combinations of features. Each underlying facial bone exhibits unique characteristics essential to the face's physical structure that could be exploited for identification. Therefore, we developed an automatic recognition method to classify human skulls for consistent identification compared with traditional classification approaches. Using our proposed approach, we were able to achieve an accuracy of 92.3–99.5% in the classification of human skulls with mandibles and an accuracy of 91.4–99.9% in the classification of human skulls without mandibles. Our study represents a step forward in the construction of an effective automatic human skull identification system with a classification process that achieves satisfactory performance for a limited dataset of skull images.

Keywords: Discrete wavelet transform; Gabor; gray-level co-occurrence matrix; human skulls; physical anthropology; support vector machine

Researchers in digital forensics commonly deal with a series of activities, including collecting, examining, identifying, and analyzing the digital artefacts required for obtaining evidence regarding physical object authenticity [1]. Several research challenges are associated with the digital forensic attributes found during physical anthropology investigation. One prevalent example is the management of skull collections in museums, which will benefit future research and education. A skull cataloging and retrieval system is a major component of skull collection management. Within this system, skulls with lost labels can be identified via an investigation process. This process includes labeling the collection in the form of a call number attached to each skull. This ensures that the skulls belong to a specific collection and facilitates their identification. This is equally important for proper documentation, development, maintenance, and enhancement of existing collections and making them available to curators who want to use them according to classification standards [2].

However, the utilization of ink streaks on skulls to apply an alphanumeric code can damage the authenticity of the skull as a study material. Hence, skull collection management necessitates a certain approach to maintain the authenticity of the collection and avoid damage through the use of chemicals. Attaching stickers with the call number is an alternative. However, this method also has drawbacks because stickers can become loose, fall off, and become fixed to other skulls.

Therefore, it is challenging to add new skulls to a collection because of the difficulties associated with their storage and cataloging. The collection can also include skulls that are separated from the mandible. Labeling errors are a major problem when the ink marks on human skulls and other skeletal collections in an anthropology forensics laboratory fade. Apart from the loss of labels attached to new bone collections, the mixing of old bone collections with new bones and high usage factors are challenges that must be overcome in skull collection management.

The use of digital cameras by anthropologists and other researchers to classify human bones is currently limited to manual investigation and comparison. Although some previous studies have applied automatic methodologies, such as machine learning, to identify human skulls, the majority of the samples were obtained via computerized tomography (CT) scans of living participants. These samples have limited relevance with respect to the analysis of skulls of dead subjects, as required in physical anthropology forensics.

1.2 Contributions of This Work

In this study, we investigate a digital forensics approach for the physical anthropological investigation of skulls of dead humans based on their specific characteristics. Our main contributions are as follows. First, the significance of this work lies in the application of machine learning and data analytics knowledge to the new domain of physical anthropology collection management and addressing its unique challenges. Second, given the aforementioned problems introduced by manual labeling techniques, this study aims to evaluate the relevant contrasting features of human skulls and build skull-based identities from various positions via automatic classification. Third, our work proposes automatic classification of the skull beneath the human face that would allow curators to identify features based on skeletal characteristics. This technique would potentially assist in the management of museum collections or the laboratory storage of skulls; skulls could be identified without being manually marked or labeled, thereby maintaining their authenticity.

This study is inspired by face recognition technology. The structure of the mandible, mouth, nose, forehead, and the overall features associated with the human skull can be recognized using various means and properties. Based on the availability of these properties, face recognition can be conducted by comparing different facial images and classifying the faces using a support vector machine (SVM). One study [3] applied SVMs to identify human faces, achieving face prediction accuracy rates of >95%. Other studies applied different feature processing methods to acquire relevant statistical values before classification. Specifically, Benitez-Garcia et al. [4] and Hu [5] applied the discrete wavelet transform (DWT) for feature extraction to identify a human face. Eleyan [6] used the wavelet transform, whereas Dabbaghchian implemented the discrete cosine transform (DCT) for human face analysis [7]. Krisshna et al. [8] used transform domain feature extraction combined with feature selection to improve the accuracy of prediction. In contrast, Gautam et al. [9] proposed image decomposition using Haar wavelet transforms through a classification approach in which the quantization transform and the split-up window of facial images were combined. Faces were classified with backpropagation neural networks and distinguished from other faces using feature extraction when considering a grayscale morphology. In addition, a combined feature extraction of Gaussian and Gabor features has been applied to enhance the verification rate of face recognition [10].

As observed in the present study, the effective combination of different feature filters is a step forward in using machine learning to conduct investigations in physical anthropology and its sub-areas. Researchers in physical anthropology often focus on analyzing data obtained from the skulls of dead humans; such data have rarely been considered in previous studies on automatic face recognition. Therefore, this work offers a new perspective on applying machine learning to physical anthropology and tackling its challenges, i.e., the limited physical collection of skulls of dead humans, variation in the completeness of skull construction, and deterioration of the skull condition over time. All these challenges are obstacles to training appropriate machine learning models, and appropriate feature extraction is the key to achieving the learning objective, namely successful facial classification.

The remaining sections of this manuscript are as follows. Section 2 presents related works. Section 3 discusses the skull structure that provides the initial information for skull classification. Section 4 presents our main research approach and contribution to developing a machine learning-based automatic classification platform for classifying human skulls in physical anthropology. Section 5 reports our experimental results and validates our research approach. Finally, we summarize the main results of this research and directions of our future work in Section 6.

There is increasing demand for an image classification system that can perform automatic facial recognition tasks [11–13]. Several studies have investigated facial recognition and facial perception. Automatic facial processing [11] is a reliable method and realistic approach for facial recognition. It benefits from the use of deep neural networks [12], dictionary learning [13], and automatic partial learning. These tools can be utilized to create a practical face dataset using inexpensive digital cameras or video recorders. Several studies have also addressed human recognition based on various body images captured using cameras.

Elmahmudi et al. [14] studied face recognition through facial rotation of different face components, i.e., the cheeks, mouth, eyes, and nose, and by exploiting a convolutional neural network (CNN) and feature extraction prior to SVM classification. Duan et al. [15] investigated partial face recognition using a combination of robust points to match the Gabor ternary patterns with the local key points. Several studies [16–18] have used CNNs to extract complementary facial features and derive face representations from the layers in the deep neural network, thereby achieving highly accurate results.

Furthermore, Chen et al. [16] applied similarity learning using a polynomial feature map to represent the matching of each sub-region including the face, body, and feet to investigate the similarity learning for person re-identification based on different regions. All the feature maps were then injected into a unified framework. This technique was also used by Wu et al. [17], who combined deep CNNs and gait-based human identification. They examined various scenarios, namely cross-walking and cross-view conditions with differences in pre-processing and network architecture. Koo et al. [18] studied human recognition through a multimodal method by analyzing the face and body, using a deep CNN.

A previous anthropology study [19] provided complete information for facial identification by investigating skull objects in different positions. In forensic anthropology, experts use bones and skulls to identify missing people via facial reconstruction and to determine their sex [20]. The identification of craniofacial superimposition can provide forensic evidence about the sex and specific identity of a living human. Furthermore, tooth structure provides information about food consumed. Craniofacial superimposition is based on a skeletal residue, which can provide forensic artefacts prior to identification. Therefore, the skull overlay process is applied by experts to examine the ante-mortem digital figures popular in skull morphology analysis [21].

The so-called computational forensics method is specific to the forensic anthropology approach [22]. In this area of research, Bewes et al. [23] adapted neural networks for determining sex on the basis of human skulls using data obtained from hospital CT scans. Furthermore, an automatic classification method for determining gender was developed by Walker [24], who investigated and visually assessed modern American skulls based on five skull traits. He used discriminant function analysis to determine sex based on pelvic morphology and evaluated sexually dimorphic traits to determine sex. Using a logistic model, he obtained a classification accuracy rate of 88% for modern skulls, with a negligible sex bias of 0.1%. Another study on the skulls of white European Americans was conducted by Williams and Rogers [25], who accurately identified more than 80% of skulls. Angelis [26] developed another method to predict soft face thickness for face classification.

As observed in most of the above studies, automatic face recognition is mainly focused on the analysis of data obtained from living humans, be it in the form of digital camera or CT images. Even though the physical characteristics for facial identification and computational forensics for gender classification have been investigated in the anthropology literature, automated digital tools that are robust in terms of facial identification appear to be lacking. Thus, this work is a step forward in developing an automated tool by incorporating machine learning and knowledge about robust features.

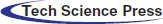

In principle, the facial skeleton or viscerocranium comprises the anterior, lower, and skull bones, namely, facial tissue, and other structures that form the human face. It comprises various types of bones, which are derived from the branchial arches interconnected among the bones of the eyes, sinuses, nose, and oral cavity and are in unity with the calvarias bones [27]. Naturally, the viscerocranium encompasses several bones, which are illustrated in Fig. 1 and are organized as follows.

1) Frontal—This bone comprises the squamous, which tends to be vertical, and the orbital bones, which are oriented horizontally. The squamous forms part of the human forehead, and the orbital part is the part of the bone that supports the eyes and nose.

2) Nasal—The paired nasal bones have different sizes and shapes but tend to be small ovals. These bones unite the cartilage located in the nasofrontal and upper parts of the lateral cartilages to form the human nose and consists of two neurocraniums and two viscerocraniums.

3) Vomer—The vomer bone is a single facial bone with an unpaired midline attached to an inferior part of the sphenoid bone. It articulates with the ethmoid, namely, the two maxillary bones and two palatine bones, forming the nasal septum.

4) Zygomatic—The zygomatic bone is the cheekbone positioned on the lateral side and forms the cheeks of a human. This bone has three surfaces, i.e., the orbital, temporal, and lateral surfaces. It articulates directly with the remaining four bones, i.e., the temporal, sphenoid, frontal, and maxilla bones.

5) Maxilla—This is often referred to as the upper jaw bone and is a paired bone that has four processes, i.e., the zygomatic, alveolar, frontal, and palatine processes. This bone supports the teeth in the upper jaw but does not move like the lower jaw or mandible.

6) Mandible—The mandible is the lower jaw bone or movable cranial bone, which is the largest and strongest facial bone. It can open and close a human's mouth. The mandible has two basic bones, i.e., the alveolar part and the mandible base, located in the anterior part of the lower jaw bone. Furthermore, it has two surfaces and two borders [28].

Figure 1: Structure of a human skull

In the following subsections, we describe the systematic design steps adopted for developing an automatic intelligent human skull recognition system using data collection and processing, feature extraction filters, and skull classification to obtain maximum prediction accuracy.

4.1 Tools and Software Platform

We used hardware and software platforms that would allow us to meet the objectives of this study and conduct forensic tests on human skulls. First, we used a DSC-HX300 digital camera (Sony Corp., Japan) equipped with high-resolution Carl Zeiss lenses to obtain the skull images. Then, we used MATLAB R2013a to convert the image data into numeric form. Finally, we implemented an SVM classifier in Java using the Eclipse SDK for skull classification. To run the aforementioned software, we used a personal computer with an Intel Core i5 processor and 8 GB of RAM, running the Windows XP operating system.

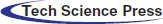

Fig. 2 presents the framework used for digital forensics when investigating the characteristics of human skulls in this work. It indicates the step-by-step investigation procedures, beginning with the digitalization of skull data and ending with skull identification. This process is explained in detail below:

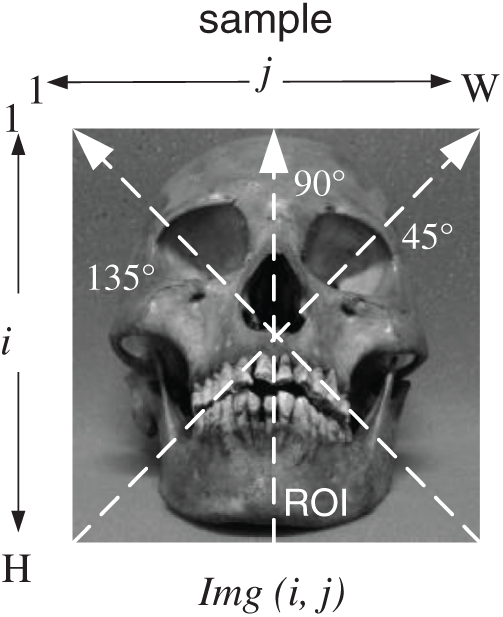

1) Digitizing human skulls: In the first step, skulls were digitized by taking their photos from various angles using a digital camera. Thus, images of the face or front, left, right, bottom, and top areas could be obtained. The obtained results were then documented and saved as digital image files. Fig. 4 presents the region of interest (ROI) of an image sample. This figure shows the skull area corresponding to a set of pixels, where (i,j) denotes a spatial location index within the picture.

2) Feature extraction: This step was conducted to obtain certain values from skull images via feature filtering or extraction based on pixel characteristics and other criteria. Various feature filters were applied to compare the accuracy rates of the implemented filters. This was the major image processing activity prior to the segmentation and classification steps. We considered four different feature-filtering techniques to determine the relevant features and extract their corresponding values from the images, performing a texture analysis with each filter before classifying the human skulls. The four feature filters were applied separately to obtain a classification accuracy rate for each. For this study, we used 22 gray-level co-occurrence matrix (GLCM) features, 12 discrete wavelet transform (DWT) features, 48 Gabor features, and 24 fractal features obtained via segmentation-based fractal texture analysis (SFTA). In total, we used 106 features. The filters were applied to analyze 24 images of skulls at various rotation angles (from 1° to 360°); features were extracted from each image with these filters to obtain a different statistical decomposition. Therefore, each skull image produced a minimum of 360 images from which features were extracted through the various filters before classification.

3) Classification: The support vector machine (SVM) is a widely applied method for data classification and regression, as described by Awad and Khanna [29]. This method can maximize the distance between several data classes even when applied to a high-dimensional input space. It also has the ability to process and group images based on patterns, which is an advantage of the SVM, especially with respect to the drawbacks of high dimensionality. Furthermore, the SVM can cope with limited training data and can minimize the parameter associated with its structure based on its ability to work on nonlinear problems by adding a high-dimensional kernel [30]. The SVM works by finding the best hyperplanes to separate the different classes in the input space, i.e., the hyperplanes that separate groups or classes with the maximum margin. It can therefore handle nonlinearly separable data by using nonlinear kernel functions that map points from lower- to higher-dimensional spaces. In this study, radial basis function (RBF)-based kernels were selected to build a nonlinear classifier for identifying 24 different types of skull. More specifically, we applied the RBF-based kernel function used in a previous study [31] to build this SVM-based classifier.
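The kernel expression itself is not reproduced in the text above. As a minimal sketch, assuming the standard RBF form K(x, y) = exp(−γ‖x − y‖²), the kernel and its Gram matrix can be computed as follows. The value of `gamma`, the helper names, and the toy vectors are illustrative assumptions, not the settings used in this study or in [31].

```python
import math

def rbf_kernel(x, y, gamma=0.5):
    """Standard RBF kernel: exp(-gamma * squared Euclidean distance).
    gamma=0.5 is an arbitrary illustrative value."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def gram_matrix(samples, gamma=0.5):
    """Pairwise kernel values for a list of feature vectors."""
    return [[rbf_kernel(u, v, gamma) for v in samples] for u in samples]

# Toy feature vectors (hypothetical values, not taken from the skull data).
features = [[0.0, 0.0], [1.0, 0.0], [3.0, 4.0]]
K = gram_matrix(features)
```

Identical vectors give a kernel value of 1, and the value decays toward 0 as the feature vectors move apart, which is what lets the SVM draw nonlinear class boundaries in the original feature space.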

Figure 2: Framework used for digital forensics when investigating the characteristics of human skulls

Feature extraction involves the transformation of data. The derivative values from original data are transformed into variable data with statistical values that can be further processed. Here, we used the following techniques for feature extraction.

1) GLCM is a popular filter for texture analysis. It captures information regarding the gray-value spatial distribution in an image and the image texture's corresponding frequency at given specified angles and distances. Feature extraction using GLCM is conducted based on the estimated probability density function of a pixel using a co-occurrence matrix along with its pixel pairs, where features can be statistically and numerically quantified [32]. Four angular directions are considered during matrix generation for feature extraction. Specifically, the statistical characteristics are calculated in the 0°, 45°, 90°, and 135° directions.

Fig. 3 presents direction (horizontal and vertical orientations) as a spatial representation based on different reference pixels. Let us assume that reference pixel i is defined with a 45° orientation based on which an adjacent pixel can be located. The direction of the pixel is calculated when considering pixel j next to pixel i, as demonstrated by Tsai et al. [33]. Following this work, Fig. 4 illustrates the ROI of human skulls showing the pixels generated by GLCM in gray color, as captured by Eq. (1).

Thus, pixels are labeled as “1” if they belong to the ROI and “0” otherwise. From Eq. (1), we can obtain the predictable values from the normalized GLCM.

Here, (i,j) denotes the index of the pixel in the image, and Img (i,j) denotes the probability of the pixel index (i,j). GLCM can generate 22 texture features, as explained in detail by Tsai et al.
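To make the co-occurrence idea concrete, the following is a minimal sketch of a normalized GLCM computed in the four directions named above, with two commonly used texture statistics (contrast and energy). It is an illustration only: the tiny four-level image, the non-symmetric counting, and the choice of these two statistics are assumptions, and the study's full set of 22 features is not reproduced here.

```python
def glcm(image, dx, dy, levels):
    """Normalized gray-level co-occurrence matrix for pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    return [[v / total for v in row] for row in counts]

def contrast(p):
    """Sum of (i - j)^2 weighted by the co-occurrence probability."""
    n = len(p)
    return sum(((i - j) ** 2) * p[i][j] for i in range(n) for j in range(n))

def energy(p):
    """Sum of squared co-occurrence probabilities."""
    return sum(v * v for row in p for v in row)

# (dx, dy) offsets for 0, 45, 90, and 135 degrees; rows grow downward.
OFFSETS = {0: (1, 0), 45: (1, -1), 90: (0, -1), 135: (-1, -1)}

# Toy image with four gray levels (hypothetical, not skull data).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = {}
for angle, (dx, dy) in OFFSETS.items():
    p = glcm(image, dx, dy, levels=4)
    features[angle] = (contrast(p), energy(p))
```

Each direction yields its own matrix, so a statistic computed per direction contributes several entries to the final feature vector.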

2) Wavelet features. A digital image comprises many pixels that can be represented in a two-dimensional (2D) matrix. Outside the spatial domain, an image can be represented in the frequency domain using a spectrum method called the DWT. In several studies (e.g., [34,35]), the feature sets are focused on 2D-scale wavelets because of their underlying functions. The feature filter direction follows subsampling with two factors, and each sub-band is equivalent to the output filters, which contain several samples compared with the main 2D matrix. The filtered processing outputs are considered to be the DWT coefficients. This filter set of DWT coefficients, as shown in Fig. 4, contains 12 statistical features that include kurtosis (HH, LH, HL, and LL sub-bands), standard deviation, and skewness.
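One way to arrive at 12 wavelet features is to compute three statistics (standard deviation, skewness, and kurtosis) on each of the four sub-bands. The sketch below follows that reading, which is an assumption, and uses a one-level Haar transform as a stand-in because the wavelet family is not stated in the text. The LH/HL naming convention also varies between libraries.

```python
import math

def haar_dwt2(img):
    """One-level 2D orthonormal Haar transform (even height and width)."""
    bands = {"LL": [], "LH": [], "HL": [], "HH": []}
    for r in range(0, len(img), 2):
        rows = {name: [] for name in bands}
        for c in range(0, len(img[0]), 2):
            a, b = img[r][c], img[r][c + 1]
            d, e = img[r + 1][c], img[r + 1][c + 1]
            rows["LL"].append((a + b + d + e) / 2)  # local average
            rows["LH"].append((a + b - d - e) / 2)  # top-minus-bottom detail
            rows["HL"].append((a - b + d - e) / 2)  # left-minus-right detail
            rows["HH"].append((a - b - d + e) / 2)  # diagonal detail
        for name in bands:
            bands[name].append(rows[name])
    return bands

def moments(values):
    """Standard deviation, skewness, and kurtosis of a flat list."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    if std == 0:
        return 0.0, 0.0, 0.0  # constant sub-band: higher moments undefined
    skew = sum((v - mean) ** 3 for v in values) / (n * std ** 3)
    kurt = sum((v - mean) ** 4 for v in values) / (n * std ** 4)
    return std, skew, kurt

# Toy 4 x 4 image (hypothetical values).
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
bands = haar_dwt2(image)
feature_vector = []
for name in ("LL", "LH", "HL", "HH"):
    flat = [v for row in bands[name] for v in row]
    feature_vector.extend(moments(flat))
```

The resulting `feature_vector` has 3 × 4 = 12 entries, matching the feature count reported for the DWT filter.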

3) Gabor features. Gabor filters are formed through dilation and rotation of a single kernel with several parameters. The corresponding filter function is used as a kernel to obtain a filter dictionary for analyzing texture images. In the spatial domain, the 2D Gabor filter has several benefits: its multiple scales and orientations support feature extraction, and it offers invariance to rotation, illumination, and translation. It involves a Gaussian kernel function [36] modulated by complex sinusoidal waves [37,38]. Inspired by these works, we used the function in Eq. (3) to extract features from human skull images.

Here, parameter
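Because Eq. (3) and its parameter definitions are not reproduced in the text, the following sketch uses the commonly cited real-valued Gabor form: a Gaussian envelope multiplied by a cosine carrier. All parameter names (`sigma`, `theta`, `lambd`, `gamma`, `psi`) and values are assumptions for illustration and may differ from those used in the study.

```python
import math

def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real part of a 2D Gabor filter on a size x size grid (size odd)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates by the filter orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr ** 2 + (gamma * yr) ** 2) / (2 * sigma ** 2))
            row.append(envelope * math.cos(2 * math.pi * xr / lambd + psi))
        kernel.append(row)
    return kernel

def filter_response(image, kernel):
    """Valid-mode correlation of a 2D list image with a square kernel."""
    k = len(kernel)
    out = []
    for r in range(len(image) - k + 1):
        out.append([
            sum(kernel[i][j] * image[r + i][c + j]
                for i in range(k) for j in range(k))
            for c in range(len(image[0]) - k + 1)
        ])
    return out

# A small bank with four orientations at one scale (illustrative choice).
bank = [gabor_kernel(5, sigma=2.0, theta=k * math.pi / 4, lambd=4.0)
        for k in range(4)]

# Toy 6 x 6 gradient image (hypothetical values).
image = [[(r + c) % 4 for c in range(6)] for r in range(6)]
responses = [filter_response(image, kern) for kern in bank]
```

Statistics of each response map, such as its mean and standard deviation, are a typical way to turn a filter bank into a fixed-length feature vector; whether the study's 48 Gabor features were built this way is not stated.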

4) Fractal features are considered when evaluating images with similar textures. Features are obtained from the fractal dimensions of the transformed images obtained from the boundary of segmented image structures and grayscale images. Fractal features can be used to compute the fractal dimension for any surface roughness. Furthermore, they can be used to evaluate the gray image and compare various textures. Fractal dimensions can be realized as a measure of irregularity or heterogeneity. If an object has self-similarity properties, then the entire set of minimized subsets will have the same properties. In this study, the boundaries of the feature vector were used to measure fractals. The measurement is represented as Δ (x, y), and can be expressed as follows:

Figure 3: Estimation of texture orientation from a skull image. The pixel of GLCM (n, m) from four different regions of interest (ROIs), where the spatial location of the skull image is indicated by i and j. At a point, pixel separation (W and H) is applied as W = 0 and H = 1 to obtain the number of gray-level pixels n and m

Figure 4: Discrete wavelet transform image decomposition for (a) one and (b) two levels of resolution

This measurement function is similar to the one in Costa et al. [39], except that N4[(x, y)] is used instead of N8[(x, y)] to denote the set of pixels that are 4-connected to (x, y) in a grayscale skull image. For binary decomposition, they applied a thresholding mechanism to the input image. In this study, we applied four-connected pixels to (x, y) in the threshold segmentation. Thus, 24 features could be extracted.
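The expression for Δ(x, y) is not reproduced in the text. The sketch below assumes the usual border rule from segmentation-based fractal texture analysis, adapted to a 4-connected neighborhood: a foreground pixel of the thresholded image is marked as a border pixel if at least one of its four neighbors is background. Treating pixels outside the image as non-neighbors is an additional assumption.

```python
# 4-connected neighborhood offsets as (dy, dx).
N4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]

def border_image(binary):
    """Mark foreground pixels that touch a background pixel in N4."""
    rows, cols = len(binary), len(binary[0])
    border = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            if binary[y][x] != 1:
                continue
            for dy, dx in N4:
                ny, nx = y + dy, x + dx
                if 0 <= ny < rows and 0 <= nx < cols and binary[ny][nx] == 0:
                    border[y][x] = 1
                    break
    return border

# Toy binary image: a 3 x 3 foreground square on a background.
binary = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
border = border_image(binary)
```

For this toy square, the eight outer foreground pixels are marked and the center pixel is not. The fractal dimension of such border images is what a fractal texture descriptor then measures.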

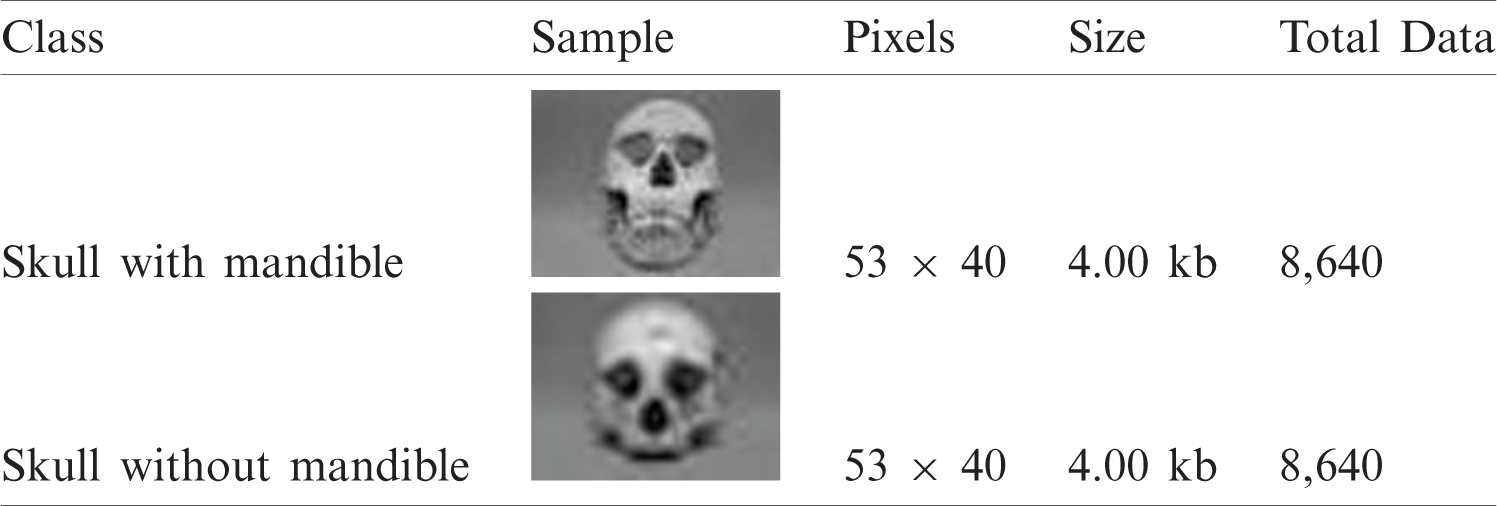

In this study, human skulls were categorized based on their mandibles. We validated and compared the samples’ unique characteristics (not only skulls with mandibles but also those without mandibles), as shown in Fig. 5. To obtain fair research results, we considered 24 skulls with mandibles and 24 skulls without mandibles to define our target classes for classification. We then took pictures of the samples using the aforementioned digital camera. The skulls were obtained from the Physical Anthropology Laboratory at Airlangga University. The original skull images can be accessed from http://fisip.unair.ac.id/researchdata/Skulls/.

Figure 5: Skulls with a mandible (top) and without a mandible (bottom)

We experimented with seven different angles for the images of skulls with and without mandibles: front, top, and back angles, as well as 45° right-angle, 45° left-angle, 90° right-angle, and 90° left-angle rotations. Then, we rotated the image step-by-step by 360°; each degree of rotation produced one sample image that was stored as the input sample for machine learning. For example, the front angle was rotated by 360°, and thus we analyzed 360 data samples. Subsequently, we converted all the images to grayscale in jpeg (jpg) format, set a pixel size of 53 × 40 for each image, and set the file size to 4 kb. Tab. 1 details the 360 processed sample images for each skull image that were obtained via rotation. The total number of images used in this experiment was 8,640. We classified 24 skull images as the target class of classification. The result of a given experiment was the average of ten rounds of the given experiment. For each round of an experiment, a set of 300 images was uniformly sampled.
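The rotation-based sample generation can be sketched as follows, assuming nearest-neighbor resampling about the image center. The interpolation method, the fill value, and the direction of rotation are assumptions, since the text does not state how the rotations were implemented.

```python
import math

def rotate(image, degrees, fill=0):
    """Rotate a 2D list image about its center (nearest-neighbor)."""
    rows, cols = len(image), len(image[0])
    cy, cx = (rows - 1) / 2, (cols - 1) / 2
    t = math.radians(degrees)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[fill] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            # Inverse mapping: locate the source pixel for output (x, y).
            sx = cos_t * (x - cx) + sin_t * (y - cy) + cx
            sy = -sin_t * (x - cx) + cos_t * (y - cy) + cy
            ix, iy = round(sx), round(sy)
            if 0 <= iy < rows and 0 <= ix < cols:
                out[y][x] = image[iy][ix]
    return out

# Toy 3 x 3 image (hypothetical values, not skull data).
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

# One sample per degree of rotation, from 1 to 360 degrees.
samples = [rotate(image, d) for d in range(1, 361)]

# 24 skull classes x 360 rotations gives the reported total.
total_images = 24 * len(samples)
```

With 360 rotated samples for each of the 24 skulls, the total is 24 × 360 = 8,640 images, which matches the figure given above.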

Table 1: Data sample of human skulls

The data in this experiment were divided into training and testing sets at a ratio of 2:1. There were ten sets, each comprising 300 images selected as training data and another 150 images as test data.

The limitations of this study were difficulty in obtaining experimental data and using camera settings to ensure the same resolution when capturing skull images. Another limitation was that seven different angles were considered to perform comparisons between skulls with and without mandibles. Because of the difficulty associated with finding research objects, this study focused on the classification of 24 skulls, which were all in an incomplete condition, especially those that had teeth attached.

As described previously, we considered two different digital skull images: skulls with mandibles and skulls without mandibles. We first applied each feature extraction filter separately to clearly understand the factors influencing the experimental results. This process was followed by combining all the feature extraction filters. The following subsections discuss the application of filters and obtained classification accuracy.

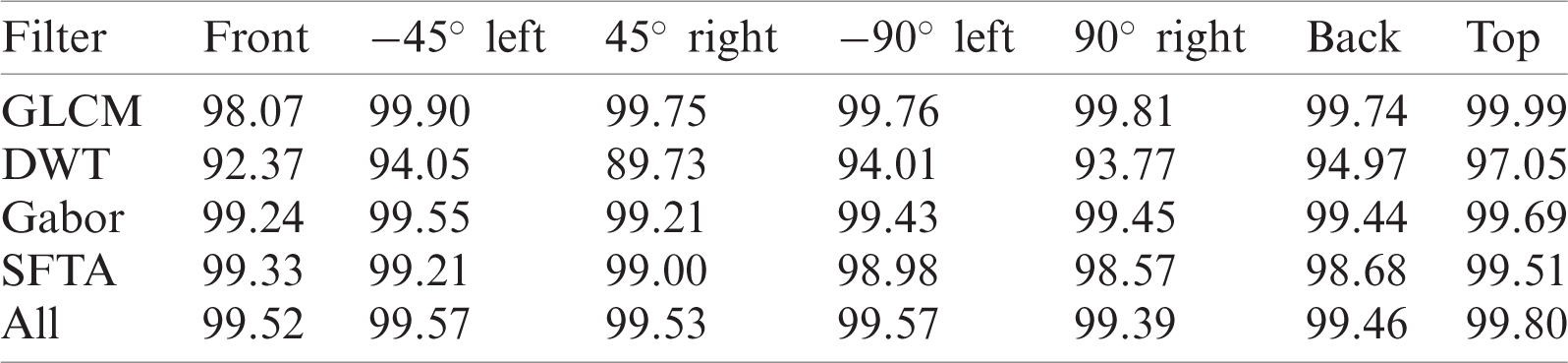

5.1 Experiment I: Identification of Skulls with Mandibles

In Experiment I, we considered the images of human skulls with mandibles and examined them from different angles as shown in Fig. 6. Prior to classification, the feature extraction techniques (see Section 4) were applied to the images, and Matlab was used to obtain the numerical values of the generated features. Then, we exported the numerical values on the basis of a filter set into a MySQL database for future referencing. Subsequently, we performed image-driven skull classification using the SVM implemented in a Java programming environment to compute the accuracy of the classification task on the basis of a given set of features. We considered all the individual treatments of each feature extraction filter and the combined effect. The accuracy rates of predicting the skulls from different angles are presented in Tab. 2.

The detailed steps of this experiment were as follows.

(1) Step 1: We used 24 sets of images extracted using various extraction filters. Each resulting set of images contained 360 transformed images obtained by rotating the original image via one-degree rotation per step. From all the available images, we selected 200 skull images as training data and 100 skull images as testing data. Our four extraction filtering techniques were then applied for feature extraction.

(2) Step 2: We ran the SVM to predict human skulls with mandibles using the four filtering techniques individually and then a combination of all four filters.

(3) Step 3: We conducted a series of image testing steps on the basis of the appropriate model constructed in Step (2) for human skulls with mandibles.

(4) Finally, we repeated Steps (1)–(3) nine more times (for a total of ten replicates) and obtained the average performance.
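The steps above amount to repeated random subsampling. The sketch below illustrates the protocol on synthetic data, under several assumptions: the 200/100 split is drawn per class, a nearest-centroid classifier stands in for the SVM, and the two-dimensional toy features and three classes replace the real 106-dimensional vectors and 24 skulls. None of the names or numbers come from the study's implementation.

```python
import random

def make_toy_data(rng, n_classes=3, per_class=360):
    """Synthetic stand-in: one 2-D feature vector per rotated image."""
    data = {}
    for label in range(n_classes):
        center = 5.0 * label
        data[label] = [[rng.gauss(center, 0.1), rng.gauss(center, 0.1)]
                       for _ in range(per_class)]
    return data

def split_round(data, rng, n_train=200, n_test=100):
    """Uniformly sample 300 vectors per class and split them 2:1."""
    X_tr, y_tr, X_te, y_te = [], [], [], []
    for label, vectors in data.items():
        picked = rng.sample(vectors, n_train + n_test)
        X_tr += picked[:n_train]
        y_tr += [label] * n_train
        X_te += picked[n_train:]
        y_te += [label] * n_test
    return X_tr, y_tr, X_te, y_te

def fit_centroids(X, y):
    """Stand-in classifier (not an SVM): mean vector per class."""
    sums, counts = {}, {}
    for vec, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for k, v in enumerate(vec):
            acc[k] += v
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}

def predict(model, vec):
    """Return the label of the closest class centroid."""
    return min(model, key=lambda lab: sum((a - b) ** 2
                                          for a, b in zip(vec, model[lab])))

rng = random.Random(0)
data = make_toy_data(rng)
accuracies = []
for _ in range(10):  # ten replicates, as in Step (4)
    X_tr, y_tr, X_te, y_te = split_round(data, rng)
    model = fit_centroids(X_tr, y_tr)
    correct = sum(predict(model, v) == t for v, t in zip(X_te, y_te))
    accuracies.append(correct / len(y_te))
mean_accuracy = sum(accuracies) / len(accuracies)
```

Averaging over independent random splits reduces the influence of any single favorable or unfavorable partition on the reported accuracy.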

The classification accuracy differed across the seven angles of interest. Each filter achieved a different accuracy, although the within-filter results were numerically stable. Gabor feature extraction was stable, i.e., consistently higher than 90%, making it the superior feature filter among the four considered techniques. In contrast, the DWT filter resulted in an accuracy rate as low as 89.73%, whereas the GLCM, Gabor, and fractal filters consistently achieved a classification accuracy >98%. With prediction accuracies that were mostly >90%, all four filters are promising tools for assisting the SVM in automatically classifying human skulls for physical anthropology applications.

Figure 6: Various angles used for depicting images: (a) front angle, (b) 45° right-angle rotation, (c) −45° left-angle rotation, (d) 90° right-angle rotation, (e) −90° left-angle rotation, (f) top angle, and (g) back angle

Table 2: Accuracy of prediction (%) for human skulls with mandibles

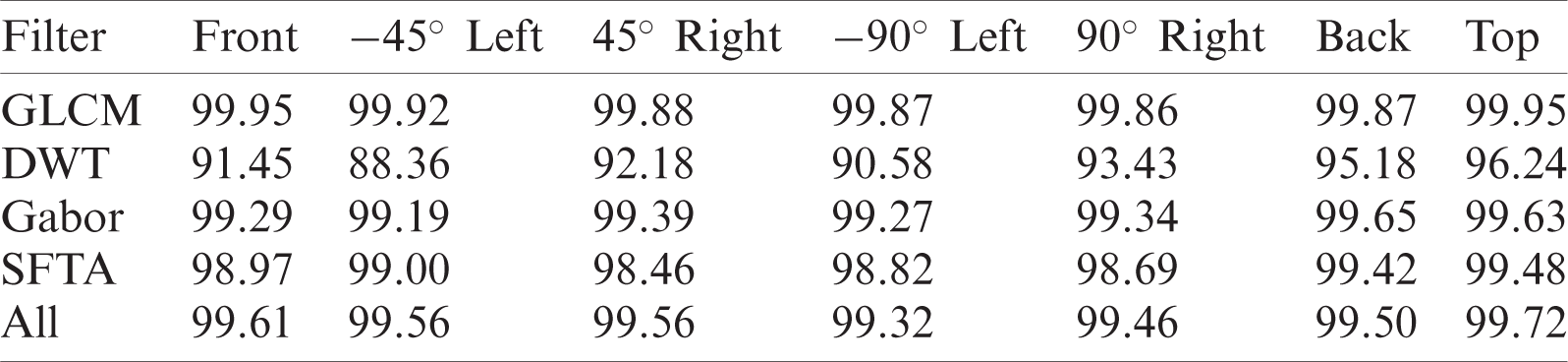

5.2 Experiment II: Identification of Skulls Without Mandibles

We also conducted identifications of skulls without mandibles to evaluate the robustness of our classification system.

Tab. 3 presents the performance accuracy of the five filters for human skulls without mandibles (we selected 24 out of 99 available samples in this table). The classification results obtained using the SVM varied according to the different feature extraction filters. Overall, the GLCM filter offered superior prediction capabilities, achieving higher than 99% accuracy for all the angular positions of the skulls. The discrete wavelet transform had the lowest accuracy. Almost all filters had prediction accuracies >90%, except for DWT at −45° left (88.36%). The prediction accuracy was 99.61% when we combined the features from all the filters.

Table 3: Accuracy prediction (%) for human skulls without mandibles

In automatic human skull classification, the implementation of feature extraction and the combination of different feature filters play a significant role in the accumulation of relevant features. Each filter can produce several features. A classification system with diverse results can be produced by using four different filters and combining all generated features. For example, in this study, the use of GLCM comprising 22 features resulted in a classification accuracy rate of 99.86–99.95% depending on the angular position of the skull. Conversely, DWT feature extraction had a much lower accuracy rate of 88.36–96.24%.

5.3 Experiment III: Different Resolutions for Skull Classification

To compare and validate the results of the previous experiments, in which we used a high-resolution camera, we also used a different electronic imaging device. In Experiment III, we used a mobile phone camera (Nokia 3.1 Plus) with a lower resolution. We followed the same experimental approach but captured the front-angle images of the skulls with different lens sizes for camera resolutions of 2, 4, and 9 MP.

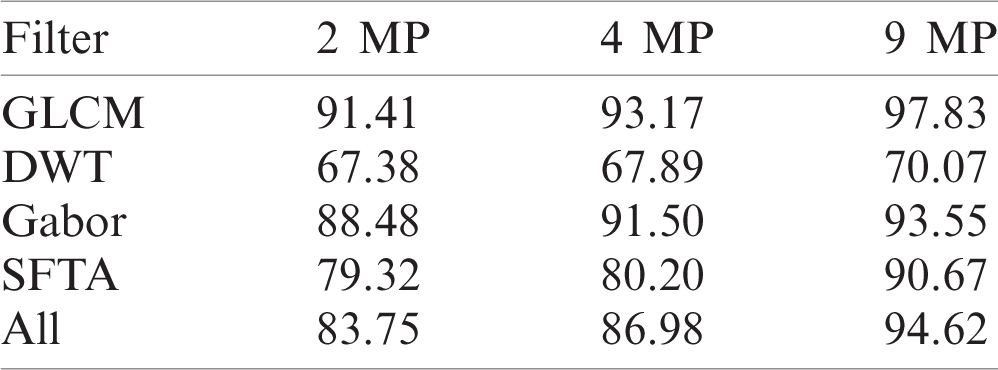

Tab. 4 presents the accuracies obtained when identifying human skulls using three different camera resolutions. The accuracy of predictions increased with increasing resolution. For example, a 2-MP camera resolution resulted in a prediction accuracy of 91.41% for GLCM, lower than those for a 4-MP resolution (93.17%) and a 9-MP resolution (97.83%).

Table 4: Accuracy of prediction (%) for different resolutions

Our experimental results indicate that the classification of skulls with mandibles was as accurate as that of skulls without mandibles. However, the required calculation time for processing the images of skulls with mandibles was shorter than that for skulls without mandibles.

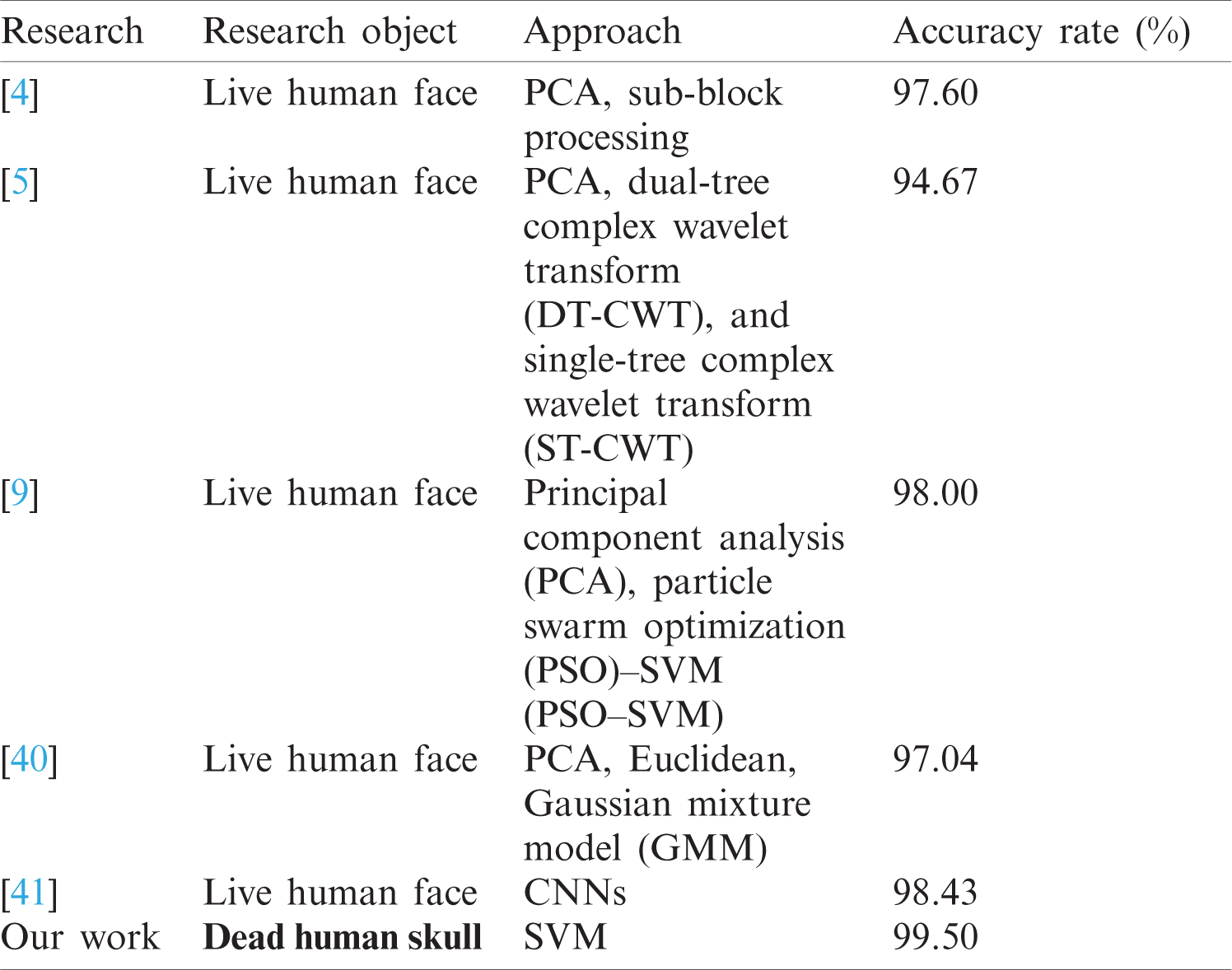

This study extends the analysis and framework for the identification of human faces reported in previous studies [4,5,9,40,41] but uses a different approach, namely the classification of human skulls. The results from previous studies are summarized in Tab. 5 for further comparison of identification accuracy. The majority of these approaches achieved an average accuracy higher than 90%. The lowest accuracy was observed with the method used by Hu (94.67%) [5]. Other studies exhibited much better accuracies, with averages >95%. The most accurate approach used CNNs [41] and achieved an accuracy of 98.43%. Other approaches, such as principal component analysis, Euclidean, and Gaussian mixture model [40], also exhibited a high accuracy. Nevertheless, our method of analyzing human skulls rather than the faces of living persons resulted in even higher accuracies. Using the framework presented in Fig. 2, we obtained a high classification accuracy when identifying skulls. Thus, our novel approach could be a promising application in digital forensics with respect to human skull identification.

Table 5: Results of different face recognition approaches

Unlike in human face recognition research, one of the major challenges in the present study was the acquisition of human skull data. The skull is an inanimate object that must be moved to obtain data from various angles. We achieved this by manually turning the skull to the appropriate angles to obtain images from various positions. This is highly challenging, especially when the skull is incomplete.

Moreover, variation in the amount of training data can impact the accuracy of the classification task. It is thus of interest to investigate how various training dataset sizes can affect the performance of SVM classification. The prediction accuracy rates for skulls with and without mandibles show that the amount of training and testing data affects the prediction accuracy. For example, with the GLCM filter, when we used only one training data item to predict skulls with mandibles, we obtained an accuracy rate of 18.33%. However, when we used 100 training data items, the accuracy rate was 97.03%. Thus, a greater amount of applied training data will result in a higher accuracy.
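The dependence of accuracy on the amount of training data can be explored with a small learning-curve experiment. The sketch below is illustrative only and makes several assumptions that are not taken from the study: the data are synthetic two-class Gaussian clusters rather than skull features, the classifier is a linear SVM without a bias term trained with the Pegasos stochastic sub-gradient method, and all names and parameter values are hypothetical.

```python
import random

def make_samples(n, dim, rng, shift=0.5):
    """Draw n labelled points from two Gaussian clusters centred at +shift and -shift."""
    data = []
    for i in range(n):
        label = 1 if i % 2 == 0 else -1
        point = [rng.gauss(label * shift, 1.0) for _ in range(dim)]
        data.append((point, label))
    return data

def train_linear_svm(data, rng, lam=0.1, steps=5000):
    """Pegasos: stochastic sub-gradient descent on the L2-regularised hinge loss."""
    w = [0.0] * len(data[0][0])
    for t in range(1, steps + 1):
        x, y = rng.choice(data)
        eta = 1.0 / (lam * t)
        margin = y * sum(wi * xi for wi, xi in zip(w, x))
        w = [(1.0 - eta * lam) * wi for wi in w]  # shrink (regularisation step)
        if margin < 1.0:  # sample violates the margin: move towards it
            w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w

def accuracy(w, data):
    """Fraction of samples whose predicted sign matches the label."""
    correct = sum(1 for x, y in data
                  if y * sum(wi * xi for wi, xi in zip(w, x)) > 0)
    return correct / len(data)

def learning_curve(sizes, dim=20, seed=0):
    """Test accuracy after training on the first n samples of a fixed pool."""
    rng = random.Random(seed)
    test = make_samples(400, dim, rng)
    pool = make_samples(max(sizes), dim, rng)
    return [(n, accuracy(train_linear_svm(pool[:n], rng), test)) for n in sizes]

if __name__ == "__main__":
    for n, acc in learning_curve([2, 10, 50, 200]):
        print(f"{n:4d} training samples -> accuracy {acc:.3f}")
```

Because the two synthetic clusters are symmetric about the origin, the bias term can be omitted; real feature vectors would normally need a bias term or prior centring, and a multi-class scheme for more than two skulls.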

Skulls generally have one dominant texture and color but may have different shapes and sizes even if the skulls share ancestry. However, if the bones are buried in different soils (for example, clay or calcareous soils), they will have different colors.

In this forensic study, we used a digital camera to digitize the skulls. The choice of digitizing tool affects the level of accuracy, especially through the image resolution. Therefore, for further research, we recommend the use of advanced digital technologies such as postmortem computed tomography (PMCT) and angiography, as well as X-rays.

This study focused on only 24 human skulls with mandibles and 24 skulls without mandibles because of the limitations and difficulties in obtaining sample data in physical anthropology. However, we also conducted experiments on other skulls without mandibles (99 skulls), even though some of their bone structures were incomplete when they were discovered. Therefore, we focused only on the classification of skull faces. Our results were similar to those obtained from Experiment III, although the level of accuracy was slightly higher than those in the previous experiments.

We developed an automatic, computerized digital forensics approach for human skull identification that uses feature extraction in tandem with an SVM. We applied a digital forensics framework to classify human skulls with and without mandibles. We tested four feature extraction filters, which resulted in different classification accuracies. GLCM achieved the highest accuracy, and the Gabor and fractal features also yielded accuracies >99%. In contrast, DWT features resulted in identification prediction accuracies <95%. The combination of the four feature extraction techniques produced an accuracy rate >99% for skulls both with and without mandibles. Thus, every human skull has unique features that can be used to distinguish its identity in forensics applications, especially in physical anthropology collection management.

We can identify several future directions for research related to skull identification. It will be necessary to optimize the combined feature extraction and classification method and to explore other feature extraction techniques and classification methods for performance comparisons. Utilizing additional skull data with the CNN method could be the main focus of such research. Furthermore, the determination of the age and gender associated with the skulls will greatly assist researchers in identifying humans who disappeared due to natural disasters or who were victims of criminal activities.

Funding Statement: The work of I. Yuadi and A. T. Asyhari has been supported in part by Universitas Airlangga through International Collaboration Funding (Mobility Staff Exchange).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. M. Pollitt, M. Caloyannides, J. Novotny and S. Shenoi, “Digital forensics: Operational, legal and research issues,” in Data and Applications Security XVII. IFIP International Federation for Information Processing, vol. 142. Boston: Springer, pp. 393–403, 2004.

2. D. Antoine and E. Taylor, “Collection care: Handling, storing and transporting human remains,” in Regarding the Dead: Human Remains in the British Museum. Chapter 5, Section 2, London, United Kingdom: British Museum, pp. 43, 2014.

3. J. Wei, Z. Jian-qi and Z. Xiang, “Face recognition method based on support vector machine and particle swarm optimization,” Expert Systems with Applications, vol. 38, no. 4, pp. 4390–4393, 2011.

4. G. B. Garcia, J. O. Mercado, G. S. Perez, M. N. Miyatake and H. P. Meana, “A sub-block-based eigen phases algorithm with optimum sub-block size,” Knowledge-Based Systems, vol. 37, pp. 415–426, 2013.

5. H. Hu, “Variable lighting face recognition using discrete wavelet transform,” Pattern Recognition Letters, vol. 32, no. 13, pp. 1526–1534, 2011.

6. A. Eleyan, H. Özkaramanli and H. Demirel, “Complex wavelet transform-based face recognition,” EURASIP Journal on Advances in Signal Processing, vol. 2008, no. 185281, pp. 1–13, 2009.

7. S. Dabbaghchian, M. P. Ghaemmaghami and A. Aghagolzadeh, “Feature extraction using discrete cosine transform and discrimination power analysis with a face recognition technology,” Pattern Recognition, vol. 43, no. 4, pp. 1431–1440, 2010.

8. N. L. A. Krisshna, V. K. Deepak, K. Manikantan and S. Ramachandran, “Face recognition using transform domain feature extraction and PSO-based feature selection,” Applied Soft Computing, vol. 22, pp. 141–161, 2014.

9. K. Gautam, N. Quadri, A. Pareek and S. S. Choudhary, “A face recognition system based on back propagation neural network using haar wavelet transform and morphology,” in Emerging Trends in Computing and Communication, vol. 298, pp. 87–94, 2014.

10. J. Olivares-Mercado, G. Sanchez-Perez, M. Nakano-Miyatake and H. Perez-Meana, “Feature extraction and face verification using Gabor and Gaussian mixture models,” in MICAI 2007: Advances in Artificial Intelligence, Aguascalientes, Mexico, pp. 769–778, 2007.

11. B. Kamgar-Parsi, W. Lawson and B. Kamgar-Parsi, “Toward development of a face recognition system for watchlist surveillance,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 33, no. 10, pp. 1925–1937, 2011.

12. F. Schroff, D. Kalenichenko and J. Philbin, “Facenet: A unified embedding for face recognition and clustering,” in IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, pp. 815–823, 2015.

13. M. Liao and X. Gu, “Face recognition based on dictionary learning and subspace learning,” Digital Signal Processing, vol. 90, pp. 110–124, 2019.

14. A. Elmahmudi and H. Ugail, “Deep face recognition using imperfect facial data,” Future Generation Computer Systems, vol. 99, pp. 213–225, 2019.

15. Y. Duan, J. Lu, J. Feng and J. Zhou, “Topology preserving structural matching for automatic partial face recognition,” IEEE Transactions on Information Forensics and Security, vol. 13, no. 7, pp. 1823–1837, 2018.

16. D. Chen, Z. Yuan, B. Chen and N. Zheng, “Similarity learning with spatial constraints for person re-identification,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, pp. 1268–1277, 2016.

17. Z. Wu, Y. Huang, L. Wang, X. Wang and T. Tan, “A comprehensive study on cross-view gait based human identification with deep CNNs,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 2, pp. 209–226, 2017.

18. J. H. Koo, S. W. Cho, N. R. Baek, M. C. Kim and K. R. Park, “CNN-Based multimodal human recognition in surveillance environments,” Sensors (Basel), vol. 18, no. 9, pp. 3040, Sept. 2018.

19. M. Cummaudo, M. Guerzoni, L. Marasciuolo, D. Gibelli, A. Cigada et al., “Pitfalls at the root of facial assessment on photographs: A quantitative study of accuracy in positioning facial landmarks,” International Journal of Legal Medicine, vol. 127, no. 3, pp. 699–706, 2013.

20. C. Cattaneo, “Forensic anthropology: Developments of a classical discipline in the new millennium,” Forensic Science International, vol. 165, no. 2–3, pp. 185–193, 2007.

21. B. R. Campomanes-Álvarez, O. Ibáñez, F. Navarro, I. Alemán, M. Botella et al., “Computer vision and soft computing for automatic skull-face overlay in craniofacial superimposition,” Forensic Science International, vol. 245, pp. 77–86, 2014.

22. K. Franke and S. N. Srihari, “Computational forensics: An overview,” in Lecture Notes in Computer Science, vol. 5158. Springer, Berlin, Heidelberg, 2008.

23. J. Bewes, A. Low, A. Morphett, F. D. Pate and M. Henneberg, “Artificial intelligence for sex determination of skeletal remains: Application of a deep learning artificial neural network to human skulls,” Journal of Forensic and Legal Medicine, vol. 62, pp. 40–43, 2019.

24. P. L. Walker, “Sexing skulls using discriminant function analysis of visually assessed traits,” American Journal of Physical Anthropology, vol. 136, no. 1, pp. 39–50, 2008.

25. B. A. Williams and T. Rogers, “Evaluating the accuracy and precision of cranial morphological traits for sex determination,” Journal of Forensic Sciences, vol. 51, no. 4, pp. 729–735, 2006.

26. D. D. Angelis, R. Sala, A. Cantatore, M. Grandi and C. Cattaneo, “A new computer-assisted technique to aid personal identification,” International Journal of Legal Medicine, vol. 123, no. 4, pp. 351–356, 2009.

27. D. G. Steele and C. A. Bramblett, The Anatomy and Biology of the Human Skeleton, Texas A & M University Press, College Station, 1988.

28. R. Budd, The Human Skull: An Interactive Anatomy Reference Guide, Toronto, Harrison Foster publications, 2016.

29. M. Awad and R. Khanna, “Support Vector Regression,” Efficient Learning Machines, Apress, Berkeley, CA, pp. 67–80, 2015.

30. C. Gold and P. Sollich, “Model selection for support vector machine classification,” Neurocomputing, vol. 55, no. 1–2, pp. 221–249, 2003.

31. C. W. Hsu, C. C. Chang and C. J. Lin, A Practical Guide to Support Vector Classification, Taipei: National Taiwan University, 2003. http://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf. Accessed: December 16, 2019.

32. W. S. Chen, R. H. Huang and L. Hsieh, “Iris recognition using 3D co-occurrence matrix,” in Advances in Biometrics. ICB 2009, Lecture Notes in Computer Science, vol. 5558. Springer, Berlin, Heidelberg, 2009.

33. M. J. Tsai, J. S. Yin, I. Yuadi and J. Liu, “Digital forensics of printed source identification for Chinese characters,” Multimedia Tools and Applications, vol. 73, pp. 2129–2155, 2014.

34. R. C. Gonzalez and R. E. Woods, Digital Image Processing, 3rd Ed. Prentice-Hall, New Jersey, 2006.

35. L. Chun-Lin, A Tutorial of the Wavelet Transforms, National Taiwan University, Feb. 2010. [online] Available: http://disp.ee.ntu.edu.tw/tutorial/WaveletTutorial.pdf. Accessed: 11 September 2019.

36. J. G. Daugman, “Complete discrete 2-D Gabor transforms by neural networks for image analysis and compression,” IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. 36, pp. 1169–1179, 1988.

37. R. J. Schalkoff, Digital Image Processing and Computer Vision, John Wiley & Sons, Australia, 1989.

38. J. G. Daugman, “High confidence visual recognition of persons by a test of statistical independence,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 15, pp. 1148–1161, 1993.

39. A. F. Costa, G. Humpire-Mamani and A. J. M. Traina, “An efficient algorithm for fractal analysis of textures,” in 2012 25th SIBGRAPI Conf. on Graphics, Patterns and Images, 2–25 August, Ouro Preto, pp. 39–46, 2012.

40. J. O. Mercado, K. Hotta, H. Takahashi, M. N. Miyatake and K. T. Medina, “Improving the eigen phase method for face recognition,” IEICE Electronics Express, vol. 6, no. 15, pp. 1112–1117, 2009.

41. C. Ding and D. Tao, “Robust face recognition via multimodal deep face representation,” IEEE Transactions on Multimedia, vol. 17, no. 11, pp. 2049–2058, 2015.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |