DOI:10.32604/cmc.2021.016108

Computers, Materials & Continua

Article

An Enhanced Jacobi Precoder for Downlink Massive MIMO Systems

Department of Information and Communication Engineering, and Convergence Engineering for Intelligent Drone, Sejong University, Seoul, 05006, Korea

*Corresponding Author: Song Hyoung-Kyu. Email: songhk@sejong.ac.kr

Received: 23 December 2020; Accepted: 26 January 2021

Abstract: Linear precoding methods such as zero-forcing (ZF) are near optimal for downlink massive multi-user multiple input multiple output (MIMO) systems owing to the asymptotic orthogonality of the channel. However, as the number of users increases, the computational complexity of inverting the gram matrix increases. To reduce this complexity while improving the error performance of the conventional Jacobi (JC) method, this paper proposes an improved JC-based precoder for downlink massive MIMO systems. The conventional JC was studied as a way to avoid the high computational complexity of the ZF algorithm, and it allows parallel implementation. However, as the number of users increases, the diagonal dominance of the gram matrix decreases and the error performance of the conventional JC degrades. In this paper, a preconditioning method is proposed to improve the error performance. Before the JC is executed, the linear equation is multiplied by a precondition matrix, which reduces the condition number of the linear equation and the spectral radius of the iteration matrix. To further reduce the condition number, this paper proposes a polynomial expansion precondition matrix that supplements the diagonal components. The results show that the proposed method outperforms other iterative methods and achieves performance similar to that of ZF.

Keywords: Jacobi (JC); massive MIMO; precondition; polynomial expansion; linear precoding

MIMO technology has been widely used in wireless communication. An emerging MIMO technology is massive MIMO, in which a base station (BS) uses hundreds of antennas to transmit data to many users. Because a large number of antennas is used at the BS, massive MIMO systems achieve greater spectral efficiency than conventional MIMO systems. For multi-user data transmission in downlink systems, the BS performs precoding in advance to eliminate inter-user interference. ZF is near optimal in downlink massive MIMO systems because the user channels become asymptotically orthogonal [1–5]. However, the computational complexity of ZF is

To solve the above problem and improve performance, this paper proposes a precondition Jacobi (PJC)-based precoder. Multiplication by the precondition matrix, which is performed in each iteration, increases the convergence rate and therefore allows fast convergence. To accelerate convergence and obtain accurate solutions, the precondition matrix should satisfy three conditions. First, it should accurately approximate the inverse of the gram matrix. Second, it should be obtainable with reasonable computational complexity. Third, the resulting iterative equation should remain easy to solve. This paper proposes a polynomial expansion based on the Richardson iteration (RI) to obtain the precondition matrix. Moreover, it proposes a modified iteration equation that reduces the computational complexity by avoiding matrix–matrix multiplication.

The remainder of this paper is organized as follows. Section 2 presents the model for downlink massive MIMO systems. Section 3 explains the conventional JC. In Section 4, the PJC is proposed and compared with other iterative methods in regard to computational complexity. Section 5 provides convergence rate and simulation results. Lastly, Section 6 presents a conclusion.

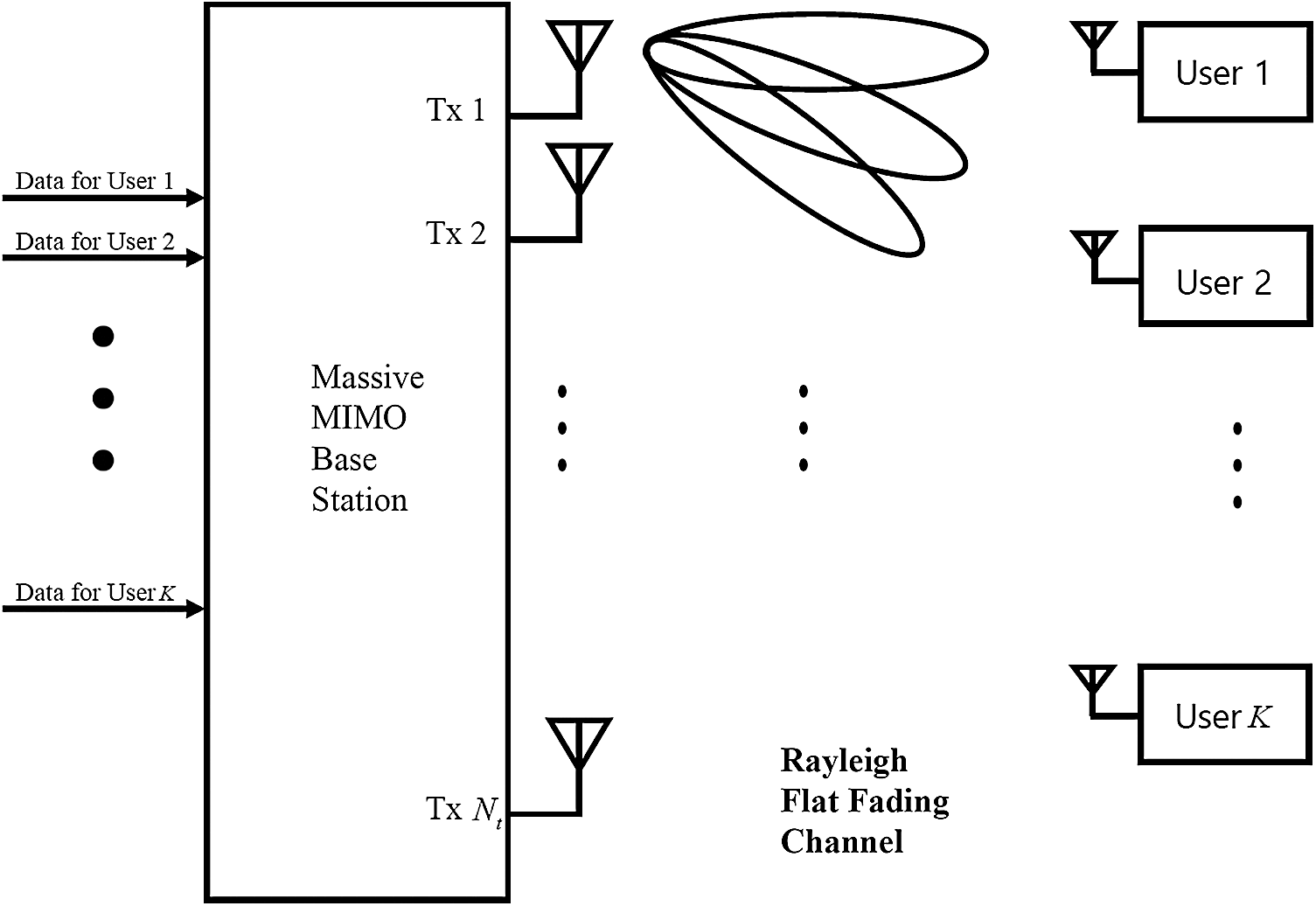

This paper considers the downlink massive MIMO system shown in Fig. 1, in which the BS has Nt antennas and serves K single-antenna users. The channel matrix between the BS antennas and the users is as follows:

where $h_{ij}$ is the $i$-th element of

where

Figure 1: The downlink massive MIMO system model
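The following is a minimal sketch of the system model and of the ZF baseline whose cost motivates the iterative methods. It is written in plain Python so that it has no dependencies. Because the channel equation is not reproduced here, the sketch assumes the following: H is a K × Nt matrix with i.i.d. CN(0, 1) entries, where row k is the channel of user k; the gram matrix is W = HH^H; and the unnormalized ZF transmit vector is t = H^H W^{-1} s. The helper names (`rayleigh_channel`, `gram`, `solve`, `zf_precode`) and the reduced sizes Nt = 64 and K = 16 are hypothetical choices for illustration.

```python
import math
import random


def crandn(rng):
    """One CN(0, 1) sample: real and imaginary parts are each N(0, 1/2)."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def rayleigh_channel(k, nt, rng):
    """K x Nt channel matrix with i.i.d. CN(0, 1) entries; row k belongs to user k."""
    return [[crandn(rng) for _ in range(nt)] for _ in range(k)]


def gram(h):
    """Gram matrix W = H H^H (K x K, Hermitian positive definite when K < Nt)."""
    return [[sum(a * b.conjugate() for a, b in zip(hi, hj)) for hj in h] for hi in h]


def matvec(a, x):
    """Plain matrix-vector product A x."""
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def herm_matvec(h, x):
    """H^H x: maps a length-K vector to a length-Nt transmit vector."""
    return [sum(h[k][n].conjugate() * x[k] for k in range(len(h))) for n in range(len(h[0]))]


def solve(a, b):
    """Direct solve of A x = b by Gaussian elimination with partial pivoting, O(K^3)."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def zf_precode(h, s):
    """Unnormalized ZF transmit vector t = H^H (H H^H)^{-1} s."""
    return herm_matvec(h, solve(gram(h), s))


rng = random.Random(1)
NT, K = 64, 16  # assumed sizes; the simulations in the text use Nt = 100
H = rayleigh_channel(K, NT, rng)
s = [complex(rng.choice((-1, 1)), rng.choice((-1, 1))) / math.sqrt(2.0) for _ in range(K)]
t = zf_precode(H, s)
received = matvec(H, t)  # noiseless: user k receives exactly s[k]
```

Each user receives its own symbol with no inter-user interference, which is the ZF property. The Gaussian elimination in `solve` costs O(K^3) operations per channel realization, and that is the cost the iterative precoders try to avoid.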

This paper uses the JC to avoid the direct inversion of the gram matrix

where

where

where

After the final iteration, the transmit symbol vector

where
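The following is a minimal sketch of a conventional JC precoder. It assumes the standard Jacobi splitting W = D + (W − D), where D is the diagonal of W, and the iteration x ← D^{-1}(s − (W − D)x). The transmit vector after the final iteration is taken as t = H^H x. The initial guess x^(0) = D^{-1}s, the problem sizes, the iteration count, and the function name `jc_solve` are assumptions for illustration.

```python
import math
import random


def crandn(rng):
    """One CN(0, 1) sample."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def rayleigh_channel(k, nt, rng):
    """K x Nt channel matrix with i.i.d. CN(0, 1) entries."""
    return [[crandn(rng) for _ in range(nt)] for _ in range(k)]


def gram(h):
    """W = H H^H."""
    return [[sum(a * b.conjugate() for a, b in zip(hi, hj)) for hj in h] for hi in h]


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def herm_matvec(h, x):
    """H^H x."""
    return [sum(h[k][n].conjugate() * x[k] for k in range(len(h))) for n in range(len(h[0]))]


def jc_solve(w, s, iters):
    """Jacobi iteration for W x = s: x <- D^{-1} (s - (W - D) x)."""
    k = len(w)
    d = [w[i][i].real for i in range(k)]   # the diagonal of a Hermitian W is real
    x = [s[i] / d[i] for i in range(k)]    # assumed initial guess x^(0) = D^{-1} s
    for _ in range(iters):
        # The comprehension reads only the previous x, so every entry is updated
        # simultaneously (Jacobi), not sequentially (Gauss-Seidel).
        x = [(s[i] - sum(w[i][j] * x[j] for j in range(k) if j != i)) / d[i]
             for i in range(k)]
    return x


rng = random.Random(2)
NT, K = 64, 4  # assumed light load, so W is strongly diagonally dominant
H = rayleigh_channel(K, NT, rng)
W = gram(H)
s = [complex(rng.choice((-1, 1)), rng.choice((-1, 1))) / math.sqrt(2.0) for _ in range(K)]
x = jc_solve(W, s, iters=60)
t = herm_matvec(H, x)  # transmit vector after the final iteration
residual = max(abs(a - b) for a, b in zip(matvec(H, t), s))
```

With only K = 4 users, the gram matrix is strongly diagonally dominant and the loop converges quickly. As K approaches Nt, the diagonal dominance weakens, and the same loop can slow down or diverge. This is the weakness of the conventional JC that the abstract describes.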

In this section, the proposed precondition matrix for the JC is explained. It aims to improve the bit error rate (BER) performance of downlink massive MIMO systems. JC-based variants such as the CJ, the SJ, and the DJ have been studied. However, the CJ and the SJ require division operations, which are the most expensive operations. Additionally, the DJ provides poor error performance in environments with a large number of users because its convergence rate is low and the condition number of the linear equation is high.

To solve the above problems, this paper proposes the PJC, which consists of two steps. First, the precondition matrix is computed by the simplest polynomial expansion, truncated after the first-order term. When the expansion includes terms of second order or higher, the computational complexity is

4.1 Proposed Precondition Matrix

The preconditioning process reduces the condition number of the linear equation, and the convergence rate increases as the condition number decreases [20]. To reduce the condition number of the linear equation, the precondition matrix is computed by polynomial expansion, which is given as follows:

For the expansion to converge to the inverse matrix, the matrix

where

The preconditioning process accelerates the convergence rate as the condition number of
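The following worked derivation shows why a first-order polynomial expansion lowers the condition number. It assumes, without confirmation from the surviving text, that the precondition matrix P is the RI-based Neumann series of W^{-1} with a relaxation parameter ω, truncated after the first-order term. The RI started from zero produces exactly these partial sums. The symbols ω, λ_min, λ_max, r, and κ are our notation, and the choice ω = 2/(λ_max + λ_min) is likewise an assumption.

```latex
\mathbf{W}^{-1} = \omega \sum_{n=0}^{\infty} (\mathbf{I} - \omega \mathbf{W})^{n},
\qquad \text{valid if } \rho(\mathbf{I} - \omega \mathbf{W}) < 1

\mathbf{P} = \omega \sum_{n=0}^{1} (\mathbf{I} - \omega \mathbf{W})^{n}
           = \omega (2\mathbf{I} - \omega \mathbf{W}),
\qquad
\mathbf{I} - \mathbf{P}\mathbf{W} = (\mathbf{I} - \omega \mathbf{W})^{2}

\lambda \in [\lambda_{\min}, \lambda_{\max}] \text{ eigenvalue of } \mathbf{W}
\;\Rightarrow\;
\lambda(\mathbf{P}\mathbf{W}) = 1 - (1 - \omega\lambda)^{2}

\omega = \frac{2}{\lambda_{\max} + \lambda_{\min}},\quad
\kappa = \frac{\lambda_{\max}}{\lambda_{\min}},\quad
r = \max_{\lambda} |1 - \omega\lambda| = \frac{\kappa - 1}{\kappa + 1}
\;\Rightarrow\;
\kappa(\mathbf{P}\mathbf{W}) \le \frac{1}{1 - r^{2}} = \frac{(\kappa + 1)^{2}}{4\kappa} < \kappa
\quad (\kappa > 1)
```

For example, κ = 9 gives κ(PW) ≤ 100/36 ≈ 2.8. For W = HH^H with i.i.d. CN(0, 1) entries and large Nt and K, the extreme eigenvalues are approximately (√Nt ± √K)², so this choice of ω is roughly 1/(Nt + K).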

4.2 Proposed JC Method with Precondition Matrix

In order to accelerate the convergence of the iterative method, the precondition matrix is applied to the JC. The linear equation multiplied by the precondition matrix is as follows:

where

where

However, (10) requires calculating the upper and lower triangular components of

where
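The point of the modified iteration is that the product PW never has to be formed. The following minimal sketch illustrates this under an assumed first-order preconditioner P = ω(2I − ωW). The diagonal of PW needs only the squared row norms of W, because (W²)_kk = Σ_j |W_kj|² for a Hermitian W. Likewise, Pr can be applied with a single matrix–vector product. The dense helpers `explicit_p` and `matmul` exist only to check the shortcut. The value ω = 1/(Nt + K) and all function names are assumptions.

```python
import math
import random


def crandn(rng):
    """One CN(0, 1) sample."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def gram_of_random_channel(k, nt, rng):
    """W = H H^H for a K x Nt matrix H with i.i.d. CN(0, 1) entries."""
    h = [[crandn(rng) for _ in range(nt)] for _ in range(k)]
    return [[sum(a * b.conjugate() for a, b in zip(hi, hj)) for hj in h] for hi in h]


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def precond_diag(w, omega):
    """Diagonal of P W for P = omega (2I - omega W), in O(K^2).

    (P W)_kk = omega (2 W_kk - omega (W^2)_kk), and for Hermitian W,
    (W^2)_kk = sum_j W_kj W_jk = sum_j |W_kj|^2 (squared norm of row k).
    """
    return [omega * (2.0 * row[i].real - omega * sum(abs(v) ** 2 for v in row))
            for i, row in enumerate(w)]


def apply_precond(w, omega, r):
    """P r = omega (2 r - omega W r): one matrix-vector product, O(K^2)."""
    wr = matvec(w, r)
    return [omega * (2.0 * ri - omega * wri) for ri, wri in zip(r, wr)]


def explicit_p(w, omega):
    """Reference only: dense P = omega (2I - omega W)."""
    k = len(w)
    return [[omega * ((2.0 if i == j else 0.0) - omega * w[i][j]) for j in range(k)]
            for i in range(k)]


def matmul(a, b):
    """Reference only: dense O(K^3) product A B."""
    return [[sum(a[i][m] * b[m][j] for m in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


rng = random.Random(3)
NT, K = 64, 16
OMEGA = 1.0 / (NT + K)  # assumed relaxation parameter
W = gram_of_random_channel(K, NT, rng)
P = explicit_p(W, OMEGA)
PW = matmul(P, W)  # the O(K^3) product that the modified iteration avoids
d_fast = precond_diag(W, OMEGA)
d_ref = [PW[i][i] for i in range(K)]
r = [complex(1.0, -1.0) for _ in range(K)]  # hypothetical residual vector
pr_fast = apply_precond(W, OMEGA, r)
pr_ref = matvec(P, r)
```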

Consequently, the proposed algorithm for designing PJC precoders is summarized as follows:

Step 1: Input parameter

Step 2: Initialization

Step 3: Calculate the diagonal component of

Step 4: Calculate the JC precoder

Step 5: ZF precoding
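The following is a minimal end-to-end sketch of a PJC-style precoder that puts the steps together. It assumes the first-order preconditioner P = ω(2I − ωW) with ω = 1/(Nt + K) and a zero initial guess. Jacobi on Ax = b can be written as x ← x + D_A^{-1}(b − Ax). With A = PW and b = Ps, the residual becomes P(s − Wx), so the update is x ← x + D^{-1}P(s − Wx), where D is the diagonal of PW. This is our reading of Steps 1 to 5, not the paper's exact equations, which are not reproduced here. The step comments are a hypothetical mapping.

```python
import math
import random


def crandn(rng):
    """One CN(0, 1) sample."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def rayleigh_channel(k, nt, rng):
    """K x Nt channel matrix with i.i.d. CN(0, 1) entries."""
    return [[crandn(rng) for _ in range(nt)] for _ in range(k)]


def gram(h):
    """W = H H^H."""
    return [[sum(a * b.conjugate() for a, b in zip(hi, hj)) for hj in h] for hi in h]


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def herm_matvec(h, x):
    """H^H x."""
    return [sum(h[k][n].conjugate() * x[k] for k in range(len(h))) for n in range(len(h[0]))]


def pjc_precode(h, s, iters, omega=None):
    """Hypothetical PJC-style precoder; returns (x, t) with t = H^H x."""
    k, nt = len(h), len(h[0])                 # Step 1: inputs H, s, iteration count
    if omega is None:
        omega = 1.0 / (nt + k)                # assumed: about 2 / (lambda_max + lambda_min)
    w = gram(h)                               # Step 2: gram matrix and x^(0) = 0 (assumed)
    x = [0j] * k
    d = [omega * (2.0 * row[i].real - omega * sum(abs(v) ** 2 for v in row))
         for i, row in enumerate(w)]          # Step 3: diagonal of P W only, O(K^2)
    for _ in range(iters):                    # Step 4: Jacobi on (P W) x = P s
        r = [si - wxi for si, wxi in zip(s, matvec(w, x))]                 # r = s - W x
        wr = matvec(w, r)
        pr = [omega * (2.0 * ri - omega * wri) for ri, wri in zip(r, wr)]  # P r
        x = [xi + pri / di for xi, pri, di in zip(x, pr, d)]               # x + D^{-1} P r
    return x, herm_matvec(h, x)               # Step 5: transmit vector H^H x


rng = random.Random(6)
NT, K = 64, 16
H = rayleigh_channel(K, NT, rng)
s = [complex(rng.choice((-1, 1)), rng.choice((-1, 1))) / math.sqrt(2.0) for _ in range(K)]
x, t = pjc_precode(H, s, iters=60)
W = gram(H)
residual = max(abs(a - b) for a, b in zip(matvec(W, x), s))
```

Each iteration costs two K × K matrix–vector products plus O(K) work, and no K × K matrix–matrix product is ever formed. At the assumed load K/Nt = 0.25, W is only weakly diagonally dominant, so a plain Jacobi loop on W may converge slowly or not at all.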

4.3 Computational Complexity Analysis

Computational complexity is an important indicator for evaluating the performance of an iterative method. In this paper, computational complexity is defined as the required number of multiplications. Tab. 1 shows the computational complexity of the PJC and several conventional methods. The complexity of the PJC is more than twice that of the RI, the JC, and the DJ since the computational complexity

Table 1: The computational complexity of the PJC, the RI, the JC, the DJ, and the ZF

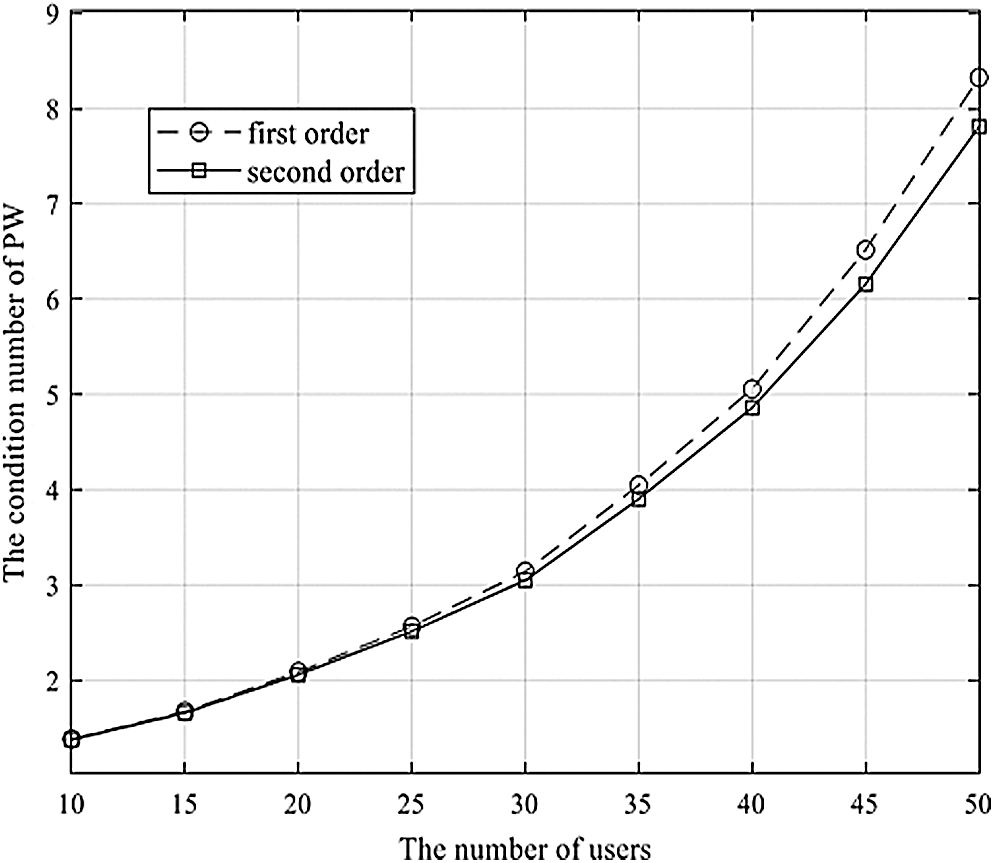

The convergence rates of different iterative methods can be compared by calculating the spectral radius of each iteration matrix as well as the condition number of each linear equation. The condition number of a linear equation is the ratio of the largest to the smallest singular value of its coefficient matrix. To reduce the condition number of a linear equation, a precondition matrix similar to the inverse matrix of

Fig. 2 shows the condition number of

The condition number obtained with the second-order precondition matrix is lower than that obtained with the first-order one, because the second-order precondition matrix is a slightly closer approximation of the inverse matrix of

Figure 2: The condition numbers of linear equations multiplied by the precondition matrix when Nt = 100

To evaluate the convergence rate, the iteration equation is expressed as

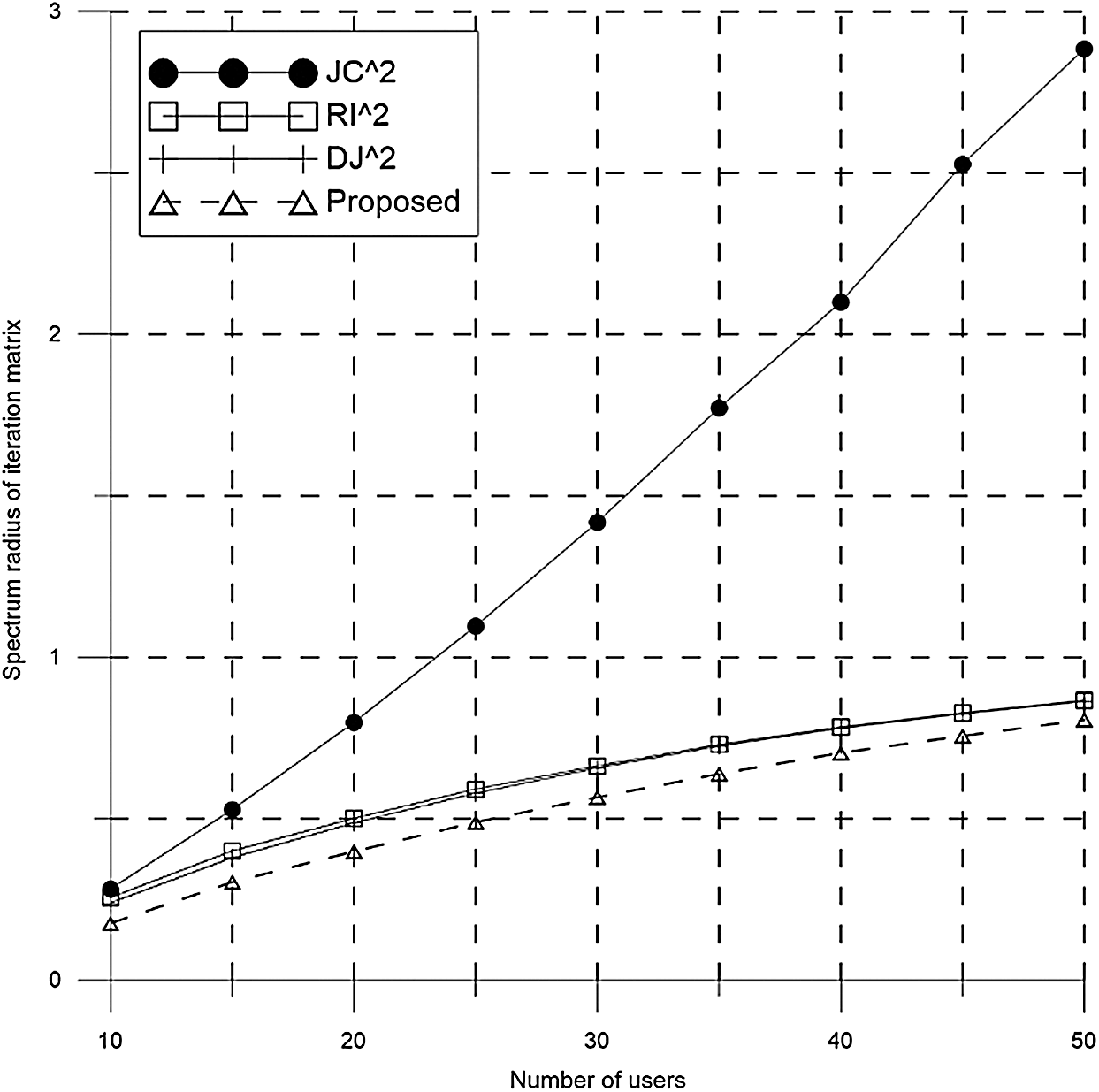

To match the computational complexity of the PJC, the iteration matrices of the RI, the DJ, and the JC are squared. Generally, when

Figure 3: Spectral radii of iteration matrices of several iterative methods when Nt = 100
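The following minimal sketch shows one way to estimate spectral radii like those in Fig. 3 without an eigensolver. It uses power iteration on the iteration matrix B and needs only products Bv. It assumes B_JC = I − D_W^{-1}W for the JC and B_PJC = I − D^{-1}PW for the PJC, with the first-order P = ω(2I − ωW), ω = 1/(Nt + K), and D the diagonal of PW. It also assumes that one eigenvalue has the largest magnitude. Because the eigenvalues of B² are the squares of those of B, ρ(B²) = ρ(B)². Squaring a cheaper method's iteration matrix is therefore equivalent to comparing two of its iterations against one PJC iteration.

```python
import math
import random


def crandn(rng):
    """One CN(0, 1) sample."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def gram_of_random_channel(k, nt, rng):
    """W = H H^H for a K x Nt matrix H with i.i.d. CN(0, 1) entries."""
    h = [[crandn(rng) for _ in range(nt)] for _ in range(k)]
    return [[sum(a * b.conjugate() for a, b in zip(hi, hj)) for hj in h] for hi in h]


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def norm(v):
    return math.sqrt(sum(abs(z) ** 2 for z in v))


def spectral_radius(apply_b, k, rng, iters=400):
    """Power-iteration estimate of rho(B): ||B v|| for a normalized v after many steps."""
    v = [crandn(rng) for _ in range(k)]
    nv = norm(v)
    v = [z / nv for z in v]
    est = 0.0
    for _ in range(iters):
        u = apply_b(v)
        est = norm(u)  # ||B v|| with ||v|| = 1
        v = [z / est for z in u]
    return est


rng = random.Random(4)
NT, K = 64, 16
OMEGA = 1.0 / (NT + K)  # assumed relaxation parameter of the first-order preconditioner
W = gram_of_random_channel(K, NT, rng)
D_JC = [W[i][i].real for i in range(K)]
D_PJC = [OMEGA * (2.0 * row[i].real - OMEGA * sum(abs(v) ** 2 for v in row))
         for i, row in enumerate(W)]


def b_jc(v):
    """(I - D^{-1} W) v for the conventional JC."""
    wv = matvec(W, v)
    return [vi - a / d for vi, a, d in zip(v, wv, D_JC)]


def b_pjc(v):
    """(I - D^{-1} P W) v, using P W v = omega (2 W v - omega W (W v))."""
    wv = matvec(W, v)
    wwv = matvec(W, wv)
    pwv = [OMEGA * (2.0 * a - OMEGA * b) for a, b in zip(wv, wwv)]
    return [vi - a / d for vi, a, d in zip(v, pwv, D_PJC)]


rho_jc = spectral_radius(b_jc, K, rng)
rho_pjc = spectral_radius(b_pjc, K, rng)
```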

This paper uses ZF as the optimal reference precoder. In multi-user (MU) MIMO systems, the minimum mean square error (MMSE) precoder provides better error performance than the ZF precoder. However, in massive MIMO systems, the ZF precoder achieves nearly the same error performance as the MMSE precoder. To evaluate performance, the BER is measured. In all simulations, the number of BS antennas is fixed at 100. The downlink channel is modeled as a Rayleigh flat-fading channel, and the BS is assumed to have perfect channel state information (CSI) for all users.
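The following is a minimal Monte Carlo sketch of a BER measurement in the stated setting: Rayleigh flat fading, perfect CSI, and QPSK. It uses direct ZF so that the block is self-contained, and an iterative precoder could replace the `solve(gram(h), s)` call. The sketch relies on several assumptions that the text does not fix: Gray-mapped QPSK, total transmit power normalized to one per channel use, SNR defined as 1/σ², receivers that know the normalization factor β, Nt = 64 instead of 100, and all helper names.

```python
import math
import random


def crandn(rng):
    """One CN(0, 1) sample."""
    return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / math.sqrt(2.0)


def rayleigh_channel(k, nt, rng):
    """K x Nt Rayleigh flat-fading channel with i.i.d. CN(0, 1) entries."""
    return [[crandn(rng) for _ in range(nt)] for _ in range(k)]


def gram(h):
    """W = H H^H."""
    return [[sum(a * b.conjugate() for a, b in zip(hi, hj)) for hj in h] for hi in h]


def matvec(a, x):
    return [sum(aij * xj for aij, xj in zip(row, x)) for row in a]


def herm_matvec(h, x):
    """H^H x."""
    return [sum(h[k][n].conjugate() * x[k] for k in range(len(h))) for n in range(len(h[0]))]


def solve(a, b):
    """Gaussian elimination with partial pivoting (direct ZF reference)."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ber_zf_qpsk(nt, k, snr_db, trials, rng):
    """Monte Carlo BER of unit-power ZF precoding with Gray-mapped QPSK."""
    noise_var = 10.0 ** (-snr_db / 10.0)  # assumed: SNR = total transmit power / noise variance
    errors = 0
    for _ in range(trials):
        h = rayleigh_channel(k, nt, rng)
        bits = [(rng.randrange(2), rng.randrange(2)) for _ in range(k)]
        s = [complex(1 - 2 * b0, 1 - 2 * b1) / math.sqrt(2.0) for b0, b1 in bits]
        t = herm_matvec(h, solve(gram(h), s))
        beta = math.sqrt(sum(abs(v) ** 2 for v in t))  # scale so that ||t||^2 = 1
        t = [v / beta for v in t]
        y = [v + math.sqrt(noise_var) * crandn(rng) for v in matvec(h, t)]
        for (b0, b1), yk in zip(bits, y):
            z = yk * beta  # assumed: each user knows beta and undoes the scaling
            errors += int((z.real < 0) != (b0 == 1)) + int((z.imag < 0) != (b1 == 1))
    return errors / (2 * k * trials)


ber_low = ber_zf_qpsk(64, 16, 0.0, 20, random.Random(5))
ber_high = ber_zf_qpsk(64, 16, 30.0, 20, random.Random(5))
```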

Fig. 4 shows the BER performance of the RI, the PJC, and ZF precoding with quadrature phase shift keying (QPSK). Since the number of diagonally dominant components of

Figure 4: BER performance comparison of RI, PJC, and ZF precoding methods in QPSK: (a) Nt = 100, K = 30; (b) Nt = 100, K = 40; (c) Nt = 100, K = 50

Fig. 5 shows the BER performance of the DJ, the PJC, and ZF precoding with 16-ary quadrature amplitude modulation (16QAM). In general, the results in Fig. 5 are similar to those in Fig. 4. It can be observed that the JC suffers from low reliability. In Fig. 5a, the performance of the PJC and the DJ improves as the number of iterations increases. Since

Figure 5: BER performance comparison of the DJ, the PJC, and the ZF precoding methods in 16QAM: (a) Nt = 100, K = 30; (b) Nt = 100, K = 40; (c) Nt = 100, K = 50

The PJC achieves these results because multiplying the linear equation by the precondition matrix reduces both the condition number of the linear equation and the spectral radius of the iteration matrix. Overall, the PJC achieves better BER performance than the RI and the DJ in environments with many users.

Iterative methods are one solution to the computational complexity problem in massive MIMO systems. This paper proposes an improved JC-based precoder for massive MIMO systems with a large number of users. The error performance is improved by the precondition matrix: to accelerate convergence, the linear equation is multiplied by the precondition matrix. The PJC iteration is then reformulated to avoid matrix–matrix multiplication, so that the full product of the precondition matrix and the gram matrix never has to be formed. Although the computational complexity of the PJC is higher than that of traditional methods, it overcomes the poor performance of the conventional JC when

Funding Statement: This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ITRC (Information Technology Research Center) support program (IITP-2019-2018-0-01423) supervised by the IITP (Institute for Information & communications Technology Promotion), and supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2020R1A6A1A03038540).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. F. Rusek, D. Persson, B. K. Lau, E. G. Larsson, T. L. Marzetta et al. (2013). “Scaling up MIMO: Opportunities and challenges with very large arrays,” IEEE Signal Processing Magazine, vol. 30, no. 1, pp. 40–60.

2. E. G. Larsson, O. Edfors, F. Tufvesson and T. L. Marzetta. (2014). “Massive MIMO for next generation wireless systems,” IEEE Communications Magazine, vol. 52, no. 2, pp. 186–195.

3. L. Lu, G. Y. Li, A. L. Swindlehurst, A. Ashikhmin and R. Zhang. (2014). “An overview of massive MIMO: Benefits and challenges,” IEEE Journal of Selected Topics in Signal Processing, vol. 8, no. 5, pp. 742–758.

4. T. L. Marzetta. (2015). “Massive MIMO: An introduction,” Bell Labs Technical Journal, vol. 20, pp. 11–22.

5. E. Björnson, E. G. Larsson and T. L. Marzetta. (2016). “Massive MIMO: Ten myths and one critical question,” IEEE Communications Magazine, vol. 54, no. 2, pp. 114–123.

6. M. A. Albreem, M. Juntti and S. Shahabuddin. (2019). “Massive MIMO detection techniques: A survey,” IEEE Communications Surveys & Tutorials, vol. 21, no. 4, pp. 3109–3132.

7. Z. Zhang, J. Wu, X. Mal, Y. Dong, Y. Wang et al. (2016). “Reviews of recent progress on low-complexity linear detection via iterative algorithms for massive MIMO systems,” in 2016 IEEE/CIC Int. Conf. on Communications in China (ICCC Workshops), Chengdu, pp. 1–6.

8. N. Fatema, G. Hua, Y. Xiang, D. Peng and I. Natgunanathan. (2018). “Massive MIMO linear precoding: A survey,” IEEE Systems Journal, vol. 12, no. 4, pp. 3920–3931.

9. O. Gustafsson, E. Bertilsson, J. Klasson and C. Ingemarsson. (2017). “Approximate Neumann series or exact matrix inversion for massive MIMO?,” in 2017 IEEE 24th Symp. on Computer Arithmetic (ARITH), London, pp. 62–63.

10. D. Zhu, B. Li and P. Liang. (2015). “On the matrix inversion approximation based on Neumann series in massive MIMO systems,” in 2015 IEEE Int. Conf. on Communications (ICC), London, pp. 1763–1769.

11. X. Gao, L. Dai, J. Zhang, S. Han and I. Chih-Lin. (2015). “Capacity-approaching linear precoding with low-complexity for large-scale MIMO systems,” in 2015 IEEE Int. Conf. on Communications (ICC), London, pp. 1577–1582.

12. X. Gao, L. Dai, Y. Hu, Z. Wang and Z. Wang. (2014). “Matrix inversion-less signal detection using SOR method for uplink large-scale MIMO systems,” in 2014 IEEE Global Communications Conf., Austin, TX, pp. 3291–3295.

13. T. Xie, L. Dai, X. Gao, X. Dai and Y. Zhao. (2016). “Low-complexity SSOR-based precoding for massive MIMO systems,” IEEE Communications Letters, vol. 20, no. 4, pp. 744–747.

14. B. Kang, J. Yoon and J. Park. (2017). “Low-complexity massive MIMO detectors based on Richardson method,” ETRI Journal, vol. 39, no. 3, pp. 326–335.

15. Y. Lee. (2017). “Decision-aided Jacobi iteration for signal detection in massive MIMO systems,” Electronics Letters, vol. 53, no. 23, pp. 1552–1554.

16. W. Song, X. Chen, L. Wang and X. Lu. (2016). “Joint conjugate gradient and Jacobi iteration based low complexity precoding for massive MIMO systems,” in 2016 IEEE/CIC Int. Conf. on Communications in China (ICCC), Chengdu, pp. 1–5.

17. X. Qin, Z. Yan and G. He. (2016). “A near-optimal detection scheme based on joint steepest descent and Jacobi method for uplink massive MIMO systems,” IEEE Communications Letters, vol. 20, no. 2, pp. 276–279.

18. Y. Zhang, A. Yu, X. Tan, Z. Zhang, X. You et al. (2018). “Adaptive damped Jacobi detector and architecture for massive MIMO uplink,” in 2018 IEEE Asia Pacific Conf. on Circuits and Systems (APCCAS), Chengdu, pp. 203–206.

19. J. Ro, W. Lee, J. Ha and H. Song. (2019). “An efficient precoding method for improved downlink massive MIMO system,” IEEE Access, vol. 7, pp. 112318–112326.

20. A. Pyzara, B. Bylina and J. Bylina. (2011). “The influence of a matrix condition number on iterative methods’ convergence,” in 2011 Federated Conf. on Computer Science and Information Systems (FedCSIS), Szczecin, pp. 459–464.

21. F. Jin, Q. Liu, H. Liu and P. Wu. (2019). “A low complexity signal detection scheme based on improved Newton iteration for massive MIMO systems,” IEEE Communications Letters, vol. 23, no. 4, pp. 748–751.

22. A. Ruperee and S. Nema. (2019). “Time shifted pilot signal transmission with pilot hopping to improve the uplink performance of massive MIMO systems for next generation network,” KSII Transactions on Internet and Information Systems, vol. 13, no. 9, pp. 4390–4407.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.