Computers, Materials & Continua

DOI: 10.32604/cmc.2021.013663

Article

Fuzzy Based Adaptive Deblocking Filters at Low-Bitrate HEVC Videos for Communication Networks

1Department of Electronics and Communication Engineering, IKG-Punjab Technical University, Jalandhar, 144603, India

2Department of Electronics and Communication Engineering, DAVIET, Jalandhar, India

*Corresponding Author: Anudeep Gandam. Email: Gandam.anu@gmail.com

Received: 16 August 2020; Accepted: 17 October 2020

Abstract: In-loop filtering helps detect and remove blocking artifacts across block boundaries in High Efficiency Video Coding (HEVC) frames coded at low bitrates, and it improves their subjective visual quality in multimedia services over communication networks. However, when complex videos are processed quickly at a low bitrate, some visible artifacts still considerably degrade the picture quality. In this paper, we propose a four-step fuzzy-based adaptive deblocking filter selection technique. The proposed method efficiently removes quantization noise, blocking artifacts, and corner outliers from HEVC-coded videos, even at low bitrates. We consider the Y (luma), U (chroma-blue), and V (chroma-red) components in parallel. Finally, we develop a fuzzy system that detects blocking artifacts and applies adaptive filters as required in all four quadrants, namely up 45°, down 45°, up 135°, and down 135°, across horizontal and vertical block boundaries. Experiments are carried out on a wide variety of videos. Objective and subjective analyses are performed with MATLAB software and the Human Visual System (HVS). The proposed method substantially outperforms existing post-processing deblocking techniques in terms of YPSNR and BD_rate. It achieves YPSNR gains of 0.32–0.97 dB and a BD_rate of +1.69% for the luma component and −0.18% (U) and −1.99% (V) for the chroma components with respect to state-of-the-art methods. The proposed method has low computational complexity and lends itself to parallel processing, which makes it suitable for real-time systems in the near future.

Keywords: Adaptive deblocking filters; high efficiency video coding; blocking artifacts; corner outliers; bitrate; YPSNR; BD_rate

High-definition video content in the present era of multimedia applications requires large bandwidth [1–5]. Researchers face the challenging task of saving bandwidth through adequate compression without degrading the visual content delivered over low-bandwidth networks. Because video content demands so much bandwidth, aggressive compression significantly affects the video’s perceptual quality. The high demand for online video streaming has widened the horizon of video coding and made it a promising research area. The conventional H.264/AVC (Advanced Video Coding) standard, a joint effort of the ITU-T (International Telecommunication Union) and MPEG (Moving Picture Experts Group) groups, was released in 2003 and was preceded by the H.263 video coding standard [3,4]. H.264/AVC later gained attention and was adopted as an industry standard [5–7]. Extensive design analysis shows that H.264 can achieve about 50% better compression efficiency than its predecessors [8,9]. Video compression has since found a wide range of applications that implement the H.264/AVC video coding protocol [10–13]. Despite these qualities, variations in the embedded design of specific mobile devices make it difficult to process the H.264/AVC standard efficiently [1,3,11,13], which leads to an exorbitant computational cost. The dynamic behavior of mobile networks also causes overhead in power-constrained mobile devices while processing H.264 [14–20]. Hence, there is a major trade-off between high-end compression, power consumption, and design complexity. Our study aims at optimizing the conventional High Efficiency Video Coding (HEVC) standard to alleviate blocking artifacts and corner outliers. We achieve this by integrating deblocking filters with a fuzzy approach while preserving the perceptual quality of the coded videos [3–5,21–23].

Many researchers have proposed methods in the last decade to alleviate corner outliers and blocking artifacts. These include post-processing algorithms [5–20] and in-loop filtering methods [10–15], which remove blocking artifacts efficiently. Numerous studies have examined the variable intra-coding prediction properties of the HEVC standard. Huang et al. [6] introduced a deblocking approach for improving the perceptual video quality of the H.264 standard. Hannuksela et al. [7] presented different features of H.264/AVC and described the encoding and decoding techniques standardized by the International Organization for Standardization (ISO)/International Electrotechnical Commission (IEC) together with ITU-T. The main disadvantage of such an approach is the lack of maximal freedom in implementing different applications without degrading image or video quality, implementation time, and cost. Dias et al. [8] proposed a rate-distortion-based hypothesis to improve the quantization rate of HEVC; the authors investigated the Mean Opinion Score (MOS) and multimedia video quality assessment. Tang et al. [9] introduced HEVC video compression, and Trzcianowski et al. [10] reported similar work. Chen et al. [11] presented an extensive survey of Ultra High Definition (UHD) document utilization and its implementation in the proposed systems; the authors achieved around 64% bitrate using the HEVC standard. He et al. [12] presented an estimation technique for UHD video data with minimum computational cost using VLSI design frameworks. Ahn et al. [13] proposed a parallel processing technique for the HEVC standard to improve quality, whereas Blasi et al. [14] implemented a movement compensation technique with adaptive precision to identify the complexity of coding performance.

In the HEVC method, a frame is divided into a code tree of variable sample sizes (16 × 16, 32 × 32, 64 × 64) and further divided into smaller blocks known as Coding Units (CU). The sizes of the Prediction Unit (PU) and the Transform Unit (TU) increase with the size of the Large Coding Unit (LCU), which results in some annoying artifacts even after in-loop filtering is applied. Moreover, the in-loop filters cannot remove corner outliers because of their 1-D filtering properties [6–14]. Post-processing techniques, on the other hand, are more flexible and can be applied to any standard, such as MPEG-4/AVC and HEVC. Blocking artifacts can be rectified with different post-processing approaches such as frequency domain analysis [15–17,19], Projection Onto Convex Sets (POCS) [16,17], wavelet-based techniques [18], estimation theory [20,21], and filtering approaches [8–22]. The most common method is to apply a low-pass filter across the block boundaries to remove artifacts. The main disadvantage of this spatial filtering technique is over-smoothing, which is attributed to its low-pass properties. Kim et al. [17] presented a POCS-based post-processing technique to remove blocking artifacts. POCS is more complex and computationally demanding because of the many iteration steps performed during the Discrete Cosine Transform (DCT) and the Inverse Discrete Cosine Transform (IDCT). Singh et al. [18] proposed a DCT-based filtering method for smooth regions; however, its performance is poor. Hu et al. [20] proposed a Singular Value Decomposition (SVD) technique. Yang et al., in turn, proposed an iteration-based Fields of Experts (FoE) technique. Because of its iterative approach, this method is not useful in real-time image/video applications. The main drawback of FoE is its high computational complexity, as it works efficiently for one optimum block size only. The techniques discussed in the literature can alleviate blocking artifacts to a large extent; however, their subjective performance at a low bitrate falls well short of expectations.

1.2 Motivation and Contribution

In the HEVC method, blocking artifacts are observed at low bitrates because of its large block sizes, different partition blocks, and different chroma and luma components [22–31]. The De-blocking Filter (DBF) used for the chroma component is simple; however, it performs worse on the chroma components than on the luma component. Thus, artifacts arising from the chroma component still need to be explored [25–32].

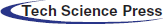

The paper proposes a new four-step fuzzy deblocking filter selection technique to mitigate HEVC coding problems. First, we remove the quantization noise to avoid a wrong selection of the deblocking filter. Second, we develop an efficient adaptive method for detecting blocking artifacts and corner outliers. Finally, we introduce a novel fuzzy-based adaptive filter selection technique that alleviates blocking artifacts and corner outliers simultaneously. The complete approach works on the HEVC luma and chroma components.

The rest of the paper is organized as follows: Section 2 introduces the proposed algorithm, Section 3 presents the results and discussion, and Section 4 concludes the paper.

In this section, the quantization error is first eliminated with a pre-processing spatial filter, after which an adaptive deblocking algorithm is applied. Fuzzy filter selection for the different regions is then introduced, together with the simultaneous detection and removal of corner outliers.

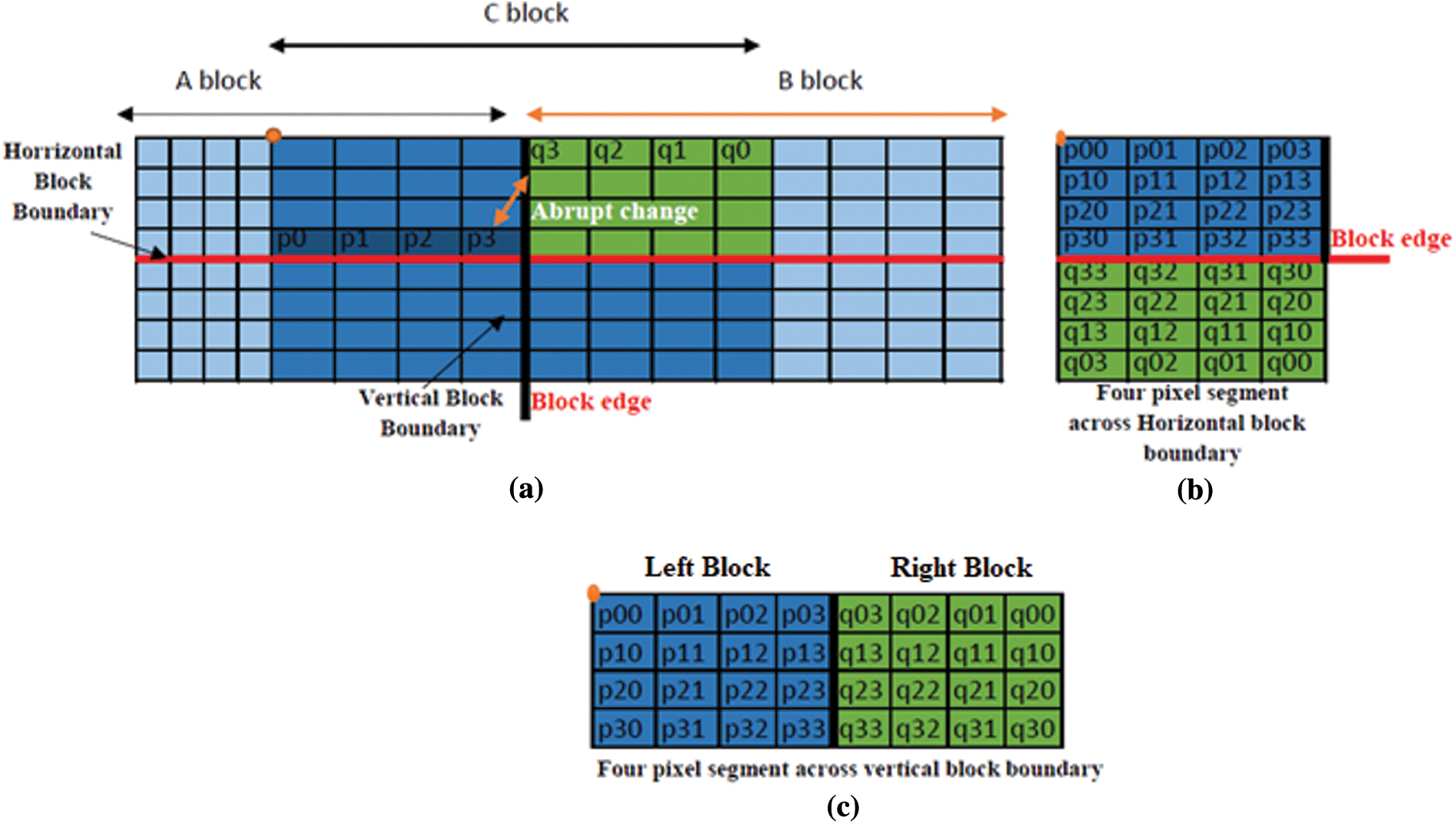

The proposed technique aims at removing an abrupt signal change in the consecutive frames. The subjective quality of the HEVC coded frames is significantly improved by removing all types of artifacts. The proposed technique is explained in Fig. 1. The details of each block are explained subsequently in the subsection of the proposed method.

Figure 1: Block diagram of the proposed algorithm

2.1 Removal of Quantization Error using Spatial Filtering

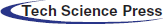

The human eye is sensitive to abrupt signal changes in decoded frames. Fig. 2 depicts a pixel whose contrast is abruptly higher or lower than that of its neighboring pixels. A mean filter is applied to remove such discontinuities. S denotes the set of eight surrounding pixels, and the ninth pixel (p11), which exhibits the abrupt change, is the pixel under consideration [9].

Figure 2: 3 × 3 block of neighboring pixels with discontinuities

If the pixel (p11) carries quantization noise, it must satisfy the conditions given in Eqs. (1)–(3),

where N denotes the neighboring pixels, M is the median, and the comparison is made against a threshold value. In addition, (Tp/f) is the threshold used to measure the dissimilarity between two adjacent frames or pixels.

The parameters in these conditions take fixed values. If Eqs. (1)–(3) are satisfied by (p11), the pixel is taken to have an abnormally large signal value, and it is replaced with the mean of its eight neighboring pixels to remove the undesired noise, as given by the relation below.

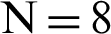

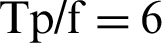

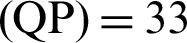

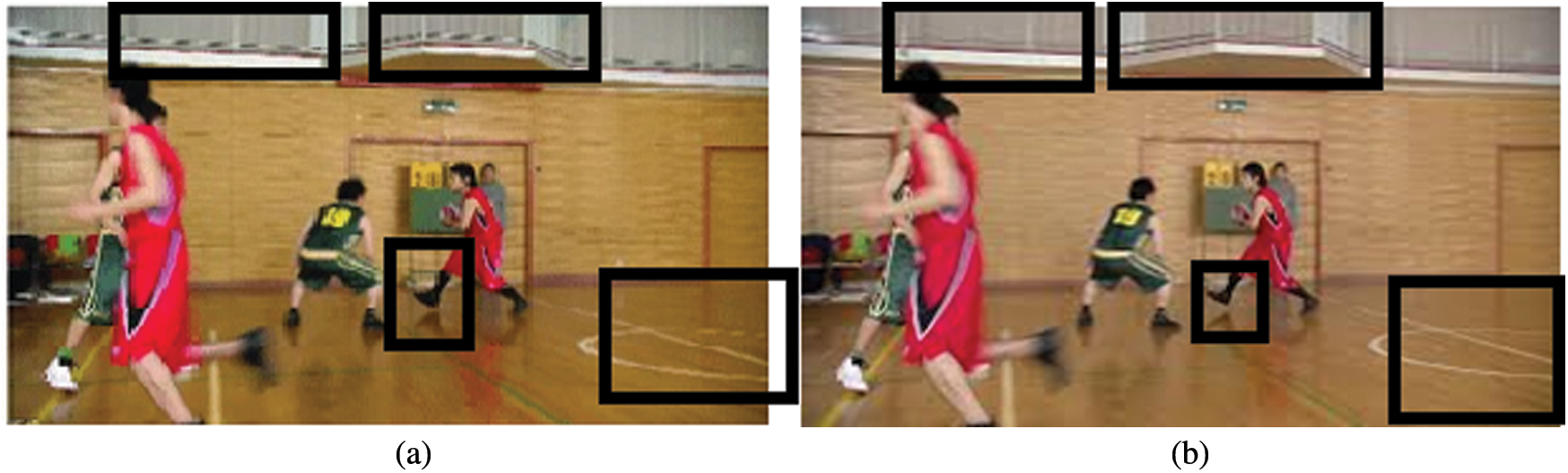

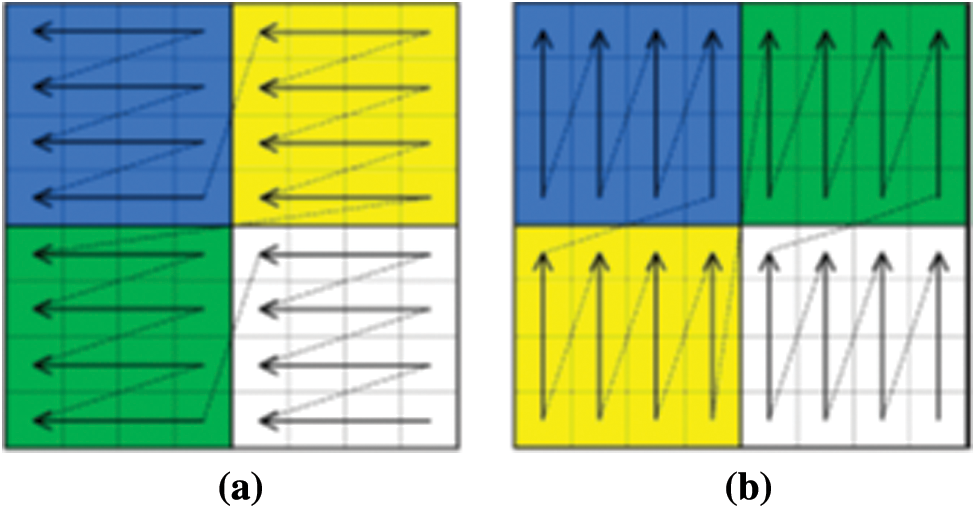

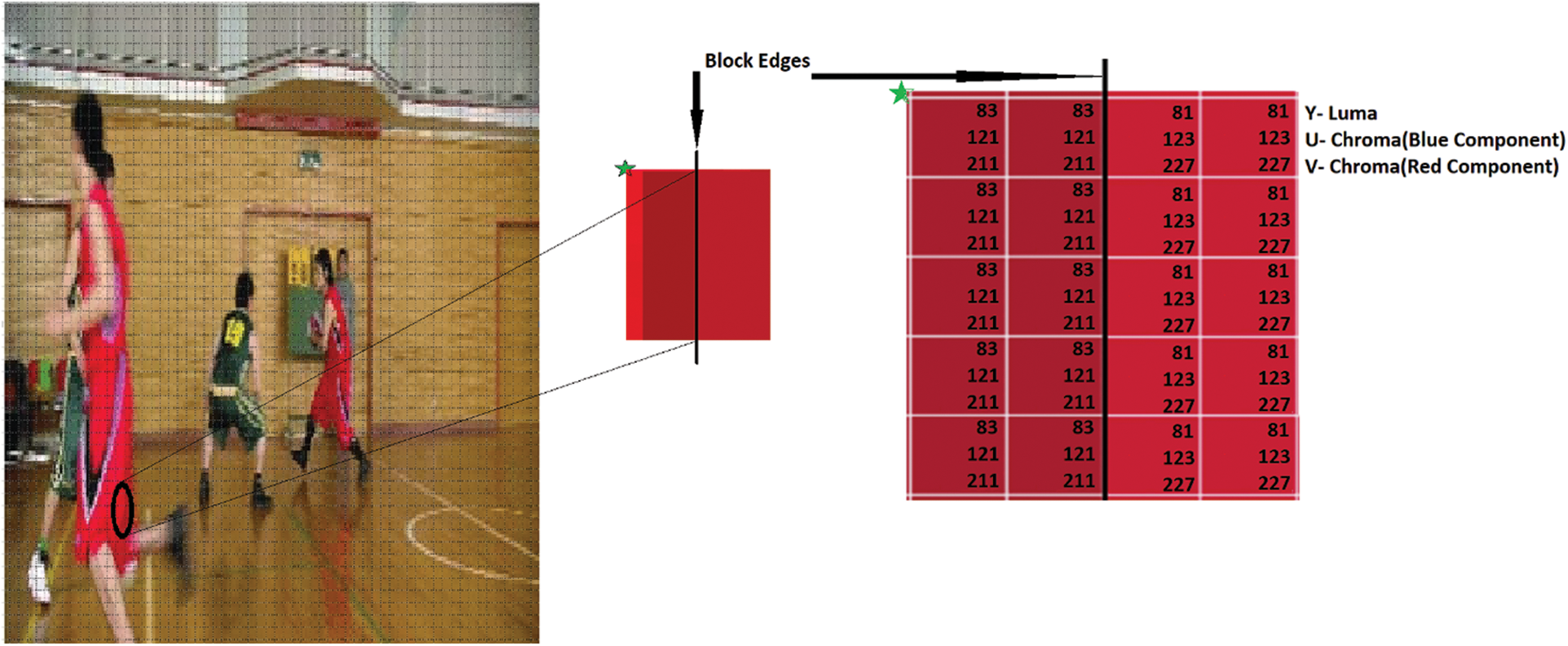
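The replacement step of Section 2.1 can be sketched in Python as follows. Eqs. (1)–(3) are not reproduced in this text, so the detection test used here (the center pixel differs from the median of its eight neighbors by more than a threshold) is only an assumption standing in for them; the replacement by the mean of the eight neighbors follows the description above. The function name and the default threshold of 6 (borrowed from the Tp/f value quoted in Section 2.2) are illustrative.

```python
from statistics import mean, median


def suppress_quantization_outlier(block, threshold=6):
    """Return a copy of a 3x3 block with the center pixel p11 smoothed if it is an outlier.

    block: 3x3 nested list of pixel intensities.
    threshold: hypothetical stand-in for the thresholds of Eqs. (1)-(3).
    """
    neighbors = [block[r][c] for r in range(3) for c in range(3) if (r, c) != (1, 1)]
    center = block[1][1]
    out = [row[:] for row in block]
    # Assumed test: p11 deviates from the neighbor median by more than the threshold.
    if abs(center - median(neighbors)) > threshold:
        # Replacement described in the text: mean of the eight neighbors.
        out[1][1] = round(mean(neighbors))
    return out
```

A pixel that sits close to its neighborhood is left untouched, so smooth regions pass through this stage unchanged.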

The subjective analysis of an HEVC decoded image compressed at a given Quantization Parameter (QP) is shown in Fig. 3.

Figure 3: (a) Basketball HEVC decoded image (b) Output after removal of quantization error

2.2 Blocking Artifacts Detection and Removal

Independent coding and decoding of blocks create discontinuities across block boundaries. In standard HEVC, the size of the prediction block varies from (4 × 4) to (64 × 64). The LCU is used for the smooth parts of the picture, whereas regions with intricate details favor small blocks over large ones. Traditional HEVC applies the deblocking filter on an (8 × 8) block grid to remove blocking artifacts. We process a pixel grid built across each block edge to alleviate blocking artifacts while preventing spatial dependencies across the picture edges.

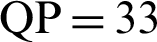

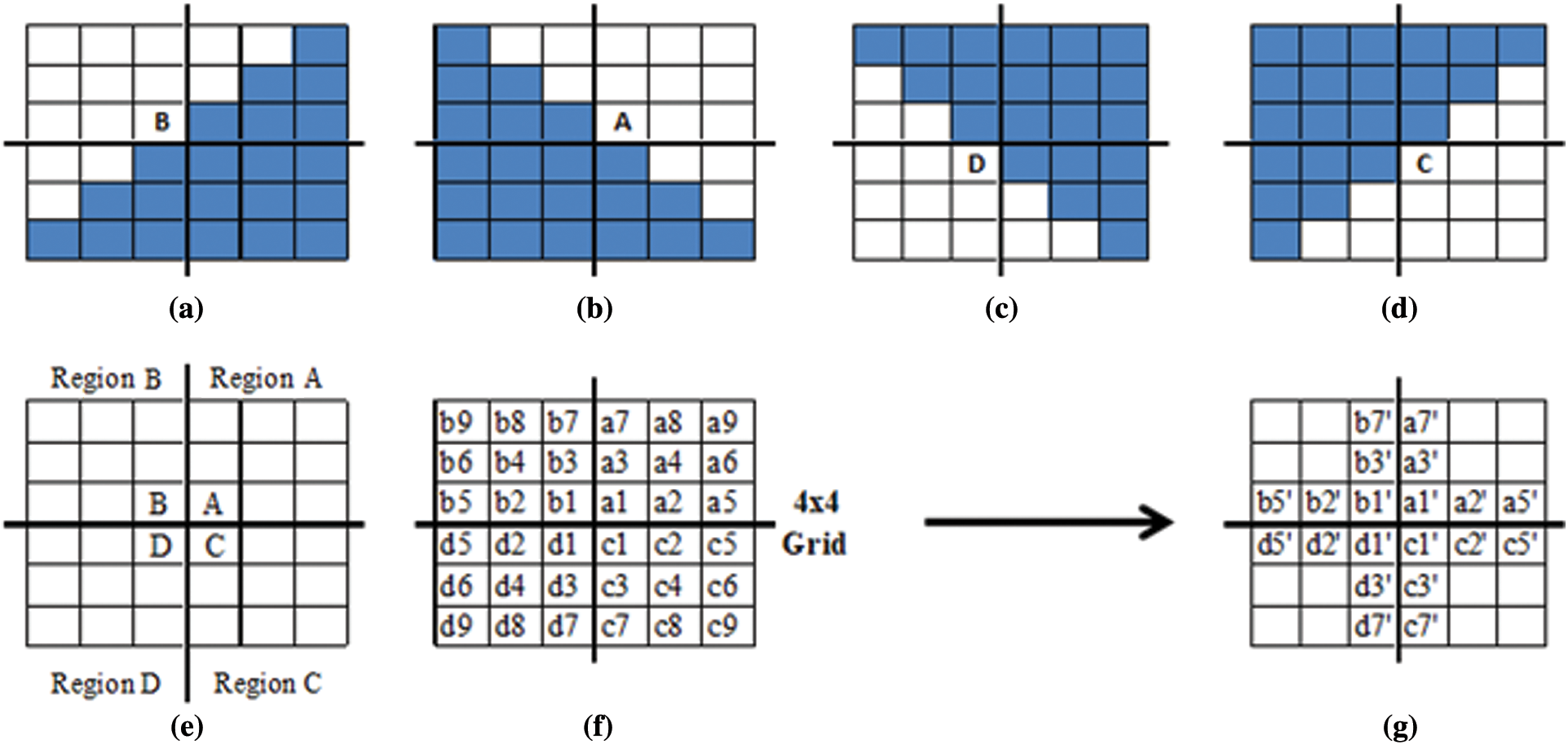

Fig. 4a depicts blocks A and B across horizontal as well as vertical block boundaries. Another block, C, is constructed by taking four elements from each block to form a grid, as shown in Figs. 4b and 4c, respectively. Fig. 4a shows a 1-D signal on each side of the block boundary. Fig. 4b depicts the grid of pixels across the horizontal block boundary. Similarly, Fig. 4c shows the grid across the vertical block boundary.
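The construction of block C from its two neighbors can be illustrated with a short Python sketch. It assumes blocks stored as nested lists of equal size and takes the four rows or columns nearest the shared edge from each block, as described above; the function names and the parameter `k` are hypothetical.

```python
def grid_across_vertical_edge(left, right, k=4):
    """Join the k columns nearest the edge from two side-by-side blocks."""
    return [row_l[-k:] + row_r[:k] for row_l, row_r in zip(left, right)]


def grid_across_horizontal_edge(top, bottom, k=4):
    """Stack the k rows nearest the edge from two vertically adjacent blocks."""
    return [row[:] for row in top[-k:]] + [row[:] for row in bottom[:k]]
```

Each row of the grid across a vertical edge (or each column of the grid across a horizontal edge) then holds four pixels from either side of the boundary, matching the 1-D signal of Fig. 4a.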

Figure 4: Blocking artifact representation. (a) 1-D signal level of four pixels on either side of the block edge (b) pixel segment grid across the horizontal block boundary (c) pixel segment grid across the vertical block boundary

Fig. 5 depicts the proposed method’s flowchart, which further illustrates blocking artifacts and corner outlier detection and removal. Although the HEVC method works on variable block sizes, to reduce its complexity, HEVC generally detects artifacts along ( ) block only and enhances its overall processing time. However, blocking artifacts across some prediction units like (

) block only and enhances its overall processing time. However, blocking artifacts across some prediction units like ( ), (

), ( ) is ignored and reduces its efficiency. This paper is processing every prediction unit across the block edges using a (

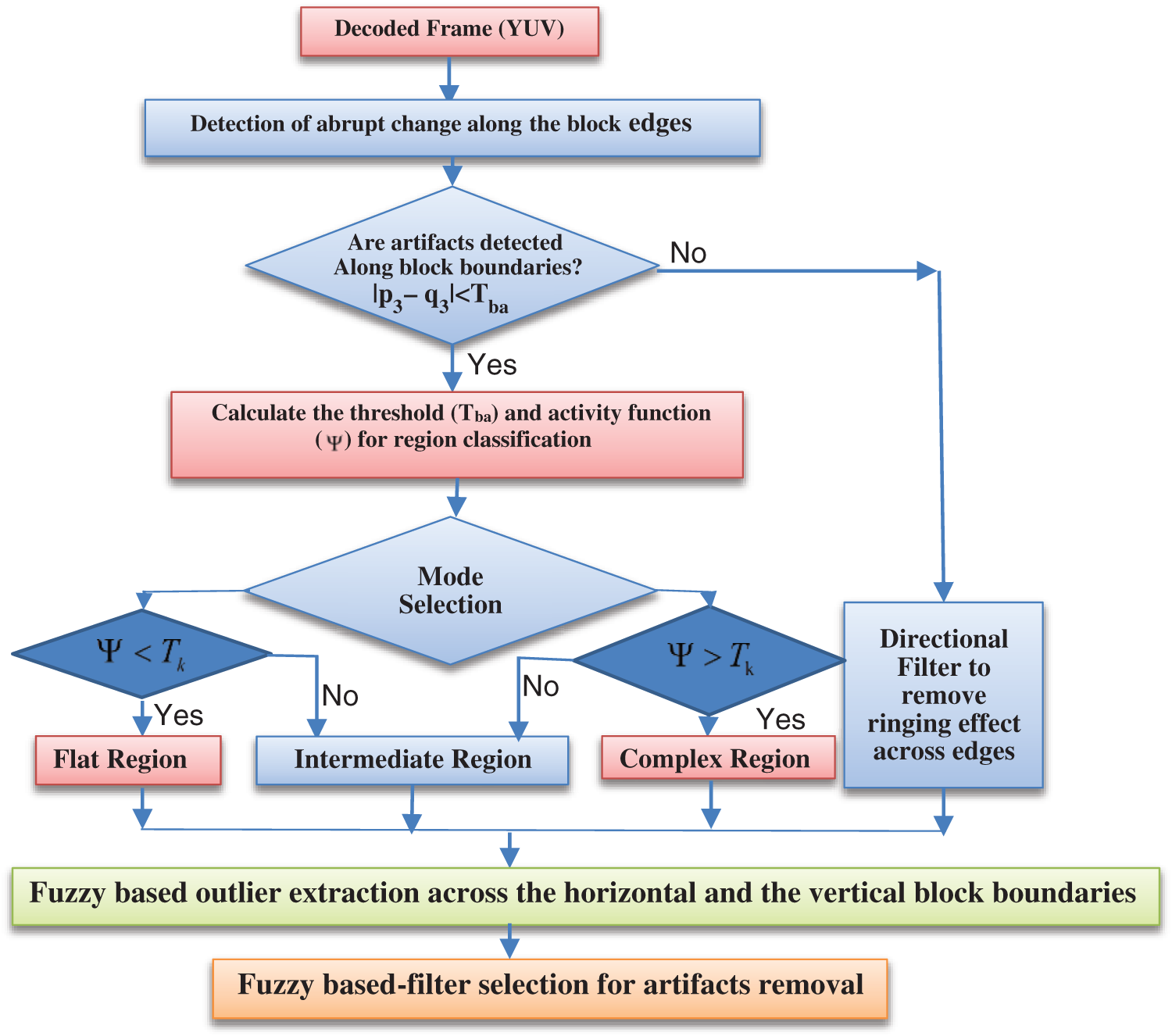

) is ignored and reduces its efficiency. This paper is processing every prediction unit across the block edges using a ( ) grid. Each row of the grid is processed independently using a horizontal and vertical interlaced scan pattern, as shown in Fig. 6.

) grid. Each row of the grid is processed independently using a horizontal and vertical interlaced scan pattern, as shown in Fig. 6.

Figure 5: Flow chart of the proposed method

Figure 6: HEVC horizontal as well as vertical scan pattern [23]. (a) Horizontal (b) vertical

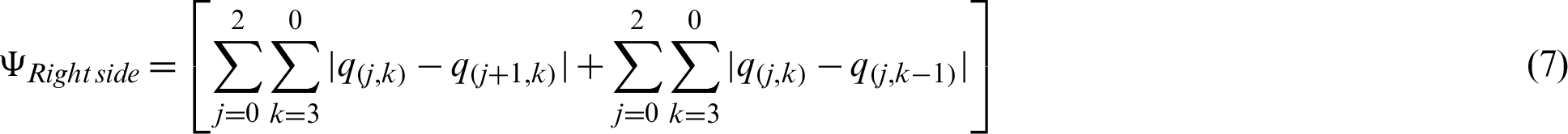

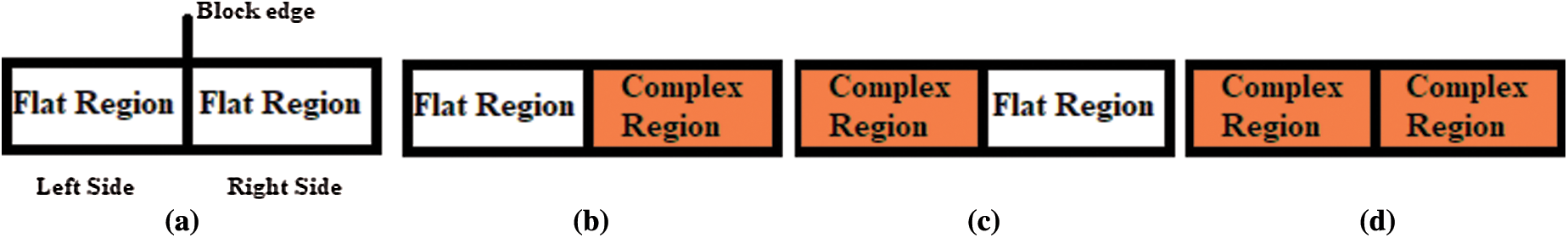

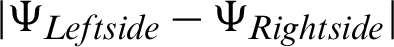

If there is an abrupt change in the pixel value across bilateral edges, then  should be greater than the threshold value (Tba). The threshold (Tba) value of the blocking artifact is set as

should be greater than the threshold value (Tba). The threshold (Tba) value of the blocking artifact is set as  for the flat region and

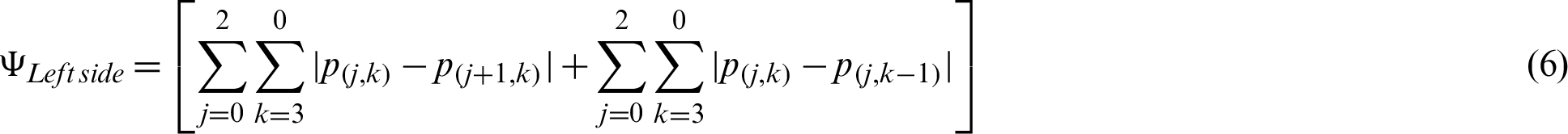

for the flat region and  for the complex region. The region complexity of Fig. 4a is calculated by Eqs. (5) and (6) using (

for the complex region. The region complexity of Fig. 4a is calculated by Eqs. (5) and (6) using ( ) grid. From Fig. 4c, the average gradient or boundary activity function of left-side horizontal block edge pixels are represented by

) grid. From Fig. 4c, the average gradient or boundary activity function of left-side horizontal block edge pixels are represented by  . Similarly, the average gradient or boundary activity function of right-side horizontal block edge pixels are represented by

. Similarly, the average gradient or boundary activity function of right-side horizontal block edge pixels are represented by  and is calculated as

and is calculated as

where Tp/f = 6, the same threshold used earlier to eliminate the quantization error. If conditions (5)–(8) are satisfied, the region is considered flat, as shown in Fig. 7a.
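The flat/complex decision can be sketched in Python under stated assumptions. Eqs. (5)–(8) are not reproduced here, so the activity measure below (mean absolute difference between consecutive pixels on one side of the edge) is an assumed stand-in for the boundary activity function; only the threshold value Tp/f = 6 comes from the text. The row layout `[p3, p2, p1, p0, q0, q1, q2, q3]` and all function names are illustrative.

```python
T_PF = 6  # threshold value stated in the text


def side_activity(pixels):
    """Mean absolute difference between consecutive pixels on one side of the edge."""
    diffs = [abs(a - b) for a, b in zip(pixels, pixels[1:])]
    return sum(diffs) / len(diffs)


def classify_row(row, threshold=T_PF):
    """Label an 8-pixel row [p3, p2, p1, p0, q0, q1, q2, q3] across a block edge."""
    left, right = row[:4], row[4:]
    left_flat = side_activity(left) < threshold
    right_flat = side_activity(right) < threshold
    if left_flat and right_flat:
        return "flat"
    if left_flat or right_flat:
        return "intermediate"  # one flat side and one complex side
    return "complex"
```

The three labels correspond to the flat, intermediate, and complex cases that the later filter selection distinguishes.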

Figure 7: Types of artifacts (a) flat region (b), (c) intermediate regions (d) complex region

For the intermediate regions, as shown in Figs. 7b and 7c, the corresponding measure should be small enough to satisfy at least one of the conditions stated in Eqs. (9) and (10).

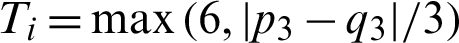

In the particular case where a small object appears across the edges of a complex region and significantly disturbs the frame’s perceptual quality, as shown in Fig. 7d, Eq. (12) is evaluated. We consider pixels p2 and q2 instead of p3 and q3 to avoid disturbing natural edges along horizontal, diagonal, and vertical block boundaries.

If the difference between p3 and q3 is very large, the intermediate threshold (Ti) changes adaptively, as shown in Eq. (12).

Such that

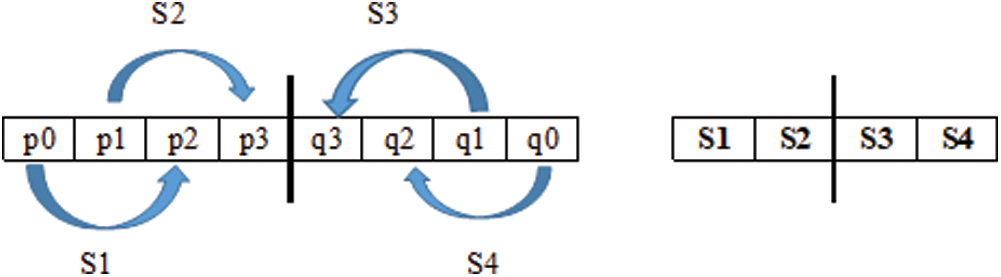

For each block, the absolute difference between S1 and S2, as well as that between S3 and S4, should be greater than Ti, as shown in Fig. 8. If Eq. (12) is satisfied for more than one row of the grid, the complete block is considered a blocking edge.

Figure 8: Representation of small object edges

2.3 Detection of Corner Outliers

Fig. 9 illustrates corner outliers across block boundaries; for simplicity, only a few pixels of each block are shown [5–10]. Four types of corner outliers are distinguished (upper 45°, upper 135°, lower 45°, and lower 135°). The amount of data lost during compression is governed by the quantization parameter (QP). The pixels (a4), (b4), (c4), and (d4) represent the center pixels, as shown in Fig. 9f.

• Region ‘A’ (upper 135°)

Figure 9: Corner outlier classification, representation, and compensation: (a) upper 45° (b) upper 135° (c) lower 45° (d) lower 135° (e) block arrangement (f) arrangement of pixels in blocks (g) corner outlier compensation by coordination of corner point

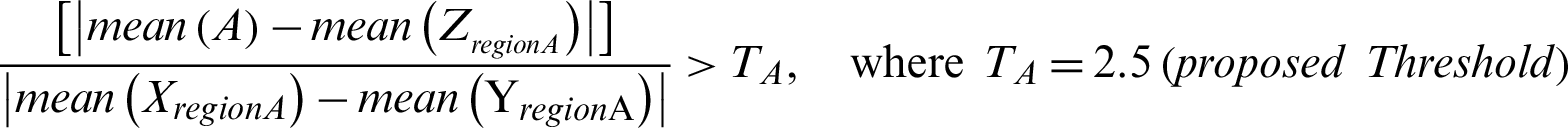

If region ‘A’ has different frequency components from all the other regions (B, C, and D), as shown in Fig. 9b, then ‘A’ contains a corner outlier. It should satisfy Eq. (13), which joins three conditions with a logical AND (&&). In addition, if Region A is smooth:

ASmooth < min(4, max{corner outlier pixel in all regions} × QP)

Similarly, we can detect corner outliers in regions B, C, and D, as explained in Region A.

2.4 Removal of Corner Outliers

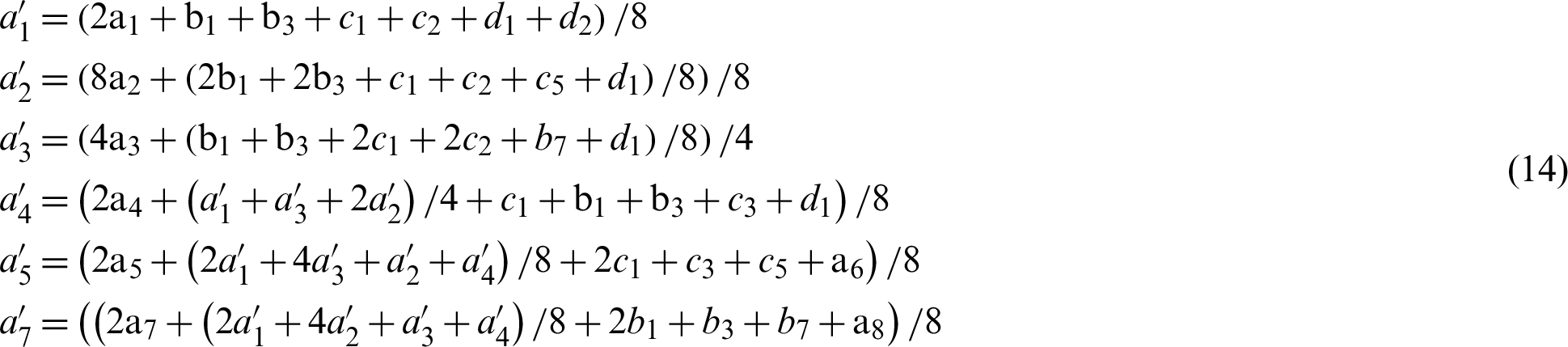

Pixels with a high frequency compared with their neighboring pixels cause stair-shaped discontinuities and are updated using Eq. (14).

A similar approach is used for regions B, C, and D, taking Fig. 9f into consideration.

2.5 Adaptive Filtering for Blocking Artifacts

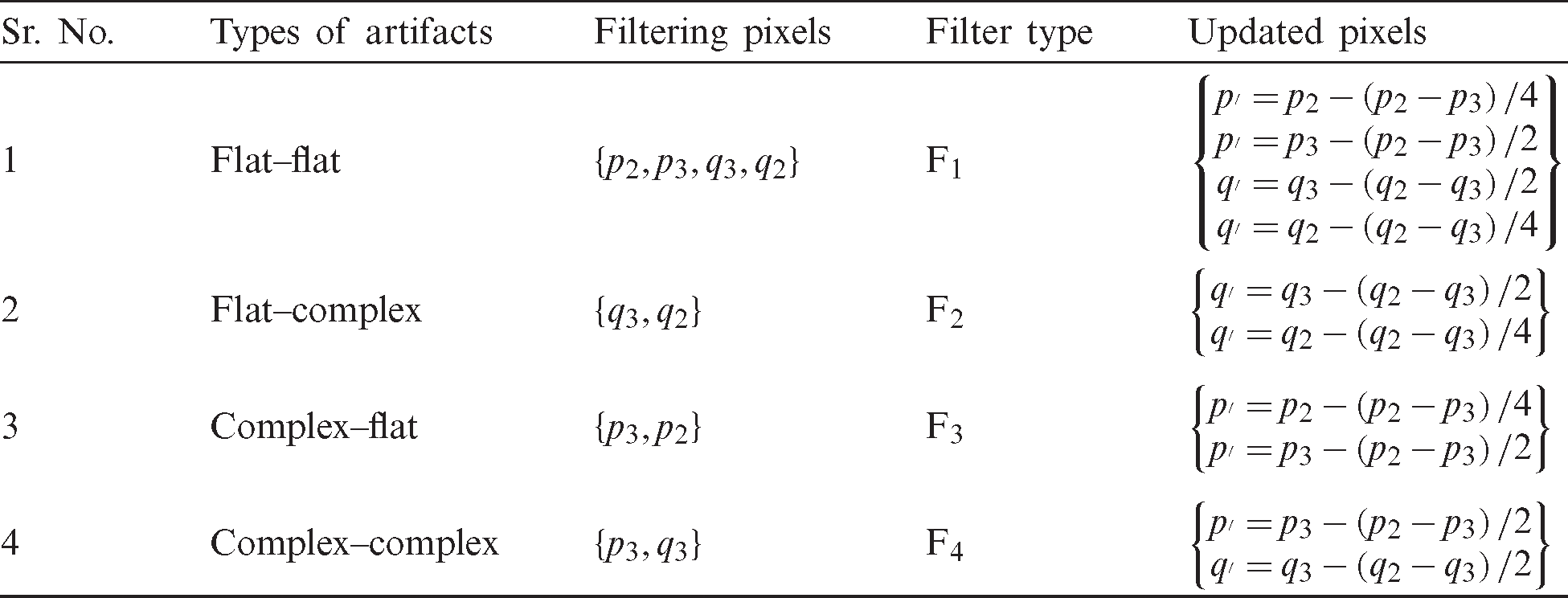

The filters designed for the various types of blocking artifacts are applied from top to bottom, starting from the pixels nearest the block boundary on both sides of the block edge, as shown in Fig. 6. The filtering process must use the updated pixels determined by Eq. (14). A strong filter is used in a flat region, a weak filter is sufficient for a complex region, and a medium filter works well in the intermediate region. Tab. 1 lists the final selection of pixels and the corresponding filter type.

Table 1: Filter pixel selection and corresponding adaptive filter design

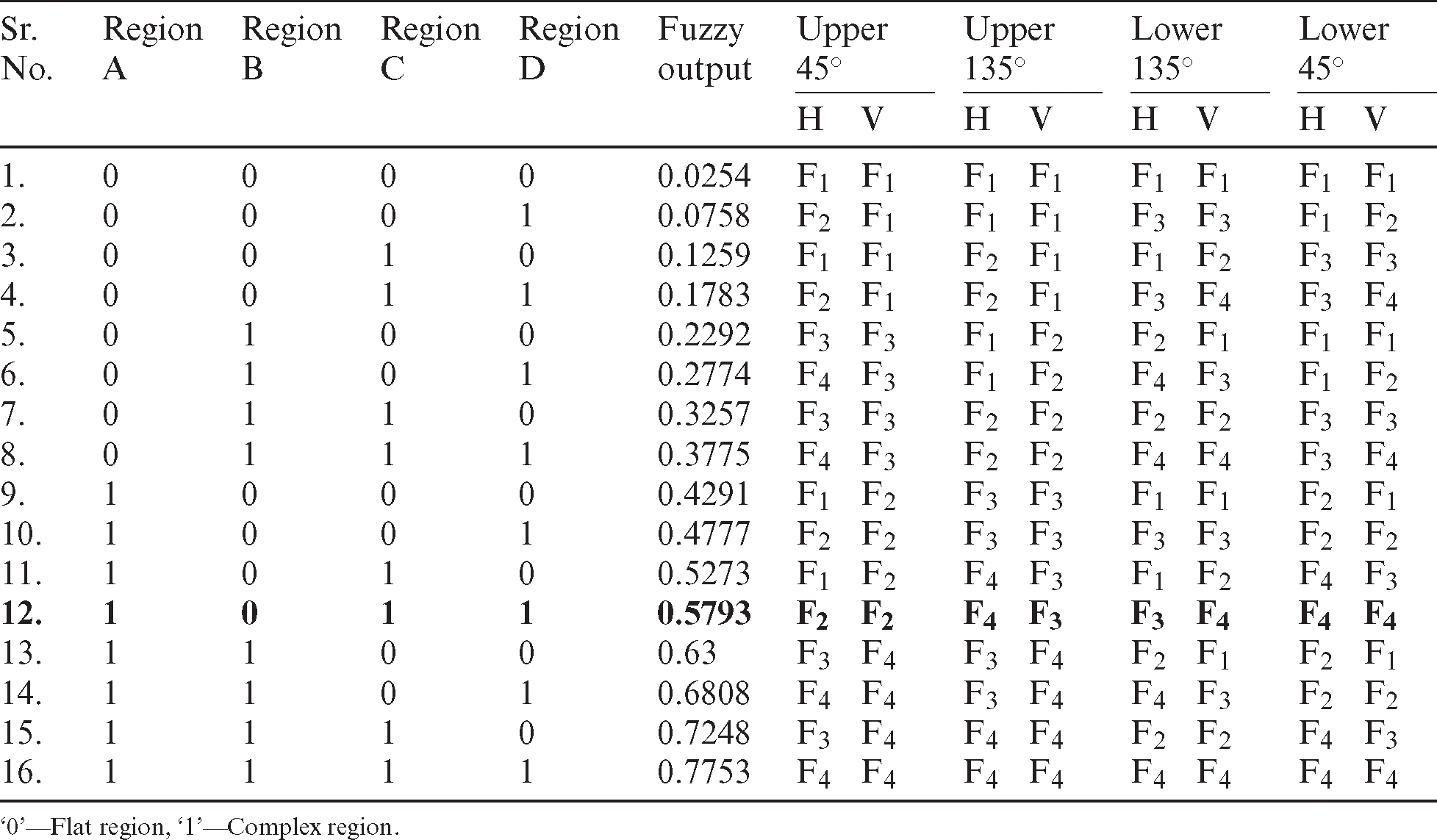

2.6 Fuzzy Filter Selection for Horizontal as well as Vertical Block Edges

This subsection proposes an efficient yet straightforward and fast fuzzy filter selection model. It removes blocking artifacts in HEVC video sequences and reduces corner outliers, which enhances the overall perceptual quality of HEVC video frames from QCIF to FHD (1080p) at frame rates of 30 and 60 fps. The fuzzy approach also applies to videos captured in real time, and it selects filters efficiently at a low computational cost. Tab. 2 explains the detailed working of the fuzzy filter selection. The proposed work uses the Mamdani fuzzy filter selection model, whose inputs are the blocking artifacts across regions A, B, C, and D and the corner outlier representations for upper 45°, upper 135°, lower 45°, and lower 135°, for horizontal as well as vertical block boundaries simultaneously. The output of the proposed fuzzy system varies between 0 and 1, and the filters are selected accordingly.

Table 2: Fuzzy filter selection approach for different regions and corner outliers across the horizontal (H) and the vertical (V) block edges

Tab. 2 is explained by considering row 12 of the table, in which ABCD is 1011. As shown in Fig. 9a, for upper 45°, pixel B is considered. The pixels adjacent to B are A and D, as seen in Fig. 9e. The pixel combination across the horizontal block boundary is B (0) and D (1), so the region is flat-complex and filter F2 is selected, as shown in Tab. 1. The pixel combination across the vertical block boundary is B (0) and A (1), again a flat-complex region, so the fuzzy selector selects filter F2. Similarly, for upper 135°, the pixel to be considered is A, with adjacent pixels B and C, as depicted in Fig. 9b. Across the horizontal boundary, A and C are both 1 (complex), so filter F4 is used. Across the vertical boundary, A (1) and B (0) form a complex-flat region, so filter F3 is used. For lower 45°, consider pixel D with neighboring pixels B and C. The horizontal block boundary pixels D (1) and B (0) make the region complex-flat, so filter F3 is applied, whereas across the vertical boundary, D (1) and C (1) make the region complex-complex, so filter F4 is used. For lower 135°, pixel C is considered, with A across its horizontal boundary and D across its vertical boundary. In this case, both the horizontal pair C (1) and A (1) and the vertical pair C (1) and D (1) form a complex-complex combination, which selects the F4 filter. The rest of the table is read in the same way.
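The row-12 walkthrough can be reproduced with a small Python lookup. This is a crisp table lookup, not the Mamdani fuzzy system itself: it only mirrors how a row of Tab. 2 is read. The block layout (A and B on top, C and D below) is inferred from the neighbor pairs named in the walkthrough, the mapping of flat-flat to F1 is an assumption (the text only states F2, F3, and F4), and the names `NEIGHBORS`, `FILTERS`, and `select_filters` are hypothetical.

```python
# Block layout implied by the walkthrough: A | B on top, C | D below.
# For each corner block: (neighbor across the horizontal boundary,
#                         neighbor across the vertical boundary).
NEIGHBORS = {
    "B": ("D", "A"),
    "A": ("C", "B"),
    "D": ("B", "C"),
    "C": ("A", "D"),
}

# (corner state, neighbor state) -> filter; 0 = flat, 1 = complex.
# F2, F3 and F4 follow the walkthrough; F1 for flat-flat is an assumption.
FILTERS = {(0, 0): "F1", (0, 1): "F2", (1, 0): "F3", (1, 1): "F4"}


def select_filters(abcd):
    """Map an ABCD bit string such as '1011' to (horizontal, vertical) filters per corner."""
    state = dict(zip("ABCD", (int(bit) for bit in abcd)))
    result = {}
    for corner, (h_nb, v_nb) in NEIGHBORS.items():
        result[corner] = (
            FILTERS[(state[corner], state[h_nb])],
            FILTERS[(state[corner], state[v_nb])],
        )
    return result
```

For example, `select_filters("1011")["A"]` evaluates to `("F4", "F3")`, matching the horizontal and vertical choices described for pixel A.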

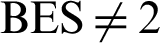

The proposed work also considers the chroma component, which is usually not considered in the HEVC deblocking filters across block edges. The Block Edge Strength (BES) value depends on two significant factors, namely the difference between the motion vectors of the surrounding regions and the prediction modes. The block strength generally varies between 0 and 2. For the luma component, strong and light filters are selected according to the BES value.

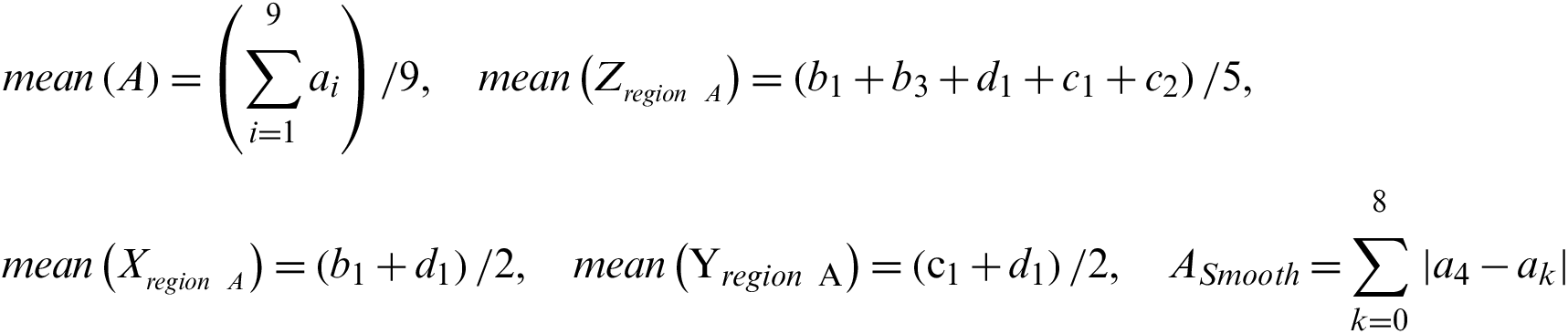

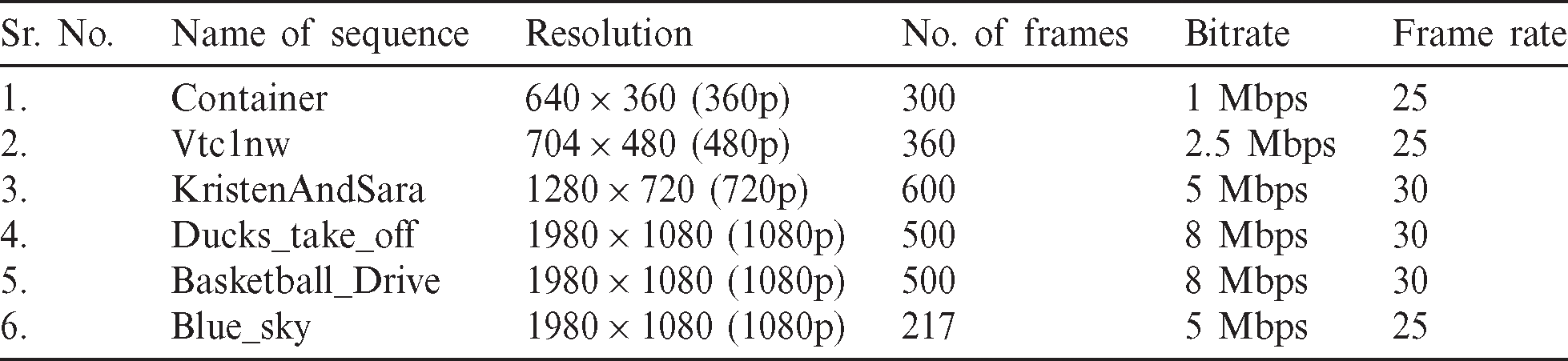

On the other hand, when the video is coded at a low bitrate and contains complex motion data, most of the B and P frames are processed by I-frames, so a regular deblocking filter is not advisable. As the transform unit and prediction unit sizes of HEVC videos increase, blocking artifacts across edges become more prominent in the chroma components than in the luma, as shown in Fig. 10.

Figure 10: Basketball drive frame after compression, with chroma and luma component representation

Fig. 10 clearly shows that the blocking artifacts mostly arise from the chroma components. The difference between the V component’s values on the two sides of the block edges dominates that of the other components.

Therefore, the chroma component is equally essential in detecting and removing blocking artifacts. The proposed method applies to both the luma and the chroma components of the pixel grid of HEVC-coded video sequences.

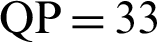

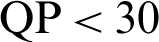

The following test sequences are used to validate our results and to compare them with the standard HEVC decoded frame and with the methods of Kim et al. [17], Chen et al. [22], Norkin et al. [23], Karimzadeh et al. [25], and Wang et al. [28]. The details of the test sequences are shown in Tab. 3.

Table 3: Test sequences used in this paper

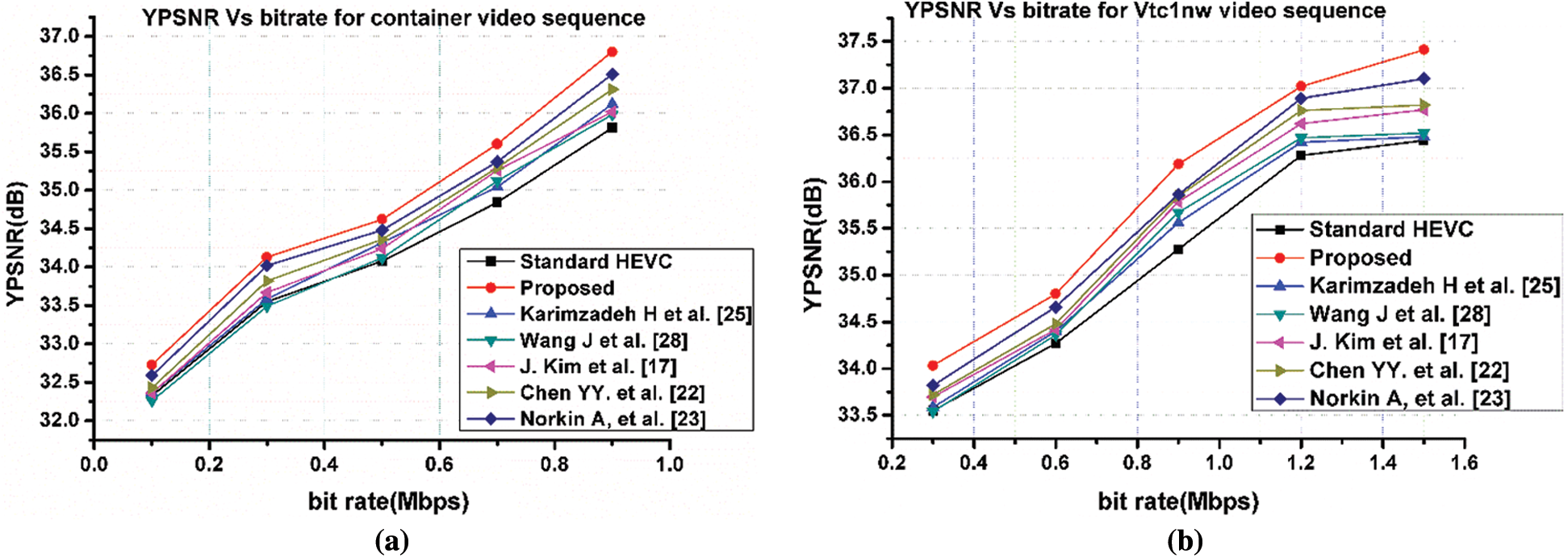

Our method uses the same HEVC coding that Kim et al. [17], Chen et al. [22], Norkin et al. [23], Karimzadeh et al. [25], and Wang et al. [28] used, i.e., the standard HEVC frame decoding approach along with adaptive loop filtering. In fast videos with very little information (Blue_sky, Basketball_Drive), blocking artifacts are rarely visible at low compression levels. Likewise, in videos with intricate details (KristenAndSara, Duck_take_off) coded at a fast speed, blocking artifacts disappear at such levels. Therefore, we have analyzed all the results above these levels.

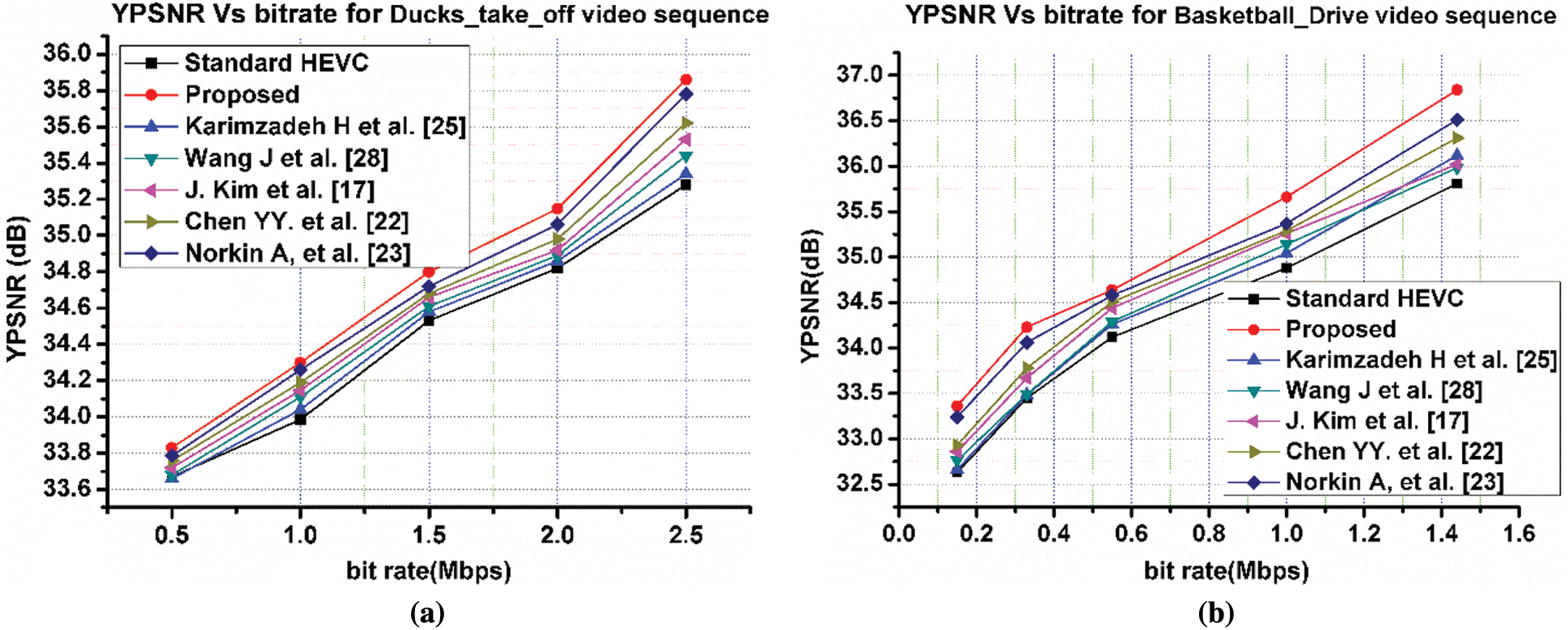

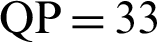

Fig. 11 depicts the luma peak signal-to-noise ratio (YPSNR) vs. bitrate for the standard definition video sequences container and Vtc1nw. Both video sequences are encoded using HEVC at QP = 33 and analyzed using different post-processing techniques and the proposed method. For most of the methods, namely Kim et al. [17], Chen et al. [22], Norkin et al. [23], Karimzadeh et al. [25], and Wang et al. [28], the average YPSNR varies almost linearly with bitrate for low-resolution videos. As the video resolution increases, the performance of the different methods varies significantly. The proposed method remains comparatively linear and is almost independent of the resolution of the video sequence owing to its adaptive nature. Moreover, the fuzzy system provides efficient filtering of blocking artifacts, quantization noise, and corner outliers while preserving the perceptual quality of the reconstructed videos. The proposed method outperforms existing techniques in terms of the objective metric YPSNR: it improves on the standard HEVC encoding method by 0.483–0.97 dB across bitrates. With respect to existing techniques, the average YPSNR improvement is 0.5–0.86 dB for SD video sequences and 0.32–0.47 dB for HD sequences.
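For reference, the YPSNR metric can be computed with the short Python sketch below. The paper does not spell out its formula, so this assumes the standard PSNR definition applied to 8-bit luma planes (peak value 255); the function name and the nested-list input format are illustrative.

```python
import math


def ypsnr(reference, decoded, peak=255):
    """PSNR in dB between two equally sized luma planes given as nested lists."""
    squared_error = 0
    count = 0
    for ref_row, dec_row in zip(reference, decoded):
        for ref_px, dec_px in zip(ref_row, dec_row):
            squared_error += (ref_px - dec_px) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical planes
    return 10 * math.log10(peak ** 2 / mse)
```

A gain such as those reported above is then the difference between the YPSNR of the filtered frame and that of the reference decoder output, both measured against the original frame.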

Figure 11: YPSNR vs. bitrate for standard definition (SD) video sequences (a) container (b) Vtc1nw
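The YPSNR values plotted above follow the usual peak signal-to-noise ratio definition applied to the luma plane only. The following is a minimal sketch of that computation, assuming 8-bit luma samples (peak value 255) and frames stored as lists of rows; the function name `y_psnr` and the sample frames are hypothetical and are not taken from the authors' MATLAB code.

```python
import math


def y_psnr(ref, rec, peak=255):
    """Return the PSNR in dB between two luma frames of identical size.

    ref, rec: lists of rows, each row a list of luma sample values.
    peak: maximum sample value (255 is an assumption for 8-bit video).
    """
    if len(ref) != len(rec) or any(len(a) != len(b) for a, b in zip(ref, rec)):
        raise ValueError("frames must have identical dimensions")
    n = sum(len(row) for row in ref)
    if n == 0:
        raise ValueError("frames must not be empty")
    # Sum of squared errors over all luma samples.
    sse = sum((a - b) ** 2
              for row_ref, row_rec in zip(ref, rec)
              for a, b in zip(row_ref, row_rec))
    if sse == 0:
        return math.inf  # identical frames
    mse = sse / n
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 2x2 luma blocks, for illustration only.
original = [[100, 100], [100, 100]]
decoded = [[101, 99], [100, 100]]
print(round(y_psnr(original, decoded), 2))
```

Averaging this value over all frames of a sequence would give one point of a YPSNR vs. bitrate curve for a given encoder setting.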

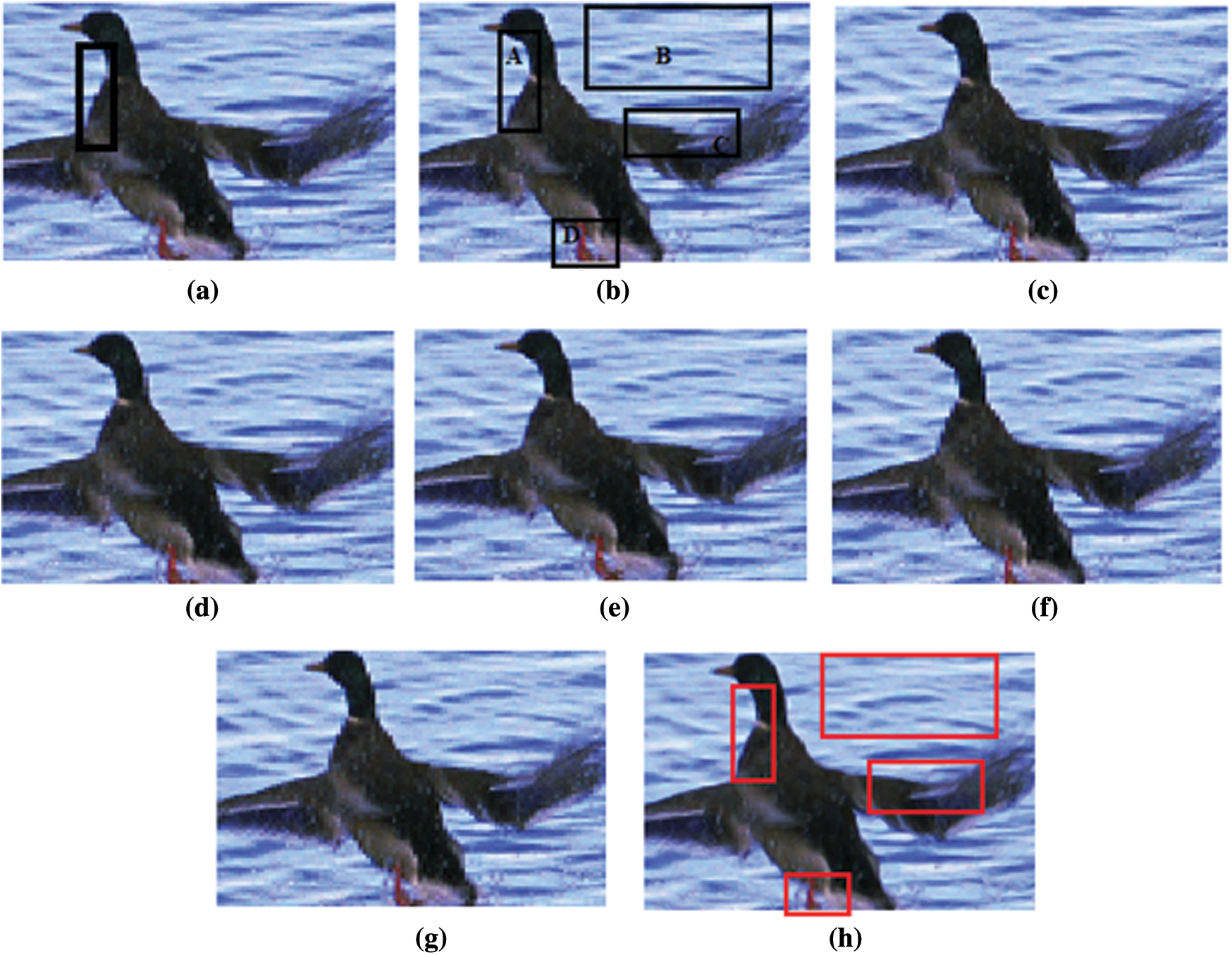

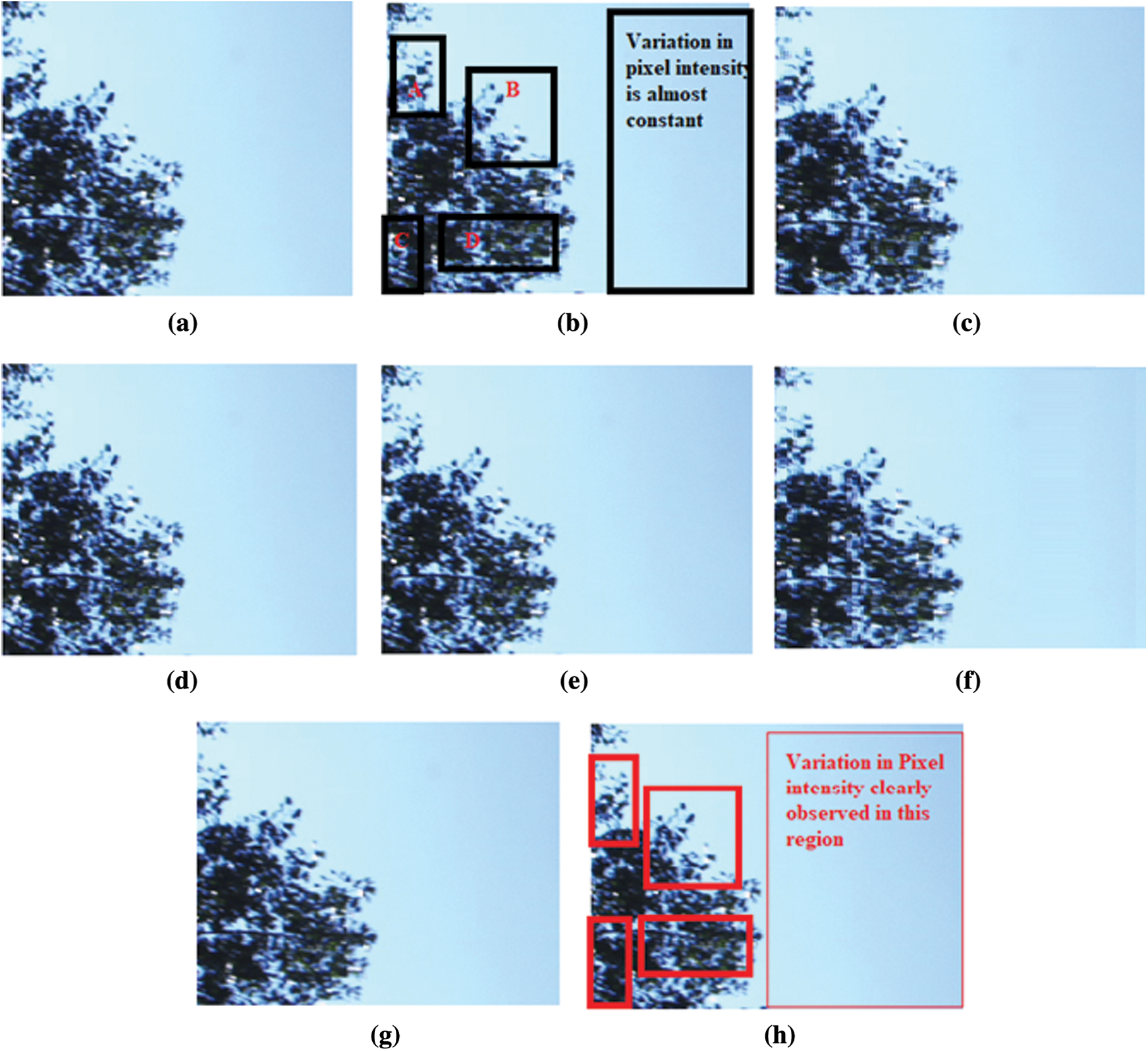

Fig. 12 depicts the objective analysis of high definition video sequences with resolution 1080p. Furthermore, in Fig. 13b, even for complex video sequences like ducks_take_off, the flying movement of ducks or ripples in water cause lots of artifacts when coded with HEVC standard at  . We have marked different areas A, B, C, and D, where we can easily observe artifacts and degradation in the image/frame’s perceptual quality.

Figure 12: YPSNR vs. bitrate of FHD video (a) ducks_take_off (b) basketball_drive

Figure 13: Subjective analysis of duck_take_off (1080p) frame compressed at  (a) frame no. 289 (b) standard decoding using HEVC (c) Wang et al. [28] (d) Kim et al. [17] (e) Chen et al. [22] (f) Norkin et al. [23] (g) Karimzadeh et al. [25] (h) proposed deblocking technique

Fig. 13h shows that the proposed method removes these artifacts in regions A, B, C, and D and completely removes corner outliers, which is possible because we consider the luma and chroma components simultaneously. It improves the overall quality of the frame compared to the other methods shown in Figs. 13c–13g. Fig. 13h also depicts the result of the quantization error removal stage of the proposed method in region A, corner outlier removal in region B, and blocking artifact removal in regions C and D, as marked by the red rectangles. Fig. 13h thus clearly shows the proposed method’s correctness compared to the existing methods.

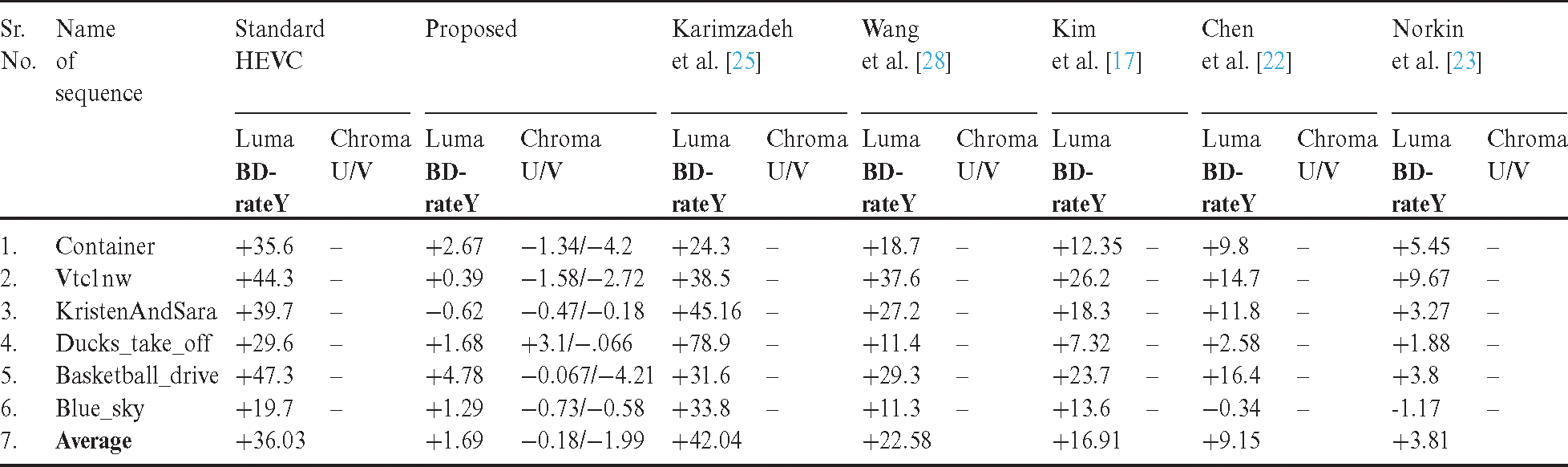

Fig. 14 presents the luma component analysis of the various methods and compares their objective quality using the YPSNR metric for the KristenAndSara and Blue_sky 1080p video sequences. These sequences do not contain much complex data, and the performance of the proposed method is exponential. The proposed method shows promising results while reconstructing these video sequences, as shown in Tab. 4. Because chroma is taken into account, blocking artifacts and corner outliers are alleviated more efficiently by the proposed method than by the other methods discussed in this paper, which generally focus on the luma component only. Tab. 4 reports BD_rate for the luma and chroma components of the proposed method and compares the results with BD_rate(Y), i.e., the luma component, of the other methods, as those methods do not consider chroma components. We can observe from Tab. 4 that Norkin et al. [23] reach a BD_rate (luma) of +3.81%, within an acceptable range. A BD_rate value close to zero or negative indicates better performance of a given system. The proposed method achieves a BD_rate of +1.69% for the luma component and −0.18% (U) and −1.99% (V) for the chroma components, which shows the proposed technique’s efficiency.

Figure 14: Objective analysis (YPSNR vs. bitrate) of FHD video (a) KristenAndSara (b) Blue_sky

Table 4: Luma/Chroma analysis using BD_rate (%age) of test sequences
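To make the BD_rate figures easier to interpret, the sketch below computes an approximate Bjontegaard delta rate between two rate-distortion curves. It is a simplified variant under stated assumptions: it uses piecewise-linear interpolation of log-bitrate over PSNR with a trapezoid rule, not the cubic polynomial fit of the original Bjontegaard method, and the function names and sample rate-distortion points are hypothetical rather than values from Tab. 4.

```python
import math


def _interp(x, xs, ys):
    """Piecewise-linear interpolation; xs must be strictly increasing."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x outside interpolation range")


def _mean_log_rate(points, lo, hi, steps=1000):
    """Average log10(bitrate) over the PSNR interval [lo, hi]."""
    pts = sorted(points, key=lambda p: p[1])  # sort by PSNR
    psnr = [p[1] for p in pts]
    log_rate = [math.log10(p[0]) for p in pts]
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        weight = 0.5 if i in (0, steps) else 1.0  # trapezoid end weights
        x = min(lo + i * h, hi)  # guard against floating-point overshoot
        total += weight * _interp(x, psnr, log_rate)
    return total * h / (hi - lo)


def bd_rate(anchor, test):
    """Approximate BD-rate (%) of `test` relative to `anchor`.

    Each argument is a list of (bitrate, psnr_db) pairs with distinct PSNRs.
    A negative result means `test` needs fewer bits for the same quality.
    """
    lo = max(min(p[1] for p in anchor), min(p[1] for p in test))
    hi = min(max(p[1] for p in anchor), max(p[1] for p in test))
    if hi <= lo:
        raise ValueError("PSNR ranges do not overlap")
    diff = _mean_log_rate(test, lo, hi) - _mean_log_rate(anchor, lo, hi)
    return (10 ** diff - 1) * 100.0


# Hypothetical rate-distortion points (bitrate in kbps, YPSNR in dB).
anchor_curve = [(100, 30.0), (200, 33.0), (400, 36.0), (800, 39.0)]
test_curve = [(r * 1.1, q) for r, q in anchor_curve]  # 10% more bits
print(round(bd_rate(anchor_curve, test_curve), 2))
```

In this toy case the test curve spends 10% more bits at every quality level, so the result is a positive BD-rate of about 10%; a codec that saves bits at equal YPSNR would yield a negative value, in line with the reading of Tab. 4 given above.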

Fig. 15 shows the subjective analysis of Blue_sky (1080p). The Blue_sky video sequence is an exceptionally smooth sequence without any intricate details in its frames. The proposed method shows excellent subjective output in terms of the perceptual visual quality of the frame. Fig. 15h shows more details in the marked regions (A, B, C, D) and outperforms the other existing methods discussed in this paper.

Figure 15: Subjective analysis of Blue_sky (1080p) frame compressed at  (a) frame no. 167 (b) standard decoding using HEVC (c) Wang et al. [28] (d) Kim et al. [17] (e) Chen et al. [22] (f) Norkin et al. [23] (g) Karimzadeh et al. [25] (h) proposed deblocking technique

• Time Complexity of Decoder

To evaluate the decoder complexity of the proposed method, we compare our results with the HEVC standard (HM16.9). The mathematical relation for time complexity is given by

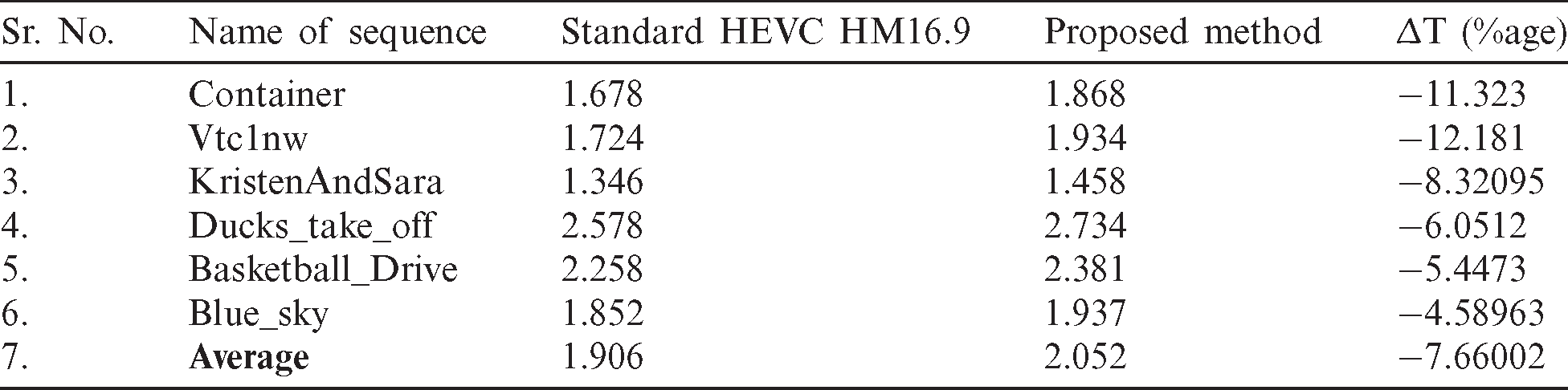

To evaluate the system’s computational complexity, we use different video sequences, and the comparison is shown in Tab. 5.

Table 5: Time complexity of the proposed technique with standard HEVC for different test sequences

From Tab. 5, it is clear that the proposed method’s decoding time is quite close to that of the HEVC standard decoder even though it additionally removes blocking artifacts and corner outliers, which indicates that the proposed method adds little computational complexity.
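A decoder-time comparison of this kind is commonly expressed as the relative change in decoding time with respect to the reference decoder. The sketch below assumes that definition; the exact relation used by the authors is given only as an image in the source, so the formula, the function name `decoding_time_change`, and the timings are all assumptions for illustration.

```python
def decoding_time_change(t_proposed, t_reference):
    """Relative decoding-time change in percent (assumed definition).

    t_proposed:  decoding time of the proposed decoder, in seconds.
    t_reference: decoding time of the reference decoder, in seconds.
    A value near zero means the two decoders are about equally fast.
    """
    if t_reference <= 0:
        raise ValueError("reference time must be positive")
    return (t_proposed - t_reference) / t_reference * 100.0


# Hypothetical timings, not measurements from Tab. 5.
print(round(decoding_time_change(10.5, 10.0), 2))
```

Under this definition, a small positive percentage would correspond to the observation that the proposed decoder runs only slightly slower than the reference decoder.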

High Efficiency Video Coding is a powerful video compression technique, specifically when HD videos are under consideration. The in-loop filter methods discussed in the literature reduce blocking artifacts to some extent. However, when complex data is coded at a fast speed, certain blocking artifacts still exist, which significantly degrade the visual quality of videos coded at a low bitrate. We proposed a four-step fuzzy-based adaptive deblocking filter selection method that efficiently removes blocking artifacts and corner outliers for various video sequences. The proposed method first removes the unwanted quantization noise, which disturbs the natural edges of the video frames and makes it difficult to select the desired filter. Next, we introduce a novel technique to detect blocking artifacts as well as corner outliers. Finally, a fuzzy filter selection technique is applied to remove blocking artifacts and corner outliers simultaneously in all the regions, namely (Flat–Flat), (Flat–Complex), (Complex–Flat) and (Complex–Complex). The proposed deblocking method is applied to both luma and chroma components. The experiments in the results section clearly show the proposed technique’s efficiency in terms of objective and subjective analysis of diverse input videos, with tolerable visual quality as judged using HVS. We achieved an average improvement in YPSNR between 0.5 and 0.86 dB for SD videos and between 0.32 and 0.47 dB for HD videos with respect to existing techniques. The proposed method achieves a BD_rate of +1.69% for the luma component and −0.18% (U) and −1.99% (V) for the chroma components, which shows the proposed technique’s efficiency. The low computational complexity of the proposed method provides a platform for real-time applications in the near future.

Funding Statement: The authors received no specific funding for this study.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. T. Wiegand, G. J. Sullivan, G. Bjontegaard and A. Luthra. (2003). “Overview of the H.264/AVC video coding standard,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 13, no. 7, pp. 560–576.

2. O. I. Khalaf and B. M. Sabbar. (2019). “An overview on wireless sensor networks and finding optimal location of nodes,” Periodicals of Engineering and Natural Sciences, vol. 7, no. 3, pp. 1096–1101.

3. B. Mallik and A. S. Akbari. (2016). “HEVC based multi-view video codec using frame interleaving technique,” in 2016 IEEE Proc. DeSE, pp. 181–185.

4. G. J. Sullivan, J. R. Ohm, W. J. Han and T. Wiegand. (2012). “Overview of high-efficiency video coding (HEVC) standard,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 22, no. 12, pp. 1649–1668.

5. O. I. Khalaf and G. M. Abdulsahib. (2019). “Frequency estimation by the method of minimum mean squared error and P-value distributed in the wireless sensor network,” Journal of Information Science and Engineering, vol. 35, no. 5, pp. 1099–1112.

6. Y. W. Huang, C. M. Fu, C. Y. Chen, C. Y. Tsai, Y. Gao et al. (2010). “Improved deblocking filter,” in ITU-T SG16 WP3 and ISO/IEC JTC1/SC29/WG11 Document JCTVC-C142. Guangzhou, China, pp. 7–15.

7. M. M. Hannuksela, D. Rusanovskyy, W. Su, L. Chen, R. Li et al. (2013). “Multiview-video-plus-depth coding based on the advanced video coding standard,” IEEE Transactions on Image Processing, vol. 22, no. 9, pp. 3449–3458.

8. A. S. Dias, M. Siekmann, S. Bosse, H. Schwarz, D. Marpe et al. (2015). “Rate-distortion optimised quantisation for HEVC using spatial just noticeable distortion,” in 2015 23rd European Signal Processing Conf., Nice, France, pp. 110–114.

9. T. Tang and L. Li. (2016). “Adaptive deblocking method for low bitrate coded HEVC video,” Journal of Visual Communication and Image Representation, vol. 38, pp. 721–734.

10. L. Trzcianowski. (2015). “Subjective assessment for standard television sequences and videotoms H.264/AVC video coding standard,” Journal of Telecommunications and Information Technology, vol. 1, pp. 37–41.

11. Y. Chen and V. Sze. (2014). “A deeply pipelined CABAC decoder for HEVC supporting level 6.2 high-tier applications,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 25, no. 5, pp. 856–868.

12. G. He, D. Zhou, Y. Li, Z. Chen, T. Zhang et al. (2015). “High-throughput power-efficient VLSI architecture of fractional motion estimation for ultra-HD HEVC video encoding,” IEEE Transactions on Very Large Scale Integration (VLSI) Systems, vol. 23, no. 12, pp. 3138–3142.

13. Y. J. Ahn, T. J. Hwang, D. G. Sim and W. J. Han. (2014). “Implementation of fast HEVC encoder based on SIMD and data-level parallelism,” EURASIP Journal on Image and Video Processing, vol. 16, pp. 1–19.

14. S. G. Blasi, I. Zupancic and E. Izquierdo. (2014). “Fast motion estimation discarding low impact fractional blocks,” in IEEE-Proc. of 22nd European Signal Processing Conf., Lisbon, Portugal, pp. 201–215.

15. S. Hu, L. Jin and H. Wang. (2017). “Objective video quality assessment based on perceptually weighted mean squared error,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 27, no. 9, pp. 1844–1855.

16. O. I. Khalaf, G. M. Abdulsahib and B. M. Sabbar. (2020). “Optimization of wireless sensor network coverage using the bee algorithm,” Journal of Information Science and Engineering, vol. 36, no. 2, pp. 377–386.

17. J. Kim and J. Jeong. (2007). “Adaptive deblocking technique for mobile video,” IEEE Transactions on Consumer Electronics, vol. 53, no. 4, pp. 1694–1702.

18. S. Singh, V. Kumar and H. K. Verma. (2007). “Reduction of blocking artifacts in JPEG compressed images,” Digital Signal Processing, vol. 17, no. 1, pp. 225–243.

19. O. I. Khalaf, G. M. Abdulsahib, H. D. Kasmaei and K. A. Ogudo. (2020). “A new algorithm on application of blockchain technology in live stream video transmissions and telecommunications,” International Journal of e-Collaboration, vol. 1, no. 16, pp. 16–32.

20. W. Hu, J. Xue, X. Lan and N. Zheng. (2009). “Local patch based regularized least squares model for compression artifacts removal,” IEEE Transactions on Consumer Electronics, vol. 55, no. 4, pp. 2057–2065.

21. E. H. Yang, C. Sun and J. Meng. (2014). “Quantization table design revisited for image/video coding,” IEEE Transactions on Image Processing, vol. 23, no. 11, pp. 4799–4811.

22. Y. Y. Chen, Y. W. Chang and W. C. Yen. (2008). “Design a deblocking filter with three separate modes in DCT-based coding,” Journal of Visual Communication and Image Representation, vol. 19, no. 4, pp. 231–244.

23. A. Norkin, G. Bjontegaard, A. Fuldseth, M. Narroschke, M. Ikeda et al. (2012). “HEVC deblocking filter,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 22, no. 12, pp. 1746–1754.

24. B. Mallik, A. S. Akbari and A. L. Kor. (2019). “Mixed-resolution HEVC based multi-view video codec for low bitrate transmission,” Multimedia Tools and Applications, vol. 78, no. 6, pp. 6701–6720.

25. H. Karimzadeh and M. Ramezanpour. (2016). “An efficient deblocking filter algorithm for reduction of blocking artifacts in HEVC standard,” International Journal of Image, Graphics and Signal Processing, vol. 8, no. 11, pp. 18–24.

26. J. Lee and J. Jeong. (2020). “Deblocking performance analysis of weak filter on versatile video coding,” Electronics Letters, vol. 56, no. 6, pp. 289–290.

27. A. D. Salman, O. I. Khalaf and G. M. Abdulsahib. (2019). “An adaptive intelligent alarm system for wireless sensor network,” Indonesian Journal of Electrical Engineering and Computer Science, vol. 15, no. 1, pp. 142–147.

28. J. Wang, Z. Wu, G. Jeon and J. Jeong. (2015). “An efficient spatial deblocking of images with DCT compression,” Digital Signal Processing, vol. 42, pp. 80–88.

29. K. A. Ogudo, D. M. J. Nestor, O. I. Khalaf and H. D. Kasmaei. (2019). “A device performance and data analytics concept for smartphones, IoT services and machine-type communication in cellular networks,” Symmetry, vol. 11, pp. 593.

30. K. Misra, A. Segall and F. Bossen. (2019). “Tools for video coding beyond HEVC: Flexible partitioning, motion vector coding, luma adaptive quantization, and improved deblocking,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 30, no. 5, pp. 1361–1373.

31. O. I. Khalaf, G. M. Abdulsahib and M. Sadik. (2018). “A modified algorithm for improving lifetime WSN,” Journal of Engineering and Applied Sciences, vol. 13, pp. 9277–9282.

32. W. Shen, Y. Fan, Y. Bai, L. Huang, Q. Shang et al. (2016). “A combined deblocking filter and SAO hardware architecture for HEVC,” IEEE Transactions on Multimedia, vol. 18, no. 6, pp. 1022–1033.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |