DOI:10.32604/cmc.2020.012945

Computers, Materials & Continua

Article

Deep Learning-Based Classification of Fruit Diseases: An Application for Precision Agriculture

1Department of Computer Science, HITEC University, Taxila, 47040, Pakistan

2Department of Computer Science, COMSATS University Islamabad, Wah Campus, Wah Cantt, 47080, Pakistan

3Department of Computer Science, COMSATS University Islamabad, Attock Campus, Attock, Pakistan

4Department of Computer Science and Engineering, Soonchunhyang University, Asan, South Korea

5Department of Mathematics and Computer Science, Faculty of Science, Beirut Arab University, Beirut, Lebanon

*Corresponding Author: Seifedine Kadry. Email: skadry@gmail.com; ynam@sch.ac.kr

Received: 18 July 2020; Accepted: 05 October 2020

Abstract: Agriculture is essential for the economy, and plant disease must be minimized. Early recognition of problems is important, but manual inspection is slow, error-prone, and has high manpower and time requirements. Artificial intelligence can be used to extract fruit color, shape, or texture data, thus aiding the detection of infections. Recently, convolutional neural network (CNN) techniques have shown great success in image classification tasks. CNNs extract more detailed features and can work efficiently with large datasets. In this work, we used a combined deep neural network and contour feature-based approach to classify fruits and their diseases. A fine-tuned, pretrained deep learning model (VGG19) was retrained using a plant dataset, from which useful features were extracted. Next, contour features were extracted using the pyramid histogram of oriented gradient (PHOG) and combined with the deep features using a serial-based approach. During the fusion process, a few pieces of redundant information were added in the form of features. Then, a “relevance-based” optimization technique was used to select the best features from the fused vector for the final classifications. With the use of multiple classifiers, the proposed method achieved an accuracy of up to 99.6%, which is superior to previous techniques. Moreover, our approach is useful for 5G technology, cloud computing, and the Internet of Things (IoT).

Keywords: Agriculture; deep learning; feature selection; feature fusion; fruit classification

Early plant disease detection is economically vital. State-of-the-art computer vision and machine learning can recognize and identify infections at a very early stage, thus reducing disease spread and increasing cure rates. Some disease symptoms appear too late for effective action to be taken, but in other cases diseases are apparent at a very early stage [1]. Apples and cherries, among other fruits, can develop powdery mildew, rust, and black rot that are often identified during manual inspection, or in the laboratory. Manual inspection is slow, error-prone, and has high manpower and time requirements. Artificial intelligence can be used to extract fruit color, shape, or texture data, thus aiding the detection of infections. Over 374 million fruits are harvested annually; in 2011, 50–55% of these were grown in Asia [2]. Fruit appearance affects customer decisions, and varies by the point of sale (hawkers, shop, malls, etc.). Most fruits are packed by hand, which can affect the quality and imposes a manpower cost. Automated techniques must be fast, work effectively in poor light, meet various client requirements, and be able to handle new fruit varieties. Low-quality fruit can be sent to clients who prefer it for juicing and pickling; the rotten parts are removed and the remainder is sliced or pressed. High-quality fruits command a good price. A robot that can distinguish among fruit types and quality is required.

Many automated strategies based on digital image processing are used to check fruit type and quality [3]. Computer vision techniques can be used to detect shapes, and have applications including breast cancer classification [4], object detection [5,6], and medical image evaluation [7,8]. Few machine-learning techniques have been used to analyze fruit type, ripeness, or disease, but could aid automatic harvesting, fruit-picking, and fruit identification.

Mobile devices place unprecedented demand on computing, edge computing, and the Internet of Things (IoT). In 2015, monthly data consumption was 3.7 exabytes; content providers (CPs) such as Netflix, Vimeo, and YouTube predict that the figure will reach 30.6 exabytes by the end of 2020 [9]. Capacity shortage and data consumption will become significant issues for mobile network operators (MNOs). The small cells (SCs) of 5G systems have many academic and industrial applications. The SCs are backhaul-connected to the core networks of mobile operators. Mobile edge caching avoids possible bottlenecks. The primary purpose of caching is to ensure fast and adaptable linkages between limited backhaul and radio connections [10]. 5G-enabled mobile edge computing (MEC) efficiently handles many SCs and their associated cache memories. In an MEC, services required by increasing numbers of users run inside a radio access network (RAN). MECs not only improve user experience, but also reduce the time taken to transfer data from a server to an end-user. Increases in cache memory, the number of CPs, and SC spatial density enhance the performance of mobile edge caching systems [11].

In a store, a clerk identifies and prices fruit. Standardized barcode tags are used for packed items; typically, fruit is not packed and thus not barcoded. Fruit classification is important for automated juicing and pricing. Fruits must first be identified, and then graded in terms of quality. In Chandwani et al. [12], robotic harvesting reduced labor costs and improved fruit quality. Robots are increasingly used for harvesting. Automated fruit classification is less well-established, and its efficacy is hotly debated; there is a need to rapidly identify and price fruit, which can be achieved by cashiers [13]. Automated fruit-packing and grading, via machine-learning algorithms, has attracted much interest [14]. The typical features extracted are color, texture, and shape. Trained classifiers may be restricted to unacceptably simple classifications.

A plant disease classification technique was proposed using pretrained GoogleNet and AlexNet models to extract deep features. The models were tested using the publicly available Plant Village dataset; the classification accuracy was 99.3% [15]. Another model was used to assess rice fields, based on high-definition images captured by unmanned aerial vehicles (UAVs). A deep convolutional neural network (DCNN) was used to extract features and the data were labeled by multiple classifiers. The overall classification accuracy was 72% [16]. In another study, a hybrid classification/detection model evaluated apple diseases. This method extracted and combined color, shape, and texture data and fed them to a Support Vector Machine (SVM) classifier; the maximum accuracy was 95.94% [17]. Tomato diseases were detected and classified using a robust DCNN model with a metaheuristic architecture including a single-shot multi-box detector, a region-based fast CNN, a regional CNN (which was even faster), and a data augmentation module; the classification accuracy was 85.9% [18].

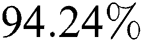

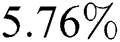

Given the enormous increase in fruit production, an accurate classification method is vital; manpower demands must be reduced and efficiency increased [19]. Machine-learning approaches, which employ computational and mathematical models trained on previous data, are essential and are now replacing manual methods in many industries. Previously, gas sensors, infrared imaging, and liquid chromatography devices were used to scan fruit. However, these methods require skilled operators and are expensive. Image-based fruit classification avoids these drawbacks, and requires only a digital camera and efficient model. The neural network (NN)-based artificial bee colony (ABC) algorithm was used to classify four types of fruits, with an accuracy of 76.39% [20]. A hybrid technique using an enhanced fitness-scaled chaotic ABC, feedforward NNs, and an optimized genetic algorithm had an accuracy of 87.9% [21]. Two-step classification extracted shape, color, and texture data that were fed to a fast NN trained by a biogeography-based optimization (BBO) algorithm. Principal component analysis (PCA) had a classification accuracy of 89.11% when applied to 18 different fruits [22]. Fruit texture, shape, and color data in Hue, Saturation, and Value (HSV) color space were used to evaluate a dataset including 20 different fruit types [23]. A custom dataset of 1,653 images of 18 fruit types (classes) was used to validate a method that extracted and classified FRFE features using a single-layer feedforward NN. The accuracy was 88.99% after five-fold cross-validation [24].

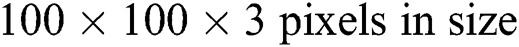

A CNN with featured parameter optimization was used to classify the 971 fruit images of the FIDS30 dataset; the model had eight layers [25]. Fruit images were classified based on edge distances and zoning features, combined via discrete Fourier transform; the highest accuracy was achieved by the neighbor-joining technique [26]. A shape-based method of fruit classification independent of fruit appearance was proposed. The technique was validated for seven fruits (210 images) and the overall accuracy was 88–95% [27]. Another technique used an SVM classifier to classify fruit based on color and textural features; a classification accuracy of 95.3% was achieved [28]. One real-time classification system trained an artificial NN using over 1,900 images; the accuracy was 97.4% [29]. The main issues for classifiers are as follows: i) extracting the features that are most useful for classifying fruit types and diseases; ii) eliminating irrelevant features that compromise accuracy; and iii) reducing the training time.

This article is structured as follows. The objectives and contributions are detailed in Section 2 and the classification technique is described in Section 3. Experimental results validating the technique are presented in Section 4, and Section 5 provides the discussion and future research directions.

2 Objectives and Contributions

To improve image-based fruit classification, we reviewed previous studies and identified the following issues: (i) previous approaches used traditional manual feature extraction; and (ii) manually extracted features were often evaluated using only local datasets with few fruit classes and images. A CNN extracts more detailed features, and can work efficiently with large datasets. CNNs exhibited extraordinary object recognition capabilities during the ImageNet competition [30]. CNNs can be applied to medical imaging, object detection, agricultural imaging, and networking.

The multi-dimensionality, size, and complexity of big data produced by the IoT pose major challenges. The combined use of 5G technology, the IoT, and cloud computing can provide rapid and accurate results while minimizing resource utilization. A smart device can make intelligent real-time decisions. In this study, we applied a novel real-time method to classify mobile images using MEC and 5G technology. The classification was performed by CNNs based on hand-crafted features. To accelerate the classification process, we used a minimum redundancy maximum relevance (mRMR)-based feature selection technique to ensure the inclusion of relevant features only. No previous system has utilized 5G technology and cloud computing to classify real-time data with such high accuracy.

3 The Fruit Classification Framework

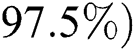

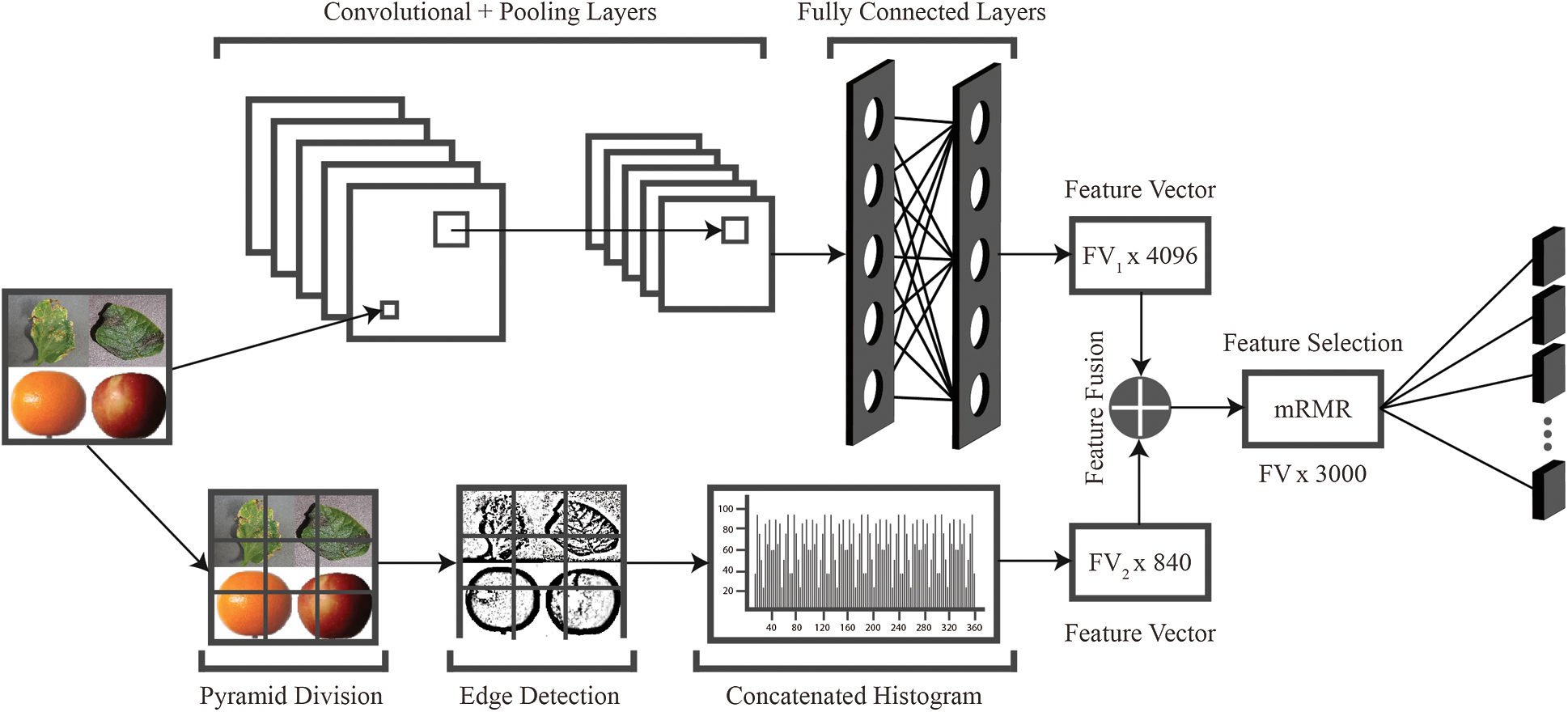

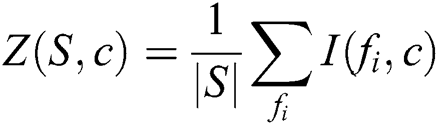

Our CNNs have infrastructure, resource engine, and data engine layers. The infrastructure layer contains a device sublayer, a “local edge cache unit”, MEC orchestrators, and RANs. The MECs and RANs distribute the resources among end-users. Network resources are optimized for efficient communication by the resource engine layer. Self-organizing networks (SONs), network function virtualization (NFV), and software-defined networks (SDNs) are used for optimization. Deep learning (DL) and ML techniques can be used to preprocess data, extract features, and classify objects. The overall structure of our model is illustrated in Fig. 1.

Figure 1: Cloud-based fruit classification framework based on the 5G network

The device layer includes mobile phones with cameras that capture images or video frames that are saved locally and then transferred via 5G to the local edge cache unit. The 5G network contains multiple SCs connected to separate cache memory units, which are reserved by CPs for MNOs. The MNOs operate in a multi-tenant environment and store a variety of content at different prices. All available cache memory slots are allocated to different over-the-top (OTT) CPs. A cache memory slot is reserved by a single optimized CP; different types of content can be stored based on the particular SC spatial distribution. This helps to identify the minimum missed cache rate as a function of the purchased slot. The order in which different types of content are sent over a 5G network is determined by demand and CP reputation (including the number of CPs with similar reputations).

The resource engine layer optimizes resources by learning about network parameters such as communication quality, data flow, and network type. This is critical for green communication when big data must be transferred but bandwidth is limited. The resource engine layer also influences 5G reliability, scalability, and flexibility. Network operations in data centers that control the cloud infrastructure are linked to the hardware via the NFV. Intelligent programmable networks, such as SDNs, provide consistent decoupling of network intellect, ultimately enabling the system to request and modulate network services. The data engine layer uses 5G technology to transfer images to data centers. Human-like intelligent systems, ML, and DL algorithms learn useful features and make detection/classification decisions. Large images can be resized to smaller dimensions, which enables MNOs to rapidly transfer them to cache memory. The image with the highest demand is prioritized in the cache memory of the adjacent SC so that it can be effectively and quickly moved to the data engine for further processing.

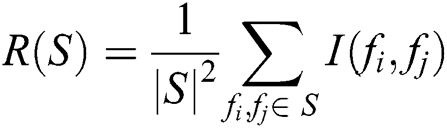

The data engine has a hybrid DL architecture for detecting and classifying fruits. The architecture uses the pretrained VGG19 model and the pyramid histogram of oriented gradient (PHOG) feature extraction technique. Both methods extract features of images in the public Fruit-360 dataset. The extracted features are serially combined, and the most important features are selected using the mRMR technique. A flow chart of the fruit classification process is shown in Fig. 2.

Figure 2: Hybrid deep learning model for fruit classification

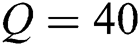

3.1.1 Pyramid Histogram of Oriented Gradient

PHOG represents edges in vector form [31]. The two principal techniques are pyramid representation and the use of histograms of oriented gradients (HOGs) [32]. PHOG features are extracted based on the local features and spatial layouts of fruits. Spatial features are obtained by dividing images into sub-regions at various resolutions; local features are extracted via edge detection in each sub-region. Fig. 3 shows how PHOG extracts the features of different sub-regions at various resolutions after performing edge detection, dividing the image into pyramidal levels, computing HOGs for all grids in all levels and, finally, concatenating all HOG feature vectors into a single feature vector.

The overall efficiency of the detector depends on the size of the Gaussian kernel; an increase in size decreases the sensitivity of the detector to noise. Edge directions may vary, so a Canny edge detector (CED) uses four different filters to detect diagonal, vertical, and horizontal edges. The shapes and structures of different objects are characterized using feature descriptors (i.e., the HOGs of multiple domains). Edge directions and local intensity gradients are often used to describe object shape and structure. HOGs have been used to describe the human body (particularly the face); when applied for fruit classification in this study, the results were outstanding. The normalized colors and gamma values of input images increase search efficiency. For fruit detection, a small sliding window probes the input image, region-by-region. The resultant histograms at all grid levels are concatenated to yield spatial information. We divided the PHOG descriptor into orientation bins over a fixed angular range and set the remaining descriptor parameters to give one feature vector for each image.

Figure 3: Extraction of features by PHOG
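The PHOG steps described above (edge detection, pyramid subdivision, per-cell orientation histograms, and concatenation) can be illustrated with a minimal NumPy sketch. This is not the implementation used in this study: the thresholded gradient magnitude is a simple stand-in for the Canny detector, and the function name `phog` and the defaults `levels=2`, `bins=8`, and `angle_range=360.0` are assumptions chosen only for illustration.

```python
import numpy as np

def phog(image, levels=2, bins=8, angle_range=360.0):
    """Pyramid histogram of oriented gradients for a 2-D grayscale array."""
    img = np.asarray(image, dtype=float)
    # Image gradients along rows (y) and columns (x).
    gy, gx = np.gradient(img)
    magnitude = np.hypot(gx, gy)
    # Gradient orientation in degrees, folded into [0, angle_range).
    orientation = np.degrees(np.arctan2(gy, gx)) % angle_range
    # Assumed edge mask: pixels with above-average gradient strength
    # (a stand-in for the Canny edge detector described in the text).
    edges = magnitude > magnitude.mean()
    bin_index = np.minimum((orientation / angle_range * bins).astype(int), bins - 1)

    height, width = img.shape
    histograms = []
    for level in range(levels + 1):
        cells = 2 ** level  # the grid is cells x cells at this pyramid level
        row_edges = np.linspace(0, height, cells + 1).astype(int)
        col_edges = np.linspace(0, width, cells + 1).astype(int)
        for r in range(cells):
            for c in range(cells):
                rows = slice(row_edges[r], row_edges[r + 1])
                cols = slice(col_edges[c], col_edges[c + 1])
                # Edge pixels vote for their orientation bin, weighted by magnitude.
                weights = (magnitude[rows, cols] * edges[rows, cols]).ravel()
                hist = np.bincount(bin_index[rows, cols].ravel(),
                                   weights=weights, minlength=bins)
                histograms.append(hist)
    descriptor = np.concatenate(histograms)
    total = descriptor.sum()
    return descriptor / total if total > 0 else descriptor
```

With these assumed defaults, the descriptor has 8 × (1 + 4 + 16) = 168 entries; increasing `levels` or `bins` lengthens the vector accordingly.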

CNNs were initially developed to recognize handwriting. CNNs differ from other ML methods in that they accept preprocessed images rather than feature vectors. CNNs were inspired by human vision; they aim to understand the underlying nature of an image. A CNN includes convolutional, pooling, rectified linear unit (ReLU), and normalization layers, typically followed by fully connected layers (FCLs) and a softmax classifier layer as the output layer. Supervised CNN training uses comprehensive datasets such as ImageNet. The VGG19 model has 16 convolutional layers, 5 pooling layers, 3 FCLs [33], and 2 dropout layers with dropout probabilities of 0.5. VGG19 comprises a feature extractor and a classifier. The extractor converts an image to a vector that can be connected to various classifiers. VGG19 is accurate and efficient. Here, we used the penultimate fully connected “fc7” layer to extract the deep features.
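The layer counts quoted above can be checked against the standard published VGG19 configuration. The sketch below walks through that configuration and reports the tensor size that reaches the fully connected layers. The 224 × 224 input size and the layer names fc6 to fc8 follow the standard ImageNet version of the model and are assumptions here, not values taken from this article.

```python
# Standard VGG19 configuration: integers are the output channels of 3x3
# convolutions, "M" marks a 2x2 max-pooling layer that halves each side.
VGG19_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
                512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]
FC_SIZES = [4096, 4096, 1000]  # fc6, fc7, fc8 (ImageNet classification head)

def summarize_vgg19(input_size=224):
    """Count layers and track the feature-map size through the extractor."""
    conv_layers = sum(1 for item in VGG19_CONFIG if item != "M")
    pool_layers = VGG19_CONFIG.count("M")
    side, channels = input_size, 3
    for item in VGG19_CONFIG:
        if item == "M":
            side //= 2          # pooling halves the spatial resolution
        else:
            channels = item     # padded 3x3 convolution keeps the spatial size
    return {
        "conv_layers": conv_layers,
        "pool_layers": pool_layers,
        "fc_layers": len(FC_SIZES),
        "flattened_size": side * side * channels,
        "fc7_features": FC_SIZES[1],
    }

summary = summarize_vgg19()
```

Under these assumptions, an image is reduced to a 7 × 7 × 512 tensor before the fully connected layers, and the fc7 activations form a 4,096-dimensional vector per image.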

3.1.3 Fine-Tuning the VGG19 for Analysis of the Fruit-360 Dataset

Transfer learning (TL) is vital for smaller datasets with limited numbers of samples or classes [34]. In such cases, pretrained models serve as feature extractors. CNN fine-tuning yielded better results than generic feature extraction. The VGG19 model was trained on an ImageNet dataset with 1,000 different classes. As the Fruit-360 dataset contains 95 classes (fruit types), the final softmax layer is updated by replacing 1,000 with 95. However, this forces the network to train using arbitrary weights. In TL, the learning rate for the softmax layer is higher, because this layer must learn new features quickly. The VGG19 model was trained using a mini-batch size of 64, learning rate of 0.0001, weight decay of 0.005, and a momentum of 0.8. The softmax layer contains 95 target classes; a Gaussian distribution with a standard deviation of 0.01 was used to adjust the weights of the last layer. The network was trained using 65,429 images over 7,500 iterations with a dropout rate of 0.5 (to reduce overfitting). After every 150 iterations, 1,000 images were used to validate the learning.
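To make the fine-tuning settings concrete, the sketch below initializes a new 95-way output layer and applies one stochastic gradient descent update with momentum and weight decay, using the hyperparameters quoted above. It is an illustration only: the exact update rule differs slightly between toolboxes, and the function names and the 4,096-wide input (the fc7 width of the standard VGG19) are assumptions.

```python
import numpy as np

# Hyperparameters quoted in the text.
LEARNING_RATE, MOMENTUM, WEIGHT_DECAY = 1e-4, 0.8, 0.005
NUM_CLASSES = 95
FC7_FEATURES = 4096  # assumed input width: fc7 of the standard VGG19

def init_softmax_head(rng):
    """New output layer: Gaussian weights (standard deviation 0.01), zero biases."""
    weights = rng.normal(0.0, 0.01, size=(FC7_FEATURES, NUM_CLASSES))
    return weights, np.zeros(NUM_CLASSES)

def sgd_momentum_step(weights, velocity, gradient):
    """One momentum update with L2 weight decay folded into the gradient."""
    velocity = MOMENTUM * velocity - LEARNING_RATE * (gradient + WEIGHT_DECAY * weights)
    return weights + velocity, velocity
```

At a mini-batch size of 64, the 7,500 iterations process 480,000 images, which is roughly 7.3 passes over the 65,429 images, with 50 validation checks (one every 150 iterations).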

3.1.4 Selecting and Combining Features

When PHOG and VGG19 are combined, their respective  and

and  feature vectors form the feature vector

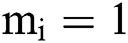

feature vectors form the feature vector  , which contains many redundant and negative values (noise) that degrade the model. We used the mRMR technique for feature selection [35] to identify shared data, correlations, and best-matched scores when selecting optimized features. A feature is “penalized” if it is redundant with respect to neighboring features. The relation between the feature set S for a specific class c is determined based on the mean scores of different features

, which contains many redundant and negative values (noise) that degrade the model. We used the mRMR technique for feature selection [35] to identify shared data, correlations, and best-matched scores when selecting optimized features. A feature is “penalized” if it is redundant with respect to neighboring features. The relation between the feature set S for a specific class c is determined based on the mean scores of different features  in the same S and

in the same S and  , as follows:

, as follows:

The redundancy of the features within S is given by the total mean value over all pairs of features in S, calculated as:

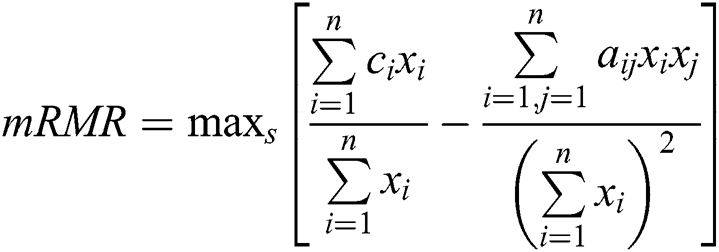

The mRMR technique maps the two different feature values described above as follows:

Suppose there is a full set of candidate features, each with an indicator that denotes whether the feature is present in, or absent from, the globally optimized feature set. The above then becomes:
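For reference, the standard mRMR formulation that the description above follows can be written as below. The notation (mutual information $I$, relevance $D$, redundancy $R$, membership indicator $x_i$ over $n$ candidate features, and the shorthand $c_i = I(f_i; c)$ and $a_{ij} = I(f_i; f_j)$) is the conventional one from the feature selection literature and is an assumption here, not a transcription of this article's own equations.

```latex
% Relevance of feature set S to class c
D(S, c) = \frac{1}{|S|} \sum_{f_i \in S} I(f_i; c)

% Redundancy among the features in S
R(S) = \frac{1}{|S|^{2}} \sum_{f_i, f_j \in S} I(f_i; f_j)

% mRMR criterion
\mathrm{mRMR} = \max_{S} \left[ D(S, c) - R(S) \right]

% Equivalent form with membership indicators x_i \in \{0, 1\}
\mathrm{mRMR} = \max_{x \in \{0,1\}^{n}}
  \left[ \frac{\sum_{i=1}^{n} c_i x_i}{\sum_{i=1}^{n} x_i}
       - \frac{\sum_{i,j=1}^{n} a_{ij} x_i x_j}{\left( \sum_{i=1}^{n} x_i \right)^{2}} \right]
```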

Theoretically optimal features (in terms of maximum dependency) can be approximated using the mRMR technique. The information shared by the selected features with neighboring features and with the classification variable is optimized. The mRMR technique creates a feature vector that has 3,000 unique features, which are fed to the classifiers.
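Serial fusion and mRMR selection can be sketched in a few lines of NumPy. The version below is a simplified, hypothetical illustration rather than the code used in this study: it estimates mutual information from equal-width histograms (`bins=8` is an arbitrary choice) and uses the common greedy approximation that adds one feature at a time, scoring each candidate by its relevance minus its mean redundancy with the features already selected.

```python
import numpy as np

def serial_fusion(deep_features, phog_features):
    """Serial fusion: concatenate the two feature blocks column-wise."""
    return np.hstack([deep_features, phog_features])

def discretize(column, bins):
    """Map a continuous column to integer codes 0..bins-1 (equal-width bins)."""
    edges = np.linspace(column.min(), column.max(), bins + 1)[1:-1]
    return np.digitize(column, edges)

def mutual_information(a, b):
    """Mutual information (in nats) of two integer-coded 1-D arrays."""
    joint = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(joint, (a, b), 1.0)  # contingency table of co-occurrences
    joint /= joint.sum()
    outer = joint.sum(axis=1, keepdims=True) * joint.sum(axis=0, keepdims=True)
    mask = joint > 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / outer[mask])))

def mrmr_select(features, labels, k, bins=8):
    """Greedy mRMR: return the indices of k features, best first."""
    n = features.shape[1]
    codes = np.column_stack([discretize(features[:, j], bins) for j in range(n)])
    classes = np.unique(labels, return_inverse=True)[1]
    relevance = np.array([mutual_information(codes[:, j], classes) for j in range(n)])
    selected = [int(np.argmax(relevance))]
    redundancy = np.zeros(n)
    while len(selected) < min(k, n):
        last = codes[:, selected[-1]]
        # Accumulate each candidate's redundancy with the newest selection.
        redundancy += np.array([mutual_information(codes[:, j], last) for j in range(n)])
        score = relevance - redundancy / len(selected)
        score[selected] = -np.inf  # never pick the same feature twice
        selected.append(int(np.argmax(score)))
    return selected
```

Because the returned indices are ordered from best to worst, keeping the first k entries corresponds to taking the leading features of the sorted vector, as described in the text.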

4.1 Datasets and Experimental Setup

The Fruit-360 dataset [36] contains 65,429 images of 95 different classes. The dataset is separated into two parts: a training set of 48,905 images and a testing set of 16,421 images. For the DCNN models, the minimum number of (random) images per class was set to 300. Ten-fold cross-validation and seven classification methods were used to test the performance of our method, measured in terms of sensitivity, precision, area under the curve, the false negative rate (FNR), and accuracy.
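As a minimal sketch (not the MATLAB code used in this study), these measures can be computed from a multi-class confusion matrix as shown below. Macro averaging over classes and taking the FNR as the complement of the mean sensitivity are assumptions; the function name is hypothetical.

```python
import numpy as np

def classification_metrics(confusion):
    """Metrics in percent from a confusion matrix (rows: true, columns: predicted)."""
    confusion = np.asarray(confusion, dtype=float)
    true_positive = np.diag(confusion)
    sensitivity = true_positive / confusion.sum(axis=1)  # per-class recall
    precision = true_positive / confusion.sum(axis=0)    # per-class precision
    accuracy = true_positive.sum() / confusion.sum()
    return {
        "accuracy": 100 * accuracy,
        "sensitivity": 100 * sensitivity.mean(),
        "precision": 100 * precision.mean(),
        "fnr": 100 * (1 - sensitivity.mean()),  # false negative rate
    }

metrics = classification_metrics([[9, 1], [2, 8]])
```

For this toy two-class matrix, the accuracy and mean sensitivity are both 85%, so the FNR is 15%.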

The publicly available Plant Village dataset [37] was also used to test the model in terms of disease classification performance. The dataset contains several classes of leaf disease; we were interested in the apple black rot (ABR), apple scab (AS), apple rust (AR), apple healthy (AH), cherry powdery mildew (CPM), cherry healthy (CH), peach bacterial spot (PBS), and peach healthy (PH) classes. A total of 7,733 images were divided into training and testing datasets using the standard 70/30% split. The minimum number of (random) images per class was set to 360. To evaluate model performance, the accuracy, FNR rate, and training time were recorded for all seven classifiers. We used 10-fold cross-validation to measure performance. All simulations were performed in the MATLAB environment (2018a; MathWorks, Inc., Natick, MA, USA) using a PC with an i7 core, 16 GB of RAM and a 256 GB SSD.
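The 70/30% split and 10-fold cross-validation can be sketched with index arithmetic alone. The example below is illustrative rather than the procedure actually run in MATLAB; splitting within each class (stratification), the fixed random seeds, and the function names are assumptions.

```python
import numpy as np

def stratified_split(labels, train_fraction=0.7, seed=0):
    """Split sample indices into training and testing parts, class by class."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    train, test = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        cut = int(round(train_fraction * len(idx)))
        train.extend(idx[:cut])
        test.extend(idx[cut:])
    return np.array(train), np.array(test)

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (training, validation) index arrays for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        training = np.concatenate([folds[j] for j in range(k) if j != i])
        yield training, folds[i]
```

Each sample appears in exactly one validation fold, so averaging the per-fold scores gives a single performance estimate over all samples.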

4.2 Evaluation of the Fruit-360 Dataset

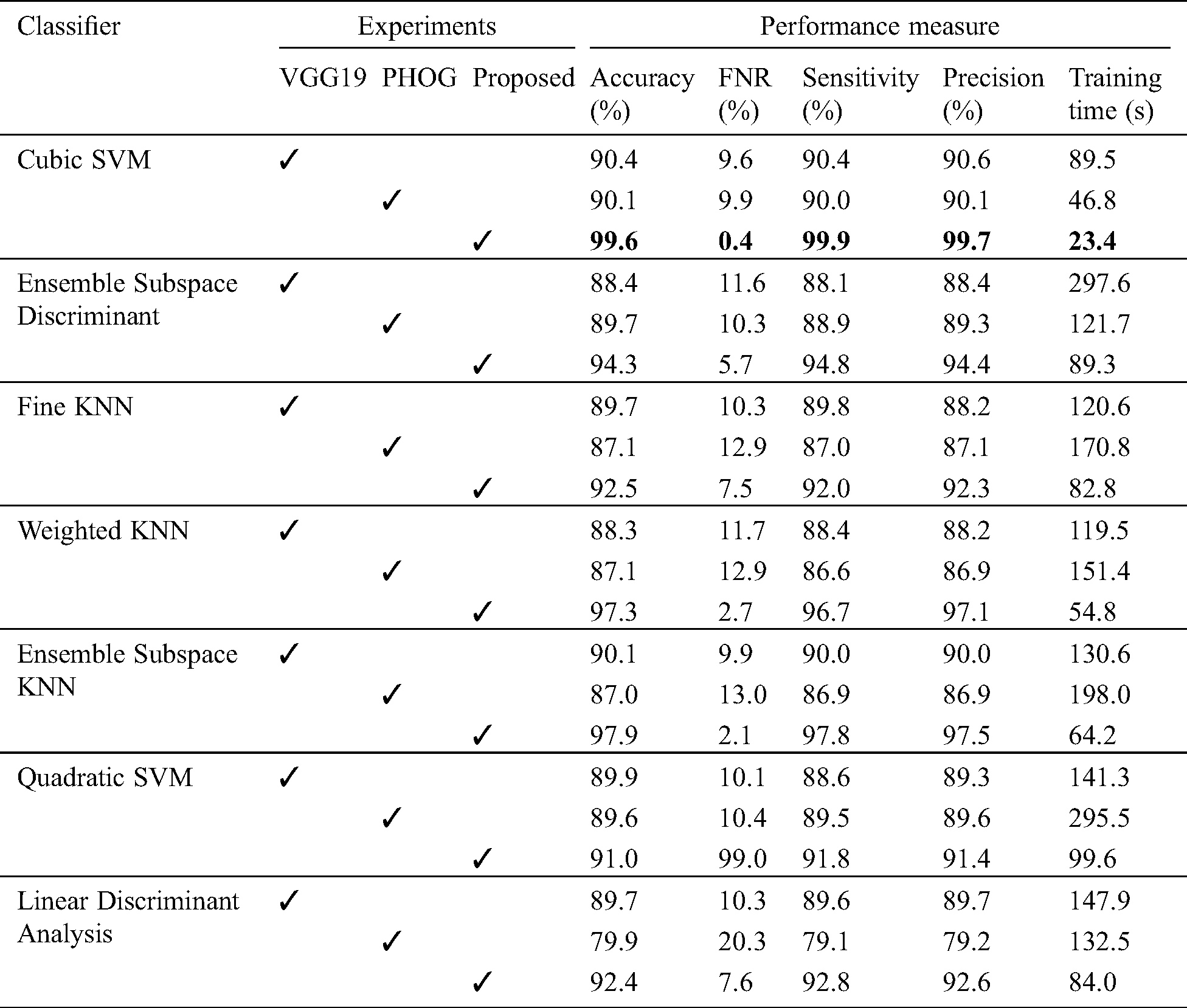

We evaluated the classification method via three experiments. In the first and second experiments, classification results were obtained by extracting features from the pretrained VGG19 DCNN model, and the hand-crafted PHOG features. In the third experiment, we employed a serial fusion strategy, which was then improved by selecting the best features. In the first experiment, the VGG19 activated the fully connected fc7 layer. Using the Cubic SVM, the highest classification accuracy was 90.4%. The FNR rate was 9.6%, the sensitivity was 90.4%, the precision was 90.6%, and the training time was 89.5 s. In the second experiment, PHOG features were extracted and the respective values were 90.1%, 9.9%, 90.0%, 90.1%, and 46.8 s. In the third experiment, we combined  VGG19 features with

VGG19 features with  PHOG features to form a feature vector with

PHOG features to form a feature vector with  features, which was then optimized via mRMR and sorted so that the best features were at the start. A total of

features, which was then optimized via mRMR and sorted so that the best features were at the start. A total of  starting features were selected and forwarded to the Cubic SVM. The maximum classification accuracy was

starting features were selected and forwarded to the Cubic SVM. The maximum classification accuracy was  . The FNR rate was

. The FNR rate was  , the sensitivity was

, the sensitivity was  , the precision was

, the precision was  and the training time was

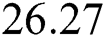

and the training time was  s. Tab. 1 presents the classification results of the seven classifiers for all experiments in terms of accuracy, the FNR rate, sensitivity, precision, and training time.

s. Tab. 1 presents the classification results of the seven classifiers for all experiments in terms of accuracy, the FNR rate, sensitivity, precision, and training time.

Table 1: Classification results of different experiments on Fruit-360 dataset

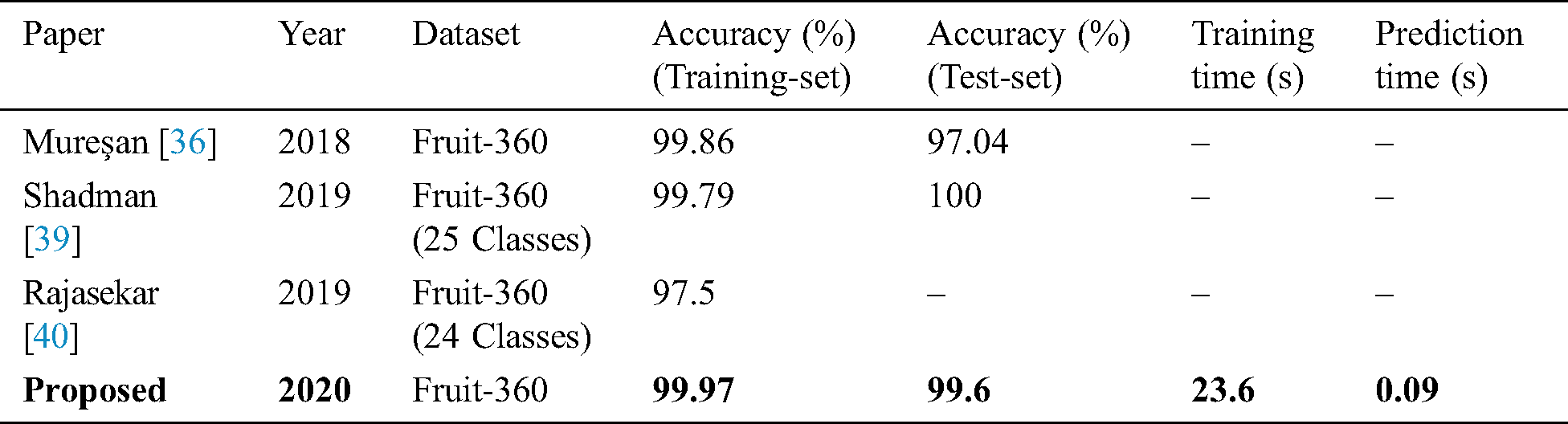

As the updated Fruit-360 dataset was only published in 2018, very few studies have used it. The dataset proposed in Mureşan et al. [36] was used to train a CNN that employed the TensorFlow library [38] for deep learning. A six-layered DCNN model was also used; this analyzed the input images and featured four convolutional layers connected by max-pooling layers, two FCLs, and a final softmax output layer. Various combinations of color transformations were tested; the [Grayscale, HSI, HSV, and Flips] combination provided accuracy rates of 97.04% and 99.86% for the test and training datasets, respectively. Another technique used only 25 classes of fruit in the Fruit-360 dataset; of the five cases that were tested, case 1 had the highest accuracy, of 99.94% [39]. A classification technique based on shape, color, and textural features was used to classify 24 fruit classes (2,400 images) in the Fruit-360 dataset. The kNN classifier achieved an accuracy of 97.5% [40]. However, the accuracy rates in our study were higher, at 99.6% and 99.97% for the test and training data sets, respectively. The classifier training time was 23.6 s, and the average prediction time was 0.09 s (Tab. 2).

Table 2: Comparison with the existing technique

4.3 Evaluation of Classifier Performance Using a Plant Disease Dataset

We evaluated our model using the three experiments described above. In each experiment, the highest classification accuracy, the FNR rate, and the training time were recorded; in the first experiment, which used the VGG19 model, the best result was obtained with the Cubic SVM. In the third experiment, the average prediction time was 0.03 s. Tab. 3 lists the classification results of all seven classifiers in all experiments in terms of accuracy, FNR rate, and training time.

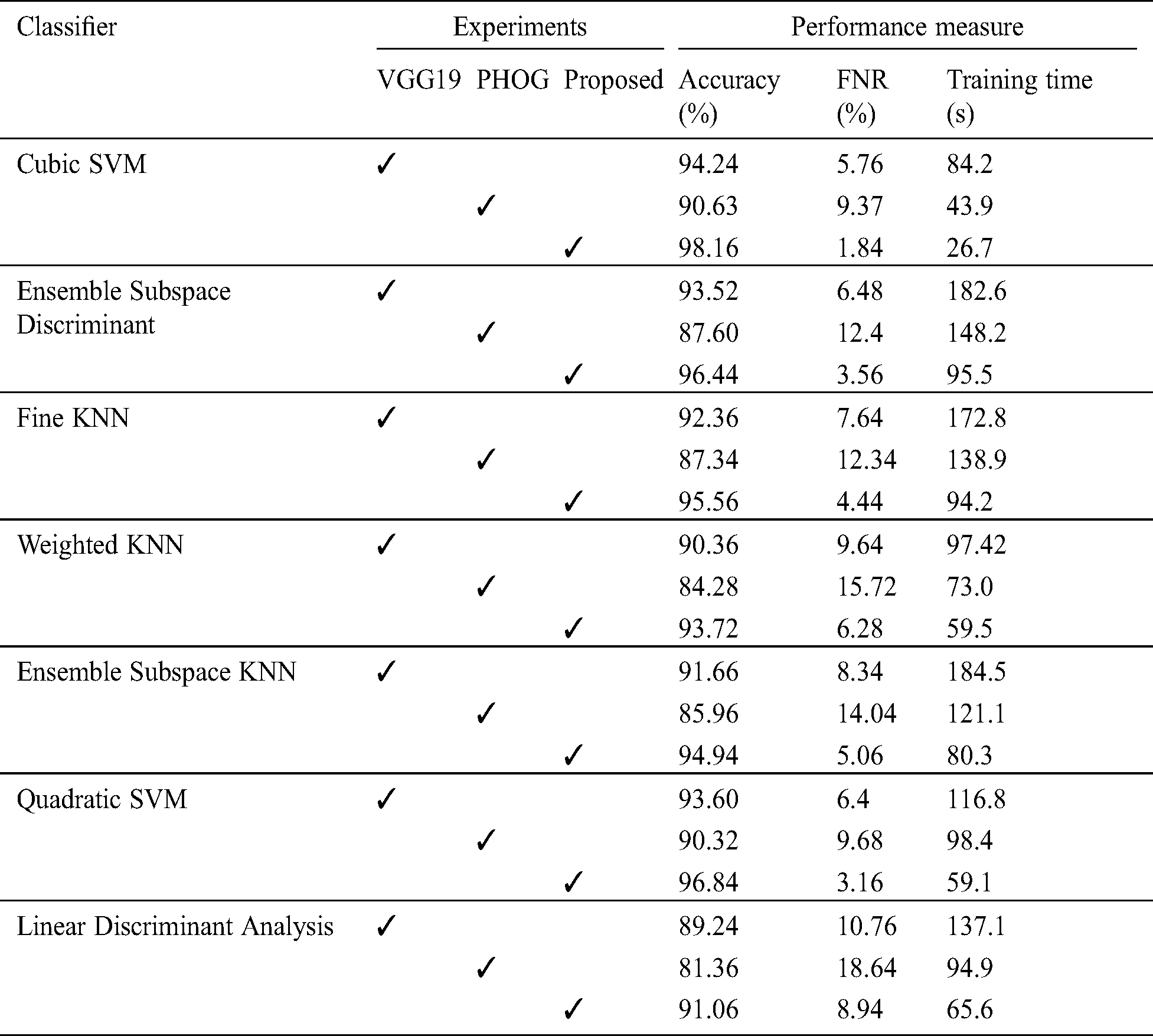

To determine the validity of the model, it was compared with existing techniques (Tab. 4). In a previous study, color, shape, and textural features were extracted to classify Google images of apples as healthy or diseased (blotches, rot, and scabs); the accuracy was 95.47% [41]. Another DCNN-based technique distinguished among the apple diseases Alternaria, brown rust, and mosaicism. An AlexNet model was used to evaluate 13,689 images and the accuracy was 97.62% [42]. A DCNN-based approach was used to classify plant leaf disease in another dataset, with 96.3% accuracy [43]. Finally, DCNN models (AlexNet and VGG) were used to extract features from the Plant Village dataset. Multi-level fusion followed by entropy selection provided an overall classification accuracy of 97.80% [44].

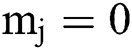

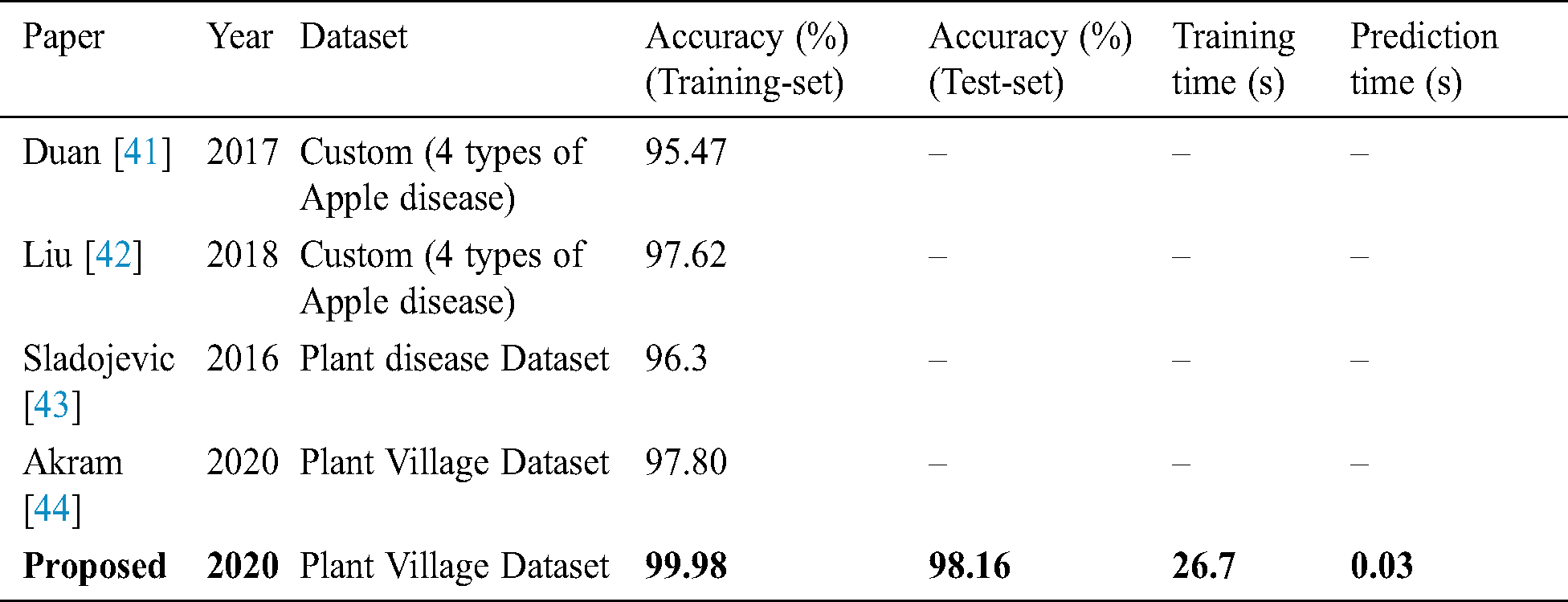

When analyzing the Fruit-360 dataset, the VGG19 training loss decreased rapidly to less than 20% after 5,000 iterations. Similarly, the overall network accuracy increased gradually, reaching 99% after 5,000 iterations. The training loss and accuracy rates are shown in Fig. 4. Fine-tuning was valuable, increasing the overall accuracy from 87.3% to 99.97%. When analyzing the Plant Village dataset, the VGG19 training loss decreased rapidly to less than 20% after 3,500 iterations. Similarly, the overall accuracy of the network gradually increased, stabilizing after 3,000 iterations and reaching 98% after all iterations. The training loss and accuracy rates are shown in Fig. 4. The accuracy was improved by fine-tuning, from 82.8% to 98.16%.

Table 3: Classification results of different experiments on Plant Village dataset

Table 4: Comparison with existing technique

Figure 4: Accuracy rates and training loss after fine-tuning of both datasets

The results show that a fine-tuned DCNN model converges faster and is more accurate. Fruit classification does not require preprocessing when a DCNN model is combined with structural features. Our method is very accurate even when evaluating a very large and challenging dataset.

Our hybrid classification method combines a DCNN model with PHOG features to classify fruits using 5G and cloud technology. Images are captured on a smart device and transferred to the cloud via the 5G network. Large images are resized before transfer, and the fine-tuned hybrid model classifies them. The combined DCNN and PHOG features are optimized using the mRMR technique. We employed the public Fruit-360 dataset to determine the validity of our method, which was considerably better than currently available classifiers. To the best of our knowledge, this is the first attempt to combine DCNN and PHOG features for fruit classification based on 5G technology and the IoT. Fusion was associated with good classification accuracy. In the future, we will add features for multi-fruit classification, which should aid fully automated robotic harvesting.

Funding Statement: This research was supported by the MSIT (Ministry of Science and ICT), Korea, under the ICAN (ICT Challenge and Advanced Network of HRD) program (IITP-2020-2020-0-01832) supervised by the IITP (Institute of Information & Communications Technology Planning & Evaluation), and by the Soonchunhyang University Research Fund.

Conflicts of Interest: On behalf of the corresponding author, all authors declare that they have no conflicts of interest.

1. S. S. Abu-Naser, K. A. Kashkash and M. Fayyad. (2010). “Developing an expert system for plant disease diagnosis,” Journal of Artificial Intelligence. [Google Scholar]

2. E. Tsormpatsidis, M. Ordidge, R. G. C. Henbest, A. Wagstaffe, N. H. Battey et al. (2011). , “Harvesting fruit of equivalent chronological age and fruit position shows individual effects of UV radiation on aspects of the strawberry ripening process,” Environmental and Experimental Botany, vol. 74, pp. 178–185. [Google Scholar]

3. P. C. Marchal, D. M. Gila, J. G. García and J. G. Ortega. (2013). “Expert system based on computer vision to estimate the content of impurities in olive oil samples,” Journal of Food Engineering, vol. 119, no. 2, pp. 220–228. [Google Scholar]

4. I. M. Nasir, M. Rashid, J. H. Shah, M. Sharif, M. Y. H. Awan et al. (2020). , “An optimized approach for breast cancer classification for histopathological images based on hybrid feature set,” Current Medical Imaging. [Google Scholar]

5. M. Rashid, M. A. Khan, M. Alhaisoni, S. H. Wang, S. R. Naqvi et al. (2020). , “A sustainable deep learning framework for object recognition using multi-layers deep features fusion and selection,” Sustainability, vol. 12, no. 12, pp. 5037. [Google Scholar]

6. A. Daniel, K. Subburathinam, B. Muthu, N. Rajkumar, S. Kadry et al. (2020). , “Procuring cooperative intelligence in autonomous vehicles for object detection through data fusion approach,” IET Intelligent Transport Systems, vol. 14, no. 11. [Google Scholar]

7. B. Muthu, C. Sivaparthipan, G. Manogaran, R. Sundarasekar, S. Kadry et al. (2020). , “IOT based wearable sensor for diseases prediction and symptom analysis in healthcare sector,” Peer-to-Peer Networking and Applications, pp. 1–12. [Google Scholar]

8. I. M. Nasir, M. A. Khan, M. Alhaisoni, T. Saba, A. Rehman et al. (2020). , “A hybrid deep learning architecture for the classification of superhero fashion products: An application for medical-tech classification,” Computer Modeling in Engineering & Sciences, vol. 124, no. 3, pp. 1017–1033. [Google Scholar]

9. CISCO. (2016). “Index, Global mobile data traffic forecast update, 2015-2020,” White Paper. [Google Scholar]

10. X. Wang, M. Chen, T. Taleb, A. Ksentini and V. C. Leung. (2014). “Cache in the air: Exploiting content caching and delivery techniques for 5G systems,” IEEE Communications Magazine, vol. 52, no. 2, pp. 131–139. [Google Scholar]

11. B. Bharath, K. G. Nagananda and H. V. Poor. (2016). “A learning-based approach to caching in heterogenous small cell networks,” IEEE Transactions on Communications, vol. 64, no. 4, pp. 1674–1686. [Google Scholar]

12. V. Chandwani, V. Agrawal and R. Nagar. (2015). “Modeling slump of ready mix concrete using genetic algorithms assisted training of Artificial Neural Networks,” Expert Systems with Applications, vol. 42, no. 2, pp. 885–893. [Google Scholar]

13. B. Zhang, W. Huang, J. Li, C. Zhao, S. Fan et al. (2014). , “Principles, developments and applications of computer vision for external quality inspection of fruits and vegetables: A review,” Food Research International, vol. 62, pp. 326–343. [Google Scholar]

14. M. A. Khan, T. Akram, M. Sharif, M. Awais, K. Javed et al. (2018). , “CCDF: Automatic system for segmentation and recognition of fruit crops diseases based on correlation coefficient and deep CNN features,” Computers and Electronics in Agriculture, vol. 155, pp. 220–236. [Google Scholar]

15. S. P. Mohanty, D. P. Hughes and M. Salathé. (2016). “Using deep learning for image-based plant disease detection,” Frontiers in Plant Science, vol. 7, pp. 1419. [Google Scholar]

16. N. C. Tri, T. Van Hoai, H. N. Duong, N. T. Trong, V. Van Vinh et al. (2016). , “A novel framework based on deep learning and unmanned aerial vehicles to assess the quality of rice fields,” in Int. Conf. on Advances in Information and Communication Technology, Cham: Springer, pp. 84–93. [Google Scholar]

17. S. R. Dubey and A. S. Jalal. (2016). “Apple disease classification using color, texture and shape features from images,” Signal, Image and Video Processing, vol. 10, no. 5, pp. 819–826. [Google Scholar]

18. A. Fuentes, S. Yoon, S. C. Kim and D. S. Park. (2017). “A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition,” Sensors, vol. 17, no. 9, pp. 2022. [Google Scholar]

19. A. Khan, J. P. Li, R. A. Shaikh and I. Khan. (2016). “Vision based classification of fresh fruits using fuzzy logic,” in 2016 3rd Int. Conf. on Computing for Sustainable Global Development (INDIAComNew Delhi, India, pp. 3932–3936. [Google Scholar]

20. M. Adak and N. Yumusak. (2016). “Classification of e-nose aroma data of four fruit types by ABC-based neural network,” Sensors, vol. 16, no. 3, pp. 304. [Google Scholar]

21. Y. Zhang, S. Wang, G. Ji and P. Phillips. (2014). “Fruit classification using computer vision and feedforward neural network,” Journal of Food Engineering, vol. 143, pp. 167–177. [Google Scholar]

22. Y. Zhang, P. Phillips, S. Wang, G. Ji, J. Yang et al. (2016). , “Fruit classification by biogeography-based optimization and feedforward neural network,” Expert Systems, vol. 33, no. 3, pp. 239–253. [Google Scholar]

23. F. Garcia, J. Cervantes, A. Lopez and M. Alvarado. (2016). “Fruit classification by extracting color chromaticity, shape and texture features: Towards an application for supermarkets,” IEEE Latin America Transactions, vol. 14, no. 7, pp. 3434–3443. [Google Scholar]

24. S. Wang, Z. Lu, J. Yang, Y. Zhang, J. Liu et al. (2016). , “Fractional fourier entropy increases the recognition rate of fruit type detection,” BMC Plant Biology, vol. 16. [Google Scholar]

25. Z. M. Khaing, Y. Naung and P. H. Htut. (2018). “Development of control system for fruit classification based on convolutional neural network,” in 2018 IEEE Conf. of Russian Young Researchers in Electrical and Electronic Engineering, Moscow, Russia, pp. 1805–1807. [Google Scholar]

26. P. A. Macanhã, D. M. Eler, R. E. Garcia and W. E. M. Junior. (2018). “Handwritten feature descriptor methods applied to fruit classification, ” in Latifi S. (eds.) Information Technology-New Generations. Advances in Intelligent Systems and Computing, Cham: Springer, vol. 558, pp. 699–705. [Google Scholar]

27. S. Jana and R. Parekh. (2017). “Shape-based fruit recognition and classification,” in Int. Conf. on Computational Intelligence, Communications, and Business Analytics, Singapore: Springer, pp. 184–196. [Google Scholar]

28. R. S. S. Kumari and V. Gomathy. (2018). “Fruit Classification using Statistical Features in SVM Classifier,” in 2018 4th Int. Conf. on Electrical Energy Systems, Chennai, India, pp. 526–529. [Google Scholar]

29. H. S. Choi, J. B. Cho, S. G. Kim and H. S. Choi. (2018). “A real-time smart fruit quality grading system classifying by external appearance and internal flavor factors,” in 2018 IEEE Int. Conf. on Industrial Technology, Lyon, France, pp. 2081–2086. [Google Scholar]

30. K. Alex, I. Sutskever and G. E. Hinton. (2012). “Imagenet classification with deep convolutional neural networks,” Advances in Neural Information Processing Systems, pp. 1097–1105. [Google Scholar]

31. A. Bosch, A. Zisserman and X. Munoz. (2007). “Representing shape with a spatial pyramid kernel,” in Proc. of the 6th ACM Int. Conf. on Image and Video Retrieval, pp. 401–408. [Google Scholar]

32. N. Dalal and B. Triggs. (2005). “Histograms of oriented gradients for human detection,” in 2005 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, San Diego, CA, USA, vol. 1, pp. 886–893. [Google Scholar]

33. K. Simonyan and A. Zisserman. (2014). “Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556. [Google Scholar]

34. C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens and Z. Wojna. (2016). “Rethinking the inception architecture for computer vision,” in Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition, pp. 2818–2826. [Google Scholar]

35. H. Peng, F. Long and C. Ding. (2005). “Feature selection based on mutual information: Criteria of max-dependency, max-relevance, and min-redundancy,” IEEE Transactions on Pattern Analysis & Machine Intelligence, no. 8, pp. 1226–1238. [Google Scholar]

36. H. Mureşan and M. Oltean. (2018). “Fruit recognition from images using deep learning,” Acta Universitatis Sapientiae Informatica, vol. 10, no. 1, pp. 26–42. [Google Scholar]

37. K. P. Ferentinos. (2018). “Deep learning models for plant disease detection and diagnosis,” Computers and Electronics in Agriculture, vol. 145, pp. 311–318. [Google Scholar]

38. M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis et al. (2016). , “Tensorflow: A system for large-scale machine learning,” in 12th {USENIX} Sym. on Operating Systems Design and Implementation, pp. 265–283. [Google Scholar]

39. S. Sakib, Z. Ashrafi, M. Siddique and A. Bakr. (2019). “Implementation of fruits recognition classifier using convolutional neural network algorithm for observation of accuracies for various hidden layers. arXiv preprint arXiv:1904.00783. [Google Scholar]

40. L. Rajasekar and D. Sharmila. (2019). “Performance analysis of soft computing techniques for the automatic classification of fruits dataset,” Soft Computing, vol. 23, no. 8, pp. 2773–2788. [Google Scholar]

41. Y. Duan, F. Liu, L. Jiao, P. Zhao and L. Zhang. (2017). “SAR Image segmentation based on convolutional-wavelet neural network and markov random field,” Pattern Recognition, vol. 64, pp. 255–267. [Google Scholar]

42. B. Liu, Y. Zhang, D. He and Y. Li. (2018). “Identification of apple leaf diseases based on deep convolutional neural networks,” Symmetry, vol. 10, no. 1, pp. 11. [Google Scholar]

43. S. Sladojevic, M. Arsenovic, A. Anderla, D. Culibrk and D. Stefanovic. (2016). “Deep neural networks based recognition of plant diseases by leaf image classification,” Computational Intelligence and Neuroscience, vol. 2016. [Google Scholar]

44. T. Akram, M. Sharif and T. Saba. (2020). “Fruits diseases classification: Exploiting a hierarchical framework for deep features fusion and selection,” Multimedia Tools and Applications, pp. 1–21. [Google Scholar]

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |