Open Access

ARTICLE

Enhancing Emotional Expressiveness in Biomechanics Robotic Head: A Novel Fuzzy Approach for Robotic Facial Skin’s Actuators

Institute of Intelligent and Interactive Technologies, University of Economics Ho Chi Minh City–UEH, Ho Chi Minh City, 72516, Vietnam

* Corresponding Author: Nguyen Truong Thinh. Email:

(This article belongs to the Special Issue: Applied Artificial Intelligence: Advanced Solutions for Engineering Real-World Challenges)

Computer Modeling in Engineering & Sciences 2025, 143(1), 477-498. https://doi.org/10.32604/cmes.2025.061339

Received 22 November 2024; Accepted 31 January 2025; Issue published 11 April 2025

Abstract

In robotics and human-robot interaction, a robot’s capacity to express and react correctly to human emotions is essential. A significant aspect of this capability is controlling the robotic facial skin actuators in a way that resonates with human emotions. This research draws on human anthropometric theories to design and control robotic facial actuators, addressing the limitations of existing approaches in expressing emotions naturally and accurately. Facial landmarks are extracted to determine the anthropometric indicators used to design the robot head, and the displacements of these points are used to calculate emotional values with Fuzzy C-Means (FCM). The rotation angles of the skin actuators are then computed to account for subtler emotions, which enhances the robot’s ability to perform emotions realistically. In addition, this study contributes a novel approach based on facial anthropometric indicators that tailors emotional expressions to diverse human characteristics, ensuring more personalized and intuitive interactions. The results demonstrate how fuzzy logic can improve a robot’s ability to express emotions by digitizing them into fuzzy values. This contribution lays the groundwork for robots that interact with humans more intuitively and empathetically. Experiments demonstrated the suitability of the proposed models for tasks related to human emotions, with accuracies of 0.96 for emotional value determination and 0.97 for motor angles.

Keywords

1 Introduction

Humanoid robots have become a fascinating and increasingly popular area of research and development in robotics and artificial intelligence. Advances in these fields have paved the way for humanoid and social robots that interact with humans more naturally by mimicking human-like characteristics and expressions. Designing a robotic head entails integrating facial components, such as the eyes and mouth, that produce expressions, providing a visually and emotionally compelling interface for human-robot interaction [1]. In recent years, significant advancements in sensor technology, artificial intelligence algorithms, and actuation mechanisms have enabled the development of robotic heads. These robots are designed to replicate human appearance, although independent thinking in this form of robot is often deemed undesirable. There are various answers to the question, “Why create robots that resemble humans?” One reason is that research on human behavior consistently attracts interest, as it fuels curiosity about ourselves. In addition, human-like robots are used in simulations to assess equipment safety in hazardous environments. According to Hanson et al. [2], because most objects and structures are designed around human anthropometry, humanoid robots are the most suitable choice for applications intended for human use. Robots are often designed with a head unit that provides social feedback through facial expressions, attention, eye contact, and more to enhance the quality of human-robot interaction [3]. This study introduces a biomechanical robotic head whose appearance is based on Vietnamese anthropometry. Facial landmarks are recognized and tracked to determine emotional values using a fuzzy model, allowing the robot to collect and store emotional data for various applications. In addition, the emotions of human interactors are monitored and evaluated so that the robot can exhibit genuine emotions during interactions. 
Based on these emotional values, a matrix of motor angles for the robot’s movements is calculated for each emotion. Many psychological studies classify emotions into primary and secondary types. The theory of primary emotions identifies six core emotions, while the Circumplex Model of Affect describes emotions along two dimensions: arousal and valence. In Kensinger’s study [4], arousal is defined as the body’s physiological response to stimuli, and valence reflects the positivity or negativity of the emotional experience. Arousal is categorized into three levels: low, moderate, and high. Low arousal indicates a passive state, such as fatigue or lethargy. Moderate arousal represents normal functioning with good concentration and engagement, while high arousal signifies heightened activity, often associated with tension or excitement. Valence is divided into three states: positive, neutral, and negative. Positive valence includes feelings of joy and satisfaction, while negative valence encompasses emotions such as sadness and anxiety.

In recent years, extensive research on humanoid robots has been conducted worldwide. For instance, the robot head KISMET, first proposed in 1998 [5], was designed to express basic emotions, featuring a cartoon-like face and a childlike voice. Several later studies developed humanoid robots with emotional expressions, including the BARTHOC robot, a robotic head named Lilly, the Albert Einstein robot, the ROMAN robot, and, more recently, Abel [6–10]. In addition, a new type of robot, the “geminoid robot,” has been introduced: a humanoid robot designed to closely resemble a human in appearance [11]. At the robotics laboratory of Osaka University, the first geminoid robot, HI-1, modeled after Professor Hiroshi Ishiguro, was introduced as a remote-controlled android system without artificial intelligence, featuring 50 degrees of freedom (DoF) [12]. Subsequent studies by the same group led to various geminoid robots, such as HI-2, HI-4, HI-5, Geminoid F, Geminoid HI-2, and Geminoid DK, all designed as human replicas [13,14]. These geminoids serve different purposes, primarily in childcare, elderly care, and the study and evaluation of human behavior [15,16]. Fuoco [17] explored the integration of geminoid robots with artificial agents in creative processes and theatrical performances, discussing their potential as actors and the aesthetic experiences they can provide. In geminoid robots, the emphasis is placed more on appearance than on emotional analysis: these studies focused on the robots’ appearance to meet various research objectives, and geminoids are often used as remote-controlled telecommunication devices. Recently, a new android robot called Ibuki [18] was proposed, with a design focused on the upper body and the ability to move on two wheels. 
Most robots can express only fundamental human emotions, and their motor rotations generate emotions based on fixed, predefined theories. This prevents robots from expressing the full range of emotions. For example, happiness can arise from many different causes, so its emotional values (arousal, valence) differ from case to case, yet the robot expresses all of them in the same way: emotions are only classified, and the motor angles are stored as a fixed set for each class. To address these challenges, models are proposed here to create the most realistic emotions for robotic heads and to collect the angle data that emotional robots need to replicate human emotions. Reviews of humanoid robots indicate that, although many studies have been conducted for applications in different fields, these robots remain expensive and not widely commercialized [1], and their emotional expressions are still not fully human-like [19]. Emotions are qualitative quantities that cannot be measured accurately, making their reproduction a significant challenge for researchers. Most studies have focused on the mechanical design of humanoid robot systems and on surveys of robot-user interactions. As a result, some biomechanical and anatomical features are not well integrated in certain designs, and the biological basis for generating emotions in robots is often lacking [20]. The main objective of this study is to digitize emotions into quantitatively defined values by combining human reasoning with anthropometric data. These values are then used to reconstruct emotions through the rotation angles of motors installed beneath the robot’s skin, in line with the natural laws of human reasoning. This enhances the robot’s ability to create natural communication scenarios with users. 
This study thoroughly investigates human psychological and anthropometric data, providing a strong foundation for design and inference in reconstructing emotions and for strengthening the fuzzy model rules. Recent research has increasingly focused on reproducing emotions in humanoid robots. For example, Liu et al. [21] proposed a two-stage method using action units (AUs) to generate realistic robot facial expressions, treating the models as black boxes. Their earlier study [22] used landmarks with lightweight networks to mimic emotional behavior, and recent advancements include using large language models, such as ChatGPT, to enhance human-robot interaction [23].

In developed countries, populations are aging, which encourages the development of robots that perform daily tasks. Emotional robots are expected to exhibit more realistic emotional expressions, making everyday communication with users more effective. This study digitizes human emotional values as a theoretical foundation for evaluating and controlling robotic heads to generate robot emotions. Section 2 introduces the theoretical foundations for designing a robot based on Vietnamese anthropometry. Facial landmarks are detected and selected as key points of interest because of their significant role in human emotional expression. These landmarks are the basis for calculating the angles of the actuators controlling the robotic facial skin. Since there is no standard formula for quantifying emotions, a fuzzy model is applied to digitize human emotions through an expert system. This approach combines uncertain data with expert knowledge to classify the type of emotion and realistically replicate it on a humanoid robot. Emotions are analyzed based on primary emotions, with fuzzy values formed during communication situations influenced by surrounding conditions. They are further categorized into degrees, such as slightly happy, happy, and very happy, each with different arousal and valence values. These values are used to determine motor angles, enabling the creation of authentic emotions in the robot, as detailed in Section 4. Finally, experiments conducted to evaluate the effectiveness of this approach are presented in Section 5.

2 Robotic Design Platform Based on Vietnamese Anthropometry

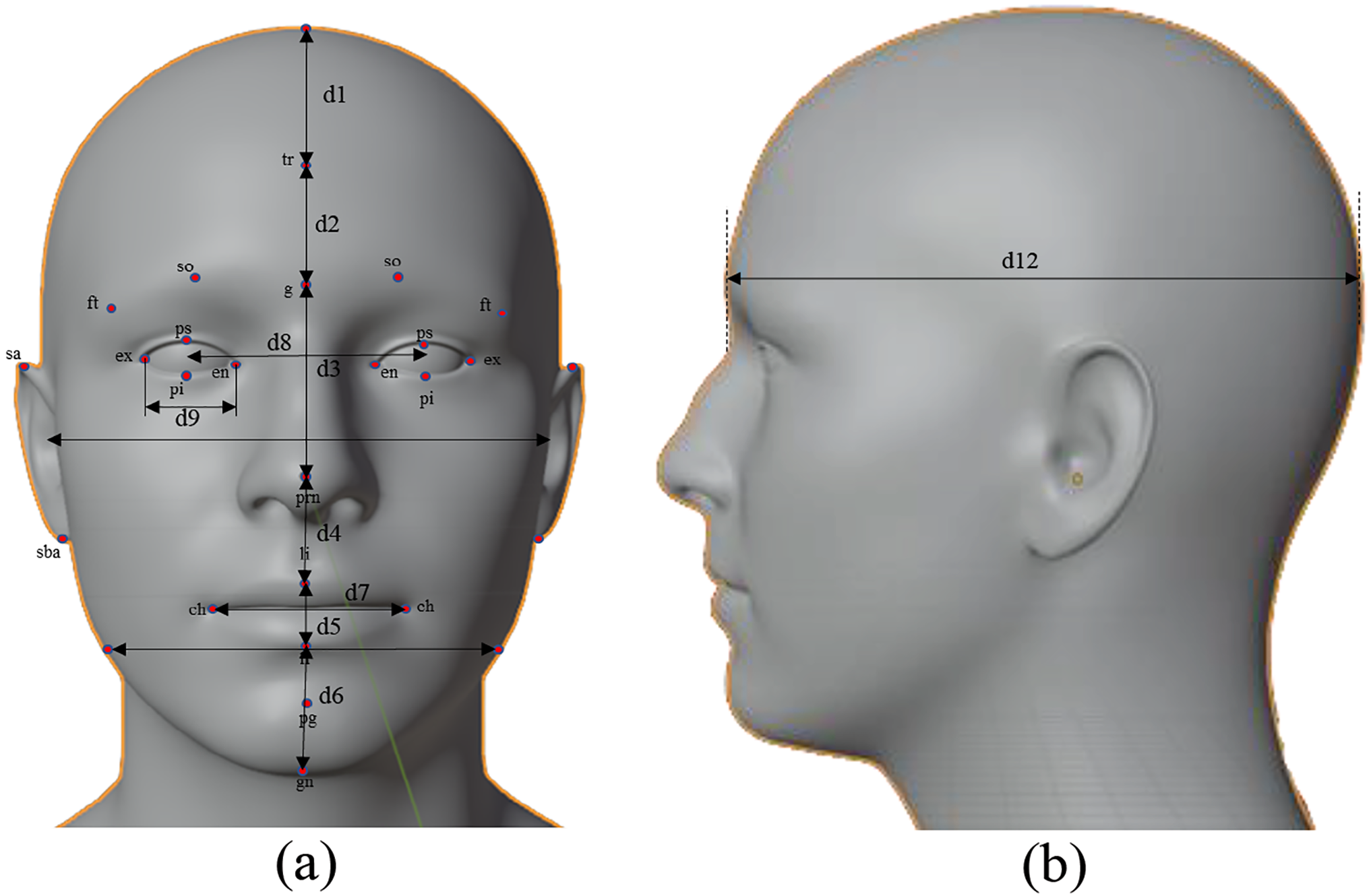

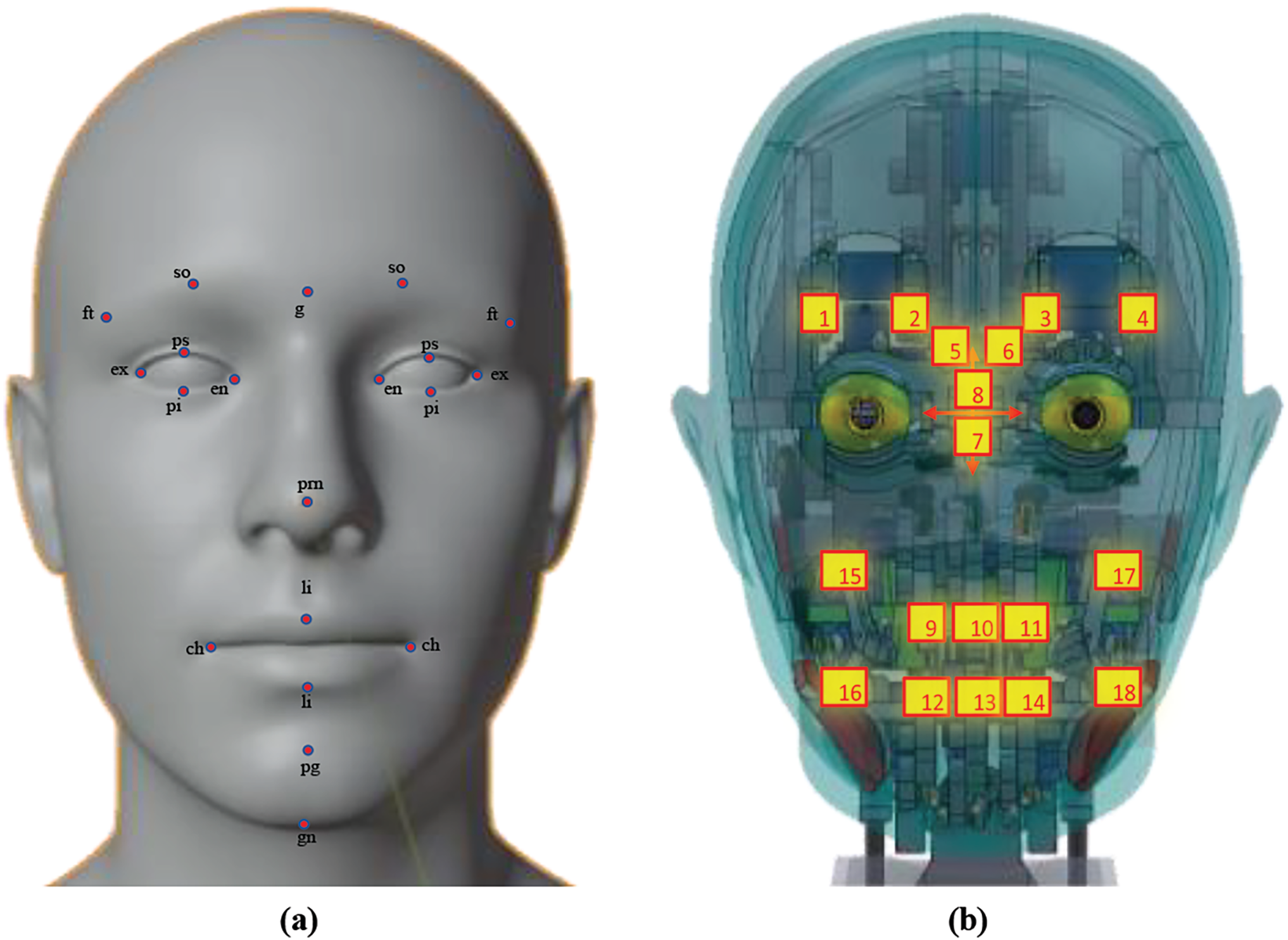

Human anthropometry and facial muscles are studied so that the robotic head can be designed on a biomechanical basis, with design characteristics that follow Vietnamese anthropometry. Identifying anthropometric indicators is necessary in many fields, such as medicine, apparel, and the design of protective clothing. Key points are identified and tracked as they move to evaluate and analyze human emotions [24]. In medicine, anthropometric indicators can be used to predict conditions such as Down syndrome [25], and in plastic surgery they are used to measure facial proportions [26]. Anthropometric indicators are also employed to identify ethnic groups and human origins [27]. Human anthropometry can be studied through direct measurements or indirectly through normalized images. The facial landmarks in Fig. 1 follow the anthropometric landmarks suggested by L. G. Farkas [28] and are defined in Table 1. In robot design, the rules protecting a person’s identity must be respected [29]. Ibáñez-Berganza et al. [30] described facial attractiveness in 10 dimensions extracted from 19 landmarks, adapted in Fig. 1. The robotic head is designed based on the anthropometric ratios of Vietnamese people, obtained through a survey. Because the head is designed and manufactured in 3D, the lateral view is evaluated in addition to the frontal view so that the robotic head has human-like proportions; the surveyed dimensions are listed in Table 2.

Figure 1: Dimensions measured in the survey. (a) Frontal view. (b) Lateral view

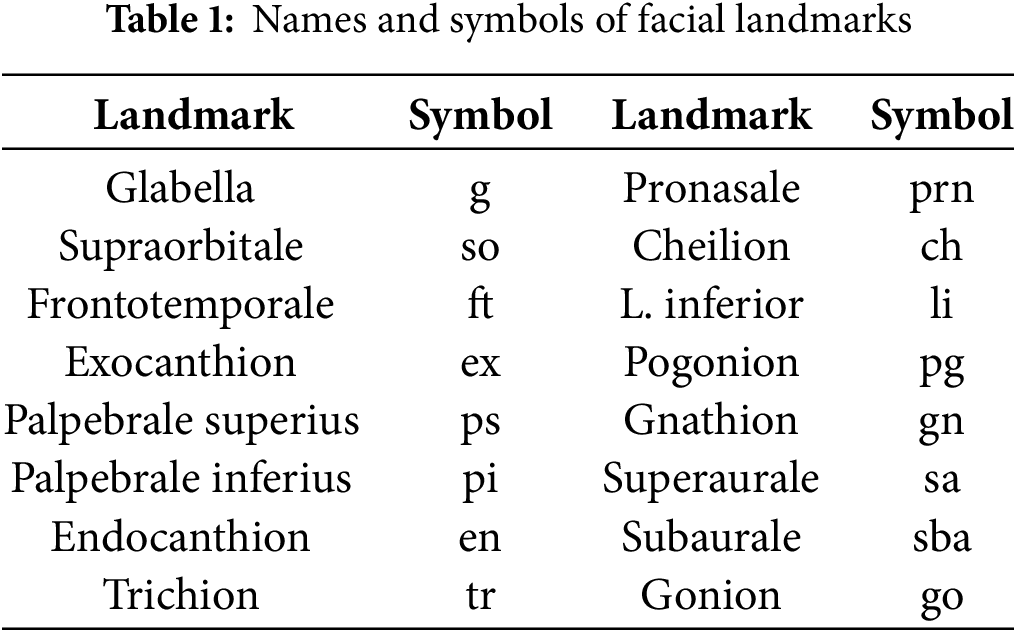

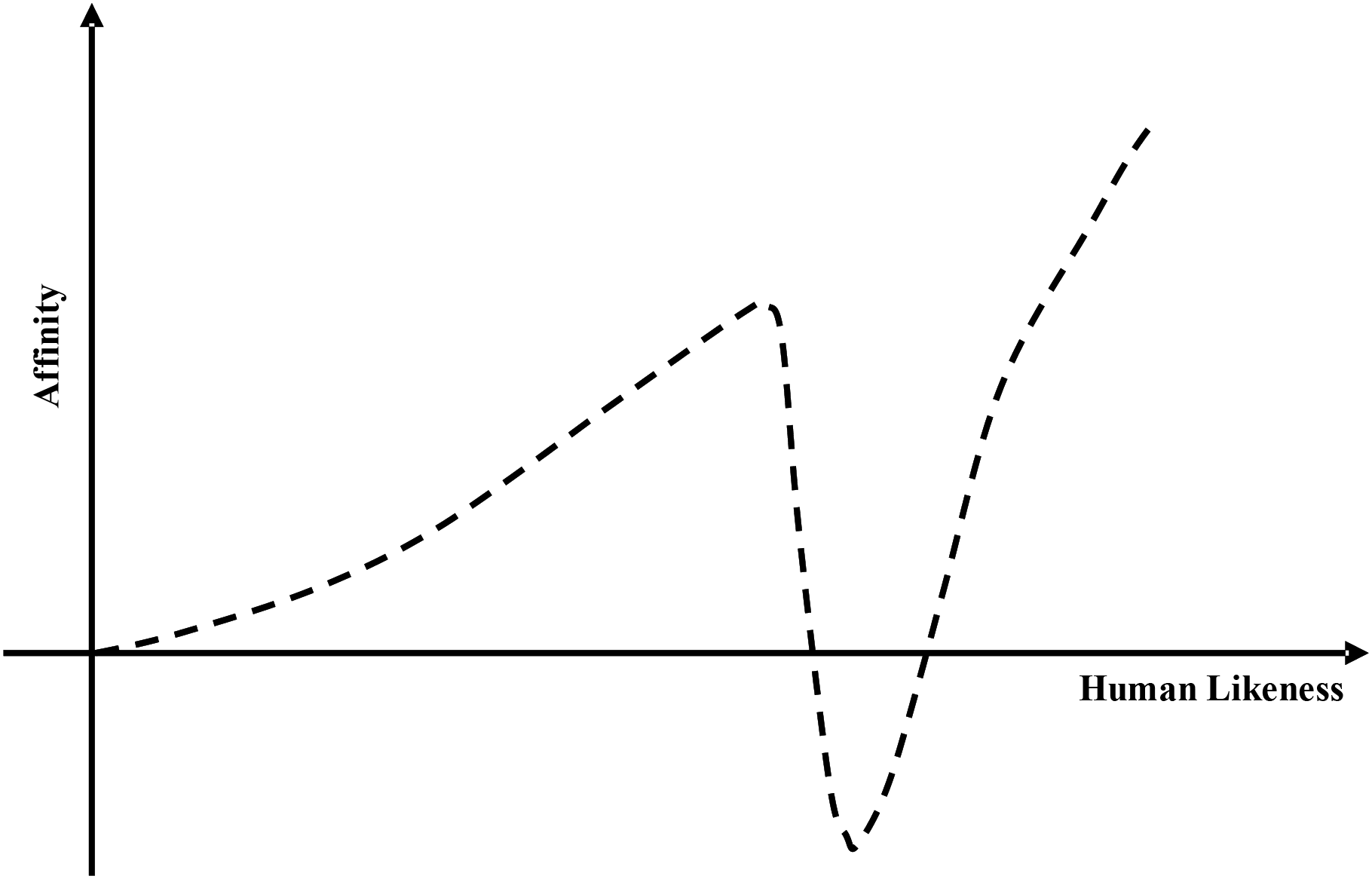

A study of 182 Vietnamese participants (average age 22.01 ± 1.39 years; 55.5% female) was conducted to collect facial characteristics and anthropometric indices for the design. To comply with identity rules, the robot’s design does not replicate any specific face. According to Mori’s uncanny valley hypothesis [31], robots can evoke negative feelings when their appearance and gestures are not entirely human-like, creating a sense of unease during human interaction. This study carefully considers the uncanny valley to avoid such discomfort during human-robot communication; future research will examine its impact in more depth. Evaluating behaviors and emotions in human-robot communication is essential to prevent adverse effects on users’ emotions. Various authors have explored the uncanny valley since Mori’s initial hypothesis, and two additional theories have been proposed [32,33] that complement rather than refute it. In addition, Kim et al. [34] found that robots with low to moderate human-like appearance also provoke repulsion in users. Cheetham et al. [35] suggested that the uncanny valley emerges when a humanoid robot is about 70% similar to humans, which aligns with Mori’s hypothesis, as illustrated in Fig. 2. These hypotheses aim to evaluate robots’ acceptability in communication, emphasizing that a robot’s appearance and behavior must be refined to surpass the uncanny valley. Based on this theory, the robot’s human likeness must go beyond this roughly 70% level to foster the most realistic and comfortable communication experience.

Figure 2: Uncanny Valley introduced by Mori

2.1 Theory of Facial Emotion for Robotic Head

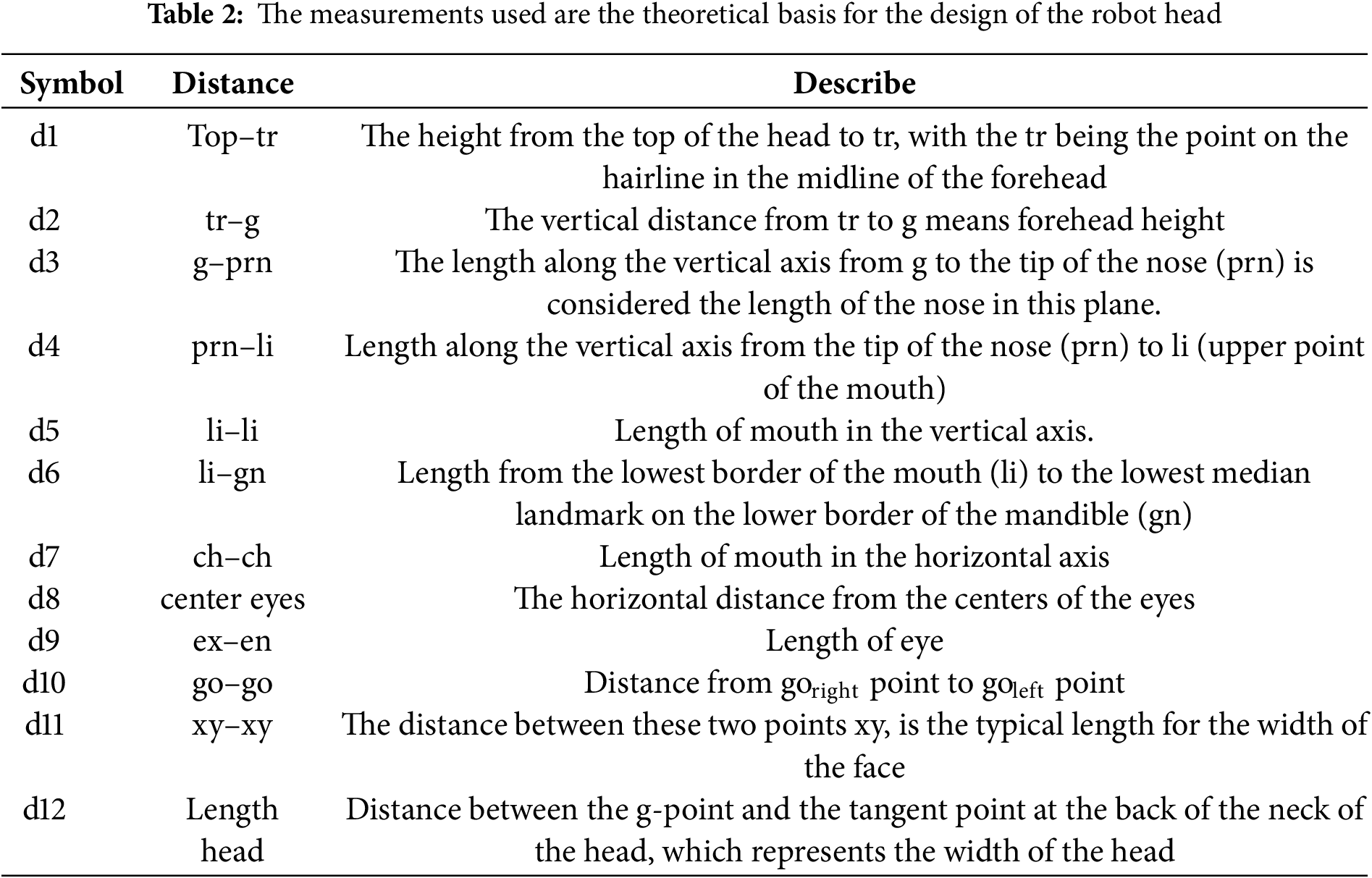

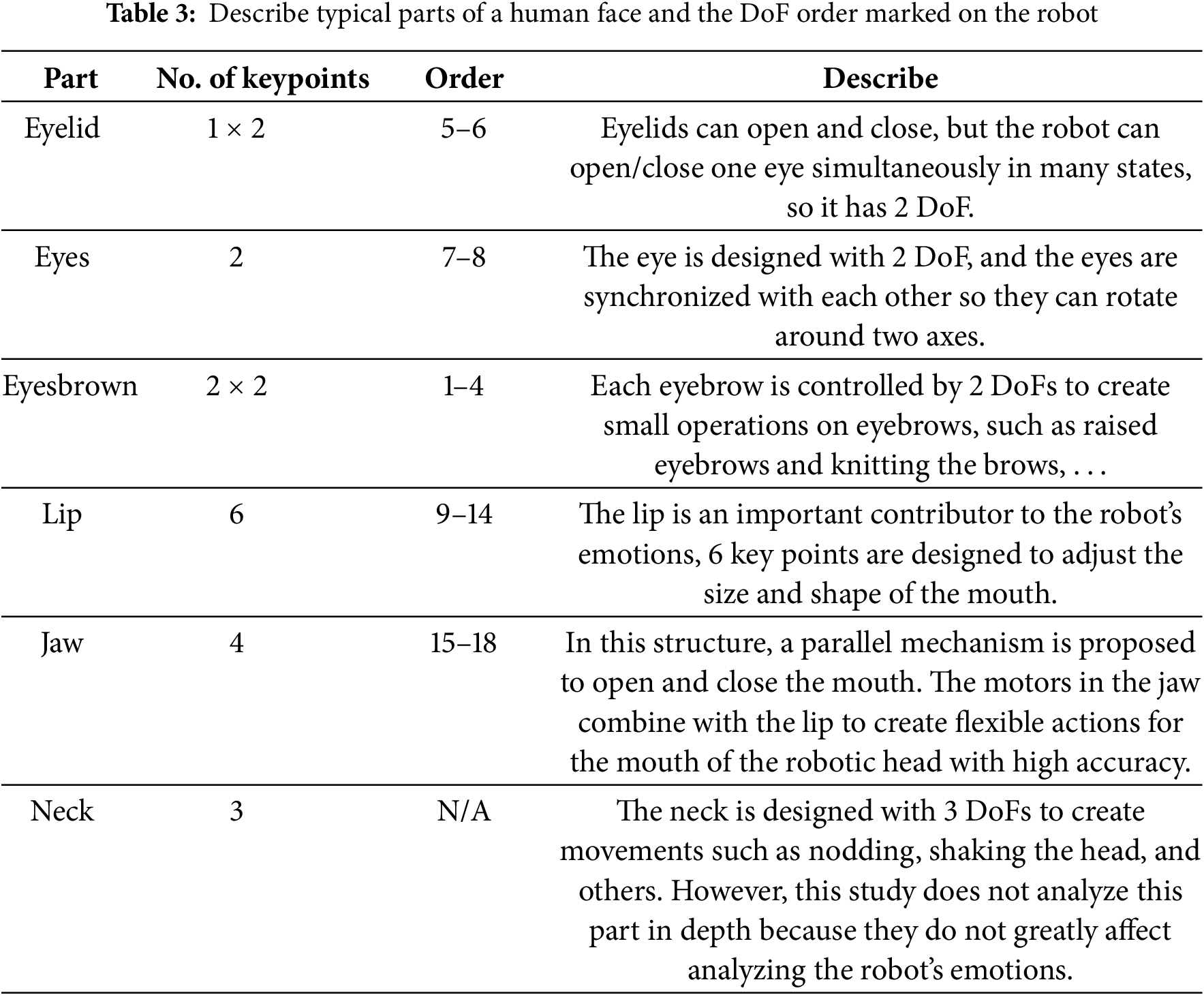

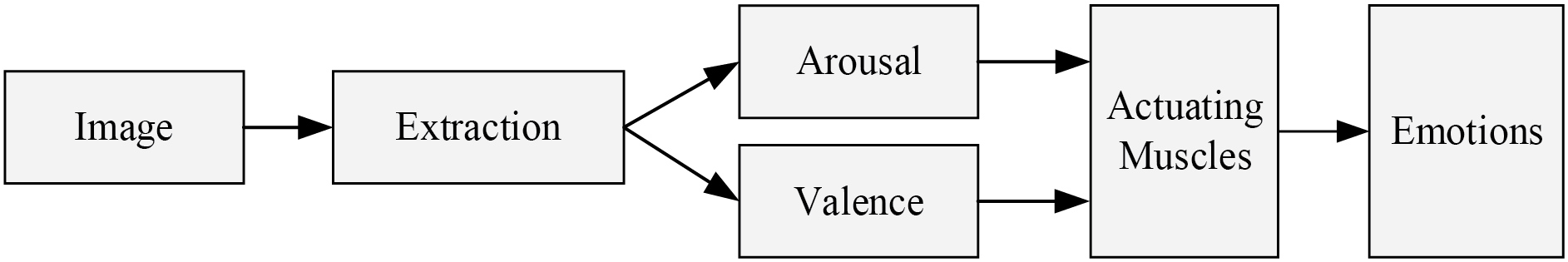

Emotion is the mental state of sensation produced by physiological changes in facial muscles [36]. It is a complex behavioral phenomenon related to levels of neural and chemical integration [37]. Emotion remains a topic of research and debate among experts, and many emotional models have been proposed in psychology to assess human emotions [38]. The model proposed by Ekman [39] is prominent in this regard, as it introduces six basic emotions. Ekman’s study indicated that basic emotions are essentially independent quantities that can be combined to produce more complex emotions. Plutchik [40] later proposed a model with eight basic emotions, supporting Ekman’s view that basic emotions exist and that complex emotions arise from their combination. Many later studies have discussed emotions; for example, Feidakis et al. [41] divided emotions into 66 categories, with 10 basic and 56 secondary emotions. Dimensional approaches to measuring emotion are essentially versions of Russell’s model [42]. Emotion analysis is one of the complex problems of human evolution. Emotional theories in psychology show that emotional behavior and psychophysiology are independent of each other [39,43]. Over recent decades, many studies on emotion classification have been conducted, classifying emotions into primary and secondary types. Primary emotions are defined as essential, innate states. Damasio [44] proposed the primary emotions of anger, fear, happiness, and sadness. Secondary emotions are more complex than primary emotions because they are created by combining primary emotions. To design robots that exhibit human-like emotions at a biological level, this study considers the arrangement and movement of facial muscle bundles. Emotions are assessed based on the displacement of 20 key points, as illustrated in Fig. 3a. 
This study proposes algorithms for a robotic head with 16 key points and 2 degrees of freedom for the eyes to create emotions for the robot. The parts of the robotic head are designed and calculated based on Vietnamese anthropometry, with the dimensions listed in Table 3. The dynamic calculations for the robot are also presented in more detail in this study. Table 3 lists the robot’s emotions generated by combining its facial parts, together with the number of key points each part contributes, numbered as in Fig. 3b.

Figure 3: (a) Key points tracked to analyze human facial movements. (b) Key points designed on the robotic head

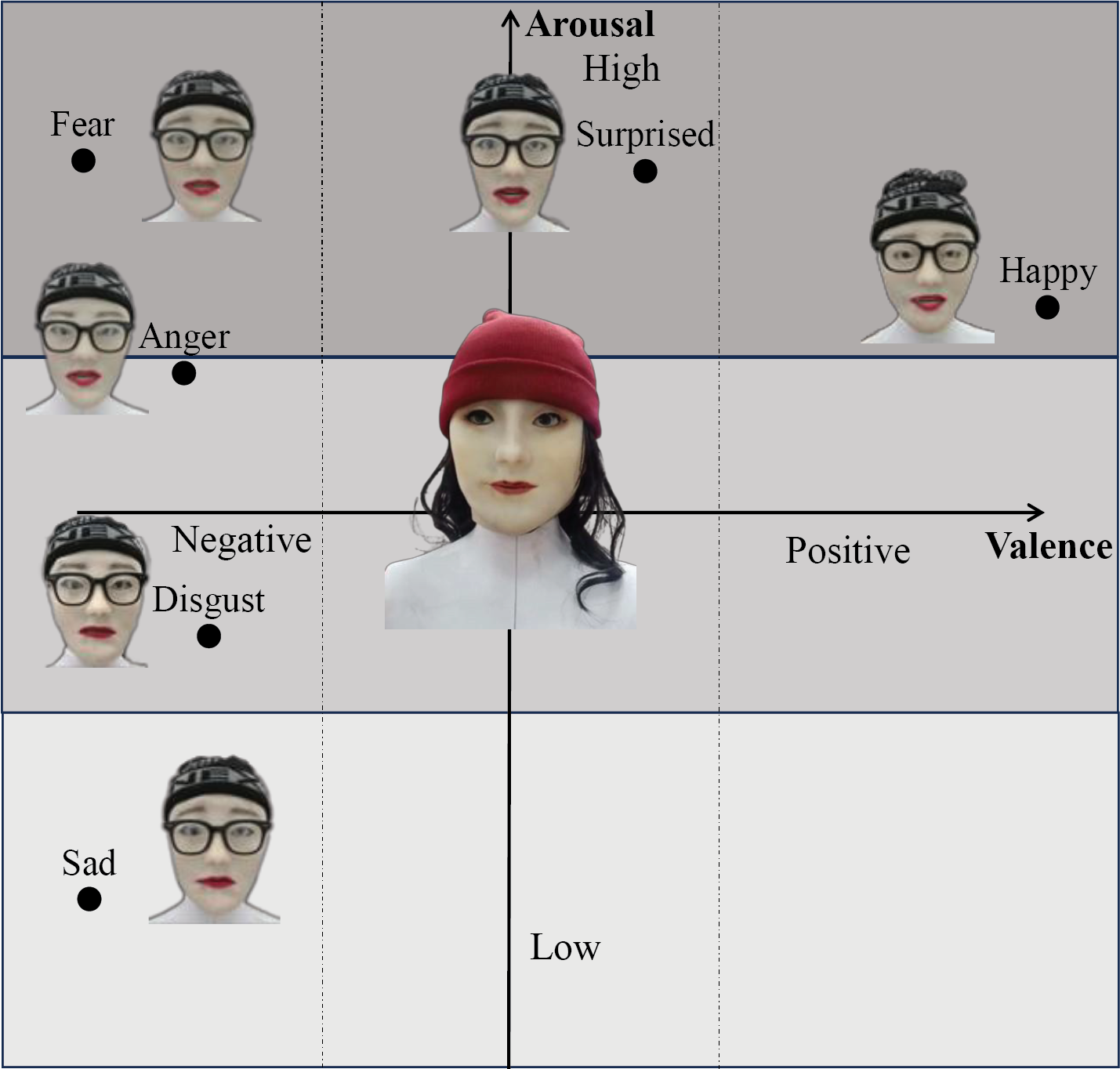

As mentioned, complex human psychological states play an important role in decisions, behaviors, and social interactions. Recent studies have tried to digitize emotions using methods such as electroencephalogram (EEG) analysis and heart rate analysis [45,46]. These methods require sophisticated hardware to measure and analyze the data. In Russell’s model [42], an emotion is described by two values: valence and arousal. Russell argues that these two dimensions suffice to define an emotion; however, the model does not give numeric valence and arousal values for each emotion, so they remain qualitative. In this study, emotions are digitized and fed into the model that decides the motor angles that create primary emotions on the robotic head. Many theories about the number and types of emotions have been put forward in human emotion research. A popular hypothesis proposed by Ekman [47] is that people have six basic emotions: happiness, sadness, anger, fear, disgust, and surprise. In previous studies, robots limited to primary emotions [48] gave an unrealistic impression even though their emotions were well recognized. This study aims to give the robotic head genuine emotions. To our knowledge, no publication has accurately determined the value of each emotion from images of gestures in communication. Therefore, this study classifies and digitizes emotions to serve as input data for controlling the robot. Emotions are broken down for the specific robot and evaluated at different degrees. Each of Ekman’s six basic emotions [47] is represented by two values, as listed in Table 4.
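To make the two-value representation concrete, the sketch below places a (valence, arousal) pair in Russell’s circumplex plane as an angle and an intensity, which is one common way to read graded emotions such as slightly happy versus very happy. It assumes both values are normalized to [-1, 1]; the function names and the intensity thresholds are hypothetical illustrations, not values from Table 4.

```python
import math

def circumplex_coordinates(valence: float, arousal: float) -> tuple[float, float]:
    """Polar position of an emotion in the valence-arousal plane.

    Assumes valence and arousal are normalized to [-1, 1]. The angle is measured
    counter-clockwise from the positive-valence axis (0 to 360 degrees); the
    intensity is the distance from the neutral origin, clipped to 1.
    """
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    intensity = min(math.hypot(valence, arousal), 1.0)
    return angle, intensity

def intensity_label(intensity: float) -> str:
    """Hypothetical bands for graded emotions (e.g., slightly happy vs. very happy)."""
    if intensity < 0.4:
        return "slight"
    if intensity < 0.75:
        return "moderate"
    return "strong"

angle, intensity = circumplex_coordinates(0.6, 0.3)   # pleasant, mildly aroused
print(f"{angle:.1f} deg, intensity {intensity:.2f} ({intensity_label(intensity)})")
# → 26.6 deg, intensity 0.67 (moderate)
```

The angle separates emotion types (e.g., happy vs. calm), while the intensity separates degrees of the same emotion, which is why two values can encode both the class and its strength.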

3 Reconstruction of Emotions Based on a Hybrid Fuzzy Model of Robotic Facial Skin’s Actuators

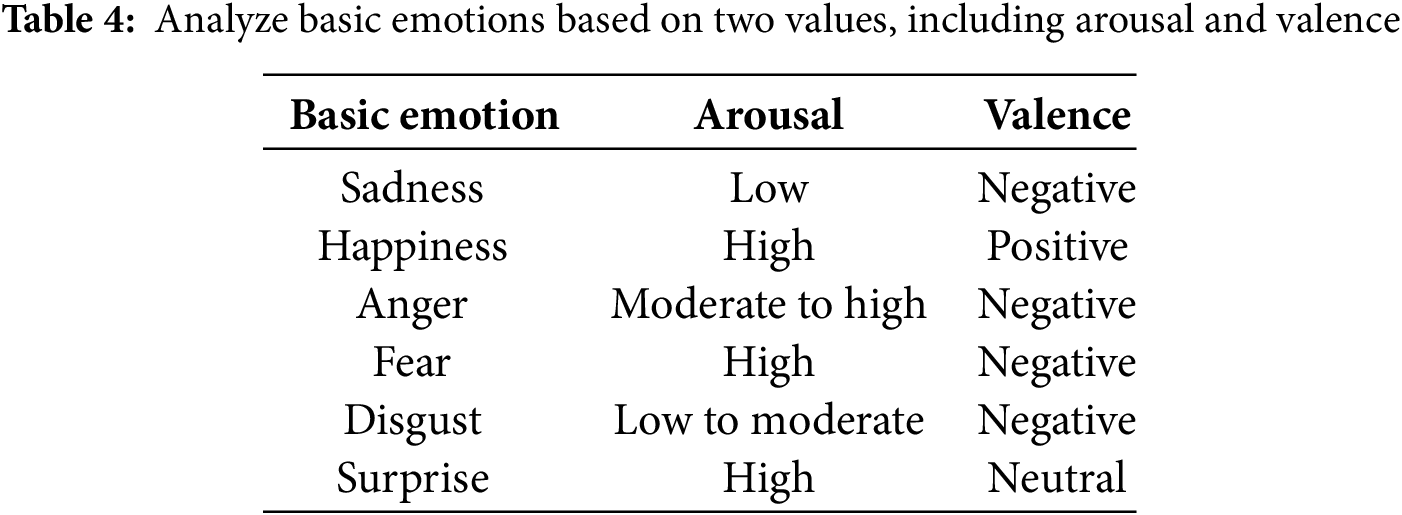

Several experiments based on expert judgment were conducted to digitize human emotions, partitioning the six emotions into different levels. These values are used as inputs to predict the motors’ rotation angles so that the robot shows the most realistic emotions. The data for each emotion are evaluated by the level of that emotion using two values: arousal and valence. Arousal describes the level of activation of the state, and valence represents how positive or negative the emotion is. Emotions are decomposed into these two characteristic values, each with different degrees defined by fuzzy logic, to minimize the number of input parameters of the model that determines the movements of the robot’s muscle bundles. The fuzzy model suits qualitative data that cannot be precisely determined, which is consistent with the nature of emotions [49]. Fig. 4 shows the processing diagram by which the robot creates emotions, with the emotional values determined from the user’s image. Creating true emotions for the robot would involve many interacting elements, such as vocal tone and the emotion conveyed by the sentence; however, this study extracts emotional values only from the user’s image, following the flowchart in Fig. 4.

Figure 4: Processing diagram of the whole process of creating emotions in the robot based on the hybrid model

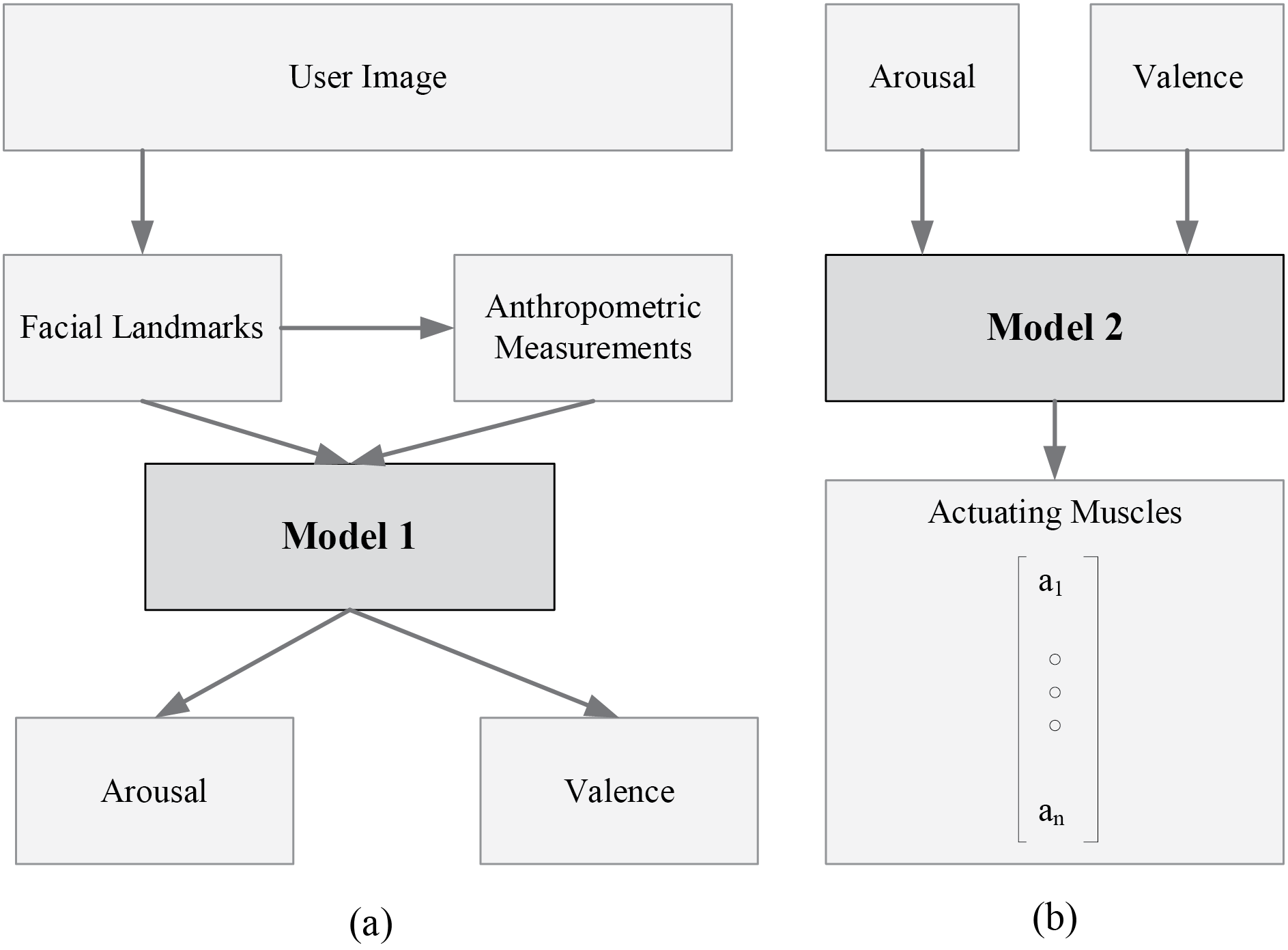

The process is divided into two stages to speed up the system’s calculations and help the robot express human emotions in real time. The robotic head is designed to imitate the user with genuine emotions during conversation. This is foundational research toward robots capable of remembering and learning facial emotions. In Stage 1, emotional values are extracted from facial landmark movement using the Fuzzy C-Means (FCM) model; its flowchart is depicted in Fig. 5a. The output of this stage, the arousal and valence values, is the input to Stage 2, which calculates the rotation angles of the motors that generate the robot’s emotions using the approach described in Fig. 5b.

Figure 5: The two stages of the process for calculating robot emotions: (a) Stage 1 and (b) Stage 2

3.1 Stage 1. Determining the Emotional Values

In this stage, the desired emotional values are determined from the moving parts of the user’s face. The input is the user image collected during communication, and the output is the emotional values, which are the input for Stage 2. Images are used to extract these values because facial expressions, gestures, and attitudes are clearly visible in them; images can therefore convey emotions automatically and nonverbally. The movement of four facial parts is detected based on the results of a previous study [29]. Various studies have shown that different expressions produce different displacements of these landmarks [48]; the four key parts considered are the eyebrows, eyes, lips, and mouth, as explained below.
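As a minimal sketch of how part-level displacement features could be derived, assuming landmarks are already detected and normalized for scale and head pose, the code below averages each part’s landmark displacement relative to the neutral reference face. The landmark indices in `PARTS` and the toy coordinates are hypothetical placeholders; the study’s own key points would replace them.

```python
import numpy as np

# Hypothetical landmark indices per facial part; in the study these would be the
# Farkas key points (e.g., ex-ps-en-pi for an eye, chl-lia-chr-lib for the mouth).
PARTS = {
    "right_eye": [0, 1, 2, 3],
    "mouth": [4, 5, 6, 7],
}

def displacement_features(neutral: np.ndarray, current: np.ndarray) -> dict[str, np.ndarray]:
    """Mean (dx, dy) displacement of each part's landmarks relative to the neutral face.

    Both inputs have shape (n_landmarks, 2) and are assumed to be already normalized
    for scale and head pose. For the neutral face itself every feature is zero.
    """
    neutral = np.asarray(neutral, dtype=float)
    current = np.asarray(current, dtype=float)
    if neutral.shape != current.shape or neutral.ndim != 2 or neutral.shape[1] != 2:
        raise ValueError("expected two (n_landmarks, 2) arrays of equal shape")
    delta = current - neutral                      # per-landmark displacement vectors
    return {part: delta[idx].mean(axis=0) for part, idx in PARTS.items()}

# Toy usage: two mouth landmarks move up by 2 px (image y grows downward).
neutral = np.zeros((8, 2))
smile = neutral.copy()
smile[[4, 6], 1] = -2.0
features = displacement_features(neutral, smile)
print(features["mouth"])
```

Averaging per part keeps the feature vector small, which matches the goal of feeding only a few inputs into the clustering model; a real pipeline might keep per-landmark displacements instead.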

Human emotions are produced by combinations of the 44 action units in the Facial Action Coding System (FACS) [50], and here emotions are digitized into two values by Fuzzy C-Means based on the displacement of facial parts. For example, happiness is shown by raised eyebrows, wide-open eyes, raised lips, and a wide-open mouth. During initialization, neutral faces are collected as the reference, so the displacements of all parts and key points under consideration are zero in this state ($D_{all} = 0$). Eye movement is determined by the four key points used in human emotion analysis studies (ex-ps-en-pi), which are the same for both eyes. The mouth is defined by a figure passing through four points (chl-lia-chr-lib). Emotions are created by complex transformations of facial muscles, which cannot be fully reproduced on robots because of the muscles’ complexity. Therefore, to create realistic movements for the robot, other facial points are also investigated, such as g, gn, prn, so (×2), ft (×2), and pg. Ekman et al. [51] demonstrated that facial points shift when emotions are generated; this study considers only the points with large shifts. The model that determines valence and arousal is built on six inputs, which are essentially the shifts of 20 key points, so a Fuzzy C-Means model is proposed. In this stage, data collected from participants in previous research [29] are combined with the Cohn-Kanade (CK+) dataset [52] to survey landmark movement, and the evaluation process is based essentially on the movement of landmarks. Each point is considered to include 2 values

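Fuzzy C-Means (FCM) is an unsupervised clustering algorithm based on fuzzy clustering theory that allows each data point $d_i$ to belong to more than one cluster [53]. Its key components are the fuzzy membership function, the partition matrix, and the objective function. The partition matrix $U = [u_{ij}]$, also known as the membership matrix, gives the degree $u_{ij} \in [0, 1]$ to which data point $d_i$ belongs to cluster $j$, with $\sum_{j=1}^{c} u_{ij} = 1$. The fuzzy cluster centers $c_j$ are computed as

$$c_j = \frac{\sum_{i=1}^{N} u_{ij}^{m} d_i}{\sum_{i=1}^{N} u_{ij}^{m}} \qquad (3)$$

where $m > 1$ defines the fuzziness of the resulting clusters and $N$ is the number of data points. Then, the new membership values are updated as

$$u_{ij} = \frac{1}{\sum_{k=1}^{c} \left( \dfrac{\lVert d_i - c_j \rVert}{\lVert d_i - c_k \rVert} \right)^{2/(m-1)}} \qquad (4)$$

where $\lVert \cdot \rVert$ is the Euclidean distance and $c$ is the number of clusters. The objective function minimized by FCM is

$$J = \sum_{i=1}^{N} \sum_{j=1}^{c} u_{ij}^{m} \lVert d_i - c_j \rVert^{2} \qquad (5)$$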

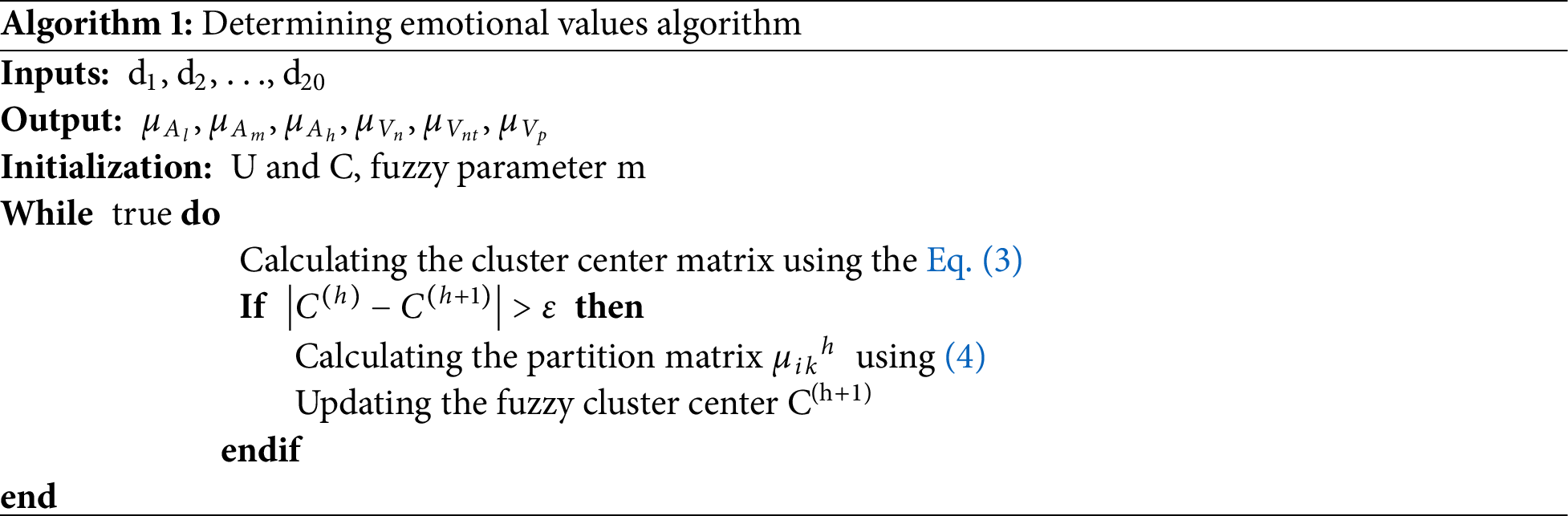

The goal is to find the partition matrix U and the centers C that minimize the overall distance between the data points and their assigned centroids. The algorithm iteratively updates the membership values and centroids until convergence, at which point the objective function reaches a (local) minimum. The steps are performed in sequence: first, the number of clusters c is chosen and the partition matrix is initialized; next, the fuzzy centers are calculated using (3); then the partition matrix is updated using (4). These steps are repeated until J in (5) stops decreasing.
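The iteration described above can be sketched in NumPy as follows. This is a generic FCM implementation under stated assumptions (random initialization of U, a tolerance on the change in J, and fuzziness m = 2 by default), not the authors’ code; the function name `fuzzy_c_means` and its parameters are hypothetical.

```python
import numpy as np

def fuzzy_c_means(data, c, m=2.0, max_iter=300, tol=1e-6, seed=0):
    """Generic Fuzzy C-Means clustering (illustrative sketch).

    data: (N, d) array whose rows are the data points d_i.
    c:    number of clusters; m > 1 is the fuzziness exponent.
    Returns (centers with shape (c, d), partition matrix U with shape (N, c), final J).
    """
    data = np.asarray(data, dtype=float)
    rng = np.random.default_rng(seed)
    u = rng.random((data.shape[0], c))
    u /= u.sum(axis=1, keepdims=True)              # each row of U sums to 1
    j_prev = np.inf
    for _ in range(max_iter):
        um = u ** m
        # Eq. (3): centers are membership-weighted means of the data points.
        centers = (um.T @ data) / um.sum(axis=0)[:, None]
        # Distances ||d_i - c_j||, floored to avoid division by zero.
        dist = np.linalg.norm(data[:, None, :] - centers[None, :, :], axis=2)
        dist = np.fmax(dist, 1e-12)
        # Eq. (4): u_ij = 1 / sum_k (dist_ij / dist_ik)^(2 / (m - 1)).
        ratio = (dist[:, :, None] / dist[:, None, :]) ** (2.0 / (m - 1.0))
        u = 1.0 / ratio.sum(axis=2)
        # Eq. (5): J = sum_i sum_j u_ij^m ||d_i - c_j||^2, evaluated with the updated U.
        j = float(np.sum(u ** m * dist ** 2))
        if abs(j_prev - j) < tol:                  # stop when J no longer changes
            break
        j_prev = j
    return centers, u, j

# Toy usage: two well-separated groups of 2-D feature vectors.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-1.0, 0.1, (20, 2)), rng.normal(1.0, 0.1, (20, 2))])
centers, U, J = fuzzy_c_means(X, c=2)
print(np.round(centers, 2))
```

In the setting described here, each row of `data` would be a displacement feature vector, and separate models would be fit for arousal and for valence with c = 3 clusters each; FCM only finds a local minimum, so results can depend on the random initialization.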

The model outputs arousal and valence values, which are defined as the emotional values; two FCM models are used to determine the degree of membership in each level of arousal and of valence. Arousal has three levels, namely low, moderate, and high, denoted by

The FCM model determines the degree of membership of each data point, which reflects how strongly the point belongs to each cluster. A data point with a membership close to 1 for a particular cluster strongly belongs to that cluster, while a membership close to 0 indicates that it does not belong to that cluster. The outputs passed to the next stage include

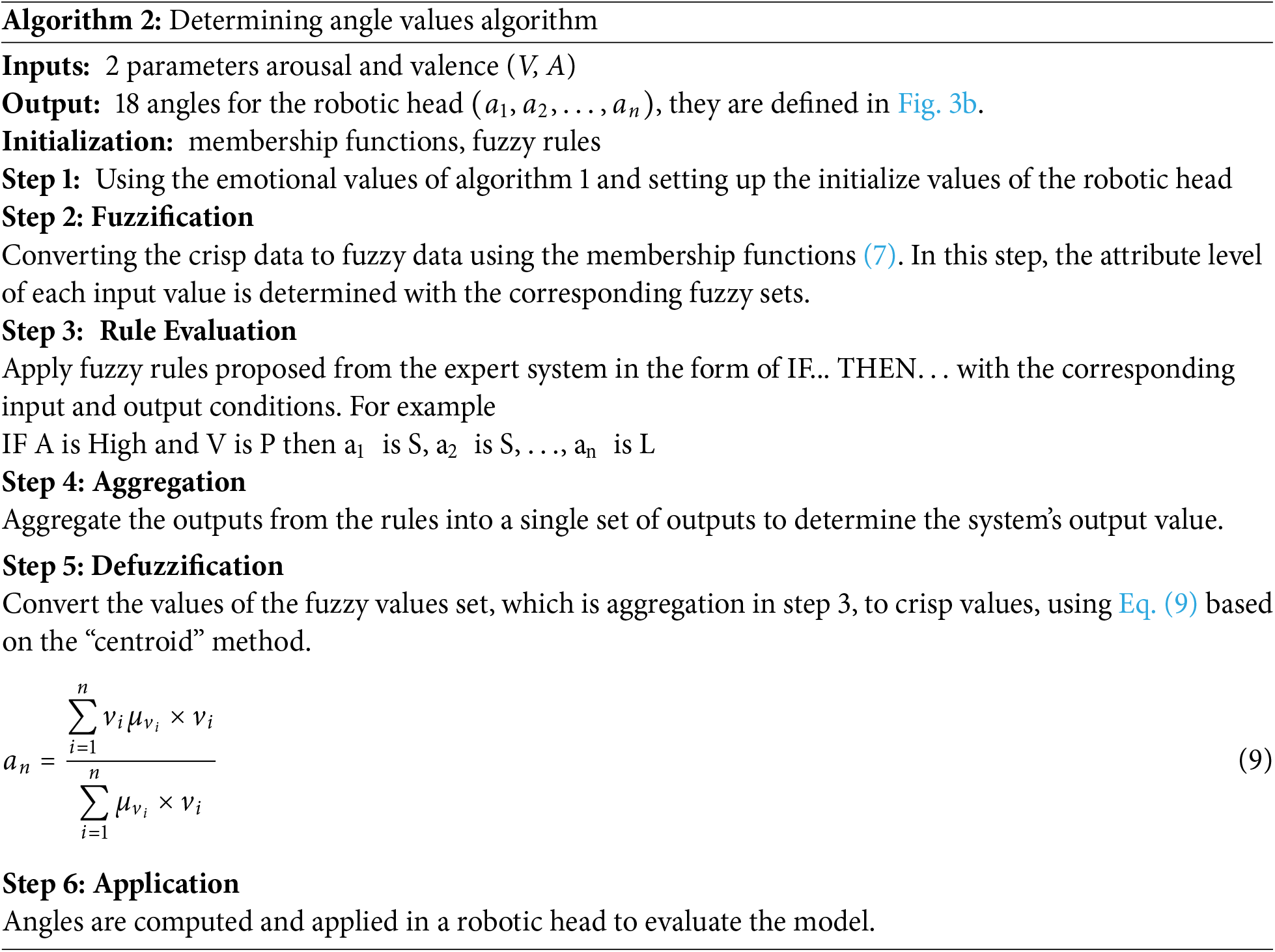
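Once cluster centers have been learned, the membership of a new sample in each level follows from the same update rule as Eq. (4), rewritten as $u_j \propto \lVert x - c_j \rVert^{-2/(m-1)}$. The sketch below assumes three trained centers ordered as low, moderate, and high; that ordering, the names `memberships` and `level_of`, and the 1-D toy centers are hypothetical.

```python
import numpy as np

LEVELS = ("low", "moderate", "high")

def memberships(x, centers, m=2.0):
    """FCM membership of one sample in each fixed cluster center (same rule as Eq. (4))."""
    x = np.asarray(x, dtype=float)
    dist = np.linalg.norm(np.asarray(centers, dtype=float) - x, axis=1)
    if np.any(dist == 0.0):
        # The sample coincides with a center: full membership in that cluster.
        u = (dist == 0.0).astype(float)
        return u / u.sum()
    inv = dist ** (-2.0 / (m - 1.0))   # equivalent form of Eq. (4)
    return inv / inv.sum()

def level_of(x, centers):
    """Return the dominant level and the full membership vector.

    Assumes the centers are ordered as (low, moderate, high) after training.
    """
    u = memberships(x, centers)
    return LEVELS[int(np.argmax(u))], u

# Toy 1-D centers standing in for trained arousal clusters.
centers = np.array([[0.0], [0.5], [1.0]])
label, u = level_of([0.8], centers)
print(label, np.round(u, 3))
```

Keeping the whole membership vector, rather than only the dominant label, preserves the graded information (e.g., partly moderate, mostly high) that the next stage can exploit.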

3.2 Stage 2. Determining the Actuating Muscles

A fuzzy emotion inference system is set up to calculate the motor angles from mappings between inputs and outputs, inferring facts from a knowledge base. This stage determines the motor angles that produce the robotic skin’s movements. Human emotions are quantities that cannot be expressed in absolute or binary terms, so the angles are calculated from the emotional values using empirically established fuzzy rules. Fuzzy logic allows flexible evaluation and processing of diverse data: fuzzy sets and rules can describe complex relationships between input and output variables. This makes the model applicable to many situations and variations without defining a separate rule for every specific case in advance. The method allows the motor or actuator angles to be adjusted to achieve a specified goal. Depending on the value of each emotion, the system suggests suitable angles so that the pseudo-muscle bundles represent the emotion more realistically. Emotions are thus analyzed in depth by considering the level of each emotion, for example, slightly happy, happy, and very happy.

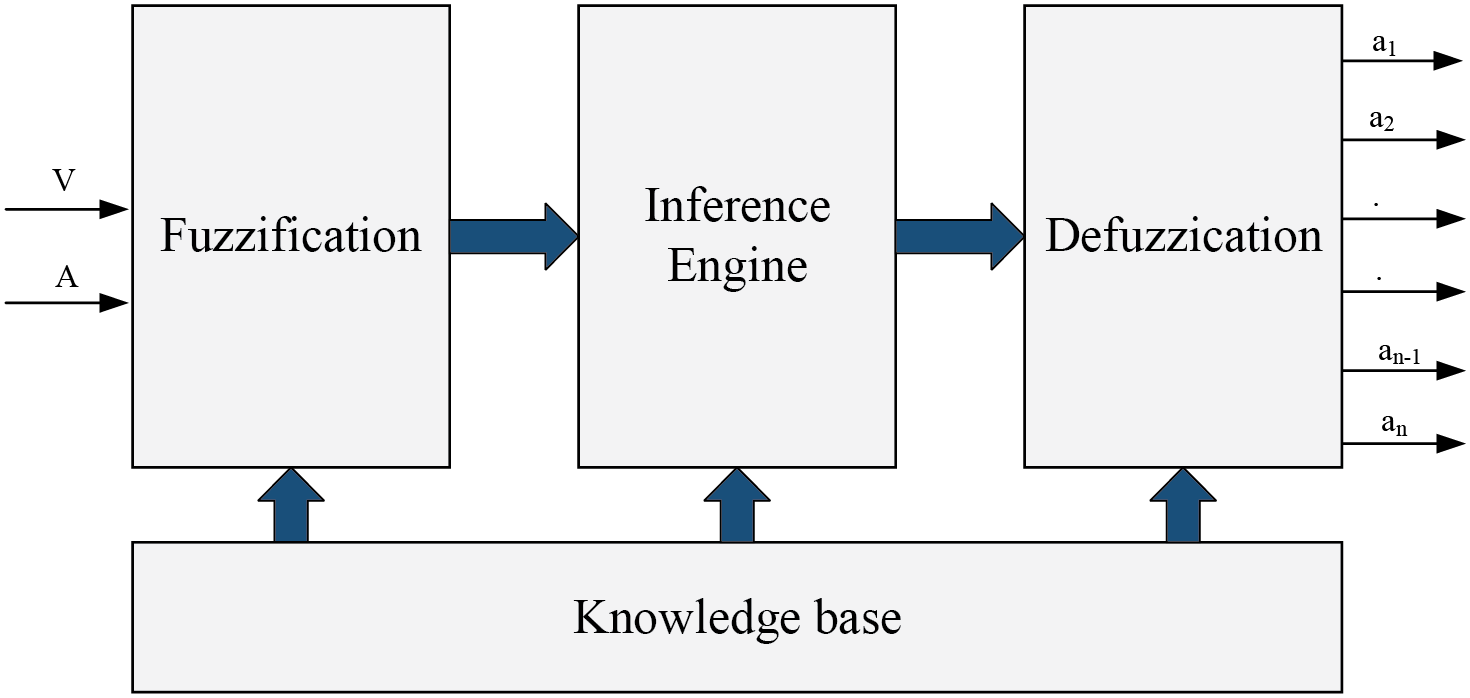

As shown in Fig. 6, the proposed system consists of three main steps: fuzzification, the inference engine, and defuzzification. In the fuzzification step, crisp data are transformed into fuzzy data through membership functions, turning the inputs into fuzzy linguistic variables. These steps are important because they allow calculation with fuzzy data and decision-making based on fuzzy membership degrees. The inference engine then determines, through the rules, the degree to which the input variables relate to each output.
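The fuzzification step can be illustrated with triangular membership functions of the kind the text uses (TFNs). The sketch assumes arousal is normalized to [-1, 1] and uses hypothetical breakpoints for the Low, Moderate, and High sets; the outer feet are extended past the interval so that the endpoints receive full membership.

```python
def tfn(x: float, a: float, b: float, c: float) -> float:
    """Triangular membership with feet a and c and peak b (requires a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

# Hypothetical linguistic terms for arousal normalized to [-1, 1].
AROUSAL_TERMS = {
    "Low": (-2.0, -1.0, 0.0),
    "Moderate": (-1.0, 0.0, 1.0),
    "High": (0.0, 1.0, 2.0),
}

def fuzzify(x: float, terms=AROUSAL_TERMS) -> dict[str, float]:
    """Map a crisp value to its membership degree in every linguistic term."""
    return {name: tfn(x, *abc) for name, abc in terms.items()}

print(fuzzify(0.4))  # partial membership in Moderate and High, none in Low
```

With evenly spaced, overlapping triangles like these, the memberships of any input inside [-1, 1] sum to 1, which keeps the later rule weighting easy to interpret.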

Figure 6: The FIS model in Stage 2

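Let $V_i$ be a fuzzy set on the universal set $V$, defined by its membership function $\mu_{V_i}: V \to [0, 1]$ as

$$V_i = \{ (v, \mu_{V_i}(v)) \mid v \in V \}$$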

The fuzzy system maps input variables to output variables using an expert system of IF-THEN rules. The fuzzification process converts crisp input and output variables into sets of fuzzy variables. Each crisp arousal value is represented by linguistic terms such as “High”, “Moderate”, and “Low” through membership functions. The triangular fuzzy number (TFN) is used to handle uncertainty; a TFN with lower bound $a$, peak $b$, and upper bound $c$ ($a < b < c$) has the membership function defined in Eq. (7):

$$\mu(x) = \begin{cases} 0, & x \le a \\ \dfrac{x - a}{b - a}, & a < x \le b \\ \dfrac{c - x}{c - b}, & b < x < c \\ 0, & x \ge c \end{cases} \qquad (7)$$

After this stage, the angles calculated by the FIS model are applied to the robotic head. To our knowledge, there is currently no way to quantify emotions accurately. Robot interaction studies often use only six basic emotions for interaction tasks [12], and as a result some android robots can frighten people through their gestures and emotions. This study aims to create a robotic head that expresses emotions in a genuine, human-like way, with emotions that include subtle movements of the facial landmarks. The pseudo-code for determining the angles of the robotic head is given in Algorithm 2 below.

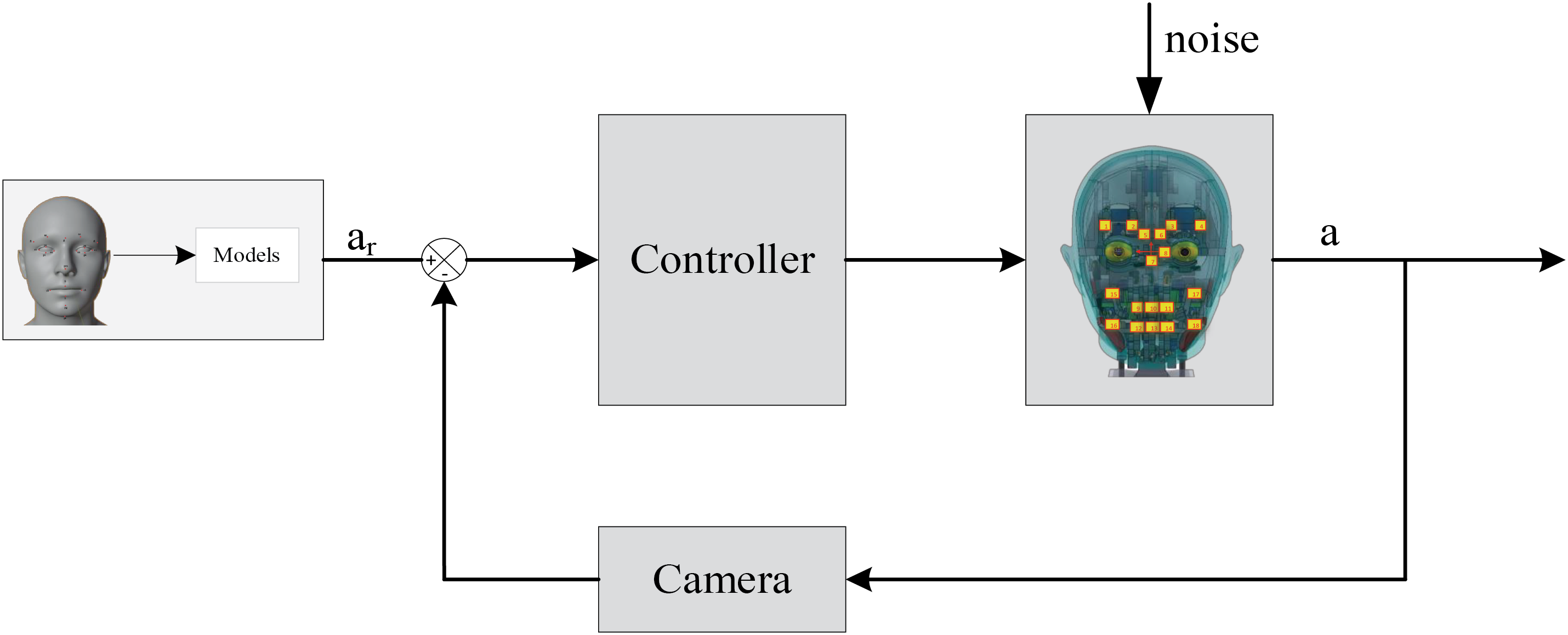
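As an executable reference point, the following is a generic Mamdani-style sketch of one motor’s angle inference (min for AND, max aggregation, centroid defuzzification). It is not a transcription of Algorithm 2: the linguistic terms, the nine rules, and the 0° to 90° output range are hypothetical placeholders chosen only to show how valence and arousal flow through fuzzification, rule firing, and defuzzification.

```python
import numpy as np

def tri(x, a, b, c):
    """Vectorized triangular membership with feet a, c and peak b (a < b < c)."""
    x = np.asarray(x, dtype=float)
    rising = (x - a) / (b - a)
    falling = (c - x) / (c - b)
    return np.clip(np.minimum(rising, falling), 0.0, 1.0)

# Hypothetical input terms on [-1, 1]; outer feet extended so endpoints reach 1.
VALENCE = {"neg": (-2.0, -1.0, 0.0), "neu": (-1.0, 0.0, 1.0), "pos": (0.0, 1.0, 2.0)}
AROUSAL = {"low": (-2.0, -1.0, 0.0), "mod": (-1.0, 0.0, 1.0), "high": (0.0, 1.0, 2.0)}
# Hypothetical output terms for a single motor angle on [0, 90] degrees.
ANGLE = {"small": (-45.0, 0.0, 45.0), "medium": (0.0, 45.0, 90.0), "large": (45.0, 90.0, 135.0)}
# Hypothetical rules: IF valence is V AND arousal is A THEN angle is Y.
RULES = {
    ("neg", "low"): "small", ("neg", "mod"): "small", ("neg", "high"): "medium",
    ("neu", "low"): "small", ("neu", "mod"): "medium", ("neu", "high"): "medium",
    ("pos", "low"): "medium", ("pos", "mod"): "large", ("pos", "high"): "large",
}

def infer_angle(valence, arousal, resolution=901):
    """Mamdani inference: min for AND, max aggregation, centroid defuzzification."""
    y = np.linspace(0.0, 90.0, resolution)          # discretized output universe
    aggregated = np.zeros_like(y)
    for (v_term, a_term), out_term in RULES.items():
        # Firing strength of the rule (fuzzy AND = minimum of antecedent degrees).
        strength = min(float(tri(valence, *VALENCE[v_term])),
                       float(tri(arousal, *AROUSAL[a_term])))
        if strength > 0.0:
            # Clip the consequent set at the firing strength, then aggregate by max.
            aggregated = np.maximum(aggregated, np.minimum(strength, tri(y, *ANGLE[out_term])))
    if aggregated.sum() == 0.0:
        raise ValueError("no rule fired; inputs are outside the defined universes")
    return float(np.sum(y * aggregated) / np.sum(aggregated))

for v, a in [(-1.0, -1.0), (0.0, 0.0), (0.8, 0.6)]:
    print(v, a, round(infer_angle(v, a), 1))
```

In a full system, one such rule base (or one output per rule) would exist for each of the robot’s motors, and the defuzzified angles together form the angle matrix for an emotion.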

This study digitizes emotions using a hybrid fuzzy model that decides the motor angles; such models also serve many other fields [54,55]. The emotional values calculated from the images are fed into the model to calculate the motor rotation angles and create realistic emotions for the robot. This version focuses on accurately reproducing emotions: when the input image shows happiness, the algorithms compute angles that let the robot faithfully replicate that emotion. Rules for responding with more complex emotions will be added in future research to enhance human-robot interaction and create more natural and profound conversations. The controller follows the scheme proposed in Fig. 7, with feedback given by landmark positions extracted from images, which ensures that the actuators perform with high precision [55]. When the controller receives angle signals from the calculation system, it drives the control points on the robot to create emotions. The control system is closed-loop to compensate for disturbances from the environment, especially the non-uniform elasticity of the artificial skin across the robot’s face. The rotation-angle error is extracted from images of the robot captured by a digital camera. With this control, the robot’s emotions are calibrated against the reference angles computed by the fuzzy model. The inputs calculated from the models are represented in matrix form, and controllers are set up for the motors. The output of the fuzzy calculation system is given in Eq. (10), and $a$ represents the actual angles, given in Eq. (11). The controller is designed to control the motors synchronously to create emotions, with the goal of minimizing the error between the computed and measured angles. 
Images are processed in real time, ensuring that the motors rotate sufficiently to reach the setpoints.
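The control law is not specified in this passage, so the sketch below shows only one simple possibility under stated assumptions: an integral-style correction that adjusts each motor command by a fraction `k_i` of the camera-measured angle error until every error falls below a tolerance. The simulated plant, in which hypothetical per-motor gains below 1 stand in for the non-uniform skin elasticity, and all names are illustrative.

```python
import numpy as np

def closed_loop(theta_ref, measure, k_i=0.5, tol=0.5, max_steps=50):
    """Correct motor commands until camera-measured angles track the references.

    theta_ref: reference angles from the fuzzy model (degrees), one per motor.
    measure:   callable returning the measured actual angles for given commands
               (in the real system, derived from landmark positions in camera images).
    Update law (integral-style, an assumption): command += k_i * (theta_ref - actual).
    Returns (commands, measured angles, number of measurement steps).
    """
    theta_ref = np.asarray(theta_ref, dtype=float)
    command = theta_ref.copy()                 # open-loop start at the setpoint
    for step in range(1, max_steps + 1):
        actual = measure(command)
        error = theta_ref - actual
        if np.max(np.abs(error)) < tol:        # all motors within tolerance
            return command, actual, step
        command = command + k_i * error
    return command, measure(command), max_steps

# Simulated plant: hypothetical per-motor gains < 1 mimic skin that resists stretching.
skin_gain = np.array([0.8, 0.9, 0.7])

def simulated_camera(cmd):
    return skin_gain * cmd

cmd, actual, steps = closed_loop([40.0, 25.0, 55.0], simulated_camera)
print(steps, np.round(actual, 2))
```

Each motor ends up commanded beyond its reference so that the stiffer skin regions still reach the target angle; with these gains the error shrinks by a factor of 1 - k_i·gain per step, so convergence requires 0 < k_i·gain < 2.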

Figure 7: Illustrate the control diagram of the system

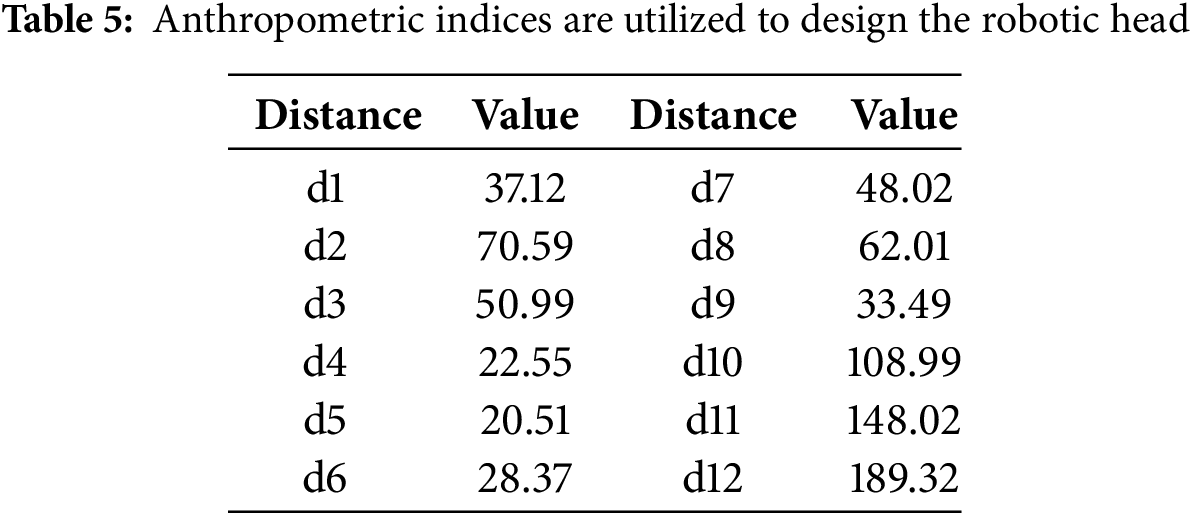

FCM is proposed because the emotion survey revealed ambiguity and similarity among the basic emotions, and this uncertainty is well captured by the Fuzzy C-Means algorithm. Two experiments are set up to evaluate the effectiveness of this approach. The first evaluates the emotional differences between robots and humans; the second evaluates the similarity of the emotions produced on the robot head. The proposed models are then compared with models using default parameters to demonstrate that the proposed model fits this goal; the comparison is not meant to disparage other models. The robotic head is designed based on the Vietnamese anthropometric index, and the parameters are evaluated in general terms to provide suitable design data comprising 12 distances, as listed in Table 5. The aim is an android robotic head that looks human and has local facial features. The average values of the surveyed measurements were calculated, and the robot was designed from these parameters. Because the parameters serve as a theoretical basis for the design, small measurement errors need not be considered.

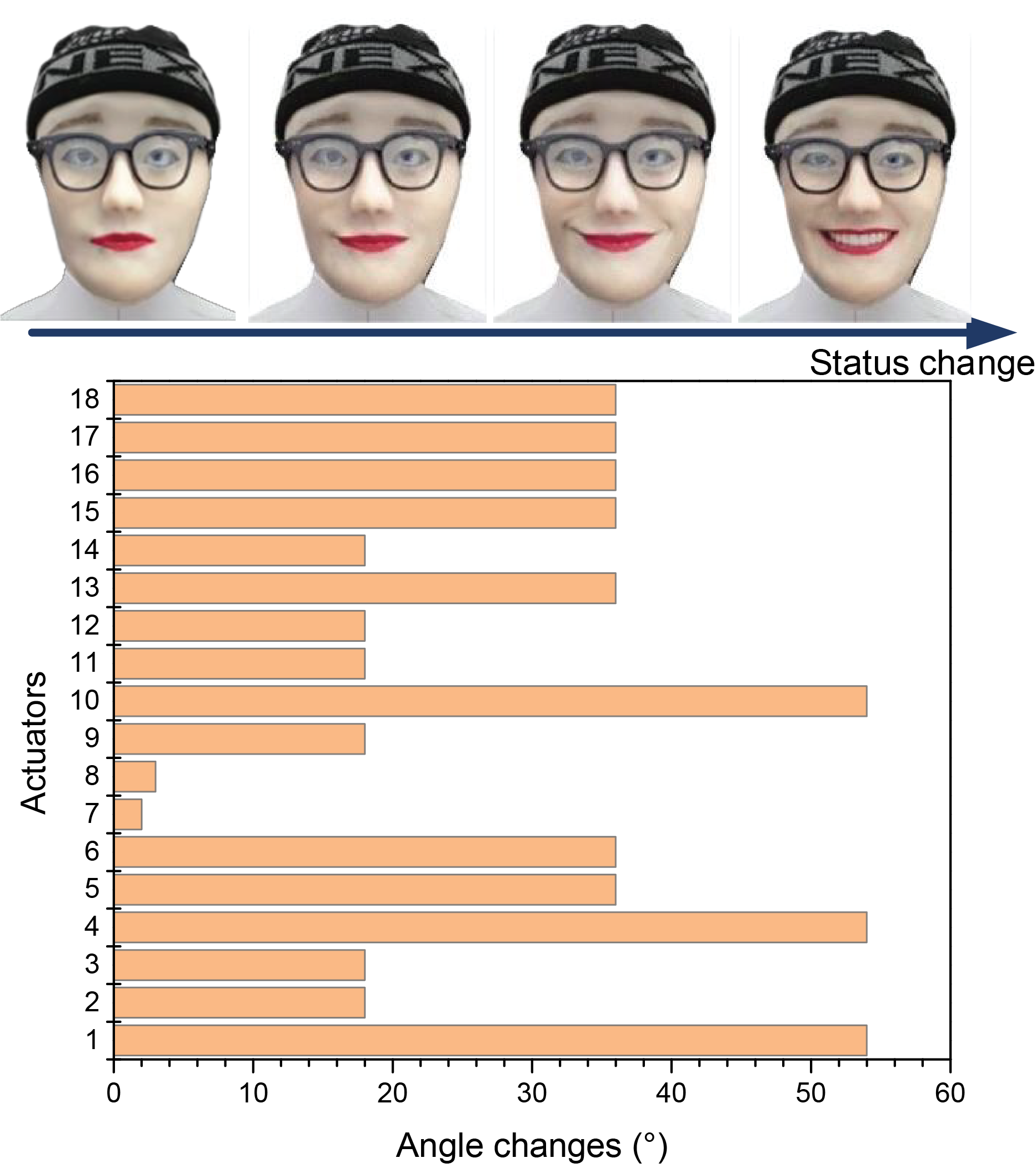

The robot's emotions are formed by the displacement of 18 motors, and the response time is evaluated from the change in motor angle under synchronous control of all motors. The response speed of the motors is measured from the microcontroller signal to evaluate the responsiveness of the robotic head. When a motor is commanded to rotate from 90° to 0°, its response time is 0.45 s, which is fast enough for the robot's expressions and movements to be rendered in real time. The transition from neutral to happiness was also surveyed; it involves movements mainly in the eyebrows and mouth, with a largest angular change of 55° and a longest response time of 0.27 s. Because the motors are controlled synchronously, the time to complete an emotion equals the response time of the motor with the largest angular displacement. Fig. 8 shows the angular variation and response times of the 18 actuators as the robot transitions from a neutral to a happy state. The angle values are decided by the FIS based on the input requirements. Rather than being set instantly, they are adjusted continuously according to the previously established rules, transitioning smoothly between emotional states as a function of two values, valence and arousal.
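As a rough consistency check, assume each motor turns at the constant speed observed in the 90° to 0° test, 0.45 s / 90° = 0.005 s per degree. A 55° change then takes about 0.275 s, close to the measured 0.27 s. The sketch below encodes that constant-speed assumption together with linear interpolation of intermediate states, so that all motors start and finish together; the speed model, the function names, and the example target angles are hypothetical, not the authors' controller.

```python
import numpy as np

# Assumption: constant angular speed taken from the 90° -> 0° test (0.45 s over 90°).
SECONDS_PER_DEGREE = 0.45 / 90.0

def completion_time(start_angles, target_angles):
    """Time to finish an expression under synchronous control (hypothetical model).

    All motors finish together, so the duration is set by the motor
    with the largest angular displacement.
    """
    delta = np.abs(np.asarray(target_angles, float) - np.asarray(start_angles, float))
    return float(delta.max() * SECONDS_PER_DEGREE)

def intermediate_states(start_angles, target_angles, n_steps):
    """Linearly interpolated angle vectors; row 0 is the start, row n_steps the target."""
    start = np.asarray(start_angles, float)
    target = np.asarray(target_angles, float)
    t = np.linspace(0.0, 1.0, n_steps + 1)[:, None]  # shared progress for every motor
    return start + t * (target - start)

if __name__ == "__main__":
    neutral = np.full(18, 90.0)          # hypothetical neutral pose for 18 motors
    happy = neutral.copy()
    happy[0], happy[1] = 145.0, 60.0     # hypothetical mouth and eyebrow targets
    print(completion_time(neutral, happy))
    print(intermediate_states(neutral, happy, 5).shape)
```

Sharing one progress variable across all motors is what makes the control synchronous: motors with small displacements simply move more slowly than the one with the largest displacement.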

Figure 8: Response of the actuators as the robot transitions from neutral to happy through intermediate states

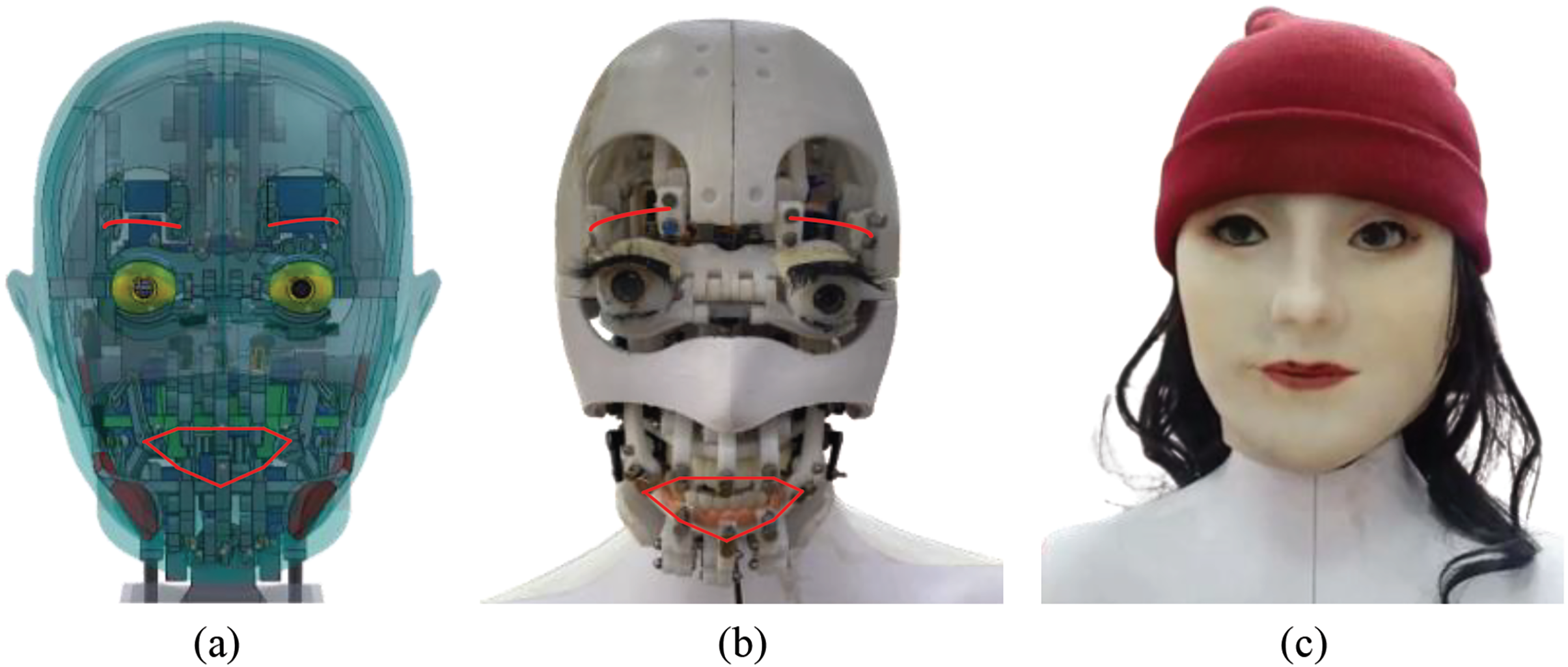

Designing a robot head model in software and in physical form is a complex process. The parts are designed from the given parameters, respecting both the dimensions of the main parts and the overall dimensions of the robot, while the rotation angles of the motors are limited to ranges similar to those of the corresponding human parts. The robotic head is designed as shown in Fig. 9, with the parameters listed in Table 4: Fig. 9a shows the rendering of the design in software, Fig. 9b shows the internal structure, and Fig. 9c shows the actual appearance of the humanoid robot. Emotions are created mainly by the eyebrows, eyelids, eyes, and especially the mouth, following the theories presented above. Human eyes can move through a maximum angle of 70°, and the robot's eyes through a maximum of 72.6°; similarly, the jaw-lip angle is 41° for humans and 49.2° for the robot. The mechanical design thus ensures that emotions can be generated on the robot in a way similar to a human. Emotions are computed by the rules of the fuzzy models to suggest the motor angles, and the control algorithm is built to control the moving points on the robot accurately. The models are fabricated by plastic 3D printing, so components can be tested and adjusted to ensure portability and the reproduction of realistic facial expressions. Russell's circumplex model of affect [42], from psychology, is adapted to analyze emotional values: the robot's emotions are decomposed into two values, as shown in Fig. 10.
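To make the fuzzy angle computation concrete, the sketch below implements a small zero-order Sugeno-style fuzzy inference system that maps valence and arousal in [-1, 1] to a single motor angle, using min for AND and a weighted average for defuzzification. The triangular membership functions, the 3 × 3 rule table, and the output angles (in degrees, with 90° as neutral) are hypothetical illustrations; the paper's actual FIS, rule base, and defuzzification method are not reproduced here.

```python
# Hypothetical triangular fuzzy sets (left foot, peak, right foot) on [-1, 1].
SETS = {"low": (-1.0, -1.0, 0.0), "mid": (-1.0, 0.0, 1.0), "high": (0.0, 1.0, 1.0)}

# Hypothetical rule table: RULES[valence_set][arousal_set] -> mouth-corner angle (deg).
RULES = {
    "low":  {"low": 70.0, "mid": 65.0, "high": 60.0},
    "mid":  {"low": 90.0, "mid": 90.0, "high": 90.0},
    "high": {"low": 105.0, "mid": 115.0, "high": 125.0},
}

def tri(x, a, b, c):
    """Triangular membership; a == b or b == c gives a shoulder at the range edge."""
    if x <= a or x >= c:
        return 1.0 if x == b else 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

def infer_angle(valence, arousal):
    """Zero-order Sugeno inference over the hypothetical 3 x 3 rule table."""
    v = max(-1.0, min(1.0, float(valence)))        # clip inputs to the universe [-1, 1]
    a = max(-1.0, min(1.0, float(arousal)))
    num = den = 0.0
    for v_set, v_params in SETS.items():
        mu_v = tri(v, *v_params)
        for a_set, a_params in SETS.items():
            w = min(mu_v, tri(a, *a_params))       # firing strength of (v_set AND a_set)
            num += w * RULES[v_set][a_set]
            den += w
    return num / den                               # weighted-average defuzzification

if __name__ == "__main__":
    print(infer_angle(0.5, 0.0))                   # halfway between neutral and positive
```

Because neighbouring fuzzy sets overlap, the output angle changes smoothly as valence and arousal change, which matches the gradual transitions between emotional states described for Fig. 8.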

Figure 9: Design of the robotic head in software and reality; (a) Rendered design; (b) Internal structure; (c) Appearance of the robot

Figure 10: The values of six primary emotions expressed on the robotic head

Unlike previous studies, the emotional assessments on the robotic head covered both the fundamental emotions and three levels of each emotion (little, medium, and much). Levels are included because the same emotion can form with different intensities depending on what produces it. Following Russell's model, each emotion is computed from two values, and the basic emotions are expressed with the linguistic values defined in Table 4. The desired emotion is expressed clearly at different levels and evaluated by the expert system, with the aim of calculating the rotation angles that create the emotions on the robot. Emotions cannot be fully assessed because they are uncertain and cannot be quantified in absolute terms. Fuzzy models, which are built on human-like reasoning, can handle this uncertainty, and this is the main reason the approach is proposed.
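One common way to read Russell's circumplex is in polar form: the angle of a (valence, arousal) point indicates which emotion it is, and its distance from the origin indicates intensity. The sketch below converts a pair to that form and buckets the magnitude into the three survey levels. The polar reading, the equal-width thresholds at 1/3 and 2/3, and the function names are assumptions for illustration, not the linguistic values of Table 4.

```python
import math

def circumplex_polar(valence, arousal):
    """Return (angle in degrees in [0, 360), magnitude clipped to 1) for a valence-arousal point."""
    angle = math.degrees(math.atan2(arousal, valence)) % 360.0
    magnitude = min(math.hypot(valence, arousal), 1.0)
    return angle, magnitude

def intensity_level(magnitude, edges=(1.0 / 3.0, 2.0 / 3.0)):
    """Map a magnitude to one of the survey's three levels (hypothetical equal-width thresholds)."""
    if magnitude < edges[0]:
        return "little"
    if magnitude < edges[1]:
        return "medium"
    return "much"

if __name__ == "__main__":
    # Hypothetical point: strongly positive valence, moderate arousal.
    angle, mag = circumplex_polar(0.7, 0.4)
    print(round(angle, 1), round(mag, 2), intensity_level(mag))
```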

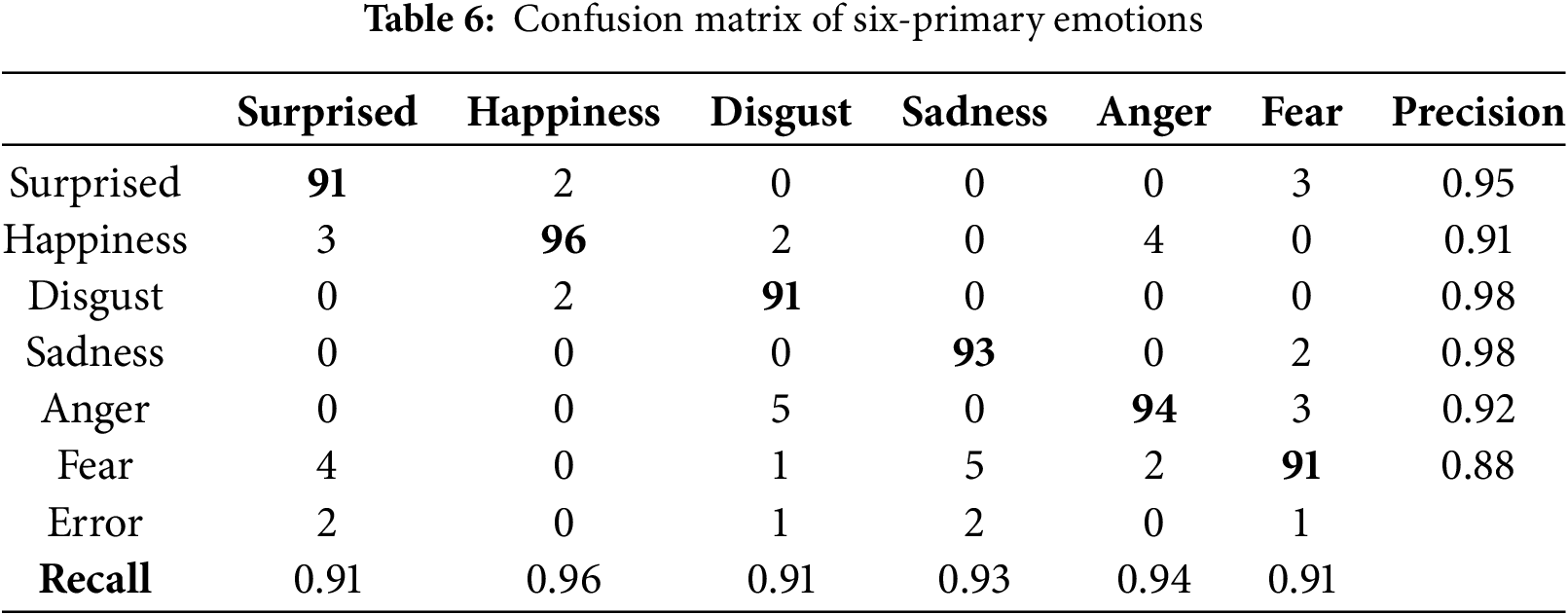

The emotion recognition experiment involved 100 participants (mean age 23.68 ± 2.79 years; 63% female). Each participant received a survey to evaluate the emotions expressed by the robot. Each row had seven main options: sadness, happiness, anger, fear, disgust, surprise, and unknown. Each main option had columns indicating the level of that emotion: little, medium, or much. These levels correspond to different emotional adjectives, similar to secondary emotions, but only three levels were offered to keep the survey easy to understand. Participants were instructed to check the option they believed most appropriate for each expression the robotic head made, and to check the unknown option if they could not identify the expression as it was being formed. The facial expressions, evaluated through the primary emotions, are summarized in the confusion matrix in Table 6, and the recall and precision values are calculated by Eq. (12). Evaluated by precision and recall, the robotic head's emotional expressions reached an overall accuracy of 92.67%; per-emotion precision was highest for disgust and sadness (0.98) and lowest for fear (0.88), and the recall of every emotion expressed on the robot exceeded 0.91. These results indicate that the emotions expressed on the robot's head are well recognized. However, some expressions were not identified as the intended emotion. These errors are attributed to the uncertainty of emotions, which places some values at the margins between the levels of each emotional value.
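Precision, recall, and accuracy can be computed from a survey confusion matrix as sketched below. Eq. (12) is not reproduced in this section, so the sketch assumes the standard definitions, with intensity levels pooled, rows as the emotion displayed by the robot, and columns as the participants' answers plus a final "unknown" column that counts against recall but is not a predicted class. The two-emotion matrix in the usage example is invented and is not Table 6.

```python
import numpy as np

def survey_metrics(cm):
    """Precision, recall and accuracy from a survey confusion matrix.

    cm has shape (k, k + 1): rows are the emotions displayed by the robot,
    the first k columns are the emotions chosen by participants, and the
    last column counts "unknown" answers (assumed layout).
    """
    cm = np.asarray(cm, dtype=float)
    k = cm.shape[0]
    tp = np.diag(cm[:, :k])                   # correctly identified displays
    precision = tp / cm[:, :k].sum(axis=0)    # per chosen emotion; "unknown" is not a prediction
    recall = tp / cm.sum(axis=1)              # per displayed emotion; "unknown" counts as a miss
    accuracy = tp.sum() / cm.sum()
    return precision, recall, accuracy

if __name__ == "__main__":
    # Invented counts for two emotions (happiness, sadness) plus "unknown".
    cm = [[9, 0, 1],
          [1, 8, 1]]
    p, r, acc = survey_metrics(cm)
    print(p, r, acc)
```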

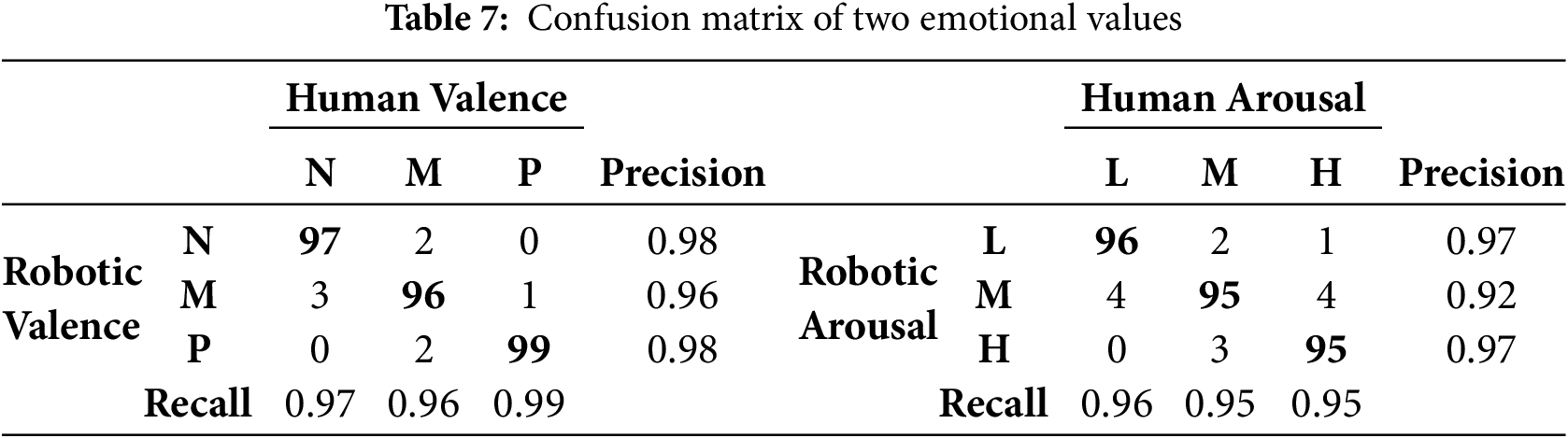

The emotion-specific values consist of two values, based on psychological theories of emotion formation. These values are the input for calculating the rotation angles that reconstruct emotions on the robotic head, helping it express emotions naturally without causing awkward feelings in the user during communication. The following experiment evaluates the accuracy of this process on the robot. Images of human emotions are fed into the model to compute the motor angles ($a_n$) and the human emotional values ($v_h$, $a_h$); images of the robot's resulting expressions are then fed into the model to compute the robot's emotional values ($v_r$, $a_r$), and the two sets of values are compared in the confusion matrix in Table 7, where each emotional value is classified into three levels. The results show that digitizing emotions with the FCM model from the displacement of facial landmarks achieves accuracies of 0.97 for valence and 0.95 for arousal, indicating that the model is suitable for analyzing users' emotions. The FCM model suits this task because its graded memberships capture the ambiguity of human emotional semantics: it does not merely classify emotions as positive or negative but also assesses the level of intermediate emotions. This captures more of the richness and complexity of human moods, allowing the robot to express emotions more realistically.
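The level-based comparison can be illustrated as follows: each continuous value is binned into one of three levels, and accuracy is the fraction of samples whose human value ($v_h$ or $a_h$) and robot value ($v_r$ or $a_r$) fall in the same level. The value range of [-1, 1], the equal-width bin edges at ±1/3, and the sample values are hypothetical; the paper's own level boundaries are not given in this section.

```python
import numpy as np

def to_level(values, edges=(-1.0 / 3.0, 1.0 / 3.0)):
    """Bin values in [-1, 1] into levels 0 (low), 1 (medium), 2 (high)."""
    return np.digitize(np.asarray(values, dtype=float), edges)

def level_agreement(human_values, robot_values):
    """Fraction of samples whose human and robot values fall in the same level."""
    return float(np.mean(to_level(human_values) == to_level(robot_values)))

if __name__ == "__main__":
    v_h = [0.8, -0.6, 0.1, 0.5]   # hypothetical human valence values
    v_r = [0.7, -0.5, 0.2, 0.2]   # hypothetical robot valence values
    print(level_agreement(v_h, v_r))
```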

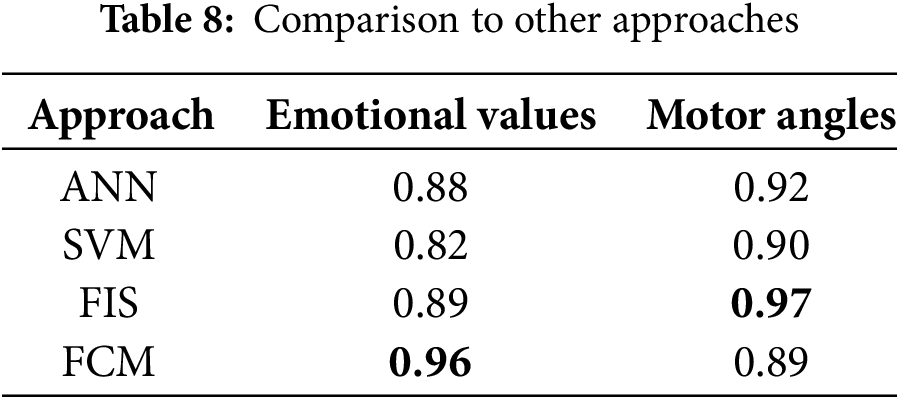

In addition, to evaluate the effectiveness of the FCM model, an artificial neural network (ANN) and a support vector machine (SVM) were implemented in Python with default parameters using available libraries. These comparisons serve only to show that the fuzzy model is suitable for digitizing emotions and representing them on the robot, given the uncertain nature of emotions. Table 8 presents the comparison: FCM digitizes emotions with accuracies of 0.97 for valence and 0.95 for arousal, a mean of 0.96 for the whole digitization process. Finally, the accuracy of the computed motor rotation angles was evaluated and compared with the other models, as listed in Table 8. The accuracy of these angles strongly influences how faithfully the robot represents emotions, so each model was evaluated on this same task. In summary, FCM was well suited to extracting emotional values, while the FIS model achieved the highest accuracy, 0.97, for computing motor angles.
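A default-parameter baseline comparison of the kind described can be set up with scikit-learn as sketched below. The paper does not name its libraries, so using scikit-learn's `MLPClassifier` for the ANN and `SVC` for the SVM is an assumption, as are the train/test split and the seed; synthetic data from `make_classification` stand in for the landmark-displacement features, which are not available here.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC

def compare_default_baselines(X, y, seed=0):
    """Fit ANN and SVM baselines with default hyperparameters and return test accuracies."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=seed, stratify=y
    )
    models = {
        "ANN": MLPClassifier(random_state=seed),  # defaults apart from a fixed seed
        "SVM": SVC(),                             # RBF kernel, C=1.0 by default
    }
    scores = {}
    for name, model in models.items():
        model.fit(X_train, y_train)
        scores[name] = accuracy_score(y_test, model.predict(X_test))
    return scores

if __name__ == "__main__":
    # Synthetic stand-in for landmark-displacement features with three level classes.
    X, y = make_classification(n_samples=300, n_features=10, n_informative=5,
                               n_classes=3, random_state=0)
    print(compare_default_baselines(X, y))
```

With default settings, scikit-learn may warn that the ANN has not fully converged within its default 200 iterations; that is expected when hyperparameters are deliberately left untuned.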

Integrating emotional intelligence into robots has become essential in the rapidly advancing fields of robotics and human-robot interaction. This study explores the application of a fuzzy logic model to predict motor control angles for generating emotional expressions in robots. The results indicate the potential of fuzzy models to enhance robots' ability to respond effectively to human emotions. The authenticity of the robot is further demonstrated through the appearance and movements of its facial muscles. Experiments were conducted to collect human anthropometric data, and emotional values were digitized from key-point movements, which is crucial for improving human-robot interaction. The results highlight the importance of the fuzzy model in bridging the gap between emotion recognition and motor control in a robotic head designed to resemble human features. The experiments evaluating the models' accuracy indicate that these models are suitable, opening promising avenues for future research and development. The combined use of FCM (Fuzzy C-Means) and FIS (Fuzzy Inference System) is a significant step toward robots capable of expressing emotions realistically. The proposed method proves effective, with the emotion recognition process achieving an accuracy of 0.96. Such robots are expected to make human-robot connections more authentic, profound, and harmonious. Future research will refine the robot's responses to communication scenarios, and experiments with larger participant samples will be needed to strengthen conclusions about the design and the robot's emotions.

Acknowledgement: We acknowledge the financial support from the University of Economics in Ho Chi Minh City, Vietnam.

Funding Statement: This research is funded by the University of Economics Ho Chi Minh City–UEH, Vietnam.

Author Contributions: The authors confirm contribution to the paper as follows: Conceptualization, methodology, Nguyen Truong Thinh and Nguyen Minh Trieu; software, resources, data curation, writing—original draft preparation, visualization, Nguyen Minh Trieu; validation, formal analysis, investigation, writing—review and editing, supervision, project administration, funding acquisition, Nguyen Truong Thinh. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: All data generated or analyzed during this study are included in this published article.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Minh Trieu N, Truong Thinh N. A comprehensive review: interaction of appearance and behavior, artificial skin, and humanoid robot. J Robot. 2023;2023(3–4):e5589845. doi:10.1155/2023/5589845.

2. Hanson D, Bar-Cohen Y. The coming robot revolution: expectations and fears about emerging intelligent, humanlike machines. New York: Springer; 2009. doi:10.1007/978-0-387-85349-9.

3. Marchesi S, Abubshait A, Kompatsiari K, Wu Y, Wykowska A. Cultural differences in joint attention and engagement in mutual gaze with a robot face. Sci Rep. 2023;13(1):11689. doi:10.1038/s41598-023-38704-7.

4. Kensinger EA. Remembering emotional experiences: the contribution of valence and arousal. Rev Neurosci. 2004;15(4):241–52. doi:10.1515/REVNEURO.2004.15.4.241.

5. Breazeal C, Scassellati B. A context-dependent attention system for a social robot. In: Proceedings of the Sixteenth International Joint Conference on Artificial Intelligence (IJCAI 1999); 1999; San Francisco, CA, USA: Morgan Kaufmann Publishers Inc. p. 1146–53.

6. Hackel M, Schwope S, Fritsch J, Wrede B, Sagerer G. Humanoid robot platform suitable for studying embodied interaction. In: Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems; 2005 Aug 2–6; Edmonton, AB, Canada.

7. Rajruangrabin J, Popa DO. Robot head motion control with an emphasis on realism of neck-eye coordination during object tracking. J Intell Robot Syst. 2011;63(2):163–90. doi:10.1007/s10846-010-9468-x.

8. Oh J-H, Hanson D, Kim W-S, Han Y, Kim J-Y, Park I-W. Design of android type humanoid robot Albert HUBO. In: Proceedings of the 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems; 2006 Oct 9–15; Beijing, China. p. 1428–33.

9. Cominelli L, Hoegen G, De Rossi D. Abel: integrating humanoid body, emotions, and time perception to investigate social interaction and human cognition. Appl Sci. 2021;11(3):1070. doi:10.3390/app11031070.

10. Berns K, Hirth J. Control of facial expressions of the humanoid robot head ROMAN. In: 2006 IEEE/RSJ International Conference on Intelligent Robots and Systems; 2006; Beijing, China: IEEE. p. 3119–24.

11. Nishio S, Ishiguro H, Hagita N. Geminoid: teleoperated android of an existing person. In: Humanoid robots: new developments; 2007. p. 343–52. doi:10.5772/39.

12. Sakamoto D, Ishiguro H. Geminoid: remote-controlled android system for studying human presence. Kansei Eng Int. 2009;8(1):3–9. doi:10.5057/ER081218-1.

13. Becker-Asano C, Ishiguro H. Evaluating facial displays of emotion for the android robot Geminoid F. In: Proceedings of the 2011 IEEE Workshop on Affective Computational Intelligence (WACI); 2011 Apr 11–15; Paris, France. p. 1–8.

14. Pluta I. Teatro e robótica: os androides de Hiroshi Ishiguro, em encenações de Oriza Hirata. ARJ-Art Res J: Revista De Pesquisa Em Artes. 2016;3(1):65–79. doi:10.36025/arj.v3i1.8405.

15. Spatola N, Chaminade T. Cognitive load increases anthropomorphism of humanoid robot. The automatic path of anthropomorphism. Int J Hum Comput Stud. 2022;167(2):102884. doi:10.1016/j.ijhcs.2022.102884.

16. Tamai T, Majima Y, Suga H, Inoue S, Murashima K. Design of health advice system for elderly people by communication robot. In: Proceedings of the 11th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2018): HEALTHINF; 2018. Vol. 5, p. 486–91. doi:10.5220/0006637904860491.

17. Fuoco E. Could a robot become a successful actor? The case of Geminoid F. Acta Universitatis Lodziensis. Folia Litteraria Polonica. 2022;65(2):203–19. doi:10.18778/1505-9057.65.11.

18. Nakata Y, Yagi S, Yu S, Wang Y, Ise N, Nakamura Y, et al. Development of ‘ibuki’ an electrically actuated childlike android with mobility and its potential in the future society. Robotica. 2022;40(4):933–50. doi:10.1017/S0263574721000898.

19. Darvish K, Penco L, Ramos J, Cisneros R, Pratt J, Yoshida E, et al. Teleoperation of humanoid robots: a survey. IEEE Trans Robot. 2023;39(3):1706–27. doi:10.1109/TRO.2023.3236952.

20. Tong Y, Liu H, Zhang Z. Advancements in humanoid robots: a comprehensive review and future prospects. IEEE/CAA J Automatic Sinica. 2024;11(2):301–28. doi:10.1109/JAS.2023.124140.

21. Liu X, Ni R, Yang B, Song S, Cangelosi A. Unlocking human-like facial expressions in humanoid robots: a novel approach for action unit driven facial expression disentangled synthesis. IEEE Trans Robot. 2024;40:3850–65. doi:10.1109/TRO.2024.3422051.

22. Liu X, Chen Y, Li J, Cangelosi A. Real-time robotic mirrored behavior of facial expressions and head motions based on lightweight networks. IEEE Internet Things J. 2022;10(2):1401–13. doi:10.1109/JIOT.2022.3205123.

23. Liu X, Lv Q, Li J, Song S, Cangelosi A. Multimodal emotion fusion mechanism and empathetic responses in companion robots. IEEE Trans Cogn Dev Syst. 2024:1–15. doi:10.1109/TCDS.2024.3442203.

24. Ishihara H, Iwanaga S, Asada M. Comparison between the facial flow lines of androids and humans. Front Rob AI. 2021;8:540193. doi:10.3389/frobt.2021.540193.

25. Qin B, Liang L, Wu J, Quan Q, Wang Z, Li D. Automatic identification of Down syndrome using facial images with deep convolutional neural network. Diagn. 2020;10(7):487. doi:10.3390/diagnostics10070487.

26. Armengou X, Frank K, Kaye K, Brébant V, Möllhoff N, Cotofana S, et al. Facial anthropometric measurements and principles-overview and implications for aesthetic treatments. Facial Plast Surg. 2023;40(3):348–62. doi:10.1055/s-0043-1770765.

27. Dhulqarnain AO, Mokhtari T, Rastegar T, Mohammed I, Ijaz S, Hassanzadeh G. Comparison of nasal index between Northwestern Nigeria and Northern Iranian populations: an anthropometric study. J Maxillofac Oral Surg. 2020;19(4):596–602. doi:10.1007/s12663-019-01314-w.

28. Farkas LG. Accuracy of anthropometric measurements: past, present, and future. Cleft Palate Craniofac J. 1996;33(1):10–22. doi:10.1597/1545-1569_1996_033_0010_aoampp_2.3.co_2.

29. Minh Trieu N, Truong Thinh N. The anthropometric measurement of nasal landmark locations by digital 2D photogrammetry using the convolutional neural network. Diagn. 2023;13(5):891. doi:10.3390/diagnostics13050891.

30. Ibáñez-Berganza M, Amico A, Loreto V. Subjectivity and complexity of facial attractiveness. Sci Rep. 2019;9(1):8364. doi:10.1038/s41598-019-44655-9.

31. Mori M, MacDorman KF, Kageki N. The uncanny valley [from the field]. IEEE Robot Autom Mag. 2012;19(2):98–100. doi:10.1109/MRA.2012.2192811.

32. Ferrey AE, Burleigh TJ, Fenske MJ. Stimulus-category competition, inhibition, and affective devaluation: a novel account of the uncanny valley. Front Psychol. 2015;6(1488):249. doi:10.3389/fpsyg.2015.00249.

33. Mathur MB, Reichling DB, Lunardini F, Geminiani A, Antonietti A, Ruijten PA, et al. Uncanny but not confusing: multisite study of perceptual category confusion in the Uncanny Valley. Comput Human Behav. 2020;103(4):21–30. doi:10.1016/j.chb.2019.08.029.

34. Kim B, de Visser E, Phillips E. Two uncanny valleys: re-evaluating the uncanny valley across the full spectrum of real-world human-like robots. Comput Human Behav. 2022;135(6):107340. doi:10.1016/j.chb.2022.107340.

35. Cheetham M, Suter P, Jäncke L. The human likeness dimension of the uncanny valley hypothesis: behavioral and functional MRI findings. Front Hum Neurosci. 2011;5:126. doi:10.3389/fnhum.2011.00126.

36. Ali MF, Khatun M, Turzo NA. Facial emotion detection using neural network. Int J Sci Eng Res. 2020;11:1318–25.

37. Lindsley DB. Emotion. In: Stevens SS, editor. Handbook of experimental psychology. Oxford, UK: Wiley; 2018. p. 473–516.

38. Tracy JL, Randles D. Four models of basic emotions: a review of Ekman and Cordaro, Izard, Levenson, and Panksepp and Watt. Emot Rev. 2011;3(4):397–405. doi:10.1177/1754073911410747.

39. Ekman P. An argument for basic emotions. Cogn Emot. 1992;6(3–4):169–200. doi:10.1080/02699939208411068.

40. Plutchik R. A general psychoevolutionary theory of emotion. In: Theories of Emotion. Amsterdam, The Netherlands: Elsevier; 1980. p. 3–33.

41. Feidakis M, Daradoumis T, Caballé S. Endowing e-learning systems with emotion awareness. In: Proceedings of the 2011 Third International Conference on Intelligent Networking and Collaborative Systems; 2011 Nov 30–Dec 2; Fukuoka, Japan. p. 68–75.

42. Russell JA. A circumplex model of affect. J Pers Soc Psychol. 1980;39(6):1161. doi:10.1037/h0077714.

43. Panksepp J. Affective neuroscience: the foundations of human and animal emotions. Oxford University Press; 2004 [cited 2024 Dec 30].

44. Damasio A. Descartes’ error: emotion, reason and the human brain. New York: G. P. Putnam’s Sons; 1994.

45. Miah ASM, Shin J, Islam MM, Molla MKI. Natural human emotion recognition based on various mixed reality (MR) games and electroencephalography (EEG) signals. In: 2022 IEEE 5th Eurasian Conference on Educational Innovation (ECEI); 2022 Feb 10–12; Taipei, Taiwan. p. 408–11.

46. Wu Y, Gu R, Yang Q, Luo YJ. How do amusement, anger and fear influence heart rate and heart rate variability? Front Neurosci. 2019;13:1131. doi:10.3389/fnins.2019.01131.

47. Ekman P. An argument for basic emotions. Cogn Emot. 1992;6(3–4):169–200. doi:10.1080/02699939208411068.

48. Toan NK, Thuan LD, Long LB, Thinh NT. Development of humanoid robot head based on FACS. Int J Mech Eng Robot Res. 2022;11(5):365–72. doi:10.18178/ijmerr.11.5.365-372.

49. Talpur N, Abdulkadir SJ, Alhussian H, Hasan MH, Aziz N, Bamhdi A. Deep neuro-fuzzy system application trends, challenges, and future perspectives: a systematic survey. Artif Intell Rev. 2023;56(2):865–913. doi:10.1007/s10462-022-10188-3.

50. Auflem M, Kohtala S, Jung M, Steinert M. Facing the FACS—using AI to evaluate and control facial action units in humanoid robot face development. Front Rob AI. 2022;9:887645. doi:10.3389/frobt.2022.887645.

51. Ekman P, Friesen WV. Facial action coding system: a technique for the measurement of facial movement. Palo Alto: Consulting Psychologists Press; 1978.

52. Lucey P, Cohn JF, Kanade T, Saragih J, Ambadar Z, Matthews I. The extended Cohn-Kanade dataset (CK+): a complete dataset for action unit and emotion-specified expression. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops; 2010; IEEE. p. 94–101.

53. Bezdek JC. Pattern recognition with fuzzy objective function algorithms. In: Advanced applications in pattern recognition. Vol. 25. New York, NY, USA: Springer; 1981. p. 442.

54. Lalitharatne TD, Tan Y, Leong F, He L, Van Zalk N, De Lusignan S, et al. Facial expression rendering in medical training simulators: current status and future directions. IEEE Access. 2020;8:215874–91. doi:10.1109/ACCESS.2020.3041173.

55. Abdelmaksoud SI, Al-Mola MH, Abro GEM, Asirvadam VS. In-depth review of advanced control strategies and cutting-edge trends in robot manipulators: analyzing the latest developments and techniques. IEEE Access. 2024;12(1):47672–701. doi:10.1109/ACCESS.2024.3383782.

Copyright © 2025 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.