Open Access

ARTICLE

ANNDRA-IoT: A Deep Learning Approach for Optimal Resource Allocation in Internet of Things Environments

1 Department of Electrical and Electronic Engineering, College of Engineering and Computer Science, Jazan University, Jazan, 45142, Saudi Arabia

2 Department of Information Technology, The University of Haripur, Haripur, 22620, Pakistan

3 College of Computer Science, King Khalid University, Abha, 62529, Saudi Arabia

4 Department of Computing, College of Engineering and Computing, Umm Al-Qura University, Makkah, 21955, Saudi Arabia

* Corresponding Author: Abdullah M. Alqahtani. Email:

(This article belongs to the Special Issue: Leveraging AI and ML for QoS Improvement in Intelligent Programmable Networks)

Computer Modeling in Engineering & Sciences 2025, 142(3), 3155-3179. https://doi.org/10.32604/cmes.2025.061472

Received 25 November 2024; Accepted 30 January 2025; Issue published 03 March 2025

Abstract

Efficient resource management within Internet of Things (IoT) environments remains a pressing challenge due to the increasing number of devices and their diverse functionalities. This study introduces a neural network-based model that uses Long Short-Term Memory (LSTM) to optimize resource allocation under dynamically changing conditions. Designed to monitor the workload on individual IoT nodes, the model incorporates long-term data dependencies, enabling adaptive resource distribution in real time. The training process uses Min-Max normalization and grid search for hyperparameter tuning to achieve high resource utilization and consistent performance. The simulation results demonstrate the effectiveness of the proposed method, which outperforms state-of-the-art approaches including Dynamic and Efficient Enhanced Load-Balancing (DEELB), Optimized Scheduling and Collaborative Active Resource-management (OSCAR), Convolutional Neural Network with Monarch Butterfly Optimization (CNN-MBO), and Autonomic Workload Prediction and Resource Allocation for Fog (AWPR-FOG). For example, in scenarios with low system utilization, the model achieved a resource utilization efficiency of 95% while maintaining a latency of just 15 ms, significantly exceeding the performance of the comparative methods.

1 Introduction

The Internet of Things (IoT) has become a significant technological paradigm, redefining the interaction and integration of devices within the digital ecosystem [1–4]. IoT systems aim to achieve seamless integration across several domains through an elaborate network of connected sensors [5], actuators, and intelligent devices [6]. This connected architecture allows a wide range of applications [7] to be automated and enables data-driven decision making, including decisions that address critical quality attributes. However, the increasing scale and complexity of IoT ecosystems call for robust mechanisms that ensure optimal performance under computational resource constraints [8–10]. Resolving these challenges is essential for the widespread adoption of IoT in real-world applications [11–14]. Resource allocation is one of the major challenges in implementing IoT systems [15]: resources must be distributed efficiently across a growing device network. Conventional static allocation models do not adapt to dynamic IoT environments, which leads to poor resource utilization [16], increased latency, and high energy consumption [17,18].

Resource allocation remains one of the critical challenges in the deployment of IoT systems [19]. A central issue is how to distribute limited resources effectively among the increasing number of interconnected devices [20]. Traditional static allocation models, though foundational, have inherent limitations. They lack the flexibility needed to adapt to the ever-changing resource demands of IoT ecosystems [21,22]. These models rely on a predefined resource distribution that assumes fixed requirements. This rigidity significantly reduces the efficiency of IoT systems, whose resource requirements may change over time [23]. Static allocation methods do not fill resource gaps dynamically as they appear, which causes unnecessary delays, increased energy consumption, and inefficiency that undermines the sustainability of IoT environments [24–26]. Overcoming these limitations is necessary for IoT systems to perform well under dynamic real-world conditions.

Conventional static allocation models, which are designed around fixed resource requirement assumptions, cannot cope with the dynamically changing requirements typical of the IoT ecosystem. These models assume rigid distributions of resources and therefore fit poorly in situations where resource needs vary over time. This limited adaptability introduces inefficiencies such as increased latency and unnecessary energy consumption, which reduce the operational efficiency and sustainability of IoT infrastructures [27–29]. These inefficiencies motivate the proposed Adaptive Neural Network-based Dynamic Resource Allocation (ANNDRA-IoT) approach, which uses long short-term memory (LSTM) networks that retain both long- and short-term dependencies. It optimizes performance through real-time analysis of resource usage, dynamically adjusting resources to the ever-changing conditions of IoT systems. This adaptability carries over to diverse IoT environments, which earlier approaches could not handle effectively. The main contributions of the proposed ANNDRA-IoT are as follows:

• LSTM-based real-time dynamic resource allocation in the IoT. The model improves on traditional static allocation methods by learning to adapt to the patterns in IoT data flows.

• Optimized distribution of resources by predicting and adapting to changes in IoT networks, which reduces latency and energy consumption.

• Scalability and adaptability in managing resources across various IoT scenarios. This flexibility broadens the applicability of ANNDRA-IoT.

The remainder of this article is organized as follows. Section 2 provides a comprehensive review of the literature. Section 3 presents the proposed ANNDRA-IoT approach. Section 4 describes the experimental simulation and results. Section 5 presents a detailed discussion of the results. Finally, Section 6 concludes the article.

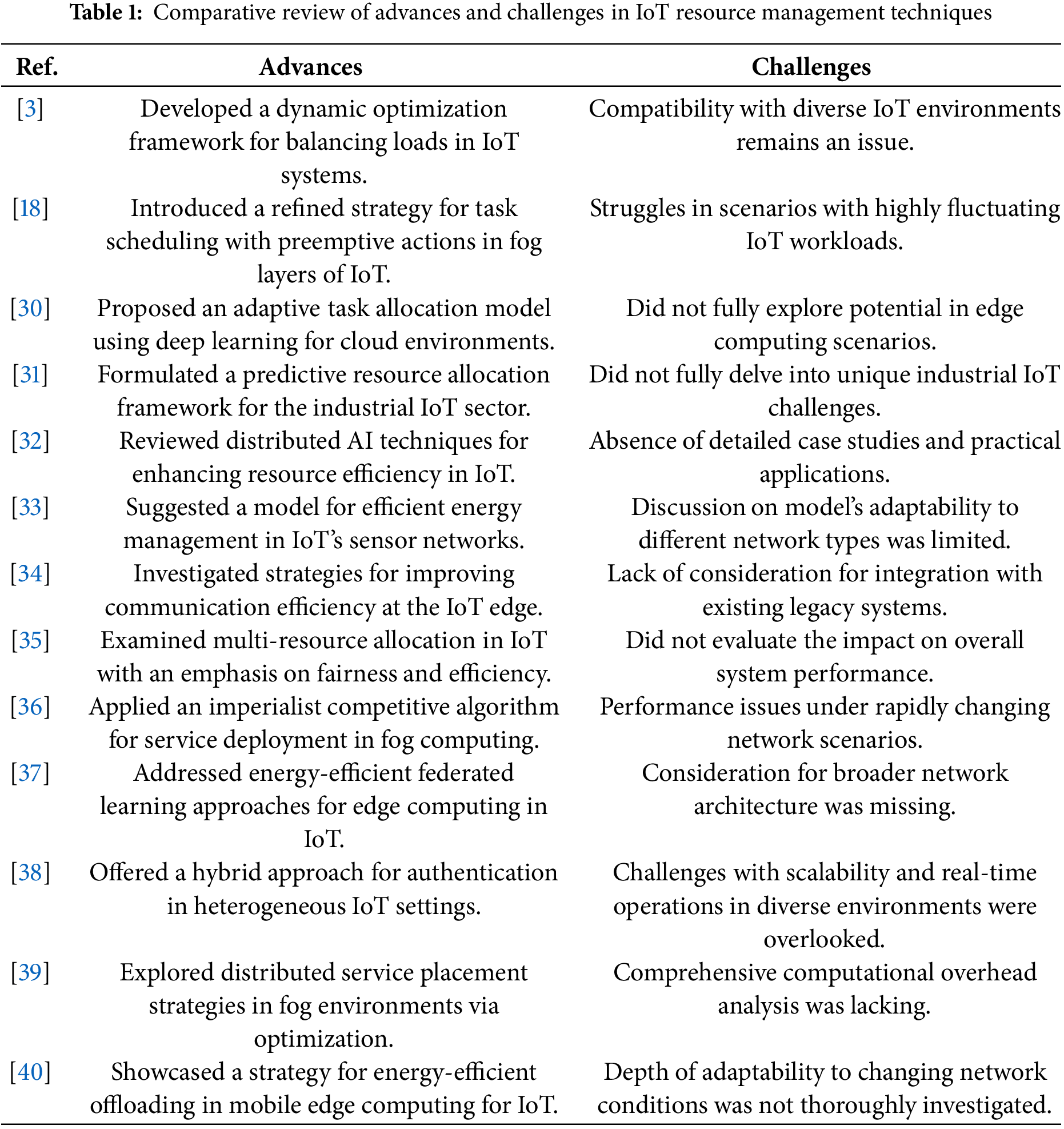

The IoT has become a transformative technology, significantly altering the way computing and communication systems function. It has introduced new paradigms of connectivity and interaction across a wide range of devices and systems. However, efficiently managing resources in IoT environments remains a significant challenge. This section analyzes recent advances and methodologies in the management of IoT resources. Table 1 summarizes these approaches, highlights their strengths, and identifies the gaps that the proposed ANNDRA-IoT model aims to address.

2.1 Existing State-of-the-Art Approaches

In [3], the authors proposed a dynamic load-balancing mechanism to improve the efficiency and adaptability of the IoT environment. Their work showed how dynamic resource management can handle fluctuating workloads in IoT environments. Similarly, study [18] proposed an optimized task-scheduling mechanism for fog-assisted IoT environments that introduces preemptive features into the scheduling architecture, a concept closely aligned with our effort to improve responsiveness in IoT systems. The study in [30] presented an overview of secure and adaptive deep learning-based cloud task scheduling. By combining security with efficiency, it focuses on managing computational tasks efficiently and lays down principles that echo the objectives of the ANNDRA-IoT framework. Meanwhile, the authors of [31] proposed an autonomic framework for workload prediction and resource allocation in industrial IoT systems. Its predictive analysis and autonomic allocation strategies share common goals with ANNDRA-IoT and underline the need for intelligent resource management.

The authors in [32] conducted a comprehensive review of resource-efficient distributed AI methods for IoT applications. Their review highlighted the increasing demand for AI-driven solutions, laying the foundations for integrating AI to optimize IoT resource allocation, a central theme in our research. Study [33] developed an energy management model for wireless sensor networks in IoT systems, emphasizing resource optimization. Reference [34] studied AI-driven, communication-efficient methods at the IoT edge; its contributions complement our work on minimizing communication overhead in IoT settings. Furthermore, study [35] proposed a multiresource allocation approach with fairness constraints, and the ANNDRA-IoT model likewise takes fairness into account in resource allocation.

In [36], an imperialist competitive algorithm was used to implement IoT services in fog computing, focusing on resource utilization. Similarly, study [37] proposed a federated learning technique to achieve energy efficiency and resource optimization within environmentally sustainable IoT edge intelligence systems. These studies align with the goals of ANNDRA-IoT to maintain energy-efficient operations. Lastly, study [38] presented a hybrid authentication architecture for heterogeneous IoT systems, blending centralized and blockchain-based methods. This approach addresses security and efficiency, critical to the secure functioning of IoT environments. Works such as [39] and [40] further contribute to this discourse by exploring distributed IoT service placement and energy-efficient task offloading strategies, respectively.

2.2 Comparison of LSTM in IoT Resource Management

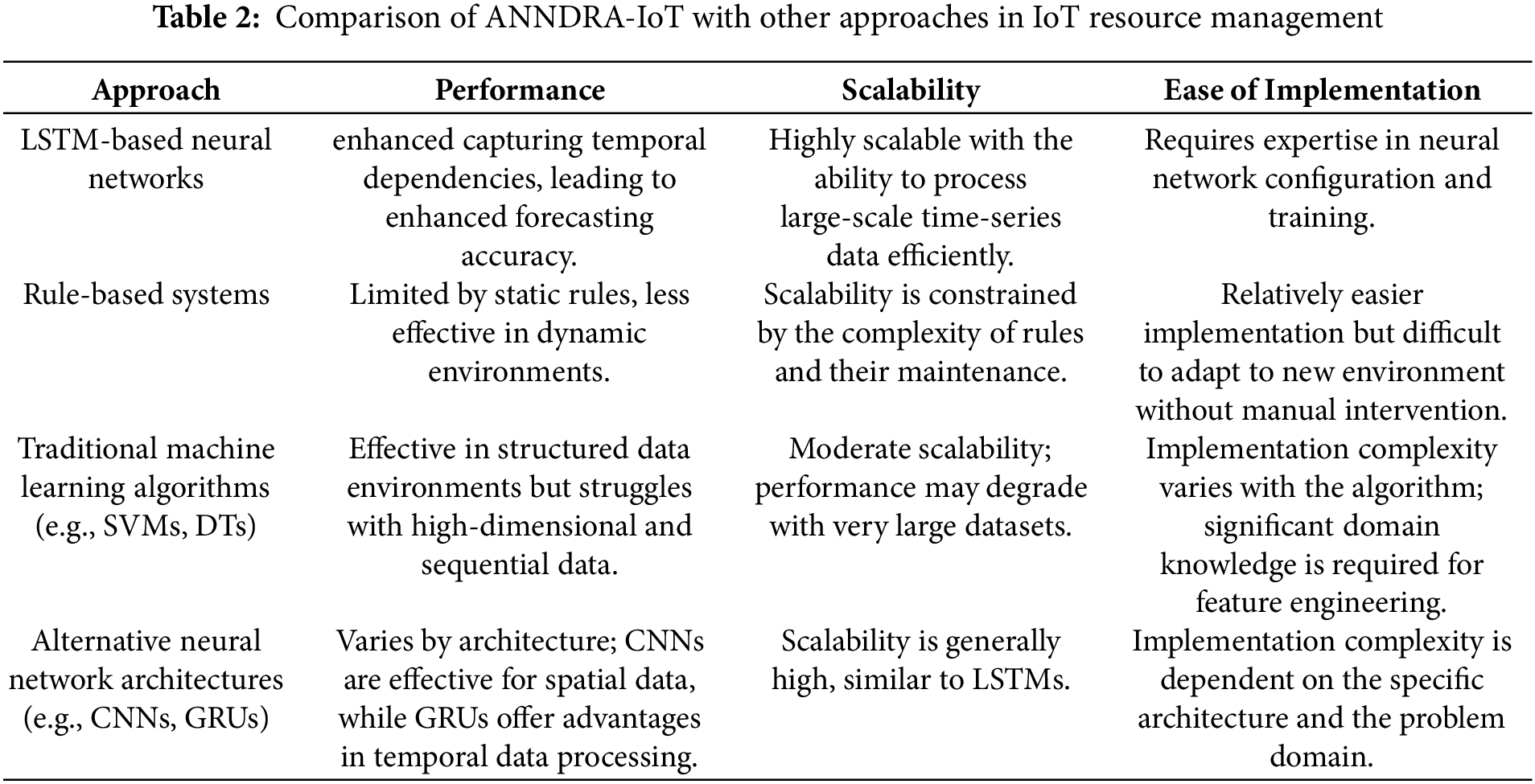

The management of resources within the IoT has seen significant advancements through the adoption of LSTM neural networks. Table 2 provides a comparison including rule-based systems, classic machine learning techniques, and different neural network designs.

LSTM, a type of recurrent neural network, has the distinct ability to identify and retain temporal patterns, making it essential for managing resources in IoT scenarios characterized by time-series data. In [41], experimental evidence demonstrated that LSTMs exhibited superior predictive performance by effectively capturing temporal dynamics. This capability improves resource allocation strategies, thus improving the responsiveness of IoT systems [13,41,42]. In contrast, rule-based systems perform inadequately in dynamic IoT environments due to their static nature. Their inability to adapt to evolving data patterns renders them ineffective compared to LSTM models, which can seamlessly integrate new information and maintain consistent performance as system configurations evolve.
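To make the idea of retaining temporal patterns concrete, the following is a minimal sketch of one step of a standard LSTM cell, reduced to a scalar input and a scalar state so that the gating logic is easy to follow. It is not the ANNDRA-IoT network: the weights in `PARAMS`, the `workload` values, and the function names are illustrative assumptions.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a standard LSTM cell with scalar input and scalar state.

    params maps a gate name to (w_x, w_h, b); all values are illustrative.
    """
    def pre_activation(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))       # how much old memory to keep
    i = sigmoid(pre_activation("input"))        # how much new content to write
    o = sigmoid(pre_activation("output"))       # how much memory to expose
    g = math.tanh(pre_activation("candidate"))  # candidate memory content
    c = f * c_prev + i * g                      # updated cell state
    h = o * math.tanh(c)                        # updated hidden state
    return h, c


def run_sequence(workload, params):
    """Feed a workload series through the cell, starting from a zero state."""
    h, c = 0.0, 0.0
    states = []
    for x in workload:
        h, c = lstm_step(x, h, c, params)
        states.append(h)
    return states


# Hypothetical weights and a hypothetical normalized workload series.
PARAMS = {
    "forget": (0.5, 0.5, 1.0),
    "input": (0.8, 0.2, 0.0),
    "output": (0.6, 0.4, 0.0),
    "candidate": (1.0, 0.5, 0.0),
}
workload = [0.1, 0.4, 0.9, 0.3]
hidden = run_sequence(workload, PARAMS)
print(hidden)
```

The last input of the series is 0.3, yet the final hidden state differs from what the cell produces when it sees 0.3 alone, because the cell state carries information from the earlier readings. That carried state is what a static rule cannot represent.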

Conventional machine learning techniques, including support vector machines and decision trees, played a fundamental role in the early stages of IoT resource management. However, their reliance on manual feature selection and significant domain expertise limits their applicability in complex, high-dimensional sequential data scenarios [43,44]. LSTMs address these limitations by processing intricate sequential information efficiently, which makes them particularly suited to the sophisticated demands of IoT frameworks. Other neural network architectures, such as convolutional neural networks (CNNs) and gated recurrent units (GRUs), have their own strengths in the management of spatial and temporal data [45]. Hybrid models that integrate CNNs with LSTM have proven especially effective in tasks such as the prediction of solar irradiance and photovoltaic power [46]. These combinations balance computational efficiency with predictive accuracy, overcoming the constraints of single-architecture models in IoT applications [47].

3 Proposed ANNDRA-IoT Approach

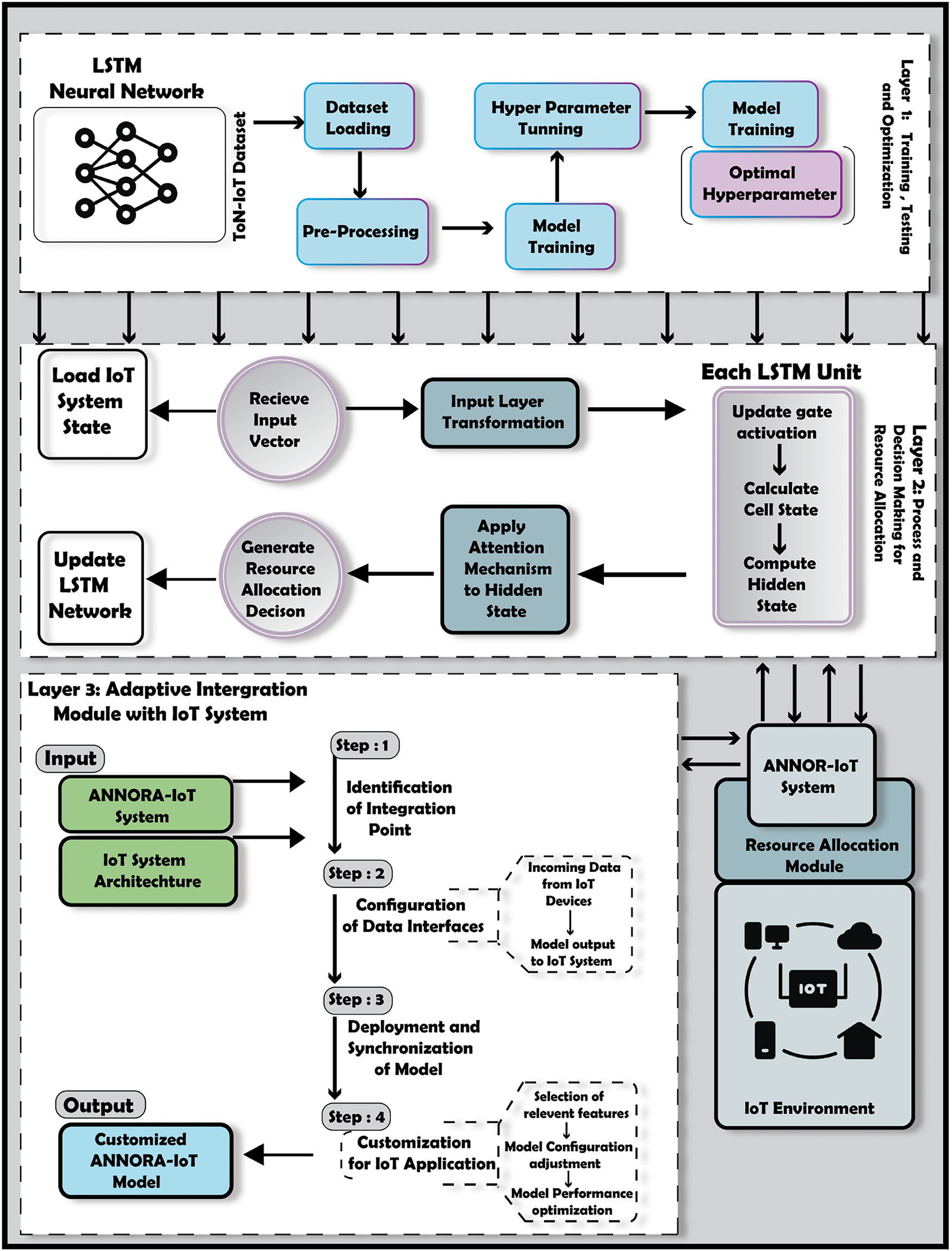

The ANNDRA-IoT framework uses long short-term memory (LSTM) neural networks to address inefficiencies in resource allocation in heterogeneous IoT environments. The framework accounts for the heterogeneity of IoT devices by adapting at run time to different device capabilities and demands. This section elaborates the implementation details of ANNDRA-IoT and its key contributions to increasing system performance by optimizing resource utilization.

3.1 Overview of Proposed Approach (ANNDRA-IoT)

ANNDRA-IoT is proposed for resource management in highly heterogeneous IoT environments. It introduces reconfigurability so that it can adapt to the versatile and complex scenarios found in IoT ecosystems. By incorporating LSTM neural networks with Artificial Neural Networks (ANNs), ANNDRA-IoT enables dynamic allocation of resources and self-adaptation even under complex operational conditions. The system uses its prediction capabilities to manage network and computational resources in real time based on device utilization patterns.

The architecture of ANNDRA-IoT includes LSTM units responsible for processing input data streams from a variety of IoT devices. These units analyze resource usage patterns and generate resource allocation decisions. Fig. 1 illustrates the architecture, showcasing the interaction among the system’s components and the data flow within ANNDRA-IoT.

Figure 1: The architecture of the proposed ANNDRA-IoT approach

3.2 LSTM-Based Real-Time IoT Resource Allocation

This section introduces a new LSTM neural network that is suitable for real-time IoT resource allocation. The framework is based on stochastic calculus, tensor analysis, variational optimization, differential geometry, and information theory; thus, it can learn sophisticated temporal and multi-dimensional dynamics of an IoT system.

Consider an IoT environment comprising a set of devices


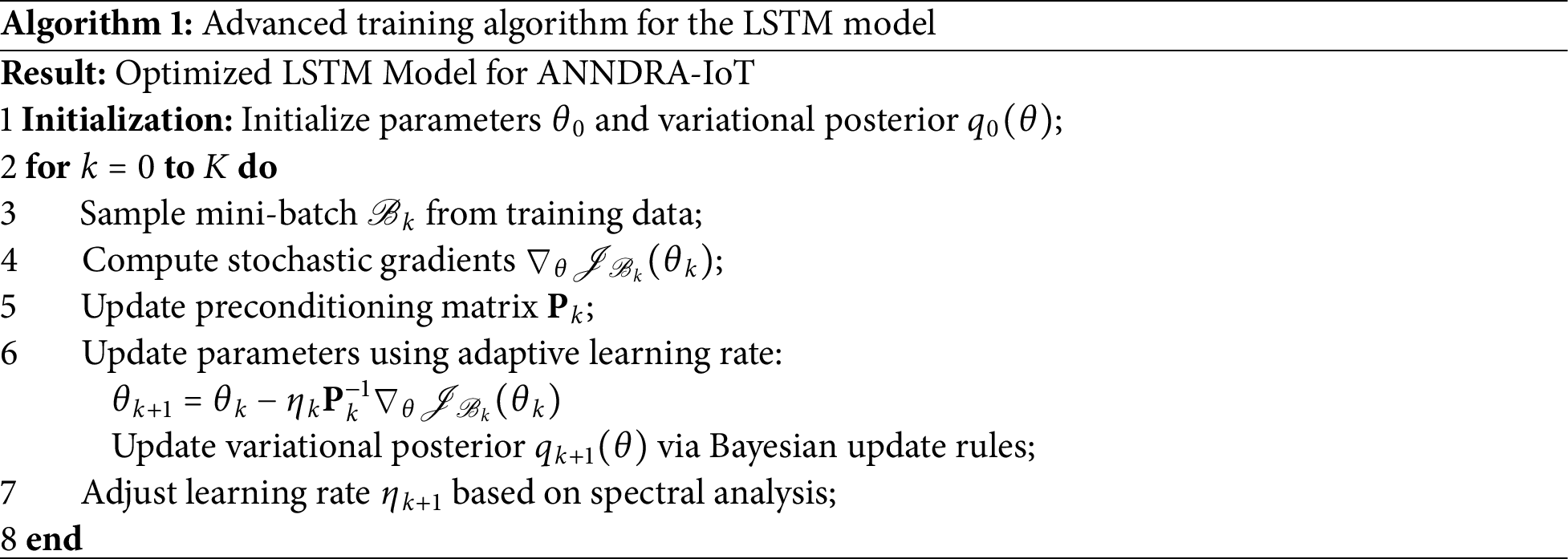

3.3 Training and Optimization Framework for the LSTM Model

This section presents a novel mathematical framework for training and optimizing the LSTM model within the ANNDRA-IoT architecture.


We incorporate constraints into the optimization problem to ensure feasibility with respect to resource limitations:

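One simple way to keep an allocation feasible with respect to resource limits can be sketched as follows. This is an illustration only, assuming the constraints take the common form of non-negative per-device allocations whose total may not exceed a single shared capacity; the function name `make_feasible` and the sample numbers are hypothetical and are not taken from the ANNDRA-IoT formulation.

```python
def make_feasible(requested, capacity):
    """Return an allocation that is non-negative and sums to at most capacity.

    Assumed constraint form (hypothetical): a_i >= 0 and sum(a_i) <= capacity.
    Negative requests are clipped to zero, then everything is scaled down
    proportionally if the total still exceeds the capacity.
    """
    clipped = [max(0.0, r) for r in requested]
    total = sum(clipped)
    if total <= capacity or total == 0:
        return clipped
    scale = capacity / total
    return [r * scale for r in clipped]


# Hypothetical model outputs for four devices sharing 100 resource units.
requested = [40.0, 70.0, -5.0, 30.0]
allocation = make_feasible(requested, capacity=100.0)
print(allocation)
```

Proportional scaling preserves the relative priorities produced by the model while guaranteeing the capacity bound. A penalty term in the loss or a Lagrangian formulation are alternative ways to enforce the same kind of constraint during training.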

3.4 Advanced Framework for Adaptation and Scalability of the LSTM Model in IoT Systems

This framework incorporates decentralized optimization, variational inference, and nonlinear dynamical systems to address the challenges posed by the heterogeneous and dynamic nature of IoT environments.

3.4.1 Manifold Embedding for Adaptive Representations


By operating on manifold embeddings, the model adapts to the underlying data structure, improving generalization. To ensure scalability, we model the LSTM parameters as elements of a Hilbert space


We use a consensus-based algorithm where each device updates its local parameters

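The consensus idea can be illustrated with a textbook averaging scheme in which every device nudges its local value toward the values held by its neighbors. This is a minimal sketch under stated assumptions, not the update rule of the paper: the rule `x_i <- x_i + step_size * sum_j (x_j - x_i)`, the ring topology, the step size, and the scalar parameters are all assumptions chosen for illustration.

```python
def consensus_step(values, neighbors, step_size):
    """Apply x_i <- x_i + step_size * sum_j (x_j - x_i) for every device i."""
    return [
        x_i + step_size * sum(values[j] - x_i for j in neighbors[i])
        for i, x_i in enumerate(values)
    ]


def run_consensus(values, neighbors, step_size=0.3, rounds=50):
    """Repeat the synchronous consensus update for a fixed number of rounds."""
    for _ in range(rounds):
        values = consensus_step(values, neighbors, step_size)
    return values


# Hypothetical ring of four devices, each holding one scalar parameter.
neighbors = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
local_params = [1.0, 3.0, 5.0, 7.0]
agreed = run_consensus(local_params, neighbors)
print(agreed)
```

On a connected, undirected graph this update preserves the network average, and it converges when the step size is smaller than the reciprocal of the largest node degree (here 1/2). The four devices therefore settle on the mean of their initial values without any central coordinator.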

Using Lyapunov’s direct method, we establish conditions for the asymptotic stability of the system by finding a Lyapunov function


3.5 Integration with IoT Systems

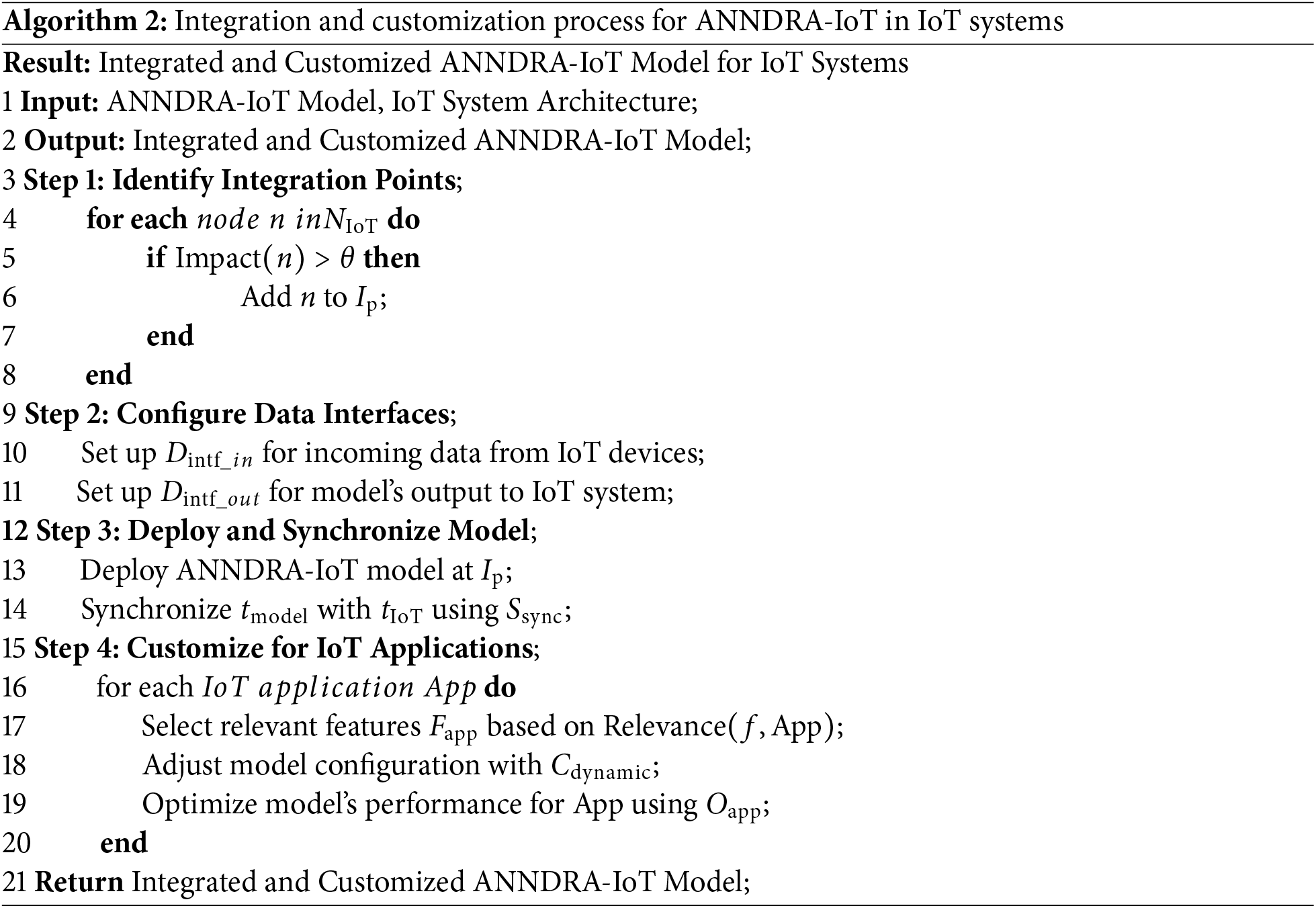

Integrating the ANNDRA-IoT model into existing and future IoT systems is the last significant step toward a fully capable deployed model, as shown in Algorithm 2.

3.5.1 Process of Integrating ANNDRA-IoT in IoT Environments

The integration of ANNDRA-IoT within diverse IoT environments is a multifaceted process involving several key steps to ensure seamless functionality and compatibility. It is not merely a deployment of the model but a careful fit with the existing IoT ecosystem. The first step is to identify the points within the IoT infrastructure where the ANNDRA-IoT model can be most effective. This involves analyzing the architecture of the IoT network and pinpointing the nodes where resource allocation decisions have the most significant impact.


3.5.2 Customization for Various IoT Applications

Customization is one of the building blocks for integrating ANNDRA-IoT into different IoT applications. Every IoT application has unique features and requirements and therefore needs a tailored approach to integration. The customization process starts with selecting the relevant features for each IoT application.


4 Experimental Simulation and Results

This section describes the evaluation of the ANNDRA-IoT model through experimental simulations. The simulations were conducted using edge computing scenarios within the IoTSim-Edge simulator. To assess the robustness of the ANNDRA-IoT system, both objective and subjective performance evaluations were performed. Objective evaluations cover quantitative metrics such as resource utilization efficiency, response time, system performance, load balancing effectiveness, and latency. Subjective evaluations emphasize practical implications, demonstrating the system’s effectiveness in addressing real-world challenges like dynamic resource allocation and scalability in IoT environments. Extensive simulations were conducted against several state-of-the-art approaches to compare the resource allocation performance of ANNDRA-IoT. Specifically, we compared ANNDRA-IoT with the following models: DEELB [3], OSCAR [18], CNN-MBO [30], and AWPR-FOG [31].

The IoTSim-Edge simulator emulates the complex IoT ecosystem within the simulation environment. Training and testing used data from the TON_IoT Dataset, obtained from UNSW Research. The implementation also uses the IoTSim-Edge API to integrate the model into the simulated IoT environment.
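The abstract states that training uses Min-Max normalization and grid search for hyperparameter tuning. The following minimal sketch shows both steps in plain Python. The feature values, the hyperparameter names (`hidden_units`, `learning_rate`), the grid, and the `toy_validation_loss` function are hypothetical stand-ins; in practice the score would come from training and validating the LSTM for each combination.

```python
from itertools import product


def min_max_normalize(values):
    """Scale values linearly to [0, 1] using the observed minimum and maximum."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant feature: map everything to 0
    return [(v - lo) / (hi - lo) for v in values]


def grid_search(grid, score_fn):
    """Evaluate every combination in the grid; lower scores are better."""
    names = list(grid)
    best_params, best_score = None, float("inf")
    for combo in product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_loss(params):
    """Hypothetical stand-in for the validation loss of a trained model."""
    return (params["hidden_units"] - 64) ** 2 / 1e4 + abs(params["learning_rate"] - 0.01)


# Hypothetical CPU load readings (percent) from one IoT node.
cpu_load = [12.0, 48.0, 30.0, 84.0]
normalized = min_max_normalize(cpu_load)

grid = {"hidden_units": [32, 64, 128], "learning_rate": [0.1, 0.01, 0.001]}
best_params, best_score = grid_search(grid, toy_validation_loss)
print(normalized, best_params, best_score)
```

Grid search is exhaustive, so its cost grows with the product of the grid sizes (nine evaluations here). To avoid leaking test information, the minimum and maximum used for normalization should be computed on the training split only and then reused for validation and test data.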

4.2 Performance Evaluation Metrics

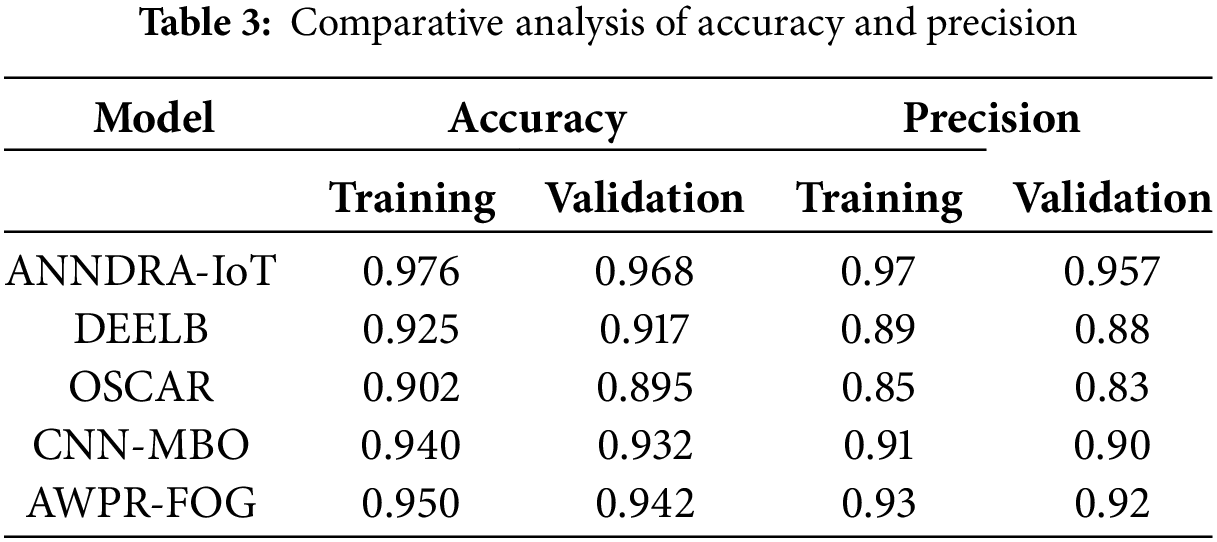

This section discusses the key performance metrics used to assess the efficacy of the ANNDRA-IoT model in optimal resource allocation within IoT environments. The accuracy of the ANNDRA-IoT model was tracked over 100 training epochs and benchmarked against four models in this field, namely DEELB, OSCAR, CNN-MBO, and AWPR-FOG. At epoch 100, the ANNDRA-IoT model outperformed the competing models with a training accuracy of 0.976 and a validation accuracy of 0.968, as shown in Table 3. In contrast, the DEELB model reported a training accuracy of 0.925 and a validation accuracy of 0.917, while the OSCAR model achieved a training accuracy of 0.902 and a validation accuracy of 0.895. The CNN-MBO model presented a training accuracy of 0.940 and a validation accuracy of 0.932, and the AWPR-FOG model showed a training accuracy of 0.950 and a validation accuracy of 0.942.

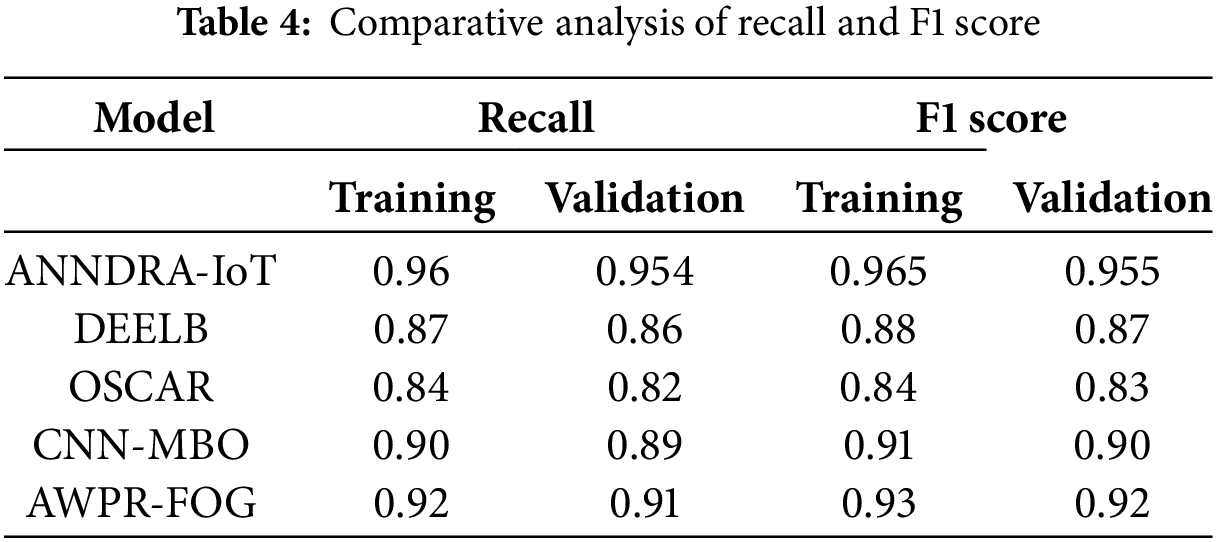

The precision and recall of the ANNDRA-IoT model were recorded over the 100 training epochs to assess its classification efficacy and were compared with those of the other algorithms. As shown in Table 4, at the end of training the ANNDRA-IoT model achieved a training and validation precision of 0.97 and 0.96 and a recall of 0.96 and 0.96, surpassing the corresponding metrics of the compared algorithms. The DEELB model achieved a precision of 0.89 and 0.88 and a recall of 0.87 and 0.86 for training and validation, respectively. OSCAR reached a precision of 0.85 and 0.84 and a recall of 0.83 and 0.82, respectively. The CNN-MBO approach achieved a training precision of 0.91 and a validation precision of 0.90, with a training recall of 0.90 and a validation recall of 0.89. Finally, the AWPR-FOG model achieved a training precision of 0.93 and recall of 0.92, with corresponding validation values of 0.92 and 0.91.

To benchmark the ANNDRA-IoT model, its F1 score was compared with that of DEELB, OSCAR, CNN-MBO, and AWPR-FOG at the final epoch (epoch 100). The ANNDRA-IoT model achieved the highest performance, with a training F1 score of 0.965 and a validation F1 score of 0.955.

In this comparison, the DEELB model [3] reached a training F1 score of 0.88 and a validation F1 score of 0.87. OSCAR [18] reported 0.84 and 0.83, the CNN-MBO approach [30] 0.91 and 0.90, and AWPR-FOG [31] 0.93 and 0.92 for training and validation, respectively.
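The F1 score is the harmonic mean of precision and recall. As a quick check of how the three metrics relate, the sketch below recomputes the training F1 score from the training precision and recall reported above for ANNDRA-IoT and DEELB. The function name `f1_score` and the dictionary layout are illustrative choices; the input values are the ones reported in this section.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Training precision and recall as reported in this section.
reported_training = {
    "ANNDRA-IoT": (0.97, 0.96),
    "DEELB": (0.89, 0.87),
}

training_f1 = {
    model: f1_score(precision, recall)
    for model, (precision, recall) in reported_training.items()
}
for model, value in training_f1.items():
    print(f"{model}: {value:.3f}")
```

For ANNDRA-IoT this gives approximately 0.965 and for DEELB approximately 0.880, in line with the reported training F1 scores of 0.965 and 0.88.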

4.3 Resource Allocation Optimization Metrics

To assess comprehensively how well ANNDRA-IoT optimizes resource allocation in IoT environments, a set of custom metrics is used, including efficiency, response time, and overall system performance.

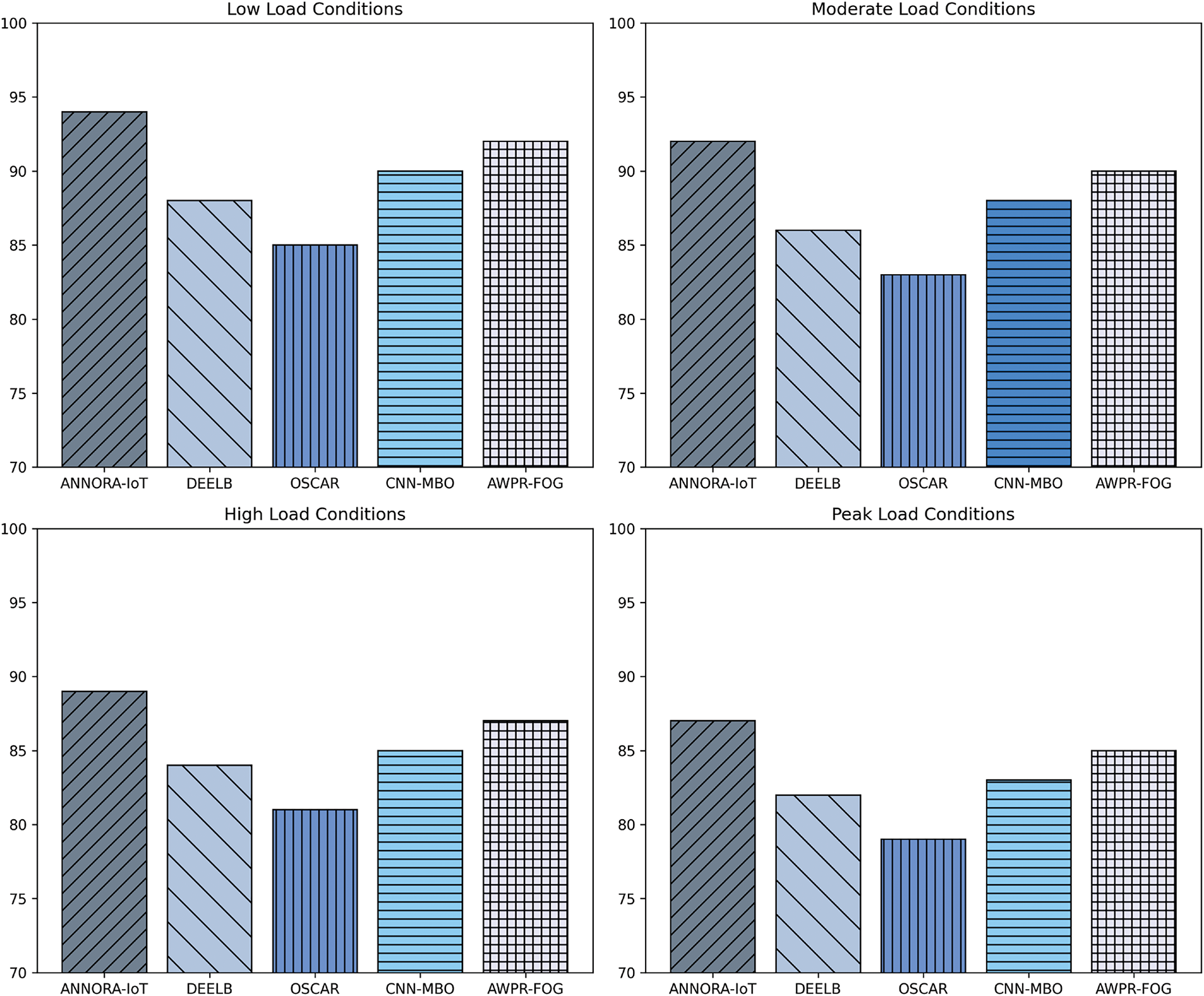

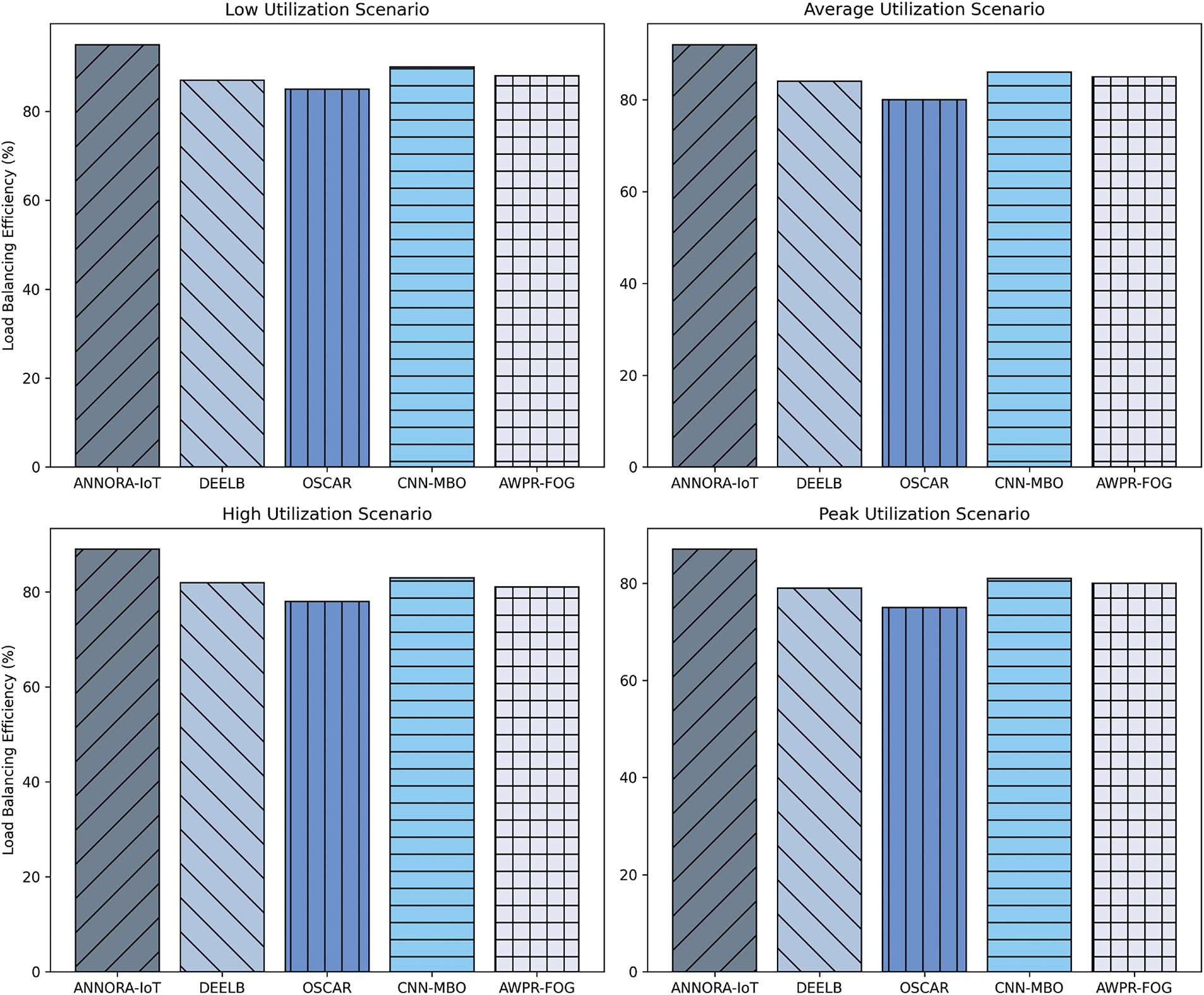

4.3.1 Resource Utilization Efficiency

Resource utilization efficiency is an important metric for IoT systems and must be analyzed under variable load conditions. Under low load, the ANNDRA-IoT model reached an efficiency of 94%, outperforming the compared models: DEELB (88%), OSCAR (85%), CNN-MBO (90%), and AWPR-FOG (92%). These results show that the model optimizes resources well under underutilized conditions. Fig. 2 shows the results for the different load scenarios.

Figure 2: Load-varying efficiency comparison of IoT resource management models

Under moderate load, ANNDRA-IoT maintained an efficiency of 92%, while DEELB achieved 86%, OSCAR 83%, CNN-MBO 88%, and AWPR-FOG 90%. Under increased load, the efficiency of the ANNDRA-IoT model was 89%, above that of DEELB (84%), OSCAR (81%), CNN-MBO (85%), and AWPR-FOG (87%), reflecting the model’s ability to manage resources efficiently in times of high load. At peak load, the ANNDRA-IoT model achieved an efficiency of 87%, exceeding DEELB (82%), OSCAR (79%), CNN-MBO (83%), and AWPR-FOG (85%) under maximum demand.
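The efficiency figures reported in this subsection can be tabulated to see how large the margin over the strongest baseline is at each load level. The sketch below does this arithmetic; the dictionary layout and the helper name `margin_over_best_baseline` are illustrative, while the percentages are the values reported above.

```python
# Resource utilization efficiency (%) per load level, as reported in this subsection.
EFFICIENCY = {
    "low": {"ANNDRA-IoT": 94, "DEELB": 88, "OSCAR": 85, "CNN-MBO": 90, "AWPR-FOG": 92},
    "moderate": {"ANNDRA-IoT": 92, "DEELB": 86, "OSCAR": 83, "CNN-MBO": 88, "AWPR-FOG": 90},
    "high": {"ANNDRA-IoT": 89, "DEELB": 84, "OSCAR": 81, "CNN-MBO": 85, "AWPR-FOG": 87},
    "peak": {"ANNDRA-IoT": 87, "DEELB": 82, "OSCAR": 79, "CNN-MBO": 83, "AWPR-FOG": 85},
}


def margin_over_best_baseline(scores, proposed="ANNDRA-IoT"):
    """Return the strongest baseline and the proposed model's lead in percentage points."""
    baselines = {model: s for model, s in scores.items() if model != proposed}
    best = max(baselines, key=baselines.get)
    return best, scores[proposed] - baselines[best]


margins = {load: margin_over_best_baseline(scores) for load, scores in EFFICIENCY.items()}
for load, (baseline, margin) in margins.items():
    print(f"{load}: +{margin} points over {baseline}")
```

With the reported values, the strongest baseline at every load level is AWPR-FOG, and the lead of ANNDRA-IoT is 2 percentage points in each case.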

4.3.2 Resource Allocation Response Time

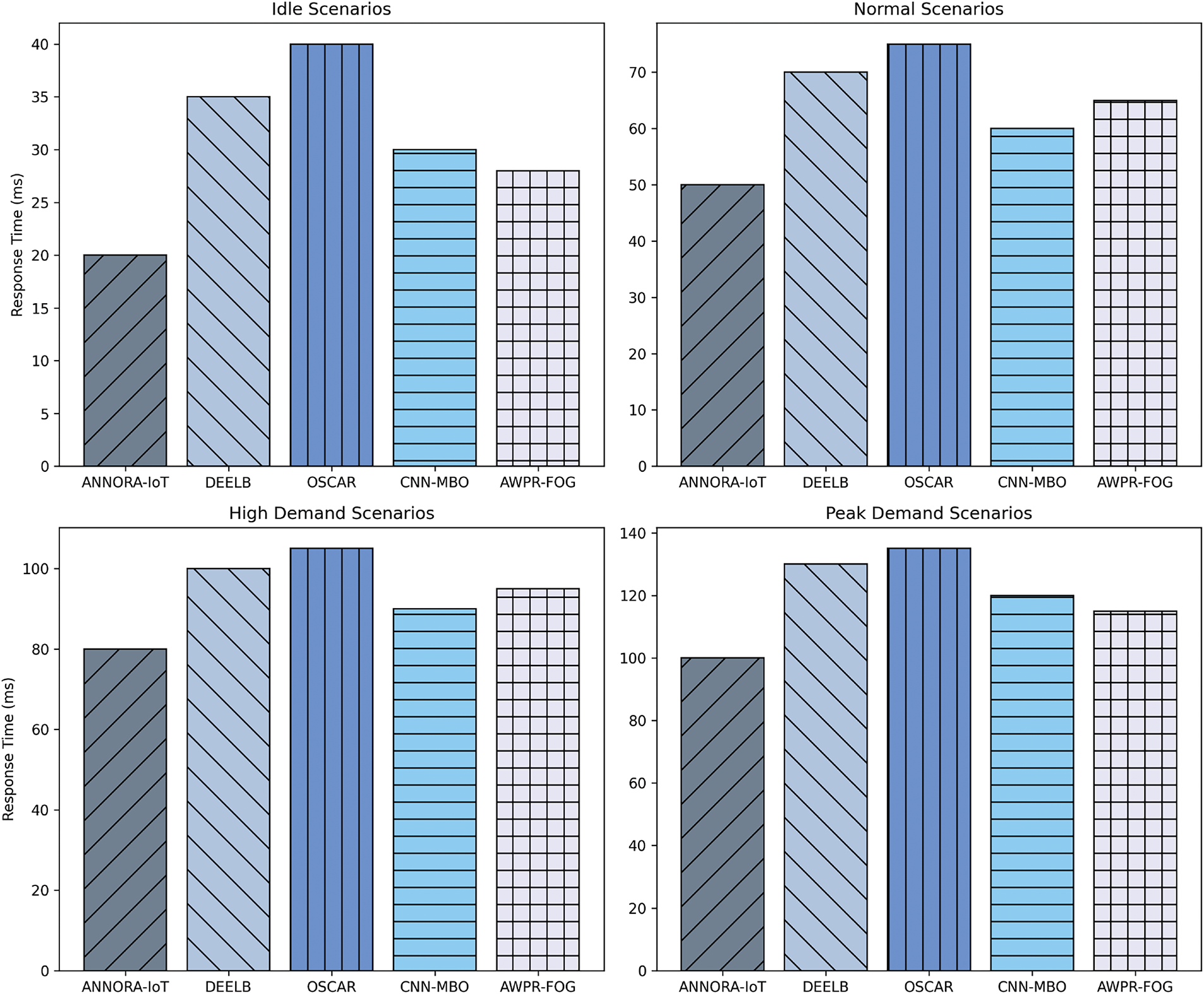

Resource allocation response time is a useful performance index for a model operating under varied conditions in IoT systems. In idle scenarios, characterized by minimal resource demands, the ANNDRA-IoT model demonstrated a rapid response time of 20 ms (see Fig. 3), significantly faster than DEELB (35 ms), OSCAR (40 ms), CNN-MBO (30 ms), and AWPR-FOG (28 ms).

Figure 3: Comparative analysis of resource allocation response times under varying operational scenarios

In high-demand situations, where resource allocation becomes more difficult, the response time of ANNDRA-IoT rose to 80 ms, still below DEELB (100 ms), OSCAR (105 ms), CNN-MBO (90 ms), and AWPR-FOG (95 ms). Finally, in the peak demand condition, which tests the system at both its resource and system limits, ANNDRA-IoT recorded a response time of 100 ms, outperforming DEELB (130 ms), OSCAR (135 ms), CNN-MBO (120 ms), and AWPR-FOG (115 ms).

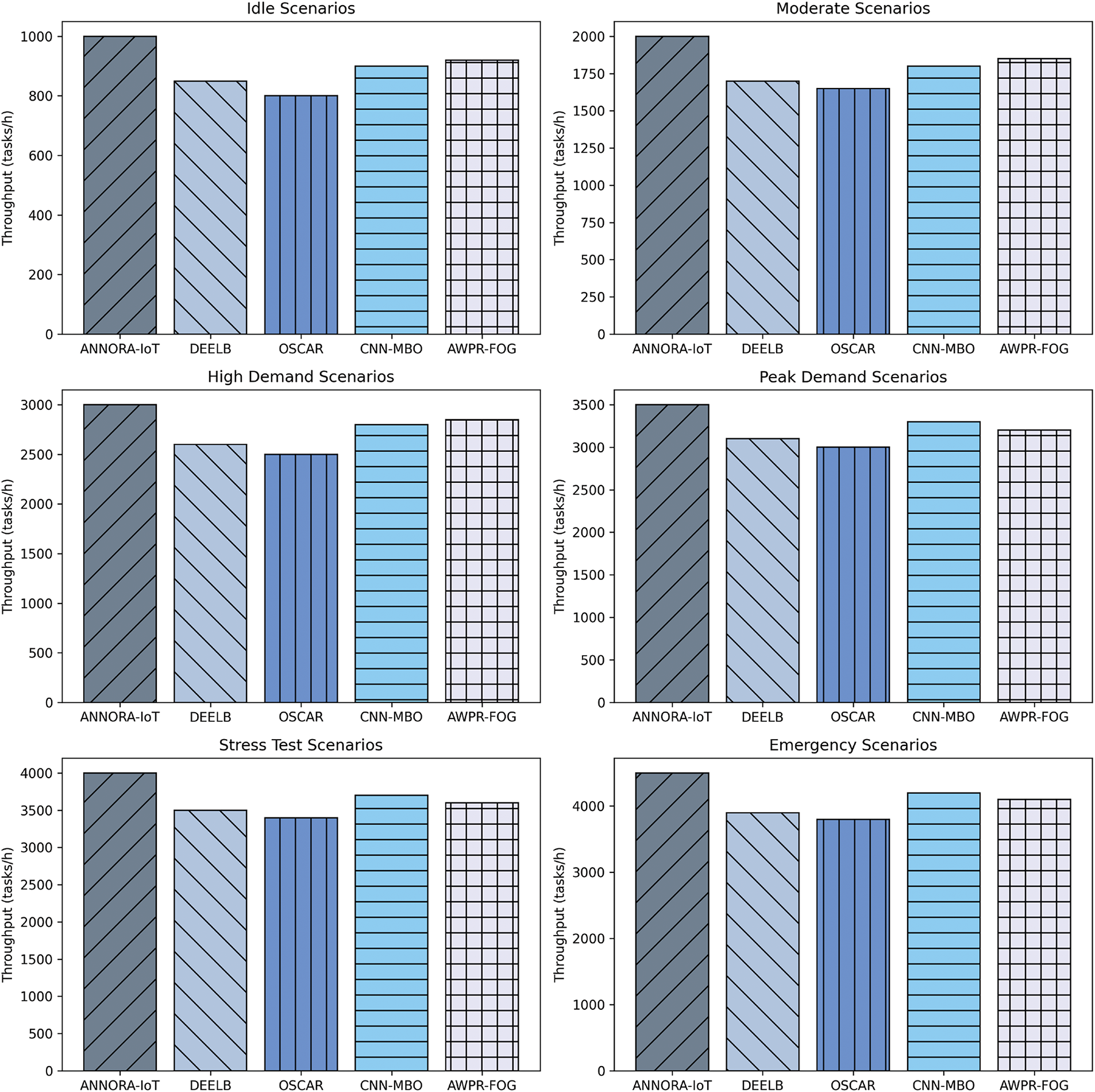

This study measured the overall work handled by the IoT system under the operational scenarios considered, which represent challenging and distinctly different conditions. The results are presented in Fig. 4. In the idle scenario, where the system load was minimal, the throughput of the ANNDRA-IoT model was 1000 tasks per hour, higher than that of DEELB (850 tasks/h), OSCAR (800 tasks/h), CNN-MBO (900 tasks/h), and AWPR-FOG (920 tasks/h). In the moderate-use scenario, which reflects regular operational demands, the throughput of the ANNDRA-IoT model was 2000 tasks per hour compared to DEELB (1700 tasks/h), OSCAR (1650 tasks/h), CNN-MBO (1800 tasks/h), and AWPR-FOG (1850 tasks/h).

Figure 4: Comparative system throughput analysis across various operational scenarios

In the high-use scenario, where demands on the system increase, ANNDRA-IoT handled a throughput of 3000 tasks per hour, higher than DEELB (2600 tasks/h), OSCAR (2500 tasks/h), CNN-MBO (2800 tasks/h), and AWPR-FOG (2850 tasks/h). Under peak demand, where the limits of the system are tested, the throughput of the ANNDRA-IoT model was 3500 tasks per hour against DEELB (3100 tasks/h), OSCAR (3000 tasks/h), CNN-MBO (3300 tasks/h), and AWPR-FOG (3200 tasks/h). In a stress test scenario, the ANNDRA-IoT model registered a throughput of 4000 tasks per hour, compared with DEELB at 3500 tasks/h, OSCAR at 3400 tasks/h, CNN-MBO at 3700 tasks/h, and AWPR-FOG at 3600 tasks/h. Finally, in the worst-case emergency scenario, in which demands are urgent, unexpected, and high in volume, the ANNDRA-IoT throughput is 4500 tasks per hour, while that of DEELB is 3900 tasks/h, OSCAR is 3800 tasks/h, CNN-MBO is 4200 tasks/h, and AW.

4.3.4 Load Balancing Effectiveness

This study evaluated the load balancing performance of the ANNDRA-IoT model under four distinct operational scenarios: low, average, high, and peak utilization. The results are presented in Fig. 5. In the low utilization scenario, with minimal system load, the ANNDRA-IoT model achieved a load balancing efficiency of 95%, indicating its capability to distribute work evenly. This was higher than DEELB (87%), OSCAR (85%), CNN-MBO (90%), and AWPR-FOG (88%), demonstrating ANNDRA-IoT’s load management under underutilized conditions. In the average utilization scenario, which represents regular operational demands, the ANNDRA-IoT model maintained a load balancing efficiency of 92%, outperforming DEELB (84%), OSCAR (80%), CNN-MBO (86%), and AWPR-FOG (85%).

Figure 5: Comparative load balancing effectiveness across various utilization scenarios

In the high utilization scenario, where increased demands make effective load balancing more challenging, ANNDRA-IoT showed an efficiency of 89%. In comparison, DEELB recorded 82%, OSCAR 78%, CNN-MBO 83%, and AWPR-FOG 81%. In the peak utilization scenario, the ANNDRA-IoT model achieved a load balancing efficiency of 87%, surpassing DEELB (79%), OSCAR (75%), CNN-MBO (81%), and AWPR-FOG (80%).

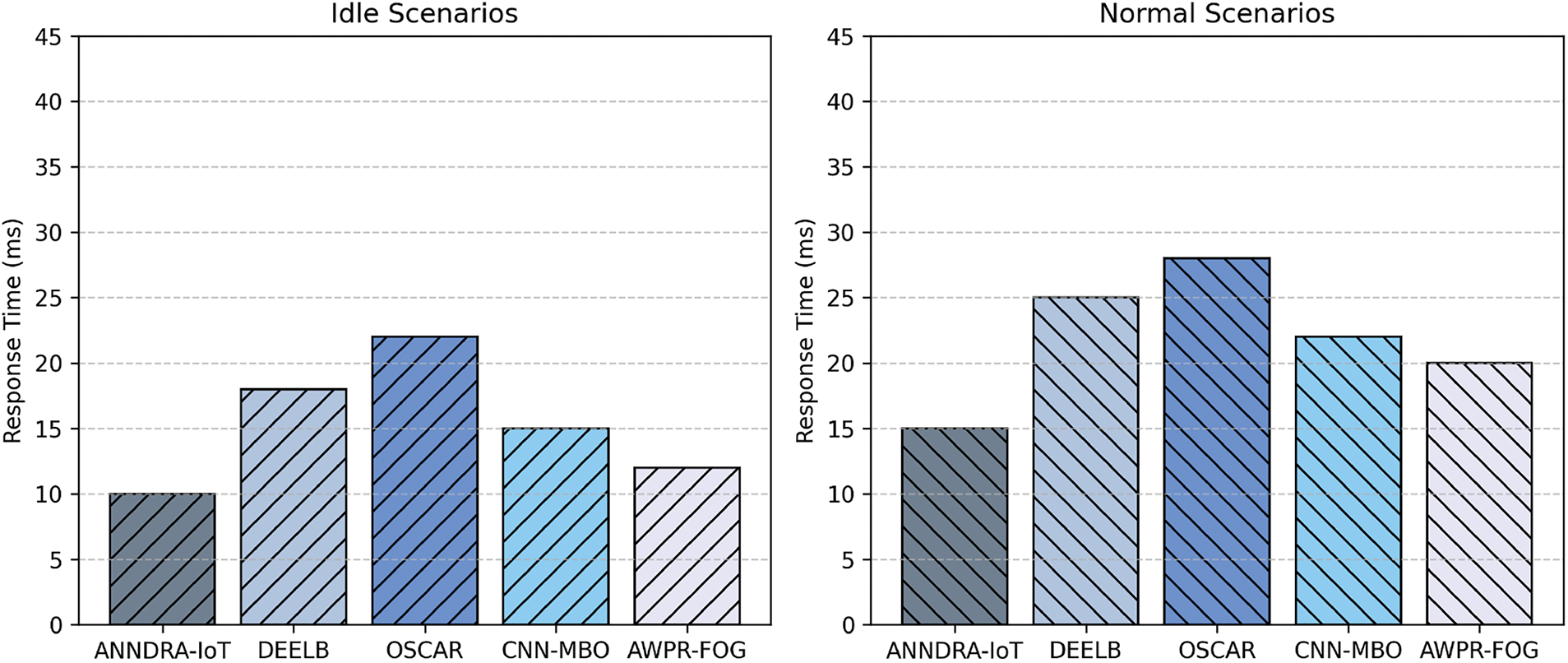

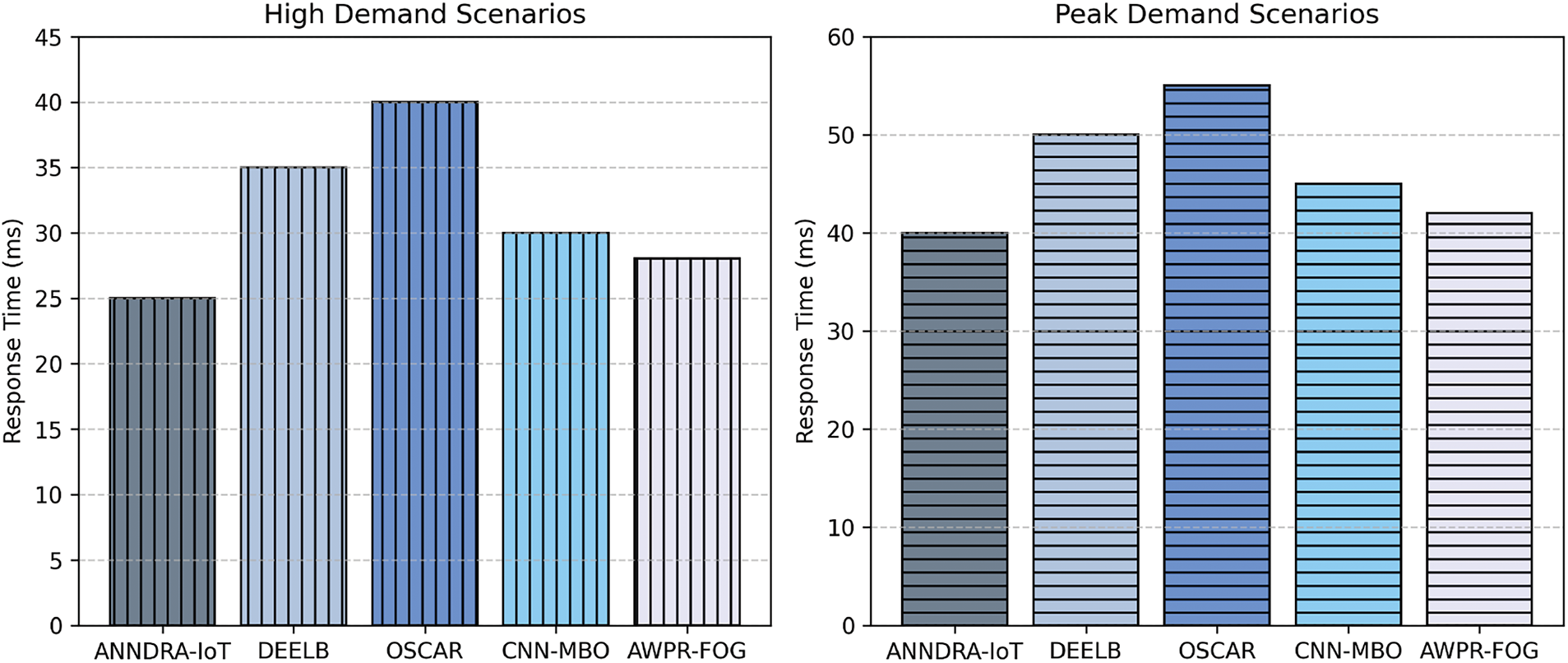

The latency performance of the ANNDRA-IoT model was compared to DEELB, OSCAR, CNN-MBO, and AWPR-FOG in four distinct scenarios. A baseline scenario was established without the ANNDRA-IoT model to highlight its impact on system latency. The results for each scenario are presented below:

• Idle Environment: In scenarios with minimal resource demand, ANNDRA-IoT demonstrated a latency of 10 ms, outperforming DEELB (18 ms), OSCAR (22 ms), CNN-MBO (15 ms), and AWPR-FOG (12 ms). Without ANNDRA-IoT, the system latency increased to 25 ms.

• Normal Operations: During typical operations, ANNDRA-IoT maintained a latency of 15 ms, significantly lower than DEELB (25 ms), OSCAR (28 ms), CNN-MBO (22 ms), and AWPR-FOG (20 ms). In systems without ANNDRA-IoT, the latency rose to 30 ms.

• High Demand: Under conditions of high demand, ANNDRA-IoT achieved a latency of 25 ms, compared to DEELB (35 ms), OSCAR (40 ms), CNN-MBO (30 ms), and AWPR-FOG (28 ms). Systems without ANNDRA-IoT recorded a latency of 45 ms.

• Peak Demand: During peak demand scenarios, ANNDRA-IoT maintained a latency of 40 ms, outperforming DEELB (50 ms), OSCAR (55 ms), CNN-MBO (45 ms), and AWPR-FOG (42 ms). Systems without ANNDRA-IoT exhibited a latency of 60 ms.

Fig. 6 depicts the outcome in these four scenarios that demonstrates the adaptability and efficiency of the ANNDRA-IoT model in diverse operational environments. These results validate the ANNDRA-IoT model as a superior solution for the allocation of real-time IoT resources.
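The baseline comparison above can be expressed as a relative latency reduction per scenario. The sketch below computes that percentage from the latencies reported in the list above, with and without ANNDRA-IoT; the dictionary layout and the helper name `reduction_percent` are illustrative.

```python
# (latency with ANNDRA-IoT, latency without ANNDRA-IoT) in ms, as reported above.
LATENCY_MS = {
    "idle": (10, 25),
    "normal": (15, 30),
    "high": (25, 45),
    "peak": (40, 60),
}


def reduction_percent(with_model: float, without_model: float) -> float:
    """Relative latency reduction versus the baseline, in percent."""
    return 100.0 * (without_model - with_model) / without_model


reductions = {
    scenario: round(reduction_percent(with_model, without_model), 1)
    for scenario, (with_model, without_model) in LATENCY_MS.items()
}
for scenario, value in reductions.items():
    print(f"{scenario}: {value}% lower latency than the baseline")
```

With the reported values, the relative reduction is 60.0% in the idle environment, 50.0% in normal operations, 44.4% under high demand, and 33.3% under peak demand, so the relative benefit narrows as demand grows even though the absolute gap stays between 15 and 20 ms.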

Figure 6: Comparative load balancing effectiveness across various utilization scenarios

5 Discussion

The proposed ANNDRA-IoT model is a novel architecture based on adaptive neural networks that addresses the complexities and dynamic nature of IoT environments. The adaptive approach employed by ANNDRA-IoT facilitates optimal resource distribution, ensuring efficient utilization while minimizing latency and improving overall system throughput. The key to this approach is its ability to intelligently interpret and respond to different IoT scenarios, thus boosting the performance and reliability of IoT systems. The implementation of LSTM-based neural networks for IoT resource management faces several key challenges. The large-scale data collection and processing carried out by these models poses a potential threat to data privacy, necessitating the development of privacy-preserving mechanisms.

Our simulation results demonstrate that ANNDRA-IoT outperforms state-of-the-art solutions such as DEELB, OSCAR, CNN-MBO, and AWPR-FOG. For example, ANNDRA-IoT achieves 95% efficiency in low-utilization scenarios, an improvement over DEELB’s 87% and OSCAR’s 85%. In terms of latency, the proposed model reduces latency to 15 ms, as opposed to 22 ms for CNN-MBO and 20 ms for AWPR-FOG. These results demonstrate the effectiveness of ANNDRA-IoT in diverse operational conditions and its potential to improve IoT resource management.

A possible future extension is the incorporation of state-of-the-art machine learning algorithms for predictive analytics, allowing the model to predict future resource requirements from trends in historical data. In addition, incorporating edge computing paradigms may allow the model to process data closer to its source, further reducing latency and speeding up decision making.

6 Conclusion

This paper introduced the ANNDRA-IoT model, which provides a significant improvement in resource allocation in IoT environments. The model addresses the complex and dynamic nature of IoT systems through its neural network-based architecture. In simulation, ANNDRA-IoT lowered latency to 15 ms, compared with 22 ms for CNN-MBO and 20 ms for AWPR-FOG. Resource utilization efficiency under low utilization scenarios peaked at 95%, compared with 87% for DEELB and 85% for OSCAR. Moreover, the system throughput with ANNDRA-IoT in high-demand scenarios was 3000 tasks per hour, better than the DEELB and OSCAR results of 2600 tasks/h and 2500 tasks/h, respectively. Load balancing efficiency was also superior in high utilization scenarios, at 89% compared with 82% for DEELB and 78% for OSCAR. The primary limitation of this study is the computational complexity of implementing LSTM networks on resource-constrained devices. Future extensions of the proposed approach can include more diverse data sets to increase efficiency in resource allocation.

Acknowledgement: None.

Funding Statement: The authors gratefully acknowledge the funding of the Deanship of Graduate Studies and Scientific Research, Jazan University, Saudi Arabia, through Project Number: ISP-2024.

Author Contributions: The authors confirm contributions to the paper as follows: study conception and design: Abdullah M. Alqahtani, Kamran Ahmad Awan; data collection: Kamran Ahmad Awan, Abdulaziz Almaleh; analysis and interpretation of results: Abdullah M. Alqahtani, Kamran Ahmad Awan, Osama Aletri; draft manuscript preparation: Kamran Ahmad Awan, Osama Aletri. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data used in this study is derived from the publicly available TON_IoT Dataset, which can be accessed at The TON_IoT Datasets (accessed on 29 January 2025). The code utilized for this research is currently part of ongoing work and will be made available upon request to maintain the integrity and progress of the research.

Ethics Approval: Not applicable.

Conflicts of Interest: The authors declare no conflicts of interest to report regarding the present study.

References

1. Zaidi T, Usman M, Aftab MU, Aljuaid H, Ghadi YY. Fabrication of flexible role-based access control based on blockchain for Internet of Things use cases. IEEE Access. 2023;11:106315–33. doi:10.1109/ACCESS.2023.3318487. [Google Scholar] [CrossRef]

2. Ahmed SF, Alam MSB, Afrin S, Rafa SJ, Taher SB, Kabir M, et al. Towards a secure 5G-enabled Internet of Things: a survey on requirements, privacy, security, challenges, and opportunities. IEEE Access. 2024;12(8):13125–45. doi:10.1109/ACCESS.2024.3352508. [Google Scholar] [CrossRef]

3. Shuaib M, Bhatia S, Alam S, Masih RK, Alqahtani N, Basheer S, et al. An optimized, dynamic, and efficient load-balancing framework for resource management in the Internet of Things (IoT) environment. Electronics. 2023;12(5):1104. doi:10.3390/electronics12051104. [Google Scholar] [CrossRef]

4. Zhang Y, He D, Vijayakumar P, Luo M, Huang X. SAPFS: an efficient symmetric-key authentication key agreement scheme with perfect forward secrecy for industrial internet of things. IEEE Internet Things J. 2023;10(11):9716–26. doi:10.1109/JIOT.2023.3234178. [Google Scholar] [CrossRef]

5. Mowla MN, Mowla N, Shah AS, Rabie K, Shongwe T. Internet of Things and wireless sensor networks for smart agriculture applications: a survey. IEEE Access. 2023;11(2):145813–52. doi:10.1109/ACCESS.2023.3346299. [Google Scholar] [CrossRef]

6. Bakar KA, Zuhra FT, Isyaku B, Sulaiman SB. A review on the immediate advancement of the Internet of Things in wireless telecommunications. IEEE Access. 2023;11(70):21020–48. doi:10.1109/ACCESS.2023.3250466. [Google Scholar] [CrossRef]

7. Araújo SO, Peres RS, Filipe L, Manta-Costa A, Lidon F, Ramalho JC, et al. Intelligent data-driven decision support for agricultural systems-ID3SAS. IEEE Access. 2023;11:115798–815. doi:10.1109/ACCESS.2023.3324813. [Google Scholar] [CrossRef]

8. Zhang F, Han G, Li A, Lin C, Liu L, Zhang Y, et al. QoS-driven distributed cooperative data offloading and heterogeneous resource scheduling for IIoT. IEEE Internet Things Mag. 2023;6(3):118–24. doi:10.1109/IOTM.001.2200264. [Google Scholar] [CrossRef]

9. Singh M, Sahoo KS, Gandomi AH. An intelligent IoT-based data analytics for freshwater recirculating aquaculture system. IEEE Internet Things J. 2023;11(3):4206–17. doi:10.1109/JIOT.2023.3298844. [Google Scholar] [CrossRef]

10. Singh SK, Kumar M, Tanwar S, Park JH. GRU-based digital twin framework for data allocation and storage in IoT-enabled smart home networks. Future Gener Comput Syst. 2024;153(1):391–402. doi:10.1016/j.future.2023.12.009. [Google Scholar] [CrossRef]

11. Xu A, Hu Z, Zhang X, Xiao H, Zheng H, Chen B, et al. QDRL: queue-aware online DRL for computation offloading in industrial Internet of Things. IEEE Internet Things J. 2023;11(5):7772–86. doi:10.1109/JIOT.2023.3316139. [Google Scholar] [CrossRef]

12. Jeribi F, Amin R, Alhameed M, Tahir A. An efficient trust management technique using ID3 algorithm with blockchain in smart buildings IoT. IEEE Access. 2022;11:8136–49. doi:10.1109/ACCESS.2022.3230944. [Google Scholar] [CrossRef]

13. Zhang F, Han G, Liu L, Zhang Y, Peng Y, Li C. Cooperative partial task offloading and resource allocation for IIoT based on decentralized multi-agent deep reinforcement learning. IEEE Internet Things J. 2023;11(3):5526–44. doi:10.1109/JIOT.2023.3306803. [Google Scholar] [CrossRef]

14. Khan D, Alshahrani A, Almjally A, Al Mudawi N, Algarni A, Al Nowaiser K, et al. Advanced IoT-based human activity recognition and localization using deep polynomial neural network. IEEE Access. 2024;12:94337–53. doi:10.1109/ACCESS.2024.3420752. [Google Scholar] [CrossRef]

15. Minhaj SU, Mahmood A, Abedin SF, Hassan SA, Bhatti MT, Ali SH, et al. Intelligent resource allocation in LoRaWAN using machine learning techniques. IEEE Access. 2023;11(4):10092–106. doi:10.1109/ACCESS.2023.3240308. [Google Scholar] [CrossRef]

16. George A, Ravindran A, Mendieta M, Tabkhi H. Mez: an adaptive messaging system for latency-sensitive multi-camera machine vision at the IoT edge. IEEE Access. 2021;9:21457–73. doi:10.1109/ACCESS.2021.3055775. [Google Scholar] [CrossRef]

17. Zou S, Wu J, Yu H, Wang W, Huang L, Ni W, et al. Efficiency-optimized 6G: a virtual network resource orchestration strategy by enhanced particle swarm optimization. Dig Commun Netw. 2024;10(5):1221–33. doi:10.1016/j.dcan.2023.06.008. [Google Scholar] [CrossRef]

18. Wadhwa H, Aron R. Optimized task scheduling and preemption for distributed resource management in fog-assisted IoT environment. J Supercomput. 2023;79(2):2212–50. doi:10.1007/s11227-022-04747-2. [Google Scholar] [CrossRef]

19. Peng M, Zhang K. Recent advances in fog radio access networks: performance analysis and radio resource allocation. IEEE Access. 2016;4:5003–9. doi:10.1109/ACCESS.2016.2603996. [Google Scholar] [CrossRef]

20. Park H, Kim N, Lee GH, Choi JK. MultiCNN-FilterLSTM: resource-efficient sensor-based human activity recognition in IoT applications. Future Gener Comput Syst. 2023;139(15):196–209. doi:10.1016/j.future.2022.09.024. [Google Scholar] [CrossRef]

21. Walia GK, Kumar M, Gill SS. AI-empowered fog/edge resource management for IoT applications: a comprehensive review, research challenges and future perspectives. IEEE Commun Surv Tutor. 2023;26(1):619–69. doi:10.1109/COMST.2023.3338015. [Google Scholar] [CrossRef]

22. Xu M. A novel machine learning-based framework for channel bandwidth allocation and optimization in distributed computing environments. EURASIP J Wirel Commun Netw. 2023;2023(1):97. doi:10.1186/s13638-023-02310-y. [Google Scholar] [CrossRef]

23. Ke H, Wang J, Wang H, Ge Y. Joint optimization of data offloading and resource allocation with renewable energy aware for IoT devices: a deep reinforcement learning approach. IEEE Access. 2019;7:179349–63. doi:10.1109/ACCESS.2019.2959348. [Google Scholar] [CrossRef]

24. Singh J, Singh P, Hedabou M, Kumar N. An efficient machine learning-based resource allocation scheme for SDN-enabled fog computing environment. IEEE Trans Veh Technol. 2023;72(6):8004–17. doi:10.1109/TVT.2023.3242585. [Google Scholar] [CrossRef]

25. Otoshi T, Murata M, Shimonishi H, Shimokawa T. Hierarchical Bayesian attractor model for dynamic task allocation in edge-cloud computing. In: 2023 International Conference on Computing, Networking and Communications (ICNC); 2023;Honolulu, HI, USA. p. 12–8. [Google Scholar]

26. Liu L, Yuan X, Chen D, Zhang N, Sun H, Taherkordi A. Multi-user dynamic computation offloading and resource allocation in 5G MEC heterogeneous networks with static and dynamic subchannels. IEEE Trans Veh Technol. 2023;72(11):14924–38. doi:10.1109/TVT.2023.3285069. [Google Scholar] [CrossRef]

27. Fan W, Liu X, Yuan H, Li N, Liu Y. Time-slotted task offloading and resource allocation for cloud-edge-end cooperative computing networks. IEEE Trans Mob Comput. 2024;23(8):8225–41. doi:10.1109/TMC.2024.3349551. [Google Scholar] [CrossRef]

28. Liu Y, Wang Y, Shen Y. Delay Doppler division multiple access resource allocation in aircraft network. IEEE Internet Things J. 2024;11(17):27881–93. doi:10.1109/JIOT.2024.3352030. [Google Scholar] [CrossRef]

29. Ortiz F, Skatchkovsky N, Lagunas E, Martins WA, Eappen G, Daoud S, et al. Energy-efficient on-board radio resource management for satellite communications via neuromorphic computing. IEEE Trans Mach Learn Commun Netw. 2024;2:169–89. doi:10.1109/TMLCN.2024.3352569. [Google Scholar] [CrossRef]

30. Badri S, Alghazzawi DM, Hasan SH, Alfayez F, Hasan SH, Rahman M, et al. An efficient and secure model using adaptive optimal deep learning for task scheduling in cloud computing. Electronics. 2023;12(6):1441. doi:10.3390/electronics12061441. [Google Scholar] [CrossRef]

31. Kumar M, Kishor A, Samariya JK, Zomaya AY. An autonomic workload prediction and resource allocation framework for fog-enabled industrial IoT. IEEE Internet Things J. 2023;10(11):9513–22. doi:10.1109/JIOT.2023.3235107. [Google Scholar] [CrossRef]

32. Baccour E, Mhaisen N, Abdellatif AA, Erbad A, Mohamed A, Hamdi M, et al. Pervasive AI for IoT applications: a survey on resource-efficient distributed artificial intelligence. IEEE Commun Surv Tutor. 2022;24(4):2366–418. doi:10.1109/COMST.2022.3200740. [Google Scholar] [CrossRef]

33. Kuthadi VM, Selvaraj R, Baskar S, Shakeel PM, Ranjan A. Optimized energy management model on data distributing framework of wireless sensor network in IoT system. Wirel Pers Commun. 2022;127(2):1377–403. doi:10.1007/s11277-021-08583-0. [Google Scholar] [CrossRef]

34. Mwase C, Jin Y, Westerlund T, Tenhunen H, Zou Z. Communication-efficient distributed AI strategies for the IoT edge. Future Gener Comput Syst. 2022;131(3):292–308. doi:10.1016/j.future.2022.01.013. [Google Scholar] [CrossRef]

35. Chen S, Yang C, Huang W, Liang W, Ke N, Souri A, et al. Fairness constraint efficiency optimization for multiresource allocation in a cluster system serving Internet of Things. Int J Commun Syst. 2023;36(3):e5395. doi:10.1002/dac.5395. [Google Scholar] [CrossRef]

36. Zare M, Sola YE, Hasanpour H. Imperialist competitive based approach for efficient deployment of IoT services in fog computing. Cluster Comput. 2023;27:1–14. [Google Scholar]

37. Salh A, Ngah R, Audah L, Kim KS, Abdullah Q, Al-Moliki YM, et al. Energy-efficient federated learning with resource allocation for green IoT edge intelligence in B5G. IEEE Access. 2023;11:16353–67. doi:10.1109/ACCESS.2023.3244099. [Google Scholar] [CrossRef]

38. Khashan OA, Khafajah NM. Efficient hybrid centralized and blockchain-based authentication architecture for heterogeneous IoT systems. J King Saud Univ Comput Inf Sci. 2023;35(2):726–39. doi:10.1016/j.jksuci.2023.01.011. [Google Scholar] [CrossRef]

39. Huangpeng Q, Yahya RO. Distributed IoT services placement in fog environment using optimization-based evolutionary approaches. Expert Syst Appl. 2024;237(1):121501. doi:10.1016/j.eswa.2023.121501. [Google Scholar] [CrossRef]

40. Diwaker C, Sharma A. ETOSP: energy-efficient task offloading strategy based on partial offloading in mobile edge computing framework for efficient resource management. J Auton Intell. 2024;7(3). [Google Scholar]

41. Lara-Benítez P, Carranza-García M, Riquelme JC. An experimental review on deep learning architectures for time series forecasting. Int J Neural Syst. 2021;31(3):2130001. doi:10.1142/S0129065721300011. [Google Scholar] [PubMed] [CrossRef]

42. Liu Y, Liu A, Xia Y, Hu B, Liu J, Wu Q, et al. A blockchain-based cross-domain authentication management system for IoT devices. IEEE Trans Netw Sci Eng. 2023;11(1):115–27. doi:10.1109/TNSE.2023.3292624. [Google Scholar] [CrossRef]

43. Muazu T, Yingchi M, Muhammad AU, Ibrahim M, Samuel O, Tiwari P. IoMT: a medical resource management system using edge-empowered blockchain federated learning. IEEE Trans Netw Serv Manag. 2023;21(1):517–34. doi:10.1109/TNSM.2023.3308331. [Google Scholar] [CrossRef]

44. Syu JH, Lin JCW, Srivastava G, Yu K. A comprehensive survey on artificial intelligence empowered edge computing on consumer electronics. IEEE Trans Consum Electron. 2023;69(4):1023–34. doi:10.1109/TCE.2023.3318150. [Google Scholar] [CrossRef]

45. Nabi ST, Islam MR, Alam MGR, Hassan MM, AlQahtani SA, Aloi G, et al. Deep learning-based fusion model for multivariate LTE traffic forecasting and optimized radio parameter estimation. IEEE Access. 2023;11(5):14533–49. doi:10.1109/ACCESS.2023.3242861. [Google Scholar] [CrossRef]

46. Rajagukguk RA, Ramadhan RAA, Lee HJ. A review on deep learning models for forecasting time series data of solar irradiance and photovoltaic power. Energies. 2020;13(24):6623. doi:10.3390/en13246623. [Google Scholar] [CrossRef]

47. Ali NF, Atef M. An efficient hybrid LSTM-ANN joint classification-regression model for PPG-based blood pressure monitoring. Biomed Signal Process Control. 2023;84(2):104782. doi:10.1016/j.bspc.2023.104782. [Google Scholar] [CrossRef]


Copyright © 2025 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
