Open Access

REVIEW

Analyzing Real-Time Object Detection with YOLO Algorithm in Automotive Applications: A Review

Department of Automotive and Transport Engineering, Transilvania University of Brasov, Brasov, 500036, Romania

* Corresponding Author: Carmen Gheorghe. Email:

Computer Modeling in Engineering & Sciences 2024, 141(3), 1939-1981. https://doi.org/10.32604/cmes.2024.054735

Received 06 June 2024; Accepted 05 September 2024; Issue published 31 October 2024

Abstract

Real-time object identification is a rapidly developing technology with great potential for expansion in many technical fields. Currently, systems that use image processing to detect objects rely on the information contained in a single frame. A video camera positioned in the analyzed area captures the images, and the changes that occur between frames are monitored in detail. The You Only Look Once (YOLO) algorithm is an object detection model that is currently known for its accuracy and fast inference speed. This study presents a comprehensive literature review of YOLO research, together with a bibliometric analysis that maps trends in the automotive field from 2020 to 2024. Object detection applications using YOLO were grouped into three primary domains: road traffic, autonomous vehicle development, and industrial settings. Each domain was analyzed in detail, providing quantitative insights into existing implementations. Among the YOLO architectures evaluated (v2–v8, H, X, R, C), YOLO v8 demonstrated superior performance, with a mean Average Precision (mAP) of 0.99.

Modern transportation systems are becoming increasingly complex, demanding creative solutions to challenges such as traffic jams, safety issues, and overall efficiency. In this context, autonomous vehicles have emerged as a potentially revolutionary technological advancement in transportation. A fundamental requirement for safe and reliable autonomous vehicle operation is real-time object detection. By accurately identifying and tracking vehicles, pedestrians, traffic signs, and other relevant objects in the surrounding environment, autonomous vehicles can make informed decisions, navigate complex traffic scenarios, and mitigate potential hazards. This capability allows vehicles to perceive and understand their environment, enabling them to travel safely on highways and interact with other vehicles and pedestrians [1]. Moreover, object detection is essential for advanced driver assistance systems (ADAS) [2], which provide drivers with essential information and support, ultimately contributing to safer roadways. In this context, deep learning-based object detectors [3] have shown outstanding results, surpassing traditional methods [4].

Among various object detection algorithms, You Only Look Once (YOLO) has become very popular in the automotive field due to its emphasis on real-time performance [5]. YOLO’s single-stage architecture speeds up the object detection process, making it faster than its multi-stage counterparts. This speed is particularly crucial for autonomous vehicles since they need to be able to detect objects quickly and accurately to avoid collisions and make decisions in real time.

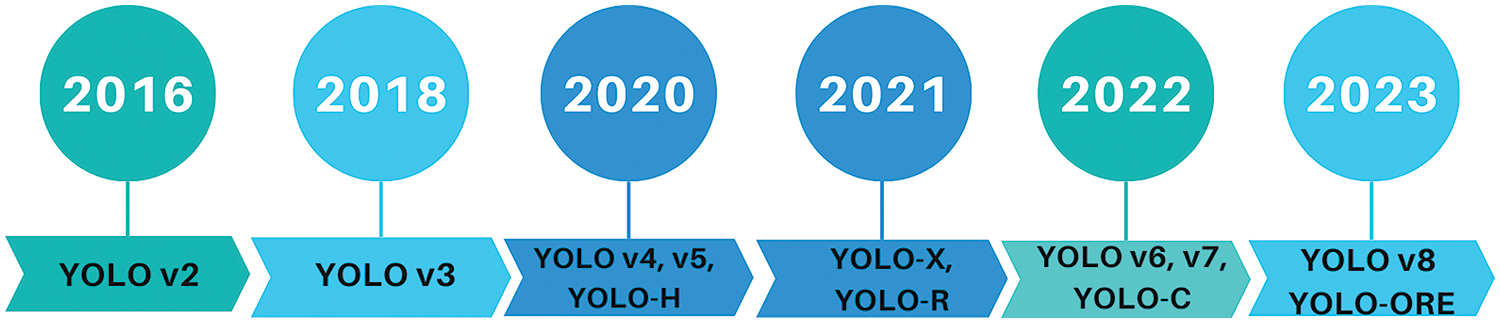

YOLO was introduced in 2015 with its first version, YOLO v1. Since then, owing to its ability to detect multiple objects in real time, YOLO has been applied to various computer vision tasks, such as vehicle detection and monitoring, autonomous and intelligent vehicles, and the manufacturing industry. However, the YOLO version used in a given study may vary depending on when researchers started integrating it into their systems or projects, as seen in Fig. 1.

Figure 1: Timeline of YOLO detection models applied in the automotive field between 2016–2023

YOLO can detect objects in real time, at several frames per second even on devices with limited resources. However, several improved versions were needed before it could detect and locate objects in an image with high accuracy.

YOLO v4 addressed most of the shortcomings of previous versions. It offered a better balance between detection accuracy and speed, improved post-processing that reduced false positives and improved object localization, and handled multi-scale features more effectively, which improved the detection of objects at various scales. Later versions also brought improvements and refinements: YOLO v5 focused on ease of use, performance optimization, and practical deployment features; YOLO v6 aimed at industrial-grade accuracy and efficiency, with multiple model sizes and optimizations for edge devices; YOLO v7 introduced novel architectural improvements and advanced training techniques, achieving state-of-the-art performance in both accuracy and speed; and YOLO v8 brought further gains in accuracy and speed, advanced feature fusion, and optimized training and post-processing. Nevertheless, YOLO v4 marked a substantial leap over YOLO v3 and YOLO v2 by addressing numerous shortcomings and introducing several key innovations, which makes it particularly noteworthy in the evolution of the YOLO family of models.

YOLO covers a wide range of domains, from object detection in medical images to detection systems in autonomous vehicles and face detection in selfie images. As an algorithm for detecting objects in images, YOLO meets the needs of several types of specialists in the vehicle field, whether the focus is road traffic or vehicle production. In industrial settings, combining object detection with augmented reality can increase safety by visualizing operators who perform dangerous maneuvers in the production area.

One of the main advantages of using the YOLO algorithm in the automotive field is the successful implementation of vehicle detection in homogeneous traffic [6]. This supports the transformation of ordinary cities into smart cities through video monitoring of road traffic, which involves installing video surveillance cameras and applying moving-object detection algorithms. Simply adjusting the traffic light timings on the main traffic arteries has the potential to reduce traffic congestion, especially during peak hours [7]. In car parking systems, license plate recognition through object detection and text recognition is a big step forward in automating large urban parking areas, especially those of shopping centers [8]. Furthermore, the YOLO object detection model combined with Py-tesseract for text recognition achieved an accuracy of 97% in vehicle number plate recognition [9].

This paper examines the application of YOLO in automotive settings. To the best of our knowledge, the literature lacks a study analyzing the emerging trends related to this technology in the automotive field, although many articles review the developments of the YOLO algorithm [10–14] or its use in different fields [15–19]. The motivation for research on YOLO video detection in the automotive field, including applications in road traffic, autonomous vehicles, and industrial manufacturing, stems from the growing demand for efficient and accurate computer vision systems in these domains. The review begins with a comprehensive literature survey to identify existing research articles, papers, and studies on YOLO implementations specifically in road traffic management, autonomous vehicle applications, and industrial manufacturing. This involves searching databases, academic journals, conference proceedings, and relevant online resources.

The structure of this review is as follows: Section 1 introduces the motivation and related studies. Section 2 provides an overview of the methods and research techniques used to identify the published papers. Section 3 focuses on the three most common research fields. Section 4 summarizes the research and its limitations, and Section 5 presents the conclusions and future research directions. Structuring the review in this manner ensures a thorough and systematic analysis of existing research on YOLO implementations in the specified domains, contributing to the collective understanding of the technology’s applications, advancements, and areas for future exploration within the automotive and road traffic sectors.

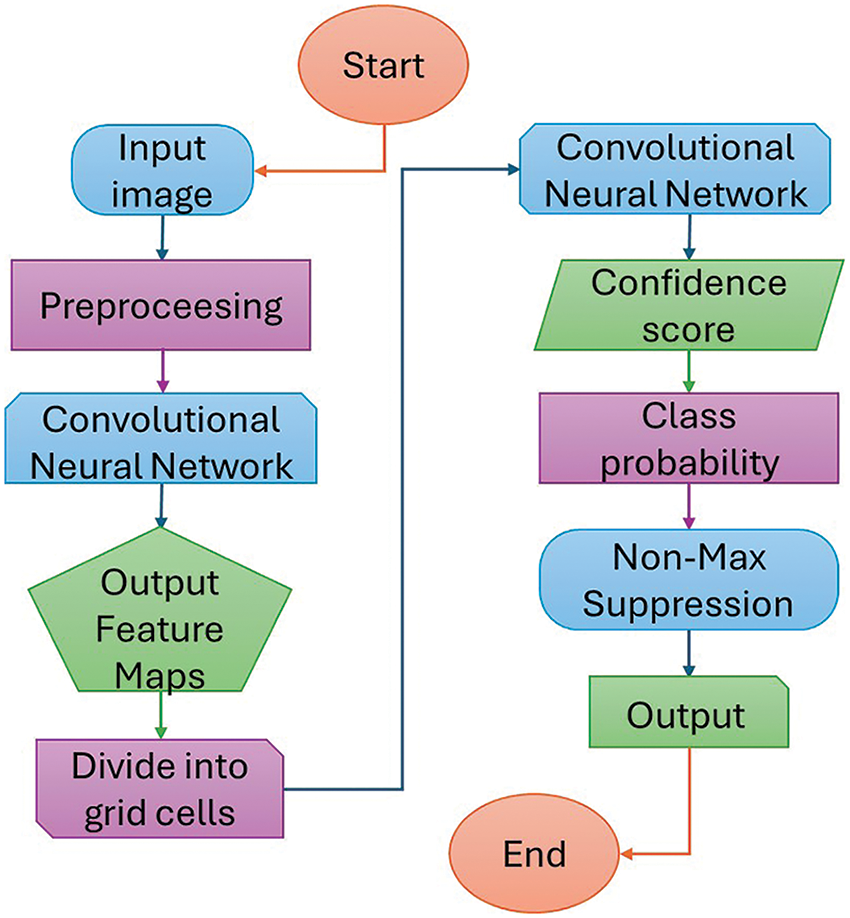

The YOLO algorithm flowchart consists of several key components that work together to perform object detection in images. The algorithm takes an image of fixed size as input; depending on the YOLO version, the image may first undergo preprocessing steps such as resizing or normalization. The input image is then passed through a neural network backbone based on Convolutional Neural Network (CNN) architectures such as Darknet or ResNet. The backbone extracts hierarchical features from the image at multiple scales, producing feature maps that encode both low-level details and high-level semantic information about the objects present, with deeper layers capturing more abstract concepts.
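As a minimal sketch of the resizing and normalization step, the functions below compute an aspect-ratio-preserving "letterbox" resize to a square network input, map a box predicted on that input back to original image coordinates, and scale pixel values to [0, 1]. Letterboxing, the 640-pixel input size, and all function names are assumptions for illustration; the exact preprocessing differs between YOLO versions.

```python
from typing import Tuple

def letterbox_params(img_w: int, img_h: int, target: int = 640) -> Tuple[float, int, int, int, int]:
    """Return (scale, new_w, new_h, pad_left, pad_top) for a letterbox resize.

    The image is scaled uniformly so that it fits inside a target x target
    canvas; the remaining border is split evenly as padding.
    """
    scale = min(target / img_w, target / img_h)
    new_w, new_h = round(img_w * scale), round(img_h * scale)
    pad_left = (target - new_w) // 2
    pad_top = (target - new_h) // 2
    return scale, new_w, new_h, pad_left, pad_top

def to_original(box_xyxy, scale: float, pad_left: int, pad_top: int):
    """Map a box (x1, y1, x2, y2) on the letterboxed input back to original pixels."""
    x1, y1, x2, y2 = box_xyxy
    return ((x1 - pad_left) / scale, (y1 - pad_top) / scale,
            (x2 - pad_left) / scale, (y2 - pad_top) / scale)

def normalize_pixel(value: int) -> float:
    """Scale an 8-bit pixel intensity to the [0, 1] range."""
    return value / 255.0

# A 1280x720 frame fits a 640x640 input at scale 0.5, with 140 px of padding above and below.
print(letterbox_params(1280, 720))  # (0.5, 640, 360, 0, 140)
```

Letterboxing is shown because it avoids distorting vehicle shapes; a plain stretch to the input size is the simpler alternative.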

A detection head, consisting of convolutional layers responsible for predicting bounding boxes, confidence scores, and class probabilities, is attached to the feature maps generated by the backbone. The detection head predicts bounding boxes for potential objects by applying a set of convolutional filters to the feature maps; each box is represented by its coordinates (x, y, width, height) relative to the image dimensions. The confidence score estimated by the detection head reflects both the accuracy of the bounding box prediction and the probability that an object is present within the box. In addition, the detection head predicts class probabilities for each bounding box, indicating how likely it is that the object within the box belongs to each predefined class (e.g., car, truck, bus, tram). In the next step, the algorithm applies Non-Max Suppression (NMS) to remove redundant or overlapping detections, so that only the most confident, non-overlapping detection of each object is retained. The output of the YOLO algorithm is a set of bounding boxes, each associated with a confidence score and class probabilities; these boxes represent the detected objects in the input image, together with their class labels and confidence levels. The flow chart of the YOLO implementation is presented in Fig. 2.
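The scoring and NMS steps described above can be sketched in plain Python. The sketch assumes boxes in corner format (x1, y1, x2, y2), combines the confidence score with the class probability as in the original YOLO v1 formulation (class-specific score = confidence × class probability), and uses greedy NMS with an assumed IoU threshold of 0.5. It is illustrative only; production implementations are vectorized and usually run NMS separately for each class.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corner format

def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def best_class(confidence: float, class_probs: Sequence[float]) -> Tuple[int, float]:
    """Return (class index, class-specific score) for one predicted box."""
    idx = max(range(len(class_probs)), key=lambda k: class_probs[k])
    return idx, confidence * class_probs[idx]

def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

# Two overlapping detections of one car (IoU = 0.81) and one separate vehicle.
print(nms([(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)], [0.9, 0.8, 0.7]))  # [0, 2]
```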

Figure 2: The YOLO algorithm flowchart

This detailed understanding of YOLO’s implementation highlights why it is effective for real-time object detection, balancing speed and accuracy through its innovative grid-based approach, efficient architecture, and robust post-processing techniques. The YOLO algorithm is widely applicable in various real-life scenarios in the automotive field. Traffic monitoring systems use YOLO mostly for managing and analyzing traffic flow. Usually, a traffic camera monitors an intersection or any busy road section and then YOLO detects multiple vehicles in the camera feed. NMS is applied to eliminate redundant detections, ensuring accurate counting and tracking of vehicles. YOLO assigns class probabilities to each detected object, allowing the system to differentiate between various vehicle types (e.g., car, truck, tram, bus). This information can be used for traffic analysis and congestion management.
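To illustrate how per-class vehicle counts can be derived once detections are linked across frames, the sketch below counts each tracked vehicle once when its centroid crosses a virtual horizontal counting line. The track format, the counting line, and the function name are hypothetical; the tracker that produces the tracks (with NMS applied upstream) is assumed to exist.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical track format: track_id -> (class label, centroid y-coordinate per frame)
Tracks = Dict[int, Tuple[str, List[float]]]

def count_line_crossings(tracks: Tracks, line_y: float) -> Counter:
    """Count each track once, per class, when its centroid crosses y = line_y."""
    counts: Counter = Counter()
    for label, ys in tracks.values():
        for prev, curr in zip(ys, ys[1:]):
            # Crossing in either direction between two consecutive frames.
            if prev < line_y <= curr or curr < line_y <= prev:
                counts[label] += 1
                break  # count each vehicle only once
    return counts

tracks = {
    1: ("car", [100.0, 150.0, 210.0]),   # moves down across the line
    2: ("truck", [50.0, 80.0, 120.0]),   # never reaches the line
    3: ("car", [300.0, 250.0, 190.0]),   # moves up across the line
}
print(count_line_crossings(tracks, line_y=200.0))  # Counter({'car': 2})
```

Counting crossings rather than raw detections avoids counting the same vehicle in every frame in which it is visible.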

In autonomous driving, YOLO object detection is essential for the safe and efficient operation of self-driving cars. While driving, the car encounters various objects such as pedestrians, other vehicles, traffic signs, and obstacles. In the feature extraction step, the YOLO algorithm divides the camera frame into a grid and extracts features from each grid cell, which allows the system to detect multiple objects within the same frame. YOLO then predicts bounding boxes around pedestrians, vehicles, and other relevant objects using predefined anchor boxes that are adjusted to fit the detected objects. Because each frame is processed in real time, these detections provide the car’s control systems with the data needed to make immediate decisions, such as stopping for pedestrians or navigating around obstacles. YOLO can also be integrated into advanced driver assistance systems to prevent accidents: using grid division and real-time inference, the system continuously analyzes the road ahead, detecting obstacles such as fallen debris or animals and either alerting the driver or taking autonomous action to avoid a collision. In addition, using class probabilities and object recognition, YOLO detects and classifies traffic signs and signals, providing crucial information to the driver or autonomous system to ensure compliance with traffic rules and enhance safety.
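The grid and anchor mechanisms can be made concrete with a small sketch. The first function assigns an object to the grid cell containing its center (the YOLO v1 rule for which cell is responsible for an object); the second decodes raw network outputs (tx, ty, tw, th) into a box using an anchor, following the YOLO v2/v3 formulation bx = σ(tx) + cx, by = σ(ty) + cy, bw = pw·e^tw, bh = ph·e^th. The 416-pixel input, 13 × 13 grid, and anchor size are illustrative assumptions, with anchors given in pixels as in YOLO v3 configuration files. Later versions change this decoding, and YOLO v8 is anchor-free.

```python
import math
from typing import Tuple

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def responsible_cell(x_center: float, y_center: float, img_w: int, img_h: int,
                     grid_size: int) -> Tuple[int, int]:
    """(row, col) of the S x S grid cell that contains the object's center."""
    col = min(int(x_center / img_w * grid_size), grid_size - 1)
    row = min(int(y_center / img_h * grid_size), grid_size - 1)
    return row, col

def decode_box(tx: float, ty: float, tw: float, th: float, cx: int, cy: int,
               anchor_w: float, anchor_h: float, stride: int) -> Tuple[float, float, float, float]:
    """YOLO v2/v3-style decoding into a center-format box (bx, by, bw, bh) in pixels.

    (cx, cy): column and row index of the grid cell; stride: pixels per cell.
    """
    bx = (sigmoid(tx) + cx) * stride  # sigmoid keeps the center inside its cell
    by = (sigmoid(ty) + cy) * stride
    bw = anchor_w * math.exp(tw)       # exp scales the anchor up or down
    bh = anchor_h * math.exp(th)
    return bx, by, bw, bh

# 416 x 416 input with a 13 x 13 grid gives a stride of 32 pixels per cell.
print(responsible_cell(200.0, 100.0, 416, 416, 13))  # (3, 6)
print(decode_box(0.0, 0.0, 0.0, 0.0, cx=6, cy=3, anchor_w=30.0, anchor_h=60.0, stride=32))
# (208.0, 112.0, 30.0, 60.0)
```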

In automotive manufacturing plants, YOLO is employed for real-time quality control and defect detection. Using grid division and feature extraction, YOLO can inspect car parts on a production line: the system divides images of car parts into grids and extracts features to identify defects such as scratches, dents, or misalignments. When a defect is present, YOLO predicts a bounding box around it and provides a confidence score indicating the likelihood that the defect is present. High-confidence detections are flagged for further inspection or removal from the production line.

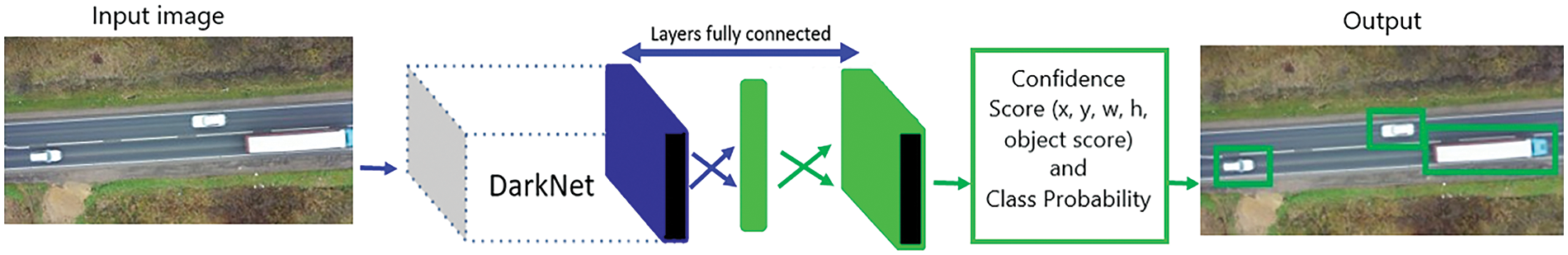

Fig. 3 illustrates the core functionality of the YOLO algorithm using an image of real-time traffic captured by a drone.

Figure 3: The core functionality of YOLO

This section furnishes essential details and guidelines necessary for conducting a focused systematic literature review centered on real-time object detection in the automotive field, utilizing the YOLO object detection algorithm.

The review questions originate from the main goal of the investigation and structure the literature search process, influencing the criteria used to decide which studies are included or excluded from the review. Additionally, these questions guide the interpretation and analysis of the review results.

The following are the questions formulated in this review:

Review Question 1: What are the automotive field’s main applications of real-time object detection and recognition using YOLO?

Knowing the main applications of YOLO in the automotive field helps researchers identify both opportunities and challenges in real-time object detection. Real-time object detection and recognition using YOLO have diverse applications in the automotive field, contributing to safer, more efficient, and smarter traffic, vehicles, and manufacturing systems.

Review Question 2: What hardware is used for the implementation of real-time object detection systems based on YOLO?

Hardware serves as a vital component in automotive recognition systems, furnishing the processing and storage capacity required to analyze and process video images captured by cameras. In the absence of appropriate hardware, automotive recognition systems would struggle to operate effectively, hindering their ability to detect and track objects in real time. Additionally, hardware significantly influences the speed and efficiency of traffic recognition and detection systems; stronger hardware capabilities enable faster and more accurate processing and analysis of images.

Review Question 3: Which metrics are employed to gauge the effectiveness of object detection within the realm of real-time automotive applications, when employing YOLO for object detection?

Accurate performance metrics are essential for ensuring the effectiveness of real-time object detection and recognition systems. Inaccuracies in object detection could compromise the functionality and safety of these systems, potentially posing risks to humans. Understanding relevant metrics enables the identification of errors within object detection systems and facilitates the development of solutions to enhance accuracy.

Review Question 4: Which YOLO version achieved the best results when these systems were validated?

Understanding the performance differences between different versions of YOLO helps in making informed decisions regarding algorithm selection, optimization strategies, and deployment considerations in various applications and scenarios.

Review Question 5: What are the challenges and trends encountered in real-time object detection in the automotive field using YOLO?

Understanding the challenges and trends associated with object detection in the automotive sector using YOLO helps researchers and developers pinpoint areas requiring technological advancement. Moreover, awareness of these challenges fosters a deeper understanding of the technology’s constraints and possible drawbacks, facilitating informed decisions about how best to use it.

This section outlines the procedures employed to identify, select, and assess pertinent studies for incorporation into the review. It encompasses details regarding databases and information sources, keywords, as well as inclusion and exclusion criteria utilized in the identification of relevant studies.

The published articles used in this review were retrieved from the Clarivate Web of Science (WoS) and Scopus online platforms in March and April 2024, using the search query “YOLO AND (traffic OR automotive OR vehicle OR car) AND (detection OR identification)”.

These keywords were chosen to retrieve relevant results on the use of YOLO in automotive applications. The chosen timeframe, 2020 to 2024, captures recent advancements and innovations in computer vision, deep learning, and object detection algorithms such as YOLO. Focusing on this period allows us to discuss the latest improvements, optimizations, and applications of YOLO in automotive contexts, as well as how YOLO has evolved and adapted to meet the growing demands and challenges of the automotive industry. In addition, many research papers, publications, and open-source implementations related to YOLO and automotive applications were released within this timeframe.

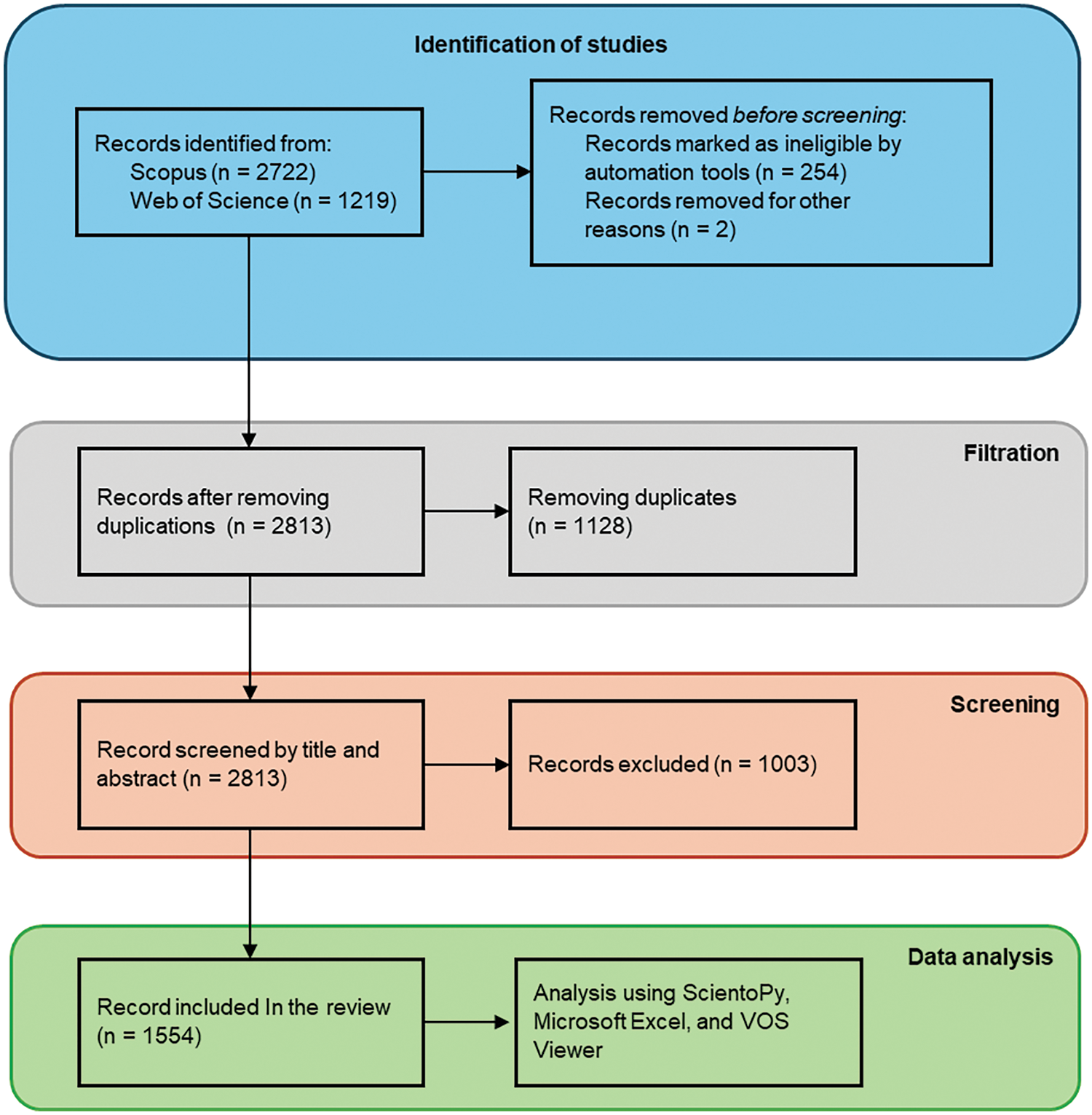

The Clarivate Web of Science and Scopus databases were chosen for their broad coverage of publications and their commitment to indexing high-quality, peer-reviewed academic literature. Both databases excel in citation tracking, enabling researchers to trace citation relationships between articles and identify influential papers in specific fields. Moreover, they offer advanced search functionalities that support precise queries and allow results to be filtered by criteria such as publication year, author, and journal. The selected search keywords returned 1219 articles from WoS and 2722 articles from Scopus. The full study selection process is presented in Fig. 4.

Figure 4: Flowchart of the review process

To ensure a consistent and reliable dataset, the search was limited to peer-reviewed journal articles and conference papers published in English; other document types, such as reviews, letters, book chapters, book reviews, and data papers, were excluded. This yielded a combined dataset of 3941 documents (1219 from WoS and 2722 from Scopus). Comprehensive bibliographic information, including full records and cited references, was extracted from the two databases and imported into Microsoft Excel.

The ScientoPy [20] tool was used to preprocess these datasets and eliminate duplicate papers; it identifies and removes redundant entries, ensuring that the final dataset is free from duplication. After preprocessing, 2813 unique papers were obtained. Two researchers (Carmen Gheorghe and Razvan Gabriel Boboc) independently screened the record list to ensure adherence to the established criteria. Disagreements were resolved by consensus with a third researcher (Mihai Duguleana). Finally, 1554 articles were included in the review.
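The deduplication step can be illustrated with a simple sketch that treats two records as the same paper when they share a DOI or the same normalized title. This is not ScientoPy’s implementation; the record fields (`title`, `doi`) and the matching rule are assumptions chosen to show how merged WoS and Scopus exports can be reduced to unique papers.

```python
import re
from typing import Dict, List

def normalize_title(title: str) -> str:
    """Lowercase and drop non-alphanumeric characters so formatting differences vanish."""
    return re.sub(r"[^a-z0-9]", "", title.lower())

def deduplicate(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep the first occurrence of each paper, matching on DOI or normalized title."""
    seen_dois, seen_titles = set(), set()
    unique = []
    for rec in records:
        doi = rec.get("doi", "").strip().lower()
        title = normalize_title(rec.get("title", ""))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # duplicate of an earlier record
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        unique.append(rec)
    return unique

# Hypothetical records: the second repeats the first title, the third repeats its DOI.
merged = [
    {"title": "YOLO for Traffic", "doi": "10.1/abc"},
    {"title": "Yolo for traffic.", "doi": ""},
    {"title": "Other study", "doi": "10.1/ABC"},
    {"title": "A new paper", "doi": ""},
]
print(len(deduplicate(merged)))  # 2
```

Matching on either key catches records that have a DOI in one database but not in the other.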

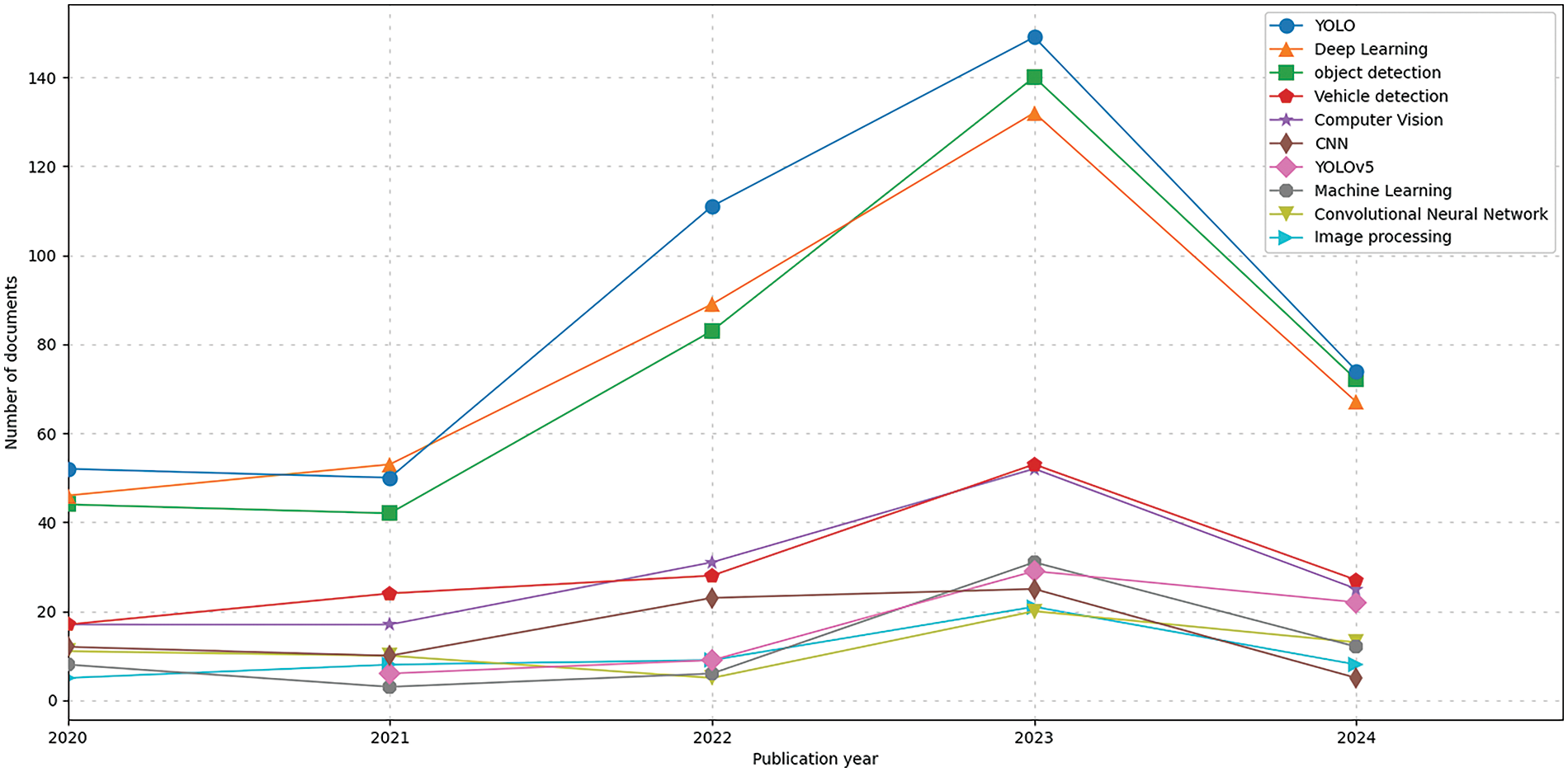

ScientoPy also reveals the subjects associated with published articles whose main research topic is automotive. Applying ScientoPy to the authors’ subjects (based on all their keywords, including YOLO) produced the timeline shown in Fig. 5.

Figure 5: Timeline and trending for top 10 topics

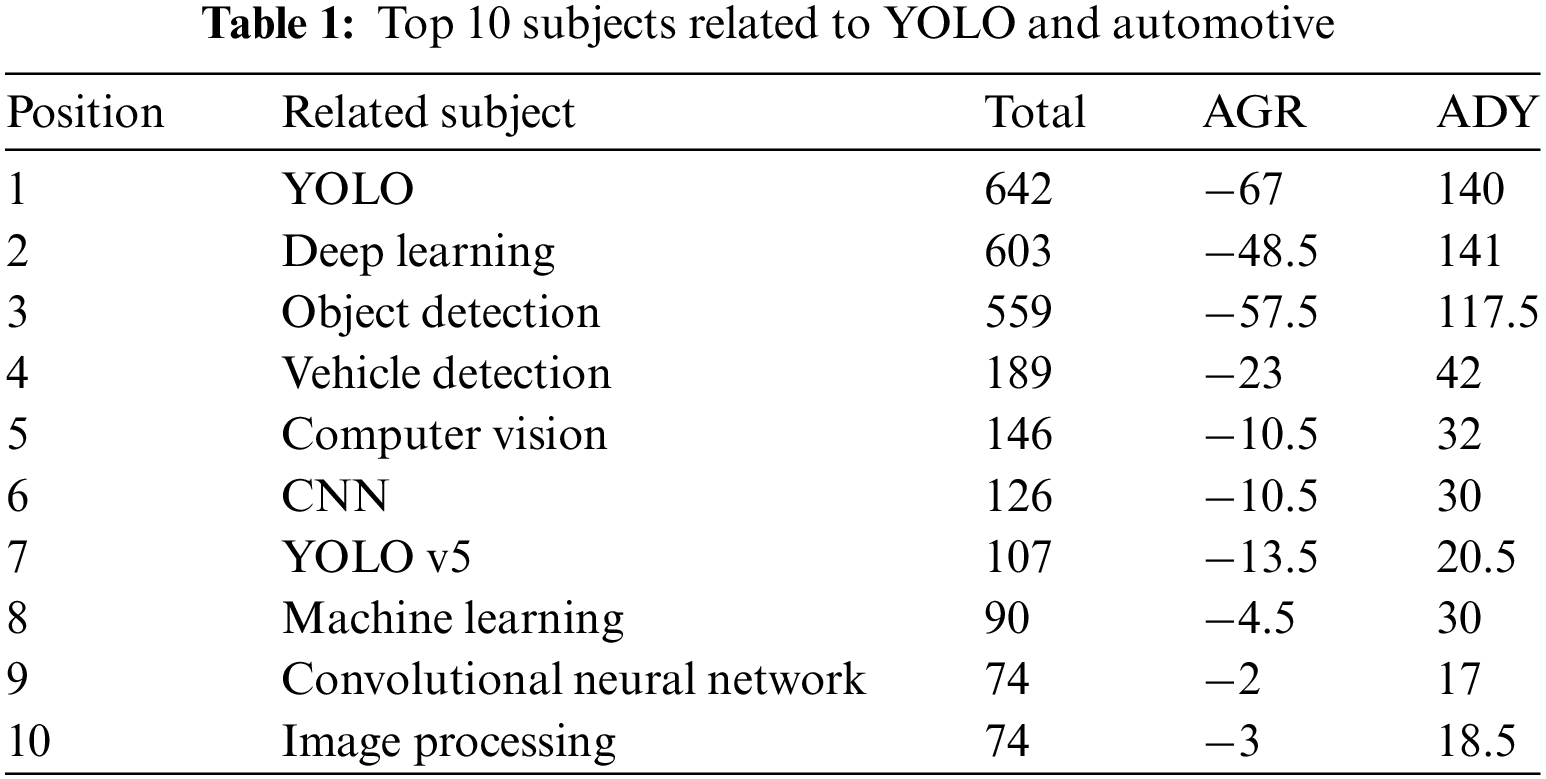

The related fields in Table 1 were classified based on the Average Growth Rate (AGR), i.e., the average difference between the number of articles published in one year (2024) and the number published in the previous year (2023), and the Average Documents per Year (ADY), i.e., the average number of articles per year published over the time frame (2020–2024) on topics related to the main field, automotive.
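The two indicators can be written as short functions. This sketch assumes ScientoPy-style definitions averaged over a window of years from Y_s to Y_e: AGR averages the year-over-year differences P_i − P_{i−1}, and ADY averages the yearly counts P_i. With a one-year window (2024), AGR reduces to the difference described above. The publication counts are hypothetical, not data from this review.

```python
from typing import Dict

def agr(counts: Dict[int, int], start: int, end: int) -> float:
    """Average Growth Rate: mean of year-over-year differences over [start, end]."""
    years = range(start, end + 1)
    return sum(counts.get(y, 0) - counts.get(y - 1, 0) for y in years) / len(years)

def ady(counts: Dict[int, int], start: int, end: int) -> float:
    """Average Documents per Year over [start, end]."""
    years = range(start, end + 1)
    return sum(counts.get(y, 0) for y in years) / len(years)

# Hypothetical yearly counts for one topic (not taken from Table 1).
counts = {2020: 10, 2021: 20, 2022: 35, 2023: 50, 2024: 30}
print(agr(counts, 2024, 2024))  # -20.0 (one-year window: P2024 - P2023)
print(ady(counts, 2020, 2024))  # 29.0
```

Because the 2024 counts cover only the months up to the search date, a negative AGR for 2024 may reflect an incomplete year rather than declining interest.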

After analyzing the most cited papers and those most relevant to YOLO and automotive research, the following exclusion criteria were applied to select the articles to be discussed: publication type (books, chapters, and editorials were excluded), publication date (all papers published from 2015, when the first version of YOLO was released, through 2019 were excluded), irrelevant subjects, and duplicate articles.

The potential of using the YOLO algorithm has increased from version to version, with constant improvements being brought to it. This aspect led to an increase in the number of researchers who choose to use YOLO in engineering and computer science research, such as road traffic and monitoring, the construction of autonomous vehicles, and automation and manufacturing technology.

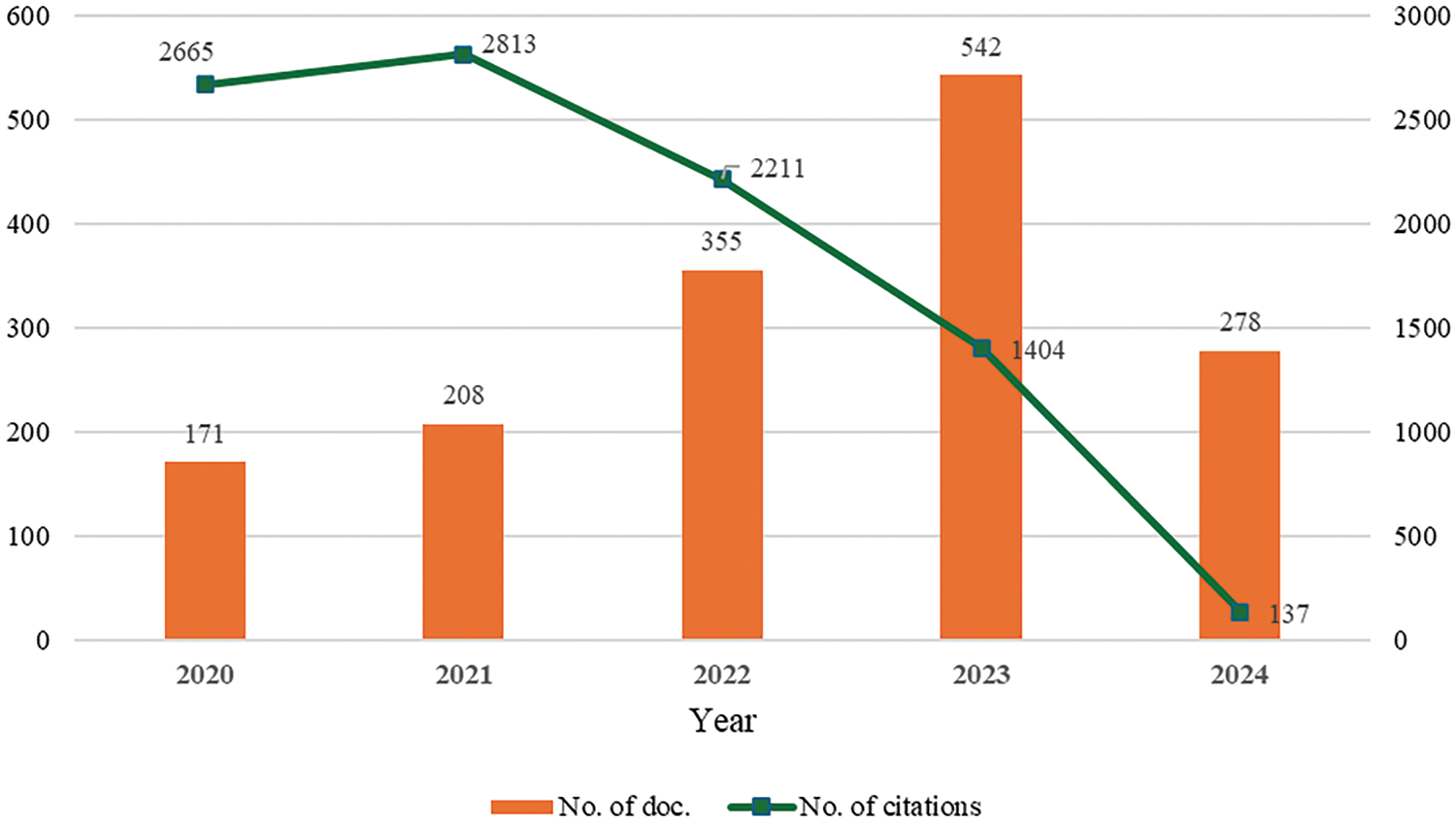

To understand the evolution and influence of YOLO research in the automotive field, a bibliometric analysis of Scopus- and Web of Science-indexed articles was undertaken. Fig. 6 illustrates the annual publication and citation patterns within this domain from 2020 to 2024.

Figure 6: Scientific publication and the number of citations per year

Since its introduction in 2015, the YOLO algorithm has been rapidly adopted within the automotive sector, with exponential growth from 2020 to 2024. A marked acceleration in research output emerged after 2020, driven primarily by YOLO’s iterative refinements in speed, accuracy, and adaptability to diverse applications. The number of publications in the first quarter of 2024 (up to the date of the search) indicates that the upward trend continues. Fig. 6 also presents the cumulative number of citations received by the publications of each year. As expected, citation counts decrease for more recent years, because newer publications have had less time to accumulate citations.

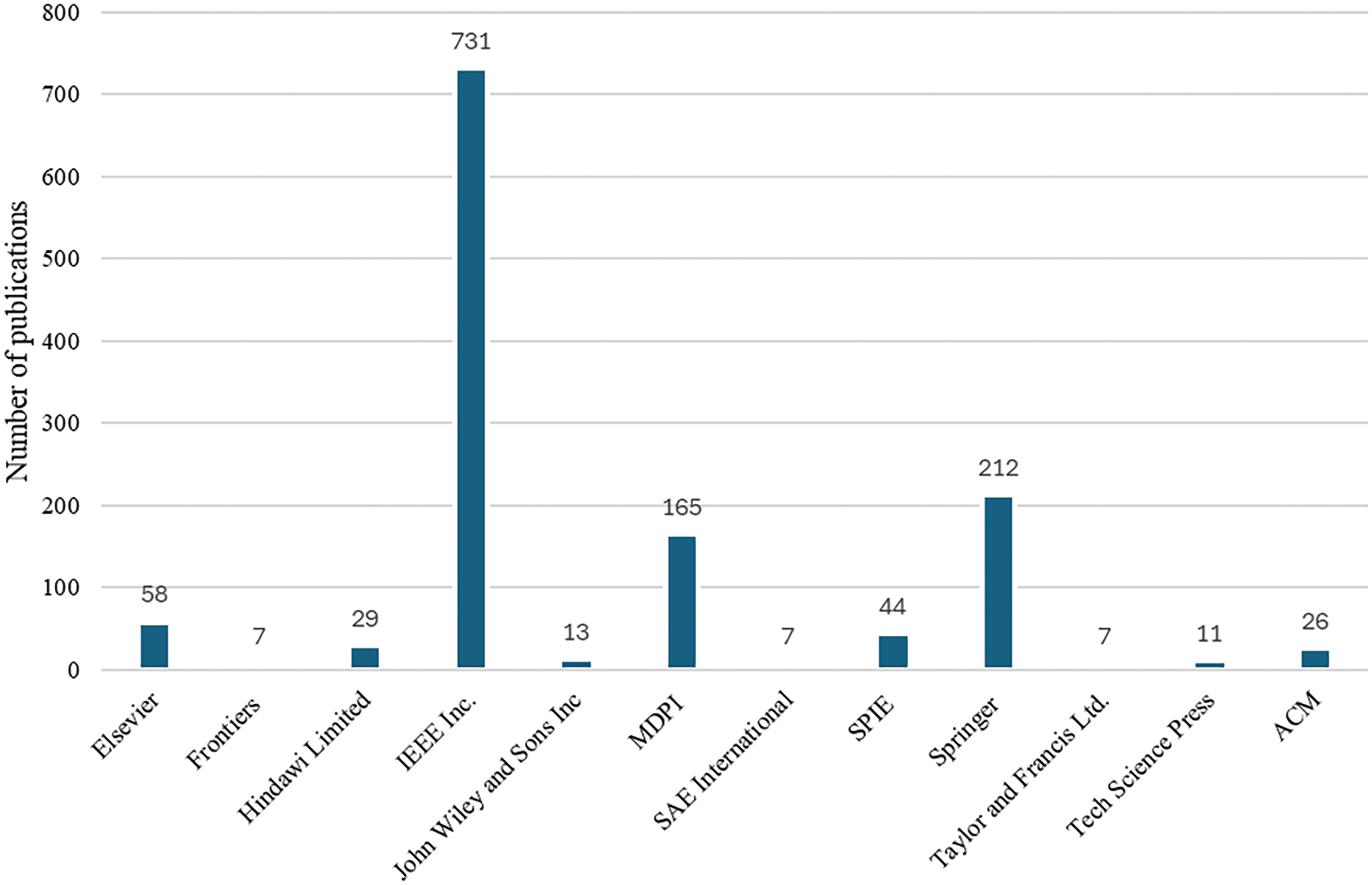

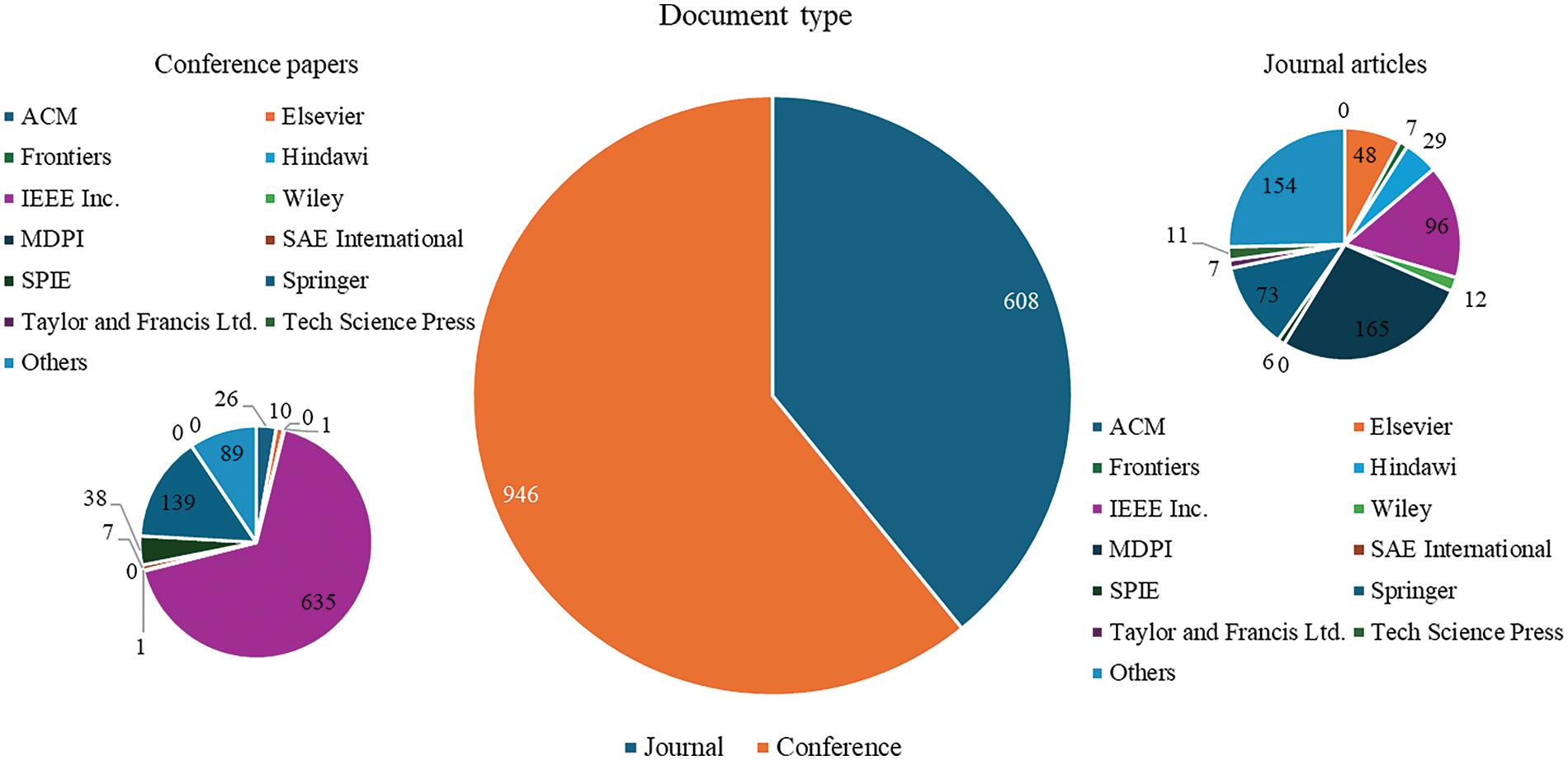

The corpus of documents was categorized by publisher using Microsoft Excel. Fig. 7 illustrates the distribution of publications across the top publishers, revealing which publishers are preferred for disseminating automotive research within the Scopus and Web of Science records and highlighting the diverse publisher landscape represented in both databases. The Institute of Electrical and Electronics Engineers (IEEE) holds the dominant position, contributing 47.04% of the total publications, including both journal articles and conference papers, as defined by the inclusion criteria.

Figure 7: Publication count by leading publishers

Fig. 8 presents the classification of the identified articles by type (conference or journal) and publisher. Conference papers constitute 60.88% of the total number of documents, with IEEE as the predominant publishing platform (67.12%). Journal articles account for the remaining 39.12%, with MDPI publishing the largest share of them (27.14%).

Figure 8: Distribution of published documents by type and publisher

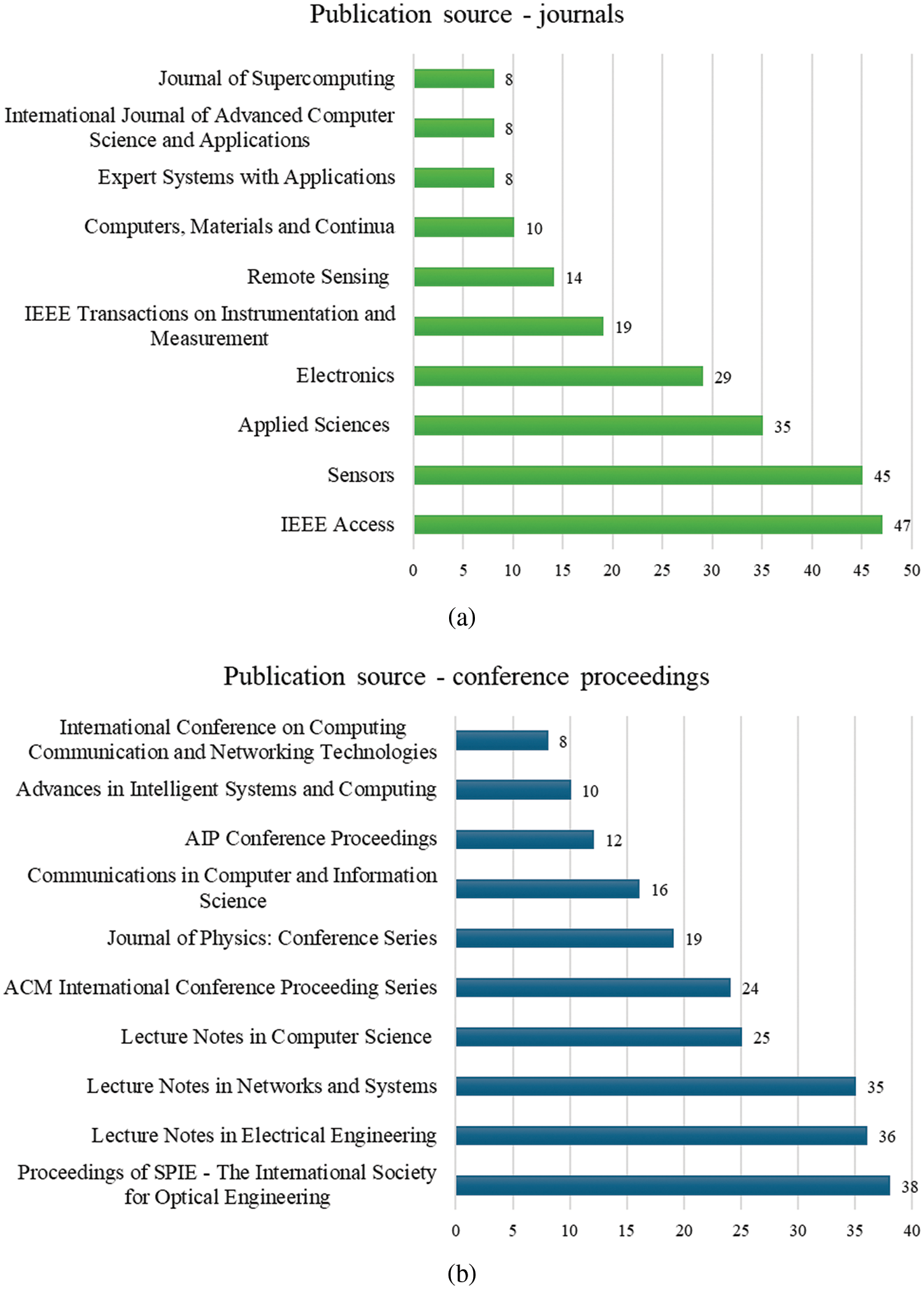

Next, the articles were grouped by publication source, with journal articles and conference papers still differentiated. Fig. 9a shows the journals indexed in Scopus and Web of Science that published the most studies related to the automotive field and YOLO from 2020 to 2024. Multidisciplinary journals and journals specialized in electronics and sensors are the most active in publishing these articles. For example, “IEEE Access” published the most articles (7.73% of all journal articles), followed by “Sensors” with 45 publications (7.40%). Among conference proceedings (Fig. 9b), “Proceedings of SPIE” and “Lecture Notes” are the most prolific sources. Collectively, the top 10 journals contributed 36.68% of the total journal articles, while the top 10 conference proceedings accounted for 23.57% of the overall dataset.

Figure 9: Top 10 publication sources: journals (a) and conference proceedings (b)

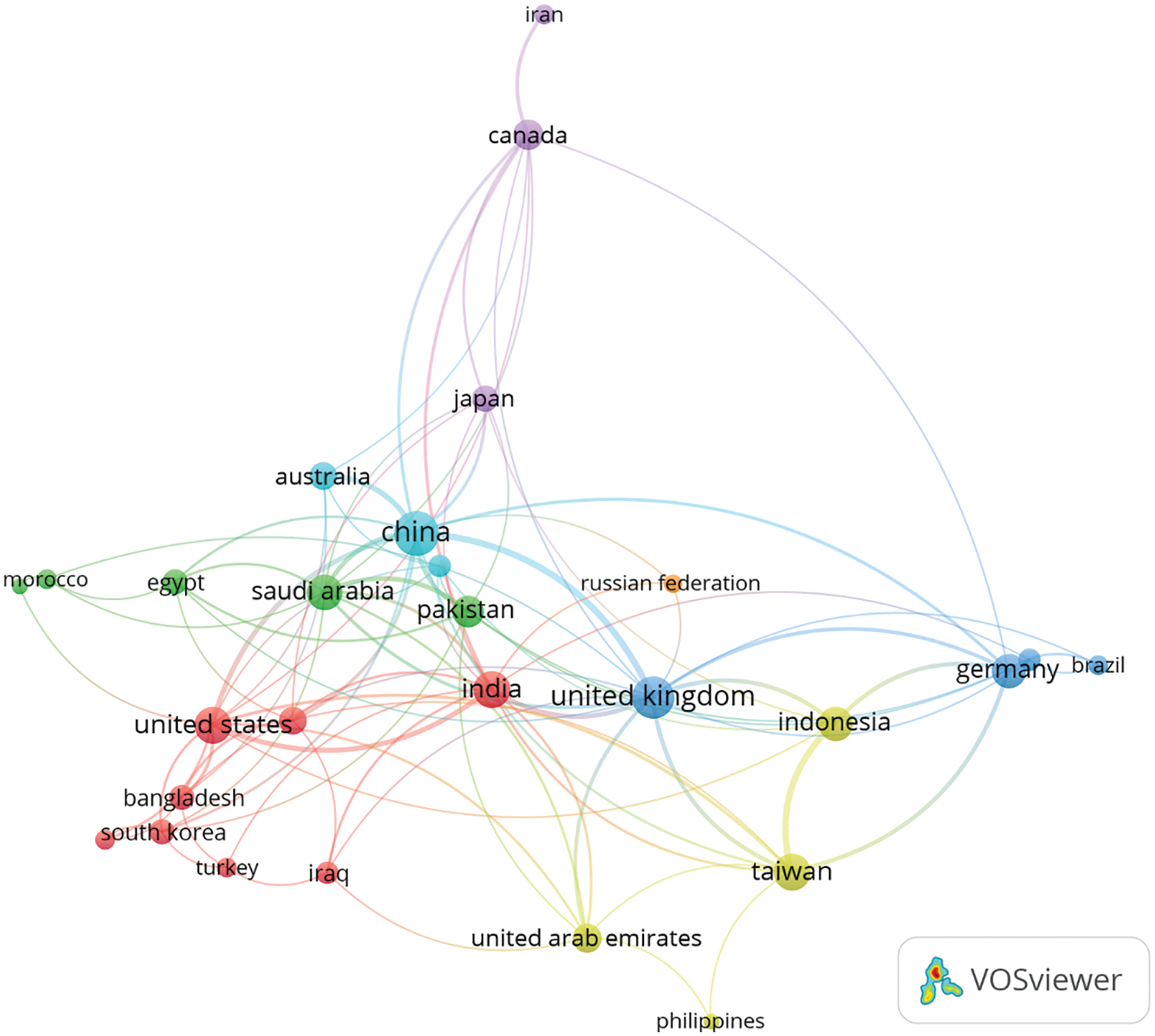

To identify key research contributors and geographic research patterns, a country-level co-authorship analysis was conducted. Using VOSviewer software [21], a co-authorship network was constructed (Fig. 10) based on a publication threshold of 10. This analysis included 28 of the 98 countries within the dataset. Nodes representing countries were connected to indicate collaborative relationships, with link thickness reflecting collaboration intensity. China and India emerged as leading contributors in terms of publication output and total citations. The United Kingdom demonstrated significant research impact, as evidenced by its high total link strength.
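The notions of link weight and total link strength used in this analysis can be sketched as follows. The sketch assumes full counting (each co-authored paper adds 1 to the link between every pair of its countries) and applies the document threshold before building links; VOSviewer also offers fractional counting, and its exact computation may differ. The per-paper country sets are hypothetical.

```python
from collections import Counter
from itertools import combinations
from typing import List, Set

def country_network(papers: List[Set[str]], min_docs: int):
    """Build a country co-authorship network with full counting.

    papers: one set of author countries per publication.
    Returns (kept countries, pairwise link weights, total link strength per country).
    """
    docs = Counter(c for countries in papers for c in countries)
    kept = {c for c, n in docs.items() if n >= min_docs}
    links: Counter = Counter()
    for countries in papers:
        # Every co-authored paper adds 1 to the link of each pair of kept countries.
        for a, b in combinations(sorted(countries & kept), 2):
            links[(a, b)] += 1
    strength: Counter = Counter()
    for (a, b), weight in links.items():
        strength[a] += weight
        strength[b] += weight
    return kept, links, strength

papers = [{"China", "UK"}, {"China", "India"}, {"China", "India", "UK"}, {"India"}, {"Romania"}]
kept, links, strength = country_network(papers, min_docs=2)
print(sorted(strength.items()))  # [('China', 4), ('India', 3), ('UK', 3)]
```

In this toy example, Romania falls below the threshold and is dropped, much as 70 of the 98 countries were excluded at the threshold of 10.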

Figure 10: Country collaboration network

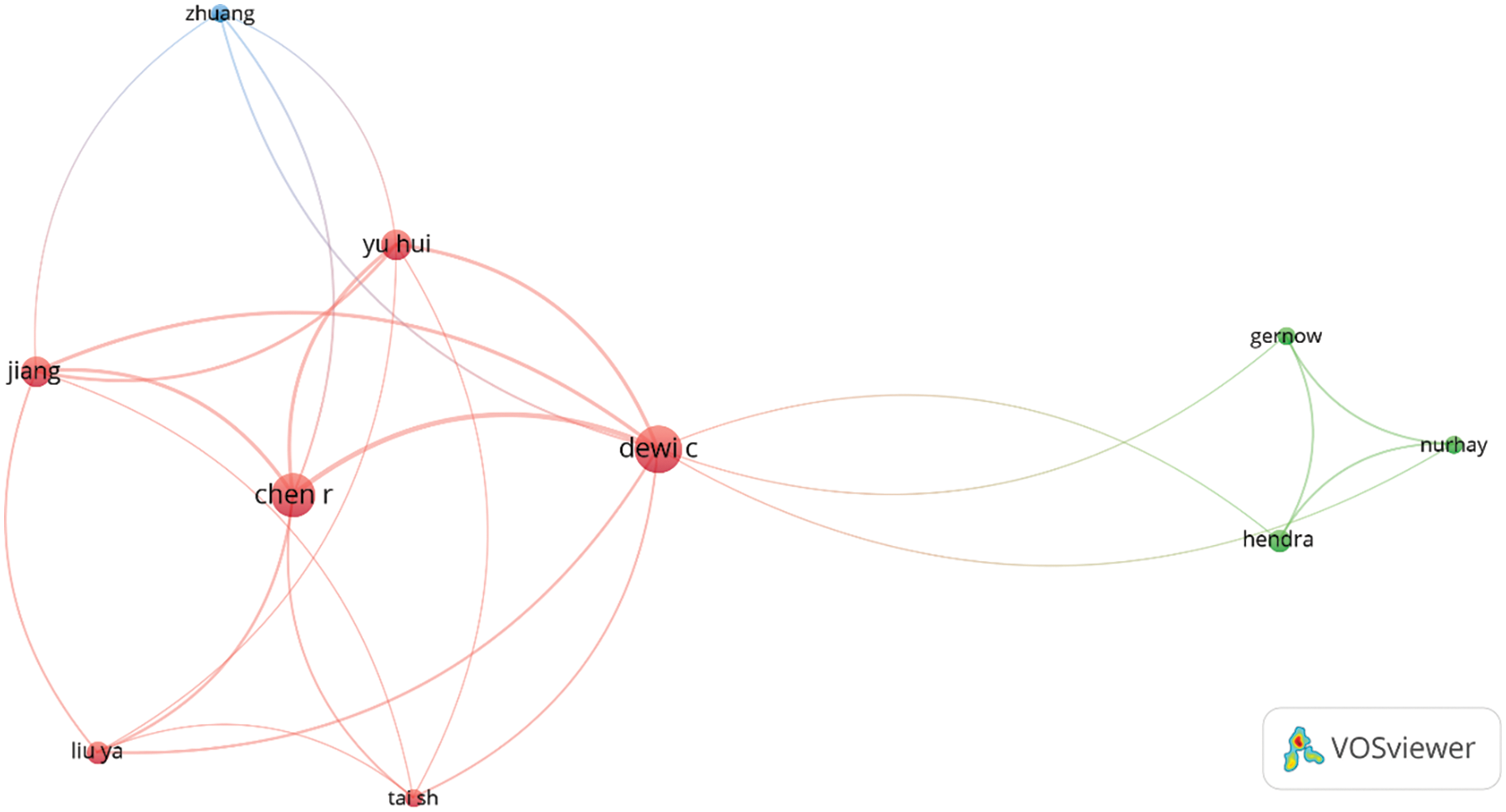

A co-authorship analysis was performed to identify key researchers and collaboration patterns in the application of YOLO in the automotive field. Based on the 1554 publications, the co-authorship network was constructed using VOSviewer, applying thresholds of 3 documents and 10 citations per author. This identified 20 authors, ten of whom exhibited collaborative relationships, as represented in Fig. 11. Node size corresponds to each author’s number of documents. Dewi Christine and Chen Rung-Ching emerged as the most prolific and most cited researchers, with 10 and 9 documents, respectively, and over 430 citations each. Jiang Xiaoyi and Yu Hui followed with 5 documents each, accumulating 296 and 237 citations, respectively.

Figure 11: Co-authorship network

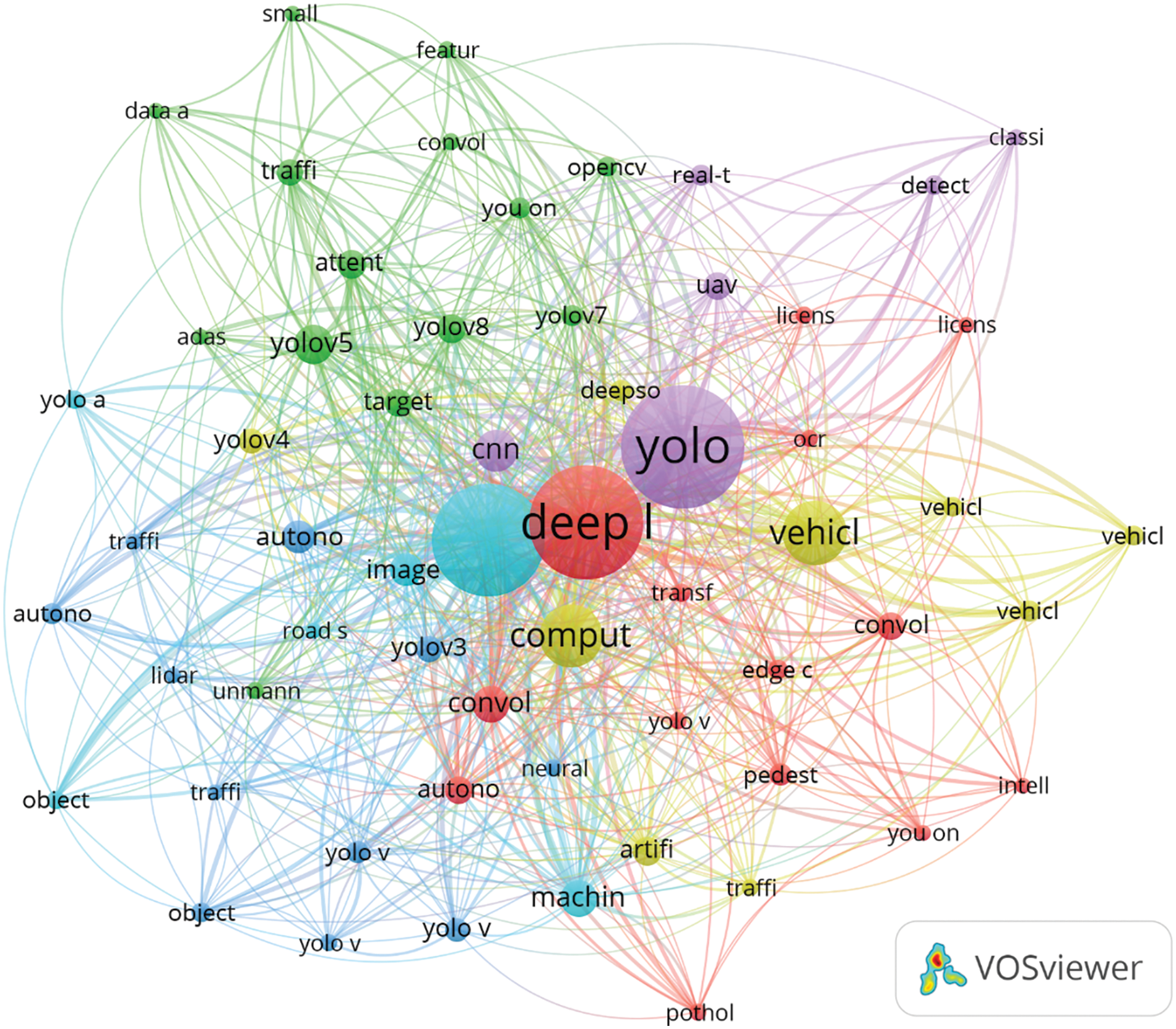

Author keywords that appeared more than fifteen times in the Scopus and Web of Science records (out of 2980 keywords in total) were selected for further analysis, resulting in 60 keywords. “Yolo” (occurrences: 437, total link strength: 765), “object detection” (occurrences: 383, total link strength: 720), and “deep learning” (occurrences: 387, total link strength: 719) were the most frequent terms. They are followed by “computer vision” (occurrences: 143, total link strength: 308) and “vehicle detection” (occurrences: 149, total link strength: 255). The co-occurrence network of author keywords generated with VOSviewer is shown in Fig. 12. Among the YOLO versions, “yolov5” was the most prominent keyword (total link strength: 109), followed by “yolov8” (total link strength: 67), “yolov3” (total link strength: 56), “yolov4” (total link strength: 54), and “yolov7” (total link strength: 38).

Figure 12: Co-occurrence network of author keywords

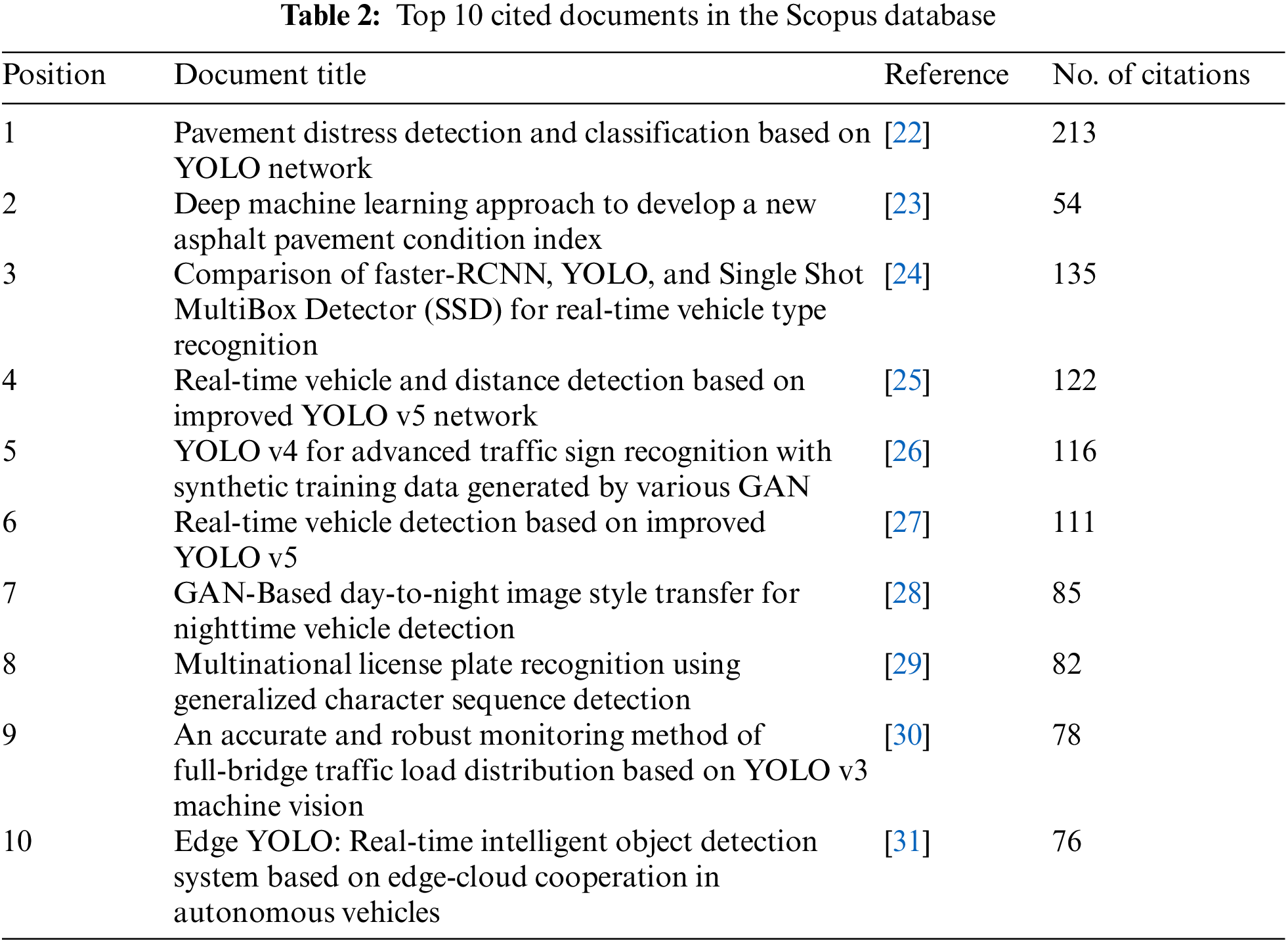

A citation analysis was conducted to assess the impact of identified articles within scientific literature. Based on Scopus data, the ten most cited studies focused on YOLO applications in the automotive domain are presented in Table 2.

The following sections delve into the application of YOLO within automotive for object detection, categorized into three areas: road traffic, autonomous vehicle development, and industrial settings.

3.2 Object Detection Used in Road Traffic

The analysis of road traffic includes several areas, such as intelligent systems for monitoring general traffic, systems for emergency vehicles, recognition of the registration number for access to public and private parking lots, detection systems for violating traffic rules, and many others. For these systems to provide the information that the authorities need, various algorithms are used, and one of the most popular nowadays is YOLO.

In [32], the authors proposed a system that supports smart city infrastructure by providing green light corridors, which are very useful for emergency vehicles. Specifically, a vision-based dynamic traffic light system using the YOLO algorithm is proposed to give these vehicles priority at intersections.

Assessing traffic density is crucial for optimizing intelligent transportation systems and facilitating efficient traffic management. In [33], data were gathered from various open-source libraries, and vehicle classification was conducted through image annotation, followed by image enhancement via sharpening techniques. Subsequently, a hybrid model combining Faster Region-based Convolutional Neural Network (R-CNN) and YOLO, employing a majority voting classifier, was trained on the preprocessed data. The proposed model achieved a detection accuracy of up to 98%, outperforming individual models such as YOLO (95.8%) and Faster R-CNN (97.5%).
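The majority-voting idea can be sketched as a generic hard-voting scheme over the labels that several detectors assign to the same object. This is not the classifier of [33]. The input format, the assumption that predictions have already been matched to the same object (for example by IoU), and the confidence-based tie-break (needed when only two models disagree) are all illustrative choices.

```python
from collections import Counter
from typing import List, Tuple

def majority_vote(predictions: List[Tuple[str, float]]) -> str:
    """Hard majority vote over (class label, confidence) pairs for one object.

    Ties are broken by the highest summed confidence among the tied labels.
    """
    if not predictions:
        raise ValueError("no predictions to vote on")
    votes = Counter(label for label, _ in predictions)
    top = max(votes.values())
    tied = [label for label, n in votes.items() if n == top]
    if len(tied) == 1:
        return tied[0]
    conf_sum: Counter = Counter()
    for label, conf in predictions:
        if label in tied:
            conf_sum[label] += conf
    return max(tied, key=lambda label: conf_sum[label])

# Three models agree 2-to-1; with two disagreeing models, confidence decides.
print(majority_vote([("car", 0.9), ("truck", 0.6), ("car", 0.7)]))  # car
print(majority_vote([("car", 0.6), ("bus", 0.8)]))                  # bus
```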

In cities with high or very high vehicle density, identifying the correct category of vehicles on the road is difficult. In [34], an approach to this challenging problem in India is analyzed and validated: using YOLO v5, the authors obtained improved results compared with state-of-the-art approaches while reducing computational time. To handle the large scale variations caused by fast-moving vehicles and the wide variety of traffic signs, [35] proposes a multi-scale traffic sign detection model, Compressed and Robust YOLO v8 (CR-YOLO v8). The experimental results show that this improved method increases the detection accuracy for small objects by 1.6%.

Counting vehicles is a major challenge in highway management because vehicles differ in size. In [20], a model combining YOLO v5 and GhostBottleneck for vehicle detection was proposed, showing high detection rates and short detection times. Besides counting vehicles, their speeds can also be estimated; for example, [36] developed a YOLO v4-based algorithm for this purpose. Additional methods can be found in [14,37–39]. In [40], a real-time road traffic management strategy was introduced using an improved version of YOLO v3. The authors trained the network on publicly available datasets to enhance vehicle detection and used a convolutional neural network for the traffic analysis system; the evaluation results indicated that the proposed system performed well. The newest technique for obtaining traffic information non-intrusively is aerial image analysis. Although drones have limited flight autonomy, they can capture valuable traffic images for real-time or subsequent processing. Detecting small objects in aerial images [41] is challenging because images from unmanned aerial vehicles (UAVs) have complex backgrounds. Combining UAV imagery with the YOLO v7 algorithm, the resulting YOLO v7 UAV model was at least 27% faster in real-time detection than YOLO v7 alone. Another proposal for small object detection, in [42], improves the YOLO v4 network with Atrous Spatial Pyramid Pooling (ASPP) so that it focuses on the important position of the object in the image, and adds a Hybrid Dilated Convolution Attention module in parallel with the ASPP. The results showed an improvement of 2.31% over the original YOLO v4. Beyond highways and cities, there are rural areas where stationary video cameras are unavailable. For these, [43] proposes an aerial vehicle detection, classification, and tracking system using YOLO v7. The results showed an overall classification accuracy of 93%, compared to an individual accuracy of 99.5%.
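The speed estimation mentioned above for [36] can be illustrated with a minimal sketch. The source does not describe how [36] converts detections to speed; this example assumes a single tracked vehicle with one centroid per consecutive frame, a flat road, and a constant, pre-calibrated meters_per_pixel scale. The function name estimate_speed_kmh and its parameters are hypothetical.

```python
import math

def estimate_speed_kmh(centroids, fps, meters_per_pixel):
    """Estimate the speed of one tracked vehicle (hypothetical helper).

    centroids: (x, y) pixel positions of the vehicle in consecutive frames.
    fps: video frame rate.
    meters_per_pixel: ASSUMED constant ground-plane scale (flat road, calibrated camera).
    """
    if len(centroids) < 2:
        raise ValueError("need at least two positions to estimate speed")
    # Path length travelled in the image, in pixels.
    dist_px = sum(math.dist(a, b) for a, b in zip(centroids, centroids[1:]))
    # Time between the first and last observation.
    elapsed_s = (len(centroids) - 1) / fps
    speed_ms = dist_px * meters_per_pixel / elapsed_s
    return speed_ms * 3.6  # convert m/s to km/h

track = [(320, 400), (320, 390), (320, 380), (320, 370)]
print(round(estimate_speed_kmh(track, fps=30, meters_per_pixel=0.05), 1))  # → 54.0
```

In practice, perspective makes the pixel-to-meter scale vary across the image, so real systems usually map image points to road coordinates with a homography instead of a single constant.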

In [44], the authors obtained quality data on traffic parameters from static street surveillance cameras. The solution is based on YOLO v3 and the open-source Simple Online and Realtime Tracking (SORT) tracker. The system was tested during the day and at night at six intersections, achieving 92% accuracy for flow rate, traffic density, and speed; the speed error did not exceed 1.5 km/h. In a comparative analysis of real-time vehicle detection systems for traffic monitoring, the authors of [45] evaluated YOLO v3, YOLO v3-tiny, YOLO v4, and YOLO v4-tiny and concluded that YOLO v4 provides the best accuracy, at 95.41%. A novel YOLO detector based on fuzzy attention (YOLO-FA), proposed in [46], outperformed other detectors in both speed and accuracy. In [47], a new object recognition method called YOLO-ORE was introduced, integrating YOLO with a radar image generation module and an object recheck system. The Object Recheck and Elimination (ORE) system addresses limitations of YOLO, specifically mitigating overlap errors between different object classes and reducing misclassification of low-confidence objects. Comprehensive real-world experiments under realistic scenarios showed that YOLO-ORE surpassed the current state-of-the-art YOLO-based object recognition system.

Power wasted by continuously operating street lighting is a global issue, which has prompted the proposal of systems in which streetlights adjust their intensity based on vehicle proximity. In [48], a deep learning model is used for traffic light detection and categorization, with the selection of relevant traffic lights refined using previous data. Deep learning models such as AlexNet, VGG-16, ResNet-18, and ResNet-50 are evaluated to determine the most suitable combination for vehicle detection in the smart street lighting system. Object detectors such as SSD and YOLO v3 are compared in terms of the trade-off between accuracy, detection time, and computational and time complexity, using metrics such as mean average precision (mAP) and precision-recall curves, with results exceeding 74% mAP.
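Because mAP and precision-recall curves are the main metrics quoted throughout this review, a minimal sketch of how average precision (AP) is computed for one class may help. It assumes detections have already been matched to ground truth (each flagged as a true or false positive) and uses the all-point interpolated AP of the PASCAL VOC style; COCO-style mAP instead uses 101 recall points and averages over several IoU thresholds, so reported numbers are not always comparable. The function name is illustrative.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class (VOC-style sketch).

    scores: confidence of each detection.
    is_tp: True if that detection was matched to an unused ground-truth box.
    num_gt: number of ground-truth objects of this class.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:  # walk down the ranked detections
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Pad the curve, then make precision monotonically non-increasing.
    r = [0.0] + recalls + [1.0]
    p = [0.0] + precisions + [0.0]
    for k in range(len(p) - 2, -1, -1):
        p[k] = max(p[k], p[k + 1])
    # Area under the interpolated precision-recall curve.
    return sum((r[k] - r[k - 1]) * p[k] for k in range(1, len(r)))

print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))
```

The mAP of a detector is then the mean of this per-class AP over all object classes.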

Road traffic is also linked to fuel consumption through congestion at city intersections. As stated in [49], traffic congestion wastes time, increases delays, and causes financial losses. The authors of [50] proposed a system with an intelligent algorithm that optimizes traffic flow by switching traffic lights according to traffic density. They conclude that this system has several advantages over traditional traffic management systems, such as improved accuracy and efficiency and the ability to adapt to changing traffic conditions in real time. The traffic management system in [51], which integrates the Internet of Things (IoT) with machine learning technologies such as YOLO v3, offered an efficient approach to complex traffic problems in densely trafficked areas such as India. The study achieved over 90% accuracy in the real-time identification of vehicles, pedestrians, and cyclists with YOLO v3.

The model proposed in [52] can effectively detect large, small, and tiny objects. Compared to the original YOLO v5 architecture, it slightly reduces the number of trainable parameters, from 7.28 million to 7.26 million, while significantly enhancing precision. It achieves a detection speed of 30 fps, surpassing the YOLO-v5-L/X profiles, and a 33% improvement in detecting tiny vehicles compared to the YOLO-v5-X profile.

Reference [53] introduced Ghost-YOLO, a model featuring the innovative C3Ghost module designed to replace the feature extraction module in YOLO v5. The C3Ghost modules efficiently extract features, significantly accelerating inference speed. Additionally, a novel multi-scale feature extraction method is developed to improve the detection of small targets. Experimental results demonstrate that Ghost-YOLO achieves a mAP of 92.71% while reducing the number of parameters and computations to 91.4% and 50.29% of the original, respectively. Compared to various models, this approach demonstrates competitive speed and accuracy.

Vehicle counting accuracy improves significantly when deep learning is combined with traditional image processing. Deep learning detects objects effectively in daylight, as shown by the experimental tests in [54] on high-angle rain videos; however, its effectiveness depends on favorable lighting conditions in the video. Real-time object detection is achievable with convolutional neural networks: in these experiments, all YOLO v5 models exceeded 97.9% accuracy on the test video.

Determining road load capacity requires efficient vehicle classification and counting. In [55], video processed with YOLO is sourced from both a stationary camera and drone footage to count cars, determine their direction, and classify vehicle types. The system outputs the total number of vehicles moving in each direction along with the type of each vehicle. This methodology can also be applied to surveillance tracking and monitoring. In [56], the proposed method uses images from Closed-Circuit Television (CCTV) cameras and the YOLO v3 framework in a cascade of masking, detection, classification, and counting of vehicles of different classes. The results showed an accuracy of 93.65% in the daytime and 87.68% at night. The approach in [57] records traffic with a smartphone camera and saves the video in MP4 format. Subsequent calculations are performed offline with a custom program developed by the authors using Python, OpenCV, PyTorch, and YOLO v8. When vehicles pass through a designated counter box, the system counts them and logs traffic volume data in Excel format (.xls). Applied to video of traffic near the Halim area along the Jakarta-Cikampek toll road, the approach achieved a measurement accuracy of 99.63%.
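The counter-box idea used in [57], and the per-direction counts of [55], can be sketched as follows. This is not the authors’ program: it assumes an upstream tracker already assigns a stable track ID and class to each vehicle, and it decides direction from the vertical motion of the box centroid. The CounterBox class and its method names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class CounterBox:
    """Axis-aligned counting region in pixel coordinates (hypothetical helper)."""
    x1: float
    y1: float
    x2: float
    y2: float
    counts: dict = field(default_factory=dict)   # (direction, class) -> count
    _counted: set = field(default_factory=set)   # track IDs already counted
    _last_y: dict = field(default_factory=dict)  # track ID -> previous centroid y

    def contains(self, x, y):
        return self.x1 <= x <= self.x2 and self.y1 <= y <= self.y2

    def update(self, track_id, cls, cx, cy):
        """Feed one tracked detection (centroid cx, cy) for the current frame."""
        prev_y = self._last_y.get(track_id)
        self._last_y[track_id] = cy
        # Count each track once, the first time its centroid is seen inside the box
        # with a known previous position (needed to infer direction).
        if track_id in self._counted or prev_y is None or not self.contains(cx, cy):
            return
        direction = "down" if cy > prev_y else "up"
        key = (direction, cls)
        self.counts[key] = self.counts.get(key, 0) + 1
        self._counted.add(track_id)

box = CounterBox(0, 100, 200, 150)
for tid, cls, cx, cy in [(1, "car", 50, 80), (1, "car", 50, 120), (2, "truck", 60, 200), (2, "truck", 60, 140)]:
    box.update(tid, cls, cx, cy)
print(box.counts)
```

The resulting dictionary can then be written to a spreadsheet or CSV file for traffic volume reporting.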

Saving time is essential, particularly for emergency vehicles. In most cities worldwide, traffic lights cannot be switched to give priority to emergency vehicles, and this is what the authors of [58] propose. Once the presence of an emergency vehicle is confirmed, the traffic light for its lane switches to green and stays green until the emergency vehicle clears the junction. The duration of the green signal is adjusted to the number of vehicles in the lane to allocate time efficiently; specifically, the system is configured with a 30 s timer for lanes with fewer than 15 vehicles.
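The signal logic described for [58] can be summarized as a small rule set. Only the 30 s value for fewer than 15 vehicles comes from the source; the other tiers below are hypothetical placeholders added so the sketch runs, and the vehicle counts per lane are assumed to come from a YOLO detector.

```python
def green_time_s(vehicle_count):
    """Green duration for a lane from its detected vehicle count."""
    if vehicle_count < 15:
        return 30  # value reported for [58]: 30 s when fewer than 15 vehicles
    # HYPOTHETICAL tiers: [58] does not report values for busier lanes.
    if vehicle_count < 30:
        return 45
    return 60

def green_plan(lane_counts, emergency_lane=None):
    """Return a list of (lane, green seconds) for the next signal cycle.

    If an emergency vehicle was detected, only its lane is returned, with
    duration None meaning "hold green until the emergency vehicle clears".
    """
    if emergency_lane is not None:
        return [(emergency_lane, None)]
    return [(lane, green_time_s(n)) for lane, n in lane_counts.items()]

print(green_plan({"north": 10, "south": 20}))
print(green_plan({"north": 10, "south": 20}, emergency_lane="east"))
```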

Another proposal, in [59], detects emergency vehicles and their arrival time on a lane and then clears the traffic on that lane. It was modeled and tested with real-time videos and the YOLO v5 algorithm, and performed better than existing solutions. Vehicle tracking systems can also be improved by detecting all types of vehicles using Regions with Convolutional Neural Networks (R-CNN), Faster R-CNN, and YOLO [60]. The experimental research in [61] addresses the critical problem of emergency vehicles in traffic. The study developed a framework for real-time vehicle detection and classification using an improved version of YOLO v7 and a Gradient Boosting Machine (GBM). The framework prioritizes emergency vehicles for immediate path clearance based on priorities pre-assigned to the different vehicle classes, and achieved an accuracy of 98.83%.

Road traffic also includes different types of cars, two-wheelers, and bike riders. Wearing a helmet while riding is very important, and in [62], the authors proposed a traffic monitoring system based on YOLO v5 and CCTV cameras to identify bike riders who are not wearing helmets. Such an approach also helps traffic police distinguish riders who wear helmets from those who do not [63]. In [64], the authors proposed a method for detecting multiple traffic violations, such as speeding, not wearing a helmet, and using a phone while driving, combining YOLO v5 and Haar Cascade to identify these violations in real time. In [65], the YOLO algorithm was used in China’s traffic informatization for sign recognition to reduce the number of traffic accidents.

Another part of object detection in traffic management is license plate detection, a general monitoring strategy used on a large scale in cities [66]. To identify a vehicle’s license plate, the plate must first be correctly located in the vehicle image, because this affects both the recognition rate and speed. In [67], a YOLO-PyTorch deep learning architecture was used to recognize license plates, with satisfactory results. License plate recognition is used not only for traffic management and locating vehicles but also in parking management systems, as parking lots have become increasingly crowded in recent years [68].

Besides vehicles, road traffic includes many pedestrians. They must be counted when local authorities decide to increase the number of crosswalks in a city. In [69], pedestrians are detected and labeled using YOLO v3 with an accuracy of 96.1%. Another method, YOLOv8n_T, proposed in [70], is built on the YOLO v8 backbone network and can be used for small traffic targets such as pedestrians; it aims to significantly enhance detection precision in all road scenarios.

In crowded pedestrian areas, such as sidewalks, airports, and pedestrian crossings in central areas, the risk of unforeseen and unpleasant incidents is significant, so tracking suspicious persons or objects benefits public safety in traffic. In [71], a multi-object tracking model is presented that combines YOLO detections with the SORT tracking algorithm to identify and track pedestrians in continuous video. This or a similar approach can be used in public transportation for better traffic management [72] and to reduce suspicious situations that could disrupt the traffic schedule.
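The core of a detector-plus-tracker pipeline such as YOLO with SORT is associating each frame’s detections with existing tracks by bounding-box overlap. The sketch below shows only that association step and simplifies it: SORT proper predicts each track’s box with a Kalman filter and solves the assignment optimally with the Hungarian method (e.g., scipy.optimize.linear_sum_assignment), whereas this example matches greedily on raw IoU. Boxes are assumed to be (x1, y1, x2, y2) in pixels; the function names are illustrative.

```python
def iou(a, b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def associate(tracks, detections, iou_threshold=0.3):
    """Greedy IoU matching (simplified stand-in for SORT's Hungarian step).

    tracks: dict track_id -> last known box; detections: list of boxes.
    Returns (matches: track_id -> detection index, unmatched detection indices).
    """
    pairs = sorted(
        ((iou(tb, db), tid, di) for tid, tb in tracks.items() for di, db in enumerate(detections)),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), {}
    for score, tid, di in pairs:  # best overlaps first
        if score < iou_threshold:
            break
        if tid in used_tracks or di in used_dets:
            continue
        matches[tid] = di
        used_tracks.add(tid)
        used_dets.add(di)
    unmatched = [i for i in range(len(detections)) if i not in used_dets]
    return matches, unmatched
```

Unmatched detections would typically start new tracks, and tracks left unmatched for several frames would be deleted.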

Road pavement is part of the road infrastructure on which vehicular traffic moves. Reference [55] discussed pavement cracks as a structural defect closely related to road traffic. The aim is to detect road cracks quickly, and for this, YOLO v5 running on a smartphone is used as a detector for fast road maintenance. The detection accuracy in this case is 0.91 mAP on an area of 1.1682 m² of cracked pavement. Pavement cracks can also be detected using vehicle-mounted images and YOLO v5, as the authors of [56] showed. Considering shadow occlusion and uneven lighting, Reference [57] proposed a defect detection algorithm for complex backgrounds based on YOLO v7, with an accuracy of 72.3%.

3.3 Object Detection Used in Developing Autonomous Vehicles

When developing and building autonomous vehicles, the YOLO algorithm is used to detect unpredictable situations that can lead to collisions. It is also used for electric vehicle charging systems, traffic lane detection, on-board navigation, and roadside obstacle detection. As most collisions happen at night, researchers proposed an approach that integrates the IoT with the YOLO algorithm to adjust the vehicle’s light projection in response to other vehicles on the road [73], automatically preventing the headlights from dazzling drivers in the opposite direction. Also aiming to warn of potential collisions, the authors of [74] implemented a two-part system based on the Robot Operating System (ROS). The first part detects vehicles around an autonomous vehicle using the YOLO v2 algorithm; the second uses a ROS node for distance assessment. The results of this method were promising.
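The source does not state how the distance assessment in [74] is computed. One common monocular approach, shown here only as an illustrative assumption, uses the pinhole camera model: a vehicle of known real height H whose detected box is h pixels tall lies at distance Z = f·H/h, where f is the focal length in pixels. Two successive estimates then give a time-to-collision (TTC) for a warning. Function names and parameters are hypothetical.

```python
import math

def distance_from_box_height(box_height_px, real_height_m, focal_length_px):
    """Pinhole-camera range estimate Z = f * H / h (assumes a calibrated camera)."""
    if box_height_px <= 0:
        raise ValueError("box height must be positive")
    return focal_length_px * real_height_m / box_height_px

def time_to_collision(prev_distance_m, distance_m, dt_s):
    """Seconds until contact if the closing speed stays constant; inf if not closing."""
    closing_speed = (prev_distance_m - distance_m) / dt_s
    return distance_m / closing_speed if closing_speed > 0 else math.inf

# A 1.5 m tall car whose YOLO box is 50 px tall, with f = 1000 px:
d = distance_from_box_height(50, real_height_m=1.5, focal_length_px=1000)
print(d, time_to_collision(30.0, 28.0, dt_s=0.5))
```

The assumed object height is the weak point of this method, which is why stereo cameras or LiDAR are often preferred for ranging.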

An advanced collision detection and warning system has been developed to improve safety for both autonomous and smart vehicles on highways, where traffic flow is continuous and speeds are higher than in urban areas [60]. Pedestrian detection presents another challenge for autonomous vehicles. In [61], a pedestrian tracking algorithm combines StrongSORT, a deep-learning multi-object tracker, with the YOLO v8 object detector; the combination improves tracking results, and either component can also be used independently. While autonomous vehicles are advancing rapidly, in some countries and regions road conditions still need improvement, such as more clearly marked lanes and better traffic management [62]. In such areas, it is crucial to develop self-driving cars that can control speed, steering angle, and braking effectively, which requires efficient detection algorithms. In [63], lane detection is performed using OpenCV, while YOLO v5 is used to detect speed breakers; the experimental results showed improved detection accuracy for normal, shadowed, and multiple speed breakers. YOLO can also be adapted into Lightweight and Fast YOLO (LF-YOLO) to achieve faster detection and greater sensitivity to large objects [64]. Because a single sensor has poor real-time performance, [75] proposes a method for urban autonomous vehicles based on the fusion of a camera and Light Detection and Ranging (LiDAR). The authors built a transformation model between the two sensors to align their data at the pixel level and applied the YOLO v3-tiny algorithm to increase target detection accuracy. This fusion meets the expected accuracy and real-time performance.

Autonomous vehicles can struggle in complex road scenarios, especially in cities with many traffic lights and multiple lanes in each direction. In [76], YOLO is optimized for traffic light detection and lane keeping in a simulation environment. The study compares YOLO v5, v6, v7, and v8 for traffic light signal detection, and the best results were obtained with YOLO v8. In [77], the authors identify a limitation of fixed traffic surveillance cameras: objects outside the camera frame cannot be detected. To overcome this, they propose an object detection system with the camera mounted on the front of a moving vehicle. YOLO v3 was used for detection, and together with the new camera position, it improved detection accuracy.
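Pixel-level camera and LiDAR fusion, as in [75], generally relies on projecting LiDAR points into the image so that they can be associated with YOLO bounding boxes. The sketch below uses the standard pinhole projection and is not the transformation model of [75]; it assumes a known 4×4 extrinsic matrix T_cam_lidar (LiDAR frame to camera frame, camera z axis pointing forward) and a 3×3 intrinsic matrix K, both from a prior calibration. Function names are illustrative.

```python
import numpy as np

def project_lidar_to_image(points_lidar, T_cam_lidar, K):
    """Project (N, 3) LiDAR points to pixels.

    Returns (M, 2) pixel coordinates and (M,) depths for points in front of the camera.
    """
    n = points_lidar.shape[0]
    homog = np.hstack([points_lidar, np.ones((n, 1))])  # (N, 4) homogeneous points
    cam = (T_cam_lidar @ homog.T).T[:, :3]              # into the camera frame
    cam = cam[cam[:, 2] > 0]                             # keep points in front of the camera
    uvw = (K @ cam.T).T
    uv = uvw[:, :2] / uvw[:, 2:3]                        # perspective division
    return uv, cam[:, 2]

def points_in_box(uv, depths, box):
    """Depths of projected points that fall inside a detection box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    mask = (uv[:, 0] >= x1) & (uv[:, 0] <= x2) & (uv[:, 1] >= y1) & (uv[:, 1] <= y2)
    return depths[mask]

K = np.array([[1000.0, 0, 640], [0, 1000.0, 360], [0, 0, 1]])
pts = np.array([[0.0, 0, 10], [1.0, 0, 10], [0.0, 0, -5]])
uv, z = project_lidar_to_image(pts, np.eye(4), K)
print(np.median(points_in_box(uv, z, (600, 300, 700, 400))))  # distance to the boxed object
```

Taking the median of the in-box depths is a simple way to reduce the influence of background points that fall inside the box.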

In a smart city, the traffic sign dataset differs from a traditional one. In [78], a computer vision-based road surface marking recognition system is introduced as an extra layer of data on which autonomous vehicles base their decisions. The authors trained a YOLO v3 detector on over 25,000 images to recognize 25 classes of road markings. The results demonstrated satisfactory detection speed and accuracy.

The research presented in [79] focuses on 72 distinct traffic signs prevalent on urban roads in China and develops an enhanced YOLO v5 algorithm tailored to this task. The proposed method achieved an average precision of 93.88%.

Electric and autonomous technologies are developing every year as leading solutions for reducing pollution, accompanied by advancements in charging stations worldwide. The authors of [70] developed a system called Advanced Vision Track Routing and Navigation (AViTRoN), designed for automatic charging robots for electric vehicles. AViTRoN performs real-time charging port detection with YOLO v8 as the deep learning model and includes a notification mechanism that sends instant charging-completion notifications to users via Global System for Mobile Communications (GSM) or General Packet Radio Service (GPRS). The development of autonomous cars requires advances in perception, localization, control, and decision-making, as many self-driving cars rely on sensors [71]. As autonomous vehicles become more prevalent, the accuracy and speed of detecting surrounding objects become increasingly important. Applying YOLO v8 to commercial vehicles on high-speed roads achieved a surrounding object detection accuracy of 64.5% with reduced computational requirements [72]. In [73], the authors combined YOLO for object detection with Monocular Depth Estimation in Real-Time (MiDaS) for depth sensing, localization, and obstacle detection to improve path-planning decisions. The results showed that the system is robust, offering an innovative approach for self-driving cars.
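Combining YOLO with a monocular depth network, as in [73], typically means reading the depth map inside each detected box. The sketch below ranks detections from nearest to farthest; it assumes the depth map holds relative inverse depth (larger values are closer), which is how MiDaS-style models are commonly described, so the scores are relative rather than metric. The function name and input format are hypothetical.

```python
import numpy as np

def rank_by_depth(depth_map, detections):
    """Order detections from nearest to farthest.

    depth_map: (H, W) ASSUMED relative inverse depth (larger = closer).
    detections: list of (label, (x1, y1, x2, y2)) with integer pixel coordinates.
    Returns a list of (label, median inverse depth) sorted nearest first.
    """
    h, w = depth_map.shape
    scored = []
    for label, (x1, y1, x2, y2) in detections:
        x1, x2 = max(0, x1), min(w, x2)  # clip the box to the image
        y1, y2 = max(0, y1), min(h, y2)
        region = depth_map[y1:y2, x1:x2]
        if region.size == 0:
            continue
        scored.append((float(np.median(region)), label))  # median resists background pixels
    scored.sort(reverse=True)
    return [(label, score) for score, label in scored]

depth = np.zeros((100, 100))
depth[10:20, 10:20] = 5.0  # a close object
depth[50:60, 50:60] = 1.0  # a farther object
print(rank_by_depth(depth, [("sign", (50, 50, 60, 60)), ("car", (10, 10, 20, 20))]))
```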

Object detection is a very important and widely discussed part of self-driving car development. It requires integrating advanced sensing systems to handle obstacles in road traffic and different weather conditions [80]. In [81], an improved object detection algorithm, MobileNetV2 with Spatial Pyramid Pooling and Enhanced YOLO (MV2_S_YE), based on the YOLO v4 network, is proposed to improve road object detection accuracy. The results show that MV2_S_YE outperforms YOLO v8 alone.

Supervised object detection models based on deep learning struggle under domain shift because annotated training data are limited. Domain adaptation techniques have emerged to address domain shift by facilitating knowledge transfer. In [82], a framework called stepwise domain adaptive YOLO (S-DAYOLO) was developed for this problem, achieving a 5.4% mAP increase over YOLO v5.

Paper [83] presented a solution for object detection and tracking in autonomous driving scenarios. It compares the performance of several state-of-the-art object detectors trained on the BDD100K dataset, including YOLO v8, YOLO v7, YOLO v5, Scaled-YOLO v4, and YOLOR. The proposed approach combines a YOLOR-CSP architecture with a DeepSORT tracker, achieving a real-time inference rate of 33.3 frames per second (FPS) with a detection interval of one and 17 FPS with an interval of zero. The best-performing object detector was YOLOR-CSP, with an mAP of 69.70%.
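The speed difference reported in [83] between detection intervals of one and zero is consistent with a common detector-plus-tracker scheme in which the detector runs only on some frames and the tracker propagates boxes in between. The sketch assumes "interval" means the number of frames skipped between detector runs (as in the interval property of NVIDIA DeepStream’s inference plugin); the source does not define it explicitly. The function name is hypothetical.

```python
def frame_schedule(num_frames, interval):
    """Per-frame action for a detector-plus-tracker pipeline.

    interval: ASSUMED number of frames skipped between detector runs
    (0 = run the detector on every frame).
    'detect' runs YOLO and updates the tracker; 'track' only propagates existing tracks.
    """
    if interval < 0:
        raise ValueError("interval must be non-negative")
    return ["detect" if i % (interval + 1) == 0 else "track" for i in range(num_frames)]

print(frame_schedule(5, interval=1))
```

With interval 1, the detector runs on every second frame, so when detection dominates the cost, throughput can roughly double, which matches the order of magnitude of 33.3 versus 17 FPS.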

For Automatic Driving Systems (ADS) and Driver Assistance Systems (DAS), accurate object detection is crucial. However, existing real-time detection models struggle to detect tiny vehicle objects, resulting in low precision and poor performance. In [84], the authors proposed a real-time object detection model named Optimized You Only Look Once Version 2 (O-YOLO v2), built on the YOLO v2 deep learning framework and tailored specifically to tiny vehicle objects. Experiments on the dataset showed significant improvements in both tiny vehicle detection accuracy and overall vehicle detection accuracy (reaching an mAP of 94%) without compromising detection speed.

In [85], a comprehensive solution, Radar-Augmented Simultaneous Localization and Mapping (RA-SLAM), was developed, integrating an enhanced Visual Simultaneous Localization and Mapping (V-SLAM) mechanism for road scene modeling with the YOLO v4 algorithm. This solution was motivated by anomalies the authors encountered, such as unapproved speed bumps, potholes, and other hazardous conditions, especially in low-income countries. Along the same lines, [86] proposes a robust pothole detection solution using the YOLO v8 algorithm. The system uses deep learning to identify potholes in real time, helping autonomous vehicles avoid hazards and minimize accident risk; detected potholes are characterized by their condition and severity. In [87], the authors classified potholes into two categories, dry and submerged, with an average YOLO v5 detection accuracy of 85.71%; their aim was to minimize accidents and vehicle damage. Detecting road hazards helps a self-driving vehicle move smoothly and avoid getting stuck in potholes. In [88], the authors propose a two-part hazard detection method: the first part performs object detection with the YOLO algorithm, and the second is implemented on a Raspberry Pi 4, which is better suited to running the detection on board. This solution addresses common road problems such as collisions and slowdowns in the transportation system. As self-driving cars will soon be the face of the automotive industry [89], object detection algorithms have undergone numerous changes. The obstacle detection method in [90], called the “YOLO non-maximum suppression fuzzy algorithm”, reacts to obstacles with better accuracy and higher speed than the hazard detection algorithms used for the designed framework; trials showed that it is faster than the baseline YOLO v3 model.
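Non-maximum suppression (NMS), which the method in [90] modifies, is the post-processing step that removes duplicate YOLO boxes covering the same object. The sketch below is standard greedy (hard) NMS, not the fuzzy variant of [90]: boxes are sorted by confidence, and any box overlapping an already kept box by more than iou_threshold is discarded. The threshold value is an illustrative choice; in PyTorch projects, torchvision.ops.nms provides the same operation.

```python
def iou(a, b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.45):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any higher-scoring kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
print(nms(boxes, [0.9, 0.8, 0.7]))
```

YOLO implementations usually run NMS separately per class, so that overlapping objects of different classes (e.g., a rider and a motorcycle) are not suppressed against each other.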

For vulnerable road users such as pedestrians, it is useful to forecast their behavior when developing autonomous vehicles. In [91], an end-to-end pedestrian intention detection system that works in both daytime and nighttime is developed using YOLO v3, Darknet-53, and YOLO v7. In [92], a pedestrian tracking solution for self-driving vehicles is proposed that explores YOLO v5, Scaled-YOLO v4, and YOLOR. The authors also examine deploying the algorithms on devices such as the NVIDIA Jetson AGX Xavier and using NVIDIA DeepStream trackers such as NvDCF, which is based on a discriminative correlation filter, and DeepSORT.

Optical perception is one of the most important factors for autonomous cars [93]. For example, in [94], YOLO v3-tiny is used to detect temporary roadwork signs, achieving a 94.8% mAP and proving reliable in real-world applications. YOLO can also support building models on low-cost computing resources [89]. Finally, automatic traffic sign detection and recognition is an essential topic for safe self-driving cars [95].

The authors of [96] proposed a real-time object detection system that improves detection accuracy in adverse weather using YOLO, an emerging CNN approach. The system was validated on the Canadian Adverse Driving Conditions (CADC) dataset, and the experiments showed better results than traditional methods. The authors of [97] investigated the use of YOLOX to detect vehicles in bad weather (rain, fog, snow), obtaining an mAP between 0.89 and 0.95 across the different weather conditions.

In challenging conditions such as haze, rain, and low light, traffic sign recognition accuracy tends to decrease because of missed detections or misalignments. Reference [98] introduced a traffic sign recognition (TSR) algorithm that combines Faster R-CNN and YOLO v5. The algorithm detects road signs from the driver’s perspective with assistance from satellite imagery. The input image is first preprocessed with guided image filtering to remove noise, and the processed image is then fed into the proposed networks for training and testing. The method was evaluated on three datasets, with promising traffic sign recognition results.
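The guided image filtering used as preprocessing in [98] is an edge-preserving smoother that models the output as a local linear function of a guidance image, q = a·I + b, within each window. The sketch below implements the guided filter of He et al. for single-channel float images; the radius r and regularization eps are illustrative values, not the settings of [98]. OpenCV’s contrib module also offers a guided filter (cv2.ximgproc.guidedFilter) for production use.

```python
import numpy as np

def box_filter(img, r):
    """Mean over a (2r+1) x (2r+1) window, edge-padded, via a summed-area table."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    sat = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = sat[k:, k:] - sat[:-k, k:] - sat[k:, :-k] + sat[:-k, :-k]
    return window_sum / (k * k)

def guided_filter(guide, src, r=4, eps=1e-2):
    """Guided filter (He et al.) for single-channel float images in [0, 1]."""
    I = guide.astype(np.float64)
    p = src.astype(np.float64)
    mean_I = box_filter(I, r)
    mean_p = box_filter(p, r)
    var_I = box_filter(I * I, r) - mean_I ** 2
    cov_Ip = box_filter(I * p, r) - mean_I * mean_p
    a = cov_Ip / (var_I + eps)  # local linear coefficients of q = a * I + b
    b = mean_p - a * mean_I
    return box_filter(a, r) * I + box_filter(b, r)

rng = np.random.default_rng(0)
noisy = rng.random((64, 64))
smoothed = guided_filter(noisy, noisy, r=2, eps=0.1)  # self-guided denoising
print(noisy.std(), smoothed.std())
```

Larger eps smooths more strongly; edges survive because the local variance there is large compared with eps, so a stays close to 1.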

3.4 Object Detection Used in Industrial Environments

Based on the selected articles, the research areas in industrial environments where YOLO is integrated can be divided into manufacturing, materials, quality, and operator safety. In the automotive field, weld detection is a step in wheel production. In [99], a YOLO v4-based method was used to detect vehicle wheel welds, and the results showed a 4.92% increase in accuracy over the baseline model. Printed Circuit Boards (PCBs) are part of any electronic device used in the automotive field and beyond. In [100], a method for inspecting PCBs to produce defect-free products is proposed, based on a hardware framework of set-up modules and a software framework that uses YOLO for image pre-processing; the results showed satisfactory performance. For monitoring assembly lines of vehicle components, Reference [101] proposed a method built on the YOLO v4 algorithm for monitoring, classifying, and segregating industrial components on assembly lines. The proposed model achieved an average accuracy of 98.3%, detecting several tool types and their locations in real time. Object detection and computer vision enable machines to perceive, analyze, and control factory production, and they play an essential role in Industry 4.0 [102]. Although deep learning methods solve many problems, they still require large amounts of labeled data. In [103], a hybrid dataset of 29,550 images of tools commonly used in manufacturing cells is evaluated in real time with YOLO as the underlying detector.

Steel and aluminum are two of the most widely used materials in automotive manufacturing. As noted in [104], the quality of the steel coil end face directly affects the equipment safety of production lines, so detecting end face defects is important. A YX-FR model based on Faster R-CNN and YOLOX was proposed and implemented with outstanding effectiveness. Aluminum wheels are susceptible to internal casting defects, so the authors of [105] identify these defects through X-ray inspection using YOLO v5, obtaining an average mAP of 97.5%.

In [106], YOLO-C, a detection model that combines a CNN with attention mechanisms, is proposed to improve the accuracy of steel defect detection in manufacturing. As with steel, detecting defects on the surface of aluminum profiles is important. The authors of [107] proposed YOLO v5-CA, an improvement of the YOLO v5 algorithm that uses the IoU (Intersection over Union) distance instead of the Euclidean distance used by YOLO v5 to improve the model’s adaptability to the dataset.
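Replacing the Euclidean distance with an IoU-based distance, as in [107], is most commonly done when clustering the training boxes into anchor sizes, the approach popularized by YOLO v2, which uses d = 1 − IoU. Whether [107] applies it in exactly this way is an assumption. The sketch clusters (width, height) pairs with k-means under that distance; IoU here compares box shapes aligned at a common corner, so large and small boxes are not penalized by their absolute size. Names and parameters are illustrative.

```python
import random

def wh_iou(a, b):
    """IoU of two boxes given as (width, height), aligned at the same corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)

def kmeans_anchors(boxes, k, iters=100, seed=0):
    """k-means on (w, h) pairs with distance d = 1 - IoU; returns anchors sorted by area."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            # Assign each box to the centroid with the smallest 1 - IoU distance.
            j = min(range(k), key=lambda c: 1 - wh_iou(b, centroids[c]))
            clusters[j].append(b)
        new = []
        for j, cl in enumerate(clusters):
            if cl:
                new.append((sum(w for w, _ in cl) / len(cl), sum(h for _, h in cl) / len(cl)))
            else:
                new.append(centroids[j])  # keep an empty cluster's centroid
        if new == centroids:
            break
        centroids = new
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])

boxes = [(10, 10), (11, 11), (12, 12), (100, 50), (105, 55), (98, 52)]
print(kmeans_anchors(boxes, k=2))
```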

Because classical image processing techniques are vulnerable to strong reflections from the surfaces of new energy vehicles, [108] uses a high-resolution linear array camera together with YOLO to recognize weld defects, optimizing the single-stage YOLO detection network for the particular characteristics of weld defects. Welding studs are widely used in vehicle manufacturing, so their quality plays an important role. The authors of [109] aimed to overcome the low accuracy and slow speed of the stud inspection process. Their method is based on YOLO v7, and the improved network raised the mAP from 94.6% with the traditional detection network to 95.2%.

Defect detection is difficult in wire and arc additive manufacturing because of complex defect types and noisy detection environments. Paper [110] presented an automatic defect detection solution based on YOLO v4. The resulting YOLO attention model improves the network with a channel-wise attention mechanism, multiple spatial pyramid pooling, and an exponential moving average. Evaluated on a wire and arc additive manufacturing defect dataset, the model obtains 94.5% mAP at no less than 42 frames per second.
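The exponential moving average (EMA) mentioned for [110] usually refers to keeping a smoothed copy of the network weights during training and using that copy for evaluation, which tends to stabilize results. A minimal sketch, assuming weights stored as a dictionary of floats for clarity (real implementations apply the same update to every tensor of the model); the class name and the decay value are illustrative.

```python
class WeightEMA:
    """Shadow copy of model weights updated as an exponential moving average."""

    def __init__(self, params, decay=0.999):
        self.decay = decay
        self.shadow = {name: float(v) for name, v in params.items()}

    def update(self, params):
        """Call after each optimizer step: shadow = d * shadow + (1 - d) * current."""
        d = self.decay
        for name, v in params.items():
            self.shadow[name] = d * self.shadow[name] + (1 - d) * float(v)

ema = WeightEMA({"w": 0.0}, decay=0.9)
for _ in range(2):
    ema.update({"w": 1.0})  # the raw weight jumps to 1.0; the EMA approaches it gradually
print(ema.shadow["w"])
```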

With the development of the Internet of Things and the Industrial Internet of Things, several smart solutions for factories have emerged [111]. Manufacturers are increasingly willing to improve product quality with computer science techniques because human operators cannot detect defects accurately. In [112], a deep learning model is proposed together with the YOLO algorithm for better production accuracy and quality. The authors evaluated it using metrics such as precision, recall, and inference time, obtaining values that indicated improved performance.

Vision-based defect inspection algorithms are well suited to quality assurance in the manufacture of car parts, especially under Industry 4.0, which is characterized by intelligent manufacturing. In [113], an algorithm was proposed using Field Programmable Gate Array (FPGA)-accelerated YOLO v3 with an attention mechanism to further improve smart defect inspection. The proposed algorithm achieved an accuracy of 99.2%, a processing speed of 1.54 FPS, and a power consumption of only 10 W. In [114], a region-based robotic machining system is proposed that improves the automation of local grinding of welding slag on automotive bodies. The system, based on YOLO v3, integrates defect detection, defect region division, and machining path decision-making.

In addition to product quality assurance, the automotive industry must implement safety measures for the human factor. The study in [115] demonstrated how computer vision can help implement a sixth S, Safety, alongside the existing 5S (Sort, Set in Order, Shine, Standardize, and Sustain) by identifying operators who do not follow safety procedures. The proposed model used the YOLO v7 architecture, with satisfactory results in verifying operators’ compliance with protective equipment requirements.

Wearing a safety helmet is important for the safety of employees in the automotive manufacturing sector. However, employees remove their helmets more often than expected, exposing themselves to various dangers, because of a lack of safety awareness and discomfort [116]. In [117], a real-time deep learning-based framework called YOLO-H was proposed for automatic helmet-wearing detection in the production area, showing strong performance in terms of speed and accuracy.

Automating the archiving of design data can significantly reduce the time designers spend on non-creative tasks, allowing them to work more productively. Reference [118] introduced GP22, a dataset of car styling features defined by automotive designers. GP22 includes 1480 car side profile images from 37 brands across ten car segments. A baseline design feature detection model was trained on this dataset using YOLO v5, achieving an mAP of 0.995.

The main goal of this study was to identify the most relevant papers on implementing the YOLO algorithm in the automotive field, in areas such as road traffic surveillance, autonomous vehicles, and industrial automation for vehicle manufacturing. YOLO’s fast and accurate object detection, coupled with its adaptability and scalability, makes it a promising technology for advancing automotive technology, safety, and intelligent transportation systems, and YOLO-based solutions contribute significantly to safer, more efficient, and more intelligent automotive ecosystems. This research maps the trends in YOLO use in the automotive field over a four-year period, from 2020 to 2023. Each topic and article was analyzed to identify current challenges and future directions.

Review Question 1: What are the automotive field’s main applications of real-time object detection and recognition using YOLO?

The automotive industry established its place in the research and innovation market long ago. It continues to develop, however, and in doing so influences related fields such as road traffic and the manufacturing industry.

In road traffic assessment, the case studies in this review focus on vehicle detection and counting, particularly for real-time traffic monitoring [6,41–43,45]. YOLO can quickly detect vehicles on roads and highways, allowing traffic conditions to be monitored in real time [55,56,60,65,77]. In addition, traffic flow can be analyzed by counting vehicles in specific regions, enabling traffic management systems to assess flow and density, optimize traffic light timings, and reduce congestion [119–123].
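Counting vehicles in a region of interest usually reduces to a geometric test on each detection. A minimal sketch, assuming a YOLO-style detector has already returned (x1, y1, x2, y2, class_name) boxes for one frame: a vehicle is counted when the bottom-center of its box (roughly where it meets the road) falls inside the region polygon, checked with the standard ray-casting test. The label set, polygon, and road length are hypothetical values chosen for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Detection = Tuple[float, float, float, float, str]  # (x1, y1, x2, y2, class_name)

VEHICLE_CLASSES = {"car", "truck", "bus", "motorcycle"}  # hypothetical label set


def point_in_polygon(pt: Point, poly: List[Point]) -> bool:
    """Ray casting: toggle on each polygon edge crossed by a ray going right from pt."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_roi(dets: List[Detection], roi: List[Point]) -> int:
    """Number of vehicle detections whose bottom-center lies inside the ROI."""
    count = 0
    for x1, y1, x2, y2, cls in dets:
        if cls in VEHICLE_CLASSES and point_in_polygon(((x1 + x2) / 2, y2), roi):
            count += 1
    return count


def density_per_km(count: int, roi_length_m: float) -> float:
    """Vehicles per kilometre of road covered by the ROI (length assumed known)."""
    return count / (roi_length_m / 1000.0)


if __name__ == "__main__":
    roi = [(0, 200), (640, 200), (640, 480), (0, 480)]
    dets = [
        (100, 250, 180, 320, "car"),
        (300, 100, 380, 180, "car"),    # above the ROI, not counted
        (400, 300, 520, 420, "truck"),
        (50, 300, 70, 360, "person"),   # not a vehicle class
    ]
    n = count_in_roi(dets, roi)
    print(n, density_per_km(n, roi_length_m=50.0))  # 2 40.0
```

Per-frame counts like these are what a traffic controller would aggregate over time; counting vehicles that cross a line (rather than occupy a region) additionally needs a tracker so that each vehicle is counted once.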

In automatic license plate recognition [65–67,124,125], YOLO is used together with optical character recognition (OCR) systems to locate and read vehicle license plates, supporting law enforcement and toll collection. YOLO is also integrated with speed cameras and traffic lights to detect vehicles that run red lights or exceed speed limits.
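The two-stage pipeline of license plate recognition (YOLO locates the plate, OCR reads it) can be sketched as follows. This is an illustration under stated assumptions: the OCR engine is passed in as an arbitrary callable rather than a specific library, the frame is a plain grayscale pixel grid, and the plate pattern `PLATE_RE` is a hypothetical format, not any country's official rule.

```python
import re
from typing import Callable, List, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels, x2/y2 exclusive
Image = List[List[int]]          # grayscale frame as rows of pixel values

# Hypothetical plate format used only for this example: 1-2 letters,
# 2-3 digits, 3 letters (e.g. "BV12ABC"). Real systems use per-country rules.
PLATE_RE = re.compile(r"^[A-Z]{1,2}[0-9]{2,3}[A-Z]{3}$")


def crop(frame: Image, box: Box) -> Image:
    """Cut the detected plate region out of the frame."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in frame[y1:y2]]


def normalize(text: str) -> str:
    """Uppercase and drop spaces, dashes and other non-alphanumerics."""
    return re.sub(r"[^A-Z0-9]", "", text.upper())


def read_plates(frame: Image, plate_boxes: Sequence[Box],
                ocr: Callable[[Image], str]) -> List[Optional[str]]:
    """Stage 2: OCR each plate box from the detector, keep only valid strings.

    `ocr` stands in for any OCR engine; it is an assumed interface,
    not a specific library's API.
    """
    results: List[Optional[str]] = []
    for box in plate_boxes:
        text = normalize(ocr(crop(frame, box)))
        results.append(text if PLATE_RE.match(text) else None)
    return results


if __name__ == "__main__":
    frame = [[0] * 8 for _ in range(4)]   # dummy 8x4 grayscale frame
    fake_ocr = lambda img: "bv-12 abc"    # stand-in OCR engine
    print(read_plates(frame, [(1, 1, 6, 3)], fake_ocr))  # ['BV12ABC']
```

Validating the OCR string against a plate pattern is a cheap way to discard crops where the detector fired on something that is not a plate, or where the OCR output is unreadable.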

Autonomous vehicles, the newest area of research and implementation in the automotive industry, attract growing interest from automotive research and development centers. The YOLO algorithm is used alone or combined with other methods to achieve high accuracy in self-driving tasks such as object detection, traffic sign recognition, lane detection and tracking, obstacle detection and avoidance, path planning and navigation, and collision avoidance. YOLO detects other vehicles on the road, including cars, trucks, buses, and motorcycles [81,84,89,93], and classifies them, allowing more accurate differentiation among vehicles during real-time traffic analysis, including license plate recognition and classification [126–130]. This helps the autonomous vehicle understand its environment and make informed decisions, not only about vehicles but also about pedestrians on the road [90–92,131,132]. Traffic sign recognition, together with lane detection and tracking, is part of the vehicle's safety performance parameters [76,78,133–135]. Implementing YOLO in autonomous vehicles makes it possible to identify and classify different traffic signs, such as stop signs, yield signs, and speed limit signs, as well as lane markings and road edges, allowing the vehicle to follow road rules, keep its lane, or perform safe lane changes [136–140].

For path planning and navigation, YOLO assists in efficient route planning [141], supporting route selection and fuel efficiency while helping the vehicle maintain safe distances from other vehicles and adjust its speed based on detected objects and road conditions. YOLO also helps implement emergency braking systems by detecting imminent collision threats, such as road hazards, and triggering the brakes [74,85,86,96]; in conjunction with other systems, it can aid in performing evasive maneuvers to avoid accidents.
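Emergency braking logic is often framed in terms of time-to-collision (TTC), the gap to an obstacle divided by the closing speed. A minimal sketch, assuming the range to the obstacle ahead is supplied by another sensor or estimator (a YOLO box alone gives a position in the image, not a distance); the warning and braking thresholds are illustrative assumptions, not values from the cited studies.

```python
import math


def time_to_collision(d_prev: float, d_curr: float, dt: float) -> float:
    """TTC in seconds from two range readings (metres) taken dt seconds apart.

    Returns math.inf when the gap is constant or growing (no closing speed).
    """
    closing_speed = (d_prev - d_curr) / dt  # m/s, positive when approaching
    if closing_speed <= 0:
        return math.inf
    return d_curr / closing_speed


def braking_decision(ttc: float, warn_s: float = 2.5, brake_s: float = 1.2) -> str:
    """Map TTC to an action; both thresholds are illustrative assumptions."""
    if ttc <= brake_s:
        return "brake"
    if ttc <= warn_s:
        return "warn"
    return "none"


if __name__ == "__main__":
    # Obstacle range drops from 20.0 m to 19.0 m over 0.1 s: 10 m/s closing speed.
    ttc = time_to_collision(20.0, 19.0, 0.1)
    print(round(ttc, 2), braking_decision(ttc))  # 1.9 warn
```

Using two thresholds mirrors the common warn-then-brake escalation; a production system would also filter noisy range estimates before differentiating them.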

In the automotive manufacturing industry, YOLO is used to enhance efficiency, quality control, and safety through real-time object detection and image analysis. In quality control and inspection, YOLO is successfully used to detect defects in automotive parts, ensuring that only high-quality products proceed along the production line, as reported in the analyzed case studies [100,104,109,110,113]. Regarding workplace safety, the case studies [116,117,142] showed that YOLO can detect potential safety hazards on the factory floor, such as spills or misplaced tools, and alert workers or halt operations to prevent accidents. In production monitoring, YOLO can identify anomalies that may indicate underlying issues, allowing timely intervention [99,101,115].
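For inline inspection, detector output has to be turned into a pass/fail decision for each part. A minimal sketch, assuming each detection carries a defect label and a confidence score; the two thresholds and the three-way pass/review/reject policy are hypothetical choices, not taken from the cited case studies.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str         # hypothetical defect class, e.g. "scratch"
    confidence: float  # detector score in [0, 1]


def inspect_part(dets: List[Detection], reject_at: float = 0.6,
                 review_at: float = 0.3) -> str:
    """Return "reject", "review" or "pass" for one inspected part.

    Thresholds are illustrative; in practice they are tuned to trade
    missed defects against false rejections.
    """
    best = max((d.confidence for d in dets), default=0.0)
    if best >= reject_at:
        return "reject"
    if best >= review_at:
        return "review"
    return "pass"


if __name__ == "__main__":
    print(inspect_part([Detection("scratch", 0.82)]))  # reject
    print(inspect_part([Detection("dent", 0.41)]))     # review
    print(inspect_part([]))                            # pass
```

The intermediate "review" band routes uncertain parts to a human inspector instead of forcing the detector's borderline scores into a hard decision.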

Review Question 2: What hardware is used for the implementation of real-time object detection systems based on YOLO?

Deploying real-time object detection systems based on the YOLO framework in the automotive sector often requires hardware configurations that balance computing power and efficiency. The choice and arrangement of hardware components vary with the specific application and its requirements. Graphics Processing Units (GPUs) play a critical role in accelerating inference for deep learning models such as YOLO; high-performance GPUs from leading manufacturers such as Nvidia [92] are commonly used for real-time or near-real-time inference.
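Whether a given hardware platform is fast enough for real-time use can be checked empirically by timing inference against the camera's frame rate. A minimal sketch, assuming a 30 fps camera and using a trivial stand-in function in place of an actual YOLO model; only the standard library timer `time.perf_counter` is used.

```python
import time
from typing import Any, Callable, Sequence


def measure_fps(infer: Callable[[Any], Any], frames: Sequence[Any], warmup: int = 2) -> float:
    """Average throughput (frames/s) of `infer` over `frames`, after warm-up runs."""
    if not frames:
        raise ValueError("need at least one frame")
    for f in frames[:warmup]:
        infer(f)  # early calls often include one-off setup costs (e.g. GPU initialization)
    start = time.perf_counter()
    for f in frames:
        infer(f)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


def meets_real_time(fps: float, camera_fps: float = 30.0) -> bool:
    """Real-time here means processing frames at least as fast as they arrive."""
    return fps >= camera_fps


if __name__ == "__main__":
    dummy_model = lambda frame: sum(frame)  # stand-in for a YOLO forward pass
    frames = [list(range(1000))] * 50
    fps = measure_fps(dummy_model, frames)
    print(f"{fps:.0f} FPS, real-time: {meets_real_time(fps)}")
```

At 30 fps the per-frame budget is about 33 ms, which must cover preprocessing and post-processing (such as non-maximum suppression) as well as the network itself.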

Embedded platforms such as the Raspberry Pi [88] have become important solutions for traffic sign detection and recognition. Embedded systems offer a good balance between energy efficiency and processing capability, which makes them valuable for road safety and intelligent transportation applications, where they enable on-device identification and classification of traffic signals, signs, and road conditions.

Moreover, the versatility and connectivity of these devices make them well suited to edge computing and industrial automation applications, contributing to advances in road safety and traffic optimization in both urban and rural settings.

4.1 YOLO Application and Performance

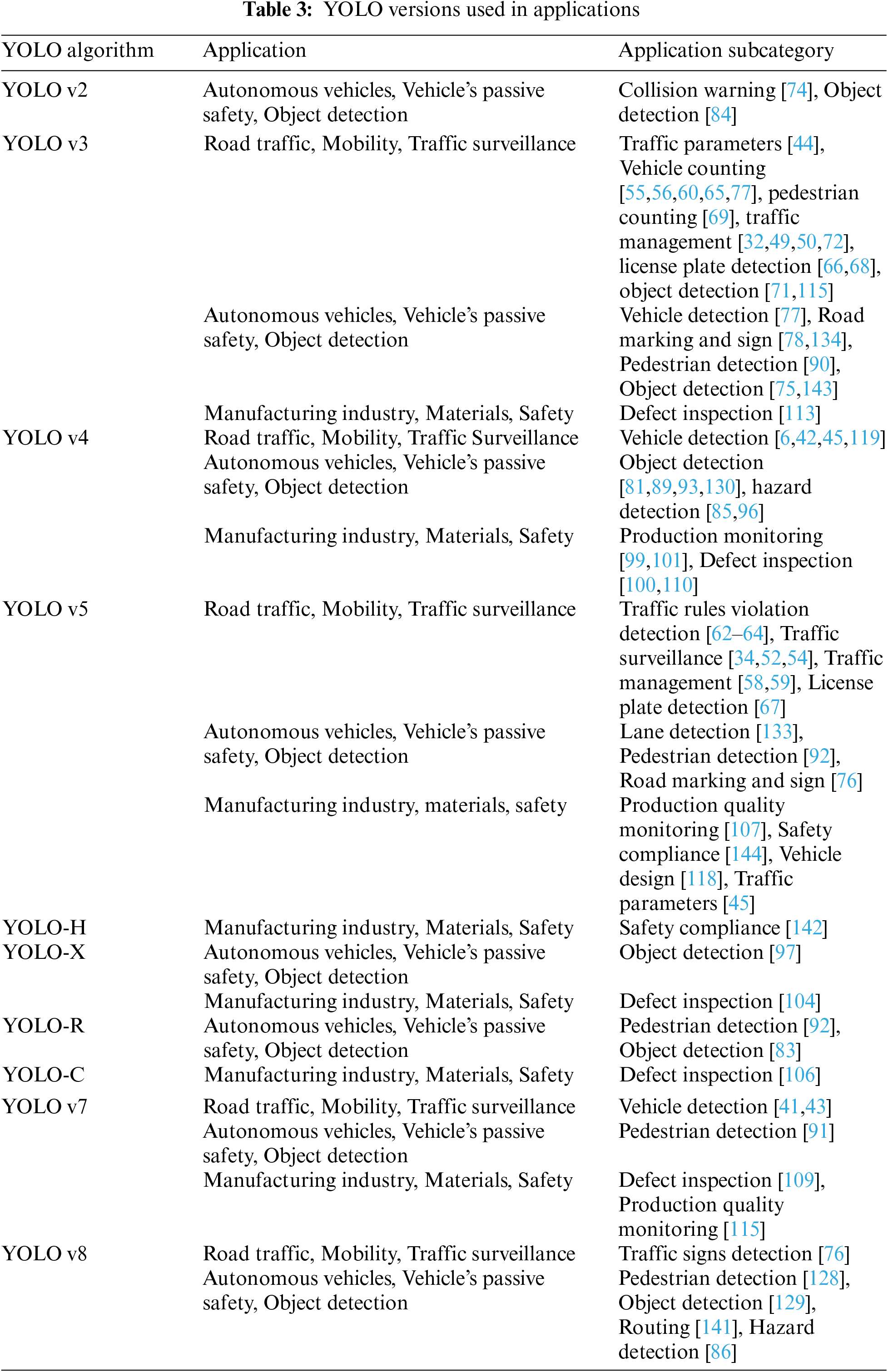

Table 3 presents all the versions of the YOLO algorithm used in the studies reviewed in this paper, together with the main analyzed categories and their subcategories, offering a comparative view of each version. We quantified how often each YOLO version was used and how relevant it was to each analyzed category.
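Frequency counts of the kind summarized in Table 3, and percentage distributions of the kind shown in pie charts, amount to simple tallies over the reviewed studies. A minimal sketch, assuming each study is encoded as an (application category, YOLO version) record; the records in the example are hypothetical and are not the data of this review.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Each record: (application category, YOLO version) for one reviewed study.
Record = Tuple[str, str]


def version_share(records: Iterable[Record]) -> Dict[str, float]:
    """Percentage of studies using each YOLO version (the basis of a pie chart)."""
    counts = Counter(version for _, version in records)
    total = sum(counts.values())
    return {v: 100.0 * c / total for v, c in counts.most_common()}


def versions_by_category(records: Iterable[Record]) -> Dict[str, Counter]:
    """Per-category usage counts, i.e. one column of a comparison table."""
    table: Dict[str, Counter] = {}
    for category, version in records:
        table.setdefault(category, Counter())[version] += 1
    return table


if __name__ == "__main__":
    # Hypothetical records, for illustration only (not the review's data).
    records = [
        ("road traffic", "v3"), ("road traffic", "v5"), ("road traffic", "v3"),
        ("autonomous vehicles", "v8"), ("industrial", "v5"),
    ]
    print(version_share(records))  # {'v3': 40.0, 'v5': 40.0, 'v8': 20.0}
    print(versions_by_category(records)["road traffic"])
```

When a single study uses several YOLO versions, it would contribute one record per version, so percentages then refer to version usages rather than to papers.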

In the manufacturing industry, YOLO is used to monitor operators' activity and check that work equipment is worn properly, which is crucial for the safety and physical integrity of the staff. Overall, YOLO has proven applicable almost end-to-end, from manufacturing a vehicle's parts, through its final assembly, to its operation in road traffic.

Review Question 3: Which metrics are used to gauge the effectiveness of YOLO-based object detection in real-time automotive applications?

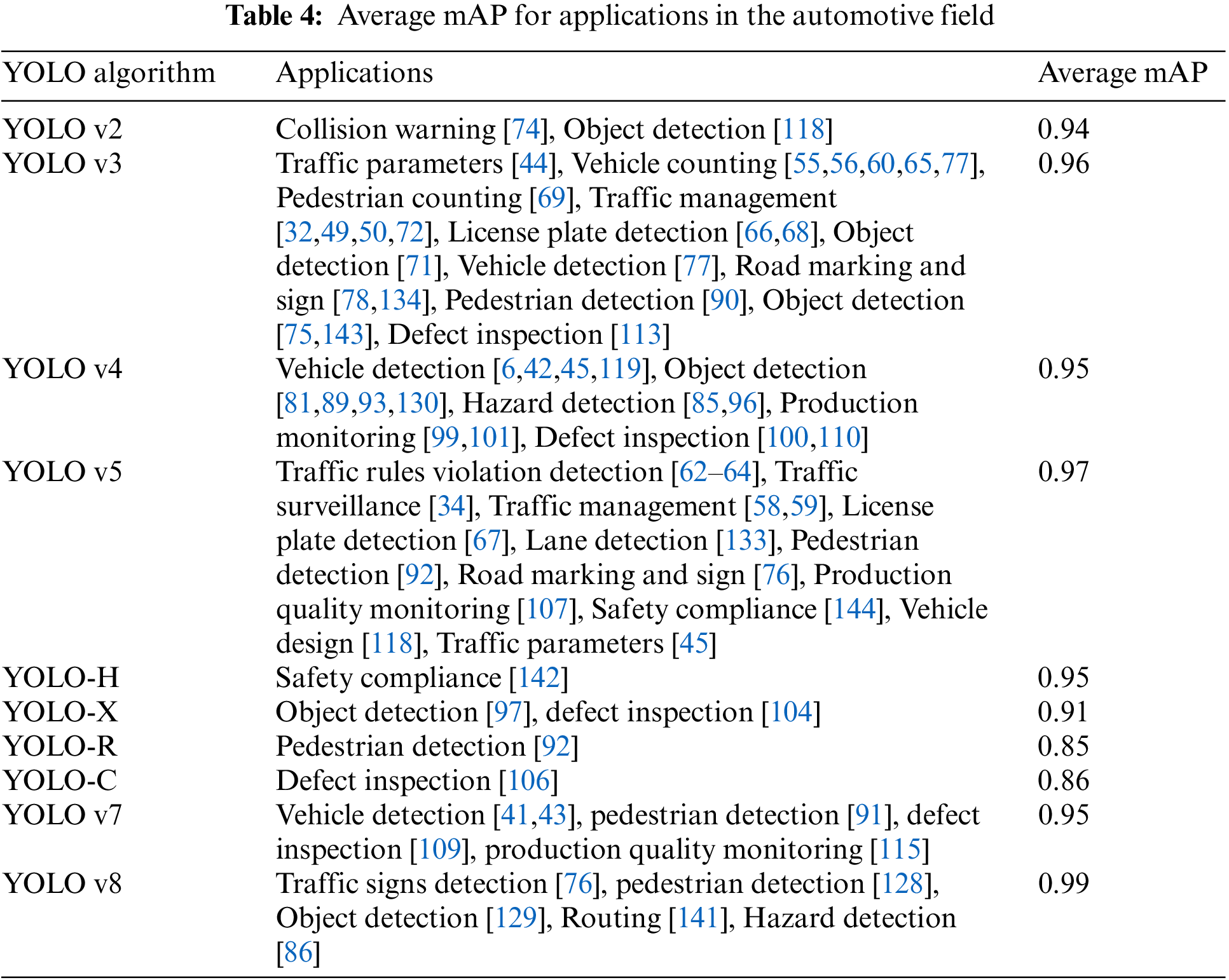

However, the main challenge YOLO faces, whether used alone or combined with other methods, is that it has not yet achieved 100% accuracy, as Table 4 shows, which means there is room for improvement. Such improvements may come over time as research expands and larger, more diverse image datasets are used.

Across YOLO versions, mAP is the predominant object detection metric and is used extensively to evaluate performance on diverse datasets. It captures both precision (the fraction of detections that are correct) and recall (the fraction of ground-truth objects that are detected): the average precision (AP) of each class summarizes its precision-recall curve, and mAP averages AP over all classes, providing a comprehensive assessment of detection accuracy. Because YOLO places equal emphasis on accuracy and speed, mAP is usually reported alongside a speed measure such as frames per second (FPS), since mAP itself does not reflect inference time.
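Since mAP is the central metric in the reviewed studies, a compact sketch of how AP is commonly computed for one class may help. It assumes a single image, greedy matching of score-sorted detections to ground-truth boxes at IoU ≥ 0.5 (the PASCAL VOC criterion), and all-point interpolation of the precision-recall curve. Benchmarks differ in these details (COCO, for example, averages AP over IoU thresholds from 0.50 to 0.95), so this is one common variant rather than the exact protocol used in any cited paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: List[Tuple[Box, float]], gts: List[Box],
                      iou_thr: float = 0.5) -> float:
    """All-point interpolated AP for one class in one image."""
    if not gts:
        return 0.0
    dets = sorted(dets, key=lambda d: d[1], reverse=True)  # highest score first
    matched = [False] * len(gts)
    tp_flags = []
    for box, _ in dets:
        # Greedily match to the best still-unmatched ground truth above the threshold.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            o = iou(box, gt)
            if not matched[j] and o >= best_iou:
                best_j, best_iou = j, o
        if best_j >= 0:
            matched[best_j] = True
        tp_flags.append(best_j >= 0)
    precisions, recalls, tp = [], [], 0
    for k, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Precision envelope: make it non-increasing, then sum the area under the steps.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_ap(per_class_ap: List[float]) -> float:
    """mAP: the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

With two ground-truth objects, a true positive at score 0.9, a false positive at 0.8, and a second true positive at 0.7, recall steps from 0.5 to 1.0 while the interpolated precision is 1.0 and then 2/3, so AP = 0.5 × 1.0 + 0.5 × 2/3 ≈ 0.833.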

Using average precision thus allows a thorough evaluation of a system's real-time object detection performance, supporting the development of robust, adaptable recognition systems that perform well under the diverse operating conditions of the automotive field.

Review Question 4: Which YOLO version achieved the best results after these systems were validated?

As seen in Table 4, YOLO v8 has the best real-time detection precision when used in automotive applications.

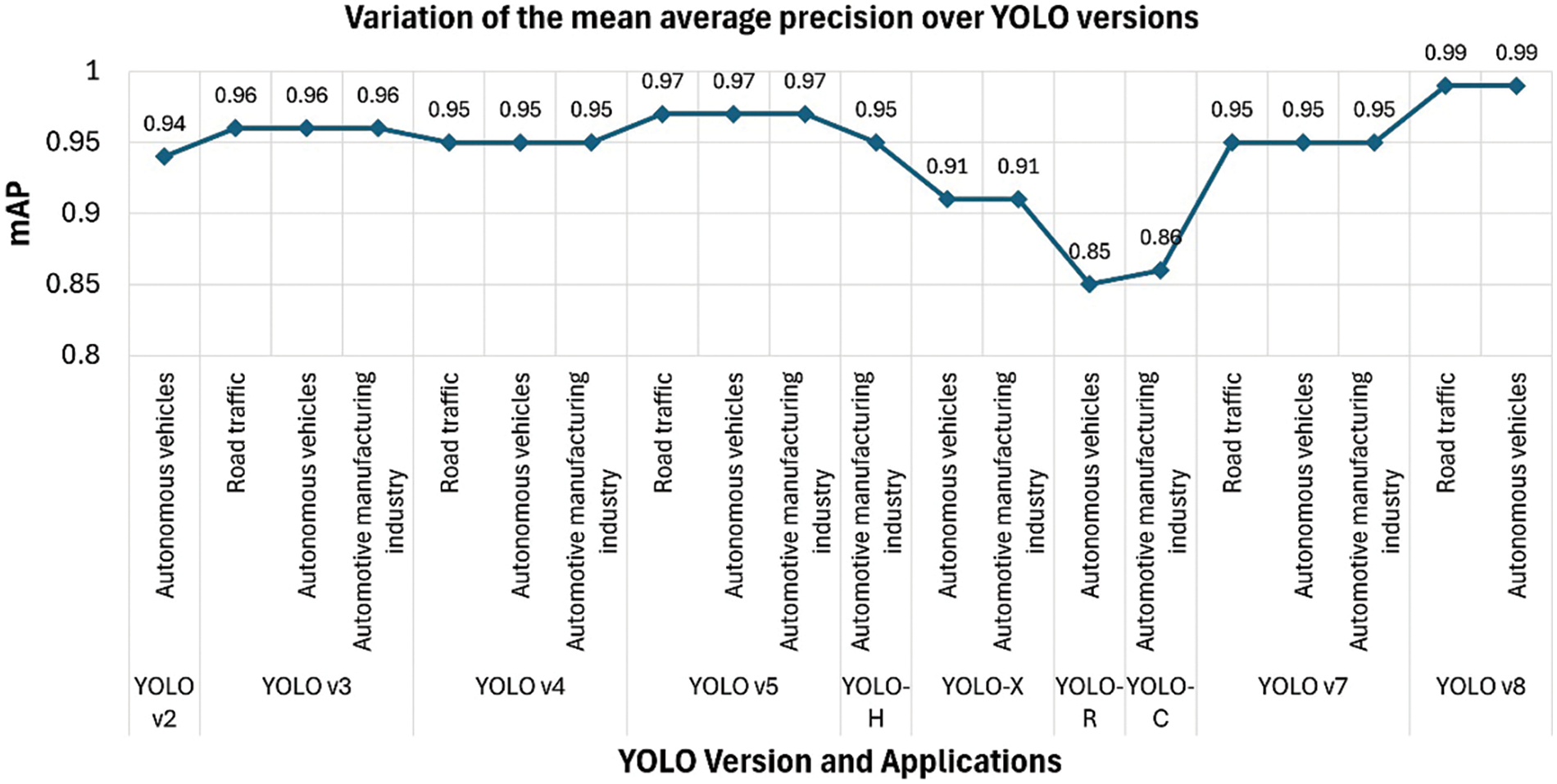

Fig. 13 depicts the variation of mAP across the reviewed YOLO versions, together with the applications in which each version was implemented. An average trendline, a statistical tool that smooths out fluctuations, is applied to the mAP values of the YOLO versions in chronological order. The trend fluctuates but turns upward with YOLO v7 and continues to rise with YOLO v8, approaching the maximum mAP of 1.0 (100%) for real-time object detection in the automotive field. Thus, among the reviewed versions, YOLO v8 achieved the best accuracy in real-time automotive object detection.
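A moving-average trendline of period n replaces each point, from the n-th onward, with the mean of the n most recent values. A minimal sketch, assuming a period of 2; the mAP numbers in the example are placeholders, not the values reported in Table 4 or Fig. 13.

```python
from typing import List, Sequence


def moving_average(values: Sequence[float], period: int = 2) -> List[float]:
    """Trailing moving average; the first period-1 points have no trend value."""
    if period < 1:
        raise ValueError("period must be >= 1")
    return [
        sum(values[i - period + 1 : i + 1]) / period
        for i in range(period - 1, len(values))
    ]


if __name__ == "__main__":
    versions = ["v2", "v3", "v4", "v5"]
    placeholder_map = [0.6, 0.8, 0.7, 0.9]  # illustrative numbers only
    period = 2
    trend = moving_average(placeholder_map, period)
    # Each trend value is aligned with the last version in its window.
    for v, t in zip(versions[period - 1 :], trend):
        print(v, round(t, 2))
```

A larger period smooths more aggressively but also shortens the trendline, which matters when only a handful of versions are compared.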

Figure 13: Variation of the mAP over the YOLO versions reviewed

The second-best version of YOLO, and one that is widely used in the automotive field, is YOLO v5. Compared with previous versions, YOLO v5 offers a simpler architecture and training procedure, making it more accessible to researchers, especially those new to object detection. YOLO v5 builds on the strengths of its predecessors and incorporates advances in architecture and training techniques, yielding state-of-the-art accuracy and speed. As Fig. 13 and Table 4 show, its mAP is closer to that of YOLO v8 (0.99) than that of any other version.

Moreover, among the reviewed studies, YOLO v8 has not yet been used in automotive manufacturing applications, most of which rely on YOLO v5. YOLO v8 is currently used mainly in autonomous vehicle development rather than in other automotive applications.

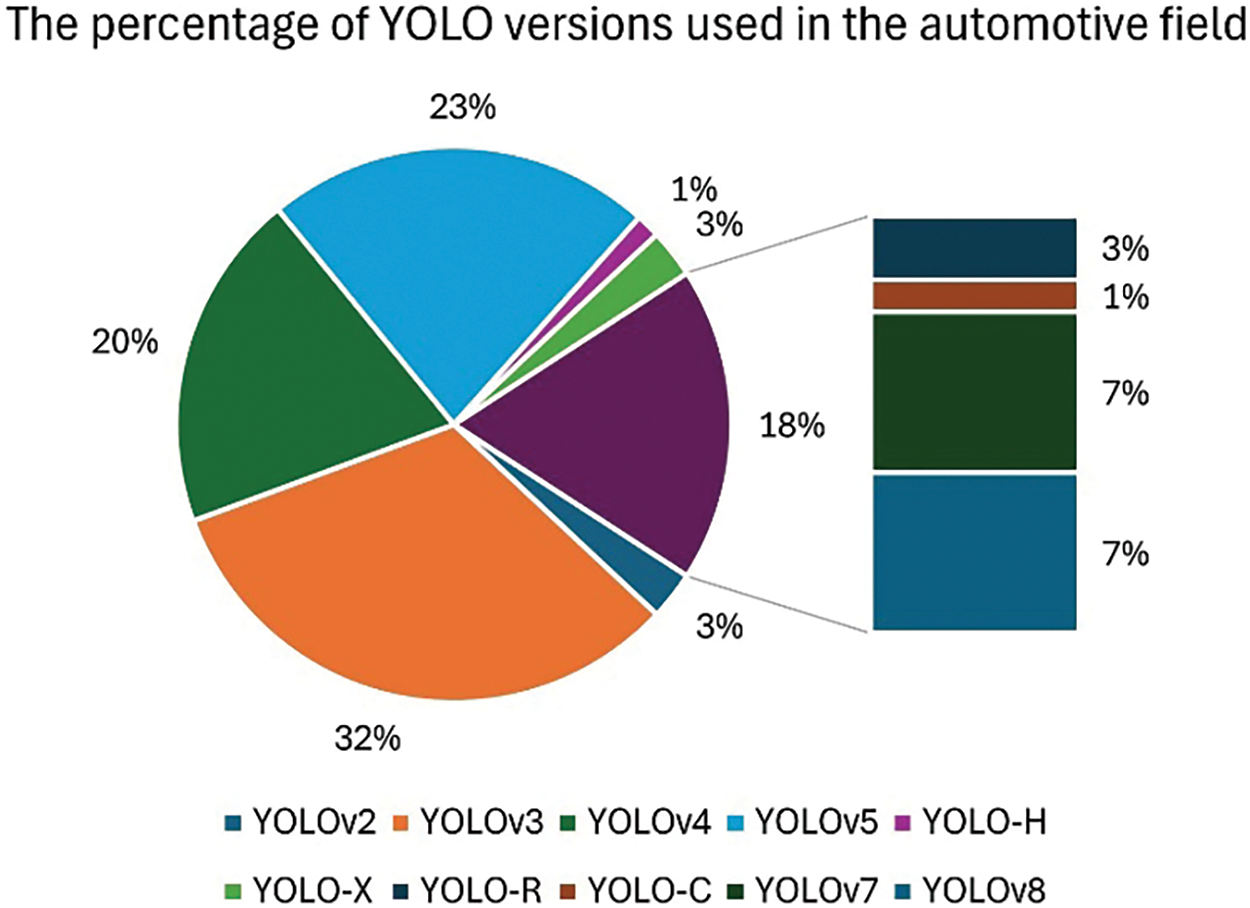

Fig. 14 presents a pie chart with the percentage of YOLO versions used in the review.

Figure 14: Percentage distribution of YOLO versions used in the review

YOLO v3 is the most frequently used version among the reviewed papers, followed by YOLO v5. By application, YOLO is used most often in road traffic, followed by autonomous vehicles.

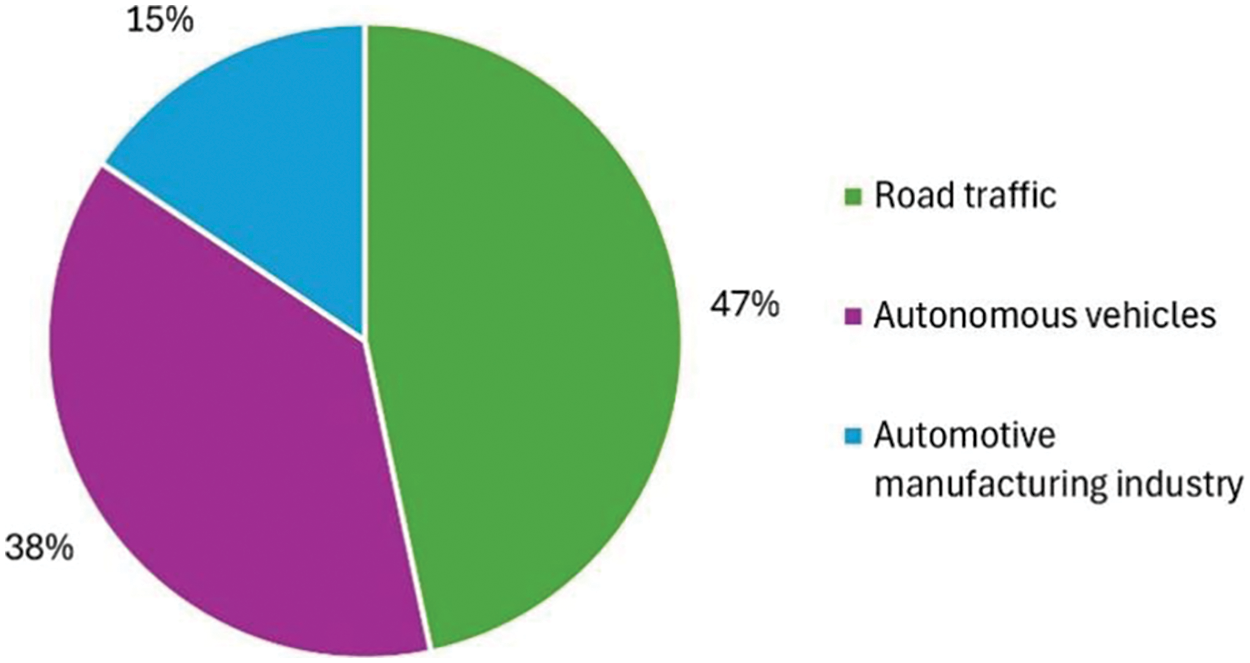

Fig. 15 depicts the distribution of the automotive applications discussed in the paper, which use YOLO for object detection.

Figure 15: Percentage distribution of the applications in the automotive field that use YOLO

In the scope of road traffic analysis, this review covers diverse areas, including general traffic monitoring, facilitating emergency vehicle movement, parking lot access control, and detecting traffic violations [32,34,35,145]. It addresses challenges such as identifying vehicle categories in dense traffic areas [34], improving small object detection in aerial images [41,42,119], and detecting vehicles in rural areas where stationary cameras are unavailable [43]. The YOLO algorithm and its variants, especially YOLO v5 and v8, are used extensively across these studies for tasks such as vehicle detection, traffic sign detection, and object tracking. Experimental results show that YOLO v5 and v8 offer improved accuracy, reduced computational time, and better real-time detection speed compared with the other versions (YOLO v2, v3, v4, v7, YOLO-H, YOLO-X, YOLO-R, and YOLO-C). Many of the proposed systems have practical applications in traffic management, emergency vehicle response, and traffic parameter estimation. The review also highlights innovative approaches, such as YOLO-ORE for object recognition with radar integration [47], traffic flow optimization through intelligent traffic light control [50], and real-time vehicle detection and classification for emergency vehicle prioritization [61]. Beyond vehicle detection, it also covers applications such as helmet detection for bike riders [62], traffic violation detection [64], pedestrian detection [69,70], and road pavement defect detection [72,146,147].

Besides road traffic, the YOLO algorithm is applied in various contexts related to autonomous vehicles and electric vehicle charging systems [73,74,128,133,143]. It is also used for traffic lane detection, navigation, and roadside obstacle detection [148,149]. One study addresses charging infrastructure for electric autonomous vehicles, using YOLO v8 for real-time charging port detection [141]. The development of autonomous vehicles has accelerated in recent years, and the industry's first concern is the safety of such vehicles and the protection of passengers throughout a route [110]. Autonomous vehicles integrate various sensors and video cameras that capture images from all angles around the vehicle. YOLO can process these images to identify and highlight obstacles in the autonomous vehicle's path [130], after which millimeter-wave radar can be used to measure the obstacle-blocking angle [150].

Regarding safety, several studies focus on improving safety on board autonomous vehicles, including collision detection and warning systems [73,74,148] and pedestrian tracking algorithms that mitigate potential collisions [128,149]. Research suggests that developing self-driving cars capable of navigating roads with improved markings and traffic management can enhance road conditions and vehicle control [133,149].

Regarding detection algorithms and sensors, the review discusses the importance of effective detection algorithms for autonomous vehicles, such as lane detection and speed breaker detection using YOLO v5 [133], and modifications such as LF-YOLO for faster detection [143]. To address the limitations of single sensors, studies propose sensor fusion models, such as camera and LiDAR fusion, to enhance detection accuracy [75]. Integrating YOLO with depth estimation methods such as Monocular Depth Estimation using Scale-invariant Depths (MiDaS) has been proposed to improve path-planning decisions in autonomous cars [151], and frameworks such as S-DAYOLO have been developed to address domain shift, improving object detection accuracy [82].
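Combining a YOLO box with a dense depth map is often done by taking a robust statistic of the depth values inside the box. A minimal sketch, assuming the depth map is already aligned with the image and stored as rows of floats; the median and the nearest-first ordering are illustrative choices, not the method of [151]. MiDaS predicts relative inverse depth (larger values mean closer), so its outputs can order objects by proximity but are not metric distances without further calibration.

```python
from statistics import median
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), pixel indices, x2/y2 exclusive


def box_depth(depth_map: Sequence[Sequence[float]], box: Box) -> float:
    """Median depth value inside a detection box (robust to background pixels)."""
    x1, y1, x2, y2 = box
    values = [v for row in depth_map[y1:y2] for v in row[x1:x2]]
    if not values:
        raise ValueError("empty box")
    return median(values)


def nearest_first(depth_map: Sequence[Sequence[float]], boxes: List[Box],
                  inverse_depth: bool = True) -> List[int]:
    """Box indices ordered from closest to farthest.

    Inverse-depth maps (larger = closer) sort descending by default;
    pass inverse_depth=False for maps that store distance directly.
    """
    scores = [box_depth(depth_map, b) for b in boxes]
    return sorted(range(len(boxes)), key=lambda i: scores[i], reverse=inverse_depth)


if __name__ == "__main__":
    depth = [[0.2, 0.2, 0.8, 0.8], [0.2, 0.2, 0.8, 0.8]]  # toy inverse-depth map
    print(nearest_first(depth, [(0, 0, 2, 2), (2, 0, 4, 2)]))  # [1, 0]
```

The median is preferred over the mean here because a bounding box usually contains some background pixels whose depth differs sharply from the object's.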

Pothole detection solutions based on YOLO v8 have been proposed to minimize accident risk [86], and the classification of potholes into different categories has been discussed as a way to improve road safety [87]. Some papers explore the use of YOLO in adverse weather conditions, achieving better detection accuracy than traditional methods [96,97]. For pedestrian behavior forecasting, an end-to-end pedestrian intention detection approach based on YOLO v3 has been developed [91].

This review also discusses the integration of YOLO in industrial environments for automotive development, covering manufacturing, materials inspection, quality control, and operator safety.