Open Access


ARTICLE

Integrating Transformer and Bidirectional Long Short-Term Memory for Intelligent Breast Cancer Detection from Histopathology Biopsy Images

1 Department of Computer Science, College of Computer Science, King Khalid University, Abha, 61451, Saudi Arabia

2 College of Science and Arts in Rijal Alma, King Khalid University, Abha, 61451, Saudi Arabia

3 Department of Information Technology, Muffakham Jah College of Engineering and Technology, Hyderabad, 500034, India

4 Department of Computer Science and Business Systems, Rajalakshmi Engineering College, Thandalam, 602105, India

* Corresponding Author: Prasanalakshmi Balaji. Email:

(This article belongs to the Special Issue: Intelligent Medical Decision Support Systems: Methods and Applications)

Computer Modeling in Engineering & Sciences 2024, 141(1), 443-458. https://doi.org/10.32604/cmes.2024.053158

Received 25 April 2024; Accepted 03 July 2024; Issue published 20 August 2024

Abstract

Breast cancer is a significant threat to the global population, affecting not only women but the population as a whole. With recent advancements in digital pathology, hematoxylin and eosin (H&E) stained images provide enhanced clarity for examining the microscopic features of breast tissue based on their staining properties. Early cancer detection speeds up the therapeutic process, thereby increasing survival rates. Analysis by medical professionals, especially pathologists, is time-consuming and challenging, which creates a need for automated breast cancer detection systems. Emerging artificial intelligence platforms, especially deep learning models, play an important role in image diagnosis and prediction. Initially, the histopathology biopsy images are taken from standard data sources. Next, the gathered images are given as input to the Multi-Scale Dilated Vision Transformer, which extracts the essential features. Subsequently, the features are fed to a Bidirectional Long Short-Term Memory (Bi-LSTM) network to classify breast cancer. The efficacy of the model is evaluated using diverse metrics. Compared with other methods, the proposed model achieves impressive detection results.

Breast tumors pose serious health risks for women. Breast cancer is the second-deadliest disease, and the number of cases rises every year [1]. Because of a lack of infrastructure and treatment resources, the death rate from breast cancer is exceptionally high in some countries. The likelihood of recovery depends on the stage of the disease, which is determined from the breast biopsy report: tissue is extracted for pathological analysis to determine whether the sample is malignant [2]. Breast ultrasound imaging, magnetic resonance imaging, mammograms, and frequent checkups help to detect breast cancer early, and early identification lowers the likelihood of death from breast cancer. Histopathology image analysis is an effective method for identifying breast cancer and is used to find malignant tumors. However, manually analyzing histopathological images is time-consuming and vulnerable to human error [3]. Artificial neural networks have been used to identify cancer in breast histopathology images, and Computer Aided Diagnosis (CAD) systems are commonly used for the initial identification of breast cancer [4]. Feature extraction and nuclei segmentation are essential steps for identifying breast cancer from histopathological images [5].

Owing to their transfer learning ability and automated feature extraction, deep convolutional neural networks (CNNs) have attracted considerable attention. Among Transformer-based techniques, the Swin Transformer has shown better feature extraction performance when combined with U-Net [6]. Uncontrolled growth of lumps or tissue inside an organ is considered cancerous, and pathologists use histopathology images to identify such abnormal tissue growth [7]. However, manual observation of these images makes cancer identification slow. With the help of CAD, the structures and cells in histopathology images can be identified early [8]. The complex structure of tissue in histopathological images calls for deep CNNs to extract information from the raw image, and training deep CNNs requires more memory and better hardware [9]. Additionally, fine-tuning the critical parameters is difficult and time-consuming when a deep CNN is trained for automatic feature extraction, and information is lost when images are resized to fit the CNN's input layer. The high implementation cost of digital pathology is also a major challenge [10]; as a result, obtaining a consistent, comprehensive dataset of histopathology images is difficult. Hence, a deep learning-based breast cancer recognition framework is proposed in this work.

To fill the research gap identified in existing methods, the main contributions of the proposed deep learning-based approach for identifying breast cancer in histopathology biopsy images are listed below:

• To design a deep learning-based breast cancer recognition framework that uses histopathology biopsy images to detect breast cancer in its early stages so that appropriate treatment can begin.

• To develop a multi-scale dilated vision transformer that extracts the necessary features from the input biopsy images.

• To use a Bidirectional Long Short-Term Memory (BiLSTM) network to detect breast cancer accurately.

• To compare the outcome with other classification techniques to assess the potential of the proposed deep learning-based breast cancer detection model.

The rest of this paper is organized as follows. Section 2 reviews existing work on breast cancer detection and discusses the merits and limitations of prior deep learning-based breast cancer recognition techniques. Section 3 explains the proposed breast cancer identification technique and the histopathology biopsy image collection. Section 4 describes feature extraction using the multi-scale dilated vision transformer and detection using the BiLSTM. Section 5 presents the results and discussion of the proposed breast cancer identification model, and Section 6 concludes the paper.

In 2023, Ashurov et al. [11] introduced a breast cancer detection method that combines attention mechanisms with five transfer learning models (Xception, VGG16, ResNet50, MobileNet, and DenseNet121), evaluated under different magnification levels. Their method outperformed existing methodologies in breast cancer detection.

In 2022, Abbasniya et al. [12] investigated deep feature transfer learning-based feature extraction for CAD systems. For the feature extraction stage, they evaluated 16 different pre-trained networks; among the validated CNN models, Inception-ResNet-v2, which combines the benefits of the inception and residual networks, exhibited the best feature extraction ability for breast cancer histopathology images. In the classification phase, the combination of LightGBM, XGBoost, and CatBoost offered better accuracy. The technique was validated on the BreakHis dataset, which comprises 7909 benign and malignant histopathology images at four magnification factors. The authors focused on the classification stage because most studies on automated breast cancer diagnosis consider only the feature extraction phase.

The soft-voting ensemble obtained better results by combining the strengths of LightGBM, XGBoost, and CatBoost.
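Soft voting averages the class probabilities predicted by several models and selects the class with the highest mean, whereas hard voting counts each model's predicted label. The sketch below is a generic illustration of soft voting, not the implementation used in [12]; the probability values are hypothetical. It shows a case where hard majority voting would choose benign (two of three models) but soft voting chooses malignant because one model is very confident.

```python
"""Soft-voting ensemble sketch: average per-model class probabilities."""
from typing import List, Optional, Sequence


def soft_vote(model_probs: Sequence[Sequence[float]],
              weights: Optional[Sequence[float]] = None) -> List[float]:
    """Return the (weighted) mean of each model's class-probability vector.

    model_probs[m][c] is the probability that model m assigns to class c.
    """
    n_models = len(model_probs)
    n_classes = len(model_probs[0])
    if weights is None:
        weights = [1.0] * n_models  # equal weights = plain averaging
    total = sum(weights)
    return [
        sum(w * probs[c] for w, probs in zip(weights, model_probs)) / total
        for c in range(n_classes)
    ]


def predict(model_probs: Sequence[Sequence[float]]) -> int:
    """Pick the class index with the highest averaged probability."""
    avg = soft_vote(model_probs)
    return max(range(len(avg)), key=avg.__getitem__)


# Hypothetical [P(benign), P(malignant)] outputs for one image from three
# boosted models (e.g. LightGBM, XGBoost, CatBoost); the values are made up.
probs = [[0.55, 0.45], [0.52, 0.48], [0.10, 0.90]]
print(soft_vote(probs))  # averaged probabilities, malignant is about 0.61
print(predict(probs))    # 1 -> malignant, although 2 of 3 models say benign
```

Passing unequal `weights` turns this into a weighted soft vote, which is a common variant when some base models are known to be more reliable.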

In 2016, Abdel-Zaher et al. [13] developed a computer-aided diagnosis model for breast cancer detection that uses an unsupervised deep belief network followed by a supervised backpropagation path. The backpropagation path is trained with the Levenberg-Marquardt learning function, and its weights are initialized from the deep belief network path.
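For reference, the general Levenberg-Marquardt weight update is shown below. It is the textbook form; the exact variant and damping schedule used in [13] are not specified here.

```latex
\mathbf{w}_{k+1} = \mathbf{w}_k - \left( \mathbf{J}_k^{\top} \mathbf{J}_k + \mu_k \mathbf{I} \right)^{-1} \mathbf{J}_k^{\top} \mathbf{e}_k
```

Here e_k is the vector of output errors at iteration k, J_k is the Jacobian of e_k with respect to the weights w_k, I is the identity matrix, and μ_k is a damping factor. A large μ_k yields small, gradient-descent-like steps, while μ_k approaching 0 recovers the Gauss-Newton step.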

In 2022, Łowicki et al. [14] created a deep artificial neural network for identifying breast tumors in histopathological images. The model is intended to assist doctors with diagnosis, support sustainable health, and reduce doctors' workload and time, which could enhance the quality of healthcare. The input samples were collected from 163 whole slide image (WSI) scans of breast histopathology. A ResNet18 backbone, built from residual blocks, served as the model's foundation. In the final validation on the test set, the network detected cancer tissue with better F1-score, sensitivity, and accuracy, and the overall model was more effective than existing mechanisms.

In 2022, Yan et al. [15] introduced a nuclei-guided network for grading invasive ductal carcinoma pathological images. Guided by nuclei-related features, the network learns fine-grained features to grade invasive ductal carcinoma (IDC) images.

Breast cancer is one of the most significant health issues among women. Early diagnosis improves treatment and survival. Histopathological image analysis is an effective way to diagnose cancer automatically, and deep learning methods can extract high-level abstract features from the image automatically. Several works have been developed to recognize breast cancer; their merits and demerits are summarized in Table 1. Reference [11] uses very small receptive fields, providing faster and more accurate detection; however, it needs more time to train its parameters and requires a large model size. Reference [12] offers better model adaptation and contains multiple-sized convolutional filters; however, it is more expensive and time-consuming and has complex structures inside its modules. Reference [13] needs low computational capability but requires an adequate number of nuclei. Reference [14] can perform tasks that linear programs cannot and can be applied across applications, but it requires training to operate and has a high processing time. Reference [15] is accurate at image recognition and does not require human supervision, but it needs a large dataset to train the neural network and tends to be slower for some operations. These challenges motivate us to design an intelligent detection model for breast cancer.

3 Architectural View of Histopathology Biopsy Image-Based Breast Cancer Detection with Elucidation of Dataset

3.1 Implemented Breast Cancer Detection Approach

Many deep learning systems are employed for breast cancer detection, but they have several limitations. Artificial Neural Network (ANN)-based breast cancer recognition models take a long time to train, and ANNs also have difficulty handling high-dimensional data. Some models suffer from poor parameter learning, which results in a poor structure because of size variations in the sample data. Moreover, training deep learning-based breast cancer identification models requires a large amount of memory and powerful GPUs, and labeled medical image databases for training deep learning networks are costly. Most deep learning-based models for classifying benign and malignant cases produce many false positives. The graphical representation of the designed deep learning-based breast cancer detection model is shown in Fig. 1.

Figure 1: Pictorial representation of the designed deep learning-based breast cancer detection model

An automated deep learning-based breast cancer identification framework using histopathology biopsy images is developed to identify breast cancer accurately in its early stages. First, the histopathology biopsy images required for the research are collected from standard data sources. Then, the necessary features are extracted from the gathered images using a multi-scale dilated vision transformer. After feature extraction, the features are passed to the Bi-LSTM for breast cancer classification. Finally, the simulation results of the proposed model are compared with those of existing classification models to verify its performance.

Dataset (“BreaKHis 400X”): The breast cancer histopathology images are taken from the dataset at https://www.kaggle.com/datasets/forderation/breakhis-400x (accessed on 21 June 2023). This dataset consists of both malignant and benign breast cancer biopsy images, organized into separate folders for training and testing. The total size of the dataset is 842 MB, and it contains 1693 files in total. Sample histopathology biopsy images for breast cancer detection are shown in Fig. 2.

Figure 2: Sample histopathology biopsy images for breast cancer detection

4 Multi-Scale Dilated Vision Transformer-Based Feature Extraction and BiLSTM-Based Breast Cancer Detection from Biopsy Images

The proposed methodology uses whole slide images prepared by pathologists with different staining approaches after fixation and sectioning. These slides are then digitized using a whole slide scanner. The digitized images are available in open-source databases and are used here with a multi-scale dilated vision transformer to extract the required features, which are then processed with the Bi-LSTM model to detect malignancy. Fig. 3 shows the complete process of the proposed methodology.

Figure 3: Integration of digital pathology workflow with proposed method

4.1 Feature Extraction through Multi-Scale Dilated Vision Transformer

Feature extraction from the biopsy images is carried out using the multi-scale dilated vision transformer [16], which takes the input images and retrieves the relevant information from them while preserving the original information. The dilation mechanism is used to capture spatial dependencies. The formulation of the dilation layer is given in Eq. (1).

Here, the dilated factor is specified as

A multi-scale vision transformer consists of skip connections, key-value pooling, query pooling, channel expansion layers, and scale stages.

Each scale stage consists of a number of transformer blocks with the same channel dimension operating at the same scale. In this stage, complex features are processed, and the spatiotemporal resolution decreases. The channel expansion layer then expands the channel dimension of the scale stage's output. If the channel dimension is

Figure 4: Architectural representation of multi-scale dilated vision transformer-based feature extraction

Fig. 4 shows a sample scaling process of the input blocks: input blocks of dimension N × N are processed at level 1, scaled to N/2 at the second level, and further scaled to N/4 at the next level. This illustrates the multi-scale process of the dilated vision transformer.
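The two ingredients described in this section, dilation and multi-scale stages, can be illustrated with a small, framework-free Python sketch. `dilated_conv1d` shows how a dilation rate r spreads the taps of a k-tap kernel apart so that it covers k + (k - 1)(r - 1) input positions; this is the standard dilated convolution, and since Eq. (1) is not reproduced here, using it as the dilation formulation is an assumption. `stage_shapes` reproduces the N → N/2 → N/4 pattern of Fig. 4, assuming that each stage transition halves the token grid side through pooling and doubles the channel width, as in MViT-style designs [16]. The grid size 56 and channel width 96 in the usage lines are illustrative values only.

```python
"""Illustrative sketch: dilated sampling and multi-scale stage shapes."""
from typing import List, Sequence, Tuple


def effective_kernel(k: int, rate: int) -> int:
    """Number of input positions spanned by a k-tap kernel with dilation `rate`."""
    return k + (k - 1) * (rate - 1)


def dilated_conv1d(x: Sequence[float], w: Sequence[float], rate: int) -> List[float]:
    """'Valid' dilated convolution: y[i] = sum_j w[j] * x[i + rate * j]."""
    span = effective_kernel(len(w), rate)
    return [
        sum(wj * x[i + rate * j] for j, wj in enumerate(w))
        for i in range(len(x) - span + 1)
    ]


def stage_shapes(n: int, channels: int, levels: int = 3) -> List[Tuple[int, int]]:
    """(grid side, channel width) for each scale stage.

    Assumption: every stage transition pools the token grid by 2 per side
    (query pooling) and doubles the channel width (channel expansion).
    """
    shapes = []
    for _ in range(levels):
        shapes.append((n, channels))
        n, channels = n // 2, channels * 2
    return shapes


print(effective_kernel(3, 2))                                 # 5
print(dilated_conv1d([1, 2, 3, 4, 5, 6], [1, 1, 1], rate=2))  # [9, 12]
print(stage_shapes(56, 96))  # [(56, 96), (28, 192), (14, 384)]
```

With `rate=1` the function reduces to an ordinary convolution; raising the rate enlarges the receptive field without adding weights, which is the usual motivation for dilation.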

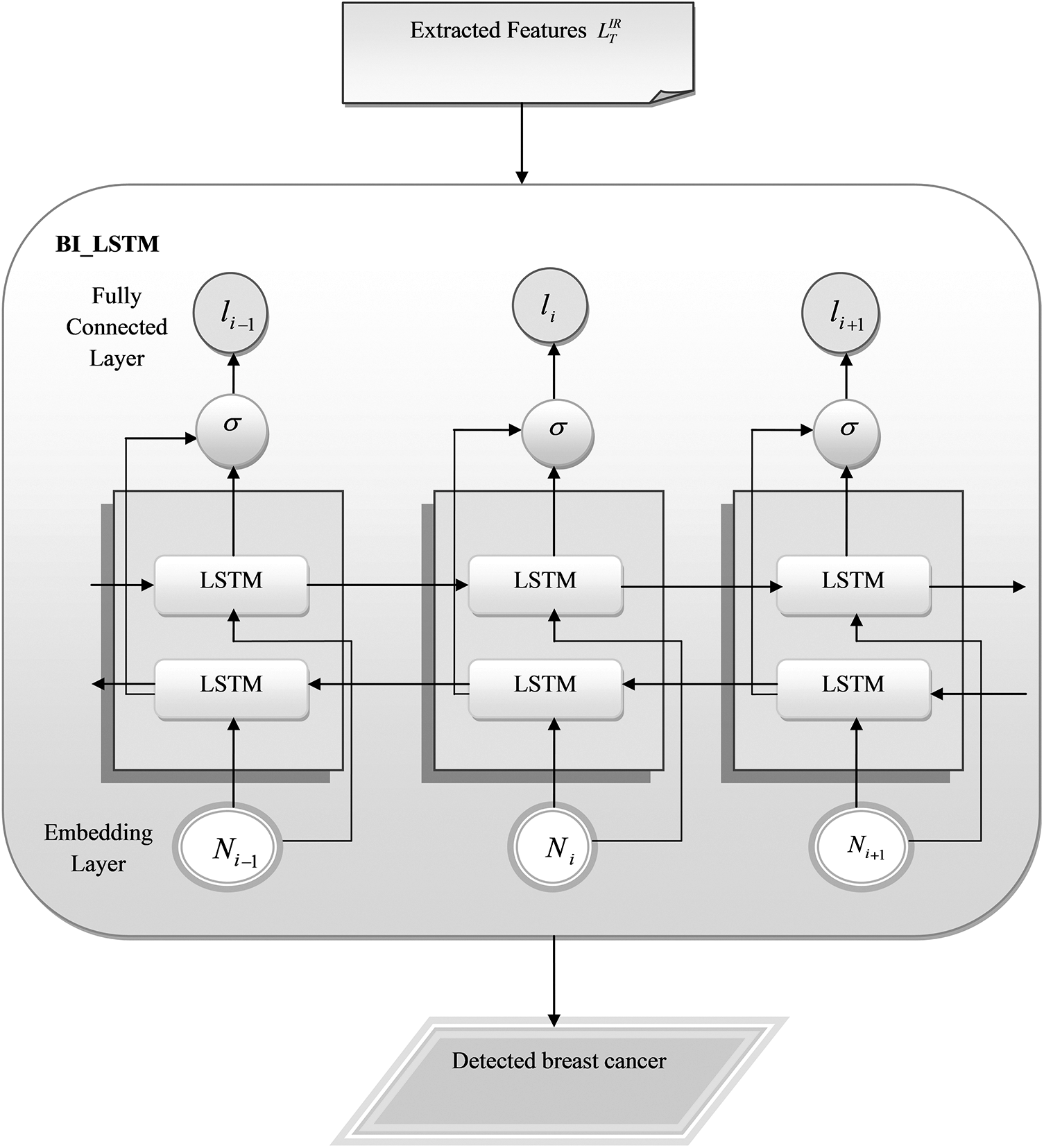

The BiLSTM [17] extends the LSTM so that it can learn from both future and previous time steps. The LSTM gate mechanism effectively mitigates the vanishing gradient problem. The forget gate is the first layer in each LSTM cell; it applies a sigmoid function whose output lies between 0 and 1. A value close to 1 indicates that the information is retained (remembered), whereas a value close to 0 indicates that it is forgotten. The function of the forget gate is expressed in Eq. (2).

Here, the forget gate is indicated by the term

In the above equation, the term

Here, the output gate is specified as

4.3 Breast Cancer Detection Using BiLSTM

The feature retrieved images

Figure 5: Schematic representation of the suggested breast cancer identification using BiLSTM
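The gate description above and the classification step of this section can be sketched in plain Python as follows. This is an untrained, illustrative model under stated assumptions: the feature sequence length (4), feature width (8), hidden size (16), and the single sigmoid output unit are hypothetical choices, and the weights are random. Each direction runs a standard LSTM cell (forget, input, candidate, and output gates); the final forward and backward hidden states are concatenated and mapped to a malignancy probability. A sigmoid output is used for the two-class case, which is equivalent to a two-unit softmax. A practical implementation would use a deep learning framework and train the weights by backpropagation.

```python
"""Minimal BiLSTM classifier sketch (pure Python, untrained random weights)."""
import math
import random
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Vector, v: Sequence[float]) -> Vector:
    """Compute W @ v + b."""
    return [sum(wij * vj for wij, vj in zip(row, v)) + bi for row, bi in zip(W, b)]


class LSTMCell:
    """One LSTM cell with forget (f), input (i), candidate (c), output (o) gates."""

    def __init__(self, input_size: int, hidden_size: int, rng: random.Random):
        self.hidden_size = hidden_size
        cols = input_size + hidden_size  # each gate reads [x_t, h_{t-1}]

        def matrix() -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                    for _ in range(hidden_size)]

        self.W: Dict[str, Matrix] = {g: matrix() for g in "fico"}
        self.b: Dict[str, Vector] = {g: [0.0] * hidden_size for g in "fico"}

    def gate(self, name: str, z: Vector, act: Callable[[float], float]) -> Vector:
        return [act(v) for v in affine(self.W[name], self.b[name], z)]

    def step(self, x: Sequence[float], h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = list(x) + h                    # concatenation [x_t, h_{t-1}]
        f = self.gate("f", z, sigmoid)     # forget gate: near 1 keep, near 0 forget
        i = self.gate("i", z, sigmoid)     # input gate
        g = self.gate("c", z, math.tanh)   # candidate cell state
        o = self.gate("o", z, sigmoid)     # output gate
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, seq: Sequence[Sequence[float]]) -> Vector:
        h, c = [0.0] * self.hidden_size, [0.0] * self.hidden_size
        for x in seq:
            h, c = self.step(x, h, c)
        return h  # final hidden state


class BiLSTMClassifier:
    """Forward and backward LSTMs over a feature sequence, then a sigmoid unit."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0):
        rng = random.Random(seed)
        self.fwd = LSTMCell(input_size, hidden_size, rng)
        self.bwd = LSTMCell(input_size, hidden_size, rng)
        self.w_out = [rng.uniform(-0.1, 0.1) for _ in range(2 * hidden_size)]
        self.b_out = 0.0

    def predict_proba(self, seq: Sequence[Sequence[float]]) -> float:
        h_fwd = self.fwd.run(seq)                  # reads t = 1..T
        h_bwd = self.bwd.run(list(reversed(seq)))  # reads t = T..1
        h = h_fwd + h_bwd                          # concatenate both directions
        return sigmoid(sum(w * v for w, v in zip(self.w_out, h)) + self.b_out)


# Hypothetical input: 4 feature tokens of width 8 from the feature extractor.
data_rng = random.Random(1)
features = [[data_rng.gauss(0, 1) for _ in range(8)] for _ in range(4)]
model = BiLSTMClassifier(input_size=8, hidden_size=16)
p = model.predict_proba(features)
print(f"P(malignant) = {p:.3f} (untrained, so not meaningful)")
```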

The deep learning-based breast cancer recognition technique was implemented in Python. The performance of the developed BiLSTM-based breast cancer detection model is compared with other classification techniques to assess the overall potential of the designed model. VGG16 [18], CNN [15], ResNet50 [19], and LSTM [20] were used as the comparison classifiers. The existing methods and the proposed method were tested with learning percentages from 35% to 85% in increments of 10%. The models trained on 85% of the data were found to be overtrained, whereas 75% training data gave satisfactory results for all methods; hence, the results discussed below are reported for 75% training data.
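The learning-percentage protocol can be sketched as follows. This is a hypothetical illustration: the file names are placeholders for a list of the same size as the 1693 dataset files, and the split is a simple shuffled split rather than a class-stratified one, which the text does not specify.

```python
"""Sketch of the learning-percentage sweep (assumed shuffled split)."""
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def learning_percentages(start: int = 35, stop: int = 85, step: int = 10) -> List[int]:
    """Training percentages from start to stop (inclusive) in fixed steps."""
    return list(range(start, stop + 1, step))


def train_test_split(items: Sequence[T], train_pct: int,
                     seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle, then put the first train_pct% of items in the training set."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * train_pct // 100
    return shuffled[:n_train], shuffled[n_train:]


print(learning_percentages())  # [35, 45, 55, 65, 75, 85]
files = [f"img_{k:04d}.png" for k in range(1693)]  # hypothetical placeholder names
train, test = train_test_split(files, 75)
print(len(train), len(test))   # 1269 424
```

A stratified split, which keeps the benign/malignant ratio equal in both subsets, is usually preferable for medical data with class imbalance.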

Both positive and negative metrics are used to evaluate the effectiveness of the proposed breast cancer recognition model using histopathology biopsy images. The formulas for False Discovery Rate (FDR), False Positive Rate (FPR), F1-score, False Negative Rate (FNR), specificity, Matthews Correlation Coefficient (MCC), sensitivity, precision, Negative Predictive Value (NPV) and accuracy are given in Eqs. (8)–(17).
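Since Eqs. (8)–(17) are not reproduced here, the sketch below computes the ten listed metrics with their standard definitions from the confusion-matrix counts TP, TN, FP, and FN. The counts in the usage lines are hypothetical, and the function assumes that no denominator is zero.

```python
"""Standard binary-classification metrics from confusion-matrix counts."""
import math
from typing import Dict


def metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Assumes every denominator below is non-zero."""
    sensitivity = tp / (tp + fn)          # recall, true positive rate
    specificity = tn / (tn + fp)          # true negative rate
    precision = tp / (tp + fp)            # positive predictive value
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "npv": tn / (tn + fn),
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "mcc": (tp * tn - fp * fn) / mcc_den,
        "fpr": fp / (fp + tn),            # = 1 - specificity
        "fnr": fn / (fn + tp),            # = 1 - sensitivity
        "fdr": fp / (fp + tp),            # = 1 - precision
    }


# Hypothetical counts, for illustration only.
for name, value in metrics(tp=90, tn=80, fp=10, fn=20).items():
    print(f"{name:<12}{value:.4f}")
```

The comments on the last three entries show why FPR, FNR, and FDR carry no information beyond specificity, sensitivity, and precision.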

Here, the true negative and positive values are referred to by the terms

5.3 Performance Estimation among Classifiers

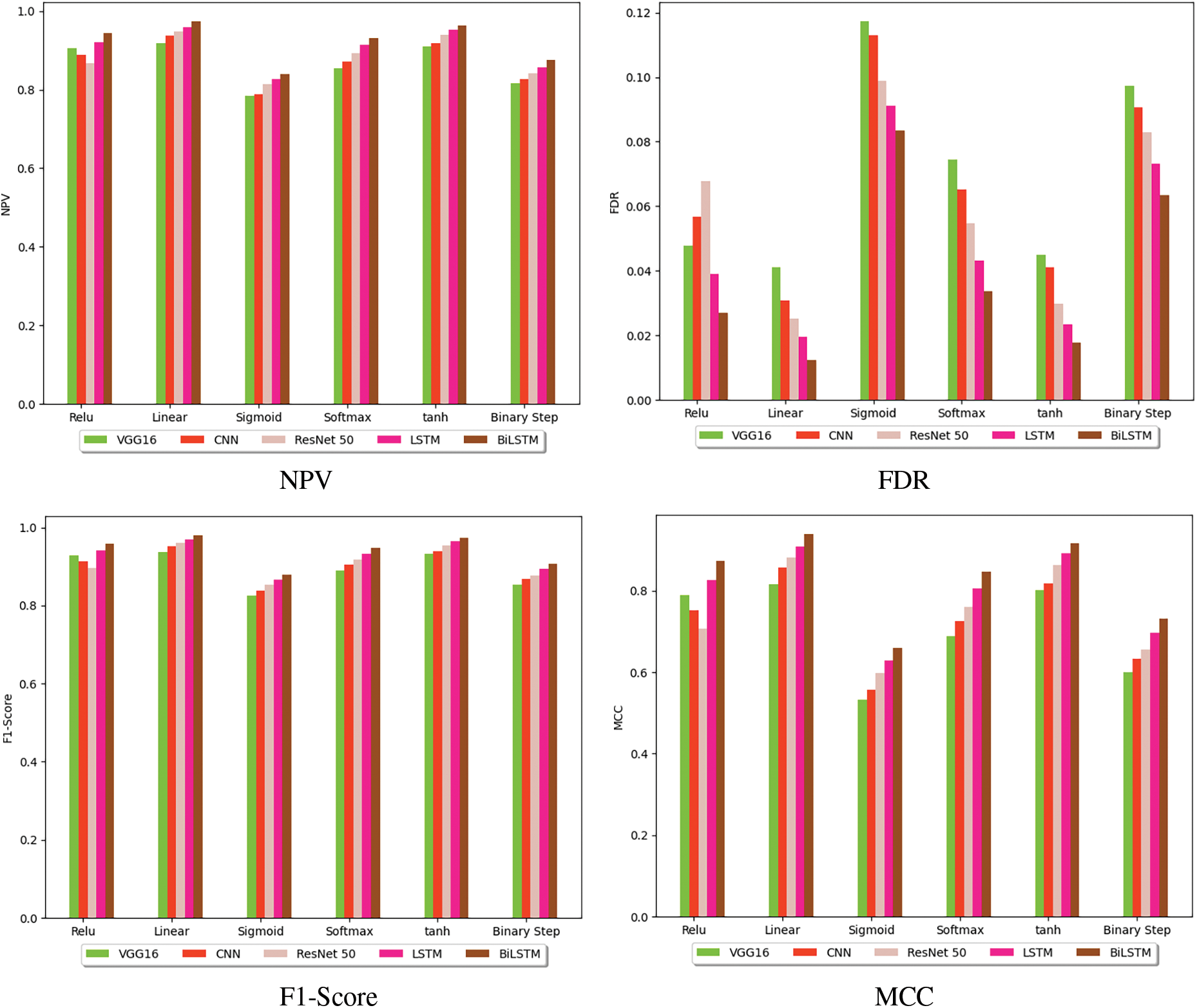

The performance of the proposed BiLSTM-based breast cancer identification model using histopathology images is compared with other classification models. Fig. 6 shows the performance of the BiLSTM-based approach when the activation function is varied. With the softmax activation function, the F1-score of the BiLSTM-based model is 5.55%, 4.39%, 3.26% and 2.15% higher than that of VGG16, CNN, ResNet50 and LSTM, respectively. The experimental outcomes show that the sensitivity, precision, F1-score, specificity, NPV, FNR and accuracy of the proposed model are better than those of the existing methods.

Figure 6: Performance analysis of the presented deep learning-based breast cancer identification model among various classification methods

The BiLSTM-based breast cancer detection approach and the other classifiers are further evaluated using the receiver operating characteristic (ROC) curve shown in Fig. 7. At a false positive rate of 0.4, the BiLSTM-based model is 16.8%, 15.38%, 9.75% and 8.43% better than VGG16, CNN, ResNet50 and LSTM, respectively. Thus, the introduced BiLSTM-based model considerably reduces error rates.
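The sketch below shows how a ROC curve, and a reading taken at a fixed false positive rate such as the one above, can be obtained from classifier scores. It is a generic illustration with hypothetical labels and scores, not the evaluation code behind Fig. 7. Tied scores are grouped so that each threshold produces one point, the area under the curve (AUC) is computed with the trapezoidal rule, and both classes are assumed to be present.

```python
"""ROC curve, AUC and TPR at a fixed FPR from scores (generic sketch)."""
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (FPR, TPR)


def roc_points(labels: Sequence[int], scores: Sequence[float]) -> List[Point]:
    """Sweep the decision threshold from the highest score to the lowest."""
    pos = sum(labels)                 # assumes at least one positive
    neg = len(labels) - pos           # and at least one negative
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    points: List[Point] = [(0.0, 0.0)]
    tp = fp = 0
    for k, (score, label) in enumerate(pairs):
        tp += label
        fp += 1 - label
        # Emit one point per distinct score so that tied samples move together.
        if k == len(pairs) - 1 or pairs[k + 1][0] != score:
            points.append((fp / neg, tp / pos))
    return points


def auc(points: Sequence[Point]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


def tpr_at_fpr(points: Sequence[Point], max_fpr: float) -> float:
    """Best TPR achievable without exceeding max_fpr."""
    return max(tpr for fpr, tpr in points if fpr <= max_fpr)


labels = [1, 1, 0, 1, 0, 0]               # hypothetical: 1 = malignant
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]   # hypothetical model scores
pts = roc_points(labels, scores)
print(f"AUC = {auc(pts):.3f}")            # AUC = 0.889
print(tpr_at_fpr(pts, 0.4))               # 1.0
```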

Figure 7: ROC estimation of the recommended deep learning-based breast cancer recognition model

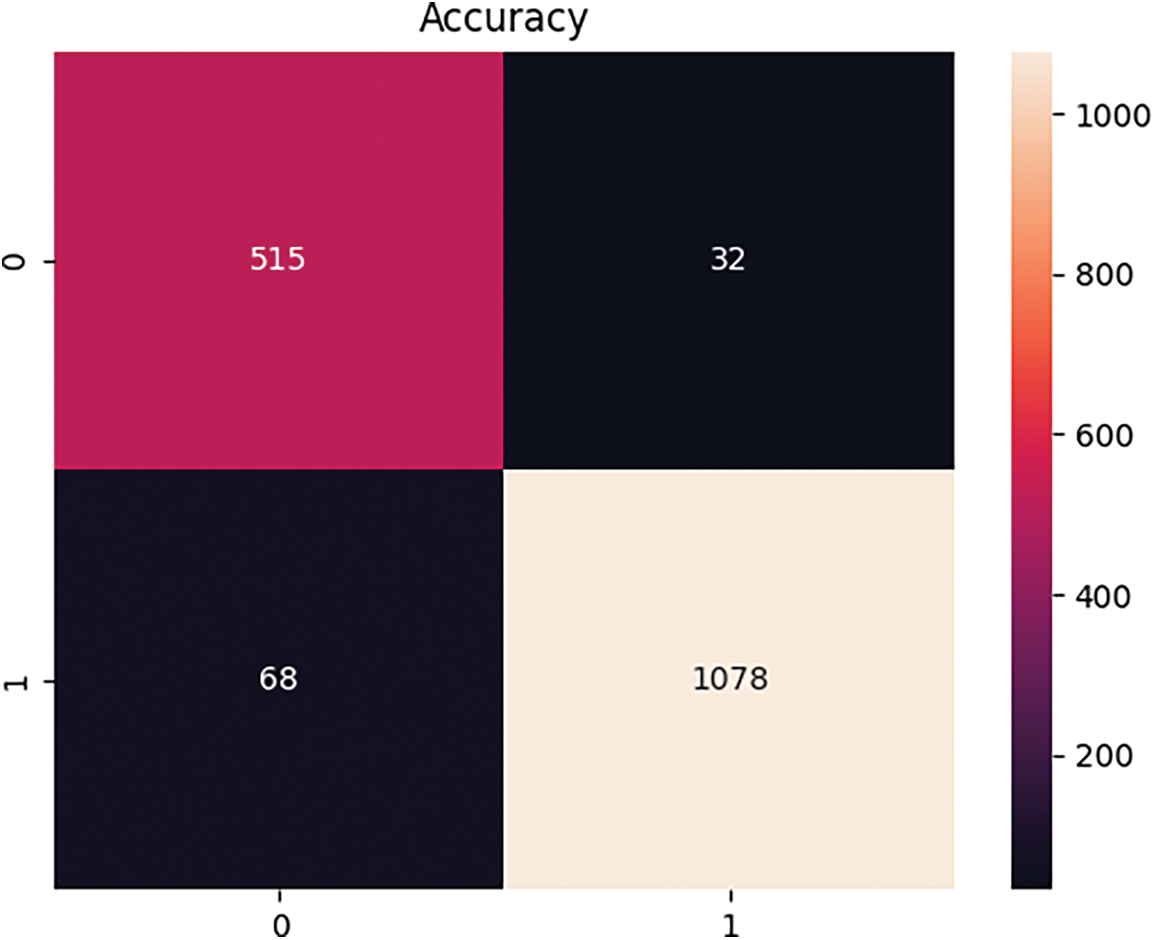

The performance of the developed breast cancer identification framework using histopathology biopsy images is also evaluated with a confusion matrix, which tabulates the predicted classes against the actual classes. Fig. 8 shows the confusion matrix of the developed BiLSTM-based breast cancer recognition approach.
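As a minimal illustration of how such a matrix is filled, the sketch below counts benign (0) and malignant (1) predictions using hypothetical labels. It follows the common convention of actual classes in rows and predicted classes in columns (the same layout as scikit-learn's `confusion_matrix`), so the cells read [[TN, FP], [FN, TP]]. Whether Fig. 8 uses the same orientation is not stated.

```python
"""2x2 confusion matrix for benign (0) vs. malignant (1) predictions."""
from typing import List, Sequence


def confusion_matrix(actual: Sequence[int], predicted: Sequence[int]) -> List[List[int]]:
    """Rows = actual class, columns = predicted class: [[TN, FP], [FN, TP]]."""
    cm = [[0, 0], [0, 0]]
    for a, p in zip(actual, predicted):
        cm[a][p] += 1
    return cm


actual = [1, 1, 0, 1, 0, 0, 1, 0]     # hypothetical ground truth
predicted = [1, 0, 0, 1, 0, 1, 1, 0]  # hypothetical model output
(tn, fp), (fn, tp) = confusion_matrix(actual, predicted)
print(tn, fp, fn, tp)  # 3 1 1 3
```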

Figure 8: Convergence analysis of the suggested deep learning-based breast cancer detection model

5.6 Performance Estimation among Classifiers

Table 2 compares the performance of the BiLSTM-based breast cancer recognition approach using histopathology biopsy images with various classifiers. The specificity of the BiLSTM-based model is 6.17%, 3.90%, 2.69% and 1.60% higher than that of VGG16, CNN, ResNet50 and LSTM, respectively. These results show that the proposed BiLSTM-based breast cancer detection method outperforms the existing techniques.

An automated deep learning-based breast cancer detection model was developed using histopathology biopsy images.

First, the histopathology biopsy images needed for the research were collected from standard data sources. Then, the necessary features were extracted from the gathered images using a multi-scale dilated vision transformer. After feature extraction, the Bi-LSTM was used to classify breast cancer. The experimental findings of the proposed model were compared with those of existing classification models to verify its performance. The accuracy of the Bi-LSTM-based breast cancer screening model was 6.11%, 4.04%, 2.74% and 1.41% higher than that of VGG16, CNN, ResNet50 and LSTM, respectively. The Bi-LSTM-based detection model provided accurate detection results and reduced false positives.

A Matthews correlation coefficient close to 1 indicates complete agreement between the predicted and observed values, and an F1-score close to 1 indicates near-perfect precision and recall. The FDR of 0.01240 for Bi-LSTM implies that about 1.2% of the positive predictions are false positives, which is negligible compared with the other methods considered. An NPV close to 1 indicates few false negatives among the negative predictions; the Bi-LSTM model attains an NPV of 0.97441. Because these metrics are derived from the same confusion-matrix counts as accuracy, sensitivity, specificity, precision, FPR, and FNR, which are favorable for the Bi-LSTM model, they also support the proposed method.

Acknowledgement: The authors would like to express their gratitude to King Khalid University, Saudi Arabia, for providing administrative and technical support. Also, the authors would like to thank the referees for their comments and suggestions on the manuscript.

Funding Statement: The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Small Group Research Project under Grant Number RGP1/261/45.

Author Contributions: The authors confirm contribution to the paper as follows: study conception and design: Prasanalakshmi Balaji, Omar Alqahtani; data collection: Mousmi Ajay; analysis and interpretation of results: Prasanalakshmi Balaji, Sangita Babu, Shanmugapriya Prakasam; draft manuscript preparation: Prasanalakshmi Balaji. All authors reviewed the results and approved the final version of the manuscript.

Availability of Data and Materials: The data that support the findings of this study are openly available in BreakHis at https://www.kaggle.com/datasets/forderation/breakhis-400x (accessed on 21 June 2023).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Kanavos A, Kolovos E, Papadimitriou O, Maragoudakis M. Breast cancer classification of histopathological images using deep convolutional neural networks. In: Proceedings of 2022 7th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), 2022; Ioannina, Greece. p. 1–6. doi:10.1109/SEEDA-CECNSM57760.2022.9932898.

2. Li B, Mercan E, Mehta S, Knezevich S, Arnold CW, Donald L, et al. Classifying breast histopathology images with a ductal instance-oriented pipeline. In: Proceedings of 2020 25th International Conference on Pattern Recognition (ICPR), 2021; Milan. p. 8727–34. doi:10.1109/icpr48806.2021.9412824.

3. Khaliliboroujeni S, He X, Jia W, Amirgholipour S. End-to-end metastasis detection from breast cancer histopathology whole slide images. Comput Med Imaging Graph. 2022 Oct;102:102136. doi:10.1016/j.compmedimag.2022.102136.

4. Sheeba A, Santhosh Kumar P, Ramamoorthy M, Sasikala S. Microscopic image analysis in breast cancer detection using ensemble deep learning architectures integrated with web of things. Biomed Signal Process Control. 2023 Jan;79(2):104048. doi:10.1016/j.bspc.2022.104048.

5. Farajzadeh N, Sadeghzadeh N, Hashemzadeh M. A fully-convolutional residual encoder-decoder neural network to localize breast cancer on histopathology images. Comput Biol Med. 2022 Aug;147:105698. doi:10.1016/j.compbiomed.2022.105698.

6. Iqbal A, Sharif M. BTS-ST: swin transformer network for segmentation and classification of multimodality breast cancer images. Knowl-Based Syst. 2023 May 1;267(12):110393. doi:10.1016/j.knosys.2023.110393.

7. Ahmed M, Rabiul Islam Md. A combined feature-vector based multiple instance learning convolutional neural network in breast cancer classification from histopathological images. Biomed Signal Process Control. 2023 Jul 1;84:104775. doi:10.1016/j.bspc.2023.104775.

8. Khan HU, Raza B, Shah MH, Usama SM, Tiwari P, Band SS. SMDetector: small mitotic detector in histopathology images using faster R-CNN with dilated convolutions in backbone model. Biomed Signal Process Control. 2023 Mar;81:104414. doi:10.1016/j.bspc.2022.104414.

9. Shawly T, Alsheikhy AA. An improved fully automated breast cancer detection and classification system. Comput Mater Contin. 2023 Jan 1;76(1):731–51. doi:10.32604/cmc.2023.039433.

10. Pal A, Xue Z, Desai K, Banjo AFA, Adepiti CA, et al. Deep multiple-instance learning for abnormal cell detection in cervical histopathology images. Comput Biol Med. 2021 Nov;138:104890. doi:10.1016/j.compbiomed.2021.104890.

11. Ashurov A, Chelloug SA, Tselykh A, Muthanna MSA, Muthanna A, Al-Gaashani MSAM. Improved breast cancer classification through combining transfer learning and attention mechanism. Life. 2023 Sep 21;13(9):1945. doi:10.3390/life13091945.

12. Abbasniya MR, Sheikholeslamzadeh SA, Nasiri H, Emami S. Classification of breast tumors based on histopathology images using deep features and ensemble of gradient boosting methods. Comput Electr Eng. 2022 Oct;103:108382. doi:10.1016/j.compeleceng.2022.108382.

13. Abdel-Zaher AM, Eldeib AM. Breast cancer classification using deep belief networks. Expert Syst Appl. 2016;46:139–44. doi:10.1016/j.eswa.2015.10.015.

14. Łowicki B, Hernes M, Rot A. Towards sustainable health—detection of tumor changes in breast histopathological images using deep learning. Procedia Comput Sci. 2022 Jan 1;207(2):1657–66. doi:10.1016/j.procs.2022.09.223.

15. Yan R, Ren F, Li J, Rao X, Lv Z, Zheng C, et al. Nuclei-guided network for breast cancer grading in HE-stained pathological images. Sensors. 2022 May 27;22(11):4061. doi:10.3390/s22114061.

16. Fan H, Xiong B, Mangalam K, Li Y, Yan Z, Malik J, et al. Multi-scale vision transformers. 2021 Apr 22. doi:10.48550/arXiv.2104.11227.

17. Cornegruta S, Bakewell R, Withey S, Montana G. Modelling radiological language with bidirectional long short-term memory networks. 2016 Sep 27. doi:10.48550/arXiv.1609.08409.

18. Aslan MF. A hybrid end-to-end learning approach for breast cancer diagnosis: convolutional recurrent network. Comput Electr Eng. 2023 Jan;105:108562. doi:10.1016/j.compeleceng.2022.108562.

19. Prasad CR, Arun B, Amulya S, Abboju P, Kollem S, Yalabaka S. Breast cancer classification using CNN with transfer learning models. 2023 Jan 24;1–5. doi:10.1109/ICONAT57137.2023.10080148.

20. Sherstinsky A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D: Nonlinear Phenomena. 2020 Mar;404(8):132306. doi:10.1016/j.physd.2019.132306.


Copyright © 2024 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
