Open Access

Open Access

ARTICLE

Highly Accurate Golden Section Search Algorithms and Fictitious Time Integration Method for Solving Nonlinear Eigenvalue Problems

1 Center of Excellence for Ocean Engineering, National Taiwan Ocean University, Keelung, 202301, Taiwan

2 Department of Marine Engineering, National Taiwan Ocean University, Keelung, 202301, Taiwan

* Corresponding Author: Yung-Wei Chen. Email:

Computer Modeling in Engineering & Sciences 2024, 139(2), 1317-1335. https://doi.org/10.32604/cmes.2023.030618

Received 14 April 2023; Accepted 06 November 2023; Issue published 29 January 2024

Abstract

This study sets up two new merit functions, which are minimized for the detection of real eigenvalue and complex eigenvalue to address nonlinear eigenvalue problems. For each eigen-parameter the vector variable is solved from a nonhomogeneous linear system obtained by reducing the number of eigen-equation one less, where one of the nonzero components of the eigenvector is normalized to the unit and moves the column containing that component to the right-hand side as a nonzero input vector. 1D and 2D golden section search algorithms are employed to minimize the merit functions to locate real and complex eigenvalues. Simultaneously, the real and complex eigenvectors can be computed very accurately. A simpler approach to the nonlinear eigenvalue problems is proposed, which implements a normalization condition for the uniqueness of the eigenvector into the eigen-equation directly. The real eigenvalues can be computed by the fictitious time integration method (FTIM), which saves computational costs compared to the one-dimensional golden section search algorithm (1D GSSA). The simpler method is also combined with the Newton iteration method, which is convergent very fast. All the proposed methods are easily programmed to compute the eigenvalue and eigenvector with high accuracy and efficiency.Keywords

We consider a general nonlinear eigenvalue problem [1]:

which is an eigen-equation used to determine the eigen-pair

where

The nonlinear eigenvalue problem consists of finding vector

In the gyroscopic system (4), M, G, K

In the free vibration of a q-degree mass-damping-spring structure, the system of differential equations for describing the motion is [14]

where q(t) is a time-dependent q-dimensional vector to signify the generalized displacements of the system.

In the engineering application, the mass matrix M and the stiffness matrix K are positive definite because they are related to the kinetic energy and elastic strain energy. However, the damping properties of a system reflected in the viscous damping matrix C are rarely known, making it difficult to evaluate exactly [15,16].

In terms of the vibration mode x, we can express the fundamental solution of Eq. (7) as

which leads to a nonlinear eigen-equation for

Eq. (9) is a quadratic eigenvalue problem to determine the eigen-pair

by inserting

A lot of applications and solutions to quadratic eigenvalue problems have been proposed, e.g., the homotopy perturbation technique [17], the electromagnetic wave propagation and the analysis of an acoustic fluid contained in a cavity with absorbing walls [18], and a friction induced vibration problem under variability [19]. In addition, several applications and solvers of generalized eigen-value problems have been addressed, e.g., the overlapping finite element method [20], the complex HZ method [21], the context of sensor selection [22], and a generalized Arnoldi method [23].

This paper develops two simple approaches to solving nonlinear eigenvalue problems. The innovation points of this paper are as follows:

1. When solving the nonlinear eigenvalue problems, they can be transformed into minimization problems regardless of real and complex eigenvalues.

2. For solving the linear equations system on the right-hand side with a zero vector, this paper presents the variable transformation to create a new nonhomogeneous linear system and merit functions.

3. When solving real or complex nonlinear eigenvalue problems, the vector variable of merit functions can search linearly in a desired range of the curve or surface by using 1D and 2D golden section search algorithms.

4. A simpler method is combined with the Newton iteration method, which is convergent very fast to solve nonlinear eigenvalue problems.

The rest of the paper’s contents are organized as follows: Section 2 introduces the first method to recast the original homogeneous eigen-equation to a nonhomogeneous linear system for satisfying a minimization requirement to determine the real eigenvalue. An example demonstrates this method upon using the one-dimensional golden section search algorithm (1D GSSA) to seek real eigenvalue. Then, other minimization requirements are derived to determine the complex eigenvalue. An example demonstrates this method. Section 3 proposes a simpler method to derive other nonhomogeneous linear systems by directly implementing the normalization condition into the eigen-equation. The fictitious time integration method (FTIM) is employed to seek real eigenvalue, and the two-dimensional golden section search algorithm (2D GSSA) is employed to find complex eigenvalue. Some examples of nonlinear eigenvalue problems are presented in Section 4, which displays the advantages of the presented two methods. Finally, the conclusions are drawn in Section 5.

We call the set of all eigenvalues λ of N the spectrum of N and denote it by

It is known that if

As noticed by Liu et al. [24], from Eq. (1), it is hard to directly determine the eigenvalue and eigenvector by a numerical method. In fact, from

In order to definitely obtain a nonzero vector x, we can create a nontrivial solution of Eq. (1), of which at least one component of x is not zero, say the j0-th component. We can normalize it to be

where

which is obtained by moving the j0-th column of the eigen-equation to the right-hand side as shown by the componential form:

In the Appendix, a computer code is given to derive cij from nij.

If λ is an eigenvalue and x is the corresponding eigenvector,

where

The motivation by transforming Eq. (1), which is a homogeneous equation with a zero vector on the right-hand side, to a nonhomogeneous Eq. (14) is that we can obtain a nonzero vector x by Eq. (13). Then, the minimization in Eq. (16) can be carried out. If we directly solve Eq. (1), only a trivial solution with x = 0 is obtained by numerical solver and thus Eq. (16) cannot be workable. Moreover, for each λ used in the minimization (16), Eq. (14) is a linear system that is easily solved for the new method being workable by using Eqs. (14)–(16).

The first method (FM) for solving the nonlinear eigenvalue problems is given as follows. (i) Select

To demonstrate the new idea in Eq. (16), we consider Eq. (2) with [25]:

We take a large interval with

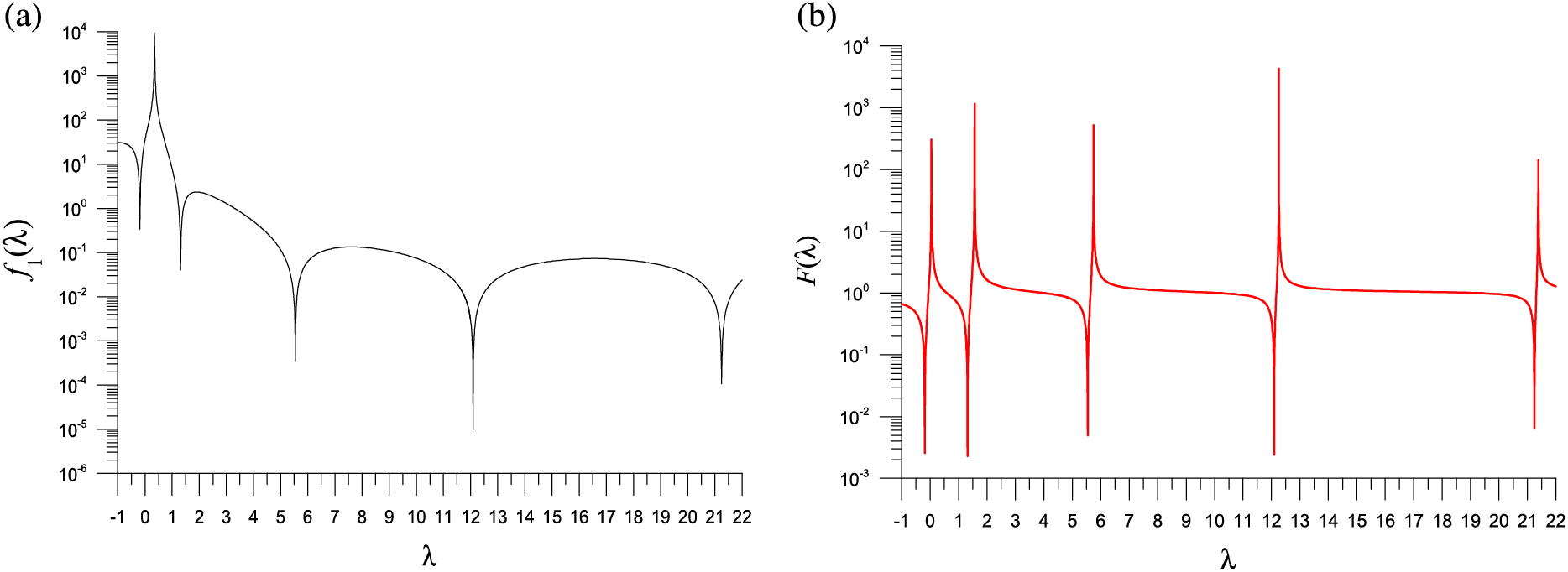

Figure 1: For a generalized eigenvalue problem, showing five minima in a merit function: (a) first method and (b) second method

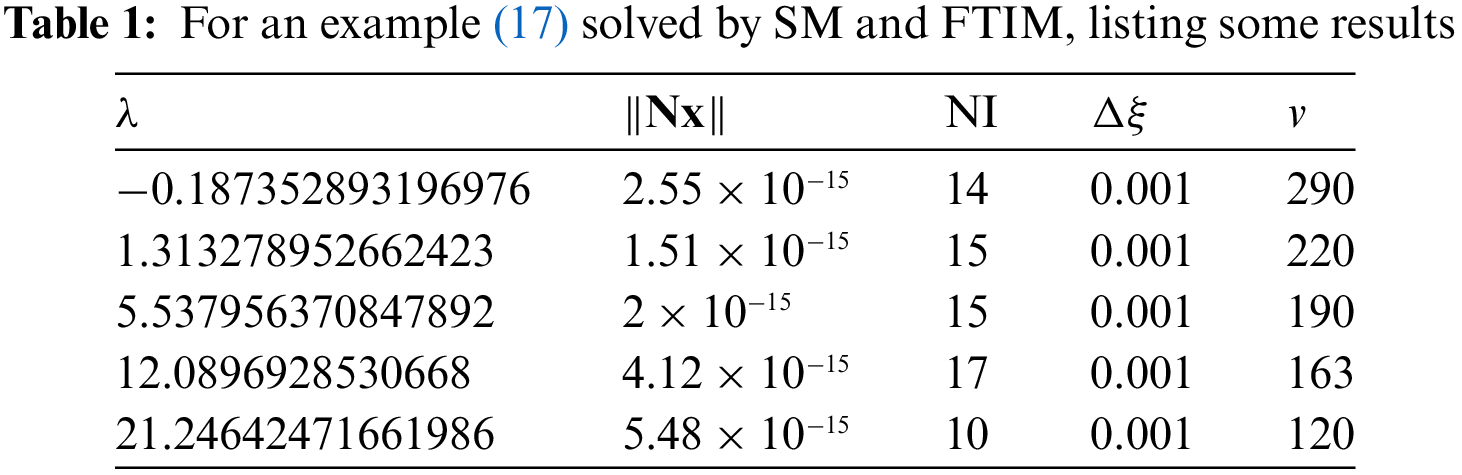

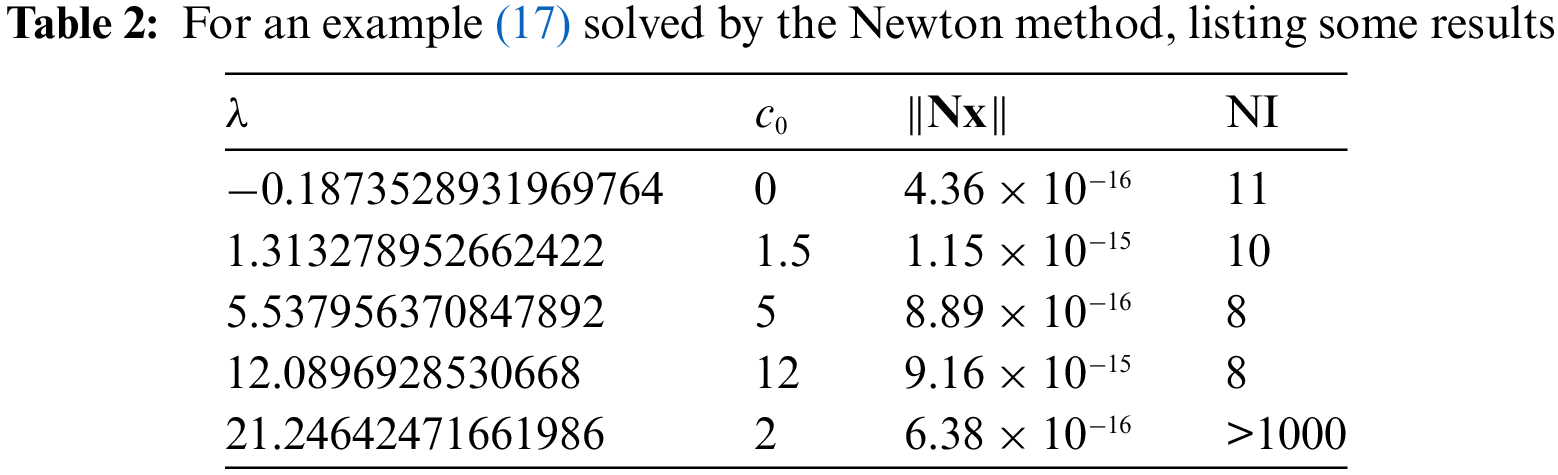

Applying the 1D GSSA to solve this problem, we record the number of iterations (NI) and the number of the computations of

With

We note that the Newton type methods are not suitable for solving the minimization problem in Eq. (16), because those minimal points are very sharp as shown in Fig. 1a.

For a received nonlinear eigenvalue problem, the initial interval

The complex eigenvalue is assumed to be

Correspondingly, we take

Inserting Eqs. (18) and (19) into Eq. (1), yields

Upon letting

Eq. (20) becomes

To determine the complex eigenvalue, instead of Eq. (16), we consider

The first method (FM) seeks the complex eigenvalue by (i) selecting

Both the convergence criteria of the 1D and 2D GSSA are fixed to be

When

whose complex eigenvalues are

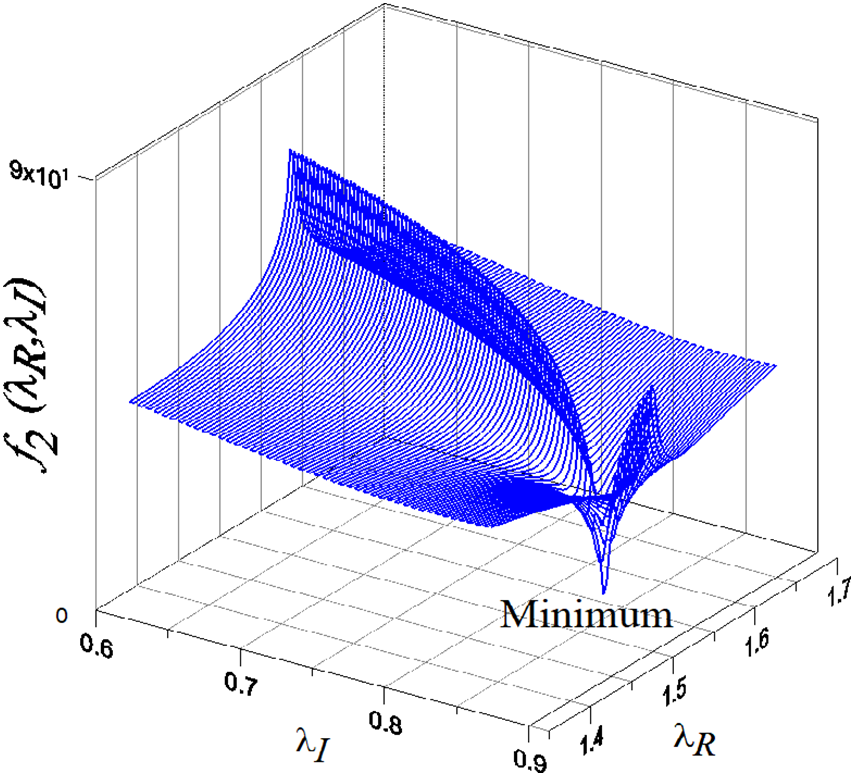

We take

Figure 2: The detection of a complex eigenvalue as a minimal point of a merit function

3 A Simpler Approach to a Nonhomogeneous System

If x is an eigenvector of Eq. (1), then

We can prove the following result.

Lemma 1. For

Proof. Suppose that

Combining Eqs. (27) and (28) together yields

On both sides multiplied by

we have

Hence, we can derive

which leads to

Theorem 1. For

where

Proof. Combined Eqs. (1) and (26) together yields an over-determined linear system:

On both sides multiplied by

we have

Hence, we can derive

If the coefficient matrix N is highly ill-conditioned, we can reduce Eq. (35) to Eq. (32), which is easily derived by adding

Eq. (35) bears certain similarity to the equations derived in [27,28] for solving ill-posed linear system, where the idea is adding

Eqs. (32) and (16) are the simplest method to find the real eigenvalues of Eq. (1), which is labeled as the second method or a simpler method (SM). It is apparent that Eq. (32) is simpler than Eqs. (12)–(15) used in the first method (FM) to solve the nonzero vector variable x.

In general, the GSSA requires many iterations and the evaluations of merit function to solve the minimization problem. The fictitious time integration method (FTIM) was first coined by Liu et al. [29] to solve a nonlinear equation. Eq. (16) is to be solved by FTIM for finding the real eigenvalues.

Let us define

which is an implicit function of

For Eq. (37), Liu et al. [29] developed an iterative scheme:

where

Starting from an initial guess

which makes a monotonically decreasing sequence of

The second method (SM) for determining the real eigenvalue of Eq. (1) is summarized as follows. (i) Select

We revisit the generalized eigenvalue problem given by Eq. (17) and using the SM. In Fig. 1b, we plot

With

Theorem 2. For

where

Proof. Inserting Eq. (41) into Eq. (32), yields

Equating the real and imaginary parts of Eq. (42), we have

which can be recast to that in Eq. (40).

When x is solved from Eq. (40), we can employ the minimization in Eq. (23) by picking up the complex eigenvalue with the aid of 2D GSSA.

We revisit the standard eigenvalue problem given by Eq. (24) by using the SM with

To compare with the Newton method, we supplement Eq. (1) by

which is a normalized condition. Letting

At the k-th step the Jacobian matrix reads as

Then the Newton iteration method is given by

The iteration is terminated if it converges with a given criterion

In Table 2, we list the results computed from the Newton method, where the initial guess is

We can observe that

To improve the performance, we can combine the Newton method to the SM, and solve Eqs. (32) and (26) by the following iteration:

The iteration is terminated if the given convergence criterion

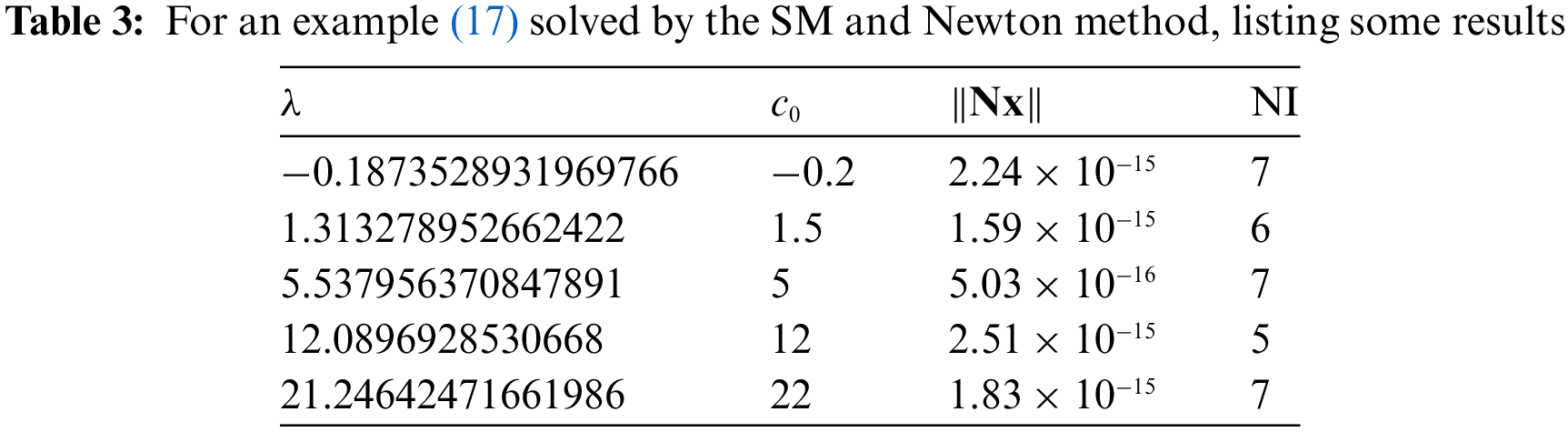

In Table 3, we list the results computed from the SM and Newton method, where

Upon comparing Table 3 with Table 2, it is obvious that the combination of the simpler method (SM) to the Newton iteration outperforms the original Newton method.

Remark 1. Even the proofs of Theorems 1 and 2 are simple and straightforward, they are crucial for the developments of the proposed numerical methods for effectively and accurately solving the nonlinear eigenvalue problems. Eq. (49) as an application of Theorem 1 is a very effective combination of the SM and Newton method to solve nonlinear eigenvalue problems.

4 Examples of Nonlinear Eigenvalue Problems

Example 1. Consider

where

We apply the 1D GSSA to solve this problem. With

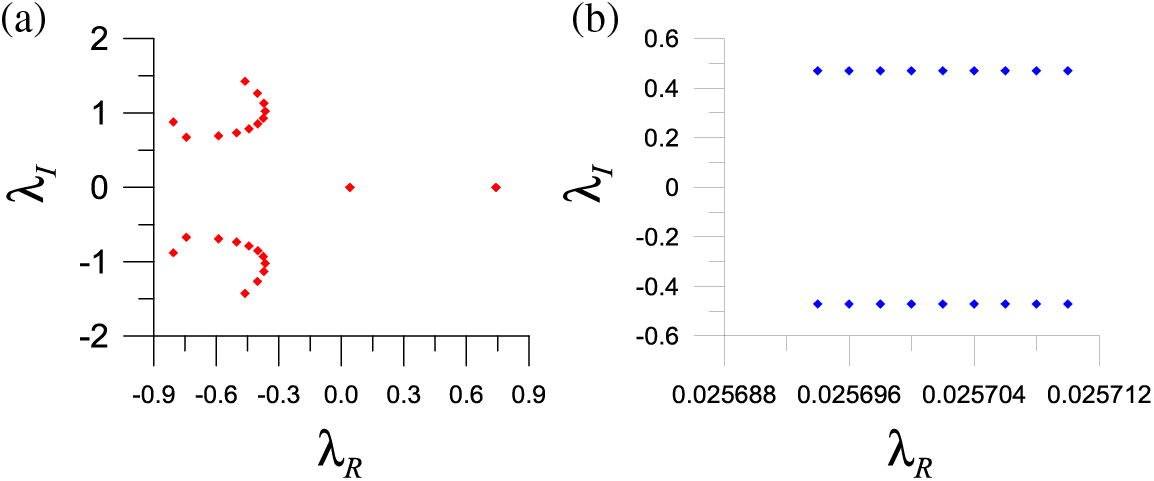

There are totally 24 eigenvalues as shown in Fig. 3a, which are computed by the detecting method based on 2D GSSA.

Figure 3: For the distribution of (a) example 1 with 24 eigenvalues and (b) example 3 with 18 eigenvalues

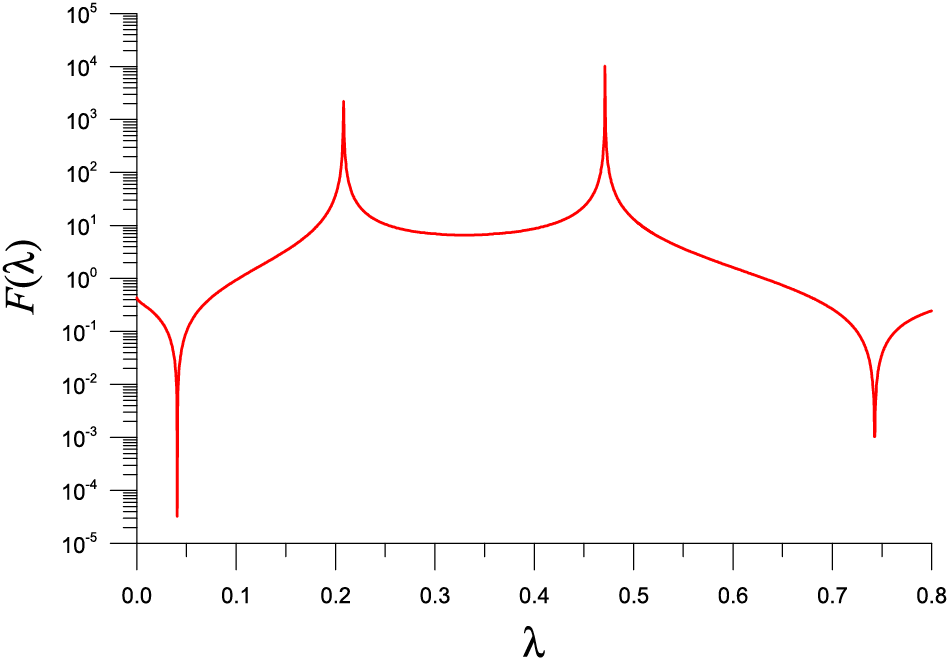

In Eq. (50), if

With

Figure 4: For a nonlinear eigenvalue problem of example 1 solved by SM, showing two minimums in a function F used in the FTIM

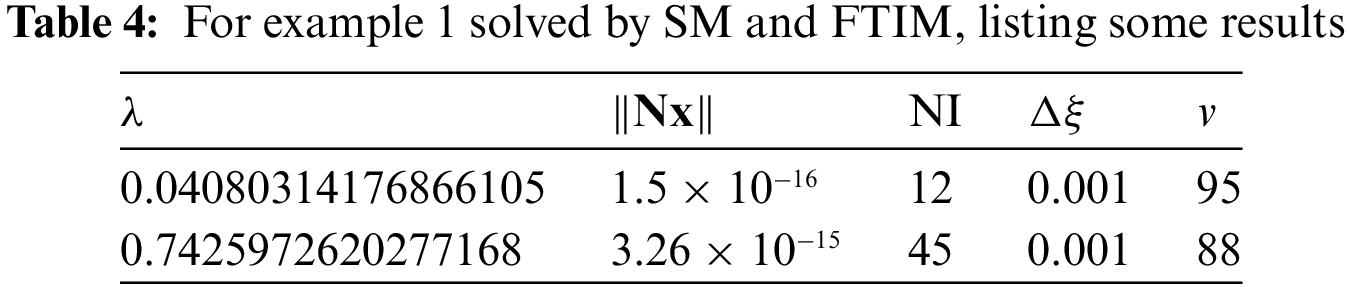

Table 4 lists the eigenvalue, the error

Example 2. Next, we consider [8]

where

It describes a time-delay system.

We take a1 = 1.5, a2 = 1, a3 = 0.5 and b1 = 0.3, b2 = 0.2, b3 = 0.1, and there exist four complex eigenvalues. Through some manipulations, we find that

When we apply the 2D GSSA to solve this problem, with

By taking the parameters of ai, bi, i = 1, 2, 3 as that listed in [8,30], there exists a double non-semi simple eigenvalue

Example 3. From [8]

where

We can derive

By applying the 2D GSSA to solve this problem with

Example 4. We consider

where

We apply the SM to solve this problem with

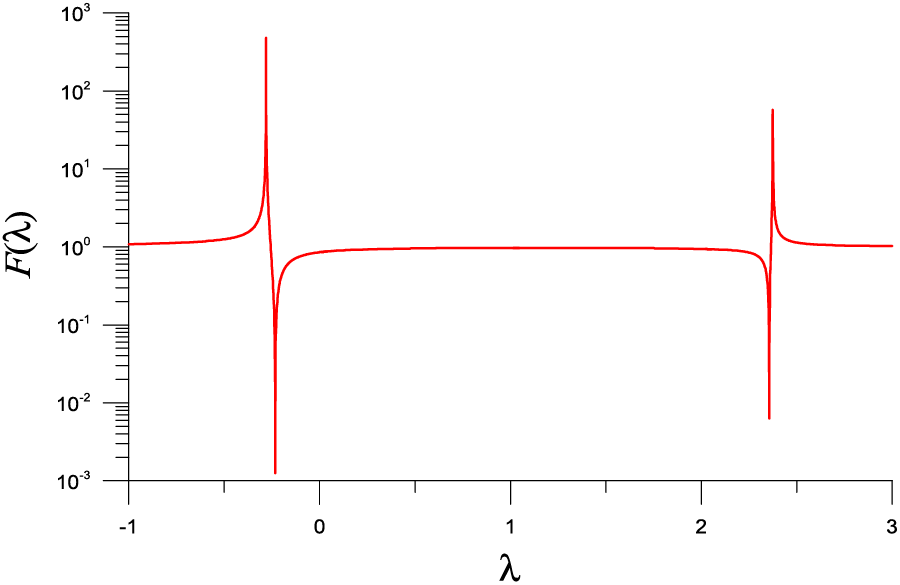

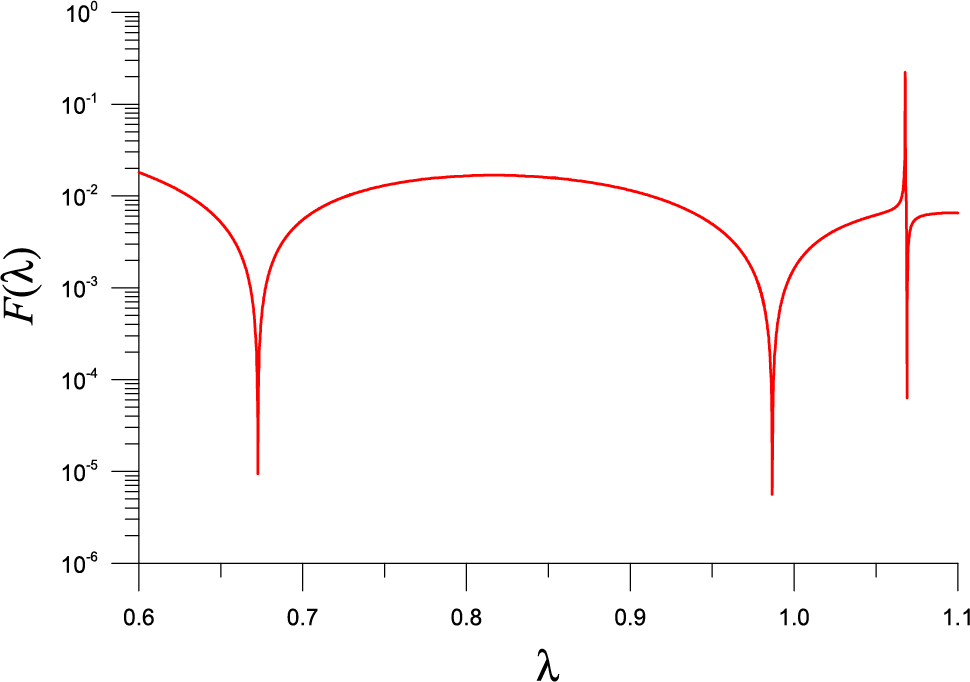

Figure 5: For a nonlinear eigenvalue problem of example 4 solved by SM, showing two minima in a function F used in the FTIM

Table 5 lists the eigenvalue, the error

Example 5. This example is Example 6.2 of [31], and we have a quadratic eigenvalue problem (3) with

where

By taking m = 5, we have n = 25, and we take c11 = 1, c12 = 1.3, c21 = 0.1, c22 = 1.1, c31 = 1 and c32 = 1.2.

We apply the SM to solve this problem with

Figure 6: For a nonlinear eigenvalue problem of example 5 solved by SM, showing three minima in a function F used in the FTIM

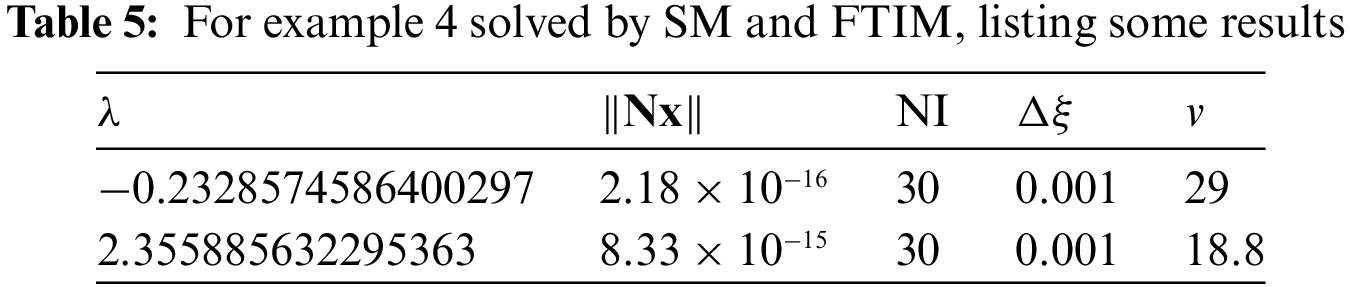

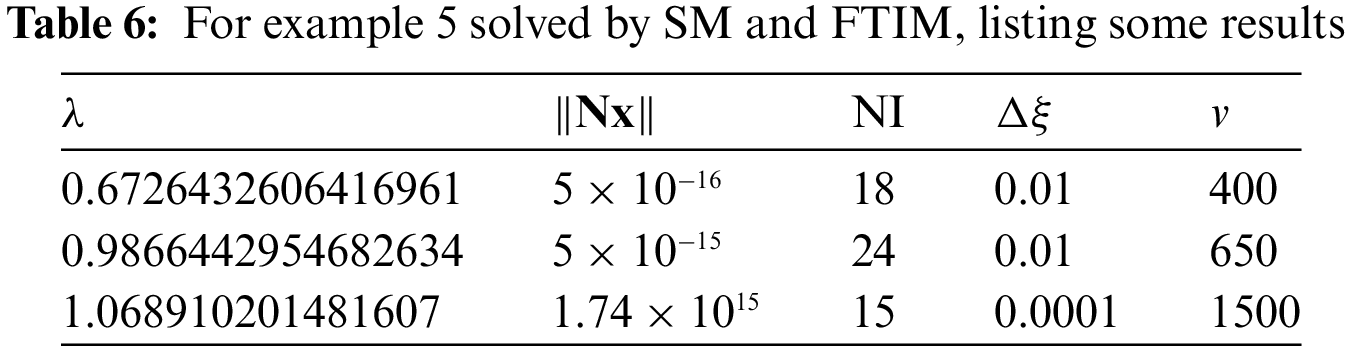

Table 6 lists the eigenvalue, the error

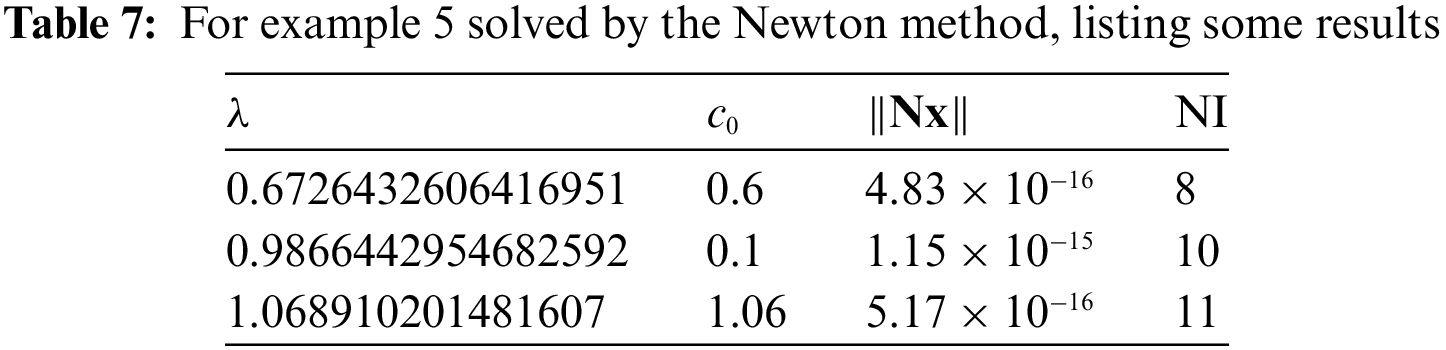

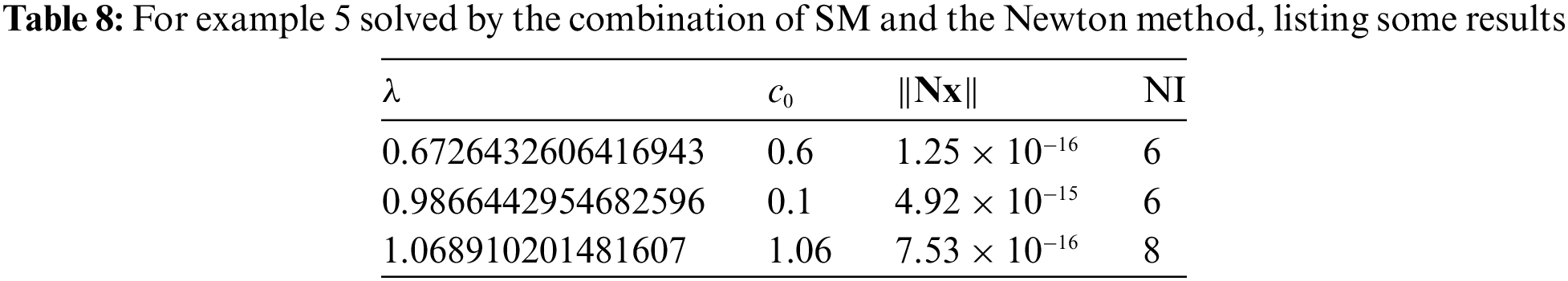

In Table 7, we list the results computed from the Newton method, where the initial guess is

We can observe that

In Table 8, we list the results computed from the Newton method, where

Upon comparing Table 8 to Table 7, the performance is improved by combining SM to the Newton method.

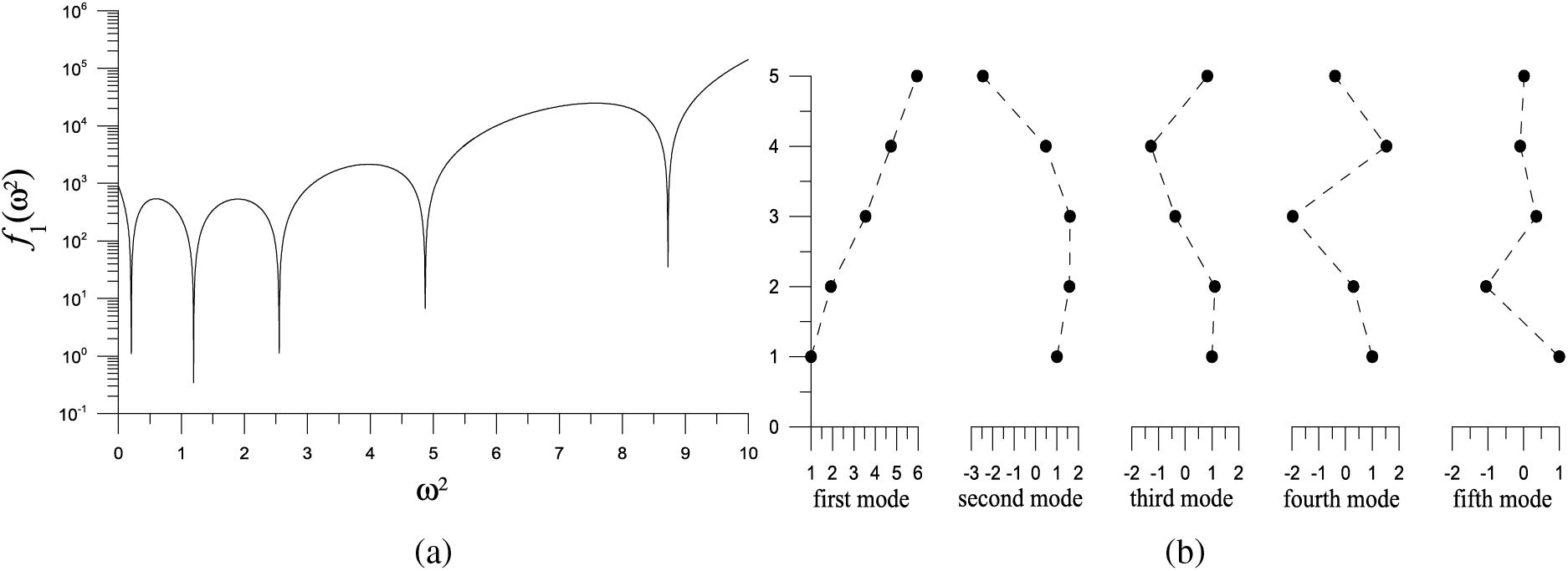

Example 6. As a practical application, we consider a five-story shear building with [32]

We take n = 5 and plot

Figure 7: For example 6 of a five-degree MK system, (a) showing five minima in a merit function, and (b) displaying the five vibration modes

Starting from

Starting from

Starting from

Starting from

This study proposes two simple approaches to solve the nonlinear eigenvalue problems, which directly implement a normalization condition for the uniqueness of the eigenvector into the eigen-equation. When the eigen-parameter runs in a desired range, the curve or surface for real and complex eigenvalues reveals local minimums of the constructed merit functions. In the merit function, the vector variable is solved from the nonhomogeneous linear system, which is available by reducing the eigen-equation by one dimension less and moving the normalized component to the right side. It is possible to quickly obtain the real and complex eigenvalues using 1D and 2D golden section search algorithms to solve the resultant minimization problems. The second method is simpler than the first by inserting the normalization condition into the eigen-equation. From the resulting nonhomogeneous linear system, the fictitious time integration method (FTIM) computes the real eigenvalues faster, which saves computation costs compared to the GSSA. The combination of the simpler method with the Newton iteration outperforms the original Newton method. Combining the simpler method to the Newton iteration without using the extra parameters is also better than the FTIM. It can obtain highly precise eigenvalues and eigenvectors very fast.

Acknowledgement: The authors would like to thank the National Science and Technology Council, Taiwan, for their financial support (Grant Number NSTC 111-2221-E-019-048).

Funding Statement: The corresponding authors would like to thank the National Science and Technology Council, Taiwan for their financial support (Grant Number NSTC 111-2221-E-019-048).

Author Contributions: The authors confirm contribution to the paper as follows: study conception and design: Chein-Shan Liu; data collection: Jian-Hung Shen; analysis and interpretation of results: Yung-Wei Chen; draft manuscript preparation: Chung-Lun Kuo and all authors reviewed the results and all approved the final version of the manuscript.

Availability of Data and Materials: Not applicable.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Betcke, T., Higham, N. J., Mehrmann, V., Schröder, C., Tisseur, F. (2013). NLEVP: A collection of nonlinear eigenvalue problems. ACM Transactions on Mathematical Software, 39(2), 1–28. https://doi.org/10.1145/2427023.2427024 [Google Scholar] [CrossRef]

2. Qian, J., Lin, W. W. (2007). A numerical method for quadratic eigenvalue problems of gyroscopic systems. Journal of Sound and Vibration, 306(1–2), 284–296. https://doi.org/10.1016/j.jsv.2007.05.009 [Google Scholar] [CrossRef]

3. Chen, C., Ma, C. (2019). An accelerated cyclic-reduction-based solvent method for solving quadratic eigenvalue problem of gyroscopic systems. Computers & Mathematics with Applications, 77(10), 2585–2595. https://doi.org/10.1016/j.camwa.2018.12.040 [Google Scholar] [CrossRef]

4. Tisseur, F., Meerbergen, K. (2001). The quadratic eigenvalue problem. SIAM Review, 43(2), 235–286. https://doi.org/10.1137/S003614450038198 [Google Scholar] [CrossRef]

5. Higham, N. J., Kim, H. M. (2001). Solving a quadratic matrix equation by Newton’s method with exact line searches. SIAM Journal on Matrix Analysis and Applications, 23(2), 303–316. https://doi.org/10.1137/S089547989935097 [Google Scholar] [CrossRef]

6. Meerbergen, K. (2009). The quadratic Arnoldi method for the solution of the quadratic eigenvalue problem. SIAM Journal on Matrix Analysis and Applications, 30(4), 1463–1482. https://doi.org/10.1137/07069273X [Google Scholar] [CrossRef]

7. Hammarling, S., Munro, C. J., Tisseur, F. (2013). An algorithm for the complete solution of quadratic eigenvalue problems. ACM Transactions on Mathematical Software, 39(3), 1–19. https://doi.org/10.1145/2450153.2450156 [Google Scholar] [CrossRef]

8. Jarlebring, E. (2012). Convergence factors of Newton methods for nonlinear eigenvalue problems. Linear Algebra and its Applications, 436(10), 3943–3953. https://doi.org/10.1016/j.laa.2010.08.045 [Google Scholar] [CrossRef]

9. Mehrmann, V., Voss, H. (2004). Nonlinear eigenvalue problems: A challenge for modern eigenvalue methods. GAMM Mitteilungen, 27(2), 121–152. https://doi.org/10.1002/gamm.201490007 [Google Scholar] [CrossRef]

10. Betcke, M. M., Voss, H. (2008). Restarting projection methods for rational eigenproblems arising in fluid-solid vibrations. Mathematical Modelling and Analysis, 13(2), 171–182. https://doi.org/10.3846/1392-6292.2008.13.171-182 [Google Scholar] [CrossRef]

11. Hochstenbach, M. E., Sleijpen, G. L. (2008). Harmonic and refined Rayleigh-Ritz for the polynomial eigenvalue problem. Numerical Linear Algebra with Applications, 15(1), 35–54. https://doi.org/10.1002/nla.562 [Google Scholar] [CrossRef]

12. Xiao, J., Zhou, H., Zhang, C., Xu, C. (2017). Solving large-scale finite element nonlinear eigenvalue problems by resolvent sampling based Rayleigh-Ritz method. Computational Mechanics, 59, 317–334. https://doi.org/10.1007/s00466-016-1353-4 [Google Scholar] [CrossRef]

13. Chiappinelli, R. (2018). What do you mean by “nonlinear eigenvalue problems”? Axioms, 7(2), 39. https://doi.org/10.3390/axioms7020039 [Google Scholar] [CrossRef]

14. Meirovitch, L. (1986). Elements of vibrational analysis, 2nd edition. New York: McGraw-Hill. [Google Scholar]

15. Smith, H. A., Singh, R. K., Sorensen, D. C. (1995). Formulation and solution of the non-linear, damped eigenvalue problem for skeletal systems. International Journal for Numerical Methods in Engineering, 38(18), 3071–3085. https://doi.org/10.1002/nme.1620381805 [Google Scholar] [CrossRef]

16. Osinski, Z. (1998). Damping of vibrations. Rotterdam, Netherlands: A.A. Balkema. https://doi.org/10.1201/9781315140742 [Google Scholar] [CrossRef]

17. Sadet, J., Massa, F., Tison, T., Turpin, I., Lallemand, B. et al. (2021). Homotopy perturbation technique for improving solutions of large quadratic eigenvalue problems: Application to friction-induced vibration. Mechanical Systems and Signal Processing, 153, 107492. https://doi.org/10.1016/j.ymssp.2020.107492 [Google Scholar] [CrossRef]

18. Hashemian, A., Garcia, D., Pardo, D., Calo, V. M. (2022). Refined isogeometric analysis of quadratic eigenvalue problems. Computer Methods in Applied Mechanics and Engineering, 399, 115327. https://doi.org/10.1016/j.cma.2022.115327 [Google Scholar] [CrossRef]

19. Sadet, J., Massa, F., Tison, T., Talbi, E., Turpin, I. (2022). Deep Gaussian process for the approximation of a quadratic eigenvalue problem application to friction-induced vibration. Vibration, 5(2), 344–369. https://doi.org/10.3390/vibration5020020 [Google Scholar] [CrossRef]

20. Lee, S., Bathe, K. J. (2022). Solution of the generalized eigenvalue problem using overlapping finite elements. Advances in Engineering Software, 173, 103241. https://doi.org/10.1016/j.advengsoft.2022.103241 [Google Scholar] [CrossRef]

21. Hari, V. (2022). On the quadratic convergence of the complex HZ method for the positive definite generalized eigenvalue problem. Linear Algebra and its Applications, 632, 153–192. https://doi.org/10.1016/j.laa.2021.08.022 [Google Scholar] [CrossRef]

22. Dan, J., Geirnaert, S., Bertrand, A. (2022). Grouped variable selection for generalized eigenvalue problems. Signal Processing, 195, 108476. https://doi.org/10.1016/j.sigpro.2022.108476 [Google Scholar] [CrossRef]

23. Alkilayh, M., Reichel, L., Ye, Q. (2023). A method for computing a few eigenpairs of large generalized eigenvalue problems. Applied Numerical Mathematics, 183, 108–117. https://doi.org/10.1016/j.apnum.2022.08.018 [Google Scholar] [CrossRef]

24. Liu, C. S., Kuo, C. L., Chang, C. W. (2022). Free vibrations of multi-degree structures: Solving quadratic eigenvalue problems with an excitation and fast iterative detection method. Vibration, 5(4), 914–935. https://doi.org/10.3390/vibration5040053 [Google Scholar] [CrossRef]

25. Golub, G. H., van Loan, C. F. (2013). Matrix computations. Maryland: The John Hopkins University Press. [Google Scholar]

26. Rani, G. S., Jayan, S., Nagaraja, K. V. (2019). An extension of golden section algorithm for n-variable functions with MATLAB code. IOP Conference Series: Materials Science and Engineering, 577(1), 012175. https://doi.org/10.1088/1757-899X/577/1/012175 [Google Scholar] [CrossRef]

27. Liu, C. S., Hong, H. K., Atluri, S. N. (2010). Novel algorithms based on the conjugate gradient method for inverting ill-conditioned matrices, and a new regularization method to solve ill-posed linear systems. Computer Modeling in Engineering & Sciences, 60(3), 279–308. https://doi.org/10.3970/cmes.2010.060.279 [Google Scholar] [CrossRef]

28. Liu, C. S. (2012). Optimally scaled vector regularization method to solve ill-posed linear problems. Applied Mathematics and Computation, 218(21), 10602–10616. https://doi.org/10.1016/j.amc.2012.04.022 [Google Scholar] [CrossRef]

29. Liu, C. S., Atluri, S. N. (2008). A novel time integration method for solving a large system of non-linear algebraic equations. Computer Modeling in Engineering & Sciences, 31(2), 71–83. https://doi.org/10.3970/cmes.2008.031.071 [Google Scholar] [CrossRef]

30. Jarlebring, E., Michiels, W. (2010). Invariance properties in the root sensitivity of time-delay systems with double imaginary roots. Automatica, 46(6), 1112–1115. https://doi.org/10.1016/j.automatica.2010.03.014 [Google Scholar] [CrossRef]

31. Mehrmann, V., Watkins, D. (2001). Structure-preserving methods for computing eigenpairs of large sparse skew-Hamiltonian/Hamiltonian pencils. Journal on Scientific Computing, 22(6), 1905–1925. https://doi.org/10.1137/S1064827500366434 [Google Scholar] [CrossRef]

32. Berg, G. V. (1998). Elements of structural dynamics. New Jersey: Prentice-Hall. [Google Scholar]

In this appendix, we demonstrate the computer code to obtain the coefficient matrix [cij] from [nij] by

If

Cite This Article

Copyright © 2024 The Author(s). Published by Tech Science Press.

Copyright © 2024 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools