Open Access

REVIEW

Evolutionary Neural Architecture Search and Its Applications in Healthcare

1 School of Economics and Management, Hebei University of Technology, Tianjin, 300401, China

2 School of Artificial Intelligence, Hebei University of Technology, Tianjin, 300401, China

3 School of Electrical Engineering, Hebei University of Technology, Tianjin, 300401, China

4 Department of Game Design, Uppsala University, Uppsala, S-75105, Sweden

* Corresponding Authors: Jie Li. Email: ; Bin Cao. Email:

Computer Modeling in Engineering & Sciences 2024, 139(1), 143-185. https://doi.org/10.32604/cmes.2023.030391

Received 03 April 2023; Accepted 09 October 2023; Issue published 30 December 2023

Abstract

Most neural network architectures are designed based on human experience, which requires a long and tedious trial-and-error process. Neural architecture search (NAS) attempts to discover effective architectures without human intervention. Evolutionary algorithms (EAs) for NAS can find better solutions than human-designed architectures by exploring a large space of possible architectures. Using multiobjective EAs for NAS, optimal neural architectures that meet various performance criteria can be explored and discovered efficiently. Furthermore, hardware-accelerated NAS methods can improve the efficiency of NAS. While existing reviews have mainly focused on the different strategies used to perform NAS, few studies have explored the use of EAs for NAS. In this paper, we summarize and explore the use of EAs for NAS, as well as large-scale multiobjective optimization strategies and hardware-accelerated NAS methods. NAS performs well in healthcare applications, such as medical image analysis, disease diagnosis and classification, and health monitoring. EAs for NAS can automate the search process and optimize multiple objectives simultaneously in a given healthcare task. Deep neural networks have been successfully used in healthcare, but they lack interpretability. Medical data are highly sensitive, and privacy leaks are frequently reported in the healthcare industry. To address these problems, we propose an interpretable neuroevolution framework for healthcare based on federated learning that targets both search efficiency and privacy protection. We also point out future research directions for evolutionary NAS. Overall, for researchers who want to use EAs to optimize NNs in healthcare, we analyze the advantages and disadvantages of doing so to provide detailed guidance, and we propose an interpretable, privacy-preserving framework for healthcare applications.

Graphic Abstract

1 Introduction

Deep learning (DL) has made remarkable strides in several areas, and the design of automatic neural architecture search (NAS) methods has received substantial interest. NAS has surpassed hand-designed architectures on some difficult tasks, particularly in the medical field [1]. Medical image data account for 90% of electronic medical data. Most imaging data are standardized for communication and storage, which is crucial for rapid diagnosis and prediction of disease. Convolutional neural networks (CNNs) can be used to solve various computer vision problems, including medical image classification and segmentation. However, manually designed network architectures are often overparameterized. In NAS for deep CNNs, multiobjective evolutionary algorithms (MOEAs) have made important contributions to reducing the number of parameters and trading off accuracy against model complexity [2]. As a result, NAS has become popular for medical image data in healthcare. Moreover, NAS can efficiently and automatically complete analytical tasks on electronic health records (EHRs), including disease prediction and classification, data enhancement, privacy protection, and clinical event prediction [3].

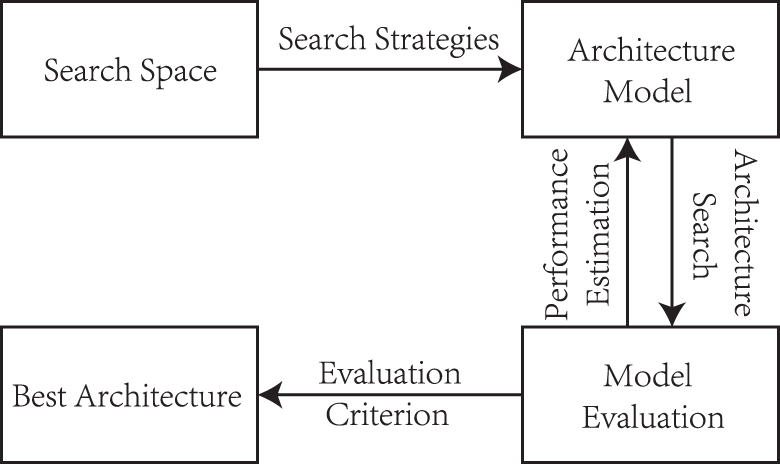

NAS can be used to solve the combinatorial optimization problem of hyperparameters. Traditional hyperparameter optimization mainly relies on grid search and manual intervention, which offer very limited flexibility [4]. Given a set of candidate neural network (NN) structures, NAS applies a search strategy to obtain the optimal network architecture. As shown in Fig. 1, the fundamental concept of NAS is to explore a search space containing various candidate NN architectures with a specific strategy in order to discover the most efficient network structure. Performance evaluation analyzes the strengths and weaknesses of an architecture, which can be quantified with various indicators, including model size and accuracy. The search space of NAS defines the candidate architectures that can be searched. A good search space should be constrained yet still flexible enough [5]. In efficient neural architecture search (ENAS) [6], the first weight-sharing NAS method, the search is performed over only a very small block search space, and the whole neural architecture is constructed by stacking a few blocks. This indicates that better performance can be obtained within a block-based search space [7] simply by changing the block structure, that is, the number and sizes of convolutional kernels. It also allows the neural architecture to be easily transferred to other tasks. ENAS forces all child models to share the same weights instead of training each model from scratch to convergence, so the weights learned by previous models can be reused. Therefore, each time a new model is trained, transfer learning can be applied, and convergence is much faster. AutoGAN [8] connects generative adversarial networks and NAS, using weight sharing and dynamic resetting to accomplish NAS with very competitive results. Therefore, under various constraints, weight sharing can be used to accelerate NAS on various devices.
Evolutionary algorithms (EAs) are applicable to different search spaces for NAS [9–11]. Moreover, by changing the block structure [11] and using transfer learning [12], EAs can achieve high search efficiency at the initial stage of the search.

Figure 1: NAS principle
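The three components in Fig. 1 (search space, search strategy, and performance evaluation) can be illustrated with a minimal Python sketch. It assumes a hypothetical block-based search space in which each of three stacked blocks chooses a kernel size and a number of kernels, uses plain random sampling as the search strategy, and replaces training with a made-up scoring function (`estimate_score`) that rewards capacity and penalizes parameter count. None of these names or values come from the cited works.

```python
import random

# Hypothetical block-based search space: each block picks a kernel size and a
# number of kernels (filters); the network stacks NUM_BLOCKS such blocks.
KERNEL_SIZES = [1, 3, 5]
NUM_FILTERS = [16, 32, 64]
NUM_BLOCKS = 3


def sample_architecture(rng):
    """Search strategy (here: plain random sampling) draws one candidate."""
    return [(rng.choice(KERNEL_SIZES), rng.choice(NUM_FILTERS))
            for _ in range(NUM_BLOCKS)]


def count_params(arch, in_channels=3):
    """Model size: weights + biases of the stacked convolutional blocks."""
    total, c_in = 0, in_channels
    for k, c_out in arch:
        total += k * k * c_in * c_out + c_out
        c_in = c_out
    return total


def estimate_score(arch):
    """Hypothetical performance estimate: rewards capacity, penalizes size.
    A real NAS system would train and validate the network here."""
    capacity = sum(k * c for k, c in arch)
    return capacity - 1e-3 * count_params(arch)


def random_search(num_trials=50, seed=0):
    """Evaluate num_trials sampled architectures and keep the best one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(num_trials):
        arch = sample_architecture(rng)
        score = estimate_score(arch)
        if score > best_score:
            best_arch, best_score = arch, score
    return best_arch, best_score


if __name__ == "__main__":
    arch, score = random_search()
    print("best blocks (kernel, filters):", arch, "score:", round(score, 2))
```

Swapping `random_search` for an evolutionary loop, or `estimate_score` for real training and validation, changes the search strategy or the evaluation without touching the search-space definition, which is the separation that Fig. 1 describes.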

Search strategies determine how the search space is explored to achieve the desired search objectives and establish the configuration of the network architecture. According to the optimization algorithm used, the search strategies of NAS [13] can be roughly divided into reinforcement learning (RL), gradient-based methods, and EAs. In RL-based NAS, the RL agent acts as a meta-controller that exploits various strategies to design NN architectures automatically [14]. RL-based NAS only requires a state-action space and a reward function to be defined to complete the search process. However, RL requires extensive interaction to explore the search space, and RL-based search methods cannot flexibly optimize multiple objectives and consume vast amounts of time and resources. Gradient-based algorithms are more effective than RL algorithms in some respects, but gradient-based search assumes that the optimization problem has particular mathematical properties, such as continuity and convexity. The search space of NAS, however, is discrete. Therefore, the key issue for gradient-based methods is to convert the discrete network space into a continuous one and obtain the optimal architecture from its continuous representation. The most representative approach is differentiable architecture search (DARTS) [15], in which the authors proposed a differentiable approach and used gradient descent to solve the architecture search problem. A softmax function is used to relax the search space into a continuous one, and this process increases the training time.
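The continuous relaxation used by DARTS can be sketched as follows: each edge computes a softmax-weighted mixture of all candidate operations, with the weights given by real-valued architecture parameters (alphas), so the choice of operation becomes differentiable; after the search, the operation with the largest alpha is kept. This toy version assumes scalar inputs and three hypothetical stand-in operations rather than real convolutions, and it omits the bilevel optimization of network weights and alphas.

```python
import math

# Hypothetical candidate operations on one edge; scalar stand-ins for
# convolution, skip connection, and the "zero" (no connection) operation.
CANDIDATE_OPS = {
    "identity": lambda x: x,
    "double": lambda x: 2.0 * x,
    "zero": lambda x: 0.0,
}


def softmax(alphas):
    """Numerically stable softmax over the architecture parameters."""
    m = max(alphas)
    exps = [math.exp(a - m) for a in alphas]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x, alphas):
    """Continuous relaxation: o_bar(x) = sum_o softmax(alpha)_o * o(x).
    Every candidate operation is evaluated on every forward pass."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, CANDIDATE_OPS.values()))


def derive_discrete(alphas):
    """After the search, keep the operation with the largest alpha."""
    names = list(CANDIDATE_OPS)
    return names[max(range(len(alphas)), key=lambda i: alphas[i])]


if __name__ == "__main__":
    alphas = [0.2, 1.5, -0.7]
    print(mixed_op(1.0, alphas), derive_discrete(alphas))
```

Because every candidate operation is computed on every edge during the search, the cost grows with the number of candidates, which is consistent with the increased training time noted above.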

EAs differ from RL. Neuroevolution of augmenting topologies (NEAT) enables artificial evolution to replicate the formation of brain structures and connections, determine the appropriate number of connections, and eliminate ineffective ones [16]. When DL models are scaled up, the number of parameters grows rapidly, which enlarges the encoding space and causes computational efficiency problems for the optimization strategies [11,17,18]. To enhance optimization efficiency, we concentrate on employing various neuroevolutionary strategies for NAS. Neuroevolution strategies have the advantages of parallel search and high adaptability [19,20]. Neuroevolution is the application of evolutionary ideas to the hyperparameter optimization of NNs. It uses EAs to optimize NNs, which can help avoid falling into local optima. The work by [21] incorporated a torus walk instead of a uniform walk to improve local search capability. Neuroevolution can mimic biological evolution to evolve NNs; it can learn building blocks, block connections, and even optimization algorithms, and it has inherent parallelism and global exploration characteristics [22]. Neuroevolution approaches have achieved superior performance in the medical field. Jajali et al. [23] proposed the MVO-MLP to facilitate early diagnosis of cardiovascular diseases. EvoU-Net used a genetic algorithm (GA) to search for accurate and simple CNNs for MRI image segmentation [24].

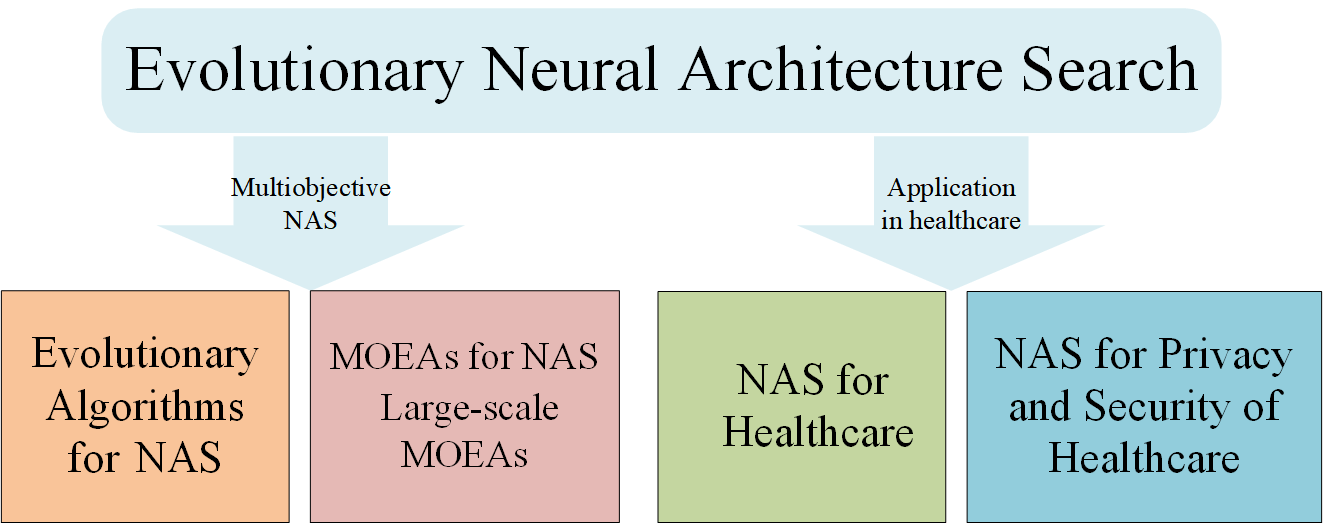

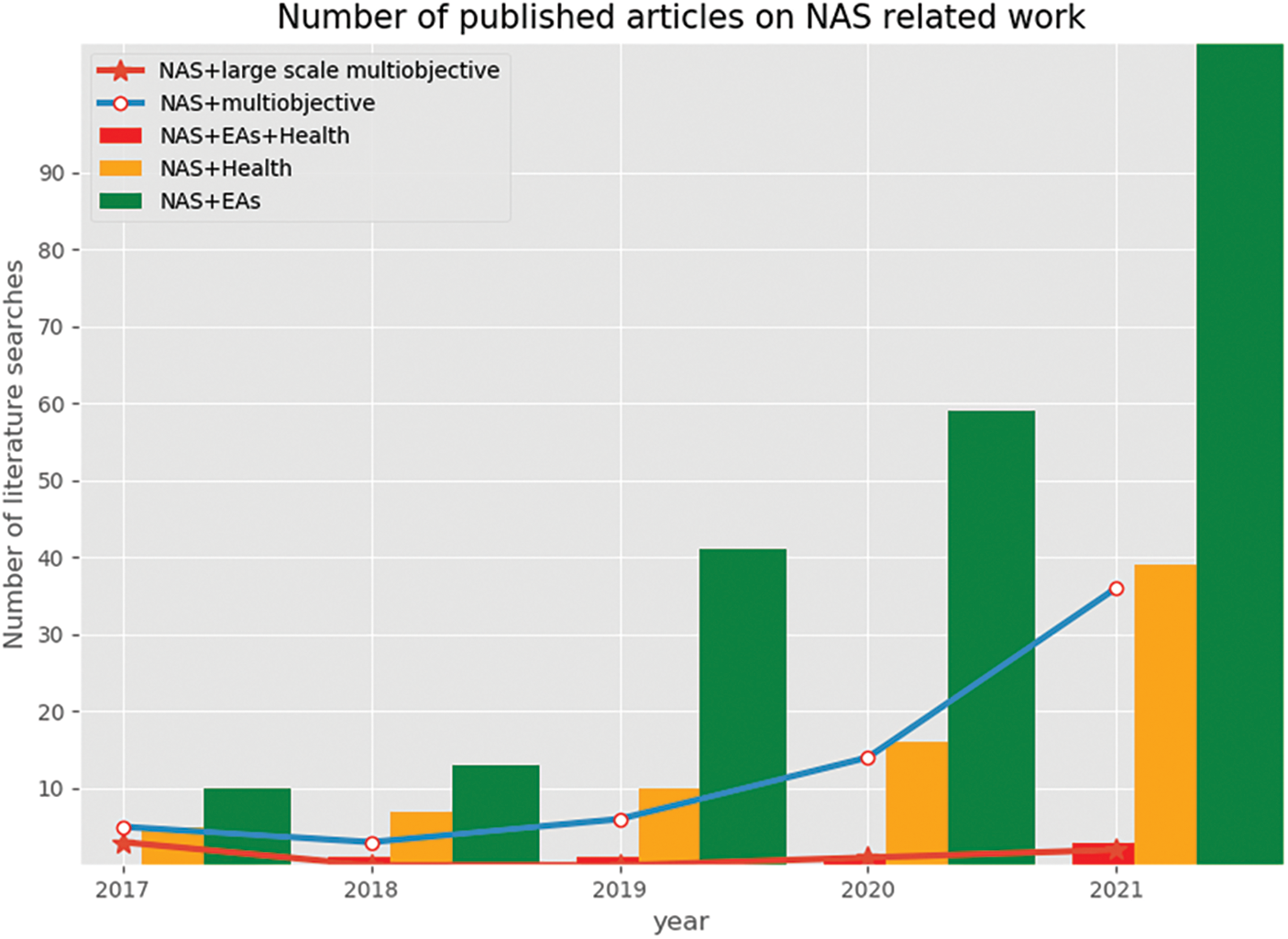

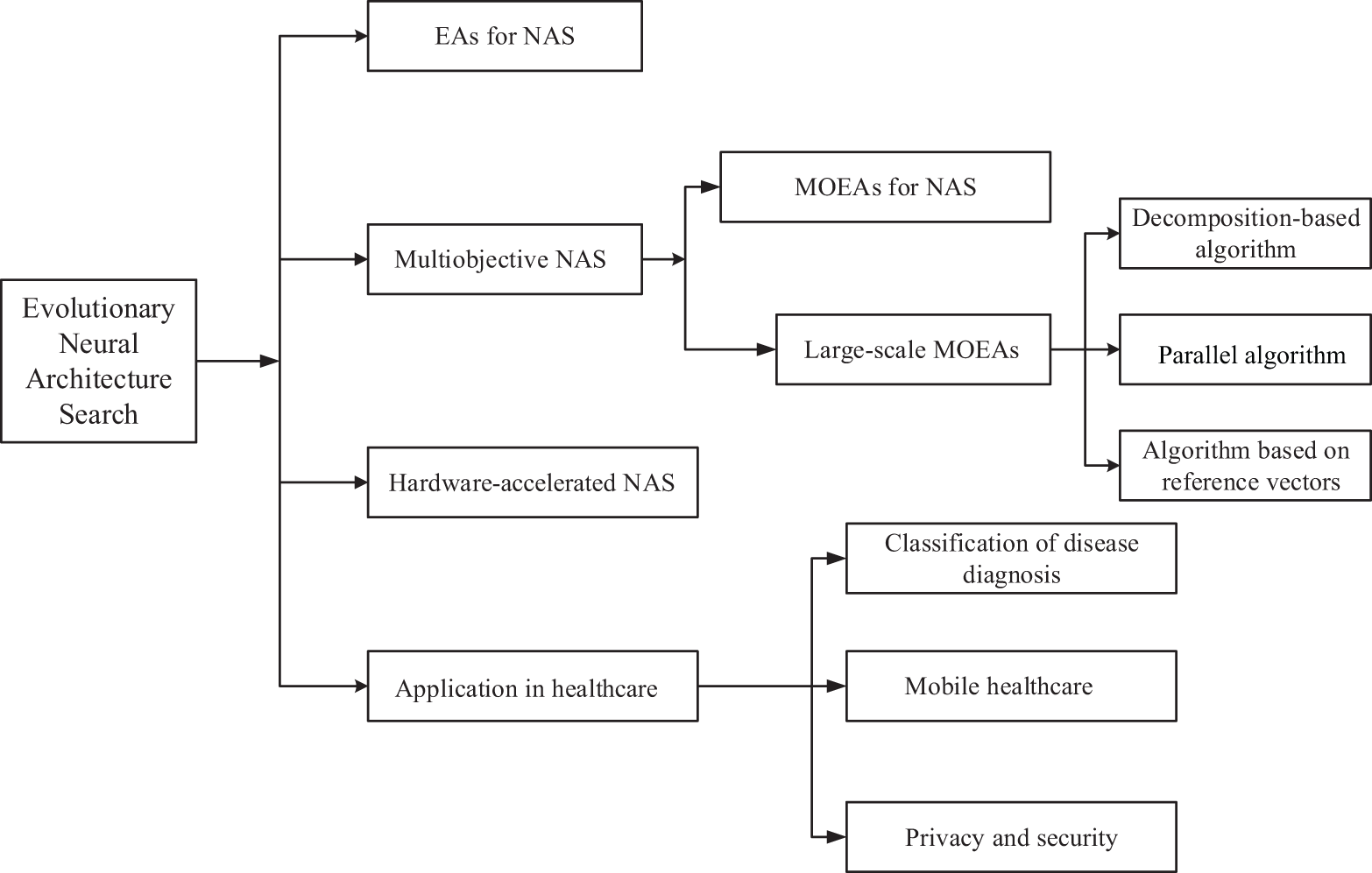

MOEAs can automatically design simple and efficient network architectures [25] as well as effectively discover and explore optimal neural architectures that meet various performance criteria. The work by [9] demonstrated that large-scale evolution could achieve high accuracy from simple initial conditions on CIFAR-10 and CIFAR-100 for image classification. Since then, extensive studies have been conducted on the use of EAs for NAS. As shown in Fig. 2, the number of studies on EAs for NAS grew substantially from 2017 to 2021. We used the keywords “NAS+EAs,” “NAS+Health,” “NAS+EAs+health,” “NAS+multiobjective optimization,” and “NAS+large-scale multiobjective” to show the publication status of NAS-related studies in the Web of Science (WOS) database over the past five years. Few papers have focused on EAs for NAS in deep neural networks (DNNs): most existing NAS studies describe how to implement NAS with different search strategies [26,27], and studies on EAs for NAS, including multiobjective and large-scale MOEAs for NAS, remain scarce. Given the survey summary in Fig. 2 and in response to the lack of research in this direction, we develop a research classification for NAS, as shown in Fig. 3. In this study, we analyze the advantages and disadvantages of using EAs to optimize NN architectures and provide detailed guidance for researchers who want to use EAs to optimize NNs. We summarize existing large-scale multiobjective algorithms to provide feasible methods for researchers to improve the design and efficiency of NAS. Moreover, we propose large-scale multiobjective neuroevolution strategies and distributed parallel neuroevolution strategies for medical data processing that address privacy protection and medical data security concerns.

Figure 2: Number of published articles on NAS-related work

Figure 3: Evolutionary neural architecture search

The remainder of this paper is organized as follows. Section 2 presents EAs for NAS. Section 3 explores MOEAs, large-scale multiobjective evolutionary optimization for NAS, and parallel EAs for NAS or hardware-accelerated NAS methods; these novel search methods can greatly reduce manual intervention and achieve automatic search. Section 4 discusses healthcare applications of EA-based NAS and interpretable network architectures. Section 5 proposes an interpretable evolutionary neural architecture search framework based on federated learning (FL-ENAS) for healthcare to address search efficiency and privacy concerns. Section 6 presents future directions to guide readers researching NAS via EAs. Section 7 concludes the paper.

2 Evolutionary Algorithms for NAS

EAs can tackle black-box functions for global optimization with population-based strategies. The evolutionary process usually includes initialization, crossover, mutation, and selection. The optimization of NNs using EAs can be traced back 30 years to a technique called neuroevolution. Angeline et al. [28] proposed optimizing structures and weights simultaneously by applying genetic strategies in 1994. In 1997, Yao et al. [29] proposed an evolutionary programming neural network (EPNet) to construct the architecture of ANNs; in addition to the neural architecture, the weights were also optimized. These early NNs were very small and therefore easy to optimize, but NNs later became so large that they could not be optimized directly. At the beginning of the 21st century, research on encoding methods had a breakthrough. Indirect encoding was introduced to automatically design effective topological structures in 2002 [16,30]. This method, neuroevolution of augmenting topologies (NEAT), is one of the earliest and most well-known examples of using EAs for NN optimization. NEAT modifies not only the weights and biases but also the structure of the whole network. The NASNet [12] model designed two different block architectures: normal cells and reduction cells. The strength of NASNet lay in automatically generating CNNs and applying transfer learning to large-scale image classification and object detection. Cantu-Paz et al. [31] showed in 2005 that EAs for NNs could attain more accurate classification than hand-designed neural architectures. Since then, numerous researchers have optimized neural architectures by changing the encoding methods, for example, the indirect encoding with innovation proposed by Stanley et al. [32]. EAs that search DNNs for image classification could obtain the optimal individual from the population by applying mutation and crossover operators [33]. A memetic algorithm (MA) could further improve search efficiency.
Through a novel encoding method, the study by [9] used an EA to search for deep neural architectures, demonstrating that EAs could reach high-accuracy architectures from a basic starting point on the CIFAR-10 and CIFAR-100 data sets. This work garnered considerable attention. Real et al. [34] proposed AmoebaNet with aging evolution, a variant of tournament selection. During evolution, younger models, which help exploration, were preferred; compared with RL and random search, EAs were faster and produced better architectures [35]. EAs could improve optimization efficiency by setting suitable hyperparameters, including mutation rate, population size, and selection strategy [36].
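The aging evolution used by AmoebaNet [34] can be sketched in Python as below. The structure follows the general description above (tournament selection of a parent, mutation to produce a child, and removal of the oldest rather than the worst individual), but the bit-mask "architectures", the `sum` fitness, and all parameter values are hypothetical placeholders for real architectures and validation accuracy.

```python
import collections
import random


def aging_evolution(sample_arch, mutate, fitness, population_size=20,
                    sample_size=5, cycles=200, seed=0):
    """Aging evolution: tournament selection picks parents, but each cycle
    the OLDEST individual is removed instead of the worst one."""
    rng = random.Random(seed)
    population = collections.deque()   # ordered from oldest to youngest
    history = []                       # every model ever evaluated
    while len(population) < population_size:
        arch = sample_arch(rng)
        model = (arch, fitness(arch))
        population.append(model)
        history.append(model)
    while len(history) < cycles:
        # Tournament: sample a subset and take its fittest member as parent.
        candidates = rng.sample(list(population), sample_size)
        parent = max(candidates, key=lambda m: m[1])
        child_arch = mutate(parent[0], rng)
        child = (child_arch, fitness(child_arch))
        population.append(child)       # the youngest joins on the right
        history.append(child)
        population.popleft()           # aging: discard the oldest model
    return max(history, key=lambda m: m[1])


# Toy usage (hypothetical): architectures are 8-bit masks, fitness counts ones.
def random_mask(rng):
    return [rng.randint(0, 1) for _ in range(8)]


def flip_one_bit(arch, rng):
    child = list(arch)                 # copy so the parent is not modified
    child[rng.randrange(len(child))] ^= 1
    return child


if __name__ == "__main__":
    best_arch, best_fit = aging_evolution(random_mask, flip_one_bit, sum)
    print(best_arch, best_fit)
```

Removing by age rather than by fitness means that even a strong architecture survives only as long as its descendants keep performing well, which favors the younger models mentioned above.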

2.1 Evolutionary NAS Framework

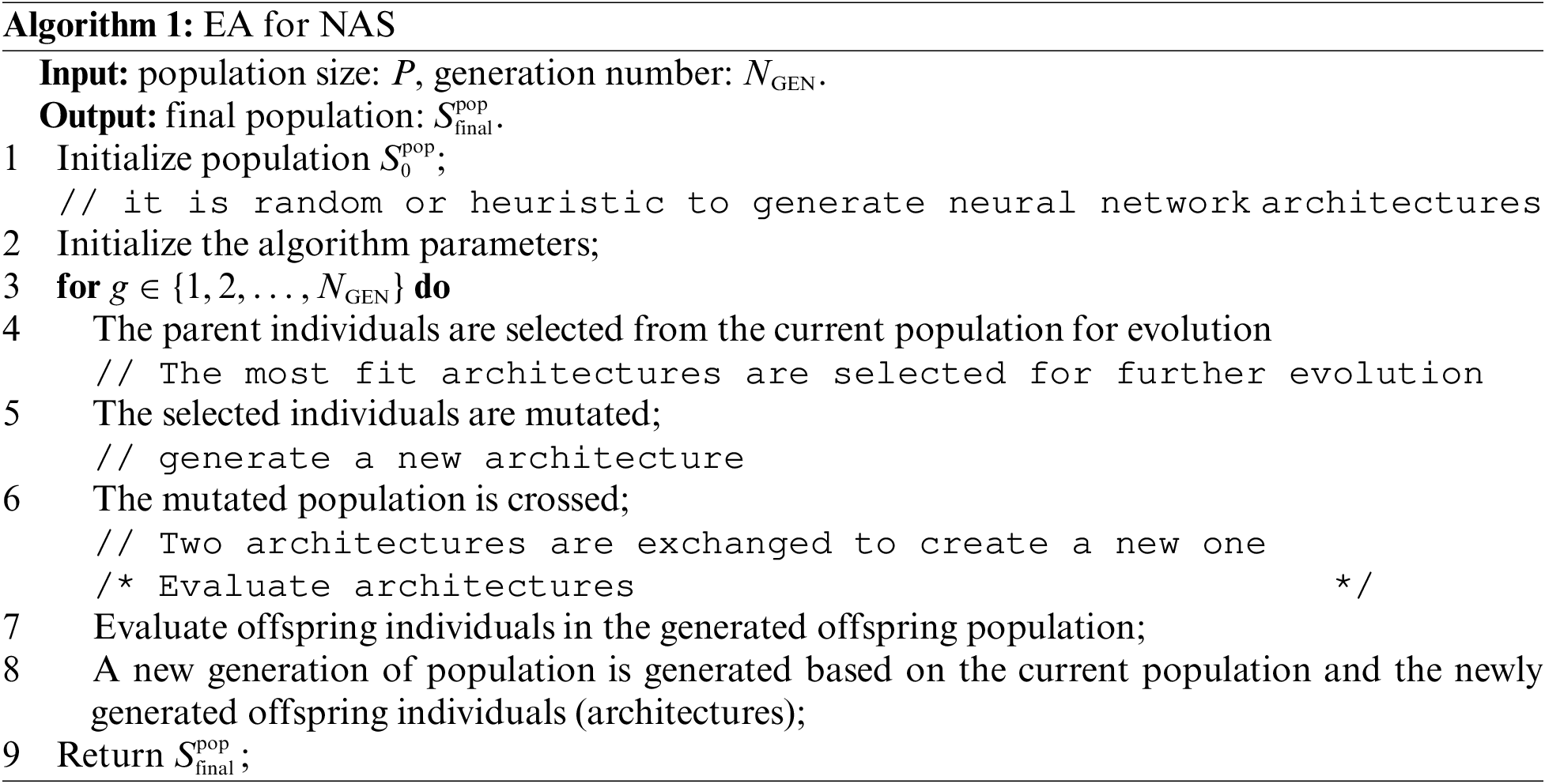

RL is gradient-based and can become stuck in local optima [37]. In contrast, using EAs to optimize NNs has been an active research area for many years; it has proved to be an effective technique and continues to see innovation [38]. Most EAs follow a similar procedure: they first randomly sample a population, then select parents and generate offspring via evolutionary operators such as crossover and mutation, until the final population is attained. The EA process for NAS is shown in Algorithm 1. First, the necessary parameters are input, such as population size P, evolution generation number
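Because Algorithm 1 is not reproduced in full here, the following Python sketch shows only a generic EA loop of the kind described above: random initialization, tournament parent selection, one-point crossover, per-gene mutation, and elitist survivor selection. The layer-choice encoding, the toy `fitness` function (a stand-in for validation accuracy), and all parameter values are hypothetical assumptions, not the settings of Algorithm 1.

```python
import random

# Hypothetical encoding: a genome is a fixed-length list of layer choices.
CHOICES = ["conv3x3", "conv5x5", "maxpool", "identity"]
GENOME_LEN = 6
# Toy per-layer scores standing in for a trained model's validation accuracy.
LAYER_SCORE = {"conv3x3": 1.0, "conv5x5": 0.8, "maxpool": -0.2, "identity": 0.0}


def fitness(genome):
    """Hypothetical fitness; real NAS would train and validate each network."""
    return sum(LAYER_SCORE[g] for g in genome)


def tournament(pop, rng, k=3):
    """Parent selection: the best of k randomly sampled individuals."""
    return max(rng.sample(pop, k), key=fitness)


def crossover(a, b, rng):
    """One-point crossover: prefix of one parent, suffix of the other."""
    cut = rng.randint(1, GENOME_LEN - 1)
    return a[:cut] + b[cut:]


def mutate(genome, rng, rate=0.1):
    """Resample each gene with a small probability."""
    return [rng.choice(CHOICES) if rng.random() < rate else g for g in genome]


def evolve(pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    # Initialization: random genomes.
    pop = [[rng.choice(CHOICES) for _ in range(GENOME_LEN)] for _ in range(pop_size)]
    for _ in range(generations):
        offspring = [mutate(crossover(tournament(pop, rng), tournament(pop, rng), rng), rng)
                     for _ in range(pop_size)]
        # Elitist survivor selection: keep the best pop_size of parents + offspring.
        pop = sorted(pop + offspring, key=fitness, reverse=True)[:pop_size]
    return max(pop, key=fitness)


if __name__ == "__main__":
    best = evolve()
    print(best, fitness(best))
```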

In EAs, crossover and mutation are the most important operations for automatically generating new NN architectures. Crossover can dramatically alter an NN architecture by exchanging parts of the architectures of a group of parents. Mutation changes an NN architecture slightly and with low probability. EAs can use flexible encodings for NAS, and the encoding space can represent various complex structures [40,41]. EAs guide the exploration and determine the search direction through simple genetic operators and the survival of the fittest in natural selection. Because EAs use a population to organize the search, they can explore multiple regions of the solution space concurrently, which makes them particularly suitable for large-scale parallelism. In the evolutionary algorithm (EA) for NAS approach, the critical factors are designing an effective EA and a suitable encoding strategy. At its core, NAS addresses a combinatorial optimization problem, and variants of EAs can be effectively employed to solve combinatorial problems. Therefore, in theory, all EA variants could be used as NAS methods. The effect of EAs on NN architecture optimization is particularly pronounced [41]. For example, the multiobjective optimization algorithm NSGA-II [42] was used to solve the NAS problem [43]. Building on the basic GA, NSGA-II stratifies individuals into fronts according to Pareto dominance and ranks individuals within a front by crowding distance. MOEAs could simultaneously optimize network architecture and model accuracy for complex medical image segmentation [25]. Although MOEAs could automatically design simple and efficient network architectures, the flexibility of the architectures and the convergence of the EAs still need to be improved. Therefore, improving the search efficiency of EAs remains the primary challenge in EA-based NAS methods. We address this problem in Section 3.
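The two ranking ingredients of NSGA-II mentioned above can be sketched in Python: fast non-dominated sorting, which assigns individuals to Pareto fronts, and crowding distance, which prefers less crowded individuals within a front. Both objectives are minimized; the example candidates, read as (validation error, parameters in millions), are invented for illustration, and the full NSGA-II loop with selection and variation is omitted.

```python
def dominates(a, b):
    """a dominates b (minimization): no worse everywhere, better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points):
    """Return fronts as lists of indices; front 0 is the Pareto front."""
    n = len(points)
    dominated = [[] for _ in range(n)]   # solutions that p dominates
    dom_count = [0] * n                  # number of solutions dominating p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(points[p], points[q]):
                dominated[p].append(q)
            elif dominates(points[q], points[p]):
                dom_count[p] += 1
        if dom_count[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        nxt = []
        for p in fronts[i]:
            for q in dominated[p]:
                dom_count[q] -= 1
                if dom_count[q] == 0:    # q is only dominated by earlier fronts
                    nxt.append(q)
        i += 1
        fronts.append(nxt)
    return fronts[:-1]                   # drop the trailing empty front


def crowding_distance(points, front):
    """Sum of normalized neighbor gaps per objective; boundary points get inf."""
    dist = {i: 0.0 for i in front}
    for k in range(len(points[front[0]])):
        ordered = sorted(front, key=lambda i: points[i][k])
        lo, hi = points[ordered[0]][k], points[ordered[-1]][k]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")
        if hi == lo:
            continue
        for j in range(1, len(ordered) - 1):
            gap = points[ordered[j + 1]][k] - points[ordered[j - 1]][k]
            dist[ordered[j]] += gap / (hi - lo)
    return dist


if __name__ == "__main__":
    points = [(0.05, 5.0), (0.08, 1.0), (0.06, 3.0), (0.09, 4.0), (0.07, 6.0)]
    fronts = non_dominated_sort(points)
    print(fronts)                                 # -> [[0, 1, 2], [3, 4]]
    print(crowding_distance(points, fronts[0]))
```

In this example the first three candidates trade error against size and form the Pareto front; within it, the middle candidate (0.06, 3.0) receives a finite crowding distance of 2.0, while the two extremes receive infinity and are always kept.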

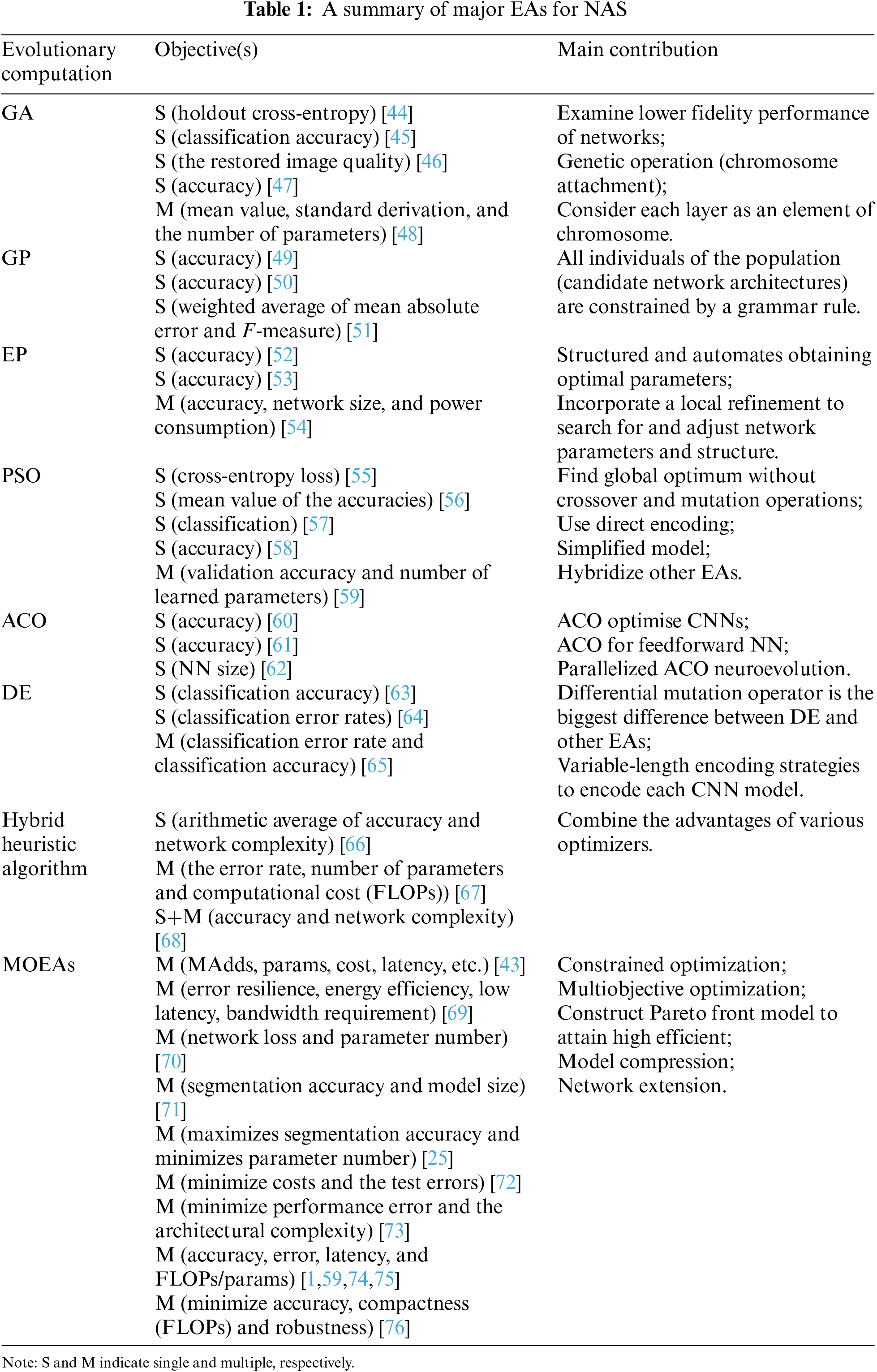

In Table 1, we summarize major EAs for NAS, their representative algorithms and main contributions, and the number of objectives (single or multiple) of these algorithms, as a guide to how EAs can generate the best NN architecture. NAS based on GA [77,78] could use a binary string to represent the architecture, whereas NAS based on genetic programming (GP) [49,50] used a tree-based representation with nodes representing operations. NAS based on evolutionary programming (EP) [53] used continuous or discrete representations. NAS based on particle swarm optimization (PSO) [40,55,56] used a swarm of particles to represent neural network architectures. NAS based on ant colony optimization (ACO) [62,79] used pheromone trails to guide the search. Moreover, large-scale MOEAs could avoid overfitting on a single objective, handle a substantial number of decision variables and constraints, and automatically generate the best NN architecture [80–82]. The most representative algorithm was the GA for NAS. GA could be employed to train CNNs. Because each parameter was treated as a chromosome element in the traditional encoding scheme, the challenge was how to map a network to a chromosome [77]. However, this encoding scheme made the chromosome very large, leading to problems such as genetic operator failure in large-scale problems. An alternative is to treat each convolutional layer (or other layer) as an element of the chromosome, with all the connection weights of that layer contained in the element. This encoding scheme significantly shortened the chromosome, yielding faster operation, faster convergence, and higher accuracy. Through crossover and mutation, good individuals were generated and selected and bad ones were eliminated; thus, the optimal solution could be found through evolution [83].
Greedy exploration based on experience and transfer learning could accelerate GA in optimizing both hyperparameters and NNs. Moreover, genes could represent any part of an NN, such as the block type, loss function, or learning strategy [46]. A gene map could encode a deep convolutional neural network (DCNN) [47]. Evolving deep convolutional neural networks (EvoCNN) used a flexible encoding to optimize both the DCNN architecture and the initial values of the connection weights [48]. EAs use the chromosome information encoded in each solution, together with mutation and crossover, to simulate the evolution of populations [84].
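The contrast between the weight-level and layer-level encodings described above can be made concrete with a short Python sketch. The layer vocabulary, the 3 × 3 kernel assumption, and the uniform crossover are hypothetical choices for illustration rather than the schemes of [77] or [83]; the point is the chromosome length.

```python
import random

# Hypothetical layer vocabulary: one gene = (layer type, its setting).
LAYER_TYPES = {"conv": [16, 32, 64], "pool": ["max", "avg"], "fc": [64, 128]}


def weight_level_length(channels, kernel=3):
    """Chromosome length if every conv weight were its own gene
    (biases ignored), for a plain stack of kernel x kernel convolutions."""
    return sum(kernel * kernel * c_in * c_out
               for c_in, c_out in zip(channels, channels[1:]))


def random_gene(rng):
    kind = rng.choice(sorted(LAYER_TYPES))
    return (kind, rng.choice(LAYER_TYPES[kind]))


def random_chromosome(n_layers, rng):
    """Layer-level encoding: chromosome length equals the number of layers."""
    return [random_gene(rng) for _ in range(n_layers)]


def layer_crossover(a, b, rng):
    """Uniform crossover at layer granularity: each layer comes from one parent."""
    return [ga if rng.random() < 0.5 else gb for ga, gb in zip(a, b)]


def layer_mutation(chrom, rng, rate=0.2):
    """Replace each layer gene with a fresh random one with probability `rate`."""
    return [random_gene(rng) if rng.random() < rate else g for g in chrom]


if __name__ == "__main__":
    rng = random.Random(0)
    # Two 3x3 conv layers (3 -> 16 -> 32 channels): 5040 weight genes vs. 2 layer genes.
    print(weight_level_length([3, 16, 32]), len(random_chromosome(2, rng)))
    child = layer_mutation(layer_crossover(random_chromosome(4, rng),
                                           random_chromosome(4, rng), rng), rng)
    print(child)
```

Even this tiny two-layer network needs 5040 genes at the weight level, whereas the layer-level chromosome has one gene per layer, which is why genetic operators remain effective as the network grows.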

GP based on grammar-guided constraints can generate population individuals (i.e., candidate architectures) according to defined grammar rules. Suganuma et al. [49] presented a novel method that encodes the CNN architecture based on Cartesian genetic programming (CGP), using highly functional modules such as convolutional blocks as node functions in CGP. The CNN architecture and connections were optimized by CGP, yielding a more competitive architecture. Improved gene expression programming optimized the NN structure, weights, and thresholds, and its efficiency could be improved by adjusting the fitness function and genetic operators [50]. Hybrid meta-evolutionary programming combined with ANNs could provide a structured and automatic way to optimize NNs [53]. EP is often combined with other algorithms for NAS, but it has not been widely used in recent years and needs further exploration. PSO originates from simulating the foraging behavior of bird flocks. Its advantages are few adjustable parameters, simple operation, and very fast convergence. Unlike GAs, it has no crossover or mutation operations; it finds the global optimum by following the best candidates found so far. Wang et al. [56] used PSO to optimize CNNs and automatically explore suitable structures, combining an Internet protocol (IP) address-type encoding with PSO to evolve DCNNs. PSO could optimize the internal parameters of hidden layers [40]. For DCNNs performing image classification, a new direct encoding scheme and velocity operator were designed for PSO, allowing CNNs to be optimized with fast-converging PSO [55]. PSO could also be used to simplify models while maintaining performance: multiobjective NN simplification could be achieved via NN pruning and tuning, yielding a simplified NN with high accuracy.
PSO could also be combined with other algorithms to facilitate the information processing of NNs [85]. Differential evolution (DE) is a heuristic, stochastic search algorithm based on differences between individuals. The differential mutation operator is the biggest difference between DE and other EAs. Adaptive DE combined with ANNs could be used for classification problems. Mininno et al. [86] proposed the compact differential evolution (cDE) algorithm, which has small hardware demands and is suitable for practical applications with high-dimensional search spaces and limited cost. However, in constrained environments, DE has some drawbacks, including slow convergence and premature convergence [87,88].
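The differential mutation operator that distinguishes DE can be shown with a minimal Python sketch of the classic DE/rand/1/bin scheme, in which a mutant is formed as x_r1 + F * (x_r2 - x_r3) and then mixed with the target vector by binomial crossover. The sphere test function, bounds, and parameter values are illustrative assumptions; in NAS, the vector would instead hold continuous encodings of hyperparameters or architecture choices.

```python
import random


def de_rand_1_bin(pop, i, F=0.5, CR=0.9, rng=random):
    """Build a trial vector for target pop[i] with DE/rand/1/bin."""
    d = len(pop[i])
    r1, r2, r3 = rng.sample([j for j in range(len(pop)) if j != i], 3)
    # Differential mutation: a base vector plus a scaled difference of two others.
    mutant = [pop[r1][k] + F * (pop[r2][k] - pop[r3][k]) for k in range(d)]
    # Binomial crossover; j_rand guarantees at least one mutant component.
    j_rand = rng.randrange(d)
    return [mutant[k] if (rng.random() < CR or k == j_rand) else pop[i][k]
            for k in range(d)]


def differential_evolution(f, dim, bounds, pop_size=20, gens=100, seed=0):
    """Minimize f; individuals are replaced in place as soon as a trial wins."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(gens):
        for i in range(pop_size):
            trial = [min(max(x, lo), hi) for x in de_rand_1_bin(pop, i, rng=rng)]
            if f(trial) <= f(pop[i]):      # greedy one-to-one selection
                pop[i] = trial
    return min(pop, key=f)


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    best = differential_evolution(sphere, dim=3, bounds=(-5.0, 5.0))
    print([round(v, 4) for v in best], sphere(best))
```

Because the step size comes from differences between current population members, mutations shrink automatically as the population converges; the same property can also cause the premature convergence mentioned above when diversity is lost early.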

In addition to searching the large search space of NAS efficiently, EA-based NAS is well suited to complex tasks that require many parameters to be adjusted [74,75], because it can consider combinations of operations and structures that are difficult for humans to devise. EA-based NAS has several common advantages. First, using multiple populations can improve solution diversity and efficiency [89,90]. Second, EA-based NAS is highly scalable and can explore large search spaces that are difficult to handle manually. Lastly, beyond this flexibility, multiobjective EA-based NAS can be customized to meet the specific requirements of particular applications. Moreover, GA-based, GP-based, and EP-based NAS can be parallelized [91], which means that they can be executed simultaneously on multiple CPUs or GPUs, making search-space exploration faster and more efficient. GA has been one of the most effective ways to evolve deep neural networks [92,93]. Xie et al. [11] used a traditional GA to automatically generate CNNs, proposed a novel encoding scheme based on a fixed-length binary string, and generated a set of random individuals to initialize the GA. Sun et al. [94] used GA to evolve both the neural architecture and the initial connection weights of deep CNNs for image classification.
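A fixed-length binary encoding in the spirit of Xie et al. [11] can be sketched as follows, assuming one stage with K ordered nodes in which one bit per node pair (i, j), i < j, records whether node i feeds node j, giving K(K - 1)/2 bits. The bit ordering and helper names are assumptions for illustration; further details of the original scheme are not reproduced here.

```python
import random


def num_bits(k):
    """Bits needed to encode all forward connections among k ordered nodes."""
    return k * (k - 1) // 2


def random_individual(k, rng):
    """A random fixed-length binary string, as used to initialize the population."""
    return "".join(rng.choice("01") for _ in range(num_bits(k)))


def decode_stage(bits, k):
    """Turn a bit string into directed edges (i -> j) with i < j.
    Bits are read target node by target node: (0,1), (0,2), (1,2), (0,3), ..."""
    if len(bits) != num_bits(k):
        raise ValueError("bit string length must be k*(k-1)/2")
    edges, pos = [], 0
    for j in range(1, k):          # target node
        for i in range(j):         # every earlier source node
            if bits[pos] == "1":
                edges.append((i, j))
            pos += 1
    return edges


if __name__ == "__main__":
    print(decode_stage("101001", 4))   # -> [(0, 1), (1, 2), (2, 3)]
    print(random_individual(4, random.Random(0)))
```

Because every string of the right length decodes to a valid directed acyclic graph, standard bit-flip mutation and one-point crossover can be applied without any repair step; with K = 4 nodes there are 2^6 = 64 possible connection patterns per stage.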

However, one limitation of EA-based NAS is that it can be computationally expensive, especially for large search spaces or complex objective functions. It also has other disadvantages, such as dependence on hyperparameters and limited exploration. Population initialization is crucial to metaheuristic algorithms such as PSO and DE because it affects diversity and convergence. Quasirandom sequences were more effective than random distributions for initializing the population [95]. Furthermore, researchers currently regard swarm convergence as a high-priority challenge in EAs [87] and are exploring various techniques to improve it, such as adaptive swarm sizes, incorporating diversity into the swarm, and advanced communication and coordination strategies. To solve the convergence efficiency problem, the work by [96] proposed two DE variants. Search efficiency can be significantly affected by the choice of EA technique and the parameters used in the algorithm. Additionally, the fitness function may not capture all aspects of the desired performance, leading to suboptimal architectures. Multiobjective or large-scale multiobjective algorithms [73] can address these problems: optimizing multiple objectives simultaneously, such as accuracy and computational efficiency, yields architectures that trade off between different objectives. Researchers have also proposed methods such as surrogate models to accelerate the search and reduce computational costs.
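As one example of quasirandom initialization, the sketch below builds an initial population from the Halton sequence, which takes the radical inverse of the index in a different prime base for each dimension. Which low-discrepancy sequence [95] used is not stated here, so treat Halton as an illustrative choice; the bounds in the usage lines are hypothetical hyperparameter ranges.

```python
import random

PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29)


def radical_inverse(n, base):
    """Mirror the base-`base` digits of n about the radix point (van der Corput)."""
    q, denom = 0.0, 1.0
    while n > 0:
        n, digit = divmod(n, base)
        denom *= base
        q += digit / denom
    return q


def halton_population(size, bounds):
    """Quasirandom population: individual i uses Halton point i + 1, scaled to bounds."""
    if len(bounds) > len(PRIMES):
        raise ValueError("add more prime bases for higher dimensions")
    return [[lo + (hi - lo) * radical_inverse(i + 1, PRIMES[d])
             for d, (lo, hi) in enumerate(bounds)]
            for i in range(size)]


def random_population(size, bounds, seed=0):
    """Pseudorandom baseline for comparison."""
    rng = random.Random(seed)
    return [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(size)]


if __name__ == "__main__":
    # Hypothetical ranges: learning rate in [0, 0.1], dropout in [0, 0.5].
    for ind in halton_population(4, [(0.0, 0.1), (0.0, 0.5)]):
        print([round(v, 4) for v in ind])
```

Successive Halton points fill gaps left by earlier ones (0.5, 0.25, 0.75, ... in base 2), so even a small population covers the search box more evenly than the same number of pseudorandom draws.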

Interpretability of the resulting architectures is also critical. Many EA-based NAS methods produce complex architectures that are difficult to interpret or understand, which can be a disadvantage. However, GP [84] added interpretability to NAS. GP used a tree-like structure of interconnected nodes to generate neural architectures through an evolutionary approach. The resulting architecture could be visualized and interpreted easily, allowing researchers to identify the critical components that contribute to its performance and to understand its functionality. Researchers have also simplified architectures using network pruning. To compare EA-based NAS algorithms, standardized evaluation metrics and benchmarks are imperative, and evaluating EA-based NAS algorithms is another significant area of research. For image recognition tasks, commonly used benchmarks include CIFAR-10 and ImageNet, and floating-point operations (FLOPs) are commonly used to measure computational efficiency [1,43]. The abbreviations “FLOPs” and “FLOPS” have different meanings. In this study, the complexity of neural networks is expressed in terms of FLOPs, the number of floating-point operations (that is, calculations) required, which can be used to measure the complexity of algorithms or models [43,74,97]. By contrast, FLOPS, an acronym for floating-point operations per second, is a measure of computer performance, that is, hardware speed [98,99].
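To make the FLOPs/FLOPS distinction concrete, the sketch below counts the FLOPs of a standard 2-D convolution and converts a FLOPs count into an idealized runtime given a device's FLOPS. Conventions differ between papers (some report multiply-accumulate operations, MACs, as FLOPs, while others count each MAC as two operations), so the factor of two is exposed as a parameter; the layer sizes in the example are hypothetical.

```python
def conv2d_flops(h_out, w_out, c_in, c_out, k, mac_as_two=True, bias=True):
    """FLOPs (a count, not a rate) of a k x k convolution without groups."""
    macs = h_out * w_out * c_out * c_in * k * k   # multiply-accumulates
    flops = 2 * macs if mac_as_two else macs
    if bias:
        flops += h_out * w_out * c_out            # one add per output element
    return flops


def ideal_runtime_seconds(flops, device_flops_per_second):
    """FLOPs / FLOPS: an idealized lower bound that ignores memory traffic."""
    return flops / device_flops_per_second


if __name__ == "__main__":
    # 3x3 conv, 32 -> 64 channels, 56x56 output feature map.
    macs = conv2d_flops(56, 56, 32, 64, 3, mac_as_two=False, bias=False)
    print(macs)                                   # 57802752
    print(ideal_runtime_seconds(2 * macs, 1e12))  # seconds on a 1 TFLOPS device
```

The first quantity characterizes the model and is what NAS objectives usually minimize; the second depends on the hardware, which is why hardware-aware NAS measures latency on the target device rather than inferring it from FLOPs alone.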

Some researchers have used integrated algorithms combining tabu search, simulated annealing (SA), GA, and back propagation (BP) to optimize the network architecture and weights, optimizing the connections between layers to control the network size and thereby constructing a new way to find the optimal network architecture [66,68]. In the work by [100], five metaheuristic algorithms (GA, whale optimization algorithm, multiverse optimizer, satin bowerbird optimization, and life choice-based optimization algorithm) were used to fine-tune the hyperparameters, biases, and weights of a CNN for detecting abnormalities in breast cancer images. Although EAs have been used for NNs for many years and have solved many practical problems, these earlier methods cannot cope with complicated, large-scale NAS or the optimization of numerous connection weights. Improving search efficiency and trading off the multiple requirements (objectives) of real-world applications pose a significant challenge for EAs in NAS. In Section 3, we introduce multiobjective and hardware-accelerated NAS methods to address these problems.

With the continuous development of MOEAs, their scalability has attracted increasing attention. In general, the scalability of an algorithm characterizes how its performance (such as running time) changes with problem size. In multiobjective optimization, problem size grows in two main respects: the number of objective functions and the number of decision variables. Accordingly, multiobjective NAS mainly includes MOEAs for NAS and large-scale MOEAs for NAS. Moreover, low efficiency and search constraints pose significant challenges when searching NNs, and new methods have emerged to overcome these difficulties. Federated learning (FL) [101], large-scale MOEAs [80], hardware-accelerated NAS methods [81,82], and fully automatic search are some efficient methods used to address these problems. In the work of [72], multiobjective evolution was used for NAS in FL. Some studies have discussed fully automating the search for the best neural architecture (hyperparameters) [6,102]. Complete automation of the search could be achieved with large-scale MOEAs [19] and hardware-accelerated methods [81,82].

The primary goal of NAS is to maximize accuracy, so researchers have often focused on this single objective. In real scenarios, however, other properties matter as well, such as computational complexity, energy consumption, model size, and inference time. When NNs must meet robustness requirements, robustness should also be considered during the search. Therefore, NAS can be treated as a multiobjective optimization problem [103]. For example, researchers might be interested in both the architecture level and the weight level [43]; they could also consider hardware awareness and hardware diversity as objectives [104,105]. Multiobjective optimization may need to restrict the size of the neural architecture while attaining high accuracy, which calls for lightweight NNs [103]. Moreover, NN model compression [106] can satisfy budget constraints. Table 1 shows some major MOEAs for NAS.

One challenge of multiobjective optimization is that it can be difficult to find a single solution that optimizes all objectives, so Pareto optimality [42] is considered instead. Some researchers use aggregation to transform multiple objectives into a single objective via weights. However, the weights are difficult to determine and require trial-and-error experiments. To address this issue, MOEAs generate a population of solutions by considering multiple objectives simultaneously in a self-organized manner, resulting in diverse solutions for conflicting objectives. Traditionally, there are three strategies for MOEAs: decomposition [107], Pareto dominance [42], and indicators [108,109]. One of the most popular is decomposition [107], in which a multiobjective problem is divided into a series of aggregated single-objective problems. Surrogate models can also be used to improve the efficiency of NAS. To complete various competitive tasks, Lu et al. [43] proposed surrogate models at two levels: the architecture level and the weight level. In NAS, a surrogate model approximates the evaluation of the original model, which alleviates the high computational cost. In the work of [99], network expansion was applied to construct efficient, high-accuracy DCNNs: the DCNNs can be optimized under a small budget to reduce computational complexity and then expanded for large-scale problems.
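The difference between weighted-sum aggregation and the Tchebycheff decomposition commonly used in MOEA/D [107] can be seen in a small Python example. The three candidates, read as (normalized error, normalized model size), are invented and lie on a non-convex front, and the reference point z* = (0, 0) is assumed to be the ideal point. Only the scalarization of each subproblem is shown, not the full MOEA/D loop with neighborhood-based mating and replacement.

```python
def weighted_sum(f, w):
    """Aggregate objectives as sum_i w_i * f_i (minimized)."""
    return sum(wi * fi for wi, fi in zip(w, f))


def tchebycheff(f, w, z_star):
    """Tchebycheff aggregation: max_i w_i * |f_i - z*_i| (minimized)."""
    return max(wi * abs(fi - zi) for wi, fi, zi in zip(w, f, z_star))


def uniform_weights(n):
    """n evenly spaced weight vectors for two objectives, one per subproblem."""
    return [(i / (n - 1), 1 - i / (n - 1)) for i in range(n)]


def solve_subproblems(candidates, weights, scalarize):
    """For each weight vector, pick the candidate minimizing the scalarized value."""
    return [min(candidates, key=lambda f: scalarize(f, w)) for w in weights]


if __name__ == "__main__":
    cands = [(0.0, 1.0), (1.0, 0.0), (0.6, 0.6)]   # (error, size), normalized
    ws = uniform_weights(11)
    print(set(solve_subproblems(cands, ws, weighted_sum)))
    print(set(solve_subproblems(cands, ws, lambda f, w: tchebycheff(f, w, (0.0, 0.0)))))
```

With the weighted sum, no weight vector selects the middle candidate on this non-convex front, whereas the Tchebycheff subproblem with w = (0.5, 0.5) does; this is one reason decomposition-based MOEAs often prefer the Tchebycheff form.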

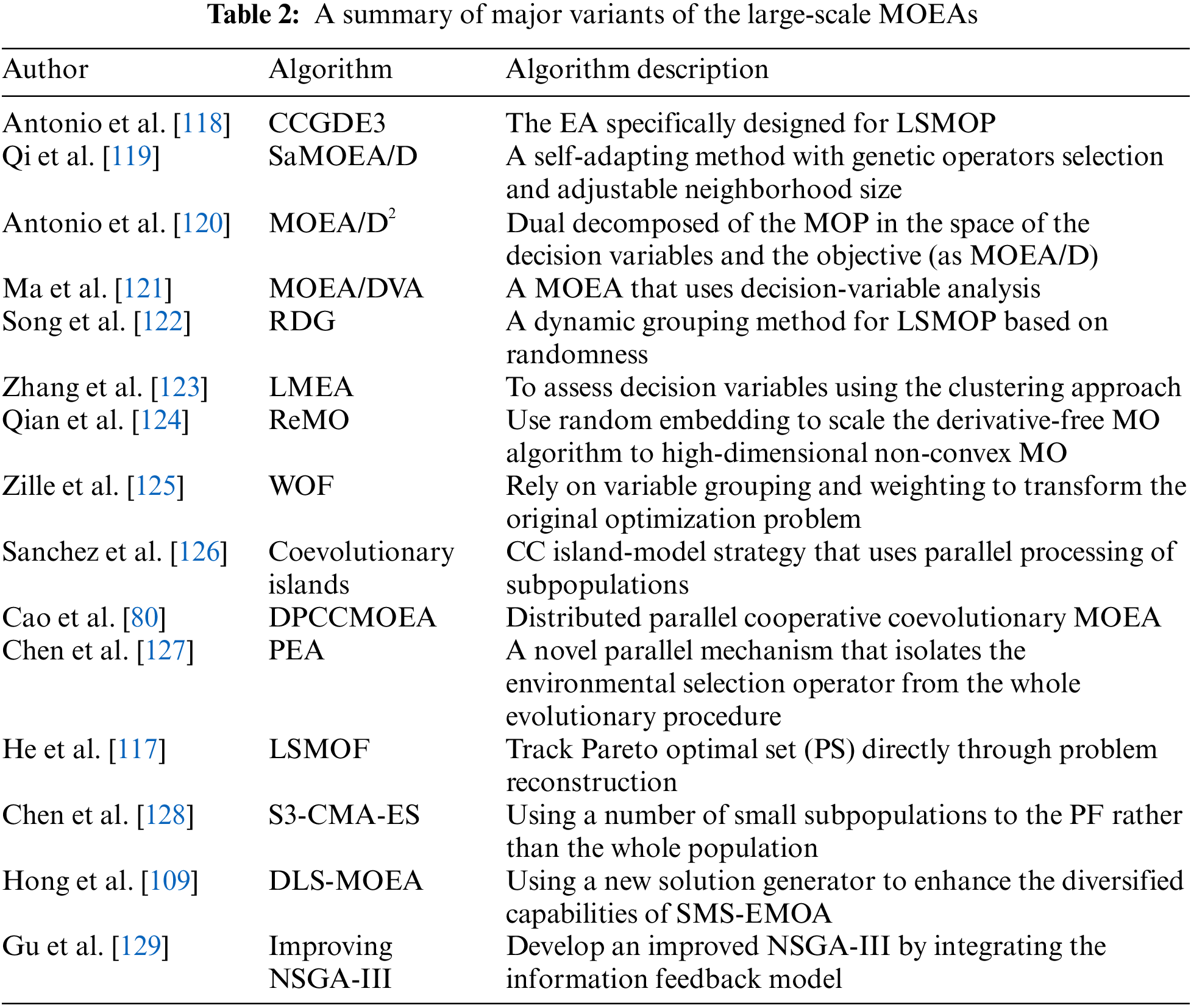

Large-scale MOEAs can fully automate the search and could serve as effective, accelerated NAS methods [110–112]. Inspired by large-scale single-objective optimization algorithms, several proven strategies have been introduced into large-scale MOEAs. As reviewed in the work by [113], large-scale optimization algorithms can be categorized into two types: those that use decomposition and those that do not. Non-decomposition algorithms treat the optimization problem as a whole and enhance performance by designing efficient operators or by integrating them with other optimization techniques. Decomposition-based EAs use a divide-and-conquer strategy to decompose a large-scale optimization problem into a set of small-scale problems, each of which is optimized within a cooperative framework. Several variable grouping methods have been proposed, including fixed grouping, random grouping [114], dynamic grouping [110], differential grouping [115], and delta grouping [116]. Existing large-scale MOEAs fall into these categories [117]: methods based on decision variable analysis, cooperative coevolution frameworks, and problem transformation. These classifications are relative, and an algorithm can be assigned to different classes from different perspectives. Table 2 summarizes major variants of large-scale MOEAs.
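The divide-and-conquer idea behind decomposition-based large-scale EAs can be sketched with random grouping [114] inside a cooperative coevolution loop: variables are shuffled into groups each cycle, and each group is optimized while the other variables are held at the current best ("context") vector. The subgroup optimizer here is a simple greedy Gaussian perturbation search standing in for a real EA, and the single-objective sphere function and parameter values are assumptions for illustration.

```python
import random


def random_grouping(n_vars, group_size, rng):
    """Shuffle variable indices and cut them into consecutive groups."""
    idx = list(range(n_vars))
    rng.shuffle(idx)
    return [idx[i:i + group_size] for i in range(0, n_vars, group_size)]


def cooperative_coevolution(f, n_vars, group_size=4, cycles=30, trials=20,
                            lo=-5.0, hi=5.0, seed=0):
    """Optimize one variable group at a time against a shared context vector."""
    rng = random.Random(seed)
    context = [rng.uniform(lo, hi) for _ in range(n_vars)]
    best = f(context)
    history = [best]
    for _ in range(cycles):
        # Regrouping every cycle raises the chance that interacting
        # variables end up in the same group at some point.
        for group in random_grouping(n_vars, group_size, rng):
            for _ in range(trials):
                cand = context[:]          # variables outside the group stay fixed
                for j in group:
                    cand[j] = min(max(cand[j] + rng.gauss(0.0, 0.5), lo), hi)
                val = f(cand)
                if val < best:             # greedy acceptance
                    context, best = cand, val
        history.append(best)
    return context, best, history


if __name__ == "__main__":
    sphere = lambda x: sum(v * v for v in x)
    ctx, best, hist = cooperative_coevolution(sphere, n_vars=12)
    print(round(hist[0], 3), "->", round(best, 3))
```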

NAS can be regarded as a large-scale multiobjective optimization problem (LSMOP), in which numerous parameters are set to optimize both accuracy and architectural complexity [73]. A multiobjective optimization method based on competitive decomposition divides the original problem into several subproblems; this approach can address issues such as irregular Pareto fronts and population degeneration. In [111], the authors regarded NAS as a constrained LSMOP whose nondifferentiable, nonconvex, and black-box nature makes EAs a promising solution. Multimodal multiobjective optimization problems (MMOPs) with a large number of variables cannot be handled well by conventional EAs, so a sparse Pareto optimal EA was proposed for large-scale MMOPs. This approach can address MMOPs with up to 500 decision variables and has shown competitive performance for NAS [112]. EAs targeting LSMOPs are effective for NAS and can tackle issues that traditional EAs cannot solve. However, research on LSMOP-based NAS is still at an early stage and needs further study.

3.2.1 Decomposition-Based Algorithm

The cooperative coevolutionary generalized differential evolution (CCGDE3) algorithm proposed by Antonio et al. [118] used a cooperative coevolution framework to solve LSMOPs. It was tested with up to 5000 decision variables, and experiments showed that it scales well as the number of variables grows. However, it was not analyzed on more complex test suites such as WFG or DTLZ. Since Zhang et al. [107] proposed the multiobjective evolutionary algorithm based on decomposition (MOEA/D) in 2007, decomposition-based MOEAs have attracted substantial attention [120]. Qi et al. [119] developed a parameter adaptation strategy that combines neighborhood size selection with genetic operator selection and introduced it into MOEA/D. Antonio et al. [120] studied the scalability of MOEA/D and proposed MOEA/D2, which combines MOEA/D with cooperative coevolution techniques. Ma et al. [121] applied this decomposition strategy to large-scale MOEAs and proposed a multiobjective EA based on decision variable analysis (MOEA/DVA). It considered both the interactions between decision variables and the effect of each decision variable on the optimization objectives. For the former, it determined by sampling whether any two variables interact and placed non-interacting variables into different groups. For the latter, it analyzed the control properties of each variable through sampling. Based on these analyses, the LSMOP was decomposed into several smaller subproblems. This algorithm was tested on up to 200 decision variables. Further studies have built on MOEA/DVA. Song et al. [122] proposed a random dynamic grouping (RDG) strategy that uses decision variable analysis to divide variables for cooperative evolution. To reduce the number of evaluations, RDG used a dynamic grouping strategy based on the MOEA/DVA framework to determine suitable variable groups.
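The sampling-based interaction analysis described above can be approximated with a perturbation test. The sketch below substitutes a differential-grouping-style check for MOEA/DVA's exact procedure: two variables are deemed interacting if the effect of shifting one changes when the other is also shifted, on any objective. The toy problem, step size, and tolerance are assumptions.

```python
from typing import Callable, List, Sequence

ObjFn = Callable[[List[float]], Sequence[float]]  # returns one value per objective


def interacts(f: ObjFn, x: Sequence[float], i: int, j: int,
              delta: float = 1.0, eps: float = 1e-9) -> bool:
    """x_i and x_j interact if the effect of shifting x_i depends on x_j."""
    def shifted(*idx: int) -> Sequence[float]:
        y = list(x)
        for k in idx:
            y[k] += delta
        return f(y)

    base, fi, fj, fij = shifted(), shifted(i), shifted(j), shifted(i, j)
    return any(abs((a_i - a) - (a_ij - a_j)) > eps
               for a, a_i, a_j, a_ij in zip(base, fi, fj, fij))


def group_variables(f: ObjFn, x: Sequence[float]) -> List[List[int]]:
    """Greedy grouping: each ungrouped variable seeds a group and pulls in every
    remaining variable that interacts with that seed."""
    ungrouped = list(range(len(x)))
    groups = []
    while ungrouped:
        seed = ungrouped.pop(0)
        group = [seed] + [j for j in ungrouped if interacts(f, x, seed, j)]
        ungrouped = [j for j in ungrouped if j not in group]
        groups.append(group)
    return groups


if __name__ == "__main__":
    # Toy bi-objective problem: x0 and x1 interact through a product; x2, x3 are separable.
    def toy(v: List[float]) -> Sequence[float]:
        return (v[0] * v[1] + v[2] ** 2, (v[0] - 1.0) ** 2 + v[3])

    print(group_variables(toy, [0.5, 0.5, 0.5, 0.5]))  # [[0, 1], [2], [3]]
```

Each pairwise test costs four evaluations, so grouping n variables can take O(n^2) evaluations. This is the scalability concern raised for analysis-based methods. Only the seed variable is tested against the others, so indirect interactions can be missed.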

Zhang et al. [123] proposed a decision variable clustering-based EA for large-scale many-objective optimization (LMEA), which relies on an analysis of the correlation between variables and their attributes. Because the problem involves different kinds of decision variables, different strategies were used to optimize them separately. Instead of sampling-based strategies, LMEA used clustering to identify the attributes of decision variables: the group with the smaller clustering angle was classified as convergence-related variables, and the group with the larger angle as diversity-related variables. Convergence and diversity variables were then optimized with different strategies. Such problems have high-dimensional decision spaces as well as high-dimensional objective spaces, and they merit further attention. Experimental results showed that these algorithms achieved good results. However, these methods may fail when decision variables are difficult to discriminate. In addition, the decision variable analysis described above requires many fitness evaluations, and this number grows rapidly with the problem scale. Because fitness evaluations in practical problems are often time-consuming, this limits the scalability of this kind of algorithm to some extent.
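The angle-based split between convergence and diversity variables can be illustrated with a simplified sketch. LMEA fits lines through several perturbed, normalized solutions; here, as a simplifying assumption, each variable is swept between two values only, and the angle between the resulting objective-space shift and the direction (1, ..., 1) is clustered with a 1-D 2-means.

```python
import math
from typing import Callable, List, Sequence, Tuple

ObjFn = Callable[[List[float]], Sequence[float]]


def perturbation_angle(f: ObjFn, x: Sequence[float], i: int,
                       lo: float = 0.0, hi: float = 1.0) -> float:
    """Angle (degrees) between the objective shift caused by moving x_i from lo to hi
    and the direction (1, ..., 1), the normal of the hyperplane sum(f) = const.
    Near 0: convergence-related. Near 90: diversity-related."""
    a, b = list(x), list(x)
    a[i], b[i] = lo, hi
    d = [q - p for p, q in zip(f(a), f(b))]
    norm_d = math.sqrt(sum(v * v for v in d))
    if norm_d == 0.0:
        return 90.0  # no visible effect; treat as non-convergence-related
    cos = abs(sum(d)) / (norm_d * math.sqrt(len(d)))
    return math.degrees(math.acos(min(1.0, cos)))


def split_by_angle(angles: Sequence[float], iters: int = 20) -> Tuple[List[int], List[int]]:
    """1-D 2-means on the angles; returns (convergence_vars, diversity_vars)."""
    c_lo, c_hi = min(angles), max(angles)
    conv: List[int] = list(range(len(angles)))
    div: List[int] = []
    for _ in range(iters):
        conv = [k for k, a in enumerate(angles) if abs(a - c_lo) <= abs(a - c_hi)]
        div = [k for k, a in enumerate(angles) if abs(a - c_lo) > abs(a - c_hi)]
        if not conv or not div:
            break
        c_lo = sum(angles[k] for k in conv) / len(conv)
        c_hi = sum(angles[k] for k in div) / len(div)
    return conv, div


if __name__ == "__main__":
    # Toy problem: x0 sets the position along the front, x1 and x2 the distance to it.
    def toy(v: List[float]) -> Sequence[float]:
        g = 1.0 + v[1] ** 2 + v[2] ** 2
        return (v[0] * g, (1.0 - v[0]) * g)

    x = [0.5, 0.5, 0.5]
    angles = [perturbation_angle(toy, x, i) for i in range(len(x))]
    print(split_by_angle(angles))  # ([1, 2], [0])
```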

Researchers have also used the divide-and-conquer mechanism to decompose the decision vector into small components and solve them in parallel [130]. Cao et al. [80] proposed a distributed parallel cooperative coevolutionary MOEA (DPCCMOEA) based on the message passing interface (MPI). In DPCCMOEA, the decision variables were split into multiple groups using an improved variable analysis method, and each group was optimized by a different subpopulation (species). Each species was further divided into multiple subspecies, whose number was determined by the number of available CPUs; this increased parallelism and allowed the algorithm to adapt to different numbers of CPUs. As a result, computation time could be considerably reduced and computational efficiency increased.
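The cooperative coevolution principle behind these methods can be sketched serially. This is a deliberately simplified, single-objective illustration, not DPCCMOEA itself. Each species mutates only its own group of variables, and a trial is completed with the shared context vector (current best values of all other variables). In a distributed version, the species loop would be spread over processes. The sphere function and all parameters are assumptions.

```python
import random
from typing import Callable, List, Sequence, Tuple


def cooperative_coevolution(f: Callable[[List[float]], float],
                            groups: Sequence[Sequence[int]], n_vars: int,
                            cycles: int = 50, trials: int = 8, sigma: float = 0.3,
                            seed: int = 0) -> Tuple[List[float], float]:
    """Serial, single-objective cooperative coevolution with a shared context vector."""
    rng = random.Random(seed)
    context = [rng.uniform(-1.0, 1.0) for _ in range(n_vars)]
    best = f(context)
    for _ in range(cycles):
        for group in groups:  # one species per variable group
            for _ in range(trials):
                trial = list(context)
                for i in group:  # the species only touches its own components
                    trial[i] += rng.gauss(0.0, sigma)
                value = f(trial)
                if value < best:  # accept improvements into the shared context
                    context, best = trial, value
    return context, best


if __name__ == "__main__":
    sphere = lambda v: sum(t * t for t in v)
    _, best = cooperative_coevolution(sphere, [[0, 1], [2, 3], [4, 5], [6, 7], [8, 9]], n_vars=10)
    print(round(best, 4))
```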

Garcia-Sanchez et al. [126] proposed a coevolutionary algorithm based on the island model, in which multiple subpopulations (islands) run in parallel and explore different regions of the decision space. Specifically, it divided the decision vector into multiple parts and used one subpopulation to optimize each part. Their results showed that overlapping schemes performed better than disjoint schemes as the number of islands increased. Using a parallel framework, Chen et al. [127] separated the environmental selection operator from the rest of the evolutionary process, thereby reducing data transmission and dependency between subprocesses. Based on this framework, a novel parallel EA (PEA) was designed, in which convergence was achieved through independently evolving subpopulations. Moreover, an environmental selection strategy that does not consider convergence was proposed to improve diversity.
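A generic island model with ring migration is sketched below to show the mechanics of isolated subpopulations that periodically exchange their best individuals. Unlike [126], every island here searches the full decision vector rather than one part of it. The objective, migration interval, and mutation strength are assumptions.

```python
import random
from typing import Callable, List

Individual = List[float]


def island_model(f: Callable[[Individual], float], n_vars: int, n_islands: int = 4,
                 island_size: int = 10, generations: int = 60,
                 migration_interval: int = 10, sigma: float = 0.2,
                 seed: int = 1) -> Individual:
    """Island-model EA with ring migration (single objective, minimization)."""
    rng = random.Random(seed)
    islands = [[[rng.uniform(-2.0, 2.0) for _ in range(n_vars)] for _ in range(island_size)]
               for _ in range(n_islands)]
    for gen in range(1, generations + 1):
        for isl in islands:
            # (mu + mu) step: Gaussian mutation, keep the best island_size individuals
            offspring = [[v + rng.gauss(0.0, sigma) for v in ind] for ind in isl]
            isl[:] = sorted(isl + offspring, key=f)[:island_size]
        if gen % migration_interval == 0:
            # Ring topology: the best of island k-1 replaces the worst of island k.
            bests = [list(min(isl, key=f)) for isl in islands]
            for k, isl in enumerate(islands):
                isl[-1] = bests[k - 1]  # isl was just sorted, so index -1 is the worst
    return min((ind for isl in islands for ind in isl), key=f)


if __name__ == "__main__":
    sphere = lambda v: sum(t * t for t in v)
    best = island_model(sphere, n_vars=5)
    print(round(sphere(best), 4))
```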

3.2.3 Algorithm Based on Reference Vectors

Yang et al. [131] proposed a high-dimensional optimization framework called differential evolution with cooperative coevolution and grouping (DECC-G) for large-scale optimization problems. The key ideas behind DECC-G were grouping and adaptive weighting strategies, and it could effectively solve large-scale single-objective optimization problems with up to 1000 dimensions. Inspired by this work [131], Zille et al. introduced the large-scale single-objective grouping and adaptive weighting strategies into large-scale MOEAs and proposed the weighted optimization framework (WOF) [125]. Some researchers grouped variables together and applied a weight to each group [131,132]. The framework for accelerating large-scale multiobjective optimization by problem reformulation (LSMOF) [117] also employed an adaptive weighting strategy, which transformed the optimization of decision variables into the optimization of weight variables. Whereas the weight variable search in WOF was unidirectional, the weight variables in LSMOF were bidirectional, giving a larger search coverage. Although the transformation strategy could somewhat reduce the dimensionality of the decision space, the transformed subproblems were optimized separately, so the relationships between different weight variables were not taken into account. The result of the transformation depended significantly on the grouping technique used, whose stability and computational efficiency still need to be improved [133,134].
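The weighting transformation can be sketched as follows. WOF defines several transformation functions; the multiplicative, per-group scaling shown here is one simple choice assumed for illustration, and LSMOF's bidirectional variant is not shown. The reference solution, grouping, and bounds are hypothetical.

```python
from typing import List, Sequence


def wof_transform(x_ref: Sequence[float], weights: Sequence[float],
                  groups: Sequence[Sequence[int]], lower: Sequence[float],
                  upper: Sequence[float]) -> List[float]:
    """Multiplicative weighting transformation: every variable in group g is scaled
    by the shared weight w_g and clipped back into its bounds. The optimizer then
    searches len(groups) weights instead of len(x_ref) decision variables."""
    x = list(x_ref)
    for w, group in zip(weights, groups):
        for i in group:
            x[i] = min(upper[i], max(lower[i], w * x_ref[i]))
    return x


if __name__ == "__main__":
    x_ref = [0.5, 1.0, 0.2, 0.4]  # a reference solution from the population
    groups = [[0, 1], [2, 3]]     # e.g. produced by ordered or random grouping
    print(wof_transform(x_ref, [1.5, 0.5], groups, [0.0] * 4, [1.0] * 4))
    # [0.75, 1.0, 0.1, 0.2]
```

Because all variables in a group move together, the reduced problem cannot express independent changes inside a group. This is why the quality of the grouping matters so much.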

Beume et al. [135] proposed a multiobjective selection algorithm based on dominated hypervolume, referred to as

3.3 Hardware-Accelerated NAS Method

NAS for DNNs on large-scale data can be treated as a large-scale optimization problem, which is highly complex and time-consuming. Meanwhile, EAs are population-based and therefore inherently parallel. Accordingly, distributed parallel EAs can be designed for hardware platforms with abundant CPU, GPU, and FPGA resources. Compared with traditional EA-based NAS, distributed NAS can significantly improve efficiency [136].

In distributed parallel EAs (DPEAs) [91], several populations can be formed to explore the solution space, which also helps make full use of computational resources. A distributed parallel MOEA, DPCCMOEA, was proposed in [80] to tackle large-scale multiobjective problems. DPCCMOEA used an improved variable analysis method [121,137] to split the decision variables into groups, each optimized by a subpopulation. To increase parallelism and adapt to different numbers of CPUs, each population was further divided into multiple subpopulations (groups), whose number was determined by the number of available CPUs. Therefore, computation time can be greatly reduced and computational efficiency improved. Building on DPCCMOEA, a distributed parallel cooperative coevolutionary multiobjective large-scale evolutionary algorithm (DPCCMOLSEA) was proposed [138], which modified the parallel structure so that each subpopulation evolves serially on its main CPU core. Optimization performance improved because the overall information of subpopulations could be referenced without communication between CPU cores, and several adaptive strategies were also tried. The work of [81] proposed the distributed parallel cooperative coevolutionary multiobjective large-scale immune algorithm (DPCCMOLSIA), which solves multiobjective large-scale problems with a three-layer distributed parallel framework in which each CPU core belongs to a subpopulation that targets one objective (or all objectives) and a group of variables. As in DPCCMOLSEA, each subpopulation evolves serially on a main CPU. Using the same parallel strategy, the multi-population distributed parallel cooperative coevolutionary multiobjective large-scale EA (DPCCMOLSEA-MP) [82] also constructs a three-layer distributed parallel framework. 
The difference is that DPCCMOLSIA is based on non-dominated sorting, as in NSGA-II, whereas DPCCMOLSEA-MP is based on problem decomposition, as in MOEA/D. Further improvements were made to DPCCMOLSEA and DPCCMOLSIA in [19]: one population was constructed to optimize each variable group, and all populations cooperated to improve performance [139]; the individuals in each population were then further decomposed to achieve larger-scale parallelism. For large-scale search, Li et al. [140] analyzed the shortcomings of previous NAS methods and pointed out that random search could perform better than ENAS [6] and DARTS [15]. Greff et al. [141] presented a large-scale analysis of LSTM variants, used random search to optimize the hyperparameters [142], and derived effective and accurate tuning guidelines. Moreover, more effective parallel structures could be devised by modifying the structure [138] or by adding parallel layers [81,82].
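The idea of sizing subspecies by the number of available CPUs can be sketched as a rank-to-work mapping. The allocation rule below (cores spread evenly over species, then a strided split of each species' population) is a hypothetical illustration, not the exact scheme of DPCCMOEA. In an MPI program, the rank would typically come from a call such as mpi4py's `MPI.COMM_WORLD.Get_rank()`; here the whole plan is computed in plain Python.

```python
from typing import Dict, List, Tuple


def allocate_cores(n_species: int, pop_size: int,
                   n_cpus: int) -> Dict[int, Tuple[int, List[int]]]:
    """Map each CPU rank to (species id, indices of the individuals it evolves).

    Cores are spread as evenly as possible over the species (one species per
    variable group); each species' population is split into one subspecies per
    assigned core, so adding CPUs increases parallelism automatically."""
    if n_cpus < n_species:
        raise ValueError("this sketch assumes at least one CPU core per species")
    plan: Dict[int, Tuple[int, List[int]]] = {}
    rank = 0
    for species in range(n_species):
        n_cores = n_cpus // n_species + (1 if species < n_cpus % n_species else 0)
        for core in range(n_cores):
            # Strided split: subspecies `core` takes individuals core, core + n_cores, ...
            plan[rank] = (species, list(range(core, pop_size, n_cores)))
            rank += 1
    return plan


if __name__ == "__main__":
    for rank, (species, members) in allocate_cores(3, 6, 8).items():
        print(rank, species, members)
```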

By combining distributed MOEAs (DMOEAs) with a fuzzy rough NN, the work of [19] proposed a multiobjective evolutionary interval type-2 fuzzy rough NN framework for time series prediction [143], in which the connections and weights are optimized while simultaneously considering network simplicity and precision. In future work, DMOEAs can be applied to NAS by encoding the network architecture and weights simultaneously to generate lightweight NNs, and back-propagation [144] can also be employed.
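Encoding structure and weights in one genome, and scoring it on precision and simplicity, can be sketched with a deliberately tiny model. The genome layout (a connection mask plus one weight per connection of a single linear neuron) is a hypothetical stand-in for the fuzzy rough NN of [19]. It only shows how both objectives come out of the same individual.

```python
import random
from typing import List, Sequence, Tuple

Genome = Tuple[List[int], List[float]]  # (connection mask, connection weights)
Sample = Tuple[Sequence[float], float]  # (inputs, target)


def objectives(genome: Genome, data: Sequence[Sample]) -> Tuple[float, int]:
    """Return (mean squared error, number of active connections), both minimized."""
    mask, weights = genome
    mse = sum((sum(m * w * x for m, w, x in zip(mask, weights, xs)) - y) ** 2
              for xs, y in data) / len(data)
    return mse, sum(mask)


def mutate(genome: Genome, rng: random.Random, p_flip: float = 0.1,
           sigma: float = 0.1) -> Genome:
    """Structure and weights evolve together: flip connection bits, jitter weights."""
    mask, weights = genome
    new_mask = [1 - m if rng.random() < p_flip else m for m in mask]
    new_weights = [w + rng.gauss(0.0, sigma) for w in weights]
    return new_mask, new_weights


if __name__ == "__main__":
    data = [([1.0, 0.5, -1.0], 2.0), ([2.0, -1.0, 0.0], 4.0)]  # target y = 2 * x0
    sparse = ([1, 0, 0], [2.0, 5.0, -3.0])
    dense = ([1, 1, 1], [2.0, 5.0, -3.0])
    print(objectives(sparse, data), objectives(dense, data))  # (0.0, 1) (27.625, 3)
```

A gradient step on the active weights, as suggested by the mention of back-propagation, could be applied after mutation in a memetic variant.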

4 NAS for Healthcare Application

Image classification [2,10,47,48,55,145–147] and image segmentation [8,24,25,70,71,148–150] are the most widely used fields of NAS in healthcare. In the traditional workflow, doctors scan slides under the microscope with the naked eye, locate lesions based on experience, and then review them under a high-power lens for diagnosis, classification, and decision-making. This is a complicated, labor-intensive, and time-consuming process. Moreover, lesion regions are very sparse in these images, so a DL model must sample local regions of medical images at high magnification to make accurate diagnoses and classifications. As a result, DL methods incur high computational cost, use medical images inefficiently, and capture only weak correlations relevant to diagnosis. Researchers have used EAs to automatically design efficient NNs for disease diagnosis, helping doctors make efficient and intelligent diagnostic decisions [2].

NAS is generally formulated as a single-objective optimization problem aimed at finding a high-accuracy network architecture. However, most practical problems require not only high accuracy but also efficient, low-complexity architectures that reduce computation and memory. Moreover, DNNs are overparameterized and inefficient throughout the learning process. Multiobjective optimization algorithms provide an effective strategy for designing network architectures automatically and efficiently. In healthcare, MOEAs that simultaneously optimize classification accuracy and network efficiency have been applied to image classification. For image segmentation, MOEAs that minimize both the segmentation error rate and model size can automatically design efficient networks for specific segmentation tasks. Multiobjective optimization thus helps accomplish architecture search for difficult medical tasks.

4.1 NAS for Classification of Disease Diagnosis

Traditional DL methods aim to implement automatic disease diagnosis systems. In such pipelines, cancer cells are classified by training a classifier on a preset collection of handcrafted features extracted from histological images. Integrating feature extraction and classification within a unified learning structure has become popular in medical image processing in recent years [151]. CNNs are widely used for image classification tasks [100]. However, CNNs may suffer from vanishing gradients and accuracy saturation as their depth increases substantially. Combining NAS with CNNs can help medical practitioners make more accurate and efficient diagnostic classification decisions while minimizing human intervention.

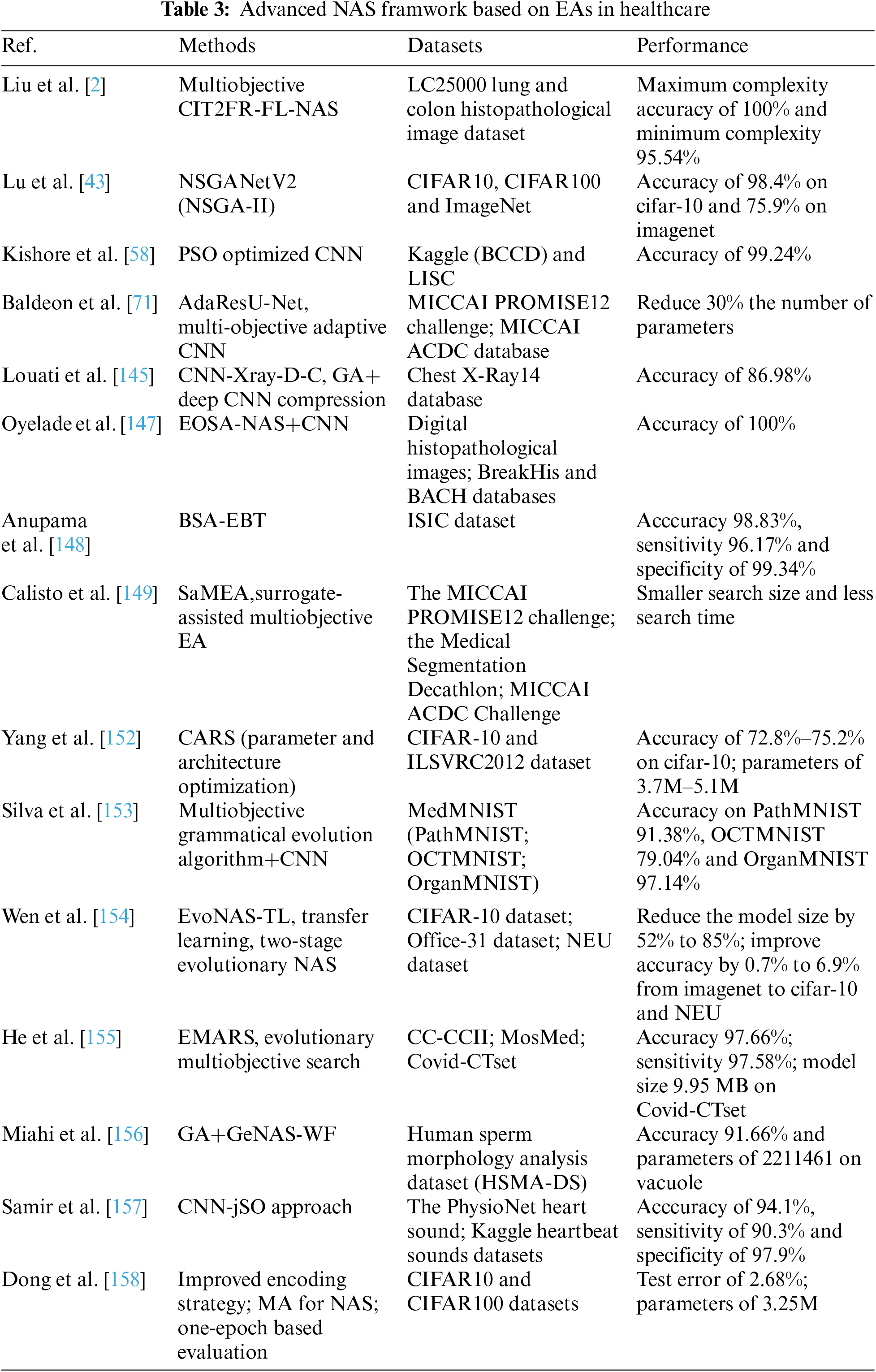

As shown in Table 3, MOEAs can be combined with deep CNNs for NAS to efficiently perform image segmentation and image classification in healthcare. For skin lesions, Anupama et al. [148] proposed a backtracking search optimization algorithm with entropy-based thresholding (BSA-EBT) to improve image segmentation accuracy. For prostate cancer and cardiology, Calisto et al. [149] proposed a surrogate-assisted multiobjective evolutionary algorithm (SaMEA) that minimizes architecture size and search time for 3D image segmentation. Baldeon et al. [71] used a multiobjective adaptive CNN (AdaResU-Net) to optimize the performance and size of the model. These two models were evaluated on two publicly available datasets: the MICCAI PROMISE12 challenge dataset and the MICCAI ACDC challenge dataset. Yang et al. [152] proposed continuous evolution for efficient NAS (CARS), which can deliver models on the Pareto front with very high efficiency for CIFAR-10 and ILSVRC2012. Its contribution was a highly efficient multiobjective NAS framework with a continuous evolution strategy that exploits a protection mechanism (pNSGA-III) [159]. For lung and colon cancer image classification, Liu et al. [2] proposed the CIT2FR-FL-NAS model, which reduces network complexity and adds interpretability. For colorectal cancer, retinal diseases, and liver tumors, Silva et al. [153] proposed a multiobjective grammatical evolution algorithm that reduces model complexity and improves model accuracy. For thorax diseases and COVID-19, Louati et al. [145,160] used a GA to compress deep CNNs. He et al. [155] proposed efficient evolutionary multiobjective architecture search (EMARS) for COVID-19 computed tomography (CT) classification. Advanced multiobjective evolutionary NAS can thus not only improve model performance but also reduce network complexity in healthcare image classification and segmentation. 
NAS can also efficiently process electronic health record (EHR) datasets. The work of [161] proposed the MUltimodal Fusion Architecture SeArch (MUFASA) model, which processes structured and unstructured EHR data and automatically learns a multimodal fusion architecture for EHR. This study allowed non-experts to develop differential privacy (DP) models customized for each EHR data profile and to effectively integrate clinical records, continuous data, and discrete data. This method can help doctors make correct diagnostic decisions, develop personalized treatment plans, and improve patient care.

Therefore, the use of evolutionary NAS in healthcare applications is necessary. First, healthcare datasets are often massive and complex, which makes it challenging to extract relevant information and insights with traditional machine learning approaches. Traditional RL-based NAS cannot flexibly optimize multiple objectives and can incur heavy computation [14]. Gradient-based search [15] requires relaxing the discrete architecture space into a continuous one, which adds training time. Neuroevolution strategies can improve search efficiency and process these datasets in real time, thereby enhancing accuracy, reducing model size, and minimizing errors in healthcare [154]. Second, evolutionary NAS can adapt to the multiple requirements of a given task. Multiobjective evolutionary NAS applications in healthcare can attain high accuracy, improve model performance, and reduce model size [2,71,149,153–155]. Large-scale multiobjective neuroevolution strategies [73,110–113] and distributed parallel EA strategies [80,91,121,137,138] can accelerate the evolution and search processes of NAS, and training can also be accelerated with multiple GPUs. In FL, multiple federated clients search simultaneously, which can greatly improve efficiency. NAS can process medical data and help doctors make accurate and rapid diagnostic decisions [162]. By automating the design process, NAS can reduce the time and cost required to develop new AI solutions in healthcare while improving their performance and reducing the risk of human error. Moreover, NAS-designed models can automatically predict and classify diseases, assisting doctors with personalized diagnosis and treatment. Leakage of medical data has serious adverse consequences, and legal and ethical requirements make privacy protection of medical data particularly important. 
In Section 5, we develop an interpretable evolutionary neural architecture search framework based on federated learning (FL-ENAS) for healthcare to address search efficiency and privacy concerns.
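The continuous relaxation that gradient-based search [15] relies on, and the reason it adds training cost, can be seen in a small sketch. In DARTS, each edge outputs a softmax-weighted mixture of all candidate operations. In the real method these operations are convolutions and poolings on feature maps. Here they are scalar functions purely for illustration, and the names are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_op(x: float, ops: Sequence[Callable[[float], float]],
             alpha: Sequence[float]) -> float:
    """Continuous relaxation of a discrete choice: the edge outputs the
    softmax(alpha)-weighted sum of ALL candidate operations, so alpha can be
    learned by gradient descent, but every candidate must be computed and trained."""
    return sum(w * op(x) for w, op in zip(softmax(alpha), ops))


def discretize(alpha: Sequence[float], names: Sequence[str]) -> str:
    """After the search, keep only the operation with the largest alpha."""
    return names[max(range(len(alpha)), key=lambda k: alpha[k])]


if __name__ == "__main__":
    ops = [lambda t: t, lambda t: 0.0, lambda t: 2.0 * t]  # identity, zero, "double"
    print(mixed_op(3.0, ops, [0.0, 0.0, 0.0]))  # equal alphas average the three outputs
    print(discretize([0.1, -1.0, 2.0], ["identity", "zero", "double"]))  # double
```

An evolutionary search, by contrast, samples and trains one discrete architecture at a time, so it needs no relaxation.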

Population aging, the imbalance between medical supply and demand, and the medical burden are major economic and social problems facing most countries in the world. Addressing them requires enormous healthcare costs and labor resources. The main problem with traditional hospital-centered healthcare is long waiting times, which, if not addressed effectively, can delay treatment and even lead to life-threatening conditions. The application and innovation of mobile healthcare technology can reduce the medical burden, shorten waiting times, facilitate patients' access to medical care, and help prevent diseases before their onset [163]. Mobile healthcare uses sensors and portable devices to collect clinical data, deliver healthcare information to both hospital researchers and patients, monitor vital signs, and provide personalized diagnoses and services through telemedicine.

In mobile healthcare, activity recognition on smartphones and smartwatches has various applications in personal health management. By sensing human activity, these devices can significantly enhance health and well-being [164]. An unhealthy diet and lack of exercise are major causes of chronic diseases, while regular physical exercise protects health and reduces the risk of mortality. Overconsumption of junk food can also lead to sub-optimal health and pose significant health risks. Activity recognition via smartphones and smartwatches can provide precise, real-time insights into sedentary behavior, exercise, and eating habits to help counteract inactivity and overeating. Moreover, with Medical 4.0 technology, a patient-centric paradigm has replaced doctor-centric treatment methods [165]. Wearable devices can be connected to edge computing to process large amounts of data, reducing the privacy risks and low computing efficiency associated with data transmission in mobile healthcare. To save limited resources, an early-exit (EEx) option was applied in multiobjective NAS to process time series data that require real-time monitoring on wearable devices [166]. This model achieves high classification accuracy while also saving energy and improving efficiency. It has been applied to mobile datasets, myocardial infarction diagnosis, human activity recognition, and real-time monitoring of health status. Human activity recognition based on wearable sensors is beneficial for health supervision, and DNN models on mobile phones and other small sensor devices can accurately identify activities such as going up and down stairs [167].
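The early-exit idea can be sketched as confidence-based inference. The model stops at the first intermediate classifier that is confident enough, saving the computation of the remaining layers on a wearable device. The fixed confidence threshold and the toy stages and heads below are assumptions. The cited work [166] searches for the exit configuration with multiobjective NAS, which is not shown here.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Stage = Callable[[float], float]       # hypothetical backbone stage
Head = Callable[[float], List[float]]  # hypothetical per-stage classifier -> class probabilities


def early_exit_predict(x: float, stages: Sequence[Stage], heads: Sequence[Head],
                       threshold: float = 0.9) -> Optional[Tuple[int, int]]:
    """Run stages in order and stop at the first exit whose top class probability
    reaches `threshold`; the last exit always answers. Returns (class, exits used)."""
    h = x
    for depth, (stage, head) in enumerate(zip(stages, heads), start=1):
        h = stage(h)
        probs = head(h)
        conf = max(probs)
        if conf >= threshold or depth == len(stages):
            return probs.index(conf), depth
    return None  # no stages given


if __name__ == "__main__":
    # Toy model whose confidence in class 1 grows with depth.
    stages = [lambda h: 2.0 * h] * 3
    heads = [lambda h: [1.0 / (h + 1.0), h / (h + 1.0)]] * 3
    print(early_exit_predict(1.0, stages, heads, threshold=0.75))  # (1, 2)
```

Lowering the threshold saves more energy at the cost of accuracy; this is exactly the kind of trade-off a multiobjective search can tune.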

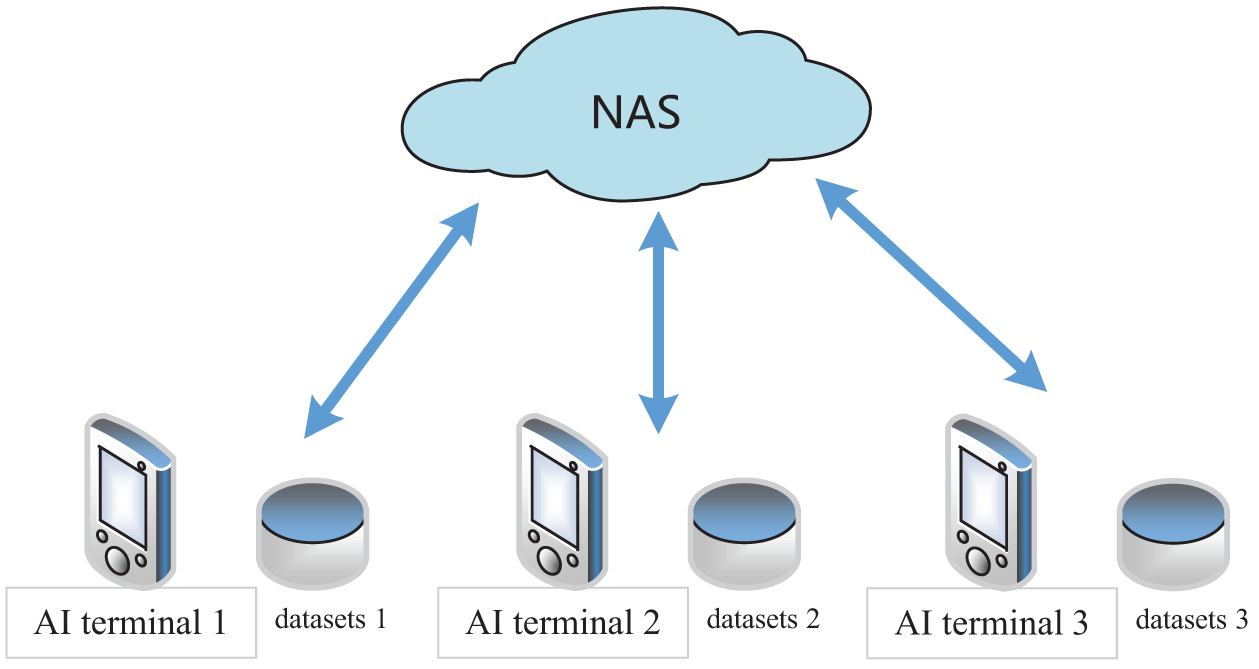

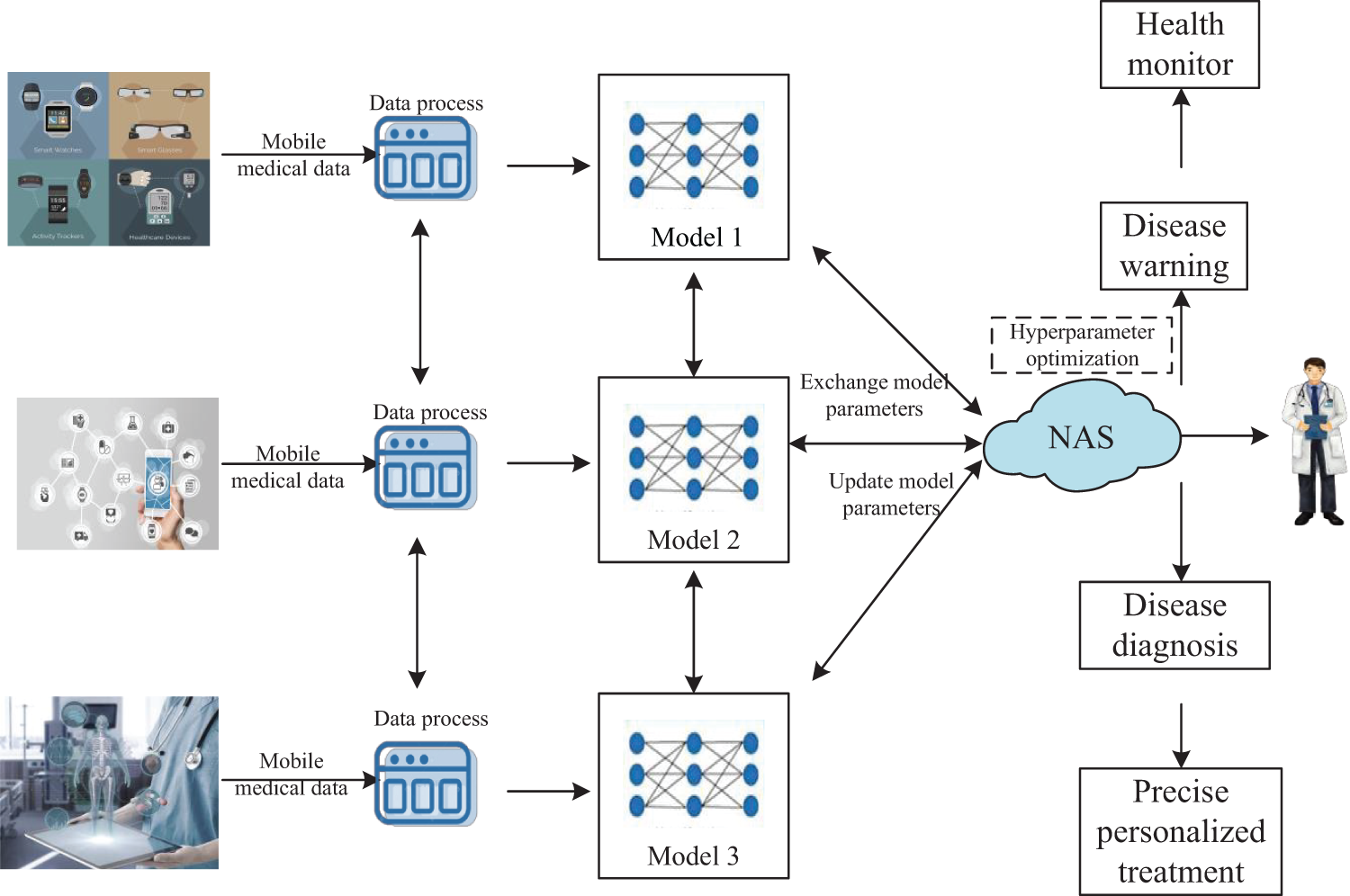

Multiobjective NAS strategies have been effectively used in mobile healthcare. NAS algorithms can achieve high accuracy while meeting other optimization goals, such as reducing latency, the number of parameters, and model size, so that models can be deployed effectively on devices with limited resources. To improve model performance on wearable devices while reducing model size, the authors of [168] proposed NAS for photoplethysmography (NAS-PPG), a lightweight NN model for heart rate monitoring. In [39], the authors presented the BioNetExplorer framework, which enables hardware-aware DNN design for biosignal processing and uses GAs to reduce search time. To balance model performance and computing speed, the multiobjective search algorithm NSGA-II [42] was used to design HARNAS (human activity recognition using neural architecture search), a lightweight architecture for human activity recognition [97]. Neuroevolution-based NAS was used to search for the best architectures not only on an image recognition dataset (CIFAR-10) but also on the PAMAP2 dataset for human activity recognition (HAR) [97]. MOEA-based search can also be constrained, that is, the search space parameters are restricted to given ranges, so that the available resources are fully used for deployment. Moreover, combining FL with multiobjective NAS can protect medical data security and reduce model complexity. Fig. 4 illustrates the use of FL for AI terminals.
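NSGA-II, mentioned above for HARNAS, keeps solutions spread along the accuracy-speed trade-off using the crowding distance. The sketch below computes it for one non-dominated front. The objective values (error, latency in milliseconds) are hypothetical.

```python
from typing import List, Sequence


def crowding_distance(front: Sequence[Sequence[float]]) -> List[float]:
    """NSGA-II crowding distance for the members of one non-dominated front.

    Boundary solutions get infinity; interior solutions accumulate, per
    objective, the normalized gap between their two neighbours."""
    n = len(front)
    if n == 0:
        return []
    dist = [0.0] * n
    for k in range(len(front[0])):
        order = sorted(range(n), key=lambda i: front[i][k])
        lo, hi = front[order[0]][k], front[order[-1]][k]
        dist[order[0]] = dist[order[-1]] = float("inf")
        if hi == lo:
            continue  # objective is constant on this front
        for r in range(1, n - 1):
            dist[order[r]] += (front[order[r + 1]][k] - front[order[r - 1]][k]) / (hi - lo)
    return dist


if __name__ == "__main__":
    # Hypothetical (error, latency in ms) of three HAR models on one front.
    front = [(0.08, 50.0), (0.10, 20.0), (0.12, 10.0)]
    print(crowding_distance(front))  # [inf, 2.0, inf]
```

When the last admitted front does not fit into the next population, NSGA-II keeps the members with the largest crowding distance.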

Figure 4: Federated neural architecture search for AI terminals

4.3 Interpretable Network Architecture for Healthcare

Owing to their excellent performance (as shown in Table 3), CNNs have been successfully applied to medical image classification [169,170]. Nonetheless, one of the most significant drawbacks of deep CNNs is their lack of interpretability [171]. Zhang et al. [172] designed a loss on the feature maps that makes the knowledge representations in high convolutional layers clearer, so that the logic of CNNs is easier to understand. High interpretability can break through the black-box bottleneck of DL and make the reasoning process of CNNs visible [173]. In medical diagnosis, CNN models should be both accurate and interpretable [174]. For example, in the diagnosis and treatment management of breast cancer, CNNs can segment suspicious areas in images and provide heat maps to help pathologists make decisions [175]. Few previous studies have combined interpretability with NAS for healthcare data analysis.

A fuzzy rough neural network (FRNN) combines fuzzy set theory, rough set theory, and NNs, giving it strong learning and interpretability abilities. Instead of a crisp value of 0 or 1, fuzzy set theory [176] represents each input feature by a membership degree in the range [0,1] [84]. By transforming input feature values into the fuzzy domain, fuzzy set theory serves as an important bridge between the precise computational world and the fuzzy human world. It can mimic human decision-making based on “if-then” rules and thus better solve real-world problems. Rough set theory emphasizes the indiscernibility of knowledge in information systems; it handles various kinds of imprecise and incomplete information and discovers hidden patterns. Fuzzy rough set theory can process complex data and generate interpretable knowledge [177]. Deep FRNNs (DFRNNs) can be built and optimized with EAs [178,179] to solve complex real-world problems, especially medical image classification [180].
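Fuzzification and “if-then” rule firing, the first two ideas above, can be sketched as follows. The Gaussian membership shape, the product t-norm, and the heart-rate fuzzy sets are common choices assumed for illustration; they are not taken from the cited FRNN works.

```python
import math
from typing import Dict, Sequence, Tuple

# Hypothetical fuzzy sets (center, width) for a heart-rate feature normalized to [0, 1].
HEART_RATE_SETS: Dict[str, Tuple[float, float]] = {
    "low": (0.2, 0.1), "normal": (0.5, 0.1), "high": (0.8, 0.1)}


def gaussian_mf(x: float, center: float, width: float) -> float:
    """Membership degree in [0, 1] of x in a fuzzy set with a Gaussian shape."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


def fuzzify(x: float, sets: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Fuzzification: one crisp value becomes a degree for every linguistic label."""
    return {label: gaussian_mf(x, c, w) for label, (c, w) in sets.items()}


def rule_strength(memberships: Sequence[float]) -> float:
    """Firing strength of 'IF x1 is A1 AND x2 is A2 ...' with the product t-norm."""
    strength = 1.0
    for mu in memberships:
        strength *= mu
    return strength


if __name__ == "__main__":
    hr = fuzzify(0.65, HEART_RATE_SETS)  # partly "normal", partly "high"
    print({k: round(v, 3) for k, v in hr.items()})
    # IF heart rate is high AND activity is low THEN ... (activity degree assumed 0.9)
    print(round(rule_strength([hr["high"], 0.9]), 3))
```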

Integrating gene expression programming into FRNNs can improve the effectiveness and interpretability of NNs [19]. Yeganejou et al. [181] combined a CNN with explainable fuzzy logic to propose an explainable model in which the CNN extracts features and fuzzy clustering further processes them. Evolution-based NAS can automatically search for the optimal network architecture and hyperparameters [182], and it can be used in FL environments [101] to achieve multiobjective optimization. CNNs are efficient DL models for medical image processing [183], and evolution-based NAS has been applied to medical image recognition. For example, neuroevolutionary algorithms have been used to automatically design DL architectures for COVID-19 diagnosis from X-ray images [184]. For CT and MRI image datasets, an evolutionary CNN framework with an attention mechanism has been applied to 2D and 3D medical image segmentation [185]. Attention mechanisms are an effective AI technique. For example, a multiple-instance learning CNN incorporates an attention mechanism to identify sub-slice regions in medical images, addressing explainable multiple-abnormality classification [186].
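How attention makes a multiple-instance model inspectable can be sketched with the widely used attention-based MIL pooling formulation. The exact architecture of [186] is not reproduced here. In practice `V` and `w` are learned; below they are fixed by hand, and the instance vectors are hypothetical patch or slice features.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def attention_mil_pool(instances: Sequence[Vector], V: Sequence[Vector],
                       w: Vector) -> Tuple[List[float], List[float]]:
    """Attention-based multiple-instance pooling:
    a_k = softmax_k(w^T tanh(V h_k)),  z = sum_k a_k h_k.
    Returns the bag embedding z and the attention weights a, which show which
    instances (e.g. image patches or sub-slices) drive the bag-level prediction."""
    scores = []
    for h in instances:
        hidden = [math.tanh(sum(v * hi for v, hi in zip(row, h))) for row in V]
        scores.append(sum(wi * t for wi, t in zip(w, hidden)))
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    attn = [e / total for e in exps]
    pooled = [sum(a * h[d] for a, h in zip(attn, instances)) for d in range(len(instances[0]))]
    return pooled, attn


if __name__ == "__main__":
    patches = [[2.0, 0.0], [0.0, 0.0], [0.1, 0.1]]  # the first patch is the "suspicious" one
    _, attn = attention_mil_pool(patches, [[1.0, 0.0], [0.0, 1.0]], [1.0, 1.0])
    print([round(a, 3) for a in attn])
```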

Evolution-based NAS can automatically search NN architectures and construct NN models, avoiding manual design and greatly reducing the workload. In addition, MOEAs can optimize multiple objectives simultaneously and thus account for multiple properties of multiobjective optimization problems (MOPs), such as network error and interpretability. For FRNNs, this facilitates comprehensive optimization and clear analysis of such MOPs. Combining fuzzy rules with neuroevolution based on fuzzy sets can be regarded as “the combination of rules and learning”, and multiobjective FRNN evolution is a useful exploration of “high accuracy and interpretability” in healthcare.

5 NAS for Privacy and Security of Healthcare

Although big data in medicine can help with diagnosis and therapy, the release of private information can cause social panic. Thus, medical data security and privacy have become increasingly important [187,188]. Biomedical data are highly sensitive and subject to strict regulations on data protection and privacy. How to make efficient AI-based classification and diagnostic decisions while protecting the privacy of histopathological data is a problem worthy of further exploration. Federated NAS [101] can effectively balance privacy against model architecture choices. The traditional centralized learning process can lead to the disclosure of private information. FL transmits only models while training locally on the data, which effectively protects data privacy. However, hundreds of millions of devices may be involved in interactions between user clients and the cloud server, which significantly increases communication burden and cost [189]. A communication bottleneck exists between local clients and the server, partly because many parameters are exchanged and many clients participate, and partly because of limited network bandwidth; this bottleneck has hindered the development of FL [190]. Deploying NAS algorithms in the FL framework makes it possible to consider privacy protection and model architecture selection simultaneously in cancer diagnosis [2].
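A back-of-the-envelope calculation shows why the number of exchanged parameters and the number of clients dominate FL communication. The model size, client count, and float32 encoding below are hypothetical assumptions, and compression is ignored.

```python
def fl_round_traffic_gb(n_params: int, n_clients: int, bytes_per_param: int = 4) -> float:
    """Approximate traffic (GB) of one synchronous FL round in which every client
    downloads the full global model and uploads a full, uncompressed update."""
    per_client_bytes = 2 * n_params * bytes_per_param  # one download + one upload
    return per_client_bytes * n_clients / 1e9


if __name__ == "__main__":
    # Hypothetical: a 25-million-parameter CNN in float32 shared with 1,000 clients.
    print(fl_round_traffic_gb(25_000_000, 1_000))  # 200.0
    # A NAS-found model with 10x fewer parameters cuts the traffic proportionally.
    print(fl_round_traffic_gb(2_500_000, 1_000))   # 20.0
```

Because traffic scales linearly with model size, an architecture search that also minimizes the parameter count directly eases the communication bottleneck.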

A deep fuzzy rough NN model has been used for image classification in the FL environment, which improves the interpretability of NAS. The deep fuzzy rough NN in [2] uses NAS to solve tuning and optimization problems in the FL environment. The NAS process is divided into two parts. The first is the search part: after the local model parameters are uploaded to the cloud server, the server aggregates the models and returns the parameters or models. The second is the training and inheritance part, which obtains the optimal neural architecture. Federated NAS is divided into online and offline federated NAS; the difference is whether the two parts are performed simultaneously or separately [101]. Most researchers regard NAS as a bilevel optimization problem with inner and outer levels. NSGANetV2 [43] is a typical NAS approach that uses a multiobjective optimization algorithm. It uses the initial weights as the starting point for the lower-level optimization. The upper-level surrogate model adopts an online learning algorithm and focuses on architectures in the search space that lie close to the optimal trade-off, which significantly improves search efficiency.
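The server-side step in which uploaded client parameters are integrated can be illustrated with FedAvg-style weighted averaging. The source does not state which aggregation rule [2] or [101] uses, so FedAvg is an assumption here. The two "hospitals" and their data sizes are hypothetical.

```python
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]],
           client_sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: average the clients' parameter vectors,
    weighting each client by its number of local training samples."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [sum(n * p[d] for p, n in zip(client_params, client_sizes)) / total
            for d in range(dim)]


if __name__ == "__main__":
    # Two hypothetical hospitals; the second holds three times as much data.
    print(fedavg([[1.0, 0.0], [3.0, 2.0]], [100, 300]))  # [2.5, 1.5]
```

Only these parameter vectors leave the clients; the raw medical data stay local.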

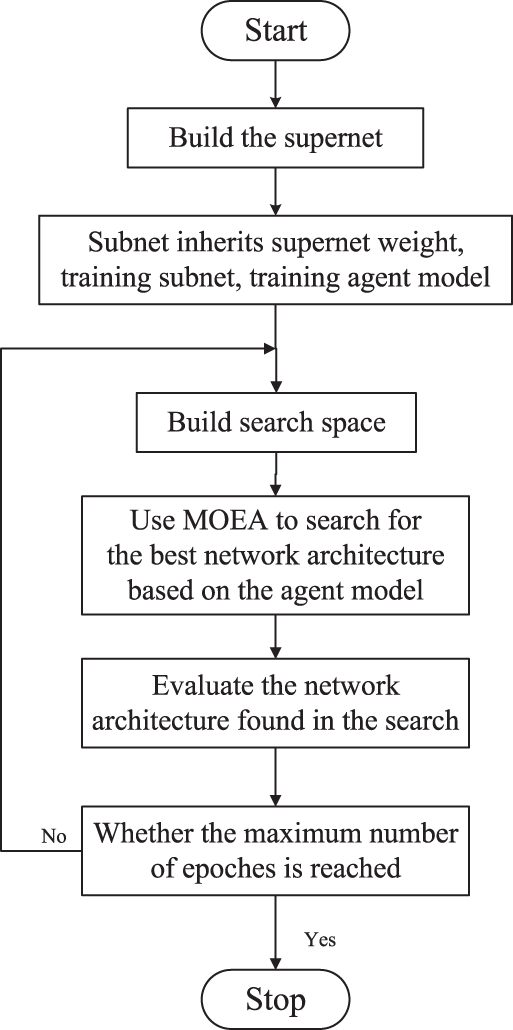

In this paper, we propose an interpretable FL-ENAS framework that incorporates neuroevolution to address search efficiency and privacy concerns in healthcare data analysis. The algorithm flow of evolutionary NAS is shown in Fig. 5. First, the search space, supernet, and optimization objectives are defined, the initial number of samples and the maximum number of training epochs are set, and the surrogate model is constructed. Second, starting from a model, the MOEA optimizes accuracy, complexity, and other objectives, and the selected architectures are trained. Candidate architectures are evaluated while the surrogate prediction model is refined, and this process continues until the maximum number of iterations is reached. Finally, the non-dominated solution set is derived from the evaluated architectures.
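The surrogate-assisted loop described above can be sketched in a single-objective form. Many mutated candidates are screened cheaply by a surrogate, and only the most promising few receive the expensive true evaluation; each true result also refines the surrogate. The k-nearest-neighbor surrogate, the integer encoding, and the toy error function are assumptions. A multiobjective version would rank candidates by non-dominated sorting of predicted error and exactly computed complexity.

```python
import random
from typing import Callable, Dict, Tuple

Arch = Tuple[int, ...]  # one integer choice per gene (hypothetical encoding)


def knn_surrogate(archive: Dict[Arch, float], arch: Arch, k: int = 3) -> float:
    """Predicted error = mean true error of the k archived architectures that are
    closest to `arch` in Hamming distance."""
    nearest = sorted(archive.items(),
                     key=lambda item: sum(a != b for a, b in zip(item[0], arch)))[:k]
    return sum(err for _, err in nearest) / len(nearest)


def surrogate_assisted_search(true_error: Callable[[Arch], float], n_genes: int,
                              n_choices: int, n_init: int = 8, iterations: int = 10,
                              n_candidates: int = 50, n_eval: int = 2,
                              seed: int = 0) -> Tuple[Arch, Dict[Arch, float]]:
    if n_init > n_choices ** n_genes:
        raise ValueError("search space is smaller than the initial sample")
    rng = random.Random(seed)
    archive: Dict[Arch, float] = {}
    while len(archive) < n_init:  # initial samples: expensive true evaluations
        arch = tuple(rng.randrange(n_choices) for _ in range(n_genes))
        if arch not in archive:
            archive[arch] = true_error(arch)
    for _ in range(iterations):
        parents = sorted(archive, key=archive.get)[:4]
        candidates = set()
        for _ in range(n_candidates):  # cheap: mutation only, nothing is trained
            child = list(rng.choice(parents))
            child[rng.randrange(n_genes)] = rng.randrange(n_choices)
            if tuple(child) not in archive:
                candidates.add(tuple(child))
        # Rank candidates with the surrogate; truly evaluate only the top n_eval.
        ranked = sorted(candidates, key=lambda a: knn_surrogate(archive, a))
        for arch in ranked[:n_eval]:
            archive[arch] = true_error(arch)
    best = min(archive, key=archive.get)
    return best, archive


if __name__ == "__main__":
    def toy_error(arch: Arch) -> float:  # stand-in for "train, then validate"
        return float(sum((g - 2) ** 2 for g in arch))

    best, archive = surrogate_assisted_search(toy_error, n_genes=4, n_choices=5)
    print(best, archive[best], len(archive))
```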

Figure 5: Evolutionary NAS algorithm flow

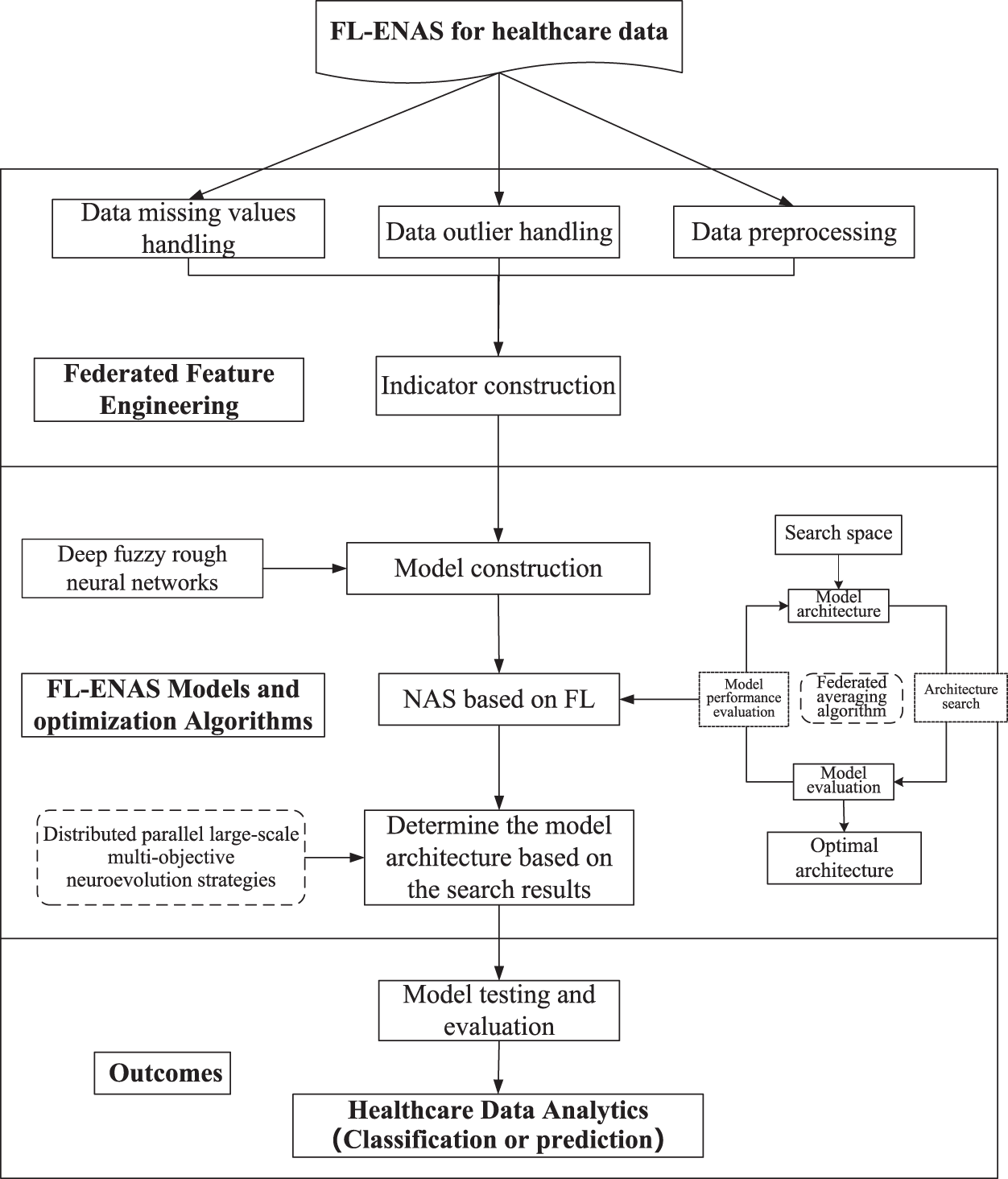

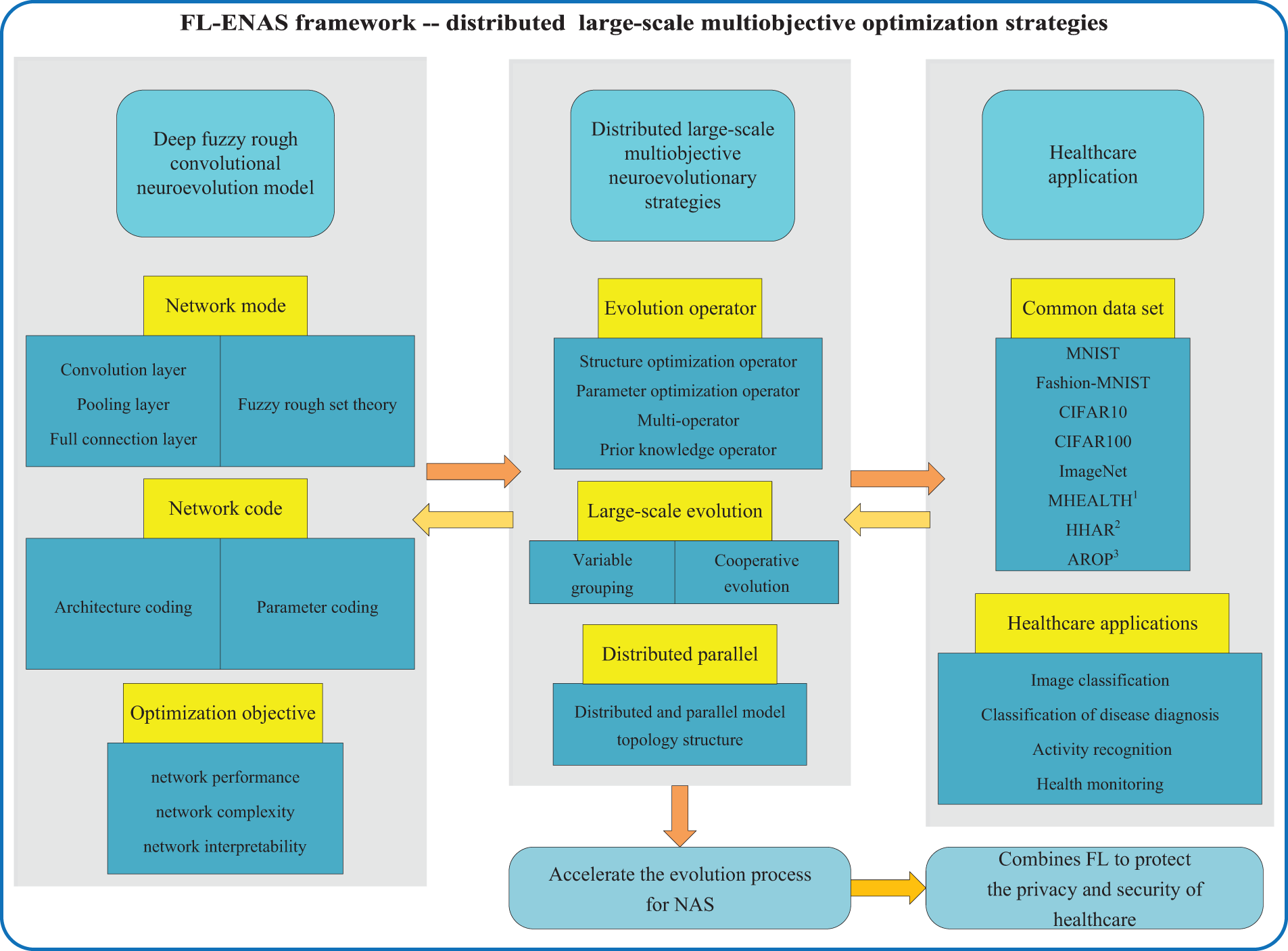

The search space is constructed along multiple dimensions of the deep neural network. For a deep CNN, for example, these dimensions include depth (number of layers), width (number of channels), convolution kernel size, and input resolution [191]. The CNN architecture considered in this paper can be decomposed into several sequentially connected blocks, and the network gradually reduces the feature map size and increases the number of channels from shallow to deep layers. The overall algorithm flow of FL-ENAS is shown in Fig. 6 and operates as follows: (1) Federated feature engineering: data preprocessing and indicator construction are performed, and the deep fuzzy rough neural network model is constructed. (2) Search space design: a search space for NAS is designed, either defined directly or derived from existing architectures, to encode the set of possible architectures that the algorithm can explore. (3) FL implementation: the FL framework is implemented to train a shared network model across multiple devices. (4) Evolutionary NAS implementation: once the FL framework is set up, distributed parallel large-scale multiobjective neuroevolution strategies are used to search for the best network architecture. (5) Architecture evaluation: the optimized architecture is evaluated on the target task. (6) Refinement: if the selected architecture does not perform well, the search space is explored further and the evolutionary parameters are adjusted. Our ultimate goal is to analyze and process user-generated healthcare data, predict or classify diseases, and effectively monitor human health in real time.
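An encoding of the block-based search space described above (depth, width, kernel size, and input resolution) might look like the sketch below. The dimensions follow the text, but every choice set and base channel count is a hypothetical assumption, since the document does not list concrete values.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical choice sets: the text names the dimensions, not their values.
RESOLUTIONS = (128, 160, 192, 224)
DEPTHS = (2, 3, 4)                     # layers per block
KERNELS = (3, 5, 7)
WIDTH_MULTS = (0.75, 1.0, 1.25)        # channel multipliers
BASE_CHANNELS = (16, 24, 40, 80, 160)  # grows from shallow to deep blocks


@dataclass(frozen=True)
class Block:
    depth: int
    kernel: int
    width_mult: float


@dataclass(frozen=True)
class Arch:
    resolution: int
    blocks: Tuple[Block, ...]

    def channels(self) -> List[int]:
        return [round(c * b.width_mult) for c, b in zip(BASE_CHANNELS, self.blocks)]


def random_block(rng: random.Random) -> Block:
    return Block(rng.choice(DEPTHS), rng.choice(KERNELS), rng.choice(WIDTH_MULTS))


def sample_arch(rng: random.Random) -> Arch:
    return Arch(rng.choice(RESOLUTIONS), tuple(random_block(rng) for _ in BASE_CHANNELS))


def mutate_arch(arch: Arch, rng: random.Random, p: float = 0.2) -> Arch:
    """Resample the resolution and each block independently with probability p."""
    res = rng.choice(RESOLUTIONS) if rng.random() < p else arch.resolution
    blocks = tuple(random_block(rng) if rng.random() < p else b for b in arch.blocks)
    return Arch(res, blocks)


def search_space_size() -> int:
    per_block = len(DEPTHS) * len(KERNELS) * len(WIDTH_MULTS)
    return len(RESOLUTIONS) * per_block ** len(BASE_CHANNELS)


if __name__ == "__main__":
    a = sample_arch(random.Random(0))
    print(a.resolution, a.channels())
    print(search_space_size())  # 57395628
```

Even these small choice sets yield over 57 million architectures. This is why the search relies on evolution and surrogates rather than enumeration.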

Figure 6: FL-ENAS framework

5.1 Deep Fuzzy Rough Neural Networks

Deep fuzzy rough neural networks (DFRNNs) can handle complex and uncertain data. The network consists of multiple layers of neurons, and each layer generates a set of fuzzy values from its inputs; rough set theory is then used to identify the most relevant features based on these fuzzy values. When the FRNN was first proposed by Sarkar et al. [192], neuroevolution was not considered; that is, its architecture was designed manually. Lingras [193] studied the serial combination of fuzzy rough and rough fuzzy approaches and explored the connection modes of fuzzy neurons and rough neurons, without addressing the automatic construction of the architecture. In [2], the network structure and parameters were encoded so that EAs could perform FRNN architecture search and parameter tuning. Although network accuracy and complexity were both taken into account, the fitness was computed as a weighted sum of the two indexes, and the weights were determined mainly by the researchers' experience, which introduces subjectivity. Studies on FRNN evolution remain scarce and do not involve MOEA-based multiobjective evolution strategies, which need further exploration.

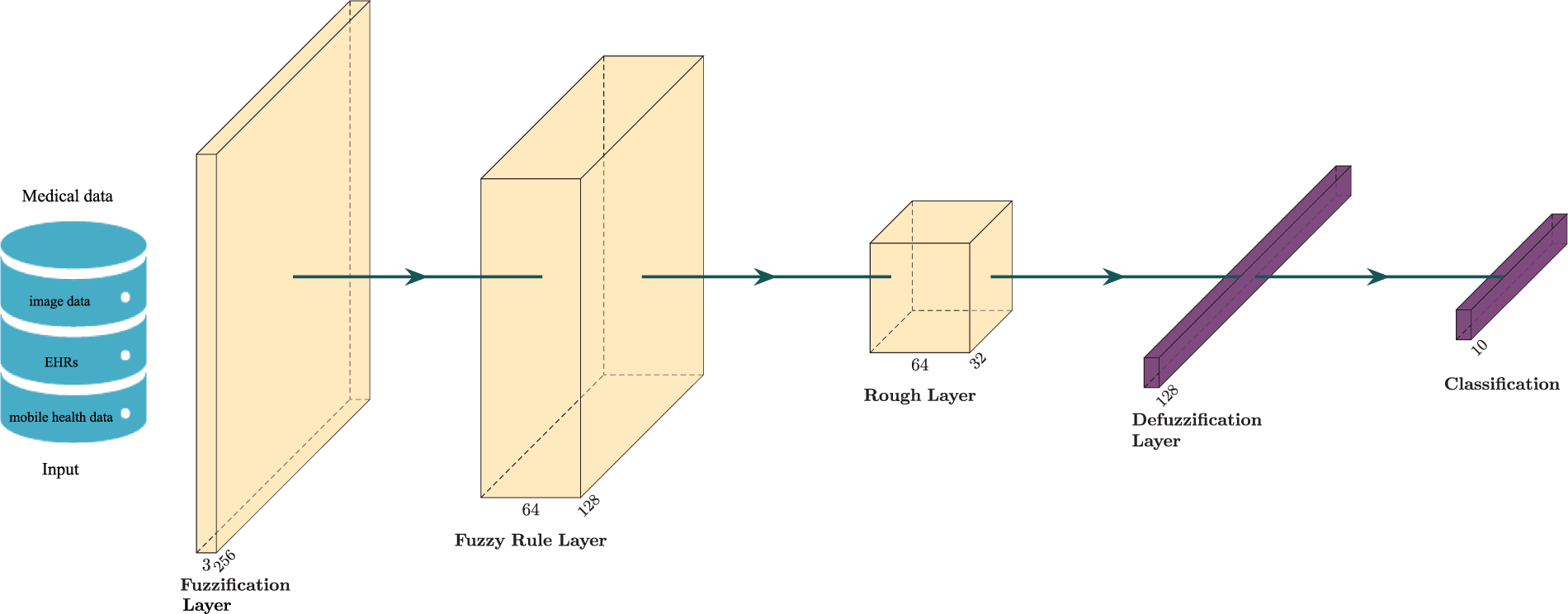

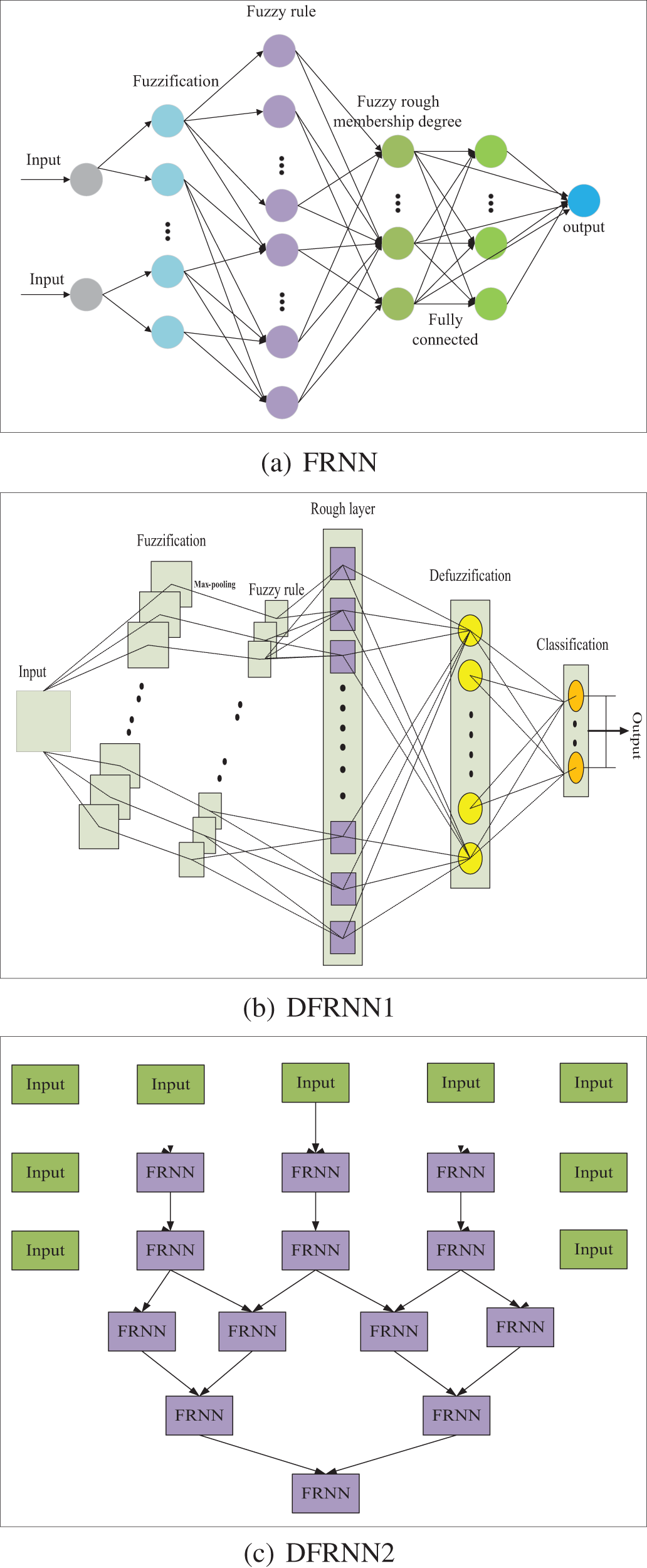

In DFRCNNs, fuzzy rough set theory is incorporated into DCNNs. As shown in Fig. 7, the proposed deep fuzzy rough neural network architecture consists of a fuzzification layer, a fuzzy rule layer, a rough layer, and a defuzzification layer, which finally outputs the classification results. Fig. 8 shows DFRNNs with three different architectures. In the basic FRNN architecture, the fuzzification layer assigns each input node to a number of clusters, and each node in the fuzzy rule layer connects to at most one cluster of each input; the classification is output after the fuzzy rough membership layer. DFRNN1 extends this architecture to CNNs: the inputs are images, and the CNN is interpreted and constructed based on fuzzy rough theory. DFRNN2 is also built on the basic FRNN architecture; it includes multiple FRNN modules, each containing a highly interpretable fuzzification layer, fuzzy rule layer, fuzzy rough membership layer, defuzzification layer, etc., and these modules are stacked in depth to handle complex inputs. In this way, fuzzy rough theory is combined with CNNs to improve interpretability. Based on the classical modules of existing DNNs, including convolutional and fully connected modules as well as the various modules of the fuzzy rough neural network, an encoding scheme is designed to flexibly generate diverse DNN models and construct a general deep fuzzy rough CNN architecture. Multiple attributes of the DNN are quantified, and multiple optimization objectives, including network performance, complexity, and interpretability, are extracted to optimize DNNs comprehensively and improve optimization performance. The optimization of the deep neuroevolution model is treated as a large-scale multiobjective optimization problem, for which a large-scale multiobjective evolution strategy is proposed.
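
A minimal NumPy sketch of the fuzzification and fuzzy rule layers of the basic FRNN follows. Gaussian membership functions, the product t-norm for rule firing, and the final normalized, class-weighted aggregation (a simple stand-in for the fuzzy rough membership and defuzzification layers, whose exact form is not given here) are all assumptions, and the function names are hypothetical. Each rule stores one cluster index per input, or -1 when the input is unused, matching the "at most one cluster of each input" connectivity.

```python
import numpy as np


def fuzzify(x, centers, sigmas):
    """Fuzzification layer: Gaussian membership of each input in each of its clusters.

    x: (n_inputs,); centers, sigmas: (n_inputs, n_clusters) -> (n_inputs, n_clusters)
    """
    return np.exp(-((x[:, None] - centers) ** 2) / (2.0 * sigmas ** 2))


def rule_layer(memberships, rules):
    """Fuzzy rule layer: rule firing strength = product of its selected memberships.

    Each rule lists one cluster index per input, or -1 if the input is not used.
    """
    strengths = []
    for rule in rules:
        s = 1.0
        for i, c in enumerate(rule):
            if c >= 0:
                s *= memberships[i, c]
        strengths.append(s)
    return np.array(strengths)


def classify(x, centers, sigmas, rules, rule_class_weights):
    """Normalize rule strengths and aggregate them into class scores
    (a simplified stand-in for the fuzzy rough membership/defuzzification layers)."""
    f = rule_layer(fuzzify(x, centers, sigmas), rules)
    f = f / (f.sum() + 1e-12)
    scores = f @ rule_class_weights  # (n_rules,) @ (n_rules, n_classes)
    return int(np.argmax(scores)), scores
```

Because every rule's firing strength is an explicit, inspectable number tied to named input clusters, a prediction can be traced back to the rules that produced it, which is the interpretability argument made for these layers.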
Next, to optimize DFRNN models in the FL-ENAS framework, we propose large-scale multiobjective neuroevolution strategies and distributed parallel neuroevolution strategies. Fig. 9 illustrates the distributed large-scale multiobjective optimization strategies in the FL-ENAS framework.

Figure 7: Deep fuzzy rough neural network architecture

Figure 8: DFRCNN architectures

Figure 9: Distributed large-scale multiobjective optimization strategies in FL-ENAS framework

5.2 Large-Scale Multiobjective Neuroevolution Strategies

Evolution operators are crucial parts of large-scale multiobjective evolution strategies. First, the encoding of the model architecture needs to be optimized, for which discrete evolution operators are adopted. Because crossover operators may destroy useful architectures, some neuroevolution algorithms eliminate crossover altogether. To limit disruptive changes during crossover, statistics can be collected on the two selected parents: characteristics common to both are inherited directly, while for differing characteristics the corresponding variables are activated with certain probabilities.
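
The statistics-based crossover described above might look like this for a binary architecture encoding (1 = layer or connection active). The encoding, the function names, and the choice of each gene's activation frequency in the population as its activation probability are assumptions made for illustration.

```python
import random


def activation_frequencies(population):
    """Fraction of individuals in which each gene is active; one simple statistic
    that can serve as the activation probability for differing genes."""
    n = len(population)
    return [sum(ind[i] for ind in population) / n for i in range(len(population[0]))]


def common_trait_crossover(parent_a, parent_b, probs, rng):
    """Genes shared by both parents are inherited unchanged; each differing gene
    is activated (set to 1) with its own probability probs[i]."""
    child = []
    for i, (a, b) in enumerate(zip(parent_a, parent_b)):
        if a == b:
            child.append(a)  # common characteristic: keep it intact
        else:
            child.append(1 if rng.random() < probs[i] else 0)
    return child
```

Because common genes are never altered, the child can only differ from its parents where the parents already disagree, which keeps crossover from breaking structures that both parents share.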

Second, optimizing the parameter encoding of the models is also important. The variables used for parameter encoding are continuous, so continuous evolution operators are used for optimization. Many operations of an EA are driven by random numbers, whereas the sequences generated by chaotic maps are nonlinear and unpredictable; chaotic sequences are therefore proposed to replace traditional uniform random numbers and improve operator performance. The chaotic maps [194,195] include the Chebyshev, Circle, Gauss, and Logistic maps. Here, the piecewise linear map can be summarized as follows:

$$
x_{k+1} =
\begin{cases}
\dfrac{x_k}{p}, & 0 \le x_k < p,\\[4pt]
\dfrac{x_k - p}{0.5 - p}, & p \le x_k < 0.5,\\[4pt]
\dfrac{1 - p - x_k}{0.5 - p}, & 0.5 \le x_k < 1 - p,\\[4pt]
\dfrac{1 - x_k}{p}, & 1 - p \le x_k < 1,
\end{cases}
$$

where $p \in (0, 0.5)$ is the control parameter and $x_k \in [0, 1)$ is the $k$-th value of the chaotic sequence.
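
The following sketch uses a chaotic sequence in place of uniform random numbers inside a simple continuous mutation operator. It assumes the common four-piece form of the piecewise linear chaotic map with control parameter `p` in (0, 0.5); the mutation scheme (resample a parameter within bounds when a chaotic number falls below `rate`) and all names are hypothetical.

```python
def piecewise_linear_map(x, p=0.4):
    """One step of the four-piece piecewise linear chaotic map (x in [0, 1])."""
    if x < p:
        return x / p
    if x < 0.5:
        return (x - p) / (0.5 - p)
    if x < 1 - p:
        return (1 - p - x) / (0.5 - p)
    return (1 - x) / p


def chaotic_sequence(x0, n, p=0.4):
    """Iterate the map n times from x0 and collect the values."""
    xs, x = [], x0
    for _ in range(n):
        x = piecewise_linear_map(x, p)
        xs.append(x)
    return xs


def chaotic_mutation(weights, lower, upper, x0=0.7, rate=0.1, p=0.4):
    """Mutate continuous parameters, drawing every 'random' number from the chaotic sequence."""
    seq = chaotic_sequence(x0, 2 * len(weights), p)
    out = []
    for i, w in enumerate(weights):
        if seq[2 * i] < rate:  # chaotic number decides whether to mutate this gene
            w = lower + seq[2 * i + 1] * (upper - lower)  # chaotic resample within bounds
        out.append(w)
    return out
```

The sequence is fully determined by `x0` and `p`, so different seeds `x0` play the role that random seeds play for a uniform generator.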

Third, multiple operators need to be considered. Different evolutionary operators have different strengths, and the most effective operators must be identified empirically for each specific neuroevolution problem. Different operators (or the same operator with different parameters) affect optimization differently at different stages of evolution, so combining several operators may be beneficial.
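
One common way to combine operators is adaptive operator selection; the probability-matching sketch below is a swapped-in illustration, not a method named in the source. Operators whose offspring recently improved fitness are picked more often, while the floor `p_min` keeps every operator available because an operator's usefulness changes across stages of evolution. The class name, `p_min`, `decay`, and the reward definition are assumptions.

```python
import random


class ProbabilityMatching:
    """Adaptive operator selection by probability matching."""

    def __init__(self, n_ops, p_min=0.1, decay=0.8):
        assert n_ops * p_min <= 1.0
        self.quality = [1.0] * n_ops
        self.p_min, self.decay = p_min, decay

    def probabilities(self):
        # Every operator gets at least p_min; the rest is shared by quality.
        k, total = len(self.quality), sum(self.quality) + 1e-12
        return [self.p_min + (1 - k * self.p_min) * q / total for q in self.quality]

    def select(self, rng):
        return rng.choices(range(len(self.quality)), weights=self.probabilities())[0]

    def update(self, op, reward):
        # Recency-weighted average of the reward (e.g., non-negative fitness gain).
        self.quality[op] = self.decay * self.quality[op] + (1 - self.decay) * reward
```

In a neuroevolution loop, `select` would choose the operator used to create each offspring, and `update` would be called with that offspring's improvement over its parent.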

Fourth, operators can exploit prior knowledge. For example, the pseudo-inverse method [196], which is accurate and fast, is used to compute the output-layer weights in width learning.
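
As a sketch of this prior-knowledge shortcut, the output-layer weights can be obtained in one step as the least-squares solution `W = pinv(H) @ Y`, where `H` holds the hidden (feature) activations, `Y` the targets, and `pinv` is the Moore–Penrose pseudo-inverse. The function name and the optional ridge term are assumptions added for illustration.

```python
import numpy as np


def output_weights(H, Y, ridge=0.0):
    """Least-squares output-layer weights W minimizing ||H W - Y||.

    H: (n_samples, n_features) hidden activations; Y: (n_samples, n_outputs).
    """
    if ridge > 0:
        # Regularized variant: (H^T H + ridge * I)^(-1) H^T Y
        return np.linalg.solve(H.T @ H + ridge * np.eye(H.shape[1]), H.T @ Y)
    return np.linalg.pinv(H) @ Y
```

Only the output layer is solved this way; an evolutionary operator could therefore search the hidden structure while the output weights are computed analytically instead of being evolved.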

Large-scale evolution can adopt variable grouping and cooperative coevolution [5], which requires large-scale problems to be grouped efficiently. The encoded parameters and architecture variables are divided into groups, and any group that still contains many variables can be divided further. Partitioning strategies include network layer partitioning, node partitioning, module partitioning, and matrix cutting. To achieve large-scale evolution, coevolutionary methods are needed, particularly distributed parallel large-scale evolution. A complete NN model is built by combining the variables of all groups into one solution. To reduce communication costs and the frequency of information exchange, each subpopulation of a variable group temporarily stores the information of the variables in the other groups. Multiple objectives, such as training accuracy, model size, and FLOPs, are optimized simultaneously during NAS. In this way, a set of NN architectures can be discovered that balances these objectives, achieving high performance while minimizing computational cost.
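
A minimal cooperative coevolution sketch with variable grouping is shown below. It assumes contiguous static groups (standing in for, e.g., layer partitioning), a simple Gaussian hill-climbing update within each subpopulation, and a single shared context vector that plays the role of the stored values of the other groups' variables. It optimizes one toy objective for brevity, so it illustrates only the grouping and assembly mechanics, not the multiobjective part; all names are hypothetical.

```python
import random


def group_variables(n_vars, group_size):
    """Static grouping into contiguous index blocks (e.g., one block per layer)."""
    return [list(range(i, min(i + group_size, n_vars)))
            for i in range(0, n_vars, group_size)]


def cooperative_coevolution(fitness, n_vars, group_size=4, pop_size=6,
                            generations=60, sigma=0.2, seed=0):
    rng = random.Random(seed)
    groups = group_variables(n_vars, group_size)
    # Context vector: best complete solution so far; it supplies the values of
    # all other groups when one group's partial solution is evaluated.
    context = [rng.uniform(-1.0, 1.0) for _ in range(n_vars)]
    best = fitness(context)
    subpops = [[[context[i] + rng.gauss(0, sigma) for i in g] for _ in range(pop_size)]
               for g in groups]

    def assemble(g, part):
        """Combine one group's variables with the context into a complete solution."""
        full = list(context)
        for idx, v in zip(g, part):
            full[idx] = v
        return full

    for _ in range(generations):
        for g, pop in zip(groups, subpops):
            for j, ind in enumerate(pop):
                child = [v + rng.gauss(0, sigma) for v in ind]
                if fitness(assemble(g, child)) < fitness(assemble(g, ind)):
                    pop[j] = ind = child
                f = fitness(assemble(g, ind))
                if f < best:
                    best, context = f, assemble(g, ind)
    return context, best
```

In a distributed setting, each group's subpopulation would run on its own node and refresh its copy of the context only occasionally, which is the communication saving described above.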

5.3 Distributed Parallel Neuroevolution Strategies

A multi-layer distributed parallel architecture suited to the above algorithm is proposed. Considering the characteristics of the hardware architecture and the requirements of practical medical engineering problems, each variable component obtained by variable grouping is assigned to a different node for evolution and cooperation. If each island corresponds to multiple nodes, the master–slave model can be applied to distribute the fitness evaluation of the individuals on the island across these nodes, forming the island–master–slave model. To further increase parallelism and diversity, multiple populations can be used, each optimizing one or several objectives; in the top-level island model, these form a cluster of island–island–master/slave models. Because an NN can be loaded onto multiple GPUs or CPUs for parallel evaluation, the construction can be extended further to island–master/slave–master/slave and island–island–master/slave–master/slave models to improve evaluation efficiency. Ultimately, a multi-layer distributed parallel architecture is constructed. In addition, an agent-assisted multi-terminal collaborative parallel architecture is proposed. To evaluate network architectures, an agent model with low computational complexity is used to estimate their performance; by using this agent model to assist evaluation, a multi-terminal collaborative parallel architecture is constructed to make full use of computing resources.
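
The island–master–slave idea can be sketched with Python's `concurrent.futures`: each island evolves its own population, the master farms the island's fitness evaluations out to a worker pool (the slaves), and islands periodically exchange their best individuals by ring migration. Threads stand in for nodes or GPUs here, and the (μ+μ) Gaussian-mutation update, the ring topology, and all parameter names are assumptions for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def evolve_island(pop, fitness, pool, rng, sigma=0.1):
    """One generation on one island; fitness evaluations run on the worker pool."""
    children = [[v + rng.gauss(0, sigma) for v in ind] for ind in pop]
    candidates = pop + children
    scores = list(pool.map(fitness, candidates))  # master -> slaves, results in order
    ranked = sorted(zip(scores, range(len(candidates))))
    return [candidates[i] for _, i in ranked[:len(pop)]]  # keep the best (mu + mu)


def island_master_slave(fitness, dim, n_islands=4, pop_size=8, generations=30,
                        migrate_every=5, workers=4, seed=0):
    rng = random.Random(seed)
    islands = [[[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(pop_size)]
               for _ in range(n_islands)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for gen in range(1, generations + 1):
            islands = [evolve_island(pop, fitness, pool, rng) for pop in islands]
            if gen % migrate_every == 0:
                # Ring migration: each island's best replaces the next island's worst.
                bests = [pop[0] for pop in islands]
                for k, pop in enumerate(islands):
                    pop[-1] = list(bests[k - 1])
    return min((ind for pop in islands for ind in pop), key=fitness)
```

For CPU-bound fitness functions, a `ProcessPoolExecutor` (with a picklable, module-level fitness function) or a cluster scheduler would replace the thread pool; the island and migration logic stays the same.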

Based on distributed parallel technology, parallel EAs can accelerate the evolutionary process of NAS and improve the efficiency of healthcare data analysis. In the FL-ENAS framework, the distributed parallel neuroevolution strategy optimizes the NAS process and is combined with FL to protect the privacy and security of healthcare data. The strategy uses multiple GPUs for simultaneous training to accelerate the training process. In FL-ENAS, multiple federated clients search simultaneously under the distributed parallel neuroevolution strategy, which improves search efficiency for healthcare data processing and analysis.
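
The source does not specify the FL aggregation rule; the sketch below assumes the widely used federated averaging (FedAvg) scheme, with a toy linear-regression model standing in for the searched network. Each client trains on its private data locally, and only model parameters are averaged on the server, weighted by local sample counts. All function names are hypothetical.

```python
import numpy as np


def fed_avg(client_params, client_sizes):
    """FedAvg-style aggregation: per-tensor average weighted by local sample counts."""
    total = float(sum(client_sizes))
    return [
        sum(params[t] * (n / total) for params, n in zip(client_params, client_sizes))
        for t in range(len(client_params[0]))
    ]


def local_update(w, X, y, lr=0.1, epochs=5):
    """Client-side training on private data (toy linear regression, gradient descent)."""
    w = w.copy()
    for _ in range(epochs):
        w -= lr * 2.0 * X.T @ (X @ w - y) / len(y)
    return w


def federated_round(global_w, clients, lr=0.1, epochs=5):
    """One round: clients train locally; the server only sees and averages parameters."""
    updates = [[local_update(global_w, X, y, lr, epochs)] for X, y in clients]
    sizes = [len(y) for _, y in clients]
    return fed_avg(updates, sizes)[0]
```

The raw arrays `X` and `y` never leave `local_update`; only the updated weights reach the server, which is the property that allows the search to run over distributed healthcare data.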

Many publicly available data sets can be used, including MNIST, Fashion-MNIST, CIFAR-10, CIFAR-100, ImageNet, MHEALTH¹, the activity recognition with healthy older people using a batteryless wearable sensor data set (AROP)², and the heterogeneity activity recognition data set (HHAR)³. The proposed FL-ENAS framework can be tested, evaluated, and improved on these data sets for image classification, human activity recognition, and other health-related tasks.

This FL-ENAS framework can be applied to disease prevention and treatment. When patient data are stored across multiple healthcare institutions or wearable devices, the framework can support the development of a distributed healthcare data analysis and processing system. This distributed approach allows researchers and healthcare professionals to gain insights from a broader perspective while preserving data ownership and complying with data protection regulations. Diseases can be predicted from these healthcare data, facilitating timely countermeasures and the prevention and control of disease risks. By analyzing healthcare data from a diverse set of patients, FL-ENAS can identify patterns that may enable more accurate diagnosis and treatment planning, and it can help provide personalized recommendations to healthcare providers, tailoring treatment plans to individual patients based on large-scale data analysis.

Crucially, this framework can be used for real-time monitoring of human health status. The multiobjective FL-ENAS framework will perform real-time healthcare data analysis with low latency as well as good accuracy, interpretability, and privacy protection. As shown in Fig. 10, health-related data can be collected from various sources such as wearable devices, smartphones, electronic health records, and medical sensors. Using these sources, the framework automatically searches for the optimal architecture for healthcare data analysis and real-time health monitoring. It allows the model to learn from a diverse range of local data sources, enabling personalized and accurate health monitoring without centralizing the data. By continuously and automatically updating the global model with local device data, the FL-ENAS framework enables real-time monitoring of human health, so that health anomalies such as abnormal heart rate or blood pressure can be detected in time to trigger immediate intervention or alert notifications. Overall, the FL-ENAS framework provides a powerful, privacy-preserving approach to disease prevention and treatment as well as real-time health monitoring. It balances data availability with privacy protection, supporting the development of robust models for healthcare data analysis and processing.

Figure 10: FL-ENAS framework application

To guide readers conducting research on NAS via EAs, future directions are presented in the following subsections.

6.1 NAS Based on Large-Scale MOEAs

A future research direction is to achieve NAS using large-scale MOEAs. Current work remains limited by the curse of dimensionality, insufficient convergence pressure, and the difficulty of maintaining population diversity during neuroevolution. Although some works enhance MOEAs through dimensionality reduction and local search operators, their performance gains on many large-scale multiobjective problems are still very limited. One possible reason is that most existing large-scale MOEAs simply reuse research results from large-scale single-objective optimization and many-objective optimization, while the specific difficulties of large-scale multiobjective optimization itself have not been studied sufficiently. Although large-scale multiobjective optimization is related to these two research directions to some extent, there are essential differences. On the one hand, multiobjective optimization seeks a set of optimal compromise solutions, whereas single-objective optimization seeks only one global optimum; therefore, large-scale multiobjective optimization cannot be solved effectively by simply applying the results of large-scale single-objective optimization. On the other hand, in multiobjective optimization problems the mapping between the decision vector and the objectives is often very complicated, so methods designed for high-dimensional objective spaces may not be effective in high-dimensional decision spaces. Therefore, more efficient grouping and dimensionality reduction methods need to be studied for large-scale multiobjective NAS.
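
To make the grouping issue concrete, the sketch below applies differential grouping, a variable-interaction test from large-scale single-objective optimization, naively to each objective and merges the detected interactions. It is an illustrative assumption, not a method proposed in the source, and it shows the kind of direct transfer discussed above: the resulting grouping depends on which objectives are included, so a decomposition that suits one objective need not suit the multiobjective problem. The function names, `delta`, and `eps` are hypothetical.

```python
def interacts(f, x, i, j, delta=1.0, eps=1e-6):
    """Differential-grouping check: x_i and x_j interact under f if the change in f
    caused by perturbing x_i depends on whether x_j is also perturbed."""
    def shifted(*idx):
        y = list(x)
        for k in idx:
            y[k] += delta
        return y

    d1 = f(shifted(i)) - f(list(x))
    d2 = f(shifted(i, j)) - f(shifted(j))
    return abs(d1 - d2) > eps


def group_by_interaction(objectives, x):
    """Greedy grouping: variable j joins i's group if they interact under ANY objective."""
    remaining = list(range(len(x)))
    groups = []
    while remaining:
        i = remaining.pop(0)
        group = [i]
        for j in list(remaining):
            if any(interacts(f, x, i, j) for f in objectives):
                group.append(j)
                remaining.remove(j)
        groups.append(group)
    return groups
```

For instance, with f1(v) = v0·v1 + v2² + v3² alone, variables 2 and 3 are separable, but adding f2(v) = (v2 + v3)² merges them into one group; the more objectives there are, the coarser such merged groupings tend to become.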