Open Access


ARTICLE

Transductive Transfer Dictionary Learning Algorithm for Remote Sensing Image Classification

1 Aliyun School of Big Data, Changzhou University, Changzhou, 213164, China

2 Hua Lookeng Honors College, Changzhou University, Changzhou, 213164, China

* Corresponding Author: Tongguang Ni. Email:

(This article belongs to the Special Issue: Computer Modeling for Smart Cities Applications)

Computer Modeling in Engineering & Sciences 2023, 137(3), 2267-2283. https://doi.org/10.32604/cmes.2023.027709

Received 10 November 2022; Accepted 24 March 2023; Issue published 03 August 2023

Abstract

To create a green and healthy living environment, people have placed higher requirements on the refined management of ecological resources. Technologies including satellite remote sensing, the Internet of Things, artificial intelligence, and big data can be combined to build smart environmental monitoring systems. Remote sensing image classification is an important research topic in ecological environmental monitoring. Remote sensing images contain rich spatial and multi-temporal information, but they also pose challenges such as the difficulty of obtaining class labels and low classification accuracy. To address these problems, this study develops a transductive transfer dictionary learning (TTDL) algorithm. In TTDL, the source and target domains are transformed from the original sample space into a common subspace. TTDL trains a shared discriminative dictionary in this subspace, which establishes associations between the domains and yields sparse representations of both source and target domain data. To obtain an effective shared discriminative dictionary, a triplet-induced ordinal locality preserving term, a Fisher discriminant term, and a graph Laplacian regularization term are introduced into TTDL. The triplet-induced ordinal locality preserving term on the subspace projection preserves the local structure of the data in the low-dimensional subspace. The Fisher discriminant term on the dictionary increases the differences among sub-dictionaries through intra-class and inter-class scatters. The graph Laplacian regularization term on the sparse representation maintains the manifold structure using a semi-supervised weight graph matrix, which indirectly improves the discriminative performance of the dictionary. TTDL is tested on several remote sensing image datasets and shows strong discriminative classification performance.

The ecological environment is closely related to human life. A good ecological environment is the foundation of human survival and health, and maintaining a healthy ecological environment has become a consensus all over the world. Ecological environment monitoring refers to monitoring activities carried out to accurately, timely, and comprehensively reflect the status and changing trends of the ecological environment, covering mountains, water, forests, fields, lakes, and grasslands. The rapid development of satellite and aviation technology has made remote sensing an important part of ecological environmental monitoring [1]. Satellite remote sensing images provide a large amount of real-time and reliable ecological environmental information. Remote sensing image classification is the process of classifying and recognizing scenes in real time based on the content extracted from images, and improving the accuracy of scene classification brings many conveniences to ecological environmental monitoring. Remote sensing scene classification assigns an image to a high-level semantic class [2]. Unlike pixel-based semantic segmentation, which focuses on which pixels correspond to a certain feature, scene classification treats a small-scale image as a unit that carries its own semantic information and assigns it to a semantic class through semantic features. The scene class therefore reflects the overall cognition of a remote sensing image. The texture and spatial features of a scene, together with the relationships between its objects, describe not only the underlying physical features of the image but also its semantic information. Dictionary learning has achieved success in this field owing to its sparse representation and reconstruction capabilities. For example, Soltani-Farani et al. [3] developed a dictionary learning algorithm based on spectral and contextual characteristics for hyperspectral image classification, in which linear combinations of two types of spectral features serve as the common elements of the dictionary. Vu et al. [4] developed a dictionary learning model with shared and class-specific dictionaries, in which Fisher discrimination and low-rank constraints are imposed on the learned dictionaries. Geng et al. [5] used an online dictionary learning algorithm with a special atom selection strategy and adopted particle swarm optimization in the model update phase.

In scene classification, different scene classes may contain similar feature representations and spatial texture structures [6]. For example, commercial and residential areas often both contain houses, vehicles, highways, and interchanges, and bridges often contain roads, vehicles, and other objects. Conversely, scenes of the same class can differ considerably, such as parking lots with and without cars. Classifying scenes effectively and efficiently is therefore a challenging problem. In addition, natural scenes are rich and diverse, and even the same ground object may exhibit different characteristics under different temporal and spatial conditions, which results in “the same object with different spectra” and “different objects with the same spectrum”. Because of such differences, most remote sensing application fields lack suitable labeled sample sets. At present, most artificial intelligence-based remote sensing image processing methods adopt supervised learning algorithms. These algorithms require sufficiently fine-grained labeling of the target images and use the manually labeled samples to train automatic classifiers on data with the same feature distribution. It is therefore difficult to build a universal and reusable remote sensing image processing model with traditional supervised learning algorithms [7].

Transfer learning uses a related dataset (the source domain) with sufficient class labels to calibrate a target domain with insufficient labels (or none) when the two domains have different feature distributions [8]. In practical applications, many pre-labeled images and open-source public datasets are readily available and can serve as the source domain. However, because of differences in sensors, processing algorithms, atmospheric conditions, solar radiation, ground features, building styles, and vegetation characteristics during data acquisition, the distributions of different domains often differ. To address this problem, Zhou et al. [9] used correlation alignment (CORAL) to achieve domain adaptation and capture the texture of the image structure. Tuia et al. [10] used the spectral feature alignment (SFA) algorithm, which applies singular value decomposition to compute a mapping function for the feature representation, so that the two domains learn common, domain-independent features in a shared latent space. Wang et al. [11] used structural correspondence learning to model the correlation of features.
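To make the idea of correlation alignment concrete, the following NumPy sketch shows the commonly used CORAL recipe: whiten the source features with their own covariance, then re-color them with the target covariance. It is an illustration only; the function names and the ridge constant `eps` are assumptions, not details taken from [9].

```python
import numpy as np


def _sym_matrix_power(C, power):
    """Raise a symmetric positive-definite matrix to a real power via eigh."""
    vals, vecs = np.linalg.eigh(C)
    vals = np.clip(vals, 1e-12, None)  # guard against tiny negative eigenvalues
    return (vecs * vals ** power) @ vecs.T


def coral(Xs, Xt, eps=1.0):
    """Align the second-order statistics of source features Xs to target Xt.

    Xs: (n_s, d) source samples; Xt: (n_t, d) target samples (rows = samples).
    eps: ridge added to both covariances (assumed default, for stability).
    """
    d = Xs.shape[1]
    Cs = np.cov(Xs, rowvar=False) + eps * np.eye(d)
    Ct = np.cov(Xt, rowvar=False) + eps * np.eye(d)
    whiten = _sym_matrix_power(Cs, -0.5)   # remove source correlations
    recolor = _sym_matrix_power(Ct, 0.5)   # impose target correlations
    return Xs @ whiten @ recolor
```

With `eps=0` and full-rank covariances, the covariance of the aligned source features equals the target covariance exactly; a positive `eps` trades exactness for numerical stability.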

In this paper, we propose a transductive transfer dictionary learning (TTDL) algorithm for remote sensing image classification. TTDL transforms the source and target domains into a common subspace in which the samples of the two domains are re-encoded to have similar feature distributions. In this subspace, a shared dictionary is trained to establish the association between the two domains and obtain the sparse representations of samples from both domains. In addition, a triplet-induced ordinal locality preserving term on the subspace projection, a Fisher discriminant term on the dictionary, and a graph Laplacian regularization term on the sparse representation are introduced into TTDL. The triplet-induced ordinal locality preserving term considers the ranking information of each sample’s neighborhood, so it describes the local geometry of the data more accurately. Fisher’s criterion directly constrains the intra-class and inter-class distances of the learned dictionary rather than those of the sparse representation. As a direct benefit, dictionary atoms of the same class become more compact and the similarity between different classes is reduced, which enhances both the reconstruction ability and the discriminative ability of the dictionary. The graph Laplacian regularization term on the sparse representation follows the principle that sparse representations of the same class should be as similar as possible and those of different classes as different as possible. The semi-supervised weight graph matrix in the source domain is built from the known class labels. Since the weight graph matrix in the target domain is unknown, it is treated as a variable during model optimization and reaches its optimal solution when the algorithm converges. Finally, TTDL obtains a discriminative dictionary and sparse representations that improve cross-dataset remote sensing image classification.

The contributions of this paper are as follows:

(1) A remote sensing image classification algorithm based on cross-dataset transfer learning is proposed. Different domains are projected into a common subspace to eliminate distribution differences, and a shared dictionary establishes the relationship between the two domains.

(2) Fisher’s criterion on the dictionary improves the intra-class compactness and inter-class differences of the sub-dictionaries. In this way, each sub-dictionary reconstructs the training samples of its corresponding class, which enhances the discrimination of the dictionary.

(3) The unsupervised triplet graph in the triplet-induced ordinal locality preserving term exploits the local structure information of the data, and the semi-supervised weight graph matrix maintains the manifold structure. Thereby, the discriminative ability of the subspace and the sparse representation is enhanced.

Let

where

In classification tasks, the new test sample

The function
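Dictionary learning methods of this kind rely on a sparse coding step. Because the equations of this subsection are missing here, the sketch below is a generic, swapped-in illustration rather than the formulation used in the paper: ISTA (iterative shrinkage-thresholding) approximately solves the ℓ1-relaxed problem min_x ½‖y − Dx‖² + λ‖x‖₁ for a fixed dictionary D. The function names and the default `lam` are assumptions.

```python
import numpy as np


def soft_threshold(v, tau):
    """Elementwise shrinkage: the proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def ista(D, y, lam=0.1, n_iter=500):
    """Approximately solve min_x 0.5*||y - D x||^2 + lam*||x||_1 with ISTA.

    D: (m, K) dictionary with atoms as columns; y: (m,) signal.
    """
    # Step size 1/L, where L = ||D||_2^2 is the Lipschitz constant of the gradient.
    L = np.linalg.norm(D, 2) ** 2
    x = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ x - y)          # gradient of the quadratic data term
        x = soft_threshold(x - grad / L, lam / L)
    return x
```

For an orthonormal dictionary, ISTA reaches the exact minimizer, elementwise soft-thresholding of y, after a single iteration.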

2.2 Fisher Discrimination Dictionary Learning

Fisher discrimination dictionary learning (FDDL) [13] aims to obtain a structured dictionary from training data. Using Fisher’s criterion, the learned sparse representations of different classes are far apart, while those of the same class are close to each other. The objective function of FDDL is,

where

where
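For reference, the discriminative coefficient term in FDDL [13] has the form f(X) = tr(S_W(X)) − tr(S_B(X)) + η‖X‖_F², where S_W and S_B are the within-class and between-class scatter matrices of the sparse codes. The NumPy sketch below evaluates this quantity for a given coefficient matrix; the function name, the column-per-sample layout, and the default `eta` are assumptions made for illustration.

```python
import numpy as np


def fisher_coefficient_term(X, labels, eta=1.0):
    """FDDL-style discriminative term on sparse codes.

    X: (K, n) coefficient matrix, one column per training sample.
    labels: (n,) integer class labels.
    Returns tr(S_W) - tr(S_B) + eta * ||X||_F^2.
    """
    labels = np.asarray(labels)
    m = X.mean(axis=1, keepdims=True)          # global mean code
    tr_sw = 0.0
    tr_sb = 0.0
    for c in np.unique(labels):
        Xc = X[:, labels == c]
        mc = Xc.mean(axis=1, keepdims=True)    # class mean code
        tr_sw += np.sum((Xc - mc) ** 2)        # trace of within-class scatter
        tr_sb += Xc.shape[1] * np.sum((mc - m) ** 2)  # trace of between-class scatter
    return tr_sw - tr_sb + eta * np.sum(X ** 2)
```

Compact classes lower the first trace and well-separated class means lower the second, so smaller values indicate more discriminative codes.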

3 Transductive Transfer Dictionary Learning Algorithm

Let

where

The triplet-induced ordinal locality preserving term

Denote

Eq. (8) can be represented as,

where

where
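The exact form of the triplet-induced ordinal locality preserving term is not recoverable from this text. As a hedged illustration of the underlying idea only, the sketch below builds triplets (i, j, l) from each sample's k nearest neighbors in the original space, where neighbor j is ranked closer to i than neighbor l, and then penalizes a projection P that reverses that ordering in the subspace. The neighborhood size `k`, the hinge form, the `margin`, and both function names are hypothetical choices, not the paper's definition.

```python
import numpy as np


def build_ordinal_triplets(X, k=3):
    """Return triplets (i, j, l): j is ranked closer to i than l among i's k nearest neighbors.

    X: (d, n) data matrix with samples as columns.
    """
    n = X.shape[1]
    dist = np.sum((X[:, :, None] - X[:, None, :]) ** 2, axis=0)  # (n, n) squared distances
    triplets = []
    for i in range(n):
        order = np.argsort(dist[i])
        neighbors = [j for j in order if j != i][:k]   # ranked neighbor list of sample i
        for a in range(len(neighbors)):
            for b in range(a + 1, len(neighbors)):
                triplets.append((i, neighbors[a], neighbors[b]))
    return triplets


def ordinal_violation_loss(P, X, triplets, margin=0.0):
    """Hinge penalty on triplets whose neighbor ranking is reversed by the projection.

    P: (d, r) projection matrix; the subspace representation is P.T @ X.
    """
    Z = P.T @ X
    loss = 0.0
    for i, j, l in triplets:
        d_ij = np.sum((Z[:, i] - Z[:, j]) ** 2)
        d_il = np.sum((Z[:, i] - Z[:, l]) ** 2)
        loss += max(0.0, d_ij - d_il + margin)  # j should stay closer to i than l
    return loss
```

The identity projection keeps every ranking intact and incurs zero loss, while a projection that collapses a discriminating direction can reverse neighbor orders and is penalized.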

Let

Substituting Eqs. (14) and (15) into Eq. (11),

where
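Eqs. (14) and (15) are missing from this text, so their exact form cannot be reproduced here. One standard way to express atom-level Fisher scatters as trace terms uses a class-averaging matrix: with E = blockdiag((1/n_c)·11ᵀ) over the n_c atoms of each sub-dictionary and K atoms in total, tr(S_W) = tr(D(I − E)Dᵀ) and tr(S_B) = tr(D(E − (1/K)11ᵀ)Dᵀ). The sketch below checks these identities numerically; it illustrates that assumption and is not a transcription of the paper's equations. All function names are hypothetical.

```python
import numpy as np


def class_averaging_matrix(atom_labels):
    """E[i, j] = 1/n_c if atoms i and j both belong to class c, else 0."""
    atom_labels = np.asarray(atom_labels)
    same = atom_labels[:, None] == atom_labels[None, :]
    counts = np.array([np.sum(atom_labels == c) for c in atom_labels])
    return same / counts[:, None]


def atom_scatter_traces(D, atom_labels):
    """Return (tr(S_W), tr(S_B)) of the dictionary atoms via direct sums."""
    atom_labels = np.asarray(atom_labels)
    m = D.mean(axis=1, keepdims=True)
    tr_sw, tr_sb = 0.0, 0.0
    for c in np.unique(atom_labels):
        Dc = D[:, atom_labels == c]
        mc = Dc.mean(axis=1, keepdims=True)
        tr_sw += np.sum((Dc - mc) ** 2)
        tr_sb += Dc.shape[1] * np.sum((mc - m) ** 2)
    return tr_sw, tr_sb


def atom_scatter_traces_matrix_form(D, atom_labels):
    """The same quantities written as trace forms tr(D M D^T)."""
    K = D.shape[1]
    E = class_averaging_matrix(atom_labels)
    M_w = np.eye(K) - E                     # centers each atom on its class mean
    M_b = E - np.ones((K, K)) / K           # class means relative to the global mean
    return np.trace(D @ M_w @ D.T), np.trace(D @ M_b @ D.T)
```

Because both scatters are quadratic in D with fixed matrices, a Fisher term on the dictionary can be written as a single trace tr(D(M_w − M_b)Dᵀ), up to whatever weighting the paper applies.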

Because the class label of

where
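As a hedged sketch of graph Laplacian regularization on sparse representations: for a symmetric weight matrix W and codes A (one column per sample), tr(A L Aᵀ) with L = diag(W1) − W equals ½ Σ_ij W_ij ‖a_i − a_j‖², so the codes of strongly connected samples are pulled together. The construction of W below is an assumption: source pairs sharing a label get weight 1, other source pairs get 0, there are no source-target edges, and a user-supplied target block stands in for the target graph that TTDL learns during optimization. The paper's own weight definitions are in equations not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def semi_supervised_weights(source_labels, W_target):
    """Block-diagonal weight graph: label-based on the source, given block on the target.

    source_labels: (n_s,) known labels.
    W_target: (n_t, n_t) symmetric nonnegative matrix standing in for the learned target graph.
    """
    y = np.asarray(source_labels)
    W_source = (y[:, None] == y[None, :]).astype(float)
    np.fill_diagonal(W_source, 0.0)          # no self-loops
    n_s, n_t = len(y), W_target.shape[0]
    W = np.zeros((n_s + n_t, n_s + n_t))
    W[:n_s, :n_s] = W_source
    W[n_s:, n_s:] = W_target
    return W


def laplacian_term(A, W):
    """tr(A L A^T) with the unnormalized graph Laplacian L = diag(W 1) - W."""
    L = np.diag(W.sum(axis=1)) - W
    return np.trace(A @ L @ A.T)
```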

Substituting Eqs. (8), (11), and (19) into Eq. (6), the objective function of TTDL is rewritten as,

For the objective function of the TTDL algorithm, four variables

(1)

According to [14], there exist matrices

We have the closed-form solution of

where

where

(2) D can be optimized by fixing

Using the Lagrange dual approach, D is obtained by,

(3)

We re-write Eq. (27) in terms of the row vector

The closed-form solution of

(4)

We define

The closed-form solution of

where

It is noted that according to [14],

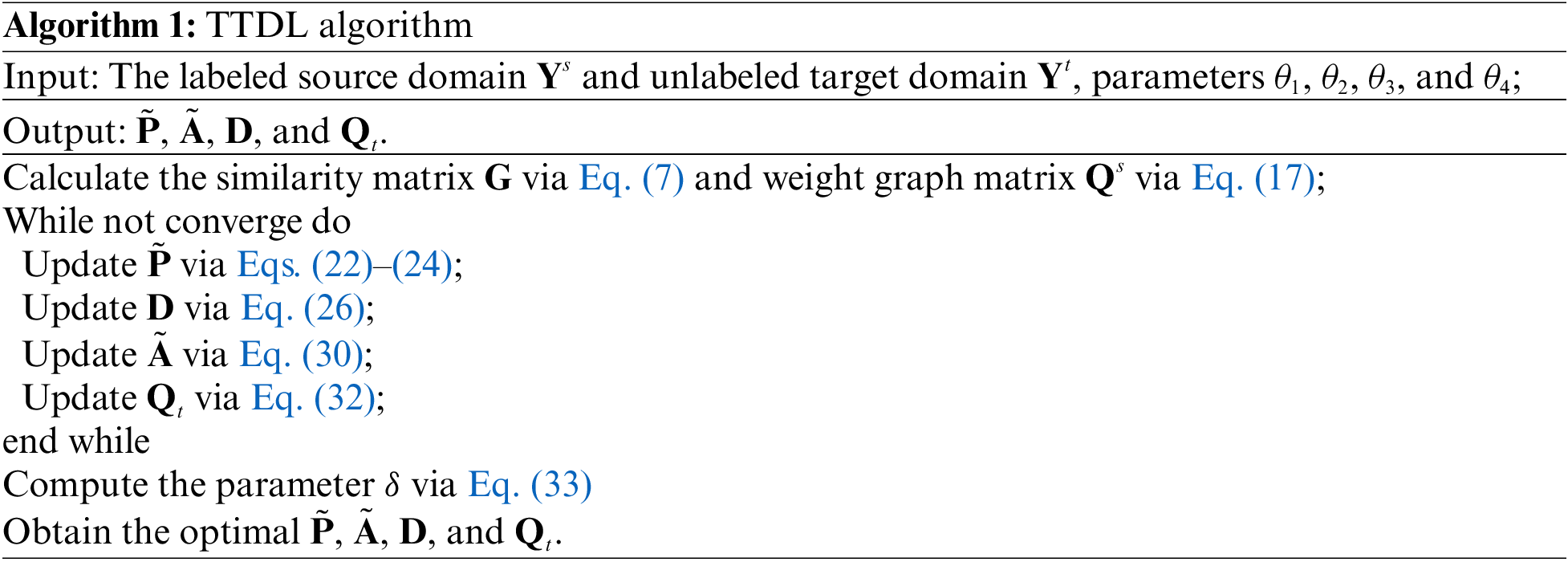

The solving procedure of Eq. (20) is summarized in Algorithm 1.

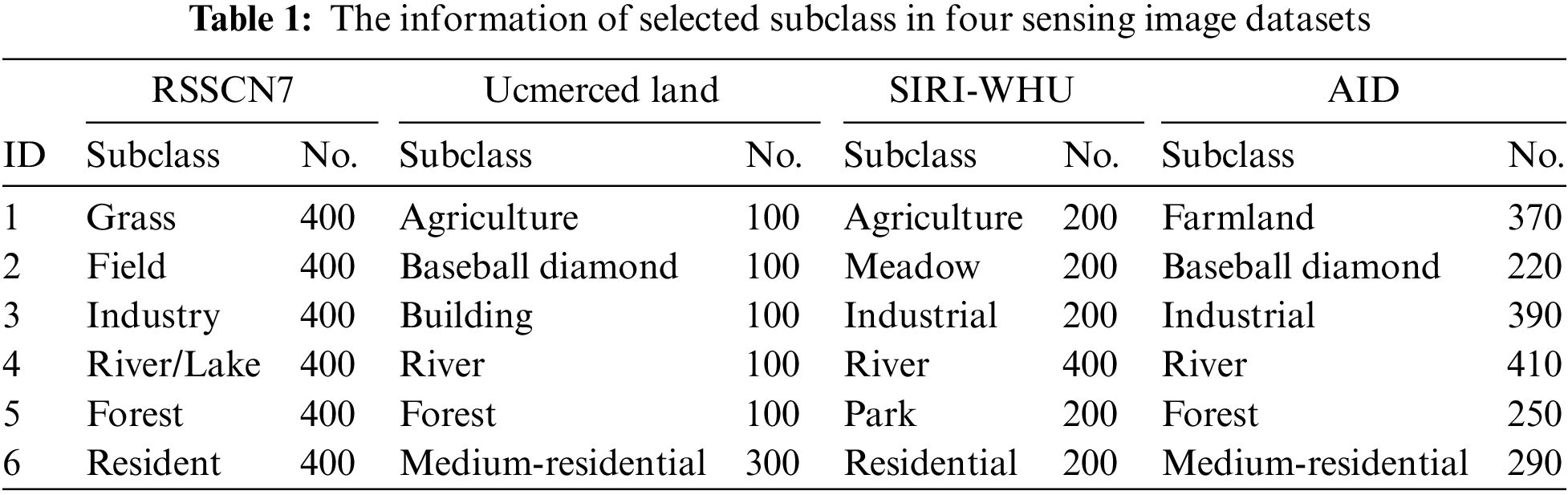
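Step (2) of the optimization updates D with the Lagrange dual approach. The sketch below applies that idea to the generic subproblem min_D ‖Y − DA‖_F² subject to ‖d_i‖² ≤ c for every atom, where Y stands for the (projected) data and A for the current sparse codes. For fixed multipliers Λ = diag(λ), the minimizer is D = YAᵀ(AAᵀ + Λ)⁻¹, and λ ≥ 0 is found by maximizing the concave dual. Here the dual is maximized with projected gradient ascent and backtracking, a swapped-in solver chosen for brevity, and any additional TTDL terms in the D-subproblem (such as the Fisher term on the dictionary) are omitted. All names are hypothetical.

```python
import numpy as np


def _dual(lam, YAt, AAt, yy, c):
    """Dual value, gradient, and primal minimizer D(lam) for fixed multipliers."""
    M = AAt + np.diag(lam)                   # A A^T + Lambda (symmetric)
    D = np.linalg.solve(M, YAt.T).T          # D = Y A^T (A A^T + Lambda)^{-1}
    value = yy - np.sum(D * YAt) - c * lam.sum()
    grad = np.sum(D * D, axis=0) - c         # d(value)/d(lam_i) = ||d_i||^2 - c
    return value, grad, D


def dictionary_update_dual(Y, A, c=1.0, max_iter=1000, tol=1e-10):
    """Solve min_D ||Y - D A||_F^2 s.t. ||d_i||^2 <= c via the Lagrange dual.

    Y: (m, n) data; A: (K, n) codes, with A A^T assumed positive definite.
    """
    YAt, AAt, yy = Y @ A.T, A @ A.T, np.sum(Y * Y)
    lam = np.zeros(A.shape[0])
    value, grad, D = _dual(lam, YAt, AAt, yy, c)
    step = 1.0
    for _ in range(max_iter):
        for _ in range(60):                  # backtracking on the step size
            cand = np.maximum(lam + step * grad, 0.0)   # project onto lam >= 0
            c_value, c_grad, c_D = _dual(cand, YAt, AAt, yy, c)
            diff = cand - lam
            if c_value >= value + grad @ diff - diff @ diff / (2 * step):
                break
            step *= 0.5
        if np.linalg.norm(diff) < tol:       # multipliers have stopped moving
            lam, D = cand, c_D
            break
        lam, value, grad, D = cand, c_value, c_grad, c_D
        step *= 2.0                          # let the step grow again
    return D
```

When the unconstrained least-squares dictionary already satisfies the norm constraints, all multipliers stay at zero and the update reduces to D = YAᵀ(AAᵀ)⁻¹; otherwise the active multipliers shrink the offending atoms back onto the constraint.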

The TTDL algorithm is evaluated on real-world remote sensing datasets: RSSCN7 [1], Ucmerced land [2], Aerial image dataset (AID) [15], and SIRI-WHU [16]. The RSSCN7 dataset consists of 2800 images from seven scene classes, with 400 images per class and an image size of 400 × 400 pixels. The Ucmerced land dataset consists of 2100 aerial scene images from 21 classes, with an image size of 256 × 256 pixels. The AID dataset is a large-scale aerial scene dataset consisting of 10,000 remote sensing scene images from 30 classes, with an image size of 600 × 600 pixels. The SIRI-WHU dataset is also an aerial scene dataset, composed of 12 classes with 200 images each and an image size of 200 × 200 pixels. To represent the remote sensing images efficiently, we adopt two types of deep features, ResNet50 and VGG-VD-16 [7], which are extracted by the two corresponding convolutional neural networks (CNNs). Both deep features are 2048-dimensional.

In the experiment, we design three cross-domain remote sensing image scene classification tasks: Ucmerced→RSSCN7 (named U→R), AID→RSSCN7 (named A→R), and SIRI-WHU→RSSCN7 (named S→R), referring to

Figure 1: Sample images from the four datasets: (a) RSSCN7, (b) Ucmerced land, (c) SIRI-WHU, (d) AID

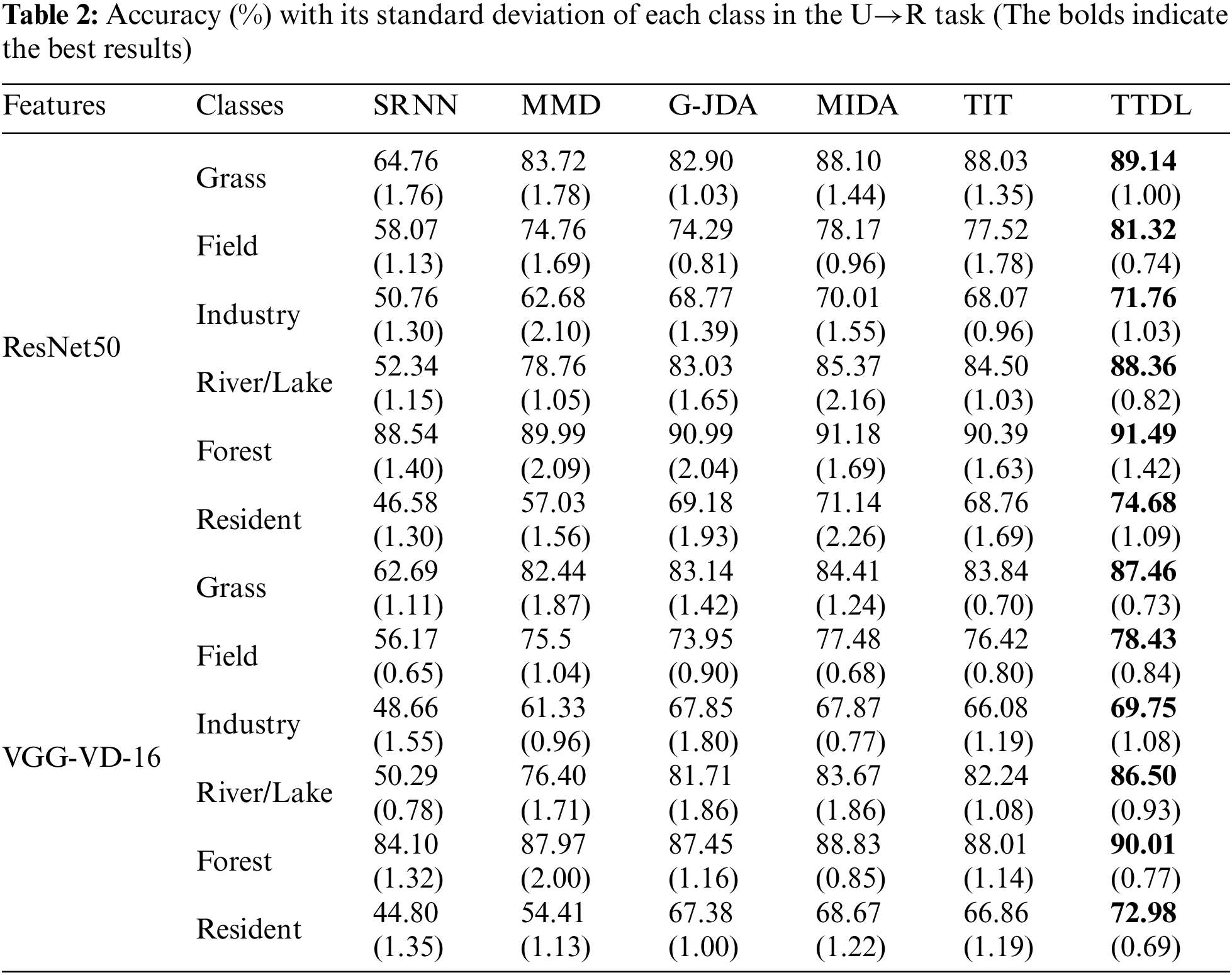

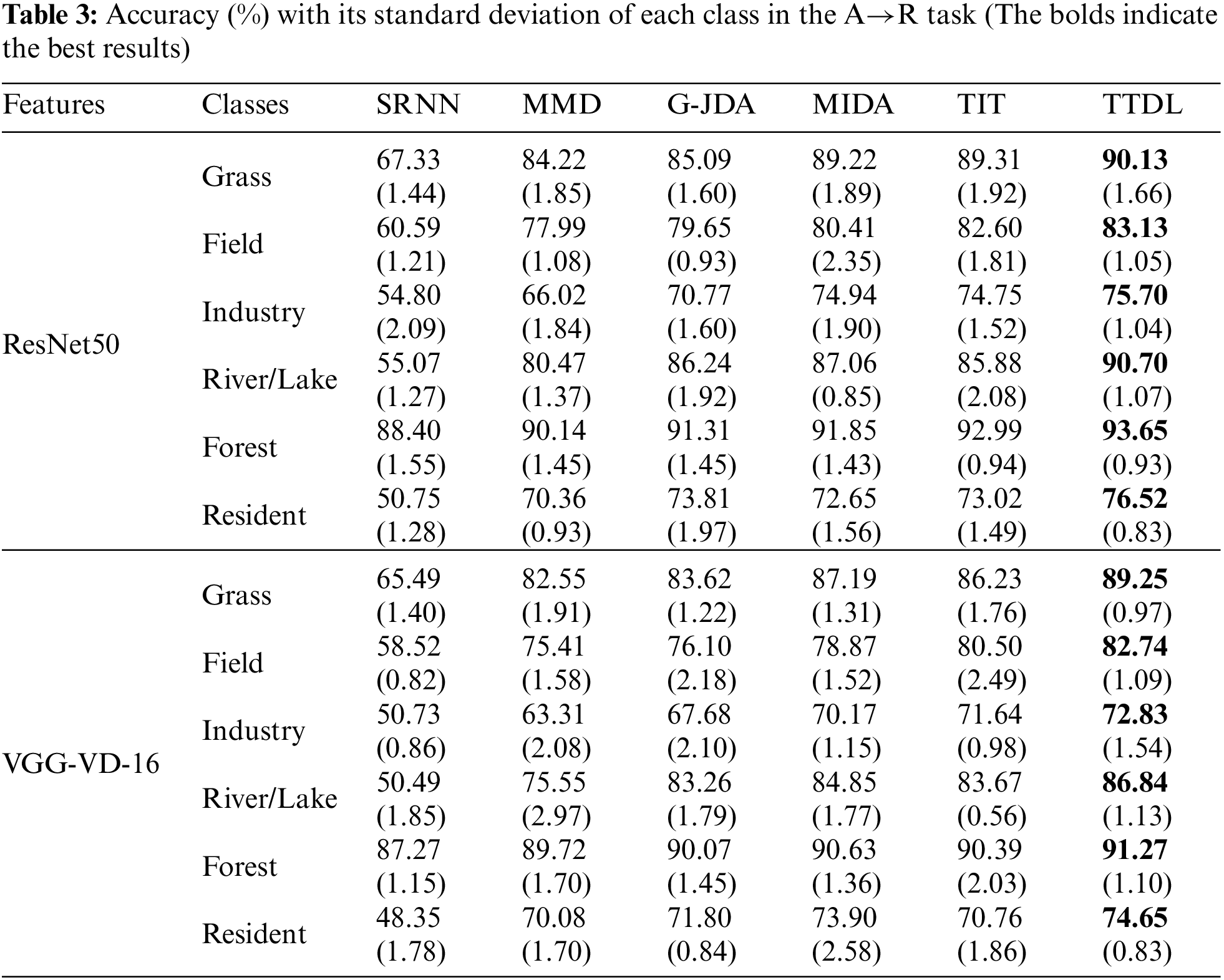

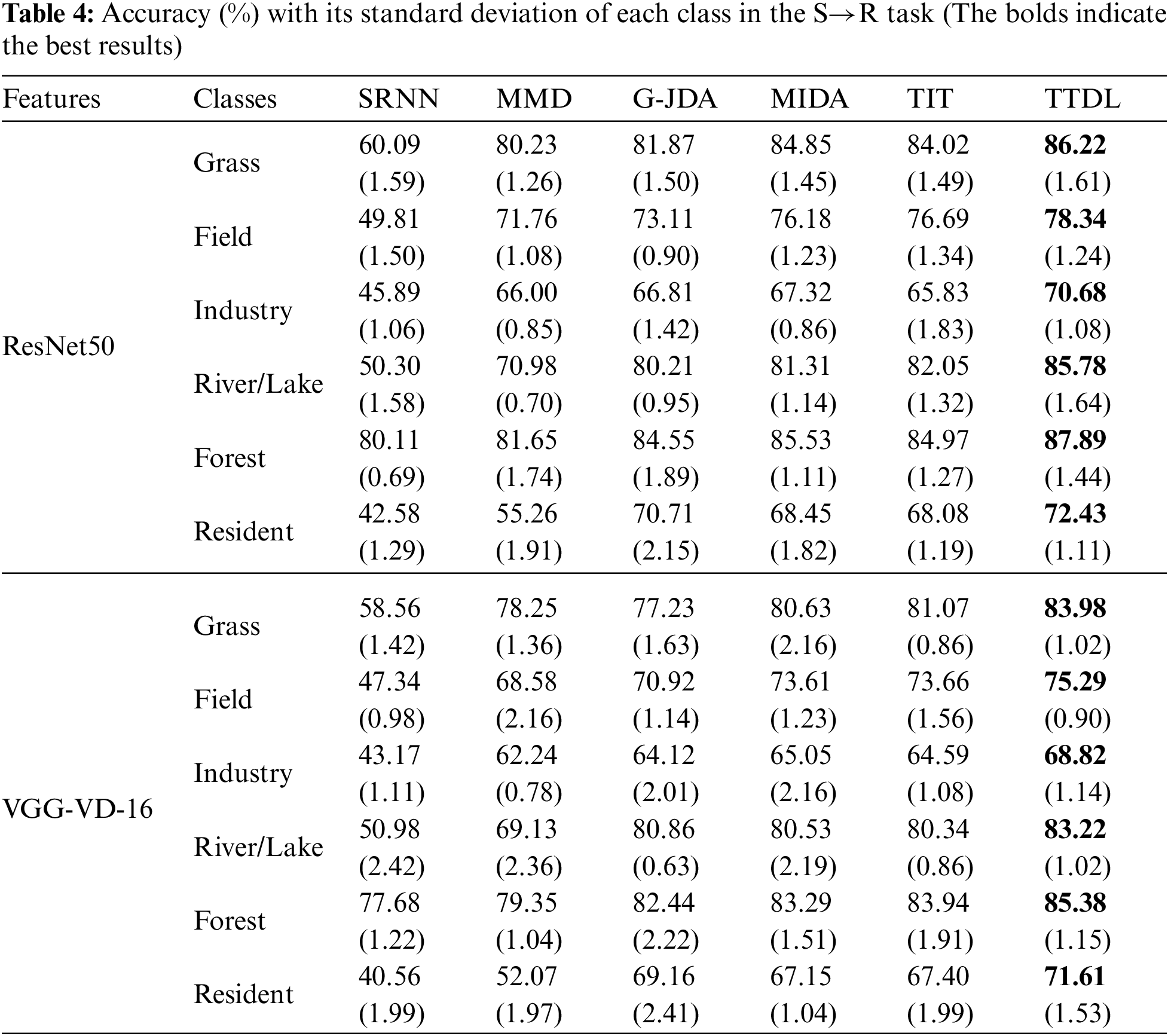

We compare TTDL with the baseline algorithms on the three cross-domain remote sensing image classification tasks. The per-class accuracy results are shown in Tables 2–4. The experimental results show that TTDL achieves the best classification accuracy. The baseline algorithm SRNN does not obtain satisfactory performance in any of the cross-domain tasks: because SRNN is trained only on the source domain, it does not transfer well to the RSSCN7 dataset, whose data distribution differs from that of the source domain. G-JDA aligns the cross-domain marginal and conditional feature probability distributions in a new feature space by applying their respective projection matrices. TIT uses landmarks to select representative samples for cross-domain feature matching while maintaining the manifold structure with graphs. Both MMD and MIDA project the different domains into a common subspace to achieve cross-domain feature alignment. None of these algorithms considers a triplet-induced ordinal locality preserving term, a Fisher discriminant term, or a graph Laplacian regularization term in transfer learning. TTDL not only maintains the local structure in the subspace but also makes the sparse representations of samples from the same class as similar as possible, which enhances their discriminative performance. Additionally, TTDL minimizes the intra-class scatter and maximizes the inter-class scatter of the dictionary atoms, which promotes the discriminative performance of the dictionary.

The classification results obtained with ResNet50 and VGG-VD-16 features are comparable. The information contained in remote sensing scene images is often closely related to their class, and traditional features such as color, texture, spatial, and spectral information are insufficient to capture it. In particular, when the features of some classes are not distinctive enough, classifier accuracy drops. The deep features are therefore effective in our experiments.

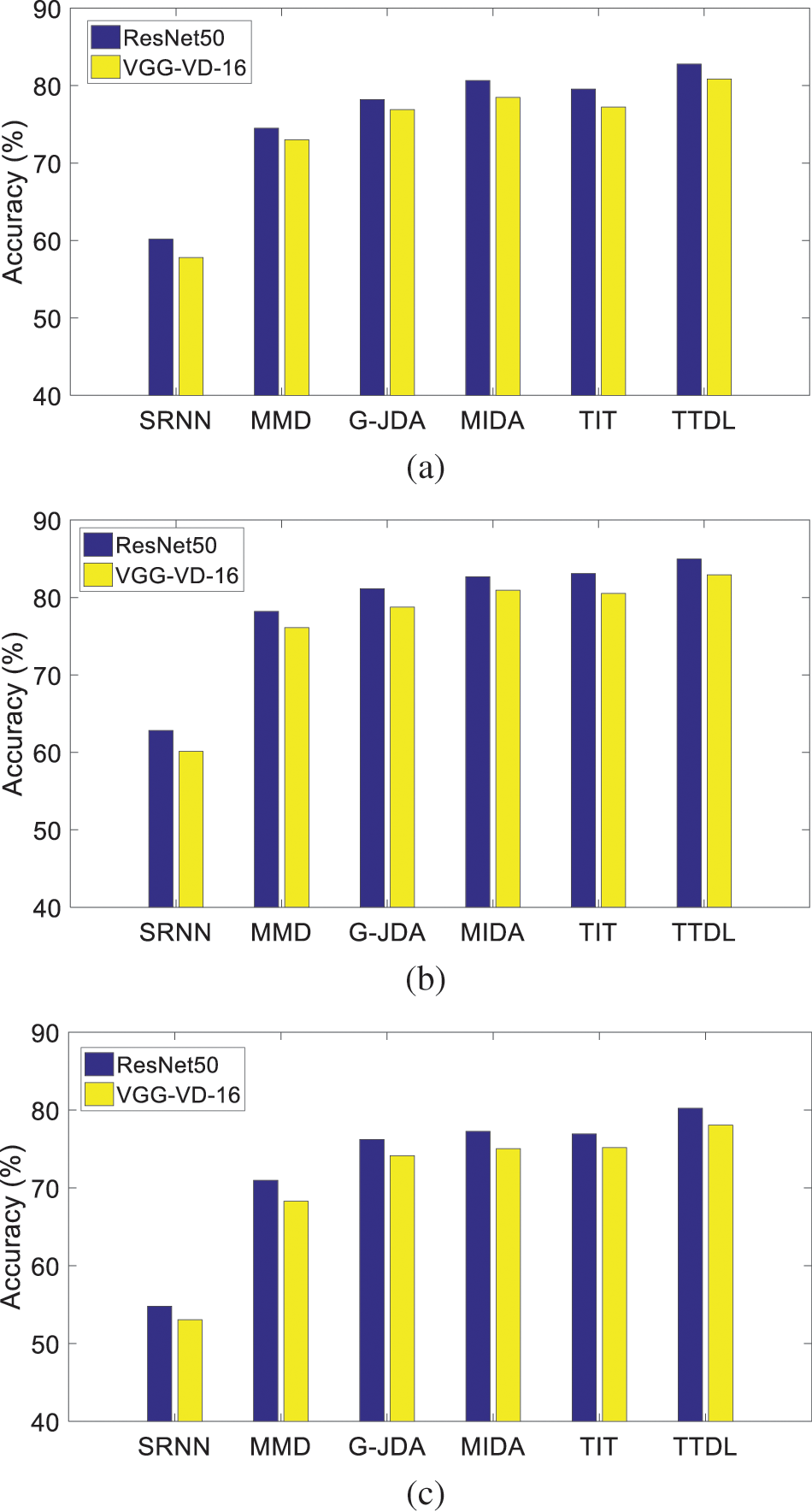

Fig. 2 shows the classification performance of each algorithm in the three transfer learning tasks. TTDL achieves the highest average accuracy among all compared algorithms. For example, in the U→R task with ResNet50 features, the average accuracy of TTDL is 22.62% higher than that of SRNN and 3.25% higher than that of the second-best algorithm. In the A→R task with VGG-VD-16 features, the average accuracy of TTDL is 22.17% higher than that of SRNN and 1.88% higher than that of MIDA. In the S→R task with ResNet50 features, the average accuracy of TTDL is 25.53% higher than that of SRNN and 3.28% higher than that of the second-best algorithm. These results show that TTDL is effective in the U→R, A→R, and S→R tasks, and that a dictionary learning framework combining subspace learning, Fisher discrimination, and local information preservation is a good choice for cross-dataset remote sensing image classification.

Figure 2: Accuracy comparison of all algorithms in the, (a) U→R task, (b) A→R task, (c) S→R task

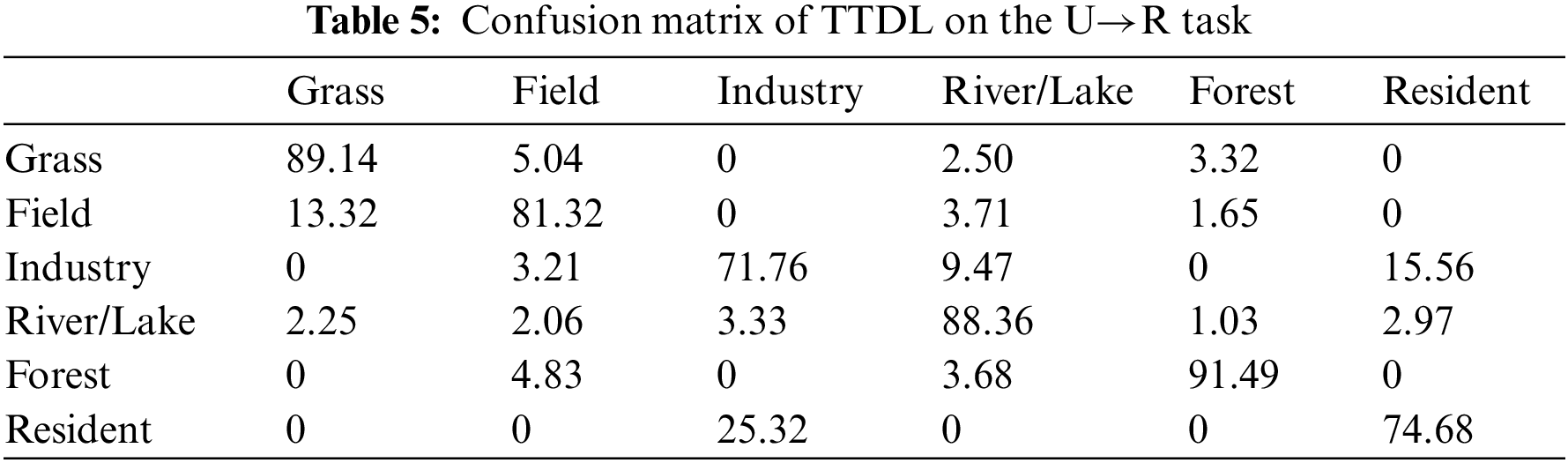

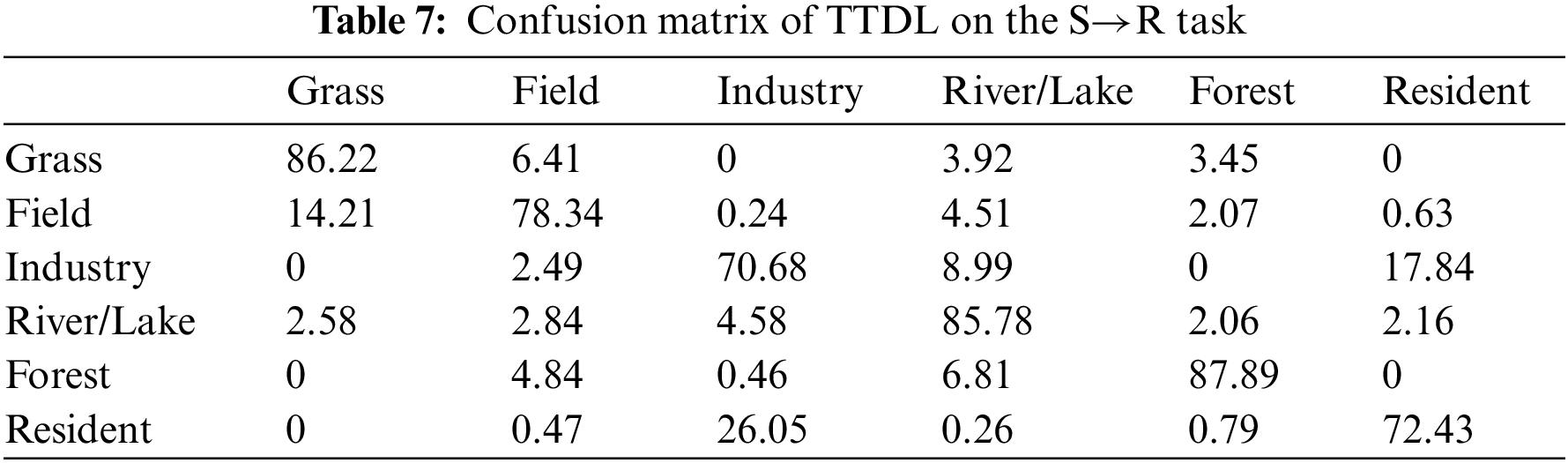

Tables 5–7 show the confusion matrices obtained with ResNet50 features. Each value in the confusion matrices is the accuracy (%) of TTDL for the corresponding class. The testing data of each scene class in the RSSCN7 dataset consist of 380 images. In the U→R task, TTDL classifies the Grass, River/Lake, and Forest classes with accuracies above 80%, whereas the accuracy for Industry is low, mainly because Industry and Resident are highly similar in the Ucmerced land and RSSCN7 datasets. TTDL behaves similarly in the A→R and S→R tasks: the classification performance for Grass, River/Lake, and Forest is higher than that for the other classes.
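Since every RSSCN7 test class contains 380 images, per-class percentages like those in these confusion matrices follow from dividing each row of a count matrix by its row total. The small sketch below illustrates this, assuming rows index the true class; the counts are hypothetical and are not the paper's results.

```python
import numpy as np


def row_normalized_percent(counts):
    """Convert a confusion matrix of counts (rows = true class) to row percentages."""
    counts = np.asarray(counts, dtype=float)
    return 100.0 * counts / counts.sum(axis=1, keepdims=True)


def per_class_accuracy(counts):
    """Diagonal of the row-normalized matrix: accuracy (%) for each true class."""
    return np.diag(row_normalized_percent(counts))


# Hypothetical 3-class example with 380 test images per class.
example = np.array([
    [342, 20, 18],
    [40, 300, 40],
    [19, 0, 361],
])
print(per_class_accuracy(example))  # per-class accuracies of 90%, about 78.95%, and 95%
```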

To further analyze the three components of TTDL, we show the ablation results using ResNet50 features in Table 8. TTDL with

The ecological environment has become one of the root factors affecting human health. Environmental and health management is a comprehensive and complex task that spans departments, fields, and disciplines. The rapid development of aerospace, satellite remote sensing, and data communication technology has made remote sensing increasingly widely used in ecological environmental monitoring. Reducing the workload of manual annotation while achieving high-precision classification of remote sensing images is a difficult problem in ecological environmental monitoring. Thanks to previous manual labeling work, a large number of labeled datasets are available as source domains. However, because different datasets differ substantially, directly training a classifier on these labeled datasets rarely achieves the ideal classification. To solve this problem, this paper proposes a transductive transfer dictionary learning algorithm, TTDL. To obtain representations of samples from different domains, TTDL uses a subspace projection strategy that eliminates distribution differences. TTDL introduces a triplet-induced ordinal locality preserving term, a Fisher discriminant term, and a graph Laplacian regularization term, so that the dictionary has intra-class compactness and inter-class differences. TTDL achieves satisfactory results in cross-dataset remote sensing image classification. In the next stage, we plan to integrate statistical information from the data into the subspace projection to eliminate feature distribution differences. Class imbalance often exists in remote sensing images and leads to a high misclassification rate for classes with fewer samples; solving this problem is also part of our future research. In addition, the transfer learning algorithm proposed in this paper is applied only to a single feature view. How to use multi-view feature data to describe the data structure accurately has important research significance and application value.

Funding Statement: This research was funded in part by the Natural Science Foundation of Jiangsu Province under Grant BK 20211333, and by the Science and Technology Project of Changzhou City (CE20215032).

Availability of Data and Materials: Four public datasets, RSSCN7, Ucmerced land, SIRI-WHU, and AID, are used in this study. The RSSCN7 dataset can be downloaded from https://github.com/palewithout/RSSCN7. The Ucmerced land dataset can be downloaded from http://weegee.vision.ucmerced.edu/datasets/landuse.html. The SIRI-WHU dataset can be downloaded from https://figshare.com/articles/dataset/SIRI_WHU_Dataset/8796980. The AID dataset can be downloaded from https://paperswithcode.com/dataset/aids.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Zou, Q., Ni, L., Zhang, T., Wang, Q. (2015). Deep learning based feature selection for remote sensing scene classification. IEEE Geoscience and Remote Sensing Letters, 12(11), 2321–2325.

2. Yang, Y., Newsam, S. (2010). Bag-of-visual-words and spatial extensions for land-use classification. Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, pp. 270–279. San Jose.

3. Soltani-Farani, A., Rabiee, H. R., Hosseini, S. A. (2014). Spatial-aware dictionary learning for hyperspectral image classification. IEEE Transactions on Geoscience and Remote Sensing, 53(1), 527–541.

4. Vu, T. H., Monga, V. (2017). Fast low-rank shared dictionary learning for image classification. IEEE Transactions on Image Processing, 26(11), 5160–5175.

5. Geng, H., Wang, L., Liu, P. (2014). Dictionary learning for large-scale remote sensing image based on particle swarm optimization. 2014 12th International Conference on Signal Processing (ICSP), pp. 784–789. Hangzhou, China.

6. Zheng, Z., Zhong, Y., Su, Y., Ma, A. (2022). Domain adaptation via a task-specific classifier framework for remote sensing cross-scene classification. IEEE Transactions on Geoscience and Remote Sensing, 60(2), 1–13.

7. Zhang, J., Liu, J., Pan, B., Shi, Z. (2020). Domain adaptation based on correlation subspace dynamic distribution alignment for remote sensing image scene classification. IEEE Transactions on Geoscience and Remote Sensing, 58(11), 7920–7930.

8. Zhu, L., Ma, L. (2016). Class centroid alignment based domain adaptation for classification of remote sensing images. Pattern Recognition Letters, 83(11), 124–132.

9. Zhou, Z., Wu, Y., Yang, X., Zhou, Y. (2022). Neural style transfer with adaptive auto-correlation alignment loss. IEEE Signal Processing Letters, 29(4), 1027–1031. https://doi.org/10.1109/LSP.2022.3165758

10. Tuia, D., Marcos, D., Camps-Valls, G. (2016). Multi-temporal and multi-source remote sensing image classification by nonlinear relative normalization. ISPRS Journal of Photogrammetry and Remote Sensing, 120(10), 1–12.

11. Wang, D., Wu, J., Yang, J., Jing, B., Zhang, W. et al. (2021). Cross-lingual knowledge transferring by structural correspondence and space transfer. IEEE Transactions on Cybernetics, 52(7), 6555–6566.

12. Jiang, Z., Lin, Z., Davis, L. S. (2013). Label consistent K-SVD: Learning a discriminative dictionary for recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(11), 2651–2664.

13. Yang, M., Zhang, L., Feng, X., Zhang, D. (2014). Sparse representation based fisher discrimination dictionary learning for image classification. International Journal of Computer Vision, 109(5), 209–232.

14. Qi, L., Huo, J., Fan, X., Shi, Y., Gao, Y. (2018). Unsupervised joint subspace and dictionary learning for enhanced cross-domain person re-identification. IEEE Journal of Selected Topics in Signal Processing, 12(6), 1263–1275.

15. Xia, G. S., Hu, J., Hu, F., Shi, B., Bai, X. et al. (2017). AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Transactions on Geoscience and Remote Sensing, 55(7), 3965–3981.

16. Zhao, B., Zhong, Y., Xia, G. S., Zhang, L. (2015). Dirichlet-derived multiple topic scene classification model for high spatial resolution remote sensing imagery. IEEE Transactions on Geoscience and Remote Sensing, 54(4), 2108–2123.

17. Zou, J., Li, W., Du, Q. (2015). Sparse representation-based nearest neighbor classifiers for hyperspectral imagery. IEEE Geoscience and Remote Sensing Letters, 12(12), 2418–2422.

18. Sejdinovic, D., Sriperumbudur, B., Gretton, A., Fukumizu, K. (2013). Equivalence of distance-based and RKHS-based statistics in hypothesis testing. The Annals of Statistics, 41(5), 2263–2291.

19. Hsieh, Y. T., Tao, S. Y., Tsai, Y. H. H., Yeh, Y. R., Wang, Y. C. F. (2016). Recognizing heterogeneous cross-domain data via generalized joint distribution adaptation. 2016 IEEE International Conference on Multimedia and Expo (ICME), pp. 1–6. Seattle.

20. Yan, K., Kou, L., Zhang, D. (2017). Learning domain-invariant subspace using domain features and independence maximization. IEEE Transactions on Cybernetics, 48(1), 288–299.

21. Li, J., Lu, K., Huang, Z., Zhu, L., Shen, H. T. (2018). Transfer independently together: A generalized framework for domain adaptation. IEEE Transactions on Cybernetics, 49(6), 2144–2155.


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
