Open Access

ARTICLE

A Client Selection Method Based on Loss Function Optimization for Federated Learning

1 School of Computer Science and Technology, Hangzhou Dianzi University, Hangzhou, 310018, China

2 Key Laboratory of Complex System Modeling and Simulation, Ministry of Education, Hangzhou, 310018, China

3 Zhejiang Engineering Research Center of Data Security Governance, Hangzhou, 310018, China

4 Intelligent Robotics Research Center, Zhejiang Lab, Hangzhou, 311100, China

5 HDU-ITMO Joint Institute, Hangzhou Dianzi University, Hangzhou, 310018, China

* Corresponding Authors: Tian Xiang. Email: ; Yongjian Ren. Email:

(This article belongs to the Special Issue: Federated Learning Algorithms, Approaches, and Systems for Internet of Things)

Computer Modeling in Engineering & Sciences 2023, 137(1), 1047-1064. https://doi.org/10.32604/cmes.2023.027226

Received 20 October 2022; Accepted 05 January 2023; Issue published 23 April 2023

Abstract

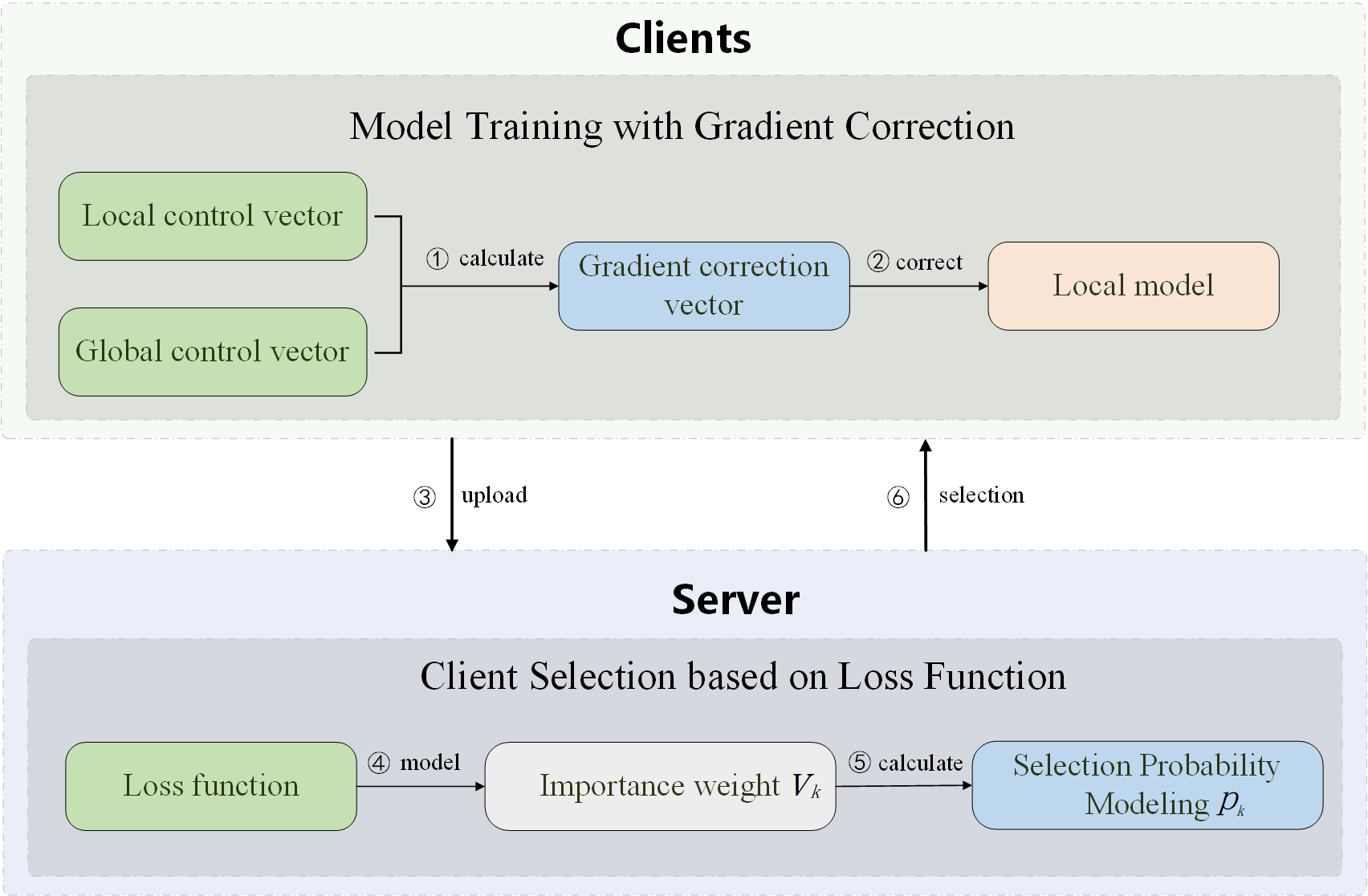

Federated learning is a distributed machine learning method that addresses the increasingly serious problems of data islands and user data privacy, because training data stays on each client and is not shared with other users. It trains a global model by aggregating locally computed client models rather than raw data. However, the divergence of local models caused by data heterogeneity across clients may slow the convergence of the global model. To address this problem, we focus on client selection in federated learning, since the choice of local models affects the convergence of the global model. We propose FedChoice, a client selection method based on loss function optimization, which selects appropriate local models to improve the convergence of the global model. First, it assigns each client a selection probability based on the value of its loss function: clients with higher loss receive a higher selection probability and are therefore more likely to participate in training. Then, it introduces a local control vector and a global control vector to predict the local and global gradient directions, respectively, and computes a gradient correction vector that corrects the gradient direction, reducing the cumulative deviation of the local gradient caused by non-IID data. We conduct experiments on the CIFAR-10, CINIC-10, MNIST, EMNIST, and FEMNIST datasets to verify the validity of FedChoice; the results show that its convergence is significantly improved compared with FedAvg, FedProx, and FedNova.
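The two steps described in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact formulas, so everything below is an assumption made for illustration: selection probability is taken to be directly proportional to each client's loss, and the gradient correction is taken to be the difference between the global and local control vectors, added to the local gradient (a form borrowed from control-variate methods, not confirmed as FedChoice's own). The function names `selection_probabilities`, `select_clients`, and `corrected_gradient` are hypothetical.

```python
import random


def selection_probabilities(losses):
    """Map non-negative client losses to selection probabilities.

    Assumption: probability is proportional to loss. The abstract only
    says that higher loss means higher selection probability.
    """
    total = sum(losses)
    if total <= 0:
        # Degenerate case: fall back to uniform selection.
        return [1.0 / len(losses)] * len(losses)
    return [loss / total for loss in losses]


def select_clients(losses, k, rng):
    """Draw up to k distinct client indices, favouring high-loss clients."""
    remaining = list(range(len(losses)))
    chosen = []
    for _ in range(min(k, len(remaining))):
        # Renormalise over the clients that have not been picked yet.
        probs = selection_probabilities([losses[i] for i in remaining])
        pick = rng.choices(remaining, weights=probs, k=1)[0]
        chosen.append(pick)
        remaining.remove(pick)
    return chosen


def corrected_gradient(grad, local_control, global_control):
    """Shift a local gradient by (global_control - local_control).

    Assumption: this is one common form of a gradient correction vector;
    the source does not state the exact expression.
    """
    return [g + (c - ci)
            for g, ci, c in zip(grad, local_control, global_control)]


if __name__ == "__main__":
    client_losses = [0.1, 2.0, 0.5, 1.2]  # illustrative values only
    rng = random.Random(0)
    print(select_clients(client_losses, k=2, rng=rng))
    print(corrected_gradient([1.0, 2.0], [0.5, 0.5], [1.0, 0.0]))
```

In this sketch, sampling is done without replacement so that a client is selected at most once per round; whether FedChoice samples with or without replacement is not stated in the abstract.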

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
