Open Access


ARTICLE

An Improved Elite Slime Mould Algorithm for Engineering Design

1 School of Artificial Intelligence, Beijing Institute of Economics and Management, Beijing, 100102, China

2 College of Computer Science and Technology, Jilin University, Changchun, 130012, China

3 College of Computer Science and Artificial Intelligence, Wenzhou University, Wenzhou, 325035, China

4 Zhejiang Academy of Science and Technology Information, Hangzhou, 310008, China

* Corresponding Author: Deng Chen. Email:

(This article belongs to the Special Issue: Computational Intelligent Systems for Solving Complex Engineering Problems: Principles and Applications)

Computer Modeling in Engineering & Sciences 2023, 137(1), 415-454. https://doi.org/10.32604/cmes.2023.026098

Received 15 August 2022; Accepted 16 December 2022; Issue published 23 April 2023

Abstract

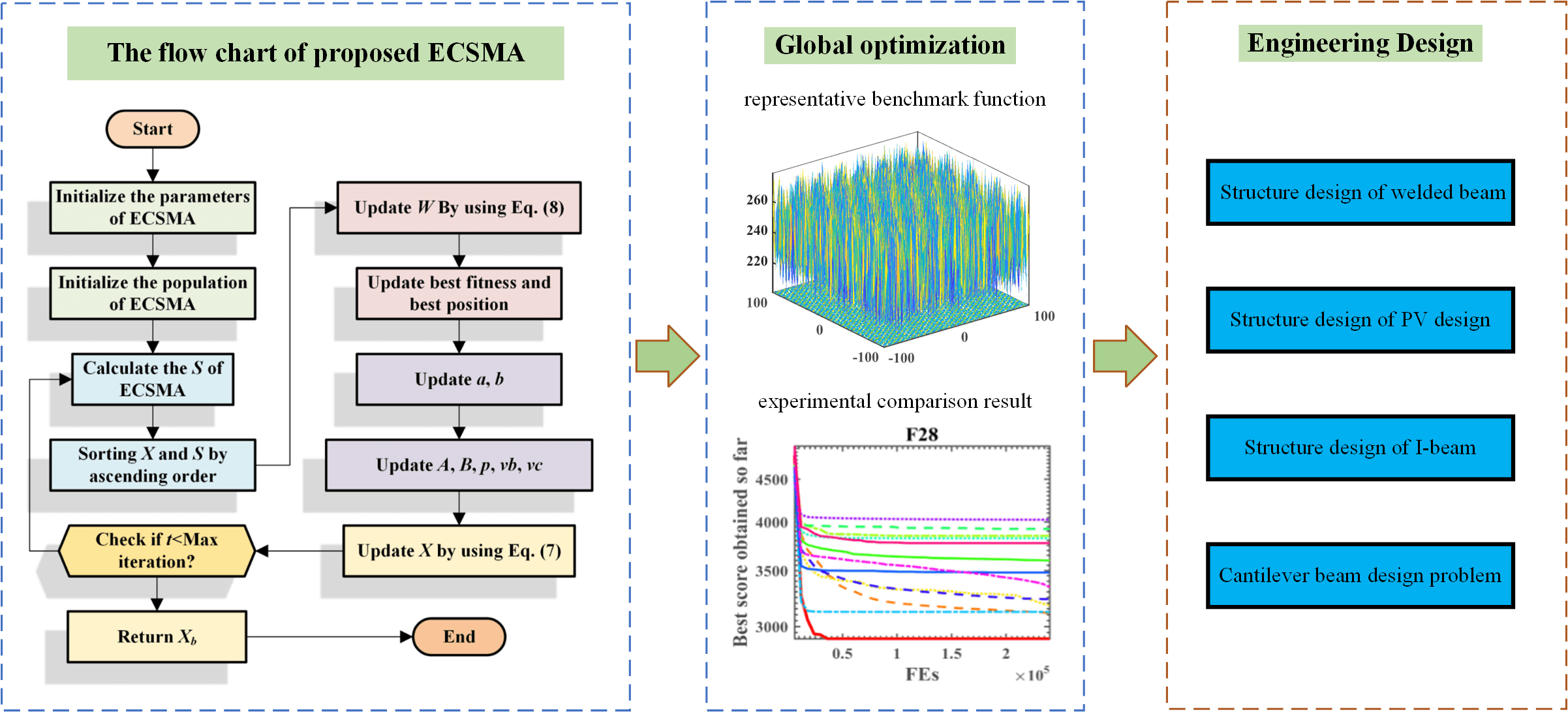

Swarm intelligence algorithms are a very active field in which many researchers have made outstanding achievements. As a representative example, the slime mould algorithm (SMA) is widely used because of its strong performance in its original form. This paper therefore focuses on improving SMA and mitigating its stagnation problems. To this end, the structure of SMA is adjusted to improve the efficiency of the original method. SMA is a stochastic optimizer that mainly simulates the behavior of slime mould in nature. To better balance the exploration and exploitation of SMA, this paper proposes an enhanced SMA called ECSMA, into which two mechanisms are embedded: an elite strategy and a chaotic stochastic strategy. The details of the original SMA and of the two introduced strategies are given. The advantages of the improved SMA are then validated through mechanism comparison, balance-diversity analysis, and comparisons with other counterparts. The experimental results demonstrate that both mechanisms significantly enhance SMA. In addition, ECSMA is applied to four structural design problems, namely the welded beam design problem, the pressure vessel (PV) design problem, the I-beam design problem, and the cantilever beam design problem, with excellent results.

Keywords

Swarm intelligence (SI) algorithms are currently a very active topic and are receiving increasing attention. They have been employed in many fields, such as medical prediction, tourism path planning, urban construction planning, and engineering design, and are widely adopted in real-world applications. Among them, the recently proposed slime mould algorithm (SMA) [1] shows superior performance and offers a new perspective on emerging problems. SI algorithms are mostly inspired by natural phenomena, such as Harris hawks optimization (HHO) [2,3], the multi-verse optimizer (MVO) [4], and particle swarm optimization (PSO) [5], while some algorithms simulate physical phenomena, such as the sine cosine algorithm (SCA) [6,7] and the gravitational search algorithm (GSA) [8].

In addition, novel algorithms continue to be proposed. They include not only new basic algorithms, such as the weighted mean of vectors (INFO) [9], hunger games search (HGS) [10], colony predation algorithm (CPA) [11], and Runge Kutta optimizer (RUN) [12], but also new variants of basic algorithms, such as evolutionary biogeography-based whale optimization (EWOA) [13], Harris hawks optimization with Gaussian mutation (GCHHO) [14], opposition-based ant colony optimization (ADNOLACO) [15], Harris hawks optimization with elite evolutionary strategy (EESHHO) [16], improved whale optimization algorithm (LCWOA) [17], ant colony optimization with Cauchy and greedy Lévy mutations (CLACO) [18], chaotic, random spare ant colony optimization (RCACO) [19], moth-flame optimizer with sine cosine mechanism (SMFO) [20], adaptive chaotic sine cosine algorithm (ASCA) [7], and sine cosine algorithm with linear population size reduction mechanism (LSCA) [21]. These algorithms have also been successfully applied to many other fields, such as gate resource allocation [22,23], feature selection [24,25], bankruptcy prediction [26,27], expensive optimization problems [28,29], image segmentation [30,31], robust optimization [32,33], solar cell parameter identification [34], train scheduling [35], multi-objective problems [36,37], resource allocation [38], scheduling problems [39–41], optimization of machine learning models [42], medical diagnosis [43,44], and complex optimization problems [45]. These SI algorithms, including SMA, have shown clear strengths, but they still share some common drawbacks: slow convergence, a large number of iterations, and a tendency to stagnate at premature solutions on functions with harsh or flat landscapes.

SMA, proposed in 2020, imitates the behavioral and morphological changes of slime mould during foraging and solves optimization problems by weighting the positive and negative feedback generated during foraging. Compared with its peers, SMA has the advantages of a sound underlying principle, few parameters, and a strong, dynamic exploration capability. However, the local search capability of SMA is still deficient on some functions, and, as a recently proposed metaheuristic, SMA has so far received relatively few improvements. Existing improved algorithms clearly show that adding effective mechanisms or combining specific procedures helps to upgrade performance. For example, Ebadinezhad et al. [46] developed an adaptive ant colony optimization (ACO) called DEACO that adopts a dynamic evaporation strategy; compared with conventional ACO, DEACO converged faster and searched more accurately. Chen et al. [47] presented a multi-strategy enhanced SCA: the proposed memory-driven algorithm, MSCA, combines a reverse learning strategy, a chaotic local search mechanism, Cauchy mutation, and two operators from differential evolution, and it outperformed its competitors in both solution quality and convergence speed. Guo et al. [48] presented a WOA with a wavelet mutation strategy and social learning, and applied it to three water resource prediction models.

Jiang et al. [49] designed a chaotic gravitational search algorithm based on balance tuning (BA-CGSA) with sinusoidal stochastic functions and an equilibrium mechanism, and the results revealed its efficiency on continuous optimization problems. Javidi et al. [50] introduced an enhanced crow search algorithm (ECSA) that combines a free-flight mechanism with an individual cap strategy, which replaces each violating decision variable with the corresponding decision variable of the global optimal solution; as a result, ECSA obtained better or highly competitive results. Tawhid et al. [51] presented a new hybrid binary bat enhanced PSO (HBBEPSO), and the results indicated its ability to find optimal feature combinations in the feature space. Luo et al. [52] proposed a boosted MSA named elite opposition-based MSA (EOMSA), which uses an elite opposition-based strategy to increase population diversity and exploration capability; EOMSA found more accurate solutions with fast convergence and high stability compared with other population-based algorithms. To handle economic environmental dispatch (EED), a complex power system problem, Sulaiman et al. [53] presented a hybrid algorithm, EGSJAABC3, which combines evolutionary gradient search (EGS) with the recently proposed artificial bee colony variant JA-ABC3; the benchmark and EED results revealed its effectiveness. These studies show that introducing new mechanisms into an algorithm, or hybridizing it with others, can greatly improve its capability.

SMA already has excellent convergence and accuracy, which makes it challenging to improve. This paper improves SMA by using an elite strategy and chaotic randomness to improve the SMA coefficients A and B. The elite strategy is used to ensure convergence, while chaotic randomness is used to enhance exploration. To demonstrate the effectiveness of ECSMA, it is compared against SMA and several advanced improved algorithms. In addition, ECSMA is applied to several engineering design problems.

The main contributions of this paper are listed as follows:

1) In this paper, a new SMA-based swarm intelligence optimization algorithm, called ECSMA, is proposed.

2) The ECSMA skillfully combines the elite strategy and the chaotic stochastic strategy with the original SMA to enhance its performance effectively.

3) ECSMA is compared with some state-of-the-art similar algorithms on 31 benchmark functions and its performance is well demonstrated.

4) ECSMA is applied to four engineering design problems and achieves excellent results.

This paper is structured as follows. Section 2 gives the principle and description of SMA. Section 3 describes the improved ECSMA in detail. Section 4 presents and explains the experimental results on the test functions. Section 5 applies ECSMA to fundamental engineering problems. Section 6 summarizes the main contributions of this paper and outlines future work.

There are many types of slime mould with different morphological structures and behaviors; the species studied by the original authors is mainly Physarum polycephalum. The slime mould covers as much of the search space as possible by forming a large-scale diffusion network. While spreading, the organic matter at the front of the slime mould spreads into a fan-shaped structure to enlarge the area it covers. Enzyme-containing organic substances flow through the vein structure of the slime mould and digest the edible substances it covers. Furthermore, the spreading network allows the slime mould to cover multiple food sources at the same time, thereby forming a network of nodes based on food concentration.

In 2020, Li et al. established a mathematical model of the foraging behavior of slime mould in nature and applied the resulting SMA to a series of optimization problems. The major steps of SMA are as follows:

1) Approach food:

By sensing the concentration of food in the air with receptors, slime mould spreads in the general direction of the food. The authors used the following formula to roughly simulate its expansion and contraction behavior.

where

The adaptive parameter

where

The oscillation parameter

The weight

where

2) Wrap food:

The following equation is used to update the position of the slime mould in each iteration:

where

3) Oscillation:

The tendency of slime mould towards high-quality food arises from propagation waves generated by biological oscillators used to alter cytoplasmic flow in mucilage veins.

Among them,

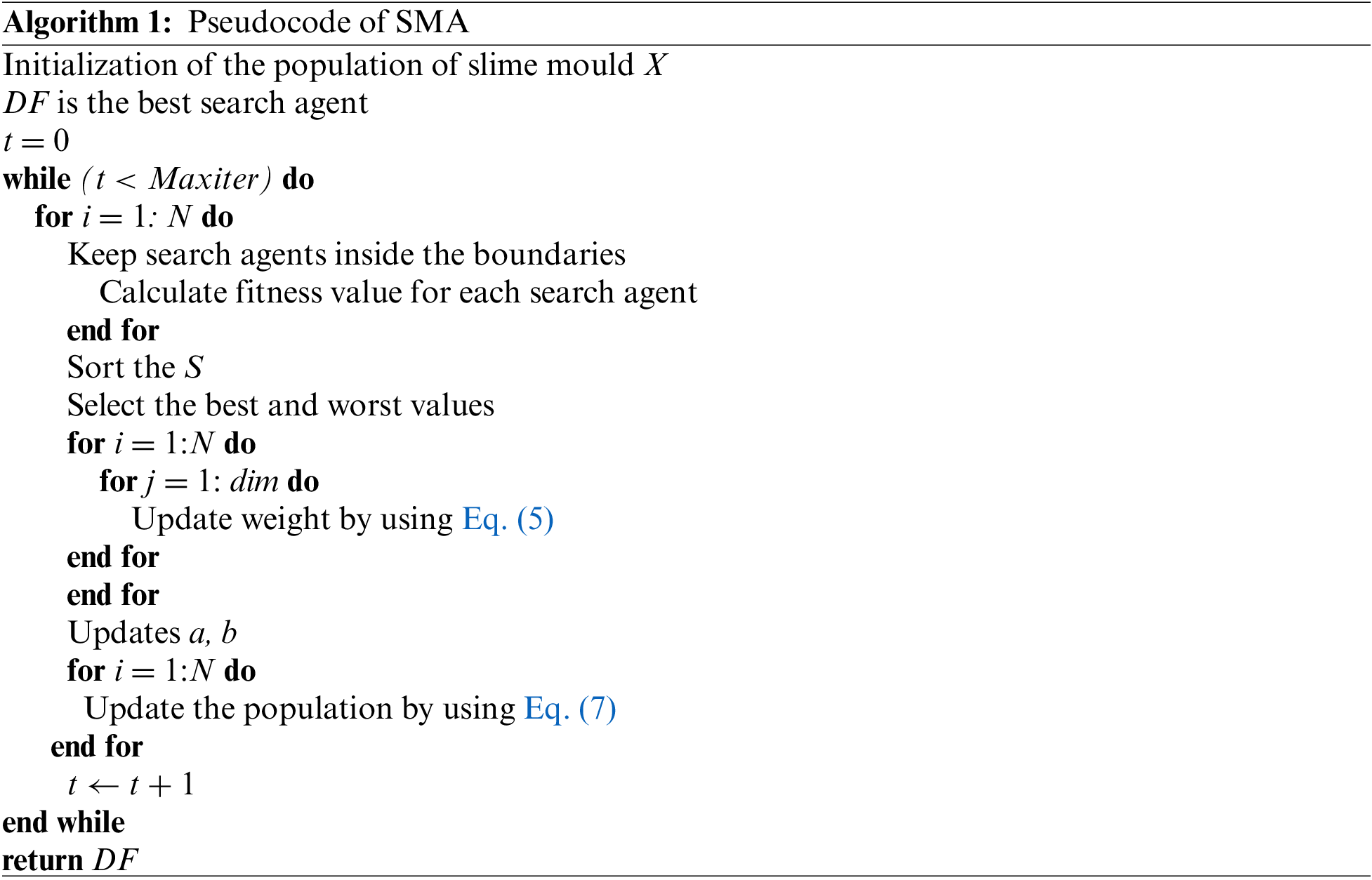

The pseudocode of the original SMA is shown in Algorithm 1.

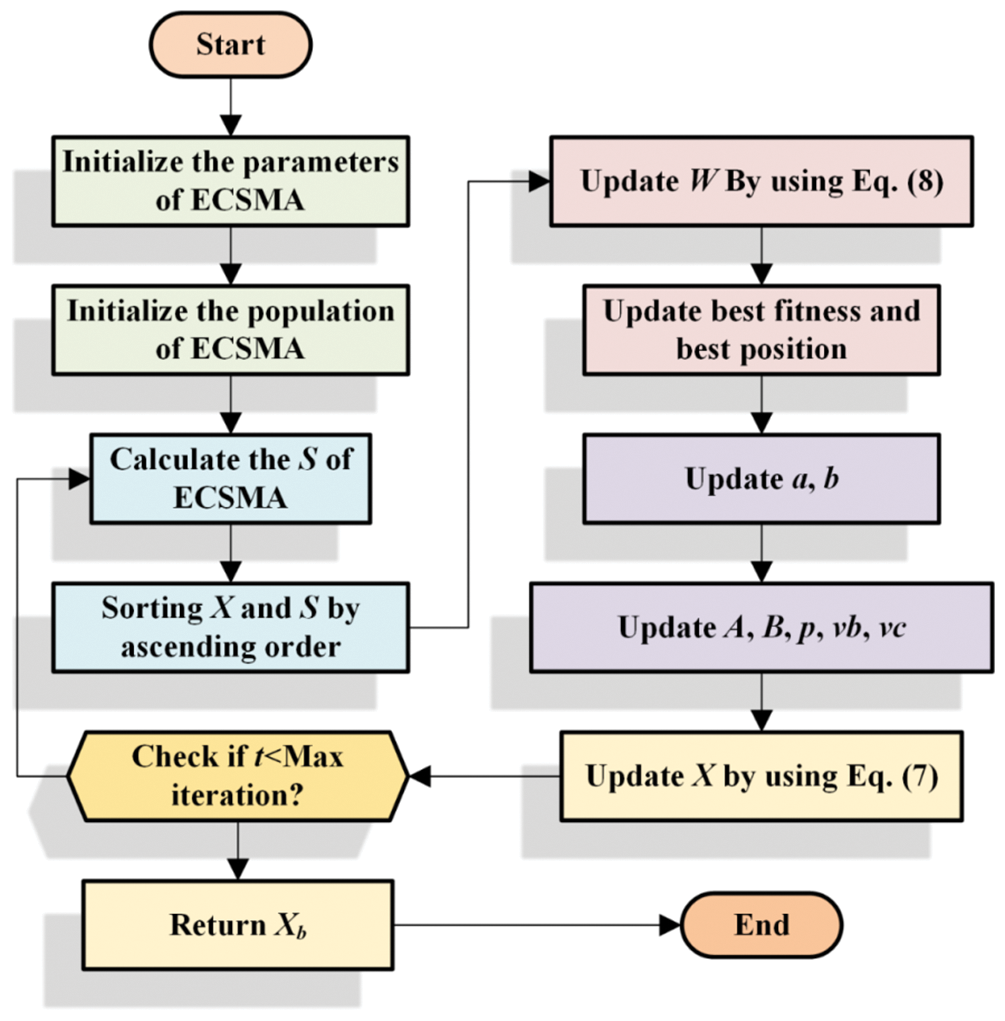

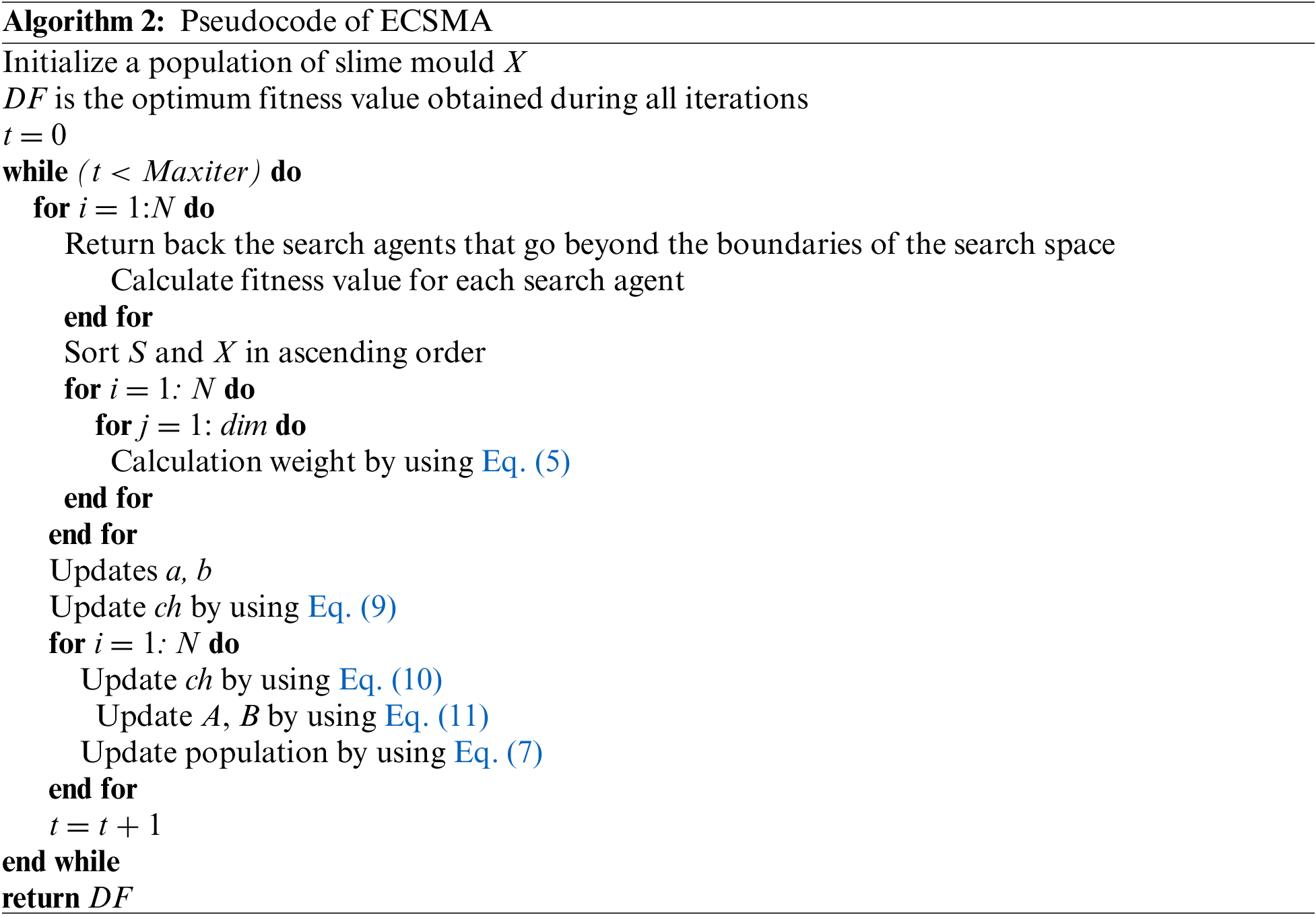
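Because the SMA equations did not survive extraction here, the following Python sketch illustrates one iteration of the original SMA as formulated by Li et al. [1]: weights computed from the sorted fitness, p = tanh|S(i) − DF|, vb drawn from [−a, a] with a = arctanh(1 − t/T), vc drawn from [−b, b] with b = 1 − t/T, and random re-initialization with probability z. It is a simplified, non-authoritative reimplementation; the names (`sma_step`, `sphere`), the base-10 logarithm in the weights, the default z = 0.03, and the clipping to the bounds are assumptions made for illustration.

```python
import math
import random


def sma_step(pop, fit, best_pos, best_fit, t, max_t, lb, ub, rng, z=0.03):
    """One position update of the original SMA for minimization (sketch).

    pop: N positions (lists of length D); fit: their fitness values;
    best_pos, best_fit: best solution found so far (X_b and DF);
    t: current iteration with 1 <= t <= max_t; lb, ub: scalar bounds.
    """
    n, dim = len(pop), len(pop[0])
    order = sorted(range(n), key=lambda i: fit[i])  # SmellIndex: best first
    b_f, w_f = fit[order[0]], fit[order[-1]]
    weights = [0.0] * n
    for rank, i in enumerate(order):
        ratio = 0.0 if b_f == w_f else (b_f - fit[i]) / (b_f - w_f)  # in [0, 1]
        term = rng.random() * math.log10(ratio + 1)
        # Better half: W >= 1 (positive feedback); worse half: W <= 1.
        weights[i] = 1 + term if rank < n / 2 else 1 - term
    a = math.atanh(1 - t / max_t)  # range of vb, shrinks to 0 at t = max_t
    b = 1 - t / max_t              # range of vc, decays linearly to 0
    new_pop = []
    for i in range(n):
        if rng.random() < z:       # re-initialize a few individuals at random
            new_pop.append([rng.uniform(lb, ub) for _ in range(dim)])
            continue
        p = math.tanh(abs(fit[i] - best_fit))
        xa, xb = pop[rng.randrange(n)], pop[rng.randrange(n)]  # random A and B
        x = []
        for j in range(dim):
            if rng.random() < p:   # approach food, guided by X_b
                xj = best_pos[j] + rng.uniform(-a, a) * (weights[i] * xa[j] - xb[j])
            else:                  # vc * X(t): oscillation that shrinks with b
                xj = rng.uniform(-b, b) * pop[i][j]
            x.append(min(max(xj, lb), ub))  # keep inside the search bounds
        new_pop.append(x)
    return new_pop


def sphere(x):
    return sum(v * v for v in x)


rng = random.Random(0)
lb, ub, n, dim, max_t = -100.0, 100.0, 30, 10, 200
pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
init_best = min(sphere(x) for x in pop)
best_pos, best_fit = None, float("inf")
for t in range(1, max_t + 1):
    fit = [sphere(x) for x in pop]
    for x, f in zip(pop, fit):
        if f < best_fit:
            best_pos, best_fit = list(x), f
    pop = sma_step(pop, fit, best_pos, best_fit, t, max_t, lb, ub, rng)
print(f"best fitness after {max_t} iterations: {best_fit:.3e}")
```

In this sketch X_A and X_B are drawn uniformly at random from the whole population, as in the original SMA; these are the coefficients A and B that ECSMA modifies.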

ECSMA is equipped with two strategies. First, an elite strategy is introduced to enhance the exploitation of SMA and reduce the adverse effect of poor solutions on the search for the optimal solution. Second, a chaotic strategy is added to improve the ergodicity of SMA and prevent it from falling into local optima (LO) prematurely.

3.1 Elite Strategy (ES)

The MGABC algorithm [54] improves the neighbor search formula by randomly selecting two neighbors and using the best individual in the population as the starting point of the search; the guidance of the global best individual accelerates convergence. Building on this idea, this paper selects two elite individuals as neighbors to further accelerate convergence, as shown in Eq. (8).

where
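Eq. (8) is not recoverable from this text, so the sketch below only illustrates the idea stated above: instead of drawing the two individuals A and B uniformly from the whole population, two distinct individuals are drawn from an elite pool of the best-ranked solutions. The function name `elite_pair` and the elite fraction of 20% are hypothetical choices, not values taken from the paper.

```python
import random


def elite_pair(fit, elite_frac=0.2, rng=random):
    """Pick two distinct elite individuals to play the roles of X_A and X_B.

    Hypothetical sketch: elite_frac is an assumed parameter, not from the paper.
    fit: fitness values of the current population (minimization).
    """
    n = len(fit)
    if n < 2:
        raise ValueError("need at least two individuals")
    order = sorted(range(n), key=lambda i: fit[i])  # best first
    k = min(n, max(2, round(elite_frac * n)))       # size of the elite pool
    a, b = rng.sample(order[:k], 2)                 # two different elites
    return a, b


fit = [29.0 - i for i in range(30)]  # individual 29 is the best
print(elite_pair(fit, rng=random.Random(1)))
```

In an SMA-style update, the two returned indices would supply X_A and X_B, so the step is always built from high-quality solutions, which favors exploitation.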

3.2 Chaotic Stochastic Strategy (CSS)

A chaotic stochastic strategy is used to randomly select

where
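The chaotic map used by ECSMA is not given in the surviving text. As an illustration only, the sketch below assumes the logistic map x_{k+1} = 4 x_k (1 − x_k), a common choice in chaotic metaheuristics, and maps its values in (0, 1) to population indices that could stand in for the random choices of A and B. The class name `ChaoticSelector` and the seed 0.7 are hypothetical.

```python
class ChaoticSelector:
    """Draw population indices from a logistic map instead of a uniform RNG.

    Hypothetical sketch: the logistic map with mu = 4 is assumed here; the
    chaotic map actually used by ECSMA is not recoverable from this text.
    """

    def __init__(self, x0=0.7):
        # x0 must lie in (0, 1) and avoid the special points 0, 0.25, 0.5, 0.75, 1
        self.x = x0

    def next_value(self):
        self.x = 4.0 * self.x * (1.0 - self.x)  # logistic map, fully chaotic at mu = 4
        if not 0.0 < self.x < 1.0:              # restart if rounding hits 0 or 1
            self.x = 0.7
        return self.x

    def index(self, n):
        """Map the next chaotic value in (0, 1) to an index in [0, n)."""
        return min(int(self.next_value() * n), n - 1)

    def pair(self, n):
        """Chaotic replacements for the random indices A and B (they may coincide)."""
        return self.index(n), self.index(n)


sel = ChaoticSelector()
print([sel.index(30) for _ in range(5)])
print(sel.pair(30))
```

Unlike a pseudo-random generator, the logistic sequence is deterministic but ergodic over (0, 1), which is the property chaotic strategies rely on to spread the selections across the population.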

Although SMA already has good convergence and accuracy, there is still room for improvement in both respects. Therefore, the elite strategy and the chaotic stochastic strategy are used to improve the SMA coefficients A and B: the elite strategy ensures the convergence of SMA, while chaotic randomness enhances its exploration tendency. Algorithm 2 shows the procedure of ECSMA, and Fig. 1 displays its flowchart.

Figure 1: Flowchart of ECSMA

The time complexity of the proposed ECSMA depends on the number of iterations (T), the number of search agents (N), and the dimension of the optimization problem (D). In each iteration, calculating the fitness values costs O(N) and sorting them costs O(N log N), while the computational complexity of calculating the weights and updating the individuals is O (

4 Discussions on Experimental Results

This section presents the benchmark experiments. First, the diversity and balance of ECSMA and SMA are analyzed. Then, the performance of ECSMA is verified through a mechanism comparison and comparisons with other algorithms.

4.1 Validation Using Benchmark Problems and Parameter Settings

In this section, the efficiency of the optimizer is benchmarked on well-known test functions, whose equations are given in Table A1. In total, 23 benchmark functions and 8 composite functions from CEC2014 are selected to evaluate ECSMA. A unimodal function has only one optimum, so it is well suited to testing the exploitation ability of a method. Multimodal functions, in contrast, are more likely to trap an algorithm in local optima (LO), and the difficulty grows with the dimensionality of the function; they are therefore suitable for testing exploration and the ability to escape from LO. For a fair comparison, all algorithms are run under the same conditions: the population size is 30, the maximum number of function evaluations (MaxFEs) is 250,000, and each algorithm is run independently 30 times on each benchmark function to reduce the influence of randomness. The results are analyzed with the Wilcoxon signed-rank test.
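The protocol above (a fixed evaluation budget, repeated independent runs, and AVG/STD summaries) can be sketched as follows. The sphere (unimodal) and Rastrigin (multimodal) functions are standard examples chosen for illustration; no claim is made that they correspond to specific entries of Table A1. `random_search` is a hypothetical placeholder optimizer, and the budget in the usage line is far below the 250,000 evaluations used in the paper so that the example runs quickly.

```python
import math
import random
import statistics


def sphere(x):
    """Unimodal: a single optimum, f(0) = 0 (tests exploitation)."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: many local optima around the global optimum f(0) = 0."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def random_search(f, dim, lb, ub, max_fes, rng):
    """Hypothetical placeholder optimizer that spends exactly max_fes evaluations."""
    best = float("inf")
    for _ in range(max_fes):
        best = min(best, f([rng.uniform(lb, ub) for _ in range(dim)]))
    return best


def benchmark(optimizer, f, dim, lb, ub, max_fes, runs=30, seed=0):
    """Run `runs` independent trials and return (AVG, STD) of the final best values."""
    results = [optimizer(f, dim, lb, ub, max_fes, random.Random(seed + r))
               for r in range(runs)]
    return statistics.mean(results), statistics.stdev(results)


avg, std = benchmark(random_search, rastrigin, dim=30, lb=-5.12, ub=5.12, max_fes=1000)
print(f"AVG = {avg:.3f}, STD = {std:.3f}")
```

Seeding each run separately keeps the runs independent yet reproducible; any optimizer with the same call signature can be dropped in place of `random_search`.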

To ensure fairness, all experiments were conducted on a desktop computer with an Intel(R) Xeon(R) CPU E5-2660 v3 (2.60 GHz) and 16 GB RAM, and all methods were implemented in MATLAB R2020b.

4.2 Performance Analysis of ECSMA and SMA

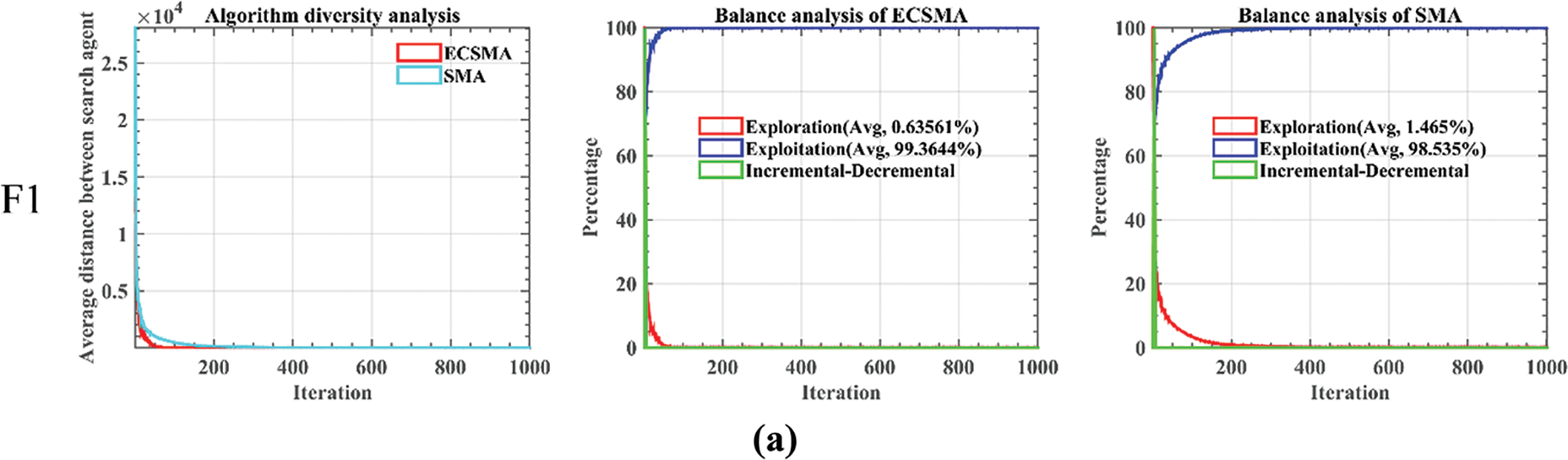

This part analyzes the diversity and balance of ECSMA and SMA on the 31 benchmark functions. For fairness, the dimensionality, population size, number of evaluations, and other parameters were identical for both algorithms. Fig. 2 depicts the diversity and balance of ECSMA and SMA on some of the functions. The first column shows the diversity curves, where the x-axis denotes the number of iterations and the y-axis the diversity measure. Because the initial population is generated randomly, it is highly diverse at first, and its diversity decreases as the iterations progress.

Figure 2: Diversity and balance analysis of algorithms

The diversity curves show that ECSMA always reaches its minimum diversity earlier than SMA, which indicates that ECSMA converges faster and has stronger exploitation ability. The second and third columns are balance plots with three curves: the exploration curve, the exploitation curve, and the incremental-decremental curve. A high value of the exploration curve means that the algorithm is mainly exploring, while changes in the exploitation curve reflect changes in its exploitation ability. The incremental-decremental curve reflects the balance between the two behaviors: it increases while exploration is greater than or equal to exploitation and decreases otherwise, so it peaks when the exploration and exploitation capabilities are equal. The balance plots also show that ECSMA enters the exploitation stage earlier than SMA, that is, it spends less time exploring before converging, which is consistent with its better overall performance.
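Diversity and balance plots of this kind are commonly built from a dimension-wise diversity measure: the mean absolute deviation of each coordinate from the population median, averaged over the dimensions, with exploration XPL% = 100·Div/Div_max and exploitation XPT% = 100·|Div − Div_max|/Div_max, where Div_max is the largest diversity observed during the run. The sketch below assumes this formulation; the paper's exact definition is not given in this text, and the function names are hypothetical.

```python
import statistics


def dimension_wise_diversity(pop):
    """Mean absolute deviation from the per-dimension median, averaged over dimensions."""
    n, dim = len(pop), len(pop[0])
    total = 0.0
    for j in range(dim):
        col = [x[j] for x in pop]
        med = statistics.median(col)
        total += sum(abs(med - v) for v in col) / n
    return total / dim


def exploration_exploitation(div_history):
    """Per-iteration exploration (XPL%) and exploitation (XPT%) percentages."""
    div_max = max(div_history)
    if div_max == 0:
        return [0.0] * len(div_history), [0.0] * len(div_history)
    xpl = [100.0 * d / div_max for d in div_history]
    xpt = [100.0 * abs(d - div_max) / div_max for d in div_history]
    return xpl, xpt


print(dimension_wise_diversity([[0, 0], [1, 2], [2, 4]]))
print(exploration_exploitation([2.0, 1.0, 0.5]))
```

Recording `dimension_wise_diversity(pop)` once per iteration gives the diversity curve; XPL% and XPT% always sum to 100, so the point where they cross marks the shift from exploration to exploitation.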

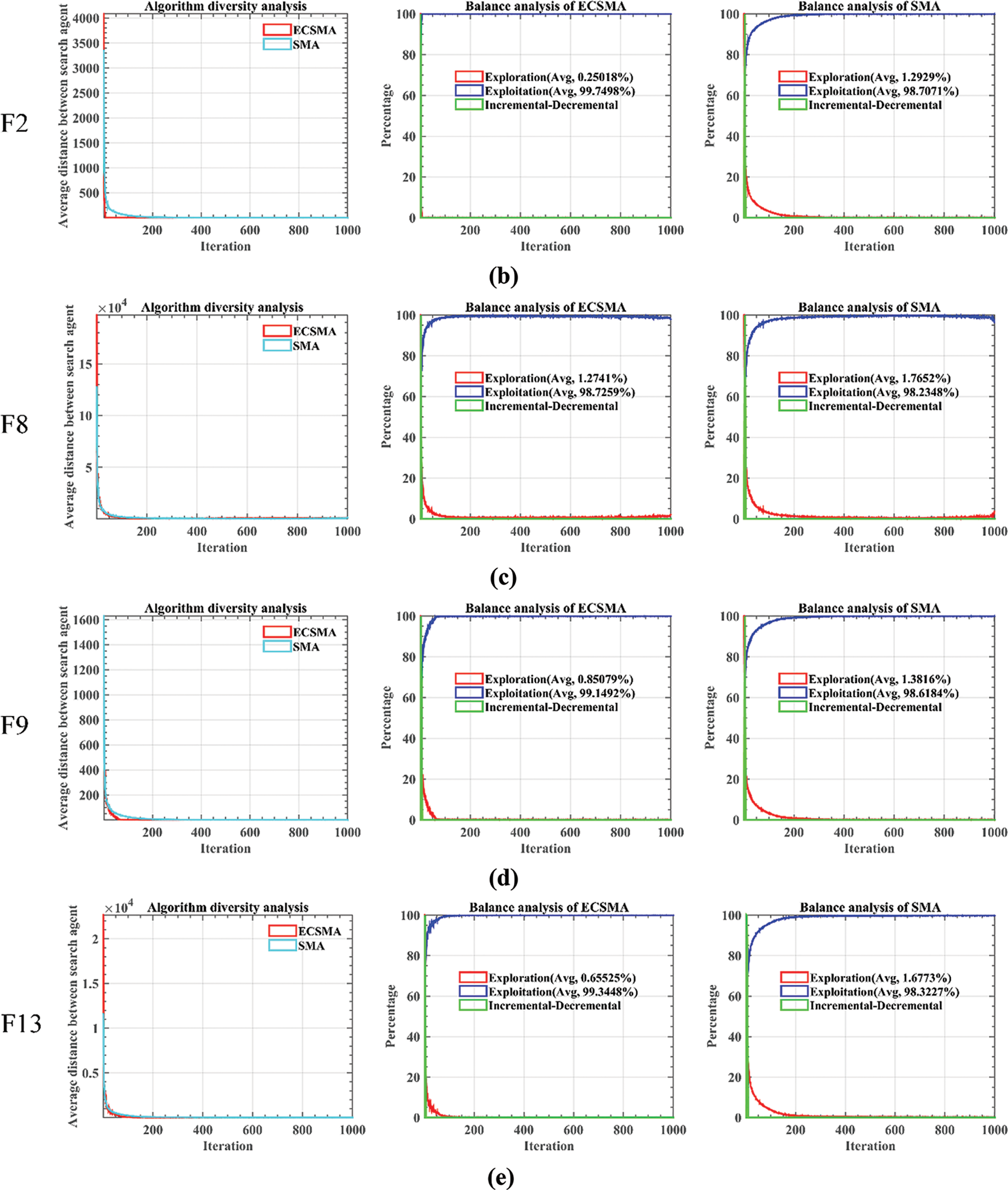

Two strategies, ES and CSS, were introduced into the original SMA in Section 3. To investigate the impact of each mechanism, four SMA variants are listed in Table 1, where “1” indicates that a mechanism is included and “0” that it is not. For example, ESMA denotes SMA with only the ES mechanism.

The SMA variants in Table 1 were tested on the benchmark function set. Table 2 reports the p-values of the Wilcoxon signed-rank test and the rankings of the variants, with a significance level of 0.05. In the table, “+” indicates that ECSMA performs significantly better than the compared algorithm, “−” that it performs significantly worse, and “=” that there is no significant difference; the “+/−/=” row counts these outcomes over all functions. ECSMA is inferior to ESMA, CSMA, and SMA on 3, 0, and 0 of the 31 problems, respectively. Although the advantage of ECSMA is not pronounced on many functions, it is never significantly worse than CSMA or SMA and is often better. Moreover, ECSMA ranks first overall. Based on this analysis, ECSMA is chosen as the best-performing improvement of SMA.
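The “+/−/=” labels can be derived from paired run results as sketched below. This is a minimal pure-Python Wilcoxon signed-rank test using the normal approximation (zero differences dropped, average ranks for ties, no tie or continuity correction of the variance), which is reasonable for 30 runs but may differ slightly from library implementations such as `scipy.stats.wilcoxon`. Deciding the direction of a significant result by comparing mean results is an assumption, and the function names are hypothetical.

```python
import math


def wilcoxon_p(x, y):
    """Two-sided Wilcoxon signed-rank p-value via the normal approximation."""
    d = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(d)
    if n == 0:
        return 1.0  # identical samples: no evidence of a difference
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to groups of tied |d|
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # ranks are 1-based
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def sign_label(ecsma, other, alpha=0.05):
    """'+' if ECSMA is significantly better (lower is better), '-' if worse, '=' otherwise."""
    if wilcoxon_p(ecsma, other) >= alpha:
        return "="
    return "+" if sum(ecsma) / len(ecsma) < sum(other) / len(other) else "-"


ecsma_runs = [0.1 * i for i in range(30)]  # hypothetical final errors of 30 runs
rival_runs = [v + 1.0 for v in ecsma_runs]
print(sign_label(ecsma_runs, rival_runs))  # '+'
```

Counting the labels over all 31 functions yields the “+/−/=” summary row.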

4.4 Comparison with Excellent Peers

In Table A2, ECSMA is compared with the original SMA and 12 efficient basic and improved metaheuristic algorithms, including improved GWO (IGWO) [55], opposition-based learning GWO (OBLGWO) [56], chaotic whale optimizer algorithm (CWOA) [57], improved WOA (IWOA) [58], chaotic map bat algorithm with random black hole model (RCBA) [59], chaos-enhanced moth-flame optimizer (CMFO) [60], adaptive differential evolution (JADE) [61], particle swarm optimization with an aging leader and challengers (ALC-PSO) [62], bat algorithm (BA) [63], differential evolution (DE) [64], whale optimization algorithm (WOA) [65], and grey wolf optimizer (GWO) [66].

In this experiment, the dimension of the benchmark functions is set to 30, and the remaining parameters and test functions are unchanged from the previous experiments. Table A2 records the mean (AVG) and standard deviation (STD) of the results obtained by each algorithm on each function.

In Table A2, AVG reflects the solution quality of an algorithm and STD its stability. The stability of ECSMA is slightly weaker than that of some algorithms on F6, F12, F13, F20, F23, and F27, but it still ranks among the top few. On many functions, such as F1, F2, F9, F11, F14, F16, F17, F18, and F19, several algorithms, including ECSMA, simultaneously attain the lowest AVG and STD. This indicates that ECSMA finds the best solutions stably. ECSMA shows advantages on multiple types of functions, including unimodal, multimodal, and fixed-dimension multimodal functions. According to the ranking results, ECSMA obtains the best average ranking, which verifies its improvement over the original SMA.
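The average ranking can be computed as sketched below, assuming that the algorithms are ranked on each function by their AVG value (lower is better, with ties sharing the mean of the tied positions) and that these ranks are then averaged over all functions. The paper's exact ranking procedure is not stated here, and `average_ranks` is a hypothetical name.

```python
def average_ranks(avg_table):
    """avg_table[f][a]: mean result of algorithm a on function f (lower is better).

    Returns each algorithm's rank averaged over functions; ties share the mean rank.
    """
    n_alg = len(avg_table[0])
    totals = [0.0] * n_alg
    for row in avg_table:
        for a in range(n_alg):
            better = sum(1 for v in row if v < row[a])
            equal = sum(1 for v in row if v == row[a])  # includes algorithm a itself
            totals[a] += better + (equal + 1) / 2       # mean of positions better+1..better+equal
    return [t / len(avg_table) for t in totals]


# Two functions, three algorithms; on the second function the first two tie.
print(average_ranks([[1.0, 2.0, 3.0], [0.0, 0.0, 5.0]]))
```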

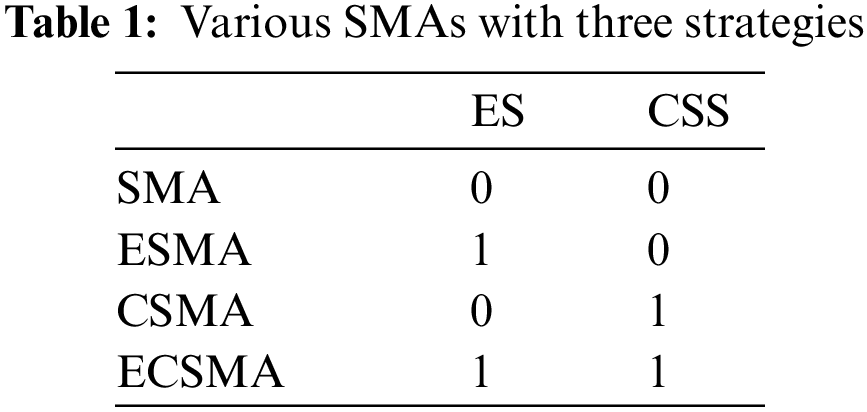

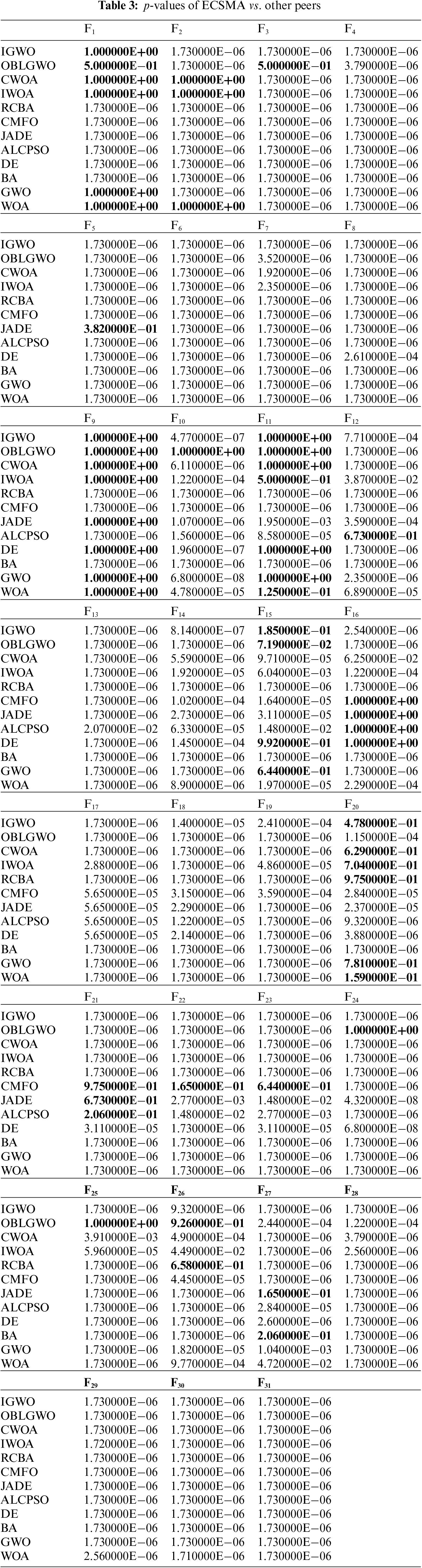

The Wilcoxon signed-rank test was used to evaluate the significance of the differences between ECSMA and the other optimizers on the 31 benchmark functions; the summary is also recorded at the end of Table A2 and shows that ECSMA performs well on most problems. A p-value below 0.05 in Table 3 indicates a significant difference between ECSMA and the competitor. Table 3 shows that ECSMA has no significant difference from the other algorithms on F1, F2, F9, F11, F15, F16, and F20, whereas on the other functions it is significantly better than most of the compared algorithms in terms of solution quality. This supports the superiority of ECSMA on the test functions.

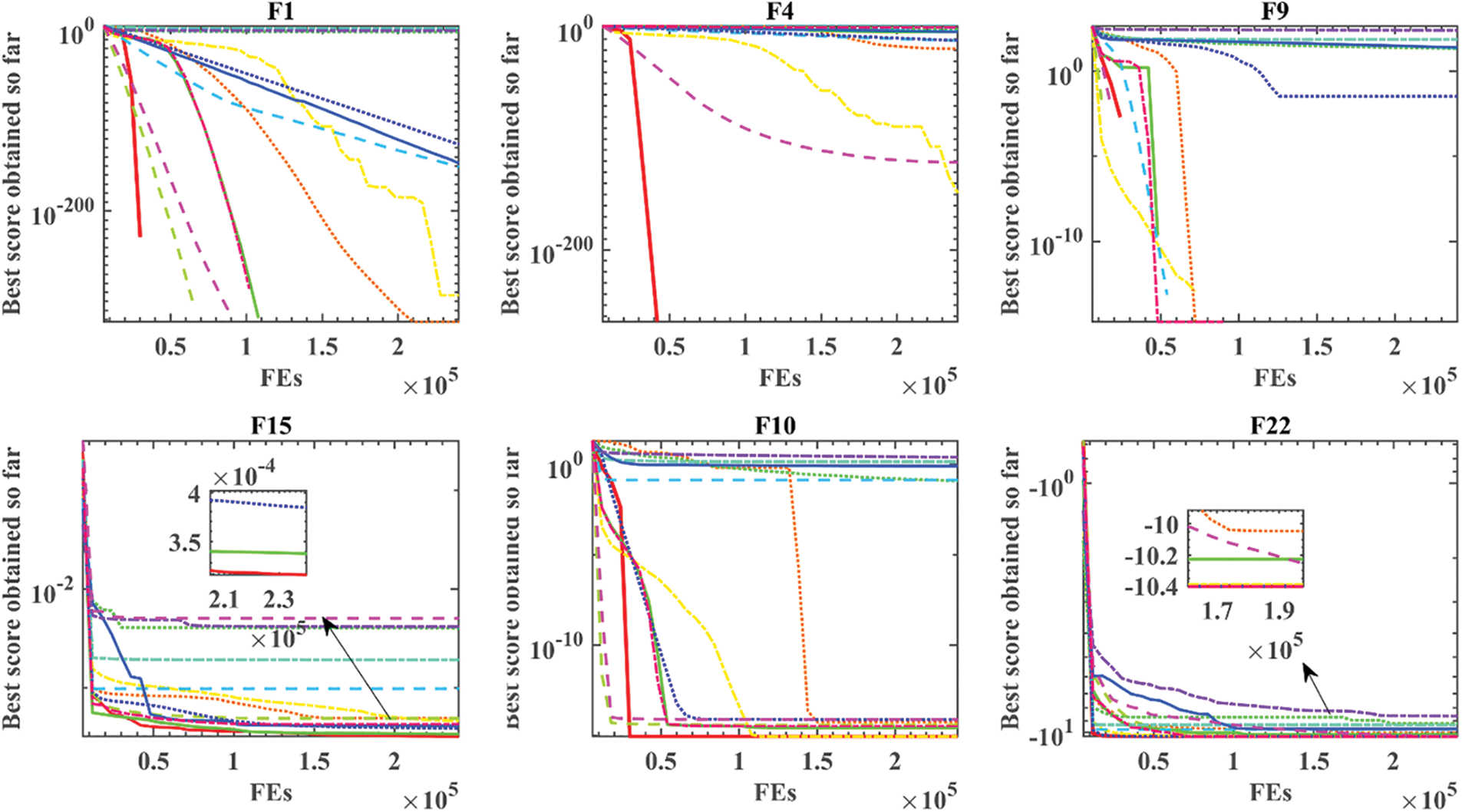

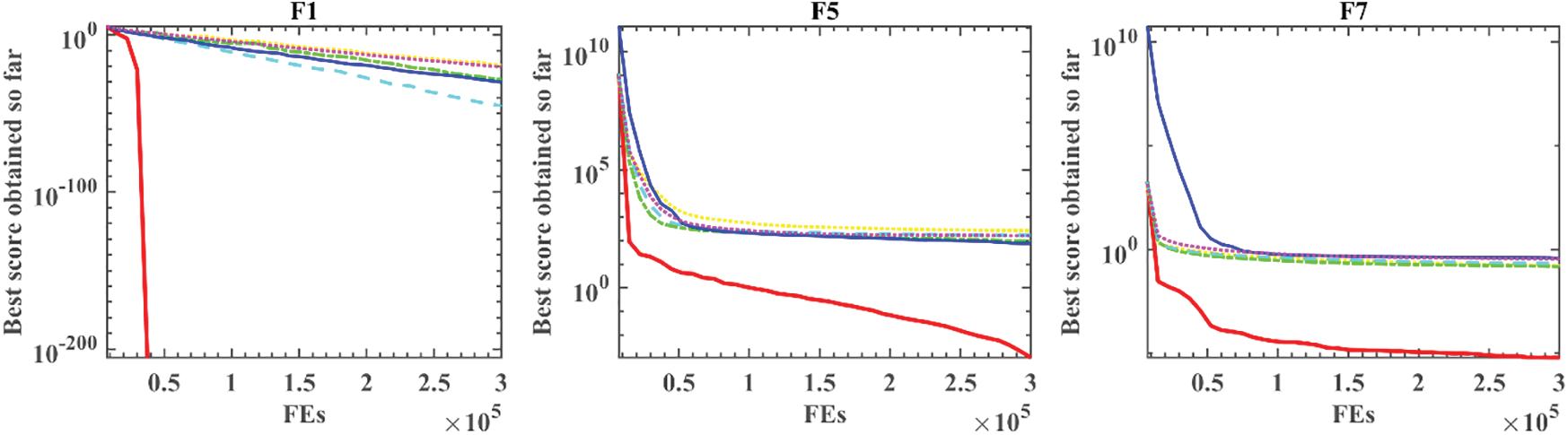

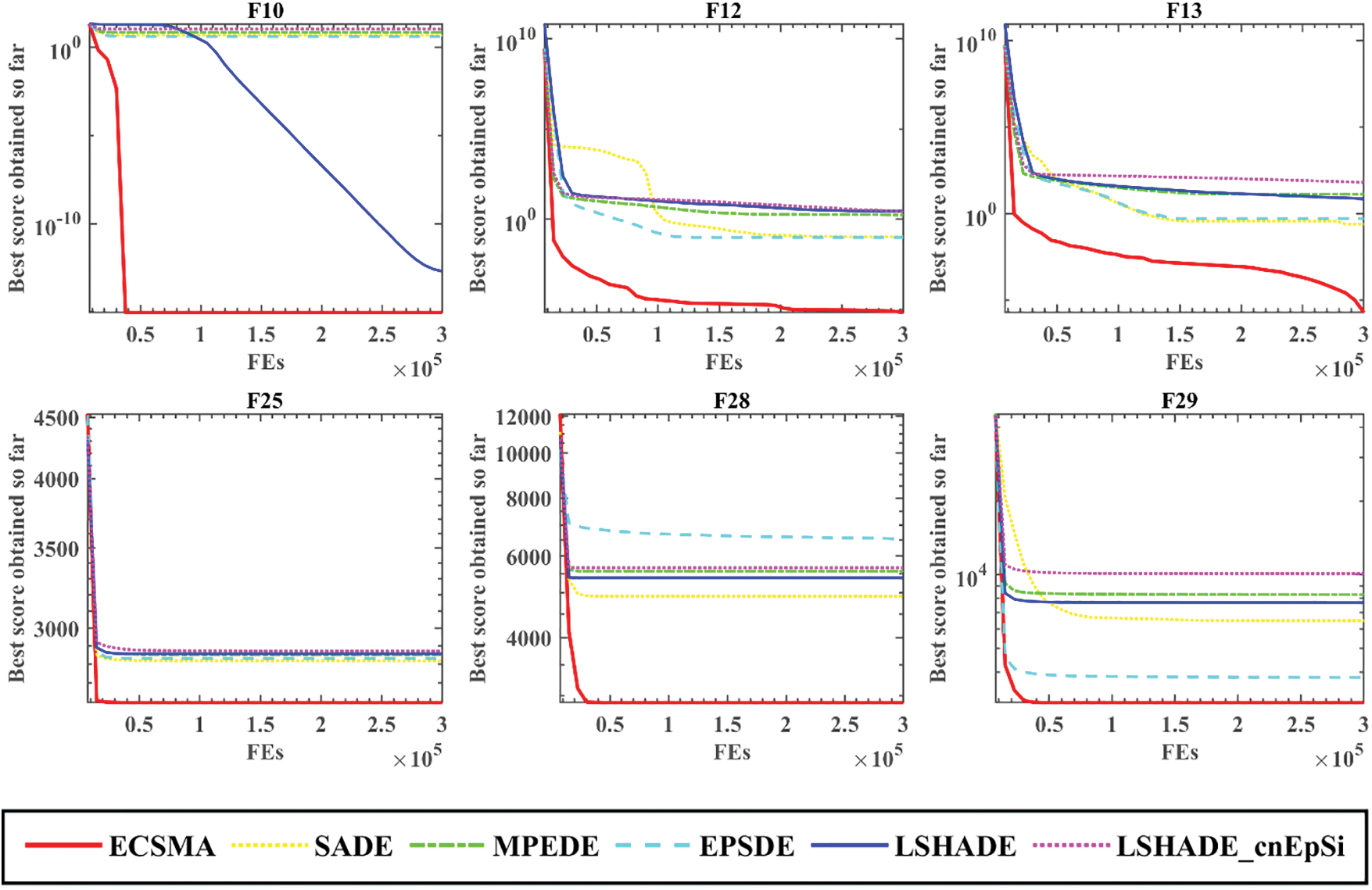

Fig. 3 shows 12 convergence curves of ECSMA and its competitors on the 30-dimensional benchmark functions. ECSMA shows the best convergence on F15, F22, F24, F25, F28, and F29, where the other optimizers stagnate in local optima. On F30 and F31, although JADE and DE converge rapidly in the early stage, ECSMA reaches a better solution in the later stage. On F1 and F2, ECSMA converges to the optimal solution faster than the other algorithms.

Figure 3: Convergence curves of ECSMA and other peers

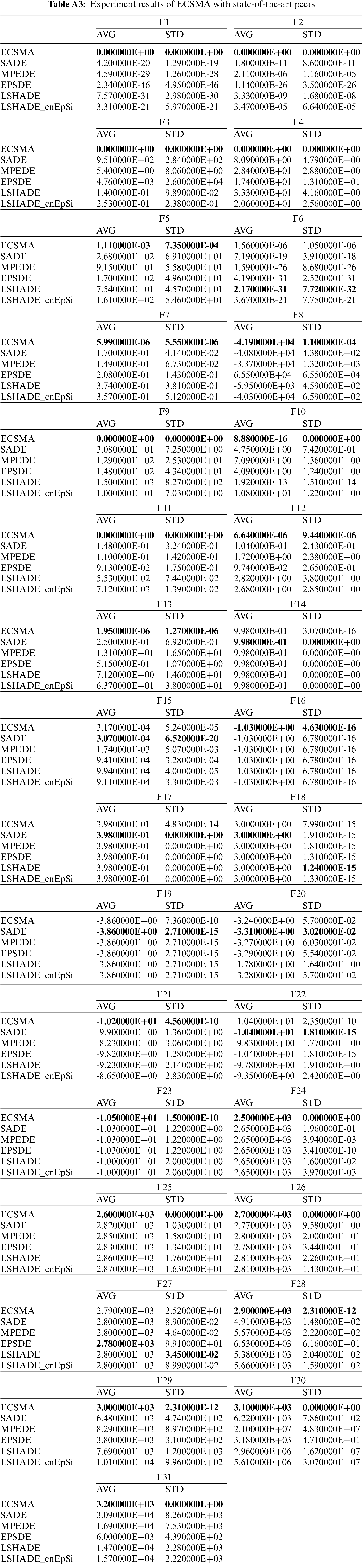

4.5 Comparison with State-of-the-Art Peers

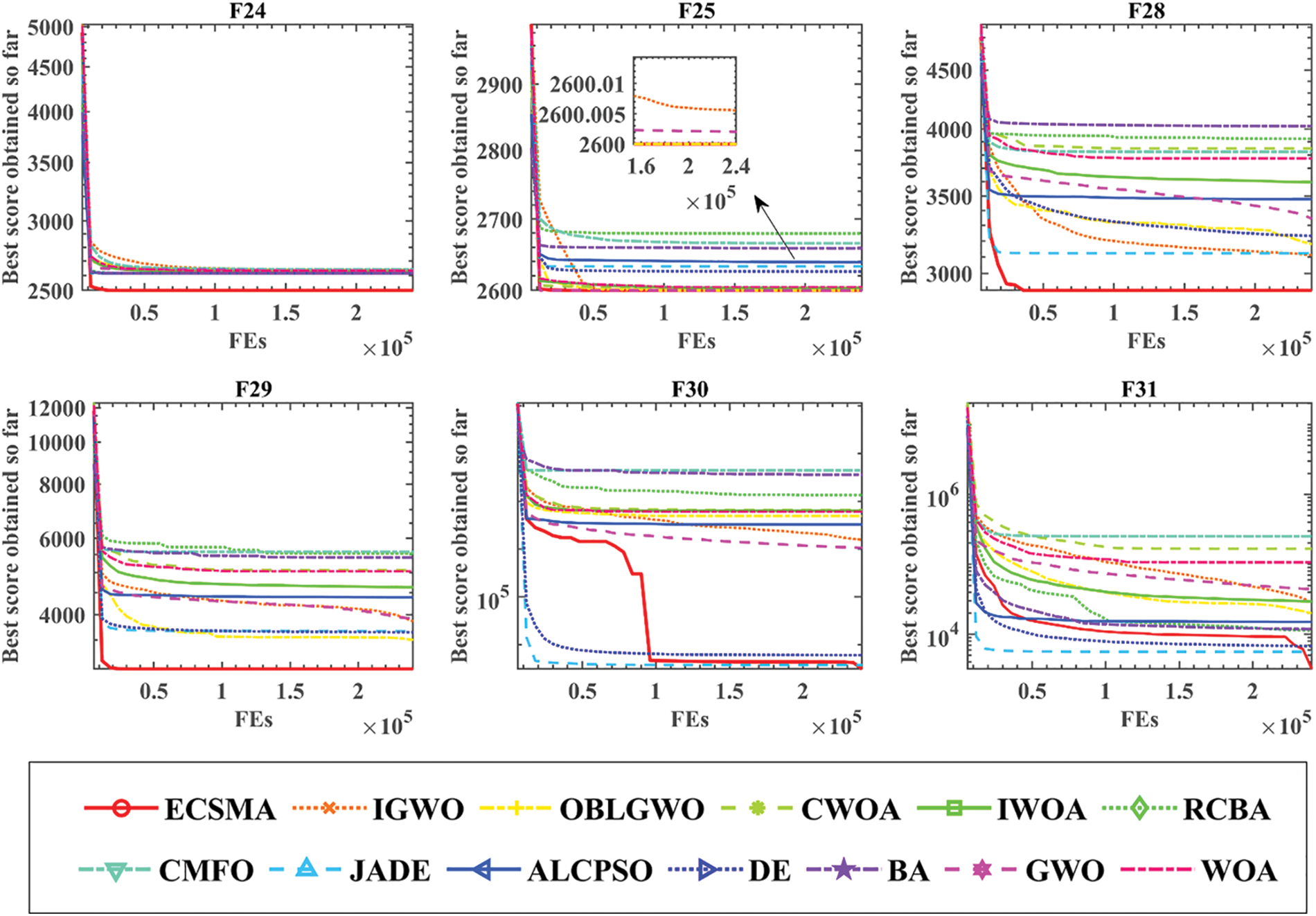

In this subsection, ECSMA is compared with five state-of-the-art algorithms, namely SADE [64], PEDE [67], EPSDE [68], LSHADE [69], and LSHADE_cnEpSi [70], some of which are competition-winning algorithms. The mean and variance obtained by ECSMA and these algorithms on each benchmark function are given in Table A3. ECSMA achieves very good results against these advanced algorithms on the 31 selected benchmark functions. First, its mean values show that ECSMA has strong optimization ability and outperforms these algorithms on most of the benchmark functions. Second, its small variances show that ECSMA is highly stable during optimization.

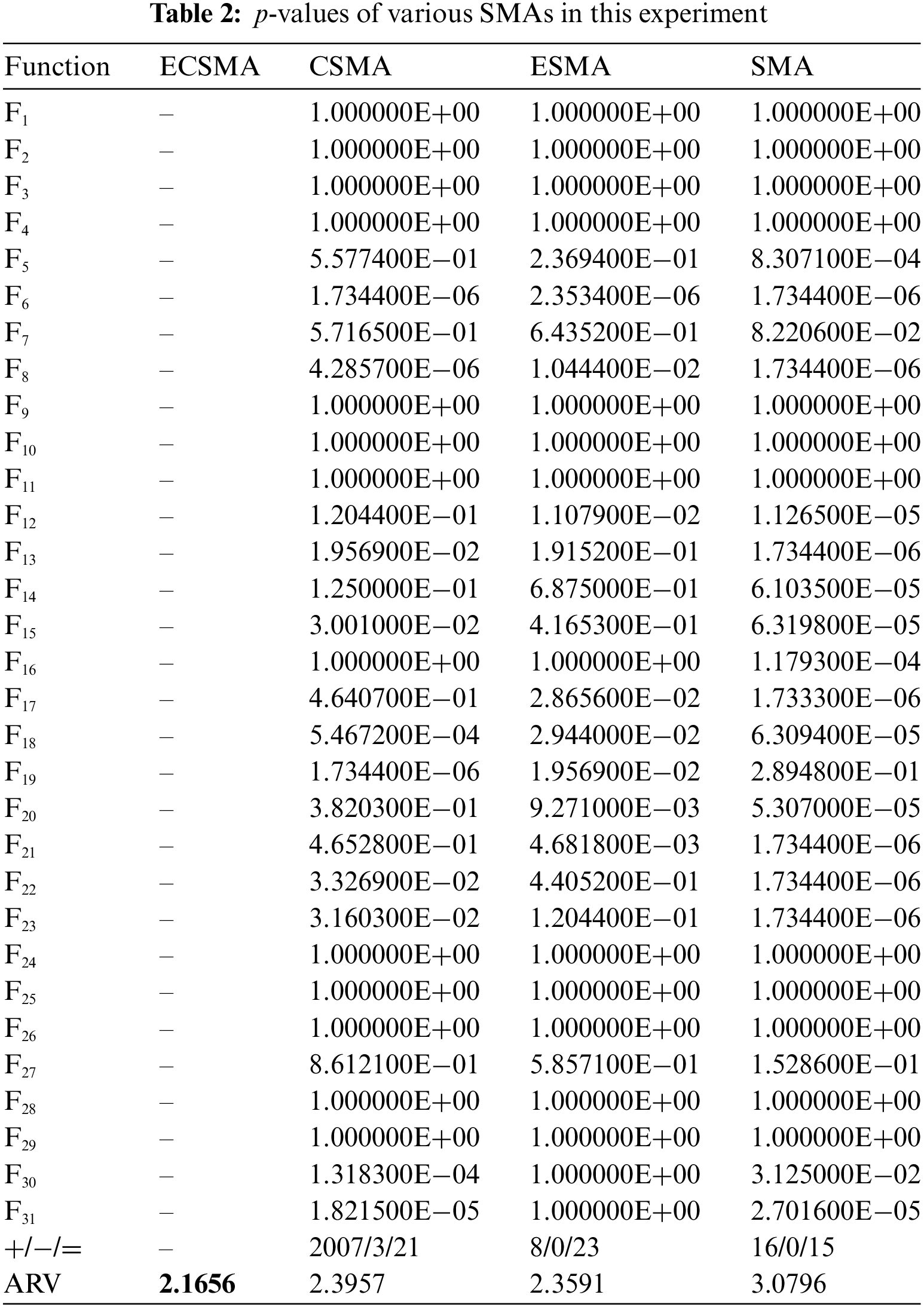

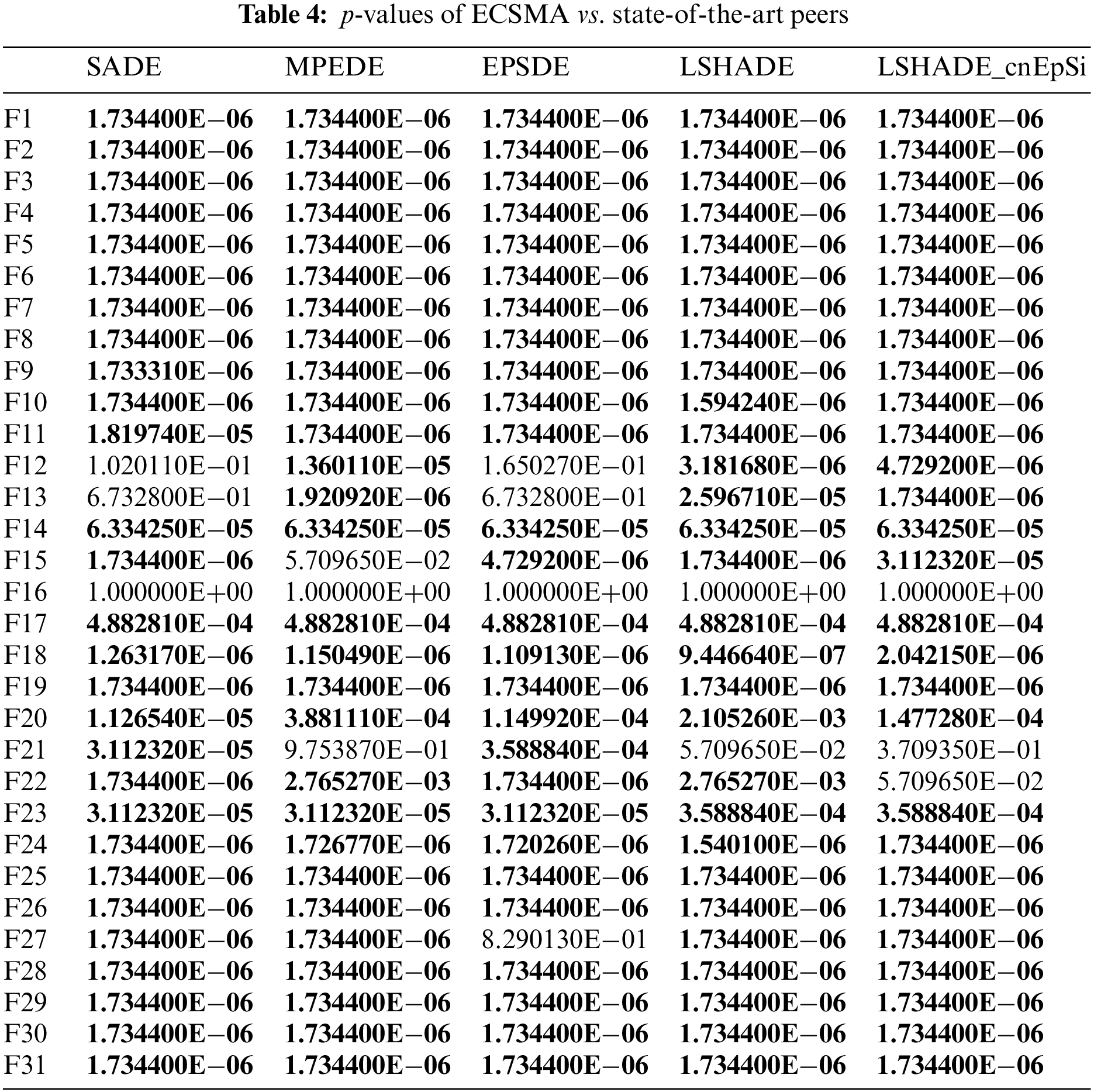

Further, the significance of the differences between ECSMA and these advanced algorithms on the 31 benchmark functions was evaluated with the Wilcoxon signed-rank test. Table 4 shows that most p-values are below 0.05, indicating that the advantage of ECSMA over these algorithms is statistically significant on most functions. Finally, Fig. 4 gives the convergence curves of ECSMA and the other advanced algorithms on F1, F5, F7, F10, F12, F13, F25, F28, and F29. These curves show that ECSMA converges well and is able to escape from local optima, which further reveals its core advantages and indicates that ECSMA is a promising swarm intelligence algorithm for a wide range of optimization problems.

Figure 4: Convergence curves of ECSMA and other state-of-the-art peers

In summary, this series of benchmark comparisons shows that ECSMA improves both convergence speed and convergence accuracy, avoids falling into local optima more effectively, and has strong optimization capability.

5 ECSMA for the Structural Design Issues

To demonstrate the practical performance of the proposed method, ECSMA is applied to several engineering optimization problems. Unlike the benchmark functions, these problems require the optimal solution to satisfy a set of constraints, so the variables must remain within feasible ranges. The four engineering problems are described as follows.
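A common way to let an unconstrained optimizer such as ECSMA handle these constraints is a static penalty, which the I-beam section below also mentions. The sketch assumes the form f(x) + P·Σ max(0, g_i(x))² with every constraint written as g_i(x) ≤ 0; the quadratic form, the coefficient P = 10⁶, and the name `penalized` are illustrative assumptions, not the paper's settings.

```python
def penalized(objective, constraints, penalty=1e6):
    """Wrap a constrained minimization problem as an unconstrained one.

    constraints: functions g(x) that must satisfy g(x) <= 0; each violation adds
    penalty * g(x)**2 to the objective (a static penalty, assumed here).
    """
    def f(x):
        violation = sum(max(0.0, g(x)) ** 2 for g in constraints)
        return objective(x) + penalty * violation
    return f


# Minimize x subject to x >= 1, written as g(x) = 1 - x <= 0.
f = penalized(lambda x: x[0], [lambda x: 1.0 - x[0]])
print(f([2.0]), f([0.0]))
```

Feasible points keep their true objective value, while infeasible points are pushed away in proportion to how badly they violate the constraints.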

5.1 Structure Design of Welded Beam (WB)

The goal of this structural design problem is to minimize the manufacturing cost of the WB. The design variables are the thickness of the weld (h), the length of the bar (l), the height of the bar (t), and the thickness of the bar (b). Further, the primary constraints are deflection rate (

Consider

Objective

Variable ranges:

where

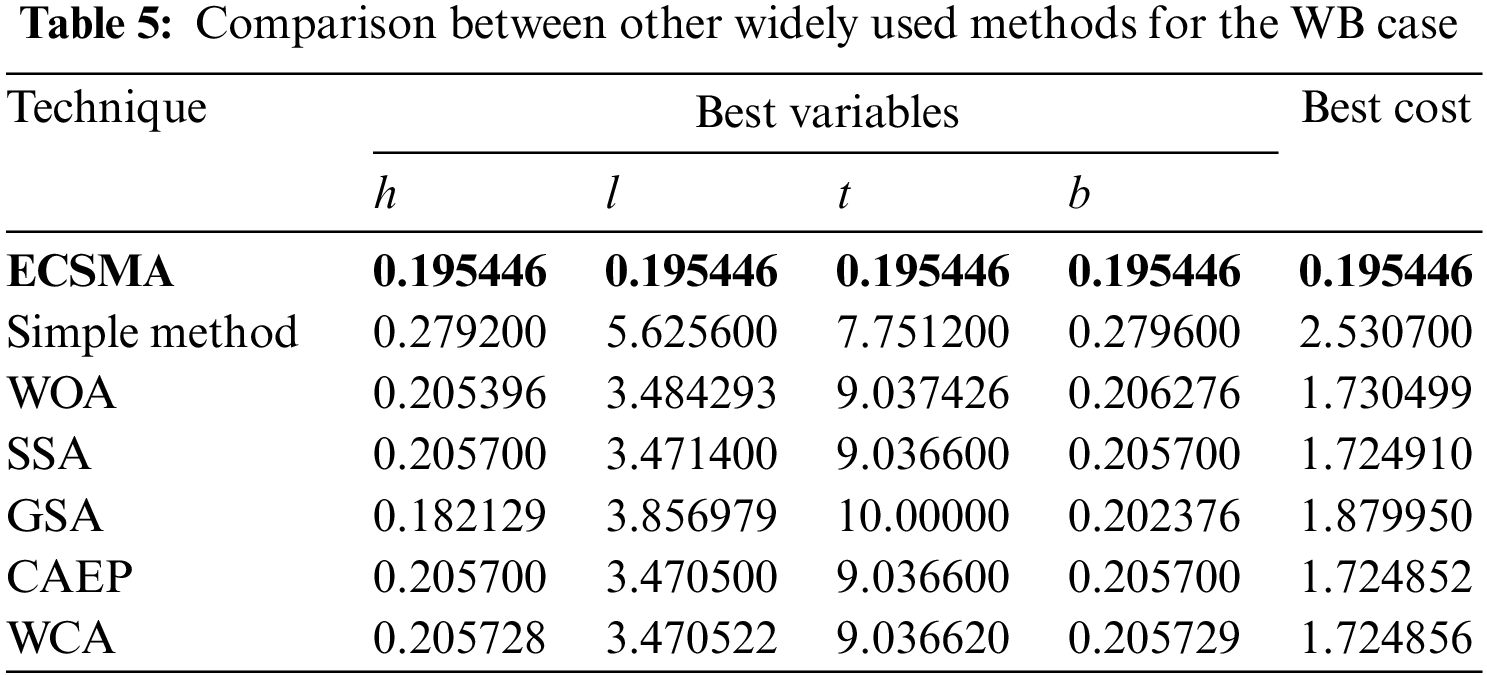

This structural design problem has been studied extensively as a constrained optimization problem. Mirjalili et al. [71] used SSA to optimize it, and Rashedi et al. [8] proposed GSA, which obtained an optimum cost of 1.879950 on this problem.

Table 5 shows the results of ECSMA and other similar algorithms on the WB problem. ECSMA performs best, with a minimum cost of 1.715213 obtained at h = 0.195446, l = 3.419576, t = 9.132268, and b = 0.205258. ECSMA thus satisfies the constraints and obtains the minimum manufacturing cost for the WB.
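The objective equation did not survive extraction. Assuming the standard welded-beam cost function used throughout this literature, f = 1.10471 h² l + 0.04811 t b (14 + l), the reported design variables can be plugged in to check the reported cost. This is only an arithmetic check of the objective; the constraints are not re-evaluated here, and the function name is hypothetical.

```python
def welded_beam_cost(h, l, t, b):
    """Standard welded-beam fabrication cost (weld term plus bar term), assumed form."""
    return 1.10471 * h ** 2 * l + 0.04811 * t * b * (14.0 + l)


# Design variables reported for ECSMA in Table 5.
cost = welded_beam_cost(h=0.195446, l=3.419576, t=9.132268, b=0.205258)
print(f"{cost:.6f}")
```

With the reported variables this evaluates to about 1.715214, which agrees with the reported best cost of 1.715213 up to rounding of the published digits.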

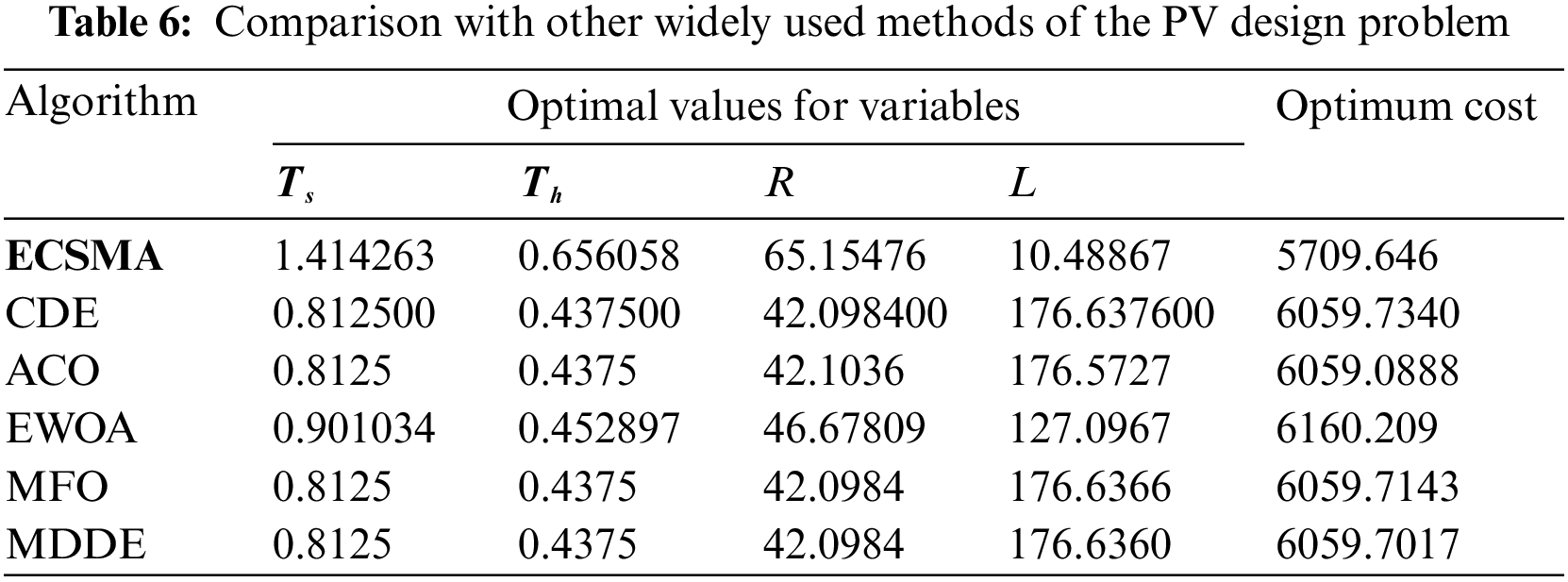

5.2 Structure Design of Pressure Vessel (PV)

The goal of the cylindrical PV design is to minimize its cost by optimizing the constrained design variables. These variables are the length of the cylindrical section without the head (l), head thickness

Consider

Objective:

Variable ranges:

ECSMA was used to optimize this problem, and its results were compared with those of CDE, ACO [72,73], EWOA, MFO [74], and MDDE. The detailed comparison is shown in Table 6. ECSMA outperforms the other algorithms, which demonstrates that it can handle this problem effectively.
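The PV model's equations were also lost in extraction. The sketch below encodes the standard pressure vessel formulation widely used in this literature, which is assumed (not confirmed) to be the one used here: shell thickness `ts`, head thickness `th`, inner radius `r`, and length `l` of the cylindrical section, with four inequality constraints g_i ≤ 0. Such a function can be combined with a penalty term before being handed to ECSMA.

```python
import math


def pressure_vessel(ts, th, r, l):
    """Standard pressure vessel cost and constraints g_i(x) <= 0 (assumed formulation).

    ts: shell thickness, th: head thickness, r: inner radius,
    l: length of the cylindrical section without the head.
    """
    cost = (0.6224 * ts * r * l + 1.7781 * th * r ** 2
            + 3.1661 * ts ** 2 * l + 19.84 * ts ** 2 * r)
    g = [
        -ts + 0.0193 * r,                                               # shell thickness
        -th + 0.00954 * r,                                              # head thickness
        -math.pi * r ** 2 * l - 4 / 3 * math.pi * r ** 3 + 1_296_000,   # minimum volume
        l - 240.0,                                                      # length limit
    ]
    return cost, g


cost, g = pressure_vessel(ts=1.0, th=1.0, r=10.0, l=100.0)
print(cost, all(gi <= 0 for gi in g))  # the volume constraint is violated for this small vessel
```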

5.3 Structure Design of I-Beam

This design problem minimizes the vertical deflection of the I-beam, which requires determining structural parameters such as the length, height, and thickness. The model is as follows:

Consider

Objective:

Subject to

Variable range

Metaheuristic methods combined with the mathematical model above can be used to solve the I-beam design (IBD) problem. The compared metaheuristics include RCBA [59], WEMFO, SCA [6], CS [75], HBO [76], and CLSGMFO [26]. The constraints are handled by adding a penalty term to the objective (loss) function, and the same penalty function is used for all optimizers to ensure a fair comparison. The comparison results of ECSMA and the other optimizers are shown in Table 7.

Table 7 indicates that ECSMA outperforms the compared optimizers on the IBD problem and ultimately yields the most efficient design.
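The I-beam objective can likewise be sketched with the standard formulation from the literature, assumed here because the model equations did not survive extraction: the vertical deflection 5000 / (t_w(h − 2t_f)³/12 + b t_f³/6 + 2 b t_f((h − t_f)/2)²), subject to a cross-sectional area limit of 300. The stress constraint is omitted because its exact form in this paper is not recoverable; the function name and argument order are hypothetical.

```python
def ibeam(h, b, tw, tf):
    """Vertical deflection of the I-beam and its area constraint g(x) <= 0 (assumed form).

    h: height, b: flange width, tw: web thickness, tf: flange thickness.
    """
    web = h - 2 * tf                                   # web height between the flanges
    stiffness = (tw * web ** 3 / 12 + b * tf ** 3 / 6
                 + 2 * b * tf * ((h - tf) / 2) ** 2)
    deflection = 5000.0 / stiffness
    area = 2 * b * tf + tw * web                       # cross-sectional area
    return deflection, [area - 300.0]


d, g = ibeam(h=80.0, b=50.0, tw=0.9, tf=2.3)
print(f"deflection = {d:.6f}, area constraint = {g[0]:.3f}")
```

Larger heights and flange widths stiffen the beam and reduce the deflection, while the area limit caps the material, which is why good designs sit on the area constraint boundary.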

5.4 Cantilever Beam Design Problem

In this structural design problem, ECSMA is used to minimize the amount of material (weight) of the cantilever beam. The cantilever beam is composed of five hollow square blocks stacked together, and the inner diameter is arranged in increasing order. The mathematical model of the problem is as follows:

Consider

Minimize

Subject to

Variable range

ECSMA is applied to this optimization problem, and its results, together with those of CS, GCA_II [77], GCA_I [77], MMA [77], SOS, and SSA [71], are listed in Table 8.
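For reference, the standard cantilever beam formulation, assumed here because the model equations did not survive extraction, minimizes 0.0624(x1 + x2 + x3 + x4 + x5) subject to 61/x1³ + 37/x2³ + 19/x3³ + 7/x4³ + 1/x5³ ≤ 1. A minimal evaluation sketch (the function name is hypothetical):

```python
def cantilever(x):
    """Weight of the five-segment cantilever and its constraint g(x) <= 0 (assumed form)."""
    weight = 0.0624 * sum(x)
    g = (61 / x[0] ** 3 + 37 / x[1] ** 3 + 19 / x[2] ** 3
         + 7 / x[3] ** 3 + 1 / x[4] ** 3 - 1)
    return weight, g


w, g = cantilever([6.0, 5.3, 4.5, 3.5, 2.2])
print(f"weight = {w:.4f}, feasible = {g <= 0}")
```

The decreasing coefficients 61, 37, 19, 7, 1 reflect that segments nearer the support carry larger bending moments, so a good design tapers from x1 to x5.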

Table 8 indicates that ECSMA is more stable and effective than the compared methods and provides a more economical design. In the future, it may therefore also be applied to other fields, such as power flow optimization [78], road network planning [79], information retrieval services [80,81], human activity recognition [82], structured sparsity optimization [83], dynamic module detection [84,85], recommender systems [86,87], tensor completion [88], colorectal polyp region extraction [89], image-to-image translation [90], smart contract vulnerability detection [91], and medical data processing [92].

Finally, the results on four classical structural design problems demonstrate the feasibility and practicality of ECSMA. They also show that SMA-based methods can solve constrained problems and that ECSMA retains the advantages of SMA in this setting.

6 Conclusions and Future Works

In this study, ECSMA is designed to address the insufficient exploration and exploitation abilities of the original SMA. In ECSMA, the elite strategy strengthens the exploitation capability of SMA, and the chaotic stochastic strategy increases randomness to improve exploration during the early stage. Together, the two strategies give SMA a better balance between exploration and exploitation. The experimental results on the benchmark function set (including unimodal, multimodal, and fixed-dimension multimodal functions) show that the two strategies effectively improve function optimization, alleviate the premature convergence of SMA by helping it escape from local optima, and improve the accuracy and diversity of SMA. On the four structural design problems, the simulation results also demonstrate that ECSMA achieves more accurate results, which gives it practical value in real-world applications. However, the two added strategies inevitably increase the complexity of the algorithm, which may limit ECSMA in some scenarios.

In the future, GPU parallel approaches and multi-threaded parallel processing will be considered to solve more complex problems. In addition, given that SMA is a relatively new algorithm, its in-depth study and application in multiple disciplines still need to be fully explored.

Funding Statement: This work was supported in part by the National Natural Science Foundation of China (J2124006, 62076185).

Availability of Data and Materials: The data involved in this study are all public data, which can be downloaded through public channels.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Li, S., Chen, H., Wang, M., Heidari, A. A., Mirjalili, S. (2020). Slime mould algorithm: A new method for stochastic optimization. Future Generation Computer Systems, 111(Supplement C), 300–323. https://doi.org/10.1016/j.future.2020.03.055

2. Heidari, A. A., Mirjalili, S., Faris, H., Aljarah, I., Mafarja, M. et al. (2019). Harris hawks optimization: Algorithm and applications. Future Generation Computer Systems, 97, 849–872. https://doi.org/10.1016/j.future.2019.02.028

3. Chen, H., Jiao, S., Wang, M., Heidari, A. A., Zhao, X. (2019). Parameters identification of photovoltaic cells and modules using diversification-enriched Harris hawks optimization with chaotic drifts. Journal of Cleaner Production, 244, 118778. https://doi.org/10.1016/j.jclepro.2019.118778

4. Mirjalili, S., Mirjalili, S. M., Hatamlou, A. (2016). Multi-verse optimizer: A nature-inspired algorithm for global optimization. Neural Computing & Applications, 27(2), 495–513. https://doi.org/10.1007/s00521-015-1870-7

5. Del Valle, Y., Venayagamoorthy, G. K., Mohagheghi, S., Hernandez, J. C., Harley, R. G. (2008). Particle swarm optimization: Basic concepts, variants and applications in power systems. IEEE Transactions on Evolutionary Computation, 12(2), 171–195. https://doi.org/10.1109/TEVC.2007.896686

6. Mirjalili, S. (2016). SCA: A sine cosine algorithm for solving optimization problems. Knowledge-Based Systems, 96(63), 120–133. https://doi.org/10.1016/j.knosys.2015.12.022

7. Ji, Y., Tu, J., Zhou, H., Gui, W., Liang, G. et al. (2020). An adaptive chaotic sine cosine algorithm for constrained and unconstrained optimization. Complexity, 2020, 6084917. https://doi.org/10.1155/2020/6084917

8. Rashedi, E., Nezamabadi-Pour, H., Saryazdi, S. (2009). GSA: A gravitational search algorithm. Information Sciences, 179(13), 2232–2248. https://doi.org/10.1016/j.ins.2009.03.004

9. Ahmadianfar, I., Asghar Heidari, A., Noshadian, S., Chen, H., Gandomi, A. H. (2022). INFO: An efficient optimization algorithm based on weighted mean of vectors. Expert Systems with Applications, 195(12), 116516. https://doi.org/10.1016/j.eswa.2022.116516

10. Yang, Y., Chen, H., Heidari, A. A., Gandomi, A. H. (2021). Hunger games search: Visions, conception, implementation, deep analysis, perspectives, and towards performance shifts. Expert Systems with Applications, 177(8), 114864. https://doi.org/10.1016/j.eswa.2021.114864

11. Tu, J., Chen, H., Wang, M., Gandomi, A. H. (2021). The colony predation algorithm. Journal of Bionic Engineering, 18(3), 674–710. https://doi.org/10.1007/s42235-021-0050-y

12. Ahmadianfar, I., Asghar Heidari, A., Gandomi, A. H., Chu, X., Chen, H. (2021). RUN beyond the metaphor: An efficient optimization algorithm based on Runge Kutta method. Expert Systems with Applications, 181(21), 115079. https://doi.org/10.1016/j.eswa.2021.115079

13. Tu, J., Chen, H., Liu, J., Heidari, A. A., Zhang, X. et al. (2021). Evolutionary biogeography-based whale optimization methods with communication structure: Towards measuring the balance. Knowledge-Based Systems, 212(9), 106642. https://doi.org/10.1016/j.knosys.2020.106642

14. Song, S., Wang, P., Heidari, A. A., Wang, M., Zhao, X. et al. (2021). Dimension decided Harris hawks optimization with Gaussian mutation: Balance analysis and diversity patterns. Knowledge-Based Systems, 215(5), 106425. https://doi.org/10.1016/j.knosys.2020.106425

15. Zhao, D., Liu, L., Yu, F., Heidari, A. A., Wang, M. et al. (2022). Opposition-based ant colony optimization with all-dimension neighborhood search for engineering design. Journal of Computational Design and Engineering, 9(3), 1007–1044. https://doi.org/10.1093/jcde/qwac038

16. Li, C., Li, J., Chen, H., Heidari, A. A. (2021). Memetic Harris hawks optimization: Developments and perspectives on project scheduling and QoS-aware web service composition. Expert Systems with Applications, 171(5), 114529. https://doi.org/10.1016/j.eswa.2020.114529

17. Wei, Z., Liu, L., Kuang, F., Li, L., Xu, S. et al. (2022). An efficient multi-threshold image segmentation for skin cancer using boosting whale optimizer. Computers in Biology and Medicine, 151, 106227. https://doi.org/10.1016/j.compbiomed.2022.106227

18. Liu, L., Zhao, D., Yu, F., Heidari, A. A., Li, C. et al. (2021). Ant colony optimization with Cauchy and greedy Levy mutations for multilevel COVID 19 X-ray image segmentation. Computers in Biology and Medicine, 136(13), 104609. https://doi.org/10.1016/j.compbiomed.2021.104609

19. Zhao, D., Liu, L., Yu, F., Heidari, A. A., Wang, M. et al. (2021). Chaotic random spare ant colony optimization for multi-threshold image segmentation of 2D Kapur entropy. Knowledge-Based Systems, 216, 106510. https://doi.org/10.1016/j.knosys.2020.106510

20. Chen, C., Wang, X., Yu, H., Wang, M., Chen, H. (2021). Dealing with multi-modality using synthesis of Moth-flame optimizer with sine cosine mechanisms. Mathematics and Computers in Simulation, 188(3), 291–318. https://doi.org/10.1016/j.matcom.2021.04.006

21. Wu, S., Mao, P., Li, R., Cai, Z., Heidari, A. A. et al. (2021). Evolving fuzzy k-nearest neighbors using an enhanced sine cosine algorithm: Case study of lupus nephritis. Computers in Biology and Medicine, 135(5), 104582. https://doi.org/10.1016/j.compbiomed.2021.104582

22. Deng, W., Xu, J., Zhao, H., Song, Y. (2020). A novel gate resource allocation method using improved PSO-based QEA. IEEE Transactions on Intelligent Transportation Systems, 23(3), 1737–1745. https://doi.org/10.1109/TITS.2020.3025796

23. Deng, W., Xu, J., Song, Y., Zhao, H. (2020). An effective improved co-evolution ant colony optimisation algorithm with multi-strategies and its application. International Journal of Bio-Inspired Computation, 16(3), 158–170. https://doi.org/10.1504/IJBIC.2020.111267

24. Hu, J., Gui, W., Heidari, A. A., Cai, Z., Liang, G. et al. (2022). Dispersed foraging slime mould algorithm: Continuous and binary variants for global optimization and wrapper-based feature selection. Knowledge-Based Systems, 237(5), 107761. https://doi.org/10.1016/j.knosys.2021.107761

25. Liu, Y., Heidari, A. A., Cai, Z., Liang, G., Chen, H. et al. (2022). Simulated annealing-based dynamic step shuffled frog leaping algorithm: Optimal performance design and feature selection. Neurocomputing, 503(6), 325–362. https://doi.org/10.1016/j.neucom.2022.06.075

26. Xu, Y., Chen, H., Heidari, A. A., Luo, J., Zhang, Q. et al. (2019). An efficient chaotic mutative moth-flame-inspired optimizer for global optimization tasks. Expert Systems with Applications, 129, 135–155. https://doi.org/10.1016/j.eswa.2019.03.043

27. Zhang, Y., Liu, R., Heidari, A. A., Wang, X., Chen, Y. et al. (2021). Towards augmented kernel extreme learning models for bankruptcy prediction: Algorithmic behavior and comprehensive analysis. Neurocomputing, 430(4), 185–212. https://doi.org/10.1016/j.neucom.2020.10.038

28. Wu, S. H., Zhan, Z. H., Zhang, J. (2021). SAFE: Scale-adaptive fitness evaluation method for expensive optimization problems. IEEE Transactions on Evolutionary Computation, 25(3), 478–491. https://doi.org/10.1109/TEVC.2021.3051608

29. Li, J. Y., Zhan, Z. H., Wang, C., Jin, H., Zhang, J. (2020). Boosting data-driven evolutionary algorithm with localized data generation. IEEE Transactions on Evolutionary Computation, 24(5), 923–937. https://doi.org/10.1109/TEVC.2020.2979740

30. Hussien, A. G., Heidari, A. A., Ye, X., Liang, G. et al. (2022). Boosting whale optimization with evolution strategy and Gaussian random walks: An image segmentation method. Engineering with Computers, 12(6), 702. https://doi.org/10.1007/s00366-021-01542-0

31. Yu, H., Song, J., Chen, C., Heidari, A. A., Liu, J. et al. (2022). Image segmentation of leaf spot diseases on maize using multi-stage Cauchy-enabled grey wolf algorithm. Engineering Applications of Artificial Intelligence, 109(12), 104653. https://doi.org/10.1016/j.engappai.2021.104653

32. He, Z., Yen, G. G., Ding, J. (2020). Knee-based decision making and visualization in many-objective optimization. IEEE Transactions on Evolutionary Computation, 25(2), 292–306. https://doi.org/10.1109/TEVC.2020.3027620

33. He, Z., Yen, G. G., Lv, J. (2019). Evolutionary multiobjective optimization with robustness enhancement. IEEE Transactions on Evolutionary Computation, 24(3), 494–507. https://doi.org/10.1109/TEVC.2019.2933444

34. Ye, X., Liu, W., Li, H., Wang, M., Chi, C. et al. (2021). Modified whale optimization algorithm for solar cell and PV module parameter identification. Complexity, 2021, 8878686. https://doi.org/10.1155/2021/8878686

35. Song, Y., Cai, X., Zhou, X., Zhang, B., Chen, H. et al. (2023). Dynamic hybrid mechanism-based differential evolution algorithm and its application. Expert Systems with Applications, 213(1), 118834. https://doi.org/10.1016/j.eswa.2022.118834

36. Deng, W., Zhang, X., Zhou, Y., Liu, Y., Zhou, X. et al. (2022). An enhanced fast non-dominated solution sorting genetic algorithm for multi-objective problems. Information Sciences, 585(5), 441–453. https://doi.org/10.1016/j.ins.2021.11.052

37. Hua, Y., Liu, Q., Hao, K., Jin, Y. (2021). A survey of evolutionary algorithms for multi-objective optimization problems with irregular pareto fronts. IEEE/CAA Journal of Automatica Sinica, 8(2), 303–318. https://doi.org/10.1109/JAS.2021.1003817

38. Deng, W., Ni, H., Liu, Y., Chen, H., Zhao, H. (2022). An adaptive differential evolution algorithm based on belief space and generalized opposition-based learning for resource allocation. Applied Soft Computing, 127(24), 109419. https://doi.org/10.1016/j.asoc.2022.109419

39. Han, X., Han, Y., Chen, Q., Li, J., Sang, H. et al. (2021). Distributed flow shop scheduling with sequence-dependent setup times using an improved iterated greedy algorithm. Complex System Modeling and Simulation, 1(3), 198–217. https://doi.org/10.23919/CSMS.2021.0018

40. Gao, D., Wang, G. G., Pedrycz, W. (2020). Solving fuzzy job-shop scheduling problem using DE algorithm improved by a selection mechanism. IEEE Transactions on Fuzzy Systems, 28(12), 3265–3275. https://doi.org/10.1109/TFUZZ.2020.3003506

41. Wang, G. G., Gao, D., Pedrycz, W. (2022). Solving multiobjective fuzzy job-shop scheduling problem by a hybrid adaptive differential evolution algorithm. IEEE Transactions on Industrial Informatics, 18(12), 8519–8528. https://doi.org/10.1109/TII.2022.3165636

42. Chen, L. H., Yang, B., Wang, S. J., Wang, G., Li, H. Z. et al. (2014). Towards an optimal support vector machine classifier using a parallel particle swarm optimization strategy. Applied Mathematics and Computation, 239(3), 180–197. https://doi.org/10.1016/j.amc.2014.04.039

43. Wang, M., Chen, H., Yang, B., Zhao, X., Hu, L. et al. (2017). Toward an optimal kernel extreme learning machine using a chaotic moth-flame optimization strategy with applications in medical diagnoses. Neurocomputing, 267(1–3), 69–84. https://doi.org/10.1016/j.neucom.2017.04.060

44. Chen, H. L., Wang, G., Ma, C., Cai, Z. N., Liu, W. B. et al. (2016). An efficient hybrid kernel extreme learning machine approach for early diagnosis of Parkinson’s disease. Neurocomputing, 184(6), 131–144. https://doi.org/10.1016/j.neucom.2015.07.138

45. Deng, W., Xu, J., Gao, X. Z., Zhao, H. (2022). An enhanced MSIQDE algorithm with novel multiple strategies for global optimization problems. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 52(3), 1578–1587. https://doi.org/10.1109/TSMC.2020.3030792

46. Ebadinezhad, S. (2020). DEACO: Adopting dynamic evaporation strategy to enhance ACO algorithm for the traveling salesman problem. Engineering Applications of Artificial Intelligence, 92(1), 103649. https://doi.org/10.1016/j.engappai.2020.103649

47. Chen, H., Wang, M., Zhao, X. (2020). A multi-strategy enhanced sine cosine algorithm for global optimization and constrained practical engineering problems. Applied Mathematics and Computation, 369(4), 124872. https://doi.org/10.1016/j.amc.2019.124872

48. Guo, W., Liu, T., Dai, F., Xu, P. (2020). An improved whale optimization algorithm for forecasting water resources demand. Applied Soft Computing, 86, 105925. https://doi.org/10.1016/j.asoc.2019.105925

49. Jiang, J., Yang, X., Meng, X., Li, K. (2020). Enhance chaotic gravitational search algorithm (CGSA) by balance adjustment mechanism and sine randomness function for continuous optimization problems. Physica A: Statistical Mechanics and its Applications, 537(96), 122621. https://doi.org/10.1016/j.physa.2019.122621

50. Javidi, A., Salajegheh, E., Salajegheh, J. (2019). Enhanced crow search algorithm for optimum design of structures. Applied Soft Computing, 77, 274–289. https://doi.org/10.1016/j.asoc.2019.01.026

51. Tawhid, M. A., Dsouza, K. B. (2018). Hybrid binary dragonfly enhanced particle swarm optimization algorithm for solving feature selection problems. Mathematical Foundations of Computing, 1(2), 181–200. https://doi.org/10.3934/mfc.2018009

52. Luo, Q., Yang, X., Zhou, Y. (2019). Nature-inspired approach: An enhanced moth swarm algorithm for global optimization. Mathematics and Computers in Simulation, 159(1), 57–92. https://doi.org/10.1016/j.matcom.2018.10.011

53. Sulaiman, N., Mohamad-Saleh, J., Abro, A. G. (2018). A hybrid algorithm of ABC variant and enhanced EGS local search technique for enhanced optimization performance. Engineering Applications of Artificial Intelligence, 74, 10–22. https://doi.org/10.1016/j.engappai.2018.05.002

54. Zhou, X., Lu, J., Huang, J., Zhong, M., Wang, M. (2021). Enhancing artificial bee colony algorithm with multi-elite guidance. Information Sciences, 543(2), 242–258. https://doi.org/10.1016/j.ins.2020.07.037

55. Cai, Z., Gu, J., Luo, J., Zhang, Q., Chen, H. et al. (2019). Evolving an optimal kernel extreme learning machine by using an enhanced grey wolf optimization strategy. Expert Systems with Applications, 138, 112814. https://doi.org/10.1016/j.eswa.2019.07.031

56. Heidari, A. A., Ali Abbaspour, R., Chen, H. (2019). Efficient boosted grey wolf optimizers for global search and kernel extreme learning machine training. Applied Soft Computing, 81(13), 105521. https://doi.org/10.1016/j.asoc.2019.105521

57. Yousri, D., Allam, D., Eteiba, M. B. (2019). Chaotic whale optimizer variants for parameters estimation of the chaotic behavior in permanent magnet synchronous motor. Applied Soft Computing, 74(1), 479–503. https://doi.org/10.1016/j.asoc.2018.10.032

58. Tubishat, M., Abushariah, M. A. M., Idris, N., Aljarah, I. (2019). Improved whale optimization algorithm for feature selection in Arabic sentiment analysis. Applied Intelligence, 49(5), 1688–1707. https://doi.org/10.1007/s10489-018-1334-8

59. Liang, H., Liu, Y., Shen, Y., Li, F., Man, Y. (2018). A hybrid bat algorithm for economic dispatch with random wind power. IEEE Transactions on Power Systems, 33(5), 5052–5061. https://doi.org/10.1109/TPWRS.2018.2812711

60. Li, H. W., Liu, J. Y., Chen, L., Bai, J. B., Sun, Y. Y. et al. (2019). Chaos-enhanced moth-flame optimization algorithm for global optimization. Journal of Systems Engineering and Electronics, 30(6), 1144–1159. https://doi.org/10.21629/JSEE.2019.06.10

61. Zhang, J., Sanderson, A. C. (2009). JADE: Adaptive differential evolution with optional external archive. IEEE Transactions on Evolutionary Computation, 13(5), 945–958. https://doi.org/10.1109/TEVC.2009.2014613

62. Chen, W., Zhang, J., Lin, Y., Chen, N., Zhan, Z. et al. (2013). Particle swarm optimization with an aging leader and challengers. IEEE Transactions on Evolutionary Computation, 17(2), 241–258. https://doi.org/10.1109/TEVC.2011.2173577

63. Yang, X. S. (2010). A new metaheuristic bat-inspired algorithm. In: Studies in Computational Intelligence, pp. 65–74. Berlin, Heidelberg: Springer.

64. Qin, A. K., Huang, V. L., Suganthan, P. N. (2009). Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Transactions on Evolutionary Computation, 13(2), 398–417. https://doi.org/10.1109/TEVC.2008.927706

65. Mirjalili, S., Lewis, A. (2016). The whale optimization algorithm. Advances in Engineering Software, 95(12), 51–67. https://doi.org/10.1016/j.advengsoft.2016.01.008

66. Mirjalili, S., Mirjalili, S. M., Lewis, A. (2014). Grey wolf optimizer. Advances in Engineering Software, 69, 46–61. https://doi.org/10.1016/j.advengsoft.2013.12.007

67. Wu, G., Mallipeddi, R., Suganthan, P. N., Wang, R., Chen, H. (2016). Differential evolution with multi-population based ensemble of mutation strategies. Information Sciences, 329, 329–345. https://doi.org/10.1016/j.ins.2015.09.009

68. Mallipeddi, R., Suganthan, P. N., Pan, Q. K., Tasgetiren, M. F. (2011). Differential evolution algorithm with ensemble of parameters and mutation strategies. Applied Soft Computing, 11(2), 1679–1696. https://doi.org/10.1016/j.asoc.2010.04.024

69. Tanabe, R., Fukunaga, A. S. (2014). Improving the search performance of SHADE using linear population size reduction. In: 2014 IEEE Congress on Evolutionary Computation (CEC), pp. 1658–1665. Beijing, China.

70. Awad, N. H., Ali, M. Z., Suganthan, P. N. (2017). Ensemble sinusoidal differential covariance matrix adaptation with Euclidean neighborhood for solving CEC2017 benchmark problems. In: 2017 IEEE Congress on Evolutionary Computation (CEC), pp. 372–379. Donostia, Spain.

71. Mirjalili, S., Gandomi, A. H., Mirjalili, S. Z., Saremi, S., Faris, H. et al. (2017). Salp swarm algorithm: A bio-inspired optimizer for engineering design problems. Advances in Engineering Software, 114, 163–191. https://doi.org/10.1016/j.advengsoft.2017.07.002

72. Dorigo, M. (1992). Optimization, learning and natural algorithms (Ph.D. Thesis). Politecnico di Milano.

73. Dorigo, M., Di Caro, G. (1999). The ant colony optimization meta-heuristic. In: New ideas in optimization, pp. 11–32. UK: McGraw-Hill, Ltd.

74. Mirjalili, S. (2015). Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowledge-Based Systems, 89, 228–249. https://doi.org/10.1016/j.knosys.2015.07.006

75. Yang, X. S., Deb, S. (2009). Cuckoo search via Lévy flights. In: 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), pp. 210–214. Coimbatore, India.

76. Cheng, M. Y., Prayogo, D. (2014). Symbiotic organisms search: A new metaheuristic optimization algorithm. Computers & Structures, 139, 98–112. https://doi.org/10.1016/j.compstruc.2014.03.007

77. Chickermane, H., Gea, H. C. (1996). Structural optimization using a new local approximation method. International Journal for Numerical Methods in Engineering, 39, 829–846. https://doi.org/10.1002/(ISSN)1097-0207

78. Cao, X., Wang, J., Zeng, B. (2022). A study on the strong duality of second-order conic relaxation of AC optimal power flow in radial networks. IEEE Transactions on Power Systems, 37(1), 443–455. https://doi.org/10.1109/TPWRS.2021.3087639

79. Huang, L., Yang, Y., Chen, H., Zhang, Y., Wang, Z. et al. (2022). Context-aware road travel time estimation by coupled tensor decomposition based on trajectory data. Knowledge-Based Systems, 245(6), 108596. https://doi.org/10.1016/j.knosys.2022.108596

80. Wu, Z., Li, R., Xie, J., Zhou, Z., Guo, J. et al. (2020). A user sensitive subject protection approach for book search service. Journal of the Association for Information Science and Technology, 71(2), 183–195. https://doi.org/10.1002/asi.24227

81. Wu, Z., Shen, S., Zhou, H., Li, H., Lu, C. et al. (2021). An effective approach for the protection of user commodity viewing privacy in e-commerce website. Knowledge-Based Systems, 220(2), 106952. https://doi.org/10.1016/j.knosys.2021.106952

82. Qiu, S., Zhao, H., Jiang, N., Wang, Z., Liu, L. et al. (2022). Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Information Fusion, 80(2), 241–265. https://doi.org/10.1016/j.inffus.2021.11.006

83. Zhang, X., Zheng, J., Wang, D., Tang, G., Zhou, Z. et al. (2022). Structured sparsity optimization with non-convex surrogates of ℓ2,0-norm: A unified algorithmic framework. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1–18. https://doi.org/10.1109/TPAMI.2022.3213716

84. Li, D., Zhang, S., Ma, X. (2021). Dynamic module detection in temporal attributed networks of cancers. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 19(4), 2219–2230. https://doi.org/10.1109/TCBB.2021.3069441

85. Ma, X., Sun, P. G., Gong, M. (2020). An integrative framework of heterogeneous genomic data for cancer dynamic modules based on matrix decomposition. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 19(1), 305–316. https://doi.org/10.1109/TCBB.2020.3004808

86. Li, J., Chen, C., Chen, H., Tong, C. (2017). Towards context-aware social recommendation via individual trust. Knowledge-Based Systems, 127(8), 58–66. https://doi.org/10.1016/j.knosys.2017.02.032

87. Li, J., Zheng, X. L., Chen, S. T., Song, W. W., Chen, D. R. (2014). An efficient and reliable approach for quality-of-service-aware service composition. Information Sciences, 269(3), 238–254. https://doi.org/10.1016/j.ins.2013.12.015

88. Wang, W., Zheng, J., Zhao, L., Chen, H., Zhang, X. (2022). A non-local tensor completion algorithm based on weighted tensor nuclear norm. Electronics, 11(19), 3250. https://doi.org/10.3390/electronics11193250

89. Hu, K., Zhao, L., Feng, S., Zhang, S., Zhou, Q. et al. (2022). Colorectal polyp region extraction using saliency detection network with neutrosophic enhancement. Computers in Biology and Medicine, 147(10), 105760. https://doi.org/10.1016/j.compbiomed.2022.105760

90. Zhang, X., Fan, C., Xiao, Z., Zhao, L., Chen, H. et al. (2022). Random reconstructed unpaired image-to-image translation. IEEE Transactions on Industrial Informatics, 1. https://doi.org/10.1109/TII.2022.3160705

91. Zhang, L., Wang, J., Wang, W., Jin, Z., Su, Y. et al. (2022). Smart contract vulnerability detection combined with multi-objective detection. Computer Networks, 217(10), 109289. https://doi.org/10.1016/j.comnet.2022.109289

92. Guo, K., Chen, T., Ren, S., Li, N., Hu, M. et al. (2022). Federated learning empowered real-time medical data processing method for smart healthcare. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 1–12. https://doi.org/10.1109/TCBB.2022.3185395

Appendix

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.