Open Access

ARTICLE

Easy to Calibrate: Marker-Less Calibration of Multiview Azure Kinect

1 Department of Liberal Arts, Hongik University, Sejong, 30016, Korea

2 Department of Computer Science and RINS, Gyeongsang National University, Jinju-si, 52828, Korea

* Corresponding Author: Suwon Lee. Email:

(This article belongs to the Special Issue: Application of Computer Tools in the Study of Mathematical Problems)

Computer Modeling in Engineering & Sciences 2023, 136(3), 3083-3096. https://doi.org/10.32604/cmes.2023.024460

Received 31 May 2022; Accepted 22 November 2022; Issue published 09 March 2023

Abstract

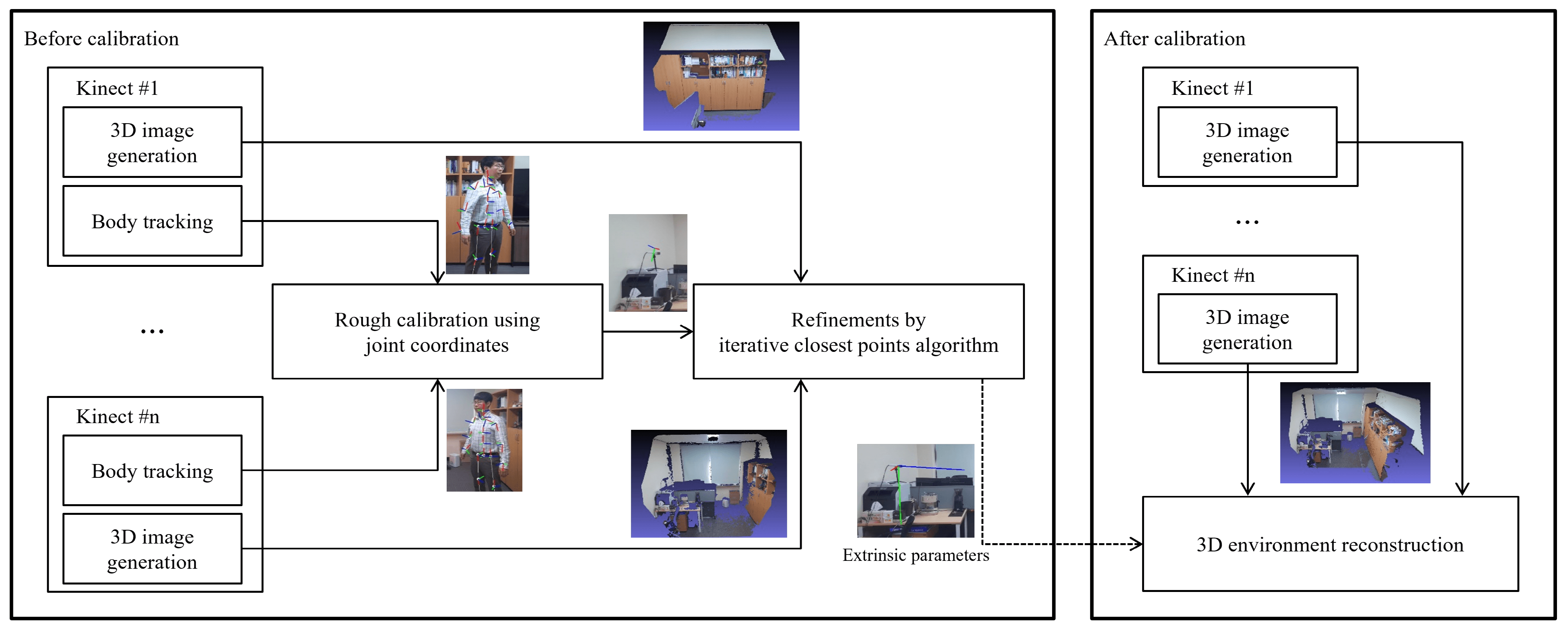

Reconstructing a three-dimensional (3D) environment is an indispensable technique for making augmented reality and augmented virtuality feasible. A Kinect device is an efficient tool for reconstructing 3D environments, and using multiple Kinect devices increases reconstruction density and expands the virtual space. To use multiple devices simultaneously, the Kinect devices must be calibrated with respect to each other. Several schemes exist for calibrating the 3D images generated by multiple Kinect devices, including the marker detection method. In this study, we introduce a markerless calibration technique for Azure Kinect devices that avoids the drawbacks of marker detection, which directly affects calibration accuracy; the proposed technique offers superior user-friendliness, efficiency, and accuracy. We apply a joint tracking algorithm to obtain an approximate calibration. Traditional methods require information from multiple joints for calibration; with Azure Kinect, the latest version of Kinect, information from only one joint is required. The approximate result is then refined using the iterative closest point algorithm. Several experimental tests confirmed that the proposed method is more efficient and accurate for multiple Kinect devices than conventional marker-based calibration.
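The abstract does not spell out the computation, so the following is only a minimal sketch of the two stages it names, not the authors' implementation. It assumes that each camera reports the tracked joint's position and orientation (as a 3x3 rotation matrix) in its own coordinate frame, and that the point clouds are small enough for a brute-force nearest-neighbour search. All function names (`transform_from_joint`, `best_rigid_fit`, `icp_refine`) are hypothetical.

```python
import numpy as np

def transform_from_joint(R_a, t_a, R_b, t_b):
    """Approximate calibration from ONE joint seen by two cameras.

    (R_a, t_a) and (R_b, t_b) are the joint's orientation and position
    in camera A and camera B coordinates (an assumption of this sketch).
    Returns (R, t) such that p_a = R @ p_b + t.
    """
    R = R_a @ R_b.T
    t = t_a - R @ t_b
    return R, t

def best_rigid_fit(src, dst):
    """Least-squares rigid transform for paired Nx3 points (Kabsch method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection in the SVD solution.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp_refine(src, dst, R, t, iterations=20):
    """Point-to-point ICP starting from the approximate (R, t).

    src: Nx3 points from camera B, dst: Mx3 points from camera A.
    Uses brute-force nearest neighbours, which is only practical
    for small clouds; a k-d tree would replace it in practice.
    """
    for _ in range(iterations):
        moved = src @ R.T + t
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = dst[d2.argmin(axis=1)]
        R, t = best_rigid_fit(src, nearest)
    return R, t
```

The joint-based estimate only needs to be close enough for the nearest-neighbour matches in `icp_refine` to be mostly correct; the refinement then absorbs the remaining error from joint tracking noise.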

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
