Open Access

ARTICLE

UOUU: User-Object Distance and User-User Distance Combined Method for Collaboration Task

College of Computer Science and Technology, Zhejiang University, Hangzhou, 310000, China

* Corresponding Author: Pengfei Wang. Email:

(This article belongs to the Special Issue: Recent Advances in Virtual Reality)

Computer Modeling in Engineering & Sciences 2023, 136(3), 3213-3238. https://doi.org/10.32604/cmes.2023.023895

Received 17 May 2022; Accepted 21 November 2022; Issue published 09 March 2023

Abstract

Augmented reality superimposes digital information onto objects in the physical world and enables multi-user collaboration. Previous proxemic interaction research has explored many applications of user-object distance and user-user distance in augmented reality contexts, but it has treated the two separately. Combining both types of distance can improve the efficiency of users’ perception of, and interaction with, task objects and collaborators by giving users insight into user-object and user-user spatial relations. Yet little attention has been paid to how the two types of distance can be adopted simultaneously to assist collaboration tasks across multiple users. To fill this gap, we present UOUU, a method that combines user-object distance and user-user distance to dynamically assign tasks across users. We conducted empirical studies to investigate how the method affected user collaboration tasks in terms of collaboration occurrence and overall task efficiency. The results show that the method significantly improves the speed and accuracy of the collaboration tasks as well as the frequency of collaboration occurrences. The study also confirms the method’s effect on stimulating collaboration activities, as the UOUU method effectively reduced the participants’ perceived workload and the overall moving distances during the tasks. Implications for generalising the use of the method are discussed.

Keywords

Augmented reality (AR) superimposes virtual information on real-world objects [1], shaping an augmented space that allows users to interact with virtual-real hybrid objects [2]. The augmented space has several advantages, e.g., personalised information display according to user-object distances and user-user distances [3]. These distances are used to reflect users’ interaction status and indicate their intention to interact. For example, when a user moves physically closer to an exhibit, she probably has a strong interest in it [4]. In previous studies on proxemic interaction, the spatial distance between a user and her surrounding physical artifacts (e.g., a monitor) is referred to as the user-object distance (UOD) [5,6], and the spatial distance between one user and another is referred to as the user-user distance (UUD) [7,8]. Both distances act as an interaction modality that effectively mediates user-object and user-user interactions [9]. The processing of these distances has been implemented through task offloading algorithms in edge computing [10]. Previous studies have explored the design and application of each type of distance in various contexts, e.g., large advertising displays, fine art galleries, and video games [11,12]. However, much less attention has been paid to how the UOD and UUD can be combined into a method that facilitates collaboration tasks across multiple users in augmented reality.

Given the benefits of multi-user collaboration in augmented reality, the UOD and UUD have become increasingly important topics. For example, UOD eliminates users’ cognitive load in user-screen information browsing tasks [13], and UUD mediates interpersonal communication and collaboration [14]. Although previous research has explored the design and applications of the UOD and UUD individually, the two distances remain separate concepts. Specifically, UOD-based interaction focuses strongly on user-object interaction, whereas UUD-based interaction places more emphasis on interactive activities across users. Each concept has its own pros and cons. For example, the UOD keeps cognitive load low but discourages users’ willingness to collaborate, and the UUD requires users to learn and memorise task execution steps in advance. This centralised task learning effort reduces users’ start-up efficiency and increases task errors [15]. In this regard, neither the UOD nor the UUD alone is sufficient to support complicated collaboration tasks.

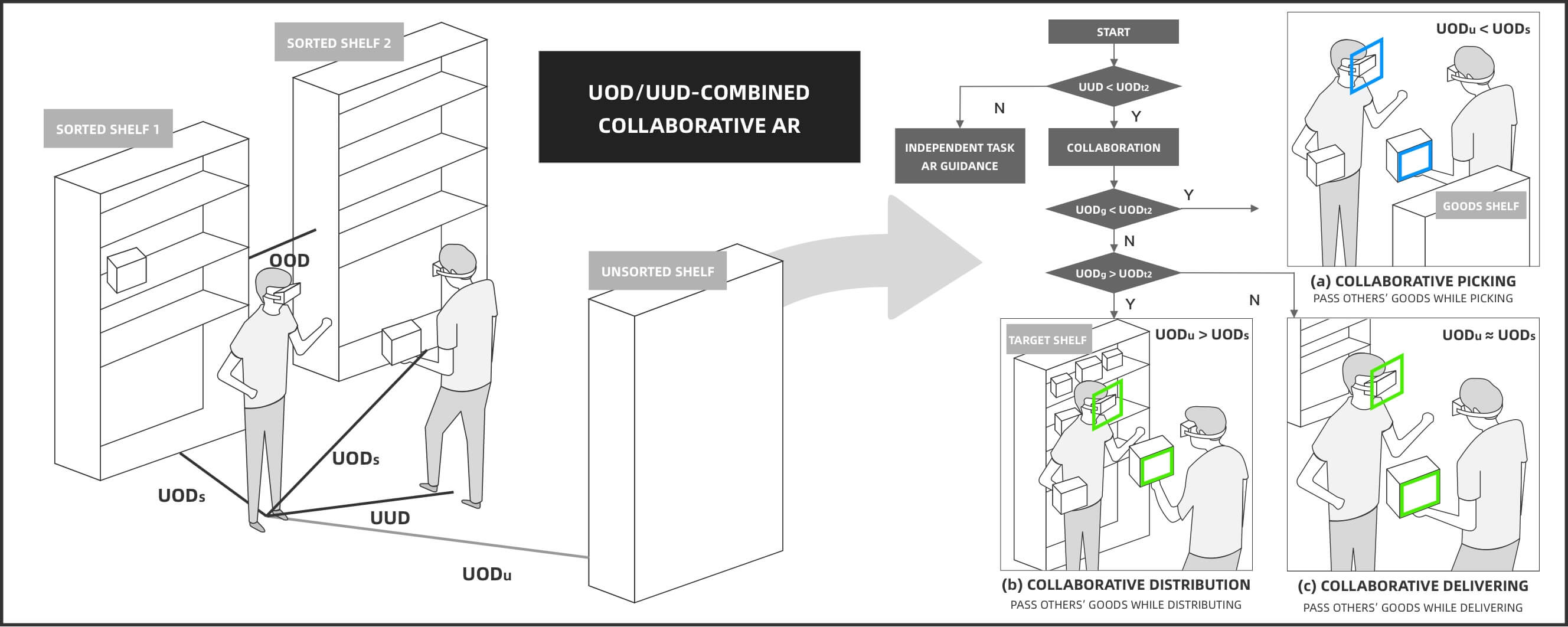

To understand how the UOD and UUD can be simultaneously adopted to assist collaboration tasks, the paper presents UOUU, a UOD and UUD combined collaboration method that is specialised for distributing collaboration tasks across users. The method measures the UOD and UUD during collaboration tasks, and displays corresponding user interfaces driven by task assignment mechanisms that determine when and where to assign collaborative tasks to which user. For example, when the UUD decreases, the users are to be assigned more collaboration tasks to support each other, which maintains task operation efficiency. We conducted empirical studies to investigate how the UOUU method affected multi-user collaboration in an augmented reality-based parcel sorting task.

The main contributions of this paper are two-fold. Firstly, it proposes the UOUU method that integrates the UOD and UUD to enable dynamic collaboration task assignment in an augmented reality context. Unlike the conventional studies that use the UOD or UUD as a separate method, the proposed method enables simultaneous use of the two types of distances. Secondly, it adds a new understanding of how the proposed method influences multi-user collaboration in augmented reality. It assesses the role of combined distances in terms of collaboration occurrences and efficiency.

The remainder of the paper is organised as follows. Section 2 reviews the literature on UOD and UUD in proxemic interaction and on augmented reality collaboration. Section 3 describes the details of the UOUU method. Section 4 explains the design and evaluation of the method. Section 5 analyses the experiment results. Section 6 discusses the study findings and their implications for generalisation, and Section 7 concludes the study.

2.1 User-Object Distance and User-User Distance in Proxemic Interaction

Proxemic interaction involves multiple factors such as distance, position, direction, and motion [6]. Two specific types of distance are frequently considered in both single-user and multi-user interaction. User-object distance (UOD) is the horizontal distance between a user and a target object, lying on a circular plane [16]. Research on UOD explores how spatial relations between users and artifacts influence their interaction [17]. User-user distance (UUD) is the spatial distance between one user and another. It is often regarded as a medium for interpreting collaboration based on people’s different spatial requirements in proxemics [18]. According to the theory of human spatial relations that originated from Hall’s concept of proxemics, these spatial relations are categorised into a variety of invisible zones [19]. Kendon’s theory of the F-formation system discussed distance by articulating patterns of human behaviour in different spatial contexts [20]. Distance-driven interaction is regarded as a natural interaction method that responds to users’ behaviours, as it draws on what we have already experienced in the real world (e.g., we move closer to a target when we want to observe it in more detail).

The UOD is regarded as an effective modality for revealing users’ attention and enhancing engagement. Research on UOD has attempted to infer users’ attention to a screen. A shorter UOD has been taken to suggest an increase in user attention [21]. Researchers detected the UOD between the user and screens to analyse the user’s pointing direction and attention to the screen [22]. UOD also encourages users to interact more with screens. For example, generating pattern variations based on the UOD between the user and a large cylindrical display encouraged users’ sustained interaction with the screen [23]. To facilitate interaction with maps on a large screen, zooming in and out of a map was controlled by decreasing and increasing the UOD between the user and the screen [24]. Despite these benefits, UOD focuses only on a single user and objects and cannot deal with user-user interaction during collaboration tasks.

UUD variations such as proximity and remoteness between users affect the performance of collaborative tasks [25]. UUD has been used as a parameter to articulate interpersonal relations in cross-device systems [26]. It is often used with large displays to identify what users are doing and their territories during collaboration [27]. Dostal et al. proposed a system for a large display in which the UUD controlled the zooming of multiple maps, so as to support collaborative work and improve users’ perception of the data set [28]. Shared AR content with location enabled users to build interdependencies in collaboration, and the UUD to the content publisher made the content more attractive [29]. UUD-mediated interaction gives insight into interpersonal relations but lacks focus on performing tasks on different objects. Understanding user-user relations in isolation can guide users to start collaborating. However, it is insufficient for understanding when collaboration is efficient and how to collaborate in different task stages, as these issues are tied to user-object spatial relations when task operation requires continuous movement.

Previous research has adopted the UOD and UUD separately in various interaction applications. However, these applications usually helped users understand their relation with either task objects or collaborators rather than both, so users cannot directly adopt the guidance given by the method in collaboration [30]. Users need to pay attention to the other kind of distance themselves and integrate it with the system’s guidance to perform tasks, which hinders normal user behaviours [15]. Another concern is how to respond to changes in both UOD and UUD to enhance collaboration task performance [21]. Combining the two types of distance for analysis can therefore provide direct task guidance and reduce the additional cognitive load generated by perceiving the other spatial relation, which makes it a promising way to coordinate collaborative interactions and improve collaboration efficiency.

2.2 Collaboration in Augmented Reality

Augmented Reality (AR) integrates virtual objects and real environments and supports real-time interaction [1]. The hybrid space extends users’ perception of the real world in a context-sensitive manner and reduces the need to interpret data and patterns, which also provides more flexibility for multi-user collaboration [31]. AR presents shared information in a real environment, which allows communication and interaction among co-located users and provides an intuitive interface for collaboration [32]. In collaborative work, AR improved users’ perception of non-verbal information [33]. Despite increasing research interest in augmented reality techniques and collaboration applications, how the distances could be seamlessly combined and implemented to mediate augmented reality-based collaboration remains unclear [34].

Previous research in AR explored the collaborative observation, creation, and modification of virtual information and collaborators’ information sharing and receiving. In an application of information editing, a study transformed sound data into 3D stereo waveforms and superimposed it in the real world, enabling multi-users to view and modify the sound by altering 3D models of stereo waveform through hand gestures [35]. In AR collaboration for observing 3D models, context switching between the interface and the real environment increased the user’s workload. The number of collaboration occurrences decreased as the complexity of 3D models in AR increased [36]. In an application of information sharing, a 360 degree AR projection allows users to view and annotate real-time video streams and supports the ability to project them in the real environment [37]. Remote experts can be superimposed on the environment around a local worker as a virtual avatar. Sharing the gaze and posture of the remote expert enhanced communication and collaboration [38]. Furthermore, the virtual-physical hybrid space is also effective in reducing users’ cognitive loads by minimizing context switching [39].

Although there are many applications of AR based multi-user collaboration, the current interaction modalities and user interface paradigms are mainly derived from the conventional desktop metaphor, which is largely optimised for the individual user. This inspires us to consider the spatial characteristics of AR. And as distance-based interaction has been validated as an efficient spatial interaction modality, we combined them to further improve AR’s capability to support collaborative tasks.

2.3 Lessons Learned and Hypothesis Development

The review confirms that UOD acts as a spatial interaction medium that improves perception efficiency by driving decentralised task execution within users’ existing experiences. UUD represents the development of user-user relationships in group interactions, and its variations in shape and size symbolise users’ collaborative intentions, such as synergistic and dyadic relationships. However, each of these distances alone lacks a focus on either the task object or the collaborator. UOUU, which combines the two distances, is therefore a promising method for leveraging both relations to address the challenges of when and how to collaborate in a collaborative task. AR provides users with a shared hybrid environment that maps virtual information to the real world, which allows users to focus more on group communication and task operation. Therefore, integrating UOD and UUD in AR allows for natural and efficient interaction in both task operation and collaboration. The current key research challenge is how to combine UOD and UUD to achieve more effective collaboration in AR.

Given the above understanding, we develop hypotheses as follows:

• H0: The UOD and UUD combined collaboration is not improved over the use of isolated UOD or UUD.

• H1: The UOUU improves participants’ collaboration occurrences.

• H2: The UOUU improves overall task efficiency.

3 UOD and UUD Combined Collaboration Method (UOUU)

In face-to-face collaboration, there are tasks that require users to frequently traverse between locations of objects and users. Production pipelines in the industry have been proven to improve task efficiency. Inspired by this, the production pipeline formed by assigning different task steps to different users based on spatial relations can reduce user’s movement and task context switching and improve efficiency. However, different from the normal production pipeline, when a user needs to move frequently during the interaction, it is difficult to distribute a fixed flow for user collaboration. How to dynamically allocate task steps to the specific user requires analysis of spatial relations between task objects and users.

UOUU instructs users to collaborate or work independently from a combined analysis of UOD and UUD, assigns optimal task flow guidance and forms a dynamic production line. The details of the method design are described as follows.

3.1 User-Object Distance Model

In a task with multiple objects, the UOD between the user and each object changes with the different parts of the task. The UOD thus indicates the current part of the task and the user’s interaction intention. Interaction based on UOD has been reported to improve users’ cognitive efficiency [40]. Therefore, UOUU provides operation guidance gradually during the task as the UOD changes, instead of relying on centralised pre-learning. The thresholds of this interaction scope are designed according to the user egocentric interaction model, whose guidance gradually turns from inattention to recognition, observation, and operation [39]. The design of the corresponding interface varies according to task characteristics; a set of examples is shown at the bottom of Fig. 1.

Figure 1: The distances in user-object interaction

This model divides the UOD into 4 different distance ranges:

• 0 to 0.6 m is operator distance (Dop), in which the users can directly touch task objects.

• 0.6 to 1.2 m is observer distance (Dob), in which the task object is the main object in the user’s field of vision.

• 1.2 to 2.4 m is passerby distance (Dp), in which the user may not identify the task objects.

• 2.4 m and above is world distance (Dw), in which the task object is part of the world environment and is hard to identify.

UOUU adapts its guidance to the distance range. At Dop, it guides the user to operate the task on the object with visual cues of operation gestures. At Dob, it guides the user to observe the object by presenting corresponding text and patterns. At Dp, it reminds the user with a visual cue that points out the task object. At Dw, where the user perceives task objects as part of the environment, it enhances the visual effect of the cues to highlight the task object. In addition, UOUU directs the user to the object with the smaller UOD for the next task step, which shortens the path the user needs to travel.

The procedures of UOD in UOUU are as follows:

(1) instruct users to perform tasks at the closer object to optimise the task route.

In which the user needs to interact with n objects, the UOD to the task object

(2) gradually instruct the users from observation to operation by following user egocentric interaction model.

In which g(x) is the piecewise equation of the guidance interface of the UOD-driven part in UOUU, operate(x) is the operation method that generates visual cues of operation gestures to guide the user on how to operate the task on the object, observe(x) is the observation method that generates relevant graphics and text to assist the user in observing the task object, remind(x) is the reminder method that generates arrow visual cues pointing to objects to remind the user of the task objects’ location, and highlight(x) is the strong reminder method that generates visual cues of arrows and the object’s outline to notify the user.
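The two UOD procedures above can be sketched in code. This is a minimal illustration rather than the authors' implementation: the function names `nearest_object` and `uod_guidance`, the string labels standing in for operate(x), observe(x), remind(x), and highlight(x), and the treatment of each range as half-open (lower bound included, upper bound excluded) are all assumptions. Only the thresholds come from the distance ranges listed earlier.

```python
from typing import Dict, List, Tuple

# Upper bounds in metres for the first three UOD ranges (Dop, Dob, Dp).
# Assumption: each range is half-open, [lower, upper).
UOD_ZONES: List[Tuple[float, str]] = [
    (0.6, "operate"),   # Dop: operator distance
    (1.2, "observe"),   # Dob: observer distance
    (2.4, "remind"),    # Dp: passerby distance
]


def nearest_object(uods: Dict[str, float]) -> Tuple[str, float]:
    """Sketch of f(x): return the id and UOD of the closest task object."""
    if not uods:
        raise ValueError("at least one task object is required")
    obj_id = min(uods, key=uods.get)
    return obj_id, uods[obj_id]


def uod_guidance(distance_m: float) -> str:
    """Sketch of g(x): map a UOD to the label of the guidance cue for its range."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    for upper, cue in UOD_ZONES:
        if distance_m < upper:
            return cue
    return "highlight"  # Dw: world distance, 2.4 m and above


# Hypothetical distances from one user to three task objects.
target, d = nearest_object({"O0": 3.1, "O1": 0.9, "O2": 1.7})
print(target, uod_guidance(d))  # O1 observe
```

Keeping the thresholds in a single table makes it easy to adjust them for tasks whose interface design differs, which the text notes can vary with task characteristics.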

In multi-user tasks, UUD indicates users’ willingness and probability of collaboration [41]. Previous research reported that collaboration was more effective with UUD-based interaction [42]. Therefore, UOUU instructs users to collaborate as the UUD changes. The thresholds of this interaction are designed according to Hall’s proxemic zones [19]. This model divides UUD into 4 distance ranges: 0 to 0.45 m is intimate distance (Di), 0.45 to 1.2 m is personal distance (Dps), 1.2 to 3.6 m is social distance (Ds), and beyond 3.6 m is public distance (Dpb).

Users are unlikely to collaborate at the intimate distance. Therefore, the UUD part of UOUU is designed for the other 3 distance ranges. Within Dps, users can directly interact with collaborators, so UOUU helps users cooperate with visual cues of collaboration actions. Within Ds, users can express and interpret their intentions, so UOUU prompts users to communicate by presenting collaborative task-related text and patterns. Within Dpb, collaborators are hard to identify, so UOUU enhances the visual effect of cues to remind users of the position of the task object (a set of examples is shown at the bottom of Fig. 2).

Figure 2: The distances in user-user interaction

The interactions of the UUD in UOUU are as follows:

(1) instruct users to perform tasks with the closer collaborator to optimise the task route.

In which there are n collaborators in the task; the UUD to user U0 is D0, to user U1 is D1, …, and to user Un is Dn, each calculated by the Euclidean distance formula, and f(x) is the equation that finds the minimum of these distances.

(2) gradually instruct the user from communicating to collaborating by following Hall’s proxemic zones.

In which g(x) is the piecewise equation of the guidance interface of the UUD-driven part in UOUU, collaborate(x) is the cooperation method that generates visual cues of cooperation gestures to guide users on how to collaborate on the task object together, communicate(x) is the communication method that generates relevant graphics and text to help the user learn about the collaborator and tasks, and remind(x) is the reminder method that generates arrow visual cues that remind the user of the collaborator’s location.
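The UUD-driven part can be sketched in the same way. Again this is only an illustration under stated assumptions: the names `nearest_collaborator` and `uud_guidance` are hypothetical, the ranges are treated as half-open, and returning `None` inside the intimate distance reflects the statement that no guidance is designed for that range. The thresholds follow the Hall-based ranges given in the text.

```python
from typing import Dict, Optional, Tuple


def nearest_collaborator(uuds: Dict[str, float]) -> Tuple[str, float]:
    """Sketch of f(x): return the id and UUD of the closest collaborator."""
    if not uuds:
        raise ValueError("at least one collaborator is required")
    user_id = min(uuds, key=uuds.get)
    return user_id, uuds[user_id]


def uud_guidance(distance_m: float) -> Optional[str]:
    """Sketch of g(x): map a UUD to the label of the guidance cue for its range."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < 0.45:
        return None            # intimate distance: no guidance is designed
    if distance_m < 1.2:
        return "collaborate"   # personal distance: cues of collaboration actions
    if distance_m < 3.6:
        return "communicate"   # social distance: task-related text and patterns
    return "remind"            # public distance: enhanced reminder cues


# Hypothetical distances from one user to two collaborators.
partner, d = nearest_collaborator({"U1": 2.0, "U2": 5.0})
print(partner, uud_guidance(d))  # U1 communicate
```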

3.3 UOD and UUD Combined Collaboration Model

UOD and UUD address, respectively, how an individual user performs a task and when to collaborate. To address when and how to collaborate efficiently, we considered the relation between UOD and UUD to drive collaboration.

To determine whether collaboration is more efficient, we took the route distance for collaboration as the UUD (between the user and the closest collaborator) and the route distance for an independent task as the UOD (between the user and the closest object to interact with). UUD and UOD in the remainder of Section 3 refer to these two distances. When the UUD is shorter than the UOD, collaboration is more efficient, as it minimises the “makespan”, which refers to the effort of completing the whole task [43]. In Fig. 3, DC1 is the possible path range for user U0 and user U1 to cooperate on task object O2, and DC2 is the possible path range for cooperation with U2. The cooperation path range is smaller when the UUD is smaller than the UOD, which consumes less time and is relatively efficient for collaborations that require face-to-face interaction and frequent movement during the task.

Figure 3: UUD and UOD in a scene with multiple users and objects

The interactions of UOUU in the UUD-driven part are as follows:

Instruct the user to perform independently or collaborate by comparing UOD between the user and task object with UUD between the user and collaborator.

In which h(x) is the piecewise equation for allocating collaboration; f(x)UOD and f(x)UUD are the equations for finding the shortest UOD and UUD and are the same as formulae (1) and (3); g(x)UOD and g(x)UUD are the UOD- and UUD-driven parts of UOUU and are the same as formulae (2) and (4); and g(x)UUD → g(x)UOD means executing g(x)UUD first and then g(x)UOD.
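The allocation rule can be sketched as a small function. The comparison itself (collaborate when the shortest UUD is shorter than the shortest UOD, running the UUD-driven part first and then the UOD-driven part) follows the text. The function name `allocate`, the string labels, the fallback to the UOD-driven part alone, and the handling of ties as independent work are assumptions made for illustration.

```python
from typing import List


def allocate(shortest_uod_m: float, shortest_uud_m: float) -> List[str]:
    """Sketch of h(x): ordered guidance parts for one user at one moment.

    "g_UUD" and "g_UOD" are placeholder labels for the UUD-driven and
    UOD-driven guidance parts described in the text.
    """
    if shortest_uud_m < shortest_uod_m:
        # Collaboration is treated as more efficient: guide the user to the
        # collaborator first, then to the task object.
        return ["g_UUD", "g_UOD"]
    # Assumption: otherwise (including ties) the user works independently.
    return ["g_UOD"]


# Hypothetical case: the closest collaborator is 1.0 m away,
# the closest task object is 2.0 m away.
print(allocate(shortest_uod_m=2.0, shortest_uud_m=1.0))  # ['g_UUD', 'g_UOD']
```

Because the rule depends only on the two shortest distances, it can be re-evaluated whenever either distance changes, which matches the idea of a dynamic rather than fixed production line.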

The study scenario requires the participants to move during task operation and its completion is related to the users’ position. Therefore, the parcel-sorting task that requires frequent movement in the warehouse to pick and distribute parcels becomes an appropriate scenario to verify the effectiveness of UOUU in certain tasks.

We first conducted a field study to understand collaboration scenarios in the real logistics industry and to validate the experimental scenario, using observation and interviews [44]. In a real warehouse, we observed and learned the operations that a warehouse operator needs to perform and worked as warehouse operators for 7 days. In addition, we interviewed workers about their ethnographic and educational backgrounds and their work and collaboration experiences.

The parcel-sorting operation (Fig. 4) sorts parcels according to online customer orders and is the last step before packing and delivery. In this step, workers repeatedly pick goods for half a day and put them in given shelf positions, each of which corresponds to the package of one order. The process comprises picking goods, scanning the bar code, checking the location, and distributing goods. Although workers can collaborate with each other, collaboration is not always effective because of the time and cognitive effort it consumes. It occurs in the process of picking and handling goods, where working independently takes much longer and the communication required by collaboration accounts for a smaller proportion of the task completion time.

Figure 4: Logistics workers performing parcel sorting operations

During the field study, the participants faced two challenges in boosting their work speed. The first was frequent switching between the real world and the screen. After scanning the goods, workers needed to check the corresponding position of the goods and put them in the correct position. This process was mechanical and repetitive, but the workers were easily distracted by the repeated switches between the screen and the environment. The second was the uncertain state of collaborators. Workers needed to determine what the others were doing, whether an operation needed help, and whether collaborating here and now was more efficient than working alone. Without a clear judgement on these questions, workers were more likely to work alone. In contrast, the UOUU in this paper reduces the workload associated with switching between virtual information and reality by adopting AR, and enhances the user’s perception of task and collaborator state through combined distance-based interaction, which addresses the above challenges.

Sixteen participants (7 females and 9 males) were recruited to participate in the experiments with a payment of 25 CNY. All the participants were between 20 and 31 years old with no physical disabilities (Mage = 23.4, SDage = 4.082). There were 4 undergraduate students, 6 master’s students, and 6 doctoral students. Fourteen participants had an educational background in engineering and 2 in science. None of the participants had AR experience, and they were unfamiliar with each other before the experiment. The participants were positioned between shelves during the study, and the researcher stood away from the shelves (Fig. 5).

Figure 5: Participants in experiments (left: unsorted shelf, right: sorted shelf)

4.3 Experiment Settings and Procedures

We set up controlled experiments to validate the effectiveness of UOUU. The independent variable was whether the users were assigned collaboration tasks based on combined distances. The dependent variables were the number of users’ collaboration occurrences and task efficiency. We used collaboration and task efficiency evaluation methods to assess the significance of their differences.

We set up a parcel sorting task that required participants to move frequently during the task. The scenario simulated the environment of a parcel sorting task in the real warehouse (Fig. 6). The experiment task had three steps:

(1) Participants picked and scanned goods on unsorted shelves.

(2) Participants sorted the goods according to the parcel information on the screen of headset.

(3) Participants distributed them to the correct location on the 2 sorted shelves for further packing and shipping.

Figure 6: UUD and UOD in the scene of coupled parcel-sorting task

The collaboration in the task required the participants to perform the corresponding task steps. The task steps operated by each participant were connected to form a dynamic production pipeline. In the parcel sorting task, UOUU coordinated participants with objects and other participants to form a lean workflow. In the controlled group, users needed to consider these relations and build the process themselves.

There were three shelves in the experiment site (Fig. 7). One shelf stored unsorted goods and the other two shelves stored sorted ones. The distance between the unsorted shelf and the sorted shelves was 3 m. The distance between the two sorted shelves was 1 m in the first round; to simulate the varied distances of different tasks in a warehouse, we increased it by 3 m, to 4 m, in the second round for both groups 1 and 2. The participants were provided with twenty goods of random sizes and weights.

Figure 7: Settings of the sorted shelf and unsorted shelf

4.4 Implementation of the UOUU Method

The prototype followed the UOUU interaction in the parcel sorting scene. The experiment was based on a repetitive two-person task in which the relevant information about objects and collaborators had been introduced prior to the experiment, and the whole task was performed within Dp. Therefore, the communicate(x) and collaborate(x) of UUD interaction and the highlight(x) and observe(x) of UOD interaction were omitted. Gradual instruction was designed as an arrow at the bottom of the interface that reminds users to perform tasks and collaborate step by step, and a rectangle shows the place where the user operates. Although we simplified the abstract collaboration method, the prototype is still sufficient to verify the distance combination part of UOUU, which explains how to mediate the UOD- and UUD-driven parts in collaboration.

The interactions of the UOUU experiment prototype are as follows:

In which the user needs to interact with n objects. f(x) is the equation to find the shortest UOD. g(x)UOD and g(x)UUD are interface equations of the UOD and UUD-driven part in UOUU. The h(x) is the equation that connects the UOD and UUD-driven part.

The graphic interface of UOUU displays a full-screen camera feed (Fig. 8). Besides, the interface contains 2 virtual graphics. One is a green arrow fixed at the bottom of the interface for gradual instruction of the direction of the next step. The direction of the arrow is generated from distance detection. The arrow presents gradual instructions to the suggested collaborator when collaborating is efficient in the experimental groups.

Figure 8: User interface of the UOUU

The other is a green rectangle superimposed on the AR markers of goods and shelves. A green rectangle on the goods confirms that the goods have been scanned correctly, and the method adds them to the list of tasks to be performed. A green rectangle on the shelves indicates where the goods should be placed (Fig. 9). After the goods are moved from the unsorted shelf to the right position on the sorted shelf, the sorting task is regarded as completed. The interface omits unnecessary elements (e.g., text, numbers, and complex patterns) to avoid high cognitive load.
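The text states only that the direction of the green arrow is generated from distance detection. One way such a direction could be derived is the signed relative bearing from the user to the target. This sketch assumes the system knows the user's 2D floor position and facing direction, neither of which is specified in the paper; the function name `arrow_angle_deg` and the coordinate convention are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def arrow_angle_deg(user_xy: Point, heading_deg: float, target_xy: Point) -> float:
    """Signed angle from the user's heading to the target, in [-180, 180).

    Assumed convention: angles are measured counter-clockwise from the +x
    axis of a floor plane, so a positive result means the target is to the
    user's left and a negative result means it is to the right.
    """
    dx = target_xy[0] - user_xy[0]
    dy = target_xy[1] - user_xy[1]
    bearing_deg = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into [-180, 180) so the arrow takes the short way round.
    return (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


# Hypothetical case: the user faces along +x and the target is straight along +y.
print(round(arrow_angle_deg((0.0, 0.0), 0.0, (0.0, 1.0)), 6))  # 90.0
```

The target passed in would be the nearest task object or, when collaboration is judged efficient in the experimental group, the suggested collaborator.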

Figure 9: User interface of controlled group (no arrow to collaborator)

The controlled group removed the UOD and UUD-combined analysis. It stopped analysis of when and how to collaborate efficiently. Participants needed to consider when and how to collaborate by themselves (Fig. 10).

Figure 10: User interface of both experimental and controlled group with arrow to the position of operation

We used two types of HMDs in the study. The ARBOX HMD contained a reflective optical lens and two external cameras (Fig. 11), and the other device was a Google Cardboard headset (Fig. 12). Each was fitted with an Android phone (Google Pixel 3). The devices were randomly assigned across the participants, with group 1 using an ARBOX and a Google Cardboard and group 2 doing the same, so the devices differed within groups but not between groups.

Figure 11: Two AR HMD equipment in the studies

Figure 12: HMD with AR cardboard headset (Google Cardboard 1)

The UOUU application is an Android application. We implemented the rendering of the AR experience and 3D information on AR markers through the Vuforia AR API. We used image scanning to read parcel information and used depth of field (DOF) in Unity to identify the parcel locations in real time, supplemented with a location API. The locations of the shelves were recorded in the prototype as fixed locations and switched after one round of the experiment. UOD and UUD were calculated as Euclidean distances based on the locations of the user and the object [44].
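As a minimal sketch of the distance calculation, the snippet below computes Euclidean distances from a user's position to several named positions. It assumes positions are available as 2D floor coordinates in metres, which is consistent with the description of UOD as a horizontal distance but is not stated for the prototype; the function names and sample coordinates are hypothetical, and the prototype itself runs on Android rather than Python.

```python
import math
from typing import Dict, Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two points of equal dimension."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of coordinates")
    return math.dist(a, b)  # available in Python 3.8+


def distances_from(user: Sequence[float],
                   others: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Distance from one user to every named position (objects or users)."""
    return {name: euclidean_distance(user, pos) for name, pos in others.items()}


# Hypothetical floor coordinates in metres.
user = (0.0, 0.0)
uods = distances_from(user, {"unsorted_shelf": (3.0, 4.0)})
uuds = distances_from(user, {"collaborator": (0.0, 2.0)})
print(uods["unsorted_shelf"], uuds["collaborator"])  # 5.0 2.0
```

The same helper serves both UOD and UUD, since the two differ only in whether the other position belongs to an object or to a user.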

The procedure included preparation, task operation, and a questionnaire. All procedures were recorded by two cameras for further analysis. The participants were divided equally into two groups. Group 1 used the UOUU and group 2 used the method and interface of the controlled group. For the second round of each group, the distance between the two sorted shelves was increased by three meters to imitate the random distance between sorted shelves in a real warehouse.

Experiment preparation: The participants were asked to fill out a printed consent form and questionnaires on demographic data and previous AR experience. The participants learned how to operate the devices and perform the order-sorting task during a trial task. All participants were told to feel free to collaborate and to complete the tasks efficiently. In group 1, while participants performed the task, the UOUU determined in real time when and how to collaborate more efficiently, according to the criteria in Section 3. When the method suggested that working independently was more efficient, participants walked to the unsorted shelves, picked up a parcel, looked at its AR marker through the HMD to scan it, followed the AR instruction on the HMD, and carried the good to its position on the sorted shelf to complete the parcel-sorting task.

When the method determined, at some moment during the independent task procedure, that collaboration was more efficient, it instructed the participant to collaborate by showing an arrow pointing to the other user. The user interface in this application scene showed only an arrow as a gradual instruction in order to reduce variables; in other applications, it can be replaced by more specific collaboration instructions to achieve better performance. In group 2, the method instructed participants to work independently, and the participants had to decide when and how to collaborate by themselves.

In the second round of the experiment, one of the sorted shelves was moved two meters away. The other procedures were the same as in round 1. After the task, the experimenters recorded the participants’ completion time and parcel-sorting accuracy. The participants were then asked to fill out the questionnaire (Appendix A).

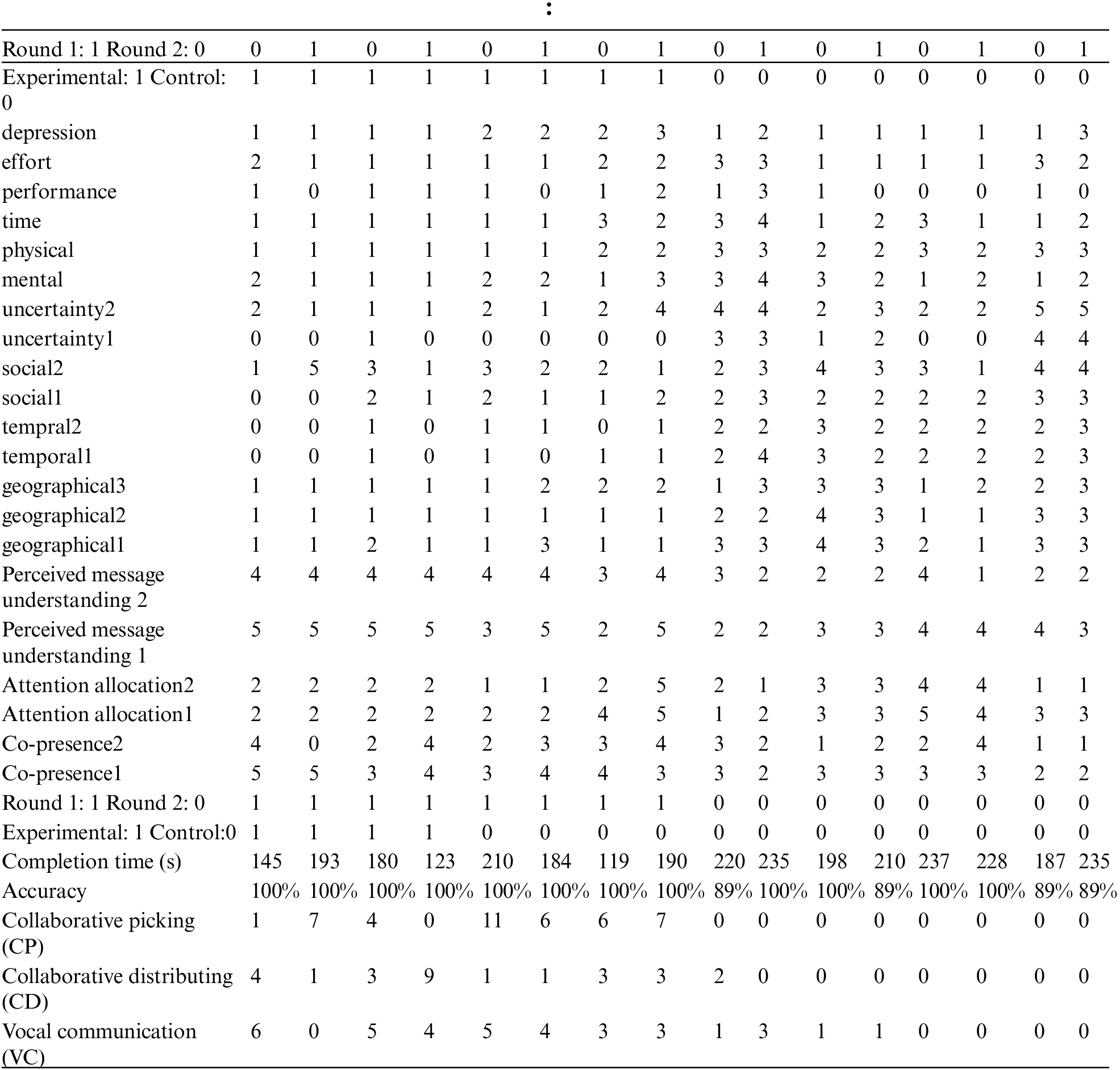

To reveal the influence of the UOUU on collaboration and task efficiency, we analysed collaboration occurrences, task completion time, and accuracy. The participants filled out three questionnaires: NASA-TLX [45], NMM [46], and a psychological distance scale [47]. The recorded videos were analysed through video coding and notes, following an existing coding framework [36,48]. Because this paper aims to improve collaborative work efficiency, we focused on objective measures of efficiency, supported by subjective data (Appendix B).

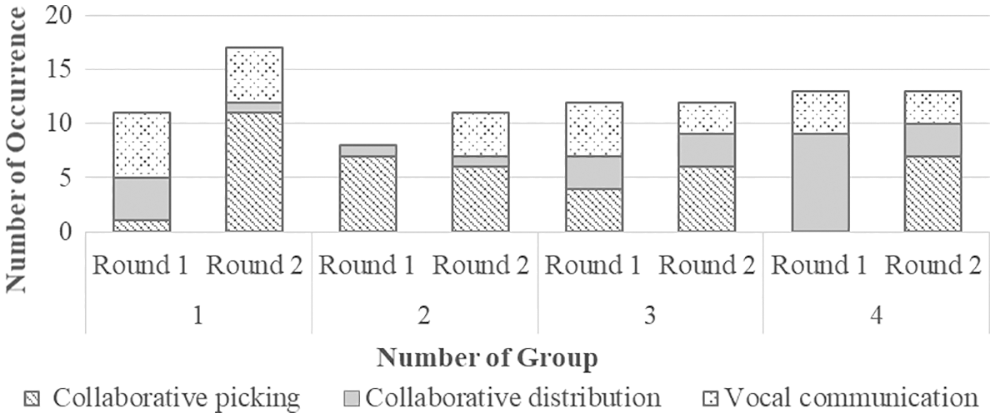

We analysed participants’ collaborative behaviours and categorised them into the following types according to the video material coding and notes framework mentioned above:

(a) CP (Collaborative Picking)–participant and collaborator are performing parcel-picking together and helping each other at the area near the unsorted shelf. This includes picking, passing, and scanning goods for the other and accepting this action accordingly.

(b) CD (Collaborative Distributing)–participant and collaborator are performing parcel-sorting and distributing together and helping each other at the area near the sorted shelf. This includes passing, sorting, and placing goods of the other on sorted shelf and accepting this action accordingly.

(c) VC (Vocal Communication)–active discussion and other vocal communications for promoting the task.

5.1.1 Collaboration Occurrences

We analysed participants’ collaborative behaviour according to the above types and followed a grounded coding framework to measure the influence of the method on collaborative behaviours during the task. Figs. 13 and 14 record the number of occurrences of the three collaboration styles in group 1 and group 2. Each figure shows the first and second rounds of tasks. In total, four experimental and four control groups completed sixteen rounds. Fewer collaborations occurred in group 2 than in group 1.

Figure 13: Group 1 (experimental) occurrences of collaboration

Figure 14: Group 2 (controlled) occurrences of collaboration

The Shapiro-Wilk test showed that none of VC (W = 0.743, p < 0.01), CP (W = 0.730, p < 0.01), and CD (W = 0.874, p < 0.05) followed a normal distribution. As the independent variable consisted of two groups, we conducted the Mann-Whitney test. Group 1 showed a significant increase in the number of occurrences of each collaboration style: CP (z = −3.246, p = 0.001), CD (z = −3.200, p = 0.001), and VC (z = −2.685, p = 0.007).
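For readers unfamiliar with how a Mann-Whitney z value is obtained, the following minimal sketch computes the U statistic and its normal approximation with a tie correction. The function name `mann_whitney_z` and the sample counts are hypothetical; they are not the study data, and statistical packages may additionally apply a continuity correction or an exact test for small samples.

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def mann_whitney_z(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (U for x, z, two-sided p) using the tie-corrected normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = sorted(list(x) + list(y))

    # Assign each distinct value the average of the ranks it occupies.
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j < n and combined[j] == combined[i]:
            j += 1
        rank_of[combined[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    rank_sum_x = sum(rank_of[v] for v in x)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2

    # Mean and tie-corrected variance of U under the null hypothesis.
    mean_u = n1 * n2 / 2
    tie_term = sum(t ** 3 - t for t in Counter(combined).values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        raise ValueError("all observations are identical; z is undefined")

    z = (u_x - mean_u) / math.sqrt(var_u)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return u_x, z, p


# Made-up occurrence counts for two groups (illustration only).
group1 = [5, 6, 7, 8]
group2 = [1, 2, 3, 4]
u1, z, p = mann_whitney_z(group1, group2)
print(f"U = {u1}, z = {z:.3f}, p = {p:.3f}")
```

The sign of z depends only on which group is passed first; swapping the arguments flips the sign and leaves the two-sided p-value unchanged.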

Between rounds 1 and 2, the Mann-Whitney test showed no significant differences in the number of occurrences of the collaboration styles: CP (z = −0.870, p > 0.1), CD (z = −0.883, p > 0.1), and VC (z = −0.967, p > 0.1).

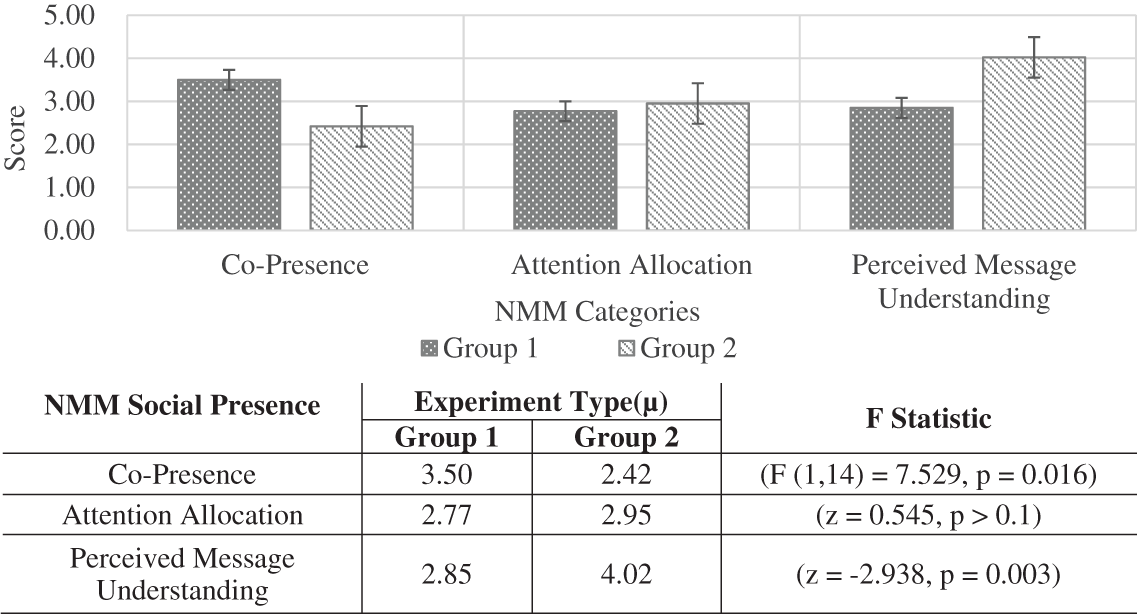

We used the NMM and psychological distance to analyse perceived awareness of collaboration in terms of the sense of the collaborator’s presence, communication, and shared experience. Social presence (Fig. 15) is how much participants notice the presence of their collaborator [49], and psychological distance (Fig. 16) is how different they think their existing experience is, which is important for investigating the underlying awareness of and reasons for collaboration [50].

Figure 15: Comparison of NMM social presence statistics

Figure 16: Comparison of psychological distance statistics

The Shapiro-Wilk test showed that co-presence (W = 0.943, p = 0.383) followed a normal distribution, but attention allocation (W = 0.821, p < 0.01) and perceived message understanding (W = 0.793, p < 0.01) did not; co-presence also met the homogeneity of variance (F(1, 14) = 0.064, p = 0.805). ANOVA and the Mann-Whitney test showed a significant increase in NMM scores for co-presence (F(1, 14) = 7.529, p = 0.016) and perceived message understanding (z = −2.938, p = 0.003). However, there was no significant difference in attention allocation (z = 0.545, p > 0.1), which indicates that attention to the collaborator was not influenced.

The Shapiro-Wilk test showed that the social dimension (W = 0.950, p = 0.066) followed a normal distribution, but the uncertainty (W = 0.917, p < 0.01), temporal (W = 0.930, p < 0.05), and geographical (W = 0.874, p < 0.01) dimensions did not. The mean psychological distance between participants was lower in group 1 than in group 2. ANOVA and the Mann-Whitney test showed a significant difference in the social (F(1, 14) = 14.614, p < 0.001), temporal (z = −4.564, p < 0.001), and uncertainty (z = −3.427, p = 0.001) dimensions, and no significant difference in the geographical dimension (z = −1.354, p > 0.1).

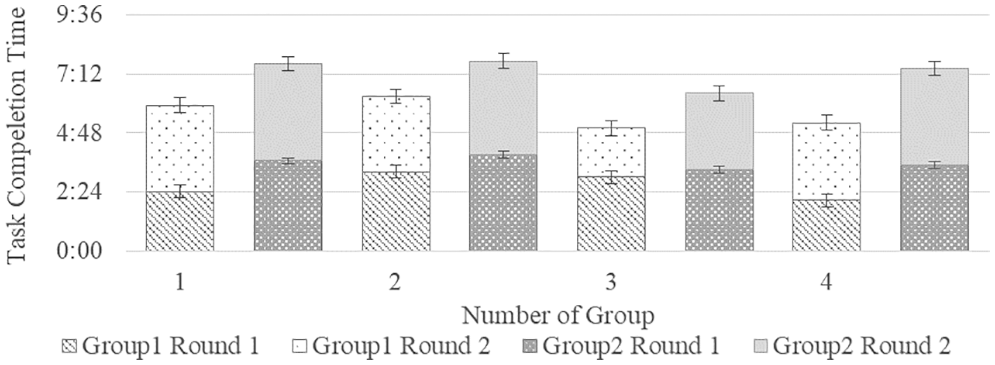

5.2 Task Completion Time, Accuracy, and Perceived Workload

5.2.1 Task Completion Time and Accuracy

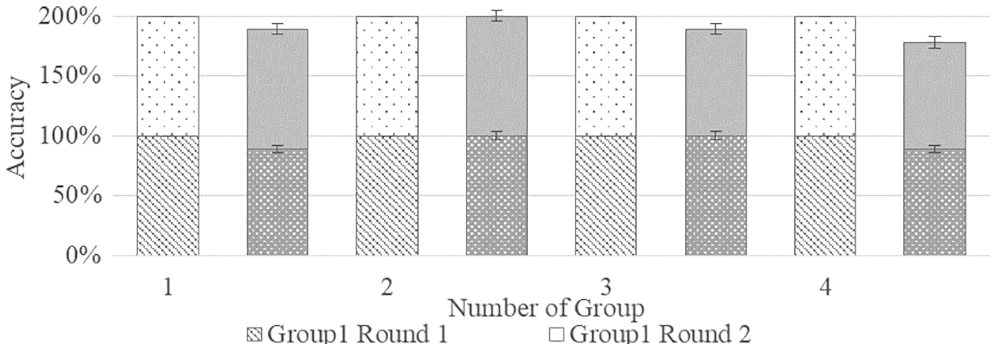

We recorded task completion time and calculated accuracy (Figs. 17 and 18). The Shapiro-Wilk test showed that task completion time (W = 0.898, p = 0.075) followed a normal distribution, but accuracy (W = 0.546, p < 0.01) did not. Task completion time (F(1, 14) = 5.519, p = 0.034) met the homogeneity of variance. ANOVA and the Mann-Whitney test indicated a significant decrease in task completion time in group 1 (F(1, 14) = 13.490, p = 0.003) and a significant increase in accuracy in group 1 (z = −2.236, p = 0.025).

Figure 17: Comparison of task completion time in groups 1 and 2

Figure 18: Comparison of accuracy in groups 1 and 2

In the second round, task completion time (F(1, 14) = 0.000, p = 0.983) met the homogeneity of variance. ANOVA and the Shapiro-Wilk test showed no significant difference in task completion time (z = −0.526, p > 0.1) or accuracy (z = 0.000, p > 0.1) when the distance between the two sorted shelves increased in the second round of each group. This shows that a three-meter increase in the distance between the two sorted shelves is not enough to affect overall task performance, and that participants spent more of their time on task recognition and confirmation.

We observed that the participants focused more on scanning and remembering target locations, even though this did not require long-term memory; this was more frequent in group 2, which collaborated less. Due to the repetitive nature of the parcel-sorting task, participants were prone to making errors and being distracted by irrelevant items. Some participants scanned a good and a shelf several times to confirm that they were operating correctly. In fact, the time spent delivering goods did not account for a high proportion of the entire task.

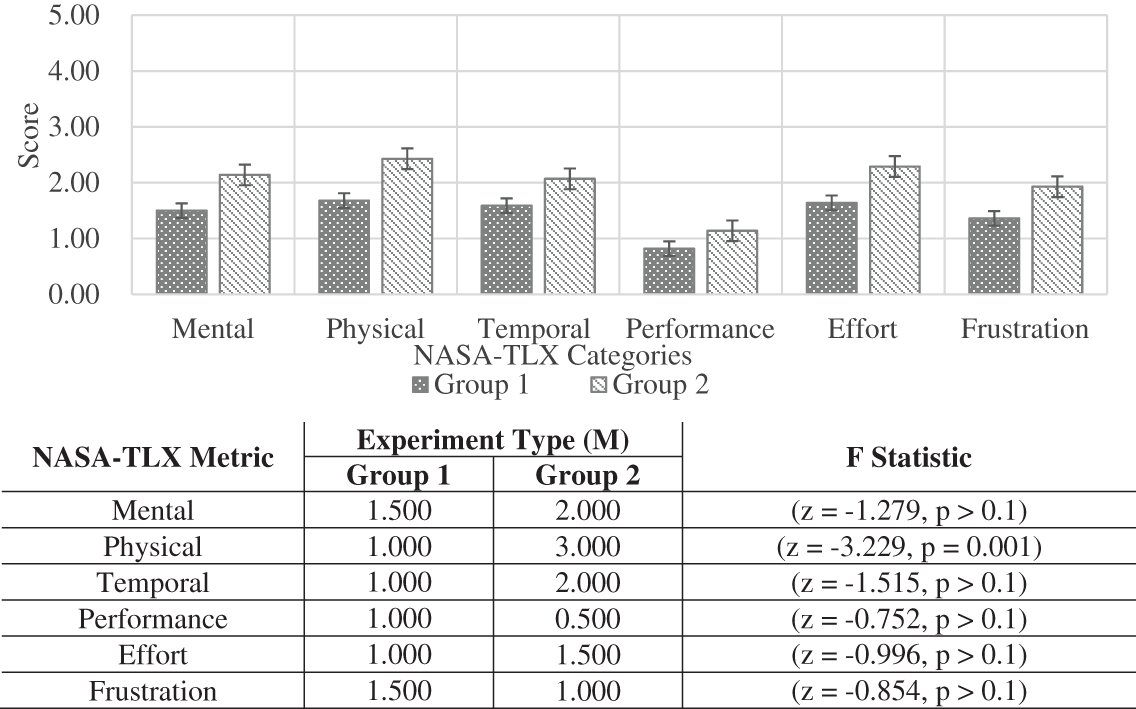

We used NASA-TLX to measure perceived workload, especially the performance and effort categories, which measure how users perceived their own performance under the workload (Fig. 19). The workload in all categories was relatively low, with all average values below 3. The mean workload in every category was lower in group 1 than in group 2.

Figure 19: Comparison of NASA-TLX statistics between 2 groups

The Shapiro-Wilk test showed that none of the NASA-TLX data followed a normal distribution (p < 0.1). The Mann-Whitney test showed that physical workload scores (z = −3.229, p = 0.001) in group 1 were significantly lower than in group 2. However, there was no significant difference in workload in terms of mental, temporal, performance, effort, and frustration.

We conducted an informal post-study interview to identify users’ perceptions and behaviours. We analysed the responses of the 16 participants to the open-ended question “What do you like and dislike about this system?”

Four participants in the experimental group reported that the UOUU improved collaboration. For example, participants mentioned: “it helps me understand my collaborators’ intentions and collaborate with each other faster”, “no need to make decisions in collaboration by myself”, “matching of collaborative tasks were conducted very fast”, “the prompts for sorting and dividing tasks are concise and clear”. These responses indicate that the UOUU can effectively convey the collaborator’s intent and that others can perceive this intent quickly.

In addition, two participants in the experimental group felt that they did not need to make decisions about collaboration, which allowed them to collaborate more easily and achieve better task performance. In contrast, in group 2 (the control group), three participants gave feedback such as: “after becoming distant from the collaborator, it felt like he was always out of my field of vision and it was a bit difficult to collaborate”, “I feel that I don’t know how to collaborate with others when there are no certain instructions.”, “I don’t know what the other person is doing when I want to collaborate”. This suggests that difficulty in judging willingness to collaborate and in perceiving the collaborator’s location and status was the major limitation on collaboration in the control group, and that the UOUU played a role in solving this problem.

Four participants felt that augmented reality could improve the perception of collaboration, including the perception of both the collaborator and the task object. This benefit was mentioned by participants in both the experimental and control groups. For example, participants mentioned: “The task process does not need to be memorized, the instructions are intuitive and clear, and you can directly determine the location of the task”, “after using it for a period of time, I feel that I can become more familiar with the collaborator, and the collaboration efficiency will become higher”, “the interface is simple, convenient and effective”. This indicates that collaborating through augmented reality technology can increase participants’ perception of task objects and collaborators.

Among the “dislikes” given by the participants, ten participants mentioned complaints about the hardware. For example, “the augmented reality device is too heavy and uncomfortable to wear”, “I get dizzy after wearing it for a long time”, “sometimes the camera is out of focus”, which indicates that the current augmented reality device still needs to be optimized in terms of user experience. In addition, three participants thought that the augmented reality method was somewhat interesting, and it could gamify the uninteresting and repetitive tasks. “Augmented reality is quite interesting, it’s kind of like playing a game where you can see virtual things in the real world”.

The UOUU assisted participants in perceiving and communicating collaborative intent, which facilitated collaborative behaviour and enhanced task performance. The UOUU improved the cognitive efficiency with which participants interacted with objects and collaborators, and added some fun. However, augmented reality suffers from technical limitations: its devices are still not very usable because they are too bulky, and the user experience was not rated highly.

Given the results (Table 1), alternative hypothesis h1 is supported, h2 is partially supported, and the null hypothesis is rejected, suggesting that the method is effective.

The results demonstrated the effectiveness of the UOUU on improving the participants’ overall task performance, collaboration occurrences and efficiency. The UOUU method significantly reduced the task completion time of operations and improved accuracy. The operation guidance based on combined UOD and UUD reduced the operation time for participants to interpret the spatial relations between them and objects and other users. More occurrences of collaboration reduced the possibility of making mistakes.

Compared with the control group, the UOUU significantly increased the number of occurrences of the collaboration styles, as the method helped users to perceive their spatial relations with other users and task objects. Participants spent less time interpreting their situation before collaborating, which increased collaboration occurrences. Participants also took less time to consider whether collaboration was efficient, which simplified collaboration and made them more willing to act collaboratively.

We adopted NMM social presence and psychological distance to measure collaboration relations [38]. The results showed a significant enhancement in the participants’ perception of the collaborator’s social presence in terms of co-presence and perceived message understanding, and a significant reduction in the psychological distance between participant and collaborator. This suggests that the proposed method can deliver a better perception of communication and of the collaborator’s presence, which further explains the improvement in participants’ task performance and awareness of collaboration. Previous studies have explored ways to change psychological distance [51], but fewer studies connect it with collaborative AR. Our research suggests that the UOUU draws participants’ attention to the collaborator and objects at the right time, reducing the time needed to initiate communication and collaboration, which in turn reduces psychological distance and promotes relationship development. The absence of a significant difference in NMM attention allocation may be explained by the method having already directed participants’ attention to their collaborator. As participants and collaborators performed the tasks in the same place, there was no significant difference in geographical psychological distance.

Different from previous research that described partial spatial relations between participants and environment, we analysed distances composed of multiple UOD and UUD in collaborative work to form a more detailed spatial relation. Interpreting these spatial relations simultaneously by measuring and comparing distance on possible interaction routes can better understand the possibility and efficiency of interaction between user-object and user-user. Therefore, to fill this gap, we considered multiple UOD and UUD to help users enhance AR collaborative task performance. The UOUU assigned efficient task instruction gradually through measuring multiple UOD and UUD. Then, we conducted empirical research to explore whether adopting UOD and UUD-combined interaction can enhance collaboration and task performance.
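The idea of comparing distances along possible interaction routes can be illustrated with a small sketch. The decision rule below is an assumption made purely for illustration and is not the set of criteria defined in Section 3: it suggests a handover only when the collaborator is closer to the user than the target shelf is, and the collaborator is also closer to the shelf than the user is. The function name `suggest_action` and all coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def suggest_action(user: Point, collaborator: Point, target_shelf: Point) -> str:
    """Compare the user's possible routes and suggest how to proceed.

    Illustrative rule only (an assumption, not the paper's criteria).
    """
    uod_user = math.dist(user, target_shelf)            # user -> shelf
    uod_collab = math.dist(collaborator, target_shelf)  # collaborator -> shelf
    uud = math.dist(user, collaborator)                 # user -> collaborator

    # Hand over only if it shortens the user's walk and the collaborator
    # is better placed to reach the shelf.
    if uud < uod_user and uod_collab < uod_user:
        return "collaborate"
    return "independent"


# Collaborator stands between the user and the shelf: handover is suggested.
print(suggest_action((0.0, 0.0), (2.0, 0.0), (5.0, 0.0)))

# Shelf is right next to the user: working independently is suggested.
print(suggest_action((0.0, 0.0), (-4.0, 0.0), (1.0, 0.0)))
```

Re-evaluating such a rule whenever positions change would yield the kind of gradual, moment-by-moment instruction described above, although the thresholds used in practice may differ.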

The paper provides a new interaction method for the design of AR collaboration. Since this interaction approach is based on a face-to-face collaborative work scenario, it can be applied to a wide range of collaborative work with varying distances. In addition to supporting collaboration, participants in the post-study interview mentioned that distance-driven interactions were more appealing to them and enhanced their engagement compared to traditional on-screen interactions, which benefits entertainment design for multi-player collaborative games and multi-player interactive devices. For other disciplines, the results can be used both in engineering collaboration to improve efficiency and in the social sciences to explore the interplay of group relations and interaction styles. In engineering, the method can be applied to production scenes that require flexible rather than fixed production lines, arranged according to the positions of workers and task objects. In the social sciences, the results of the UOUU can be used to explore interaction methods that change the collaborative relationships of group activities as well as psychological distance, and to further investigate their biological principles.

There are several limitations of the study. For example, the experiment was based on the logistics industry, and the effectiveness of UOUU in other scenes that require frequent movement to carry out the task remains to be proven. The change in the relationship between participants is based on self-reported social presence and psychological distance, whose sociological mechanism still needs to be further explored. However, the two-person simple repetition task in the experimental scenario was representative of a range of collaborative tasks. To some extent the results of this paper are representative of numerous collaborative work scenarios with frequent distance variation.

The paper presents the UOUU method, a UOD and UUD combined method for collaboration task assignment, and reports empirical studies that evaluate it by exploring the influence of the UOD and UUD combination on participants’ collaboration and task performance in an AR-based parcel-sorting context. The results showed that the UOUU is an effective method that leverages the user-object and user-user distances to stimulate collaboration in multi-user tasks. It successfully sensed these distances and accordingly assigned collaboration operations to the participants, thus promoting multi-user collaboration. The study evidence also indicates that the UOUU method enhanced the participants’ perception of the collaborator’s social presence.

Acknowledgement: The authors thank Yifei Shan, Zhongnan Huang, and Zihan Yan from Cross Device Computing Laboratory at Zhejiang University for prototype development and experiment assistance. We also thank all the participants.

Funding Statement: The work is supported by Zhejiang Provincial R&D Programme (Ref No. 2021C03128).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Azuma, R. T. (1997). A survey of augmented reality. Presence-Virtual and Augmented Reality, 6(4), 355–385. https://doi.org/10.1162/pres.1997.6.4.355

2. Azuma, R., Baillot, Y., Behringer, R., Feiner, S., Julier, S. et al. (2001). Recent advances in augmented reality. IEEE Computer Graphics and Applications, 21(6), 34–47. https://doi.org/10.1109/38.963459

3. Radu, L., Joy, T., Bowman, Y., Bott, I., Schneider, B. (2021). A survey of needs and features for augmented reality collaborations in collocated spaces. Proceeding of ACM on Human-Computer Interaction, 5(CSCW1), 1–21. https://doi.org/10.1145/3449243

4. Zhang, W., Han, B., Hui, P., Gopalakrishnan, V., Zavesky, E. (2018). CARS: Collaborative augmented reality for socialization. Proceeding of 19th International Workshop on Mobile Computing Systems & Applications, pp. 25–30. Tempe, Arizona, USA.

5. Brock, M., Quigley, A., Kristensson, P. O. (2018). Change blindness in proximity-aware mobile interfaces. The 2018 CHI Conference on Human Factors in Computing Systems, pp. 1–7. Montreal QC, Canada.

6. Grønbæk, J. E., Knudsen, M. S., O’hara, K., Krogh, P. G., Vermeulen, J. et al. (2020). Proxemics beyond proximity: Designing for flexible social interaction through cross-device interaction. The 2020 CHI Conference on Human Factors in Computing Systems, pp. 1–14. Honolulu, HI, USA.

7. Zhou, H., Tearo, K., Waje, A., Alghamdi, E., Alves, T. et al. (2016). Enhancing mobile content privacy with proxemics aware notifications and protection. The 2016 CHI Conference on Human Factors in Computing Systems, pp. 1362–1373. San Jose, California, USA.

8. Kiyokawa, K., Billinghurst, M., Hayes, S. E., Gupta, A., Sannohe, Y. et al. (2002). Communication behaviors of co-located users in collaborative AR interfaces. Proceeding of International Symposium on Mixed and Augmented Reality, pp. 139–148. Darmstadt, Germany.

9. Grønbæk, J. E., Korsgaard, H., Petersen, M. G., Birk, M. H., Krogh, P. G. (2017). Proxemic transitions: Designing shape-changing furniture for informal meetings. The 2017 CHI Conference on Human Factors in Computing Systems, pp. 7029–7041. Denver, Colorado, USA.

10. Acheampong, A., Zhang, Y., Xu, X., Kumah, D. A. (2023). A review of the current task offloading algorithms, strategies and approach in edge computing systems. Computer Modeling in Engineering & Sciences, 134(1), 35–88. https://doi.org/10.32604/cmes.2022.021394

11. Aslan, I., Bittner, B., Müller, F., André, E. (2018). Exploring the user experience of proxemic hand and Pen input above and aside a drawing screen. The 17th International Conference on Mobile and Ubiquitous Multimedia, pp. 183–192. Cairo, Egypt.

12. Ghare, M., Pafla, M., Wong, C., Wallace, J. R., Scott, S. D. (2018). Increasing passersby engagement with public large interactive displays: A study of proxemics and conation. The 2018 ACM International Conference on Interactive Surfaces and Spaces, pp. 19–32. Tokyo, Japan.

13. Dostal, J., Kristensson, P. O., Quigley, A. (2014). Estimating and using absolute and relative viewing distance in interactive systems. Pervasive and Mobile Computing, 10, 173–186. https://doi.org/10.1016/j.pmcj.2012.06.009

14. Jansen, P., Fischbach, F., Gugenheimer, J., Stemasov, E., Frommel, J. et al. (2020). ShARe: Enabling Co-located asymmetric multi-user interaction for augmented reality head-mounted displays. The 33rd Annual ACM Symposium on User Interface Software and Technology, pp. 459–471. Virtual Event, USA.

15. Dourish, P. (2001). Where the action is: The foundations of embodied interaction. Cambridge, Massachusetts, London, England: MIT Press.

16. Renner, R. S., Velichkovsky, B. M., Helmert, J. R. (2013). The perception of egocentric distances in virtual environments-a review. ACM Computing Surveys, 46(2), 1–40. https://doi.org/10.1145/2543581.2543590

17. Greenberg, S., Marquardt, N., Ballendat, T., Diaz-Marino, R., Wang, M. (2011). Proxemic interactions: The new ubicomp? Interactions, 18(1), 42–50. https://doi.org/10.1145/1897239.1897250

18. Perry, A., Nichiporuk, N., Robert, T. K. (2016). Where does one stand: A biological account of preferred interpersonal distance. Social Cognitive and Affective Neuroscience, 11(2), 317–326. https://doi.org/10.1093/scan/nsv115

19. Hall, E. T. (1966). The hidden dimension. Garden City, NY: Doubleday, Cambridge University Press.

20. Kendon, A. (1990). Conducting interaction: Patterns of behavior in focused encounters, vol. 7. CUP Archive. New York, NY: Cambridge University Press.

21. Ballendat, T., Marquardt, N., Greenberg, S. (2010). Proxemic interaction: Designing for a proximity and orientation-aware environment. ACM International Conference on Interactive Tabletops and Surfaces, pp. 121–130. Saarbrücken, Germany.

22. Wu, C. J., Houben, S., Marquardt, N. (2017). EagleSense: Tracking people and devices in interactive spaces using real-time top-view depth-sensing. The 2017 CHI Conference on Human Factors in Computing Systems, pp. 3929–3942. Denver, Colorado, USA.

23. Beyer, G., Alt, F., Müller, J., Schmidt, A., Isakovic, K. et al. (2011). Audience behavior around large interactive cylindrical screens. The SIGCHI Conference on Human Factors in Computing Systems, pp. 1021–1030. Vancouver, BC, Canada.

24. Li, A. X., Lou, X. L., Hansen, P., Peng, R. (2016). Improving the user engagement in large display using distance-driven adaptive interface. Interacting with Computers, 28(4), 462–478. https://doi.org/10.1093/iwc/iwv021

25. Ju, W. G., Lee, B. A., Klemmer, S. R. (2007). Range: Exploring proxemics in collaborative whiteboard interaction. CHI ’07 Extended Abstracts on Human Factors in Computing Systems, pp. 2483–2488. San Jose, CA, USA.

26. Chakraborty, S., Stefanucci, J., Creem-Regehr, S., Bodenheimer, B. (2021). Distance estimation with social distancing: A mobile augmented reality study. 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy.

27. Wallace, J. R., Iskander, N., Lank, E. (2016). Creating your bubble: Personal space on and around large public displays. The 2016 CHI Conference on Human Factors in Computing Systems, pp. 2087–2092. San Jose, California, USA.

28. Dostal, J., Hinrichs, U., Kristensson, P. O., Quigley, A. (2014). SpiderEyes: Designing attention-and proximity-aware collaborative interfaces for wall-sized displays. The 19th International Conference on Intelligent User Interfaces, pp. 143–152. Haifa, Israel.

29. Yue, Y., Ding, J., Kang, Y., Wang, Y., Wu, K. et al. (2019). A location-based social network system integrating mobile augmented reality and user generated content. 3rd ACM SIGSPATIAL International Workshop on Location-Based Recommendations, Geosocial Networks and Geoadvertising, pp. 1–4. New York, NY, USA.

30. Marshall, P., Rogers, Y., Pantidi, N. (2011). Using F-formations to analyse spatial patterns of interaction in physical environments. The ACM 2011 Conference on Computer Supported Cooperative Work, pp. 445–454. Hangzhou, China.

31. Cardoso, L.F, D. S., Queiroz, F. C., Zorzal, R, E. (2020). A survey of industrial augmented reality. Computers & Industrial Engineering, 139(1), 106159.

32. Billinghurst, M., Kato, H. (2003). Collaborative augmented reality. Communications of the ACM, 45(7), 64–70. https://doi.org/10.1145/514236.514265

33. Kiyokawa, K., Takemura, H., Yokoya, N. (2000). SeamlessDesign for 3D object creation. IEEE MultiMedia, 7(1), 22–33. https://doi.org/10.1109/93.839308

34. Kim, K., Billinghurst, M., Bruder, G., Duh, H. B. L., Welch, G. F. (2018). Revisiting trends in augmented reality research: A review of the 2nd decade of ISMAR (2008–2017). IEEE Transactions on Visualization and Computer Graphics, 24(11), 2947–2962. https://doi.org/10.1109/TVCG.2018.2868591

35. Park, S. (2020). ARLooper: Collaborative audiovisual experience with mobile devices in a shared augmented reality space. Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, pp. 1–4. Honolulu, HI, USA.

36. Wells, T., Houben, S. (2020). CollabAR–investigating the mediating role of mobile AR interfaces on Co-located group collaboration. The 2020 CHI Conference on Human Factors in Computing Systems, pp. 1–13. Honolulu, HI, USA.

37. Speicher, M., Cao, J., Yu, A., Zhang, H., Nebeling, M. (2018). 360Anywhere: Mobile Ad-hoc collaboration in any environment using 360 video and augmented reality. Proceedings of the ACM on Human-Computer Interaction, 2(EICS), 1–20. https://doi.org/10.1145/3229091

38. Bai, H., Sasikumar, P., Yang, J., Billinghurst, M. (2020). A user study on mixed reality remote collaboration with eye gaze and hand gesture sharing. The 2020 CHI Conference on Human Factors in Computing Systems, pp. 1–13. Honolulu, HI, USA.

39. Pederson, T., Surie, D. (2007). Towards an activity-aware wearable computing platform based on an egocentric interaction model. International Symposium on Ubiquitious Computing Systems, pp. 211–227. https://doi.org/10.1007/978-3-540-76772-5

40. Chen, W., Shan, Y., Wu, Y., Yan, Z., Li, X. (2021). Design and evaluation of a distance-driven user interface for asynchronous collaborative exhibit browsing in an augmented reality museum. IEEE Access, 9(1), 73948–73962. https://doi.org/10.1109/ACCESS.2021.3080286

41. Hennemann, S., Rybski, D., Liefner, I. (2012). The myth of global science collaboration—Collaboration patterns in epistemic communities. Journal of Informetrics, 6(2), 217–225. https://doi.org/10.1016/j.joi.2011.12.002

42. Hawkey, K., Kellar, M., Reilly, D., Whalen, T., Inkpen, K. M. (2005). The proximity factor: Impact of distance on co-located collaboration. The 2005 International ACM SIGGROUP Conference on Supporting Group Work, pp. 31–40. Sanibel Island, Florida, USA.

43. Zeng, Y., Tong, Y., Chen, L. (2019). Last-mile delivery made practical: An efficient route planning framework with theoretical guarantees. Proceedings of the VLDB Endowment, 13(3), 320–333. https://doi.org/10.14778/3368289.3368297

44. Patel, S. P., Upadhyay, S. H. (2020). Euclidean distance based feature ranking and subset selection for bearing fault diagnosis. Expert Systems with Applications, 154(1), 113400. https://doi.org/10.1016/j.eswa.2020.113400

45. Fabian, C., Arellano, J. L. H., Munoz, E. L. G., Macias, A. M. (2020). Development of the NASA-TLX multi equation tool to assess workload. International Journal of Combinatorial Optimization Problems and Informatics, 11(1), 50–58.

46. Osmers, N., Prilla, M., Blunk, O., Brown, G. G., Janßen, M. et al. (2021). The role of social presence for cooperation in augmented reality on head mounted devices: A literature review. The 2021 CHI Conference on Human Factors in Computing Systems, pp. 1–17. Yokohama, Japan.

47. Jones, C., Hine, D. W., Marks, A. D. G. (2017). The future is now: Reducing psychological distance to increase public engagement with climate change. Risk Analysis, 37(2), 331–341. https://doi.org/10.1111/risa.12601

48. Tang, A., Tory, M., Po, B., Neumann, P., Carpendale, S. (2006). Collaborative coupling over tabletop displays. The SIGCHI Conference on Human Factors in Computing Systems, pp. 1181–1190. Montréal, Québec, Canada.

49. Harms, C., Biocca, F. (2004). Internal consistency and reliability of the networked minds measure of social presence. Seventh Annual International Workshop: Presence 2004, pp. 1–7. Valencia, Spain.

50. Suh, A., Wagner, C. (2016). Explaining a virtual worker’s job performance: The role of psychological distance. International Conference on Augmented Cognition, pp. 241–252. Toronto, ON, Canada.

51. Liberman, N., Trope, Y. (2014). Traversing psychological distance. Trends in Cognitive Sciences, 18(7), 364–369. https://doi.org/10.1016/j.tics.2014.03.001

Appendix A: Questionnaire Design

Appendix B: Experiment Data

Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.