Open Access

REVIEW

A Survey of Convolutional Neural Network in Breast Cancer

School of Computing and Mathematical Sciences, University of Leicester, Leicester, LE1 7RH, UK

* Corresponding Author: Yu-Dong Zhang. Email:

(This article belongs to the Special Issue: Computer Modeling of Artificial Intelligence and Medical Imaging)

Computer Modeling in Engineering & Sciences 2023, 136(3), 2127-2172. https://doi.org/10.32604/cmes.2023.025484

Received 15 July 2022; Accepted 28 October 2022; Issue published 09 March 2023

Abstract

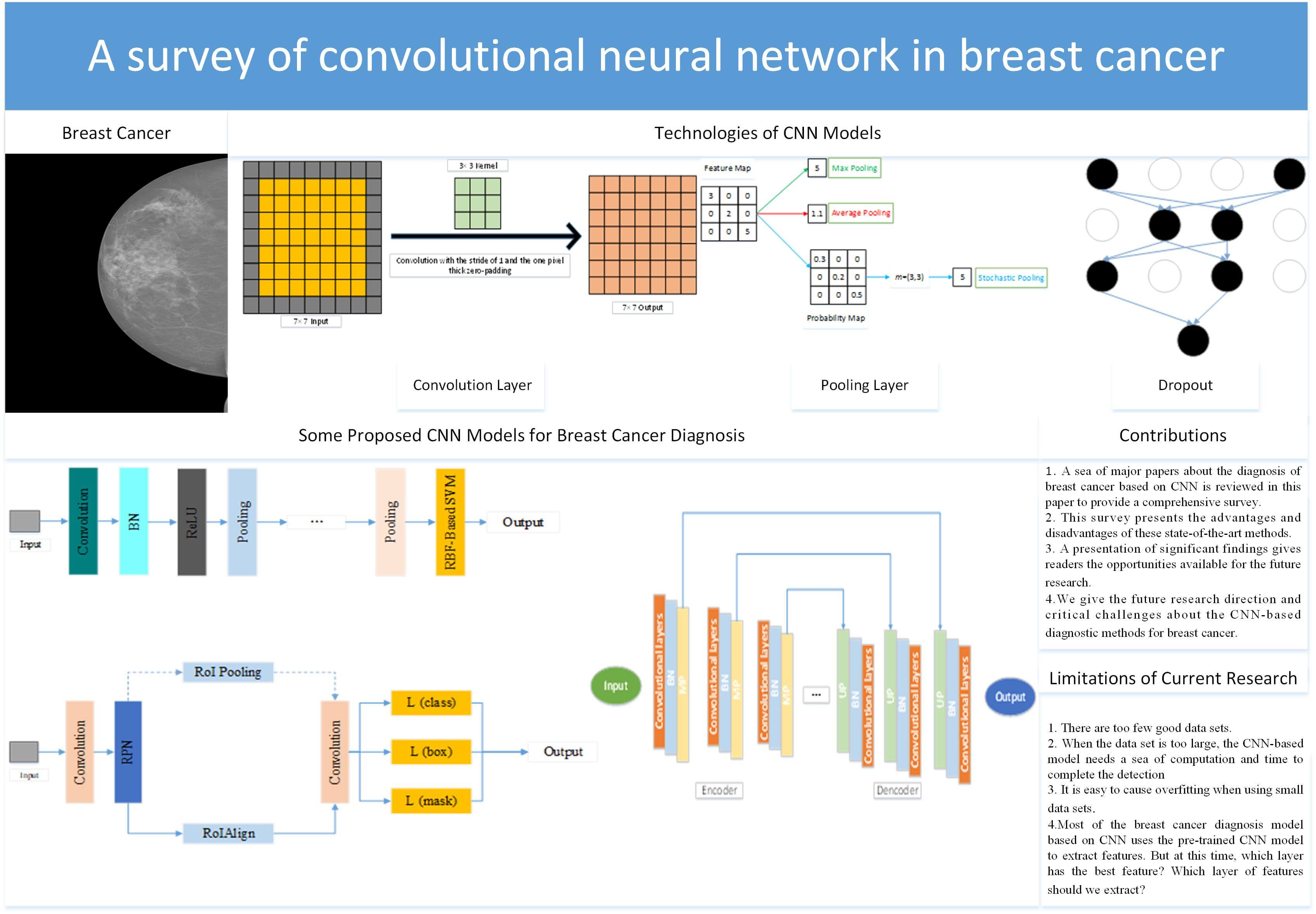

Problems: For people all over the world, cancer is one of the most feared diseases. Cancer is one of the major obstacles to improving life expectancy in countries around the world and one of the leading causes of death before the age of 70 in 112 countries. Among all kinds of cancers, breast cancer is the most common cancer in women, and data show that female breast cancer has become one of the most common cancers overall. Aims: A large number of clinical trials have shown that diagnosing breast cancer at an early stage gives patients more treatment options and improves treatment outcomes and survival. Many diagnostic methods for breast cancer have therefore been developed, such as computer-aided diagnosis (CAD). Methods: After reviewing a large number of recent papers, we present a comprehensive review of breast cancer diagnosis based on the convolutional neural network (CNN). First, we introduce several imaging modalities. Second, we describe the structure of CNNs. After that, we introduce some public breast cancer data sets. Then, we divide the diagnosis of breast cancer into three tasks: 1. classification; 2. detection; 3. segmentation. Conclusion: Although CNN-based diagnosis has achieved great success, some limitations remain. (i) There are too few good data sets. A good public breast cancer data set must address many aspects, such as professional medical knowledge, privacy, funding, and data set size. (ii) When the data set is very large, a CNN-based model needs a large amount of computation and time to complete the diagnosis. (iii) Small data sets easily lead to overfitting.

1 Introduction

For people all over the world, cancer is one of the most feared diseases and one of the major obstacles to improving life expectancy [1–3]. According to recent global statistics, cancer is one of the leading causes of death before the age of 70 in 112 countries, and it is the third or fourth leading cause of death in a further 23 countries [4–7].

Among all kinds of cancers, breast cancer is the most common cancer in women [8–12]. In the United States, there were more than 250,000 new cases of breast cancer in 2017 [13], and about 12% of American women may develop breast cancer in their lifetime [14]. Data from 2020 showed that female breast cancer had become one of the most common cancers [4].

A large number of clinical trials have shown that diagnosing breast cancer at an early stage gives patients more treatment options and improves treatment outcomes and survival [8,15–17]. Many diagnostic methods for breast cancer are therefore in use, such as biopsy [18].

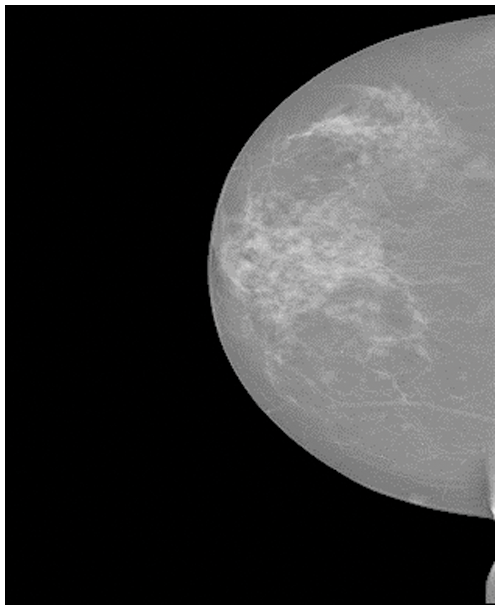

An image of breast cancer is shown in Fig. 1. Breast cancer is divided into two types: invasive carcinoma and carcinoma in situ [19]. Carcinoma in situ has not spread beyond the site where it started. About one-third of new breast cancer cases are carcinoma in situ [20], whereas most newly diagnosed breast cancers are invasive. Invasive cancer begins in the mammary duct and can spread to other parts of the breast [21].

Figure 1: The breast cancer image [22]

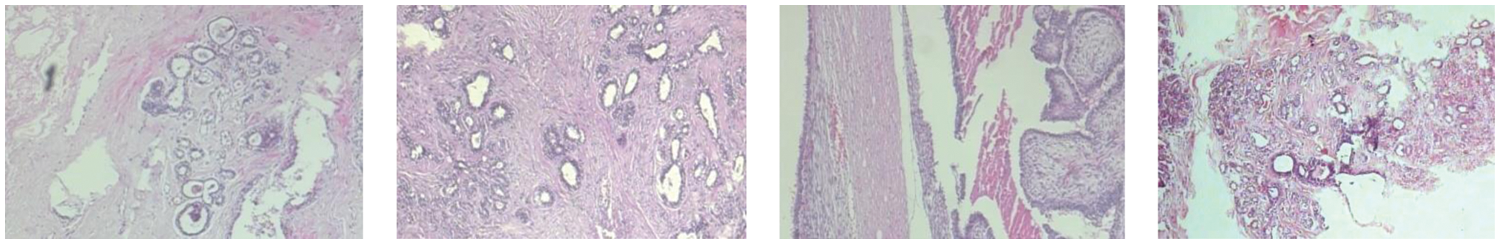

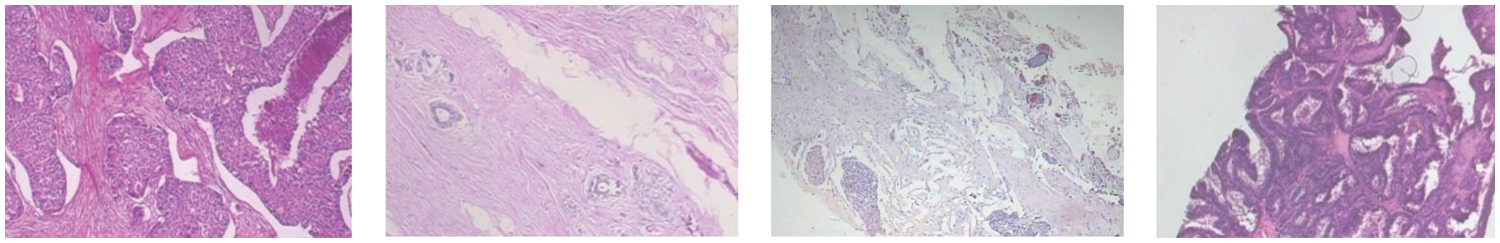

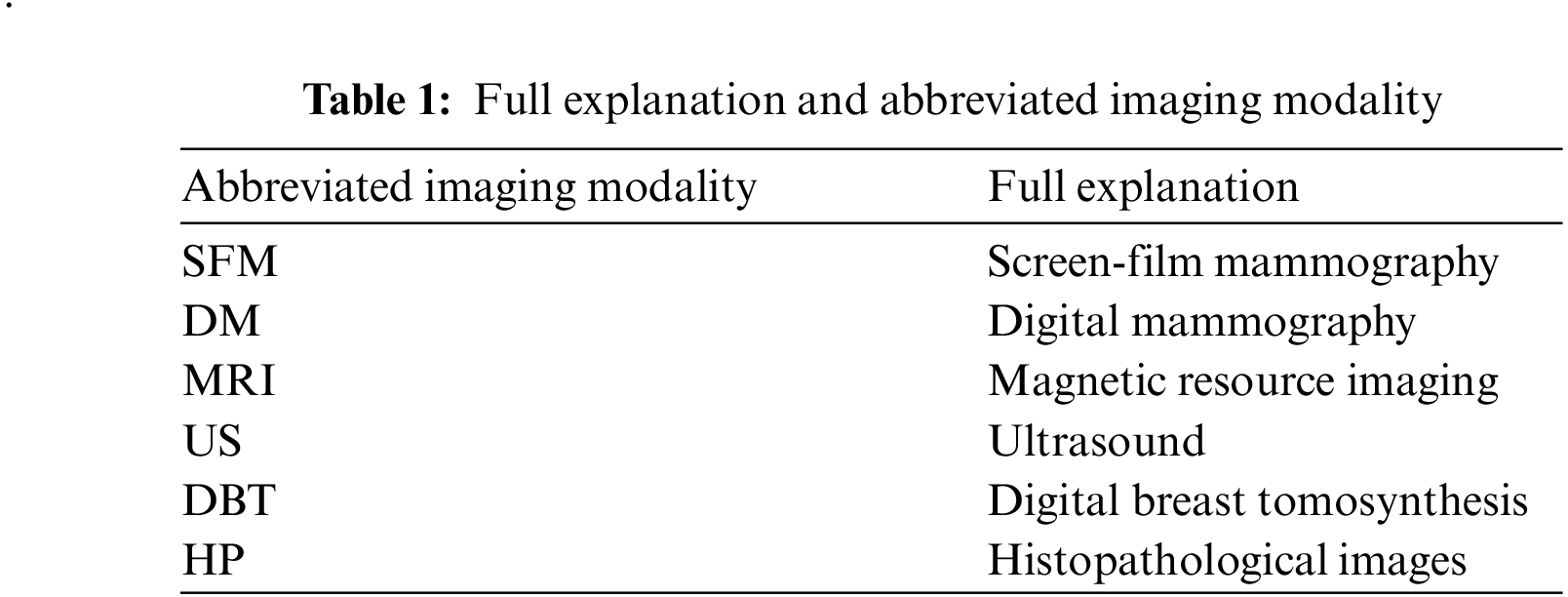

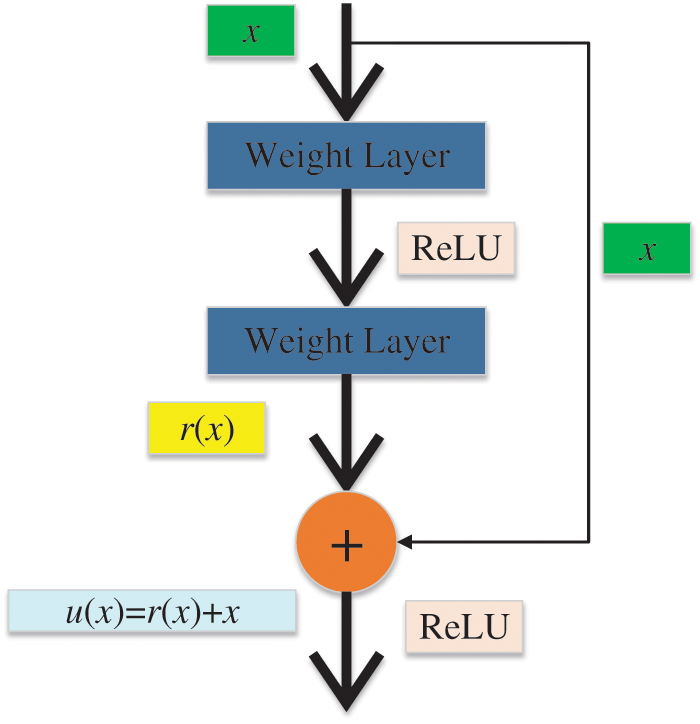

Breast tumors are sometimes divided into two categories: benign and malignant. Images of benign and malignant tumors are given in Figs. 2 and 3. Several imaging modalities are used for the diagnosis and analysis of breast cancer [23–25]; their abbreviations are listed in Table 1. (i) Screen-film mammography (SFM) is one of the most important imaging modalities for early breast cancer detection [26]. However, SFM has some disadvantages. First, its sensitivity is low for breasts with dense glandular tissue [27]. This may be caused by the film: once a film has been developed, its image cannot be improved, so some images have low contrast [28]. Furthermore, SFM is not digital. (ii) Digital mammography (DM) is an effective imaging modality for early breast cancer detection [29,30] and has long been the standard imaging modality for the diagnosis and detection of female breast cancer [31]. However, DM has some limitations. Its specificity is low, which can lead to unnecessary biopsies [32]. In addition, patients may be exposed to high radiation doses [27], which may pose health hazards. (iii) Magnetic resonance imaging (MRI) is suitable for clinical diagnosis and for high-risk patients [33]. MRI is very sensitive to breast cancer [20], but it still has some problems: its cost is higher than that of DM [34], and despite its high sensitivity, its specificity is low [35]. (iv) Ultrasound (US) is one of the most common methods for breast cancer detection. US involves no ionizing radiation [36], so compared with SFM and DM it is safer and cheaper [37]. However, US is operator-dependent [38], so its success in detecting and differentiating breast lesions is largely affected by the operator.
(v) Digital breast tomosynthesis (DBT) is a different imaging modality. Compared with traditional mammography, DBT can take less time for imaging [39] and provides more detail in dense breasts [40]. One problem with DBT is that it may fail to detect malignant calcifications at the slice plane [41]. It also takes longer to read than DM [42]. (vi) Histopathological (HP) images capture information about cell shape and tissue structure [43]. However, obtaining them is invasive and incurs additional costs [44]. The details of these imaging modalities are presented in Table 2.

Figure 2: The images of the benign tumors

Figure 3: The images of the malignant tumors

Medical images are usually analyzed manually by experts (pathologists, radiologists, etc.) [45]. As the overview above shows, this manual analysis has several problems [46]. Firstly, experts are required to analyze the images, but there are few such experts in many developing countries [47]. Secondly, manual analysis of medical images is long and cumbersome [48]. Thirdly, experts can be influenced by external factors, such as lack of rest and decreased attention [27].

With the continuous progress of computer science, computer-aided diagnosis (CAD) models for breast cancer have become an active research area [49]. Scientists have been studying CAD models for breast cancer for more than 30 years [50,51]. CAD models for breast cancer have the following advantages [52]: (i) they can improve specificity and sensitivity [53]; (ii) they can eliminate unnecessary examinations [54], which shortens the diagnosis time and reduces costs; (iii) they can reduce the mortality rate by 30% to 70% [13]. With the growth of computing power, the convolutional neural network (CNN) has become one of the most popular methods for the diagnosis of breast cancer [55–57], and a large number of papers on CNN-based breast cancer diagnosis have been published recently [58–61]. However, each of these papers proposes only one or a few methods, which does not give readers a full understanding of CNN-based breast cancer diagnosis. Therefore, after reviewing a large number of recent papers, we present a comprehensive review of breast cancer diagnosis based on CNN. In this paper, readers can not only see the CNN-based diagnostic methods for breast cancer from recent decades but also learn the advantages and disadvantages of these methods and future research directions. The main contributions of this survey are as follows:

• A large number of major papers on CNN-based breast cancer diagnosis are reviewed to provide a comprehensive survey.

• This survey presents the advantages and disadvantages of these state-of-the-art methods.

• Significant findings are presented to show readers the opportunities for future research.

• We give future research directions and critical challenges for CNN-based diagnostic methods for breast cancer.

The rest of this paper is organized as follows. Section 2 describes CNNs. Section 3 introduces breast cancer data sets. Section 4 presents the applications of CNN in breast cancer. The conclusion is given in Section 5.

2 Convolutional Neural Network

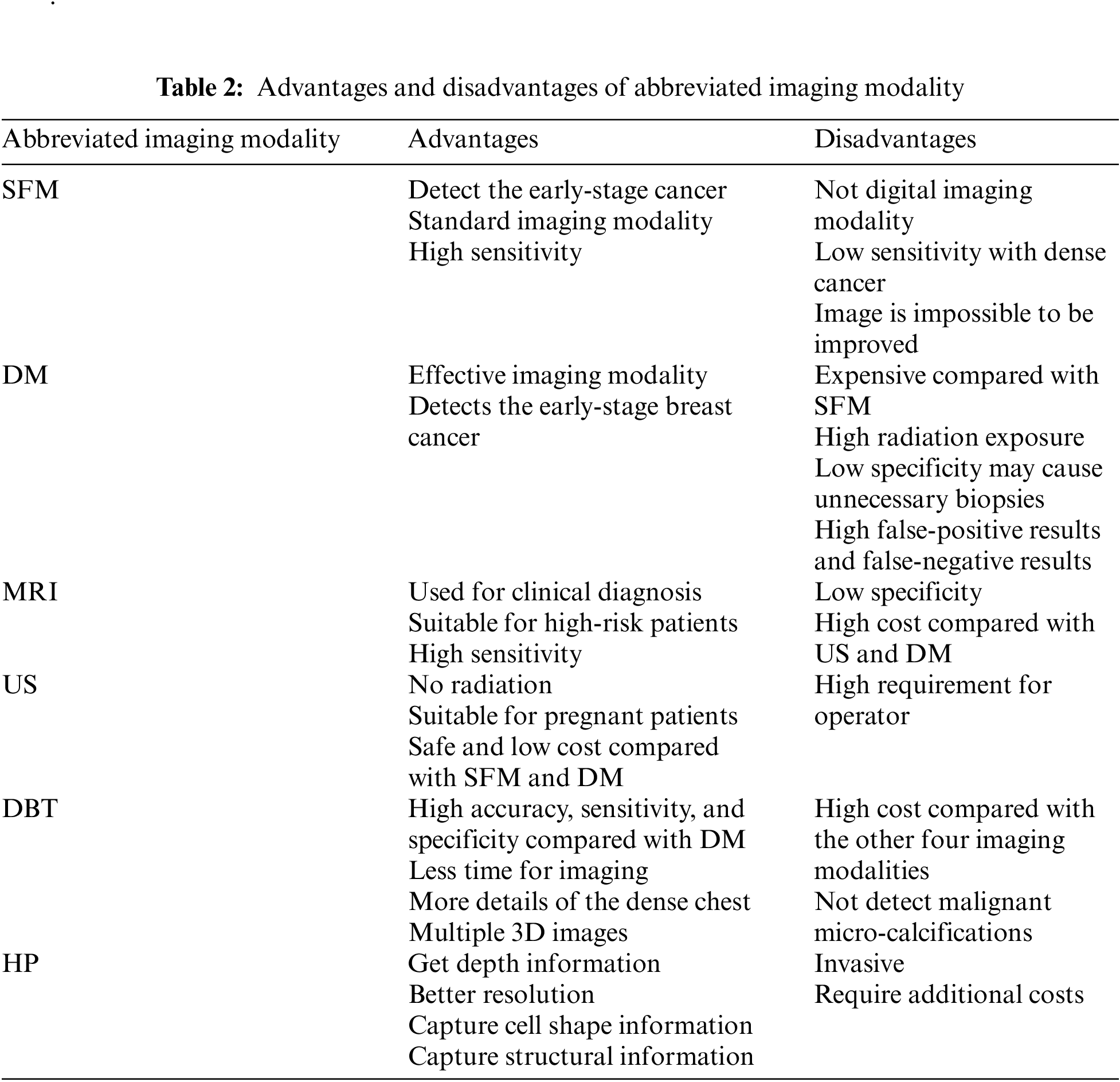

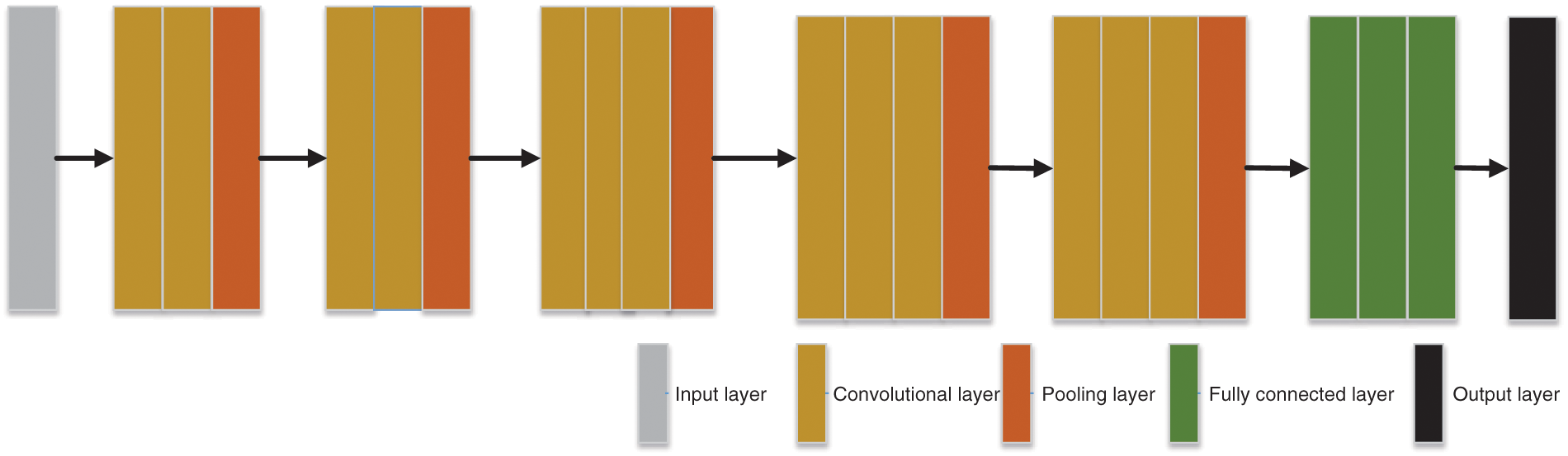

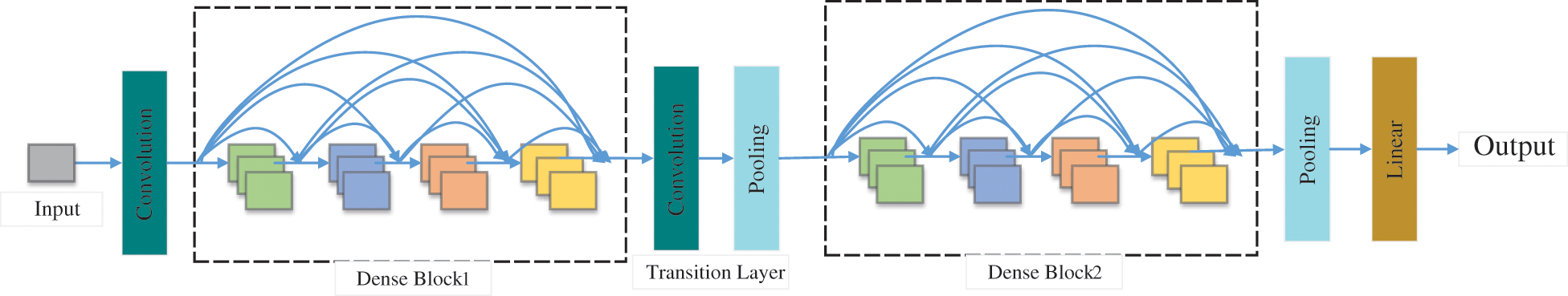

In the past few decades, the importance of medical imaging has been fully demonstrated [62–66]. Medical imaging helps clinicians detect, diagnose, and treat diseases at an early stage [33,67–69]. However, as analyzed above, medical imaging still has some shortcomings [70–73]. With the progress of CNN technology, many researchers use CNNs to diagnose breast cancer [74–77], and a large number of studies have shown that CNNs achieve superior performance in breast cancer diagnosis [78–81]. CNNs can support the continuous improvement of image analysis technology and transfer learning [82–84]. Recently, many researchers have used CNN models such as ResNet, AlexNet, and DenseNet as backbone models for transfer learning [85–87]: some layers of the pre-trained CNN are frozen, and the unfrozen layers are retrained on the target data set [88–90]. Sometimes researchers use CNN models as feature extractors and choose other models as classifiers [91–93], such as support vector machines (SVM) [94] and randomized neural networks (RNNs) [95]. At present, many CNN models are used in breast cancer diagnosis [96], such as AlexNet, VGG, ResNet, and U-Net [93,97,98]. A CNN is a computing model composed of many layers [99–102]. Fig. 4 shows the structure of a classic CNN model, VGG16 [103]. The residual learning block and the DenseNet block are given in Figs. 5 and 6.

Figure 4: The architecture of VGG16

Figure 5: The residual learning

Figure 6: The DenseNet block

The convolution layer is one of the most important components of a CNN and usually follows the input layer [104–108]. The convolution layer scans the input with a convolution kernel to extract features, and different convolution kernels extract different features from the same input [109]. A CNN model may contain multiple convolution layers [110]. The earlier convolution layers usually extract basic (low-level) features, such as edges, whereas the later convolution layers tend to extract more abstract (high-level) features [88].

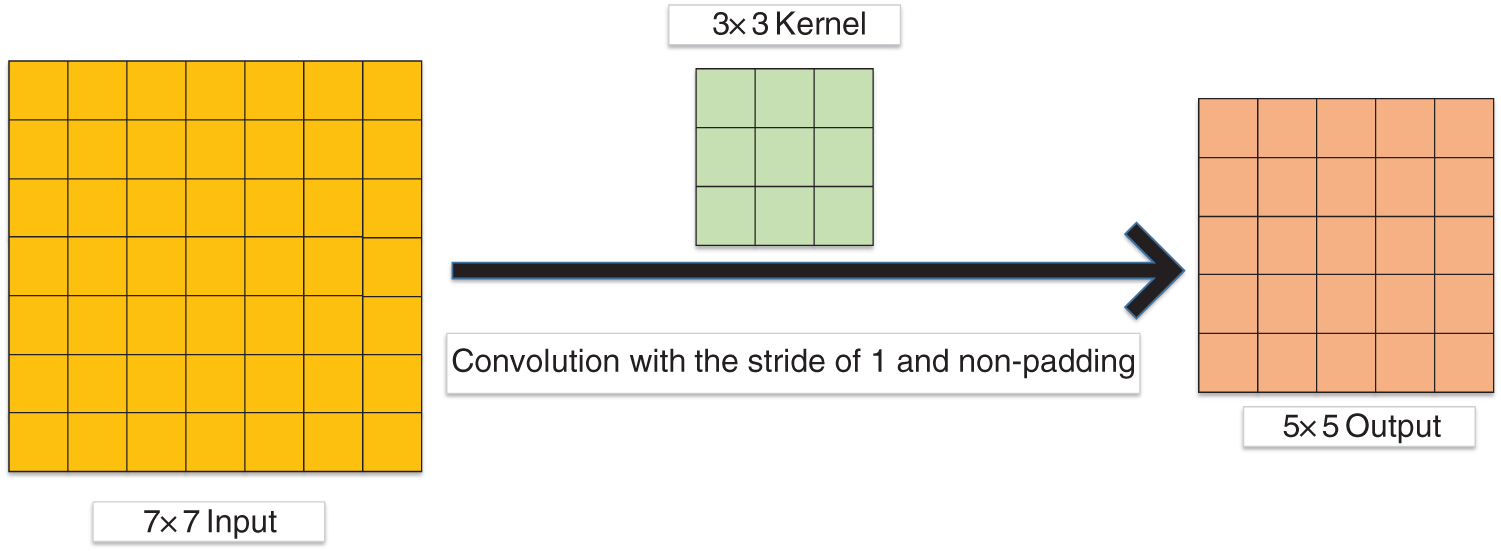

We first define the parameters of the convolution layer: the input image size is I × I, the convolution kernel is K × K, the stride is S, the padding is P, and the output size is O × O. Padding refers to the extra pixels (usually zeros) added around the border of the input image [104,111–113]. Stride refers to the step size by which the convolution kernel slides [114–116]. The output size is given by:

$$O = \left\lfloor \frac{I - K + 2P}{S} \right\rfloor + 1$$

Fig. 7 gives an example of convolution with I = 7, K = 3, P = 0, and S = 1, so O = (7 − 3 + 0)/1 + 1 = 5.

Figure 7: A sample of convolution
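The output-size formula and the setting of Fig. 7 can be checked with a short sketch. The code below is our own pure-Python illustration, not taken from any of the reviewed papers; the function names `conv_output_size` and `conv2d` and the centre-copy kernel are hypothetical. Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped), and integer division implements the floor in the formula.

```python
def conv_output_size(i: int, k: int, p: int = 0, s: int = 1) -> int:
    """Output size O for an I x I input, K x K kernel, padding P and stride S."""
    # Integer division implements the floor in the formula.
    return (i - k + 2 * p) // s + 1


def conv2d(image, kernel, stride=1):
    """Naive "valid" 2D convolution (no padding), computed as cross-correlation.

    `image` is an I x I list of lists and `kernel` a K x K list of lists.
    """
    i, k = len(image), len(kernel)
    o = conv_output_size(i, k, 0, stride)
    out = [[0.0] * o for _ in range(o)]
    for r in range(o):
        for c in range(o):
            # Weighted sum of the K x K window whose top-left corner is (r*stride, c*stride).
            out[r][c] = sum(
                image[r * stride + u][c * stride + v] * kernel[u][v]
                for u in range(k)
                for v in range(k)
            )
    return out


# Setting of Fig. 7: I = 7, K = 3, P = 0, S = 1.
image = [[float(7 * r + c) for c in range(7)] for r in range(7)]
kernel = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # hypothetical kernel that copies the centre pixel
result = conv2d(image, kernel)
print(conv_output_size(7, 3, 0, 1))   # 5
print(len(result), len(result[0]))    # 5 5
```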

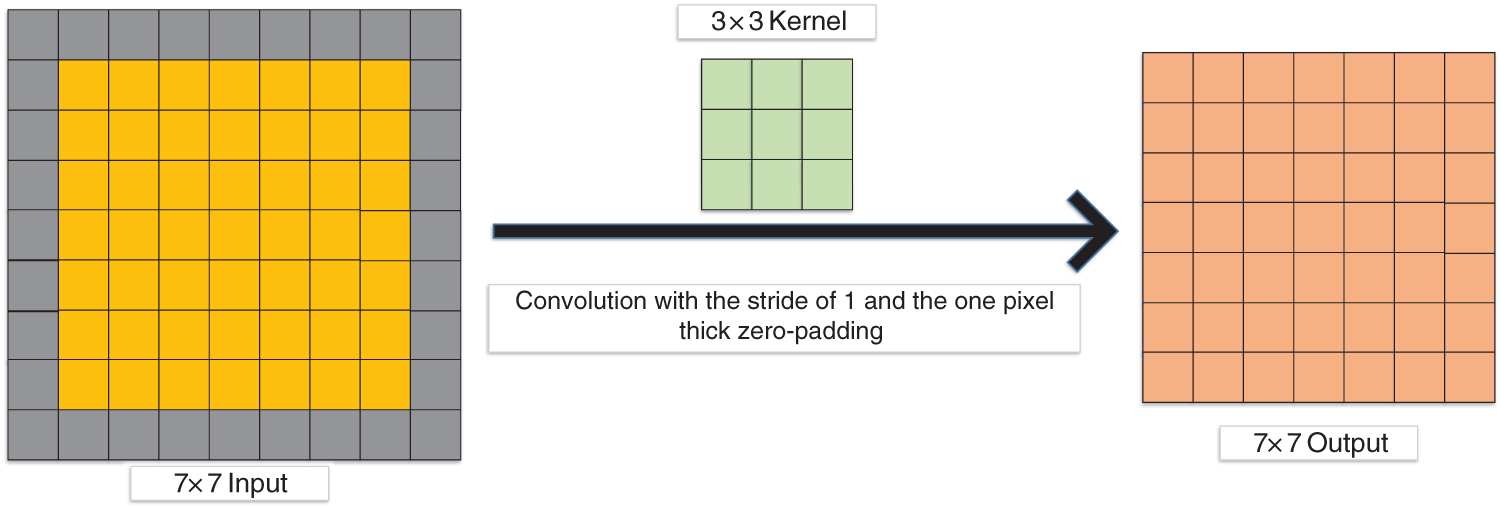

More and more researchers use zero padding [117] in the convolution layer. In Fig. 8, one-pixel zero padding (P = 1) with a 3 × 3 kernel and a stride of 1 keeps the output size the same as the input size.

Figure 8: Convolution with the one-pixel thick zero-padding
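Zero padding can be sketched in the same way. The helper `zero_pad` below is a hypothetical name used only for illustration; it shows that one pixel of zeros turns a 7 × 7 input into a 9 × 9 array, so a 3 × 3 kernel with stride 1 again produces a 7 × 7 output.

```python
def zero_pad(image, p=1):
    """Surround a square image (list of lists) with a border of p zeros on every side."""
    width = len(image) + 2 * p
    border = [[0.0] * width for _ in range(p)]
    body = [[0.0] * p + list(row) + [0.0] * p for row in image]
    return border + body + [[0.0] * width for _ in range(p)]


def conv_output_size(i, k, p=0, s=1):
    return (i - k + 2 * p) // s + 1


image = [[1.0] * 7 for _ in range(7)]
padded = zero_pad(image, p=1)
print(len(padded), len(padded[0]))   # 9 9
print(conv_output_size(7, 3, p=1))   # 7, the same as the input size
```

More generally, with stride 1 and an odd kernel size K, choosing P = (K − 1)/2 keeps the output the same size as the input.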

The convolution layer extracts features from the input [118–121]. After multiple convolutions, the feature dimension becomes higher and higher, resulting in a large amount of data [122], which may contain much redundant information [122–124]. This redundant information not only increases the training cost but can also lead to overfitting [123,125–127]. Therefore, researchers often use a pooling layer to downsample the extracted features. The main functions of the pooling layer are (i) translation invariance and (ii) feature dimensionality reduction [124].

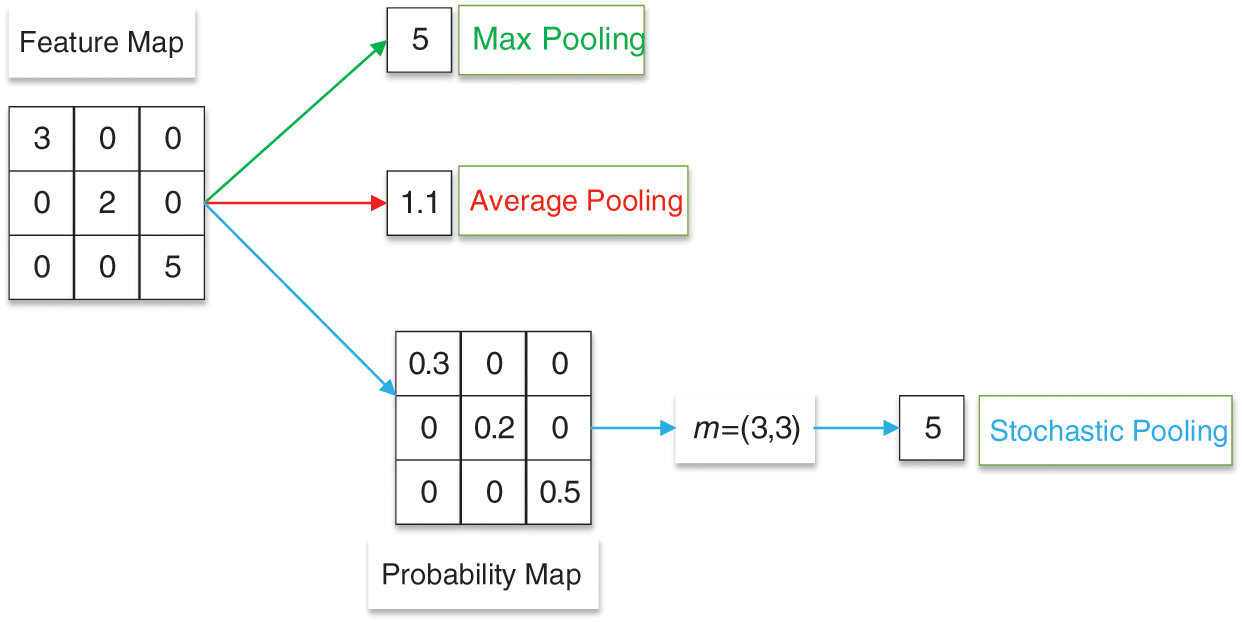

At present, the three main pooling methods are max pooling [128], average pooling [129], and stochastic pooling [130], as given in Fig. 9.

Figure 9: An example of max, average, and stochastic pooling

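Max pooling takes the maximum value of the pixels in each region of the feature map as the window slides with a given stride [129]. For a pooling region R containing the activations a_k, the output of max pooling (P_max) is:

$$P_{\max} = \max_{k \in R} a_k$$

Average pooling takes the mean of the pixels in each region of the feature map as the window slides with a given stride [131]. The output of average pooling (P_avg) is:

$$P_{\mathrm{avg}} = \frac{1}{|R|} \sum_{k \in R} a_k$$

where |R| is the number of elements in the pooling region R.

Stochastic pooling selects the output response according to a probability map computed from the activations in each region:

$$p_k = \frac{a_k}{\sum_{l \in R} a_l}$$

The output of stochastic pooling (P_sto) is the activation at a location m sampled from the multinomial distribution defined by these probabilities:

$$P_{\mathrm{sto}} = a_m, \quad m \sim \mathrm{Multinomial}\left(p_1, \ldots, p_{|R|}\right)$$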
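The three pooling rules can be written as a minimal pure-Python sketch over flattened pooling regions. This is an illustration under our own assumptions (non-overlapping 2 × 2 windows with stride 2, non-negative activations for stochastic pooling, hypothetical function names), not the implementation used in any cited work. `random.choices` draws one element according to the given weights, which corresponds to sampling from the multinomial distribution over the region.

```python
import random


def max_pool(region):
    """Max pooling: the largest activation in the pooling region."""
    return max(region)


def average_pool(region):
    """Average pooling: the mean activation over the |R| elements of the region."""
    return sum(region) / len(region)


def stochastic_pool(region, rng=random):
    """Stochastic pooling: sample one activation with probability p_k = a_k / sum(a).

    Assumes non-negative activations (e.g. after ReLU).
    """
    total = sum(region)
    if total == 0:
        return 0.0  # all-zero region: every candidate output is 0
    probs = [a / total for a in region]
    return rng.choices(region, weights=probs, k=1)[0]


def pool2d(feature_map, size=2, stride=2, op=max_pool):
    """Apply a pooling operator to every size x size window of a square 2D list."""
    n = len(feature_map)
    out_n = (n - size) // stride + 1
    return [
        [
            op([feature_map[r * stride + u][c * stride + v]
                for u in range(size) for v in range(size)])
            for c in range(out_n)
        ]
        for r in range(out_n)
    ]


fmap = [
    [1.0, 3.0, 2.0, 0.0],
    [4.0, 2.0, 1.0, 1.0],
    [0.0, 0.0, 5.0, 3.0],
    [0.0, 2.0, 1.0, 1.0],
]
print(pool2d(fmap, op=max_pool))      # [[4.0, 2.0], [2.0, 5.0]]
print(pool2d(fmap, op=average_pool))  # [[2.5, 1.0], [0.5, 2.5]]
rng = random.Random(0)
print(pool2d(fmap, op=lambda region: stochastic_pool(region, rng)))
```

At test time, the original stochastic pooling proposal replaces sampling with a probability-weighted average of the activations in each region; that variant is not shown here.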

Activation functions introduce nonlinearity into a CNN. Two traditional activation functions are Sigmoid [133] and Tanh [134]. The Sigmoid function is defined as:

$$\mathrm{Sigmoid}(x) = \frac{1}{1 + e^{-x}}$$

The Tanh function is written as:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

These two traditional activation functions converge slowly because their gradients become very small when the input saturates. The rectified linear unit (ReLU) [135] accelerates convergence. ReLU is defined as:

$$\mathrm{ReLU}(x) = \max(0, x)$$

ReLU has a drawback: when x ≤ 0, both its output and its gradient are 0, so a neuron that only receives negative inputs stops updating. To address this, leaky ReLU (LReLU) [136] was proposed. Unlike ReLU, LReLU outputs a small negative value when x ≤ 0:

$$\mathrm{LReLU}(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases}$$

where α is a small fixed constant (e.g., 0.01).

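Based on LReLU, researchers proposed the parametric ReLU (PReLU) [137], in which the slope for x ≤ 0 is learned adaptively from the data. PReLU is defined as:

$$\mathrm{PReLU}(x) = \begin{cases} x, & x > 0 \\ z x, & x \le 0 \end{cases}$$

where z is a small slope that is learned together with the other network parameters during training.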
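The activation functions above can be sketched as follows. This is our own illustration: the LReLU slope of 0.01 is a commonly used default that we assume here, and the PReLU slope `z` is passed in explicitly. In a real network `z` would be a trainable parameter updated by backpropagation, which is why the sketch also shows its gradient. The Sigmoid is written in a numerically stable form to avoid overflow for large |x|.

```python
import math


def sigmoid(x):
    # Numerically stable form: never calls exp() on a large positive number.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def tanh(x):
    return math.tanh(x)  # equals (e^x - e^-x) / (e^x + e^-x)


def relu(x):
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """LReLU: a small fixed slope alpha for x <= 0 (0.01 is an assumed default)."""
    return x if x > 0 else alpha * x


def prelu(x, z):
    """PReLU: same form as LReLU, but the slope z is a trainable parameter."""
    return x if x > 0 else z * x


def prelu_grad_z(x):
    """Derivative of PReLU with respect to z; this is what lets z be learned."""
    return 0.0 if x > 0 else x


for x in (-2.0, 0.0, 2.0):
    print(x, round(sigmoid(x), 4), round(tanh(x), 4), relu(x), leaky_relu(x), prelu(x, 0.25))
```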

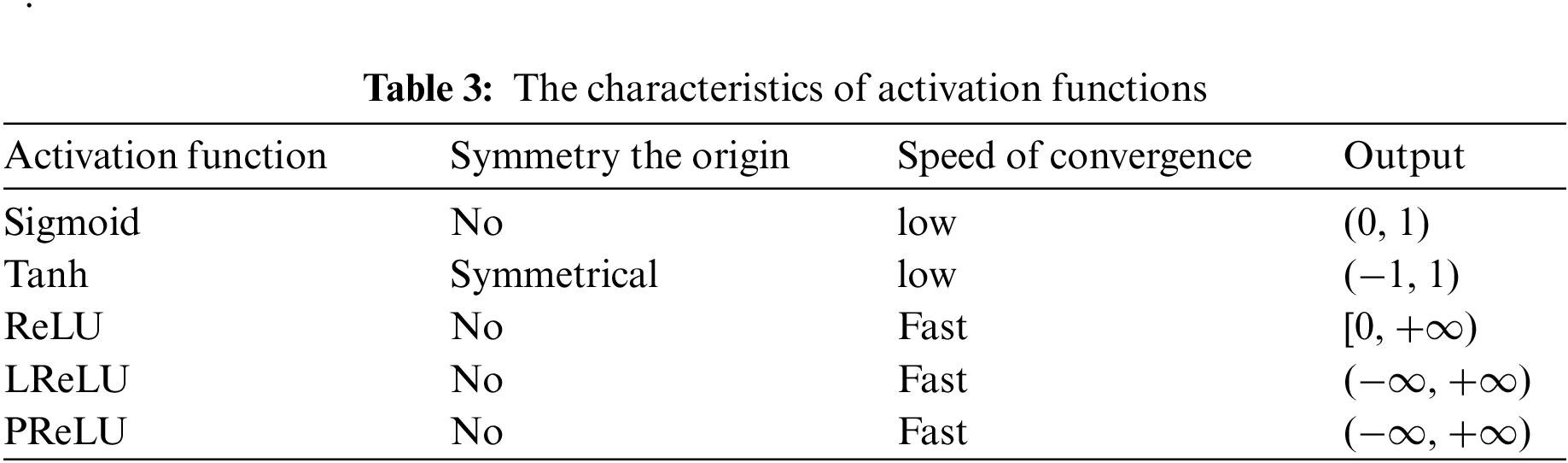

The characteristics of each activation function are shown in Table 3.

The convolution layers, pooling layers, and activation functions of a CNN map the input data to a feature space. The fully connected layer then maps these features to the sample (label) space: the feature maps are flattened into a one-dimensional vector (equivalently, convolved with a kernel of the same size as the feature map), each output is computed as a weighted sum of all the features, and the spatial dimensions are removed.

A CNN may contain several fully connected layers. Global average pooling, which replaces each feature map with its average value, has been proposed as a substitute for the fully connected layers because it greatly reduces the number of parameters. However, global average pooling does not always perform better than fully connected layers, for example in transfer learning.
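A short calculation shows why global average pooling reduces parameters. The sketch assumes a hypothetical classifier head on a 7 × 7 × 512 feature map (the output shape of VGG16's last pooling layer for a 224 × 224 input) with 1000 output classes; the helper names are our own. Flattening feeds 25,088 values into the fully connected layer, whereas global average pooling, which itself has no trainable parameters, feeds only 512.

```python
def fc_params(n_in: int, n_out: int) -> int:
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out


def global_average_pool(feature_maps):
    """Collapse each H x W channel to its mean; feature_maps is a list of C 2D lists."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]


# Hypothetical head on a 7 x 7 x 512 feature map producing 1000 class scores.
h, w, c, classes = 7, 7, 512, 1000
flatten_head = fc_params(h * w * c, classes)  # flatten -> fully connected
gap_head = fc_params(c, classes)              # global average pooling -> fully connected
print(flatten_head, gap_head)  # 25089000 513000
```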

As a CNN model becomes deeper, it becomes harder to train. During training, the distribution of each layer's input changes as the parameters of the preceding layers are updated, which can cause the gradients of the lower layers to vanish. Vanishing gradients are the reason why deep neural networks converge more and more slowly [138].
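One common way to see how gradients shrink with depth, given here as an illustration rather than as the exact mechanism described above, is the chain rule with sigmoid activations: the derivative of the sigmoid is at most 0.25, and backpropagation multiplies one such factor per layer. The sketch below assumes unit weights and zero pre-activations (the best case for the sigmoid), which is a simplification.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # maximum value 0.25, reached at x = 0


# Backpropagation multiplies one local derivative per layer (weights assumed to be 1).
for depth in (1, 5, 10, 20):
    print(depth, sigmoid_grad(0.0) ** depth)
```

Even in this best case, 10 layers scale the gradient by 0.25^10 ≈ 9.5 × 10^-7, which is one motivation for ReLU-type activations and for batch normalization.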

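Batch normalization normalizes the input of each layer to zero mean and unit variance. Consider a mini-batch of m values:

$$\mathcal{B} = \{x_1, x_2, \ldots, x_m\}$$

Firstly, calculate the mean of the mini-batch:

$$\mu_{\mathcal{B}} = \frac{1}{m} \sum_{i=1}^{m} x_i$$

Secondly, calculate the variance:

$$\sigma_{\mathcal{B}}^{2} = \frac{1}{m} \sum_{i=1}^{m} \left(x_i - \mu_{\mathcal{B}}\right)^{2}$$

Thirdly, perform the normalization:

$$\hat{x}_i = \frac{x_i - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^{2} + \epsilon}}$$

where ε is a small constant that prevents division by zero.

Finally, two parameters, γ and β, scale and shift the normalized value so that the network keeps its representational capacity:

$$y_i = \gamma \hat{x}_i + \beta$$

where γ and β are learned during training.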
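The four batch normalization steps can be sketched for a single feature. This pure-Python version is our own illustration: it uses the mini-batch statistics (training mode), assumes ε = 10^-5, and treats γ and β as fixed arguments, whereas in a network they are learned.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Batch normalization of one feature over a mini-batch (training-mode statistics)."""
    m = len(batch)
    mu = sum(batch) / m                                        # step 1: mini-batch mean
    var = sum((x - mu) ** 2 for x in batch) / m                # step 2: mini-batch variance
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in batch]   # step 3: normalize
    return [gamma * xh + beta for xh in x_hat]                 # step 4: scale and shift


batch = [2.0, 4.0, 6.0, 8.0]
y = batch_norm(batch)
print([round(v, 3) for v in y])
```

At inference time, frameworks typically replace the mini-batch mean and variance with running averages collected during training, so that a single image can be normalized.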

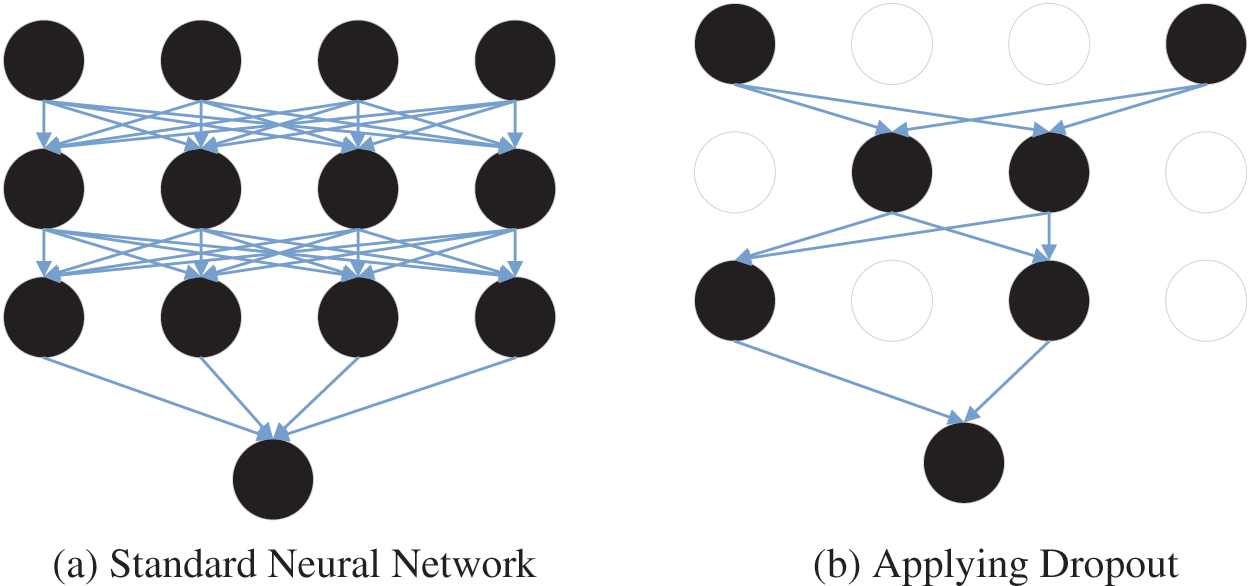

In a CNN model, too few training samples can lead to overfitting. Overfitting means that the model achieves a small loss and high accuracy on the training data but a large loss and low accuracy on the test data. Researchers often use dropout to prevent overfitting: during training, the outputs of randomly selected hidden nodes are set to 0, as shown in Fig. 10. This reduces the dependence between hidden nodes [139].

Figure 10: An example of the dropout
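Dropout can be sketched in a few lines. The version below is the widely used "inverted" variant, which we assume for simplicity: surviving activations are divided by 1 − p during training so that nothing changes at test time. The original dropout formulation instead leaves activations unscaled during training and scales the weights at test time. The function name and the drop probability p = 0.5 are illustrative.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return list(activations)  # test time: use all units unchanged
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(42)
hidden = [1.0] * 10
print(dropout(hidden, p=0.5, rng=rng))  # each entry is either 0.0 or 2.0
print(dropout(hidden, training=False))  # unchanged at test time
```

Rescaling keeps the expected value of each activation equal to its value without dropout, which is why no correction is needed at test time.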

One of the important tools for evaluating the performance of a CNN model is the confusion matrix, which is given in Table 4.

TP, FN, FP, and TN are true positive, false negative, false positive, and true negative, respectively.

However, the confusion matrix only provides counts, and with a large amount of data it is difficult to judge the quality of a model from the counts alone. Therefore, several other metrics are derived from these basic statistics.

1. Accuracy: the proportion of all samples that are predicted correctly: $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$.

2. Sensitivity (TPR, also called recall): the proportion of positive cases that are recognized as positive: $\mathrm{Sensitivity} = \frac{TP}{TP + FN}$.

3. Specificity: the proportion of negative cases that are recognized as negative: $\mathrm{Specificity} = \frac{TN}{TN + FP}$.

4. Precision: the proportion of samples predicted as positive that are actually positive: $\mathrm{Precision} = \frac{TP}{TP + FP}$.

5. F1-measure: the harmonic mean of precision and recall: $F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$.

6. FPR (false positive rate): the proportion of negative cases that are incorrectly predicted as positive: $\mathrm{FPR} = \frac{FP}{FP + TN} = 1 - \mathrm{Specificity}$.

7. Receiver Operating Characteristic (ROC) curve: TPR and FPR are the y-axis and x-axis, respectively, and each point corresponds to a different decision threshold. From the definitions of TPR and FPR, the higher the TPR and the lower the FPR, the better the CNN model.

8. Area under Curve (AUC): the area under the ROC curve, which lies between 0 and 1. A larger AUC indicates a better model.

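9. Dice Similarity Coefficient (DSC): usually used to evaluate the quality of a segmentation. The DSC measures the overlap between the manual segmentation (A) and the automatic segmentation (B):

$$\mathrm{DSC} = \frac{2\,|A \cap B|}{|A| + |B|}$$

where |·| denotes the number of pixels in a region.

10. Mean Absolute Error (MAE): the average absolute difference between the predicted values ($\hat{y}_i$) and the ground-truth values ($y_i$):

$$\mathrm{MAE} = \frac{1}{m} \sum_{i=1}^{m} \left| \hat{y}_i - y_i \right|$$

where m is the number of samples.

11. Intersection over Union (IoU): evaluates the overlap between the predicted region (B) and the ground-truth region (A):

$$\mathrm{IoU} = \frac{|A \cap B|}{|A \cup B|}$$

where |·| denotes the number of pixels in a region.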
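The metrics above can be collected in one minimal pure-Python sketch. It is our own illustration, with hypothetical function names and toy numbers; it computes AUC through the equivalent pairwise (Mann-Whitney) formulation rather than by integrating the ROC curve, treats segmentation masks as flat 0/1 lists, and does not guard against empty classes or empty masks.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Metrics derived from the binary confusion matrix."""
    sensitivity = tp / (tp + fn)  # recall / TPR
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "fpr": fp / (fp + tn),  # equals 1 - specificity
    }


def auc(scores_pos, scores_neg):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as 0.5. O(n * m) pairwise comparison, adequate for a sketch.
    """
    wins = 0.0
    for sp in scores_pos:
        for sn in scores_neg:
            wins += 1.0 if sp > sn else 0.5 if sp == sn else 0.0
    return wins / (len(scores_pos) * len(scores_neg))


def dice(a, b):
    """DSC = 2|A ∩ B| / (|A| + |B|) for binary masks given as flat 0/1 lists."""
    inter = sum(x & y for x, y in zip(a, b))
    return 2 * inter / (sum(a) + sum(b))


def iou(a, b):
    """IoU = |A ∩ B| / |A ∪ B| for binary masks given as flat 0/1 lists."""
    inter = sum(x & y for x, y in zip(a, b))
    union = sum(x | y for x, y in zip(a, b))
    return inter / union


def mae(pred, target):
    """MAE = (1/m) * sum |pred_i - target_i| over m samples (or pixels)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


print(confusion_metrics(tp=40, fn=10, fp=5, tn=45))
manual = [1, 1, 1, 0, 0, 0]
automatic = [1, 1, 0, 1, 0, 0]
print(dice(manual, automatic), iou(manual, automatic))
```

For the toy masks, DSC = 2/3 and IoU = 1/2, consistent with the general relation DSC = 2·IoU/(1 + IoU).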

3 Breast Cancer Data Sets

In recent years, many breast cancer data sets have been produced and published, and some of them are publicly available for research. Table 5 shows the details of some public data sets.

In the DDSM data set, all images are 299 × 299 pixels. The DDSM project was a collaborative effort involving the Massachusetts General Hospital (D. Kopans, R. Moore), the University of South Florida (K. Bowyer), and Sandia National Laboratories (P. Kegelmeyer). Additional cases from Washington University School of Medicine were provided by Peter E. Shile, MD, Assistant Professor of Radiology and Internal Medicine. The DDSM data set contains a total of 55,890 samples, of which 86% are negative and the rest are positive. All data are stored as tfrecords files.

The images in the CBIS-DDSM (Curated Breast Imaging Subset of DDSM) are divided into three categories: normal, benign, and malignant cases. This data set contains a total of 4067 images. The CBIS-DDSM collection includes a subset of the DDSM data selected and curated by a trained mammographer. The images have been decompressed and converted to DICOM format.

The Mammographic Image Analysis Society (MIAS) Database contains 322 images. Each image in this dataset is 1024 × 1024. MIAS is an organization of UK research groups interested in the understanding of mammograms and has generated a database of digital mammograms. Films taken from the UK National Breast Screening Programme have been digitized to a 50-micron pixel edge with a Joyce-Loebl scanning microdensitometer, a device linear in the optical density range 0–3.2, and representing each pixel with an 8-bit word.

The INbreast database contains 410 breast cancer images. It is a mammographic database whose images were acquired at the Breast Centre of Hospital de São João in Porto, Portugal. The images were obtained from 115 patients: 90 women with both breasts and 25 women who had undergone a mastectomy. Each patient with both breasts has four images, and each mastectomy patient has two images (90 × 4 + 25 × 2 = 410).

The Breast Cancer Histopathological Image Classification (BreakHis) data set consists of 5429 malignant samples and 2480 benign samples, for a total of 7909 samples. The database was built in collaboration with the P&D Laboratory, Pathological Anatomy and Cytopathology, Parana, Brazil. These microscopic images of breast tumor tissue were collected from 82 patients at different magnification factors (40×, 100×, 200×, and 400×).

4 Application of CNN in Breast Cancer

CNN-based diagnosis of breast cancer is generally divided into three tasks: (1) classification, (2) detection, and (3) segmentation. This section is therefore organized around these three tasks.

4.1 Breast Cancer Classification

In recent years, CNN models have been applied successfully to the diagnosis of breast cancer [140]. Researchers use CNN models to classify breast cancer into several categories. This section reviews CNN-based breast cancer classification.

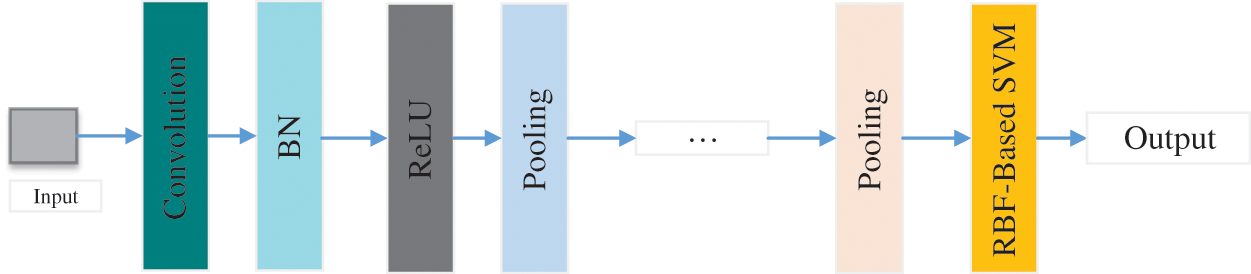

Alkhaleefah et al. [141] introduced a model combining a CNN with a support vector machine (SVM) classifier using a radial basis function (RBF) kernel for breast cancer image classification, as shown in Fig. 11. The method consisted of three steps. First, the CNN model was trained on breast cancer images. Second, the CNN model was fine-tuned on the data set. Finally, the features extracted by the CNN model were used as the input to the RBF-based SVM. They evaluated the proposed method using the confusion matrix.

Figure 11: The structure of CNN+SVM

Liu et al. [142] introduced the fully connected layer first CNN (FCLF-CNN) method, in which fully connected layers are placed before the convolution layers. They improved the transformation of structured data in two ways: the first used the fully connected layer as an encoder, and the second used an MSE loss. They tested different FCLF-CNN models and built an ensemble of four of them. The FCLF-CNN model achieved 99.28% accuracy, 98.65% sensitivity, and 99.57% specificity on the WDBC data set, and 98.71% accuracy, 97.60% sensitivity, and 99.43% specificity on the WBCD data set.

Gour et al. [143] designed a network (ResHist) to classify breast cancer. To obtain better classification results, they proposed a data augmentation technique that combined affine transformation, stain normalization, and image patch generation. Experiments showed that ResHist achieved better classification results than classic CNN models such as GoogLeNet and ResNet50. The method finally achieved 84.34% accuracy and a 90.49% F1-score.

Wang et al. [144] introduced a hybrid CNN and SVM model to classify breast cancer, using VGG16 as the backbone model. Because the data set was small, transfer learning was applied to the VGG16 network. They used multi-model voting and augmented the images, including by deformation. The accuracy of this method was 80.6%.

Yao et al. [145] introduced a new model to classify breast cancer. Features were extracted from breast cancer images by a CNN (DenseNet) and an RNN (LSTM). A perceptron attention mechanism from natural language processing (NLP) was then used to weight the extracted features. They used targeted dropout in the model instead of standard dropout. The model achieved 98.3% accuracy, 100% precision, 100% recall, and a 100% F1-score on the Bioimaging2015 data set.

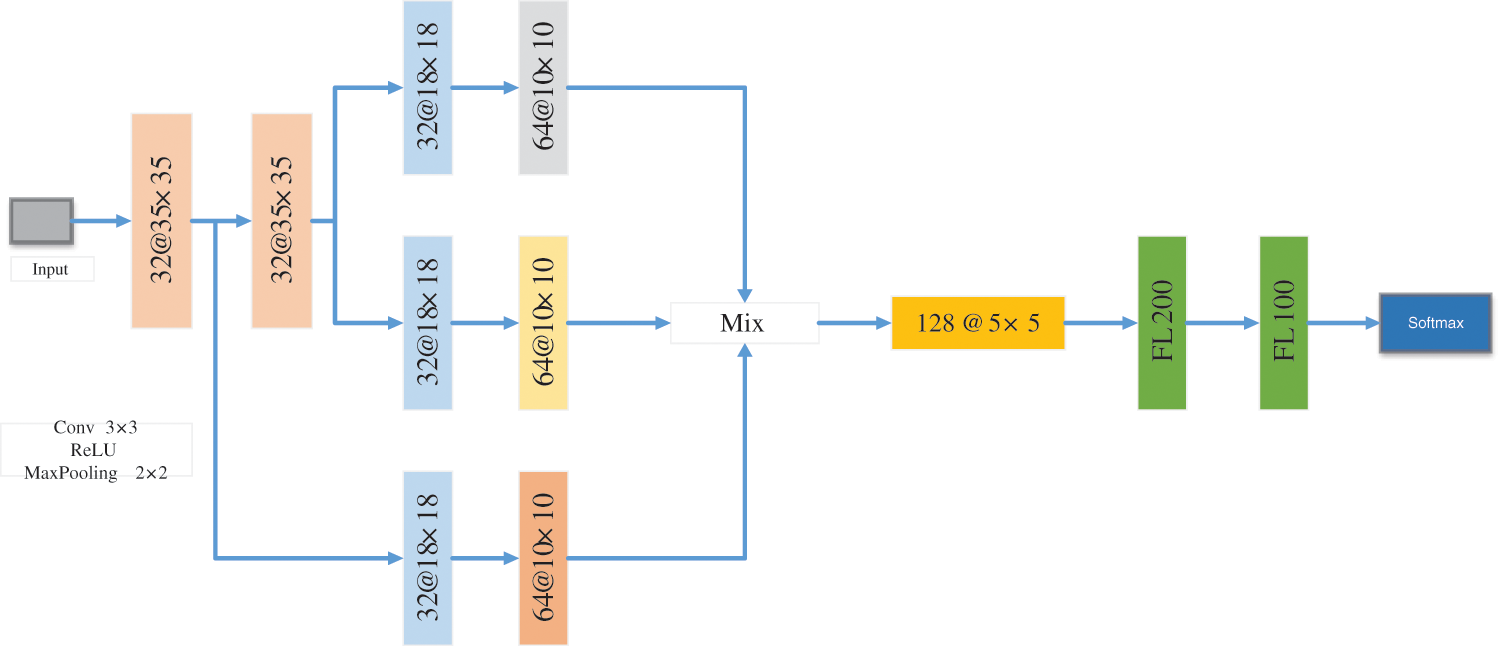

Ibraheem et al. [24] proposed a three-parallel CNN branch network (3PCNNB-Net) to classify breast cancer. The 3PCNNB-Net worked in three steps. In the first step, three identical parallel CNN branches extracted features. In the second step, an average layer merged the extracted features. In the third step, a flatten layer, BN, and a softmax layer served as the classification layer. The 3PCNNB-Net achieved 97.04% accuracy, 97.14% sensitivity, and 95.23% specificity.

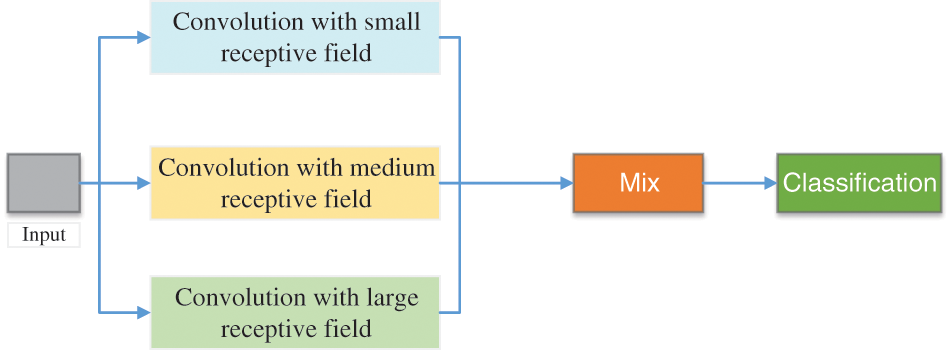

Agnes et al. [146] proposed a multiscale convolutional neural network (MA-CNN) to classify breast cancer, as presented in Fig. 12. They used dilated convolution, with three dilated convolutions of different sizes extracting features at different levels. These features were then combined.

Figure 12: The structure of MA-CNN
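Dilated convolution spaces the kernel taps d samples apart, so a K-tap kernel covers K + (K − 1)(d − 1) input positions without adding weights. The one-dimensional sketch below (the 2D case applies the same idea along each axis) is our own illustration; the dilation rates 1, 2, and 3 are hypothetical and are not the settings reported for MA-CNN [146].

```python
def effective_kernel_size(k: int, d: int) -> int:
    """Span of a k-tap kernel with dilation rate d: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (d - 1)


def dilated_conv1d(signal, kernel, d=1):
    """Valid 1D dilated convolution (cross-correlation): taps are spaced d samples apart."""
    span = effective_kernel_size(len(kernel), d)
    return [
        sum(kernel[j] * signal[i + j * d] for j in range(len(kernel)))
        for i in range(len(signal) - span + 1)
    ]


# Three 3-tap branches with hypothetical dilation rates 1, 2 and 3 see spans of
# 3, 5 and 7 samples while each keeps only 3 weights.
for d in (1, 2, 3):
    print(d, effective_kernel_size(3, d))

x = list(range(10))
print(dilated_conv1d(x, [1, 0, -1], d=2))  # each output is x[i] - x[i + 4]
```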

Zhang et al. [115] designed an 8-layer CNN for breast cancer classification (BDR-CNN-GCN). The network introduced three main innovations. First, they integrated BN and dropout. Second, they used rank-based stochastic pooling (RSP) instead of standard max or average pooling. Finally, the CNN was combined with a two-layer graph convolutional network (GCN).

Wang et al. [147] introduced a CNN-based breast cancer classification model. They selected Inception-v3 as the backbone model for extracting features from breast cancer images and applied transfer learning to it. The model achieved 0.886 sensitivity, 0.876 specificity, and 0.9468 AUC.

Saikia et al. [148] compared classic CNN models for breast cancer classification, namely VGG16, VGG19, ResNet-50, and GoogLeNet-V3, on a data set of 2120 breast cancer images.

Mewada et al. [149] introduced a new CNN-based model to classify breast cancer that incorporates a multi-resolution wavelet transform. Because they considered spectral features as important as spatial features for classification, they integrated features extracted by the Haar wavelet with spatial features. They tested the model on the BreakHis and BCC2015 data sets and obtained 97.58% and 97.45% accuracy, respectively.
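As an illustration of the kind of spectral decomposition involved, the sketch below computes one level of the orthonormal 2D Haar wavelet transform in pure Python, splitting an image into an approximation sub-band (LL) and three detail sub-bands. It is not the implementation of Mewada et al. [149]; the function names, the 1/√2 normalization, and the sub-band naming are our own assumptions (naming conventions differ between libraries), and the input must have even height and width.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_1d(values):
    """One level of the orthonormal 1D Haar transform on an even-length list.

    Returns (approximation, detail): pairwise sums and differences scaled by 1/sqrt(2).
    """
    approx = [(values[i] + values[i + 1]) / SQRT2 for i in range(0, len(values), 2)]
    detail = [(values[i] - values[i + 1]) / SQRT2 for i in range(0, len(values), 2)]
    return approx, detail


def haar_2d(image):
    """One-level 2D Haar decomposition into LL, LH, HL, HH (rows first, then columns)."""
    lows, highs = zip(*(haar_1d(row) for row in image))

    def columns(block):
        # Transform every column of `block`; return the low and high halves as row lists.
        cols = [haar_1d(list(col)) for col in zip(*block)]
        low = [list(r) for r in zip(*(c[0] for c in cols))]
        high = [list(r) for r in zip(*(c[1] for c in cols))]
        return low, high

    ll, lh = columns(lows)
    hl, hh = columns(highs)
    return ll, lh, hl, hh


ll, lh, hl, hh = haar_2d([[3.0] * 4 for _ in range(4)])
print(ll)  # a constant image puts all its energy into LL
```

The sub-bands could, for example, be flattened and concatenated with spatial CNN features; how Mewada et al. fused the two feature types is not detailed here.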

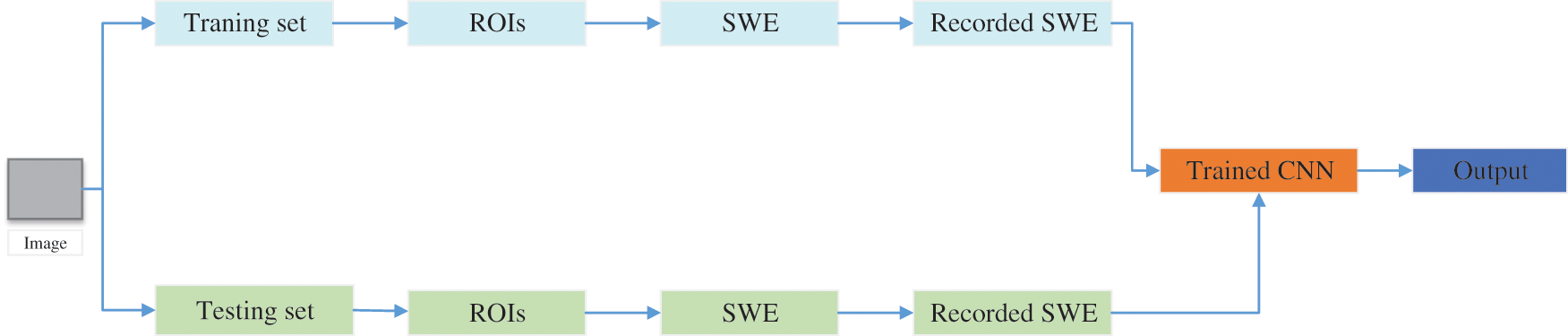

Zhou et al. [150] proposed a model for automatically classifying breast tumors as benign or malignant, as shown in Fig. 13. The model extracts features directly from the images, eliminating manual feature extraction and image segmentation. It combined shear wave elastography (SWE) with a CNN model. This SWE-CNN model achieved 95.7% specificity, 96.2% sensitivity, and 95.8% accuracy.

Figure 13: The structure of SWE+CNN

Lotter et al. [151] introduced a multi-scale CNN for the classification of breast cancer. First, a classifier was trained on lesions segmented from the images. The model was then trained using the extracted features. They tested the multi-scale CNN on the DDSM data set and obtained an AUROC of 0.92.

Vidyarthi et al. [152] introduced a classification model that combines CLAHE (contrast limited adaptive histogram equalization) with a CNN for microscopic images of breast cancer. They evaluated the image preprocessing both with and without the CNN, using the BreakHis data set. The hybrid CNN model gave better classification results, with an accuracy of about 90%.

Hijab et al. [153] used a classic CNN model (VGG16) with some modifications for breast cancer classification. First, they selected the pre-trained VGG16 as the backbone model. They then fine-tuned it, freezing all convolution layers except the last one. The weights were updated with stochastic gradient descent (SGD). The fine-tuned VGG16 achieved 0.97 accuracy and 0.98 AUC.

Kumar et al. [154] proposed a custom CNN model for breast cancer classification, consisting of six convolutional layers, six max-pooling layers, and two fully connected layers with the ReLU activation function. The model was tested on 7909 breast cancer images and achieved 84% efficiency.

Kousalya et al. [155] compared a custom CNN model with DenseNet201 for breast cancer classification. The custom CNN model had two convolutional layers, two pooling layers, one flatten layer, and two fully connected layers. They tested both models with different learning rates and batch sizes and concluded that the custom CNN model with Particle Swarm Optimization (PSO) yielded better specificity and precision.

Mikhailov et al. [156] proposed a novel CNN model to classify breast cancer, using max pooling and depth-wise separable convolution to improve classification performance. In addition, they tested different activation functions: ReLU, ELU, and Sigmoid. The model with ReLU achieved the best accuracy, 85%.

Karthik et al. [157] proposed a novel stacking ensemble CNN framework for breast cancer classification. They designed three stacked CNN models to extract features, and the features from these three models were ensembled to improve classification performance. The ensemble CNN model achieved 92.15% accuracy, a 92.21% F1-score, and 92.17% recall.

Nawaz et al. [158] proposed a novel CNN model with DenseNet as the backbone for the multi-class classification of breast cancer. Tested on the public BreakHis data set, the model achieved 95.4% accuracy for multi-class classification.

Deniz et al. [159] proposed a breast cancer classification model that combined transfer learning and CNN models. The pre-trained VGG16 and AlexNet were used to extract features, which were concatenated and then fed to an SVM for classification. The model achieved 91.30% accuracy.

Yeh et al. [160] compared CNN-based CAD and feature-based CAD for classifying breast cancer in DBT images. In the CNN-based CAD, LeNet was used as the feature extractor. In their experiments, the LeNet-based CAD yielded 87.12% (0.035) and 74.85% (0.122) accuracy. They concluded that the CNN-based CAD outperformed the feature-based CAD.

Gonçalves et al. [161] tested three CNN models, ResNet50, DenseNet201, and VGG16, to classify breast cancer, using transfer learning to improve classification performance. DenseNet201 achieved 91.67% accuracy, 83.3% specificity, 100% sensitivity, and a 0.92 F1-score.

Bayramoglu et al. [162] proposed two CNN models for breast cancer classification. The single-task CNN model classified malignancy, while the multi-task CNN (mt_CNN) model classified both malignancy and image magnification level. The single-task CNN and mt_CNN models yielded average recognition rates of 83.25% and 82.13%, respectively.

Alqahtani et al. [163] proposed a novel CNN model (msSE-ResNet) for breast cancer classification, which uses residual learning and features at different scales to improve the results. The msSE-ResNet achieved 88.87% accuracy and 0.9541 AUC.

CNN-based breast cancer classification still has some limitations. When large public data sets are used, these methods require long training times. Five-fold cross-validation was used to evaluate some of the proposed methods. Even though some results were very good, many results were still unsatisfactory. The details of these methods are given in Table 6.

4.2 Breast Cancer Detection

This section reviews the detection of breast cancer based on CNN [164]. Researchers use CNN models to locate candidate lesions in breast images.

Sohail et al. [165] introduced a CNN-based framework (MP-MitDet) for recognizing mitotic nuclei in pathological images of breast cancer. The framework can be divided into four steps: (1) label refinement, (2) selection of split regions, (3) blob analysis, and (4) cell refinement. The whole framework used an automatic labeler and the CNN model for training, and more regions were selected according to the blob area. MP-MitDet obtained 0.71 precision, 0.76 recall, 0.75 F1, and 0.78 area.

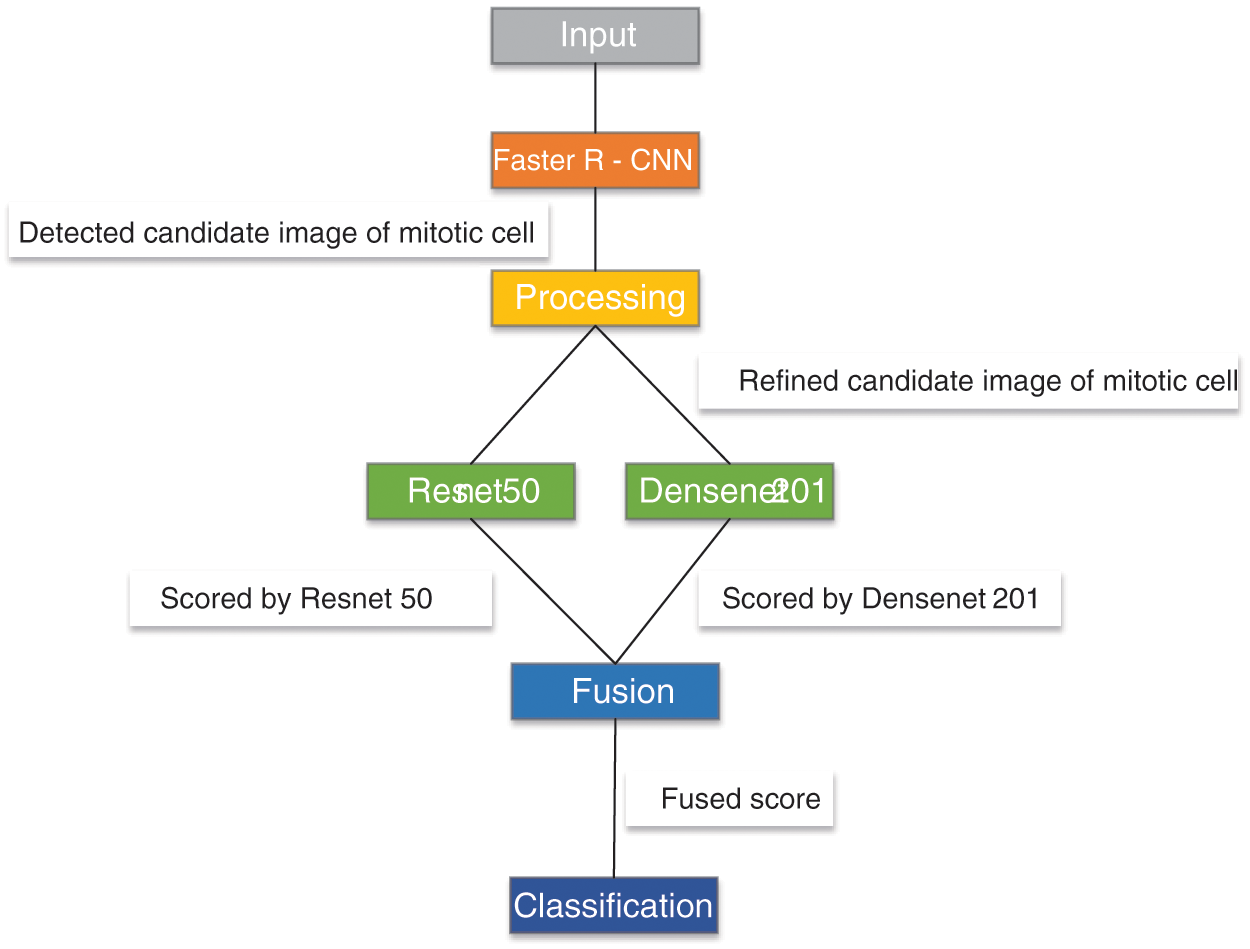

Mahmood et al. [166] proposed a low-cost CNN framework for automatic mitotic cell detection in breast cancer, as shown in Fig. 14. The framework combined a faster region-based convolutional neural network (Faster R-CNN) with a deep CNN. The model was evaluated on two public datasets, ICPR 2012 and ICPR 2014, yielding 0.841 recall, 0.858 F1, and 0.876 precision on ICPR 2012, and 0.583 recall, 0.691 F1, and 0.848 precision on ICPR 2014.
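Because F1 is the harmonic mean of precision and recall, F1 = 2PR/(P + R), the figures reported for [166] can be checked for internal consistency. The short Python check below recomputes F1 from the reported precision and recall and reproduces the reported values to three decimals.

```python
def f1_from_pr(precision, recall):
    """F1 score as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Precision and recall values reported above for [166].
print(round(f1_from_pr(0.876, 0.841), 3))  # ICPR 2012 → 0.858
print(round(f1_from_pr(0.848, 0.583), 3))  # ICPR 2014 → 0.691
```

The harmonic mean is dominated by the smaller of the two values, which is why the low ICPR 2014 recall pulls F1 well below the precision.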

Figure 14: The framework of Faster R-CNN + CNN

Wang et al. [167] introduced a model combining a CNN and an unsupervised extreme learning machine (US-ELM), CNN-GTD-ELM, to detect breast cancer in X-ray images. They designed an 8-layer CNN to extract features from the input images, combined these features with additional tumor features, and fed the combined features into the ELM.
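An ELM keeps its randomly initialized hidden-layer weights fixed and solves only for the output weights in closed form, which is what makes it fast to train. The sketch below is a basic supervised ELM with ridge-regularized least squares, not the unsupervised US-ELM of [167]; the fused input simply concatenates stand-in "CNN" features with stand-in tumor features, and all data, sizes, and names (`ELM`, `solve`, `reg`) are hypothetical.

```python
import math
import random


def solve(a, b):
    """Solve the square system a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


class ELM:
    """Basic supervised extreme learning machine (hypothetical sketch, not US-ELM)."""

    def __init__(self, n_hidden=20, reg=1e-3, seed=0):
        self.n_hidden, self.reg = n_hidden, reg
        self.rng = random.Random(seed)

    def _hidden(self, x):
        # Sigmoid activations of the fixed, randomly initialized hidden layer.
        return [1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))
                for w, b in zip(self.w, self.b)]

    def fit(self, xs, ys):
        d, n_h = len(xs[0]), self.n_hidden
        self.w = [[self.rng.uniform(-1, 1) for _ in range(d)] for _ in range(n_h)]
        self.b = [self.rng.uniform(-1, 1) for _ in range(n_h)]
        h = [self._hidden(x) for x in xs]
        # Only the output weights are learned: (H^T H + reg * I) beta = H^T y.
        hth = [[sum(row[i] * row[j] for row in h) + (self.reg if i == j else 0.0)
                for j in range(n_h)] for i in range(n_h)]
        hty = [sum(row[i] * y for row, y in zip(h, ys)) for i in range(n_h)]
        self.beta = solve(hth, hty)
        return self

    def predict(self, x):
        return sum(bi * hi for bi, hi in zip(self.beta, self._hidden(x)))


rng = random.Random(1)


def fused_sample(label):
    c = 1.0 if label > 0 else -1.0
    cnn_feat = [c + rng.uniform(-0.3, 0.3) for _ in range(2)]  # stand-in for CNN features
    tumor_feat = [0.5 * c + rng.uniform(-0.3, 0.3)]             # stand-in for extra tumor features
    return cnn_feat + tumor_feat                                 # fusion by concatenation


labels = [1, -1] * 20
xs = [fused_sample(y) for y in labels]
model = ELM().fit(xs, labels)
acc = sum((model.predict(x) > 0) == (y > 0) for x, y in zip(xs, labels)) / len(xs)
print(f"training accuracy: {acc:.2f}")
```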

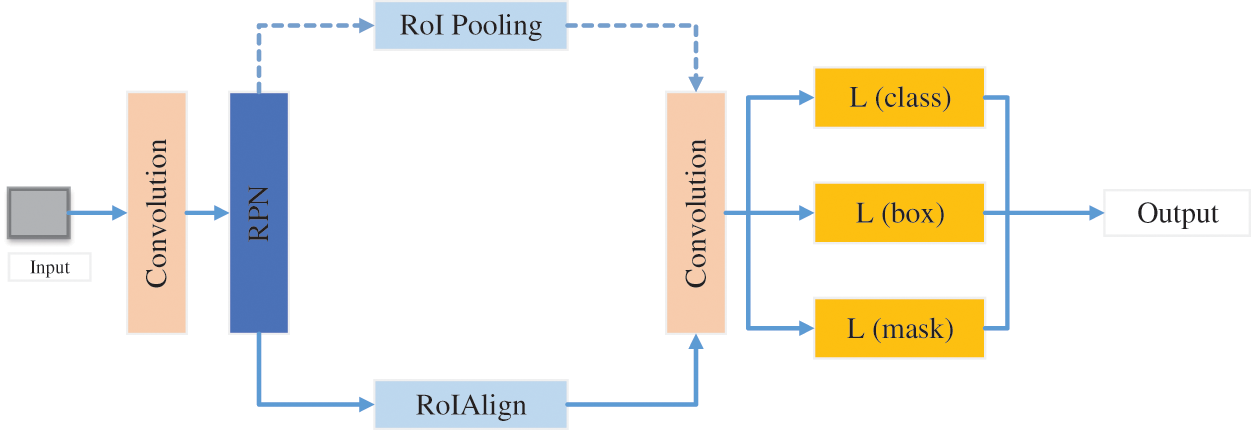

Chiao et al. [168] built a detection framework based on the mask region-based CNN (Mask R-CNN), as shown in Fig. 15. The method detected breast lesions and classified them as benign or malignant, achieving 0.75 average precision in detection and 85% accuracy in classification.

Figure 15: The structure of Mask R-CNN

Das et al. [169] introduced a CNN-based deep multiple instance learning (MIL) model for breast cancer detection. The model did not rely on expert-annotated regions in whole-slide images (WSIs). The MIL-CNN model achieved 96.63%, 93.06%, and 95.83% accuracy on the IUPHL, BreakHis, and UCSB data sets, respectively.
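Under the standard MIL assumption, a slide (bag) is positive if at least one of its patches (instances) is positive, which max pooling over patch scores captures. The Python sketch below aggregates hypothetical patch-level CNN probabilities; the pooling actually used in [169] is not described here and may differ.

```python
def bag_probability(instance_probs, pooling="max"):
    """Aggregate per-patch (instance) malignancy probabilities into one slide (bag) score."""
    if pooling == "max":
        return max(instance_probs)  # bag is positive if any instance is positive
    if pooling == "mean":
        return sum(instance_probs) / len(instance_probs)
    raise ValueError(f"unknown pooling: {pooling}")


# Hypothetical patch-level outputs of a CNN for two slides (bags).
benign_slide = [0.05, 0.10, 0.08, 0.12]
malignant_slide = [0.04, 0.07, 0.91, 0.10]  # only one patch looks malignant

for name, bag in [("benign", benign_slide), ("malignant", malignant_slide)]:
    p = bag_probability(bag)
    print(name, p, "-> malignant" if p >= 0.5 else "-> benign")
```

With mean pooling the malignant slide would score only 0.28, illustrating why a single small lesion can be missed when patch scores are averaged.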

Melekoodappattu et al. [11] introduced a breast cancer detection framework composed mainly of a CNN and image texture feature extraction. They designed a 9-layer CNN. In the extraction phase, they defined texture features and used Uniform Manifold Approximation and Projection (UMAP) to reduce their dimensionality. The multi-stage features were then integrated for detection. Tested on the MIAS and DDSM data sets, the model obtained 98% accuracy and 97.8% specificity on MIAS, and 97.9% accuracy and 98.3% specificity on DDSM.

Zainudin et al. [170] designed three CNN models, with 6, 13, and 17 layers, respectively, for detecting mitotic and amitotic breast cells. The 17-layer CNN performed best, achieving a loss of 15.50%, a true positive rate (TPR) of 80.55%, an accuracy of 84.49%, and a false negative rate (FNR) of 11.66%.

Wu et al. [171] introduced a deep fused fully convolutional neural network (FF-CNN) for breast cancer detection, with AlexNet as the backbone. Features from different levels were combined to improve detection, and a multi-step fine-tuning method was used to reduce overfitting. Tested on the ICPR 2014 data set, FF-CNN achieved better detection accuracy and faster detection speed.

Gonçalves et al. [172] introduced a new breast cancer detection framework that optimized CNN models with two bio-inspired optimization techniques, particle swarm optimization (PSO) and a genetic algorithm (GA). Three CNN models were used: DenseNet-201, VGG-16, and ResNet-50. The optimized networks detected breast cancer substantially better: the F1-score of VGG-16 increased from 0.66 to 0.92, that of ResNet-50 increased from 0.83 to 0.90, and all three optimized networks reached F1-scores of at least 0.90.
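PSO moves a swarm of candidate solutions, each pulled toward its own best position and toward the swarm's best position. The minimal sketch below minimizes a hypothetical stand-in for validation loss over two hyperparameters (log10 learning rate and dropout rate). In [172], each evaluation would instead require training a CNN, and the inertia and acceleration constants here are common textbook-style choices, not values from that paper.

```python
import random


def pso_minimize(f, bounds, n_particles=20, n_iter=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal particle swarm optimization over a box-constrained search space."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])  # pull toward personal best
                             + c2 * r2 * (gbest[d] - pos[i][d]))    # pull toward swarm best
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # clip to bounds
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def surrogate_loss(x):
    # Hypothetical smooth stand-in for validation loss, minimal at lr = 1e-3, dropout = 0.3.
    return (x[0] + 3.0) ** 2 + (x[1] - 0.3) ** 2


best, val = pso_minimize(surrogate_loss, bounds=[(-6.0, -1.0), (0.0, 0.8)])
print([round(v, 3) for v in best], round(val, 6))
```

A genetic algorithm would replace the velocity update with selection, crossover, and mutation, but would use the same evaluate-and-keep-the-best loop.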

Guan et al. [173] proposed two breast cancer detection models. The first used a Generative Adversarial Network (GAN) to generate images, which were then used to train a CNN; this model reached 98.85% accuracy. The second applied transfer learning with VGG-16 as the backbone and reached 91.48% accuracy. The authors also combined the two methods, but the combined model did not perform well.

Hadush et al. [174] proposed a CNN-based breast mass abnormality detection model to reduce manual labor costs. A CNN extracted the features, which were then fed into a Region Proposal Network (RPN) and the Region of Interest (ROI) head of Fast R-CNN for detection. The method achieved 92.2% AUC-ROC, 91.86% accuracy, and 94.67% sensitivity.
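A region proposal network typically produces many overlapping candidate boxes, and R-CNN-style detectors commonly prune them with greedy non-maximum suppression (NMS) based on intersection over union (IoU). The sketch below illustrates this generic step on hypothetical boxes; whether and how [174] applied NMS is not stated here.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy non-maximum suppression; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Hypothetical mass proposals: the first two overlap heavily, the third is elsewhere.
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # → [0, 2]
```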

Huang et al. [175] presented a lightweight CNN model, BM-Net, for breast cancer detection. BM-Net consisted of a MobileNet-V3 backbone for feature extraction and a bilinear structure; to save resources, only the fully connected layer was replaced with the bilinear structure. BM-Net achieved 0.88 accuracy and a score of 0.71.
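Bilinear pooling averages the outer product of each location's feature vector with itself over all spatial locations, giving a C × C descriptor whose size does not depend on the image size. The Python sketch below follows the common bilinear-CNN recipe (signed square root, then L2 normalization) on a hypothetical feature map; the exact bilinear structure of BM-Net may differ.

```python
import math


def bilinear_pool(features):
    """Bilinear pooling of a C x N feature map (C channels, N spatial locations).

    Returns the flattened C*C matrix of averaged outer products, followed by the
    signed square root and L2 normalization commonly used with bilinear CNNs.
    """
    c, n = len(features), len(features[0])
    pooled = [sum(features[i][k] * features[j][k] for k in range(n)) / n
              for i in range(c) for j in range(c)]
    pooled = [math.copysign(math.sqrt(abs(v)), v) for v in pooled]  # signed square root
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0
    return [v / norm for v in pooled]                                  # L2 normalization


# Hypothetical 2-channel feature map over 3 spatial locations (flattened backbone output).
fmap = [[1.0, 0.0, 2.0],
        [0.5, 1.0, 0.0]]
vec = bilinear_pool(fmap)
print(len(vec), [round(v, 3) for v in vec])
```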

Mahbub et al. [176] proposed a novel breast cancer detection model that combined a custom CNN, with six convolutional layers, five max-pooling layers, and two dense layers, with a fuzzy analytical hierarchy process model. The proposed model achieved 98.75% accuracy in detecting breast cancer.

Prajoth SenthilKumar et al. [177] used a pre-trained VGG16 model as the backbone for detecting and analyzing breast cancer in histology images based on variability, cell density, and tissue structure. The model achieved 88% accuracy.

Charan et al. [178] designed a 16-layer CNN for breast cancer detection, including six convolution layers, four average-pooling layers, and one fully connected layer. The public Mammograms-MIAS data set was used for training and testing, and the CNN achieved 65% accuracy.

Alanazi et al. [179] proposed a new CNN model for breast cancer detection and used it with three classifiers: K-nearest neighbor, logistic regression, and support vector machines. The model achieved 87% accuracy, 9% higher than other ML methods.
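Using a CNN as a feature extractor with a classical classifier on top is a recurring pattern in this section. As a minimal sketch of the K-nearest neighbor option, the Python code below classifies hypothetical two-dimensional "CNN features" by majority vote among the k nearest training vectors; `knn_predict` is an illustrative helper, and logistic regression or an SVM would take its place in the other variants.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Classify one feature vector by majority vote among its k nearest training vectors."""
    dists = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D CNN features for benign (0) and malignant (1) images.
train_x = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.25), (0.9, 0.8), (0.8, 0.95), (0.85, 0.9)]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_x, train_y, (0.2, 0.2)))    # → 0
print(knn_predict(train_x, train_y, (0.75, 0.85)))  # → 1
```

In practice, CNN features have hundreds or thousands of dimensions, and they are usually standardized before distances are computed.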

Gonçalves et al. [180] presented a novel breast cancer detection model. They proposed a new random forest surrogate, built with particle swarm optimization and genetic algorithms, to find better parameters for pre-trained CNN models. Three pre-trained models were used: ResNet50, DenseNet201, and VGG16. With the proposed surrogate, the F1-scores of DenseNet201 and ResNet50 improved from 0.92 to 1 and from 0.85 to 0.92, respectively.

Guan et al. [181] applied a generative adversarial network (GAN) to generate additional breast cancer images. Regions of interest (ROIs) taken from the images were used to train the GAN, and conventional augmentation methods such as scaling, shifting, and rotation were used for comparison. A newly designed CNN served as the classifier. In their experiments, GAN-based augmentation performed around 3.6% better than the other transformations.
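The conventional transformations used as the baseline are simple geometric operations. The sketch below implements horizontal flipping, 90° rotation, and shifting on a toy image stored as nested Python lists. Scaling is omitted because it requires interpolation, and a real pipeline would use an image-processing library instead of these hypothetical helpers.

```python
def hflip(img):
    """Mirror an image (list of rows) left-right."""
    return [row[::-1] for row in img]


def rot90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def shift(img, dx, fill=0):
    """Shift an image right by dx pixels (left if dx < 0), padding with `fill`."""
    w = len(img[0])
    if dx >= 0:
        return [[fill] * dx + row[:w - dx] for row in img]
    return [row[-dx:] + [fill] * (-dx) for row in img]


roi = [[1, 2, 3],
       [4, 5, 6]]  # hypothetical 2 x 3 ROI patch
augmented = [roi, hflip(roi), rot90(roi), shift(roi, 1)]
for a in augmented:
    print(a)
```

Unlike a GAN, these transforms only rearrange existing pixels, so they cannot produce lesion appearances that are absent from the training set.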

Sun et al. [182], inspired by human detection, proposed a novel model for breast cancer detection in mammographic images. Mathematical morphology was used to preprocess the images, template matching was used to locate suspected breast mass regions, and particle swarm optimization (PSO) was used to improve accuracy. The model achieved 85.82% accuracy, a 66.31% F1-score, 95.38% recall, and 50.81% precision.
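Template matching slides a template over the image and scores each position with a similarity measure. The sketch below uses zero-mean normalized cross-correlation (NCC), one common choice; the measure and template used in [182] are not specified here, and the image and template values are hypothetical.

```python
import math


def ncc(patch, template):
    """Zero-mean normalized cross-correlation between two equally sized 2-D lists."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mp, mt = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mp) * (b - mt) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mp) ** 2 for a in p) * sum((b - mt) ** 2 for b in t))
    return num / den if den else 0.0  # flat patches carry no pattern


def match_template(image, template):
    """Return (best score, (row, col) of the top-left corner) over all template positions."""
    th, tw = len(template), len(template[0])
    best_score, best_pos = -2.0, None
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_score, best_pos


template = [[1, 5],
            [5, 9]]                 # hypothetical bright, mass-like pattern
image = [[0] * 5 for _ in range(5)]
image[2][1], image[2][2] = 1, 5      # embed the pattern at row 2, column 1
image[3][1], image[3][2] = 5, 9
score, pos = match_template(image, template)
print(pos, round(score, 3))  # → (2, 1) 1.0
```

Because NCC subtracts the mean and divides by the spread, it is insensitive to uniform brightness and contrast changes, which is useful across mammograms acquired with different exposure settings.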

Chauhan et al. [183] compared three algorithms, CNN, KNN, and SVM, for breast cancer detection on a breast cancer data set. SVM achieved 98% accuracy, KNN 73%, and CNN 95%.

Gupta et al. [184] proposed a modified CNN model for breast cancer detection with ResNet as the backbone, modified in three steps. First, a dropout of 0.5 was used. Second, adaptive average pooling and adaptive max pooling were applied, followed by two batch normalization (BN) layers, dropout, and the fully connected layer. Third, the stride for down-sampling was placed in the 3 × 3 convolution. The modified CNN model achieved 99.75% accuracy, 99.18% precision, and 99.37% recall.
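With an output size of 1, adaptive average pooling and adaptive max pooling reduce each channel to its mean and its maximum. A common way to combine them (e.g., fastai's `AdaptiveConcatPool2d`) is to concatenate the two results before the BN, dropout, and fully connected layers; whether [184] combined them exactly this way is an assumption. The pure-Python sketch below shows the pooling on a hypothetical two-channel feature map.

```python
def concat_pool(feature_map):
    """Global average and global max pooling per channel, concatenated.

    feature_map: list of C channels, each a 2-D list (H x W). Returns a 2*C vector.
    """
    avg = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    mx = [max(max(row) for row in ch) for ch in feature_map]
    return avg + mx


fmap = [[[1.0, 3.0], [5.0, 7.0]],   # channel 0
        [[0.0, 2.0], [2.0, 0.0]]]   # channel 1
print(concat_pool(fmap))  # → [4.0, 1.0, 7.0, 2.0]
```

The average keeps information about how widespread a pattern is, while the maximum keeps its strongest local response; concatenating both doubles the input size of the following fully connected layer.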

Chouhan et al. [185] designed a novel framework, DFeBCD, for breast cancer detection. In DFeBCD, a CNN-based highway network was designed to select features, which were then used to train two classifiers: an SVM and an Emotional Learning inspired Ensemble Classifier (ELiEC). Evaluated with five-fold cross-validation, the framework achieved 80.5% accuracy.
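A highway layer mixes a transformed signal H(x) with its unchanged input x through a learned transform gate T(x): y = H(x)·T(x) + x·(1 − T(x)). The sketch below implements one such layer with a ReLU transform and a sigmoid gate on hypothetical weights. It shows the generic mechanism, not the specific feature-selection network of DFeBCD.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def highway_layer(x, w_h, b_h, w_t, b_t):
    """One highway layer: y = H(x) * T(x) + x * (1 - T(x)), element-wise.

    H is a ReLU dense layer and T a sigmoid "transform gate"; both weight
    matrices are square so that y has the same size as x.
    """
    def dense(w, b):
        return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]

    h = [max(0.0, v) for v in dense(w_h, b_h)]
    t = [sigmoid(v) for v in dense(w_t, b_t)]
    return [hi * ti + xi * (1 - ti) for hi, ti, xi in zip(h, t, x)]


x = [1.0, -2.0]
eye = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical weights
carry = highway_layer(x, eye, [0.0, 0.0], eye, [-20.0, -20.0])     # gate ~0: output ~ input
transform = highway_layer(x, eye, [0.0, 0.0], eye, [20.0, 20.0])   # gate ~1: output ~ ReLU(x)
print([round(v, 4) for v in carry], [round(v, 4) for v in transform])
```

Because the gate can pass the input through almost unchanged, deep stacks of such layers remain trainable, and the gate values indicate how much each feature is transformed.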

CNN-based breast cancer detection also has limitations. If the dataset is very large, training requires a large amount of computation and time; if it is very small, the model may overfit. In addition, most CNN-based breast cancer diagnosis models use a pre-trained CNN to extract features, which raises open questions: which layer gives the best features, and from which layer should features be extracted? A summary of CNN-based breast cancer detection is shown in Table 7.

4.3 Breast Cancer Segmentation

This section reviews CNN-based breast cancer segmentation, in which a CNN model segments the abnormal areas in breast images. Breast cancer image segmentation divides an image into several regions by comparing the similarity of image features. Breast segmentation also involves removing the background region, pectoral muscles, labels, artifacts, and other defects added during image acquisition. The segmented area can be compared with a manually segmented area to verify the accuracy of the segmentation method.
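The comparison with a manual segmentation is usually quantified by overlap metrics such as the Dice similarity coefficient (DSC) and the intersection over union (IoU), also called the Jaccard index (JI), which appear throughout this section. The sketch below computes both for two hypothetical binary masks. For a single pair of masks, DSC = 2·IoU/(1 + IoU); this identity does not hold for values averaged over a data set, so reported mean DSC and JI need not satisfy it.

```python
def dice_and_iou(pred, truth):
    """Dice similarity coefficient and IoU (Jaccard index) between two binary masks."""
    p = [v for row in pred for v in row]
    t = [v for row in truth for v in row]
    inter = sum(1 for a, b in zip(p, t) if a and b)
    size_p, size_t = sum(1 for a in p if a), sum(1 for b in t if b)
    union = size_p + size_t - inter
    dice = 2 * inter / (size_p + size_t) if size_p + size_t else 1.0  # both empty: perfect
    iou = inter / union if union else 1.0
    return dice, iou


auto = [[0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 1, 0]]     # hypothetical CNN output
manual = [[0, 1, 1, 0],
          [0, 1, 1, 1],
          [0, 0, 0, 0]]   # hypothetical expert annotation
d, i = dice_and_iou(auto, manual)
print(round(d, 4), round(i, 4))
```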

Chen et al. [186] introduced a new model for breast cancer segmentation consisting of two parts: a segmentation CNN and a quality assessment (QA) network based on ResNet-101. One structure of the QA network predicted the quality of each slice, and another gave the DSC value.

Tsochatzidis et al. [6] introduced a new CNN model to segment breast masses, in which each convolutional layer was modified and the loss function was extended with an extra term. They evaluated the method on the DDSM-400 and CBIS-DDSM datasets.

Lei et al. [56] developed a Mask Scoring R-CNN to segment breast tumors. The network consisted of five parts: the backbone network, the region proposal network, the R-CNN head, the mask head, and the mask scoring head. In this model, the mask scoring head directly correlates the quality of each segmented region of interest (ROI) with its region category.

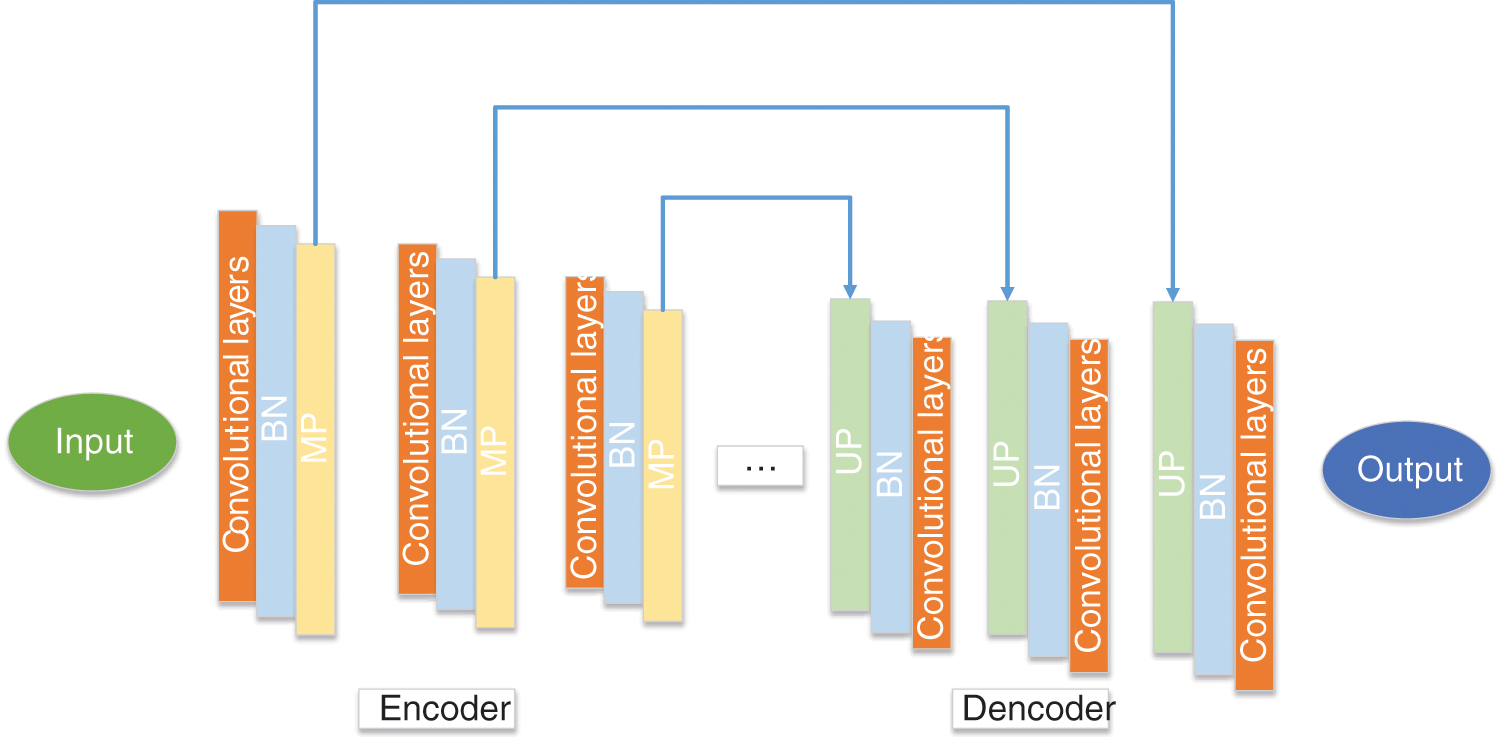

El Adoui et al. [187] proposed two CNN models to segment breast tumors in dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI). The first was based on SegNet, as presented in Fig. 16, and the second used U-Net as the backbone. 85% of the data was used for training and the remaining 15% for validation. The first method obtained 68.88% IoU, and the second obtained 76.14% IoU.
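SegNet's decoder upsamples using the max-pooling indices recorded by the corresponding encoder layer instead of learning the upsampling. The Python sketch below shows 2 × 2 max pooling that stores the argmax positions, followed by the matching unpooling step, on a hypothetical 4 × 4 input (even dimensions assumed); the helper names are illustrative.

```python
def max_pool_with_indices(x):
    """2x2 max pooling with stride 2 that also records the argmax position of each window."""
    pooled, indices = [], []
    for r in range(0, len(x), 2):
        prow, irow = [], []
        for c in range(0, len(x[0]), 2):
            window = [(x[r + i][c + j], (r + i, c + j)) for i in range(2) for j in range(2)]
            value, pos = max(window)
            prow.append(value)
            irow.append(pos)
        pooled.append(prow)
        indices.append(irow)
    return pooled, indices


def max_unpool(pooled, indices, shape):
    """Place each pooled value back at its recorded position; all other entries are zero."""
    out = [[0.0] * shape[1] for _ in range(shape[0])]
    for prow, irow in zip(pooled, indices):
        for value, (r, c) in zip(prow, irow):
            out[r][c] = value
    return out


x = [[1, 3, 2, 0],
     [4, 2, 1, 5],
     [0, 1, 7, 2],
     [6, 2, 3, 1]]
p, idx = max_pool_with_indices(x)
print(p)  # → [[4, 5], [6, 7]]
print(max_unpool(p, idx, (4, 4)))
```

The resulting sparse map is then densified by trainable convolutions in the decoder; storing only the indices keeps boundary locations without keeping full encoder feature maps, which is the memory saving SegNet was designed for.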

Figure 16: The structure of SegNet

Kakileti et al. [188] introduced a cascaded CNN architecture for breast cancer segmentation, using a 5-stage V-Net as the main encoder-decoder structure. To improve accuracy, the model used strided convolutions, deconvolutions, and PReLU activations. The method obtained an overall Dice of 91.6%, with 93.3% for frontal, 89.5% for lateral, and 91.9% for oblique views.

Kumar et al. [189] introduced a dual-layered CNN model (DL-CNN) for breast cancer region recognition and segmentation. The first CNN layer identified possible regions, and the second segmented them and reduced false positives. Tested on breast image data sets, the model obtained a true positive rate of 0.9726 at 0.39706 false positives per image.

Ranjbarzadeh et al. [90] proposed a CNN with multiple feature extraction paths (MRFE-CNN) for breast cancer segmentation, as shown in Fig. 17. To avoid a deep structure, they enhanced the data set. The method improves the detection of breast tumor boundaries. Evaluated on the Mini-MIAS and DDSM data sets, MRFE-CNN obtained accuracies of 0.936, 0.890, and 0.871 for normal, benign, and malignant cases on Mini-MIAS, and 0.944, 0.915, and 0.892 on DDSM.

Figure 17: The framework of MRFE-CNN

Atrey et al. [190] proposed a new automatic CNN segmentation system for breast lesions, based mainly on their self-made CNN model. The model was evaluated on a bimodal database of mammography (MG) and ultrasound (US) images, obtaining a Dice similarity coefficient (DSC) of 0.64 and a Jaccard index (JI) of 0.53 for MG, and a DSC of 0.77 and a JI of 0.64 for US.

Irfan et al. [191] introduced a segmentation model based on a Dilated Semantic Segmentation Network (Di-CNN) for ultrasound breast lesion images. The model was composed mainly of two CNNs: a DenseNet201 used for transfer learning and a self-made 24-layer CNN. The features extracted from the two CNNs were fused, and an SVM served as the classifier. The model yielded 98.9% accuracy.
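Dilated convolution spaces the kernel taps `dilation` samples apart, enlarging the receptive field to k + (k − 1)(d − 1) without adding parameters or down-sampling, which is why it is popular for semantic segmentation. The sketch below uses one dimension for brevity on a hypothetical signal; Di-CNN applies the same idea in two dimensions, and its actual dilation rates are not given here.

```python
def dilated_conv1d(x, kernel, dilation=1):
    """Valid 1-D convolution (cross-correlation) with gaps of `dilation` between kernel taps."""
    span = (len(kernel) - 1) * dilation + 1  # effective kernel size
    return [sum(k * x[i + j * dilation] for j, k in enumerate(kernel))
            for i in range(len(x) - span + 1)]


def effective_kernel_size(k, dilation):
    return k + (k - 1) * (dilation - 1)


signal = [0, 1, 2, 3, 4, 5, 6, 7]
print(dilated_conv1d(signal, [1, 1, 1], dilation=1))  # → [3, 6, 9, 12, 15, 18]
print(dilated_conv1d(signal, [1, 1, 1], dilation=2))  # → [6, 9, 12, 15]
print(effective_kernel_size(3, 2))                    # → 5
```

Both calls use the same three weights, but the dilated version sees five input samples per output.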

Su et al. [192] designed a fast-scanning deep convolutional neural network (FCNN) for breast cancer segmentation that reduced the amount of computation and the computation time: segmenting a 1000 × 1000 image took only 2.3 s. The FCNN obtained 0.91 precision, 0.82 recall, and an F1-score of 0.85.

He et al. [193] proposed a network combining CNN models and transfer learning to classify and segment breast cancer. AlexNet and GoogleNet were used as backbone feature extractors, with an SVM as the classifier. The segmentation results of this model were similar to those of professional pathologists.

Soltani et al. [194] introduced a new model for automatic breast cancer segmentation based on Mask R-CNN and implemented with the Detectron2 framework. Tested on the INbreast data set, the model achieved an 81.05% F1-score and 95.87% precision.

Min et al. [195] introduced a fully integrated CAD system for the automatic segmentation of breast cancer. The system combined a detection-segmentation method, based mainly on Mask R-CNN, with pseudo-color image generation. Tested on the public INbreast data set, the system yielded a Dice similarity index of 0.88.

Arora et al. [196] proposed RGU-Net for breast cancer segmentation. RGU-Net adds residual connections and group convolutions to U-Net and uses encoder and decoder blocks of several different sizes. A conditional random field was used to analyze the boundaries. Evaluated on the INbreast data set, the model achieved a Dice of 92.6%.
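Group convolution splits the input channels into g groups and convolves each group separately, so a k × k convolution needs (C_in/g)·C_out·k² weights instead of C_in·C_out·k². The sketch below computes parameter counts for hypothetical channel sizes; RGU-Net's actual configuration is not given here.

```python
def conv_params(c_in, c_out, k, groups=1, bias=True):
    """Number of learnable parameters of a 2-D convolution with a k x k kernel."""
    assert c_in % groups == 0 and c_out % groups == 0, "channels must divide evenly into groups"
    weights = (c_in // groups) * c_out * k * k
    return weights + (c_out if bias else 0)


standard = conv_params(64, 128, 3)           # → 73856
grouped = conv_params(64, 128, 3, groups=4)  # → 18560
print(standard, grouped)
```

With four groups, the weight count drops by a factor of four (the bias terms are unchanged), which is how group convolution keeps an encoder-decoder such as U-Net lighter.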

Spuhler et al. [197] introduced a new CNN method to segment breast cancer in DCE-MRI. Manual regions of interest were delineated by an expert radiologist (R1), a resident (R2), and another expert radiologist (R3). Using R1 as the reference, the model achieved a Dice of 0.71.

Atrey et al. [198] proposed a customized nine-layer CNN for breast cancer segmentation in MG and US images, including two convolutional layers, one max-pooling layer, one ReLU layer, one fully connected layer, one softmax layer, and a classification layer. The model achieved a DSC of 0.64 and a JI of 0.53 for MG, and a DSC of 0.77 and a JI of 0.64 for US.

Sumathi et al. [199] proposed a new breast cancer segmentation system that used artificial bee colony optimization with fuzzy clustering to select features and a CNN as the classifier. This hybrid system achieved 98% segmentation accuracy.

Xu et al. [200] designed an 8-layer CNN for breast cancer segmentation, consisting of three convolution layers, three pooling layers, a fully connected layer, and a softmax layer. This customized CNN yielded a Jaccard similarity index (JSI) of 85.1%.
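The spatial size after each convolution or pooling layer follows floor((W − K + 2P)/S) + 1, which shows how such a small stack shrinks the feature maps before the fully connected layer. The sketch below applies this to a hypothetical configuration of three 5 × 5 convolutions with "same" padding, each followed by 2 × 2 max pooling, on a 64 × 64 input; the actual kernel and input sizes in [200] are not stated here.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer: floor((W - K + 2P) / S) + 1."""
    return (size - kernel + 2 * padding) // stride + 1


# Hypothetical layers as (name, kernel, stride, padding).
layers = [("conv1", 5, 1, 2), ("pool1", 2, 2, 0),
          ("conv2", 5, 1, 2), ("pool2", 2, 2, 0),
          ("conv3", 5, 1, 2), ("pool3", 2, 2, 0)]
size = 64
for name, k, s, p in layers:
    size = conv_out(size, k, s, p)
    print(f"{name}: {size} x {size}")
# The final 8 x 8 maps are flattened into the fully connected layer, then softmax.
```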

Guo et al. [201] proposed a novel network to segment breast cancer. They designed a 6-layer CNN with two convolutional layers, two pooling layers, and two fully connected layers, whose extracted features were fed to an SVM. The combined CNN-SVM achieved a sensitivity of 0.92, a DSC of 0.93, and a positive predictive value (PPV) of 0.95.

Cui et al. [202] proposed a novel patch-based CNN model for the detection of breast cancer based on MRI. They designed a 7-layer CNN model, which consisted of four convolutional layers, two max-pooling layers, and one fully connected layer. The 7-layer CNN model achieved a 95.19% Dice ratio.

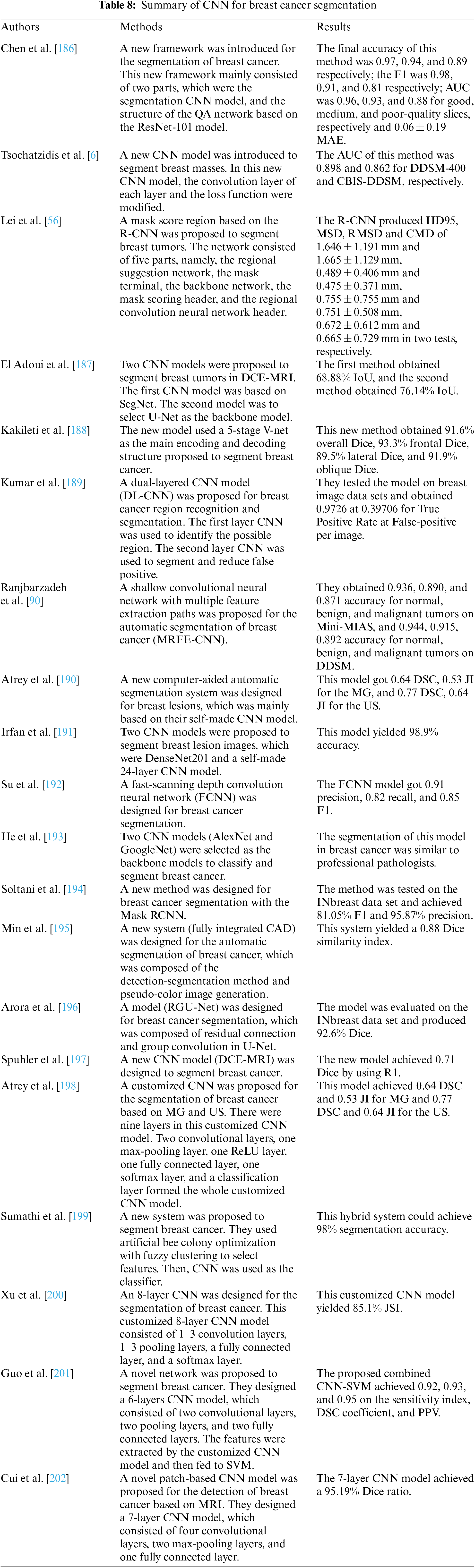

CNN-based breast cancer segmentation also has limitations. These methods were evaluated on public datasets, whose images must be labeled by many expert doctors. Moreover, unsupervised learning has not yet performed well in breast cancer segmentation. A summary of CNN-based breast cancer segmentation is shown in Table 8.

Recently, CNN-based breast cancer diagnosis has made rapid progress, which has led more and more researchers to devote effort to it. We have completed a comprehensive review of CNN-based breast cancer diagnosis after reviewing a large number of recent papers. In this paper, readers can not only see the CNN-based diagnostic methods for breast cancer developed in recent decades but also learn the advantages and disadvantages of these methods and future research directions. The main contributions of this survey are as follows: (i) a large number of major papers on CNN-based breast cancer diagnosis are reviewed to provide a comprehensive survey; (ii) the advantages and disadvantages of these state-of-the-art methods are presented; (iii) significant findings are presented to show readers the opportunities available in this research area; (iv) future research directions and critical challenges for CNN-based breast cancer diagnosis are given.

Based on the papers we have reviewed, many techniques have been used to boost CNN models for breast cancer diagnosis. Many researchers used pre-trained or customized CNN models to extract features from the input. To reduce training time and computational cost, some researchers replaced the last layers of the CNN with other techniques, such as SVM and ELM. In some papers, researchers selected more than one CNN model to extract different features, which were then fused and fed to classifiers to improve performance.
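The pattern of fusing features from more than one CNN and passing them to an SVM can be sketched as follows. The features are hypothetical stand-ins for the outputs of two backbones, fusion is plain concatenation, and the linear SVM is trained with the Pegasos stochastic sub-gradient method, a substitute for whatever SVM solver the reviewed papers used. For simplicity, the bias is regularized together with the weights.

```python
import random


def pegasos_svm(xs, ys, lam=0.01, epochs=500, seed=0):
    """Linear SVM trained with the Pegasos stochastic sub-gradient method (labels in {-1, +1}).

    A constant 1 is appended to each vector so the last weight acts as a bias term.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], y) for x, y in zip(xs, ys)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)                              # decreasing step size
            margin = y * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]           # regularization (shrink) step
            if margin < 1.0:                                   # hinge loss active: move toward y*x
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


def svm_predict(w, x):
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical features of the same four images from two different CNN backbones.
feats_a = [[0.9, 0.1], [0.8, 0.3], [0.2, 0.7], [0.1, 0.9]]
feats_b = [[0.7], [0.6], [0.2], [0.3]]
labels = [1, 1, -1, -1]                                # +1 malignant, -1 benign
fused = [a + b for a, b in zip(feats_a, feats_b)]      # feature-level fusion by concatenation
w = pegasos_svm(fused, labels)
print([svm_predict(w, x) for x in fused])
```

Only the small SVM is trained here; the backbones would stay frozen, which is the source of the savings in training time mentioned above.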

Although CNN-based breast cancer diagnosis has achieved great success, some limitations remain. (i) There are too few good data sets. A good public breast cancer dataset involves many aspects, such as professional medical knowledge, privacy, funding, and dataset size. (ii) When the data set is very large, a CNN-based model needs a large amount of computation and time to complete the detection. (iii) Small data sets easily lead to overfitting. (iv) Most CNN-based breast cancer diagnosis models use a pre-trained CNN to extract features, but which layer gives the best features, and from which layer should features be extracted? These problems have not been well solved in recent studies.

Although this paper reviews many recent research papers, it still has some limitations. First, this survey only considers CNNs for breast cancer diagnosis, whereas other CAD methods for breast cancer diagnosis also exist. Second, it only focuses on two-dimensional images.

In the future, researchers can make more use of unlabeled data sets for breast cancer detection, since unlabeled datasets are less expensive and more numerous than labeled ones. Researchers can also try newer methods for image feature extraction, such as EL, TL, xDNNs, U-Net, and transformers.

This paper reviews CNN-based breast cancer diagnosis technology of recent years. As CNN technology progresses, the diagnostic accuracy for breast cancer continues to improve. We summarize the limitations and future research directions of CNN-based breast cancer diagnosis. Although CNN-based breast cancer diagnosis has achieved great success and can serve as an auxiliary means to help doctors diagnose breast cancer, much remains to be improved.

Funding Statement: This paper is partially supported by Medical Research Council Confidence in Concept Award, UK (MC_PC_17171); Royal Society International Exchanges Cost Share Award, UK (RP202G0230); British Heart Foundation Accelerator Award, UK (AA/18/3/34220); Hope Foundation for Cancer Research, UK (RM60G0680); Global Challenges Research Fund (GCRF), UK (P202PF11); Sino-UK Industrial Fund, UK (RP202G0289); LIAS Pioneering Partnerships Award, UK (P202ED10); Data Science Enhancement Fund, UK (P202RE237).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Bray, F., Laversanne, M., Weiderpass, E., Soerjomataram, I. (2021). The ever-increasing importance of cancer as a leading cause of premature death worldwide. Cancer, 127(16), 3029–3030. https://doi.org/10.1002/cncr.33587

2. Desai, M., Shah, M. (2021). An anatomization on breast cancer detection and diagnosis employing multi-layer perceptron neural network (MLP) and convolutional neural network (CNN). Clinical eHealth, 4, 1–11. https://doi.org/10.1016/j.ceh.2020.11.002

3. Beeravolu, A. R., Azam, S., Jonkman, M., Shanmugam, B., Kannoorpatti, K. et al. (2021). Preprocessing of breast cancer images to create datasets for deep-CNN. IEEE Access, 9, 33438–33463. https://doi.org/10.1109/ACCESS.2021.3058773

4. Sung, H., Ferlay, J., Siegel, R. L., Laversanne, M., Soerjomataram, I. et al. (2021). Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA: A Cancer Journal for Clinicians, 71(3), 209–249. https://doi.org/10.3322/caac.21660

5. Heenaye-Mamode Khan, M., Boodoo-Jahangeer, N., Dullull, W., Nathire, S., Gao, X. et al. (2021). Multi-class classification of breast cancer abnormalities using deep convolutional neural network (CNN). PLoS One, 16(8), e0256500. https://doi.org/10.1371/journal.pone.0256500

6. Tsochatzidis, L., Koutla, P., Costaridou, L., Pratikakis, I. (2021). Integrating segmentation information into CNN for breast cancer diagnosis of mammographic masses. Computer Methods and Programs in Biomedicine, 200, 105913. https://doi.org/10.1016/j.cmpb.2020.105913

7. Xie, X. Z., Niu, J. W., Liu, X. F., Li, Q. F., Wang, Y. et al. (2022). DG-CNN: Introducing margin information into convolutional neural networks for breast cancer diagnosis in ultrasound images. Journal of Computer Science and Technology, 37(2), 277–294. https://doi.org/10.1007/s11390-020-0192-0

8. Waks, A. G., Winer, E. P. (2019). Breast cancer treatment: A review. Jama, 321(3), 288–300. https://doi.org/10.1001/jama.2018.19323

9. Zuluaga-Gomez, J., Al Masry, Z., Benaggoune, K., Meraghni, S., Zerhouni, N. (2021). A CNN-based methodology for breast cancer diagnosis using thermal images. Computer Methods in Biomechanics and Biomedical Engineering: Imaging & Visualization, 9(2), 131–145. https://doi.org/10.1080/21681163.2020.1824685

10. Sannasi Chakravarthy, S., Bharanidharan, N., Rajaguru, H. (2022). Multi-deep CNN based experimentations for early diagnosis of breast cancer. IETE Journal of Research, 1–16. https://doi.org/10.1080/03772063.2022.2028584

11. Melekoodappattu, J. G., Dhas, A. S., Kandathil, B. K., Adarsh, K. (2022). Breast cancer detection in mammogram: Combining modified CNN and texture feature based approach. Journal of Ambient Intelligence and Humanized Computing, 1–10. https://doi.org/10.1007/s12652-022-03713-3

12. Lu, J., Wu, Y., Xiong, Y., Zhou, Y., Zhao, Z. et al. (2022). Breast tumor computer-aided detection system based on magnetic resonance imaging using convolutional neural network. Computer Modeling in Engineering & Sciences, 130(1), 365–377. https://doi.org/10.32604/cmes.2022.017897

13. Ribli, D., Horváth, A., Unger, Z., Pollner, P., Csabai, I. (2018). Detecting and classifying lesions in mammograms with deep learning. Scientific Reports, 8(1), 1–7. https://doi.org/10.1038/s41598-018-22437-z

14. Hance, K. W., Anderson, W. F., Devesa, S. S., Young, H. A., Levine, P. H. (2005). Trends in inflammatory breast carcinoma incidence and survival: The surveillance, epidemiology, and end results program at the National Cancer Institute. Journal of the National Cancer Institute, 97(13), 966–975. https://doi.org/10.1093/jnci/dji172

15. Tabar, L., Gad, A., Holmberg, L., Ljungquist, U., Group, K. C. P. et al. (1985). Reduction in mortality from breast cancer after mass screening with mammography: Randomised trial from the breast cancer screening working group of the Swedish National Board of Health and Welfare. The Lancet, 325(8433), 829–832. https://doi.org/10.1016/S0140-6736(85)92204-4

16. Sharma, G. N., Dave, R., Sanadya, J., Sharma, P., Sharma, K. (2010). Various types and management of breast cancer: An overview. Journal of Advanced Pharmaceutical Technology & Research, 1(2), 109–126.

17. McKinney, S. M., Sieniek, M., Godbole, V., Godwin, J., Antropova, N. et al. (2020). International evaluation of an AI system for breast cancer screening. Nature, 577(7788), 89–94. https://doi.org/10.1038/s41586-019-1799-6

18. Zebari, D. A., Ibrahim, D. A., Zeebaree, D. Q., Mohammed, M. A., Haron, H. et al. (2021). Breast cancer detection using mammogram images with improved multi-fractal dimension approach and feature fusion. Applied Sciences, 11(24), 12122. https://doi.org/10.3390/app112412122

19. Mihaylov, I., Nisheva, M., Vassilev, D. (2018). Machine learning techniques for survival time prediction in breast cancer. International Conference on Artificial Intelligence: Methodology, Systems, and Applications, pp. 186–194. Varna, Bulgaria, Springer.

20. Zhu, Z., Harowicz, M., Zhang, J., Saha, A., Grimm, L. J. et al. (2018). Deep learning-based features of breast MRI for prediction of occult invasive disease following a diagnosis of ductal carcinoma in situ: Preliminary data. In: Medical imaging 2018: Computer-aided diagnosis, vol. 10575, 105752W. Houston, Texas, USA, International Society for Optics and Photonics.

21. Grimm, L. J., Ryser, M. D., Partridge, A. H., Thompson, A. M., Thomas, J. S. et al. (2017). Surgical upstaging rates for vacuum assisted biopsy proven DCIS: Implications for active surveillance trials. Annals of Surgical Oncology, 24(12), 3534–3540. https://doi.org/10.1245/s10434-017-6018-9

22. Veta, M., Pluim, J. P., van Diest, P. J., Viergever, M. A. (2014). Breast cancer histopathology image analysis: A review. IEEE Transactions on Biomedical Engineering, 61(5), 1400–1411. https://doi.org/10.1109/TBME.2014.2303852

23. Zebari, D. A., Ibrahim, D. A., Zeebaree, D. Q., Haron, H., Salih, M. S. et al. (2021). Systematic review of computing approaches for breast cancer detection based computer aided diagnosis using mammogram images. Applied Artificial Intelligence, 35(15), 2157–2203. https://doi.org/10.1080/08839514.2021.2001177

24. Ibraheem, A. M., Rahouma, K. H., Hamed, H. F. (2021). 3PCNNB-Net: Three parallel CNN branches for breast cancer classification through histopathological images. Journal of Medical and Biological Engineering, 41(4), 494–503. https://doi.org/10.1007/s40846-021-00620-4

25. Mokhatri-Hesari, P., Montazeri, A. (2020). Health-related quality of life in breast cancer patients: Review of reviews from 2008 to 2018. Health and Quality of Life Outcomes, 18(1), 1–25. https://doi.org/10.1186/s12955-020-01591-x

26. Köşüş, N., Köşüş, A., Duran, M., Simavlı, S., Turhan, N. (2010). Comparison of standard mammography with digital mammography and digital infrared thermal imaging for breast cancer screening. Journal of the Turkish German Gynecological Association, 11(3), 152–157. https://doi.org/10.5152/jtgga.2010.24

27. Murtaza, G., Shuib, L., Abdul Wahab, A. W., Mujtaba, G., Nweke, H. F. et al. (2020). Deep learning-based breast cancer classification through medical imaging modalities: State of the art and research challenges. Artificial Intelligence Review, 53(3), 1655–1720. https://doi.org/10.1007/s10462-019-09716-5

28. Debelee, T. G., Schwenker, F., Ibenthal, A., Yohannes, D. (2020). Survey of deep learning in breast cancer image analysis. Evolving Systems, 11(1), 143–163. https://doi.org/10.1007/s12530-019-09297-2

29. Liu, J., Zarshenas, A., Qadir, A., Wei, Z., Yang, L. et al. (2018). Radiation dose reduction in digital breast tomosynthesis (DBT) by means of deep-learning-based supervised image processing. In: Medical imaging 2018: Image processing, vol. 10574, 105740F. Houston, Texas, USA, International Society for Optics and Photonics. [Google Scholar]

30. Zhao, Z., Wu, F. (2010). Minimally-invasive thermal ablation of early-stage breast cancer: A systemic review. European Journal of Surgical Oncology, 36(12), 1149–1155. https://doi.org/10.1016/j.ejso.2010.09.012 [Google Scholar] [PubMed] [CrossRef]

31. Jalalian, A., Mashohor, S. B., Mahmud, H. R., Saripan, M. I. B., Ramli, A. R. B. et al. (2013). Computer-aided detection/diagnosis of breast cancer in mammography and ultrasound: A review. Clinical Imaging, 37(3), 420–426. https://doi.org/10.1016/j.clinimag.2012.09.024 [Google Scholar] [PubMed] [CrossRef]

32. Gilbert, F. J., Tucker, L., Gillan, M. G., Willsher, P., Cooke, J. et al. (2015). Accuracy of digital breast tomosynthesis for depicting breast cancer subgroups in a UK retrospective reading study (TOMMY trial). Radiology, 277(3), 697–706. https://doi.org/10.1148/radiol.2015142566 [Google Scholar] [PubMed] [CrossRef]

33. Antropova, N. O., Abe, H., Giger, M. L. (2018). Use of clinical MRI maximum intensity projections for improved breast lesion classification with deep convolutional neural networks. Journal of Medical Imaging, 5(1), 014503. https://doi.org/10.1117/1.JMI.5.1.014503 [Google Scholar] [PubMed] [CrossRef]

34. Griebsch, I., Brown, J., Boggis, C., Dixon, A., Dixon, M. et al. (2006). Cost-effectiveness of screening with contrast enhanced magnetic resonance imaging vs X-ray mammography of women at a high familial risk of breast cancer. British Journal of Cancer, 95(7), 801–810. https://doi.org/10.1038/sj.bjc.6603356 [Google Scholar] [PubMed] [CrossRef]

35. Kuhl, C. K., Schrading, S., Bieling, H. B., Wardelmann, E., Leutner, C. C. et al. (2007). MRI for diagnosis of pure ductal carcinoma in situ: A prospective observational study. The Lancet, 370(9586), 485–492. https://doi.org/10.1016/S0140-6736(07)61232-X

36. Kelly, K. M., Dean, J., Comulada, W. S., Lee, S. J. (2010). Breast cancer detection using automated whole breast ultrasound and mammography in radiographically dense breasts. European Radiology, 20(3), 734–742. https://doi.org/10.1007/s00330-009-1588-y

37. Shin, S. Y., Lee, S., Yun, I. D., Kim, S. M., Lee, K. M. (2018). Joint weakly and semi-supervised deep learning for localization and classification of masses in breast ultrasound images. IEEE Transactions on Medical Imaging, 38(3), 762–774. https://doi.org/10.1109/TMI.2018.2872031

38. Byra, M., Sznajder, T., Korzinek, D., Piotrzkowska-Wróblewska, H., Dobruch-Sobczak, K. et al. (2019). Impact of ultrasound image reconstruction method on breast lesion classification with deep learning. Iberian Conference on Pattern Recognition and Image Analysis, pp. 41–52. Madrid, Spain, Springer.

39. Fotin, S. V., Yin, Y., Haldankar, H., Hoffmeister, J. W., Periaswamy, S. (2016). Detection of soft tissue densities from digital breast tomosynthesis: Comparison of conventional and deep learning approaches. In: Medical imaging 2016: Computer-aided diagnosis, vol. 9785, pp. 228–233. Bellingham, Washington, USA, SPIE.

40. Zhang, J., Ghate, S. V., Grimm, L. J., Saha, A., Cain, E. H. et al. (2018). Convolutional encoder-decoder for breast mass segmentation in digital breast tomosynthesis. In: Medical imaging 2018: Computer-aided diagnosis, vol. 10575, 105752V. Houston, Texas, USA, International Society for Optics and Photonics.

41. Hooley, R. J., Durand, M. A., Philpotts, L. E. (2017). Advances in digital breast tomosynthesis. American Journal of Roentgenology, 208(2), 256–266. https://doi.org/10.2214/AJR.16.17127

42. Samala, R. K., Chan, H. P., Hadjiiski, L., Helvie, M. A., Wei, J. et al. (2016). Mass detection in digital breast tomosynthesis: Deep convolutional neural network with transfer learning from mammography. Medical Physics, 43(12), 6654–6666. https://doi.org/10.1118/1.4967345

43. Pang, T., Wong, J. H. D., Ng, W. L., Chan, C. S. (2020). Deep learning radiomics in breast cancer with different modalities: Overview and future. Expert Systems with Applications, 158, 113501. https://doi.org/10.1016/j.eswa.2020.113501

44. Mahmood, T., Li, J., Pei, Y., Akhtar, F., Imran, A. et al. (2020). A brief survey on breast cancer diagnostic with deep learning schemes using multi-image modalities. IEEE Access, 8, 165779–165809. https://doi.org/10.1109/Access.6287639

45. Kumar, G., Alqahtani, H. (2022). Deep learning-based cancer detection-recent developments, trend and challenges. Computer Modeling in Engineering & Sciences, 130(3), 1271–1307. https://doi.org/10.32604/cmes.2022.018418

46. Zou, L., Yu, S., Meng, T., Zhang, Z., Liang, X. et al. (2019). A technical review of convolutional neural network-based mammographic breast cancer diagnosis. Computational and Mathematical Methods in Medicine, 2019. https://doi.org/10.1155/2019/6509357

47. Gurcan, M. N., Boucheron, L. E., Can, A., Madabhushi, A., Rajpoot, N. M. et al. (2009). Histopathological image analysis: A review. IEEE Reviews in Biomedical Engineering, 2, 147–171. https://doi.org/10.1109/RBME.2009.2034865

48. Ertosun, M. G., Rubin, D. L. (2015). Probabilistic visual search for masses within mammography images using deep learning. 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), pp. 1310–1315. Washington, USA, IEEE.

49. Hussein, I. J., Burhanuddin, M. A., Mohammed, M. A., Benameur, N., Maashi, M. S. et al. (2022). Fully-automatic identification of gynaecological abnormality using a new adaptive frequency filter and histogram of oriented gradients (HOG). Expert Systems, 39(3), e12789. https://doi.org/10.1111/exsy.12789

50. Tang, J., Rangayyan, R. M., Xu, J., El Naqa, I., Yang, Y. (2009). Computer-aided detection and diagnosis of breast cancer with mammography: Recent advances. IEEE Transactions on Information Technology in Biomedicine, 13(2), 236–251. https://doi.org/10.1109/TITB.2008.2009441

51. Yassin, N. I., Omran, S., El Houby, E. M., Allam, H. (2018). Machine learning techniques for breast cancer computer aided diagnosis using different image modalities: A systematic review. Computer Methods and Programs in Biomedicine, 156, 25–45. https://doi.org/10.1016/j.cmpb.2017.12.012

52. Xie, S., Yu, Z., Lv, Z. (2021). Multi-disease prediction based on deep learning: A survey. Computer Modeling in Engineering & Sciences, 128(2), 489–522. https://doi.org/10.32604/cmes.2021.016728

53. Doi, K. (2007). Computer-aided diagnosis in medical imaging: Historical review, current status and future potential. Computerized Medical Imaging and Graphics, 31(4–5), 198–211. https://doi.org/10.1016/j.compmedimag.2007.02.002

54. Sadaf, A., Crystal, P., Scaranelo, A., Helbich, T. (2011). Performance of computer-aided detection applied to full-field digital mammography in detection of breast cancers. European Journal of Radiology, 77(3), 457–461. https://doi.org/10.1016/j.ejrad.2009.08.024

55. Karimi Jafarbigloo, S., Danyali, H. (2021). Nuclear atypia grading in breast cancer histopathological images based on CNN feature extraction and LSTM classification. CAAI Transactions on Intelligence Technology, 6(4), 426–439. https://doi.org/10.1049/cit2.12061

56. Lei, Y., He, X., Yao, J., Wang, T., Wang, L. et al. (2021). Breast tumor segmentation in 3D automatic breast ultrasound using mask scoring R-CNN. Medical Physics, 48(1), 204–214. https://doi.org/10.1002/mp.14569

57. Salama, W. M., Aly, M. H. (2021). Deep learning in mammography images segmentation and classification: Automated CNN approach. Alexandria Engineering Journal, 60(5), 4701–4709. https://doi.org/10.1016/j.aej.2021.03.048

58. Agarwal, P., Yadav, A., Mathur, P. (2022). Breast cancer prediction on BreakHis dataset using deep CNN and transfer learning model. In: Data engineering for smart systems, pp. 77–88. Manipal University Jaipur, India, Springer.

59. Nazir, M. S., Khan, U. G., Mohiyuddin, A., Reshan, A., Saleh, M. et al. (2022). A novel CNN-inception-V4-based hybrid approach for classification of breast cancer in mammogram images. Wireless Communications and Mobile Computing, 2022. https://doi.org/10.1155/2022/5089078

60. Qin, C., Wu, Y., Zeng, J., Tian, L., Zhai, Y. et al. (2022). Joint transformer and multi-scale CNN for DCE-MRI breast cancer segmentation. Soft Computing, 26, 8317–8334. https://doi.org/10.1007/s00500-022-07235-0

61. Zainudin, Z., Shamsuddin, S. M., Hasan, S. (2021). Deep layer convolutional neural network (CNN) architecture for breast cancer classification using histopathological images. In: Machine learning and big data analytics paradigms: Analysis, applications and challenges, pp. 347–364. Springer.

62. Agarwal, R., Sharma, H. (2021). A new enhanced recurrent extreme learning machine based on feature fusion with CNN deep features for breast cancer detection. In: Advances in computer, communication and computational sciences, pp. 461–471. Singapore, Springer.

63. Saber, A., Sakr, M., Abou-Seida, O., Keshk, A. (2021). A novel transfer-learning model for automatic detection and classification of breast cancer based deep CNN. Kafrelsheikh Journal of Information Sciences, 2(1), 1–9. https://doi.org/10.21608/kjis.2021.192207

64. Shaila, S., Gurudas, V., Hithyshi, K., Mahima, M., PoojaShree, H. (2022). CNN-LSTM-based deep learning model for early detection of breast cancer. In: Data engineering and intelligent computing, pp. 83–91. Bengaluru, India, Springer.

65. Karuppasamy, A., Abdesselam, A., Hedjam, R., Zidoum, H., Al-Bahri, M. (2022). Recent CNN-based techniques for breast cancer histology image classification. The Journal of Engineering Research [TJER], 19(1), 41–53. https://doi.org/10.53540/tjer.vol19iss1pp41-53

66. Susilo, A. B., Sugiharti, E. (2021). Accuracy enhancement in early detection of breast cancer on mammogram images with convolutional neural network (CNN) methods using data augmentation and transfer learning. Journal of Advances in Information Systems and Technology, 3(1), 9–16. https://doi.org/10.15294/jaist.v3i1.49012

67. Hariharan, R., Dhilsath Fathima, M., Pitchai, A., Roy, V. J., Padhi, A. (2022). Detection and classification of breast cancer using CNN. In: Advance concepts of image processing and pattern recognition, pp. 109–119. Singapore, Springer.

68. Bal, A., Das, M., Satapathy, S. M., Jena, M., Das, S. K. (2021). BFCNet: A CNN for diagnosis of ductal carcinoma in breast from cytology images. Pattern Analysis and Applications, 24(3), 967–980. https://doi.org/10.1007/s10044-021-00962-4

69. Kumar, A., Sharma, A., Bharti, V., Singh, A. K., Singh, S. K. et al. (2021). MobiHisNet: A lightweight CNN in mobile edge computing for histopathological image classification. IEEE Internet of Things Journal, 8(24), 17778–17789. https://doi.org/10.1109/JIOT.2021.3119520

70. Liu, Y., Pu, H., Sun, D. W. (2021). Efficient extraction of deep image features using convolutional neural network (CNN) for applications in detecting and analysing complex food matrices. Trends in Food Science & Technology, 113, 193–204. https://doi.org/10.1016/j.tifs.2021.04.042

71. Bhatt, D., Patel, C., Talsania, H., Patel, J., Vaghela, R. et al. (2021). CNN variants for computer vision: History, architecture, application, challenges and future scope. Electronics, 10(20), 2470. https://doi.org/10.3390/electronics10202470

72. He, K., Ji, L., Wu, C. W. D., Tso, K. F. G. (2021). Using SARIMA–CNN–LSTM approach to forecast daily tourism demand. Journal of Hospitality and Tourism Management, 49, 25–33. https://doi.org/10.1016/j.jhtm.2021.08.022

73. Sharma, R., Sungheetha, A. (2021). An efficient dimension reduction based fusion of CNN and SVM model for detection of abnormal incident in video surveillance. Journal of Soft Computing Paradigm, 3(2), 55–69. https://doi.org/10.36548/jscp

74. Rodriguez-Ruiz, A., Teuwen, J., Chung, K., Karssemeijer, N., Chevalier, M. et al. (2018). Pectoral muscle segmentation in breast tomosynthesis with deep learning. In: Medical imaging 2018: Computer-aided diagnosis, vol. 10575, 105752J. Houston, Texas, USA, International Society for Optics and Photonics.

75. Wang, J., Ding, H., Bidgoli, F. A., Zhou, B., Iribarren, C. et al. (2017). Detecting cardiovascular disease from mammograms with deep learning. IEEE Transactions on Medical Imaging, 36(5), 1172–1181. https://doi.org/10.1109/TMI.2017.2655486

76. Debelee, T. G., Amirian, M., Ibenthal, A., Palm, G., Schwenker, F. (2017). Classification of mammograms using convolutional neural network based feature extraction. International Conference on Information and Communication Technology for Development for Africa, pp. 89–98. Bahir Dar, Ethiopia, Springer.

77. Kooi, T., van Ginneken, B., Karssemeijer, N., den Heeten, A. (2017). Discriminating solitary cysts from soft tissue lesions in mammography using a pretrained deep convolutional neural network. Medical Physics, 44(3), 1017–1027. https://doi.org/10.1002/mp.12110

78. Hu, Z., Tang, J., Wang, Z., Zhang, K., Zhang, L. et al. (2018). Deep learning for image-based cancer detection and diagnosis − A survey. Pattern Recognition, 83, 134–149. https://doi.org/10.1016/j.patcog.2018.05.014

79. Chittineni, S., Edara, S. S. (2022). A novel CNN approach for detecting breast cancer from mammographic image. In: Machine learning and autonomous systems, pp. 361–370. Tamil Nadu, India, Springer.

80. Tripathi, R. P., Khatri, S. K., Baxodirovna, D. V. G. (2022). A transfer learning approach to implementation of pretrained CNN models for breast cancer diagnosis. Journal of Positive School Psychology, 6, 5816–5830.

81. Kolchev, A., Pasynkov, D., Egoshin, I., Kliouchkin, I., Pasynkova, O. et al. (2022). YOLOv4-based CNN model versus nested contours algorithm in the suspicious lesion detection on the mammography image: A direct comparison in the real clinical settings. Journal of Imaging, 8(4), 88. https://doi.org/10.3390/jimaging8040088

82. Liu, S., Liu, G., Zhou, H. (2019). A robust parallel object tracking method for illumination variations. Mobile Networks and Applications, 24(1), 5–17. https://doi.org/10.1007/s11036-018-1134-8

83. Devika, R., Rajasekaran, S., Gayathri, R. L., Priyal, J., Kanneganti, S. R. (2022). Automatic breast cancer lesion detection and classification in mammograms using faster R-CNN deep learning network. Issues and Developments in Medicine and Medical Research, 6, 10–20. https://doi.org/10.9734/bpi/idmmr/v6

84. Mahmoud, H., Alharbi, A., Khafga, D. (2021). Breast cancer classification using deep convolution neural network with transfer learning. Intelligent Automation & Soft Computing, 29(3), 803–814. https://doi.org/10.32604/iasc.2021.018607

85. Liu, S., Liu, X., Wang, S., Muhammad, K. (2021). Fuzzy-aided solution for out-of-view challenge in visual tracking under IoT-assisted complex environment. Neural Computing and Applications, 33(4), 1055–1065. https://doi.org/10.1007/s00521-020-05021-3