Open Access

ARTICLE

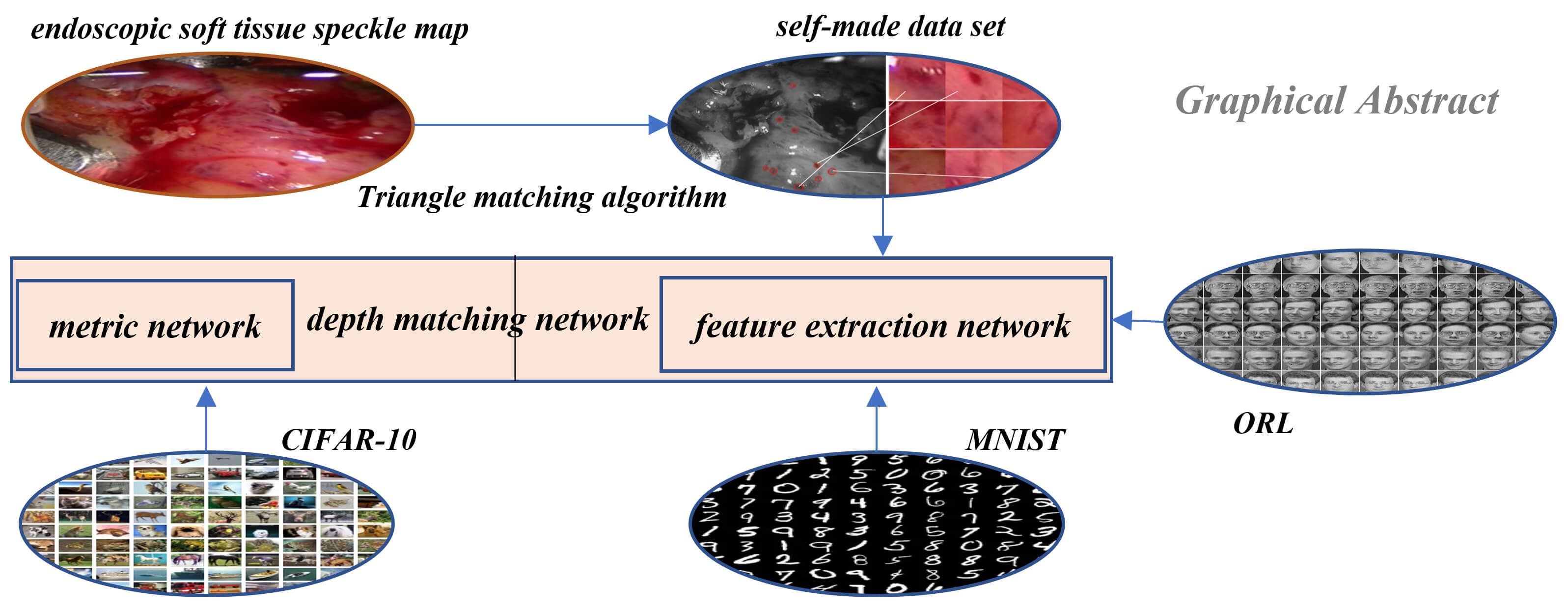

Soft Tissue Feature Tracking Based on Deep Matching Network

1 School of Automation, University of Electronic Science and Technology of China, Chengdu, 610054, China

2 College of Computer Science and Cyber Security, Chengdu University of Technology, Chengdu, 610059, China

3 Department of Geography and Anthropology, Louisiana State University, Baton Rouge, LA, 70803, USA

* Corresponding Authors: Mingzhe Liu. Email: ; Wenfeng Zheng. Email:

(This article belongs to the Special Issue: Computer Modeling of Artificial Intelligence and Medical Imaging)

Computer Modeling in Engineering & Sciences 2023, 136(1), 363-379. https://doi.org/10.32604/cmes.2023.025217

Received 28 June 2022; Accepted 15 September 2022; Issue published 05 January 2023

Abstract

Medical imaging research is essential for medical robots that operate on human organs. Medical robotics sits at the intersection of several research fields, and medical imaging is an important direction within it that has produced fruitful results. This paper proposes a method for tracking soft tissue surface features based on a deep matching network. The method builds on the triangle matching algorithm. First, we construct a custom sample set for training the deep matching network from the first N frames of speckle matching data obtained by the triangle matching algorithm. The network is pre-trained on the ORL face data set and then trained on this custom training set. After training, speckle matching is performed on the subsequent frames to obtain the speckle matching matrix between each subsequent frame and the first frame. From this matrix, the inter-frame feature matching results are obtained, which completes the inter-frame speckle tracking. The results are then compared with matching results from a convolutional neural network. The experimental results show that the proposed method achieves higher matching accuracy. In particular, its accuracy on the MNIST handwritten digit data set exceeds 90%.
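The step from a speckle matching matrix to inter-frame matches can be illustrated with a minimal sketch. It is not the authors' implementation: the score matrix layout, the mutual-best-match rule, the `threshold` parameter, and the function names `matches_to_first_frame` and `inter_frame_matches` are all assumptions made for illustration. The sketch assumes each frame is scored against the first frame, and that two speckles in different frames correspond when they map to the same first-frame speckle.

```python
from typing import Dict, List


def matches_to_first_frame(
    scores: List[List[float]], threshold: float = 0.5
) -> Dict[int, int]:
    """Map speckle indices of the current frame to first-frame speckle indices.

    scores[i][j] is a hypothetical similarity between speckle i of the first
    frame and speckle j of the current frame (higher means more similar).
    The mutual-best-match rule and the threshold are assumptions.
    """
    matches: Dict[int, int] = {}
    if not scores:
        return matches
    n_cols = len(scores[0])
    for j in range(n_cols):
        # Best first-frame candidate for speckle j of the current frame.
        column = [row[j] for row in scores]
        best_i = max(range(len(column)), key=column.__getitem__)
        # Keep the pair only if j is also the best choice for best_i.
        row = scores[best_i]
        best_j = max(range(n_cols), key=row.__getitem__)
        if best_j == j and column[best_i] >= threshold:
            matches[j] = best_i
    return matches


def inter_frame_matches(
    to_first_a: Dict[int, int], to_first_b: Dict[int, int]
) -> Dict[int, int]:
    """Pair speckles of frames a and b that share a first-frame speckle."""
    first_to_b = {i: j for j, i in to_first_b.items()}
    return {ja: first_to_b[i] for ja, i in to_first_a.items() if i in first_to_b}


# Toy similarity scores: rows are first-frame speckles, columns are
# speckles of a later frame.
frame_a = matches_to_first_frame([[0.9, 0.1], [0.2, 0.8]])
frame_b = matches_to_first_frame([[0.1, 0.95], [0.3, 0.2]])
tracked = inter_frame_matches(frame_a, frame_b)
```

In this toy case, speckle 0 of the first later frame and speckle 1 of the second later frame both map to first-frame speckle 0, so they are paired. The low-scoring candidate in the second frame is discarded by the threshold.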

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
