Open Access

ARTICLE

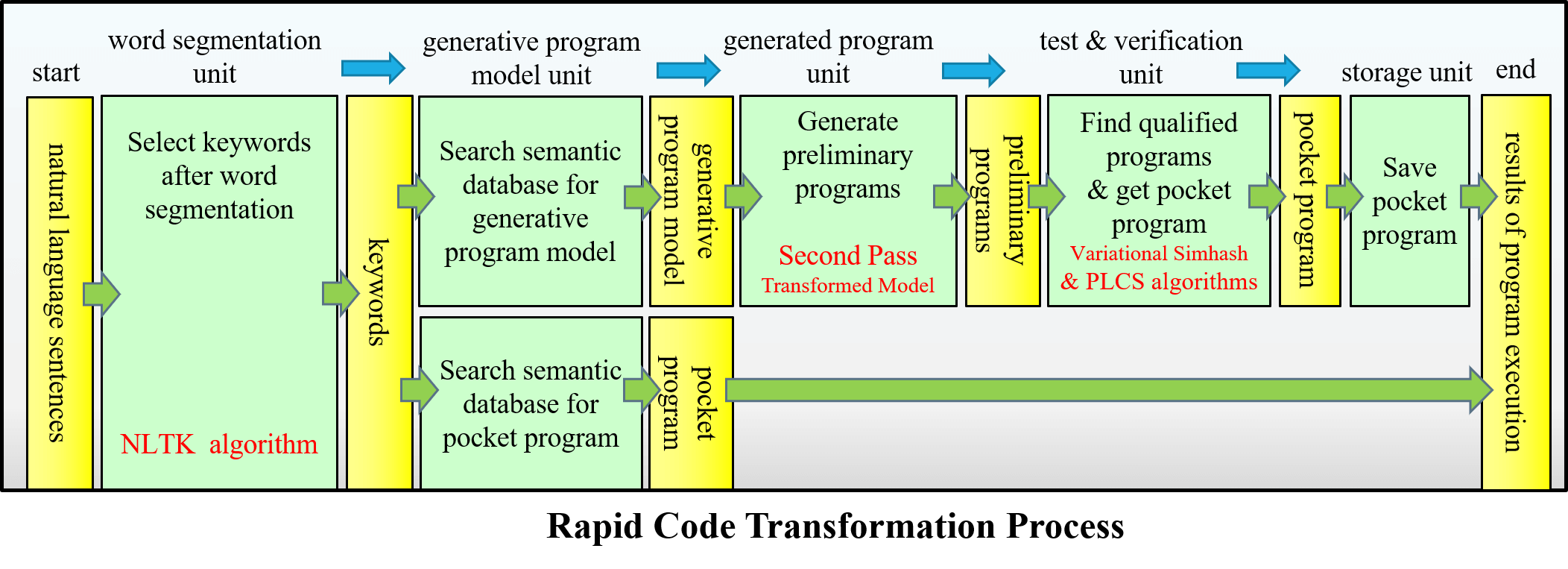

Implementation of Rapid Code Transformation Process Using Deep Learning Approaches

1 Department of Computer Science and Information Engineering, National University of Kaohsiung, Kaohsiung, 811, Taiwan

2 Department of Fragrance and Cosmetic Science, Kaohsiung Medical University, Kaohsiung, 811, Taiwan

* Corresponding Author: Hsiu-Fen Tsai. Email:

Computer Modeling in Engineering & Sciences 2023, 136(1), 107-134. https://doi.org/10.32604/cmes.2023.024018

Received 21 May 2022; Accepted 05 September 2022; Issue published 05 January 2023

Abstract

Our previous work generated new programs with the code transformation model GPT-2 and verified the generated code using the simhash (SH) and longest common subsequence (LCS) algorithms. However, the overall code transformation process was time-consuming. The objective of this study is therefore to speed up the code transformation process significantly. This paper proposes deep learning approaches that modify SH into a variational simhash (VSH) algorithm and replace LCS with a piecewise longest common subsequence (PLCS) algorithm to accelerate the verification process in the test phase. Besides the code transformation model GPT-2, this study also introduces Microsoft MASS and Facebook BART for a comparative analysis of their performance. In addition, the explainable AI technique of local interpretable model-agnostic explanations (LIME) is used to interpret the decision-making of the AI models. The experimental results show that VSH can reduce the number of qualified programs by 22.11%, and PLCS can reduce the execution time of selected pocket programs by 32.39%. As a result, the proposed approaches speed up the entire code transformation process by 1.38 times on average compared with our previous work.
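For illustration only, the two baseline checks named in the abstract can be sketched in Python. This shows the textbook forms of simhash and LCS, not the VSH or PLCS variants proposed in the paper, whose details are not given here. The function names, the MD5-based token hash, the 64-bit fingerprint width, and the whitespace tokenization are all assumptions made for this sketch.

```python
import hashlib


def simhash(tokens, bits=64):
    """Textbook simhash fingerprint (assumed 64 bits, MD5 as the token hash)."""
    weights = [0] * bits
    for tok in tokens:
        digest = hashlib.md5(tok.encode("utf-8")).digest()
        h = int.from_bytes(digest[: bits // 8], "big")
        for i in range(bits):
            # Each token votes +1 or -1 on every bit position.
            weights[i] += 1 if (h >> i) & 1 else -1
    # A fingerprint bit is set when the votes for that position are positive.
    return sum(1 << i for i in range(bits) if weights[i] > 0)


def hamming_distance(a, b):
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def lcs_length(a, b):
    """Length of the longest common subsequence, using O(len(b)) memory."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, 1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


# Hypothetical usage: compare a generated snippet against a reference snippet.
reference = "for i in range ( n ) : total += i".split()
generated = "for j in range ( n ) : total += j".split()
distance = hamming_distance(simhash(reference), simhash(generated))
overlap = lcs_length(reference, generated) / len(reference)
```

A small Hamming distance and a high LCS ratio both indicate that the generated code is close to the reference. The classic LCS computation is quadratic in the sequence lengths, which is consistent with the verification step being a bottleneck on long programs.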

Copyright © 2023 The Author(s). Published by Tech Science Press. This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
