Open Access

REVIEW

Broad Learning System for Tackling Emerging Challenges in Face Recognition

1

School of Computer Science and Technology, Hainan University, Haikou, 570228, China

2

Shanghai Institute of Technology, Shanghai, 201418, China

3

Interscience Institute of Management and Technology, Bhubaneswar, 752054, India

* Corresponding Author: Wenfeng Wang. Email:

(This article belongs to the Special Issue: Enabled and Human-centric Computational Intelligence Solutions for Visual Understanding and Application)

Computer Modeling in Engineering & Sciences 2023, 134(3), 1597-1619. https://doi.org/10.32604/cmes.2022.020517

Received 28 November 2021; Accepted 15 April 2022; Issue published 20 September 2022

Abstract

Face recognition has developed rapidly and is widely used. However, there is still considerable uncertainty in computational intelligence based on human-centric visual understanding. Emerging challenges for face recognition result from information loss. This study aims to tackle these challenges with a broad learning system (BLS). We integrated two models, IR3C with BLS and IR3C with a triplet loss, to control the learning process. In our experiments, we used different strategies to generate more challenging datasets and analyzed the competitiveness, sensitivity, and practicability of the two proposed models. In the model of IR3C with BLS, the recognition rates for the four challenging strategies are all 100%. In the model of IR3C with a triplet loss, the recognition rates are 94.61%, 94.61%, 96.95%, and 96.23%, respectively. The experimental results indicate that the two proposed models achieve good performance in tackling the considered information loss challenges in face recognition.

Computational intelligence in face recognition based on visible images has developed rapidly and is widely used in many practical scenarios, such as security [1–9], finance [10–19], and health care services [20–29]. However, limited by lighting conditions [30–33], facial expression [34,35], pose [36,37], occlusion [38,39], and other confounding factors [40], some valuable information is easily lost. Dealing with information loss is an emerging challenge for face recognition, which has rarely been addressed in previous studies. It is urgent to find a method for generating new datasets to tackle this challenge. Multi-sensor information fusion [41–50] analyzes and processes the multi-source information collected by sensors and combines it. The combination of multi-source information can be carried out automatically or semi-automatically [51–54]. In the fusion of face images, some information that is valuable for face recognition may be lost, which in turn can give us a more challenging dataset.

There are three main categories of sensor fusion: complementary, competitive, and cooperative [55]. These categories make multi-sensor information fusion more reliable and informative [56,57]. Such fusion has been widely used in the fields of transportation [58–67], materials science [68–77], automation control systems [78,79], medicine [80–85], and agriculture [86–95]. The fusion strategy aims to create images with almost negligible distortion by maintaining the quality of the image in all aspects when compared with the original or source images [96,97]. Through the fusion of different types of images obtained in the same environment, the redundant information can improve the reliability and fault tolerance of the data [98,99]. Visible sensors capture the light reflected from the surface of objects to create visible images [100–103], while infrared sensors obtain thermal images [104]. There are already many studies on the fusion of visible and thermal images [105–118], and such fusion has been integrated with face recognition [119–121]. Thermal images have some problems, such as low contrast [122,123], blurred edges [124,125], temperature sensitivity [126–128], glass rejection [129–132], and few texture details [133,134]. The fusion of visible and thermal face images will blur or hide some valuable information and can therefore be employed to generate a challenging dataset for face recognition.

The objectives of this study are 1) to utilize information fusion technologies to generate more challenging datasets for face recognition and 2) to propose two models to tackle the challenge. The paper is organized as follows. In Section 2, we introduce four fusion strategies (maximum-value fusion, minimum-value fusion, weighted-average fusion, and PCA fusion) and complete the modelling approach. In Section 3, the proposed models are applied to the fused challenging datasets, and the model sensitivity is evaluated by cross validation with changes in the weights. The competitiveness and practicability of the two models are also discussed at the end of the paper.

In this experiment, we used the TDIRE dataset and the TDRGBE dataset of the Tufts Face database, which contain 557 thermal images and 557 visible images, respectively, for 112 persons [135]. In the TDIRE dataset, the 1st person has 3 thermal images, the 31st person has 4 thermal images, and each of the other persons has 5 thermal images. Each person has the same number of visible images in the TDRGBE dataset as thermal images in the TDIRE dataset. First, we cropped the faces from all 1114 images with the CascadeClassifier from OpenCV. Then we combined the thermal and visible images of each person to form a challenging dataset of 557 fused images. Finally, we used the TDRGBE dataset as the training set and the fused dataset as the testing set to treat the problem considered in this study.

During the process of image fusion, we employ four different strategies to form a gradient of difficulty:

1) Select maximum value in fusion. The fused image is obtained by selecting, for each pixel, the maximum value at the corresponding position in each pair of thermal and visible images.

This strategy selects the maximum pixel value, which enhances the gray value of the fused image. It makes the fused image prefer information from pixels at high exposure and high temperature. As a result, a lot of valuable information is lost after fusion, which poses a challenge for face recognition.

2) Select minimum value in fusion. This strategy is the opposite of 1). It selects the minimum pixel value, which decreases the gray value of the fused image and may lose a lot of valuable information. In our experiment, it makes the fused image prefer information from pixels at low exposure and low temperature.

3) Utilize a weighted average in fusion. The fused image is obtained by calculating the weighted sum of each pair of input images at the corresponding position and adding a fixed scalar.

This strategy changes the signal-to-noise ratio of the fused image and weakens the images’ contrast. Some valuable signals that appear in only one image of the pair will be lost, which forms a challenge.

4) Utilize the PCA (Principal Component Analysis) algorithm as a strategy in fusion by swapping the first component of the visible image with that of the corresponding thermal image.

This strategy reduces the image resolution, and some information on the spectral characteristics of the first principal component in the original images will be lost. This also forms a challenge.
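The four strategies above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the implementation used in the experiments: the function names are hypothetical, the inputs are assumed to be aligned grayscale arrays of equal shape, the weighted average assumes the two weights sum to one (with the weight 0.5 and scalar 10 reported in Section 3.1 as defaults), and the PCA swap is one possible reading in which the rank-one first component of the centered visible image is replaced by that of the thermal image.

```python
import numpy as np

def fuse_max(visible, thermal):
    """Pixel-wise maximum of two aligned grayscale images."""
    return np.maximum(visible, thermal)

def fuse_min(visible, thermal):
    """Pixel-wise minimum of two aligned grayscale images."""
    return np.minimum(visible, thermal)

def fuse_weighted(visible, thermal, weight=0.5, scalar=10.0):
    """weight * visible + (1 - weight) * thermal + scalar, clipped to [0, 255].

    Assumption: the two weights sum to one.
    """
    v = np.asarray(visible, dtype=np.float64)
    t = np.asarray(thermal, dtype=np.float64)
    return np.clip(weight * v + (1.0 - weight) * t + scalar, 0.0, 255.0)

def _first_component(image):
    """Rank-one first principal component of the column-centered image."""
    centered = image - image.mean(axis=0, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    return s[0] * np.outer(u[:, 0], vt[0])

def fuse_pca_swap(visible, thermal):
    """Swap the first principal component of the visible image for the thermal one.

    Assumption: rows are treated as samples and columns as variables.
    """
    v = np.asarray(visible, dtype=np.float64)
    t = np.asarray(thermal, dtype=np.float64)
    return v - _first_component(v) + _first_component(t)
```

The maximum and minimum rules keep, at each pixel, the value from only one of the two images, whereas the weighted average and the PCA swap mix content from both, which matches the qualitative difference in information loss discussed in the text.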

Compared to general performance metrics, IR3C [136] is much more effective and efficient in dealing with face occlusion, corruption, illumination, and expression changes. The algorithm robustly identifies outliers and reduces their effects on the coding process by adaptively and iteratively assigning weights to the pixels according to their coding residuals.

Details for computing the residual values and updating the weights are summarized in Fig. 1.

Figure 1: Description of IR3C algorithm. W(t) is the estimated diagonal matrix.
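The reweighting idea can be illustrated with a minimal NumPy sketch. It assumes an l2-regularized coding step and a logistic weight function, and the names `ir3c_code` and `ir3c_classify` as well as the parameter values (`lam`, `tau`, `c`, `n_iter`) are illustrative assumptions rather than the settings of [136] or of our experiments.

```python
import numpy as np

def ir3c_code(D, y, lam=1e-3, n_iter=10, tau=0.8, c=8.0):
    """Iteratively reweighted, l2-regularized coding of y over dictionary D.

    D: (n_pixels, n_atoms) training faces as columns; y: (n_pixels,) query.
    Returns the coding vector and the last per-pixel weights.
    """
    D = np.asarray(D, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    residual = y - D.mean(axis=1)  # initial residual against the mean face
    for _ in range(n_iter):
        sq = residual ** 2
        delta = np.quantile(sq, tau) + 1e-12  # residual level treated as normal
        mu = c / delta
        # Logistic weight: near 1 for small residuals, near 0 for outliers.
        z = np.clip(mu * (sq - delta), -50.0, 50.0)
        w = 1.0 / (1.0 + np.exp(z))
        DW = D * w[:, None]  # rows of D scaled by the pixel weights
        alpha = np.linalg.solve(D.T @ DW + lam * np.eye(D.shape[1]), DW.T @ y)
        residual = y - D @ alpha
    return alpha, w

def ir3c_classify(D, labels, y, **kwargs):
    """Assign y to the class with the smallest weighted reconstruction error."""
    D = np.asarray(D, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    labels = np.asarray(labels)
    alpha, w = ir3c_code(D, y, **kwargs)
    classes = np.unique(labels)
    errors = [np.sum(w * (y - D[:, labels == k] @ alpha[labels == k]) ** 2)
              for k in classes]
    return classes[int(np.argmin(errors))]
```

In this sketch, pixels with large residuals (for example, occluded or blurred regions of a fused image) receive weights close to zero, so they contribute little to the coding vector.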

We improve the IR3C algorithm with a triplet loss [137] to tackle the above challenges, as shown in Fig. 2.

Figure 2: Triplet loss on a pair of positive faces and one negative face (in the purple dotted rectangle), along with the mechanism to minimize the loss (in the green dotted rectangle)

As shown in Fig. 2, the strategy of triplet loss is to minimize the distance between similar images (anchor images and positive images) and to maximize the distance between dissimilar images (anchor images and negative images) in Euclidean space. The margin is a threshold parameter that sets the minimum gap required between the distances of similar and dissimilar image pairs. In our experiments, we set the margin to 0.2.
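As a minimal sketch, the loss for one triplet of embeddings can be written as follows. The function name is hypothetical, and the use of squared Euclidean distances is an assumption (it is the common choice for this type of loss); only the margin of 0.2 comes from our setting.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge-style triplet loss on embedding vectors (or batches of them).

    The loss is zero once the negative is farther from the anchor than
    the positive by at least `margin` (in squared Euclidean distance).
    """
    anchor = np.asarray(anchor, dtype=np.float64)
    positive = np.asarray(positive, dtype=np.float64)
    negative = np.asarray(negative, dtype=np.float64)
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return np.maximum(d_pos - d_neg + margin, 0.0)
```

For example, with an anchor at the origin, a positive at distance 0.1, and a negative at distance 0.2, the squared distances are 0.01 and 0.04, so the loss is 0.01 − 0.04 + 0.2 = 0.17; moving the negative to distance 1 drives the loss to zero.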

2.3 IR3C with Broad Learning System

The Broad Learning System (BLS) was proposed by C. L. Philip Chen to overcome the shortcomings of learning in deep structures [138]. BLS shows excellent performance in classification accuracy and learns faster than deep neural networks. Therefore, we improve the IR3C algorithm with the Broad Learning System to tackle the above challenges, as shown in Fig. 3.
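The flat structure of BLS can be illustrated with the following sketch, in which random mapped feature nodes and enhancement nodes are concatenated and only the output weights are learned, here by ridge regression. This is a simplified illustration under stated assumptions: the function names, node counts, and regularization value are hypothetical, the mapped nodes use a plain random linear map (the sparse fine-tuning of the original BLS is omitted), and incremental learning is not shown.

```python
import numpy as np

def bls_fit(X, Y, n_mapped=20, n_enh=40, reg=1e-3, seed=0):
    """Fit a minimal broad learning system.

    X: (n_samples, n_features) inputs, e.g. IR3C-encoded images.
    Y: (n_samples, n_classes) one-hot targets.
    """
    rng = np.random.default_rng(seed)
    We = rng.normal(size=(X.shape[1], n_mapped))
    be = rng.normal(size=n_mapped)
    Z = X @ We + be                      # mapped feature nodes
    Wh = rng.normal(size=(n_mapped, n_enh))
    bh = rng.normal(size=n_enh)
    H = np.tanh(Z @ Wh + bh)             # enhancement nodes
    A = np.hstack([Z, H])                # broad (flat) hidden layer
    # Ridge-regression solution for the output weights.
    W = np.linalg.solve(A.T @ A + reg * np.eye(A.shape[1]), A.T @ Y)
    return {"We": We, "be": be, "Wh": Wh, "bh": bh, "W": W}

def bls_predict(model, X):
    """Return class scores; take argmax over axis 1 for labels."""
    Z = X @ model["We"] + model["be"]
    H = np.tanh(Z @ model["Wh"] + model["bh"])
    return np.hstack([Z, H]) @ model["W"]
```

Because the output weights have a closed-form solution, training requires a single linear solve rather than iterative back-propagation, which is the source of the speed advantage mentioned above.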

Figure 3: Broad Learning System with increment of input data (images after IR3C encoding), enhancement nodes, and mapped nodes. Here,

3.1 Model Performance of IR3C with BLS

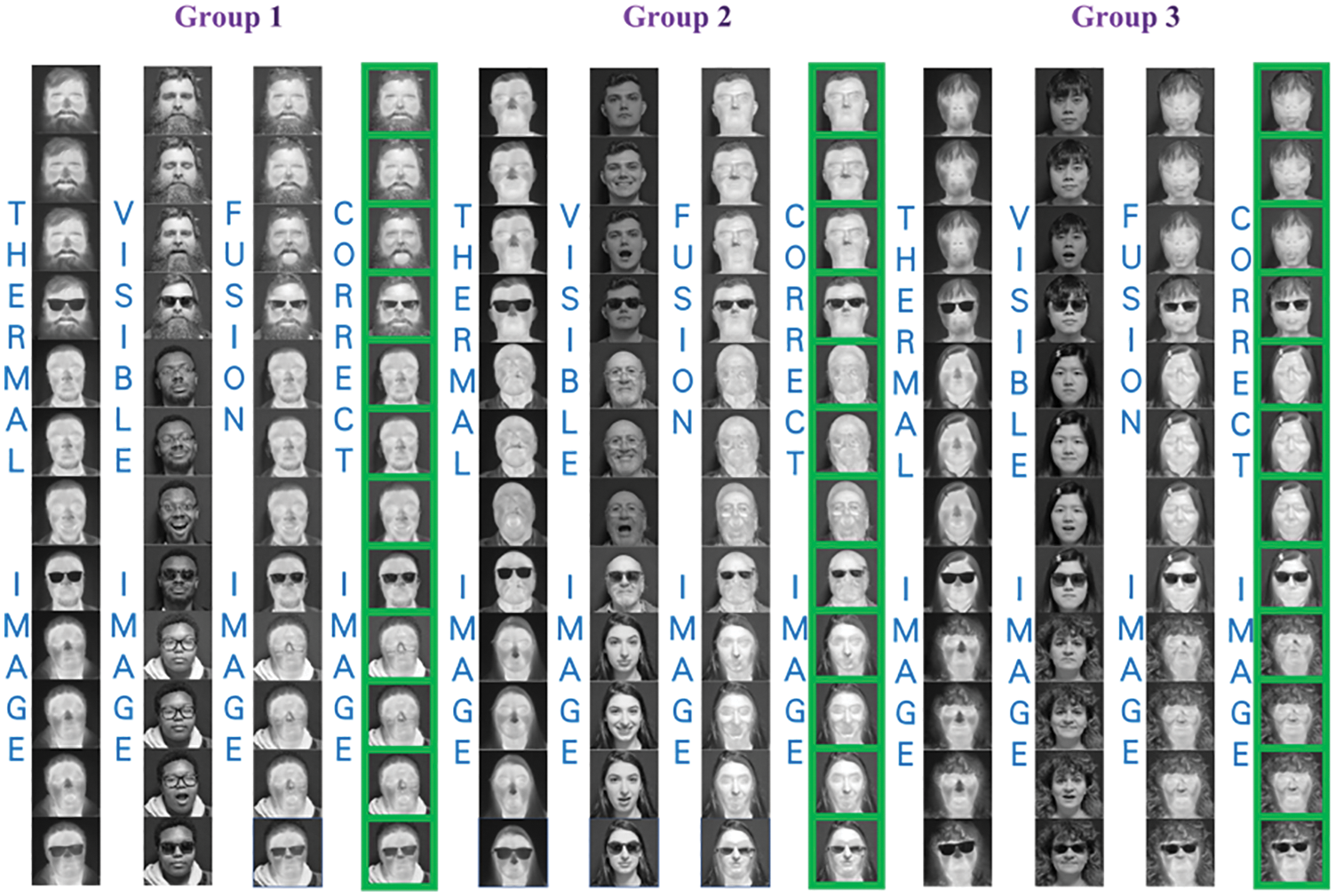

We employed four fusion methods to challenge our models. For each fusion method, we display 36 results divided into three groups. In each group, the first column shows thermal images, the second column shows visible images, the third column shows the fusion results of the previous two columns, and the fourth column shows the correctly recognized images, marked with green borders to distinguish them from the other columns.

Fig. 4 shows the experimental results of Select Maximum value, where “CORRECT IMAGE” means the image that is successfully recognized by our model. In this fusion method, the pixel with the largest gray value in the original images is simply selected as the pixel of the fused image, so the gray intensity of the fused pixel is enhanced.

Figure 4: The experimental results of Select Maximum value

From Fig. 4, we can see that the fused images have less texture information. Selecting the maximum value heavily reduces the contrast of the whole image. The recognition rate of this method is 100%, which is higher than that of pure IR3C in [119] (93.35%). This shows that our model can tackle the challenge of information loss.

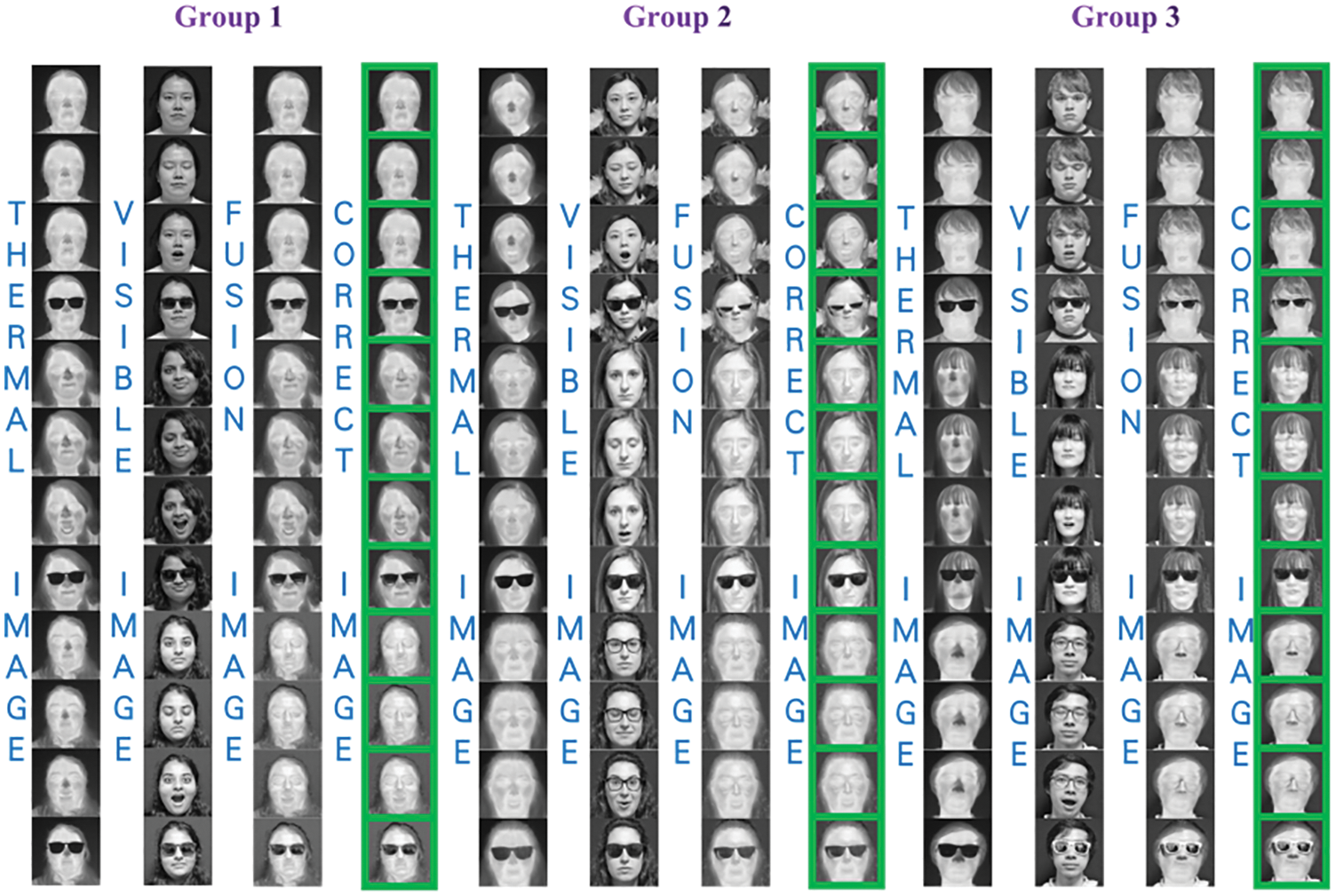

Fig. 5 shows the experimental results of select minimum value, where “CORRECT IMAGE” means the image which is successfully recognized in our model. In this fusion method, the pixel with the smallest gray value in the original image is selected as the pixel of the fusion image, and the gray intensity of the fused pixel is attenuated.

Figure 5: The experimental results of select minimum value

From Fig. 5, we can see that the fused images have less texture information. Selecting the minimum value reduces the contrast of the whole image. Due to image alignment and clipping, some valuable information is hidden. The recognition rate of our method is 100%, which is better than that of pure IR3C in [119], where the recognition rate is 58.056%.

Figs. 6 and 7 show the experimental results of Weighted Average and PCA, respectively, where “CORRECT IMAGE” means the image which is successfully recognized in our model.

Figure 6: The experimental results of weighted average

Figure 7: The experimental results of PCA

The Weighted Average algorithm combines the pixel values at the corresponding positions of the two images according to a certain weight and adds a constant to obtain the fused image. In the experiment, we set the weight to 0.5 and the scalar to 10. It is easy to implement and improves the signal-to-noise ratio of fused images. From Fig. 6, we can see that the fused images have more texture information from the thermal and visible images than those of the former two methods. It is clear that the weighted average preserves the information of the original images effectively. However, some valuable information is still weakened in this method. The recognition rate of this method is 100%, which is competitive with the recognition rate of pure IR3C (99.488%) in [119]. From Fig. 7, we can see that the fused images keep the vital information from the thermal and visible images compared with the select maximum and minimum value methods. It is clear that PCA preserves the information of the original images effectively. Nevertheless, many details are weakened in this method. The recognition rate of this method is 100%, which is competitive with the recognition rate of pure IR3C (98.721%) in [119]. All the results in Figs. 4–7 show that our model performs well in dealing with information loss.

3.2 Model Performance of IR3C with a Triplet Loss

We challenged our model with the same four fusion approaches as in Section 3.1.

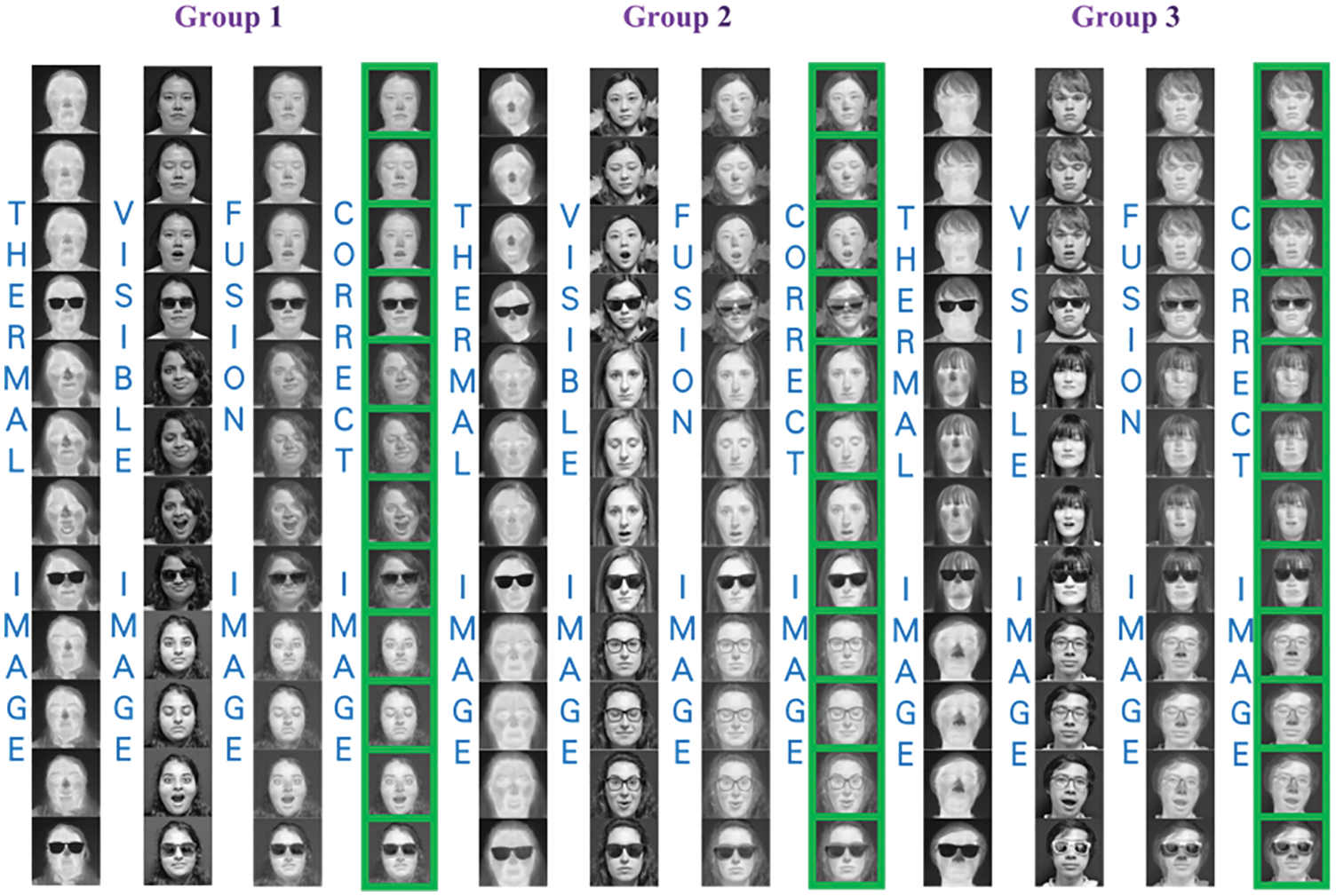

Fig. 8 shows the experimental results of Select Maximum value, where “CORRECT IMAGE” means the image which is successfully recognized in our model.

Figure 8: The experimental results of select maximum value

From Fig. 8, we can see that the thermal images contain less information than the visible images. In the fusion process, the pixel values in the thermal images are larger than those in the visible images. Hence, the fused images have lost abundant texture information. The recognition rate of this method is 94.61%, which is higher than that of pure IR3C in [119] (93.35%). This shows that our model has an advantage in overcoming the challenge of information loss.

Fig. 9 shows the experimental results of select minimum value, where “CORRECT IMAGE” means the image which is successfully recognized in our model.

Figure 9: The experimental results of select minimum value

From Fig. 9, we can see that the fused images have lost some texture information. Selecting the minimum value heavily reduces the contrast of the whole image. The recognition rate of our method is 94.61%, which is better than that of pure IR3C in [119], where the recognition rate is 58.056%. This indicates that our model greatly improves the recognition rate by handling the information loss.

Figs. 10 and 11 show the experimental results of Weighted Average and PCA, respectively, where “CORRECT IMAGE” means the image which is successfully recognized in our model.

Figure 10: The experimental results of weighted average

Figure 11: The experimental results of PCA

From Fig. 10, we can see that the fused images contain more texture information from the thermal and visible images than those of the former two methods. However, some valuable information is still weakened in this method. The recognition rate of this method is 96.95%, which is lower than the recognition rate of pure IR3C (99.488%) in [119]. From Fig. 11, we can see that although the fused images maintain the primary information from the thermal and visible images compared with the select maximum and minimum value methods, some valid information is still lost. The recognition rate of this method is 96.23%, which is lower than the recognition rate of pure IR3C (98.721%) in [119].

Although the recognition rates for weighted average and PCA are less competitive, we consider the results acceptable. Compared with our previous work in [119], we used more images: 557 thermal images and 557 visible images in the present work. All the results in Figs. 8–11 show that our model performs well in dealing with information loss.

3.3 Analyses of the Sensitivity

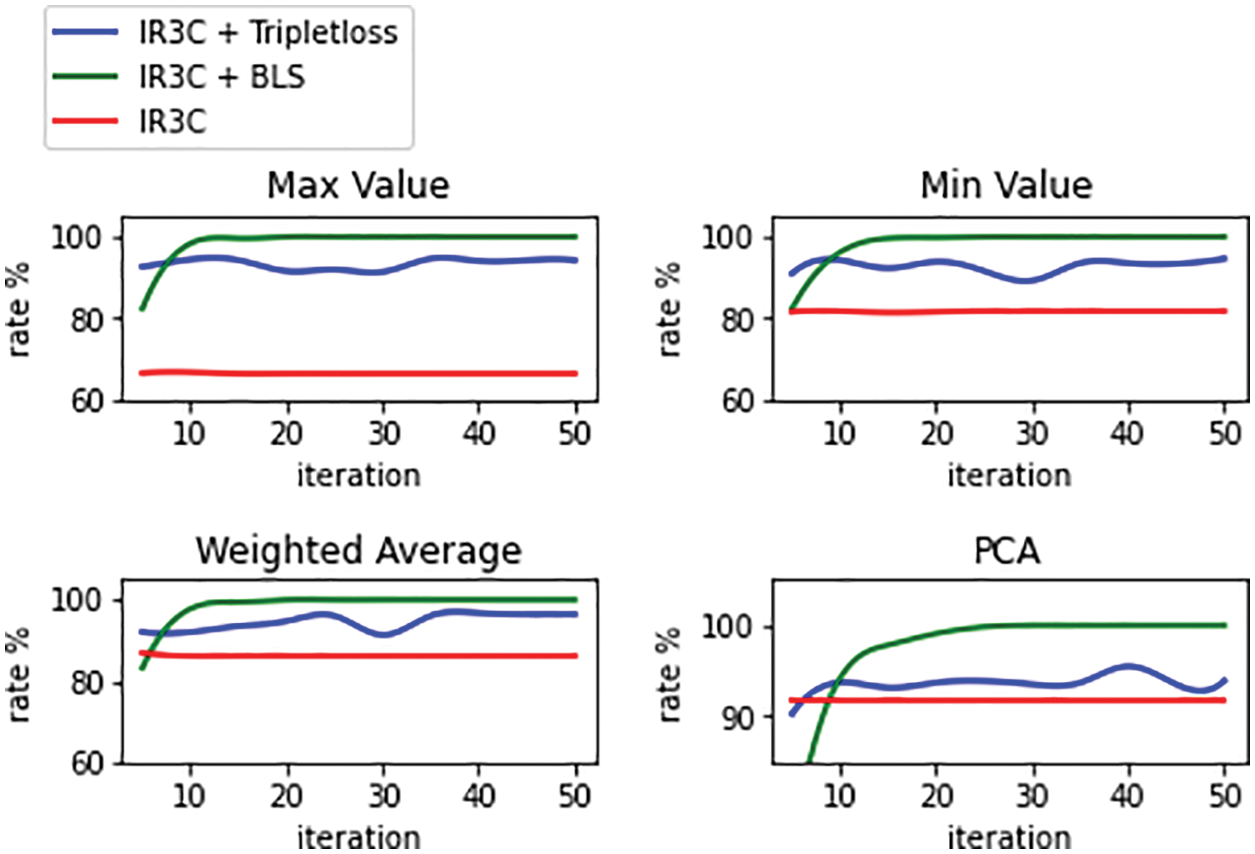

The recognition rates of the above four fusion techniques are shown in Table 1. To examine the sensitivity to parameters, we conducted further experiments on our models. We varied the number of iterations of IR3C, triplet loss, and BLS from 5 to 50 in steps of 5, which gave 10 points for each model. The resulting recognition rates are plotted as curves in Fig. 12.

Figure 12: Recognition rates for different numbers of iterations

From Table 1 and Fig. 12, we found that PCA and weighted average maintain more valuable information than the other two methods. This is reasonable because PCA and weighted average contain information from both the thermal and the visible images. Besides, although the four fusion methods posed challenges to our model, it still performed well, which shows that our model is robust to different fusion strategies. We also found that IR3C with BLS and IR3C with triplet loss can achieve high recognition rates within several iterations. Afterwards, the recognition rates remain high and relatively stable.

Fig. 12 shows that IR3C has an advantage in robustness. However, when facing the challenge of information loss, the recognition rate of pure IR3C is not satisfactory. After we extended IR3C to IR3C with the Broad Learning System [138] and IR3C with triplet loss, the recognition rates improved greatly.

3.4 Competitiveness and Practicability

Most previous studies focused on the advantages of image fusion in overcoming the shortcomings of a single image, and face recognition was mainly based on visible images. Since capturing visible images requires external light, the environment’s illumination may dramatically affect the accuracy of face recognition [139,140]. Thermal images, particularly in the Long-Wave InfraRed (LWIR, 7 μm–14 μm) and Mid-Wave InfraRed (MWIR, 3 μm–5 μm) bands, are utilized to address the limitations of the visible spectrum in low-light applications, as they capture discriminative information from body heat signatures effectively [141–143]. A subsequent challenge is that some information that is valuable for face recognition may be lost in the fusion of visible and thermal images. Such fusion is utilized in this study to generate a challenging dataset for face recognition, and we proposed IR3C with triplet loss to tackle the challenge of improving the recognition rate of an image in the case of information loss.

Differing from the previous studies, we used relatively rough fusion methods (select maximum value in fusion, select minimum value in fusion, utilize a weighted average in fusion, and utilize the PCA in fusion) to challenge the two models for face recognition. The corresponding recognition rates of IR3C with BLS are 100%, 100%, 100%, and 100%, respectively. The corresponding recognition rates of IR3C with a triplet loss are 94.61%, 94.61%, 96.95%, and 96.23%, respectively. Correspondingly, the false alarm rates are 5.39%, 5.39%, 3.05%, and 3.77%. This means that the PCA fusion and the weighted average fusion maintain more valuable information than the other two methods. This is reasonable: PCA and weighted average contain information from both the thermal and the visible images, whereas selecting the maximum or minimum value in fusion takes the information of each pixel from only one image, which leads to more information loss.

This research is also a continuation of the work of Wang et al. [119], where we used 391 thermal images and 391 visible images from the Tufts Face database. All the fusion strategies are included in [119], in which the recognition rates after weighted-average fusion, PCA fusion, maximum-value fusion, and minimum-value fusion are 99.488%, 98.721%, 93.35%, and 58.056%, respectively. Benefitting from the BLS, the recognition rates after these four strategies have been improved to 100%, 100%, 100%, and 100%, respectively. However, in the model of IR3C with a triplet loss, the recognition rates of the presented model after weighted-average fusion and PCA fusion are 96.95% and 96.23%, lower than those of the previous IR3C model, where the recognition rates are 99.488% and 98.721%. We consider this acceptable because we used 557 thermal images and 557 visible images from the datasets. We found that the unrecognized images were not well aligned, so much valuable information was hidden and some important information was not selected. This shows that a lot of information is lost during the fusion process.

We not only extended IR3C into IR3C with BLS and IR3C with a triplet loss, but also analyzed the sensitivity of the models in further experiments. We altered the number of iterations of IR3C, IR3C with BLS, and IR3C with a triplet loss. Analyzing the curves of recognition rates, we found that our proposed models can tackle the information loss challenge steadily and reach high accuracy after several iterations.

The model can be further improved in subsequent studies. During the experiments, we met some difficulties. Frequently selecting hard triplets makes the training unstable, and mining hard triplets is time-consuming. In fact, hard triplets are difficult to select. We suggest choosing mid-hard triplets in subsequent studies. IR3C places low requirements on image sharpness, so it can be applied to cheap, low-resolution devices. Besides, it performs well under face occlusion, corruption, lighting, and expression changes, so it can be used to recognize a face obscured by a mask or in a dim environment. With the help of BLS, we can train the model quickly. This provides a good basis for further improvement in subsequent studies.
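One way to read the suggestion of mid-hard triplets is the semi-hard criterion commonly used with triplet losses: keep negatives that are farther from the anchor than the positive, but still within the margin. The sketch below illustrates this selection rule under that assumption; the function name is hypothetical, and this procedure was not part of the experiments reported here.

```python
import numpy as np

def semi_hard_negative_indices(anchor, positive, candidates, margin=0.2):
    """Indices of candidate negatives that are semi-hard for (anchor, positive).

    A candidate is kept when d(a, p) < d(a, n) < d(a, p) + margin,
    using squared Euclidean distances between embedding vectors.
    """
    anchor = np.asarray(anchor, dtype=np.float64)
    positive = np.asarray(positive, dtype=np.float64)
    candidates = np.asarray(candidates, dtype=np.float64)
    d_pos = np.sum((anchor - positive) ** 2)
    d_neg = np.sum((candidates - anchor) ** 2, axis=1)
    mask = (d_neg > d_pos) & (d_neg < d_pos + margin)
    return np.flatnonzero(mask)
```

Such negatives still produce a non-zero loss, but they avoid the hardest cases (negatives closer than the positive), which are the ones that tend to destabilize training.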

Various factors affect visible-light face recognition rates, such as expression changes, occlusions, pose variations, and lighting [144–148]. Since the Tufts dataset we used in the experiment was collected under good lighting conditions, face pictures under low-light conditions were not used in the fusion. Therefore, visible images contain more information than thermal images in this dataset. Previous studies have shown that fused images can be applied to masked face recognition and low-light conditions [148]. This shows that image fusion based on thermal and visible images can significantly improve face recognition efficiency. In future work, we will try to reduce the influence of secondary factors in the experiment. We will use more images from different environments, such as those with occlusions and low light intensity, for further research. Through these environments, we hope to further explore the robustness of our model. We further analyzed the fused images that were incorrectly recognized and found that the CascadeClassifier used to align the face regions of different images was not perfect. This also resulted in differing facial contours in the fused images, which means that facial features were changed. Therefore, we will improve the method of image alignment and the fusion strategies in subsequent studies.

In this paper, we propose two models, IR3C with a triplet loss and IR3C with BLS, to tackle the information loss challenge in face recognition. To generate a challenging dataset, we use four methods to fuse the images. In the model of IR3C with BLS, the recognition rates after the four fusion methods are 100%, 100%, 100%, and 100%, respectively. In the model of IR3C with a triplet loss, the recognition rates after the four fusion methods are 94.61%, 94.61%, 96.95%, and 96.23%, respectively. Besides, we conducted sensitivity experiments on our two models by changing the number of iterations of IR3C, BLS, and triplet loss. The experimental results show that our two models, IR3C with BLS and IR3C with a triplet loss, achieve good performance in tackling the considered challenge in face recognition. Nevertheless, the Tufts database was obtained under fine lighting conditions without corruption, so visible images contain more information than thermal images in the experiment. Further research in more complicated environments and conditions is still necessary and should be the next research priority.

Data Availability: All the data utilized to support the theory and models of the present study are available from the corresponding authors upon request.

Funding Statement: This research was funded by the Shanghai High-Level Base-Building Project for Industrial Technology Innovation (1021GN204005-A06), the National Natural Science Foundation of China (41571299) and the Ningbo Natural Science Foundation (2019A610106).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

References

1. Chen, Q. Q., Sang, L. (2018). Face-mask recognition for fraud prevention using Gaussian mixture model. Journal of Visual Communication and Image Representation, 55(8), 795–801. DOI 10.1016/j.jvcir.2018.08.016.

2. Wang, Z. Z., Zhang, X., Yu, P. P., Duan, W. J., Zhu, D. J. et al. (2022). A new face recognition method for intelligent security. Applied Sciences, 10(3), 852. DOI 10.3390/app10030852.

3. Ahmadian, K., Gavrilova, M. (2013). A multi-modal approach for high-dimensional feature recognition. The Visual Computer, 29(2), 123–130. DOI 10.1007/s00371-012-0741-9.

4. Chellappa, R., Sinha, P., Phillips, P. J. (2010). Face recognition by computers and humans. Computer, 43(2), 46–55. DOI 10.1109/MC.2010.37.

5. Akhtar, Z., Rattani, A. (2017). A face in any form: New challenges and opportunities for face recognition technology. Computer, 50(4), 80–90. DOI 10.1109/MC.2017.119.

6. Karri, C. (2021). Secure robot face recognition in cloud environments. Multimedia Tools and Applications, 80(12), 18611–18626. DOI 10.1007/s11042-020-10253-5.

7. Shermina, J., Vasudevan, V. (2012). Recognition of the face images with occlusion and expression. International Journal of Pattern Recognition and Artificial Intelligence, 26(3), 1256006. DOI 10.1142/S021800141256006X.

8. Kumar, S., Singh, S. K., Singh, A. K., Tiwari, S., Singh, R. S. et al. (2018). Privacy preserving security using biometrics in cloud computing. Multimedia Tools and Applications, 77(9), 11017–11039. DOI 10.1007/s11042-017-4966-5.

9. Gavrilescu, M. (2016). Study on using individual differences in facial expressions for a face recognition system immune to spoofing attacks. IET Biometrics, 5(3), 236–242. DOI 10.1049/iet-bmt.2015.0078.

10. Liu, B., Wu, M. Y., Tao, M. Z., Wang, Q., He, L. Y. (2020). Video content analysis for compliance audit in finance and security industry. IEEE Access, 8, 117888–117899. DOI 10.1109/ACCESS.2020.3005825.

11. Wei, J. (2021). Video face recognition of virtual currency trading system based on deep learning algorithms. IEEE Access, 9, 32760–32773. DOI 10.1109/ACCESS.2021.3060458.

12. Chamikara, M. A. P., Bertok, P., Khalil, I., Liu, D., Camtepe, S. (2020). Privacy preserving face recognition utilizing differential privacy. Computers & Security, 97, 101951. DOI 10.1016/j.cose.2020.101951.

13. Zhang, L. Z., Wang, Z. P. (2018). A multi-view camera-based anti-fraud system and its applications. Journal of Visual Communication and Image Representation, 55, 263–269. DOI 10.1016/j.jvcir.2018.06.016.

14. Liu, S., Fan, Y. Y., Samal, A., Guo, Z. (2016). Advances in computational facial attractiveness methods. Multimedia Tools & Applications, 75(23), 16633–16663. DOI 10.1007/s11042-016-3830-3.

15. Jahromi, M. N. S., Buch-Cardona, P., Avots, E., Nasrollahi, K., Escalera, S. et al. (2019). Privacy-constrained biometric system for non-cooperative users. Entropy, 21(11), 1033. DOI 10.3390/e21111033.

16. Yang, Y. F. (2020). Research on brush face payment system based on internet artificial intelligence. Journal of Intelligent & Fuzzy Systems, 38(1), 21–28. DOI 10.3233/JIFS-179376.

17. Lin, W. H., Wang, P., Tsai, C. F. (2016). Face recognition using support vector model classifier for user authentication. Electronic Commerce Research and Applications, 18, 71–82. DOI 10.1016/j.elerap.2016.01.005.

18. Yang, J., Gao, Y., Ding, Y., Sun, Y. Y., Meng, Y. et al. (2019). Deep learning aided system design method for intelligent reimbursement robot. IEEE Access, 7, 96232–96239. DOI 10.1109/ACCESS.2019.2927499.

19. Mcduff, D., Kaliouby, R. E., Picard, R. W. (2013). Crowdsourcing facial responses to online videos. IEEE Transactions on Affective Computing, 3(4), 456–468. DOI 10.1109/T-AFFC.2012.19.

20. Li, L. X., Mu, X. H., Li, S. Y., Peng, H. P. (2020). A review of face recognition technology. IEEE Access, 8, 139110–139120. DOI 10.1109/ACCESS.2020.3011028.

21. Picci, G., Scherf, K. S. (2016). From caregivers to peers: Puberty shapes human face perception. Psychological Science, 27(11), 1461–1473. DOI 10.1177/0956797616663142.

22. Wilson, R., Pascalis, O., Blades, M. (2007). Familiar face recognition in children with autism; The differential use of inner and outer face parts. Journal of Autism and Developmental Disorders, 37(2), 314–320. DOI 10.1007/s10803-006-0169-z.

23. Sabharwal, T., Gupta, R. (2019). Human identification after plastic surgery using region based score level fusion of local facial features. Journal of Information Security and Applications, 48, 102373. DOI 10.1016/j.jisa.2019.102373.

24. Kurth, S., Moyse, E., Bahri, M. A., Salmon, E., Bastin, C. (2015). Recognition of personally familiar faces and functional connectivity in Alzheimer’s disease. Cortex, 67, 59–73. DOI 10.1016/j.cortex.2015.03.013.

25. Joseph, R. M., Tanaka, J. (2003). Holistic and part-based face recognition in children with autism. Journal of Child Psychology & Psychiatry, 44(4), 529–542. DOI 10.1111/1469-7610.00142.

26. Whittington, J., Holland, T. (2011). Recognition of emotion in facial expression by people with Prader-Willi syndrome. Journal of Intellectual Disability Research, 55(1), 75–84. DOI 10.1111/j.1365-2788.2010.01348.x.

27. Brown, L. A., Cohen, A. S. (2010). Facial emotion recognition in schizotypy: The role of accuracy and social cognitive bias. Journal of the International Neuropsychological Society, 16(3), 474–483. DOI 10.1017/S135561771000007X.

28. Bidani, S., Priya, R. P., Vijayarajan, V., Prasath, V. B. S. (2020). Automatic body mass index detection using correlation of face visual cues. Technology and Health Care, 28(1), 107–112. DOI 10.3233/THC-191850.

29. Ruffman, T., Halberstadt, J., Murray, J. (2009). Recognition of facial, auditory, and bodily emotions in older adults. Journals of Gerontology: Series B, 64(6), 696–703. DOI 10.1093/geronb/gbp072.

30. Du, S., Ward, R. K. (2010). Adaptive region-based image enhancement method for robust face recognition under variable illumination conditions. IEEE Transactions on Circuits and Systems for Video Technology, 20(9), 1165–1175. DOI 10.1109/TCSVT.2010.2045817.

31. Rodriguez-Pulecio, C. G., Benitez-Restrepo, H. D., Bovik, A. C. (2019). Making long-wave infrared face recognition robust against image quality degradations. Quantitative InfraRed Thermography Journal, 16(3–4), 218–242. DOI 10.1080/17686733.2019.1579020.

32. Al-Temeemy, A. A. (2019). Multispectral imaging: Monitoring vulnerable people. Optik, 180, 469–483. DOI 10.1016/j.ijleo.2018.11.042.

33. Liu, S., Liu, H., John, V., Liu, Z., Blasch, E. (2020). Enhanced situation awareness through CNN-based deep multimodal image fusion. Optical Engineering, 59(5), 053103. DOI 10.1117/1.OE.59.5.053103.

34. Samal, A., Iyengar, P. A. (1992). Automatic recognition and analysis of human faces and facial expressions: A survey. Pattern Recognition, 25(1), 65–77. DOI 10.1016/0031-3203(92)90007-6.

35. Filippini, C., Perpetuini, D., Cardone, D., Chiarelli, A. M., Merla, A. (2020). Thermal infrared imaging-based affective computing and its application to facilitate human robot interaction: A review. Applied Sciences, 10(8), 2924. DOI 10.3390/app10082924.

36. Chellappa, R., Wilson, C. L. (1995). Human and machine recognition of faces: A survey. Proceedings of the IEEE, 83(5), 705–741. DOI 10.1109/5.381842.

37. Qi, B., John, V., Liu, Z., Mita, S. (2016). Pedestrian detection from thermal images: A sparse representation based approach. Infrared Physics & Technology, 76, 157–167. DOI 10.1016/j.infrared.2016.02.004.

38. Zhang, H. C., Zhang, Y. N., Huang, T. S. (2013). Pose-robust face recognition via sparse representation. Pattern Recognition, 46(5), 1511–1521. DOI 10.1016/j.patcog.2012.10.025.

39. Luo, C. W., Sun, B., Yang, K., Lu, T. R., Yeh, W. C. (2019). Thermal infrared and visible sequences fusion tracking based on a hybrid tracking framework with adaptive weighting scheme. Infrared Physics & Technology, 99, 265–276. DOI 10.1016/j.infrared.2019.04.017.

40. Hassaballah, M., Aly, S. (2015). Face recognition: Challenges, achievements and future directions. IET Computer Vision, 9(4), 614–626. DOI 10.1049/iet-cvi.2014.0084.

41. Di, P., Wang, X., Chen, T., Hu, B. (2020). Multisensor data fusion in testability evaluation of equipment. Mathematical Problems in Engineering, 2020, 7821070. DOI 10.1155/2020/7821070.

42. Martins, G., de Souza, S. G., dos Santos, I. L., Pirmez, L., de Farias, C. M. (2021). On a multisensor knowledge fusion heuristic for the Internet of Things. Computer Communications, 176(3), 190–206. DOI 10.1016/j.comcom.2021.04.025.

43. Tsai, P. H., Lin, Y. J., Ou, Y. Z., Chu, E. T. H., Liu, J. W. S. (2014). A framework for fusion of human sensor and physical sensor data. IEEE Transactions on Systems Man & Cybernetics Systems, 44(9), 1248–1261. DOI 10.1109/TSMC.2014.2309090. [Google Scholar] [CrossRef]

44. Markovic, G. B., Sokolovic, V. S., Dukic, M. L. (2019). Distributed hybrid two-stage multi-sensor fusion for cooperative modulation classification in large-scale wireless sensor networks. Sensors, 19(19), 4339. DOI 10.3390/s19194339. [Google Scholar] [CrossRef]

45. Fritze, A., Mönks, U., Holst, C. A., Lohweg, V. (2017). An approach to automated fusion system design and adaptation. Sensors, 17(3), 601. DOI 10.3390/s17030601. [Google Scholar] [CrossRef]

46. Abu-Mahfouz, A. M., Hancke, G. P. (2018). Localised information fusion techniques for location discovery in wireless sensor networks. International Journal of Sensor Networks, 26(1), 12–25. DOI 10.1504/IJSNET.2017.10007406. [Google Scholar] [CrossRef]

47. Aziz, A. M. (2014). A new adaptive decentralized soft decision combining rule for distributed sensor systems with data fusion. Information Sciences, 256, 197–210. DOI 10.1016/j.ins.2013.09.031. [Google Scholar] [CrossRef]

48. Ciuonzo, D., Rossi, P. S. (2014). Decision fusion with unknown sensor detection probability. IEEE Signal Processing Letters, 21(2), 208–212. DOI 10.1109/LSP.2013.2295054. [Google Scholar] [CrossRef]

49. Aeberhard, M., Schlichtharle, S., Kaempchen, N., Bertram, T. (2012). Track-to-track fusion with asynchronous sensors using information matrix fusion for surround environment perception. IEEE Transactions on Intelligent Transportation Systems, 13(4), 1717–1726. DOI 10.1109/TITS.2012.2202229. [Google Scholar] [CrossRef]

50. Ajgl, J., Straka, O. (2018). Covariance intersection in track-to-track fusion: Comparison of fusion configurations. IEEE Transactions on Industrial Informatics, 14(3), 1127–1136. DOI 10.1109/TII.2017.2782234. [Google Scholar] [CrossRef]

51. Luo, R. C., Chang, C. C., Lai, C. C. (2011). Multisensor fusion and integration: Theories, applications, and its perspectives. IEEE Sensors Journal, 11(12), 3122–3138. DOI 10.1109/JSEN.2011.2166383. [Google Scholar] [CrossRef]

52. Joshi, R., Sanderson, A. C. (1997). Minimal representation multisensor fusion using differential evolution. IEEE Transactions on Systems, Man, and Cybernetics–Part A: Systems and Humans, 29(1), 63–76. DOI 10.1109/3468.736361. [Google Scholar] [CrossRef]

53. Munz, M., Mahlisch, M., Dietmayer, K. (2010). Generic centralized multi sensor data fusion based on probabilistic sensor and environment models for driver assistance systems. IEEE Intelligent Transportation Systems Magazine, 2(1), 6–17. DOI 10.1109/MITS.2010.937293. [Google Scholar] [CrossRef]

54. Qu, Y. H., Yang, M. H., Zhang, J. Q., Xie, W., Qiang, B. H. et al. (2021). An outline of multi-sensor fusion methods for mobile agents indoor navigation. Sensors, 21(5), 1605. DOI 10.3390/s21051605. [Google Scholar] [CrossRef]

55. Durrant-Whyte, H. F. (1988). Sensor models and multisensor integration. The International Journal of Robotics Research, 7(6), 97–113. DOI 10.1177/027836498800700608. [Google Scholar] [CrossRef]

56. Fan, X. W., Liu, Y. B. (2018). Multisensor normalized difference vegetation index intercalibration: A comprehensive overview of the causes of and solutions for multisensor differences. IEEE Geoscience and Remote Sensing Magazine, 6(4), 23–45. DOI 10.1109/MGRS.2018.2859814. [Google Scholar] [CrossRef]

57. Da, K., Li, T. C., Zhu, Y. F., Fan, H. Q., Fu, Q. (2021). Recent advances in multisensor multitarget tracking using random finite set. Frontiers of Information Technology & Electronic Engineering, 22(1), 5–24. DOI 10.1631/FITEE.2000266. [Google Scholar] [CrossRef]

58. Zhou, Y. H., Tao, X., Yu, Z. Y., Fujita, H. (2019). Train-movement situation recognition for safety justification using moving-horizon TBM-based multisensor data fusion. Knowledge-Based Systems, 177, 117–126. DOI 10.1016/j.knosys.2019.04.010. [Google Scholar] [CrossRef]

59. Kashinath, S. A., Mostafa, S. A., Mustapha, A., Madin, H., Lim, D. et al. (2021). Review of data fusion methods for real-time and multi-sensor traffic flow analysis. IEEE Access, 9, 51258–51276. DOI 10.1109/ACCESS.2021.3069770. [Google Scholar] [CrossRef]

60. Chavez-Garcia, R. O., Aycard, O. (2016). Multiple sensor fusion and classification for moving object detection and tracking. IEEE Transactions on Intelligent Transportation Systems, 17(2), 525–534. DOI 10.1109/TITS.2015.2479925. [Google Scholar] [CrossRef]

61. Wang, Z. H., Zekavat, S. A. (2009). A novel semidistributed localization via multinode TOA–DOA fusion. IEEE Transactions on Vehicular Technology, 58(7), 3426–3435. DOI 10.1109/TVT.2009.2014456. [Google Scholar] [CrossRef]

62. Choi, K., Singh, S., Kodali, A., Pattipati, K. R., Sheppard, J. W. et al. (2009). Novel classifier fusion approaches for fault diagnosis in automotive systems. IEEE Transactions on Instrumentation and Measurement, 58(3), 602–611. DOI 10.1109/TIM.2008.2004340. [Google Scholar] [CrossRef]

63. Lai, K. C., Yang, Y. L., Jia, J. J. (2010). Fusion of decisions transmitted over flat fading channels via maximizing the deflection coefficient. IEEE Transactions on Vehicular Technology, 59(7), 3634–3640. DOI 10.1109/TVT.2010.2052118. [Google Scholar] [CrossRef]

64. Pei, D. S., Jing, M. X., Liu, H. P., Sun, F. C., Jiang, L. H. (2020). A fast RetinaNet fusion framework for multi-spectral pedestrian detection. Infrared Physics & Technology, 105, 103178. DOI 10.1016/j.infrared.2019.103178. [Google Scholar] [CrossRef]

65. Sun, W., Zhang, X. R., Peeta, S., He, X. Z., Li, Y. F. (2017). A real-time fatigue driving recognition method incorporating contextual features and two fusion levels. IEEE Transactions on Intelligent Transportation Systems, 18(12), 3408–3420. DOI 10.1109/TITS.2017.2690914. [Google Scholar] [CrossRef]

66. Xia, Y. J., Li, X. M., Shan, Z. Y. (2013). Parallelized fusion on multisensor transportation data: A case study in CyberITS. International Journal of Intelligent Systems, 28(6), 540–564. DOI 10.1002/int.21592. [Google Scholar] [CrossRef]

67. Yao, Z. J., Yi, W. D. (2014). License plate detection based on multistage information fusion. Information Fusion, 18, 78–85. DOI 10.1016/j.inffus.2013.05.008. [Google Scholar] [CrossRef]

68. Zhu, M., Hu, W. S., Kar, N. C. (2018). Multi-sensor fusion-based permanent magnet demagnetization detection in permanent magnet synchronous machines. IEEE Transactions on Magnetics, 54(11), 8110106. DOI 10.1109/TMAG.2018.2836182. [Google Scholar] [CrossRef]

69. Surzhikov, V. P., Khorsov, N. N., Khorsov, P. N. (2013). On the possible use of a multisensor test system for the study of electromagnetic emissions from a sample under load. Russian Journal of Nondestructive Testing, 49(11), 664–667. DOI 10.1134/S1061830913110077. [Google Scholar] [CrossRef]

70. Gunerkar, R. S., Jalan, A. K. (2019). Classification of ball bearing faults using vibro-acoustic sensor data fusion. Experimental Techniques, 43(5), 635–643. DOI 10.1007/s40799-019-00324-0. [Google Scholar] [CrossRef]

71. Cheng, B. Y., Jin, L. X., Li, G. N. (2018). Adaptive fusion framework of infrared and visual image using saliency detection and improved dual-channel PCNN in the LNSST domain. Infrared Physics & Technology, 92, 30–43. DOI 10.1016/j.infrared.2018.04.017. [Google Scholar] [CrossRef]

72. Yan, X., Qin, H. L., Li, J., Zhou, H. X., Yang, T. W. (2016). Multi-focus image fusion using a guided-filter-based difference image. Applied Optics, 55(9), 2230–2239. DOI 10.1364/AO.55.002230. [Google Scholar] [CrossRef]

73. Esfandyari, J., De Nuccio, R., Xu, G. (2011). Solutions for MEMS sensor fusion. Electronic Engineering & Product World, 54(7), 18–21. DOI 10.1016/j.ssc.2011.04.014. [Google Scholar] [CrossRef]

74. Verma, N., Singh, D. (2020). Analysis of cost-effective sensors: Data fusion approach used for forest fire application. Materials Today: Proceedings, 24, 2283–2289. DOI 10.1016/j.matpr.2020.03.756. [Google Scholar] [CrossRef]

75. Ferreira, M. S., Santos, J. L., Frazao, O. (2014). Silica microspheres array strain sensor. Optics Letters, 39(20), 5937–5940. DOI 10.1364/OL.39.005937. [Google Scholar] [CrossRef]

76. Psuj, G. (2017). Magnetic field multi-sensor transducer for detection of defects in steel components. IEEE Transactions on Magnetics, 53(4), 1–4. DOI 10.1109/TMAG.2016.2621822. [Google Scholar] [CrossRef]

77. Jiang, W., Hu, W. W., Xie, C. H. (2017). A new engine fault diagnosis method based on multi-sensor data fusion. Applied Sciences, 7(3), 280. DOI 10.3390/app7030280. [Google Scholar] [CrossRef]

78. Elfring, J., Appeldoorn, R., van Den Dries, S., Kwakkernaat, M. (2016). Effective world modeling: Multisensor data fusion methodology for automated driving. Sensors, 16(10), 1668. DOI 10.3390/s16101668. [Google Scholar] [CrossRef]

79. Luo, R. C., Chang, C. C. (2010). Multisensor fusion and integration aspects of mechatronics. IEEE Industrial Electronics Magazine, 4(2), 20–27. DOI 10.1109/MIE.2010.936760. [Google Scholar] [CrossRef]

80. Watts, J. A., Kelly, F. R., Bauch, T. D., Murgo, J. P., Rubal, B. J. (2018). Rest and exercise hemodynamic and metabolic findings in active duty soldiers referred for cardiac catheterization to exclude heart disease: Insights from past invasive cardiopulmonary exercise testing using multisensor high fidelity catheters. Catheterization and Cardiovascular Interventions, 91(1), 35–46. DOI 10.1002/ccd.27101. [Google Scholar] [CrossRef]

81. Pombo, N., Bousson, K., Araujo, P., Viana, J. (2015). Medical decision-making inspired from aerospace multisensor data fusion concepts. Medical Informatics, 40(3), 185–197. DOI 10.3109/17538157.2013.872113. [Google Scholar] [CrossRef]

82. Villena, A., Lalys, F., Saudreau, B., Pascot, R., Barre, A. et al. (2021). Fusion imaging with a mobile C-arm for peripheral arterial disease. Annals of Vascular Surgery, 71, 273–279. DOI 10.1016/j.avsg.2020.07.059. [Google Scholar] [CrossRef]

83. Naini, A. S., Patel, R. V., Samani, A. (2010). CT-enhanced ultrasound image of a totally deflated lung for image-guided minimally invasive tumor ablative procedures. IEEE Transactions on Biomedical Engineering, 57(10), 2627–2630. DOI 10.1109/TBME.2010.2058110. [Google Scholar] [CrossRef]

84. Balderas-Diaz, S., Martinez, M. P., Guerrero-Contreras, G., Miro, E., Benghazi, K. et al. (2017). Using actigraphy and mHealth systems for an objective analysis of sleep quality on systemic lupus erythematosus patients. Methods of Information in Medicine, 56(2), 171–179. DOI 10.3414/ME16-02-0011. [Google Scholar] [CrossRef]

85. Baur, A. D. J., Collettini, F., Ender, J., Maxeiner, A., Schreiter, V. et al. (2017). MRI-TRUS fusion for electrode positioning during irreversible electroporation for treatment of prostate cancer. Diagnostic & Interventional Radiology, 23(4), 321–325. DOI 10.5152/dir.2017.16276. [Google Scholar] [CrossRef]

86. Li, L. Q., Xie, S. M., Ning, J. M., Chen, Q. S., Zhang, Z. Z. (2019). Evaluating green tea quality based on multisensor data fusion combining hyperspectral imaging and olfactory visualization systems. Journal of the Science of Food and Agriculture, 99(4), 1787–1794. DOI 10.1002/jsfa.9371. [Google Scholar] [CrossRef]

87. Li, Z. T., Zhou, G., Zhang, T. X. (2019). Interleaved group convolutions for multitemporal multisensor crop classification. Infrared Physics & Technology, 102, 103023. DOI 10.1016/j.infrared.2019.103023. [Google Scholar] [CrossRef]

88. Du, X. X., Zare, A. (2020). Multiresolution multimodal sensor fusion for remote sensing data with label uncertainty. IEEE Transactions on Geoscience and Remote Sensing, 58(4), 2755–2769. DOI 10.1109/TGRS.2019.2955320. [Google Scholar] [CrossRef]

89. Castrignano, A., Buttafuoco, G., Quarto, R., Vitti, C., Langella, G. et al. (2017). A combined approach of sensor data fusion and multivariate geostatistics for delineation of homogeneous zones in an agricultural field. Sensors, 17(12), 2794. DOI 10.3390/s17122794. [Google Scholar] [CrossRef]

90. Chang, F. L., Heinemann, P. H. (2020). Prediction of human odour assessments based on hedonic tone method using instrument measurements and multi-sensor data fusion integrated neural networks. Biosystems Engineering, 200, 272–283. DOI 10.1016/j.biosystemseng.2020.10.005. [Google Scholar] [CrossRef]

91. Ignat, T., Alchanatis, V., Schmilovitch, Z. (2014). Maturity prediction of intact bell peppers by sensor fusion. Computers and Electronics in Agriculture, 104, 9–17. DOI 10.1016/j.compag.2014.03.006. [Google Scholar] [CrossRef]

92. Blank, S., Fohst, T., Berns, K. (2012). A biologically motivated approach towards modular and robust low-level sensor fusion for application in agricultural machinery design. Computers & Electronics in Agriculture, 89, 10–17. DOI 10.1016/j.compag.2012.07.016. [Google Scholar] [CrossRef]

93. Tavares, T. R., Molin, J. P., Javadi, S. H., Carvalho, H. W. P. D., Mouazen, A. M. (2021). Combined use of VIS-NIR and XRF sensors for tropical soil fertility analysis: Assessing different data fusion approaches. Sensors, 21(1), 148. DOI 10.3390/s21010148. [Google Scholar] [CrossRef]

94. Zhou, Y. H., Zuo, Z. T., Xu, F. R., Wang, Y. Z. (2020). Origin identification of Panax notoginseng by multi-sensor information fusion strategy of infrared spectra combined with random forest. Spectrochimica Acta Part A: Molecular and Biomolecular Spectroscopy, 226, 117619. DOI 10.1016/j.saa.2019.117619. [Google Scholar] [CrossRef]

95. Tavares, T. R., Molin, J. P., Nunes, L. C., Wei, M. C. F., Krug, F. J. et al. (2021). Multi-sensor approach for tropical soil fertility analysis: Comparison of individual and combined performance of VNIR, XRF, and LIBS spectroscopies. Agronomy, 11(6), 1028. DOI 10.3390/agronomy11061028. [Google Scholar] [CrossRef]

96. Bhataria, K. C., Shah, B. K. (2018). A review of image fusion techniques. 2018 Second International Conference on Computing Methodologies and Communication (ICCMC), pp. 114–123. Erode, India. DOI 10.1109/ICCMC.2018.8487686. [Google Scholar] [CrossRef]

97. Sun, J., Zhu, H. Y., Xu, Z. B., Han, C. Z. (2013). Poisson image fusion based on Markov random field fusion model. Information Fusion, 14(3), 241–254. DOI 10.1016/j.inffus.2012.07.003. [Google Scholar] [CrossRef]

98. Gong, Y. M., Ma, Z. Y., Wang, M. J., Deng, X. Y., Jiang, W. (2020). A new multi-sensor fusion target recognition method based on complementarity analysis and neutrosophic set. Symmetry, 12(9), 1435. DOI 10.3390/sym12091435. [Google Scholar] [CrossRef]

99. Gao, S. S., Zhong, Y. M., Li, W. (2011). Random weighting method for multisensor data fusion. IEEE Sensors Journal, 11(9), 1955–1961. DOI 10.1109/JSEN.2011.2107896. [Google Scholar] [CrossRef]

100. Meng, F. J., Shi, R. X., Shan, D. L., Song, Y., He, W. P. et al. (2017). Multi-sensor image fusion based on regional characteristics. International Journal of Distributed Sensor Networks, 13(11), 155014771774110. DOI 10.1177/1550147717741105. [Google Scholar] [CrossRef]

101. Jameel, A., Ghafoor, A., Riaz, M. M. (2014). Adaptive compressive fusion for visible/IR sensors. IEEE Sensors Journal, 14(7), 2230–2231. DOI 10.1109/JSEN.2014.2320721. [Google Scholar] [CrossRef]

102. Charoentam, O., Patanavijit, V., Jitapunkul, S. (2006). A robust region-based multiscale image fusion scheme for mis-registration problem of thermal and visible images. The 18th International Conference on Pattern Recognition (ICPR), pp. 669–672. Hong Kong, China, IEEE. [Google Scholar]

103. Tian, X., Yang, X., Yang, F., Qi, T. J. (2019). A visible-light activated gas sensor based on perylenediimide-sensitized SnO2 for NO2 detection at room temperature. Colloids and Surfaces A: Physicochemical and Engineering Aspects, 578, 123621. DOI 10.1016/j.colsurfa.2019.123621. [Google Scholar] [CrossRef]

104. Rivadeneira, R. E., Suarez, P. L., Sappa, A. D., Vintimilla, B. X. (2019). Thermal image super resolution through deep convolutional neural network. In: Karray, F., Campilho, A., Yu, A. (Eds.), Image analysis and recognition. ICIAR 2019. Lecture notes in computer science, vol. 11663. Springer, Cham. DOI 10.1007/978-3-030-27272-2_37. [Google Scholar] [CrossRef]

105. Pourmomtaz, N., Nahvi, M. (2020). Multispectral particle filter tracking using adaptive decision-based fusion of visible and thermal sequences. Multimedia Tools and Applications, 79(25–26), 18405–18434. DOI 10.1007/s11042-020-08640-z. [Google Scholar] [CrossRef]

106. Hu, H. M., Wu, J. W., Li, B., Guo, Q., Zheng, J. (2017). An adaptive fusion algorithm for visible and infrared videos based on entropy and the cumulative distribution of gray levels. IEEE Transactions on Multimedia, 19(12), 2706–2719. DOI 10.1109/TMM.2017.2711422. [Google Scholar] [CrossRef]

107. Ren, L., Pan, Z. B., Cao, J. Z., Liao, J. W. (2021). Infrared and visible image fusion based on variational auto-encoder and infrared feature compensation. Infrared Physics & Technology, 117(2), 103839. DOI 10.1016/j.infrared.2021.103839. [Google Scholar] [CrossRef]

108. Bulanon, D. M., Burks, T. F., Alchanatis, V. (2009). Image fusion of visible and thermal images for fruit detection. Biosystems Engineering, 103(1), 12–22. DOI 10.1016/j.biosystemseng.2009.02.009. [Google Scholar] [CrossRef]

109. Zhou, H., Sun, M., Ren, X., Wang, X. Y. (2021). Visible-thermal image object detection via the combination of illumination conditions and temperature information. Remote Sensing, 13(18), 3656. DOI 10.3390/rs13183656. [Google Scholar] [CrossRef]

110. Hermosilla, G., Gallardo, F., Farias, G., San Martin, C. (2015). Fusion of visible and thermal descriptors using genetic algorithms for face recognition systems. Sensors, 15(8), 17944–17962. DOI 10.3390/s150817944. [Google Scholar] [CrossRef]

111. Yang, M. D., Su, T. C., Lin, H. Y. (2018). Fusion of infrared thermal image and visible image for 3D thermal model reconstruction using smartphone sensors. Sensors, 18(7), 2003. DOI 10.3390/s18072003. [Google Scholar] [CrossRef]

112. John, V., Tsuchizawa, S., Liu, Z., Mita, S. (2017). Fusion of thermal and visible cameras for the application of pedestrian detection. Signal Image & Video Processing, 11(3), 517–524. DOI 10.1007/s11760-016-0989-z. [Google Scholar] [CrossRef]

113. Yan, Y. J., Ren, J. X., Zhao, H. M., Sun, G. Y., Wang, Z. et al. (2018). Cognitive fusion of thermal and visible imagery for effective detection and tracking of pedestrians in videos. Cognitive Computation, 10(1), 94–104. DOI 10.1007/s12559-017-9529-6. [Google Scholar] [CrossRef]

114. Wang, S. F., Pan, B. W., Chen, H. P., Ji, Q. (2018). Thermal augmented expression recognition. IEEE Transactions on Cybernetics, 48(7), 2203–2214. DOI 10.1109/TCYB.2017.2786309. [Google Scholar] [CrossRef]

115. Chen, J., Li, X. J., Luo, L. B., Mei, X. G., Ma, J. Y. (2020). Infrared and visible image fusion based on target-enhanced multiscale transform decomposition. Information Sciences, 508, 64–78. DOI 10.1016/j.ins.2019.08.066. [Google Scholar] [CrossRef]

116. Du, Q. L., Xu, H., Ma, Y., Huang, J., Fan, F. (2018). Fusing infrared and visible images of different resolutions via total variation model. Sensors, 18(11), 3827. DOI 10.3390/s18113827. [Google Scholar] [CrossRef]

117. Li, Z., Hu, H. M., Zhang, W., Pu, S. L., Li, B. (2021). Spectrum characteristics preserved visible and near-infrared image fusion algorithm. IEEE Transactions on Multimedia, 23, 306–319. DOI 10.1109/TMM.2020.2978640. [Google Scholar] [CrossRef]

118. Ma, J. Y., Ma, Y., Li, C. (2018). Infrared and visible image fusion methods and applications: A survey. Information Fusion, 45, 153–178. DOI 10.1016/j.inffus.2018.02.004. [Google Scholar] [CrossRef]

119. Wang, C., Li, X. Q., Wang, W. F. (2020). Image fusion for improving thermal human face image recognition. The 5th International Conference on Cognitive Systems and Signal Information Processing (ICCSSIP), pp. 417–427. Zhuhai, China, Springer. DOI 10.1007/978-981-16-2336-3_39. [Google Scholar] [CrossRef]

120. Kanmani, M., Narasimhan, V. (2020). Optimal fusion aided face recognition from visible and thermal face images. Multimedia Tools and Applications, 79(25–26), 17859–17883. DOI 10.1007/s11042-020-08628-9. [Google Scholar] [CrossRef]

121. Chen, X. L., Wang, H. P., Liang, Y. H., Meng, Y., Wang, S. F. (2022). A novel infrared and visible image fusion approach based on adversarial neural network. Sensors, 22(1), 304. DOI 10.3390/s22010304. [Google Scholar] [CrossRef]

122. Zhou, H. L., Mian, A., Wei, L., Creighton, D., Hossny, M. et al. (2014). Recent advances on singlemodal and multimodal face recognition: A survey. IEEE Transactions on Human-Machine Systems, 44(6), 701–716. DOI 10.1109/THMS.2014.2340578. [Google Scholar] [CrossRef]

123. Park, S. B., Kim, G., Baek, H. J., Han, J. H., Kim, J. H. (2018). Remote pulse rate measurement from near-infrared videos. IEEE Signal Processing Letters, 25(8), 1271–1275. DOI 10.1109/LSP.2018.2842639. [Google Scholar] [CrossRef]

124. Tian, T., Mei, X. G., Yu, Y., Zhang, C., Zhang, X. Y. (2015). Automatic visible and infrared face registration based on silhouette matching and robust transformation estimation. Infrared Physics & Technology, 69, 145–154. DOI 10.1016/j.infrared.2014.12.011. [Google Scholar] [CrossRef]

125. Wang, Z. L., Chen, Z. Z., Wu, F. (2018). Thermal to visible facial image translation using generative adversarial networks. IEEE Signal Processing Letters, 25(8), 1161–1165. DOI 10.1109/LSP.2018.2845692. [Google Scholar] [CrossRef]

126. Shen, J. L., Zhang, Y. C., Xing, T. T. (2018). The study on the measurement accuracy of non-steady state temperature field under different emissivity using infrared thermal image. Infrared Physics & Technology, 94, 207–213. DOI 10.1016/j.infrared.2018.09.022. [Google Scholar] [CrossRef]

127. Wei, S. L., Qin, W. B., Han, L. W., Cheng, F. Y. (2019). The research on compensation algorithm of infrared temperature measurement based on intelligent sensors. Cluster Computing, 22, 6091–6100. DOI 10.1007/s10586-018-1828-5. [Google Scholar] [CrossRef]

128. Usamentiaga, R., Venegas, P., Guerediaga, J., Vega, L., Molleda, J. et al. (2014). Infrared thermography for temperature measurement and non-destructive testing. Sensors, 14(7), 12305–12348. DOI 10.3390/s140712305. [Google Scholar] [CrossRef]

129. Farokhi, S., Sheikh, U. U., Flusser, J., Yang, B. (2015). Near infrared face recognition using Zernike moments and Hermite kernels. Information Sciences, 316, 234–245. DOI 10.1016/j.ins.2015.04.030. [Google Scholar] [CrossRef]

130. Jo, H., Kim, W. Y. (2019). NIR reflection augmentation for deep learning-based NIR face recognition. Symmetry, 11(10), 1234. DOI 10.3390/sym11101234. [Google Scholar] [CrossRef]

131. Whitelam, C., Bourlai, T. (2015). On designing an unconstrained tri-band pupil detection system for human identification. Machine Vision and Applications, 26(7–8), 1007–1025. DOI 10.1007/s00138-015-0700-3. [Google Scholar] [CrossRef]

132. Kim, J., Ra, M., Kim, W. Y. (2020). A DCNN-based fast NIR face recognition system robust to reflected light from eyeglasses. IEEE Access, 8, 80948–80963. DOI 10.1109/ACCESS.2020.2991255. [Google Scholar] [CrossRef]

133. Bai, J. F., Ma, Y., Li, J., Li, H., Fang, Y. et al. (2014). Good match exploration for thermal infrared face recognition based on YWF-SIFT with multi-scale fusion. Infrared Physics & Technology, 67, 91–97. DOI 10.1016/j.infrared.2014.06.010. [Google Scholar] [CrossRef]

134. Chambino, L. L., Silva, J. S., Bernardino, A. (2020). Multispectral facial recognition: A review. IEEE Access, 8, 207871–207883. DOI 10.1109/ACCESS.2020.3037451. [Google Scholar] [CrossRef]

135. Panetta, K., Wan, Q., Agaian, S., Rajeev, S., Kamath, S. et al. (2018). A comprehensive database for benchmarking imaging systems. IEEE Transactions on Pattern Analysis and Machine Intelligence, 42(3), 509–520. DOI 10.1109/TPAMI.2018.2884458. [Google Scholar] [CrossRef]

136. Yang, M., Zhang, L., Yang, J., Zhang, D. (2013). Regularized robust coding for face recognition. IEEE Transactions on Image Processing, 22(5), 1753–1766. DOI 10.1109/TIP.2012.2235849. [Google Scholar] [CrossRef]

137. Schroff, F., Kalenichenko, D., Philbin, J. (2015). FaceNet: A unified embedding for face recognition and clustering. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 815–823. Boston, MA, USA. DOI 10.1109/CVPR.2015.7298682. [Google Scholar] [CrossRef]

138. Chen, C. L. P., Liu, Z. L. (2018). Broad learning system: An effective and efficient incremental learning system without the need for deep architecture. IEEE Transactions on Neural Networks & Learning Systems, 29(1), 10–24. DOI 10.1109/TNNLS.2017.2716952. [Google Scholar] [CrossRef]

139. Lin, C. F., Lin, S. F. (2013). Accuracy enhanced thermal face recognition. Infrared Physics & Technology, 61, 200–207. DOI 10.1016/j.infrared.2013.08.011. [Google Scholar] [CrossRef]

140. Son, J., Kim, S., Sohn, K. (2016). Fast illumination-robust foreground detection using hierarchical distribution map for real-time video surveillance system. Expert Systems with Applications, 66, 32–41. DOI 10.1016/j.eswa.2016.08.062. [Google Scholar] [CrossRef]

141. Poster, D., Thielke, M., Nguyen, R., Rajaraman, S., Di, X. et al. (2021). A large-scale, time-synchronized visible and thermal face dataset. 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 1558–1567. Waikoloa, USA. DOI 10.1109/WACV48630.2021.00160. [Google Scholar] [CrossRef]

142. Bai, Y., Xing, T. W., Jiang, Y. D. (2014). Advances in infrared spectrum zoom imaging system research. Spectroscopy & Spectral Analysis, 34(12), 3419–3423. DOI 10.3964/j.issn.1000-0593(2014)12-3419-05. [Google Scholar] [CrossRef]

143. Li, L., Zhou, F. G., Zheng, Y., Bai, X. Z. (2019). Reconstructed saliency for infrared pedestrian images. IEEE Access, 7, 42652–42663. DOI 10.1109/ACCESS.2019.2906332. [Google Scholar] [CrossRef]

144. Sun, C. Q., Zhang, C., Xiong, N. X. (2020). Infrared and visible image fusion techniques based on deep learning: A review. Electronics, 9(12), 2162. DOI 10.3390/electronics9122162. [Google Scholar] [CrossRef]

145. Bi, Y., Lv, M. S., Wei, Y. J., Guan, N., Yi, W. (2016). Multi-feature fusion for thermal face recognition. Infrared Physics & Technology, 77, 366–374. DOI 10.1016/j.infrared.2016.05.011. [Google Scholar] [CrossRef]

146. Khaleghi, B., Khamis, A., Karray, F. O., Razavi, S. N. (2013). Multisensor data fusion: A review of the state-of-the-art. Information Fusion, 14(1), 28–44. DOI 10.1016/j.inffus.2011.08.001. [Google Scholar] [CrossRef]

147. Wang, C., Li, Y. P. (2010). Combine image quality fusion and illumination compensation for video-based face recognition. Neurocomputing, 73(7–9), 1478–1490. DOI 10.1016/j.neucom.2009.11.010. [Google Scholar] [CrossRef]

148. Pavlović, M., Stojanović, B., Petrović, R., Stanković, S. (2018). Fusion of visual and thermal imagery for illumination invariant face recognition system. 2018 14th Symposium on Neural Networks and Applications (NEUREL), pp. 1–5. Belgrade, Serbia. DOI 10.1109/NEUREL.2018.8586985. [Google Scholar] [CrossRef]


Copyright © 2023 The Author(s). Published by Tech Science Press.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.
