Open Access

Open Access

ARTICLE

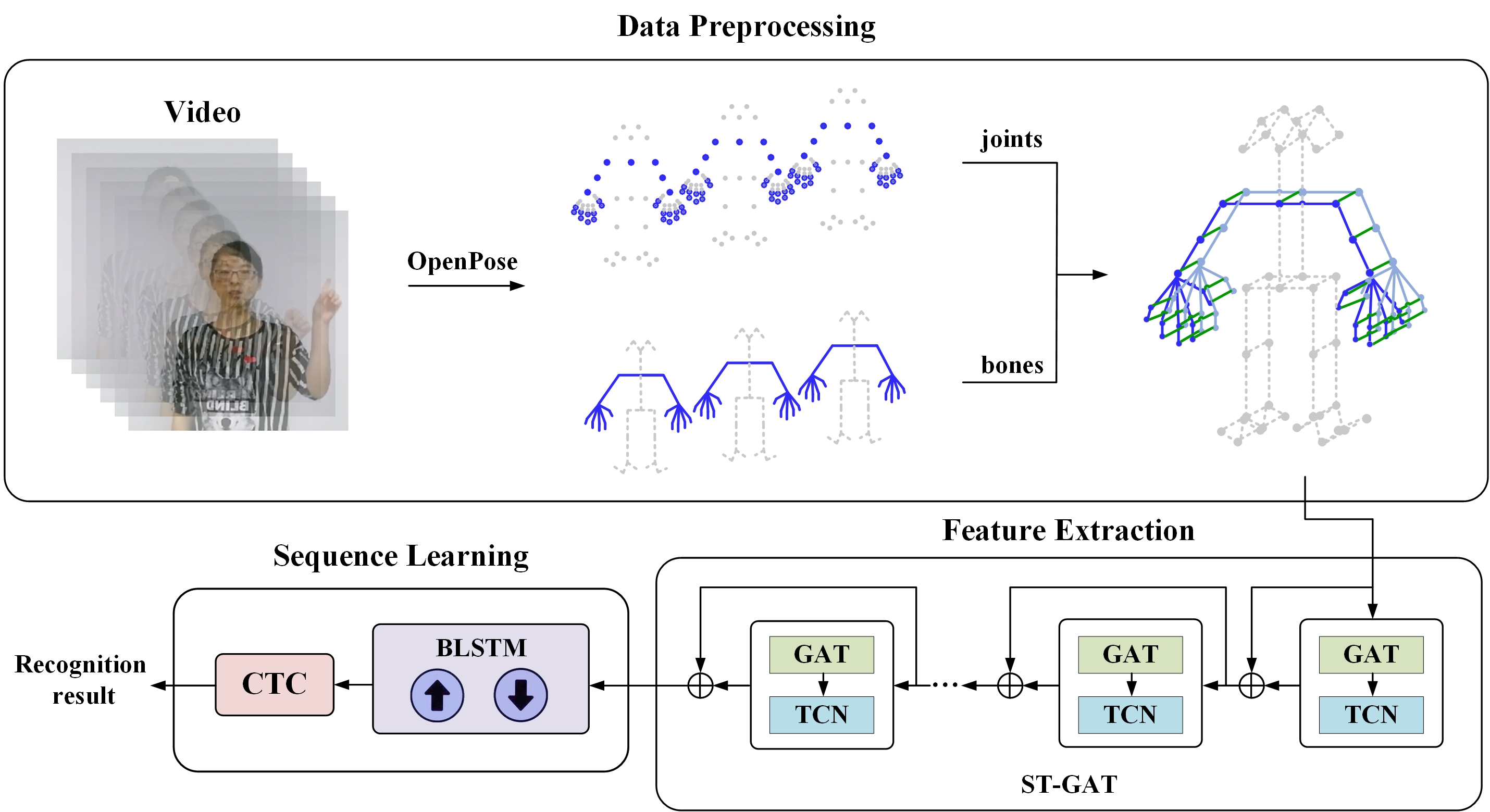

Continuous Sign Language Recognition Based on Spatial-Temporal Graph Attention Network

College of Information Science and Technology, Qingdao University of Science and Technology, Qingdao, 266061, China

* Corresponding Author: Shujun Zhang. Email:

Computer Modeling in Engineering & Sciences 2023, 134(3), 1653-1670. https://doi.org/10.32604/cmes.2022.021784

Received 04 February 2022; Accepted 05 May 2022; Issue published 20 September 2022

Abstract

Continuous sign language recognition (CSLR) is challenging due to the complexity of video background, hand gesture variability, and temporal modeling difficulties. This work proposes a CSLR method based on a spatial-temporal graph attention network to focus on essential features of video series. The method considers local details of sign language movements by taking the information on joints and bones as inputs and constructing a spatial-temporal graph to reflect inter-frame relevance and physical connections between nodes. The graph-based multi-head attention mechanism is utilized with adjacent matrix calculation for better local-feature exploration, and short-term motion correlation modeling is completed via a temporal convolutional network. We adopted BLSTM to learn the long-term dependence and connectionist temporal classification to align the word-level sequences. The proposed method achieves competitive results regarding word error rates (1.59%) on the Chinese Sign Language dataset and the mean Jaccard Index (65.78%) on the ChaLearn LAP Continuous Gesture Dataset.Graphic Abstract

Keywords

Cite This Article

Copyright © 2023 The Author(s). Published by Tech Science Press.

Copyright © 2023 The Author(s). Published by Tech Science Press.This work is licensed under a Creative Commons Attribution 4.0 International License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Submit a Paper

Submit a Paper Propose a Special lssue

Propose a Special lssue View Full Text

View Full Text Download PDF

Download PDF Downloads

Downloads

Citation Tools

Citation Tools