Open Access

REVIEW

A Review of the Current Task Offloading Algorithms, Strategies and Approach in Edge Computing Systems

1 School of Computer Science and Technology, Anhui University, Hefei, 230039, China

2 School of Computer and Software, Nanjing University of Information Science and Technology, Nanjing, 210044, China

* Corresponding Author: Yiwen Zhang. Email:

(This article belongs to the Special Issue: Artificial Intelligence for Mobile Edge Computing in IoT)

Computer Modeling in Engineering & Sciences 2023, 134(1), 35-88. https://doi.org/10.32604/cmes.2022.021394

Received 12 January 2022; Accepted 18 March 2022; Issue published 24 August 2022

Abstract

Task offloading is an important concept in edge computing and the Internet of Things (IoT) because resource-constrained devices must offload computation-intensive tasks to more powerful remote devices. Task offloading offers several advantages, including longer battery life, lower latency, and better application performance. A task offloading method determines which parts of an application should run locally and which should be offloaded for remote execution. The offloading decision is influenced by several factors that shape the offloading system's operating environment, including application properties, network conditions, hardware features, and mobility. This study provides a thorough examination of current task offloading and resource allocation in edge computing, covering offloading strategies, algorithms, and the factors that influence offloading. Offloading strategies fall into two types: full offloading and partial offloading. The algorithms for task offloading and resource allocation are then categorized into two groups: machine learning algorithms and non-machine learning algorithms. Under machine learning, we examine Supervised Learning, Unsupervised Learning, and Reinforcement Learning (RL). Under non-machine learning, we elaborate on (non-)convex optimization, Lyapunov optimization, Game theory, Heuristic Algorithms, Dynamic Voltage Scaling, Gibbs Sampling, and Generalized Benders Decomposition (GBD). Finally, we highlight and discuss some research challenges and issues in edge computing.

The pervasiveness of the smart Internet of Things (IoT) [1] enables many electronic sensors and devices to be connected, generating a large flow of data [2]. IoT applications such as smart homes, disease prevention and control, and telecommunication are transforming our lives. Many of these applications are time-sensitive and demand substantial energy, memory, and computational resources. Although IoT devices are becoming increasingly powerful, their battery, CPU, and memory are still insufficient for running large applications on a single device. Computational offloading, in which computation workloads are transferred to another system for execution, is one remedy for these difficulties [3].

Meanwhile, with robust data center architectures, the cloud is a well-tested and widely used option for enhancing the resource capabilities of end devices. Furthermore, the cloud is well equipped with the automation tools and features needed to provide transparency to end devices while concealing the difficulty and logistical intricacies of this resource extension [4]. Consequently, offloading the computation-intensive tasks of resource-intensive applications from end devices to centralized cloud infrastructure is a well-explored solution [4–6]. Nevertheless, applications such as media processing, online gaming, Augmented Reality (AR), Virtual Reality (VR), self-driving automotive applications, and recommendation systems [7–9] run on a wide range of mobile devices. As the focus of new applications shifted to high-throughput, low-latency communication, the cloud began to expose significant drawbacks for these ubiquitous, resource-constrained mobile devices: running such resource-hungry applications requires fast response times and high data rates [10].

The need for alternative solutions arose from the long distance between end devices and cloud infrastructure, the unreliable and intermittent transport network, the cost of traversing the backhaul network, and the larger security attack surface along this long communication path [4]. Edge computing is a new computing paradigm that employs edge servers close to users. Three related realizations (technologies) of edge computing have been proposed: Open Edge Computing cloudlets [3], Multi-access Edge Computing (MEC) of the European Telecommunications Standards Institute (ETSI) (2016), and Fog computing of the OpenFog Consortium (2016) [11]. This infrastructure component, which establishes an extra resource layer between consumer devices and the cloud, can curb the rising bandwidth usage in backhaul, transport, and cloud networks, reduce communication latency, and support real-time applications. Some research also applies AI to optimize edge performance [12]. End devices can now offload resource-intensive operations to a nearby edge device, reducing total execution time while avoiding additional communication with a distant cloud infrastructure. This method, known as task offloading or computation offloading, improves the user's experience by reducing latency and improving energy efficiency for battery-powered devices [4]. Computation offloading may entail partitioning power-hungry mobile applications so that parts of them run on remote resources. Parts of the code profiled as computation- and energy-heavy are identified for remote execution (partial offloading). Alternatively, the offloading method decides whether to offload all tasks to a cloudlet or to execute all tasks locally (full offloading). Several research publications have highlighted the benefits of offloading for saving energy and minimizing system latency on mobile devices.

Task offloading and resource allocation comprise a remote or local execution technique, a transmission approach, and a result send-back procedure. The major components of the offloading scheme are as follows. (A) Job partitioning: if a task can be partitioned, it must be divided optimally before offloading; if not, the entire task is either offloaded to an edge server or run locally. (B) Offloading decision: whether to offload the task to edge server(s) for execution or to perform it locally. (C) Resource allocation: determining the amount of resources, including computing, communication, and energy resources, to allocate to the tasks or components [11]. Fig. 1 shows a typical offloading process.
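To make component (B) concrete, the following is a minimal sketch of a binary offloading decision for one indivisible task. It assumes a simple latency model that is common in the offloading literature (upload time plus remote execution time plus result send-back time, compared against local execution time) and ignores queuing, propagation, and energy. The Task fields, the function names, and all numeric values are hypothetical illustrations, not the formulation of any surveyed paper.

```python
from dataclasses import dataclass

@dataclass
class Task:
    cycles: float         # CPU cycles needed to execute the task
    data_in_bits: float   # input data uploaded when offloading
    data_out_bits: float  # result data sent back to the device

def local_latency(task: Task, f_local_hz: float) -> float:
    """Execution time when the task runs on the device itself."""
    return task.cycles / f_local_hz

def offload_latency(task: Task, uplink_bps: float, f_edge_hz: float,
                    downlink_bps: float) -> float:
    """Upload time + remote execution time + result send-back time."""
    upload = task.data_in_bits / uplink_bps
    execute = task.cycles / f_edge_hz
    download = task.data_out_bits / downlink_bps
    return upload + execute + download

def decide(task: Task, f_local_hz: float, uplink_bps: float,
           f_edge_hz: float, downlink_bps: float) -> str:
    """Binary (full) offloading: offload only if it finishes sooner than local execution."""
    t_local = local_latency(task, f_local_hz)
    t_off = offload_latency(task, uplink_bps, f_edge_hz, downlink_bps)
    return "offload" if t_off < t_local else "local"

# Hypothetical tasks: one compute-heavy, one data-heavy but computationally light
heavy = Task(cycles=2e9, data_in_bits=4e6, data_out_bits=1e5)
light = Task(cycles=1e8, data_in_bits=8e6, data_out_bits=1e5)
for t in (heavy, light):
    print(decide(t, f_local_hz=1e9, uplink_bps=1e7, f_edge_hz=1e10, downlink_bps=2e7))
```

With these made-up numbers the heavy task is offloaded (about 0.61 s versus 2 s locally), while the light, data-heavy task stays local (0.1 s versus about 0.82 s), because uploading its input costs more time than executing it on the device.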

Figure 1: A typical offloading process

This study provides a thorough examination of task offloading in edge computing, including offloading strategies (full or partial), algorithms, and factors that influence offloading. The following are the contributions of this article:

1. A comprehensive survey of the current task offloading and resource allocation in edge computing, including offloading strategies, offloading algorithms, and factors affecting offloading, is presented.

2. We classify offloading strategies as either full or partial offloading and present a comprehensive survey of each.

3. We group several recent task offloading and resource allocation algorithms into two main categories: (i) machine learning algorithms and (ii) non-machine learning algorithms.

4. We elaborate on the factors affecting offloading and discuss some research challenges and issues.

The rest of the paper is structured as follows. Section 2 examines work related to this article, gives an overview of offloading, and describes our paper selection criteria. Section 3 presents offloading performance metrics and the factors affecting offloading. Section 4 discusses offloading strategies and classifies offloading algorithms into two groups: machine learning and non-machine learning algorithms. Section 5 presents an open discussion and analysis of the various metrics, algorithms, systems, and utilized tools. Section 6 compares the major offloading algorithms and presents some research challenges. Finally, Section 7 concludes the paper.

The authors of [7] studied offloading modeling in edge computing. The article discussed some major edge computing architectures and classified past computation offloading research into different groups. The authors also explored various basic models used in offloading modeling, such as the channel, computation and communication, and energy harvesting models, and reviewed several offloading modeling techniques, including (non-)convex optimization, Markov decision processes, game theory, Lyapunov optimization, and machine learning. In Mobile Cloud Computing, Ahmed et al. [13] investigated mobile application frameworks and analyzed optimization methodologies affecting design, deployment, and offloading performance. A strength of this review is its well-structured classification.

Saeik et al. [4] explored how the edge and cloud can be coupled to help with task offloading. They focused on mathematical, artificial intelligence, and control theory optimization processes that can be used to satisfy dynamic requirements in an end-to-end application execution strategy.

Shakarami et al. [10] presented a survey of stochastic-based offloading approaches in various computation environments, including Mobile Cloud Computing (MCC), Mobile Edge Computing (MEC), and Fog Computing (FC), using a classical taxonomy to identify new mechanisms. Markov chains, Markov processes, and Hidden Markov Models are the three core fields of the proposed taxonomy.

Shakarami et al. [14] reviewed ML-based computation offloading strategies in the MEC environment in the form of a classical taxonomy. The taxonomy has three primary categories: reinforcement learning-based, supervised learning-based, and unsupervised learning-based mechanisms. These classes are then compared on essential characteristics such as performance measures, case studies, strategies used, evaluation tools, and their benefits and drawbacks.

Zheng et al. [3] provided a complete overview of computation offloading in edge computing, covering scenarios, influencing variables, and offloading methodologies. The authors specifically covered crucial problems in the offloading process, such as whether, where, and what to offload.

Bhattacharya et al. [15] examined adaptation techniques in offloading systems. They defined the variable characteristics of the offloading ecosystem, presented offloading solutions that adapt to these parameters, and highlighted the resulting gains in user Quality of Experience, giving a unified picture of the task offloading challenge.

Carvalho et al. [16] surveyed computation offloading in edge computing using artificial intelligence (AI) and non-AI techniques. Under AI techniques, they considered machine learning-based techniques that show promise in overcoming the shortcomings of current computation offloading approaches and categorized them into classes for better analysis. They also discussed an offloading use case in a vehicular edge computing environment. Guevara et al. [17] examined the primary issues with QoS constraints for applications running in computing paradigms such as fog and cloud, and then demonstrated a typical machine learning classification method. The paper's key strength is its focused treatment of machine learning. Shan et al. [18] classified offloading methods by purpose: minimizing execution delay, minimizing energy consumption, and optimizing the tradeoff between energy consumption and execution latency.

However, the above surveys have several flaws, including a lack of paper selection criteria and of consideration for non-machine learning algorithms. Most of them focus only on machine learning, ignoring powerful algorithms that are not machine learning-based. Furthermore, several studies do not clearly distinguish between full and partial offloading. Our study aims to address these gaps: we examine both machine learning and non-machine learning algorithms, provide a clear definition of full and partial offloading, and review recent research that uses both kinds of techniques.

With the prevalence of smart devices and cloud computing, the amount of data generated by Internet of Things devices has exploded. Furthermore, emerging IoT applications such as Virtual Reality (VR), Augmented Reality (AR), intelligent transportation systems, smart homes, smart health, and smart factories require ultra-low latency for data communication and processing, which the cloud computing paradigm does not provide [19]. Mobile Edge Computing (MEC) is an efficient technique that brings computation closer to users: computation-intensive tasks are offloaded to servers deployed on the user side, minimizing the backhaul traffic that applications would otherwise send to a remote cloud data center. For delay-sensitive applications, MEC shortens the time needed to complete computations and saves energy. The offloading process usually entails two important decisions: which tasks should be offloaded and where they should be offloaded. The latter is particularly important because it directly influences the system's quality of service and performance. Several researchers have proposed novel approaches to enhance offloading, including Markov decision process (MDP)-based, RL-based, and even link-prediction-based approaches. Zhang et al. [20] used link prediction in their offloading model; similarly, Liu et al. [21] adopted link prediction in a recommendation system, which shows that link prediction is a versatile approach. Unfortunately, there is currently little research on link-prediction-based offloading. Offloading can take place from device to device, device to edge server, edge server to edge server, edge server to cloud, cloud to edge server, and device to cloud. Fig. 2 shows a typical MEC architecture and the various places where offloading can occur. As illustrated, this structure has six divisions classified into three main layers.

Figure 2: MEC architecture

• At the device layer (device to device), IoT gadgets have become an integral part of our daily lives in recent years; they are both data producers and data consumers. Mobile devices provide limited computing resources, and most of these resources are idle most of the time. When a mobile device's compute capabilities are exceeded, it can split the application into smaller tasks and offload them to adjacent mobile devices with idle processing resources. This helps relieve single-device resource scarcity and increases overall resource utilization.

• At the edge layer (device to edge server), most IoT devices cannot perform sophisticated computation tasks because of their limited resources, and a single resource-limited device (e.g., a smartphone) may not complete its tasks promptly. IoT devices can transfer computation to nearby edge servers, such as Cloudlets [22] or MEC servers [23], where it is processed and evaluated, as shown in Fig. 1. This lowers both cost and delay, lessens the burden on embedded devices when running machine learning algorithms, and enhances application performance.

• At the edge layer (edge server to edge server), edge servers can serve IoT devices well because of their computational capacity and quick response time. However, because a single edge server's processing capacity is limited, multiple edge nodes must be combined to balance load and share data in order to provide cooperative services, as in collaborative edge computing.

• At the cloud layer (edge server to cloud server), edge servers (e.g., MEC, Cloudlet, and Fog) have the processing and storage capability to complete most operations at the edge layer. However, in many instances they still require cloud services to store and access data. Smart healthcare, for example, offloads from fog to cloud to keep patient records for a long time. Because resources are widely distributed, the edge server and cloud service must collaborate to complete a task. During rescue missions, for instance, fog computing requires the original spatial information provided by the cloud to direct rescuers in on-site search and rescue activities. In this fashion, fog and cloud assign jobs to each other and work together to provide service.

• At the cloud layer (device to cloud server), IoT devices may in some instances need to offload tasks to a remote cloud server for data storage or processing. Heavy tasks can be offloaded to powerful, distant centralized clouds (e.g., Amazon EC2 [24], Microsoft Azure [25], and Google), and enormous volumes of data can be stored in cloud storage. Mobile Cloud Computing (MCC) [26] benefits mobile users by prolonging battery life, enabling advanced applications, and increasing data storage capacity. Mobile games, for example, can offload computationally heavy operations such as graphics rendering to the cloud and display the results on screen, improving the gaming experience while conserving battery life on mobile devices.

• At the device layer (edge server to device), IoT devices may in some cases need external data for calculations, such as temperature, humidity, and other environmental parameters. Because of their restricted functions and resources, it is difficult for IoT devices to measure and retain this information in significant amounts. Instead, they can connect to nearby edge servers such as Cloudlets or MEC servers, which have the computing power to gather data from various sources. This effectively reduces the cost of connecting to the cloud, the load on devices, and transmission latency.

• Task offloading can also be divided into full offloading and partial offloading. Full offloading, also known as binary, coarse-grained, or total offloading, occurs when the entire task is either migrated to the edge or cloud for execution or executed locally. In contrast, partial offloading, also known as fine-grained or dynamic offloading, dynamically transmits as little code as possible and offloads only the computation-intensive parts of the application (more details on offloading categories in Section 4). Fig. 3 shows the roadmap of this paper.

Figure 3: Roadmap of this paper
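Full and partial offloading can be contrasted with a small worked model. The sketch below assumes a data-partitionable task whose fraction lam is offloaded while the remainder runs locally in parallel, and it neglects the time to return results; under these assumptions the completion time is the slower of the two branches, and making both branches equal gives the best split, lam* = a / (a + b), where a is the whole-task local time and b the whole-task offloading time. Here lam = 0 is full local execution and lam = 1 is full offloading. The function names and numbers are illustrative, not taken from a surveyed paper.

```python
def partial_latency(lam, cycles, data_bits, f_local_hz, rate_bps, f_edge_hz):
    """Completion time when a fraction lam of the task is offloaded (0 <= lam <= 1)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    local_branch = (1.0 - lam) * cycles / f_local_hz
    remote_branch = lam * (data_bits / rate_bps + cycles / f_edge_hz)
    return max(local_branch, remote_branch)  # the two branches run in parallel

def best_split(cycles, data_bits, f_local_hz, rate_bps, f_edge_hz):
    """Split that equalizes both branches: lam* = a / (a + b)."""
    a = cycles / f_local_hz                        # whole task executed locally
    b = data_bits / rate_bps + cycles / f_edge_hz  # whole task offloaded
    lam = a / (a + b)
    return lam, partial_latency(lam, cycles, data_bits, f_local_hz, rate_bps, f_edge_hz)

params = dict(cycles=2e9, data_bits=4e6, f_local_hz=1e9, rate_bps=1e7, f_edge_hz=1e10)
lam_star, t_star = best_split(**params)
print(f"full local:   {partial_latency(0.0, **params):.3f} s")
print(f"full offload: {partial_latency(1.0, **params):.3f} s")
print(f"partial (lam={lam_star:.2f}): {t_star:.3f} s")
```

With these numbers, full local execution takes 2 s, full offloading 0.6 s, and the balanced split (about 77% offloaded) roughly 0.46 s, which is the kind of gain partial offloading targets when the task can actually be divided.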

This part describes our approach for selecting papers on MEC offloading. Exploring relevant papers thoroughly is necessary to build a knowledge-rich survey.

This survey examines the essential features and techniques used in papers over time, as well as the main concerns and obstacles in offloading, such as the factors affecting offloading. Because an important goal of this survey is to cover MEC offloading research comprehensively and highlight relevant outstanding issues, the following key research questions must be answered.

Q1. What technique or algorithm is utilized in ML and Non-ML based offloading approaches? See Subsections 4.1 and 4.2.

Q2. What performance metrics are usually utilized in ML and Non-ML based offloading approaches? See Section 3.

Q3. What factors affect both ML and Non-ML based offloading approaches? See Subsection 3.1.

Q4. What evaluation tools are utilized for assessing the ML and Non-ML based approaches? See Subsection 5.4.

Q5. What systems are utilized in both ML and Non-ML based approaches? See Subsection 5.3.

Q6. What are the future research directions and open perspectives of ML and Non-ML based offloading approaches? See Subsection 6.1.

Sections 3 to 6 elaborate on these technical questions.

Suitable MEC papers were identified in academic databases. The article selection criteria are summarized as follows:

1. Papers published between 2015 and 2021;

2. Papers published in the MEC field;

3. Sufficient technical quality, used to choose appropriate MEC papers.

In the exploration process, keywords such as “mobile”, “edge”, “computing”, “offloading”, “MEC”, “unsupervised learning”, “deep learning”, “reinforcement learning”, “deep Q-networks”, “game theory”, “Lyapunov optimization”, “supervised learning”, “machine learning”, “convex optimization”, “heuristic”, “Markov-decision process”, and “resource allocation” were used. The search, restricted to papers published between 2015 and 2021, was carried out in April 2021. It returned a very large number of results because offloading spans many models in the literature, including stochastic and non-stochastic models with techniques such as game theory, machine learning, and many more. In total, the search found 1147 articles. In the first step, 900 irrelevant papers were discarded after analyzing essential components such as the title, abstract, contributions, and conclusion. Next, 152 papers were discarded as low quality after examining the organization of the remaining papers, which left 95 MEC articles. After removing 20 duplicate papers and 4 books, the remaining 71 papers related to ML and Non-ML are included in the current study, as shown in Fig. 4.

Figure 4: Paper selection

The following technical question is addressed in this section:

Q2: What performance metrics are usually utilized in ML and Non-ML based offloading approaches?

Researchers in the task offloading domain consider several offloading metrics, either jointly as a multi-objective problem or individually. This section briefly explains these metrics. When solving the task offloading problem, several different objectives may be applied; an objective function formulates these goals and guides the offloading solution formally and mathematically. The objectives take the form of offloading metrics. The metrics covered here are energy, latency, cost, bandwidth, and response time.

A. Energy

The overall energy required by offloading comprises the energy consumed to send the task from the device to the server, the energy consumed to execute the task on the edge server, and the energy consumed to return the results to the device [23,27]. Because mobile and IoT devices are typically battery-powered, maximizing battery lifetime by lowering the device's energy usage is a major concern. It is natural to expect the largest device-side energy savings from full offloading. Even then, however, several other energy consumers must be considered: from a network-wide perspective, the energy burden is merely moved to the edge or cloud infrastructure. As a result, energy consumption must be minimized at all tiers of an end-to-end communication paradigm. A variety of measures can be used to assess this goal. The most frequent is average power consumption, calculated by summing the power consumption of the hardware equipment; another option is energy consumption, that is, power use over time. Typically, reducing power use also reduces energy consumption.
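The three energy components listed above can be illustrated with a minimal sketch. It assumes the dynamic-power model often used in the offloading literature for local execution, E = kappa * C * f^2 (kappa being an effective switched-capacitance constant, C the cycle count, f the CPU frequency), a fixed radio power while sending and receiving, and a fixed server power draw during remote execution. Every constant, including KAPPA, is a hypothetical value chosen for illustration.

```python
KAPPA = 1e-27  # assumed effective switched capacitance of the device CPU

def local_energy(cycles, f_local_hz, kappa=KAPPA):
    """Device energy for local execution under the kappa * C * f^2 model."""
    return kappa * cycles * f_local_hz ** 2

def offload_energy(cycles, data_in_bits, data_out_bits, uplink_bps, downlink_bps,
                   p_tx_w, p_rx_w, f_edge_hz, p_edge_w):
    """Energy of each offloading stage: send, remote execution, result return."""
    send = p_tx_w * data_in_bits / uplink_bps       # device radio on while uploading
    execute = p_edge_w * cycles / f_edge_hz         # server power x execution time
    ret = p_rx_w * data_out_bits / downlink_bps     # device radio on while receiving
    return {"send": send, "execute": execute, "return": ret}

e_local = local_energy(cycles=2e9, f_local_hz=1e9)
parts = offload_energy(cycles=2e9, data_in_bits=4e6, data_out_bits=1e5,
                       uplink_bps=1e7, downlink_bps=2e7,
                       p_tx_w=0.5, p_rx_w=0.1, f_edge_hz=1e10, p_edge_w=20.0)
device_side = parts["send"] + parts["return"]
system_wide = sum(parts.values())
print(f"local: {e_local:.4f} J, device side when offloading: {device_side:.4f} J, "
      f"system-wide when offloading: {system_wide:.4f} J")
```

With these made-up numbers, offloading cuts the device's energy by roughly a factor of ten (about 0.2 J versus 2 J), yet the system-wide total (about 4.2 J) exceeds local execution, which mirrors the point above that full offloading partly moves the energy problem to the edge.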

B. Latency

The offloading latency comprises the time it takes to transmit the task to the edge servers, the time to execute the task on the servers, and the time to return the results to the device [28]. Several delay components contribute to this. The first source is task processing, which can occur locally on the device, at the edge, or in the cloud [29,30]. When a task is offloaded to a remote edge or cloud location, the transmission and propagation delays at the various infrastructure tiers must be considered, as well as the processing and queuing delays at the various processing and forwarding devices. Finally, splitting the task appropriately during the offloading decision can add further delay [31]. The delay objective can be expressed either as minimizing the average delay per task or as minimizing the overall delay of all the related tasks of a mobile application. Both objectives are constrained by the available resources and the network conditions.
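The delay sources and the two objectives described above can be summarized in a short sketch. It assumes that each task's delay is the plain sum of its components and that an application's related tasks run one after another, so the overall delay is the sum of the per-task delays (with parallel tasks it would instead be governed by the slowest one). The class name and component values are invented for illustration.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class TaskDelay:
    transmission: float        # s, data size / link rate, summed over hops
    propagation: float         # s, distance / signal speed, summed over hops
    queuing: float             # s, waiting at forwarding and processing nodes
    processing: float          # s, execution on the device, edge, or cloud
    partitioning: float = 0.0  # s, extra cost of splitting the task, if any

    def total(self) -> float:
        return (self.transmission + self.propagation + self.queuing
                + self.processing + self.partitioning)

def average_delay(tasks):
    """Objective 1: mean delay per task."""
    return mean(t.total() for t in tasks)

def overall_delay(tasks):
    """Objective 2: total delay of an application's tasks, assuming sequential execution."""
    return sum(t.total() for t in tasks)

app = [TaskDelay(0.04, 0.001, 0.01, 0.2),
       TaskDelay(0.1, 0.001, 0.0, 0.05, partitioning=0.005)]
print(f"average: {average_delay(app):.4f} s, overall: {overall_delay(app):.4f} s")
```

Keeping the components separate makes it easy to see which one dominates; in this made-up example, processing dominates the first task and transmission the second.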

C. Cost

Researchers calculate the cost of MEC offloading in different ways. Broadly, the cost covers delivering the tasks over the transmission medium, executing them on the server, and returning an acceptable answer to the request source. These costs depend on the task's location, response time, demand, and energy consumption. Since energy consumption and delay are the essential components of the total cost, making a trade-off between these two metrics is critical. The overall execution cost is therefore expressed in terms of the two previously described measures, comprising local and remote execution costs as well as processing and buffering delays.
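A common way to express this trade-off is a weighted sum of delay and energy. The sketch below assumes the two weights sum to one and that delay (s) and energy (J) are already on comparable scales; in practice they are often normalized first. The option names and figures are hypothetical, chosen so that the preferred option flips as the weight moves.

```python
def weighted_cost(latency_s, energy_j, w_time):
    """Cost = w_time * latency + (1 - w_time) * energy, with 0 <= w_time <= 1."""
    if not 0.0 <= w_time <= 1.0:
        raise ValueError("w_time must lie in [0, 1]")
    return w_time * latency_s + (1.0 - w_time) * energy_j

def cheapest(options, w_time):
    """Return the name of the option with the lowest weighted cost."""
    return min(options, key=lambda name: weighted_cost(*options[name], w_time))

# (latency in s, device energy in J) for each execution option; illustrative values
options = {"local": (0.5, 1.5), "offload": (1.2, 0.3)}
print(cheapest(options, w_time=0.8))  # delay-sensitive user
print(cheapest(options, w_time=0.2))  # battery-sensitive user
```

Here a delay-sensitive weighting (w_time = 0.8) favors local execution, while a battery-sensitive weighting (w_time = 0.2) favors offloading, even though the two options themselves are unchanged.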

D. Bandwidth

The available bandwidth at the access network, and how users share it to offload workloads, is a crucial constraint; because of its significant impact on task offloading performance, it can also be treated as an objective. Proper spectrum allocation becomes critical because available bandwidth is limited, particularly in IoT and congested cellular networks. Spectrum allocation is frequently linked to each end user's transmission rate and power level and to the duration of each device's transmission, so that bandwidth is distributed more effectively. To evaluate how effectively the spectrum is used, a useful approach is to analyze its usage with respect to the number of offloaded jobs, power transfer, and bandwidth consumption [32]. Given dynamic wireless settings, the best bandwidth scheduling must account for time-varying channel conditions and the average task response time.
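How spectrum allocation shapes offloading can be sketched with the Shannon capacity formula, r = b log2(1 + p g / (N0 b)), for a user given bandwidth b, transmit power p, channel gain g, and noise power spectral density N0. The example assumes interference-free orthogonal channels and compares an equal bandwidth split against one weighted toward the user with more data to upload; the function names and all numbers are hypothetical.

```python
import math

def shannon_rate(bandwidth_hz, tx_power_w, channel_gain, noise_psd_w_per_hz):
    """Achievable rate (bit/s) on an interference-free channel of the given bandwidth."""
    snr = tx_power_w * channel_gain / (noise_psd_w_per_hz * bandwidth_hz)
    return bandwidth_hz * math.log2(1.0 + snr)

def upload_times(total_bw_hz, shares, users, noise_psd_w_per_hz):
    """Upload time per user; shares are bandwidth fractions that must sum to 1.

    users: list of (data_bits, tx_power_w, channel_gain) tuples.
    """
    if abs(sum(shares) - 1.0) > 1e-9:
        raise ValueError("bandwidth shares must sum to 1")
    times = []
    for share, (data_bits, p_w, gain) in zip(shares, users):
        rate = shannon_rate(share * total_bw_hz, p_w, gain, noise_psd_w_per_hz)
        times.append(data_bits / rate)
    return times

users = [(8e6, 0.1, 1e-7), (2e6, 0.1, 1e-7)]  # (data bits, power W, gain)
equal = upload_times(2e6, [0.5, 0.5], users, 1e-17)
skewed = upload_times(2e6, [0.8, 0.2], users, 1e-17)
print(f"equal split, slowest upload:  {max(equal):.3f} s")
print(f"skewed split, slowest upload: {max(skewed):.3f} s")
```

With these numbers, giving more spectrum to the user with more data shortens the slowest upload from roughly 0.80 s to roughly 0.54 s; a real scheduler would also track the time-varying channel mentioned above.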

E. Response Time

Response time is the overall time between initiating a request and receiving an appropriate response. It can change with the system's processing time and latency, which vary with hardware resources and utilization. In the MEC scenario, response time is measured from offloading tasks from local devices to remote servers until the appropriate response is obtained on the originating devices. Response time and latency therefore differ: latency is the time it takes for a transmitted request to arrive at its destination and be processed, whereas response time covers the whole interval from request to response.
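The distinction between response time and latency can be made concrete with timestamps. The sketch below assumes four hypothetical timestamps per request, all taken on a common clock, and applies the two definitions above directly; the class and field names are illustrative only.

```python
from dataclasses import dataclass

@dataclass
class RequestTrace:
    initiated: float  # s, user issues the request on the device
    sent: float       # s, request is transmitted from the device
    processed: float  # s, server finishes processing the request
    received: float   # s, response arrives back at the device

    def latency(self) -> float:
        """Time for the transmitted request to reach the server and be processed."""
        return self.processed - self.sent

    def response_time(self) -> float:
        """Time from initiating the request to receiving the response."""
        return self.received - self.initiated

trace = RequestTrace(initiated=0.0, sent=0.01, processed=0.15, received=0.18)
print(f"latency: {trace.latency():.3f} s, response time: {trace.response_time():.3f} s")
```

The gap between the two numbers comes from local preparation before sending and from returning the result, which response time includes and latency does not.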

3.1 Factors Affecting Offloading

The following technical question is addressed in this section:

Q3: What factors affect both ML and Non-ML based offloading approaches?

1) Wireless Channel

The state of the wireless network is a critical aspect of computation offloading and has a considerable impact on offloading decisions, since wireless links are the most common means of communication between edge devices and servers. Wireless channels exhibit reflection, refraction, and multipath fading, which are absent in wired channels [11]. A defining feature of the wireless channel is the fluctuation of channel strength over time and frequency. These variations fall into two groups: (I) Large-scale fading, caused by signal path loss over distance and by shadowing from large objects such as buildings and hills, occurs when the mobile device moves a distance on the order of the cell size and is typically frequency-independent. (II) Small-scale fading, caused by constructive and destructive interference among the multiple signal paths between transmitter and receiver, occurs at the spatial scale of the carrier wavelength and is frequency-dependent [33]. A channel's availability pattern can be classified as stochastic or deterministic: a stochastic channel is available only some of the time, whereas a deterministic channel is expected to be available at all times [10].
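The two fading scales can be combined in a simple channel-gain sketch. It assumes a log-distance path-loss model with log-normal shadowing for large-scale fading, and Rayleigh small-scale fading, under which the power gain |h|^2 is exponentially distributed with unit mean. The reference gain at 1 m, the path-loss exponent, and the shadowing spread are illustrative values, not measurements.

```python
import random

def channel_gain(distance_m, rng, ref_gain=1e-3, path_loss_exp=3.5, shadowing_std_db=8.0):
    """One random power-gain sample for a link of the given length in metres."""
    path_loss = ref_gain * distance_m ** (-path_loss_exp)      # large scale: distance
    shadowing = 10 ** (rng.gauss(0.0, shadowing_std_db) / 10)  # large scale: obstacles
    small_scale = rng.expovariate(1.0)                          # Rayleigh: |h|^2 ~ Exp(1)
    return path_loss * shadowing * small_scale

rng = random.Random(42)
for d in (50, 100, 200):
    samples = sorted(channel_gain(d, rng) for _ in range(10000))
    print(f"{d:>4} m: median gain {samples[len(samples) // 2]:.2e}")
```

Because each sample changes from call to call, a gain drawn at decision time can differ substantially from the next one, which is why offloading schemes often treat the channel as a random state rather than a constant.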

2) Bandwidth and Network Interference

The bandwidth between the IoT device and the server determines the data transmission rate and hence the transmission time. In some circumstances, the transmission time between the device and the server may outweigh the time saved by offloading, making latency too long to meet the time constraint. Furthermore, network interference is difficult to anticipate; it is influenced by device mobility, bandwidth variation, network congestion, and the distance between devices and servers. Network interference significantly affects the offloading system's ability to serve latency-constrained applications [34].

3) End Device

Mobile devices, sensors, and IoT devices are just a few examples of edge devices, and their hardware architectures, processing capabilities, storage capacities, and operating systems differ. As a result, device heterogeneity affects offloading outcomes such as execution time and energy usage. Device mobility also significantly impacts offloading: users who are continually moving may generate interference among users. Furthermore, in the Internet of Vehicles (IoV) or with Unmanned Aerial Vehicles (UAVs), if the vehicle or UAV leaves the offloading range before the results are returned, it will most likely need to find other offloading resources, which increases delay and wastes device energy [35].
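The mobility risk described above can be sketched as a simple feasibility check. It assumes one-dimensional motion along a road at constant speed toward the far edge of a single server's coverage interval; the function names, geometry, and numbers are hypothetical.

```python
def dwell_time(position_m, speed_mps, server_pos_m, radius_m):
    """Seconds left inside the coverage interval [server - radius, server + radius]
    for a vehicle moving in the +x direction at constant speed."""
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    if abs(position_m - server_pos_m) > radius_m:
        return 0.0  # already outside coverage
    return (server_pos_m + radius_m - position_m) / speed_mps

def result_arrives_in_time(completion_s, position_m, speed_mps, server_pos_m, radius_m):
    """True if the offloaded result can be returned before the vehicle leaves coverage."""
    return completion_s <= dwell_time(position_m, speed_mps, server_pos_m, radius_m)

# A 0.6 s offloading job at 20 m/s (72 km/h), server at 100 m with a 300 m radius
print(result_arrives_in_time(0.6, position_m=0.0, speed_mps=20.0,
                             server_pos_m=100.0, radius_m=300.0))
print(result_arrives_in_time(0.6, position_m=395.0, speed_mps=20.0,
                             server_pos_m=100.0, radius_m=300.0))
```

In the first case the vehicle has 20 s of coverage left, so the job fits; in the second it has only 0.25 s left, so it would have to find another offloading resource.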

4) Near-End Device

The effect of computation offloading is largely determined by the servers that process the offloaded tasks. Before making an offloading decision, end devices should examine the servers' computation capabilities, available resources, distance, and access technologies. Computing capability, the rate at which a server processes data, is one of the most critical, but not the only, considerations in deciding whether to offload. A lack of resources also affects response time: if a task is offloaded to a server whose CPU is overloaded or occupied by other tasks, the task waits for the CPU, which increases response time and degrades offloading performance [36].
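The waiting effect can be illustrated with a single-CPU server that serves offloaded tasks first-come, first-served without preemption. This is a simplifying assumption (real servers may have several cores and other schedulers), and the function name, arrival times, and cycle counts are invented.

```python
def fifo_server(arrivals_s, cycles, f_edge_hz):
    """Return (waiting times, response times) for tasks sorted by arrival time.

    A single CPU executes one task at a time; a task that arrives while the CPU
    is busy waits until the previous task finishes.
    """
    cpu_free_at = 0.0
    waits, responses = [], []
    for arrival, task_cycles in sorted(zip(arrivals_s, cycles)):
        start = max(arrival, cpu_free_at)        # wait if the CPU is still busy
        cpu_free_at = start + task_cycles / f_edge_hz
        waits.append(start - arrival)
        responses.append(cpu_free_at - arrival)  # waiting + execution
    return waits, responses

waits, responses = fifo_server([0.0, 0.1, 0.2], [2e9, 2e9, 2e9], f_edge_hz=1e10)
print([round(w, 3) for w in waits], [round(r, 3) for r in responses])
```

Each task needs only 0.2 s of CPU time, yet the third one waits 0.2 s in the queue, doubling its response time.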

The following technical question is addressed in this section:

Q1. What technique or algorithm is utilized in ML and Non-ML based offloading approaches?

In this paper, we group the offloading techniques adopted in mobile edge computing into two categories: (1) machine learning offloading algorithms, listed in Table 1, and (2) non-machine learning offloading algorithms, listed in Table 2. We adopt this division for three reasons. First, most existing offloading reviews focus on machine learning methods and provide very detailed classifications of them [14]. Second, few reviews focus on non-machine learning methods, so dividing offloading methods into machine learning and non-machine learning categories covers offloading approaches comprehensively. Finally, this division gives a better understanding of how offloading has developed.

4.1 Machine Learning Offloading Algorithms

Here we will introduce several machine learning algorithms that have been adopted and invented by researchers to tackle problems with edge computing offloading. See Table 1 for more details.

4.1.1 What is Machine Learning?

According to IBM, machine learning is a branch of artificial intelligence (AI) and computer science that focuses on using data and algorithms to imitate the way that humans learn, gradually improving its accuracy [37]. More generally, machine learning can be defined as an application of AI that allows systems to learn and improve automatically from experience without being explicitly programmed. It focuses on developing computer programs that can access data and use it to learn for themselves [38]. Machine learning algorithms are commonly grouped into three categories: supervised, unsupervised, and reinforcement learning.

Supervised learning algorithms use labeled data to learn a mapping that they can apply to new data to predict future events. Starting from the analysis of a known training dataset, a supervised learning algorithm produces an inferred function that predicts output values. After sufficient training, the model can provide targets for any new input. The algorithm can also compare its output with the correct, expected output and detect errors, allowing the model to be modified as needed. Supervised learning is commonly used in bioinformatics, object identification, speech recognition, pattern recognition, handwriting recognition, and spam detection.

Unsupervised machine learning algorithms, on the other hand, are used when the training data is neither classified nor labeled. Unsupervised learning studies how machines can infer a function that describes hidden structure in unlabeled data. Rather than determining the correct output, the algorithm explores the data and draws inferences from the dataset to uncover hidden patterns. For example, Liao et al. [39] used clustering, an unsupervised machine learning technique, in their paper Coronavirus Pandemic Analysis Through Tripartite Graph Clustering in Online Social Networks.
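To make the clustering idea concrete, the sketch below implements plain k-means (Lloyd's algorithm), one common clustering method. It is an illustrative assumption, not the method of Liao et al. [39]: the two features (CPU load and latency in ms) and the device measurements are hypothetical, chosen only to show how unlabeled edge devices separate into groups without any labels being provided.

```python
import random

def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm on 2-D points. Returns (centroids, labels)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)          # k distinct points as initial centroids
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: (p[0] - centroids[c][0]) ** 2 + (p[1] - centroids[c][1]) ** 2)
            for p in points
        ]
        # Update step: move each centroid to the mean of its members (keep it if the cluster is empty).
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append((sum(m[0] for m in members) / len(members),
                                      sum(m[1] for m in members) / len(members)))
            else:
                new_centroids.append(centroids[c])
        if new_centroids == centroids:          # assignments have stabilized
            break
        centroids = new_centroids
    return centroids, labels

# Hypothetical, unlabeled device measurements: (CPU load, latency in ms).
devices = [(0.1, 5.0), (0.2, 6.0), (0.15, 4.0), (0.9, 40.0), (0.85, 45.0), (0.95, 42.0)]
centroids, labels = kmeans(devices, k=2)
```

Because Euclidean distance is scale-sensitive, real features would normally be standardized first; here latency dominates the distance, which is harmless for this toy data.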

Reinforcement learning algorithms learn by interacting with their environment: they take actions and observe the resulting rewards or penalties. Trial-and-error search and delayed rewards are the most important characteristics of reinforcement learning. This approach allows machines and software agents to automatically determine the appropriate behavior in a given environment to improve their performance. A simple reward feedback signal, called the reinforcement signal, is required for the agent to learn which behavior is best. In general, reinforcement learning methods are either model-based or model-free. Model-based methods learn or use a model of the environment (its transition function and rewards) and plan with it, whereas model-free methods such as Q-Learning and Deep Q-Learning learn values or policies directly from trial-and-error experience without an explicit model of the environment.

In this paper, the machine learning offloading algorithms considered include: Support Vector Machine (SVM), Deep Neural Networks, Decision Tree (DT), Instance-Based Learning (IBL), Analytic Hierarchy Process (AHP), Markov Model (MM), Q-Learning, Deep Q-Learning (or Deep Reinforcement Learning), and Actor-Critic Learning, for example, Deep Deterministic Policy Gradient (DDPG).

A) Support Vector Machine (SVM)

The Support Vector Machine (SVM) is a standard supervised learning technique that can solve both classification and regression problems, although in machine learning it is primarily used for classification. Because SVMs can solve classification problems quickly [40,41], they can also be used to make offloading decisions by transforming the decision problem into a classification problem (for example, labeling each task as "execute locally" or "offload"). This enables fast and accurate offloading decisions.
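The following Python sketch illustrates the SVM-as-classifier idea: it trains a linear soft-margin SVM by full-batch subgradient descent on the hinge loss and then labels a task as offload (+1) or local (-1). For simplicity the bias is folded into the weight vector (and is therefore also regularized). The two features (normalized task size and normalized bandwidth), the training data, and the hyperparameters are hypothetical; this is not the algorithm of any cited paper, and a production system would more likely use an optimized solver such as LIBSVM.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=3000):
    """Full-batch subgradient descent on the primal soft-margin SVM objective
        lam / 2 * ||w||^2 + (1 / n) * sum_i max(0, 1 - y_i * <w, x_i>).
    A constant 1.0 feature is appended so the last weight acts as the bias."""
    Xb = [list(x) + [1.0] for x in X]
    n, d = len(Xb), len(Xb[0])
    w = [0.0] * d
    for _ in range(epochs):
        grad = [lam * wj for wj in w]                    # gradient of the L2 regularizer
        for xi, yi in zip(Xb, y):
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            if margin < 1:                               # hinge term is active for this sample
                for j in range(d):
                    grad[j] -= yi * xi[j] / n
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w

def predict(w, x):
    """+1 = offload, -1 = execute locally."""
    score = sum(wj * xj for wj, xj in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1

# Hypothetical training set: (normalized task size, normalized bandwidth) -> decision.
X = [(0.9, 0.8), (0.8, 0.9), (0.7, 0.7), (0.95, 0.6),
     (0.1, 0.2), (0.2, 0.1), (0.3, 0.3), (0.2, 0.5)]
y = [1, 1, 1, 1, -1, -1, -1, -1]
w = train_linear_svm(X, y)
```

Once trained, a decision costs a single dot product, which is what makes this kind of classifier attractive for fast, on-device offloading decisions.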

Wu et al. [42] used an SVM in a vehicular edge computing scenario, proposing an efficient SVM-based offloading algorithm to satisfy the fast-offloading demand in vehicular networks. Majeed et al. [43] investigated the accuracy of offloading decisions to a cloud server and its impact on overall performance. They then proposed an accurate decision-making system suited to the adaptive and dynamic nature of mobile systems, using SVM learning to decide whether to execute tasks locally or remotely. According to the authors, the proposed scheme achieves up to 92% decision accuracy and reduces energy consumption. Wang et al. [44] investigated energy consumption minimization for task computation and transmission in cellular networks. They formulated an optimization problem to minimize the energy consumed by task computation and transmission and then proposed an SVM-based federated learning algorithm to determine user association proactively. Given the user association, edge servers or base stations (BSs) can collect information about their associated users' computational tasks and use it to optimize each user's transmit power and task allocation, minimizing each user's energy consumption.

B) Deep Learning

A DNN is a multi-layered artificial neural network (ANN) commonly used for prediction, anomaly detection [45], and optimization problems, where it identifies the best solution from training input by adjusting its weights appropriately. It is worth noting that DNNs themselves can be vulnerable to attacks such as adversarial samples [46]. When using a DNN, parameters such as the number of layers, the initial weights, and the learning rate must be chosen carefully to avoid overfitting and excessive computation time. These requirements demand substantial computational power, which contrasts with the time- and resource-constrained entities of the offloading ecosystem. Neural networks have many use cases: Hou et al. [47] used an encoder-decoder module to solve a time series problem, Zhang et al. [48] used Multilayer Perceptron (MLP) and LSTM modules to build a recommendation system for live streaming services, and Wang et al. [45] used a deep residual Convolutional Neural Network (CNN) based on transfer learning for anomaly detection in Industrial Control Systems (ICS).

Ali et al. [49] proposed a novel energy-efficient offloading algorithm based on deep learning. It trains an intelligent decision-making algorithm that selects an optimal set of application components to offload based on users' remaining energy, the energy consumed by application components, network conditions, computational load, the amount of data transferred, and communication delays. Yu et al. [50] considered a small cell-based mobile edge cloud network and proposed a partial computation offloading framework based on deep supervised learning. The algorithm considers the varying wireless network state and the availability of resources to minimize the offloading cost of the MEC network. Huang et al. [28] aimed to reduce energy consumption and improve quality of service, so they proposed a distributed deep learning-based offloading algorithm (DDLO) for MEC networks, in which multiple parallel DNNs are used to generate offloading decisions. Zhao et al. [51] used the ARIMA-BP model to estimate the edge cloud's computation capacity in order to optimize energy consumption under delay constraints in the MEC environment through selective offloading. Their approach, ABSO (ARIMA-BP-based Selective Offloading), uses a selective offloading algorithm to obtain the offloading policy.

C) Decision Tree (DT)

The decision tree is a machine learning model that can achieve high accuracy in various tasks while remaining very easy to interpret. What distinguishes decision trees from other ML models is the clarity with which they represent information: once trained, a decision tree's “knowledge” is directly expressed as a hierarchical structure that even non-experts can understand. Decision trees are useful for offloading because they lay out the problem so that all choices can be evaluated, allowing researchers to analyze the possible consequences of an offloading decision comprehensively.
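The sketch below shows why a decision tree is easy to read: each internal node tests one feature against a threshold and each leaf holds an offloading decision, so the path to any decision doubles as its explanation. The tree, the feature names (`bandwidth_mbps`, `cpu_cycles_g`, `server_load`), and the thresholds are hypothetical and hand-written for illustration; in practice they would be learned from data by an algorithm such as CART or ID3.

```python
# Hypothetical, hand-specified tree. Internal nodes test one feature against a threshold;
# leaves hold the decision. Thresholds are illustrative only, not learned from data.
TREE = {
    "feature": "bandwidth_mbps", "threshold": 5.0,
    "left": {"leaf": "local"},                     # slow link: transfer would dominate
    "right": {
        "feature": "cpu_cycles_g", "threshold": 1.0,
        "left": {"leaf": "local"},                 # light task: not worth the transfer
        "right": {
            "feature": "server_load", "threshold": 0.8,
            "left": {"leaf": "offload"},
            "right": {"leaf": "local"},            # server too busy
        },
    },
}

def decide(node, sample, path=None):
    """Walk from the root to a leaf; return the decision and the human-readable path."""
    path = [] if path is None else path
    if "leaf" in node:
        return node["leaf"], path
    f, t = node["feature"], node["threshold"]
    if sample[f] < t:
        return decide(node["left"], sample, path + [f"{f} < {t}"])
    return decide(node["right"], sample, path + [f"{f} >= {t}"])

decision, path = decide(TREE, {"bandwidth_mbps": 20.0, "cpu_cycles_g": 3.0, "server_load": 0.4})
# decision == "offload"; path == ["bandwidth_mbps >= 5.0", "cpu_cycles_g >= 1.0", "server_load < 0.8"]
```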

Rego et al. [26] proposed a scheme that uses decision trees and software-defined networking to tackle general offloading challenges, specifically when to offload, where to offload, and which metrics to monitor. The scheme also handles the offloading decision-making process and supports user mobility. Likewise, the authors of [52] proposed a scheme that uses a decision tree for offloading decision-making, together with an adaptive monitoring scheme that tracks the metrics relevant to the offloading decision.

D) Instance-Based Learning (IBL) (K-Nearest Neighbor (KNN))

K-Nearest Neighbor (KNN) is a machine learning technique that can be used for both regression and classification, although in industry it is mainly used for classification and prediction problems. KNN predicts the class of a target data point from the labels of the k data points nearest to it. Because it can deliver highly accurate predictions, KNN can be competitive with more complex models, which makes it suitable for applications that require high precision, such as computation and task offloading.
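A minimal kNN offloading classifier can be sketched as follows: it labels a new task by majority vote among the k most similar past tasks. The feature pair (normalized task size, normalized bandwidth) and the history of decisions are hypothetical. Features should be scaled to comparable ranges, because the Euclidean distance used here is scale-sensitive.

```python
import math
from collections import Counter

def knn_predict(history, query, k=3):
    """history: list of (feature_vector, label). Returns the majority label
    among the k records closest to `query` in Euclidean distance."""
    nearest = sorted(history, key=lambda rec: math.dist(rec[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical past decisions: (normalized task size, normalized bandwidth) -> label.
history = [((0.9, 0.8), "offload"), ((0.8, 0.9), "offload"), ((0.7, 0.8), "offload"),
           ((0.2, 0.1), "local"), ((0.1, 0.3), "local"), ((0.3, 0.2), "local")]
decision = knn_predict(history, (0.85, 0.85))
```

kNN has no training phase, so every prediction scans the stored history; for large histories a spatial index (e.g., a k-d tree) is the usual remedy.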

Crutcher et al. [53] took a new approach by integrating the k-Nearest Neighbor (kNN) method into their proposed model. They used aspects of Knowledge-Defined Networking (KDN) to produce realistic estimates of offloading costs from historical data. Each dimension of the high-dimensional feature space corresponds to a predicted metric. After the costs of computation offloading are assessed, input features for a hyper-profile and position node are computed, and a kNN query is then executed within this hyper-profile.

E) Analytic Hierarchy Process (AHP)

AHP is a simple and effective hierarchical method, grounded in mathematics and psychology, for organizing and analyzing multi-objective decisions. It consists of three parts: the ultimate goal or problem, all feasible solutions (called alternatives), and the criteria used to evaluate the alternatives. By quantifying the criteria and alternatives and relating them to the overall goal, AHP provides a coherent basis for reaching a decision. This makes it well suited to decision problems in computation offloading, where it can structure the decision-making process around the most relevant solutions and evaluate the alternative options.
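The following sketch shows the core AHP computation under assumed inputs. Priority weights are derived from a pairwise comparison matrix with the row geometric-mean approximation of the principal eigenvector, consistency is checked with Saaty's consistency ratio (conventionally accepted when below 0.1), and two candidate servers are ranked. The criteria (latency, energy, cost), the servers A and B, and all comparison values on Saaty's 1-9 scale are hypothetical and are not taken from Sheng et al. [54].

```python
import math

# Saaty's random consistency index for matrices of size n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}

def priorities(matrix):
    """Row geometric means, normalized to sum to 1 (approximates the principal eigenvector)."""
    n = len(matrix)
    gm = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(gm)
    return [g / total for g in gm]

def consistency_ratio(matrix, w):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); 1x1 and 2x2 matrices are always consistent."""
    n = len(matrix)
    if n <= 2:
        return 0.0
    aw = [sum(a * wj for a, wj in zip(row, w)) for row in matrix]
    lambda_max = sum(x / wi for x, wi in zip(aw, w)) / n
    return ((lambda_max - n) / (n - 1)) / RANDOM_INDEX[n]

# Hypothetical criteria comparison: latency vs energy vs cost.
criteria = [[1, 3, 5],
            [1 / 3, 1, 3],
            [1 / 5, 1 / 3, 1]]
w_criteria = priorities(criteria)

# Hypothetical server comparisons (A vs B) under each criterion.
per_criterion = [
    [[1, 3], [1 / 3, 1]],      # latency: A moderately better
    [[1, 1 / 2], [2, 1]],      # energy: B somewhat better
    [[1, 1], [1, 1]],          # cost: equal
]
local_w = [priorities(m) for m in per_criterion]
scores = {name: sum(wc * lw[i] for wc, lw in zip(w_criteria, local_w))
          for i, name in enumerate(["A", "B"])}
best = max(scores, key=scores.get)
```

With these inputs the criteria weights come out at roughly 0.64, 0.26, and 0.10, so server A's latency advantage outweighs B's energy advantage.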

Sheng et al. [54] proposed a computation offloading approach that considers the performance of devices and the resources available on servers. Their system has three primary stages: offloading decision-making, server selection, and task scheduling. The first stage uses task sizes, computational requirements, the server's computing capability, and network bandwidth to make the offloading decision. In the second stage, suitable servers are selected by applying AHP to analyze candidate servers thoroughly through multi-objective decision-making. In the third stage, the authors proposed a task scheduling model based on an enhanced auction process that takes time constraints and computing performance into account.

F) Q-Learning

Q-Learning is a model-free, off-policy reinforcement learning algorithm that determines the best action to take in a given state. Its goal is to learn a policy that tells an agent which action to take in which situation. Q-Learning can handle problems with stochastic transitions and rewards without requiring adaptations. For any finite MDP, Q-Learning finds a policy that maximizes the expected total reward, given sufficient exploration and suitably decaying learning rates. It can therefore solve sequential decision-making problems by learning the best state-action selection policy, which makes it suitable for offloading problems.
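The sketch below shows tabular Q-Learning on a deliberately tiny, hypothetical offloading problem: the state is the channel quality (bad or good), the action is local execution or offloading, and the reward is the negative of an assumed cost. The environment, costs, and hyperparameters are illustrative assumptions. The agent never sees the transition probabilities; it learns only from sampled experience.

```python
import random

def step(state, action, rng):
    """Hypothetical environment. state: 0 = bad channel, 1 = good channel.
    action: 0 = execute locally, 1 = offload. Returns (reward, next_state)."""
    if action == 0:
        cost = 3.0                              # local execution cost, channel-independent
    else:
        cost = 1.0 if state == 1 else 5.0       # offloading is cheap only on a good channel
    # The channel keeps its state with probability 0.8, independently of the action.
    next_state = state if rng.random() < 0.8 else 1 - state
    return -cost, next_state

def q_learning(steps=20000, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0], [0.0, 0.0]]               # Q[state][action]
    s = 0
    for _ in range(steps):
        # Epsilon-greedy behaviour policy (Q-Learning itself is off-policy).
        if rng.random() < epsilon:
            a = rng.randrange(2)
        else:
            a = max(range(2), key=lambda act: Q[s][act])
        r, s_next = step(s, a, rng)
        # Q-Learning update: bootstrap from the greedy value of the next state.
        td_target = r + gamma * max(Q[s_next])
        Q[s][a] += alpha * (td_target - Q[s][a])
        s = s_next
    return Q

Q = q_learning()
policy = {s: max(range(2), key=lambda act: Q[s][act]) for s in range(2)}
```

The learned greedy policy executes locally on a bad channel and offloads on a good one, which is the optimal behavior for these assumed costs.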

To deal with the uncertainty of MEC environments, Kiran et al. [55] presented an online learning (Q-Learning) mobility management system. Their solution learns the best mobility management scheme from the environment through trial and error in order to reduce service delay. User equipment (UEs) decide when to hand over by choosing the action with the highest Q-value in the current state, and these Q-values are updated regularly to track the system's dynamic nature. Hossain et al. [56] proposed a standard RL method, specifically Q-Learning, to solve the offloading decision-making problem, in which an edge computing system decides whether data is offloaded to edge devices or processed locally. They used a cost function that accounts for latency and power consumption to achieve this goal.

G) Deep Q-Network (DQN)

Deep reinforcement learning combines artificial neural networks with reinforcement learning to allow agents to learn the optimal actions in a virtual environment to achieve their objectives. Deep Q-Learning is one type of deep reinforcement learning that combines function approximation with target optimization, mapping state-action pairs to expected future rewards. Deep Q-Learning, implemented as a Deep Q-Network (DQN), uses a deep neural network to approximate the Q-value function Q(s, a) of Q-Learning. The state is given as the input, and the output is the Q-value of every possible action.
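For reference, the training objective commonly used for a DQN can be written as follows. This is the standard formulation with an experience replay buffer and a periodically updated target network; individual offloading papers may use variants of it.

$$
L(\theta) = \mathbb{E}_{(s, a, r, s') \sim \mathcal{D}} \Big[ \big( r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) \big)^{2} \Big]
$$

Here $\theta$ denotes the weights of the online network, $\theta^{-}$ the weights of the target network (copied from $\theta$ every fixed number of steps), $\mathcal{D}$ the replay buffer of past transitions, and $\gamma$ the discount factor. Minimizing $L(\theta)$ by gradient descent moves $Q(s, a; \theta)$ toward the same bootstrapped target, $r + \gamma \max_{a'} Q(s', a')$, that tabular Q-Learning uses.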

In the MEC context, Huang et al. [32] presented a Deep Q-Network (DQN)-based multi-task offloading and resource allocation algorithm. They converted the original mixed-integer nonlinear programming problem into an RL problem and used DQN to find the best solution. To reduce overall costs, they jointly optimized offloading decisions and bandwidth allocation. Chen et al. [57] used a finite-state discrete-time Markov chain to define the Markov Decision Process (MDP) in their model. They employed a deep neural network-based solution (DQN) to find the optimal task computation offloading policy in a dynamic Ultra-Dense Network (UDN) MEC environment with wireless-charging-enabled mobile devices, where the MDP has a high-dimensional state space. Zhao et al. [58] proposed a DQN-based deep reinforcement learning algorithm that learns offloading decisions to optimize system performance, training a neural network to predict offloading actions. They considered a multi-user MEC system in which multiple Computational Access Points (CAPs) assist users by executing their computationally intensive tasks.

Furthermore, they developed multiple bandwidth allocation algorithms to optimize the wireless spectrum for the links between users and CAPs. Tong et al. [59] generated tasks following a Poisson distribution and proposed a novel DRL-based online algorithm (DTORA), which uses DQN to decide whether each task should be offloaded and how computing resources should be allocated. They also studied the mobility of users between base stations to make the system more practical. Xu et al. [60] aimed to minimize power consumption by improving resource allocation in cloud radio access networks (CloudRANs). The authors formulated the resource allocation problem as a convex optimization problem and proposed a novel DRL-based framework built on DQN, in which deep Q-Learning performs online dynamic control based on an offline-built DNN. Shan et al. [61] combined DRL and federated learning (FL) in an intelligent resource allocation model, “DRL + FL,” in which a DDQN-based algorithm, DDQN-RA, adaptively allocates network and computing resources.

In addition, the model integrates the FL framework with the mobile edge system to train the DRL agent in a distributed way. Ho et al. [62] defined a binary offloading model in which each computation task is either executed locally or offloaded to the MEC server, and this choice should adapt to time-varying network conditions. They therefore proposed a deep reinforcement learning-based approach to the formulated nonconvex problem of minimizing computation cost in terms of system latency; because conventional RL methods are not suitable for a large action space, they adopted a DQN. Chen et al. [63] investigated the situation where MEC is unavailable or cannot meet demand. They treated the surrounding vehicles as a resource pool (RP), split the complex task into smaller subtasks, and formulated the execution time of the complex task as a min-max problem. They then proposed a distributed DQN-based computation offloading strategy that finds the offloading policy minimizing this execution time. Lin et al. [64] aimed to jointly optimize the offloading failure rate and the energy consumption of the offloading process. They established an MDP-based computation offloading model and proposed an algorithm combining DQN and Simulated Annealing (SA-DQN). Alam et al. [65] studied near-end network solutions for mobile edge computation offloading, motivated by the higher latency and network delay of far-end network solutions. The mobility, heterogeneity, and geographical distribution of mobile devices introduce several challenges for computation offloading at the mobile edge. They modeled the problem as an MDP and proposed a Deep Q-Learning-based code offloading algorithm to minimize execution time, latency, and energy consumption. Chen et al. [66] investigated a stochastic computation offloading policy for mobile users in an ultra-dense sliced radio access network (UDS-RAN). They formulated stochastic computation offloading as an MDP and proposed two DQN-based online offloading algorithms, DARLING and Deep-SARL, using the double DQN (DDQN) framework to handle the high-dimensional MDP state space of a dynamic UDS-RAN MEC environment. Their aim was to achieve better long-term utility performance.

H) Actor-Critic Methods

Actor-Critic methods aim to combine the benefits of value-based and policy-based approaches while mitigating their drawbacks. The actor takes the state as input and outputs the action to take; it directs the agent's behavior by learning the best policy (policy-based). The critic, on the other hand, estimates the value function (value-based) and tells the actor how good or bad its actions were. This evaluation takes the form of a temporal-difference (TD) error, a scalar signal that is the critic's sole output and drives all learning in both the actor and the critic. Actor-Critic methods can therefore learn offloading policies without knowing the transition probabilities among different network states.
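As an illustration of the TD-error mechanism described above, the sketch below runs a one-step actor-critic with a tabular softmax actor and a tabular critic on a hypothetical two-state channel model (bad or good) with two actions (local or offload). All costs, probabilities, and step sizes are assumptions, and the agent uses only sampled rewards, never the transition probabilities. DDPG-style methods replace these tables with neural networks and a deterministic actor for continuous actions.

```python
import math
import random

def step(state, action, rng):
    """Hypothetical channel model: state 0 = bad, 1 = good; action 0 = local, 1 = offload."""
    cost = 3.0 if action == 0 else (1.0 if state == 1 else 5.0)
    next_state = state if rng.random() < 0.8 else 1 - state
    return -cost, next_state

def softmax(prefs):
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]

def actor_critic(steps=20000, alpha_actor=0.05, alpha_critic=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    theta = [[0.0, 0.0], [0.0, 0.0]]   # actor: action preferences per state
    V = [0.0, 0.0]                     # critic: state-value estimates
    s = 0
    for _ in range(steps):
        pi = softmax(theta[s])
        a = 0 if rng.random() < pi[0] else 1
        r, s_next = step(s, a, rng)
        # The critic's TD error is the single learning signal for both components.
        delta = r + gamma * V[s_next] - V[s]
        V[s] += alpha_critic * delta
        # Policy-gradient step: d log pi(a) / d theta[s][b] = 1[b == a] - pi[b].
        for b in range(2):
            theta[s][b] += alpha_actor * delta * ((1.0 if b == a else 0.0) - pi[b])
        s = s_next
    return theta, V

theta, V = actor_critic()
p_offload_good = softmax(theta[1])[1]
p_offload_bad = softmax(theta[0])[1]
```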

Ke et al. [67] proposed ACORL, an adaptive computation offloading model for heterogeneous vehicular networks based on DRL, specifically DDPG, which can handle the continuous action space of vehicular networks. The algorithm considers multiple stochastic tasks, wireless channels, and bandwidth, and the authors added an Ornstein-Uhlenbeck (OU) noise vector to the actions for exploration. Huang et al. [68] considered a multi-user single-server system in which multiple Wireless Devices (WDs) are connected to a single Access Point (AP) that both transfers Radio Frequency (RF) energy to the WDs and receives their computation offloading. They proposed DRL-based Online Offloading (DROO), in which a DNN generates binary offloading decisions to maximize the weighted sum computation rate of all wireless devices while significantly reducing computation time. The algorithm learns from past experience to avoid solving complex mixed-integer programming problems and to improve its offloading decisions. Liu et al. [69] aimed to minimize the weighted sum of average task latency and energy consumption by optimizing task offloading decisions, and proposed an optimization framework based on actor-critic reinforcement learning to tackle computation offloading. Unlike the above methods, Wang et al. [70] considered a UAV-assisted MEC system in which the UAV provides computing resources to nearby users. The authors aimed to minimize the maximum processing delay over the entire period by optimizing user scheduling, the task offloading ratio, and the UAV's flight angle and speed. They formulated this as a nonconvex optimization problem and proposed a DDPG-based computation offloading algorithm. Chen et al. [71] considered a MEC-enabled MIMO system with stochastic wireless channels and task arrivals and proposed a DDPG-based dynamic offloading algorithm that reduces the long-term average computation cost in terms of power consumption and buffering delay at each user.

I) Markov Decision Process (MDP)

The Markov Decision Process (MDP) is a discrete-time stochastic control framework that can model a wide range of problems in which outcomes are partly random and partly under the control of a decision-maker. MDPs are useful for studying optimization problems solved via dynamic programming, and they are well suited to offloading optimization that requires dynamic decisions under changing environmental factors such as wireless channel conditions and computational load. An MDP is often described by a five-tuple (E, S, A, P, R), where E denotes the decision epochs, S the states, A the actions, P the state transition probabilities, and R the rewards. In an offloading model, the five-tuple must be defined according to the offloading technique. The optimal value of each state then satisfies Bellman's optimality equation:

$$V^{*}(s) = \max_{a \in A} \Big[ R(s, a) + \gamma \sum_{s' \in S} P(s' \mid s, a)\, V^{*}(s') \Big],$$

where $\gamma \in [0, 1)$ is a discount factor that weights future rewards, $R(s, a)$ is the expected reward for taking action $a$ in state $s$, and $P(s' \mid s, a)$ is the probability of moving to state $s'$ after taking action $a$ in state $s$.

Zhang et al. [22] investigated mobile offloading in a system of intermittently connected cloudlets, considering user mobility patterns and cloudlet admission control. They then presented an MDP-based approach to determine an appropriate offloading policy for a mobile user in such an intermittently connected cloudlet system. The policy chooses between offloading and local execution based on the mobile user's state so as to minimize cost. Ko et al. [72] considered an edge-cloud-enabled heterogeneous network with diverse radio access networks and proposed ST-CODA, a spatial-temporal computation offloading decision algorithm. In their model, a mobile device decides where and when to process its tasks, formulated as an MDP that takes into account energy consumption, processing time, and transmission cost. The classic value iteration algorithm is then used to solve the formulated MDP. Wei et al. [73] considered a single-user, single-server system and emphasized the importance of data prioritization. The authors defined distinct data priority levels, and the system handles data according to its priority level, with higher-priority data contributing more to the reward.

Meanwhile, the authors assume that all relevant probability distributions are unknown, which means that traditional MDP methods such as value iteration or policy iteration cannot be used; they employ Q-Learning instead. Zhang et al. [74] considered vehicle movement in a vehicular edge computing (VEC) scenario and proposed a time-aware MDP-based offloading algorithm (TMDP) for known (certain) transition probabilities and a robust time-aware MDP-based offloading algorithm for uncertain transition probabilities.
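When the transition probabilities are known, Bellman's optimality equation can be solved by value iteration, the classic method mentioned for ST-CODA [72]. The sketch below is a generic value iteration routine applied to a hypothetical two-state channel model (bad or good) with local and offload actions; the probabilities and rewards are assumptions, not the model of any cited paper. When the probabilities are unknown, as in Wei et al. [73], sample-based methods such as Q-Learning are needed instead.

```python
def value_iteration(P, R, gamma=0.9, tol=1e-9):
    """P[s][a][s2]: transition probability; R[s][a]: expected reward.
    Repeatedly applies the Bellman optimality operator until V stops changing,
    then returns (V, greedy policy)."""
    n_states, n_actions = len(R), len(R[0])
    V = [0.0] * n_states
    while True:
        Q = [[R[s][a] + gamma * sum(P[s][a][s2] * V[s2] for s2 in range(n_states))
              for a in range(n_actions)]
             for s in range(n_states)]
        V_new = [max(row) for row in Q]
        if max(abs(v1 - v0) for v1, v0 in zip(V_new, V)) < tol:
            policy = [max(range(n_actions), key=lambda a: Q[s][a]) for s in range(n_states)]
            return V_new, policy
        V = V_new

# Hypothetical model. States: 0 = bad channel, 1 = good channel.
# Actions: 0 = local execution, 1 = offload. The channel keeps its state with probability 0.8.
stay = [[0.8, 0.2], [0.2, 0.8]]
P = [[stay[s], stay[s]] for s in range(2)]     # transitions do not depend on the action here
R = [[-3.0, -5.0],                             # bad channel: offloading is expensive
     [-3.0, -1.0]]                             # good channel: offloading is cheap
V, policy = value_iteration(P, R)
```

Because the discount factor makes the Bellman operator a contraction, the loop is guaranteed to terminate, and the resulting policy is to execute locally on a bad channel and offload on a good one.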

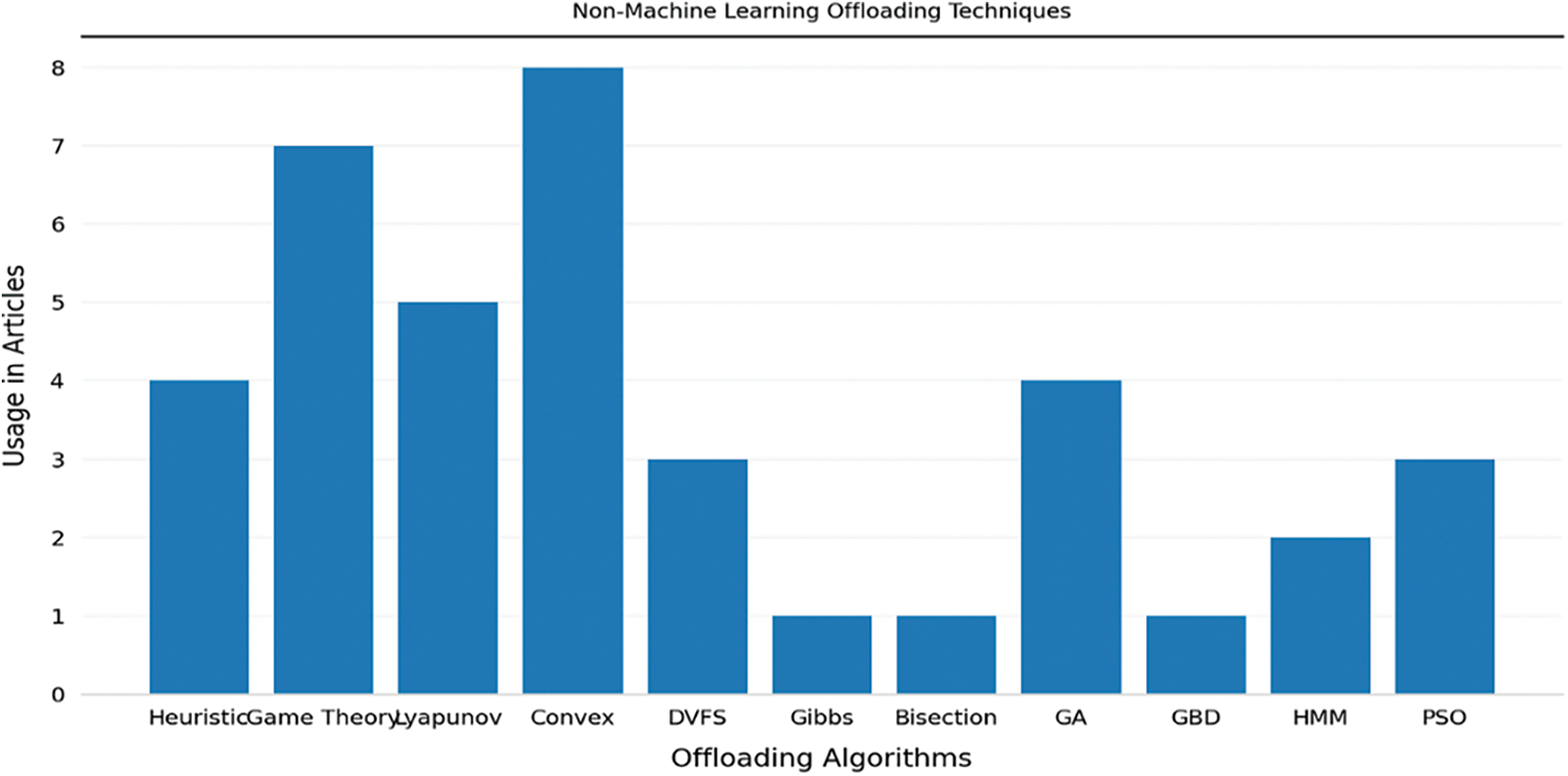

4.2 Non-Machine Learning Offloading Algorithms

Here we introduce several non-machine learning algorithms that have been adopted in both academia and industry to tackle edge computing offloading problems, as summarized in Table 2.

A) Lyapunov Optimization

Lyapunov optimization refers to the use of a Lyapunov function to control a dynamical system optimally. In control theory, Lyapunov functions are widely used to guarantee various forms of system stability. The state of a system at a given time is often described by a multi-dimensional vector, and a Lyapunov function is a nonnegative scalar measure of this multi-dimensional state. The function is typically defined to grow large when the system moves toward undesirable states, so control actions that push the Lyapunov function in the negative direction, toward zero, stabilize the system. Adding a weighted penalty term to the Lyapunov drift and minimizing the sum yields the drift-plus-penalty approach for joint network stability and penalty minimization; the goal is to keep both the drift and the penalty small simultaneously. When applying Lyapunov optimization to offloading design, it is crucial to define the drift and the penalty. In an offloading context, the drift could be the energy queue drift or the task queue drift, while the penalty is usually the offloading objective, such as reducing task dropping or execution latency. The optimal offloading decision and other parameters can then be found by minimizing the drift-plus-penalty expression in each time slot. Because this per-slot expression is usually a deterministic problem, Lyapunov optimization has substantially lower computational complexity than (non-)convex optimization. Furthermore, unlike MDP-based methods, it does not require the probability distribution of the random event process.
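The per-slot drift-plus-penalty rule can be sketched for a single task queue Q(t) with energy as the penalty: in each slot the device observes Q(t) and the channel state and picks the action minimizing V * energy(a) - Q(t) * served(a), which is the part of the drift-plus-penalty bound that the action can influence. The action set, energy costs, arrival process, and channel model below are hypothetical. The controller never uses the arrival or channel distributions, and the weight V exposes the classic trade-off: a larger V lowers average energy at the price of a longer average queue, i.e., more delay.

```python
import random

# Hypothetical actions: (name, task bits served per slot, energy cost on (good, bad) channel).
ACTIONS = [
    ("idle", 0, (0.0, 0.0)),
    ("local", 2, (1.0, 1.0)),
    ("offload", 4, (1.0, 4.0)),
]

def simulate(V, slots=10000, seed=0):
    """Drift-plus-penalty control of one task queue Q(t) with energy as the penalty.
    Returns (average energy per slot, average queue length)."""
    rng = random.Random(seed)
    Q = 0.0
    total_energy = total_queue = 0.0
    for _ in range(slots):
        ch = 0 if rng.random() < 0.5 else 1       # 0 = good channel, 1 = bad channel
        arrival = rng.randint(0, 3)               # new task bits this slot (mean 1.5)
        # Pick the action minimizing V * energy - Q(t) * served (ties go to the first action).
        _, served, energy = min(ACTIONS, key=lambda act: V * act[2][ch] - Q * act[1])
        total_energy += energy[ch]
        total_queue += Q
        Q = max(Q - served, 0.0) + arrival        # queue dynamics
    return total_energy / slots, total_queue / slots

e_small, q_small = simulate(V=0.1)    # almost queue-greedy: serve whenever there is work
e_large, q_large = simulate(V=20.0)   # energy-aware: wait for a good channel unless the queue grows
```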

In [80], MEC systems with multiple devices were considered. The authors formulated a power consumption minimization problem with a task buffer stability constraint to investigate the tradeoff between computation task execution delay and the mobile devices' power consumption, and then proposed an online algorithm based on Lyapunov optimization that decides the local execution and computation offloading policy. Huang et al. [30] aimed to provide a low-latency, energy-saving user interaction algorithm under different wireless conditions. They proposed an adaptive offloading algorithm based on Lyapunov optimization that dynamically offloads part of an application's computation to a server as the wireless environment changes, using call graphs to represent the relationships between individual components. Liu et al. [81] formulated a computation and transmission power minimization problem with delay and reliability constraints. The authors used results from extreme value theory [82] to impose a probabilistic constraint on users' task queue lengths and to characterize the occurrence of low-probability events in terms of queue length or delay violation. Finally, the optimization problem was solved using Lyapunov optimization tools.

B) Game Theory

Game theory is a valuable tool for constructing distributed methods. It can be used in multi-user offloading scenarios in which each end device chooses its strategy locally so that the devices reach a mutually acceptable offloading solution. In this model, every end device makes an offloading decision, receives a reward (utility), and then updates its decision. This process is repeated until no device can improve its reward by changing its decision unilaterally, i.e., until a Nash equilibrium is reached. An iterative technique is commonly used to find the Nash equilibrium. For each end device, the update is fundamentally an optimization problem, namely the maximization of its own utility, so the Nash equilibrium can be obtained using traditional optimization tools such as the Karush-Kuhn-Tucker (KKT) conditions.
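A minimal sketch of the iterative process above is best-response dynamics in a hypothetical congestion-style offloading game: each user either computes locally at a fixed cost or offloads at a cost that grows with the number of users sharing the edge server. Users revise their choices one at a time, and only when switching strictly lowers their own cost. Because this game has an exact potential function, every switch strictly lowers the potential, so the process terminates at a pure Nash equilibrium. The cost model and numbers are illustrative assumptions, not the formulation of Chen et al. [83].

```python
def best_response_dynamics(local_cost, offload_base, congestion, max_rounds=100):
    """User i pays local_cost[i] when computing locally, or
    offload_base[i] + congestion * n when offloading, where n is the number
    of offloading users (including i). Returns the equilibrium offload flags."""
    n_users = len(local_cost)
    offload = [False] * n_users
    for _ in range(max_rounds):
        changed = False
        for i in range(n_users):
            others = sum(offload) - offload[i]          # offloaders other than user i
            cost_off = offload_base[i] + congestion * (others + 1)
            # Switch only if the alternative is strictly cheaper for user i.
            if offload[i]:
                better = local_cost[i] < cost_off
            else:
                better = cost_off < local_cost[i]
            if better:
                offload[i] = not offload[i]
                changed = True
        if not changed:                                  # nobody wants to deviate: Nash equilibrium
            return offload
    raise RuntimeError("no equilibrium found within max_rounds")

# Hypothetical users with different local costs sharing one edge server.
equilibrium = best_response_dynamics(local_cost=[10, 9, 8, 6, 4],
                                     offload_base=[2, 2, 2, 2, 2],
                                     congestion=2)
```

In the resulting equilibrium the two users with the most expensive local execution offload, and a third offloader would face a congestion cost that no longer beats its local cost.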

Chen et al. [83] studied the multi-user computation offloading problem in multi-channel wireless interference and contention environments. The authors showed that computing a centralized optimal solution for mobile-edge cloud computing is NP-hard, so they adopted a game-theoretic approach to achieve efficient computation offloading in a distributed way. They formulated the distributed computation offloading decision-making problem among mobile users as a computation offloading game and then proposed a distributed offloading algorithm that achieves a Nash equilibrium. Zhou et al. [84] investigated the multi-user partial computation offloading problem in a MEC environment with multiple wireless channels. The authors modeled the computation overhead with a game-theoretic approach, with the aim of minimizing it, and proved the existence of a Nash equilibrium. Cui et al. [79] proposed an offloading algorithm based on evolutionary game theory and RL. They investigated multi-user computation offloading in a dynamic environment, formulated the problem as a game model, and proved that multi-user computation offloading has a unique Evolutionarily Stable Strategy (ESS).

Alioua et al. [85] also proposed using game theory for computation offloading, but in the context of Unmanned Aerial Vehicles (UAVs) that assist road traffic monitoring. They modeled the offloading decision-making problem as a sequential game, proved the existence of a Nash equilibrium, and proposed an algorithm to attain it. They aimed to improve the overall system utility by minimizing computation delay while optimizing the energy overhead and the computation cost. Liu et al. [86] studied fog-to-cloud offloading. They formulated the interaction between the fog and the cloud as a Stackelberg game that maximizes the utilities of cloud service operators and edge owners by obtaining the optimal payment and computation offloading strategies, and proved that the game is guaranteed to reach a Nash equilibrium. They then proposed two offloading algorithms, one achieving low delay and the other reduced complexity. Anbalangan et al. [87] studied data accumulation at Macro Base Stations (MBSs). When an MBS cannot accommodate all user demands, it attempts to offload some users to nearby small cells, e.g., an access point (AP); the authors investigated the trade-off between the economic incentive and the load admitted between the MBS and the AP to achieve optimal offloading. They proposed a software-defined networking-assisted Stackelberg game (SSG) model in which the MBS aggregates users to the AP and prioritizes users experiencing minor service. Kim et al. [88] proposed an optimal pricing scheme that considers mobile users' resource demands, formulated a single-leader multi-user Stackelberg game model, and used the Stackelberg equilibrium to optimize the overall system utility, i.e., the utilities of both the mobile users and the edge cloud.

C) (Non) Convex Optimization

Convex optimization is a robust tool for solving optimization problems because convex problems can be solved efficiently to global optimality. In this paradigm, the offloading objective(s) are defined as an objective function, and the offloading constraints are formulated as constraint functions. If the resulting optimization model is convex, traditional approaches such as the Lagrange duality method can solve it and achieve the global optimum. If the offloading model is a non-convex optimization problem, a standard approach is to transform it into a convex one.

1) Alternating Direction Method of Multipliers (ADMM)

The Alternating Direction Method of Multipliers (ADMM) solves convex optimization problems by breaking them into smaller, easier pieces. It uses a decomposition-coordination procedure in which the solutions to small local subproblems are coordinated to solve a global problem.
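The sketch below shows consensus ADMM in its scaled form on an assumed toy problem: each of several agents (for example, edge nodes) holds a private quadratic cost f_i(x) = (a_i / 2)(x - b_i)^2 over a shared scalar decision, and the agents must agree on a common value. Each iteration solves the local subproblems in closed form, averages them (the coordination step), and updates the scaled dual variables. The costs and the penalty parameter rho are hypothetical. The exact minimizer is the weighted mean sum(a_i * b_i) / sum(a_i), which gives an easy correctness check.

```python
def consensus_admm(a, b, rho=1.0, iters=200):
    """Minimize sum_i (a_i / 2) * (x - b_i)^2 with one local copy x_i per agent
    and the consensus constraint x_i = z, using scaled-form ADMM."""
    n = len(a)
    x = [0.0] * n
    u = [0.0] * n       # scaled dual variables, one per agent
    z = 0.0
    for _ in range(iters):
        # Local step (parallelizable): argmin_x f_i(x) + rho/2 * (x - z + u_i)^2, in closed form.
        x = [(a[i] * b[i] + rho * (z - u[i])) / (a[i] + rho) for i in range(n)]
        # Coordination step: average the local estimates plus their duals.
        z = sum(x[i] + u[i] for i in range(n)) / n
        # Dual step: accumulate each agent's disagreement with the consensus value.
        u = [u[i] + x[i] - z for i in range(n)]
    return z, x

# Hypothetical agents: weights a_i and preferred values b_i. Weighted mean = 12 / 6 = 2.0.
z, x = consensus_admm(a=[1.0, 2.0, 3.0], b=[4.0, 1.0, 2.0])
```

Only z (and each agent's own dual variable) has to be exchanged, which is what makes ADMM attractive for the distributed solutions described below.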

Wang et al. [89] formulated the computation offloading decision, resource allocation, and content caching strategy as a non-convex optimization problem. They transformed it into a convex problem and then decomposed the convex problem so that it could be solved efficiently in a distributed manner, using a distributed convex optimization algorithm based on the Alternating Direction Method of Multipliers (ADMM). Bi et al. [90] considered a mobile edge computing system powered by Wireless Power Transfer (WPT) and proposed an offloading algorithm based on ADMM and Coordinate Descent (CD), aiming to maximize the weighted sum computation rate of all Wireless Devices (WDs) in the network. The authors combined recent advances in WPT and MEC. In the proposed architecture, each WD follows a full (binary) offloading approach, i.e., its computation is either performed entirely locally or executed entirely remotely.

2) Successive Convex Approximation

Successive convex approximation (SCA) and related block-wise methods, such as Block Coordinate Descent (BCD), are commonly used to minimize a continuous function of several blocks of variables. SCA replaces a hard problem with a sequence of convex surrogate problems, while BCD optimizes a single block of variables at each iteration and keeps the remaining blocks fixed.
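The block-wise update rule can be seen on a toy problem. The Python sketch below applies BCD to the hypothetical convex quadratic `f(x, y) = x**2 + y**2 + x*y - 3*x - 3*y`, whose unique minimizer is `(1, 1)`; in an offloading model, the two blocks might stand for, say, offloading ratios and CPU frequencies, but that mapping is only an assumption here. Each step minimizes `f` exactly over one variable with the other held fixed.

```python
def bcd_quadratic(iters=100):
    """Block Coordinate Descent on the convex quadratic
        f(x, y) = x**2 + y**2 + x*y - 3*x - 3*y,
    whose unique minimizer is (1, 1). Each step minimizes f exactly
    over one block while the other block is held fixed.
    """
    x, y = 0.0, 0.0
    for _ in range(iters):
        x = (3.0 - y) / 2.0  # argmin over x: df/dx = 2x + y - 3 = 0
        y = (3.0 - x) / 2.0  # argmin over y: df/dy = 2y + x - 3 = 0
    return x, y
```

Because the objective is strictly convex, every sweep cannot increase `f`, and the error here shrinks by a factor of four per sweep, so `bcd_quadratic()` returns values indistinguishable from `(1.0, 1.0)`. On non-convex problems, the same scheme generally guarantees only convergence to a stationary point.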

Tang et al. [91] jointly optimized a weighted sum of time and energy consumption to improve user experience. They formulated a nonlinear minimization problem for this mixed time and energy overhead and, to tackle it, proposed a mixed-overhead, full-granularity partial offloading algorithm based on the Block Coordinate Descent (BCD) approach, which handles the variables one at a time. Baidas [92] formulated a joint subcarrier assignment, power allocation, and computing resource allocation problem to maximize the network sum offloading efficiency and proposed a low-complexity algorithm to solve it. The algorithm relaxes the problem into two subproblems, which are then solved with a successive convex approximation algorithm and the polynomial-time Kuhn-Munkres algorithm.

3) Interior Point Method (IPM)

Interior point methods solve linear and nonlinear convex optimization problems with inequality constraints. For nonlinear problems, they typically require the objective and constraint functions to be twice continuously differentiable. Rather than moving along the boundary of the feasible region, as the simplex method does for linear programs, interior point methods approach the solution through the interior of the feasible region (or, in infeasible-start variants, from outside it).
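Practical interior point solvers are usually primal-dual methods; the Python sketch below instead uses the simpler log-barrier (path-following) variant to show how iterates stay strictly inside the feasible region. It solves a hypothetical one-dimensional problem, minimize `(x - 3)**2` subject to `0 <= x <= 1`, whose constrained optimum `x* = 1` lies on the boundary. The barrier parameter `t`, its growth factor `mu`, and the tolerance `eps` are assumptions; the loop stops once the standard duality-gap bound `m / t` falls below `eps`, where `m` is the number of inequality constraints.

```python
import math


def barrier_method(t=1.0, mu=10.0, eps=1e-8):
    """Log-barrier interior point method for
        minimize (x - 3)**2  subject to 0 <= x <= 1.
    Iterates stay strictly inside (0, 1); the constrained optimum is x* = 1.
    """
    def psi(x, t):  # barrier-augmented objective t*f(x) + phi(x)
        return t * (x - 3.0) ** 2 - math.log(x) - math.log(1.0 - x)

    x = 0.5  # strictly feasible starting point
    m = 2    # number of inequality constraints (x >= 0, x <= 1)
    while m / t > eps:  # duality-gap bound of the barrier method
        for _ in range(100):  # centering step via damped Newton
            g = 2.0 * t * (x - 3.0) - 1.0 / x + 1.0 / (1.0 - x)
            h = 2.0 * t + 1.0 / x ** 2 + 1.0 / (1.0 - x) ** 2
            if g * g / h < 1e-12:  # Newton decrement small: point is centered
                break
            dx = -g / h
            s = 1.0
            while not (0.0 < x + s * dx < 1.0):
                s *= 0.5  # shrink the step to stay in the interior
            while psi(x + s * dx, t) > psi(x, t) + 0.25 * s * g * dx:
                s *= 0.5  # Armijo backtracking line search
            x += s * dx
        t *= mu  # tighten the barrier and re-center
    return x
```

`barrier_method()` returns a value just below 1.0: as `t` grows, each centering step moves `x` closer to the boundary, but the logarithmic barrier keeps every iterate strictly feasible.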

Yang [93] proposed a joint optimization scheme for task offloading and resource allocation based on edge computing and 5G communication networks, which improves task processing efficiency by reducing the time delay and energy consumption of terminal tasks. The computation task offloading problem is transformed into a joint optimization problem, for which corresponding optimization algorithms are developed based on the interior point method.

4) Semidefinite Programming

Semidefinite Programming (SDP) is a subfield of convex optimization concerned with optimizing a linear objective function (a user-specified function to be minimized or maximized) over the intersection of the cone of positive semidefinite matrices with an affine space [94–96].

Chen et al. [97] set out to jointly optimize all users' offloading decisions and communication resource allocation to reduce the energy, computation, and delay costs of all users. They proposed MUMTO, an efficient approximate Multi-User Multi-Task Offloading solution: a heuristic algorithm based on Semidefinite Relaxation (SDR), followed by recovery of the binary offloading decisions and optimal communication resource allocation, which addresses an NP-hard, non-convex quadratically constrained quadratic programming problem. Liu et al. [27] sought to develop energy-efficient computation offloading that accounts for inter-task dependencies across various end devices. They formulated the energy consumption minimization problem as a Mixed Integer Programming (MIP) problem with two constraints: task dependency and the IoT service completion time deadline. To address this challenge and provide task computation offloading solutions for IoT sensors, they proposed an Energy-efficient Collaborative Task Computation Offloading (ECTCO) algorithm based on semidefinite relaxation (SDR) and a stochastic mapping technique.
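The relaxation step that SDR-based schemes such as MUMTO and ECTCO rely on can be written out for a generic case. The derivation below is a standard textbook template, not the exact formulation of [97] or [27]: binary offloading decisions are mapped to ±1 variables, the quadratic objective is rewritten as a trace, and the matrix `Y = yy^T` is introduced. Linear terms in the objective are assumed to have been absorbed into `Q` by appending an auxiliary ±1 variable (homogenization).

```latex
% Binary offloading decisions x_i \in \{0,1\} are mapped to y_i = 2x_i - 1 \in \{-1,1\}.
\begin{aligned}
\text{(QCQP)}\quad & \min_{\mathbf{y}\in\{-1,1\}^{n}} \ \mathbf{y}^{\top}\mathbf{Q}\,\mathbf{y}
  \;=\; \min_{\mathbf{y}\in\{-1,1\}^{n}} \ \operatorname{tr}\!\big(\mathbf{Q}\,\mathbf{y}\mathbf{y}^{\top}\big),\\
\text{(lifting)}\quad & \mathbf{Y} = \mathbf{y}\mathbf{y}^{\top}\ \text{for some}\ \mathbf{y}\in\{-1,1\}^{n}
  \iff \mathbf{Y}\succeq 0,\ \operatorname{rank}(\mathbf{Y}) = 1,\ Y_{ii} = 1,\\
\text{(SDR)}\quad & \min_{\mathbf{Y}\in\mathbb{S}^{n}} \ \operatorname{tr}(\mathbf{Q}\mathbf{Y})
  \quad \text{s.t.}\ \ Y_{ii} = 1,\ i = 1,\dots,n,\quad \mathbf{Y}\succeq 0.
\end{aligned}
```

Dropping the non-convex rank-one constraint turns the problem into an SDP (a linear objective over the intersection of the positive semidefinite cone with an affine space), whose optimal value is a lower bound on that of the original problem. A binary decision is then recovered from the SDP solution, for example by Gaussian randomization: draw a vector from a zero-mean Gaussian with covariance equal to the optimal `Y`, take the signs of its entries as `y`, map back via `x = (y + 1)/2`, and keep the best feasible candidate over several draws.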

D) Particle Swarm Optimization (PSO)

PSO is a metaheuristic optimization method that can solve continuous problems with imperfect information and under computational limitations. It can therefore help with offloading optimization problems that involve the dynamic behavior of the environment, although it does not guarantee a globally optimal solution. Because PSO is a stochastic optimization method, its outcome depends on the random initialization of the particles.
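The following Python sketch shows a basic global-best PSO applied to a hypothetical partial offloading problem: each task sends a fraction `r_i` of its workload to the edge and processes the rest locally in parallel, so its latency is `max((1 - r_i) * a_i, r_i * b_i)`, where `a_i` and `b_i` are the assumed full-local and full-offload times. The inertia weight `w` and acceleration coefficients `c1`, `c2` are common textbook choices rather than values from the cited works, and the fixed `seed` makes the stochastic search reproducible.

```python
import random


def partial_offload_latency(ratios, local_times, remote_times):
    """Hypothetical cost: each task offloads a fraction r and runs the rest
    locally in parallel, so its latency is max((1 - r) * a, r * b)."""
    return sum(max((1 - r) * a, r * b)
               for r, a, b in zip(ratios, local_times, remote_times))


def pso(cost, dim, n_particles=30, iters=300, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Basic global-best PSO over the box [0, 1]^dim."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                  # each particle's best position
    pbest_val = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # swarm-wide best
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move and clip to the feasible offloading-ratio range.
                pos[i][d] = min(1.0, max(0.0, pos[i][d] + vel[i][d]))
            val = cost(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

With `a = [1.0, 2.0]` and `b = [0.75, 1.0]`, calling `pso(lambda r: partial_offload_latency(r, a, b), dim=2)` settles near the analytical optimum `r_i = a_i / (a_i + b_i)`, about `[0.571, 0.667]`, where local and remote processing finish at the same time. Different seeds can give slightly different results, which reflects the dependence on random initialization noted above.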

Liu et al. [98] used Particle Swarm Optimization (PSO) to reduce latency and improve reliability. They used code partitioning to model the task components, together with their reliability, as a Directed Acyclic Graph (DAG), and used the service failure probability of a MEC-enabled Augmented Reality (AR) service to capture the trade-off between latency and reliability. Because the problem is non-convex, they presented an Integer PSO (ISPO) algorithm; since ISPO was not practical for AR [10], they then designed a low-complexity heuristic for optimizing offloading. Li et al. [99] formulated a delay minimization problem and proposed a computation offloading strategy called EIPSO, based on an improved PSO algorithm, to reduce delay and improve the load balancing of the MEC server.

E) Genetic Algorithm (GA)

As an evolutionary computation technique, GA at first appears to be a good fit for searching a large state space for optimal solutions. However, if the state space is not constructed correctly, GA will not find an optimal solution in an acceptable amount of time. GA techniques are good options when other optimization methods get trapped at a local optimum. On the other hand, although GA is a candidate for many optimization problems, it requires many generations to produce adequate outputs, so it is suitable for only a select few. In addition, GA fails to achieve optimal results when fitness is not straightforward to measure.
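As an illustration, the Python sketch below applies a simple GA to a hypothetical binary offloading problem: each task is either kept local (0) or offloaded (1), offloading task `i` yields a gain `gains[i]` and consumes `loads[i]` units of edge capacity, and the total load may not exceed `capacity`. The encoding (one bit per task), tournament selection, one-point crossover, bit-flip mutation, elitism, and the rule of giving infeasible chromosomes zero fitness are all design assumptions; as the paragraph above notes, such choices largely determine whether GA finds good solutions.

```python
import random


def ga_offload(gains, loads, capacity, pop_size=30, generations=200,
               p_mut=None, seed=0):
    """Genetic algorithm for a hypothetical binary offloading problem:
    choose x in {0,1}^n (1 = offload) to maximize sum(gains[i] * x[i])
    subject to sum(loads[i] * x[i]) <= capacity. Requires n >= 2.
    Infeasible chromosomes receive fitness 0."""
    rng = random.Random(seed)
    n = len(gains)
    p_mut = p_mut if p_mut is not None else 1.0 / n

    def fitness(x):
        load = sum(l for l, xi in zip(loads, x) if xi)
        if load > capacity:
            return 0
        return sum(g for g, xi in zip(gains, x) if xi)

    def tournament(pop):
        a, b = rng.sample(pop, 2)  # binary tournament selection
        return a if fitness(a) >= fitness(b) else b

    pop = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        elite = max(pop, key=fitness)  # elitism: carry the best forward
        children = [elite[:]]
        while len(children) < pop_size:
            p1, p2 = tournament(pop), tournament(pop)
            cut = rng.randint(1, n - 1)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation with probability p_mut per gene.
            child = [1 - b if rng.random() < p_mut else b for b in child]
            children.append(child)
        pop = children
    best = max(pop, key=fitness)
    return best, fitness(best)
```

For eight tasks, the search space has only 256 decision vectors, so a result can be checked by brute force; the value of GA appears when the number of tasks makes exhaustive search infeasible. Because the search is stochastic, it returns a good solution without guaranteeing optimality.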

Zhang et al. [100] studied collaborative task offloading and data caching models. They proposed a hybrid of online Lyapunov optimization and a genetic algorithm to tackle the task offloading and data caching problems, minimizing edge computation latency and energy consumption. The authors considered a multi-user MEC system in which multiple base stations serve multiple devices within their radio range through wireless cellular links. Similarly, Kuang et al. [101] considered a multi-user, multi-server MEC system but focused instead on minimizing system communication latency and energy. They first proposed an offloading algorithm based on backtracking, whose time complexity is exponential in the number of servers, and then proposed a genetic algorithm and a method based on a greedy strategy to reduce this time complexity. In total, the authors proposed three offloading algorithms (backtracking, genetic, and greedy) and compared them in terms of the total cost of users, the resource utilization of edge servers, and execution time.

Zhao et al. [102] also applied GA, but in a different way, to achieve system optimality. They worked with LODCO, a dynamic computation offloading algorithm based on the Lyapunov method, in which the original time-dependent problem is converted into a deterministic per-time-slot problem through Lyapunov optimization, using a perturbation parameter for each mobile device. Based on LODCO, they proposed a multi-user multi-server method that uses GA and greedy approaches to optimize the system. Shahryari et al. [103] considered a task offloading scheme that optimizes the offloading decision, server selection, and resource allocation, and investigated the trade-off between task completion time and energy consumption. They formulated the task offloading problem as a Mixed-Integer Nonlinear Problem (MINLP) and proposed a sub-optimal offloading algorithm based on a Genetic Algorithm (GA) and Particle Swarm Optimization (PSO). Hussain et al. [104] studied multi-hop computation offloading in a Vehicular Fog Computing (VFC) network. They formulated a multi-objective, non-convex, NP-hard quadratic integer problem and proposed CODE-V, computation offloading with differential evolution in VFC. The proposed algorithm considers service latency, hop-limit, and computing capacity constraints and jointly solves the offloading decision-making, MEC node assignment, and multi-hop path selection problems for computation offloading.

F) Hidden Markov Model (HMM)

HMM is a robust statistical Markov-based model whose parameters can be learned effectively from raw data. It can be viewed as a finite state machine with hidden and observable states. Using the Viterbi algorithm, an HMM finds the most likely sequence of hidden states given the observations, which allows it to perform better than a simple Markov model. However, because this technique is iterative, finding the optimal path can be resource- and time-consuming. Nevertheless, if an HMM is chosen for the right problem and built effectively for it, it can forecast future system states in the decision-making process of the offloading environment.

Wang et al. [105] presented a smart HMM scheduling model that dynamically modifies the processing technique while monitoring latency, energy, and accuracy in a mobile/cloud-based context. Jazayeri et al. [106] set out to find the ideal location (end device, edge, or cloud) for running offloading modules. To this end, the authors presented Hidden Markov Model Auto-scaling Offloading (HMAO), an HMM-based method that finds the ideal place to execute offloading modules and trades off energy usage against module execution time.
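The Viterbi step can be sketched in Python as follows. The example is hypothetical: the hidden states are the quality of a wireless link (`"Good"` or `"Bad"`), the observations are measured offloading latencies (`"low"` or `"high"`), and all probabilities are made-up values; it is not the model used in [105] or [106]. The implementation works in log space and assumes every start, transition, and emission probability is nonzero.

```python
import math


def viterbi(obs, states, start_p, trans_p, emit_p):
    """Most likely hidden-state sequence for an observation sequence.
    Works in log space to avoid numerical underflow on long sequences;
    all probabilities are assumed to be strictly positive."""
    log = math.log
    # score[s]: best log-probability of any state path ending in s so far.
    score = {s: log(start_p[s]) + log(emit_p[s][obs[0]]) for s in states}
    back = []  # back[t][s]: best predecessor of state s at step t + 1
    for o in obs[1:]:
        prev, score, ptr = score, {}, {}
        for s in states:
            best = max(states, key=lambda r: prev[r] + log(trans_p[r][s]))
            score[s] = prev[best] + log(trans_p[best][s]) + log(emit_p[s][o])
            ptr[s] = best
        back.append(ptr)
    # Backtrack from the most likely final state.
    last = max(states, key=lambda s: score[s])
    path = [last]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    path.reverse()
    return path, math.exp(score[last])
```

With start probabilities `{"Good": 0.6, "Bad": 0.4}`, transitions Good-to-Good 0.7 and Bad-to-Bad 0.6, emissions `P(low | Good) = 0.8` and `P(high | Bad) = 0.7`, and observations `["low", "high", "high"]`, the most likely link-state sequence is `["Good", "Bad", "Bad"]` with joint probability 0.042336. Decoding costs O(T·N²) time for T observations and N states.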

G) Other Algorithms

1) Heuristic Algorithms

A heuristic algorithm can generate an acceptable solution to a problem in a variety of practical settings, but without a formal proof of correctness or optimality. Heuristic algorithms are frequently used for NP-complete decision problems: a proposed solution can be verified efficiently, but no efficient method is known for finding an exact solution quickly. Heuristics can be used on their own to generate a solution or combined with optimization techniques to provide a reasonable baseline. They are most often used when approximate solutions are satisfactory but exact solutions are computationally expensive. Heuristic techniques can produce results quickly, but those results may be sub-optimal; their advantage is that they are simple algorithms designed to solve a specific problem with a short execution time.
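A typical offloading heuristic is a greedy rule. The Python sketch below is a hypothetical example, not an algorithm from [107–111]: it offloads tasks in decreasing order of latency saving per unit of edge capacity and skips any task that no longer fits.

```python
def greedy_offload(savings, demands, capacity):
    """Hypothetical greedy heuristic: offload tasks in decreasing order of
    latency saving per unit of edge capacity, skipping tasks that no longer
    fit. Runs in O(n log n) time but offers no optimality guarantee."""
    order = sorted(range(len(savings)),
                   key=lambda i: savings[i] / demands[i], reverse=True)
    chosen, used = [], 0.0
    for i in order:
        if used + demands[i] <= capacity:
            chosen.append(i)
            used += demands[i]
    return sorted(chosen), sum(savings[i] for i in chosen)
```

`greedy_offload([4, 3, 5], [2, 1, 4], 5)` offloads tasks 0 and 1 for a total saving of 7, whereas offloading tasks 1 and 2 fits exactly within the capacity and saves 8. This small case shows the trade-off described above: the heuristic is fast but can miss the optimum.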

Liao et al. [107] aimed to maximize the number of offloaded tasks for all UEs in uplink communication while maintaining low system latency. They formulated the problem as an NP-hard mixed-integer nonlinear problem and proposed an efficient, low-complexity heuristic algorithm that provides a near-optimal solution. Ren et al. [108] studied three offloading models: local compression, edge compression, and partial compression offloading. They derived closed-form expressions for the optimal resource allocation and minimum system delay for local and edge cloud compression. For partial compression, they formulated a piecewise optimization problem based on a data segmentation strategy and proposed an optimal joint communication and computation resource allocation algorithm. Xing et al. [109] aimed to minimize computation delay subject to individual energy constraints at the local user and the servers. They formulated the latency minimization problem as a mixed-integer nonlinear problem (MINLP) and proposed a low-complexity sub-optimal algorithm. You et al. [110] studied resource allocation for multi-user MEC systems based on time-division multiple access (TDMA) and orthogonal frequency-division multiple access (OFDMA). For the TDMA MEC system, the authors formulated resource allocation as a convex optimization problem that minimizes mobile energy consumption under computation latency constraints. For the OFDMA MEC system, resource allocation is formulated as a mixed-integer problem (MIP); to solve it, a low-complexity sub-optimal offloading algorithm is proposed that transforms the OFDMA problem into a TDMA problem. The corresponding resource allocation is derived by defining an offloading priority function, and the algorithm achieves close-to-optimal performance in simulations. Similar to [90], Xu et al. [111] considered WPT combined with MEC and proposed a metaheuristic search approach to maximize the weighted sum computation rate of all WDs in the network.

2) Dynamic Voltage Scaling (DVS)

Dynamic Voltage and Frequency Scaling (DVFS) modifies the power and speed settings of a computing device's CPUs, controller chips, and peripheral devices to match resource allotment to the workload and to save power when those resources are not needed. By dynamically altering a CPU's voltage and frequency, DVFS reduces dynamic power consumption. The method takes advantage of the discrete frequency and voltage levels that CPUs support.
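The energy argument behind DVFS can be made concrete. Dynamic CMOS power is roughly proportional to V²·f, and a lower clock frequency permits a lower supply voltage, so the MEC literature commonly models the energy of executing `cycles` CPU cycles at frequency `f` as `E = kappa * cycles * f**2`, with execution time `T = cycles / f`, where `kappa` is an effective switched-capacitance coefficient. Under this model (an assumption here, as are the value of `kappa` and the frequency levels), the energy-optimal choice among a CPU's discrete levels is the slowest one that still meets the task deadline, as the following Python sketch shows.

```python
def pick_frequency(cycles, deadline_s, levels_hz, kappa=1e-27):
    """Choose the lowest discrete CPU frequency that meets the deadline.

    Assumes the common MEC model E = kappa * cycles * f**2 (dynamic energy
    for executing `cycles` cycles at frequency f) and T = cycles / f.
    Since E grows with f, the slowest deadline-feasible level uses the
    least energy. Returns (frequency, time, energy), or None if no level
    is fast enough.
    """
    for f in sorted(levels_hz):
        t = cycles / f
        if t <= deadline_s:
            return f, t, kappa * cycles * f ** 2
    return None
```

For a hypothetical task of 1.2 × 10⁹ cycles with a 1 s deadline and levels of 0.5, 1.0, 1.5, and 2.0 GHz, the sketch selects 1.5 GHz (0.8 s, about 2.7 J with `kappa = 1e-27`), whereas running at 2.0 GHz would finish sooner but consume about 4.8 J.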

Wang et al. [112] investigated partial computation offloading using Dynamic Voltage Scaling (DVS) by jointly optimizing the computational speed, transmit power, and offloading ratio of mobile devices, with the aim of minimizing energy consumption and latency. They formulated both the energy minimization and latency minimization problems as non-convex optimization problems. The non-convex energy minimization problem was converted into a convex problem with a variable substitution technique, and its optimal solution was obtained. For the non-convex latency minimization problem, a locally optimal algorithm based on a univariate search technique was proposed. Similarly, Guo et al. [113] studied energy-efficient dynamic computation offloading using Dynamic Voltage and Frequency Scaling (DVFS) by jointly optimizing computation offloading selection, clock frequency control, and transmission power allocation. By formulating an energy-efficient cost minimization problem, the authors presented an energy-efficient dynamic offloading and resource scheduling (eDors) policy that reduces energy consumption and application completion time.

3) Gibbs Sampling

Gibbs sampling is a Markov Chain Monte Carlo (MCMC) method for approximating complex joint distributions: it iteratively draws a new value for each variable from its conditional distribution given the current values of all the other variables.
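Gibbs sampling can also serve as a randomized search over offloading decisions; the Python sketch below illustrates the general idea rather than any specific cited algorithm. It treats a binary offloading vector `x` as a random variable with the Boltzmann distribution `p(x) ∝ exp(-cost(x) / T)`, so low-cost decisions are the most probable, and resamples one decision at a time from its exact conditional distribution. The cost model (per-task local and offloading costs plus a hypothetical congestion penalty that grows with the square of the number of offloaded tasks), the temperature `T`, and the number of sweeps are assumptions.

```python
import math
import random

LOCAL = [4.0, 3.0, 5.0, 2.0]    # hypothetical local-execution costs
OFFLOAD = [1.0, 2.0, 1.0, 3.0]  # hypothetical offloading costs


def example_cost(x, beta=0.8):
    """Per-task costs plus a congestion penalty beta * m**2,
    where m is the number of offloaded tasks."""
    m = sum(x)
    return sum(o if xi else l for xi, l, o in zip(x, LOCAL, OFFLOAD)) + beta * m * m


def gibbs_offload(cost, n, temperature=1.0, sweeps=500, seed=0):
    """Gibbs sampling over x in {0,1}^n with target p(x) ~ exp(-cost(x)/T).
    Each step resamples one decision from its conditional given the others;
    the lowest-cost decision vector visited is returned."""
    rng = random.Random(seed)
    x = [0] * n
    best, best_cost = x[:], cost(x)
    for _ in range(sweeps):
        for i in range(n):
            x[i] = 0
            c0 = cost(x)
            x[i] = 1
            c1 = cost(x)
            # P(x_i = 1 | rest) = e^{-c1/T} / (e^{-c0/T} + e^{-c1/T});
            # the exponent is clamped to avoid overflow in math.exp.
            z = max(-700.0, min(700.0, (c1 - c0) / temperature))
            x[i] = 1 if rng.random() < 1.0 / (1.0 + math.exp(z)) else 0
            c = c1 if x[i] else c0
            if c < best_cost:
                best, best_cost = x[:], c
    return best, best_cost
```

For these four tasks, brute force over all 16 decision vectors shows that offloading tasks 0 and 2 (`[1, 0, 1, 0]`, cost 10.2) is optimal, and `gibbs_offload(example_cost, 4)` reaches it. Lowering the temperature concentrates the distribution on low-cost decisions but slows mixing between them.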

Yan et al. [114] considered a task dependency model in which the input of a task at one wireless device requires the final task output of another wireless device. They investigated the optimal task offloading policy and resource allocation that minimize the weighted sum of the wireless devices' energy consumption and task execution time, and proposed efficient algorithms, based on the bisection search method and the Gibbs sampling method, to optimize the resource allocation and task offloading decisions.

4) Generalized Benders Decomposition