Computer Modeling in Engineering & Sciences

DOI: 10.32604/cmes.2022.018948

ARTICLE

COVID-19 Imaging Detection in the Context of Artificial Intelligence and the Internet of Things

1School of Math and Information Technology, Jiangsu Second Normal University, Nanjing, 211200, China

2State Key Laboratory of Millimeter Waves, Southeast University, Nanjing, 210096, China

3School of Engineering, Edith Cowan University, Joondalup, WA 6027, Australia

*Corresponding Authors: Shuwen Chen. Email: chenshuwen@126.com; Mackenzie Brown. Email: mackbrown@ieee.org

#These authors contributed equally to this work. Xiaowei Gu and Shuwen Chen are regarded as co-first authors

Received: 25 August 2021; Accepted: 11 January 2022

Abstract: Coronavirus disease 2019 (COVID-19) has placed a huge burden on healthcare systems all over the world. Against the background of artificial intelligence (AI) and Internet of Things (IoT) technologies, chest computed tomography (CT) and chest X-ray (CXR) scans are becoming more intelligent and are playing an increasingly vital role in the diagnosis and treatment of diseases. This paper reviews medical image segmentation methods and their applications, as well as deep learning-based CXR and CT diagnosis of COVID-19, which can be widely used in the fight against COVID-19.

Keywords: COVID-19; medical image; chest CT; CXR; IoMT; AI

COVID-19, which was first reported in December 2019, has rapidly spread to more than 200 countries and poses a serious threat to human life. By July 30, 2021, there had been more than 190 million confirmed cases of COVID-19, including 4,202,000 deaths. Common symptoms include fever, dry cough, and other respiratory problems, and controlling the disease largely depends on timely diagnosis. The reverse-transcription polymerase chain reaction (RT-PCR) test is generally regarded as the standard method for screening suspected cases [1]. However, laboratory testing has several drawbacks. First, shortages of medical equipment and demanding testing environments limit the rapid screening of suspected cases. Second, the RT-PCR test is time-consuming, usually taking 24–48 h because of laboratory processing. Finally, compared with the RT-PCR test, chest X-ray and chest CT scans are more sensitive to COVID-19 infection [2], and the imaging equipment is easier to operate in practice. These advantages make medical imaging a necessary complement in the early screening stage and a considerable help to clinicians. China's latest diagnosis and treatment protocol for COVID-19 (trial version 8) also highlights the value of imaging for detecting COVID-19. For example, in Wuhan, China, people whose X-ray or CT images showed features of suspected COVID-19 [3] were required to isolate for further laboratory tests, even if they had no clinical symptoms such as cough, fever, dyspnea, or muscle ache.

However, chest X-ray and CT scanning carry a risk of cross-infection in practice: uninfected doctors, or patients being examined for other diseases, may contract COVID-19 during the examination. Meanwhile, as the number of confirmed and suspected cases grows, manually marking lung lesions and analyzing large numbers of scan reports in a timely and accurate way becomes a labor-intensive task for radiologists. This workload can delay the diagnosis of COVID-19, so that patients miss the best time for treatment. It is therefore important to develop fast, automatic segmentation and diagnosis methods for COVID-19 that combine deep learning and other AI technologies to speed up diagnosis.

Chest CT features of COVID-19 patients usually include ground-glass opacity (GGO) and consolidation, as well as rarer findings such as pleural or pericardial effusion [5]; GGO is the most common of these chest CT findings. A study [6] points out that recognizing these common features is important for identifying COVID-19 more reliably.

However, the CT imaging features of COVID-19 patients vary across stages of the disease. Fig. 1 shows CT images of a COVID-19 patient at different stages and of a normal subject. In the early stage, both lungs often exhibit patchy or diffuse GGO, with thickened small blood vessels within the lesions, as well as nodular and patchy high-density opacities under the pleura of both lower lobes. In the progressive stage, lesions in both lungs change rapidly: multiple lesions fuse into large areas of consolidation and the lesion density increases. In the absorption stage, the lesion area shrinks slightly and the density gradually decreases [7]. In addition, factors such as patient age and immune status also influence the analysis and classification of COVID-19 medical images to some extent.

Figure 1: Chest CT images of a laboratory-confirmed COVID-19 patient and a healthy subject [4]. GGO is outlined with a red border in the patient images, and the corresponding region in the normal subject is outlined with a green border. (a), (b) and (c) are lung CT images of the COVID-19 patient in the early, progressive and absorption stages, respectively; (e), (f) and (g) are local magnifications of the GGO in those three stages, respectively. (d) is a lung CT scan of a normal person, and (h) is its local magnification

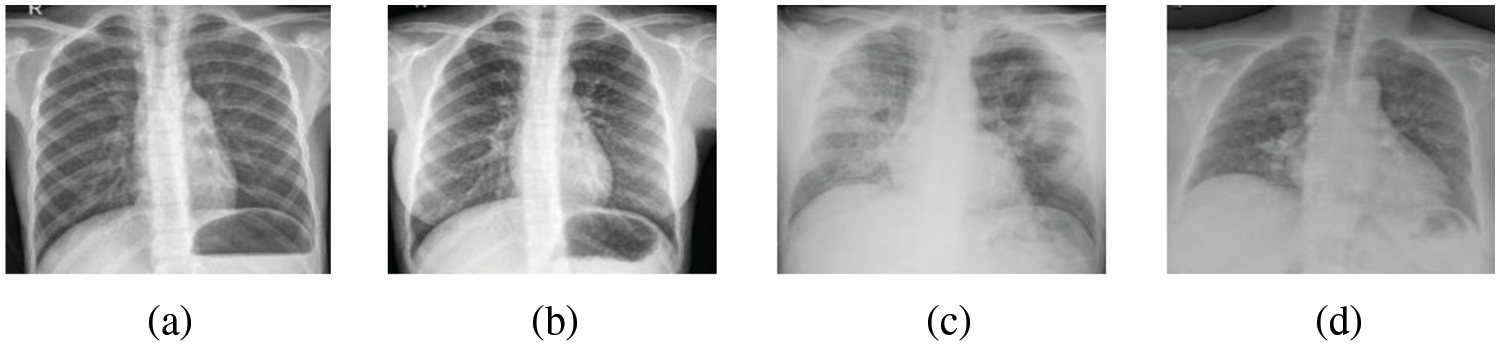

The representative features of COVID-19 on the chest X-ray images in Fig. 2 are extensive hazy opacities in the lungs, possibly with thickening of the interlobar fissures and a small amount of pleural effusion. As the patient's condition worsens, diffuse consolidation shadows appear in both lungs and a "white lung" appearance may develop, sometimes accompanied by pleural effusion.

Figure 2: Chest X-ray images [8]. (a) and (b) are X-ray images of normal people; (c) and (d) are X-ray images of COVID-19 patients. Compared with the normal images, (c) and (d) show hazy lungs with diffuse consolidation shadows

A review of the literature on COVID-19 imaging diagnosis shows that most studies are based on chest CT images, with fewer using X-ray images. This paper first reviews the development of medical image segmentation technology, including segmentation methods that combine machine learning and artificial intelligence, and their applications. Second, it focuses on the diagnosis of COVID-19 using deep learning-based analysis of chest CT and X-ray images. The last part summarizes the field and looks ahead to the future of medical imaging diagnosis, in the hope that this review will provide help and guidance for doctors treating COVID-19. The review is mainly based on medical imaging studies related to COVID-19 published before October 2021.

2 Medical Image Segmentation of COVID-19 and Its Applications

Segmentation divides an image into distinct regions, each made up of adjacent pixels with similar features. In biomedical engineering, image segmentation is a complex and challenging task that is affected by many factors, such as noise, low contrast, illumination, and irregular object shapes. The goal of segmentation is to separate the region or object of interest from the rest of the body for quantitative measurement. Segmentation methods are mainly divided into three groups: manual, semi-automatic, and automatic. Manual segmentation requires a lot of time and effort. Semi-automatic segmentation is relatively common and is often integrated into software. Automatic segmentation requires no user intervention. Each of the three approaches has its own advantages and weaknesses, and segmentation remains a challenging problem. Many studies point out that although many AI systems have been used to assist diagnosis in clinical practice, studies on segmenting infected regions in chest CT and X-ray scans are still scarce.

In this section, Table 1 lists the latest medical image segmentation methods, together with examples of their application to COVID-19 image segmentation and classification and the results each method obtained.

2.1 Performance Index of Medical Image Segmentation

Generally speaking, segmentation methods applied to CT scans evaluate their performance with indicators such as

where
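As a minimal sketch of such indicators, assuming the metrics most often reported for lesion segmentation (Dice similarity coefficient, intersection over union, sensitivity, specificity and precision, all computed from true/false positives and negatives), they can be calculated from a predicted and a ground-truth binary mask as follows. The function name `segmentation_metrics` and the small `eps` guard against division by zero are illustrative choices, not taken from any cited study.

```python
import numpy as np

def segmentation_metrics(pred, truth, eps=1e-8):
    """Common overlap metrics for binary masks (1 = lesion, 0 = background)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)     # lesion pixels correctly segmented
    fp = np.sum(pred & ~truth)    # background wrongly marked as lesion
    fn = np.sum(~pred & truth)    # lesion pixels that were missed
    tn = np.sum(~pred & ~truth)   # background correctly left unmarked
    return {
        "dice": 2 * tp / (2 * tp + fp + fn + eps),
        "iou": tp / (tp + fp + fn + eps),
        "sensitivity": tp / (tp + fn + eps),   # also called recall
        "specificity": tn / (tn + fp + eps),
        "precision": tp / (tp + fp + eps),
    }

# Toy 3 x 4 example: 4 true lesion pixels, 4 predicted, 3 of them overlap.
truth = np.array([[0, 1, 1, 0],
                  [0, 1, 1, 0],
                  [0, 0, 0, 0]])
pred = np.array([[0, 1, 1, 1],
                 [0, 1, 0, 0],
                 [0, 0, 0, 0]])
m = segmentation_metrics(pred, truth)
print({k: round(float(v), 3) for k, v in m.items()})
# {'dice': 0.75, 'iou': 0.6, 'sensitivity': 0.75, 'specificity': 0.875, 'precision': 0.75}
```

Dice and IoU rank segmentations identically (Dice = 2·IoU / (1 + IoU)), so studies usually report one of them together with sensitivity and specificity.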

Across the referenced studies, researchers approach segmentation in various ways: from the perspective of semantic segmentation with U-Net and Seg-Net architectures, or with other neural networks based on machine learning or deep learning. All of these approaches have successfully achieved image segmentation.

A. Using U-Net network and Seg-Net network for segmentation.

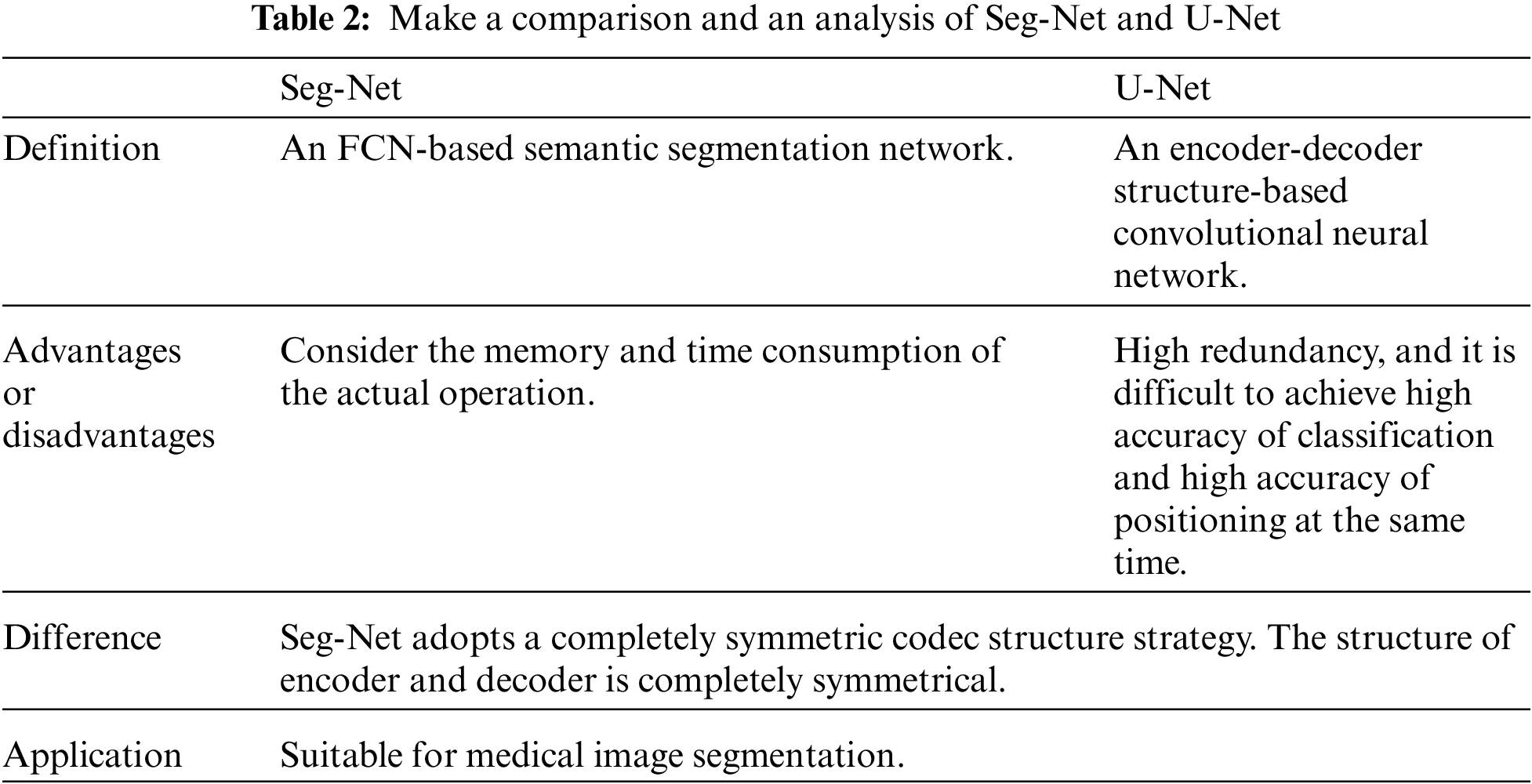

A comparison of many articles shows that U-Net and Seg-Net architectures are frequently applied in image segmentation and have become key architectures in medical imaging. U-Net, one of the earliest convolutional networks for semantic segmentation, consists mainly of two parts: a contracting (downsampling) path and an expanding (upsampling) path. The defining feature of Seg-Net is that its encoder uses the 13 convolutional layers of VGG-16. Each encoder layer has a corresponding decoder layer, and the final decoder output is fed into a softmax classifier that produces class probabilities for each pixel independently. Both networks can detect diseased areas in lung images of COVID-19 patients. The U-Net structure and the Seg-Net model are shown in Figs. 3 and 4, respectively, and the two are compared in Table 2.
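To make the two decoder designs concrete, the following is a toy NumPy sketch on small arrays, not either paper's implementation. It shows SegNet's upsampling with stored max-pooling indices (a detail of the original SegNet design that the paragraph above does not state), U-Net's skip connection as channel-wise concatenation of encoder maps with upsampled decoder maps, and the final per-pixel softmax. All function names are hypothetical.

```python
import numpy as np

def maxpool2x2_with_indices(x):
    """2x2, stride-2 max pooling of an (H, W) map (H, W even).
    Also returns, for every window, which of its 4 positions held the max."""
    h, w = x.shape
    blocks = x.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3).reshape(h // 2, w // 2, 4)
    return blocks.max(axis=-1), blocks.argmax(axis=-1)

def segnet_unpool(pooled, idx):
    """SegNet-style upsampling: each value returns to its remembered position, zeros elsewhere."""
    hp, wp = pooled.shape
    blocks = np.zeros((hp, wp, 4), dtype=pooled.dtype)
    np.put_along_axis(blocks, idx[..., None], pooled[..., None], axis=-1)
    return blocks.reshape(hp, wp, 2, 2).transpose(0, 2, 1, 3).reshape(hp * 2, wp * 2)

def unet_skip(encoder_feat, decoder_feat):
    """U-Net-style skip: upsample decoder maps (nearest neighbour, x2) and concatenate
    them with the encoder maps along the channel axis. Shapes are (C, H, W)."""
    up = decoder_feat.repeat(2, axis=1).repeat(2, axis=2)
    return np.concatenate([encoder_feat, up], axis=0)

def pixelwise_softmax(logits):
    """(K, H, W) class scores -> per-pixel class probabilities (sum to 1 over K)."""
    z = logits - logits.max(axis=0, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=0, keepdims=True)

x = np.array([[1, 5, 2, 0],
              [3, 4, 8, 1],
              [0, 2, 1, 1],
              [7, 1, 3, 2]], dtype=float)
pooled, idx = maxpool2x2_with_indices(x)
restored = segnet_unpool(pooled, idx)          # maxima back in place, zeros elsewhere
skip = unet_skip(np.ones((16, 8, 8)), np.ones((32, 4, 4)))
probs = pixelwise_softmax(np.random.default_rng(0).normal(size=(3, 4, 4)))
```

Reusing pooling indices lets SegNet upsample without storing whole encoder feature maps, whereas U-Net's concatenated skips pass more fine detail to the decoder at a higher memory cost.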

Figure 3: U-Net network structure diagram

Figure 4: Seg-Net network model

A Seg-Net-based network proposed in [15] uses the attention gate (AG) mechanism to automatically segment COVID-19 regions in CT images. Attention mechanisms are commonly used in natural image analysis, knowledge graphs, image captioning, and machine translation. Moreover, an AG can be easily integrated into a standard convolutional neural network, significantly improving the sensitivity and accuracy of the model with little additional computation. The method in [15] integrates AGs into the standard Seg-Net model (as shown in Fig. 5), which improves segmentation performance, and the experiments show that the AG-equipped model better highlights the features of local image regions. In [16], a new deep convolutional neural network, COVID-19 Seg-Net, is built to segment chest CT images of COVID-19 patients. The network consists of an encoder and a decoder. The encoder has four layers and obtains encoded features through a feature extractor and Progressive Atrous Spatial Pyramid Pooling (PASPP); the decoder restores the features to the original input size. After a sigmoid activation, the final segmentation of the COVID-19 infected region is obtained. This approach could be very beneficial for the initial screening of COVID-19 patients. Notably, the PASPP module addresses blurred image edges and highlights the edges and locations of COVID-19 infections. In [9], a noise-robust framework is proposed to learn segmentation from noisy labels and to handle lesions of different scales and appearances. First, a noise-robust Dice loss is applied; then, a COVID-19 pneumonia lesion segmentation network (COPLE-Net) is designed to handle lesions of different sizes and appearances. In addition, collecting completely clean labels for medical image segmentation is itself a challenge. Fig. 6 illustrates the JCS COVID-19 diagnostic system. Using this system, each suspected COVID-19 patient is diagnosed by a classification model in 22 s, and each uninfected case within 1 s. Comparing these articles, although all of them achieve image segmentation, the Seg-Net-based methods clearly highlight both the global and local features of images. Moreover, marking lesions, opacity areas, and their locations helps different doctors at different times to quickly understand the characteristics of a patient's images.
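The attention gate idea can be sketched as below, assuming the additive attention gate formulation popularized by Attention U-Net (Oktay et al.); the AG in [15] may differ in details. The gating signal `g` from a coarser layer and the skip features `x` are projected by 1 × 1 convolutions (written here as matrix products over channels), summed, passed through ReLU and a sigmoid, and the resulting per-pixel coefficient scales `x`. Weights are random placeholders, biases are omitted, and the function name is hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attention_gate(x, g, w_x, w_g, psi):
    """Additive attention gate (biases omitted for brevity).
    x:   skip-connection features, shape (Cx, H, W)
    g:   gating features from a coarser layer, already resampled to (Cg, H, W)
    w_x: (Ci, Cx) and w_g: (Ci, Cg) act as 1x1 convolutions; psi: (Ci,)
    Returns the gated features and the attention map alpha of shape (H, W)."""
    inter = np.einsum("ic,chw->ihw", w_x, x) + np.einsum("ic,chw->ihw", w_g, g)
    inter = np.maximum(inter, 0.0)                         # ReLU
    alpha = sigmoid(np.einsum("i,ihw->hw", psi, inter))    # one weight in [0, 1] per pixel
    return x * alpha[None, :, :], alpha

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16, 16))      # placeholder encoder (skip) features
g = rng.normal(size=(16, 16, 16))     # placeholder gating signal
w_x, w_g, psi = rng.normal(size=(4, 8)), rng.normal(size=(4, 16)), rng.normal(size=4)
gated, alpha = attention_gate(x, g, w_x, w_g, psi)
```

The gate adds only two 1 × 1 projections and one scalar map per skip connection, which is why it is described as cheap to integrate into an existing encoder-decoder.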

Figure 5: Proposed network architecture (Seg-Net), equipped with AG modules

Figure 6: The JCS COVID-19 diagnostic system
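The noise-robust ("anti-noise") Dice loss used with COPLE-Net [9] can be sketched as follows, assuming the formulation described in that paper: sum(|p − g|^γ) / (sum(p²) + sum(g²) + ε), with γ between 1 and 2. Readers should check [9] for the exact definition and the γ actually used; function names and the toy data are illustrative.

```python
import numpy as np

def noise_robust_dice_loss(prob, target, gamma=1.5, eps=1e-5):
    """sum(|p - g| ** gamma) / (sum(p ** 2) + sum(g ** 2) + eps), with gamma in [1, 2].
    gamma = 2 gives the ordinary soft Dice loss; values nearer 1 behave more like a
    mean-absolute-error loss, whose gradient does not grow with the error, so pixels
    with large errors (possibly mislabeled) get relatively less influence."""
    p = np.asarray(prob, dtype=float).ravel()
    g = np.asarray(target, dtype=float).ravel()
    return float(np.sum(np.abs(p - g) ** gamma) / (np.sum(p ** 2) + np.sum(g ** 2) + eps))

def soft_dice_loss(prob, target, eps=1e-5):
    """Standard soft Dice loss, for comparison."""
    p = np.asarray(prob, dtype=float).ravel()
    g = np.asarray(target, dtype=float).ravel()
    return float(1.0 - 2.0 * np.sum(p * g) / (np.sum(p ** 2) + np.sum(g ** 2) + eps))

prob = np.array([0.9, 0.8, 0.1, 0.2])
label = np.array([1, 1, 0, 1])   # suppose the last pixel's annotation is wrong
for gamma in (1.0, 1.5, 2.0):
    print(gamma, round(noise_robust_dice_loss(prob, label, gamma), 4))
```

With γ = 2 and ε = 0 the expression equals the soft Dice loss exactly, because Σ(p − g)² = Σp² + Σg² − 2Σpg.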

B. Using Inf-Net for segmentation.

Paper [10] proposes Inf-Net, a deep network for segmenting lung infections, which uses a parallel partial decoder to aggregate high-level features and generate a global map. Explicit edge attention and implicit reverse attention are used to model boundaries and enhance the feature representation. To alleviate the shortage of labeled data, a semi-supervised segmentation framework based on a random selection propagation strategy is designed, which improves segmentation ability and achieves better segmentation performance. Compared with unsupervised detection and segmentation, the semi-supervised model identifies the target region better, which makes it suitable for COVID-19 detection (Fig. 7).
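The reverse attention step can be sketched as follows: a deeper level's lesion prediction is turned into weights of 1 − sigmoid(prediction), so the current level focuses on regions not yet predicted as lesion, such as boundaries. As a simplification (an assumption, not Inf-Net's exact layers), the deeper prediction is upsampled by nearest-neighbour repetition instead of bilinear interpolation, and no learned convolutions follow the weighting. The function name is hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def reverse_attention(features, coarse_logits, factor=2):
    """features: (C, H, W) side-output features at the current level.
    coarse_logits: (H // factor, W // factor) lesion prediction from a deeper level.
    Weights are 1 - sigmoid(prediction): regions already predicted as lesion are
    suppressed, so this level concentrates on the remaining areas and boundaries."""
    up = coarse_logits.repeat(factor, axis=0).repeat(factor, axis=1)   # nearest-neighbour upsampling
    weight = 1.0 - sigmoid(up)
    return features * weight[None, :, :], weight

coarse = np.array([[6.0, -6.0],
                   [-6.0, -6.0]])     # deeper level is confident about the top-left block only
feats = np.ones((4, 4, 4))
out, w = reverse_attention(feats, coarse)
```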

Figure 7: Overview of the proposed semi-supervised Inf-Net framework

C. Using Anam-Net for segmentation.

Compared with conventional supervised and unsupervised methods, deep learning-based segmentation is more flexible and efficient, and it is fully capable of detecting abnormal areas with low intensity contrast between diseased and healthy tissue. However, PASPP, mentioned in Part A, can only improve the segmentation of blurred images and does not specifically address abnormal regions. Therefore, in [11], researchers developed Anam-Net, a novel lightweight deep convolutional neural network designed to segment COVID-19 abnormalities in chest CT images, and introduced a label-based weighting strategy into the network's cost function. Fig. 8 shows the key steps involved in segmenting COVID-19 anomalies. Anam-Net is fully automated, which shortens the turnaround time for segmentation. Compared with common methods, Anam-Net has lower computational complexity and greater application value in clinical environments. The segmentation network in [12] is also based on deep learning, but it is a 3D network proposed in an IoT context. A local cross-channel information interaction mechanism recalibrates the feature weights of global information, and the extracted features improve the feature representation. In the encoder, a pyramid fusion module with multi-scale global information interaction is proposed to enhance the network, which indirectly improves the 3D network's segmentation of lesion areas. However, its accuracy still needs to be improved for lesions with irregular shapes and varying sizes.
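A label-based weighting of the cost function can be sketched as a class-weighted, pixel-wise cross-entropy in which rare classes (such as small lesions) receive larger weights. The inverse-frequency weights below are an assumption for illustration; the exact weighting scheme in [11] may differ, and the function names are hypothetical.

```python
import numpy as np

def class_weights_from_labels(labels, num_classes, eps=1e-8):
    """Inverse-frequency weights: rare classes (e.g. small lesions) get larger weights."""
    counts = np.bincount(labels.ravel(), minlength=num_classes).astype(float)
    return labels.size / (num_classes * (counts + eps))

def weighted_cross_entropy(probs, labels, weights, eps=1e-12):
    """probs: (C, H, W) softmax output; labels: (H, W) integer class map.
    Returns the weighted mean of -log(probability of the true class)."""
    h, w = labels.shape
    p_true = probs[labels, np.arange(h)[:, None], np.arange(w)[None, :]]
    pixel_w = weights[labels]
    return float(np.sum(pixel_w * -np.log(p_true + eps)) / np.sum(pixel_w))

labels = np.zeros((4, 4), dtype=int)
labels[1, 1] = labels[1, 2] = 1                  # 2 lesion pixels out of 16
weights = class_weights_from_labels(labels, 2)   # background ~0.571, lesion 4.0
probs = np.full((2, 4, 4), 0.5)                  # an uninformative prediction
loss = weighted_cross_entropy(probs, labels, weights)
```

Without such weighting, a network can reach a low loss by labelling almost every pixel as background, since lesions often occupy only a small fraction of a CT slice.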

Figure 8: Key steps of the proposed approach for automated segmentation of abnormalities in chest CT images

Although Inf-Net has some limitations, it still achieves satisfactory results in segmenting infected regions [13]. In clinical practice, COVID-19 patients often need to be classified first, after which the infected area is segmented to facilitate treatment. Inf-Net mainly focuses on segmenting pulmonary infections, but it is also well suited to monitoring longitudinal disease changes and to large-scale screening, and it has great potential for evaluating COVID-19 diagnoses by quantifying the infected area. Paper [11] proposes Anam-Net for segmenting COVID-19 abnormalities. Compared with other models, it has very low computational complexity, can be widely deployed in clinical settings, and enables rapid evaluation of abnormal COVID-19 images [16].

2.3 The Application of COVID-19 Segmentation

The model in [13] uses a dual-path CNN pipeline with three distinct input images to automatically segment COVID-19 pulmonary infection tissue from CT images. When infections have fuzzy boundaries or lesions are widespread, the dual CNN pipeline simplifies the procedure and reduces the time cost. Fig. 9 shows the two-path CNN model with its three different inputs. One path uses five convolutional layers to extract global features and the other uses two convolutional layers to extract local features; the local and global windows are 25 × 25 and 60 × 60, respectively. The lesion attention (LA) module developed for the dual-branch combination network (DCN) [14] focuses the network on infected sites and can be applied in early COVID-19 screening. Since comorbidities may be a risk factor for worsening COVID-19, [17] applied a multi-task U-Net network as a tool for building a quantitative segmentation model to assess the impact of comorbidities on COVID-19 patients, evaluating that impact through both visual and quantitative data. The network extracts features from CT images to separate lesions from the lungs and classifies whether each lesion is consolidated. The final output is the lesion volume of the group with underlying diseases and of the group without. The workflow is shown in Fig. 10. To verify the reliability of the multi-task network, U-Net is first used to segment the patients' lesion areas, and the segmentation results are then checked manually. More than 95% of the lesion regions were found to be segmented accurately, indicating that the multi-task U-Net is stable and accurate and that U-Net can be used for CT image segmentation of COVID-19 patients. A potential challenge is that the incubation period of comorbidities varies from person to person, which makes CT images uncertain and delays judgment.
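Two operations mentioned above can each be sketched in a few lines: extracting the 25 × 25 local and 60 × 60 global windows around a pixel for a two-path CNN [13], and turning a binary lesion mask into a volume, as in the comorbidity analysis of [17]. Centring, zero padding at the borders, the voxel spacing and the `infection_ratio` helper are assumptions made for illustration; all function names are hypothetical.

```python
import numpy as np

def dual_window_patches(image, row, col, local_size=25, global_size=60):
    """Crop a local and a global window around pixel (row, col) of a 2D CT slice.
    The image is zero-padded so windows near the border keep their full size."""
    pad = global_size // 2 + 1
    padded = np.pad(image, pad, mode="constant")
    r, c = row + pad, col + pad

    def crop(size):
        top, left = r - size // 2, c - size // 2
        return padded[top:top + size, left:left + size]

    return crop(local_size), crop(global_size)

def lesion_volume_ml(lesion_mask, spacing_mm):
    """Volume of a binary 3D lesion mask (D, H, W) given voxel spacing (dz, dy, dx) in mm."""
    voxel_mm3 = float(np.prod(spacing_mm))
    return float(np.count_nonzero(lesion_mask)) * voxel_mm3 / 1000.0   # 1 mL = 1000 mm^3

def infection_ratio(lesion_mask, lung_mask):
    """Fraction of lung voxels that are segmented as lesion."""
    return np.count_nonzero(lesion_mask & lung_mask) / max(np.count_nonzero(lung_mask), 1)

slice_ = np.random.default_rng(0).random((128, 128))     # placeholder CT slice
local, global_ = dual_window_patches(slice_, 0, 127)     # corner pixel: padding keeps sizes

mask = np.zeros((10, 10, 10), dtype=bool)
mask[4:6, 2:7, 2:7] = True                                # 2 * 5 * 5 = 50 lesion voxels
lung = np.ones_like(mask)
vol = lesion_volume_ml(mask, (5.0, 0.7, 0.7))            # assumed spacing: 5 mm slices, 0.7 mm pixels
```

Reporting volume in millilitres rather than voxel counts keeps results comparable between scanners with different slice thicknesses.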

Figure 9: Implemented two-path CNN model using three distinct inputs

Figure 10: A process diagram for assessing the effects of comorbidities

At the same time, the double challenge of comorbidities and COVID-19 must be actively addressed. Recent results from the team of Chinese expert Zhong Nanshan show that chronic obstructive pulmonary disease (COPD), diabetes, hypertension, and malignant tumors are closely associated with the clinical outcomes of novel coronavirus infection. COVID-19 patients with comorbidities have a significantly higher risk of ICU admission and death than those without. This reminds clinicians to learn patients' medical histories and to ensure adequate personal protection and closer medical observation for COVID-19 patients with multiple comorbidities.

In short, image segmentation plays a very important role in automatically screening and detecting lesion areas in COVID-19 CT or X-ray images, helping radiologists quickly decide on the next steps in diagnosis and treatment.

3 Medical Image Diagnosis of COVID-19 Based on Machine Learning

Machine learning is a multidisciplinary field in which a computer uses existing data to train an algorithmic model and then uses the model to make predictions on new data. Machine learning models are typically built with regression algorithms, neural networks, support vector machines, clustering, and other methods. Deep learning, a branch of machine learning, uses multi-layer neural networks to build more complex models. Deep learning networks mimic the way the human brain interprets images, sounds, text, and other data, and they have driven the rapid development of artificial intelligence. They have not only given machine learning more practical applications but also extended the overall scope of artificial intelligence into areas such as medical imaging. Because imaging is low in cost and high in sensitivity, radiologists widely use X-ray and chest CT images to diagnose pulmonary infections. However, a single chest CT scan contains hundreds of slices, which is a heavy load for radiologists to review manually, and much of this work can be delegated to machines. Computer vision methods based on deep learning therefore have broad application prospects in medical imaging, and using artificial intelligence (AI) to assist diagnosis allows doctors to treat patients more effectively.

3.1 Chest X-Ray Diagnosis of COVID-19 with Deep Learning

The control of COVID-19 largely depends on correct diagnosis. AI-based X-ray equipment is already available around the world and produces results quickly. Although chest X-ray is less sensitive than 3D chest CT imaging, it can be used for the early detection of COVID-19 and helps patients avoid losing treatment time during the diagnosis stage. Combining artificial intelligence with chest X-ray imaging also improves diagnostic efficiency [18].

Paper [19] proposes using the ResNet-50 deep learning model to extract features from chest X-ray images; the overall workflow is shown in Fig. 11. Three classes (COVID-19, normal, and viral pneumonia) are studied with SVM classifiers: features are extracted with the residual network ResNet-50, a convolutional neural network model, and then classified by support vector machines. Table 3 summarizes the performance of three different SVM algorithms. The experimental results show that the deep learning-based model can extract features directly and produce results even with limited COVID-19 data, and that this approach is more effective than COVID-19 studies that rely on manual feature extraction. Zhou et al. [20] analyzed chest X-ray images of COVID-19 patients and proposed a method that combines image recombination with a ResNet and an SVM. Their idea is to crop the lung region from the original image, divide it into smaller pieces, and randomly recombine those pieces into regular images. The reconstructed images are then fed into deep residual encoding blocks for feature extraction, and the extracted features are used as input to a support vector machine (SVM) for recognition. The process is shown in Fig. 12.
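The image recombination idea of [20] can be sketched as a tile shuffle, assuming the lung region has already been cropped, a square grid of equal tiles, and a uniformly random permutation; the tile size and sampling rule used in [20] may differ. The residual encoder and SVM stages are not shown, and the function name is hypothetical.

```python
import numpy as np

def recombine_tiles(lung_image, grid=4, rng=None):
    """Split a cropped lung image into grid x grid tiles and reassemble them in a
    random order, producing a new image of (almost) the same size. Rows/columns
    that do not divide evenly by `grid` are cropped off."""
    rng = np.random.default_rng() if rng is None else rng
    th, tw = lung_image.shape[0] // grid, lung_image.shape[1] // grid
    tiles = [lung_image[i * th:(i + 1) * th, j * tw:(j + 1) * tw]
             for i in range(grid) for j in range(grid)]
    order = rng.permutation(len(tiles))
    rows = [np.hstack([tiles[order[i * grid + j]] for j in range(grid)])
            for i in range(grid)]
    return np.vstack(rows)

img = np.arange(64 * 64, dtype=float).reshape(64, 64)   # stand-in for a cropped lung region
new_img = recombine_tiles(img, grid=4, rng=np.random.default_rng(42))
```

Because the shuffle keeps local texture but destroys global layout, a classifier trained on such images is pushed to rely on local lesion patterns, and each original image can yield many recombined samples.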

Figure 11: Flow diagram

Figure 12: The specific process

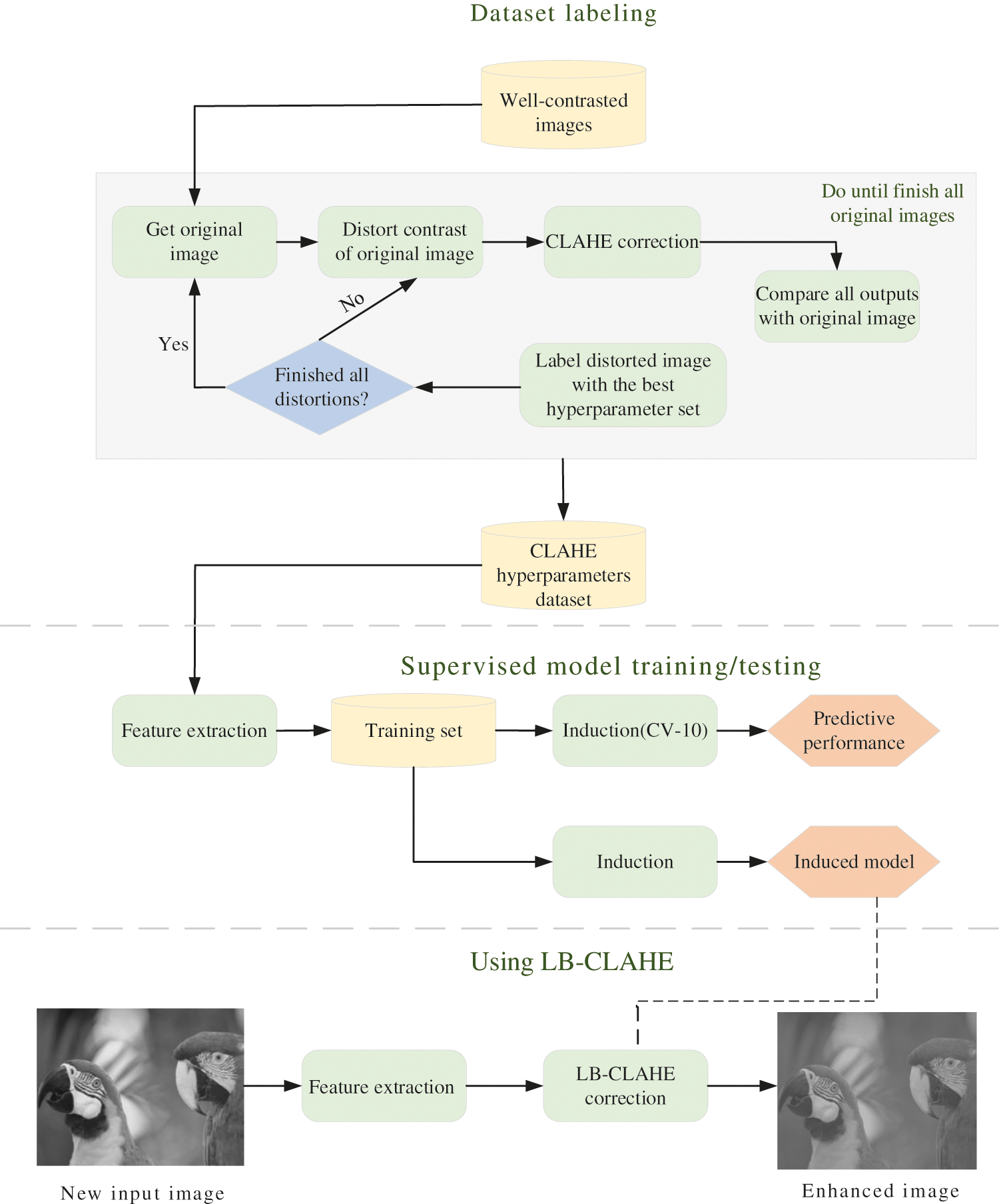

To study the patterns in chest images of COVID-19 patients, CNN is a good choice of deep learning algorithm. The study in [21] uses a pre-trained model and a dataset of 100 COVID-19 chest radiographs and 100 normal chest radiographs. The COVID-19 chest radiograph dataset is available through GitHub [22], and the normal chest radiograph dataset through Kaggle [23]. Contrast Limited Adaptive Histogram Equalization (CLAHE) is used to improve image quality, and combining CNN with CLAHE enables target recognition and image enhancement in medical images, particularly for detecting COVID-19 in chest radiographs. The study compares the accuracy of several enhancement techniques: among histogram equalization (HE), gamma correction (GC), and CLAHE, GC gives the highest sensitivity and CLAHE the highest accuracy. The key parameters of CLAHE are the clip limit (CL) and the number of tiles (NT) [24]. The experimental results show 99% accuracy on the training set and 97% on the validation set, indicating that applying a CNN on top of CLAHE improves the baseline model. CLAHE improves the quality and contrast of the images to be classified. Fig. 13 shows the dataset labeling procedure, the use of LB-CLAHE, and the supervised model building. In addition, [25] designs a new AI system based on chest CT images, a model called CCSHNet, for COVID-19 diagnosis. CT generates cross-sectional images of the scanned area by processing images taken from different angles, providing three-dimensional volume data that reveals additional spatial features and can be used to generate high-quality images. In CCSHNet, the SAPNF algorithm is developed to select the two optimal models, characterized by PTM and NLR. The authors regard CCSHNet as a first-line diagnostic tool for COVID-19 and other lung infections that helps radiologists make accurate CT-based diagnoses.
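The three enhancement methods compared in [21] can be sketched as below. `equalize` with `clip_limit` implements the contrast-limiting (clip-and-redistribute) step on the global histogram; full CLAHE additionally splits the image into NT tiles, equalizes each tile with this clipping, and blends neighbouring tile mappings by bilinear interpolation. In practice, OpenCV's `cv2.createCLAHE(clipLimit=..., tileGridSize=...)` provides a complete implementation, with its clip limit expressed on a different scale. Function names and parameter values here are illustrative.

```python
import numpy as np

def equalize(img, clip_limit=None):
    """Histogram equalisation of a uint8 image.
    With clip_limit (fraction of all pixels allowed per grey level), bin counts above
    the limit are clipped and the excess is spread evenly over all 256 bins before the
    mapping is built: this is the contrast-limiting step that CLAHE uses."""
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    if clip_limit is not None:
        limit = clip_limit * img.size
        excess = np.maximum(hist - limit, 0.0).sum()
        hist = np.minimum(hist, limit) + excess / 256.0
    cdf = np.cumsum(hist)
    cdf = (cdf - cdf[0]) / max(cdf[-1] - cdf[0], 1e-12)
    lut = np.round(cdf * 255).astype(np.uint8)    # grey-level mapping table
    return lut[img]

def gamma_correct(img, gamma):
    """Gamma correction of a uint8 image: gamma < 1 brightens, gamma > 1 darkens."""
    return np.round(255.0 * (img / 255.0) ** gamma).astype(np.uint8)

# Low-contrast toy image: grey levels 100..119 only.
img = np.repeat(np.arange(100, 120, dtype=np.uint8), 50).reshape(20, 50)
he = equalize(img)                          # stretches the range almost to 0..255
clahe_like = equalize(img, clip_limit=0.01)  # stretches it, but much less aggressively
gc = gamma_correct(img, 0.5)
```

The clip limit bounds the slope of the grey-level mapping, which is why contrast-limited equalization amplifies noise less than plain HE in nearly uniform lung regions.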

Figure 13: The overall process of using the CLAHE method

Paper [26] starts from wavelets, which have varying frequency and finite duration, and proposes an automatic method for diagnosing COVID-19 from chest X-ray images based on wavelet theory. The method integrates multi-resolution analysis into the disease recognition network. Its dataset contains 1439 images in three categories: 678 normal cases, 132 COVID-19 cases, and 629 viral pneumonia cases. The results are compared with four recent techniques (DarkCovidNet, Flat-EfficientNet B3, Hierarchy-EfficientNet B3, and DeTraC-ResNet18), whose performance is shown in Table 4. These techniques use deep learning models to diagnose COVID-19 from chest X-ray images [27].
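Multi-resolution analysis with wavelets can be illustrated with the simplest case, a single-level 2D Haar transform applied repeatedly to the approximation band. The choice of the Haar wavelet and the number of levels are assumptions; [26] may use other wavelets and feed the sub-bands to its network differently. Function names are hypothetical.

```python
import numpy as np

def haar_dwt2(img):
    """One level of the orthonormal 2D Haar wavelet transform (H and W even).
    Returns the approximation LL and three detail sub-bands, each (H/2, W/2).
    Sub-band naming conventions differ between libraries."""
    x = np.asarray(img, dtype=float)
    a, b = x[0::2, 0::2], x[0::2, 1::2]     # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]     # bottom-left, bottom-right
    ll = (a + b + c + d) / 2.0               # local average: coarse anatomy
    lh = (a + b - c - d) / 2.0               # row-to-row change: horizontal edges
    hl = (a - b + c - d) / 2.0               # column-to-column change: vertical edges
    hh = (a - b - c + d) / 2.0               # diagonal detail
    return ll, lh, hl, hh

def multiresolution(img, levels=2):
    """Repeatedly decompose the LL band, returning the detail bands of each level."""
    details, ll = [], np.asarray(img, dtype=float)
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)
        details.append((lh, hl, hh))
    return ll, details

xray = np.random.default_rng(0).random((256, 256))     # placeholder for a chest X-ray
ll, lh, hl, hh = haar_dwt2(xray)
coarse, details = multiresolution(xray, levels=3)       # 128 -> 64 -> 32 pixel levels
```

Because the transform is orthonormal, no information is lost: the detail bands at each level isolate edges and fine texture at one scale, while the LL band keeps the coarse lung structure.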

In [27], Abbas et al. adopt a COVID-19 image classification method based on class decomposition, which classifies coronavirus images with considerable confidence. The study in [28] aims to diagnose COVID-19 through both flat and hierarchical classification, effectively training a deep convolutional neural network on a limited image dataset. In [29], the authors chose a deep learning algorithm based on a convolutional neural network and a VGG-16 transfer learning model to analyze X-ray images of COVID-19 patients. The chest X-ray examination of COVID-19 patients using Contrast Limited Adaptive Histogram Equalization (CLAHE) and Convolutional Neural Networks (CNN) is carried out in several stages, as illustrated in Fig. 14. In [30], ground-truth segmentation masks of infected regions are published to support diagnosing COVID-19 from X-ray images. Because manual segmentation is slow and costly, a human-machine collaborative method is also proposed to generate infection masks for the X-ray images used in COVID-19 diagnosis. To improve segmentation performance, the segmentation network is trained with a mixed loss function that combines focal loss and Dice loss. For scenarios with large class imbalance, a common approach is to add a weighting factor that balances the contributions of the positive and negative classes.

Figure 14: Detailed procedures for chest X-ray examination of COVID-19 patients using contrast limited adaptive histogram equalization (CLAHE) and a convolutional neural network (CNN)

The final experimental results successfully achieve 81.72% sensitivity and 83.20% F1-score.
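A mixed focal + Dice loss of the kind described for [30] can be sketched as below, where `alpha` is the class-balancing weighting factor and `gamma` down-weights easy, well-classified pixels. The defaults alpha = 0.25 and gamma = 2 come from the original focal loss paper (Lin et al.), and the equal weighting `lam = 1` of the two terms is an assumption; [30] may use other values. Function names are hypothetical.

```python
import numpy as np

def focal_loss(prob, target, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss: mean of -alpha_t * (1 - p_t) ** gamma * log(p_t)."""
    p = np.clip(np.asarray(prob, dtype=float), eps, 1.0 - eps)
    y = np.asarray(target)
    p_t = np.where(y == 1, p, 1.0 - p)               # probability given to the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)   # class-balancing weighting factor
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))

def dice_loss(prob, target, eps=1e-7):
    """Soft Dice loss on the whole mask (overlap-based, insensitive to background size)."""
    p = np.asarray(prob, dtype=float).ravel()
    y = np.asarray(target, dtype=float).ravel()
    return float(1.0 - (2.0 * np.sum(p * y) + eps) / (np.sum(p) + np.sum(y) + eps))

def mixed_loss(prob, target, lam=1.0, alpha=0.25, gamma=2.0):
    """Focal loss plus lam times Dice loss (lam = 1 is an assumed weighting)."""
    return focal_loss(prob, target, alpha, gamma) + lam * dice_loss(prob, target)

prob = np.array([0.95, 0.6, 0.3, 0.05])
mask = np.array([1, 1, 0, 0])
print(round(mixed_loss(prob, mask), 4))
```

With alpha = 0.5 and gamma = 0 the focal term reduces to half the ordinary binary cross-entropy, which is a convenient sanity check; the Dice term complements it by scoring overlap directly.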

Overall, most studies use X-ray images to classify COVID-19 and non-COVID-19 patients, and researchers have proposed detecting COVID-19 with convolutional and other deep learning strategies to obtain more accurate and reliable results. Because COVID-19 image data are limited, it is hard to obtain large amounts of data and conduct more extensive experiments, so doubts remain about the clinical suitability of these methods. Future work will focus on the early detection and diagnosis of COVID-19 [31].

3.2 Chest CT Diagnosis of COVID-19 with Deep Learning

A convolutional neural network is a specialized deep neural network model, generally divided into one-dimensional, two-dimensional, and three-dimensional variants. One-dimensional convolution, the simplest, slides the kernel along a single axis, for example over a sequence of word vectors, and is usually used for low-dimensional data in natural language processing and sequence models. Two-dimensional convolution extends this to two axes and is often used in computer vision and image processing. Three-dimensional convolution follows the same idea along three axes, and 3D convolutional neural networks are trained in the same way as other convolutional neural networks. 3D convolutional neural networks are now widely used in the medical field, for example for CT volumes and video processing, while two-dimensional convolutional neural networks are also widely used for processing CT slices and X-ray images.
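The point that 1D, 2D and 3D convolutions share one idea can be shown with a single NumPy function that slides a kernel over an input of any dimensionality. This is a single-channel, unpadded, stride-1 sketch using `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later); real CNN layers add channels, padding, strides and biases. The function name is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view   # NumPy >= 1.20

def conv_nd(x, kernel):
    """'Valid' cross-correlation (what a CNN layer computes, without padding, stride,
    bias or multiple channels) for inputs of any dimensionality: 1D, 2D or 3D."""
    assert x.ndim == kernel.ndim, "input and kernel need the same number of axes"
    windows = sliding_window_view(x, kernel.shape)           # out_shape + kernel.shape
    window_axes = tuple(range(x.ndim, 2 * x.ndim))
    return np.tensordot(windows, kernel, axes=(window_axes, tuple(range(kernel.ndim))))

seq = np.array([1.0, 2.0, 3.0, 4.0])                  # 1D, e.g. a signal or one word-vector channel
print(conv_nd(seq, np.array([1.0, -1.0])))             # [-1. -1. -1.]
slice2d = np.random.default_rng(0).random((5, 5))      # 2D, e.g. one CT slice or X-ray patch
volume = np.ones((8, 16, 16))                          # 3D, e.g. a stack of CT slices
print(conv_nd(slice2d, np.ones((3, 3))).shape)         # (3, 3)
print(conv_nd(volume, np.ones((3, 3, 3))).shape)       # (6, 14, 14)
```

The only thing that changes between the three cases is the number of axes the kernel slides along; a 3D kernel can also capture patterns that span neighbouring CT slices, which a 2D kernel applied slice by slice cannot.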

The CNN is a development of the traditional neural network proposed in 1968 [32]. Given limited computing power and the lack of available data for identifying infections from lung X-ray images, building a custom convolutional neural network (CNN) from scratch using many historical lung X-ray images provided by experts is possible but clearly inefficient. Because CNNs are good at processing the raw pixels of images, one study adopts a new model organized around global and local features to investigate COVID-19 lesions. Three different input images are designed: the original image, a fuzzy clustering image, and an encoded image. With automated feature extraction, the various lesions caused by COVID-19 can be detected in time.
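The text does not specify how the fuzzy clustering input image is produced. Assuming it is obtained by fuzzy c-means clustering of pixel intensities, with each pixel then replaced by the centre of the cluster it belongs to most strongly, a sketch looks like this; the number of clusters, the fuzziness exponent m = 2 and the quantile initialization are illustrative choices, and the function names are hypothetical.

```python
import numpy as np

def fuzzy_cmeans_1d(values, n_clusters=3, m=2.0, n_iter=100, tol=1e-6):
    """Fuzzy c-means on pixel intensities.
    Returns cluster centres (n_clusters,) and memberships u (n_clusters, N),
    where each pixel's memberships sum to 1. m > 1 controls the fuzziness."""
    x = np.asarray(values, dtype=float).ravel()
    centres = np.quantile(x, np.linspace(0.1, 0.9, n_clusters))   # deterministic start
    for _ in range(n_iter):
        dist = np.abs(x[None, :] - centres[:, None]) + 1e-12
        inv = dist ** (-2.0 / (m - 1.0))
        u = inv / inv.sum(axis=0, keepdims=True)       # standard FCM membership update
        um = u ** m
        new_centres = um @ x / um.sum(axis=1)          # membership-weighted means
        converged = np.max(np.abs(new_centres - centres)) < tol
        centres = new_centres
        if converged:
            break
    return centres, u

def fuzzy_cluster_image(img, n_clusters=3):
    """Replace every pixel by the centre of the cluster it belongs to most strongly."""
    centres, u = fuzzy_cmeans_1d(img, n_clusters)
    return centres[u.argmax(axis=0)].reshape(img.shape), centres

rng = np.random.default_rng(0)
img = rng.choice([20.0, 120.0, 220.0], size=(32, 32)) + rng.normal(0, 5, size=(32, 32))
clustered, centres = fuzzy_cluster_image(img)
```

Unlike hard k-means, each pixel keeps a graded membership in every cluster, which suits the soft, gradual transitions of ground-glass opacities.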

Because COVID-19 appears on CT images much like other types of viral pneumonia, it has been difficult for researchers to identify. There are therefore many studies on distinguishing COVID-19 patients from non-COVID-19 patients. Paper [33] proposes a new PSSPNN model that classifies COVID-19, secondary tuberculosis, community-acquired pneumonia, and healthy subjects, although the method still needs improvement. In addition, Li et al. develop a CAD system for screening COVID-19 CT images: paper [34] introduces a 2D CNN that takes a series of CT slices as input and uses 2D ResNet-50 as the backbone to extract CNN features from each slice. The features are combined by a max-pooling operation, and the resulting feature map is fed to a fully connected layer that produces a probability score for each class. Paper [35] uses a three-dimensional segmentation model, V-Net [36], to segment lesion candidates from CT images.
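The slice-aggregation step described for [34] can be sketched as follows: per-slice feature vectors are max-pooled across slices and passed to a fully connected layer with softmax. The backbone is not reproduced; random vectors of ResNet-50's 2048-dimensional pooled feature size stand in for its outputs, three output classes are assumed, and the function name is hypothetical.

```python
import numpy as np

def slice_maxpool_classifier(slice_features, w, b):
    """slice_features: (S, F), one feature vector per CT slice (e.g. taken from a
    shared 2D backbone). Max pooling across slices, then a fully connected layer
    and softmax give one probability per class for the whole scan."""
    pooled = slice_features.max(axis=0)          # (F,): strongest response over all slices
    logits = w @ pooled + b                      # (K,)
    e = np.exp(logits - logits.max())            # numerically stable softmax
    return e / e.sum()

rng = np.random.default_rng(0)
n_slices, n_feat, n_classes = 40, 2048, 3       # 2048 = ResNet-50's pooled feature size
feats = rng.normal(size=(n_slices, n_feat))      # placeholder for backbone outputs
w, b = rng.normal(scale=0.01, size=(n_classes, n_feat)), np.zeros(n_classes)
p = slice_maxpool_classifier(feats, w, b)
```

Because max pooling is symmetric, the prediction does not depend on slice order or on the number of slices, which suits CT series of varying length.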

With the rapid spread of COVID-19, chest CT images have become the main source of patient information for radiologists because of their high sensitivity and low cost. However, because the disease is highly infectious, medical workers face great risk when collecting COVID-19 CT data. To address this problem, researchers have proposed a CT image synthesis method based on a conditional generative adversarial network (CGAN) that effectively generates high-quality, realistic COVID-19 CT images for deep learning-based medical imaging tasks, and the results show that it benefits COVID-19 CT image synthesis. To further improve performance, the AVNC model [37] combines attention mechanisms with improved multiple-way data augmentation to expand the dataset.

Since COVID-19 is highly infectious, to avoid infecting healthcare workers through contact with patients, researchers have proposed a novel CGAN structure containing a multi-resolution discriminator and a global-local generator. Both the discriminator and the generator use a dual-network design to model the global and local information of CT images, respectively. This dual structure also includes a communication mechanism for exchanging information between the two networks, which helps generate realistic CT images with a stable global structure and rich local details [38].
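For reference, the standard conditional GAN objective (Mirza and Osindero), on which CGAN-based CT synthesis builds, is given below, with y the conditioning input, x a real CT image and z a noise vector. How [38] conditions its generator and which additional loss terms it uses are not stated here; with a multi-resolution discriminator, a common choice (for example in pix2pixHD) is to sum the adversarial term over discriminators D_1 to D_n operating at different scales, as in the second line.

$$
\min_{G}\max_{D}\; V(D,G)=\mathbb{E}_{x\sim p_{\mathrm{data}}}\big[\log D(x\mid y)\big]+\mathbb{E}_{z\sim p_{z}}\big[\log\big(1-D(G(z\mid y)\mid y)\big)\big]
$$

$$
\min_{G}\;\max_{D_1,\ldots,D_n}\;\sum_{k=1}^{n} V(D_k,G)
$$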

Besides direct segmentation and 3D networks, the authors of [39] proposed a new multi-task prior-attention learning strategy for COVID-19 screening in volumetric chest CT images. Specifically, a prior-attention residual learning (PARL) block is designed to integrate two ResNet-based branches into an end-to-end trainable framework. Within these blocks, hierarchical attention information from the lesion-region detection branch is transferred to the COVID-19 classification branch so that it learns a more discriminative representation. This makes lesion localization more accurate and lets the additional supervision improve the performance of the COVID-19 classification task. Experimental results show that this method outperforms other state-of-the-art COVID-19 screening methods [40].

It is worth noting that the paper [41] established an end-to-end multiple-input deep convolutional attention network (MIDCAN) that combines X-ray and CT to improve AI diagnostic performance. MIDCAN uses the convolutional block attention module (CBAM), and its sensitivity reached about 98% in the reported experiments. Fig. 15 shows the MIDCAN model. On the left, the "Input X-ray" image passes into the network at A2 and produces the output A7, which is flattened into A8. Similarly, on the right, the "Input CT" image enters at B2 and produces the output B7, which is flattened into B8. Finally, the X-ray and CT features are concatenated, so the MIDCAN model processes the two images simultaneously. Compared with ordinary neural networks, attention networks have clear advantages [42], so MIDCAN appears promising for clinical use, where further development could improve the accuracy of medical diagnosis.
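
The two-branch fusion shown in Fig. 15 can be sketched as follows: each branch's final feature map (A7 for X-ray, B7 for CT) is flattened (A8, B8), and the two vectors are concatenated before classification. The convolutional layers and CBAM modules inside each branch are not reproduced; the feature maps are small placeholder arrays, and the helper names are hypothetical.

```python
from typing import List, Union

Nested = Union[float, List["Nested"]]  # e.g. a channels x height x width feature map


def flatten(feature_map: Nested) -> List[float]:
    """Flatten a nested feature map into a 1D vector in row-major order."""
    if isinstance(feature_map, (int, float)):
        return [float(feature_map)]
    vector: List[float] = []
    for item in feature_map:
        vector.extend(flatten(item))
    return vector


def fuse_xray_ct(a7: Nested, b7: Nested) -> List[float]:
    """A7 -> A8 and B7 -> B8 by flattening, then concatenate the two vectors."""
    a8 = flatten(a7)  # X-ray branch features
    b8 = flatten(b7)  # CT branch features
    return a8 + b8    # fused vector passed on to the classification layers


if __name__ == "__main__":
    a7 = [[[0.1, 0.2], [0.3, 0.4]]]  # placeholder 1 x 2 x 2 X-ray feature map
    b7 = [[[0.5]], [[0.6]]]          # placeholder 2 x 1 x 1 CT feature map
    print(fuse_xray_ct(a7, b7))      # [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
```

Concatenation keeps both modalities' features intact and leaves it to the following layers to learn how to weigh them; the two branches may also produce vectors of different lengths.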
To meet the demand for CT image data, [43] presents a CT image synthesis method based on a conditional generative adversarial network. Its CGAN structure generates high-quality, realistic CT images for deep learning-based medical image tasks. By using both a global and a local generator, it efficiently extracts the global and local information in CT images and successfully generates realistic CT images [44].
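
In a conditional GAN, the condition is supplied to both networks: the generator receives it alongside random noise, and the discriminator receives it alongside a real or synthetic image, so realism is judged relative to the condition. The sketch below only assembles these inputs as stacked channels. Treating the condition as a 2D map (for example, a lung or lesion mask) and the helper names are assumptions; the networks in [43] are not reproduced.

```python
import random
from typing import List

Image = List[List[float]]
Channels = List[Image]  # channels x height x width


def stack_channels(*maps: Image) -> Channels:
    """Stack equally sized 2D maps along a new leading channel axis."""
    h, w = len(maps[0]), len(maps[0][0])
    for m in maps:
        if len(m) != h or any(len(row) != w for row in m):
            raise ValueError("all maps must share the same height and width")
    return [[row[:] for row in m] for m in maps]


def generator_input(condition: Image, rng: random.Random) -> Channels:
    """Condition map plus a same-sized Gaussian noise map."""
    noise = [[rng.gauss(0.0, 1.0) for _ in row] for row in condition]
    return stack_channels(condition, noise)


def discriminator_input(ct_image: Image, condition: Image) -> Channels:
    """Real or synthetic CT slice paired with the condition it should match."""
    return stack_channels(ct_image, condition)
```

Pairing the image with its condition in the discriminator is what forces the generator to produce images consistent with the given condition rather than merely realistic ones.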

Figure 15: MIDCAN model

Medical imaging methods, especially chest X-ray and chest CT, have been widely employed in medical diagnosis [45]. Chest X-ray, the most widely used diagnostic X-ray examination in medical practice, is of great significance for early clinical research and diagnosis. Traditionally, quantitative analysis of medical images requires segmenting and extracting the boundary of the target, which is usually done manually by doctors. But during an outbreak such as COVID-19, given the varied shapes of the organs, the difficulty of delineating them, and the large number of images to segment, it is difficult for doctors to handle many patients in a short time. Therefore, researchers are focusing on intelligent segmentation and diagnosis, and hope that the development of IoT and AI will contribute to the treatment of COVID-19 [46,47].

This paper reviews COVID-19 image segmentation and diagnosis techniques and introduces medical image segmentation methods and applications in detail. Using CT and X-ray images, researchers apply different deep learning-based methods to diagnose COVID-19, which illustrates that rapid automatic segmentation and AI diagnosis technologies play an important role in COVID-19 prevention and control. This paper also examines AI and IoT and how these technologies can be applied to medical image segmentation, COVID-19 diagnosis, patient monitoring, and so on. It is hoped that these methods and technologies will help curb the spread of COVID-19.

Intelligent diagnosis enables automatic screening and timely analysis, which reduces the possibility of misdiagnosis and improves radiologists' working efficiency. However, current studies still have many limitations. CT and X-ray images of patients often show lesions with blurred boundaries, large lesion areas, or consolidation, which makes it difficult to segment the images and diagnose the disease accurately. In the future, with the increasing demand for COVID-19 diagnosis, segmentation techniques that use dual CNN channels, deep neural networks such as COVID Seg-Net, or DCN models are expected to improve segmentation accuracy and sensitivity. Experts will also try to apply AI and IoT to the entire COVID-19 segmentation and diagnosis workflow, using artificial intelligence to automate screening, analysis, and report generation so that judgments can be made quickly. In particular, images with small COVID-19 lesions could be automatically flagged and reported. In the next few years, researchers building IoT systems will also explore other approaches, such as using computer modeling (CM) to help develop COVID-19 vaccines, which would give IoT a more important role in medicine. There is a strong belief that AI and IoT can play an increasingly useful role in fighting pneumonia. Experts are studying the potential of machine learning to diagnose diseases more accurately and to develop better and safer vaccines by predicting how viruses are likely to evolve. 5G will also be more widely used, and the security concerns raised by these connected systems will need to be addressed.

Funding Statement: This work was supported by the Open Project of State Key Laboratory of Millimeter Wave, Southeast University, China, under Grant K202218.

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. He, J. L., Luo, L., Luo, Z. D., Lyu, J. X., Ng, M. Y. et al. (2020). Diagnostic performance between CT and initial real-time RT-PCR for clinically suspected 2019 coronavirus disease (COVID-19) patients outside Wuhan, China. Respiratory Medicine, 168, 105980. DOI 10.1016/j.rmed.2020.105980.

2. Tabik, S., Gómez-Ríos, A., Martín-Rodríguez, J. L., Sevillano-García, I., Rey-Area, M. et al. (2020). COVIDGR dataset and COVID-SDNet methodology for predicting COVID-19 based on chest X-ray images. IEEE Journal of Biomedical and Health Informatics, 24(12), 3595–3605. DOI 10.1109/JBHI.2020.3037127.

3. Wang, J., Chen, Z., Lang, X., Wang, S., Chen, Z. (2021). Quantitative evaluation of infectious health care wastes from numbers of confirmed, suspected and out-patients during COVID-19 pandemic: A case study of Wuhan. Waste Management, 126, 323–330. DOI 10.1016/j.wasman.2021.03.026.

4. Elaziz, M. A., Ewees, A. A., Yousri, D., Alwerfali, H. S. N., Al-Qaness, M. A. A. (2020). An improved marine predators algorithm with fuzzy entropy for multi-level thresholding: Real world example of COVID-19 CT image segmentation. IEEE Access, 8, 125306–125330. DOI 10.1109/ACCESS.2020.3007928.

5. Niu, R., Ye, S., Li, Y., Ma, H., Xie, X. et al. (2021). Chest CT features associated with the clinical characteristics of patients with COVID-19 pneumonia. Annals of Medicine, 53(1), 169–180. DOI 10.1080/07853890.2020.1851044.

6. Pan, F., Ye, T., Sun, P., Gui, S., Liang, B. et al. (2020). Time course of lung changes on chest CT during recovery from 2019 novel coronavirus (COVID-19) pneumonia. Radiology, 295(3), 715–721. DOI 10.1148/radiol.2020200370.

7. Wang, S., Kang, B., Ma, J., Zeng, X., Xu, B. et al. (2021). A deep learning algorithm using CT images to screen for corona virus disease (COVID-19). European Radiology, 31, 6096–6104. DOI 10.1007/s00330-021-07715-1.

8. Alghamdi, H. S., Amoudi, G., Elhag, S., Saeedi, K., Nasser, J. et al. (2021). Deep learning approaches for detecting COVID-19 from chest X-ray images: A survey. IEEE Access, 9, 20235–20254. DOI 10.1109/ACCESS.2021.3054484.

9. Wang, G., Liu, X., Li, C., Xu, Z., Ruan, J. et al. (2020). A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images. IEEE Transactions on Medical Imaging, 39(8), 2653–2663. DOI 10.1109/TMI.2020.3000314.

10. Fan, D. P., Zhou, T., Ji, G. P., Zhou, Y., Chen, G. et al. (2020). Inf-Net: Automatic COVID-19 lung infection segmentation from CT images. IEEE Transactions on Medical Imaging, 39(8), 2626–2637. DOI 10.1109/TMI.2020.2996645.

11. Paluru, N., Dayal, A., Jenssen, H. B., Sakinis, T., Yalavarthy, P. K. et al. (2021). Anam-Net: Anamorphic depth embedding-based lightweight CNN for segmentation of anomalies in COVID-19 chest CT images. IEEE Transactions on Neural Networks and Learning Systems, 32(3), 932–946. DOI 10.1109/TNNLS.2021.3054746.

12. Zheng, R., Zheng, Y., Dong, Y. (2021). Improved 3D U-Net for COVID-19 chest CT image segmentation. Scientific Programming, 2021, 1–9. DOI 10.1155/2021/8721464.

13. Ramin, R., Saeid, J., Malika B, G., Amir, A., Mohd, N. A. R. et al. (2021). Lung infection segmentation for COVID-19 pneumonia based on a cascade convolutional network from CT images. BioMed Research International, 2021, 16. DOI 10.1155/2021/5544742.

14. Liang, Z., Huang, J. X., Li, J., Chan, S. (2020). Enhancing automated COVID-19 chest X-ray diagnosis by image-to-image GAN translation. International Conference on Bioinformatics and Biomedicine (BIBM), pp. 1068–1071. Seoul, Korea (South).

15. Zhao, Q., Wang, H., Wang, G. (2021). LCOV-NET: A lightweight neural network for COVID-19 pneumonia lesion segmentation from 3D CT images. 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), pp. 42–45. Nice, France.

16. Yan, Q., Wang, B., Gong, D., Luo, C., You, Z. et al. (2021). COVID-19 chest CT image segmentation network by multi-scale fusion and enhancement operations. IEEE Transactions on Big Data, 7(1), 13–24. DOI 10.1109/TBDATA.2021.3056564.

17. Zhang, C., Yang, G., Cai, C., Xu, Z., Wang, J. et al. (2020). Development of a quantitative segmentation model to assess the effect of comorbidity on patients with COVID-19. European Journal of Medical Research, 25(1), 49. DOI 10.1186/s40001-020-00450-1.

18. Wu, Y. H., Gao, S. H., Mei, J., Xu, J., Fan, D. P. et al. (2021). JCS: An explainable COVID-19 diagnosis system by joint classification and segmentation. IEEE Transactions on Image Processing, 30, 3113–3126. DOI 10.1109/TIP.83.

19. Narin, A. (2020). Detection of COVID-19 patients with convolutional neural network based features on multi-class X-ray chest images. Medical Technologies Congress (TIPTEKNO), 2020, 1–4. DOI 10.1109/TIPTEKNO50054.2020.

20. Zhou, C., Song, J., Zhou, S., Zhang, Z., Xing, J. (2021). COVID-19 detection based on image regrouping and ResNet-SVM using chest X-ray images. IEEE Access, 9, 81902–81912. DOI 10.1109/ACCESS.2021.3086229.

21. Simonyan, K., Zisserman, A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556.

22. Software source code hosting service platform. https://github.com/.

23. Data science, machine learning competitions and sharing platforms. https://www.kaggle.com/.

24. Campos, G. F. C., Mastelini, S. M., Aguiar, G. J., Mantovani, R. G., Barbon, S. et al. (2019). Machine learning hyperparameter selection for contrast limited adaptive histogram equalization. EURASIP Journal on Image and Video Processing, 2019, 59. DOI 10.1186/s13640-019-0445-4.

25. Wang, S. H., Nayak, D. R., Guttery, D. S., Zhang, X., Zhang, Y. D. (2021). COVID-19 classification by CCSHNet with deep fusion using transfer learning and discriminant correlation analysis. Information Fusion, 68, 131–148. DOI 10.1016/j.inffus.2020.11.005.

26. Mehrotra, A., Singh, K. K. (2014). Detection of 2011 tohoku tsunami induced changes in Rikuzentakata using normalized wavelet fusion and probabilistic neural network. Disaster Advances, 7(2), 1–8. DOI 10.1109/isce.2013.6570127.

27. Abbas, A., Abdelsamea, M. M., Gaber, M. M. (2021). Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Applied Intelligence, 51(2), 854–864. DOI 10.1007/s10489-020-01829-7.

28. Luz, E., Silva, P., Silva, R. P., Silva, L., Menotti, D. et al. (2021). Towards an effective and efficient deep learning model for COVID-19 patterns detection in X-ray images. Research on Biomedical Engineering, 2020, 2004–5717. DOI 10.1007/s42600-021-00151-6.

29. Umri, B. K., Akhyari, M. W., Kusrini, K. (2020). Detection of COVID-19 in chest X-ray image using CLAHE and convolutional neural network. 2020 2nd International Conference on Cybernetics and Intelligent System (ICORIS), pp. 1–5. Manado, Indonesia.

30. Degerli, A., Ahishali, M., Yamac, M., Kiranyaz, S., Chowdhury, M. E. H. et al. (2021). COVID-19 infection map generation and detection from chest X-ray images. Health Information Science and Systems, 9, 15. DOI 10.1007/s13755-021-00146-8.

31. Ashour, A. S., Eissa, M., Wahba, M. A., Elsawy, R. A., Mohamed, W. S. et al. (2021). Ensemble-based bag of features for automated classification of normal and COVID-19 CXR images. Biomedical Signal Processing and Control, 68, 102656. DOI 10.1016/j.bspc.2021.102656.

32. Hubel, D. H., Wiesel, T. N. (1968). Receptive fields and functional architecture of monkey striate cortex. The Journal of Physiology, 195, 215–243. DOI 10.1113/jphysiol.1968.sp008455.

33. Wang, S. H., Zhang, Y., Cheng, X., Zhang, X., Zhang, Y. D. (2021). PSSPNN: PatchShuffle stochastic pooling neural network for an explainable diagnosis of COVID-19 with multiple-way data augmentation. Computational and Mathematical Methods in Medicine, 2021, 6633755. DOI 10.1155/2021/6633755.

34. Li, L., Qin, L., Xu, Z., Yin, Y., Wang, X. et al. (2020). Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology, 296(2), 200905. DOI 10.1148/radiol.2020200905.

35. Butt, C., Gill, J., Chun, D., Babu, B. A. (2020). Deep learning system to screen coronavirus disease 2019 pneumonia. Applied Intelligence, 2020(5), 1122–1129. DOI 10.1007/s10489-020-01714-3.

36. Milletari, F., Navab, N., Ahmadi, S. A. (2016). V-Net: Fully convolutional neural networks for volumetric medical image segmentation. 2016 Fourth International Conference on 3D Vision (3DV), pp. 565–571. Stanford, CA, USA. DOI 10.1109/3DV.2016.79.

37. Wang, S. H., Fernandes, S., Zhang, Y. D. (2021). AVNC: Attention-based VGG-style network for COVID-19 diagnosis by CBAM. IEEE Sensors Journal, 2021(99), 1. DOI 10.1109/JSEN.2021.3139626.

38. Yang, G., Li, G., Kong, Y., Pan, T., Wu, J. et al. (2018). Automatic segmentation of kidney and renal tumor in CT images based on 3D fully convolutional neural network with pyramid pooling module. 2018 24th International Conference on Pattern Recognition, pp. 3790–3795. Beijing, China.

39. Wang, J., Bao, Y., Wen, Y., Lu, H., Xiang, Y. et al. (2020). Prior-attention residual learning for more discriminative COVID-19 screening in CT images. IEEE Transactions on Medical Imaging, 39(8), 2572–2583. DOI 10.1109/TMI.42.

40. Wu, X. Y., Huang, H. X., W, W., Huang, Y. X. (2020). The application of artificial intelligence in medical imaging and cancer treatment decision. China School Medicine, 35(3), 235–238. DOI 1001-7062(2021)03-0235-04.

41. Zhang, Y., Zhang, Z., Zhang, X., Wang, S. H. (2021). MIDCAN: A multiple input deep convolutional attention network for covid-19 diagnosis based on chest CT and chest X-ray. Pattern Recognition Letters, 150, 8–16. DOI 10.1016/j.patrec.2021.06.021.

42. Zhang, Y., Zhang, X., Zhu, W. (2021). ANC: Attention network for COVID-19 explainable diagnosis based on convolutional block attention module. Computer Modeling in Engineering & Sciences, 127(3), 1037–1058. DOI 10.32604/cmes.2021.015807.

43. Jiang, Y., Chen, H., Loew, M., Ko, H. (2021). COVID-19 CT image synthesis with a conditional generative adversarial network. IEEE Journal of Biomedical and Health Informatics, 25(2), 441–452. DOI 10.1109/JBHI.2020.3042523.

44. Zhao, H., Shi, J., Wang, X., Qi, X., Jia, J. (2016). Pyramid scene parsing network. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6230–6239. Honolulu, HI, USA.

45. Elkorany, A. S., Elsharkawy, Z. F. (2021). COVIDetection Net: A tailored COVID-19 detection from chest radiography images using deep learning. Optik, 231, 166405. DOI 10.1016/j.ijleo.2021.166405.

46. Zhang, Y., Wang, Q., Yuan, S. Y. H. (2021). Introduction to the special issue on computer modelling of transmission, spread, control and diagnosis of COVID-19. Computer Modeling in Engineering & Sciences, 127(2), 385–387. DOI 10.32604/cmes.2021.016386.

47. Chen, S. W., Gu, X. W., Wang, J. J., Zhu, H. S. (2021). AIoT used for COVID-19 pandemic prevention and control. Contrast Media & Molecular Imaging, 3257035, 23. DOI 10.1155/2021/3257035.

| This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. |