Computer Modeling in Engineering & Sciences

DOI: 10.32604/cmes.2022.019447

ARTICLE

Underwater Diver Image Enhancement via Dual-Guided Filtering

1College of Information Science and Technology, Dalian Maritime University, Dalian, 116026, China

2Department of Computer and Information Science, University of Pennsylvania, Philadelphia, PA 19104, USA

*Corresponding Authors: Weishi Zhang. Email: teesiv@dlmu.edu.cn; Jingchun Zhou. Email: zhoujingchun@dlmu.edu.cn

Received: 25 September 2021; Accepted: 09 November 2021

Abstract: The scattering and absorption of light propagating underwater cause underwater images to suffer from low contrast, color deviation, and loss of detail, which in turn make human posture recognition challenging. To address these issues, this study introduced the dual-guided filtering technique and developed an underwater diver image enhancement method. First, the color distortion of the underwater diver image was corrected using white balance technology to obtain a color-corrected image. Second, guided filtering was applied to the white-balanced image in two ways, producing a detail-enhanced image and a sharpened image. Four feature weight maps of the two images were then calculated and normalized into two weight maps for multi-scale fusion. To better preserve image details, the fused image was histogram-stretched to obtain the final enhanced result. The experimental results showed that this method improved the accuracy of underwater human posture recognition.

Keywords: Multi-scale fusion; image enhancement; guided filter; underwater diver images

Computer vision technology is developing rapidly and is continually being applied to new fields, including human-centered applications such as human posture recognition and human body position detection. Most current human body recognition technologies are based on deep learning [1–3]. Although human body recognition under normal lighting is well developed, recognition results in underwater environments are far from ideal, because the absorption and scattering of light underwater cause a loss of detailed information about the target. Accurate human posture recognition in underwater environments therefore remains challenging.

One way to address the difficulties of underwater human posture recognition is to enhance the underwater image so that it is close to one taken in a terrestrial environment, allowing classical human body recognition methods to work properly. Researchers have proposed various methods for improving underwater images. Depending on whether the physical principles of underwater light propagation are applied, these methods fall into two main categories: image enhancement and image restoration.

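Image restoration relies mainly on the physics of underwater light propagation: an underwater imaging model is built from these physical principles and then inverted to recover the scene. A widely used simplified underwater imaging model is

$$I_c(x) = J_c(x)\,t_c(x) + B_c\bigl(1 - t_c(x)\bigr), \quad c \in \{r, g, b\}$$

where $I_c(x)$ is the observed underwater image at pixel $x$, $J_c(x)$ is the scene radiance to be recovered, $t_c(x)$ is the transmission map, and $B_c$ is the background light.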
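To make the roles of the terms in the simplified model $I_c = J_c t_c + B_c(1 - t_c)$ concrete, the minimal sketch below degrades a clean image and then inverts the model. It assumes a Beer-Lambert transmission $t_c(x) = e^{-\beta_c d(x)}$; the function names `simulate_underwater` and `invert_model` and all coefficient values are illustrative, not taken from this paper.

```python
import numpy as np


def simulate_underwater(J, depth, beta, B):
    """Degrade a clean RGB image with I_c = J_c * t_c + B_c * (1 - t_c).

    J:     H x W x 3 clean image in [0, 1]
    depth: H x W scene distance map (illustrative units)
    beta:  per-channel attenuation coefficients (r, g, b), assumed values
    B:     per-channel background light (r, g, b), assumed values
    """
    beta = np.asarray(beta, dtype=float)
    B = np.asarray(B, dtype=float)
    # Assumed Beer-Lambert transmission: t_c(x) = exp(-beta_c * d(x))
    t = np.exp(-depth[..., None] * beta[None, None, :])
    I = J * t + B[None, None, :] * (1.0 - t)
    return I, t


def invert_model(I, t, B):
    """Recover J_c = (I_c - B_c) / t_c + B_c when t and B are known."""
    B = np.asarray(B, dtype=float)[None, None, :]
    return (I - B) / np.maximum(t, 1e-6) + B


# Red gets the largest coefficient because red light attenuates fastest.
J = np.full((4, 4, 3), 0.8)
depth = np.linspace(0.0, 5.0, 16).reshape(4, 4)
I, t = simulate_underwater(J, depth, beta=[0.6, 0.15, 0.1], B=[0.05, 0.5, 0.6])
J_rec = invert_model(I, t, B=[0.05, 0.5, 0.6])
```

In practice $t_c$ and $B_c$ are unknown and must be estimated, which is exactly where the restoration methods below differ.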

He et al. [4] developed a dehazing algorithm, the dark channel prior (DCP), for outdoor scenes. Their method assumes that in most local patches of a haze-free outdoor image, at least one color channel contains some pixels with very low intensity (close to 0). Chiang et al. [5] combined DCP with a wavelength-dependent compensation algorithm to adapt it to underwater environments, removing haze and correcting color in underwater images.
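The dark channel at the heart of DCP is simple to compute. The sketch below follows the usual formulation (minimum over the color channels, then a minimum filter over a local patch) and the transmission estimate $t(x) = 1 - \omega\,\mathrm{dark}(I/A)$. The 15×15 patch and $\omega = 0.95$ are the defaults reported by He et al. [4]; the function names are hypothetical, and the atmospheric light $A$ is assumed to be already estimated.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img, patch=15):
    """Dark channel: min over R, G, B, then a min filter over a patch x patch window.

    img: H x W x 3 array in [0, 1]; patch must be odd so the output stays H x W.
    """
    if patch % 2 != 1:
        raise ValueError("patch must be odd")
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))  # H x W x patch x patch
    return windows.min(axis=(-2, -1))


def estimate_transmission(img, A, omega=0.95, patch=15):
    """DCP transmission estimate t(x) = 1 - omega * dark(I / A), with A given."""
    normalized = img / np.asarray(A, dtype=float)[None, None, :]
    return 1.0 - omega * dark_channel(normalized, patch)
```

Underwater, the heavily attenuated red channel tends to drive the dark channel toward zero everywhere, which is why plain DCP needs adaptation (as in [5]) before it is useful for underwater images.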

Galdran et al. [6] established a red-channel model to restore underwater images, exploiting the fact that red light attenuates fastest underwater. However, this model does not apply to all images, as some have varying degrees of red channel saturation. Berman et al. [7] considered the spectral profiles of different water types and reduced underwater image restoration to single-image dehazing by estimating the attenuation ratios of the blue-red and blue-green channels. Fattal [8] derived a model that recovers the scene transmission map from the color lines of a hazy scene and described how to generate a complete, regularized transmission map from estimates of noise and dispersion. Yang et al. [9] built an improved model based on dark-channel reflection-illumination decomposition and local backscattered illumination estimation, obtaining images with sharp edge details and improved colors. Peng et al. [10] used depth-dependent color changes to estimate the ambient light, estimated the scene transmission map from the difference between the observed intensity and the ambient light, and introduced adaptive color correction based on these estimates; this method achieved good results on images degraded by light scattering and absorption. Zhou et al. [11] proposed an underwater image restoration method based on a secondary guided transmission map, in which an improved guided filter optimizes the transmission map. Despite these methods, accurately estimating the parameters of the optical imaging model remains difficult because of the complexity of the underwater environment and illumination conditions, so such restoration methods need further optimization.

Numerous studies have attempted to enhance underwater image quality, and image fusion has proven to be an effective approach. In 2012, Ancuti et al. [12] built a fusion-based enhancement model using Laplacian contrast, local contrast, and saliency weights. Their multi-scale pyramid fusion strategy produced images with improved sharpness, contrast, and color distribution. In 2017, Ancuti et al. [13] improved this fusion method by compensating the red channel before fusion and introducing a new weight calculation, which better revealed dark regions, improved overall contrast, and sharpened edges.

Zhou et al. [14] proposed a multi-feature fusion method that fuses a color-corrected image with a guided-filtered image and then enhances edges, obtaining balanced color and improved contrast. Fu et al. [15] established a two-stage model for underwater images that corrects colors and enhances contrast. In another study, Fu et al. [16] applied the Retinex method to underwater image enhancement to obtain images with better visual effects and colors; however, their method is relatively complex and requires multiple iterations. Zhuang et al. [17] proposed a Bayesian Retinex algorithm with multi-order gradient priors on reflectance and illumination. Although most of these enhancement methods improve the quality of underwater images, they do not fundamentally solve underwater image degradation.

Artificial intelligence (AI) is advancing rapidly and has been applied to a wide range of fields, and researchers have begun to incorporate it into underwater image enhancement models as well. To correct the color shift and increase the contrast of underwater images, Li et al. [18] presented an underwater image enhancement network guided by the medium transmission and embedded in multiple color spaces. Guo et al. [19] built a multiscale dense generative adversarial network, with a residual multiscale dense block in the generator, to address color distortion, underexposure, and blur in underwater images. Li et al. [20] used a weakly supervised color transfer algorithm to correct color deviation. To achieve real-time, adaptive underwater enhancement, Chen et al. [21] built a restoration scheme based on a generative adversarial network (GAN-RS), in which a multi-branch discriminator removes underwater noise while preserving image content, and a loss function based on an underwater index trains the underwater noise suppression branch. Li et al. [22] proposed Zero-DCE, which formulates light enhancement as image-specific curve estimation with a deep network. Compared with traditional enhancement and restoration methods, deep learning methods require large datasets of high-quality images; because lossless underwater images are hard to obtain, these methods usually cannot be fully trained and validated. Furthermore, Anwar et al. [23] comprehensively surveyed deep-learning-based underwater image enhancement methods and found that in most cases they lag behind the most advanced traditional methods.

Therefore, this study proposed an underwater diver image enhancement method based on dual-guided filtering. The method corrected the color of the diver image, enhanced its details and contrast, and was suitable for diver images taken in various underwater environments. The main contributions of this study are as follows:

(1) The method improves the quality of a single underwater diver image without requiring a complex image degradation model.

(2) Images obtained through different techniques have different characteristics; the method combines their advantages through fusion to obtain the final enhanced image.

(3) Experiments compared the method with other advanced techniques using both qualitative and quantitative analyses. The results showed that the proposed approach accurately corrected the color of underwater diver images, enhanced detailed information, and improved the effectiveness of human posture recognition.

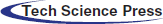

The flow of the method introduced in this paper is shown in Fig. 1. Each stage will be described in detail below.

Figure 1: Summary of the introduced method

The attenuation rate of light underwater depends on its wavelength: generally, the shorter the wavelength, the greater the penetration. Among the red, green, and blue channels, red light, which has the longest wavelength, disappears first. However, in turbid or plankton-rich waters, blue light is also attenuated by the absorption of organic matter, resulting in color distortion in the diver image. The color deviation must therefore be corrected before image enhancement. Because the mean of the red channel is very small, conventional color correction methods such as the gray world algorithm may overcompensate the red channel, resulting in over-saturation of that channel. Since green light has a relatively short wavelength, it is only weakly attenuated underwater and is relatively well preserved [13]. Thus, part of the green channel can be used to compensate for the attenuation of the red and blue channels. According to the gray world hypothesis [24], the mean values of the three channels should be equal. Therefore, the compensation of the red channel should be proportional to the difference between the mean values of the green and red channels.

First, the three channels were normalized according to the dynamic distribution and limited to [0,1]. The compensation coefficient of the red channel was as follows:

where

In turbid or plankton-rich water, the blue channel was greatly attenuated because of absorption by marine organisms. In such cases, the blue channel also needed to be compensated, analogously to the red channel:

where
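The compensation equations did not survive extraction. As a hedged illustration, the sketch below uses the red-channel compensation form of Ancuti et al. [13], $I_{rc}(x) = I_r(x) + \alpha(\bar{I}_g - \bar{I}_r)(1 - I_r(x))\,I_g(x)$, and the same form with $b$ in place of $r$ for the blue channel. Whether this paper uses exactly this expression, and the default $\alpha = 1$, are assumptions; `compensate_channels` is a hypothetical name.

```python
import numpy as np


def compensate_channels(img, alpha=1.0, compensate_blue=False):
    """Compensate weak channels using the well-preserved green channel.

    Assumed form (Ancuti et al. [13]):
        I_rc(x) = I_r(x) + alpha * (mean(I_g) - mean(I_r)) * (1 - I_r(x)) * I_g(x)
    and the same expression with b in place of r for the blue channel.
    img: H x W x 3 RGB array normalized to [0, 1].
    """
    img = np.asarray(img, dtype=float)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    out = img.copy()
    mean_g = g.mean()
    # The (1 - I_r) factor limits the boost where red is already strong,
    # and the I_g factor transfers more where green carries signal.
    out[..., 0] = r + alpha * (mean_g - r.mean()) * (1.0 - r) * g
    if compensate_blue:
        # Only needed in turbid or plankton-rich water, where blue is absorbed.
        out[..., 2] = b + alpha * (mean_g - b.mean()) * (1.0 - b) * g
    return np.clip(out, 0.0, 1.0)
```

When the channel means are already equal, the correction term vanishes, which matches the gray world reasoning above: compensation is proportional to the gap between the green and red (or blue) means.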

After color attenuation compensation, the gray world white balance method was used for color correction. First, the gain coefficients of each channel were obtained as follows:

where
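The gain equation is also missing. Under the standard gray world assumption, each channel is scaled so that its mean matches the average of the three channel means. The sketch below implements that textbook form (`gray_world` is a hypothetical name); the paper's exact gains may differ in detail.

```python
import numpy as np


def gray_world(img):
    """Gray world white balance for an RGB image in [0, 1] (H x W x 3).

    Gain for channel c: k_c = mean_gray / mean_c, where mean_gray is the
    average of the three channel means, so all channels share the same mean
    after correction (before clipping).
    """
    img = np.asarray(img, dtype=float)
    means = img.reshape(-1, 3).mean(axis=0)
    gains = means.mean() / np.maximum(means, 1e-6)  # guard against an empty channel
    return np.clip(img * gains[None, None, :], 0.0, 1.0), gains
```

Running the compensation step first matters: with a near-zero red mean, the red gain $k_r$ would become very large, which is the over-saturation problem described above.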

Underwater diver images are blurred by light scattering. To give the output image a more natural visual effect, adaptive contrast stretching was applied to stretch elements in range

where C represents different channels, and

However, the white balance method can only recover color information; it cannot enhance the edge and detail information. Thus, a fusion-based method was applied to recover the lost details and edge information.

Underwater diver images suffer from light attenuation and thus have low contrast. The white balance method largely alleviates color deviation, but under some complex conditions it is difficult to recover the details of the degraded image. The image must therefore be enhanced to improve its visual quality. He et al. [25] showed that guided filtering has excellent edge-preserving properties and is computationally efficient, running in time linear in the number of pixels.

To avoid altering the color information of the image while enhancing its contrast, the image was converted from the RGB color space to the Lab color space before guided filtering, and only the L channel was processed [14]. Guided filtering can be calculated as (8):

where

The enhanced L channel was determined in (9):

where λ is a dynamic parameter. To enhance the image fully, λ was set to 5.
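Equations (8) and (9) did not survive extraction. The sketch below implements the standard guided filter of He et al. [25] (box-filter means, per-window linear coefficients $a$ and $b$, then averaged coefficients) and assumes that (9) boosts the detail layer as $L_{enh} = q + \lambda(L - q)$, where $q$ is the self-guided output for the L channel. That form of (9), the radius $r = 8$, and $\varepsilon = 0.01$ are assumptions; only $\lambda = 5$ comes from the text. The RGB-to-Lab conversion is left to an image library.

```python
import numpy as np


def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image; windows shrink at borders."""
    h, w = x.shape
    ii = np.zeros((h + 1, w + 1))
    ii[1:, 1:] = x.cumsum(axis=0).cumsum(axis=1)
    y0 = np.clip(np.arange(h) - r, 0, h)
    y1 = np.clip(np.arange(h) + r + 1, 0, h)
    x0 = np.clip(np.arange(w) - r, 0, w)
    x1 = np.clip(np.arange(w) + r + 1, 0, w)
    total = ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]
    return total / ((y1 - y0)[:, None] * (x1 - x0)[None, :])


def guided_filter(I, p, r, eps):
    """Guided filter (He et al.): q = mean(a) * I + mean(b), where per window
    a = cov(I, p) / (var(I) + eps) and b = mean(p) - a * mean(I)."""
    mean_I = box_mean(I, r)
    mean_p = box_mean(p, r)
    cov_Ip = box_mean(I * p, r) - mean_I * mean_p
    var_I = box_mean(I * I, r) - mean_I ** 2
    a = cov_Ip / (var_I + eps)
    b = mean_p - a * mean_I
    return box_mean(a, r) * I + box_mean(b, r)


def enhance_L(L, r=8, eps=0.01, lam=5.0):
    """Detail-boost the Lab L channel (values in [0, 100]).

    Assumed form of (9): L_enh = q + lam * (L - q), with q the self-guided output.
    """
    Ln = L / 100.0                        # rescale so eps is on a [0, 1] intensity scale
    q = guided_filter(Ln, Ln, r, eps)     # edge-preserving base layer
    enhanced = q + lam * (Ln - q)         # amplify the detail layer by lam
    return np.clip(enhanced, 0.0, 1.0) * 100.0
```

Because the base layer preserves strong edges, amplifying only $L - q$ boosts texture without the halos a Gaussian base layer would produce, and filtering L alone leaves the a and b chroma channels untouched.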

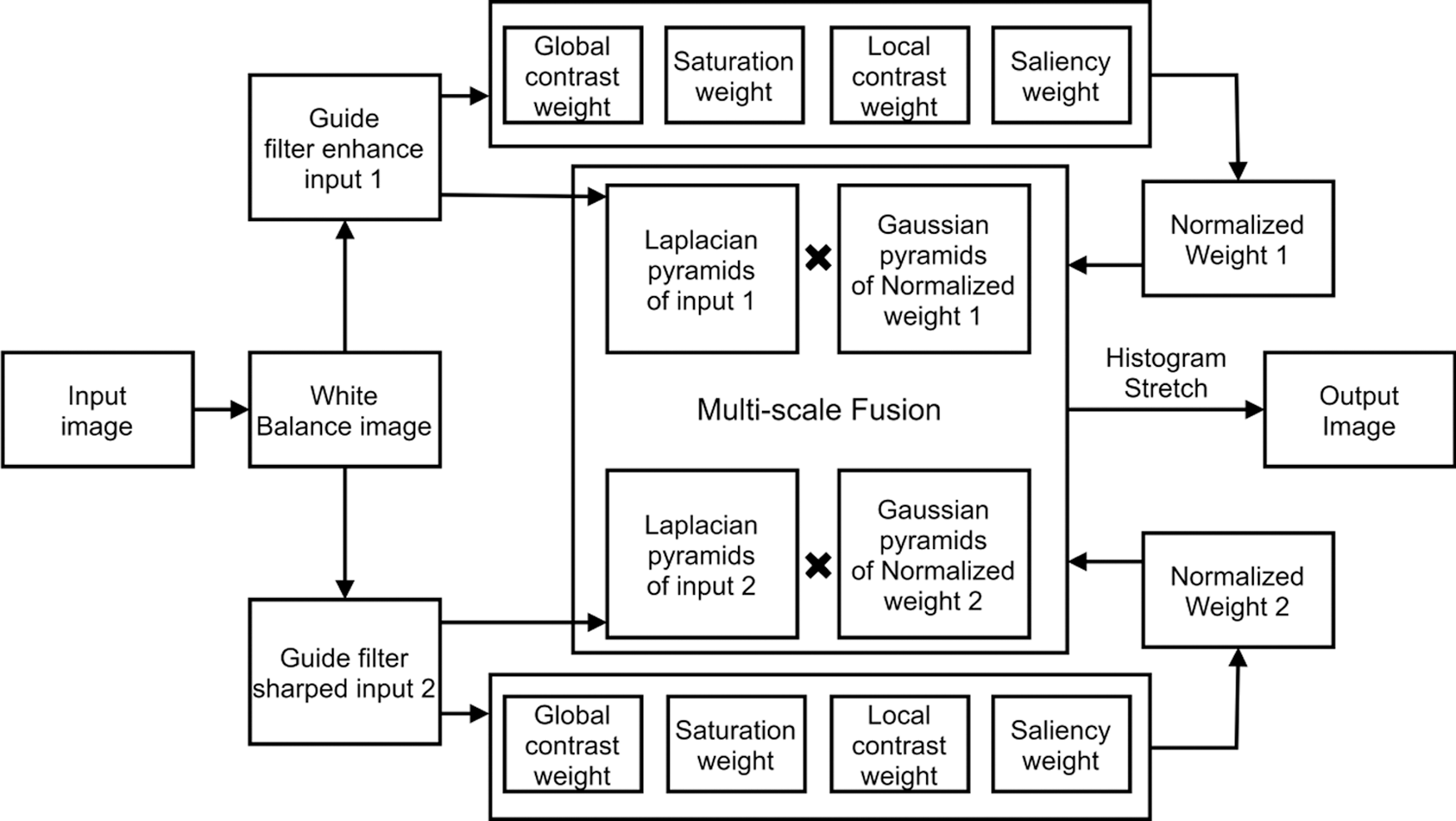

As shown in Fig. 2, the image enhanced by guided filtering retained the color correction from white balancing while showing better contrast and more detail, and its RGB histogram was more evenly distributed.

Figure 2: Results of our image improvement approach: (a) raw image, (b) white balance image, and (c) guided filter image. In the corresponding RGB histogram distribution plot below, the abscissa refers to the pixel values, and the ordinate refers to the normalized frequency

Underwater diver images are usually blurry and lack detail. Images enhanced by guided filtering have greater brightness and contrast, but some details are still lost. Therefore, this study introduced a sharpened version of the underwater image as a second input, to compensate for the loss of detail in the underwater diver image and reduce the degradation caused by light scattering. The unsharp masking method [13, 26] was used to sharpen the image.

In traditional unsharp masking, the original image is first smoothed with a Gaussian kernel, the smoothed image is subtracted from the original, and the difference is added back to the original image. In this study, however, a guided filter was used instead of a Gaussian kernel to smooth the image because of its good edge-preserving properties [25]. Inspired by MSR [27], guided filters at low, medium, and high scales were applied to obtain three smoothed versions of the image, which were averaged to obtain a more accurate smooth image.

In this study, multiple smoothed images were obtained by guided filtering with different regularization coefficients, and each smoothed image was assigned a weight. These images were combined into a final smooth image as follows:

where

To obtain the edge detail image, the smoothed image was subtracted from the input image. Finally, the edge detail image was linearly fused with the input image to obtain the sharpened image. The final sharpened result was formulated as follows:

where s controls the degree of sharpening; based on extensive experiments, s was set to 1.5.
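The weighting equation and the sharpening formula are missing here. The sketch below assumes equal weights (consistent with the averaging described above), three hypothetical regularization values $\varepsilon \in \{10^{-3}, 10^{-2}, 10^{-1}\}$ with a shared radius $r = 8$, and the sharpening form $S = I + s(I - \tilde{I})$, where $\tilde{I}$ is the combined smooth image; only $s = 1.5$ comes from the text. The guided filter helpers repeat the standard formulation of He et al. [25] so that the block runs on its own.

```python
import numpy as np


def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image; windows shrink at borders."""
    h, w = x.shape
    ii = np.zeros((h + 1, w + 1))
    ii[1:, 1:] = x.cumsum(axis=0).cumsum(axis=1)
    y0 = np.clip(np.arange(h) - r, 0, h)
    y1 = np.clip(np.arange(h) + r + 1, 0, h)
    x0 = np.clip(np.arange(w) - r, 0, w)
    x1 = np.clip(np.arange(w) + r + 1, 0, w)
    total = ii[y1][:, x1] - ii[y0][:, x1] - ii[y1][:, x0] + ii[y0][:, x0]
    return total / ((y1 - y0)[:, None] * (x1 - x0)[None, :])


def guided_filter(I, p, r, eps):
    """Standard guided filter: q = mean(a) * I + mean(b)."""
    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    a = (box_mean(I * p, r) - mean_I * mean_p) / (box_mean(I * I, r) - mean_I ** 2 + eps)
    b = mean_p - a * mean_I
    return box_mean(a, r) * I + box_mean(b, r)


def multiscale_smooth(channel, r=8, eps_list=(1e-3, 1e-2, 1e-1), weights=None):
    """Weighted sum of self-guided filter outputs at several regularization levels.
    Larger eps smooths more; eps_list and r are hypothetical, weights default to equal."""
    if weights is None:
        weights = [1.0 / len(eps_list)] * len(eps_list)
    smooth = np.zeros(channel.shape)
    for w, eps in zip(weights, eps_list):
        smooth += w * guided_filter(channel, channel, r, eps)
    return smooth


def sharpen(img, s=1.5, **kwargs):
    """Unsharp masking with a guided-filter base: S = I + s * (I - smooth), per channel."""
    img = np.asarray(img, dtype=float)
    out = np.empty_like(img)
    for c in range(img.shape[2]):
        detail = img[..., c] - multiscale_smooth(img[..., c], **kwargs)  # edge detail image
        out[..., c] = img[..., c] + s * detail
    return np.clip(out, 0.0, 1.0)
```

Flat regions pass through unchanged (the detail image is zero there), while pixels next to an edge are pushed away from the local mean, which is what produces the crisper texture.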

As shown in Fig. 3, the sharpened image reveals more texture than the raw image.

Figure 3: Image sharpened result. From left to right: (a) raw image, (b) sharpened image

After detail enhancement and sharpening, the detail-enhanced and sharpened versions of the white-balanced image were used as the two fusion inputs. To improve the fusion, feature weight maps were extracted from each input. Considering the low contrast and blurred details of underwater images, four weights were selected for each input: global contrast weight, local contrast weight, saturation weight, and saliency weight. The weight maps increase the contribution of pixels with higher weight values to the resulting image [13].

A Laplacian filter highlights image edges and texture to a certain extent. In this study, we denoted
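The definition that follows "we denoted" did not survive extraction. A common choice, used by Ancuti et al. [12, 13], is the absolute response of a Laplacian filter on the luminance channel. The sketch below assumes that form, a 4-neighbour Laplacian kernel, and the mean of R, G, and B as a stand-in for luminance; `laplacian_weight` is a hypothetical name.

```python
import numpy as np


def laplacian_weight(img):
    """Global contrast weight: |4-neighbour Laplacian| of a luminance proxy.

    img: H x W x 3 in [0, 1]. Using the RGB mean as luminance is an assumption.
    """
    lum = np.asarray(img, dtype=float).mean(axis=2)
    p = np.pad(lum, 1, mode="edge")
    # up + down + left + right - 4 * centre
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return np.abs(lap)
```

Uniform areas get zero weight, while edges and fine texture get large weights, so the fusion favors whichever input is sharper at each pixel.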

The saturation weight lets the fusion process favor highly saturated regions, which carry more color information, but it can also reduce contrast to some extent. Therefore, global and local contrast weights must also be introduced to counteract low contrast.

To compute the saturation weight, the image was first converted to Lab space, and then the standard deviation of the R, G, and B channels with respect to the L channel was calculated at each pixel:

where
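The saturation equation is missing, but the description above corresponds to the per-pixel deviation $W_{sat} = \sqrt{\tfrac{1}{3}\left[(R-L)^2 + (G-L)^2 + (B-L)^2\right]}$, the form used by Ancuti et al. [13]. In the sketch below, L must be on the same [0, 1] scale as RGB (for example, Lab L divided by 100); when L is not supplied, Rec. 601 luma is used as a stand-in, which is an assumption rather than a true Lab conversion.

```python
import numpy as np


def saturation_weight(img, L=None):
    """Saturation weight: per-pixel std of R, G, B around the luminance L.

    W_sat = sqrt(((R - L)^2 + (G - L)^2 + (B - L)^2) / 3)
    img: H x W x 3 in [0, 1]; L: H x W luminance on the same [0, 1] scale.
    """
    img = np.asarray(img, dtype=float)
    if L is None:
        L = img @ np.array([0.299, 0.587, 0.114])  # stand-in for Lab L / 100
    diff = img - np.asarray(L, dtype=float)[..., None]
    return np.sqrt((diff ** 2).mean(axis=2))
```

Gray pixels receive zero weight and strongly colored pixels receive the most, matching the intent of favoring highly saturated regions.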

The saliency weight was used to highlight objects in the underwater scene that are more easily perceived by human vision. Fu et al. [28] developed a cluster-based co-saliency detection algorithm that obtains an accurate saliency map by integrating contrast and spatial cues.

The contrast cue mainly represents the uniqueness of visual features within a single image and is calculated as follows:

where $n_i$ is the number of pixels in cluster $C_i$, $N$ is the total number of pixels, and $u_k$ is the center of cluster $C_k$. The L2 norm is used to measure distances in feature space.
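Reading the definitions above, the contrast cue weights the feature-space distance from cluster $k$ to every other cluster by that cluster's share of pixels: $w^c(k) = \sum_{i \ne k} \frac{n_i}{N}\lVert u_k - u_i\rVert_2$. The sketch below assumes cluster labels are already available (the clustering step itself, such as k-means on color features, is omitted), and `contrast_cue` is a hypothetical name.

```python
import numpy as np


def contrast_cue(features, labels, k):
    """Cluster-level contrast cue: w_c(k) = sum_{i != k} (n_i / N) * ||u_k - u_i||_2.

    features: N x D pixel feature vectors (e.g. colors); labels: length-N cluster
    ids in range(k). Every cluster is assumed to be non-empty.
    """
    labels = np.asarray(labels)
    features = np.asarray(features, dtype=float).reshape(len(labels), -1)
    N = len(labels)
    centers = np.stack([features[labels == j].mean(axis=0) for j in range(k)])
    counts = np.array([np.sum(labels == j) for j in range(k)])
    # Pairwise L2 distances between cluster centres; the diagonal is zero,
    # so summing over all i is the same as summing over i != k.
    dists = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=2)
    return (dists * (counts / N)[None, :]).sum(axis=1)
```

A small cluster that differs from the large ones receives the highest cue: with three pixels at 0 and one at 1, the two clusters score 0.25 and 0.75.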

The spatial cue is based on the assumption that the central area of an image attracts more attention than other areas:

where
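The spatial-cue equation is missing. Following the idea of Fu et al. [28], the sketch below averages, over the pixels of each cluster, a Gaussian of each pixel's distance to the image center. The coordinate normalization and the bandwidth `sigma = 0.25` are hypothetical choices, not values from this paper.

```python
import numpy as np


def spatial_cue(labels_2d, k, sigma=0.25):
    """Cluster-level spatial cue: mean of a Gaussian of each pixel's distance to
    the image centre, over the pixels of each cluster. Coordinates are scaled by
    image height and width; sigma is a hypothetical bandwidth on that scale."""
    labels_2d = np.asarray(labels_2d)
    h, w = labels_2d.shape
    ys, xs = np.mgrid[0:h, 0:w]
    dy = (ys - (h - 1) / 2.0) / h
    dx = (xs - (w - 1) / 2.0) / w
    g = np.exp(-(dx ** 2 + dy ** 2) / (2.0 * sigma ** 2))
    # Empty clusters get a cue of 0 rather than a NaN mean.
    return np.array([g[labels_2d == j].mean() if np.any(labels_2d == j) else 0.0
                     for j in range(k)])
```

In a co-saliency pipeline of this kind, the contrast and spatial cues are typically combined per cluster (for example, multiplied) before being mapped back to pixels.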

This study also introduced a local contrast weight [13] to address the loss of local detail. The local contrast weight highlights areas of the input image with larger local variations in pixel value and strengthens the transitions between lighter and darker areas. Letting

where
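The local contrast definition is cut off. In Ancuti et al. [13], it is the absolute difference between the luminance and a low-pass-filtered copy of it. The sketch below assumes that form and substitutes a separable 5-tap binomial kernel, a common Gaussian approximation, for the low-pass filter; the names are hypothetical.

```python
import numpy as np

BINOMIAL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def lowpass(x):
    """Separable 5-tap binomial blur (a Gaussian approximation) with edge padding."""
    h, w = x.shape
    p = np.pad(x, 2, mode="edge")
    rows = sum(BINOMIAL[i] * p[:, i:i + w] for i in range(5))     # horizontal pass
    return sum(BINOMIAL[i] * rows[i:i + h, :] for i in range(5))  # vertical pass


def local_contrast_weight(lum):
    """Local contrast weight: |L - lowpass(L)| on a luminance map in [0, 1]."""
    lum = np.asarray(lum, dtype=float)
    return np.abs(lum - lowpass(lum))
```

Unlike the Laplacian weight, this measure responds to any departure from the local mean, so it also rewards the light-dark transitions described above.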

A multi-scale fusion method was introduced to take full advantage of these four weight maps: the saturation weight map balances color differences, the global contrast weight map improves overall contrast, and the saliency weight map highlights prominent objects that have lost saliency in the underwater image. Finally, the local contrast weight refines image details.

The four weight maps were merged into a single weight map W and normalized, and the fused image was calculated as:

where
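The merging and normalization equations are missing. The sketch below assumes the four maps of each input are summed into $W_k$ and then normalized with the regularized form of Ancuti et al. [13], $\bar{W}_k = (W_k + \delta)/(\sum_k W_k + K\delta)$ with $\delta = 0.1$, so that the maps sum to 1 at every pixel. It then performs the single-scale blend $R(x) = \sum_k \bar{W}_k(x) I_k(x)$; the function names are hypothetical.

```python
import numpy as np


def normalize_weights(weight_sets, delta=0.1):
    """Aggregate and normalize per-input weight maps.

    weight_sets[k] is a list of H x W maps (global contrast, local contrast,
    saturation, saliency) for input k. They are summed into W_k, then
    W_k_bar = (W_k + delta) / (sum_k W_k + K * delta), so the maps sum to 1 per pixel.
    """
    W = np.stack([np.sum(maps, axis=0) for maps in weight_sets])  # K x H x W
    K = W.shape[0]
    return (W + delta) / (W.sum(axis=0) + K * delta)


def naive_fusion(inputs, norm_weights):
    """Single-scale blend R(x) = sum_k W_k_bar(x) * I_k(x); prone to halo artifacts."""
    return sum(w[..., None] * img for w, img in zip(norm_weights, inputs))
```

The $\delta$ term keeps every input contributing a little even where all its weights are zero, which avoids division by zero in flat regions.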

To avoid the artifacts that may appear in simple linear weighted fusion, Laplacian pyramid fusion was introduced: each input image was decomposed into a multi-scale Laplacian pyramid, and each normalized weight map into a Gaussian pyramid. Finally, the Laplacian pyramids of the inputs and the Gaussian pyramids of the weights were fused at each scale.

First, the normalized weight map was obtained as follows:

where

Each input image was decomposed into a Laplacian pyramid and its normalized weight map into a Gaussian pyramid, and the two were fused at each scale as follows:

where

To obtain the final output image, the fused pyramid was collapsed by upsampling each level and summing, as expressed in (19):

where
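The pyramid equations were lost in extraction. The sketch below shows the standard scheme the text describes: at each level $l$, $R_l = \sum_k G_l\{\bar{W}_k\}\,L_l\{I_k\}$, followed by collapsing the fused pyramid by repeated upsampling and addition. It assumes the weight maps are already normalized to sum to 1 per pixel; the 5-tap binomial kernel, nearest-neighbour upsampling followed by a blur, and four levels are illustrative choices, and all names are hypothetical.

```python
import numpy as np

KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def blur(x):
    """Separable 5-tap binomial blur with edge padding (H x W or H x W x C)."""
    p = np.pad(x, [(2, 2), (2, 2)] + [(0, 0)] * (x.ndim - 2), mode="edge")
    h, w = x.shape[:2]
    tmp = sum(KERNEL[i] * p[:, i:i + w] for i in range(5))
    return sum(KERNEL[i] * tmp[i:i + h] for i in range(5))


def down(x):
    return blur(x)[::2, ::2]


def up(x, shape):
    """Nearest-neighbour upsample to `shape` (H, W), then blur."""
    y = np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)[:shape[0], :shape[1]]
    return blur(y)


def gaussian_pyramid(x, levels):
    pyr = [x]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr


def laplacian_pyramid(x, levels):
    g = gaussian_pyramid(x, levels)
    # Band-pass levels plus the coarsest Gaussian level as the residual.
    return [g[l] - up(g[l + 1], g[l].shape[:2]) for l in range(levels - 1)] + [g[-1]]


def pyramid_fusion(inputs, norm_weights, levels=4):
    """R_l = sum_k G_l{W_k_bar} * L_l{I_k}, then collapse the fused pyramid."""
    fused = None
    for img, w in zip(inputs, norm_weights):
        lp = laplacian_pyramid(img, levels)
        gp = gaussian_pyramid(w, levels)
        terms = [g[..., None] * l for g, l in zip(gp, lp)]
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    out = fused[-1]
    for lev in reversed(fused[:-1]):
        out = lev + up(out, lev.shape[:2])
    return out
```

Blending each frequency band with a correspondingly smoothed weight map is what suppresses the halos that the single-scale blend produces at sharp weight transitions.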

After these steps, the color and contrast of the fused image were greatly improved, but some areas were still too dark or too bright. To obtain a high-quality image with more reasonable brightness, these extremes were removed: in each channel, pixel values between the 0.1% and 99.9% quantiles were stretched to [0,255], and values outside this range were clipped.

where M represents an array of pixels in a certain channel sorted in ascending order, C refers to the number of image pixels, T refers to a threshold (T = 0.01), and
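The stretching equation is missing, but the description (sort each channel's pixels in ascending order and map the values at the lower and upper quantiles to 0 and 255) can be sketched as below. The default threshold follows the 0.1% / 99.9% quantiles stated in the text; the threshold is exposed as the parameter `T`, and the function name is hypothetical.

```python
import numpy as np


def stretch_channels(img, T=0.001):
    """Per-channel quantile stretch to [0, 255].

    For each channel, pixel values are sorted in ascending order (the array M in
    the text); the values at ranks floor(n*T) and n-1-floor(n*T) map to 0 and 255,
    and values outside that range are clipped.
    """
    img = np.asarray(img, dtype=float)
    out = np.empty_like(img)
    for c in range(img.shape[2]):
        M = np.sort(img[..., c].ravel())
        n = M.size
        k = int(np.floor(n * T))
        lo, hi = M[k], M[n - 1 - k]
        scale = max(hi - lo, 1e-12)  # a flat channel maps to 0 instead of dividing by zero
        out[..., c] = np.clip((img[..., c] - lo) / scale * 255.0, 0.0, 255.0)
    return out
```

Using quantiles rather than the raw minimum and maximum keeps a handful of extreme pixels (specular highlights, noise) from compressing the rest of the range.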

6 Experiment Results and Discussion

This section compares the proposed method with existing underwater image enhancement and restoration approaches, both qualitatively and quantitatively. The comparison methods were UDCP [29], RBE [16], TSP [15], IBLA [10], ULAP [30], GDCP [31], RGHS [26], UWCNN [32], and WaterNet [33]. All experiments were run in MATLAB R2020b on a Windows 10 PC with an AMD Ryzen 5 4600U processor.

A total of 890 images from the widely used UIEB dataset [33] were used to verify the proposed method. The dataset was divided into two categories: Data A, containing images of underwater divers, and Data B, containing images of other underwater scenes.

The experimental results were first analyzed qualitatively, and objective metrics were then used for quantitative analysis. Finally, the average value of each metric over all images in the dataset was calculated, and box plots were drawn to compare the proposed approach with the other methods.

For qualitative analysis, eight images were selected from the two categories in the dataset.

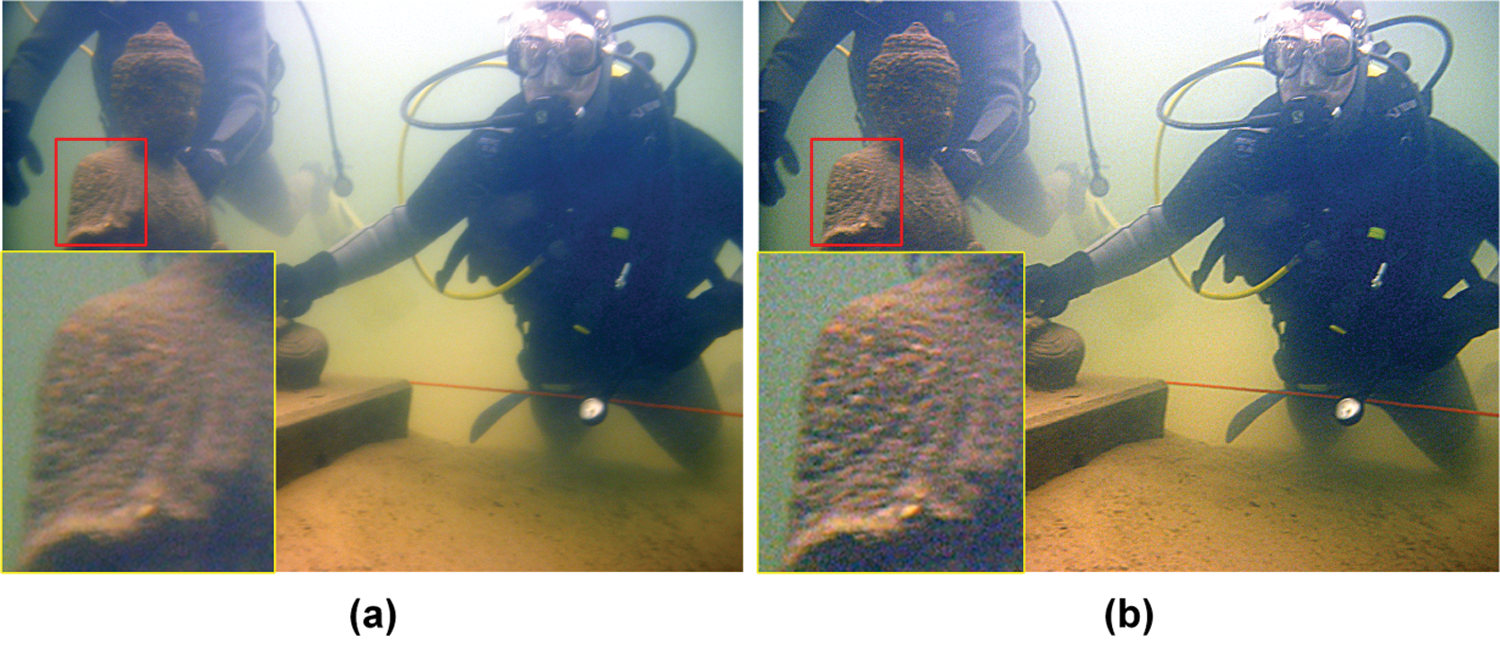

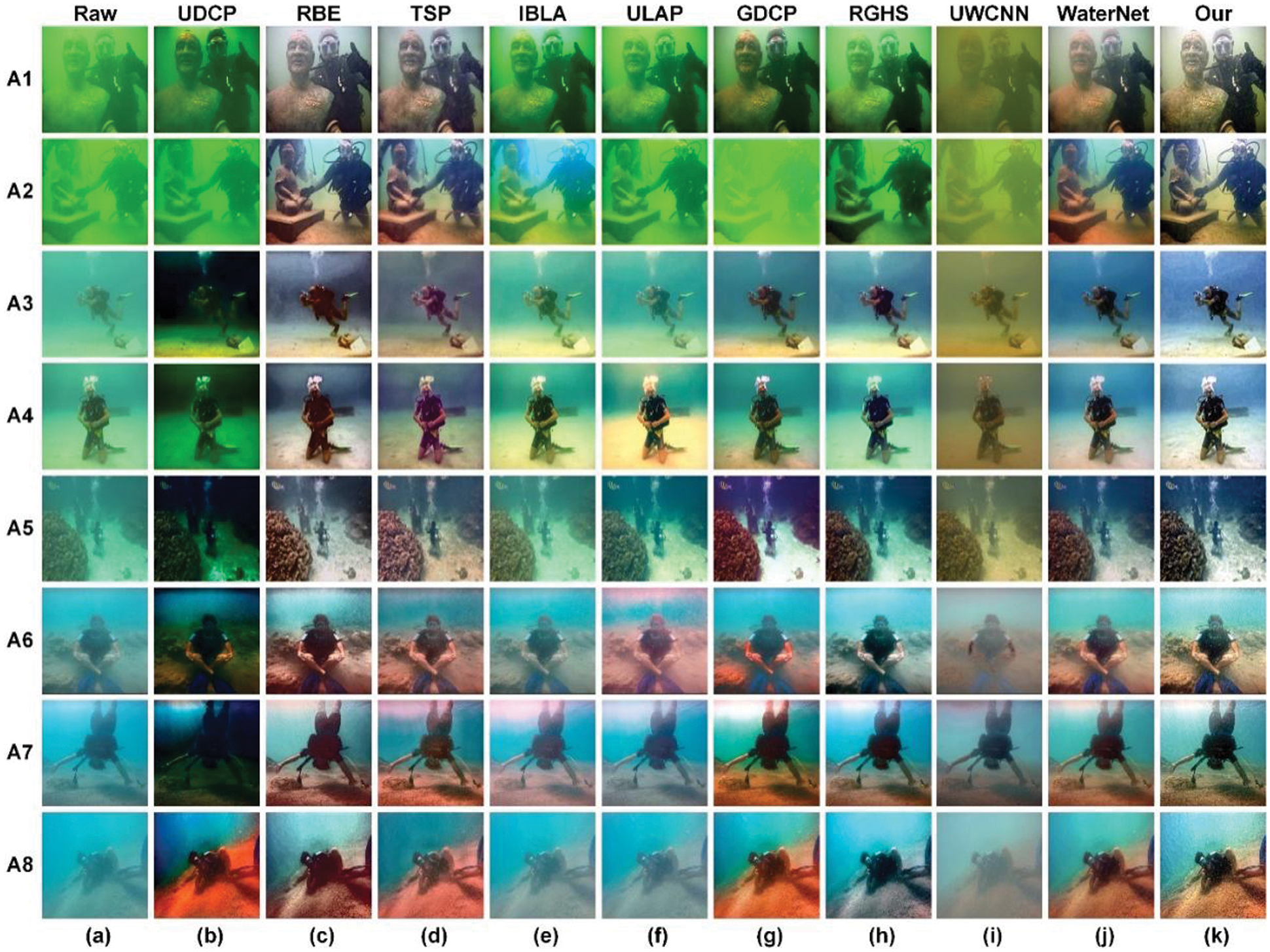

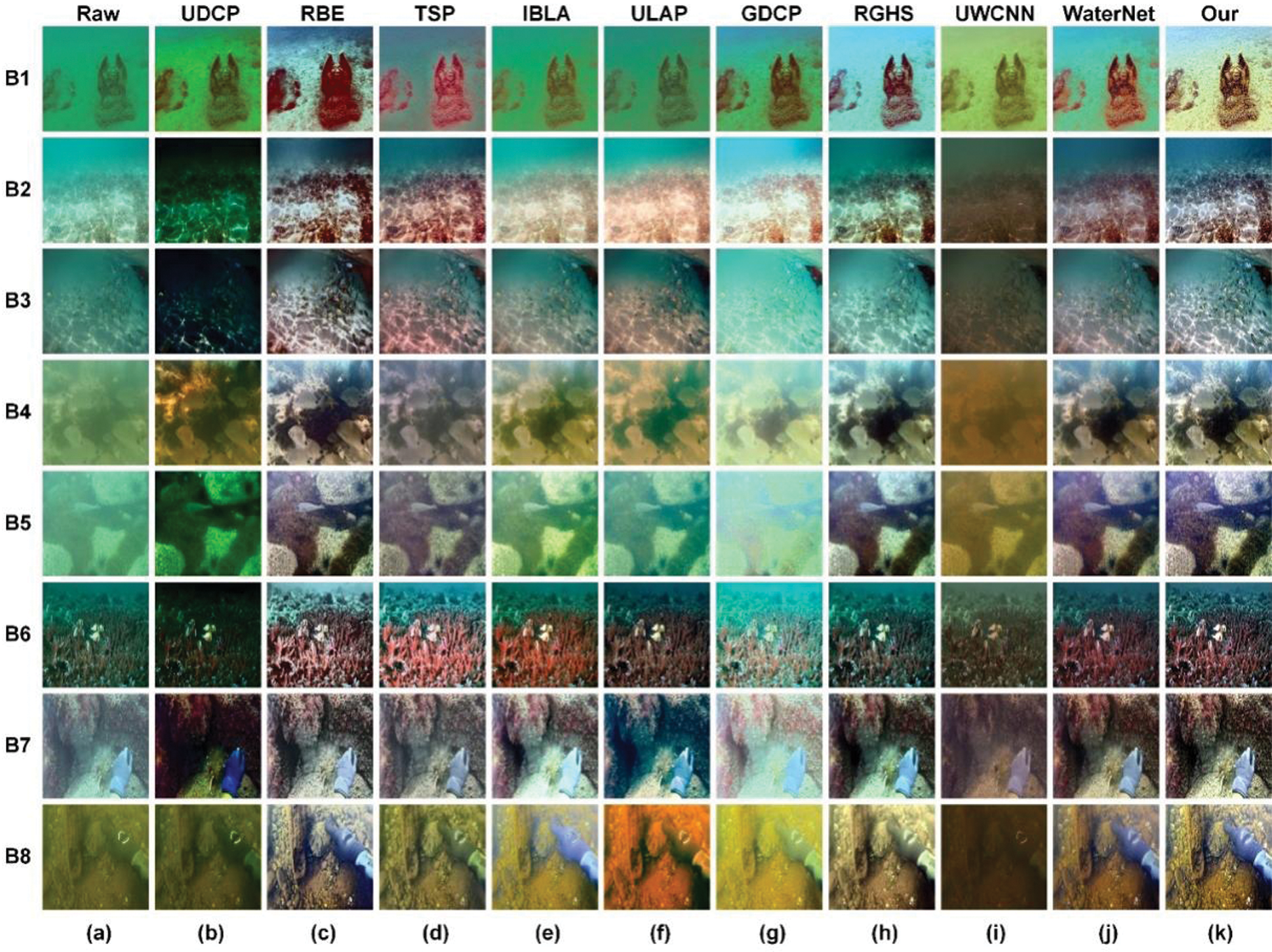

Figs. 4 and 5 show the resulting images obtained by different imaging improvement methods.

UDCP applies DCP to the blue-green channels to estimate the transmission map but does not consider the attenuation of the red channel, so its results are prone to color deviation, and the overall brightness of its images is low. For example, a green color cast appeared in images A3, A4, and B1, while a red color cast appeared in images A8, B4, and B7. RBE first performs color correction and then addresses underexposure and blur based on Retinex. However, because the red channel is oversaturated, its images generally have a reddish cast, as in images B1 and B2. TSP adopts a two-step approach that addresses color deviation and low contrast through pixel value transformation. However, because it cannot select an accurate target image when adjusting contrast, it improves contrast only slightly and oversaturates the red channel, as seen in images A6, A7, A8, B1, B2, and B3. IBLA restores the image by estimating the transmission map and background light. However, these estimates are inaccurate, so color correction and contrast enhancement are limited, and its results show varying degrees of color deviation. ULAP estimates the background light based on the maximum intensity difference between the red and blue-green channels. However, because its improvement of the red and blue channels is limited, the color degradation of its output is not completely removed: A1, A2, and B1 showed a green cast; A7 and A8 showed a blue shift; and A6, B2, and B8 showed a red cast. The contrast of these images was also low, because ULAP has difficulty accurately estimating the background light in images with a severe green cast, resulting in a poor restoration effect.

Figure 4: The qualitative evaluation result of eight selected images from Data A: (a) Raw images, (b) UDCP, (c) RBE, (d) TSP, (e) IBLA, (f) ULAP, (g) GDCP, (h) RGHS, (i) UWCNN, (j) WaterNet, (k) Our method

Figure 5: The qualitative evaluation result of eight selected images from Data B: (a) Raw images, (b) UDCP, (c) RBE, (d) TSP, (e) IBLA, (f) ULAP, (g) GDCP, (h) RGHS, (i) UWCNN, (j) WaterNet, (k) Our method

Like UDCP, GDCP estimates the ambient light from depth-dependent color changes and then obtains the scene transmission map. However, this method is based on the image formation model (IFM) and does not apply to all underwater environments. Furthermore, although it improves contrast and brightness to a certain extent, it can introduce color deviations, as in images A2, B2, and B5. RGHS first equalizes the blue and green channels and then redistributes the histogram using dynamic parameters related to the original image and underwater wavelength attenuation. Although it enhances contrast, it does not recover detailed information well because it ignores the relationship between image degradation and scene depth, resulting in serious color deviation, as in images A1, A2, and B1. UWCNN applies deep learning to underwater image enhancement, but combining the network with a physical model did not produce ideal results: severe color deviation remained, and low contrast and low brightness were not resolved. WaterNet is a CNN trained on a specially constructed dataset, but because real reference images of underwater scenes are lacking, the backscatter in its results is difficult to eliminate. Although it resolved color deviation, it did not restore details in some images, such as A1, A2, and B7.

In contrast, the proposed method introduced no color cast, over-enhancement, or under-enhancement, producing images with natural color, good contrast and brightness, and clearer texture details.

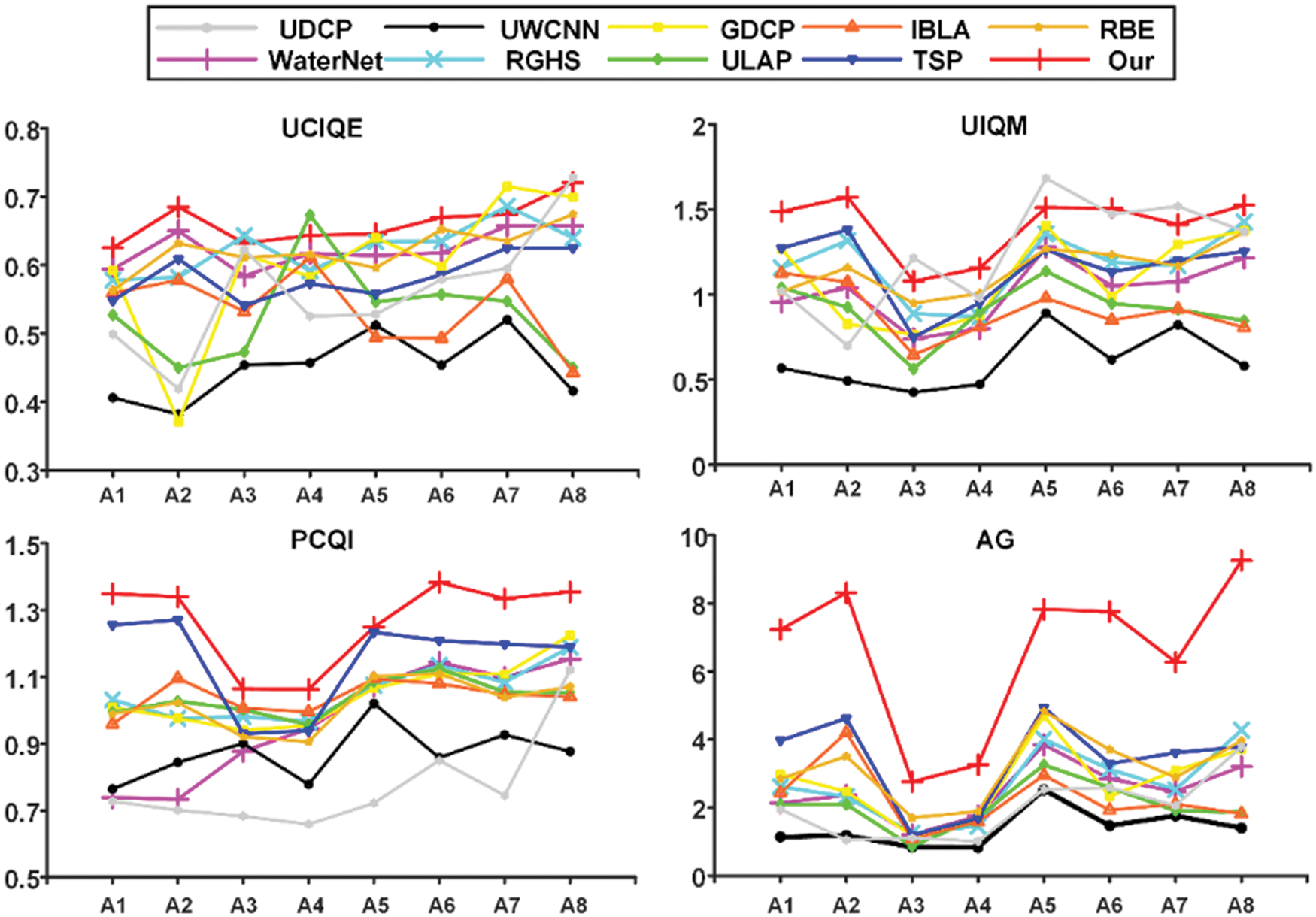

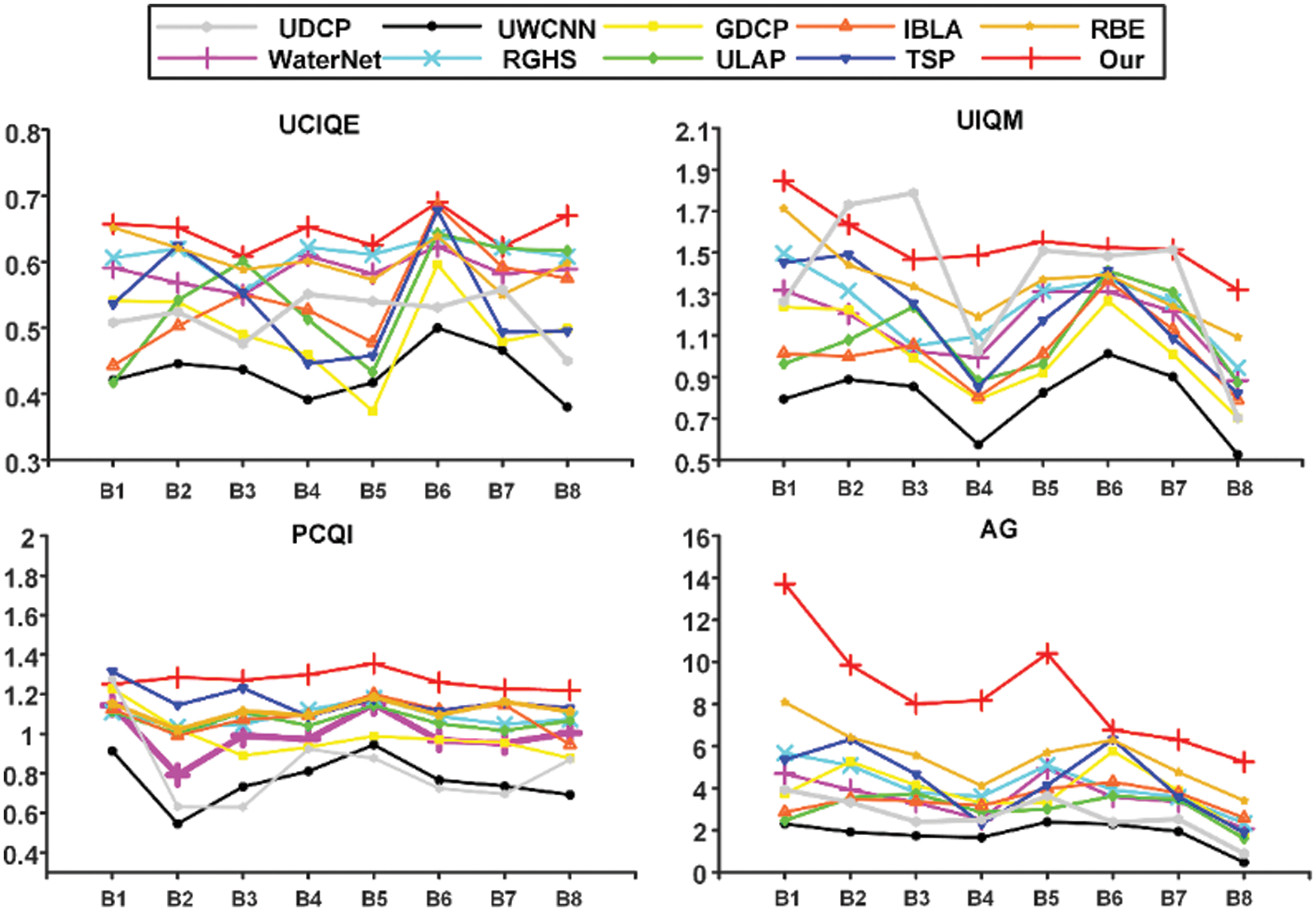

Evaluation of underwater images usually focuses on whether color, contrast, and overall visual quality have improved, which calls for objective measures. This section compares the proposed approach with the other methods using four objective metrics: average gradient (AG), underwater image quality measure (UIQM), underwater color image quality evaluation (UCIQE), and patch-based contrast quality index (PCQI). UIQM and UCIQE are designed specifically for underwater images and mainly evaluate color richness, clarity, and contrast. PCQI measures image contrast, while AG mainly reflects image sharpness; higher PCQI and AG values indicate better contrast and more detailed texture. The objective evaluation results for the images in Figs. 4 and 5 are plotted as line charts in Figs. 6 and 7, where the abscissa is the image index and the ordinate is the metric value of each method.
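Of the four metrics, AG is simple enough to show directly. The sketch below uses one common definition, the mean of $\sqrt{(\Delta_x^2 + \Delta_y^2)/2}$ over forward differences, averaged over channels for color input. Published AG variants differ slightly in the gradient operator and normalization, so this is not necessarily the exact implementation behind the reported numbers; UIQM, UCIQE, and PCQI are not shown.

```python
import numpy as np


def average_gradient(img):
    """Average gradient (AG): mean of sqrt((dx^2 + dy^2) / 2) using forward differences.

    img: H x W (grayscale) or H x W x C; for color input the per-channel AG values
    are averaged. Larger values indicate sharper images with more texture.
    """
    img = np.asarray(img, dtype=float)
    if img.ndim == 3:
        return float(np.mean([average_gradient(img[..., c]) for c in range(img.shape[2])]))
    dx = img[:-1, 1:] - img[:-1, :-1]   # horizontal differences
    dy = img[1:, :-1] - img[:-1, :-1]   # vertical differences
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))
```

Because AG rewards any intensity variation, it also rises with noise, which is one reason it is reported alongside contrast- and color-oriented metrics rather than alone.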

Figure 6: Results of objective evaluation of Fig. 4

Figure 7: Results of objective evaluation of Fig. 5

As the line charts show, the UCIQE, UIQM, PCQI, and AG values of the proposed approach were higher than those of the other approaches in most cases. For UCIQE, our value was lower than that of RGHS for A3, ULAP for A4, and GDCP for A7. UCIQE is a linear combination of chroma, saturation, and contrast terms, so an excessively high value of any one term yields a high UCIQE score. As seen in Fig. 4, RGHS, ULAP, and GDCP produced oversaturated images with color deviation; because UCIQE does not fully account for color deviation and artifacts [33], their UCIQE values were high. For A3, A5, A7, B2, and B3, the UIQM value of the proposed approach was slightly lower than that of UDCP, although, as Figs. 4 and 5 show, UDCP produced low brightness and contrast as well as a serious color cast. For B1, the PCQI value of the proposed method was slightly lower than those of TSP and UDCP, while for all other images it was higher than those of the other methods. The AG values of the proposed approach were higher than those of the other approaches on all experimental images. Thus, the contrast and detail enhancement in this study effectively enhanced valuable information and improved contrast.

To evaluate the method further, the average value of each objective metric over all underwater images in the UIEB dataset was calculated, and box plots (Fig. 8) were drawn to show the distribution of each metric for the different methods. The average values are listed in Table 1, where the best results are shown in bold and underlined. Compared with the other advanced methods, our method ranked first in UCIQE, PCQI, and AG and second in UIQM, only 0.07 below the top-ranked UDCP.

Figure 8: Box plot of four objective metrics in UIEB dataset. M1: UDCP, M2: RBE, M3: TSP, M4: IBLA, M5: ULAP, M6: GDCP, M7: RGHS, M8: UWCNN, M9: WaterNet, M10: Our

In the box plots in Fig. 8, the central mark on each box indicates the median, and the bottom and top edges of the box indicate the lower and upper quartiles, respectively. The whiskers extend to the minimum and maximum values not considered outliers, and red marks represent outliers. The box plots show that for UCIQE and UIQM, the median and maximum values of our method are second only to those of UDCP and higher than those of the other methods, and the results are relatively stable. For PCQI and AG, our approach performs better than all other approaches.

The comparison thus shows that the proposed method achieved good results on all four objective metrics. Our method first compensated the red channel and applied gray world white balancing to alleviate color distortion, and then obtained detail-enhanced and sharpened images using guided filtering. Finally, these images were fused at multiple scales and histogram-stretched to obtain the enhanced result. The brightness, saturation, and contrast of the resulting images were balanced, with no obvious color cast.

Our method therefore produced images with significantly improved clarity, contrast, chroma, and brightness, and it scored consistently well on all objective evaluation indices. It is suitable for underwater diver image enhancement in most environments.

Human posture recognition is the basis of most human-centered computer vision applications. This section examines how enhancing underwater diver images affects human posture recognition. The method of Cao et al. [1] was used to detect the two-dimensional postures of multiple people in each image. This deep-learning method uses Part Affinity Fields (PAFs) to associate body parts with individuals in the image; it runs in real time, maintains high accuracy, and accurately recognizes human posture under normal lighting.

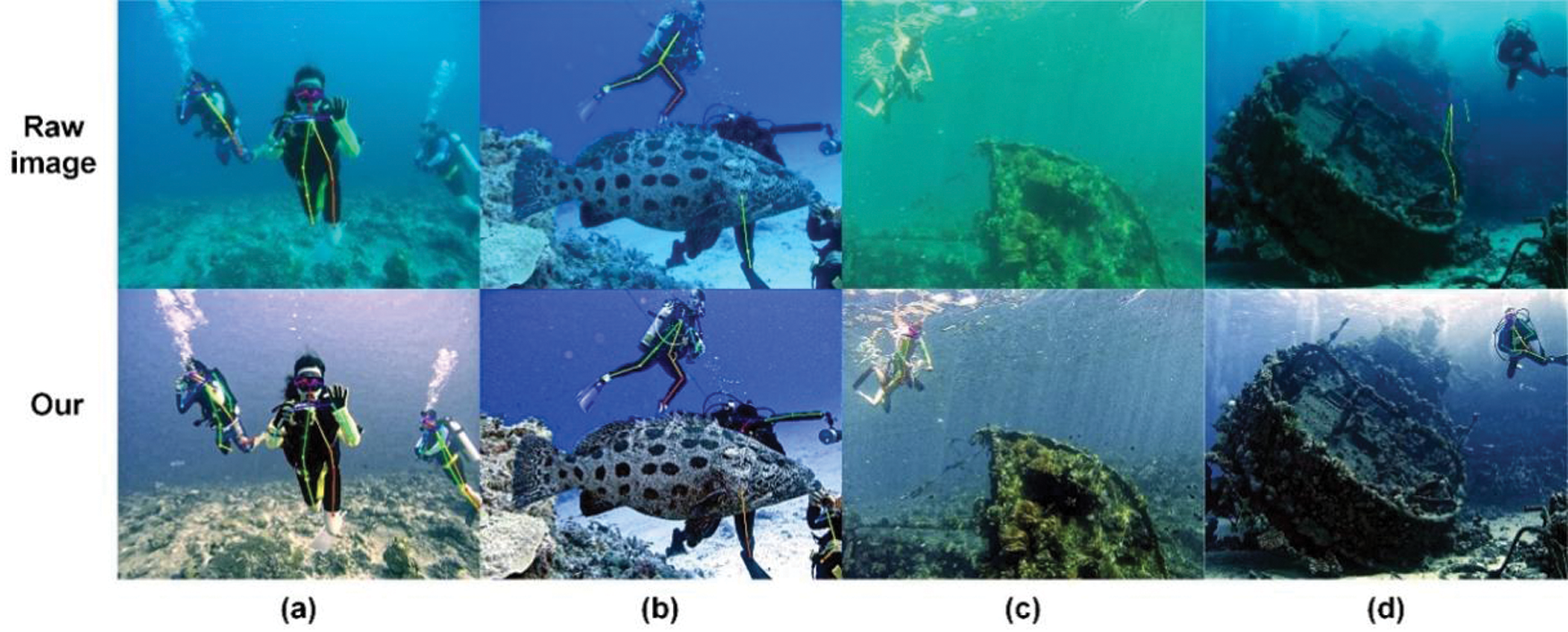

As shown in Figs. 9a and 9b, only some divers could be identified in the raw images, and the legs and arms of some could not be identified accurately. In contrast, all divers were completely identified in the enhanced images, and the recognition results were more accurate. As seen in Figs. 9c and 9d, the divers could not be identified in the raw images, and in Fig. 9d other objects were misidentified as divers. This is because the raw images, affected by the underwater environment, had color deviation and lost edge information, whereas the enhanced images were closer to images taken in normal lighting. These results showed that the proposed approach markedly improved posture recognition of underwater divers.

Figure 9: Experimental results of underwater human posture recognition: (a) Scene 1, (b) Scene 2, (c) Scene 3, (d) Scene 4.

This paper presents an underwater diver image enhancement approach based on dual-guided filtering. First, color correction was performed to remove the color cast. Second, guided filtering was used to enhance details and to sharpen the image, and multi-scale fusion was performed to enhance edges and contrast while avoiding artifacts. Finally, overly bright and overly dark areas were removed by histogram stretching. Our method significantly alleviated the color distortion of underwater diver images, enhanced contrast and detail, produced images resembling those taken in normal lighting, and highlighted the edges of divers, making them more identifiable and improving underwater human posture recognition. Extensive experiments against state-of-the-art approaches showed that our approach scores higher on most objective metrics. It is robust for underwater diver image enhancement and can effectively improve underwater human posture recognition.

Acknowledgement: We thank the joint laboratory of Dalian University of Technology and Zhangzidao Group for providing the dataset. We are also extremely grateful to the anonymous reviewers for their critical comments on the manuscript.

Funding Statement: This work was supported by the National Natural Science Foundation of China (No. 61702074), the Liaoning Provincial Natural Science Foundation of China (No. 20170520196), and the Fundamental Research Funds for the Central Universities (Nos. 3132019205 and 3132019354).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. Cao, Z., Simon, T., Wei, S. E., Sheikh, Y. (2017). Realtime multi-person 2D pose estimation using part affinity fields. arXiv: 1611.08050. http://arxiv.org/abs/1611.08050.

2. Chou, C. J., Chien, J. T., Chen, H. T. (2018). Self adversarial training for human pose estimation. 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), pp. 17–30, Honolulu, HI, USA. DOI 10.23919/APSIPA.2018.8659538.

3. Chu, X., Yang, W., Ouyang, W., Ma, C., Yuille, A. L. et al. (2017). Multi-context attention for human pose estimation. arXiv: 1702.07432. http://arxiv.org/abs/1702.07432.

4. He, K., Sun, J., Tang, X. (2011). Single image haze removal using dark channel prior. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(12), 2341–2353. DOI 10.1109/TPAMI.2010.168.

5. Chiang, J. Y., Chen, Y. C. (2012). Underwater image enhancement by wavelength compensation and dehazing. IEEE Transactions on Image Processing, 21(4), 1756–1769. DOI 10.1109/TIP.2011.2179666.

6. Galdran, A., Pardo, D., Picón, A., Alvarez-Gila, A. (2015). Automatic red-channel underwater image restoration. Journal of Visual Communication and Image Representation, 26(4), 132–145. DOI 10.1016/j.jvcir.2014.11.006.

7. Berman, D., Levy, D., Avidan, S., Treibitz, T. (2020). Underwater single image color restoration using haze-lines and a new quantitative dataset. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(8), 2822–2837. DOI 10.1109/TPAMI.2020.2977624.

8. Fattal, R. (2014). Dehazing using color-lines. ACM Transactions on Graphics, 34(1), 1–14. DOI 10.1145/2651362.

9. Yang, M., Sowmya, A., Wei, Z., Zheng, B. (2020). Offshore underwater image restoration using reflection-decomposition-based transmission map estimation. IEEE Journal of Oceanic Engineering, 45(2), 521–533. DOI 10.1109/JOE.2018.2886093.

10. Peng, Y. T., Cosman, P. C. (2017). Underwater image restoration based on image blurriness and light absorption. IEEE Transactions on Image Processing, 26(4), 1579–1594. DOI 10.1109/TIP.2017.2663846.

11. Zhou, J., Liu, Z., Zhang, W., Zhang, D., Zhang, W. (2021). Underwater image restoration based on secondary guided transmission map. Multimedia Tools and Applications, 80(5), 7771–7788. DOI 10.1007/s11042-020-10049-7.

12. Ancuti, C., Ancuti, C. O., Haber, T., Bekaert, P. (2012). Enhancing underwater images and videos by fusion. IEEE Conference on Computer Vision and Pattern Recognition, pp. 81–88, Providence, RI, USA. DOI 10.1109/CVPR.2012.6247661.

13. Ancuti, C. O., Ancuti, C., de Vleeschouwer, C., Bekaert, P. (2018). Color balance and fusion for underwater image enhancement. IEEE Transactions on Image Processing, 27(1), 379–393. DOI 10.1109/TIP.2017.2759252.

14. Zhou, J., Zhang, D., Zhang, W. (2021). A multifeature fusion method for the color distortion and low contrast of underwater images. Multimedia Tools and Applications, 80(12), 17515–17541. DOI 10.1007/s11042-020-10273-1.

15. Fu, X., Fan, Z., Ling, M., Huang, Y., Ding, X. (2017). Two-step approach for single underwater image enhancement. International Symposium on Intelligent Signal Processing and Communication Systems, pp. 789–794, Xiamen, China. DOI 10.1109/ISPACS.2017.8266583.

16. Fu, X., Zhuang, P., Huang, Y., Liao, Y., Zhang, X. P. et al. (2014). A retinex-based enhancing approach for single underwater image. IEEE International Conference on Image Processing, pp. 4572–4576, Paris, France. DOI 10.1109/ICIP.2014.7025927.

17. Zhuang, P., Li, C., Wu, J. (2021). Bayesian retinex underwater image enhancement. Engineering Applications of Artificial Intelligence, 101(1), 104171. DOI 10.1016/j.engappai.2021.104171.

18. Li, C., Anwar, S., Hou, J., Cong, R., Guo, C. et al. (2021). Underwater image enhancement via medium transmission-guided multi-color space embedding. IEEE Transactions on Image Processing, 30, 4985–5000. DOI 10.1109/TIP.2021.3076367.

19. Guo, Y., Li, H., Zhuang, P. (2020). Underwater image enhancement using a multiscale dense generative adversarial network. IEEE Journal of Oceanic Engineering, 45(3), 862–870. DOI 10.1109/JOE.2019.2911447.

20. Li, C., Guo, J., Guo, C. (2018). Emerging from water: Underwater image color correction based on weakly supervised color transfer. IEEE Signal Processing Letters, 25(3), 323–327. DOI 10.1109/LSP.2018.2792050.

21. Chen, X., Yu, J., Kong, S., Wu, Z., Fang, X. et al. (2019). Towards real-time advancement of underwater visual quality with GAN. IEEE Transactions on Industrial Electronics, 66(12), 9350–9359. DOI 10.1109/TIE.2019.2893840.

22. Li, C., Guo, C., Loy, C. C. (2021). Learning to enhance low-light image via zero-reference deep curve estimation. IEEE Transactions on Pattern Analysis and Machine Intelligence (Early Access). DOI 10.1109/TPAMI.2021.3063604.

23. Anwar, S., Li, C. (2020). Diving deeper into underwater image enhancement: A survey. Signal Processing: Image Communication, 89(6), 115978. DOI 10.1016/j.image.2020.115978.

24. Buchsbaum, G. (1980). A spatial processor model for object colour perception. Journal of the Franklin Institute, 310(1), 1–26. DOI 10.1016/0016-0032(80)90058-7.

25. He, K., Sun, J., Tang, X. (2013). Guided image filtering. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(6), 1397–1409. DOI 10.1109/TPAMI.2012.213.

26. Huang, D., Wang, Y., Song, W., Sequeira, J., Mavromatis, S. (2018). Shallow-water image enhancement using relative global histogram stretching based on adaptive parameter acquisition. In: Schoeffmann, K., Chalidabhongse, T. H., Ngo, C. W., Aramvith, S., O’Connor, N. E. et al. (Eds.), MultiMedia modeling, vol. 10704, pp. 453–465. Cham: Springer International Publishing. DOI 10.1007/978-3-319-73603-7_37.

27. Jobson, D. J., Rahman, Z., Woodell, G. A. (1997). A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Transactions on Image Processing, 6(7), 965–976. DOI 10.1109/83.597272.

28. Fu, H., Cao, X., Tu, Z. (2013). Cluster-based co-saliency detection. IEEE Transactions on Image Processing, 22(10), 3766–3778. DOI 10.1109/TIP.2013.2260166.

29. Drews Jr, P., do Nascimento, E., Moraes, F., Botelho, S., Campos, M. et al. (2013). Transmission estimation in underwater single images. IEEE International Conference on Computer Vision Workshops, pp. 825–830, Sydney, NSW, Australia.

30. Song, W., Wang, Y., Huang, D., Tjondronegoro, D. (2018). A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In: Hong, R., Cheng, W. H., Yamasaki, T., Wang, M., Ngo, C. W. (Eds.), Advances in multimedia information processing–PCM 2018, vol. 11164, pp. 678–688. Cham: Springer International Publishing. DOI 10.1007/978-3-030-00776-8_62.

31. Peng, Y. T., Cao, K., Cosman, P. C. (2018). Generalization of the dark channel prior for single image restoration. IEEE Transactions on Image Processing, 27(6), 2856–2868. DOI 10.1109/TIP.2018.2813092.

32. Li, C., Anwar, S., Porikli, F. (2020). Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognition, 98(1), 107038. DOI 10.1016/j.patcog.2019.107038.

33. Li, C., Guo, C., Ren, W., Cong, R., Hou, J. et al. (2019). An underwater image enhancement benchmark dataset and beyond. IEEE Transactions on Image Processing, 29, 4376–4389. DOI 10.1109/TIP.2019.2955241.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.