Computer Modeling in Engineering & Sciences

DOI: 10.32604/cmes.2021.014896

ARTICLE

Quantile Version of Mathai-Haubold Entropy of Order Statistics

¹Department of Mathematics, College of Science, King Khalid University, Abha, 62529, Saudi Arabia

²Statistical Research and Studies Support Unit, King Khalid University, Abha, 62529, Saudi Arabia

³Department of Mathematical Sciences, IUST, Kashmir, 192231, India

⁴Department of Mathematics, Faculty of Science, Al al-Bayt University, Mafraq, 25113, Jordan

⁵Department of Mathematics, Govt. Degree College Kilam, Higher Education, J & K, 192231, India

*Corresponding Author: Ibrahim M. Almanjahie. Email: imalmanjahi@kku.edu.sa

Received: 06 November 2020; Accepted: 18 March 2021

Abstract: Many researchers measure the uncertainty of a random variable using quantile-based entropy techniques. These techniques are useful in engineering applications and have some exceptional characteristics compared with their distribution-function counterparts. Considering order statistics, the key focus of this article is to propose a new quantile-based Mathai-Haubold entropy and investigate its characteristics. The Mathai-Haubold divergence measure is also considered, and some of its properties are established. Further, based on order statistics, we propose the quantile-based Mathai-Haubold residual entropy and prove some of its properties. The performance of the proposed quantile-based Mathai-Haubold entropy is investigated by simulation studies. Finally, a real data application is used to compare our proposed quantile-based entropy with existing quantile entropies. The results show that our proposed entropy outperforms the other entropies.

Keywords: Shannon entropy; Mathai-Haubold entropy; quantile function; residual entropy; order statistics; failure time; reliability measures

Order statistics arise in a wide range of complicated problems, including characterization of probability distributions, quality control, robust statistical estimation, outlier identification, analysis of censored samples and goodness-of-fit tests. The use of recurrence relations for moments of order statistics is well established (see, for instance, Arnold et al. [1], Malik et al. [2]). Many recurrence relations and identities for moments of order statistics from particular continuous distributions (e.g., gamma, Cauchy, normal, logistic and exponential) have been reviewed by Samuel et al. [3] and Arnold et al. [1].

Based on a random sample of

with

Shannon [5] was the first to introduce the concept of entropy for a random variable (r.v.)

Here,

When

Mathai et al. [6] and Sebastian [7] discussed the main property associated with Eq. (3): applying the maximum entropy principle to its normalized version under energy constraints yields the well-known pathway model of Mathai [8]. This model contains many familiar probability distributions as special cases.
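To illustrate the limiting behaviour, the following minimal Python sketch evaluates the M-H entropy of a density directly. It assumes the definition introduced by Mathai et al. [6], $M_\alpha(f) = \frac{1}{\alpha-1}\left(\int f^{2-\alpha}(x)\,dx - 1\right)$ for $\alpha \neq 1$, $\alpha < 2$, and uses an exponential density with a hypothetical rate `lam = 2.0`. The closed form used for comparison, $\int_0^\infty f^{2-\alpha}(x)\,dx = \lambda^{1-\alpha}/(2-\alpha)$, follows by direct integration, and the loop shows $M_\alpha$ approaching the Shannon entropy $1 - \log\lambda$ as $\alpha \to 1$.

```python
import math


def mh_entropy(pdf, alpha, lower, upper, steps=200_000):
    """M-H entropy (int pdf^(2-alpha) dx - 1) / (alpha - 1), with the integral
    approximated by the composite midpoint rule on [lower, upper]."""
    if alpha == 1 or alpha >= 2:
        raise ValueError("alpha must satisfy alpha != 1 and alpha < 2")
    h = (upper - lower) / steps
    integral = h * sum(pdf(lower + (i + 0.5) * h) ** (2 - alpha) for i in range(steps))
    return (integral - 1) / (alpha - 1)


def mh_entropy_exponential(lam, alpha):
    """Closed form for f(x) = lam * exp(-lam * x), x > 0."""
    return (lam ** (1 - alpha) / (2 - alpha) - 1) / (alpha - 1)


lam = 2.0  # hypothetical rate parameter
pdf = lambda x: lam * math.exp(-lam * x)

numeric = mh_entropy(pdf, 1.5, 0.0, 40.0)   # the tail beyond x = 40 is negligible here
exact = mh_entropy_exponential(lam, 1.5)
shannon = 1 - math.log(lam)                 # Shannon entropy of Exp(lam)

print(f"numeric = {numeric:.6f}, closed form = {exact:.6f}")
for alpha in (1.1, 1.01, 1.001):
    # M_alpha approaches the Shannon entropy as alpha -> 1
    print(alpha, mh_entropy_exponential(lam, alpha), shannon)
```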

Theoretical studies and applications based on information measures are distribution dependent, and they may be inappropriate when the distribution is not analytically tractable. Hence, the quantile function is used as an alternative, where

We refer readers to Nair et al. [9], Sunoj et al. [10] and the references therein for more details about quantile functions. Sunoj et al. [11] studied the Shannon entropy and its residual version and introduced their quantile versions, defined as

and

respectively, where

Sunoj et al. [11] also introduced a quantile version of the Shannon past entropy, defined as
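The quantile entropies attributed to Sunoj et al. [11] can be checked numerically. This sketch assumes the standard expressions $\xi = \int_0^1 \log q(p)\,dp$, $\xi(u) = \log(1-u) + \frac{1}{1-u}\int_u^1 \log q(p)\,dp$ and $\bar\xi(u) = \log u + \frac{1}{u}\int_0^u \log q(p)\,dp$ for the Shannon, residual and past quantile entropies, where $q(u) = Q'(u)$ is the quantile density; the symbol and function names are ours. For the exponential distribution, $q(u) = 1/(\lambda(1-u))$, so both $\xi$ and $\xi(u)$ should equal $1 - \log\lambda$ (the memoryless property), and for the uniform distribution on $(0, 1)$ the past entropy should equal $\log u$.

```python
import math


def midpoint(fn, a, b, steps=100_000):
    """Composite midpoint rule; it never evaluates fn at a or b, which matters
    because log q(p) diverges at p = 1 for the exponential distribution."""
    h = (b - a) / steps
    return h * sum(fn(a + (i + 0.5) * h) for i in range(steps))


def quantile_shannon(q):
    return midpoint(lambda p: math.log(q(p)), 0.0, 1.0)


def quantile_residual_shannon(q, u):
    return math.log(1 - u) + midpoint(lambda p: math.log(q(p)), u, 1.0) / (1 - u)


def quantile_past_shannon(q, u):
    return math.log(u) + midpoint(lambda p: math.log(q(p)), 0.0, u) / u


lam = 0.5                                   # hypothetical exponential rate
q_exp = lambda p: 1.0 / (lam * (1.0 - p))   # quantile density of Exp(lam)
q_unif = lambda p: 1.0                      # uniform on (0, 1): Q(u) = u

print(quantile_shannon(q_exp), 1 - math.log(lam))
for u in (0.1, 0.5, 0.9):
    print(u, quantile_residual_shannon(q_exp, u), quantile_past_shannon(q_unif, u), math.log(u))
```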

In the present paper, we work with order statistics, propose a quantile-based version of the M-H entropy and discuss its properties. The M-H divergence measure is also considered, and we establish some of its distribution-free properties. In addition, we introduce a quantile-based version of the M-H residual entropy and prove some characterization results. To the best of our knowledge, the results presented here address a research gap that has not been studied systematically, which was the primary motivation for this paper.

The paper is organized as follows. Section 2 constructs the quantile-based M-H entropy and studies its properties. Section 3 presents expressions of the quantile-based M-H entropy for some lifetime distributions. A quantile-based generalized divergence measure of

2 Quantile-Based M-H Entropy of

Wong et al. [12], Park [13], Ebrahimi [14] and Baratpour et al. [15] discussed information-theoretic aspects of order statistics in detail. Paul et al. [16] considered the M-H entropy of record values and studied some of its essential properties. For

where

where

Remark 2.1: For

which is the quantile entropy of
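The quantile form of the M-H entropy of $X_{r:n}$ follows from the substitution $x = Q(u)$: since $f(Q(u)) = 1/q(u)$ and $F(Q(u)) = u$, the pdf of $X_{r:n}$ at $Q(u)$ is $g_r(u)/q(u)$ with $g_r(u) = u^{r-1}(1-u)^{n-r}/B(r, n-r+1)$, and $dx = q(u)\,du$ gives

$$M_\alpha^{Q}(X_{r:n}) = \frac{1}{\alpha-1}\left(\int_0^1 g_r^{2-\alpha}(u)\, q^{\alpha-1}(u)\, du - 1\right).$$

The sketch below evaluates this expression numerically, assuming the M-H definition of Mathai et al. [6]; the notation $M_\alpha^{Q}$ and $g_r$ is ours. Two checks are used: for the exponential distribution, $X_{1:n}$ is exponential with rate $n\lambda$, and for the uniform distribution on $(0, 3)$ with $r = 2$, $n = 4$, $\alpha = 1.5$ the integral equals $\sqrt{12}\,\sqrt{3}\,B(3/2, 2) = 1.6$. All parameter values are hypothetical.

```python
import math


def order_stat_beta_density(u, r, n):
    """g_r(u): density of the r-th order statistic of n iid U(0, 1) variables."""
    log_beta = math.lgamma(r) + math.lgamma(n - r + 1) - math.lgamma(n + 1)
    return math.exp((r - 1) * math.log(u) + (n - r) * math.log1p(-u) - log_beta)


def quantile_mh_entropy(q, r, n, alpha, steps=200_000):
    """(1/(alpha-1)) * (int_0^1 g_r(u)^(2-alpha) q(u)^(alpha-1) du - 1), midpoint rule."""
    if alpha == 1 or alpha >= 2:
        raise ValueError("alpha must satisfy alpha != 1 and alpha < 2")
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h
        total += order_stat_beta_density(u, r, n) ** (2 - alpha) * q(u) ** (alpha - 1)
    return (total * h - 1) / (alpha - 1)


lam, n, alpha = 1.5, 4, 1.5                  # hypothetical parameter values
q_exp = lambda u: 1.0 / (lam * (1.0 - u))
smallest = quantile_mh_entropy(q_exp, 1, n, alpha)
exact_smallest = ((n * lam) ** (1 - alpha) / (2 - alpha) - 1) / (alpha - 1)

q_unif = lambda u: 3.0                       # uniform on (0, 3)
second = quantile_mh_entropy(q_unif, 2, 4, alpha)

print(smallest, exact_smallest)
print(second)                                # close to (1.6 - 1) / 0.5 = 1.2
```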

3 Expressions for Some Distributions

In the following, we provide expressions for the quantile-based M-H entropy of order statistics for some lifetime distributions:

(i) Govindarajulu’s Distribution: The quantile function and the corresponding quantile density function are, respectively,

Using Eq. (6), we can easily obtain the quantile-based M-H entropy of

Similarly, based on the quantile and quantile density functions, we obtain the quantile-based M-H entropy (

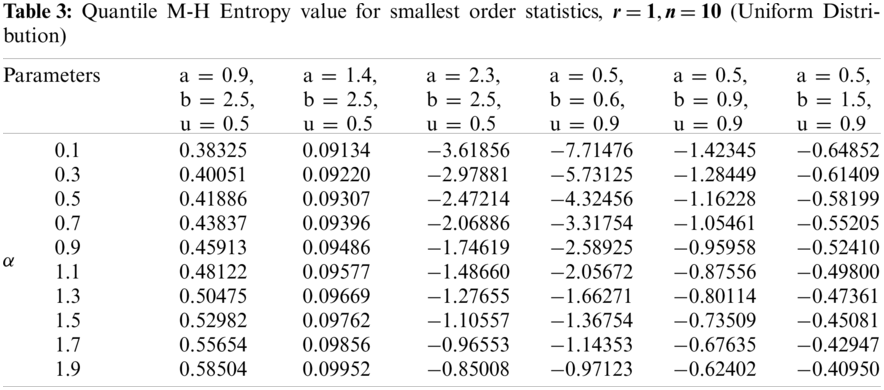

(ii) Uniform Distribution:

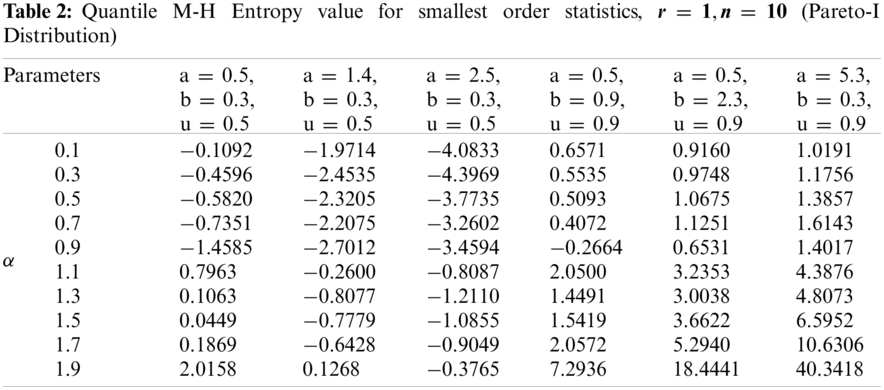

(iii) Pareto-I Distribution:

(iv) Exponential Distribution:

(v) Power Distribution:

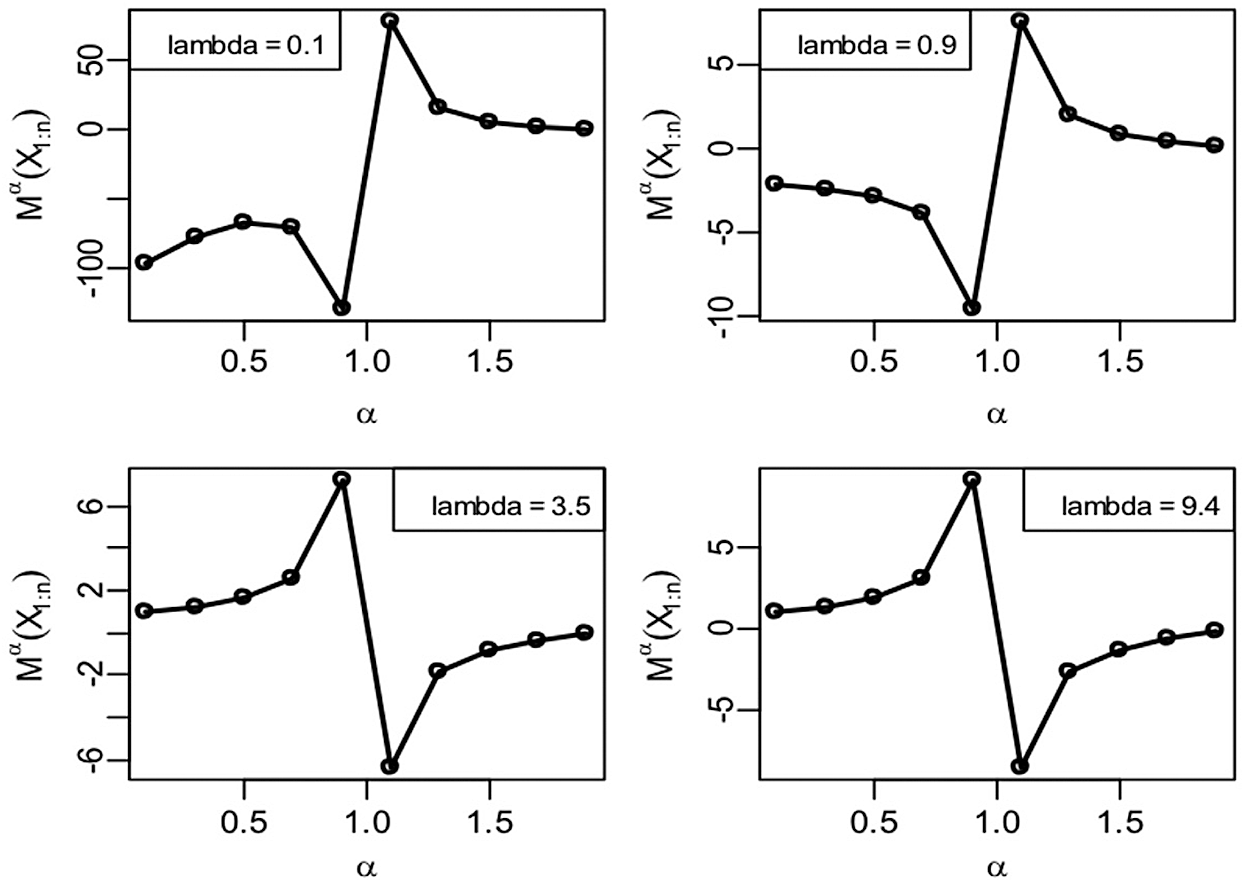

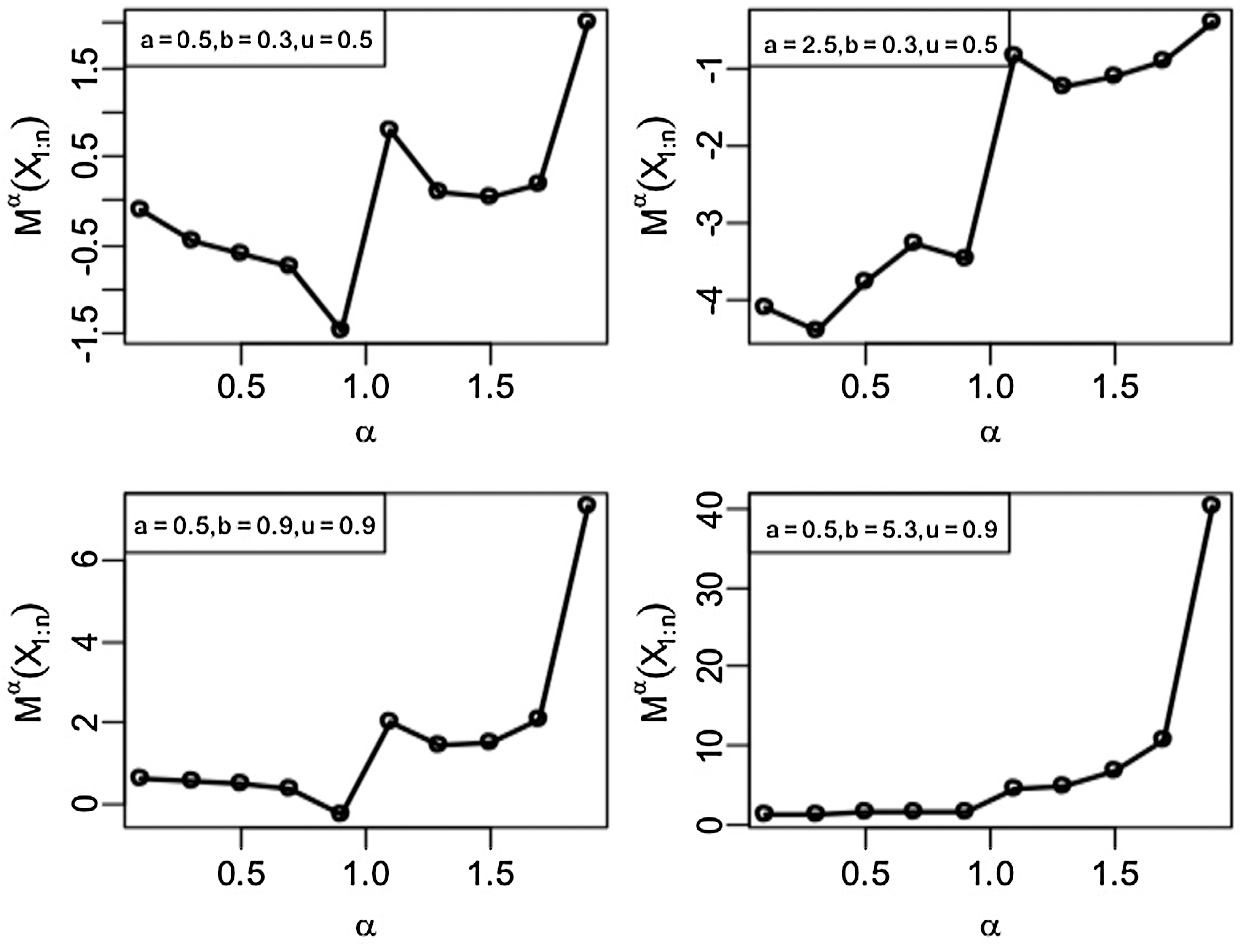

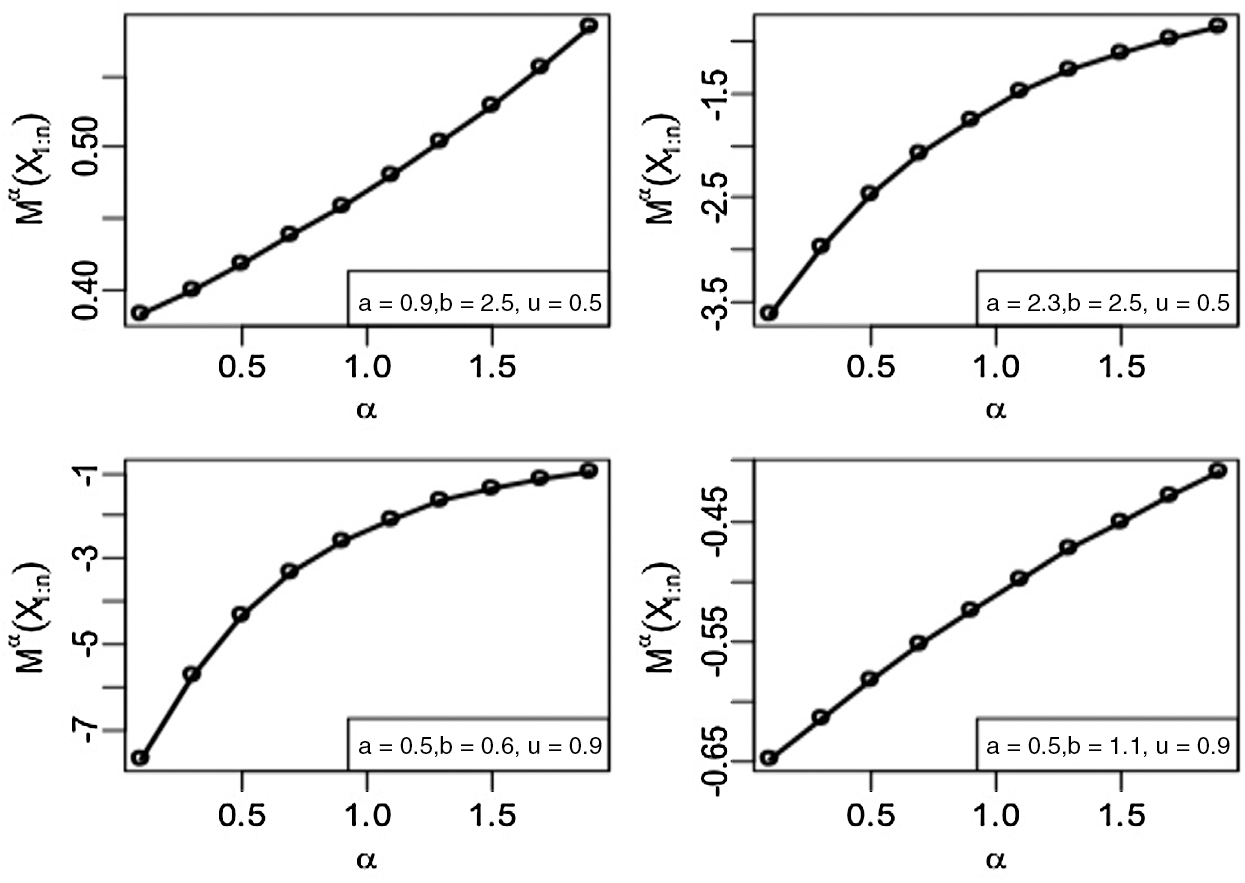

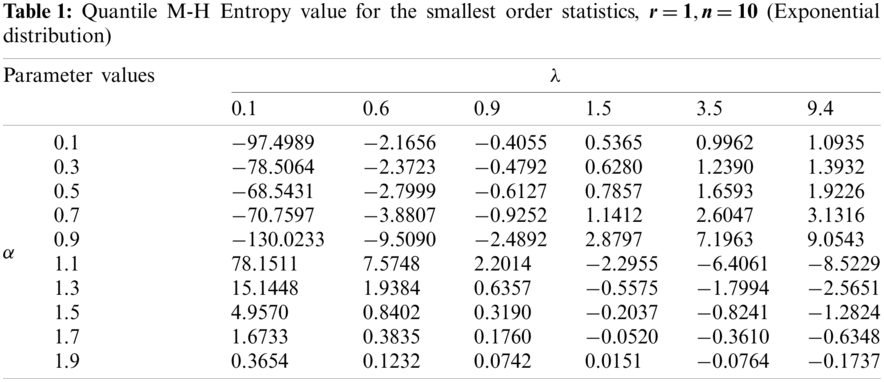

Figs. 1–3 show the quantile-based M-H entropy of the smallest order statistic under the exponential, Pareto-I and uniform distributions, respectively.

Figure 1: Quantile M-H entropy plots of smallest order statistics (Exponential distribution)

Figure 2: Quantile M-H entropy plots of smallest order statistics (Pareto-I Distribution)

For an increasing value of parameters

Figure 3: Quantile M-H entropy plots of smallest order statistics (Uniform Distribution)

Tabs. 1–3 confirm that the entropy values under the exponential, Pareto-I and uniform distributions exhibit the same behaviour as the graphical plots.
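For the smallest order statistic ($r = 1$, so $g_1(u) = n(1-u)^{n-1}$), the quantile M-H integral $\int_0^1 g_1^{2-\alpha}(u)\,q^{\alpha-1}(u)\,du$ has closed forms under the parametrizations assumed here (they are our assumptions, not taken from the expressions above): exponential $Q(u) = -\log(1-u)/\lambda$, Pareto-I $Q(u) = \sigma(1-u)^{-1/\beta}$ and uniform $Q(u) = a + (b-a)u$. Integrating the resulting powers of $(1-u)$ gives

$$\frac{(n\lambda)^{1-\alpha}}{2-\alpha}, \qquad \frac{n^{2-\alpha}(\sigma/\beta)^{\alpha-1}}{(n-1)(2-\alpha)+1-(\alpha-1)(1+1/\beta)}, \qquad \frac{n^{2-\alpha}(b-a)^{\alpha-1}}{(n-1)(2-\alpha)+1},$$

respectively, the Pareto-I case requiring a positive denominator. The sketch tabulates these values over $n$ with hypothetical parameters and cross-checks them against direct numerical integration; it is an aid for exploring trends of the kind shown in Figs. 1–3 and Tabs. 1–3, not a reproduction of them.

```python
def mh_from_integral(integral, alpha):
    """Turn int_0^1 g_1^(2-alpha) q^(alpha-1) du into the M-H entropy value."""
    return (integral - 1) / (alpha - 1)


def smallest_exponential(n, alpha, lam):
    return mh_from_integral((n * lam) ** (1 - alpha) / (2 - alpha), alpha)


def smallest_pareto1(n, alpha, sigma, beta):
    denom = (n - 1) * (2 - alpha) + 1 - (alpha - 1) * (1 + 1 / beta)
    if denom <= 0:
        raise ValueError("the quantile integral diverges for these parameters")
    return mh_from_integral(n ** (2 - alpha) * (sigma / beta) ** (alpha - 1) / denom, alpha)


def smallest_uniform(n, alpha, a, b):
    denom = (n - 1) * (2 - alpha) + 1
    return mh_from_integral(n ** (2 - alpha) * (b - a) ** (alpha - 1) / denom, alpha)


def numeric_smallest(q, n, alpha, steps=200_000):
    """Midpoint-rule value of the same integral, used as a cross-check."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h
        total += (n * (1 - u) ** (n - 1)) ** (2 - alpha) * q(u) ** (alpha - 1)
    return mh_from_integral(total * h, alpha)


# Hypothetical settings: lam = 1, (sigma, beta) = (1, 3), (a, b) = (0, 2).
alpha = 1.2
print(" n  exponential  Pareto-I  uniform")
for n in range(1, 6):
    print(f"{n:2d} {smallest_exponential(n, alpha, 1.0):12.4f}"
          f" {smallest_pareto1(n, alpha, 1.0, 3.0):9.4f}"
          f" {smallest_uniform(n, alpha, 0.0, 2.0):8.4f}")
```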

4 Quantile-Based Generalized Divergence Measure of

Various measures quantify the dissimilarity, or distance, between two probability distributions. These measures are essential in theory as well as in inferential statistics, applied statistics and data processing, for tasks such as comparison, classification and estimation.

Assume

With order

where, for

Theorem 4.1: The quantile-based generalized divergence measure between the

Proof: From Eq. (8), we have

Now, using the value of

Using the fact that

which is distribution-free. Hence, the theorem is proved.
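The distribution-free property rests on the identity $f_{r:n}(x)/f(x) = g_r(F(x))$, where $g_r$ is the Beta$(r, n-r+1)$ density. As a sketch, take the hypothetical M-H-type divergence $D_\alpha = \frac{1}{\alpha-1}\left(\int f_{r:n}^{\alpha}(x)\, f^{1-\alpha}(x)\,dx - 1\right)$ as a stand-in for the measure in Eq. (8); the substitution $u = F(x)$ turns it into $\frac{1}{\alpha-1}\left(\int_0^1 g_r^{\alpha}(u)\,du - 1\right)$ for every continuous parent. The code integrates in the original $x$-scale for an exponential and a uniform parent and compares both with the Beta-function value $B(r, n-r+1)^{-\alpha}\,B(\alpha(r-1)+1, \alpha(n-r)+1)$ of that integral. The choices of $r$, $n$ and $\alpha$ are hypothetical.

```python
import math


def log_beta(a, b):
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def order_stat_pdf(x, r, n, cdf, pdf):
    """pdf of X_{r:n}: F^(r-1) (1-F)^(n-r) f / B(r, n-r+1)."""
    F = cdf(x)
    return F ** (r - 1) * (1 - F) ** (n - r) * pdf(x) / math.exp(log_beta(r, n - r + 1))


def divergence_x_scale(r, n, alpha, cdf, pdf, lower, upper, steps=100_000):
    """(int f_{r:n}^alpha f^(1-alpha) dx - 1) / (alpha - 1), midpoint rule in x."""
    h = (upper - lower) / steps
    total = 0.0
    for i in range(steps):
        x = lower + (i + 0.5) * h
        total += order_stat_pdf(x, r, n, cdf, pdf) ** alpha * pdf(x) ** (1 - alpha)
    return (total * h - 1) / (alpha - 1)


r, n, alpha = 2, 5, 1.5   # hypothetical choices
d_exp = divergence_x_scale(r, n, alpha, lambda x: 1 - math.exp(-x), lambda x: math.exp(-x), 0.0, 40.0)
d_unif = divergence_x_scale(r, n, alpha, lambda x: x, lambda x: 1.0, 0.0, 1.0)
closed = (math.exp(log_beta(alpha * (r - 1) + 1, alpha * (n - r) + 1)
                   - alpha * log_beta(r, n - r + 1)) - 1) / (alpha - 1)
print(d_exp, d_unif, closed)   # the three values agree up to quadrature error
```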

5 M-H Quantile Residual Entropy for

Entropy functions are widely used in finance, tectonophysics, machine learning, reliability theory and other fields. In reliability and other real-life applications, however, a life test is often truncated at a specific time, and in such situations Eq. (2) is not an appropriate measure. In particular, Shannon's entropy is inadequate when the component's current age is known, since that age should be taken into account when measuring the uncertainty of its remaining life. Ebrahimi [14] proposed a more practical measure that takes the age into account, defined as

with

The M-H residual entropy for the

where

Considering the

The following theorem states an important result.

Theorem 5.1: Considering the

Proof: Using Eq. (10), we obtain

Differentiating both sides with respect to (w.r.t.)

where
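The quantile residual M-H entropy of $X_{r:n}$ can be evaluated in the same spirit. The sketch assumes the residual form obtained by conditioning on $X_{r:n} > Q(u)$ and substituting $x = Q(p)$,

$$M_\alpha^{Q}(u; r) = \frac{1}{\alpha-1}\left(\frac{\int_u^1 g_r^{2-\alpha}(p)\,q^{\alpha-1}(p)\,dp}{S_r(u)^{2-\alpha}} - 1\right), \qquad S_r(u) = \sum_{j=0}^{r-1}\binom{n}{j}u^j(1-u)^{n-j},$$

where $g_r$ is the Beta$(r, n-r+1)$ density and $S_r(u)$ is the survival function of $X_{r:n}$ at $Q(u)$; the notation is ours. For the exponential distribution and $r = 1$, the result should not depend on $u$ (the memoryless property carries over to $X_{1:n}$, which is exponential with rate $n\lambda$), and at $u = 0$ it reduces to the unconditional quantile M-H entropy. Parameter values are hypothetical.

```python
import math


def survival_r(u, r, n):
    """P(X_{r:n} > Q(u)): fewer than r of the n observations fall at or below Q(u)."""
    return sum(math.comb(n, j) * u ** j * (1 - u) ** (n - j) for j in range(r))


def quantile_residual_mh(q, r, n, alpha, u, steps=100_000):
    """Assumed quantile residual M-H entropy of X_{r:n} at level u (midpoint rule)."""
    log_beta = math.lgamma(r) + math.lgamma(n - r + 1) - math.lgamma(n + 1)
    h = (1.0 - u) / steps
    total = 0.0
    for i in range(steps):
        p = u + (i + 0.5) * h
        g = math.exp((r - 1) * math.log(p) + (n - r) * math.log1p(-p) - log_beta)  # g_r(p)
        total += g ** (2 - alpha) * q(p) ** (alpha - 1)
    return (total * h / survival_r(u, r, n) ** (2 - alpha) - 1) / (alpha - 1)


lam, n, alpha = 1.0, 3, 1.5                  # hypothetical parameter values
q_exp = lambda p: 1.0 / (lam * (1.0 - p))
target = ((n * lam) ** (1 - alpha) / (2 - alpha) - 1) / (alpha - 1)   # M-H entropy of Exp(n*lam)

for u in (0.0, 0.3, 0.7):
    print(u, quantile_residual_mh(q_exp, 1, n, alpha, u), target)
```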

Next, we derive the quantile form of the M-H residual entropy of the

(i) Govindarajulu’s Distribution

The quantile function of Govindarajulu's distribution is

and the corresponding quantile density is

The quantile residual entropy function of M-H of

Similarly, based on the quantile and quantile density functions, we obtain the quantile-based residual M-H entropy of

(ii) Uniform Distribution

(iii) Pareto-I Distribution

(iv) Exponential Distribution

(v) Power Distribution

Based on residual M-H quantile entropy of order statistics

Definition 5.1:

The following lemma is useful for proving the monotonicity results for

Lemma 5.1: Let

Theorem 5.2: Let

(i) For

(ii) For

Proof: (i) The quantile density of

Thus, we have

From the given condition,

is increasing in

Since

Let

6 Characterization Theorems Based on M-H Quantile Residual Entropy

This section provides some characterizations based on the quantile M-H residual entropies of the smallest and largest order statistics. The corresponding quantile M-H residual entropy can be determined by substituting

We now define the quantile versions of the hazard and reversed hazard functions, which correspond, respectively, to the well-known hazard rate and reversed hazard rate functions, as
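As a minimal sketch, assume the standard quantile forms (see Nair et al. [9]): the hazard quantile function $H(u) = h(Q(u)) = [(1-u)\,q(u)]^{-1}$ and the reversed hazard quantile function $\Lambda(u) = [u\,q(u)]^{-1}$, both of which follow from $f(Q(u)) = 1/q(u)$ and $F(Q(u)) = u$. The code checks two textbook cases with hypothetical parameters: $H(u)$ equals $\lambda$ for every $u$ under the exponential distribution, and for the power distribution with $F(x) = (x/a)^b$ on $(0, a)$ (a parametrization we assume), $\Lambda(u) = b/Q(u)$, i.e., the reversed hazard rate $b/x$ evaluated at $x = Q(u)$.

```python
def hazard_quantile(q, u):
    """H(u) = 1 / ((1 - u) q(u)): hazard rate evaluated at x = Q(u)."""
    return 1.0 / ((1.0 - u) * q(u))


def reversed_hazard_quantile(q, u):
    """Lambda(u) = 1 / (u q(u)): reversed hazard rate evaluated at x = Q(u)."""
    return 1.0 / (u * q(u))


lam = 2.5                                   # hypothetical exponential rate
q_exp = lambda u: 1.0 / (lam * (1.0 - u))   # hence H(u) = lam for every u

a, b = 3.0, 2.0                             # hypothetical power-distribution parameters
Q_pow = lambda u: a * u ** (1.0 / b)
q_pow = lambda u: (a / b) * u ** (1.0 / b - 1.0)

for u in (0.1, 0.5, 0.9):
    print(u, hazard_quantile(q_exp, u), reversed_hazard_quantile(q_pow, u), b / Q_pow(u))
```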

In many practical situations, uncertainty is not necessarily related to the future; it can also refer to the past. This idea led Di Crescenzo et al. [19] to develop the concept of past entropy on

with

The quantile form of past M-H residual entropy of

For sample maxima
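For the sample maximum, $F_{n:n}(Q(u)) = u^n$ and the pdf of $X_{n:n}$ at $Q(p)$ is $np^{n-1}/q(p)$, so a past M-H entropy of the form $\frac{1}{\alpha-1}\left(\int_0^t \left(f_{n:n}(x)/F_{n:n}(t)\right)^{2-\alpha}dx - 1\right)$ becomes, at $t = Q(u)$,

$$\bar M_\alpha^{Q}(u) = \frac{1}{\alpha-1}\left(\frac{\int_0^u (np^{n-1})^{2-\alpha}\,q^{\alpha-1}(p)\,dp}{u^{n(2-\alpha)}} - 1\right).$$

This form and its notation are our assumptions. The sketch evaluates it for the power distribution $Q(u) = a u^{1/b}$ with hypothetical parameters and compares it with a direct calculation: $X_{n:n}$ is then a power variable with shape $c = nb$, whose past M-H entropy at $t$ is $\frac{1}{\alpha-1}\left(\frac{c^{2-\alpha}t^{\alpha-1}}{(c-1)(2-\alpha)+1} - 1\right)$.

```python
def past_quantile_mh_max(q, n, alpha, u, steps=100_000):
    """Assumed past quantile M-H entropy of X_{n:n} at level u (midpoint rule on (0, u))."""
    h = u / steps
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) * h
        total += (n * p ** (n - 1)) ** (2 - alpha) * q(p) ** (alpha - 1)
    return (total * h / u ** (n * (2 - alpha)) - 1) / (alpha - 1)


def past_mh_power_direct(c, alpha, t):
    """Past M-H entropy at t of a power variable with pdf c x^(c-1) / a^c on (0, a)."""
    return (c ** (2 - alpha) * t ** (alpha - 1) / ((c - 1) * (2 - alpha) + 1) - 1) / (alpha - 1)


a, b, n, alpha = 3.0, 2.0, 3, 1.5           # hypothetical parameter values
Q_pow = lambda u: a * u ** (1 / b)
q_pow = lambda u: (a / b) * u ** (1 / b - 1)

for u in (0.3, 0.8):
    print(u, past_quantile_mh_max(q_pow, n, alpha, u), past_mh_power_direct(n * b, alpha, Q_pow(u)))
```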

Next, we state some properties based on the quantile M-H residual entropy of the smallest order statistic.

Theorem 6.1: Let

if and only if:

(i)

(ii)

(iii)

Proof: Assume that the conditions in Eq. (15) hold. Then, using Eq. (12), we have

Therefore, using

This implies

Theorem 6.2: For the exponential distribution, the difference between the quantile-based M-H residual entropy of the lifetime of the series system

Proof: For the exponential distribution, we have

Therefore,

which completes the proof of Theorem 6.2.

Theorem 6.3: Let

if and only if

Proof: The quantile and quantile density functions for the power distribution are, respectively,

It is simple to show that

Conversely, let Eq. (17) hold. Then, using Eq. (14), we obtain

Substituting

Taking the derivative with respect to (w.r.t.)

where

7 Simulation Study and Application to Real Life Data

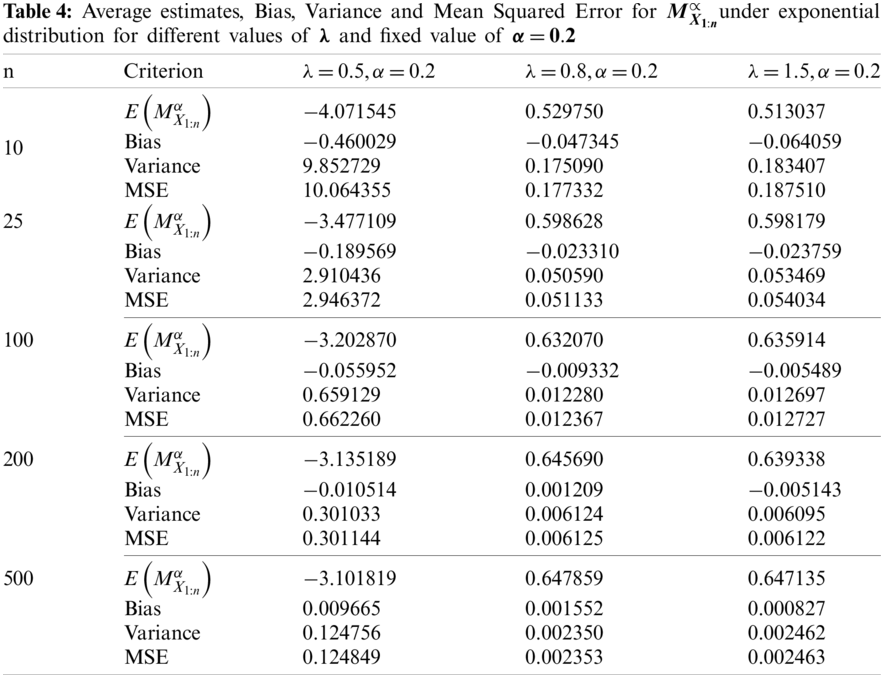

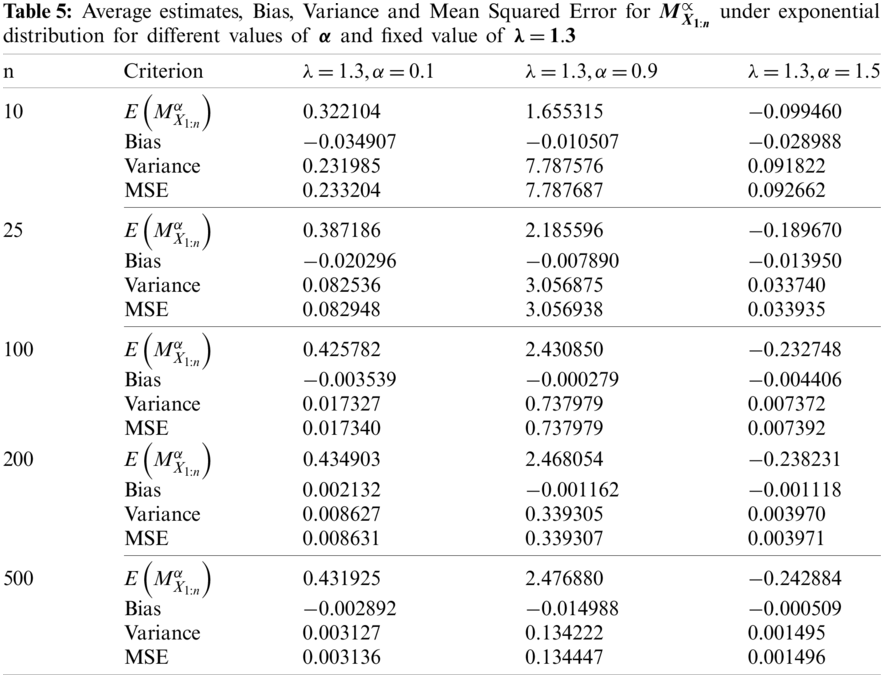

In this paper, the quantile-based M-H entropy has been derived for several distributions. However, to keep the simulation study consistent with the available real data used in the application, we investigate the performance of the quantile-based M-H entropy for the exponential distribution.

We conducted simulation studies to investigate the efficiency of the quantile-based M-H entropy estimators of the smallest order statistic for the exponential distribution

The simulation results (see Tabs. 4 and 5) lead to the following general conclusions about the behaviour of the entropy estimator:

1. The ML estimates of

2. As the sample size n increases, the MSE and variance of
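The mechanics of such a study can be sketched as follows. The code is a hypothetical illustration, not the authors' simulation design: it assumes that the target is the quantile-based M-H entropy of the smallest order statistic of $n$ exponential lifetimes, which equals $\frac{1}{\alpha-1}\left(\frac{(n\lambda)^{1-\alpha}}{2-\alpha} - 1\right)$ because $X_{1:n}$ is exponential with rate $n\lambda$, that $\lambda$ is estimated by its ML estimator $\hat\lambda = 1/\bar x$, and that the entropy is estimated by plugging $\hat\lambda$ into that expression. The order-statistic size `n_order` is kept separate from the estimation sample size `sample_size` for clarity, and the parameter values and seed are arbitrary.

```python
import random


def mh_smallest_exponential(lam, n_order, alpha):
    """M-H entropy of X_{1:n} for Exp(lam), using X_{1:n} ~ Exp(n * lam)."""
    return ((n_order * lam) ** (1 - alpha) / (2 - alpha) - 1) / (alpha - 1)


def simulate(lam, n_order, alpha, sample_size, reps, seed=2021):
    """Monte Carlo bias, variance and MSE of the ML plug-in entropy estimator."""
    rng = random.Random(seed)
    true_value = mh_smallest_exponential(lam, n_order, alpha)
    estimates = []
    for _ in range(reps):
        sample = [rng.expovariate(lam) for _ in range(sample_size)]
        lam_hat = sample_size / sum(sample)        # ML estimate of the rate
        estimates.append(mh_smallest_exponential(lam_hat, n_order, alpha))
    mean_est = sum(estimates) / reps
    variance = sum((e - mean_est) ** 2 for e in estimates) / reps
    bias = mean_est - true_value
    return bias, variance, variance + bias ** 2     # MSE = variance + bias^2


for size in (20, 50, 100, 200):
    bias, variance, mse = simulate(1.0, 3, 1.5, size, reps=1000)
    print(f"sample size {size:3d}: bias {bias:+.4f}, variance {variance:.5f}, MSE {mse:.5f}")
```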

7.2 Application to Real Life Data

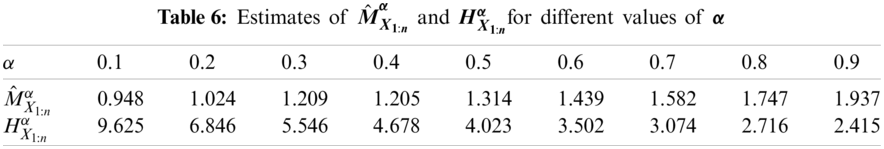

The real data set in this section consists of the failure times of 20 mechanical components, which were previously used by Murthy et al. [20] to investigate some Weibull models. The data values are: 0.067, 0.068, 0.076, 0.081, 0.084, 0.085, 0.085, 0.086, 0.089, 0.098, 0.098, 0.114, 0.114, 0.115, 0.121, 0.125, 0.131, 0.149, 0.160, 0.485. We use these data for two main purposes: (i) to investigate the performance of our quantile-based M-H entropy (

Based on these data, we first used the maximum likelihood method to estimate the exponential distribution parameter,

It should be noted that the estimated values of
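For reference, the exponential ML estimate from the 20 failure times listed above is $\hat\lambda = 20/\sum x_i = 20/2.431 \approx 8.227$. The sketch below recomputes it and plugs it into closed forms for the smallest order statistic, $M_\alpha = \frac{1}{\alpha-1}\left(\frac{(n\hat\lambda)^{1-\alpha}}{2-\alpha} - 1\right)$, and its Shannon counterpart $1 - \log(n\hat\lambda)$. These closed forms assume that $X_{1:n}$ is exponential with rate $n\lambda$; the chosen values of $\alpha$ and $n$ are illustrative, and the output is not claimed to reproduce the values reported for this data set.

```python
import math

# Failure times of 20 mechanical components (Murthy et al. [20]), as listed above.
failure_times = [0.067, 0.068, 0.076, 0.081, 0.084, 0.085, 0.085, 0.086, 0.089, 0.098,
                 0.098, 0.114, 0.114, 0.115, 0.121, 0.125, 0.131, 0.149, 0.160, 0.485]

# ML estimate of the exponential rate: reciprocal of the sample mean.
lam_hat = len(failure_times) / sum(failure_times)


def mh_smallest_exponential(lam, n, alpha):
    """Quantile-based M-H entropy of X_{1:n} when X ~ Exp(lam)."""
    return ((n * lam) ** (1 - alpha) / (2 - alpha) - 1) / (alpha - 1)


def shannon_smallest_exponential(lam, n):
    """Shannon entropy of X_{1:n} ~ Exp(n * lam)."""
    return 1 - math.log(n * lam)


print(f"lambda_hat = {lam_hat:.4f}")
for n in (1, 2, 5):
    row = [mh_smallest_exponential(lam_hat, n, alpha) for alpha in (0.5, 1.5)]
    print(n, [round(v, 4) for v in row], round(shannon_smallest_exponential(lam_hat, n), 4))
```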

The key focus of this article was to propose a new quantile-based Mathai-Haubold entropy of order statistics and investigate its characteristics. We also considered the Mathai-Haubold divergence measure and established some of its properties. Further, based on order statistics, we proposed the quantile-based Mathai-Haubold residual entropy and proved some of its properties. The performance of the proposed quantile-based Mathai-Haubold entropy was investigated through simulation studies and a real data application. We found that the ML estimates of

Quantile functions are efficient and equivalent alternatives to distribution functions in the modeling and analysis of statistical data. These functions, together with the corresponding probability distributions, are essential for studying and analyzing real lifetime data, one reason being that they convey the same information about the distribution of the underlying random variable X. However, although a substantial literature exists on characterizations of probability distributions through various statistical measures, little work has been done on modeling lifetime data using quantile versions of order statistics. Further work is therefore needed to enrich this area, and we offer specific recommendations for future research. First, the results obtained in this article are general, since they reduce to some of the results for the quantile-based Shannon entropy of order statistics as the parameter approaches unity. Recently, Kumar et al. [22] proposed a quantile version of a generalized entropy measure of order statistics for residual and past lifetimes. Nisa et al. [23] presented a quantile version of a two-parameter generalized entropy of order statistics for residual and past lifetimes and derived some characterization results. Moreover, Qiu [24] obtained further results on quantile entropy in the past lifetime and gave quantile entropy bounds in the past lifetime for some ageing classes. The ideas in these papers could be combined with the results of this paper to derive further results and properties for the quantile-based Mathai-Haubold entropy. Second, Krishnan [25] recently introduced a quantile-based cumulative residual Tsallis entropy (CRTE) and extended it to order statistics. Following Krishnan [25], one can use our proposed quantile M-H entropy to derive a quantile-based cumulative residual M-H entropy and extend it to order statistics. Finally, Krishnan [25] also proposed a quantile-based cumulative Tsallis entropy in the past lifetime; as an extension, a quantile-based cumulative M-H entropy in the past lifetime can also be derived.

Acknowledgement: The authors would like to thank the anonymous reviewers and the editor for their useful suggestions and comments, which improved the quality of this paper.

Funding Statement: The authors gratefully acknowledge the Deanship of Scientific Research at King Khalid University for funding this work through the Research Groups Program under Grant No. (R.G.P. 2/82/42).

Conflicts of Interest: The authors declare that they have no conflicts of interest to report regarding the present study.

1. Arnold, B. C., Balakrishnan, N., Nagaraja, H. N. (1992). A first course in order statistics. New York: John Wiley and Sons.

2. Malik, H. J., Balakrishnan, N., Ahmed, S. E. (1988). Recurrence relations and identities for moments of order statistics I: Arbitrary continuous distributions. Communications in Statistics–Theory and Methods, 17(8), 2623–2655. DOI 10.1080/03610928808829762.

3. Samuel, P., Thomas, P. Y. (2000). An improved form of a recurrence relation on the product moment of order statistics. Communications in Statistics–Theory and Methods, 29(7), 1559–1564. DOI 10.1080/03610920008832563.

4. David, H. A. (1981). Order statistics. Second Edition. New York: John Wiley and Sons.

5. Shannon, C. E. (1948). A mathematical theory of communication. Bell System Technical Journal, 27, 379–423 and 623–656. DOI 10.1002/j.1538-7305.1948.tb01338.x.

6. Mathai, A., Haubold, H. (2007). On generalized entropy measures and pathways. Physica A: Statistical Mechanics and its Applications, 385(2), 493–500. DOI 10.1016/j.physa.2007.06.047.

7. Sebastian, N. (2015). Generalized pathway entropy and its applications in diffusion entropy analysis and fractional calculus. Communications in Applied and Industrial Mathematics, 6(2), 1–20. DOI 10.1685/journal.caim.537.

8. Mathai, A. (2005). A pathway to matrix-variate gamma and normal densities. Linear Algebra and its Applications, 396, 317–328. DOI 10.1016/j.laa.2004.09.022.

9. Nair, N. U., Sankaran, P. G. (2009). Quantile-based reliability analysis. Communications in Statistics–Theory and Methods, 38, 222–232. DOI 10.1080/03610920802187430.

10. Sunoj, S. M., Krishnan, A. S., Sankaran, P. G. (2017). Quantile-based entropy of order statistics. Journal of the Indian Society for Probability and Statistics, 18(1), 1–17. DOI 10.1007/s41096-016-0014-4.

11. Sunoj, S. M., Sankaran, P. G. (2012). Quantile based entropy function. Statistics and Probability Letters, 82(6), 1049–1053. DOI 10.1016/j.spl.2012.02.005.

12. Wong, K. M., Chen, S. (1990). The entropy of ordered sequences and order statistics. IEEE Transactions on Information Theory, 36, 276–284. DOI 10.1109/18.52473.

13. Park, S. (1995). The entropy of consecutive order statistics. IEEE Transactions on Information Theory, 41(6), 2003–2007. DOI 10.1109/18.476325.

14. Ebrahimi, N. (1996). How to measure uncertainty in the residual lifetime distribution. Sankhya Ser. A, 58, 48–56.

15. Baratpour, S., Ahmadi, J., Arghami, N. R. (2007). Some characterizations based on entropy of order statistics and record values. Communications in Statistics–Theory and Methods, 36, 47–57. DOI 10.1080/03610920600966530.

16. Paul, J., Yageen, P. (2019). On some properties of Mathai-Haubold entropy of record values. Journal of the Indian Society for Probability and Statistics, 20(1), 31–49. DOI 10.1007/s41096-019-00061-y.

17. Kullback, S., Leibler, R. A. (1951). On information and sufficiency. Annals of Mathematical Statistics, 22, 79–86. DOI 10.1214/aoms/1177729694.

18. Dar, J. G., Al-Zahrani, B. (2013). On some characterization results of life time distributions using Mathai-Haubold residual entropy. International Organization of Scientific Research, 5(4), 56–60. DOI 10.9790/5728-0545660.

19. Di Crescenzo, A., Longobardi, M. (2002). Entropy based measure of uncertainty in past lifetime distribution. Journal of Applied Probability, 39(2), 434–440. DOI 10.1239/jap/1025131441.

20. Murthy, D. N. P., Xie, M., Jiang, R. Y. (2004). Weibull models. USA: John Wiley & Sons.

21. Kumar, V. R. (2018). A quantile approach of Tsallis entropy for order statistics. Physica A: Statistical Mechanics and its Applications, 503, 916–928. DOI 10.1016/j.physa.2018.03.025.

22. Kumar, V., Singh, N. (2019). Quantile-based generalized entropy of order (α, β) for order statistics. Statistica, 78(4), 299–318. DOI 10.6092/issn.1973-2201/8525.

23. Nisa, R., Baig, M. A. K. (2019). Characterization results based on quantile version of two parametric generalized entropy of order statistics. Journal of Probability and Mathematical Statistics, 1, 1–17. DOI 10.23977/jpms.2019.11001.

24. Qiu, G. (2019). Further results on quantile entropy in the past lifetime. Probability in the Engineering and Informational Sciences, 33(1), 149–159. DOI 10.1017/s0269964818000062.

25. Krishnan, A. S. (2019). A study on some information measures using quantile functions (Ph.D. Thesis). Cochin University of Science and Technology, India.

This work is licensed under a Creative Commons Attribution 4.0 International License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.